How Blockchain Technology is Revolutionizing Cybersecurity

When I began my journey as a solution architect, I knew that the foundation of my expertise needed to be solid. One of the key milestones in shaping my career was enrolling in a cybersecurity training online program. The comprehensive curriculum and hands-on approach provided by the ACTE Institute opened new doors for me and gave me the confidence to tackle complex challenges in the tech space. This training not only equipped me with the necessary skills but also sparked my interest in how emerging technologies like blockchain could redefine cybersecurity.

Blockchain technology has become one of the most transformative innovations of the 21st century, fundamentally changing the way industries operate—and cybersecurity is no exception. As organizations face increasing threats from sophisticated cyberattacks, blockchain offers unique advantages that traditional systems often lack. Here's how blockchain is revolutionizing cybersecurity:

The Rise of Blockchain in Cybersecurity

Cybersecurity has always been about safeguarding data, networks, and systems from unauthorized access or attacks. However, with the exponential increase in the volume of data and the growing complexity of cyber threats, traditional solutions often fall short. Blockchain’s decentralized and tamper-evident architecture is becoming a game-changer in this context.

Unlike centralized systems, where a single point of failure can compromise the entire infrastructure, blockchain distributes data across a network of nodes. Because every node holds a copy of the ledger, compromising a single node is not enough to alter the shared record; the remaining nodes still agree on the valid data. For cybersecurity professionals, this means building systems that are inherently more resilient to attacks.

Key Applications of Blockchain in Cybersecurity

1. Data Integrity and Protection

One of blockchain’s most significant contributions to cybersecurity is its support for data integrity. Each block stores a cryptographic hash of the previous block, so once data is written to the ledger, it cannot be altered without detection. This makes it very difficult for hackers to tamper with sensitive information, whether it's financial records, personal data, or intellectual property.

For example, in supply chain management, blockchain can track the provenance of goods and ensure that the data remains unaltered throughout its journey. Similarly, in healthcare, patient records can be securely stored and accessed only by authorized personnel, reducing the risk of breaches.
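To make the hash-linking idea above concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular blockchain is implemented: the function names, the block layout, and the sample shipment records are all assumptions made for this example, and it omits consensus, signatures, and networking entirely.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block (assumption)


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Build a list of blocks, each linked to its predecessor by hash."""
    chain = []
    prev_hash = GENESIS_HASH
    for index, data in enumerate(records):
        current = block_hash(index, data, prev_hash)
        chain.append(
            {"index": index, "data": data, "prev_hash": prev_hash, "hash": current}
        )
        prev_hash = current
    return chain


def first_invalid_block(chain):
    """Return the index of the first block that fails verification, or None."""
    prev_hash = GENESIS_HASH
    for block in chain:
        expected = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["prev_hash"] != prev_hash or block["hash"] != expected:
            return block["index"]
        prev_hash = block["hash"]
    return None


# Hypothetical supply chain records, used only for illustration.
ledger = build_chain(["shipment created", "shipment in transit", "shipment delivered"])
print(first_invalid_block(ledger))  # None: the chain is intact

ledger[1]["data"] = "shipment lost"  # simulate tampering with a stored record
print(first_invalid_block(ledger))  # 1: the altered block no longer matches its hash
```

Changing any stored record changes its hash, which no longer matches the value recorded in the chain, so the edit is detected rather than silently accepted.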

2. Securing Internet of Things (IoT) Devices

The rapid adoption of IoT devices has introduced a new frontier of cybersecurity challenges. These devices often have limited security protocols, making them prime targets for cyberattacks. Blockchain technology can address this issue by enabling secure and decentralized communication between devices.

Through blockchain, IoT devices can authenticate each other and establish secure communication channels without relying on a central authority. Removing that central authority eliminates one single point of failure and can make it harder for attackers to enlist poorly secured devices in Distributed Denial-of-Service (DDoS) attacks.

3. Identity Management

Traditional identity management systems often rely on centralized databases, which are attractive targets for hackers. Blockchain introduces a decentralized model for identity verification, where users have control over their data. This concept, known as Self-Sovereign Identity (SSI), allows individuals to store their credentials on a blockchain and share them securely with third parties when required.

By leveraging blockchain, organizations can reduce the risk of identity theft and fraud while enhancing user privacy. This is particularly relevant for industries like finance, healthcare, and e-commerce, where identity verification is critical.

4. Preventing DDoS Attacks

Distributed Denial-of-Service (DDoS) attacks are a significant threat to businesses, causing downtime and financial losses. Blockchain can mitigate this risk by decentralizing Domain Name System (DNS) infrastructure. Traditional DNS systems are centralized, making them vulnerable to attacks. By using blockchain, DNS records are distributed across a network, making it nearly impossible for attackers to target a single point of failure.

Blockchain Challenges in Cybersecurity

While blockchain holds immense potential for enhancing cybersecurity, it’s not without its challenges. Some of the key hurdles include:

Scalability: Blockchain networks often struggle to handle large volumes of transactions quickly, which can be a bottleneck for certain applications.

Energy Consumption: Some consensus mechanisms, most notably Proof of Work (PoW), are energy-intensive and may not be sustainable for all use cases.

Regulatory Compliance: Blockchain’s decentralized nature poses challenges for regulatory compliance, especially in industries with strict data protection laws.

Overcoming these challenges requires continued innovation and collaboration between blockchain developers and cybersecurity professionals.

Real-World Use Cases of Blockchain in Cybersecurity

Several organizations and industries are already leveraging blockchain to enhance their cybersecurity measures. Here are a few examples:

Financial Services: Blockchain-based solutions are being used to secure financial transactions, prevent fraud, and streamline Know Your Customer (KYC) processes.

Healthcare: Blockchain ensures the secure storage and sharing of electronic health records, reducing the risk of breaches and unauthorized access.

Supply Chain: Companies are adopting blockchain to verify the authenticity of products and prevent counterfeit goods from entering the market.

Government: Blockchain is being used to secure voting systems, ensuring transparency and reducing the risk of election fraud.

The Role of Cybersecurity Training in Embracing Blockchain

As a solution architect, my journey into the world of blockchain and cybersecurity would not have been possible without the foundational knowledge I gained from my training at the ACTE Institute. Their cybersecurity training in Chennai equipped me with the practical skills and theoretical understanding necessary to navigate this complex field.

The training covered essential topics like cryptography, network security, and risk management, which laid the groundwork for understanding how blockchain technology could be applied to cybersecurity. The hands-on projects and real-world case studies provided me with the confidence to implement blockchain-based solutions in my current role.

The Future of Blockchain in Cybersecurity

The integration of blockchain technology into cybersecurity is still in its early stages, but the potential is undeniable. As organizations continue to adopt digital transformation initiatives, the demand for secure, scalable, and efficient solutions will only grow. Blockchain’s unique attributes position it as a critical tool in the fight against cyber threats.

However, realizing its full potential requires a collaborative effort between technology providers, cybersecurity experts, and regulatory bodies. By addressing the challenges and investing in education and training, we can unlock new possibilities and create a safer digital world.

Conclusion

Blockchain technology is revolutionizing the field of cybersecurity by addressing some of its most pressing challenges. From ensuring data integrity to securing IoT devices and preventing DDoS attacks, the applications of blockchain are vast and varied. For professionals looking to make an impact in this space, investing in comprehensive training programs like those offered by the ACTE Institute can be a game-changer.

Reflecting on my journey, I can confidently say that my cybersecurity training was instrumental in helping me land my current role and understand the transformative power of blockchain. With the right skills and knowledge, anyone can be a part of this exciting revolution.


Cloud-Native Security: Transforming Cyber Defense in a Multi-Cloud World


As a solution architect, my work often involves designing and implementing robust systems in increasingly complex cloud environments. Recently, as I enhanced my expertise through cloud computing training online, I encountered the critical importance of cloud-native security in safeguarding multi-cloud ecosystems. The growing reliance on multi-cloud infrastructures demands a fundamental transformation in how we approach cyber defense.

What is Cloud-Native Security?

Cloud-native security refers to a framework tailored for cloud environments, where applications and services are designed, built, and deployed in the cloud. Unlike traditional security models, which focus on perimeter defenses, cloud-native security emphasizes integrating protection into every layer of the cloud infrastructure. Key principles include:

1. Microservices Security: Protecting each service within a distributed architecture.

2. Zero Trust: Assuming no inherent trust within the network, requiring strict verification.

3. Automation and Scalability: Leveraging automated tools to address dynamic cloud environments.

4. Integration Across CI/CD Pipelines: Embedding security into development workflows.

Why Multi-Cloud Adoption is Changing the Game

Organizations increasingly adopt multi-cloud strategies, leveraging the strengths of various providers like AWS, Azure, and Google Cloud. This approach offers flexibility and redundancy but introduces unique challenges:

● Complexity: Managing security policies across multiple platforms can be overwhelming.

● Inconsistency: Different providers have distinct tools and security configurations.

● Increased Attack Surface: More platforms mean more entry points for attackers.

Key Challenges in Cloud-Native Security

1. Visibility: Monitoring and managing assets spread across multiple clouds.

2. Compliance: Ensuring adherence to diverse regulatory requirements across regions.

3. Data Security: Protecting sensitive information during transfer, storage, and processing.

4. Misconfigurations: A common cause of breaches due to human error or lack of expertise.

Strategies for Cloud-Native Security

To address these challenges, organizations must adopt proactive and innovative strategies:

1. Unified Security Posture: Deploy centralized security tools to monitor and manage multi-cloud environments effectively.

2. Automation and AI: Utilize AI-driven solutions to detect anomalies, automate threat responses, and reduce manual workloads.

3. Identity and Access Management (IAM): Enforce least-privilege access across users, applications, and systems.

4. Shift-Left Security: Integrate security measures early in the development lifecycle.

5. Continuous Monitoring: Employ real-time monitoring tools to identify and address vulnerabilities swiftly.
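The least-privilege idea from the IAM strategy above can be sketched in a few lines of Python. This is a simplified illustration, not any cloud provider's IAM API: the role names, the permission strings, and both helper functions are hypothetical and exist only for this example.

```python
# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS = {
    "report-viewer": {"storage:read"},
    "pipeline-runner": {"storage:read", "storage:write"},
}


def is_allowed(role, action):
    """Deny by default: allow only actions explicitly granted to the role."""
    return action in ROLE_PERMISSIONS.get(role, set())


def unused_permissions(role, actions_used):
    """Return granted permissions the role never used (candidates for removal)."""
    return ROLE_PERMISSIONS.get(role, set()) - set(actions_used)


print(is_allowed("report-viewer", "storage:read"))   # True
print(is_allowed("report-viewer", "storage:write"))  # False: never granted
print(is_allowed("unknown-role", "storage:read"))    # False: unknown roles get nothing
print(unused_permissions("pipeline-runner", ["storage:read"]))  # {'storage:write'}
```

The two design choices worth noting are the deny-by-default lookup and the periodic comparison of granted versus used permissions, which is one common way to find access that can be trimmed.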

The Role of Training and Expertise

Working with multi-cloud infrastructures demands continuous learning and adaptation. Training programs, such as cloud computing training in Bangalore, equip professionals with the knowledge and skills to navigate complex cloud ecosystems. These programs emphasize hands-on experience with the latest tools and frameworks, empowering architects and engineers to build secure systems tailored to their organization's needs.

Real-World Implications

Organizations that prioritize cloud-native security are better positioned to:

● Mitigate Breaches: Prevent unauthorized access and data leaks.

● Ensure Business Continuity: Minimize downtime caused by attacks.

● Achieve Compliance: Meet regulatory standards across industries and regions.

● Drive Innovation: Focus on growth without being hindered by security concerns.

Conclusion

Cloud-native security is no longer optional; it is essential in today’s multi-cloud world. As I’ve learned through my professional experiences and training, the journey toward robust cloud security involves both strategic planning and technical expertise. By leveraging resources like cloud computing training in Bangalore, professionals can stay ahead of evolving threats and contribute to creating resilient, secure infrastructures. The future of cybersecurity lies in our ability to adapt and innovate, ensuring a safer digital ecosystem for all.


Common Myths About Data Analytics and the Truth Behind Them

As a solution architect, my journey has been deeply intertwined with the evolving landscape of data analytics. Throughout this journey, I've encountered numerous misconceptions that often deter organizations from fully leveraging the power of data. Enrolling in data analytics training online at ACTE Institute was a pivotal decision that equipped me with the knowledge to debunk these myths and harness data analytics effectively.

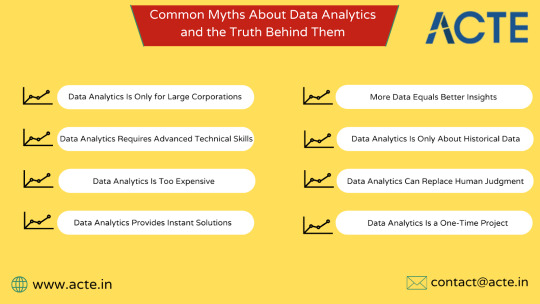

Myth 1: Data Analytics Is Only for Large Corporations

A prevalent belief is that data analytics is a domain exclusive to large enterprises with substantial resources. This misconception leads smaller businesses to shy away from adopting data-driven strategies.

Truth: Data analytics is scalable and accessible to businesses of all sizes. With the advent of user-friendly tools and cloud-based solutions, even small and medium-sized enterprises can implement data analytics to gain valuable insights. ACTE's training emphasized practical approaches, demonstrating that with the right tools and strategies, any organization can benefit from data analytics.

Myth 2: Data Analytics Requires Advanced Technical Skills

Many assume that a deep technical background is a prerequisite for engaging in data analytics, which can be intimidating for professionals from non-technical fields.

Truth: Modern data analytics tools are designed to be intuitive and user-friendly, lowering the barrier to entry. During my training at ACTE, I learned that with proper guidance and practice, individuals from diverse professional backgrounds can acquire the necessary skills to perform effective data analysis.

Myth 3: Data Analytics Is Too Expensive

The perceived high cost of data analytics solutions often discourages organizations from investing in them.

Truth: There are numerous cost-effective and even free data analytics tools available today. Open-source platforms like R and Python, along with affordable software solutions, make data analytics financially accessible. ACTE's curriculum included training on these tools, highlighting how organizations can implement data analytics without significant financial burdens.

Myth 4: Data Analytics Provides Instant Solutions

Some believe that data analytics offers immediate answers to complex business questions.

Truth: Data analytics is a process that involves data collection, cleaning, analysis, and interpretation. It requires time and iterative refinement to yield meaningful insights. ACTE's training instilled in me the importance of patience and diligence in the analytical process, ensuring that conclusions are well-founded and actionable.
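As a rough illustration of the collect, clean, analyze, and interpret steps described above, here is a small Python sketch using only the standard library. The sample rows, field names, and helper functions are invented for this example; a real project would more likely use a dedicated data analysis library and far more careful cleaning rules.

```python
import statistics

# Step 1: collection. Hypothetical raw rows, as they might arrive from a CSV export.
raw_rows = [
    {"region": "north", "order_value": "120.50"},
    {"region": "north", "order_value": ""},
    {"region": "south", "order_value": "80"},
    {"region": "south", "order_value": "n/a"},
    {"region": "south", "order_value": "100"},
]


def clean(rows):
    """Step 2: cleaning. Drop rows whose order value cannot be parsed as a number."""
    cleaned = []
    for row in rows:
        try:
            value = float(row["order_value"])
        except ValueError:
            continue  # skip blank or malformed values instead of guessing
        cleaned.append({"region": row["region"], "order_value": value})
    return cleaned


def mean_by_region(rows):
    """Step 3: analysis. Compute the average order value for each region."""
    grouped = {}
    for row in rows:
        grouped.setdefault(row["region"], []).append(row["order_value"])
    return {region: statistics.mean(values) for region, values in grouped.items()}


cleaned_rows = clean(raw_rows)
print(len(raw_rows), len(cleaned_rows))  # 5 3: two rows were unusable
print(mean_by_region(cleaned_rows))      # {'north': 120.5, 'south': 90.0}
```

Step 4, interpretation, stays with the analyst: the north average rests on a single valid order, which is exactly the kind of caveat that takes time and iteration to surface.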

Myth 5: More Data Equals Better Insights

There's a common assumption that accumulating vast amounts of data will automatically lead to better insights.

Truth: The quality of data is far more critical than quantity. Focusing on relevant and accurate data is essential for meaningful analysis. ACTE emphasized the importance of data governance and the need to prioritize data quality to derive valuable insights.

Myth 6: Data Analytics Is Only About Historical Data

Many view data analytics as a tool solely for examining past performance.

Truth: While historical data analysis is fundamental, data analytics also encompasses predictive and prescriptive analytics, which forecast future trends and recommend actions. ACTE's comprehensive training covered these advanced aspects, enabling me to apply analytics proactively rather than reactively.

Myth 7: Data Analytics Can Replace Human Judgment

There's a fear that data analytics might supplant human decision-making.

Truth: Data analytics is a tool that supports and enhances human judgment but does not replace it. The insights derived from data require contextual understanding and domain expertise to be effectively applied. ACTE highlighted the symbiotic relationship between data-driven insights and human intuition in decision-making processes.

Myth 8: Data Analytics Is a One-Time Project

Some organizations treat data analytics as a one-off initiative rather than an ongoing process.

Truth: Data analytics should be an integral part of an organization's continuous improvement strategy. Regular analysis allows businesses to stay agile and responsive to changing dynamics. ACTE's training reinforced the importance of embedding data analytics into the organizational culture for sustained success.

The Transformative Role of ACTE Institute

Myth-busting and skill acquisition in data analytics were significantly enhanced by the training I received at ACTE Institute. Their structured and practical approach demystified complex concepts and provided hands-on experience with industry-relevant tools. This training was instrumental in advancing my career and effectiveness as a solution architect.

Real-World Applications of Data Analytics

Implementing data analytics has led to tangible benefits in various projects:

Enhanced Operational Efficiency: By analyzing workflow data, we identified bottlenecks and streamlined processes, leading to increased productivity.

Improved Customer Insights: Data analytics enabled a deeper understanding of customer preferences, allowing for personalized marketing strategies that boosted engagement.

Risk Management: Predictive analytics facilitated the identification of potential risks, enabling proactive mitigation strategies.

Conclusion

Dispelling these common myths is crucial for organizations aiming to leverage data analytics effectively. The comprehensive training provided by ACTE Institute was pivotal in enhancing my understanding and application of data analytics. For professionals seeking to deepen their expertise, programs like data analytics training in Hyderabad offer valuable opportunities to develop practical skills and knowledge.

Embracing the realities of data analytics empowers organizations to make informed decisions, drive innovation, and maintain a competitive edge in today's data-driven world.


The Impact of 5G on Network Security: What You Need to Know

Embarking on my journey as a solution architect, I quickly realized the growing significance of staying ahead in the realm of cybersecurity. To fortify my knowledge, I enrolled in a cybersecurity training online program at ACTE Institute. This decision proved to be the cornerstone of my professional growth, equipping me with the expertise necessary to tackle challenges in advanced technologies like 5G.

The rollout of 5G networks represents a monumental leap in connectivity, offering unparalleled speed, low latency, and the capacity to connect millions of devices simultaneously. However, with these advancements come profound security challenges that must be addressed to fully harness the potential of 5G.

What Makes 5G Unique?

Unlike its predecessors, 5G is not merely an upgrade in speed; it’s a transformative technology designed to support diverse applications, such as:

IoT Ecosystems: Connecting billions of devices, from smart appliances to industrial equipment.

Enhanced Mobile Broadband: Delivering ultra-fast internet for seamless streaming and communication.

Critical Communications: Enabling real-time responses for applications like autonomous vehicles and remote surgeries.

While these innovations are groundbreaking, they introduce complex vulnerabilities that expand the attack surface for potential cyber threats.

Key Security Challenges in 5G

1. Broader Attack Surface

With the integration of IoT and edge computing, 5G networks host a multitude of endpoints, each presenting a potential entry point for malicious actors. Securing this vast and distributed architecture is a significant challenge.

2. Network Slicing Vulnerabilities

5G allows for network slicing, where virtual networks operate independently on shared infrastructure. While this enhances flexibility, it also raises concerns about isolation breaches and unauthorized access between slices.

3. Supply Chain Risks

The global supply chain for 5G infrastructure components increases the risk of tampering and compromise during manufacturing or deployment.

4. Increased Dependency on Software

The software-driven nature of 5G networks introduces risks related to bugs, misconfigurations, and vulnerabilities that can be exploited by attackers.

How to Address 5G Security Challenges

1. Adopt Zero Trust Principles

Zero trust architectures ensure that every device and user is verified before gaining access to the network. Continuous monitoring and strict authentication protocols are essential to mitigate risks.
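One small piece of the zero trust idea above, verifying every request instead of trusting anything already inside the network, can be sketched with Python's standard `hmac` module. This is an assumption-laden illustration, not part of any 5G specification: the device registry, the shared secret, and the message format are hypothetical, and a production system would add key rotation, replay protection, and certificate-based identity.

```python
import hashlib
import hmac

# Hypothetical registry of per-device shared secrets (illustrative only).
DEVICE_KEYS = {"sensor-01": b"demo-secret-key"}


def sign(device_id, message, key):
    """Compute an HMAC-SHA256 signature over the device ID and message."""
    payload = f"{device_id}:{message}".encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_request(device_id, message, signature):
    """Verify every request; unknown devices and bad signatures are rejected."""
    key = DEVICE_KEYS.get(device_id)
    if key is None:
        return False  # no implicit trust for unregistered devices
    expected = sign(device_id, message, key)
    return hmac.compare_digest(expected, signature)  # constant-time comparison


signature = sign("sensor-01", "temperature=21", DEVICE_KEYS["sensor-01"])
print(verify_request("sensor-01", "temperature=21", signature))  # True
print(verify_request("sensor-01", "temperature=99", signature))  # False: message altered
print(verify_request("sensor-99", "temperature=21", signature))  # False: unknown device
```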

2. Secure IoT Devices

Manufacturers must prioritize security in IoT devices by implementing strong encryption, regular updates, and robust authentication mechanisms.

3. Enhance Collaboration

Governments, industry leaders, and technology providers must work together to establish unified security standards and share threat intelligence to counteract emerging risks.

4. Utilize Artificial Intelligence (AI) and Machine Learning (ML)

AI and ML can help detect anomalies, predict potential threats, and automate responses, thereby enhancing the overall security posture of 5G networks.

Real-World Applications of 5G and Their Security Implications

1. Smart Cities

5G enables smart city initiatives, such as intelligent traffic systems and connected infrastructure. However, breaches in these systems could disrupt critical services and endanger public safety.

2. Healthcare

Remote surgeries, telemedicine, and real-time health monitoring are revolutionized by 5G. Protecting patient data and ensuring the integrity of these systems are vital to maintaining trust and safety.

3. Autonomous Vehicles

The real-time communication capabilities of 5G are critical for self-driving cars. Securing these communication channels is essential to prevent accidents and malicious interference.

4. Industrial Automation

5G facilitates advanced automation in industries. Cybersecurity measures are required to safeguard intellectual property and prevent disruptions in production.

Reflecting on My Training and Experience

When I look back at my professional journey, the cybersecurity training I received at ACTE Institute in Bangalore stands out as a defining moment. This training not only deepened my understanding of network security but also provided hands-on experience in addressing real-world scenarios. From exploring advanced encryption techniques to implementing zero trust frameworks, the program laid the groundwork for my success as a solution architect.

Without this training, navigating the complexities of technologies like 5G would have been an uphill battle. It reinforced my ability to design robust security solutions, making me an indispensable asset to my organization.

Conclusion

The introduction of 5G networks signifies a new era of connectivity and innovation. While the technology offers transformative benefits, it also demands a proactive approach to security. By addressing its unique vulnerabilities and fostering collaboration among stakeholders, we can ensure a secure and resilient future.

For professionals aiming to excel in this dynamic field, investing in comprehensive training programs is imperative. My experience with ACTE’s cybersecurity training in Bangalore has been a game-changer, enabling me to embrace challenges and contribute meaningfully to the evolving landscape of network security. The knowledge and skills I gained continue to shape my career, proving that the right training can unlock endless opportunities.


Data Warehousing vs. Data Lakes: Choosing the Right Approach for Your Organization

As a solution architect, my journey into data management has been shaped by years of experience and focused learning. My turning point was the data analytics training online that I completed at ACTE Institute. This program gave me the clarity and practical knowledge I needed to navigate modern data architectures, particularly in understanding the key differences between data warehousing and data lakes.

Both data warehousing and data lakes have become critical components of the data strategies for many organizations. However, choosing between them—or determining how to integrate both—can significantly impact how an organization manages and utilizes its data.

What is a Data Warehouse?

Data warehouses are specialized systems designed to store structured data. They act as centralized repositories where data from multiple sources is aggregated, cleaned, and stored in a consistent format. Businesses rely on data warehouses for generating reports, conducting historical analysis, and supporting decision-making processes.

Data warehouses are highly optimized for running complex queries and generating insights. This makes them a perfect fit for scenarios where the primary focus is on business intelligence (BI) and operational reporting.

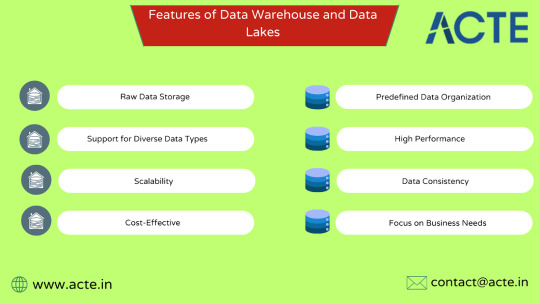

Features of Data Warehouses:

Predefined Data Organization: Data warehouses rely on schemas that structure the data before it is stored, making it easier to analyze later.

High Performance: Optimized for query processing, they deliver quick results for detailed analysis.

Data Consistency: By cleansing and standardizing data from multiple sources, warehouses ensure consistent and reliable insights.

Focus on Business Needs: These systems are designed to support the analytics required for day-to-day business decisions.

What is a Data Lake?

Data lakes, on the other hand, are designed for flexibility and scalability. They store vast amounts of raw data in its native format, whether structured, semi-structured, or unstructured. This approach is particularly valuable for organizations dealing with large-scale analytics, machine learning, and real-time data processing.

Unlike data warehouses, data lakes don’t require data to be structured before storage. Instead, they use a schema-on-read model, where the data is organized only when it’s accessed for analysis.
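The schema-on-read model described above can be illustrated with a short Python sketch. The raw events, field names, and the `read_with_schema` helper are hypothetical and chosen only for this example; real data lake engines apply the same idea at much larger scale and with richer type handling.

```python
import json

# Raw events stored as-is, with inconsistent fields and types (hypothetical data).
raw_events = [
    '{"user": "a1", "amount": "19.99", "channel": "web"}',
    '{"user": "b2", "amount": 5}',
    '{"user": "c3", "note": "no amount recorded"}',
]


def read_with_schema(lines, schema):
    """Apply a schema at read time: select the requested fields and cast them.

    Fields missing from a record are returned as None rather than rejected,
    because nothing was enforced when the data was written.
    """
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            row[field] = cast(value) if value is not None else None
        yield row


# The same raw data can be read with different schemas for different questions.
purchases = list(read_with_schema(raw_events, {"user": str, "amount": float}))
print(purchases[0])  # {'user': 'a1', 'amount': 19.99}
print(purchases[2])  # {'user': 'c3', 'amount': None}
```

A warehouse would reject or transform the third record at load time; here it is kept in raw form, and the decision about how to treat the missing amount is deferred until someone queries it.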

Features of Data Lakes:

Raw Data Storage: Data lakes retain data in its original form, providing flexibility for future analysis.

Support for Diverse Data Types: They can store everything from structured database records to unstructured video files or social media content.

Scalability: Built to handle massive amounts of data, data lakes are ideal for organizations with dynamic data needs.

Cost-Effective: Data lakes use low-cost storage options, making them an economical solution for large datasets.

Understanding the Differences

To decide which approach works best for your organization, it’s essential to understand the key differences between data warehouses and data lakes:

Data Structure: Data warehouses store data in a structured format, whereas data lakes support structured, semi-structured, and unstructured data.

Processing Methodology: Warehouses follow a schema-on-write model, while lakes use a schema-on-read approach, offering greater flexibility.

Purpose: Data warehouses are designed for business intelligence and operational reporting, while data lakes excel at advanced analytics and big data processing.

Cost and Scalability: Data lakes tend to be more cost-effective, especially when dealing with large, diverse datasets.

How to Choose the Right Approach

Choosing between a data warehouse and a data lake depends on your organization's goals, data strategy, and the type of insights you need.

When to Choose a Data Warehouse:

Your organization primarily deals with structured data that supports reporting and operational analysis.

Business intelligence is at the core of your decision-making process.

You need high-performance systems to run complex queries efficiently.

Data quality, consistency, and governance are critical to your operations.

When to Choose a Data Lake:

You work with diverse data types, including unstructured and semi-structured data.

Advanced analytics, machine learning, or big data solutions are part of your strategy.

Scalability and cost-efficiency are essential for managing large datasets.

You need a flexible solution that can adapt to emerging data use cases.

Combining Data Warehouses and Data Lakes

In many cases, organizations find value in adopting a hybrid approach that combines the strengths of data warehouses and data lakes. For example, raw data can be ingested into a data lake, where it’s stored until it’s needed for specific analytical use cases. The processed and structured data can then be moved to a data warehouse for BI and reporting purposes.

This integrated strategy allows organizations to benefit from the scalability of data lakes while retaining the performance and reliability of data warehouses.
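The hybrid flow described above, raw data landing in a lake and a structured subset moving to a warehouse, can be sketched with Python's built-in `sqlite3` module standing in for the warehouse. Everything here is an assumption for illustration: the `sales` table, the sample records, and the `load_into_warehouse` helper are hypothetical, and an in-memory SQLite database is only a stand-in for a real warehouse product.

```python
import json
import sqlite3

# The "lake": raw JSON lines of mixed shape, stored untouched (hypothetical data).
lake = [
    '{"region": "north", "amount": 100}',
    '{"region": "south", "amount": "50.5"}',
    '{"region": "north", "amount": 25}',
    '{"event": "page_view", "path": "/home"}',
]


def load_into_warehouse(conn, raw_lines):
    """Move only records that fit the sales schema into a structured table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (region TEXT NOT NULL, amount REAL NOT NULL)"
    )
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        if "region" in record and "amount" in record:
            rows.append((record["region"], float(record["amount"])))
    conn.executemany("INSERT INTO sales (region, amount) VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")  # in-memory database standing in for a warehouse
loaded = load_into_warehouse(conn, lake)
totals = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(loaded)  # 3: the page_view event stays in the lake only
print(totals)  # [('north', 125.0), ('south', 50.5)]
```

The raw page-view event is not lost; it simply remains in the lake until a use case needs it, while the warehouse table stays clean enough for reporting queries.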

My Learning Journey with ACTE Institute

During my career, I realized the importance of mastering these technologies to design efficient data architectures. The data analytics training in Hyderabad at ACTE Institute provided me with a hands-on understanding of both data lakes and data warehouses. Their comprehensive curriculum, coupled with practical exercises, helped me bridge the gap between theoretical knowledge and real-world applications.

The instructors at ACTE emphasized industry best practices and use cases, enabling me to apply these concepts effectively in my projects. From understanding how to design scalable data lakes to optimizing data warehouses for performance, every concept I learned has played a vital role in my professional growth.

Final Thoughts

Data lakes and data warehouses each have unique strengths, and the choice between them depends on your organization's specific needs. With proper planning and strategy, it’s possible to harness the potential of both systems to create a robust and efficient data ecosystem.

My journey in mastering these technologies, thanks to the guidance of ACTE Institute, has not only elevated my career but also given me the tools to help organizations make informed decisions in their data strategies. Whether you're working with structured datasets or diving into advanced analytics, understanding these architectures is crucial for success in today’s data-driven world.


Vendor Lock-in or Vendor Partnership: Navigating the New Cloud Ecosystem

As a solution architect, my journey through the evolving landscape of cloud computing has been both challenging and rewarding. The comprehensive cloud computing training online provided by ACTE Institute played a pivotal role in shaping my understanding and expertise in this domain. This training equipped me with the knowledge to navigate complex cloud ecosystems and make informed decisions that align with organizational goals.

In today's digital era, cloud computing has become the backbone of modern enterprises, offering scalability, flexibility, and cost-efficiency. However, with these advantages comes the critical consideration of how organizations engage with their cloud service providers. The debate between vendor lock-in and vendor partnership is central to this discussion, as it influences an organization's agility, innovation potential, and long-term success.

Understanding Vendor Lock-in

Vendor lock-in refers to a situation where a customer becomes dependent on a single cloud provider's products and services, making it challenging to switch to another provider without incurring substantial costs or facing technical difficulties. This dependency can arise from the use of proprietary technologies, unique service offerings, or data formats that are not easily transferable.

The implications of vendor lock-in are significant. Organizations may find themselves constrained by the limitations of their chosen provider, unable to leverage innovative solutions from other vendors. Additionally, they may face escalating costs, as the lack of competition can lead to unfavorable pricing models. The risk of service disruptions also looms large, as any issues with the provider can directly impact the organization's operations.

Embracing Vendor Partnership

On the other hand, viewing the relationship with cloud providers as a partnership can yield numerous benefits. A vendor partnership is characterized by collaboration, mutual trust, and shared objectives. In such a relationship, the provider becomes more than just a service supplier; they become a strategic ally invested in the organization's success.

This partnership approach fosters innovation, as both parties work together to develop customized solutions that drive business growth. It also enhances flexibility, allowing organizations to adapt to changing market dynamics swiftly. Moreover, a strong partnership can lead to better support and service quality, as the provider is more attuned to the organization's specific needs and challenges.

Strategies to Mitigate Vendor Lock-in

While the benefits of vendor partnerships are clear, it's essential to implement strategies that mitigate the risks associated with vendor lock-in. Here are some approaches that have proven effective in my experience:

Adopt Open Standards and Interoperability: Utilizing open-source technologies and adhering to industry standards can reduce dependency on a single provider. This approach ensures that applications and data are compatible across different platforms, facilitating easier migration if needed.

Implement a Multi-Cloud Strategy: Distributing workloads across multiple cloud providers can prevent over-reliance on one vendor. This strategy not only mitigates the risk of lock-in but also allows organizations to leverage the unique strengths of different providers.

Regularly Review Contracts and SLAs: It's crucial to negotiate terms that provide flexibility and protect the organization's interests. Regular reviews ensure that the services align with evolving business needs and market conditions.

Invest in Staff Training and Development: Equipping the team with diverse cloud skills reduces reliance on vendor-specific solutions. Training programs, such as those offered by ACTE Institute, can broaden the team's expertise and enhance their ability to manage multi-cloud environments effectively.
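To make the open-standards point a little more concrete, here is a minimal Python sketch of a provider-neutral storage interface. Everything in it is an assumption for illustration: `ObjectStore`, `InMemoryStore`, and `migrate` are hypothetical names, and the in-memory backend merely stands in for an adapter that would wrap a real provider's SDK.

```python
from typing import Protocol


class ObjectStore(Protocol):
    """Hypothetical provider-neutral interface; not any vendor's real API."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend. A real adapter would wrap one provider's SDK."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def keys(self) -> list[str]:
        return sorted(self._objects)


def migrate(source: InMemoryStore, target: ObjectStore) -> int:
    """Copy every object from source to target and return the count copied."""
    copied = 0
    for key in source.keys():
        target.put(key, source.get(key))
        copied += 1
    return copied


# Application code depends only on put/get, so swapping backends is a copy job.
provider_a = InMemoryStore("provider_a")
provider_b = InMemoryStore("provider_b")
provider_a.put("reports/q1.csv", b"region,revenue\n")
provider_a.put("reports/q2.csv", b"region,revenue\n")
moved = migrate(provider_a, provider_b)
```

Because the application only ever calls `put` and `get`, moving to another provider means writing one new adapter rather than touching every caller.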

The Role of ACTE Institute in My Professional Journey

Reflecting on my career, the cloud computing training in Bangalore provided by ACTE Institute stands out as a cornerstone of my professional development. The curriculum was meticulously designed to cover both foundational concepts and advanced topics, ensuring a holistic understanding of cloud ecosystems.

The instructors, with their extensive industry experience, provided practical insights that bridged the gap between theory and real-world application. The hands-on projects and case studies enabled me to apply the learned concepts, fostering a deeper comprehension and honing my problem-solving skills.

Moreover, the emphasis on emerging trends and best practices prepared me to navigate the complexities of vendor relationships effectively. The knowledge gained empowered me to establish strategic partnerships with cloud providers, leveraging their capabilities while safeguarding my organization's autonomy.

Conclusion

Navigating the new cloud ecosystem requires a delicate balance between leveraging vendor capabilities and maintaining organizational independence. By understanding the dynamics of vendor lock-in and embracing strategic partnerships, organizations can harness the full potential of cloud computing.

My journey, enriched by the training at ACTE Institute, has equipped me with the skills and insights to make informed decisions in this realm. As cloud technologies continue to evolve, staying abreast of best practices and fostering collaborative vendor relationships will be pivotal in driving sustained success.

#adtech#digitalmarketing#artificialintelligence#marketingstrategy#ai#machinelearning#database#cybersecurity


The Future of Multi-Cloud Architectures: Benefits and Challenges

When I started my journey as a solution architect, one of the most transformative decisions I made was enrolling in a cloud computing training online program at ACTE Institute. This training laid the foundation for my understanding of multi-cloud architectures and their increasing relevance in today’s dynamic IT landscape. Without this specialized training, stepping into my current role would have been incredibly challenging, if not impossible.

The rapid adoption of cloud computing has transformed how organizations operate, enabling scalability, flexibility, and cost-efficiency. As businesses grow, the need to diversify and adopt multi-cloud strategies has become paramount. Multi-cloud architectures allow organizations to leverage the strengths of multiple cloud providers, reducing dependency on a single vendor and ensuring greater resilience. However, these architectures come with their own set of challenges, which must be carefully navigated to maximize their potential.

What is a Multi-Cloud Architecture?

Multi-cloud refers to the use of multiple cloud computing services from different providers within a single heterogeneous architecture. Unlike hybrid cloud—which combines private and public clouds—multi-cloud involves leveraging multiple public cloud services to distribute workloads, optimize costs, and ensure redundancy.

Key characteristics of multi-cloud architectures include:

Flexibility: Organizations can select the best cloud services for specific workloads.

Redundancy: Ensures high availability and disaster recovery by distributing data across multiple providers.

Cost Optimization: Enables businesses to negotiate better pricing and avoid vendor lock-in.

Benefits of Multi-Cloud Architectures

1. Avoiding Vendor Lock-In

Relying on a single cloud provider can lead to dependency, limiting an organization’s flexibility and negotiating power. Multi-cloud strategies mitigate this risk by diversifying resources across providers.

2. Enhanced Resilience and Redundancy

By leveraging multiple cloud providers, organizations can ensure continuous service availability. In the event of a failure with one provider, workloads can seamlessly shift to another, minimizing downtime.

3. Optimized Performance

Different cloud providers excel in specific areas. A multi-cloud strategy allows organizations to choose the best-in-class services for their unique requirements, ensuring optimal performance.

4. Regulatory Compliance

Operating in multiple regions often involves adhering to varying regulatory requirements. Multi-cloud architectures enable businesses to use providers that meet specific regional compliance standards.

5. Cost Efficiency

Multi-cloud strategies allow businesses to optimize costs by choosing the most cost-effective solutions for specific workloads and taking advantage of pricing competition among providers.

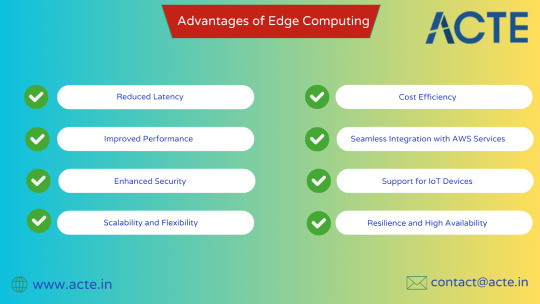

Challenges of Multi-Cloud Architectures

1. Increased Complexity

Managing multiple cloud environments requires robust orchestration and monitoring tools. The complexity of maintaining consistent performance, security, and compliance across providers can be overwhelming.

2. Data Security and Compliance

Storing and processing data across multiple platforms increases the risk of breaches and compliance violations. Ensuring data protection and adhering to regulatory requirements necessitates a comprehensive security strategy.

3. Interoperability Issues

Different cloud providers often use proprietary technologies, making it challenging to ensure seamless interoperability between platforms.

4. Skill Requirements

Adopting a multi-cloud strategy demands expertise in various cloud platforms. Organizations must invest in upskilling their teams or hiring specialists to manage these environments effectively.

5. Cost Management

While multi-cloud can optimize costs, the lack of centralized cost monitoring tools can lead to inefficiencies. Organizations need to implement robust cost management practices to avoid overspending.
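As a rough illustration of centralized cost monitoring, the sketch below rolls up spend per provider and flags anything over budget. The records, provider names, and budget figures are made up for the example; in practice they would come from each provider's billing export.

```python
from collections import defaultdict

# Hypothetical billing records: (provider, service, monthly cost in USD).
billing_records = [
    ("provider_a", "compute", 1200.0),
    ("provider_a", "storage", 300.0),
    ("provider_b", "compute", 800.0),
    ("provider_b", "database", 450.0),
]


def cost_by_provider(records):
    """Sum monthly cost per provider across all services."""
    totals = defaultdict(float)
    for provider, _service, cost in records:
        totals[provider] += cost
    return dict(totals)


def over_budget(totals, budgets):
    """Return providers whose total exceeds their budget, sorted by name."""
    return sorted(
        provider
        for provider, total in totals.items()
        if total > budgets.get(provider, float("inf"))
    )


totals = cost_by_provider(billing_records)
flagged = over_budget(totals, {"provider_a": 1400.0, "provider_b": 2000.0})
```

Even a small roll-up like this gives one view of spend that no single provider's console will show.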

Key Strategies for Implementing Multi-Cloud Architectures

1. Define Clear Objectives

Before adopting a multi-cloud strategy, organizations should identify their goals, whether it’s cost optimization, improved performance, or enhanced resilience.

2. Invest in Training and Upskilling

Training programs, such as the one I completed at ACTE Institute, are crucial for equipping professionals with the knowledge and skills needed to manage multi-cloud environments effectively.

3. Leverage Cloud Management Tools

Cloud management platforms enable organizations to monitor and orchestrate resources across multiple providers, ensuring consistency and efficiency.

4. Focus on Security

Implementing a zero-trust architecture, encrypting data, and conducting regular security audits are essential for maintaining data integrity and compliance.

5. Plan for Interoperability

Organizations should prioritize using open standards and APIs to facilitate seamless integration and interoperability between different cloud platforms.

Real-World Use Cases of Multi-Cloud Architectures

1. Disaster Recovery and Business Continuity

Organizations can ensure uninterrupted service delivery by distributing workloads across multiple cloud providers. In the event of a failure, critical systems can quickly shift to a secondary provider.

2. Global Reach and Regional Compliance

Multi-cloud architectures allow businesses to operate in multiple regions while adhering to local regulatory requirements. For instance, data can be stored in compliance with GDPR in Europe and HIPAA in the United States.

3. Optimized Application Performance

By leveraging the strengths of different providers, businesses can optimize performance for specific applications. For example, a latency-sensitive application might run on a provider known for low-latency services, while data storage might be handled by a cost-efficient provider.

4. E-commerce Platforms

E-commerce companies often use multi-cloud strategies to handle traffic spikes during sales events. This ensures scalability and reliability, even during peak demand periods.
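The disaster recovery use case above can be sketched as a simple failover loop. This is only an illustration under stated assumptions: `primary` and `secondary` are hypothetical stand-ins for calls to two providers, and a real setup would also need health checks, data replication, and DNS or load-balancer changes.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when no provider in the list could serve the request."""


def call_with_failover(
    providers: Sequence[tuple[str, Callable[[], str]]],
) -> tuple[str, str]:
    """Try each provider in order; return (provider name, response)."""
    errors = []
    for name, handler in providers:
        try:
            return name, handler()
        except ConnectionError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def primary() -> str:
    # Simulates an outage at the first provider.
    raise ConnectionError("primary region unavailable")


def secondary() -> str:
    return "order accepted"


served_by, response = call_with_failover(
    [("primary", primary), ("secondary", secondary)]
)
```

The ordering of the list is the failover policy here, which keeps the routing decision in one visible place.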

My Journey and the Role of Training

Reflecting on my career, the cloud computing training I received at ACTE Institute in Bangalore was instrumental in shaping my understanding of multi-cloud architectures. The course provided hands-on experience with leading cloud platforms, teaching me how to design, implement, and manage complex multi-cloud environments.

The training also emphasized practical applications, such as configuring disaster recovery solutions and optimizing workloads across providers. This knowledge has been invaluable in my role, enabling me to deliver innovative solutions that drive business success.

Conclusion

The future of multi-cloud architectures is undeniably promising. As organizations continue to embrace digital transformation, the flexibility, resilience, and performance benefits of multi-cloud strategies will play a critical role in shaping the IT landscape. However, navigating the challenges requires a proactive approach, robust tools, and skilled professionals.

For anyone aspiring to excel in this field, investing in comprehensive training is essential. My experience with ACTE’s cloud computing training in Bangalore was a game-changer, equipping me with the skills and confidence to thrive in this dynamic industry. With the right knowledge and tools, the possibilities in the world of multi-cloud architectures are truly limitless.

#machinelearning#artificialintelligence#digitalmarketing#adtech#marketingstrategy#cybersecurity#ai#database


The Impact of 5G on Network Security: What You Need to Know

Embarking on my journey as a solution architect, I quickly realized the growing significance of staying ahead in the realm of cybersecurity. To fortify my knowledge, I enrolled in a cybersecurity training online program at ACTE Institute. This decision proved to be the cornerstone of my professional growth, equipping me with the expertise necessary to tackle challenges in advanced technologies like 5G.

The rollout of 5G networks represents a monumental leap in connectivity, offering unparalleled speed, low latency, and the capacity to connect millions of devices simultaneously. However, with these advancements come profound security challenges that must be addressed to fully harness the potential of 5G.

What Makes 5G Unique?

Unlike its predecessors, 5G is not merely an upgrade in speed; it’s a transformative technology designed to support diverse applications, such as:

IoT Ecosystems: Connecting billions of devices, from smart appliances to industrial equipment.

Enhanced Mobile Broadband: Delivering ultra-fast internet for seamless streaming and communication.

Critical Communications: Enabling real-time responses for applications like autonomous vehicles and remote surgeries.

While these innovations are groundbreaking, they introduce complex vulnerabilities that expand the attack surface for potential cyber threats.

Key Security Challenges in 5G

1. Broader Attack Surface

With the integration of IoT and edge computing, 5G networks host a multitude of endpoints, each presenting a potential entry point for malicious actors. Securing this vast and distributed architecture is a significant challenge.

2. Network Slicing Vulnerabilities

5G allows for network slicing, where virtual networks operate independently on shared infrastructure. While this enhances flexibility, it also raises concerns about isolation breaches and unauthorized access between slices.

3. Supply Chain Risks

The global supply chain for 5G infrastructure components increases the risk of tampering and compromise during manufacturing or deployment.

4. Increased Dependency on Software

The software-driven nature of 5G networks introduces risks related to bugs, misconfigurations, and vulnerabilities that can be exploited by attackers.

How to Address 5G Security Challenges

1. Adopt Zero Trust Principles

Zero trust architectures ensure that every device and user is verified before gaining access to the network. Continuous monitoring and strict authentication protocols are essential to mitigate risks.

2. Secure IoT Devices

Manufacturers must prioritize security in IoT devices by implementing strong encryption, regular updates, and robust authentication mechanisms.

3. Enhance Collaboration

Governments, industry leaders, and technology providers must work together to establish unified security standards and share threat intelligence to counteract emerging risks.

4. Utilize Artificial Intelligence (AI) and Machine Learning (ML)

AI and ML can help detect anomalies, predict potential threats, and automate responses, thereby enhancing the overall security posture of 5G networks.
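To give a feel for the anomaly detection mentioned above, here is a deliberately simple statistical sketch: it learns a baseline from known-normal traffic and flags readings that sit far from it. The traffic numbers and the threshold of 3 standard deviations are assumptions for illustration; production systems typically use far richer models than a z-score.

```python
from statistics import mean, stdev


def fit_baseline(samples):
    """Return (mean, sample standard deviation) of known-normal readings."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a reading whose z-score against the baseline exceeds threshold."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical requests-per-minute from one device during normal operation.
normal_traffic = [100, 102, 98, 101, 99, 100, 103, 97, 100, 100]
baseline = fit_baseline(normal_traffic)

slightly_high = is_anomalous(104, baseline)
traffic_spike = is_anomalous(500, baseline)
```

Fitting the baseline on known-normal data, rather than on the window being checked, keeps a single large spike from inflating the standard deviation and hiding itself.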

Real-World Applications of 5G and Their Security Implications

1. Smart Cities

5G enables smart city initiatives, such as intelligent traffic systems and connected infrastructure. However, breaches in these systems could disrupt critical services and endanger public safety.

2. Healthcare

Remote surgeries, telemedicine, and real-time health monitoring are revolutionized by 5G. Protecting patient data and ensuring the integrity of these systems are vital to maintaining trust and safety.

3. Autonomous Vehicles

The real-time communication capabilities of 5G are critical for self-driving cars. Securing these communication channels is essential to prevent accidents and malicious interference.

4. Industrial Automation

5G facilitates advanced automation in industries. Cybersecurity measures are required to safeguard intellectual property and prevent disruptions in production.

Reflecting on My Training and Experience

When I look back at my professional journey, the cybersecurity training I received at ACTE Institute in Bangalore stands out as a defining moment. This training not only deepened my understanding of network security but also provided hands-on experience in addressing real-world scenarios. From exploring advanced encryption techniques to implementing zero trust frameworks, the program laid the groundwork for my success as a solution architect.

Without this training, navigating the complexities of technologies like 5G would have been an uphill battle. It reinforced my ability to design robust security solutions, making me an indispensable asset to my organization.

Conclusion

The introduction of 5G networks signifies a new era of connectivity and innovation. While the technology offers transformative benefits, it also demands a proactive approach to security. By addressing its unique vulnerabilities and fostering collaboration among stakeholders, we can ensure a secure and resilient future.

For professionals aiming to excel in this dynamic field, investing in comprehensive training programs is imperative. My experience with ACTE’s cybersecurity training in Bangalore has been a game-changer, enabling me to embrace challenges and contribute meaningfully to the evolving landscape of network security. The knowledge and skills I gained continue to shape my career, proving that the right training can unlock endless opportunities.

#adtech#artificialintelligence#digitalmarketing#marketingstrategy#ai#machinelearning#database#cybersecurity


Python for Data Analytics: A Solution Architect’s Perspective

As a solution architect, my career has been centered on designing robust, scalable systems tailored to meet diverse business needs. Over the years, I’ve worked on projects spanning various domains—cloud computing, infrastructure optimization, and application development. However, the growing emphasis on data-driven decision-making reshaped my perspective. Organizations now rely heavily on extracting actionable insights from their data, which made me realize that understanding and leveraging data analytics is no longer optional.

This journey into the world of data analytics began with an enriching data analytics training online program. This training not only introduced me to foundational concepts but also provided a structured pathway to mastering Python for data analytics—a skill I now consider indispensable for any tech professional.

Why Python for Data Analytics?

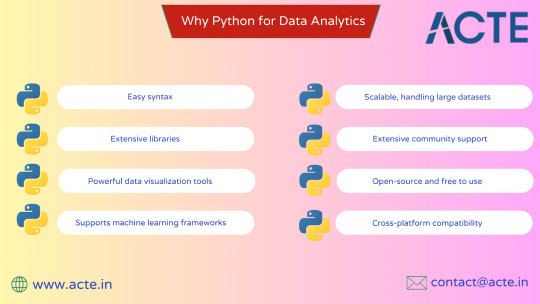

Python has emerged as a game-changer in the data analytics space, and for good reasons:

Simplicity and Versatility: Python’s straightforward syntax makes it accessible for beginners, while its versatility allows professionals to handle complex tasks seamlessly.

Extensive Libraries: Libraries like Pandas, NumPy, Matplotlib, and Seaborn enable efficient data manipulation, visualization, and analysis. For advanced analytics, Scikit-learn and TensorFlow are the go-to tools for machine learning and predictive modeling.

Integration Capabilities: Python integrates effortlessly with other technologies and platforms, making it a preferred choice for end-to-end data solutions.

Community Support: With its vast global community, Python ensures you’ll always find support, tutorials, and updates to keep pace with the ever-evolving analytics landscape.

My First Steps with Python for Data Analytics

My initial foray into Python for data analytics was both exciting and challenging. While I was familiar with programming concepts, understanding the nuances of data manipulation required a shift in mindset. The training program I enrolled in emphasized hands-on projects, which was instrumental in solidifying my understanding.

One of my first projects involved analyzing system performance metrics. Using Python, I could process large datasets to identify patterns and anomalies in server utilization. Here’s what made Python stand out:

Data Manipulation with Pandas: I used Pandas to clean and restructure the data. Its DataFrame object made it easy to filter, sort, and aggregate information.

Visualization with Matplotlib and Seaborn: These libraries allowed me to create clear, visually appealing graphs to present my findings to stakeholders.

Automation: By writing reusable scripts, I automated the process of monitoring and reporting, saving significant time and effort.
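A small sketch of the kind of Pandas clean-up and aggregation described above might look like this. The server names, CPU readings, and 90 percent threshold are invented for the example and are not data from the original project.

```python
import pandas as pd

# Hypothetical utilization samples; None marks a missed reading.
raw = pd.DataFrame(
    {
        "server": ["web-1", "web-1", "web-2", "web-2", "db-1", "db-1"],
        "cpu_percent": [35.0, 92.0, 41.0, None, 77.0, 81.0],
    }
)

# Clean: drop rows where the metric is missing.
clean = raw.dropna(subset=["cpu_percent"])

# Aggregate: mean and peak CPU per server, busiest peak first.
summary = (
    clean.groupby("server")["cpu_percent"]
    .agg(["mean", "max"])
    .sort_values("max", ascending=False)
)

# Filter: servers whose peak crossed the assumed 90 percent threshold.
busy_servers = summary[summary["max"] > 90].index.tolist()
```

Wrapping these steps in a function and running it on a schedule is one way to get the reusable monitoring script mentioned in the list.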

Diving Deeper: Advanced Applications of Python

As I delved deeper, I realized Python’s potential extended beyond basic analysis. It became a tool for solving complex business problems, such as:

Predictive Analytics: Using Scikit-learn, I developed models to forecast system downtimes based on historical data. This proactive approach helped in optimizing resources and minimizing disruptions.

Data Pipeline Development: Python’s integration capabilities allowed me to build ETL (Extract, Transform, Load) pipelines, ensuring seamless data flow between systems.

Real-Time Dashboards: By combining Flask (a lightweight web framework) with Python’s visualization libraries, I created dashboards that displayed real-time analytics, empowering teams to make informed decisions instantly.
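The ETL idea from the list above can be sketched with nothing but the standard library. The CSV content, the `metrics` table, and the rule of skipping unparseable rows are all assumptions chosen to keep the example small; a real pipeline would read from actual sources and handle bad rows more carefully.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; the second row has an unusable value.
RAW_CSV = """server,cpu_percent
web-1,35
web-2,not_available
db-1,77
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert the metric to float, skipping rows that fail."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append((row["server"], float(row["cpu_percent"])))
        except ValueError:
            continue
    return cleaned


def load(rows, conn):
    """Load: insert rows into SQLite and return the table's row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS metrics (server TEXT, cpu_percent REAL)"
    )
    conn.executemany("INSERT INTO metrics VALUES (?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM metrics").fetchone()[0]


conn = sqlite3.connect(":memory:")
loaded = load(transform(extract(RAW_CSV)), conn)
```

Keeping extract, transform, and load as separate functions makes each stage easy to test and to swap out when a source or destination changes.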

The Role of Structured Training

While self-learning has its merits, structured training programs offer a unique edge, especially for professionals with limited time to explore on their own. My decision to undergo data analytics training in Hyderabad through ACTE Institute proved transformative.

Here’s what made this experience invaluable:

Expert Guidance: Industry professionals led the sessions, sharing insights that went beyond textbook knowledge.

Collaborative Environment: Engaging with peers from diverse backgrounds helped me understand different perspectives and approaches to problem-solving.

Hands-On Projects: Real-world scenarios provided a platform to apply theoretical concepts, bridging the gap between learning and implementation.

Feedback and Mentorship: Regular feedback from trainers ensured I stayed on track, while mentorship sessions helped me align my learning with career goals.

Key Learnings and Insights

The transition from a solution architect to a professional proficient in data analytics wasn’t without its challenges. However, every hurdle taught me something valuable:

Start Small, Think Big: It’s tempting to dive into complex machine learning models immediately. However, mastering the basics—data cleaning, exploration, and visualization—lays a strong foundation for advanced techniques.

Iterate and Experiment: Data analytics is an iterative process. The more you experiment, the better you understand the data and the tools you’re using.

Stay Curious: The field of data analytics is dynamic. Keeping up with the latest tools, techniques, and best practices ensures you remain relevant and effective.

Collaborate: Engaging with a community—be it through forums, training sessions, or professional networks—accelerates learning and opens doors to new opportunities.

Real-World Impact of Python for Data Analytics

Equipping myself with Python for data analytics has had a tangible impact on my work:

Enhanced Problem-Solving: Data-driven insights have enabled me to identify bottlenecks, predict outcomes, and design more effective solutions.

Improved Communication: Visualizations and dashboards created using Python help convey complex information in a clear and impactful way.

Career Growth: The ability to bridge technical expertise with analytical skills has positioned me as a more versatile and valuable professional.

Future Trends in Data Analytics

As I continue to explore Python for data analytics, I’m excited about the possibilities it holds for the future. Emerging trends like AI-driven analytics, natural language processing, and edge analytics are set to redefine how we interact with data. Python’s adaptability ensures it will remain a cornerstone of these advancements.

Final Thoughts

My journey into the world of data analytics has been transformative, both personally and professionally. From starting with a simple data analytics training online program to applying Python to solve complex business problems, the experience has been nothing short of rewarding.

If there’s one piece of advice I would offer to anyone contemplating this path, it’s this: invest in learning, embrace challenges, and don’t hesitate to experiment. Whether you’re an aspiring data analyst, a seasoned IT professional, or someone intrigued by the power of data, Python for data analytics is a skill worth mastering.

The data analytics training I received in Hyderabad served as a turning point, equipping me with the knowledge and confidence to navigate this exciting field. As organizations continue to prioritize data-driven strategies, the demand for professionals proficient in data analytics will only grow.

So, take that first step. Enroll in a training program, start exploring Python, and discover the endless possibilities that data analytics offers. Who knows? It might just redefine your career, as it did mine.

#ai#artificialintelligence#digitalmarketing#marketingstrategy#database#machinelearning#adtech#cybersecurity


Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Microsoft Entra ID (formerly Azure Active Directory) and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
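The flow above can be sketched in plain Python to show how the stages hand data to one another. This is only an in-memory illustration under stated assumptions: the queue stands in for Azure Event Hubs, `process_reading` for an Azure Function, and the dictionary for a Cosmos DB container. Every name and the temperature threshold are hypothetical, and no Azure SDK calls are made.

```python
from collections import deque

# Stand-in for Azure Event Hubs: a simple in-memory queue (assumption).
event_queue = deque()

# Stand-in for an Azure Cosmos DB container: documents keyed by id (assumption).
document_store = {}


def ingest(device_id, temperature_c):
    """Data ingestion: a device pushes a raw reading onto the queue."""
    event_queue.append({"device_id": device_id, "temperature_c": temperature_c})


def process_reading(event, alert_threshold_c=75.0):
    """Processing: enrich one event, as a serverless function might."""
    return {
        "id": f"{event['device_id']}-{len(document_store)}",
        "device_id": event["device_id"],
        "temperature_c": event["temperature_c"],
        "alert": event["temperature_c"] > alert_threshold_c,
    }


def run_pipeline():
    """Drain the queue, process each event, and store the result."""
    while event_queue:
        document = process_reading(event_queue.popleft())
        document_store[document["id"]] = document


def get_alerts():
    """Application logic: the kind of query an API on AKS might expose."""
    return [doc for doc in document_store.values() if doc["alert"]]


ingest("sensor-1", 68.5)
ingest("sensor-2", 81.2)
run_pipeline()
alerts = get_alerts()
```

In a real deployment each stand-in would be replaced by the corresponding managed service, and the hand-offs would happen over the network rather than through function calls.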

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.



Python for Data Analytics: A Solution Architect’s Perspective

As a solution architect, my career has been centered on designing robust, scalable systems tailored to meet diverse business needs. Over the years, I’ve worked on projects spanning various domains—cloud computing, infrastructure optimization, and application development. However, the growing emphasis on data-driven decision-making reshaped my perspective. Organizations now rely heavily on extracting actionable insights from their data, which made me realize that understanding and leveraging data analytics is no longer optional.

This journey into the world of data analytics began with an enriching online data analytics training program. The training not only introduced me to foundational concepts but also provided a structured pathway to mastering Python for data analytics, a skill I now consider indispensable for any tech professional.

Why Python for Data Analytics?

Python has emerged as a game-changer in the data analytics space, and for good reasons:

Simplicity and Versatility: Python’s straightforward syntax makes it accessible for beginners, while its versatility allows professionals to handle complex tasks seamlessly.

Extensive Libraries: Libraries like Pandas, NumPy, Matplotlib, and Seaborn enable efficient data manipulation, visualization, and analysis. For advanced analytics, Scikit-learn and TensorFlow are the go-to tools for machine learning and predictive modeling.

Integration Capabilities: Python integrates effortlessly with other technologies and platforms, making it a preferred choice for end-to-end data solutions.

Community Support: With its vast global community, Python ensures you’ll always find support, tutorials, and updates to keep pace with the ever-evolving analytics landscape.

My First Steps with Python for Data Analytics

My initial foray into Python for data analytics was both exciting and challenging. While I was familiar with programming concepts, understanding the nuances of data manipulation required a shift in mindset. The training program I enrolled in emphasized hands-on projects, which was instrumental in solidifying my understanding.

One of my first projects involved analyzing system performance metrics. Using Python, I could process large datasets to identify patterns and anomalies in server utilization. Here’s what made Python stand out:

Data Manipulation with Pandas: I used Pandas to clean and restructure the data. Its DataFrame object made it easy to filter, sort, and aggregate information.

Visualization with Matplotlib and Seaborn: These libraries allowed me to create clear, visually appealing graphs to present my findings to stakeholders.

Automation: By writing reusable scripts, I automated the process of monitoring and reporting, saving significant time and effort.
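To make the Pandas step concrete, here is a minimal sketch of the clean, filter, aggregate, and sort operations described above. The sample data, column names, and the 75% threshold are invented for illustration and are not taken from the original project.

```python
import pandas as pd

# Hypothetical server utilization samples (invented for illustration).
metrics = pd.DataFrame(
    {
        "server": ["web-1", "web-1", "web-2", "web-2", "db-1", "db-1"],
        "cpu_percent": [42.0, 91.5, 38.0, 40.5, 77.0, None],
    }
)

# Clean: drop rows where the CPU reading is missing.
clean = metrics.dropna(subset=["cpu_percent"])

# Filter: keep samples above an assumed 75% utilization threshold.
high_load = clean[clean["cpu_percent"] > 75.0]

# Aggregate and sort: mean CPU per server, busiest first.
mean_cpu = (
    clean.groupby("server")["cpu_percent"]
    .mean()
    .sort_values(ascending=False)
)
```

Wrapping these steps in a function and running it on a schedule is one simple way to get the kind of reusable reporting script mentioned in the Automation point.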

Diving Deeper: Advanced Applications of Python

As I delved deeper, I realized Python’s potential extended beyond basic analysis. It became a tool for solving complex business problems, such as:

Predictive Analytics: Using Scikit-learn, I developed models to forecast system downtimes based on historical data. This proactive approach helped in optimizing resources and minimizing disruptions.

Data Pipeline Development: Python’s integration capabilities allowed me to build ETL (Extract, Transform, Load) pipelines, ensuring seamless data flow between systems.

Real-Time Dashboards: By combining Flask (a lightweight web framework) with Python’s visualization libraries, I created dashboards that displayed real-time analytics, empowering teams to make informed decisions instantly.
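The ETL idea can be illustrated with nothing more than the standard library. The sketch below is an assumption-laden example, not the pipeline I built: the CSV content, the `cpu_metrics` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file or API.
RAW_CSV = """server,cpu_percent
web-1,42.0
web-2,not_available
db-1,77.5
"""


def extract(text):
    """Extract: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert readings to floats and skip unparseable rows."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append((row["server"], float(row["cpu_percent"])))
        except ValueError:
            continue  # Skip rows whose reading is not numeric.
    return cleaned


def load(records, connection):
    """Load: write the cleaned records into a SQLite table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS cpu_metrics (server TEXT, cpu_percent REAL)"
    )
    connection.executemany("INSERT INTO cpu_metrics VALUES (?, ?)", records)
    connection.commit()


connection = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), connection)
loaded_rows = connection.execute(
    "SELECT server, cpu_percent FROM cpu_metrics ORDER BY server"
).fetchall()
```

Keeping extract, transform, and load as separate functions makes each stage easy to test and to swap out when the source or destination changes.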

The Role of Structured Training

While self-learning has its merits, structured training programs offer a unique edge, especially for professionals with limited time to explore on their own. My decision to undergo data analytics training in Hyderabad through ACTE Institute proved transformative.

Here’s what made this experience invaluable:

Expert Guidance: Industry professionals led the sessions, sharing insights that went beyond textbook knowledge.

Collaborative Environment: Engaging with peers from diverse backgrounds helped me understand different perspectives and approaches to problem-solving.

Hands-On Projects: Real-world scenarios provided a platform to apply theoretical concepts, bridging the gap between learning and implementation.

Feedback and Mentorship: Regular feedback from trainers ensured I stayed on track, while mentorship sessions helped me align my learning with career goals.

Key Learnings and Insights

The transition from a solution architect to a professional proficient in data analytics wasn’t without its challenges. However, every hurdle taught me something valuable:

Start Small, Think Big: It’s tempting to dive into complex machine learning models immediately. However, mastering the basics—data cleaning, exploration, and visualization—lays a strong foundation for advanced techniques.

Iterate and Experiment: Data analytics is an iterative process. The more you experiment, the better you understand the data and the tools you’re using.

Stay Curious: The field of data analytics is dynamic. Keeping up with the latest tools, techniques, and best practices ensures you remain relevant and effective.

Collaborate: Engaging with a community—be it through forums, training sessions, or professional networks—accelerates learning and opens doors to new opportunities.

Real-World Impact of Python for Data Analytics

Equipping myself with Python for data analytics has had a tangible impact on my work:

Enhanced Problem-Solving: Data-driven insights have enabled me to identify bottlenecks, predict outcomes, and design more effective solutions.

Improved Communication: Visualizations and dashboards created using Python help convey complex information in a clear and impactful way.

Career Growth: The ability to bridge technical expertise with analytical skills has positioned me as a more versatile and valuable professional.

Future Trends in Data Analytics

As I continue to explore Python for data analytics, I’m excited about the possibilities it holds for the future. Emerging trends like AI-driven analytics, natural language processing, and edge analytics are set to redefine how we interact with data. Python’s adaptability ensures it will remain a cornerstone of these advancements.

Final Thoughts

My journey into the world of data analytics has been transformative, both personally and professionally. From starting with a simple online data analytics training program to applying Python to solve complex business problems, the experience has been nothing short of rewarding.

If there’s one piece of advice I would offer to anyone contemplating this path, it’s this: invest in learning, embrace challenges, and don’t hesitate to experiment. Whether you’re an aspiring data analyst, a seasoned IT professional, or someone intrigued by the power of data, Python for data analytics is a skill worth mastering.

The data analytics training I received in Hyderabad served as a turning point, equipping me with the knowledge and confidence to navigate this exciting field. As organizations continue to prioritize data-driven strategies, the demand for professionals proficient in data analytics will only grow.

So, take that first step. Enroll in a training program, start exploring Python, and discover the endless possibilities that data analytics offers. Who knows? It might just redefine your career, as it did mine.


When I began my journey as a solution architect, I knew that the foundation of my expertise needed to be solid. One of the key milestones in shaping my career was enrolling in a cybersecurity training online program. The comprehensive curriculum and hands-on approach provided by the ACTE Institute opened new doors for me and gave me the confidence to tackle complex challenges in the tech space. This training not only equipped me with the necessary skills but also sparked my interest in how emerging technologies like blockchain could redefine cybersecurity.

Blockchain technology has become one of the most transformative innovations of the 21st century, fundamentally changing the way industries operate—and cybersecurity is no exception. As organizations face increasing threats from sophisticated cyberattacks, blockchain offers unique advantages that traditional systems often lack. Here's how blockchain is revolutionizing cybersecurity:

The Rise of Blockchain in Cybersecurity

Cybersecurity has always been about safeguarding data, networks, and systems from unauthorized access or attacks. However, with the exponential increase in the volume of data and the growing complexity of cyber threats, traditional solutions often fall short. Blockchain’s decentralized and tamper-evident architecture is becoming a game-changer in this context.

Unlike centralized systems, where a single point of failure can compromise the entire infrastructure, blockchain distributes data across a network of nodes. This decentralized approach ensures that even if one node is compromised, the integrity of the data remains intact. For cybersecurity professionals, this means creating systems that are inherently more secure and resilient to attacks.

Key Applications of Blockchain in Cybersecurity

1. Data Integrity and Protection

One of blockchain’s most significant contributions to cybersecurity is its ability to guarantee data integrity. By using cryptographic hashes, blockchain ensures that once data is written to the ledger, it cannot be altered without detection. This makes it nearly impossible for hackers to tamper with sensitive information, whether it's financial records, personal data, or intellectual property.

For example, in supply chain management, blockchain can track the provenance of goods and ensure that the data remains unaltered throughout its journey. Similarly, in healthcare, patient records can be securely stored and accessed only by authorized personnel, reducing the risk of breaches.
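The tamper-detection property rests on a simple idea: each block stores the hash of the block before it, so changing any earlier record invalidates every hash that follows. The sketch below illustrates only that hash-chaining idea with Python's `hashlib`; the block layout, function names, and sample records are hypothetical, and a real blockchain adds consensus across many nodes so that an attacker cannot simply recompute the hashes on one copy.

```python
import hashlib
import json


def block_hash(index, data, previous_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link each record to its predecessor through the stored hash."""
    chain = []
    previous_hash = "0" * 64  # Placeholder hash for the first block.
    for index, data in enumerate(records):
        current_hash = block_hash(index, data, previous_hash)
        chain.append(
            {"index": index, "data": data,
             "previous_hash": previous_hash, "hash": current_hash}
        )
        previous_hash = current_hash
    return chain


def is_valid(chain):
    """Recompute every hash; any edited block breaks the chain."""
    previous_hash = "0" * 64
    for block in chain:
        expected = block_hash(block["index"], block["data"], previous_hash)
        if block["previous_hash"] != previous_hash or block["hash"] != expected:
            return False
        previous_hash = block["hash"]
    return True


ledger = build_chain(["shipment created", "left warehouse", "delivered"])
valid_before = is_valid(ledger)

ledger[1]["data"] = "left warehouse (edited)"  # Simulated tampering.
valid_after = is_valid(ledger)
```

Here `valid_before` is `True` and `valid_after` is `False`: the edited record no longer matches its stored hash, which is how the alteration is detected.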

2. Securing Internet of Things (IoT) Devices

The rapid adoption of IoT devices has introduced a new frontier of cybersecurity challenges. These devices often have limited security protocols, making them prime targets for cyberattacks. Blockchain technology can address this issue by enabling secure and decentralized communication between devices.

Through blockchain, IoT devices can authenticate each other and establish secure communication channels without relying on a central authority. This reduces the likelihood of Distributed Denial-of-Service (DDoS) attacks and other vulnerabilities associated with IoT networks.

3. Identity Management

Traditional identity management systems often rely on centralized databases, which are attractive targets for hackers. Blockchain introduces a decentralized model for identity verification, where users have control over their data. This concept, known as Self-Sovereign Identity (SSI), allows individuals to store their credentials on a blockchain and share them securely with third parties when required.

By leveraging blockchain, organizations can reduce the risk of identity theft and fraud while enhancing user privacy. This is particularly relevant for industries like finance, healthcare, and e-commerce, where identity verification is critical.

4. Preventing DDoS Attacks