Globose Technology Solutions Pvt Ltd (GTS) is an Al data collection Company that provides different Datasets like image datasets, video datasets, text datasets, speech datasets, etc. to train your machine learning model.

Don't wanna be here? Send us removal request.

Text

0 notes

Text

The Power of Video Data Collection Services for AI & Machine Learning

Introduction:

In the age of artificial intelligence (AI) and machine learning (ML), data serves as the primary catalyst for innovation. Among the diverse types of data, video data has become a vital component in training intelligent models for various applications, including autonomous vehicles, facial recognition, smart surveillance, and robotics. Services that specialize in video data collection offer businesses high-quality datasets that are crucial for improving the accuracy and efficiency of AI systems.

The Importance of Video Data Collection

Video Data Collection Services is essential for training AI models that necessitate an understanding of continuous motion, object detection, and behavior analysis. Unlike static images, videos provide extensive contextual information, enabling AI systems to identify patterns, monitor movements, and make instantaneous decisions.

Key Advantages of Video Data Collection for AI:

Enhanced Contextual Understanding - Video data captures sequences of actions, allowing AI models to grasp context that extends beyond a single frame.

Improved Object Recognition - The dynamic nature of moving objects in videos enables AI to recognize variations in angles, lighting conditions, and occlusions.

Training for Real-World Scenarios - AI models trained with video data are better equipped to adapt to real-life environments, thereby improving applications in automation and surveillance.

Higher Accuracy and Precision - With an abundance of data points and temporal consistency, AI models can achieve greater accuracy in predictions and decision-making.

Applications of Video Data Collection in AI

Autonomous Vehicles

Self-driving cars necessitate extensive amounts of video data to train their computer vision systems. AI-enabled vehicles depend on annotated video datasets to accurately identify pedestrians, traffic signs, and obstacles.

Retail and Customer Analytics

Retailers leverage AI-driven video analytics to analyze customer behavior, optimize store layouts, and enhance security through facial recognition technology.

Intelligent Surveillance and Security

Surveillance systems enhanced by artificial intelligence utilize video data to identify irregularities, deter theft, and improve public safety by recognizing suspicious behaviors in real time.

AI in Healthcare and Medicine

In the healthcare sector, AI models that analyze video data support diagnostics, surgical operations, and patient monitoring, leading to better health outcomes.

Video Data Collection Services

Global Technology Solutions (GTS) is dedicated to delivering high-quality, varied, and scalable video datasets specifically designed for artificial intelligence and machine learning training. Our areas of expertise encompass:

Bespoke Video Dataset Collection – Acquiring high-resolution videos across a range of industries.

Accurate Annotation and Labeling – Providing precise training data essential for AI model development.

Varied Data Formats – Accommodating multiple formats suitable for diverse AI applications.

Scalable Offerings – Serving both startups and large enterprises with customized video datasets.

Conclusion

The collection of video data is transforming artificial intelligence by facilitating the development of more intelligent, efficient, and precise machine learning models. Globose Technology Solutions organizations that invest in high-quality video datasets can significantly improve their AI-driven solutions across various sectors. Collaborating with a reputable video data collection service provider, such as GTS, guarantees access to superior data for your AI initiatives.

0 notes

Text

0 notes

Text

0 notes

Text

How Data Annotation Companies Improve AI Model Accuracy

Introduction:

In the rapidly advancing domain of artificial intelligence (AI), the accuracy of models is a pivotal element that influences the effectiveness of applications across various sectors, including autonomous driving, medical imaging, security surveillance, and e-commerce. A significant contributor to achieving high AI accuracy is the presence of well-annotated data. Data Annotation Companies are essential in this process, as they ensure that machine learning (ML) models are trained on precise and high-quality datasets.

The Significance of Data Annotation for AI

AI models, particularly those utilizing computer vision and natural language processing (NLP), necessitate extensive amounts of labeled data to operate efficiently. In the absence of accurately annotated images and videos, AI models face challenges in distinguishing objects, recognizing patterns, or making informed decisions. Data annotation companies create structured datasets by labeling images, videos, text, and audio, thereby enabling AI to learn from concrete examples.

How Data Annotation Companies Enhance AI Accuracy

Delivering High-Quality Annotations

The precision of an AI model is closely linked to the quality of annotations within the training dataset. Reputable data annotation companies utilize sophisticated annotation methods such as bounding boxes, semantic segmentation, key point annotation, and 3D cuboids to ensure accurate labeling. By minimizing errors and inconsistencies in the annotations, these companies facilitate the attainment of superior accuracy in AI models.

Utilizing Human Expertise and AI-Assisted Annotation

Numerous annotation firms integrate human intelligence with AI-assisted tools to boost both efficiency and accuracy. Human annotators are tasked with complex labeling assignments, such as differentiating between similar objects or grasping contextual nuances, while AI-driven tools expedite repetitive tasks, thereby enhancing overall productivity.

Managing Extensive and Varied Data

AI models require a wide range of datasets to effectively generalize across various contexts. Data annotation firms assemble datasets from multiple sources, ensuring a blend of ethnic backgrounds, lighting conditions, object differences, and scenarios. This variety is essential for mitigating AI biases and enhancing the robustness of models.

Maintaining Consistency in Annotations

Inconsistent labeling can adversely affect the performance of an AI model. Annotation companies employ rigorous quality control measures, inter-annotator agreements, and review processes to ensure consistent labeling throughout datasets. This consistency is crucial to prevent AI models from being misled by conflicting labels during the training phase.

Scalability and Accelerated Turnaround

AI initiatives frequently necessitate large datasets that require prompt annotation. Data annotation firms utilize workforce management systems and automated tools to efficiently manage extensive projects, enabling AI developers to train models more rapidly without sacrificing quality.

Adherence to Data Security Regulations

In sectors such as healthcare, finance, and autonomous driving, data security is paramount. Reputable annotation companies adhere to GDPR, HIPAA, and other security regulations to safeguard sensitive information, ensuring that AI models are trained with ethically sourced, high-quality datasets.

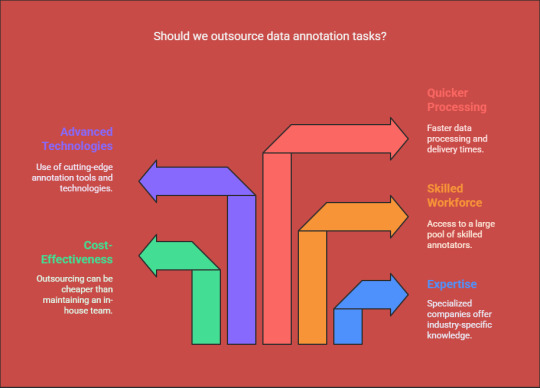

Reasons to opt for a Professional Data Annotation Company

Outsourcing annotation tasks to a specialized company offers several benefits over relying on in-house teams:

Expertise in specific industry annotations

Cost-effective alternatives to establishing an internal annotation team

Access to a skilled workforce and advanced annotation technologies

Quicker data processing and delivery

Conclusion

Data annotation companies are essential for enhancing the accuracy of Globose Technology Solutions AI models through the provision of high-quality, meticulously labeled training datasets. Their proficiency in managing extensive, varied, and intricate annotations greatly boosts model performance, thereby increasing the reliability and effectiveness of AI applications. Collaborating with a professional annotation service provider enables businesses to expedite their AI development while maintaining optimal precision and efficiency.

0 notes

Text

0 notes

Text

0 notes

Text

What Are OCR Datasets? A Comprehensive Guide for Machine Learning

Introduction:

In the swiftly advancing domain of Artificial Intelligence (AI) and Machine Learning (ML), Optical Character Recognition (OCR) has become an essential technology that empowers machines to interpret and extract text from images, scanned documents, and handwritten materials. The foundation of any effective OCR model is a high-quality OCR dataset, which trains the machine to comprehend and identify text proficiently. This detailed guide will delve into the nature of OCR datasets, their importance, and their role in enhancing machine learning models.

What Constitutes an OCR Dataset?

An OCR Dataset refers to a compilation of images that contain text, accompanied by corresponding labels that denote the textual content within those images. These datasets are indispensable for the training, validation, and testing of OCR models. The labeled text data enables machine learning models to identify and extract text from various image types, including scanned documents, handwritten notes, street signs, printed receipts, and more.

Typically, OCR datasets include:

Images: Featuring either printed or handwritten text.

Annotations/Labels: The corresponding text found in the images, provided in a digital format.

Metadata: Supplementary information such as font type, language, or text format.

The Significance of OCR Datasets in Machine Learning

High-quality OCR datasets are crucial for the development and efficacy of OCR models. Below are several key reasons highlighting the importance of OCR datasets:

Enhanced Text Recognition Precision:

Well-annotated OCR datasets enable models to achieve greater accuracy in recognizing text from images.

Improved Machine Learning Models:

Training OCR models on extensive datasets enhances their capability to read various text styles, handwriting, and document formats.

Facilitation of Multilingual Text Recognition:

OCR datasets can be specifically curated for multiple languages, assisting models in understanding and processing text from a wide array of linguistic backgrounds.

Facilitate Document Digitization:

OCR datasets play a crucial role in the digitization of historical records, invoices, legal documents, and various other materials.

Enhance AI Model Generalization:

Familiarity with a diverse array of text formats, including handwritten, typed, and printed texts, enables OCR models to enhance their text recognition abilities.

Categories of OCR Datasets

OCR datasets are categorized based on their application, source of text, and specific use cases. Some of the most prevalent types of OCR datasets include:

Handwritten Text Datasets:

These datasets comprise images of handwritten text accompanied by relevant annotations.

Example: Handwritten notes, signatures, or address labels.

Printed Text Datasets:

These datasets include printed text extracted from newspapers, documents, books, or signage.

Example: Scanned pages from books, newspapers, and advertisements.

Scene Text Datasets:

These datasets are derived from natural environments, capturing text from street signs, product packaging, license plates, and more.

Example: Road signs, advertisements, and product tags.

Document OCR Datasets:

These datasets consist of structured information from documents such as invoices, receipts, forms, and identification cards.

Example: Scans of passports, medical records, or billing invoices.

Multilingual OCR Datasets:

These datasets feature text data in multiple languages, aiding OCR models in processing text on a global scale.

Example: Multilingual documents or forms.

Advantages of Utilizing High-Quality OCR Datasets

Employing a high-quality OCR dataset can greatly enhance the efficacy of an OCR model. Key advantages include:

Increased Accuracy:

High-quality OCR datasets reduce errors in text extraction.

Minimized Bias:

A varied dataset helps mitigate bias, ensuring the model performs effectively across different text types and languages.

Enhanced Generalization:

Exposure to various handwriting styles and printed text formats fosters improved model generalization.

Greater Applicability in Real-World Contexts:

Well-organized OCR datasets enable AI models to be effectively utilized in practical applications such as document scanning, banking, healthcare, and legal sectors.

Constructing a high-quality OCR dataset necessitates a methodical strategy. The following are the essential steps involved in creating an OCR dataset:

Data Collection:

Acquire a variety of text images from multiple sources, including books, documents, handwritten notes, and street scenes.

Data Annotation:

Either manually or automatically label the text within the images to produce accurate ground truth labels.

Data Preprocessing:

Enhance the images by cleaning them, adjusting their resolutions, and eliminating any noise to ensure optimal quality.

Dataset Division:

Divide the dataset into training, validation, and testing subsets.

Quality Assurance:

Confirm the precision of the annotations to uphold the quality of the dataset.

Conclusion

OCR datasets are crucial for the advancement of precise and effective machine learning models aimed at text recognition. Whether your focus is on digitizing documents, streamlining data entry processes, or enhancing text recognition capabilities in images, utilizing a superior OCR dataset can greatly improve the performance of your model.

For those seeking high-quality OCR datasets for their AI or machine learning initiatives, we invite you to explore our case study on improving Globose Technology Solutions AI reliability through our OCR dataset: Enhance AI Reliability with Our OCR Dataset for Precise Data.

Investing in top-tier OCR datasets is fundamental to achieving exceptional accuracy in text recognition models and facilitating smooth integration into practical applications.

0 notes

Text

0 notes

Text

0 notes

Text

Improving TTS Models Using Rich Text to Speech Datasets

Introduction:

Text To Speech Dataset models have fundamentally transformed the interaction between machines and humans. From virtual assistants to audiobook narrators, TTS technology is essential in enhancing user experiences across a multitude of sectors. Nevertheless, the effectiveness and precision of these models are significantly influenced by the quality of the training data utilized. One of the most impactful methods to enhance TTS models is through the use of comprehensive Text-to-Speech (TTS) datasets.

This article will examine how the collection of high-quality text data contributes to the advancement of TTS models and the reasons why investing in rich datasets can markedly improve model performance.

The Significance of High-Quality Text Data for TTS Models

A Text-to-Speech model fundamentally transforms written text into natural-sounding human speech. To produce high-quality, human-like audio output, the TTS model necessitates a diverse, clean, and contextually rich text dataset. In the absence of comprehensive and high-quality text data, the model may generate speech output that is robotic, monotonous, or fraught with errors.

Key elements of high-quality text datasets for TTS models include:

Linguistic Diversity: The text data should encompass a broad spectrum of linguistic contexts, including various accents, dialects, and speaking styles. This diversity enables TTS models to perform accurately across different demographics and regions.

Emotional Tone: Rich text datasets encapsulate a variety of emotions, tones, and expressions, allowing the TTS model to emulate human-like speech.

Contextual Accuracy: Ensuring that the text data is contextually precise aids the TTS model in understanding and generating natural speech patterns.

Balanced Representation: The dataset should reflect various age groups, genders, cultural backgrounds, and speaking styles to create a truly adaptable TTS model.

How Rich Text Data Enhances TTS Model Performance

Improved Pronunciation and Clarity A significant challenge faced by TTS models is achieving accurate pronunciation. By utilizing a wide range of precise text data during training, the model becomes adept at recognizing the correct pronunciation of various words, phrases, and specialized terminology across different contexts. This leads to a marked improvement in both clarity and pronunciation.

Better Handling of Multilingual Content In an increasingly interconnected world, the demand for multilingual TTS models is on the rise. High-quality text datasets that encompass multiple languages allow TTS systems to transition seamlessly between languages. This capability is particularly advantageous for applications such as language translation, virtual assistants, and international customer support.

Enhanced Emotional Intelligence Emotion is a crucial aspect of human interaction. TTS models that are trained on text datasets reflecting a variety of tones and emotional nuances can generate audio output that is more engaging and natural. This is especially relevant in sectors such as gaming, content creation, and virtual customer service.

Reduced Bias and Enhanced Inclusivity A well-organized and diverse text dataset is essential to prevent TTS models from exhibiting biased speech patterns. Biases arising from data collection can lead to skewed model behavior, adversely affecting user experience. By gathering a wide range of text data, TTS models can provide a more inclusive and impartial communication experience.

Challenges in Text Data Collection for TTS Models

Despite the essential role of text data in training TTS models, the collection of high-quality data presents several challenges:

Data Diversity: Achieving a text dataset that reflects a variety of sources, languages, and demographics is both time-consuming and complex.

Data Quality: Inadequately curated or unrefined data can detrimentally affect model performance. Ensuring high data quality necessitates thorough processing and filtering.

Data Privacy and Compliance: The collection of text data must adhere to applicable data privacy laws and regulations to prevent any legal consequences. It is essential to implement appropriate data anonymization techniques and security protocols.

How Text Data Collection Services Can Assist

To address the difficulties associated with gathering high-quality text data, numerous organizations collaborate with specialized text data collection service providers. These services offer:

Access to Extensive and Varied Datasets: Professional text data collection services can supply substantial amounts of text data from a wide range of linguistic and demographic sources.

Data Cleaning and Annotation: Service providers guarantee that the text data is meticulously cleaned, contextually precise, and thoroughly annotated for seamless processing.

Tailored Data Collection: Based on the specific requirements of a project, organizations can obtain customized text datasets designed to meet their unique model training needs.

By utilizing these services, organizations can markedly enhance the performance and precision of their TTS models.

Conclusion

High-quality text datasets serve as the foundation for effective Text-to-Speech (TTS) models. Investing in comprehensive and diverse text data enables TTS models to produce human-like speech output, minimizing pronunciation errors, improving emotional expressiveness, and promoting inclusivity. Globose Technology Solutions Organizations aiming to boost their TTS model performance should focus on acquiring high-quality text data from trustworthy sources.

0 notes

Text

0 notes

Text

How to Choose the Right OCR Dataset for Your Project

Introduction:

In the realm of Artificial Intelligence and Machine Learning, Optical Character Recognition (OCR) technology is pivotal for the digitization and extraction of textual data from images, scanned documents, and various visual formats. Choosing an appropriate OCR dataset is vital to guarantee precise, efficient, and dependable text recognition for your project. Below are guidelines for selecting the most suitable OCR dataset to meet your specific requirements.

Establish Your Project Specifications

Prior to selecting an OCR Dataset, it is imperative to clearly outline the scope and goals of your project. Consider the following aspects:

What types of documents or images will be processed?

Which languages and scripts must be recognized?

What degree of accuracy and precision is necessary?

Is there a requirement for support of handwritten, printed, or mixed text formats?

What particular industries or applications (such as finance, healthcare, or logistics) does your OCR system aim to serve?

A comprehensive understanding of these specifications will assist in refining your search for the optimal dataset.

Verify Dataset Diversity

A high-quality OCR dataset should encompass a variety of samples that represent real-world discrepancies. Seek datasets that feature:

A range of fonts, sizes, and styles

Diverse document layouts and formats

Various image qualities (including noisy, blurred, and scanned documents)

Combinations of handwritten and printed text

Multi-language and multilingual datasets

Data diversity is crucial for ensuring that your OCR model generalizes effectively and maintains accuracy across various applications.

Assess Labeling Accuracy and Quality

A well-annotated dataset is critical for training a successful OCR model. Confirm that the dataset you select includes:

Accurately labeled text with bounding boxes

High fidelity in transcription and annotation

Well-organized metadata for seamless integration into your machine-learning workflow

Inadequately labeled datasets can result in inaccuracies and inefficiencies in text recognition.

Assess the Size and Scalability of the Dataset

The dimensions of the dataset are pivotal in the training of models. Although larger datasets typically produce superior outcomes, they also demand greater computational resources. Consider the following:

Whether the dataset's size is compatible with your available computational resources

If it is feasible to generate additional labeled data if necessary

The potential for future expansion of the dataset to incorporate new data variations

Striking a balance between dataset size and quality is essential for achieving optimal performance while minimizing unnecessary resource consumption.

Analyze Dataset Licensing and Costs

OCR datasets are subject to various licensing agreements—some are open-source, while others necessitate commercial licenses. Take into account:

Whether the dataset is available at no cost or requires a financial investment

Licensing limitations that could impact the deployment of your project

The cost-effectiveness of acquiring a high-quality dataset compared to developing a custom-labeled dataset

Adhering to licensing agreements is vital to prevent legal issues in the future.

Conduct Tests with Sample Data

Prior to fully committing to an OCR dataset, it is prudent to evaluate it using a small sample of your project’s data. This evaluation assists in determining:

The dataset’s applicability to your specific requirements

The effectiveness of OCR models trained with the dataset

Any potential deficiencies that may necessitate further data augmentation or preprocessing

Conducting pilot tests aids in refining dataset selections before large-scale implementation.

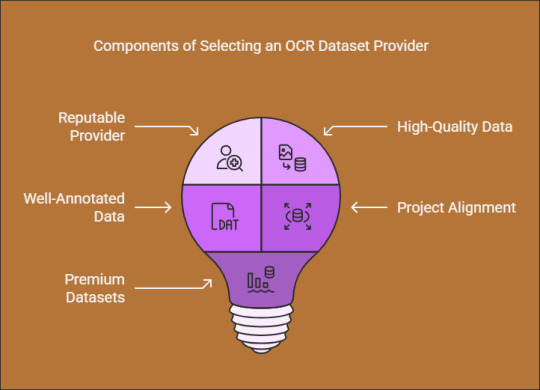

Select a Trustworthy OCR Dataset Provider

Choosing a reputable dataset provider guarantees access to high-quality, well-annotated data that aligns with your project objectives. One such provider. which offers premium OCR datasets tailored for accurate data extraction and AI model training. Explore their OCR dataset solutions for more information.

Conclusion

Selecting an appropriate OCR dataset is essential for developing a precise and effective text recognition model. By assessing the requirements of your project, ensuring a diverse dataset, verifying the accuracy of labels, and considering licensing agreements, you can identify the most fitting dataset for Globose Technology Solutions AI application. Prioritizing high-quality datasets from trusted sources will significantly improve the reliability and performance of your OCR system.

0 notes

Text

0 notes

Text

0 notes