#(I can also give the raw data and/or python code


I saw that this was completely luck-based and apparently had nothing better to do so I whipped up some Python code to play this game one hundred thousand times. (Well, maybe "whipped up" is an exaggeration; the whole thing took upwards of 40 minutes for me to program. It'd been a while since I'd done Python.) Here are some of my observations:

Quickest and easiest question to answer: How hard is this game? Pretty hard. 7% chance of victory, 93% chance of defeat. Someone's probably gonna get smashed with a rock.

Most games last between seven and twelve rounds. 3% of games lasted the minimum length of five rounds, meaning at least one Guy just never kept their cool and bashed the other Guy's head in at the earliest opportunity. Exactly 5 games of the hundred-thousand lasted the maximum length of nineteen rounds. (Predictably, all five of these games were losses.)

In the likely event of a loss, it was common that one of the Guys was actually quite chill. 25% of losses occurred with one player at a full 6 Cool. Still, though, 5.6% of losses (5.2% of games overall) ended with Full Rage, by which I mean that both Guys bashed each other's skulls in at the exact same time.

A similar trend can be seen with wins; generally both people are chill. There's a bit of a feedback loop, as you might expect, so victory with low Cool values was uncommon. Still, a full 10 games ended with victory for two Guys with 2/6 Cool, just about to snap and kill each other.

In line with the previous paragraph, winning games typically ended early. Over 26% of winning games just involved no betrayal at all, and another 27% had exactly one round during which a Guy did not cooperate.
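
For anyone curious how a tally like this comes together, here is a minimal sketch of a simulation harness. It is not the code behind the numbers above: the rule inside `play_once` is a made-up placeholder (the real game's rules are not reproduced in this post), and the names `play_once` and `simulate` are purely illustrative.

```python
import random
from collections import Counter


def play_once(rng, die_sides=12, rounds=5):
    """Placeholder game, NOT the real rules.

    The game is lost the first time a die roll comes up 1, and won if
    `rounds` rounds pass without that happening.
    Returns (won, rounds_played).
    """
    for round_number in range(1, rounds + 1):
        if rng.randint(1, die_sides) == 1:
            return False, round_number
    return True, rounds


def simulate(n_games, seed=0, **game_kwargs):
    """Play `n_games` games and return (win_rate, Counter of game lengths)."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    wins = 0
    lengths = Counter()
    for _ in range(n_games):
        won, rounds_played = play_once(rng, **game_kwargs)
        wins += won
        lengths[rounds_played] += 1
    return wins / n_games, lengths


if __name__ == "__main__":
    win_rate, lengths = simulate(100_000)
    print(f"win rate: {win_rate:.1%}")
    for rounds_played, count in sorted(lengths.items()):
        print(f"{rounds_played:>2} rounds: {count}")
```

Swapping the real rules into `play_once` and passing a different `die_sides` (8 or 6, say) is all it would take to compare die sizes the way the tags on this post describe.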

My apologies to @moon-of-curses if this was not her intended method of interacting with the game. If it's any consolation, I have had a lot of fun.

200 Word RPGs 2024

Each November, some people try to write a novel. Others would prefer to do as little writing as possible. For those who wish to challenge their ability to not write, we offer this alternative: producing a complete, playable roleplaying game in two hundred words or fewer.

This is the submission thread for the 2024 event, running from November 1st, 2024 through November 30th, 2024. Submission guidelines can be found in this blog's pinned post, here.

#numbers#math#human interaction#Thanksgiving break means I can burn time on silly things#(I can also give the raw data and/or python code#to anyone who is interested)#I also ran the code with a d8 instead of a d12#winning chance went up to 25%#with a d6 it's 45% win chance#which seems reasonable to me#for a silly game of chance#I guess it depends on the vibes you're going for#the intended vibes here seem to be#kill a Guy with a Rock#which I can respect

14K notes


Can I Use Python for Web Development?

Yes, you can use Python for web development! Python is a versatile and powerful programming language that is widely used for various types of software development, including web development. Here’s an overview of how Python can be used to create dynamic, robust, and scalable web applications.

Why Use Python for Web Development?

Readability and Simplicity: Python’s syntax is clear and easy to read, making it an excellent choice for beginners and experienced developers alike. The simplicity of Python allows developers to focus on solving problems rather than getting bogged down by complex syntax.

Rich Ecosystem: Python boasts a vast collection of libraries and frameworks that simplify the development process. These tools provide pre-built functionalities that can save time and effort.

Versatility: Python is not only used for web development but also for data analysis, artificial intelligence, machine learning, automation, and more. This versatility allows developers to use Python across different domains within the same project.

Popular Python Frameworks for Web Development

Several frameworks can be used for web development in Python, each catering to different needs:

Django:

Overview: Django is a high-level web framework that encourages rapid development and clean, pragmatic design.

Features: It includes an ORM (Object-Relational Mapping), an admin panel, authentication, URL routing, and more.

Use Cases: Ideal for building large-scale applications, such as social media platforms, e-commerce sites, and content management systems.

Flask:

Overview: Flask is a micro-framework that gives developers the freedom to choose the tools and libraries they want to use.

Features: It is lightweight and modular, with a focus on simplicity and minimalism.

Use Cases: Suitable for small to medium-sized applications, RESTful APIs, and prototyping.

Pyramid:

Overview: Pyramid is designed for flexibility and can be used for both simple and complex applications.

Features: It offers robust security, scalability, and the ability to choose the components you need.

Use Cases: Perfect for applications that may start small but are expected to grow over time.

How Python Web Development Works

Server-Side Scripting: Python is used to write server-side scripts that interact with databases, manage user authentication, handle form submissions, and perform other backend tasks.

Web Frameworks: Frameworks like Django, Flask, and Pyramid provide the structure and tools needed to build web applications efficiently. They handle many of the complexities involved in web development, such as routing requests, managing sessions, and securing applications.

Template Engines: Python frameworks often include template engines like Jinja2 (used in Flask) or Django’s templating system. These engines allow developers to create dynamic HTML content by embedding placeholders, expressions, and control tags (loops, conditionals) within HTML templates, which the framework fills in with data from Python code.

Database Integration: Python’s ORM tools, such as Django ORM or SQLAlchemy, simplify database interactions by allowing developers to interact with databases using Python code instead of raw SQL.

Testing and Deployment: Python offers robust testing frameworks like unittest and pytest, which help ensure code quality and reliability. Deployment tools and services, such as Docker, Heroku, and AWS, make it easier to deploy and scale Python web applications.
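
To make the pieces above concrete, here is a minimal sketch that uses only the standard library. It is an illustration under stated assumptions, not how Django, Flask, or Pyramid are implemented: `string.Template` stands in for a real template engine, a raw `sqlite3` connection stands in for an ORM, and the `ROUTES` table, the `hello` handler, and the `visits` table are hypothetical names invented for this example.

```python
import html
import sqlite3
from string import Template
from urllib.parse import parse_qs

# Template: string.Template stands in for Jinja2 or Django templates.
PAGE = Template("<h1>Hello, $name!</h1><p>Visits recorded: $visits</p>")

# Database: an in-memory SQLite table stands in for an ORM-managed model.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE visits (name TEXT)")


def hello(environ):
    """Server-side handler: read the query string, store a row, render HTML."""
    query = parse_qs(environ.get("QUERY_STRING", ""))
    name = query.get("name", ["world"])[0]
    db.execute("INSERT INTO visits (name) VALUES (?)", (name,))
    (visits,) = db.execute("SELECT COUNT(*) FROM visits").fetchone()
    # Escape user input before placing it in HTML.
    return "200 OK", PAGE.substitute(name=html.escape(name), visits=visits)


# Routing: map URL paths to handler functions.
ROUTES = {"/hello": hello}


def app(environ, start_response):
    """A WSGI application: the interface Python web servers call into."""
    handler = ROUTES.get(environ.get("PATH_INFO", "/"))
    if handler is None:
        status, body = "404 Not Found", "<h1>Not found</h1>"
    else:
        status, body = handler(environ)
    payload = body.encode("utf-8")
    start_response(status, [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(payload))),
    ])
    return [payload]
```

To try it locally, `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` serves the app with the standard library's development server. A real framework adds sessions, authentication, and security hardening on top of this same request-in, response-out shape.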

Examples of Python in Web Development

Instagram: Built using Django, Instagram is a prime example of Python’s capability to handle large-scale applications.

Pinterest: Utilizes Python for its backend services, allowing for rapid development and iteration.

Spotify: Uses Python for data analysis and backend services, demonstrating Python’s versatility beyond traditional web development.

Conclusion

Python is a powerful and flexible language that is well-suited for web development. Its ease of use, rich ecosystem of libraries and frameworks, and strong community support make it an excellent choice for developers looking to build robust web applications. Whether you are building a simple website or a complex web application, Python provides the tools and flexibility needed to create high-quality, scalable solutions.

0 notes


Version 422


🎉🎉 It was hydrus's birthday this week! 🎉🎉

I had a great week. I mostly fixed bugs and improved quality of life.

tags

It looks like when I optimised tag autocomplete around v419, I accidentally broke the advanced 'character:*'-style lookups (which you can enable under tags->manage tag display and search). I regret this is not the first time these clever queries have been broken by accident. I have fixed them this week and added several sets of unit tests to ensure I do not repeat this mistake.

These expansive searches should also work faster, cancel faster, and there are a few new neat cache optimisations to check when an expensive search's results for 'char' or 'character:' can quickly provide results for a later 'character:samus'. Overall, these queries should be a bit better all around. Let me know if you have any more trouble.

The single-tag right-click menu now always shows sibling and parent data, and for all services. Each service stacks siblings/parents into tall submenus, but the tall menu feels better to me than nested, so we'll see how that works out IRL. You can click any sibling or parent to copy to clipboard, so I have retired the 'copy' menu's older and simpler 'siblings' submenu.

misc

Some websites have a 'redirect' optimisation where if a gallery page has only one file, it moves you straight to the post page for that file. This has been a problem for hydrus for some time, and particularly affected users who were doing md5: queries on certain sites, but I believe the downloader engine can now handle it correctly, forwarding the redirect URL to the file queue. This is working on some slightly shaky tech that I want to improve more in future, but let me know how you get on with it.
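
As a rough illustration of the decision described above, here is a small sketch. It is not hydrus's actual code: `classify_url` is a hypothetical stand-in for real URL class matching, and the action names returned by `route_redirect` are invented for this example.

```python
from urllib.parse import urlparse


def classify_url(url):
    """Hypothetical classifier: paths containing '/post/' count as post
    pages, everything else as gallery pages. Real sites need real rules."""
    return "post" if "/post/" in urlparse(url).path else "gallery"


def route_redirect(requested_url, final_url):
    """Decide what to do once a fetch of `requested_url` ended at `final_url`."""
    if final_url == requested_url:
        return ("parse", requested_url)  # no redirect happened
    requested_kind = classify_url(requested_url)
    final_kind = classify_url(final_url)
    if requested_kind == final_kind:
        return ("parse", final_url)  # same type: swap in the new URL
    if requested_kind == "gallery" and final_kind == "post":
        return ("queue_file", final_url)  # hand the post URL to the file queue
    return ("error", final_url)  # anything else is unexpected in this sketch


if __name__ == "__main__":
    print(route_redirect(
        "https://example.com/gallery?tags=md5:abc",
        "https://example.com/post/123",
    ))
```

The one-file gallery case is the third branch: a gallery URL that lands on a post URL is treated as a new file import rather than a failed gallery parse.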

The UPnPc executables (miniupnp, here https://miniupnp.tuxfamily.org/) are no longer bundled in the 'bin' directory. These files were a common cause of anti-virus false positives every few months, and are only used by a few advanced users to set up servers and hit network->data->manage upnp, so I have decided that new users will have to install it themselves going forward. Trying to perform a UPnP operation when the exe cannot be found now gives a popup message talking about the situation and pointing to the new readme in the bin directory.

After working with a user, it seems that some clients may not have certain indices that speed up sibling and parent lookups. I am not totally sure if this was due to hard drive damage or broken update logic, but the database now looks for and heals this problem on every boot.

parsing (advanced)

String converters can now encode or decode by 'unicode escape characters' ('\u0394'-to-'Δ') and 'html entities' ('&amp;'-to-'&'). Also, when you tell a json formula to fetch 'json' rather than 'string', it no longer escapes unicode.
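
For a sense of what these conversions do, here is a short sketch using only Python's standard library. These are the stock `html`, `json`, and codec calls, not hydrus's own string converter classes, and the helper names are made up for the example.

```python
import html
import json


def decode_unicode_escapes(text):
    """Turn literal escape sequences such as '\\u0394' into the characters
    they name. Characters that do not fit in Latin-1 are backslash-escaped
    first so they survive the round trip through bytes."""
    return text.encode("latin-1", "backslashreplace").decode("unicode_escape")


def encode_unicode_escapes(text):
    """Turn non-ASCII characters into escape sequences such as '\\u0394'."""
    return text.encode("unicode_escape").decode("ascii")


if __name__ == "__main__":
    print(decode_unicode_escapes("\\u0394"))  # the Greek capital delta
    print(encode_unicode_escapes("Δ"))
    print(html.unescape("&amp;"))  # entity to character
    print(html.escape("&"))        # character to entity
    # json.dumps escapes non-ASCII by default; ensure_ascii=False keeps it.
    print(json.dumps({"name": "Δ"}))
    print(json.dumps({"name": "Δ"}, ensure_ascii=False))
```

Note that `html.escape` also converts `<`, `>`, and quote characters, so it does slightly more than the single `&` example suggests.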

The hydrus downloader system no longer needs the borked 'bytes' decode for a 'file hash' content parser! These content parsers now have a 'hex'/'base64' dropdown in their UI, and you just deliver that string. This ugly situation was a legacy artifact of python2, now finally cleared up. Existing string converters now treat 'hex' or 'base64' decode steps as a no-op, and existing 'file hash' content parsers should update correctly to 'hex' or 'base64' based on what their string converters were doing previously. The help is updated to reflect this. hex/base64 encodes are still in as they are used for file lookup script hash initialisation, but they will likely get similar treatment in future.
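
Here is a hedged sketch of what decoding a parsed hash string to raw bytes might look like, including the fallback described in the full list below (try the selected encoding, then the other one). The function name `decode_file_hash` and its signature are assumptions for illustration, not the hydrus API.

```python
import base64
import binascii
import hashlib


def decode_file_hash(text, encoding="hex"):
    """Decode a hash string to raw bytes, trying `encoding` first and the
    other encoding second. Raises ValueError if neither works."""
    order = ["hex", "base64"] if encoding == "hex" else ["base64", "hex"]
    for candidate in order:
        try:
            if candidate == "hex":
                return bytes.fromhex(text)
            return base64.b64decode(text, validate=True)
        except (ValueError, binascii.Error):
            continue  # not valid in this encoding, try the next one
    raise ValueError(f"could not decode {text!r} as hex or base64")


if __name__ == "__main__":
    digest = hashlib.md5(b"example file content").digest()
    as_hex = digest.hex()
    as_b64 = base64.b64encode(digest).decode("ascii")
    print(decode_file_hash(as_hex) == digest)
    print(decode_file_hash(as_b64) == digest)  # hex fails, base64 succeeds
```

One caveat with this kind of fallback: a hex string whose length is a multiple of four is also valid base64, so trying base64 first on hex input would silently produce the wrong bytes. Trying hex first avoids that for typical hash lengths.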

birthday

🎉🎉🎉🎉🎉

On December 14th, 2011, the first non-experimental beta of hydrus was released. This week marks nine years. It has been a lot of work and a lot of fun.

Looking back on 2020, we converted a regularly buggy and crashy new Qt build to something much faster and nicer than we ever had with wx. Along with that came mpv and smooth video and finally audio playing out of the client. The PTR grew to a billion mappings(!), and with that came many rounds of database optimisation, speeding up many complicated tag and file searches. You can now save and load those searches, and most recently, search predicates are now editable in-place. Siblings and parents were updated to completely undoable virtual systems, resulting in much faster boot time and thumbnail load and greatly improved tag relationship logic. Subscriptions were broken into smaller objects, meaning they load and edit much faster, and several CPU-heavy routines no longer interrupt or judder browsing. And the Client API expanded to allow browsing applications and easier login solutions for difficult sites.

There are still a couple thousand things I would like to do, so I hope to keep going into 2021. I deeply appreciate the feedback, help, and support over the years. Thank you!

If you would like to further support my work and are in a position to do so, my simple no-reward Patreon is here: https://www.patreon.com/hydrus_dev

full list

advanced tags:

fixed the search code for various 'total' autocomplete searches like '*' and 'namespace:*', which were broken around v419's optimised regular tag lookups. these search types also have a round of their own search optimisations and improved cancel latency. I am sorry for the trouble here

expanded the database autocomplete fetch unit tests to handle these total lookups so I do not accidentally kill them due to typo/ignorance again

updated the autocomplete result cache object to consult a search's advanced search options (as under _tags->manage tag display and search_) to test whether a search cache for 'char' or 'character:' is able to serve results for a later 'character:samus' input

optimised file and tag search code for cases where someone might somehow sneak an unoptimised raw '*:subtag' or 'namespace:*' search text in

updated and expanded the autocomplete result cache unit tests to handle the new tested options and the various 'total' tests, so they aren't disabled by accident again

cancelling an autocomplete query with a gigantic number of results should now cancel much quicker when you have a lot of siblings

the single-tag right-click menu now shows siblings and parents info for every service, and will work on taglists in the 'all known tags' domain. clicking on any item will copy it to clipboard. this might result in megatall submenus, but we'll see. tall seems easier to use than nested per-service for now

the more primitive 'siblings' submenu on the taglist 'copy' right-click menu is now removed

right-click should no longer raise an error on esoteric taglists (such as tag filters and namespace colours). you might get some funky copy strings, which is sort of fun too

the copy string for the special namespace predicate ('namespace:*anything*') is now 'namespace:*', making it easier to copy/paste this across pages

.

misc:

the thumbnail right-click 'copy/open known urls by url class' commands now exclude those urls that match a more specific url class (e.g. /post/123456 vs /post/123456/image.jpg)

miniupnpc is no longer bundled in the official builds. this executable is only used by a few advanced users and was a regular cause of anti-virus false positives, so I have decided new users will have to install it manually going forward.

the client now looks for miniupnpc in more places, including the system path. when missing, its error popups have better explanation, pointing users to a new readme in the bin directory

UPnP errors now have more explanation for 'No IGD UPnP Device' errortext

the database's boot-repair function now ensures indices are created for: non-sha256 hashes, sibling and parent lookups, storage tag cache, and display tag cache. some users may be missing indices here for unknown update logic or hard drive damage reasons, and this should speed them right back up. the boot-repair function now broadcasts 'checking database for faults' to the splash, which you will see if it needs some time to work

the duplicates page once again correctly updates the potential pairs count in the 'filter' tab when potential search finishes or filtering finishes

added the --boot_debug launch switch, which for now prints additional splash screen texts to the log

the global pixmaps object is no longer initialised in client model boot, but now on first request

fixed type of --db_synchronous_override launch parameter, which was throwing type errors

updated the client file readwrite lock logic and brushed up its unit tests

improved the error when the client database is asked for the id of an invalid tag that collapses to zero characters

the qss stylesheet directory is now mapped to the static dir in a way that will follow static directory redirects

.

downloaders and parsing (advanced):

started on better network redirection tech. if a post or gallery URL is 3XX redirected, hydrus now recognises this, and if the redirected url is the same type and parseable, the new url and parser are swapped in. if a gallery url is redirected to a non-gallery url, it will create a new file import object for that URL and say so in its gallery log note. this tentatively solves the 'booru redirects one-file gallery pages to post url' problem, but the whole thing is held together by prayer. I now have a plan to rejigger my pipelines to deal with this situation better, ultimately I will likely expose and log all redirects so we can always see better what is going on behind the scenes

added 'unicode escape characters' and 'html entities' string converter encode/decode types. the former does '\u0394'-to-'Δ', and the latter does '&amp;'-to-'&'

improved my string converter unit tests and added the above to them

in the parsing system, decoding from 'hex' or 'base64' is no longer needed for a 'file hash' content type. these string conversions are now no-ops and can be deleted. they converted to a non-string type, an artifact of the old way python 2 used to handle unicode, and were a sore thumb for a long time in the python 3 parsing system. 'file hash' content types now have a 'hex'/'base64' dropdown, and do decoding to raw bytes at a layer above string parsing. on update, existing file hash content parsers will default to hex and attempt to figure out if they were a base64 (however if the hex fails, base64 will be attempted as well anyway, so it is not critically important here if this update detection is imperfect). the 'hex' and 'base64' _encode_ types remain as they are still used in file lookup script hash initialisation, but they will likely be replaced similarly in future. hex or base64 conversion will return in a purely string-based form as technically needed in future

updated the make-a-downloader help and some screenshots regarding the new hash decoding

when the json parsing formula is told to get the 'json' of a parsed node, this no longer encodes unicode with escape characters (\u0394 etc...)

duplicating or importing nested gallery url generators now refreshes all internal reference ids, which should reduce the likelihood of accidentally linking with related but differently named existing GUGs

importing GUGs or NGUGs through Lain easy import does the same, ensuring the new objects 'seem' fresh to a client and should not incorrectly link up with renamed versions of related NGUGs or GUGs

added unit tests for hex and base64 string converter encoding

next week

Last week of the year. I could not find time to do the network updates I wanted to this week, so that would be nice. Otherwise I will try and clean and fix little things before my week off over Christmas. The 'big thing to work on next' poll will go up next week with the 423 release posts.

1 note


THIS ISSUE HAS BEEN RESOLVED!

(Source: https://modthesims.info/showthread.php?t=687747 )

TL;DR: What happened?

Two creators unfortunately fell victim to having their passwords leaked. The people behind these types of TS4 malware issues tend to find leaked passwords and then share their Trojan file.

IF you downloaded any of these 4 items in the last 24 hours:

1. No Mosaic / Censor Mod for The Sims 4 - Toddler Compatibility Update!

2. AllCheats - Get your cheats back!

3. CAS FullEditMode Always On (Updated 6/26/18)

4. Full House Mod - Increase your Household Size! [Still Compatible as of 1/25/18]

Just know that they were only live for 1.5 hours, so the chances that you downloaded malware are quite low. However, just to be safe, it's good to delete them anyway if you downloaded them in the 24 hours before this reblog post.

So: Just a reminder to, well, everyone using the internet: Make sure to change your passwords periodically! (and, if possible, use an authentication app).

As far as I know, MTS is working on making it much harder to update posts when you've been inactive for a while! So in the future, the hackers would need access to your email provider to include malware in your mods. I believe this code is already live as we speak.

How to stay safe downloading anything CC related in the future:

Know that this seems to be a big issue in The Sims 4 community in particular! Other communities are certainly not immune to malware, but this group of hackers seems to be focused on The Sims 4 community as a whole.

What files are the issue?

ts4script files. Because it's raw python AND TS4 doesn't have great restrictions for script mods in place, these people can modify the python file to create a .dll file on running the game. That's how they get information if they're lucky.

.exe files or files that look like another file type but are an .exe file. (or some executable file like a bash script, etc). MTS does check these things before approving, but do be careful when downloading these things from tumblr or github. Make sure to check the comments there instead.

What files CANNOT ever get malware in them?

Simply said: .package files. The exception is maybe the .package files that are actually ts4script files, but that's really from the ancient TS4 days.

In other words, your CasParts, Lots, Cosmetics, Hair, Sims, Recolours, and Objects CANNOT have malware in them.

The only "kind of" malware we saw back in the days in Package files was the infamous TS3 Doll corruption bug. But that didn't collect your data, just corrupted your save/game 😉

What ways can I detect if something is malware at first sight?

99% of script modders, when updating their mods, WILL add WHY they updated their mod in the first place. If you do NOT see any update reasons in the description, it's probably malware.

Check the comments! If you're not sure, always check if someone left a comment (or in Tumblr's case, a Reblog).

Trust your gut feeling! Does something seem strange? A bit out of place from the usual? Give it a few days before you download the mod.

Package files SHOULD NEVER need an .exe file to "install your content" "for simplicity", because in 99% of cases it's malware meant to trick you. Unless there is an excellent reason for it (and I mean a REALLY good reason).

More or less a download site related thing: If a download site has a billion buttons saying "Download", please don't press them. They are definitely shady ads, and most likely malware too. For those pages, it would be best to leave the item alone, unless you really know what you're doing!

Conclusion

While these discord server announcements mean well, it frustrates me to see that they mention that EVERYTHING is compromised. Whereas in reality it's only TS4Scripts and .exe files that can do harm.

I know they mean well! And wanting to protect people! But at the same time, it also spreads a sense of misinformation that can harm creators, websites, you name it.

So, instead, I would love to advise them to educate their members on which files can be the problem, and how to detect them! The more we get this into the world, the better we will be able to protect one another from downloading bad things!

And of course, websites that share CC, should make an effort to prevent this in the future. I'm happy MTS is doing this at the moment.

Stay safe!

(Sourced from the Sims After Dark discord server)

DO NOT DOWNLOAD ANY MODS FROM MODTHESIMS! Numerous mods there (including those by TwistedMexi) are being compromised by hackers adding a malicious file with the mods

Please reblog!!

#Signal boost#please reblog#the sims 4#ts3#ts4#sims 4#mod the sims#sims 4 community#sims community#sims 2 community#ts2 community#ts4 community#the sims 2#ts2#sims 2#mts

4K notes


zero to pandas

There are a lot of Python courses out there that we can jump into and get started with. But at some point in the attempt to learn the language, the process becomes unbearably long and frustratingly slow. We all know the feeling of wanting to run before we have learned how to walk; we really wanna get started with some substantial project, but we do not know enough to even call the data into the terminal for viewing.

Back in August, freeCodeCamp in collaboration with Jovian.ai, organized a very interesting 6-week MOOC called Data Analysis with Python: Zero to Pandas and as a self-proclaimed Python groupie, I pledged my allegiance!

If there is any expectation that I managed to whizz through the course and obtain a certificate, nothing of that sort happened; I missed the deadline 'cause I was busy testing out every single piece of code I found and work had my brain on overdrive. I can't...I just...can't. Even with the extension, I was short of 2 Pythonic answers required to earn the certificate. But don't mistake my blunders for the quality of the content this course has to offer; it is worth every bit of its graduates' gratitude!

Zero to Pandas MOOC is a course that spans 6 weeks, with one lecture webinar per week that compacts the basics of the Python modules relevant to data analysis. Like the play on its name, this course assumes no prior knowledge of the Python language and aims to teach prospective students the basics of Python language structure AND the steps in analyzing real data. The course does not pretend that data analytics is easy, and it does not cut corners to simplify anything. It is a very 'honest' demonstration that effectively gives overly ambitious future data analysts a flick on the forehead about data analysis. Who are we kidding? Data analysis using a programming language requires sturdy knowledge of some nifty code to clean, splice and feature-engineer the raw data, plus real critical thinking to figure out 'Pythonic' ways to answer analytical questions. What does 'Pythonic' even mean? Please refer to this article by Robert Clark, How to be Pythonic and Why You Should Care. We can discuss it somewhere down the line, when I am experienced enough to understand it better. But for now, Packt Hub has the simpler answer: it is an adjective coined to describe code, or a way of structuring code, that takes good advantage of Python's idioms and displays natural fluency in the language.
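
To give a flavour of the difference, here is a tiny example of my own (it is not taken from the course or from either article, and the data is made up). Both functions add up the positive counts in a list of (day, count) pairs; the second leans on Python idioms such as tuple unpacking and a generator expression.

```python
readings = [("mon", 3), ("tue", 0), ("wed", 7)]


def total_by_index(rows):
    """Works, but reads like a translation from another language."""
    total = 0
    for i in range(len(rows)):
        if rows[i][1] > 0:
            total = total + rows[i][1]
    return total


def total_pythonic(rows):
    """Same result using unpacking, a generator expression, and sum()."""
    return sum(count for _day, count in rows if count > 0)


if __name__ == "__main__":
    print(total_by_index(readings), total_pythonic(readings))
```

Neither version is wrong; the second simply says what it means with less bookkeeping, which is roughly what people mean by 'Pythonic'.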

The bottom line is, we want to be able to fully utilize Python in its context and using its idioms to analyze data.

The course is conducted on the Jovian.ai platform by its founder, Aakash, and it takes advantage of the Jupyter-like notebook format via Binder, in addition to making the notebooks available on Kaggle and Google's Colab. Each webinar in this course runs close to 2 hours, and each week there are assignments on the lecture given. The assignments are due in a week, but given the very disproportionate ratio of students to instructors, there were some extensions on the submission dates that I was truly grateful for. A forum is available at Jovian for students to discuss their ideas and questions, and the teaching body also conducts office hours where students can actively ask questions.

The instructor's method of teaching is something I believe to be effective for technical learners. In each lecture, he teaches the code and modules required to execute certain tasks as part of the thorough procedure of the data analysis task itself. From importing the .csv formatted data into Python to establishing navigation to the data repository...from explaining what the hell loops are to touching base with creating functions. All in the controlled context of the two most important modules for the real objective of this course: Numpy and Pandas.

My gain from this course is immensely vast and that's why I truly think that freeCodeCamp and Jovian.ai really put the word 'tea' to 'teachers'. Taking advantage of the fact that people are involuntarily quarantined in their house, this course is something that should not be placed aside in the 'LATER' basket. I managed to clear my head to understand what 'loop' is! So I do think it can solve the world's problem!

In conclusion, this is the best course I have ever completed (90%!) on data analysis using Python. I look forward to attending it again and really finishing up that last coursework.

Oh. Did I not mention why I got stuck? It was the last coursework. We are required to demonstrate all the steps of data analysis on data of our choice, create 5 questions and answer them using what we've learned throughout the course. Easy eh? Well, I've always had the tendency of digging my own grave every time I get awesome cool assignments. But I'm not saying I did not do it :). Have a look-see at this notebook and consider the possibilities you can grasp after you've completed the course. And that's just my work...I'm a standard C-grade student.

And the exciting latest news from Jovian.ai is that they have an upcoming course on Deep Learning called Deep Learning with PyTorch: Zero to GANS! That's actually yesterday's news since they organized it earlier this year...so yeah...this is an impending second cohort! Tentatively, the course will start on Nov 14th. Click the link below to sign-up and get ready to attack the nitty-gritty. Don't say I didn't warn ya.

And that's me, reporting live from the confinement of the COVID pandemic somewhere in a developing country in Southeast Asia....

1 note


DTC Prediction & Analysis

Data from the vehicle can be very useful for predicting faults and errors in the vehicle, but most of the data which comes from the sensors is redundant. In this paper, the Diagnostic Trouble Code (DTC) in a vehicle is predicted while also eliminating the issue of redundant data.

The data used in my project was from Eicher's heavy-duty trucks. Some of them have sensors installed in them, which send a combination of vehicle sensor data and vehicle data. Before feeding this data to a Deep Neural Network (DNN), the data is divided into multiple clusters based on the requirement, which will be discussed in a later section of this paper. These clusters are then pre-processed in order to remove as much redundancy from the data as possible. Once the dataset is clean and ready to use, the important features are extracted from the data to feed into the machine learning model.

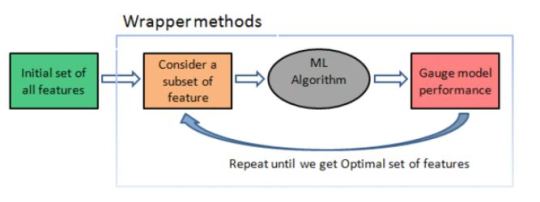

Feature extraction can be done using a method called the wrapper method. The wrapper method is used to find the minimum number of features that are most significant in predicting the output. It takes all features as input parameters, along with a machine learning model for prediction, a scoring technique (in this case r-square), and a significance level (default 0.05). For instance, if we are using a linear regression model, it will calculate a p-value for each feature, which tests the null hypothesis that the feature has no effect on the output. If we are using a backward elimination technique in the wrapper method, it will eliminate a feature if its p-value is greater than the significance level.
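
The backward elimination loop described above can be sketched as follows. This is an illustration under assumptions, not the code used in the project: `fit_p_values` is a hypothetical callable that would refit the model on the selected features and return a p-value per feature, and the feature names and p-values in the toy example are invented.

```python
def backward_eliminate(features, fit_p_values, significance=0.05):
    """Repeatedly drop the feature with the highest p-value until every
    remaining feature has a p-value at or below `significance`."""
    selected = list(features)
    while selected:
        p_values = fit_p_values(selected)  # refit on the current subset
        worst = max(selected, key=lambda name: p_values[name])
        if p_values[worst] <= significance:
            break  # everything left is significant
        selected.remove(worst)
    return selected


# Toy stand-in for a model fit: fixed, made-up p-values per feature.
# In a real fit the p-values change each time a feature is removed.
TOY_P_VALUES = {
    "engine_temp": 0.01,
    "oil_pressure": 0.03,
    "cabin_light": 0.60,
    "radio_volume": 0.20,
}


def toy_fit(selected):
    return {name: TOY_P_VALUES[name] for name in selected}


if __name__ == "__main__":
    print(backward_eliminate(list(TOY_P_VALUES), toy_fit))
```

With these toy numbers the loop drops `cabin_light` first, then `radio_volume`, and stops once the largest remaining p-value (0.03) is below the 0.05 significance level.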

Once we have all the required features, we applied 4 different models to predict the output. The models which we have used in this paper are Decision Tree, Random Forest Regressor, Logistic Regression, and Deep Neural Network. The reason behind using Decision Tree, Random Forest Regressor, and Logistic Regression along with DNN are, these models also work well on this kind of dataset and we can use it to compare results with each other.

The outcome of anything depends eventually upon the results which are generated from it. In this section, we will discuss the result for each phase of the project. Starting from data collection to model prediction. As each step is dependent on one another and gives crucial information about the data. But a certain part of each stage is independent of each other. Hence, it is important to know about the results of each stage. Starting from the data collection, we take raw data from the sensor and pre-process it in a way so that we can use it for DTC predicting and further use it for analysis of various other things as well. Therefore, the result of the initial part of the project is data, loaded with valuable information, which the organization can use as they want.

The next part was the prediction of DTCs. As stated earlier, we used four different models and compared their outputs with one another. Among them, the Deep Neural Network (DNN) produced the best result. This is because a DNN can readily identify patterns and meaningful information in the dataset if the data are sufficient.

Once the model has successfully started predicting DTCs, this information can be used in many places. For instance, if there is a critical error or fault which might occur in a vehicle, the driver can be alerted in advance. This way, any major damage to the vehicle can be avoided. Such prediction is very helpful and beneficial for the organization, customer, and the dealer.

This project has helped me in many ways. I got to learn a lot from it. Also, it provided me with a platform to test my skills in machine learning and data analytics. The main learning outcomes of this project are listed below:

§ I learnt about real-world data: how it is generated and transmitted from the vehicle to the server.

§ I learnt about the different models of vehicles and their parts.

§ I attained more clarity on data mining and data pre-processing.

§ I learnt about the different kinds of data and their relationships with each other.

§ I got a better understanding of machine learning models.

§ I learnt about visualization techniques in Python using Matplotlib.

§ I learnt how to handle big data using Python, and techniques for working with it.

§ I learnt more about Deep Neural Networks (DNN) and their layers.

#bennett university#machine learning#Bennett University CSE Handle#Dr. Deepak Garg#Dr. Suneet Gupta#VE Commercial Vehicles Ltd.


Quote

The coronavirus outbreak is taking over headlines. Due to the spread of COVID-19, remote work is suddenly an overnight requirement for many. You might be working from home as you are reading this article. With millions working from home for many weeks now, we should seize this opportunity to improve our skills in the domain we are focusing on. Here is my strategy to learn Data Science while working from home with a few personal real-life projects.

"So what should we do?" "Where should we start learning?"

Grab your coffee as I explain the process of how you can learn data science sitting at home. This blog is for everyone, from beginners to professionals.

Prerequisites

To start this journey, you will need to cover the prerequisites. No matter which specific field you are in, you will need to learn the following prerequisites for data science.

Logic/Algorithms: It’s important to know why we need a particular prerequisite before learning it. Algorithms are basically a set of instructions given to a computer to make it do a specific task. Machine learning is built from various complex algorithms. So you need to understand how algorithms and logic work on a basic level before jumping into the complex algorithms needed for machine learning. If you are able to write the logic for any given puzzle with the proper steps, it will be easy for you to understand how these algorithms work, and you can write one for yourself.

Resources: Some awesome free resources to learn data structures and algorithms in depth.

Statistics: Statistics is a collection of tools that you can use to get answers to important questions about data. Machine learning and statistics are two tightly related fields of study. So much so that statisticians refer to machine learning as “applied statistics” or “statistical learning”.

The following topics should be covered by aspiring data scientists before they start machine learning:

Measures of Central Tendency: mean, median, mode, etc.

Measures of Variability: variance, standard deviation, z-score, etc.

Probability: probability density function, conditional probability, etc.

Accuracy: true positive, false positive, sensitivity, etc.

Hypothesis Testing and Statistical Significance: p-value, null hypothesis, etc.

Resources: Learn college level statistics in this free 8 hour course.

Business: This depends on which domain you want to focus on. It basically involves understanding the particular domain and getting domain expertise before you get into a data science project. This is important as it helps in defining our problem accurately.

Resources: Data science for business

Brush up your basics

This sounds pretty easy, but we tend to forget some important basic concepts. It gets difficult to learn more complex concepts and the latest technologies in a specific domain without having a solid foundation in the basics. Here are a few concepts you can start revising:

Python programming language

Python is widely used in data science. Check out this collection of great Python tutorials and these helpful code samples to get started.

You can also check out this Python3 Cheatsheet that will help you learn new syntax that was released in Python 3. It'll also help you brush up on basic syntax. And if you want a great free course, check out this Python for Everybody course from Dr. Chuck.

General data science skills

Want to take a great course on data science concepts? Here's a bunch of data science courses that you can take online, ranked according to thousands of data points.

Resources: Data science for beginners - free 6 hour course, What languages should you learn for data science?

Data Collection

Now it is time for us to explore all the ways you can collect your data. You never know where your data might be hiding. Following are a few ways you can collect your data.

Web scraping

Web scraping helps you gather structured data from the web, select some of that data, and keep what you selected for whatever use you require. You can start learning BeautifulSoup4, which helps you scrape websites and make your own datasets.

Advanced tip: You can automate browsers and get data from interactive web pages such as Firebase using Selenium. It is useful for automating web applications and automating boring web-based administration.

Resources: Web Scraping 101 in Python

Cloud servers

If your data is stored on cloud servers such as S3, you might need to get familiar with how to get data from there. The following links will help you understand how to do this using Amazon S3.

Resources: Getting started with Amazon S3, How to deploy your site or app to AWS S3 with CloudFront

APIs

There are millions of websites that provide data through APIs, such as Facebook, Twitter, etc. So it is important to learn how they are used and have a good idea of how they are implemented.

Resources: What is an API? In English, please, How to build a JSON API with Python, and Getting started with Python API.

Data Preprocessing

This topic includes everything from data cleaning to feature engineering. It takes a lot of time and effort, so we need to dedicate a lot of time to actually learn it.

Data cleaning involves different techniques based on the problem and data type. The data needs to be cleaned of irrelevant data, syntax errors, data inconsistencies and missing data. The following guide will get you started with data cleaning.

Resources: Ultimate guide to data cleaning

Data preprocessing is an important step in which the data gets transformed, or encoded, so that the machine can easily parse it. It requires time as well as effort to preprocess different types of data, which include numerical, textual and image data.

Resources: Data Preprocessing: Concepts, All you need to know about text preprocessing for NLP and Machine Learning, Preprocessing for deep learning.

Machine Learning

Finally we reach our favourite part of data science: Machine Learning.

My suggestion here would be to first brush up your basic algorithms.

Classification: Logistic Regression, RandomForest, SVM, Naive Bayes, Decision Trees

Resources: Types of classification algorithms in Machine Learning, Classification Algorithms in Machine Learning

Regression: Linear Regression, RandomForest, Polynomial Regression

Resources: Introduction to Linear Regression, Use Linear Regression models to predict quadratic, root, and polynomial functions, 7 Regression Techniques you should know, Selecting the best Machine Learning algorithm for your regression problem

Clustering: K-Means Clustering, DBSCAN, Agglomerative Hierarchical Clustering

Resources: Clustering algorithms

Gradient Boosting: XGBoost, Catboost, AdaBoost

Resources: Gradient boosting from scratch, Understanding Gradient Boosting Machines

I urge you all to understand the math behind these algorithms so you have a clear idea of how they actually work. You can refer to this blog where I have implemented XGBoost from scratch: Implementing XGBoost from scratch

Now you can move on to Neural Networks and start your Deep Learning journey.

Resources: Deep Learning for Developers, Introduction to Deep Learning with Tensorflow, How to develop neural networks with Tensorflow, Learn how deep neural networks work

You can then dive deeper into how LSTM, Siamese Networks, CapsNet and BERT work.

Hackathons:

Now we need to implement these algorithms on a competitive level. You can start looking for online Data Science Hackathons. Here is the list of websites where I try to compete with other data scientists.

Analytics Vidhya: https://datahack.analyticsvidhya.com/contest/all/

Kaggle: https://www.kaggle.com/competitions

Hackerearth: https://www.hackerearth.com/challenges/

MachineHack: https://www.machinehack.com/

TechGig: https://www.techgig.com/challenge

Dare2compete: https://dare2compete.com/e/competitions/latest

Crowdanalytix: https://www.crowdanalytix.com/community

To have a look at a winning solution, here is a link to my winning solution to one online Hackathon on Analytics Vidhya: https://github.com/Sid11/AnalyticsVidhya_DataSupremacy

Projects:

We see people working on dummy data who still don’t get a taste of what actual data looks like. In my opinion, working on real-life data gives you a very clear idea of what data in real life looks like. Cleaning real-life data takes about 70% of your project’s time.

Here are the best free open data sources anyone can use:

Open Government Data: https://data.gov.in/

Data about real contributed by thousands of users and organizations across the world: https://data.world/datasets/real

19 public datasets for Data Science Project: https://www.springboard.com/blog/free-public-data-sets-data-science-project/

Business Intelligence

After you get the results from your project, it is time to make business decisions from those results. Business Intelligence is a suite of software and services that helps transform data into actionable intelligence and knowledge.

This can be done by creating a dashboard from the output of our model. Tableau is a powerful and fast-growing data visualization tool used in the Business Intelligence industry. It helps in simplifying raw data into an easily understandable format. Data analysis is very fast with Tableau, and the visualizations created are in the form of dashboards and worksheets.

http://damianfallon.blogspot.com/2020/03/how-to-improve-your-data-science-skills_31.html


Text

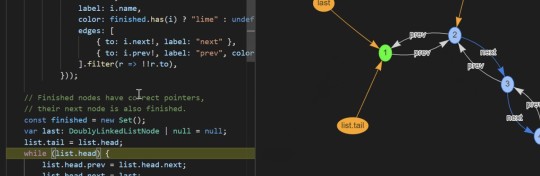

Using Python to recover SEO site traffic (Part three)

When you incorporate machine learning techniques to speed up SEO recovery, the results can be amazing.

This is the third and last installment in our series on using Python to speed SEO traffic recovery. In part one, I explained how our unique approach, which we call “winners vs losers,” helps us quickly narrow down the pages losing traffic to find the main reason for the drop. In part two, we improved on our initial approach by manually grouping pages using regular expressions, which is very useful when you have sites with thousands or millions of pages, as is typically the case with ecommerce sites. In part three, we will learn something really exciting. We will learn to automatically group pages using machine learning.

As mentioned before, you can find the code used in part one, two and three in this Google Colab notebook.

Let’s get started.

URL matching vs content matching

When we grouped pages manually in part two, we benefited from the fact that the URL groups had clear patterns (collections, products, and the others), but it is often the case that there are no patterns in the URL. For example, Yahoo Stores’ sites use a flat URL structure with no directory paths. Our manual approach wouldn’t work in this case.

Fortunately, it is possible to group pages by their contents because most page templates have different content structures. They serve different user needs, so that needs to be the case.

How can we organize pages by their content? We can use DOM element selectors for this. We will specifically use XPaths.

For example, I can use the presence of a big product image to know the page is a product detail page. I can grab the product image address in the document (its XPath) by right-clicking on it in Chrome and choosing “Inspect,” then right-clicking to copy the XPath.

We can identify other page groups by finding page elements that are unique to them. However, note that while this would allow us to group Yahoo Store-type sites, it would still be a manual process to create the groups.
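To make the idea of grouping by page elements concrete, here is a small sketch. The XPath rules, class names, and page snippets are invented for illustration, and the standard library's ElementTree is used only because the snippets are well-formed; real-world HTML usually needs a more tolerant parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical rules: each page group is identified by an element unique to it.
GROUP_RULES = {
    "product": ".//img[@class='product-image']",
    "category": ".//ul[@class='product-grid']",
}

def classify_page(markup: str) -> str:
    """Return the first group whose XPath matches, or 'other' if none do."""
    root = ET.fromstring(markup)
    for group, xpath in GROUP_RULES.items():
        if root.find(xpath) is not None:
            return group
    return "other"

product_page = "<html><body><img class='product-image' src='shoe.jpg'/></body></html>"
category_page = "<html><body><ul class='product-grid'><li>Shoe</li></ul></body></html>"
about_page = "<html><body><p>About us</p></body></html>"

for page in (product_page, category_page, about_page):
    print(classify_page(page))
```

Every rule in `GROUP_RULES` still has to be written by hand for each site, which is exactly the manual effort the rest of the article tries to avoid.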

A scientist’s bottom-up approach

In order to group pages automatically, we need to use a statistical approach. In other words, we need to find patterns in the data that we can use to cluster similar pages together because they share similar statistics. This is a perfect problem for machine learning algorithms.

BloomReach, a digital experience platform vendor, shared their machine learning solution to this problem. To summarize it, they first manually selected cleaned features from the HTML tags like class IDs, CSS style sheet names, and the others. Then, they automatically grouped pages based on the presence and variability of these features. In their tests, they achieved around 90% accuracy, which is pretty good.

When you give problems like this to scientists and engineers with no domain expertise, they will generally come up with complicated, bottom-up solutions. The scientist will say, “Here is the data I have, let me try different computer science ideas I know until I find a good solution.”

One of the reasons I advocate practitioners learn programming is that you can start solving problems using your domain expertise and find shortcuts like the one I will share next.

Hamlet’s observation and a simpler solution

For most ecommerce sites, most page templates include images (and input elements), and those generally change in quantity and size.

I decided to test the quantity and size of images, and the number of input elements, as my feature set. We were able to achieve 97.5% accuracy in our tests. This is a much simpler and more effective approach for this specific problem. All of this is possible because I didn’t start with the data I could access, but with a simpler domain-level observation.

I am not trying to say my approach is superior, as they have tested theirs in millions of pages and I’ve only tested this on a few thousand. My point is that as a practitioner you should learn this stuff so you can contribute your own expertise and creativity.

Now let’s get to the fun part and write some machine learning code in Python!

Collecting training data

We need training data to build a model. This training data needs to come pre-labeled with “correct” answers so that the model can learn from the correct answers and make its own predictions on unseen data.

In our case, as discussed above, we’ll use our intuition that most product pages have one or more large images on the page, and most category type pages have many smaller images on the page.

What’s more, product pages typically have more form elements than category pages (for filling in quantity, color, and more).

Unfortunately, crawling a web page for this data requires knowledge of web browser automation, and image manipulation, which are outside the scope of this post. Feel free to study this GitHub gist we put together to learn more.

Here we load the raw data already collected.

Feature engineering

Each row of the form_counts data frame above corresponds to a single URL and provides a count of both form elements, and input elements contained on that page.

Meanwhile, in the img_counts data frame, each row corresponds to a single image from a particular page. Each image has an associated file size, height, and width. Pages are more than likely to have multiple images on each page, and so there are many rows corresponding to each URL.

It is often the case that HTML documents don’t include explicit image dimensions. We are using a little trick to compensate for this. We are capturing the size of the image files, which should be roughly proportional to the product of the width and the height of the images.

We want our image counts and image file sizes to be treated as categorical features, not numerical ones. When a numerical feature, say new visitors, increases, it generally implies improvement, but we don’t want bigger images to imply improvement. A common technique for this is called one-hot encoding.

Most site pages can have an arbitrary number of images. We are going to further process our dataset by bucketing images into 50 groups. This technique is called “binning”.
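As a rough illustration of binning followed by one-hot encoding, here is a pandas sketch. The URLs and counts are made up, and it uses 5 equal-width bins instead of the 50 mentioned above so the result stays readable; it is not the notebook's actual code.

```python
import pandas as pd

# Hypothetical per-page image counts.
pages = pd.DataFrame({
    "url": ["/p/shoe", "/c/shoes", "/about"],
    "img_count": [3, 48, 1],
})

# Binning: replace each raw count with the index of its equal-width bucket.
pages["img_bin"] = pd.cut(pages["img_count"], bins=5, labels=False)

# One-hot encoding: one indicator column per bucket that actually occurs.
encoded = pd.get_dummies(pages["img_bin"], prefix="img_bin")

print(pages)
print(encoded)
```

After encoding, a page in a higher bucket is no longer "more" of anything to the model; it simply has a different indicator column switched on.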

Here is what our processed data set looks like.

Adding ground truth labels

As we already have correct labels from our manual regex approach, we can use them to create the correct labels to feed the model.

We also need to split our dataset randomly into a training set and a test set. This allows us to train the machine learning model on one set of data, and test it on another set that it’s never seen before. We do this to prevent our model from simply “memorizing” the training data and doing terribly on new, unseen data. You can check it out at the link given below:
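The random split can be sketched with nothing but the standard library. The helper name `split_train_test` and the labeled pages are hypothetical; scikit-learn's `sklearn.model_selection.train_test_split` offers the same idea with more options.

```python
import random

def split_train_test(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and hold out a fraction as the test set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical labeled pages: (url, page group).
labeled_pages = [(f"/page-{i}", "product" if i % 2 else "category") for i in range(10)]
train, test = split_train_test(labeled_pages)

print(len(train), "training rows,", len(test), "test rows")
```

Fixing the seed keeps the split reproducible between runs, which makes it easier to compare models fairly.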

Model training and grid search

Finally, the good stuff!

All the steps above, the data collection and preparation, are generally the hardest part to code. The machine learning code is generally quite simple.

We’re using the well-known scikit-learn Python library to train a number of popular models using a bunch of standard hyperparameters (settings for fine-tuning a model). Scikit-learn will run through all of them to find the best one. We simply need to feed in the X variables (our engineered features above) and the Y variables (the correct labels) to each model, call the .fit() function, and voila!
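For readers who have not seen a grid search before, here is a minimal sketch with scikit-learn. It runs on synthetic data from `make_classification`, not the page features from this article, and the two models and the `C` grid are illustrative choices rather than the notebook's actual configuration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import LinearSVC

# Synthetic stand-in for the engineered features (X) and labels (y).
X, y = make_classification(n_samples=300, n_features=10, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Each candidate pairs a model with the hyperparameter values to try.
candidates = {
    "linear_svm": (LinearSVC(max_iter=10000), {"C": [0.1, 1, 10]}),
    "logistic_regression": (LogisticRegression(max_iter=1000), {"C": [0.1, 1, 10]}),
}

results = {}
for name, (model, grid) in candidates.items():
    search = GridSearchCV(model, grid, cv=5, scoring="accuracy")
    search.fit(X_train, y_train)  # cross-validates every value in the grid
    results[name] = (search.best_score_, search.best_params_)

for name, (score, params) in results.items():
    print(name, round(score, 3), params)
```

`best_score_` is the mean cross-validated accuracy on the training portion, so the held-out `X_test` and `y_test` remain available for a final check.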

Evaluating performance

After running the grid search, we find our winning model to be the Linear SVM (0.974), with Logistic Regression (0.968) coming in a close second. Even with such high accuracy, a machine learning model will make mistakes. If it doesn’t make any mistakes, then there is definitely something wrong with the code.

In order to understand where the model performs best and worst, we will use another useful machine learning tool, the confusion matrix.

When looking at a confusion matrix, focus on the diagonal squares. The counts there are correct predictions and the counts outside are failures. In the confusion matrix above we can quickly see that the model does really well labeling products, but terribly labeling pages that are not products or categories. Intuitively, we can assume that such pages would not have consistent image usage.

Here is the code to put together the confusion matrix:
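The original code is not reproduced here. As a stand-in, this is a small pure-Python sketch of how a confusion matrix is assembled; the labels and predictions are invented for illustration.

```python
from collections import Counter

def confusion_matrix(actual, predicted, labels):
    """Rows are actual labels, columns are predicted labels."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]

labels = ["product", "category", "other"]
actual = ["product", "product", "category", "category", "other", "other"]
predicted = ["product", "product", "category", "product", "category", "other"]

matrix = confusion_matrix(actual, predicted, labels)
correct = sum(matrix[i][i] for i in range(len(labels)))  # the diagonal

for label, row in zip(labels, matrix):
    print(f"{label:>8}", row)
print("accuracy:", correct / len(actual))
```

Reading along a row shows where pages of one true group ended up, so off-diagonal counts point directly at the groups the model confuses.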

Finally, here is the code to plot the model evaluation:

Resources to learn more

You might be thinking that this is a lot of work to just tell page groups, and you are right!

Mirko Obkircher commented in my article for part two that there is a much simpler approach, which is to have your client set up a Google Analytics data layer with the page group type. Very smart recommendation, Mirko!

I am using this example for illustration purposes. What if the issue requires a deeper exploratory investigation? If you already started the analysis using Python, your creativity and knowledge are the only limits.

If you want to jump onto the machine learning bandwagon, here are some resources I recommend to learn more:

Attend a PyData event. I got motivated to learn data science after attending the event they host in New York.

Hands-On Introduction To Scikit-learn (sklearn)

Scikit Learn Cheat Sheet

Efficiently Searching Optimal Tuning Parameters

If you are starting from scratch and want to learn fast, I’ve heard good things about Data Camp.

Got any tips or queries? Share them in the comments.

Hamlet Batista is the CEO and founder of RankSense, an agile SEO platform for online retailers and manufacturers. He can be found on Twitter @hamletbatista.

The post Using Python to recover SEO site traffic (Part three) appeared first on Search Engine Watch.

from Digtal Marketing News https://searchenginewatch.com/2019/04/17/using-python-to-recover-seo-site-traffic-part-three/


Text

Python Training in Tirupati

Are you a student? Searching for a Python course? “Takeoff Upskill” is the best Python training institute, with an internship program available both online and offline.

Placement support is provided to students: placement is provided within 4 months of completing the course, and mock tests are provided during the course.

First of all, what is Python?

Python is an easy language to learn. Python is one of the best high-level programming languages (HLPL). The core of Python's design is code readability and a syntax that allows programmers to express their ideas clearly in code.

For me, the first reason to learn Python was that we can use it in multiple ways: data science, web development, and machine learning all shine here.

What exactly can Python be used for?

We can use Python mainly for three types of applications:

Web Development

Data Science ( including machine learning, data analysis, and data visualisation)

Scripting

Web development:

Python is a popular programming language that's extensively used in the development of web applications.

We will also be covering classes and objects in Python. You will learn how to create and use classes and objects in your web development projects, and how they can help you organise and structure your code.

In web development, Python frameworks are often used together with Bootstrap, a CSS framework that gives you a bunch of easily customizable interface elements and allows you to create responsive websites very quickly.

Data Science

Python for Data Science is a must-learn. With the growth of the IT industry, there's a booming demand for skilled data scientists, and Python has evolved into the most favoured programming language. You will learn how to analyse data and also produce some beautiful visualisations using Python.

Machine Learning

In simple words, Machine Learning (ML) is a type of artificial intelligence that extracts patterns out of raw data by using an algorithm or method. The main focus of ML is to allow computer systems to learn from experience without being explicitly programmed and without human intervention.

Python for machine learning

There are popular machine learning libraries and frameworks for Python. Two of the most popular ones are scikit-learn and TensorFlow.

1. scikit-learn comes with some of the more popular machine learning algorithms built-in. I mentioned some of them above.

2. TensorFlow is more of a low-level library that allows you to build custom machine learning algorithms.

Data Analysis

It is the technique of collecting, transforming, and organising data to make future predictions and informed, data-driven decisions. It also helps to find possible solutions to a business problem. There are six steps in Data Analysis. They are:

Ask or Specify Data Conditions

Prepare or Collect Data

Clean and Process

Analyze

Share

Act or Report

Data Visualisation

Data visualisation is the discipline of trying to understand data by placing it in a visual context so that patterns, trends, and correlations that might not otherwise be detected can be exposed.

Python offers multiple great graphing libraries packed with lots of different features. Whether you want to produce interactive or highly customised plots, Python has an excellent library for you.

Scripting

Scripting is a very common practice among Python programmers. It’s used for automation of daily tasks, reporting, server management, security, social media management, business growth and development, financial trading, automating software and many other intelligent solutions.

Is Python good for scripting?

Python isn't just good for scripting, it's perfect for scripting.

1. Easy entry

2. Rich libraries

3. Community support

4. Language interoperability

“Takeoff Upskill” is the Best Python Training in Tirupati - https://takeoffupskill.com


Text

How many apps use Swift in 2019?

Three years ago, I read a blog post by Ryan Olsen where he explored how many of the top 100 apps on the app store were using Swift. He was surprised that at the time, only 11% of the top 100 apps were using Swift (I wasn’t).

I thought it would be interesting to revisit this in early 2019. Swift has been out for going on 5 years now, Swift 5 will be released soon, and my perception is that Swift has been broadly adopted across the iOS development community. But, what do the numbers say?

I downloaded the top 110 free apps on the app store on January 15, 2019. I decrypted them, then wrote a script that does some simple analysis of their contents to determine whether or not they’re using Swift, and roughly how much of the app is written in Swift.

Results

According to this analysis, of the top 110 apps on the app store on January 15, 2019, 42% are using Swift, while 58% are not. If only the 79 non-game apps are considered, the results are that 57% are using Swift, while 43% are not.

Interestingly, of the 31 games, none are using Swift at all. My guess is that most if not all of them are written using Unity or another cross-platform game engine, and therefore don’t have much if any iOS-specific code. I did look at a few of them myself and noticed that while the games I analyzed do have Objective-C classes, they seem to be mostly code from various analytics and social media frameworks, not code that was actually written specifically for the game itself.

Methodology

The apps were analyzed using a Python script that I wrote. You can find the script in this GitHub repo. A few notes about the way the script works:

In order for an app to be considered to “use Swift”, it must include libswiftCore.dylib in its Frameworks folder, and it must have at least one Objective-C compatible Swift class in the main executable. Some apps don’t use Swift in the main executable but include dynamically linked frameworks that use Swift. For this analysis, those apps are not counted as using Swift, because I wanted to get an idea of how many apps themselves were being developed in Swift.

However, this way of doing analysis is not perfect. For one thing, it will still count an app as using Swift if that app includes a statically linked Swift library, even if the app’s own code doesn’t use Swift. There’s no foolproof way (that I know of) to automate figuring that out.

For apps using Swift, the script also tries to determine the percentage of each app’s main executable that is written in Swift. It determines the percentage of the app written in Swift by finding all the Objective-C exposed classes, and counting those written in Swift vs. those written in Objective-C. Again, this is imperfect because it doesn’t include Swift types that are not @objc classes. But it does give you a rough ballpark figure for how heavily each app uses Swift. The values here range from a minimum of 1% for the Google Drive app, up to a maximum of 80% for the Walmart app. The average percentage of each Swift-using app written in Swift is 34%.
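The two checks described above can be sketched roughly as follows. This is not the author's script: the function names are hypothetical, the class list is invented, and it assumes that Swift classes exposed to the Objective-C runtime carry mangled names beginning with `_TtC`. How the class names are extracted from the executable is left out entirely.

```python
from pathlib import Path

# Assumption: Swift classes visible to the Objective-C runtime have names with this prefix.
SWIFT_CLASS_PREFIX = "_TtC"

def bundles_swift_runtime(app_dir: Path) -> bool:
    """True if the app bundle ships libswiftCore.dylib in its Frameworks folder."""
    return (app_dir / "Frameworks" / "libswiftCore.dylib").is_file()

def swift_percentage(class_names) -> float:
    """Share of Objective-C-visible classes that appear to be written in Swift."""
    if not class_names:
        return 0.0
    swift = sum(1 for name in class_names if name.startswith(SWIFT_CLASS_PREFIX))
    return 100.0 * swift / len(class_names)

# Hypothetical class names from an app's main executable.
classes = ["_TtC5MyApp14HomeController", "AppDelegate", "FBSDKLogin", "_TtC5MyApp9CartModel"]
percent = swift_percentage(classes)
print(f"{percent:.0f}% of exposed classes look like Swift")
```

As the post notes, counting only exposed classes misses Swift structs, enums, and non-`@objc` types, so a figure like this is a rough lower bound on how much Swift an app contains.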

The determination of whether an app was a game or not was made by hand by me, and is by nature somewhat subjective. For example, I didn’t consider Bitmoji or TikTok games, despite them both being fun entertainment apps.

Takeaway

In the past 3 years, Swift has gone from being used in a small minority of the most popular apps to being used in roughly half of them, which is a huge increase and shows how well Apple has done with introducing a new language. However, even apps using Swift continue to use Objective-C fairly heavily. So, Objective-C is far from dead. Games continue to be written using tools that allow for cross-platform deployment, and are therefore written in languages other than Objective-C and Swift.

Data

You can find the raw data I generated in this GitHub Gist. If you do your own analysis that turns up other interesting insights, I'd love to hear about it!


Text

Pandas Github


Up to date remote data access for pandas, works for multiple versions of pandas.


Since Python 3.4, pathlib has been included in the Python standard library. Path objects provide a simple and delightful way to interact with the file system. The pandas-path package enables the Path API for pandas through a custom accessor, .path. Getting just the filenames from a series of full file paths is as simple as myfiles.path.name.

Dask uses existing Python APIs and data structures to make it easy to switch from NumPy, pandas, and scikit-learn to their Dask-powered equivalents. You don't have to completely rewrite your code or retrain to scale up.

A REST API based on Flask for serving Pandas DataFrames to Grafana. This way, a native Python application can be used to directly supply data to Grafana both easily and powerfully. It was inspired by and is compatible with the simple json datasource.

Pandas is a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, built on top of the Python programming language. Install pandas now!

One of the essential pieces of NumPy is the ability to perform quick element-wise operations, both with basic arithmetic (addition, subtraction, multiplication, etc.) and with more sophisticated operations (trigonometric functions, exponential and logarithmic functions, etc.). Pandas inherits much of this functionality from NumPy, and the ufuncs that we introduced in Computation on NumPy Arrays: Universal Functions are key to this.

Pandas includes a couple useful twists, however: for unary operations like negation and trigonometric functions, these ufuncs will preserve index and column labels in the output, and for binary operations such as addition and multiplication, Pandas will automatically align indices when passing the objects to the ufunc. This means that keeping the context of data and combining data from different sources (both potentially error-prone tasks with raw NumPy arrays) become essentially foolproof ones with Pandas. We will additionally see that there are well-defined operations between one-dimensional Series structures and two-dimensional DataFrame structures.

Ufuncs: Index Preservation

Because Pandas is designed to work with NumPy, any NumPy ufunc will work on Pandas Series and DataFrame objects. Let's start by defining a simple Series and DataFrame on which to demonstrate this:

If we apply a NumPy ufunc on either of these objects, the result will be another Pandas object with the indices preserved:

|   | A | B | C | D |
|---|---|---|---|---|
| 0 | -1.000000 | 7.071068e-01 | 1.000000 | -1.000000e+00 |
| 1 | -0.707107 | 1.224647e-16 | 0.707107 | -7.071068e-01 |
| 2 | -0.707107 | 1.000000e+00 | -0.707107 | 1.224647e-16 |

Any of the ufuncs discussed in Computation on NumPy Arrays: Universal Functions can be used in a similar manner.
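The code cells for this subsection did not survive on this page. A minimal sketch of index preservation follows, assuming hand-typed illustrative values (chosen so that the DataFrame result reproduces the table above) rather than whatever data the original cells generated:

```python
import numpy as np
import pandas as pd

# Illustrative values typed in by hand (an assumption, not the original data).
ser = pd.Series([6, 3, 7, 4])
df = pd.DataFrame([[6, 9, 2, 6],
                   [7, 4, 3, 7],
                   [7, 2, 5, 4]],
                  columns=['A', 'B', 'C', 'D'])

# Unary ufuncs return pandas objects that carry the same labels as the input.
exp_ser = np.exp(ser)
sin_df = np.sin(df * np.pi / 4)

print(exp_ser.index.equals(ser.index))  # index of the Series is preserved
print(sin_df)                           # columns A-D and rows 0-2 are preserved
```

The point of the sketch is only that the result keeps the row and column labels; any other ufunc could stand in for np.exp or np.sin.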

Ufuncs: Index Alignment

For binary operations on two Series or DataFrame objects, Pandas will align indices in the process of performing the operation. This is very convenient when working with incomplete data, as we'll see in some of the examples that follow.

Index alignment in Series

As an example, suppose we are combining two different data sources, and find only the top three US states by area and the top three US states by population:

Let's see what happens when we divide these to compute the population density:

The resulting array contains the union of indices of the two input arrays, which could be determined using standard Python set arithmetic on these indices:
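A short sketch of this example, with the caveat that the area and population figures below are illustrative assumptions and should not be read as authoritative statistics. It uses Index.union for the set arithmetic, since operator-based set operations on an Index are not reliable across pandas versions:

```python
import pandas as pd

# Illustrative figures only (assumed for this sketch).
area = pd.Series({'Alaska': 1723337, 'Texas': 695662,
                  'California': 423967}, name='area')
population = pd.Series({'California': 38332521, 'Texas': 26448193,
                        'New York': 19651127}, name='population')

# Division aligns on the state names; states missing from either
# Series end up as NaN in the result.
density = population / area

# The result's index is the union of the two input indices.
union = area.index.union(population.index)

print(density)
print(union)
```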

Any item for which one or the other does not have an entry is marked with NaN, or 'Not a Number,' which is how Pandas marks missing data (see further discussion of missing data in Handling Missing Data). This index matching is implemented this way for any of Python's built-in arithmetic expressions; any missing values are filled in with NaN by default:

If using NaN values is not the desired behavior, the fill value can be modified using appropriate object methods in place of the operators. For example, calling A.add(B) is equivalent to calling A + B, but allows optional explicit specification of the fill value for any elements in A or B that might be missing:
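Both behaviours can be sketched with two small Series whose indices only partly overlap. The values are made up for illustration:

```python
import pandas as pd

A = pd.Series([2, 4, 6], index=[0, 1, 2])
B = pd.Series([1, 3, 5], index=[1, 2, 3])

# Operator form: labels 0 and 3 exist in only one Series, so they become NaN.
plain = A + B

# Method form: a missing entry is treated as 0 before adding.
filled = A.add(B, fill_value=0)

print(plain)
print(filled)
```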

Index alignment in DataFrame

A similar type of alignment takes place for both columns and indices when performing operations on DataFrames:

Notice that indices are aligned correctly irrespective of their order in the two objects, and indices in the result are sorted. As was the case with Series, we can use the associated object's arithmetic method and pass any desired fill_value to be used in place of missing entries. Here we'll fill with the mean of all values in A (computed by first stacking the rows of A):
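A minimal sketch of DataFrame alignment and the mean-based fill value, using small hand-picked frames (assumed values, not the original data):

```python
import pandas as pd

# A has columns A, B and rows 0-1; B has columns B, A, C and rows 0-2.
A = pd.DataFrame([[1, 11],
                  [5, 1]], columns=['A', 'B'])
B = pd.DataFrame([[4, 0, 9],
                  [5, 8, 0],
                  [9, 2, 6]], columns=['B', 'A', 'C'])

# Rows and columns are both aligned; cells missing from either frame are NaN.
total = A + B

# Stack A into a single Series to take the mean over all of its values,
# then use that mean wherever one of the two frames has no entry.
fill = A.stack().mean()
filled = A.add(B, fill_value=fill)

print(total)
print(filled)
```

In this sketch every cell exists in at least one of the two frames, so the filled result contains no NaN at all.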

The following table lists Python operators and their equivalent Pandas object methods:

Python Operator    Pandas Method(s)
+                  add()
-                  sub(), subtract()
*                  mul(), multiply()
/                  truediv(), div(), divide()
//                 floordiv()
%                  mod()
**                 pow()

Ufuncs: Operations Between DataFrame and Series

When performing operations between a DataFrame and a Series, the index and column alignment is similarly maintained. Operations between a DataFrame and a Series are similar to operations between a two-dimensional and one-dimensional NumPy array. Consider one common operation, where we find the difference of a two-dimensional array and one of its rows:

According to NumPy's broadcasting rules (see Computation on Arrays: Broadcasting), subtraction between a two-dimensional array and one of its rows is applied row-wise.
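As a sketch of that row-wise broadcasting, with an arbitrary hand-typed array standing in for the original data:

```python
import numpy as np

# Arbitrary illustrative values.
A = np.array([[3, 8, 2, 4],
              [2, 6, 4, 8],
              [6, 1, 3, 8]])

# A[0] has shape (4,), so it is broadcast across every row of A.
diff = A - A[0]
print(diff)
```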

In Pandas, the convention similarly operates row-wise by default:
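The same subtraction on a DataFrame might look like this. The column names Q, R, S, T and the values are assumptions made for the sketch:

```python
import pandas as pd

df = pd.DataFrame([[3, 8, 2, 4],
                   [2, 6, 4, 8],
                   [6, 1, 3, 8]], columns=list('QRST'))

# df.iloc[0] is a Series indexed by column name, so it is matched
# against the columns and subtracted from every row.
row_diff = df - df.iloc[0]
print(row_diff)
```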

If you would instead like to operate column-wise, you can use the object methods mentioned earlier, while specifying the axis keyword:
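A sketch of the column-wise form, again with assumed column names and values:

```python
import pandas as pd

df = pd.DataFrame([[3, 8, 2, 4],
                   [2, 6, 4, 8],
                   [6, 1, 3, 8]], columns=list('QRST'))

# axis=0 matches the Series against the row index instead of the columns,
# so column R is subtracted from every column.
col_diff = df.subtract(df['R'], axis=0)
print(col_diff)
```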

Note that these DataFrame/Series operations, like the operations discussed above, will automatically align indices between the two elements:
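To illustrate the alignment, the sketch below subtracts a Series that covers only every other column. The frame and the name halfrow are illustrative choices:

```python
import pandas as pd

df = pd.DataFrame([[3, 8, 2, 4],
                   [2, 6, 4, 8],
                   [6, 1, 3, 8]], columns=list('QRST'))

# Take only columns Q and S from the first row.
halfrow = df.iloc[0, ::2]

# Columns R and T have no counterpart in halfrow, so they become NaN.
aligned = df - halfrow
print(aligned)
```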

This preservation and alignment of indices and columns means that operations on data in Pandas will always maintain the data context, which prevents the types of silly errors that might come up when working with heterogeneous and/or misaligned data in raw NumPy arrays.

Display pandas dataframes clearly and interactively in a web app using Flask.

Web apps are a great way to show your data to a larger audience. Simple tables can be a good place to start. Imagine we want to list all the details of local surfers, split by gender. This translates to a couple of pandas dataframes to display, such as the dataframe females below.

Transforming dataframes into html tables

Using the pandas function to_html we can transform a pandas dataframe into an HTML table. All tables have the class dataframe by default. We can add more classes using the classes parameter. For example, calling to_html with classes='female' on the females dataframe results in an HTML table with the classes dataframe female.
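A minimal sketch of that call. The surfer data here is invented purely for illustration; only to_html and its classes parameter come from the text:

```python
import pandas as pd

# Hypothetical surfer data, made up for this sketch.
females = pd.DataFrame({'name': ['Alice', 'Bea'],
                        'board': ['longboard', 'shortboard']})

# The extra class is appended to the default "dataframe" class.
html = females.to_html(classes='female')
print(html.splitlines()[0])  # the opening <table ...> tag
```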

Prepare the file structure for flask app

The simple_tables directory will contain all the scripts, css and html needed for the web app to run. The script site_tables.py will sit in this directory, and from here we will run the app and populate the app's pages. Any html templates must be stored in the templates directory. Any css sheets must be within the static directory.

Below is the file structure I have used for this surfing example.

Create a flask app that pulls the dataframes

We can create a page on our web app called tables. Every time this page loads, we pull the data, filter and format to get two dataframes, females and males.

The dataframes are then transformed into html tables with classes dataframe female and dataframe male respectively. These html tables are sent as a list to the template view.html, which is stored in the templates directory. We also send a list of titles to use as a heading for each table.

Running the app using debug=True allows the app to auto-update every time the code gets edited.
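The original site_tables.py is not reproduced on this page, so what follows is only a sketch of the approach described. The surfer data, the load_surfers helper and the gender column are hypothetical, and an inline template string stands in for templates/view.html so that the example is self-contained:

```python
import pandas as pd
from flask import Flask, render_template_string

app = Flask(__name__)

# Inline stand-in for templates/view.html (an assumption for this sketch;
# the text uses a separate template file).
VIEW = """
{% for table in tables %}
<h2>{{ titles[loop.index - 1] }}</h2>
{{ table|safe }}
{% endfor %}
"""


def load_surfers():
    # Hypothetical data source; a real app would read a file or a database.
    return pd.DataFrame({
        'name': ['Alice', 'Bob', 'Bea'],
        'gender': ['f', 'm', 'f'],
        'board': ['longboard', 'shortboard', 'fish'],
    })


@app.route('/tables')
def show_tables():
    # Pull, filter and format the data every time the page loads.
    data = load_surfers()
    females = data[data['gender'] == 'f'].drop(columns='gender')
    males = data[data['gender'] == 'm'].drop(columns='gender')
    return render_template_string(
        VIEW,
        tables=[females.to_html(classes='female'),
                males.to_html(classes='male')],
        titles=['Female surfers', 'Male surfers'],
    )

# To serve it locally as the text describes, call: app.run(debug=True)
```

With a real templates/view.html in place, render_template('view.html', ...) would replace render_template_string and take the same keyword arguments.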

Define the html template using jinja2

The html template view.html pulls css from the style sheet style.css in the static directory. We will check out the css in the next section.

Next, the jinja2 language allows us to loop through the html table list tables. Using loop.index provides the index of the loop. This starts from 1 so we need to convert between python list indices and those for jinja2 loops. Then we can pull out the correct title for each table.

For each table in the list, the table title is shown, and then the table itself. safe tells jinja2 to show this parameter as a html object.
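The loop and the safe filter can be tried outside Flask with jinja2 directly. This is a sketch with placeholder table strings and titles, not the original view.html:

```python
from jinja2 import Environment

# autoescape=True mirrors how HTML templates are normally rendered.
env = Environment(autoescape=True)

template = env.from_string(
    "{% for table in tables %}"
    "<h2>{{ titles[loop.index - 1] }}</h2>"  # loop.index is 1-based
    "{{ table|safe }}"                       # insert the table as raw HTML
    "{% endfor %}"
)

# Placeholder inputs for illustration.
tables = ["<table><tr><td>a</td></tr></table>",
          "<table><tr><td>b</td></tr></table>"]
titles = ["First", "Second"]
page = template.render(tables=tables, titles=titles)

# Without safe, the same markup would be escaped and shown as text.
escaped = env.from_string("{{ table }}").render(table="<table></table>")

print(page)
print(escaped)
```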

Style the tables with css

We can use the following styling to make the tables a bit more pretty. The classes male and female have been defined with different header colours. This enables us to highlight different groups of tabled data from the initial site_tables.py script.

Some nice touches include using tr:nth-child(odd) and tr:nth-child(even) to have alternate row colours. Also tr:hover gives an interactive feel to the tables.

View the web app

Running the script site_tables.py from bash will serve the web app on your local host. Your web page should look like the one below.

Feedback

Always feel free to get in touch with other solutions, general thoughts or questions.

Version 324

youtube

windows: zip, exe

os x: app, tar.gz

linux: tar.gz

source: tar.gz

I had a great week. The downloader overhaul is almost done.

pixiv

Just as Pixiv recently moved their art pages to a new phone-friendly, dynamically drawn format, they are now moving their regular artist gallery results to the same system. If your username isn't switched over yet, it likely will be in the coming week.

The change breaks our old html parser, so I have written a new downloader and json api parser. The way their internal api works is unusual and over-complicated, so I had to write a couple of small new tools to get it to work. However, it does seem to work again.

All of your subscriptions and downloaders will try to switch over to the new downloader automatically, but some might not handle it quite right, in which case you will have to go into edit subscriptions and update their gallery manually. You'll get a popup on updating to remind you of this, and if any don't line up right automatically, the subs will notify you when they next run. The api gives all content--illustrations, manga, ugoira, everything--so there unfortunately isn't a simple way to refine to just one content type as we previously could. But it does neatly deliver everything in just one request, so artist searching is now incredibly faster.

Let me know if pixiv gives any more trouble. Now we can parse their json, we might be able to reintroduce the arbitrary tag search, which broke some time ago due to the same move to javascript galleries.

twitter

In a similar theme, given our fully developed parser and pipeline, I have now wangled a twitter username search! It should be added to your downloader list on update. It is a bit hacky and may be ultimately fragile if they change something their end, but it otherwise works great. It discounts retweets and fetches 19/20 tweets per gallery 'page' fetch. You should be able to set up subscriptions and everything, although I generally recommend you go at it slowly until we know this new parser works well. BTW: I think twitter only 'browses' 3200 tweets in the past, anyway. Note that tweets with no images will be 'ignored', so any typical twitter search will end up with a lot of 'Ig' results--this is normal. Also, if the account ever retweets more than 20 times in a row, the search will stop there, due to how the clientside pipeline works (it'll think that page is empty).

Again, let me know how this works for you. This is some fun new stuff for hydrus, and I am interested to see where it does well and badly.

misc

In order to be less annoying, the 'do you want to run idle jobs?' on shutdown dialog will now only ask at most once per day! You can edit the time unit under options->maintenance and processing.

Under options->connection, you can now change max total network jobs globally and per domain. The defaults are 15 and 3. I don't recommend you increase them unless you know what you are doing, but if you want a slower/more cautious client, please do set them lower.

The new advanced downloader ui has a bunch of quality of life improvements, mostly related to the handling of example parseable data.

full list

downloaders:

after adding some small new parser tools, wrote a new pixiv downloader that should work with their new dynamic gallery's api. it fetches all an artist's work in one page. some existing pixiv download components will be renamed and detached from your existing subs and downloaders. your existing subs may switch over to the correct pixiv downloader automatically, or you may need to manually set them (you'll get a popup to remind you).

wrote a twitter username lookup downloader. it should skip retweets. it is a bit hacky, so it may collapse if they change something small with their internal javascript api. it fetches 19-20 tweets per 'page', so if the account has 20 rts in a row, it'll likely stop searching there. also, afaik, twitter browsing only works back 3200 tweets or so. I recommend proceeding slowly.

added a simple gelbooru 0.1.11 file page parser to the defaults. it won't link to anything by default, but it is there if you want to put together some booru.org stuff

you can now set your default/favourite download source under options->downloading

.

misc:

the 'do idle work on shutdown' system will now only ask/run once per x time units (including if you say no to the ask dialog). x is one day by default, but can be set in 'maintenance and processing'

added 'max jobs' and 'max jobs per domain' to options->connection. defaults remain 15 and 3

the colour selection buttons across the program now have a right-click menu to import/export #FF0000 hex codes from/to the clipboard

tag namespace colours and namespace rendering options are moved from 'colours' and 'tags' options pages to 'tag summaries', which is renamed to 'tag presentation'

the Lain import dropper now supports pngs with single gugs, url classes, or parsers--not just fully packaged downloaders

fixed an issue where trying to remove a selection of files from the duplicate system (through the advanced duplicates menu) would only apply to the first pair of files

improved some error reporting related to too-long filenames on import

improved error handling for the folder-scanning stage in import folders--now, when it runs into an error, it will preserve its details better, notify the user better, and safely auto-pause the import folder

png export auto-filenames will now be sanitized of \, /, :, *-type OS-path-invalid characters as appropriate as the dialog loads

the 'loading subs' popup message should appear more reliably (after 1s delay) if the first subs are big and loading slow

fixed the 'fullscreen switch' hover window button for the duplicate filter

deleted some old hydrus session management code and db table

some other things that I lost track of. I think it was mostly some little dialog fixes :/

.

advanced downloader stuff:

the test panel on pageparser edit panels now has a 'post pre-parsing conversion' notebook page that shows the given example data after the pre-parsing conversion has occurred, including error information if it failed. it has a summary size/guessed type description and copy and refresh buttons.

the 'raw data' copy/fetch/paste buttons and description are moved down to the raw data page

the pageparser now passes up this post-conversion example data to sub-objects, so they now start with the correctly converted example data

the subsidiarypageparser edit panel now also has a notebook page, also with brief description and copy/refresh buttons, that summarises the raw separated data

the subsidiary page parser now passes up the first post to its sub-objects, so they now start with a single post's example data