#1:0.385

Text

i <3 epubs soososoosos muchhhh

#BEST file type#they r so tiny#1 mb vs 384 kb#1:0.385#great ratio#that is comparing epubs to pdfs#which yeah 1 mb is pretty small too#but the file i was downloading was tiny#at larger sizes the difference is much more noticeable

0 notes

Text

In 2011, referring to the final phase of the war in 2009, the Report of the Secretary-General's Panel of Experts on Accountability in Sri Lanka stated, "A number of credible sources have estimated that there could have been as many as 40,000 civilian deaths."[6] The large majority of these civilian deaths in the final phase of the war were said to have been caused by indiscriminate shelling by the Sri Lankan Armed Forces.[7][8] The civilian casualties that occurred in 2009 are a matter of major controversy, as there were no organizations to record the events during the final months of the war.

given that the population of sri lanka at the time was around 20m, this puts the reported death toll as a proportion of population (0.2%) lower than the currently reported death toll in gaza, by almost half (0.385%)

this is perhaps a more comparable historical precedent (than covid, personally my only frame of reference for a death toll of this scale), since it was a remarkably similar war to eradicate a militant separatist organization, the tamil tigers. it was also largely ignored on the global stage at the time, and it was ultimately successful (the tamil tigers no longer exist). this likely displaced as many as 800,000 sri lankans (4% of population)

the united states also provided military aid to the sri lankan government up until 2007, when it ceased due to human rights violations. (the link to the 2007 report on human rights practices is broken on CFR.org but remains available on the US state dept's website)

i don't know, but that may be a hard number worth citing to policymakers, if you're invested in the idea that that can have any impact, despite the clear and bipartisan 2/3 majority of the united states populace supporting ceasefire, even including 56% of republican voters, compared to at most, what, 1% of politicians? maybe 2%? they might want to be apprised of the fact that precedent in recent memory shows we were willing to cease military aid, on the basis of human rights violations, years before the civilian death toll would ultimately become half as large, proportionally

then again, on the other hand, you would be combating the fact that our investment in sri lanka was never comparable to our investment in israel. (correct me if i'm way out of line to draw this comparison, i guess, but deep down my thought is that tragedies on the scale of a measurable percentage of population are usually just flat out not comparable, each one being wholly unique and distinct in too many ways to list)

7 notes

Text

You are exactly the problem I'm railing against, I'm very sorry.

A shaped charge of 40 cm diameter (thus, very large; we're talking tank charges here) has a lining of about 0.1 cm in a conical shape (and usually a length about equal to the diameter, so let's go with 40 cm). That's a total liner volume of about 167 cm^3, or a mass of about 1500 g of copper. The volume of explosive (let's say HMX) in this case is cylinder volume minus cone volume (roughly; it's actually less but let's ignore that for a moment). So that's about 33500 cm^3, at a density of 1.91 g/cm^3, for a total mass of about 64000 g (64 kg). HMX detonates with an energy of 6.84 kJ/g (per NIST), so this is 437,760,000 J. Shaped charges do not in fact create plasma, they create (partially) melted metal; in order to melt metal, you need to heat it up to its melting point (q=mcΔT) and melt it (q=mΔHf). Copper's melting point is 1084 degrees C and its heat capacity is 0.385 J/g deg C, so starting at 25 degrees C (standard ambient temperature) you get 611,572 J. Copper's heat of fusion is 13.2 kJ/mol; converting that to our current units at 63.55 g/mol (0.01574 mol/g), that's about 208 J/g, so melting the whole liner takes roughly another 312,000 J. The liner is not melted fully in any case, so this isn't even truly a "jet" of melted metal. (Not that the heat of the metal is that important anyway; the important thing is that the metal is accelerated very fast into a small area.) Let's just say all 437 MJ of HMX explosion energy is applied to an area of around 315 cm^2 (due to spread) and call it a day. (It isn't all applied, because of deceleration and inefficient explosion, but let's carry on.)

(Remember, we are talking about this force being applied to something roughly humanoid, anyway).

Old-style trebuchets could sometimes toss 300 kg rocks; acceleration due to gravity is 9.8 m/s^2. Gravitational potential energy is mgΔh. A reasonable 500 m (about 1600 ft) already gives us 1,470,000 J (1.47 MJ). Let's make our rock out of osmium; we need 13,280 cm^3 to get a sphere of osmium of around 300 kg. This gives us a cross-sectional area of around 680 cm^2. With a literal medieval weapon, we're within about 2.5 orders of magnitude of the energy of a shaped charge (and within 1 order of magnitude on the area this energy is applied to). If you build a trebuchet out of stuff you can find at Home Depot, you can likely increase this by an order of magnitude, if you wish.
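For anyone who wants to check the arithmetic, here is a rough Python sketch of the numbers above. The copper and osmium densities (8.96 and 22.59 g/cm^3) and the way the liner volume is taken (one cone minus a slightly narrower cone of the same height) are assumptions made here to reproduce the quoted figures; they are not stated in the post.

```python
import math

def cone_volume(radius_cm, height_cm):
    """Volume of a right circular cone in cm^3."""
    return math.pi * radius_cm ** 2 * height_cm / 3.0

# Shaped-charge figures quoted in the post.
RADIUS_CM = 20.0              # 40 cm diameter
HEIGHT_CM = 40.0              # cone length taken equal to the diameter
LINER_THICKNESS_CM = 0.1
HMX_DENSITY = 1.91            # g/cm^3
HMX_ENERGY_J_PER_G = 6840     # 6.84 kJ/g
COPPER_HEAT_CAPACITY = 0.385  # J/(g*degC)
COPPER_DENSITY = 8.96         # g/cm^3 (assumed, not in the post)
OSMIUM_DENSITY = 22.59        # g/cm^3 (assumed, not in the post)

# Assumption: liner = outer cone minus a cone 0.1 cm narrower, same height.
liner_volume = cone_volume(RADIUS_CM, HEIGHT_CM) - cone_volume(
    RADIUS_CM - LINER_THICKNESS_CM, HEIGHT_CM)
liner_mass_g = liner_volume * COPPER_DENSITY

# Explosive fills the cylinder around the cone.
explosive_volume = (math.pi * RADIUS_CM ** 2 * HEIGHT_CM
                    - cone_volume(RADIUS_CM, HEIGHT_CM))
explosive_energy_j = explosive_volume * HMX_DENSITY * HMX_ENERGY_J_PER_G

# Heat needed to take the liner from 25 degC to copper's melting point.
heating_energy_j = liner_mass_g * COPPER_HEAT_CAPACITY * (1084 - 25)

# Trebuchet: 300 kg falling through 500 m, E = m * g * h.
trebuchet_energy_j = 300 * 9.8 * 500

# 300 kg osmium sphere and its cross-sectional area.
osmium_volume = 300_000 / OSMIUM_DENSITY
osmium_radius = (3 * osmium_volume / (4 * math.pi)) ** (1 / 3)
osmium_area = math.pi * osmium_radius ** 2

energy_ratio = explosive_energy_j / trebuchet_energy_j

print(f"liner: {liner_volume:.0f} cm^3, {liner_mass_g:.0f} g")
print(f"explosive energy: {explosive_energy_j / 1e6:.0f} MJ")
print(f"heating to melting point: {heating_energy_j / 1e6:.2f} MJ")
print(f"trebuchet energy: {trebuchet_energy_j / 1e6:.2f} MJ")
print(f"osmium sphere cross-section: {osmium_area:.0f} cm^2")
print(f"charge / trebuchet energy ratio: {energy_ratio:.0f}")
```

The small differences from the figures in the text come from rounding the liner mass to 1500 g and the explosive mass to 64 kg.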

Do not underestimate a big rock. Or overestimate human ingenuity.

This post is ultimately about Themes anyway- most "ancient evils" exist because human beings need to imagine an enemy. If the enemy is both extremely strong (terrorized the world for tens of millennia) and extremely weak (easily defeated by modern weaponry), it's a salve for the poor human soul which doesn't want to think about problems it can't solve AND wants to think it's really cool.

the thing about killing the ancient evil with a rocket launcher is if you’re thinking realistically and not just going Humanity Fuck Yeah you should also be able to kill them with a trebuchet. an industrially machined one if you must for Prophecy Reasons but an RPG and a giant rock both do the job of destroying tanks very nicely; it’s just that the latter is much harder to maneuver. the fact that it’s specifically modern weaponry and not the idea of more force behind weaponry in these scenarios is what makes them feel like contrived Humanity Fuck Yeah scenarios.

174 notes

Text

Many people hate doing the math, but sometimes it doesn't hurt...

Migrants dug a tunnel under the border fence; here is the police footage of one end of it. As the tape measure placed next to it shows, its diameter is 70 centimetres (it could hardly be less if adults also wanted to get through it). Its cross-section is therefore 0.35×0.35×3.14=0.385 square metres. According to news reports it was 37 metres long, so 14.25 cubic metres of earth had to be dug out for it. Where is it?! Only one person at a time could work in the tunnel, and only from the outer end (anyone who had made it across the fence obviously didn't start digging a tunnel from the inside out, but fled as fast as they could). What's more, after the first 1-2 metres the digging could only be done with a sandbox shovel, or at most an infantry entrenching tool, so that the handle would fit in there too. How long could it possibly have taken to dig?! And that isn't even the bottleneck. That much earth weighs 25-30 tonnes, and it also has to be carried out of the tunnel. Only one person at a time can do this too: until the previous one has crawled in to the digging face and crawled back out with the load of earth, backwards at that (there is no room to turn around), the next one cannot set off, since if they met they could not get past each other. The distance from the entrance to the digging face of course keeps growing as the digging progresses; the average length is about 19 metres. That distance has to be covered every time, crawling inwards while pushing an empty basket in front of you, into which the digger will put the earth, and then crawling backwards out again with the basket full of earth. I think I am being very generous in saying that each such trip takes at least a quarter of an hour. The basket used to carry the earth cannot be too large either, if the empty and the full basket are to be swapped inside, or if the digger is to conjure the empty one in front of himself so he can fill it with the excavated earth, and get the full one behind himself so the carrier can pull it out. It also has to be taken into account that the carrier, crawling backwards on his stomach in the narrow tunnel, can hardly move a very large load.
I don't think I'm far off in saying that, all things considered, about 10 kilograms of earth can be taken out on each trip. That means the trip from the mouth of the tunnel to the digging face and back, generously counted as a quarter of an hour on average, has to be made at least 2500 times. That is 625 hours, or 26 days, assuming they worked 24 hours a day without a minute's pause, day and night. Nearly a month. And in a whole month none of this caught the attention of the border guards patrolling there. So, dear pro-government propaganda, go look for fools somewhere else...
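The estimate in this post can be reproduced in a few lines of Python. This is only a sketch of the post's own arithmetic: the variable names are made up here, and the 25-tonne figure is the lower end of the post's 25-30 tonne range.

```python
import math

# Figures taken from the post.
diameter_m = 0.70
length_m = 37.0
soil_mass_kg = 25_000      # lower end of the 25-30 tonne estimate
load_per_trip_kg = 10
minutes_per_trip = 15

# Circular cross-section and excavated volume.
cross_section_m2 = math.pi * (diameter_m / 2) ** 2
volume_m3 = cross_section_m2 * length_m

# Number of hauling trips and the total time they take.
trips = soil_mass_kg / load_per_trip_kg
total_hours = trips * minutes_per_trip / 60
total_days = total_hours / 24

print(f"cross-section: {cross_section_m2:.3f} m^2")
print(f"volume: {volume_m3:.2f} m^3")
print(f"trips: {trips:.0f}, hours: {total_hours:.0f}, days: {total_days:.1f}")
```

Using the full value of pi instead of 3.14 moves the volume only in the second decimal place, so the conclusion does not change.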

50 notes

Text

Miami Grand Prix: World champion Max Verstappen sets rapid pace in second practice

Follow live text and radio commentary of third practice for the Miami Grand Prix on Saturday from 17:00 BST, with build-up to qualifying from 20:00.

Max Verstappen set an imposing pace in Friday practice at the Miami Grand Prix as he headed the Ferraris of Carlos Sainz and Charles Leclerc. The world champion was 0.385 seconds quicker than Sainz, with Leclerc just 0.083secs further adrift. Red Bull's Sergio Perez, six points adrift of Verstappen in the title race, was fourth, 0.489secs back.

Aston Martin's Fernando Alonso was fifth, ahead of McLaren's Lando Norris and the Mercedes of Lewis Hamilton. The seven-time champion's team-mate George Russell, who headed Hamilton in a Mercedes one-two in first practice, was down in 15th, struggling to get a lap time out of the car. Aston Martin's Lance Stroll, Alpine's Esteban Ocon and Williams driver Alex Albon completed the top 10.

Leclerc crashed on his race-simulation runs later in the session with about eight minutes to go. The rear twitched through the long right-hander at Turn Seven; Leclerc caught it but could not stop the car nosing into the barriers. The front-right corner and front wing were damaged but the new floor introduced as an upgrade by Ferrari this weekend appeared unscathed. The session did resume after the accident, but the lost time meant all the drivers will go into Saturday with very limited information as to their race pace.

In the first session, two drivers damaged their cars, Nico Hulkenberg crashing his Haas at Turn Three and Alpha Tauri's Nyck de Vries spinning and brushing the wall half way around the lap.

via BBC Sport - Formula 1 http://www.bbc.co.uk/sport/

1 note

Note

So how successful is s13 in terms of ratings and other measurements when compared to s4-s6 (or after the rating system changed)?

S13's ratings and metrics are more successful than s4-s6. How? It’s part of that $1+ billion deal with Netflix, plus CW’s own streaming service, which all you young people advertised on your own, so CW didn’t even have to spend promo money.

First, the traditional “linear” ratings: CW’s average season 13 demo rating is 0.385. SPN’s average demo rating is 0.56. SPN is still the #3 show on CW, doing better than Supergirl, Riverdale, and Arrow. For 13 years SPN has always been #2 or #3 while younger shows have risen to #1, then crashed and burned in half the time. - (credit)

Second, the mysterious Netflix ratings. After season 6, it was reported on Oct 13, 2011 that CW struck a deal with Netflix that is the envy of all networks: $1 billion to stream CW shows for four years. Note that two months earlier Jared’s salary was published because it was being renegotiated. Undoubtedly Jared’s agent knew about the hard bargaining between CW and Netflix and got his client a huge pay raise, and Jensen’s agent has been playing catch-up ever since. Jared looked like a cat that ate the canary and the cream in interviews during season 7. (Makes you wonder about the timing of Robert Singer employing every dirty trick to oust Sera Gamble and hire his own wife, doesn’t it?)

CW infamously skews toward a young audience who don’t even own a TV; they’re watching on a computer or phone that isn’t measured by Nielsen ratings. CW knows this, Netflix knows this. Nobody knows Netflix viewing metrics and Netflix isn’t telling, because that gives them the upper hand in negotiating licensing agreements. Producers, actors, and writers are mad and frustrated that they don’t know Netflix’s true viewership numbers, because they can’t negotiate their own royalties or residuals and don’t know if they are being fairly compensated for their work. Stars on traditional network shows have Nielsen rating data to threaten the studio and network with, which is why the Friends cast was able to get a desperate NBC and WB to pay them $1 million per episode to keep the show on the air for one more season.

But everybody noticed that when the 2011 CW-Netflix deal was expiring, Netflix was “bidding hard” to renew its output deal with the CW, which is “a clear signal CW shows perform well on Netflix and have value.” The hard bargaining occurred during season 11, when Robert Singer was removed as executive producer while ratings increased. On July 5, 2016, it was reported that an agreement was reached and Netflix paid more than $1 billion to exclusively stream CW shows for five years. - (credit) (After the new CW-Netflix agreement was reached, Robert Singer was allowed back on SPN as an executive producer.)

“The CW-Netflix pact is likely to rank one of the richest TV out deals ever” - (credit)

Even without Netflix, CW has doubled its viewer numbers through its website and streaming app. CW’s president gloats that they didn’t even have to advertise their streaming service; you younglings did the work for them! The 100 has a 0.350 demo but has twice the viewers on streaming. I believe SPN’s numbers are even better, which is why Pedowitz kept saying “Supernatural will remain on the air as long as it’s performing and Jared and Jensen want to do it and I’m sitting in this chair.” (credit)

In conclusion, by CW’s “linear” live TV standard, the ratings for SPN are good. By CW’s streaming service standard, the viewership is even better. By Netflix’s mysterious rating standard, it’s probably one of the best.

54 notes

Text

Q3:

Russell noted by race director for impeding Leclerc, no penalty

Norris lap time invalidated: track limits on T4

Norris secured pole: 1:15.096, followed by Piastri (+0.084) and Verstappen (+0.385)

Final Q3 results: NOR, PIA, VER, RUS, TSU, ALB, LEC, HAM, GAS, SAI

2025 Australian GP - Qualifying

Weather: 32°C, track temp: 38°C

Q1:

Bearman reported issues with gearbox on the outlap, returned to pitlane

Lawson locked up and went into the gravel trap, avoided the barrier and kept going

Lawson locked up again on the last 2 turns before the line, cancelled his quali lap and returned to the pitlane

Eliminated: BEA (no time), OCO, LAW, HUL, ANT

2 notes

Video

youtube

Contact: [email protected]

Twitter: https://twitter.com/justanalysis1

Site: https://www.itsjustanalysis.com/ https://www.patreon.com/justanalysis

Monthly 1 oz. Silver giveaway for every 20 Patreon contributors!

Prior trades are still pending and open. A new long idea was identified.

Trade-Ideas

- ($0.005/3-box P&F Chart) - Nov 29th: Buy Stop @ $0.345, Stop Loss @ $0.330, Profit Target @ $0.385

- ($0.1/3-box P&F Chart) - Nov 11th: Buy Stop @ 3-box reversal ($0.36 at time of recording), Stop Loss @ 4 box reversal, Profit Target @ $0.57

- ($0.2/3-box P&F Chart) - Nov 11th: Sell Stop @ $0.30, Stop Loss @ $0.36, Profit Target @ $0.18

Dollar-Cost Average - Updated Oct 25th

- Buy Limit @ $0.2454

- Buy Limit @ $0.1676

0 notes

Text

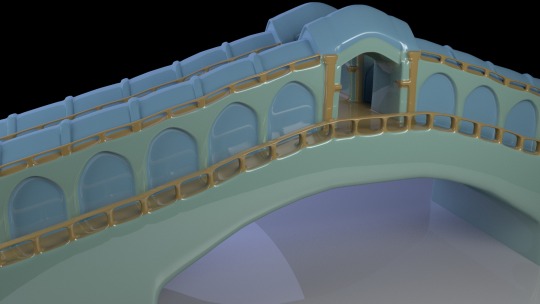

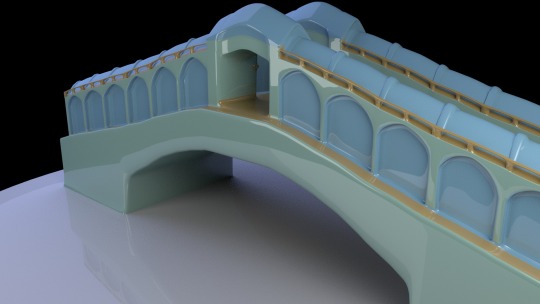

Model check and touch up

- Check over all models to make sure textures are all exactly the same:

- Blinn

- Light pink, 345.64, 0.495, 0.807

- Yellow, 35.987, 0.756, 0.761

- Dark pink, 344.47, 0.74, 0.27

- Blue, 205.38, 0.622, 1

- Green, 156.92, 0.385, 0.876

- White, 156.92, 0, 1

- Touch up any models I’m not happy with

Updated models:

Rialto Bridge: changed the shape of the arches to be more curved and added arches on the back after checking Google Earth and noticing they were there.

0 notes

Text

Regression Models – Assignment 3

The relationship between breast cancer rate and urbanrate is being studied.

Result 1- OLS regression model between breast cancer and Urban rate

The OLS regression model shows that the breast cancer rate increases with the urban rate. With urban rate centered on its mean, the fitted line is breastcancerrate = 37.52 + 0.611 * urbanrate_c. The R-squared is 0.354, indicating that the linear association captures 35.4% of the variability in breast cancer rate.

print ("after centering OLS regression model for the association between urban rate and breastcancer rate ")

reg1 = smf.ols('breastcancerrate ~ urbanrate_c', data=sub1).fit()

print (reg1.summary())

after centering OLS regression model for the association between urban rate and breastcancer rate

OLS Regression Results ==============================================================================

Dep. Variable: breastcancerrate R-squared: 0.354

Model: OLS Adj. R-squared: 0.350

Method: Least Squares F-statistic: 88.32

Date: Thu, 11 Feb 2021 Prob (F-statistic): 5.36e-17

Time: 15:13:54 Log-Likelihood: -706.75

No. Observations: 163 AIC: 1417.

Df Residuals: 161 BIC: 1424.

Df Model: 1

Covariance Type: nonrobust

===============================================================================

coef std err t P>|t| [0.025 0.975]

-------------------------------------------------------------------------------

Intercept 37.5153 1.457 25.752 0.000 34.638 40.392

urbanrate_c 0.6107 0.065 9.398 0.000 0.482 0.739

==============================================================================

Omnibus: 5.949 Durbin-Watson: 1.705

Prob(Omnibus): 0.051 Jarque-Bera (JB): 5.488

Skew: 0.385 Prob(JB): 0.0643

Kurtosis: 2.536 Cond. No. 22.4

==============================================================================

Result 2 - OLS regression model after adding more explanatory variables

Now I added the potential confounders female employment rate, CO2 emissions and alcohol consumption to the model. CO2 emissions and alcohol consumption both have low p-values (< .05), so we can say that both are positively associated with breast cancer rate. After adjusting for these variables, each one-unit increase in urban rate is associated with a 0.49 increase in breast cancer rate, with a 95% confidence interval of 0.36 to 0.62.

The p-value for female employment rate is 0.775, which is not significant, so we cannot reject the null hypothesis of no association between female employment rate and breast cancer rate. Its 95% confidence interval runs from -0.164 to 0.220, which includes 0, so the data are consistent with no association in the population.
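One way to double-check the significance reading is to look at whether each 95% confidence interval contains 0. The sketch below does that with the bounds copied from the regression table for this model; the helper name `interval_excludes_zero` is made up for illustration and is not part of statsmodels.

```python
def interval_excludes_zero(lower, upper):
    """True when a confidence interval lies entirely on one side of zero."""
    return lower > 0 or upper < 0

# 95% confidence bounds copied from the regression output for reg2.
intervals = {
    "urbanrate_c": (0.361, 0.624),
    "femaleemployrate_c": (-0.164, 0.220),
    "co2emissions_c": (4.66e-11, 2.31e-10),
    "alcconsumption_c": (0.950, 2.062),
}

for name, (lower, upper) in intervals.items():
    verdict = "excludes 0" if interval_excludes_zero(lower, upper) else "includes 0"
    print(f"{name}: [{lower}, {upper}] {verdict}")
```

Only the female employment rate interval straddles 0, which matches its p-value of 0.775.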

print ("after centering OLS regression model for the association between famele employed, co2emissions, alcohol consumption,urban rate and breastcancer rate ")

reg2= smf.ols('breastcancerrate ~ urbanrate_c + femaleemployrate_c + co2emissions_c + alcconsumption_c', data=sub1).fit()

print (reg2.summary())

after centering OLS regression model for the association between famele employed, co2emissions, alcohol consumption,urban rate and breastcancer rate

OLS Regression Results ==============================================================================

Dep. Variable: breastcancerrate R-squared: 0.495

Model: OLS Adj. R-squared: 0.482

Method: Least Squares F-statistic: 38.74

Date: Thu, 11 Feb 2021 Prob (F-statistic): 1.43e-22

Time: 15:13:59 Log-Likelihood: -686.69

No. Observations: 163 AIC: 1383.

Df Residuals: 158 BIC: 1399.

Df Model: 4

Covariance Type: nonrobust

======================================================================================

coef std err t P>|t| [0.025 0.975]

--------------------------------------------------------------------------------------

Intercept 37.5153 1.300 28.852 0.000 34.947 40.083

urbanrate_c 0.4925 0.066 7.413 0.000 0.361 0.624

femaleemployrate_c 0.0278 0.097 0.286 0.775 -0.164 0.220

co2emissions_c 1.389e-10 4.68e-11 2.971 0.003 4.66e-11 2.31e-10

alcconsumption_c 1.5061 0.282 5.349 0.000 0.950 2.062

==============================================================================

Omnibus: 2.965 Durbin-Watson: 1.832

Prob(Omnibus): 0.227 Jarque-Bera (JB): 2.942

Skew: 0.324 Prob(JB): 0.230

Kurtosis: 2.883 Cond. No. 2.83e+10

Result 3 – Scatter plot for linear and Quadratic model

Here I created one scatter plot with a linear fit and another with a quadratic fit to see if the relationship is curvilinear. It may be quadratic.

# plot a scatter plot for linear

scat1 = seaborn.regplot(x="urbanrate", y="breastcancerrate", fit_reg=True, data=sub1)

plt.xlabel('urbanrate')

plt.ylabel('breastcancerrate')

plt.title('Scatterplot for the Association Between urbanrate Rate and breastcancerrate')

# plot a scatter plot with the quadratic portion added to see if it is curvilinear

scat1 = seaborn.regplot(x="urbanrate", y="breastcancerrate", order=2, fit_reg=True, data=sub1)

plt.xlabel('urbanrate')

plt.ylabel('breastcancerrate')

plt.title('Scatterplot for the Association Between urbanrate Rate and breastcancerrate')

Result 4 - OLS regression model with quadratic

Here the R-squared has improved from .354 to .371. A slightly higher value.

print ("after centering OLS regression model for the association between urban rate and breastcancer rate and adding qudratic portion")

reg3= smf.ols('breastcancerrate ~ urbanrate_c + I(urbanrate_c**2)', data=sub1).fit()

print (reg3.summary())

after centering OLS regression model for the association between urban rate and breastcancer rate and adding qudratic portion

OLS Regression Results ==============================================================================

Dep. Variable: breastcancerrate R-squared: 0.371

Model: OLS Adj. R-squared: 0.363

Method: Least Squares F-statistic: 47.13

Date: Fri, 12 Feb 2021 Prob (F-statistic): 8.06e-17

Time: 07:52:49 Log-Likelihood: -704.64

No. Observations: 163 AIC: 1415.

Df Residuals: 160 BIC: 1425.

Df Model: 2

Covariance Type: nonrobust

=======================================================================================

coef std err t P>|t| [0.025 0.975]

---------------------------------------------------------------------------------------

Intercept 34.6381 2.014 17.201 0.000 30.661 38.615

urbanrate_c 0.6250 0.065 9.656 0.000 0.497 0.753

I(urbanrate_c ** 2) 0.0057 0.003 2.048 0.042 0.000 0.011

==============================================================================

Omnibus: 3.684 Durbin-Watson: 1.705

Prob(Omnibus): 0.159 Jarque-Bera (JB): 3.734

Skew: 0.345 Prob(JB): 0.155

Kurtosis: 2.728 Cond. No. 1.01e+03

Result 5 - OLS regression model with quadratic and another variable

Here I have added the second-order urban rate term and alcohol consumption rate to the model. The p-value for alcohol consumption is significant, meaning there is an association between alcohol consumption and breast cancer rate. The R-squared has increased to 49.8%, up from 37.1% for the quadratic-only model and slightly above the 49.5% of the model in Result 2.

print ("after centering OLS regression model for the association between urban rate and breastcancer rate after adding alcohol consumption rate ")

reg4 = smf.ols('breastcancerrate ~ urbanrate_c + I(urbanrate_c**2) + alcconsumption_c ', data=sub1).fit()

print (reg4.summary())

after centering OLS regression model for the association between urban rate and breastcancer rate after adding alcohol consumption rate

OLS Regression Results

Dep. Variable: breastcancerrate R-squared: 0.498

Model: OLS Adj. R-squared: 0.488

Method: Least Squares F-statistic: 52.51

Date: Fri, 12 Feb 2021 Prob (F-statistic): 1.21e-23

Time: 08:49:00 Log-Likelihood: -686.27

No. Observations: 163 AIC: 1381.

Df Residuals: 159 BIC: 1393.

Df Model: 3

Covariance Type: nonrobust

coef std err t P>|t| [0.025 0.975]

Intercept 33.4837 1.814 18.459 0.000 29.901 37.066

urbanrate_c 0.5203 0.060 8.627 0.000 0.401 0.639

I(urbanrate_c ** 2) 0.0080 0.003 3.169 0.002 0.003 0.013

alcconsumption_c 1.7136 0.270 6.340 0.000 1.180 2.247

Omnibus: 2.069 Durbin-Watson: 1.691

Prob(Omnibus): 0.355 Jarque-Bera (JB): 1.645

Skew: 0.216 Prob(JB): 0.439

Kurtosis: 3.235 Cond. No. 1.01e+03

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 1.01e+03. This might indicate that there are

strong multicollinearity or other numerical problems.

Result 6 - QQPlot

In this regression model, the residual is the difference between the predicted and the actual observed breast cancer rate for each country. Here I have plotted the residuals. The Q-Q plot for our regression model shows that the residuals generally follow a straight line, but deviate at the lower and higher quantiles. This indicates that the residuals do not follow a perfectly normal distribution. This could mean that the curvilinear association that we observed in our scatter plot may not be fully estimated by the quadratic urban rate term. There might be other explanatory variables that we could consider including in our model to improve estimation of the observed curvilinearity.

#Q-Q plot for normality

fig4=sm.qqplot(reg4.resid, line='r')

Result 7 – Plot of Residuals

Here there is 1 point below -3 standard deviations of the residuals, 3 points (1.84%) above 2.5 standard deviations, and 8 points (4.9%) above 2 standard deviations. This suggests that the fit of the model is relatively poor and could be improved. Maybe there are other explanatory variables that could be included in the model.

# simple plot of residuals

stdres=pandas.DataFrame(reg4.resid_pearson)

plt.plot(stdres, 'o', ls='None')

l = plt.axhline(y=0, color='r')

plt.ylabel('Standardized Residual')

plt.xlabel('Observation Number')
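The percentages quoted for this plot can be counted instead of read off the chart. Below is a minimal sketch: `share_beyond` is a helper name invented here, it counts on absolute value (an assumption about how the cut-offs are meant), and the sample list is made-up illustration data, not the assignment's residuals. In the assignment, the input would be reg4.resid_pearson.

```python
import numpy as np

def share_beyond(std_resid, threshold):
    """Fraction of standardized residuals with absolute value above threshold."""
    values = np.asarray(std_resid, dtype=float)
    return float(np.mean(np.abs(values) > threshold))

# Made-up values for illustration only (not the gapminder residuals).
example_resid = [0.1, -0.4, 2.1, -3.2, 1.0, 2.6, -0.7, 0.3]

for cutoff in (2.0, 2.5):
    share = share_beyond(example_resid, cutoff)
    print(f"share beyond {cutoff}: {share:.1%}")
```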

Result 8 – Residual Plot and Partial Regression Plot

The plot in the upper right hand corner shows the residuals for each observation at different values of alcohol consumption. It looks like the absolute values of the residuals are noticeably higher at lower alcohol consumption rates, get smaller (closer to 0) as alcohol consumption increases, and then increase again at higher levels of alcohol consumption. This model does not predict breast cancer rate as well for countries that have either high or low levels of alcohol consumption. Maybe there is a curvilinear relationship between alcohol consumption rate and breast cancer rate.

The partial regression plot is in the lower left hand corner. The residuals are spread out in a random pattern around the partial regression line, and many of them are pretty far from this line, indicating a great deal of breast cancer rate prediction error. Although alcohol consumption rate shows a statistically significant association with breast cancer rate, this association is pretty weak after controlling for urban rate.

# additional regression diagnostic plots

fig2 = plt.figure(figsize=(12,8))

fig2 = sm.graphics.plot_regress_exog(reg4, "alcconsumption_c", fig=fig2)

Result 9 – Leverage Plot

In the leverage plot there are a few outliers on the left side with standardized residuals beyond 2 standard deviations, but their leverage is close to 0, meaning they do not have much influence on the model. There is one observation on the right, labeled 199, that stands out for its high leverage.

# leverage plot

fig3=sm.graphics.influence_plot(reg4, size=8)

print(fig3)

Complete Python Code

# -*- coding: utf-8 -*-

"""

Created on Mon Feb 8 20:37:33 2021

@author: GB8PM0

"""

#%%

import pandas

import numpy

import seaborn

import scipy

import matplotlib.pyplot as plt

import statsmodels.formula.api as smf

import statsmodels.api as sm

# raw string so the backslashes in the Windows path are not treated as escape sequences
data = pandas.read_csv(r'C:\Training\Data Analysis\gapminder.csv', low_memory=False)

#setting variables you will be working with to numeric

data['breastcancerrate'] = pandas.to_numeric(data['breastcancerper100th'], errors='coerce')

data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce')

data['femaleemployrate'] = pandas.to_numeric(data['femaleemployrate'], errors='coerce')

data['co2emissions'] = pandas.to_numeric(data['co2emissions'], errors='coerce')

data['alcconsumption'] = pandas.to_numeric(data['alcconsumption'], errors='coerce')

# listwise deletion of missing values

sub1 = data[['urbanrate', 'breastcancerrate','femaleemployrate', 'co2emissions','alcconsumption' ]].dropna()

# center the explanatory variable urbanrate by subtracting mean

sub1['urbanrate_c'] = (sub1['urbanrate'] - sub1['urbanrate'].mean())

sub1['femaleemployrate_c'] = (sub1['femaleemployrate'] - sub1['femaleemployrate'].mean())

sub1['co2emissions_c'] = (sub1['co2emissions'] - sub1['co2emissions'].mean())

sub1['alcconsumption_c'] = (sub1['alcconsumption'] - sub1['alcconsumption'].mean())

print ("after centering OLS regression model for the association between urban rate and breastcancer rate ")

reg1 = smf.ols('breastcancerrate ~ urbanrate_c', data=sub1).fit()

print (reg1.summary())

#%%

#after adding other variables

print ("after centering OLS regression model for the association between famele employed, co2emissions, alcohol consumption,urban rate and breastcancer rate ")

reg2= smf.ols('breastcancerrate ~ urbanrate_c + femaleemployrate_c + co2emissions_c + alcconsumption_c', data=sub1).fit()

print (reg2.summary())

#%%

# plot a scatter plot for linear

scat1 = seaborn.regplot(x="urbanrate", y="breastcancerrate", fit_reg=True, data=sub1)

plt.xlabel('urbanrate')

plt.ylabel('breastcancerrate')

plt.title('Scatterplot for the Association Between urbanrate Rate and breastcancerrate')

# plot a scatter plot with the quadratic portion added to see if it is curvilinear

scat1 = seaborn.regplot(x="urbanrate", y="breastcancerrate", order=2, fit_reg=True, data=sub1)

plt.xlabel('urbanrate')

plt.ylabel('breastcancerrate')

plt.title('Scatterplot for the Association Between urbanrate Rate and breastcancerrate')

#%%

# add the quadratic portion to see if it is curvilinear

print ("after centering OLS regression model for the association between urban rate and breastcancer rate and adding qudratic portion")

reg3= smf.ols('breastcancerrate ~ urbanrate_c + I(urbanrate_c**2)', data=sub1).fit()

print (reg3.summary())

#%%

print ("after centering OLS regression model for the association between urban rate and breastcancer rate after adding alcohol consumption rate ")

reg4 = smf.ols('breastcancerrate ~ urbanrate_c + I(urbanrate_c**2) + alcconsumption_c ', data=sub1).fit()

print (reg4.summary())

#%%

#Q-Q plot for normality

fig4=sm.qqplot(reg4.resid, line='r')

#%%

# simple plot of residuals

stdres=pandas.DataFrame(reg4.resid_pearson)

plt.plot(stdres, 'o', ls='None')

l = plt.axhline(y=0, color='r')

plt.ylabel('Standardized Residual')

plt.xlabel('Observation Number')

#%%

# additional regression diagnostic plots

fig2 = plt.figure(figsize=(12,8))

fig2 = sm.graphics.plot_regress_exog(reg4, "alcconsumption_c", fig=fig2)

#%%

# leverage plot

fig3=sm.graphics.influence_plot(reg4, size=8)

print(fig3)

0 notes

Text

FP2: Verstappen heads Ferrari duo in second Miami practice

FP2: Verstappen heads Ferrari duo in second Miami practice By Balazs Szabo on 06 May 2023, 00:54 Following the eye-catching perfromance from the Mercedes drivers in the opening practice, reigning world champion Max Verstappen bounced back in the afternoon session to head Ferrari's Carlos Sainz and Charles Leclerc with a new unofficial track record. Although he was overtaken by the Mercedes drivers in the dying minutes of the first practice session, Max Verstappen appeared to be the fastest earlier which he could confirm when drivers completed the second 60-minute outing on the resurfaced Miami International Autodrome. The reigning champion set an unofficial lap record in Miami with a 1m27.930s before displaying eye-catching long run performance on high fuel. The Ferrari duo of Carlos Sainz and Charles Leclerc took second and third respectively, but were unable to get close tot he championship leader, finishing the second almost four tenths of a second. The Monegasque ended the session prematurely, bringing out the only red flag of FP2 when he wrestled with a snap of oversteer, locked up and sent his Ferrari SF-23 into the barriers at Turn 7. Following his win in last weekend’s F1 Baku Sprint, Sergio Perez kicked off his weekend in low-key form, but managed to up his game in the second practice, taking fourth behind the Ferraris. With his upgraded McLaren, Lando Norris briefly led the session when soft-tyred running began which was mainly down to his impressive time in the tricky first sector. In the end, the Briton ended up sixth fastest behind Aston Martin’s Fernando Alonso and ahead of Mercedes’ Lewis Hamilton. The Anglo-German outfit displayed great pace in the opening session, ending the first outing with a one-two, but fell back in the second session. Hamilton’s teammate George Russell took 15th, running wide at Turn 11 on his high fuel run. 
Alpine were unable to show the effect of their new package in Baku because of reliability issues, but they appeared to be in the mix for point-scoring places today, with Esteban Ocon and Pierre Gasly taking 9th and 11th respectively. AlphaTauri’s Yuki Tsunoda took P18, in front of his teammate Nyck de Vries. Williams racer Alexander Albon ended in a strong P10, while his teammate Logan Sargeant rounded out the standings, around two seconds off the pace.

Results

| Pos. | No. | Driver | Car | Time | Gap | Laps |
|---|---|---|---|---|---|---|
| 1 | 1 | Max Verstappen | Red Bull Racing Honda RBPT | 1:27.930 | | 23 |
| 2 | 55 | Carlos Sainz | Ferrari | 1:28.315 | +0.385s | 26 |
| 3 | 16 | Charles Leclerc | Ferrari | 1:28.398 | +0.468s | 20 |
| 4 | 11 | Sergio Perez | Red Bull Racing Honda RBPT | 1:28.419 | +0.489s | 24 |
| 5 | 14 | Fernando Alonso | Aston Martin Aramco Mercedes | 1:28.660 | +0.730s | 25 |
| 6 | 4 | Lando Norris | McLaren Mercedes | 1:28.741 | +0.811s | 24 |
| 7 | 44 | Lewis Hamilton | Mercedes | 1:28.858 | +0.928s | 23 |
| 8 | 18 | Lance Stroll | Aston Martin Aramco Mercedes | 1:28.930 | +1.000s | 23 |
| 9 | 31 | Esteban Ocon | Alpine Renault | 1:28.937 | +1.007s | 25 |
| 10 | 23 | Alexander Albon | Williams Mercedes | 1:29.046 | +1.116s | 26 |
| 11 | 10 | Pierre Gasly | Alpine Renault | 1:29.098 | +1.168s | 22 |
| 12 | 20 | Kevin Magnussen | Haas Ferrari | 1:29.171 | +1.241s | 22 |
| 13 | 24 | Zhou Guanyu | Alfa Romeo Ferrari | 1:29.181 | +1.251s | 27 |
| 14 | 77 | Valtteri Bottas | Alfa Romeo Ferrari | 1:29.189 | +1.259s | 26 |
| 15 | 63 | George Russell | Mercedes | 1:29.216 | +1.286s | 21 |
| 16 | 81 | Oscar Piastri | McLaren Mercedes | 1:29.339 | +1.409s | 24 |
| 17 | 27 | Nico Hulkenberg | Haas Ferrari | 1:29.393 | +1.463s | 22 |
| 18 | 22 | Yuki Tsunoda | AlphaTauri Honda RBPT | 1:29.613 | +1.683s | 25 |
| 19 | 21 | Nyck De Vries | AlphaTauri Honda RBPT | 1:29.928 | +1.998s | 25 |
| 20 | 2 | Logan Sargeant | Williams Mercedes | 1:30.038 | +2.108s | 27 |

via F1Technical.net. Motorsport news https://www.f1technical.net/news/
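The Gap figures in the results are each driver's best lap minus the fastest lap of the session. As a minimal sketch (the helper names `lap_seconds` and `gap_to_leader` are made up for illustration), the arithmetic can be checked like this:

```python
def lap_seconds(lap: str) -> float:
    """Convert a lap time written as 'M:SS.mmm' into seconds."""
    minutes, seconds = lap.split(":")
    return int(minutes) * 60 + float(seconds)


def gap_to_leader(lap: str, leader: str) -> float:
    """Gap in seconds between a lap and the session-best lap, to 3 decimals."""
    return round(lap_seconds(lap) - lap_seconds(leader), 3)


# Sainz's best lap against Verstappen's session-best lap
print(gap_to_leader("1:28.315", "1:27.930"))  # 0.385
```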

0 notes

Link

via Politics – FiveThirtyEight

It’s hard not to compare the 2018 midterms to the 2010 midterms. A controversial president sits in the Oval Office. Resistance to his major policies spurs protests and grassroots activism. Special-election results portend a massive electoral wave that threatens to kneecap his ability to govern. So with the first primaries of 2018 taking place this month, will another 2010 phenomenon, the tea party, reappear? Quite possibly, only this time on the left.

A loosely defined mélange of grassroots conservative activists and hard-right political committees most prominent from 2009 to 2014, the tea party famously demanded ideological purity out of Republican candidates for elected office. In election after election during this period, tea party voters rejected moderate or establishment candidates in Republican primaries in favor of hardcore conservatives — costing the GOP more than one important race and pushing the party to the right in the process.

This Tuesday could mark the first time in 2018 that a moderate incumbent Democrat loses a primary bid to a more extreme challenger. Marie Newman, a progressive running for Illinois’s 3rd Congressional District, has argued there is no longer a place in the Democratic Party for Rep. Dan Lipinski’s anti-abortion, anti-same-sex-marriage views. Lipinski, however, has accused Newman of dismantling the party’s big tent and fomenting a “tea party of the left.”

But how accurate is that comparison really? One person’s “tea party rebellion” is another person’s justified excision of a Democrat or Republican “in name only.” To get a sense for whether Newman’s campaign against Lipinski looks more like part of a movement to pull the party leftward or simply an attempt to bring the district in line with the Democratic mainstream, we decided to look back at the original tea party — or, more accurately, since the term “tea party” is vague and sloppily applied, recent Republican primary challenges.

The following is a list of Republican incumbents in the U.S. Senate and House who have lost a primary election since 2010. To ensure that we’re capturing only Newman-style campaigns (that is, candidates who are challenging incumbents from the extreme wings of the party), we’re not including incumbents who lost to other incumbents as a result of redistricting throwing them together, nor are we counting two incumbents who were explicitly primaried from the center.

Republican incumbents who were beaten from the right

DW-Nominate ideology scores of incumbent Republicans who lost primary challenges from the right and the scores that the challengers later earned in Congress (if they were elected), in races since 2010

| Year | District | Partisan lean | Defeated member | Score | New nominee | Score |
|---|---|---|---|---|---|---|
| ’10 | AK-SEN | R+27 | Lisa Murkowski | 0.208 | Joe Miller | n/a |
| ’10 | AL-05 | R+27 | Parker Griffith* | 0.385 | Mo Brooks | 0.600 |
| ’10 | SC-04 | R+29 | Bob Inglis | 0.518 | Trey Gowdy | 0.663 |
| ’10 | UT-SEN | R+37 | Bob Bennett | 0.331 | Mike Lee | 0.919 |
| ’12 | FL-03 | R+28 | Cliff Stearns | 0.554 | Ted Yoho | 0.703 |
| ’12 | IN-SEN | R+12 | Richard Lugar | 0.304 | Richard Mourdock | n/a |
| ’12 | OH-02 | R+15 | Jean Schmidt | 0.467 | Brad Wenstrup | 0.577 |
| ’12 | OK-01 | R+36 | John Sullivan | 0.513 | Jim Bridenstine | 0.690 |
| ’14 | TX-04 | R+52 | Ralph Hall | 0.424 | John Ratcliffe | 0.746 |
| ’14 | VA-07 | R+19 | Eric Cantor | 0.518 | David Brat | 0.838 |
| ’16 | VA-02 | R+6 | Randy Forbes | 0.407 | Scott Taylor | 0.474 |
| ’17 | AL-SEN | R+29 | Luther Strange | 0.560 | Roy Moore | n/a |

* During his time in the House, Parker Griffith switched parties from Democrat to Republican. The DW-Nominate score listed is for his time as a Republican.

Partisan lean is the average difference between how the constituency voted and how the country voted overall in the two most recent presidential elections, with the more recent election weighted 75 percent and the less recent one weighted 25 percent.
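The weighting in that definition is simple arithmetic. The sketch below uses invented margins for a hypothetical district, and the function name `partisan_lean` and the sign convention (positive means more Republican than the nation) are assumptions made for illustration, not FiveThirtyEight's own code:

```python
def partisan_lean(recent_gap: float, older_gap: float) -> float:
    """Weighted average of two local-minus-national margin gaps, in points.

    The more recent presidential election counts 75 percent,
    the less recent one 25 percent.
    """
    return 0.75 * recent_gap + 0.25 * older_gap


# Hypothetical district: R+16 locally vs. R+2 nationally in the recent
# election, R+10 locally vs. R+4 nationally in the one before it.
recent_gap = 16.0 - 2.0   # 14 points more Republican than the nation
older_gap = 10.0 - 4.0    # 6 points more Republican than the nation

print(partisan_lean(recent_gap, older_gap))  # 12.0, i.e. "R+12"
```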

DW-Nominate scores are a measurement of how liberal or conservative members of Congress are on a scale from 1 (most conservative) to -1 (most liberal). Because DW-Nominate is based on congressional voting records, it is unavailable for politicians who never served in Congress.

Source: Greg Giroux, Ballotpedia, Daily Kos Elections, Swing State Project, Dave Leip’s Atlas of U.S. Presidential Elections, Voteview

This isn’t an exhaustive list of tea-party-style challengers (for example, it doesn’t include Republicans shooting for open seats, such as Christine O’Donnell in Delaware in 2010, or hard-liners who won GOP primaries to take on Democratic incumbents, such as Sharron Angle in Nevada, also in 2010), but it’s a good cross section. The reason it’s useful to look only at Republican incumbents who went down to defeat is that it allows us to use DW-Nominate, a data set that quantifies how liberal or conservative members of Congress are on a scale from 1 (most conservative) to -1 (most liberal). Since the score is based on congressional voting records, our focus on races in which incumbents lost means that both the losing candidate and, in most cases, the winning candidate have a DW-Nominate score.

When you use DW-Nominate to try to quantify this slice of the tea party movement, what you quickly see is that there’s barely a pattern to it at all. “Tea party” candidates primaried plenty of moderate Republicans, such as Sen. Lisa Murkowski (a DW-Nominate score of .208) and party-switching former Rep. Parker Griffith (.385 in his time as a Republican). But they also toppled plenty of solid conservatives, including former Reps. Eric Cantor and Bob Inglis (both .518). Insurgents found success in moderate jurisdictions like Indiana (12 percentage points more Republican than the nation as a whole at the time of the election in question) as well as dark-red districts like Texas’s 4th (R+52).

If, as this data suggests, the only prerequisite for being called a tea partier is to attack your Republican opponent from the right, then, sure, Newman is waging the mirror image of a tea party challenge. But that’s a fairly lazy conclusion; it lumps together all the primary challenges listed above when the data shows there are clear differences between them. For example, now-Sen. Mike Lee’s 2010 primary challenge to then-Sen. Bob Bennett in Utah made a lot of sense because of Bennett’s moderate DW-Nominate score (.331), the high contrast with Lee’s positions (he went on to earn a score of .919) and the fact that Utah was a very conservative state (37 points more Republican than the nation as a whole). By contrast, now-Rep. Brad Wenstrup’s defeat of then-Rep. Jean Schmidt in Ohio’s 2nd District in 2012 produced only a slightly more conservative representative (.577 to .467), and in a relatively moderate district to boot (R+15).
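The contrast between the Utah and Ohio races can be made concrete by computing how far each successful challenger moved the seat's DW-Nominate score. This is only an illustrative sketch: the scores are copied from the table above, races whose winner never earned a score are left out, and the variable names are invented here.

```python
# (year, district, defeated member, old score, new nominee, new score)
races = [
    ("'10", "AL-05", "Parker Griffith", 0.385, "Mo Brooks", 0.600),
    ("'10", "SC-04", "Bob Inglis", 0.518, "Trey Gowdy", 0.663),
    ("'10", "UT-SEN", "Bob Bennett", 0.331, "Mike Lee", 0.919),
    ("'12", "FL-03", "Cliff Stearns", 0.554, "Ted Yoho", 0.703),
    ("'12", "OH-02", "Jean Schmidt", 0.467, "Brad Wenstrup", 0.577),
    ("'12", "OK-01", "John Sullivan", 0.513, "Jim Bridenstine", 0.690),
    ("'14", "TX-04", "Ralph Hall", 0.424, "John Ratcliffe", 0.746),
    ("'14", "VA-07", "Eric Cantor", 0.518, "David Brat", 0.838),
    ("'16", "VA-02", "Randy Forbes", 0.407, "Scott Taylor", 0.474),
]

# Rightward shift = challenger's later score minus the incumbent's score
shifts = sorted(
    ((round(new - old, 3), district, winner)
     for _, district, _, old, winner, new in races),
    reverse=True,
)

for shift, district, winner in shifts:
    print(f"{district:7s} {winner:16s} +{shift:.3f}")
```

Sorted this way, Mike Lee's race in Utah shows the largest shift (0.588) and Scott Taylor's in Virginia's 2nd the smallest (0.067), with Brad Wenstrup's Ohio race (0.110) near the bottom.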

Lipinski’s DW-Nominate score is -.234, making him the 17th-most-moderate Democrat in the House. Lipinski is also a relatively strong ally of President Trump, at least as far as Democrats go. His Trump score (FiveThirtyEight’s measure of how often each member of Congress votes with the president) is 35.3 percent, 11th-highest among House Democrats. In other words, he is indeed notably more centrist than most members of his party. Going by DW-Nominate, this would place Newman’s challenge of Lipinski in the same ballpark as Joe Miller’s challenge of Murkowski in the Republican primary for Alaska’s 2010 U.S. Senate race. That’s one of the tea party cases in which it’s easier to see the ideological origins.

But the Illinois 3rd District is also only 12 points more Democratic than the nation as a whole. Just 39 Democratic-held districts are more conservative. If you subscribe to the notion that districts toward the center should have more centrist representatives, then Lipinski is still on the moderate side, but he’s not that moderate. For instance, Lipinski’s predicted Trump score is 33.7 percent, meaning that he votes with Trump almost exactly as much as we would expect given the political lean back home. According to DW-Nominate, several other Democratic House members are more moderate than he is and represent more liberal districts, including fellow Illinois Reps. Bill Foster and Brad Schneider. Neither of them is facing a primary challenger on Tuesday.

If you consider the district’s partisanship, then maybe Newman’s campaign is more like Richard Mourdock’s Republican primary challenge to incumbent Sen. Richard Lugar in Indiana’s 2012 U.S. Senate race: According to our partisan lean calculations, Indiana was as Republican then as the Illinois 3rd is Democratic now. Lugar was indeed moderate enough (a .304 DW-Nominate score) that a primary challenge made some sense on its own, but Mourdock infamously ended up losing that general election.

All things considered, it’s debatable whether Newman’s challenge of Lipinski is within reason according to these ideological scores or out of line. Lipinski is indeed a Democratic nonconformist who can’t reliably be counted on to vote against the Trump agenda — but he’s not wildly out of sync with his district either. The voters will have to decide how much heterodoxy they can tolerate on Tuesday.

3 notes


Text

Assignment-2.1 (Course-02 : Week-01)

Course – 02, Week - 01

Assignment – 2_1

Dataset : Gapminder

Variables under consideration :

· urbanrate (explanatory variable for both research questions) : it is collapsed into ten groups, [0-10], [10-20], …, [80-90] and [90-100].

· alcconsumption (response variable for 1st research question)

· lifeexpectancy (response variable for 2nd research question)

Redefining Research Questions and Related Hypothesis

Research Question – 01

Does the per-capita alcohol consumption of a country depend on its urban rate?

H0 : For all groups of the urban-rate, the group-wise mean per-capita alcohol consumptions are equal

HA : The mean per-capita alcohol consumption differs for at least one urban-rate group

Research Question – 02

Does the average life expectancy of a newborn in a country depend on its urban rate?

H0 : For all groups of the urban-rate, the group-wise mean life expectancies are equal

HA : The mean life expectancy differs for at least one urban-rate group

Code

# -*- coding: utf-8 -*-

"""

Created on Fri Jun 19 16:35:22 2020

@author: ASUS

"""

# Assignment_3

import pandas

import numpy

import seaborn

import matplotlib.pyplot as plt

import statsmodels.formula.api as smf

import statsmodels.stats.multicomp as multi

#importing data

data = pandas.read_csv('Dataset_gapminder.csv', low_memory=False)

#Set PANDAS to show all columns in DataFrame

pandas.set_option('display.max_columns', None)

#Set PANDAS to show all rows in DataFrame

pandas.set_option('display.max_rows', None)

# bug fix for display formats to avoid run time errors

pandas.set_option('display.float_format', lambda x:'%f'%x)

#printing number of rows and columns

print ('Rows')

print (len(data))

print ('columns')

print (len(data.columns))

#------- Variables under consideration------#

# alcconsumption

# urbanrate

# lifeexpectancy

# Setting values to numeric

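# pandas.to_numeric replaces convert_objects(convert_numeric=True), which the

# original run used and which was deprecated and later removed from pandas;

# errors='coerce' turns unparseable entries into NaN

data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce')

data['alcconsumption'] = pandas.to_numeric(data['alcconsumption'], errors='coerce')

data['lifeexpectancy'] = pandas.to_numeric(data['lifeexpectancy'], errors='coerce')

# work on a copy so the added column does not modify the raw data

data2 = data.copy()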

# urbanrate

data2['urbgrps']=pandas.cut(data2.urbanrate,[0,10,20,30,40,50,60,70,80,90,100])

data2["urbgrps"] = data2["urbgrps"].astype('category')

# ------Analysis -------#

#------Urbgrps vs. alcconsumption---------#

print ('Urbgrps vs. alcconsumption')

#-----Descriptive analysis----#

print ('Descriptive data analysis : ')

print ('C->Q bar graph')

# catplot is the current name for factorplot; errorbar=None needs seaborn >= 0.12 (use ci=None on older versions)

seaborn.catplot(x='urbgrps', y='alcconsumption', data=data2, kind="bar", errorbar=None)

plt.ylabel('Per capita alcohol consumption in a year')

plt.title('Bar chart of mean per-capita alcohol consumption by urban-rate group')

print ('Seems H0 is to be rejected')

#----Inferential statistics-----#

print ('ANOVA-F test')

sub1_1 = data2[['alcconsumption', 'urbgrps']].dropna()

model1 = smf.ols(formula='alcconsumption ~ C(urbgrps)', data=sub1_1).fit()

print (model1.summary())

m1_1= sub1_1.groupby('urbgrps').mean()

print (m1_1)

#-----Post hoc----#

print ('Post-hoc test')

post_h_1 = multi.MultiComparison(sub1_1['alcconsumption'], sub1_1['urbgrps'])

res1 = post_h_1.tukeyhsd()

print(res1.summary())

# ------- urbgrps vs. lifexpectancy -------#

print ('urbgrps vs. lifexpectancy')

print ('Descriptive statistical analysis')

print ('C->Q bar graph')

# catplot is the current name for factorplot; errorbar=None needs seaborn >= 0.12 (use ci=None on older versions)

seaborn.catplot(x='urbgrps', y='lifeexpectancy', data=data2, kind="bar", errorbar=None)

plt.ylabel('Life Expectancy')

plt.title('Bar chart of mean life expectancy by urban-rate group')

#----Inferential statistics-----#

print ('ANOVA-F test')

sub1_2 = data2[['lifeexpectancy', 'urbgrps']].dropna()

model2 = smf.ols(formula='lifeexpectancy ~ C(urbgrps)', data=sub1_2).fit()

print (model2.summary())

m1_2= sub1_2.groupby('urbgrps').mean()

print (m1_2)

#-----Post hoc----#

print ('Post-hoc test')

post_h_2 = multi.MultiComparison(sub1_2['lifeexpectancy'], sub1_2['urbgrps'])

res2 = post_h_2.tukeyhsd()

print(res2.summary())
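A note on the binning step: pandas.cut keeps every bin as a category level even when no country falls into it, and the group means in the output below show that the [0-10] group is empty. One likely reason for the "design matrix is singular" warning in the regression summaries, stated here as an assumption rather than something verified on this dataset, is that C(urbgrps) still carries that empty level. A minimal sketch with made-up urban-rate values shows how the unused level could be dropped before fitting:

```python
import pandas as pd

# Hypothetical urban-rate values; none of them falls in the (0, 10] bin
urbanrate = pd.Series([15.0, 25.0, 37.5, 64.2, 95.0])
bins = list(range(0, 101, 10))

groups = pd.cut(urbanrate, bins)
# All ten bins are kept as category levels, including the empty ones
print(len(groups.cat.categories))

# Dropping unused levels leaves only the bins that actually contain data
trimmed = groups.cat.remove_unused_categories()
print(list(trimmed.cat.categories))
```

Applied to the script above, this would mean calling `.cat.remove_unused_categories()` on `data2['urbgrps']` after the NaN rows are dropped; the fitted group means should not change, only the reference level and the warning.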

Output

Rows

213

columns

16

Urbgrps vs. alcconsumption

Descriptive data analysis :

C->Q bar graph

Seems H0 is to be rejected

ANOVA-F test

__main__:40: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()

For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.

__main__:41: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()

For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.

__main__:42: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()

For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.

OLS Regression Results

==============================================================================

Dep. Variable: alcconsumption R-squared: 0.143

Model: OLS Adj. R-squared: 0.103

Method: Least Squares F-statistic: 3.625

Date: Tue, 07 Jul 2020 Prob (F-statistic): 0.000634

Time: 15:10:23 Log-Likelihood: -537.03

No. Observations: 183 AIC: 1092.

Df Residuals: 174 BIC: 1121.

Df Model: 8

Covariance Type: nonrobust

===================================================================================================================

coef std err t P>|t| [0.025 0.975]

-------------------------------------------------------------------------------------------------------------------

Intercept 5.8516 0.328 17.843 0.000 5.204 6.499

C(urbgrps)[T.Interval(10, 20, closed='right')] -0.5483 1.249 -0.439 0.661 -3.014 1.917

C(urbgrps)[T.Interval(20, 30, closed='right')] -1.9921 0.949 -2.100 0.037 -3.865 -0.119

C(urbgrps)[T.Interval(30, 40, closed='right')] -1.1990 0.930 -1.289 0.199 -3.035 0.637

C(urbgrps)[T.Interval(40, 50, closed='right')] 0.1864 0.990 0.188 0.851 -1.767 2.140

C(urbgrps)[T.Interval(50, 60, closed='right')] 1.6110 0.930 1.731 0.085 -0.225 3.447

C(urbgrps)[T.Interval(60, 70, closed='right')] 3.0216 0.819 3.691 0.000 1.406 4.637

C(urbgrps)[T.Interval(70, 80, closed='right')] 2.0307 0.949 2.140 0.034 0.158 3.903

C(urbgrps)[T.Interval(80, 90, closed='right')] 3.2649 0.990 3.299 0.001 1.312 5.218

C(urbgrps)[T.Interval(90, 100, closed='right')] -0.5236 1.361 -0.385 0.701 -3.209 2.162

==============================================================================

Omnibus: 8.172 Durbin-Watson: 1.832

Prob(Omnibus): 0.017 Jarque-Bera (JB): 8.039

Skew: 0.500 Prob(JB): 0.0180

Kurtosis: 3.233 Cond. No. 1.37e+16

==============================================================================

Warnings:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The smallest eigenvalue is 1.1e-30. This might indicate that there are

strong multicollinearity problems or that the design matrix is singular.

alcconsumption

urbgrps

(0, 10] nan

(10, 20] 5.303333

(20, 30] 3.859545

(30, 40] 4.652609

(40, 50] 6.038000

(50, 60] 7.462609

(60, 70] 8.873226

(70, 80] 7.882273

(80, 90] 9.116500

(90, 100] 5.328000

Post-hoc test

Multiple Comparison of Means - Tukey HSD, FWER=0.05

========================================================

group1 group2 meandiff p-adj lower upper reject

--------------------------------------------------------

(10, 20] (20, 30] -1.4438 0.9 -6.7067 3.8191 False

(10, 20] (30, 40] -0.6507 0.9 -5.8731 4.5716 False

(10, 20] (40, 50] 0.7347 0.9 -4.6203 6.0896 False

(10, 20] (50, 60] 2.1593 0.9 -3.0631 7.3816 False

(10, 20] (60, 70] 3.5699 0.381 -1.4161 8.5559 False

(10, 20] (70, 80] 2.5789 0.8146 -2.684 7.8418 False

(10, 20] (80, 90] 3.8132 0.389 -1.5418 9.1681 False

(10, 20] (90, 100] 0.0247 0.9 -6.2546 6.3039 False

(20, 30] (30, 40] 0.7931 0.9 -3.5803 5.1665 False

(20, 30] (40, 50] 2.1785 0.8319 -2.3525 6.7094 False

(20, 30] (50, 60] 3.6031 0.1993 -0.7703 7.9765 False

(20, 30] (60, 70] 5.0137 0.0051 0.9255 9.1019 True

(20, 30] (70, 80] 4.0227 0.1067 -0.399 8.4445 False

(20, 30] (80, 90] 5.257 0.0104 0.726 9.7879 True

(20, 30] (90, 100] 1.4685 0.9 -4.1246 7.0615 False

(30, 40] (40, 50] 1.3854 0.9 -3.0984 5.8692 False

(30, 40] (50, 60] 2.81 0.5145 -1.5145 7.1345 False

(30, 40] (60, 70] 4.2206 0.0329 0.1847 8.2565 True

(30, 40] (70, 80] 3.2297 0.3364 -1.1437 7.6031 False

(30, 40] (80, 90] 4.4639 0.052 -0.0199 8.9477 False

(30, 40] (90, 100] 0.6754 0.9 -4.8796 6.2304 False

(40, 50] (50, 60] 1.4246 0.9 -3.0592 5.9084 False

(40, 50] (60, 70] 2.8352 0.4672 -1.3709 7.0413 False

(40, 50] (70, 80] 1.8443 0.9 -2.6866 6.3752 False

(40, 50] (80, 90] 3.0785 0.4877 -1.559 7.716 False

(40, 50] (90, 100] -0.71 0.9 -6.3898 4.9698 False

(50, 60] (60, 70] 1.4106 0.9 -2.6253 5.4465 False

(50, 60] (70, 80] 0.4197 0.9 -3.9537 4.7931 False

(50, 60] (80, 90] 1.6539 0.9 -2.8299 6.1377 False

(50, 60] (90, 100] -2.1346 0.9 -7.6896 3.4204 False

(60, 70] (70, 80] -0.991 0.9 -5.0792 3.0973 False

(60, 70] (80, 90] 0.2433 0.9 -3.9628 4.4494 False

(60, 70] (90, 100] -3.5452 0.4859 -8.8786 1.7881 False

(70, 80] (80, 90] 1.2342 0.9 -3.2967 5.7651 False

(70, 80] (90, 100] -2.5543 0.8772 -8.1474 3.0388 False

(80, 90] (90, 100] -3.7885 0.4813 -9.4683 1.8913 False

urbgrps vs. lifexpectancy

Descriptive statistical analysis

C->Q bar graph

ANOVA-F test

OLS Regression Results

==============================================================================

Dep. Variable: lifeexpectancy R-squared: 0.406

Model: OLS Adj. R-squared: 0.380

Method: Least Squares F-statistic: 15.32

Date: Tue, 07 Jul 2020 Prob (F-statistic): 4.76e-17

Time: 15:25:26 Log-Likelihood: -644.54

No. Observations: 188 AIC: 1307.

Df Residuals: 179 BIC: 1336.

Df Model: 8

Covariance Type: nonrobust

===================================================================================================================

coef std err t P>|t| [0.025 0.975]

-------------------------------------------------------------------------------------------------------------------

Intercept 62.4438 0.521 119.810 0.000 61.415 63.472

C(urbgrps)[T.Interval(10, 20, closed='right')] -1.7713 2.041 -0.868 0.387 -5.800 2.257

C(urbgrps)[T.Interval(20, 30, closed='right')] 0.2834 1.548 0.183 0.855 -2.772 3.338

C(urbgrps)[T.Interval(30, 40, closed='right')] -1.7116 1.548 -1.106 0.270 -4.767 1.343

C(urbgrps)[T.Interval(40, 50, closed='right')] 4.5547 1.615 2.820 0.005 1.367 7.742

C(urbgrps)[T.Interval(50, 60, closed='right')] 7.0269 1.518 4.629 0.000 4.031 10.022

C(urbgrps)[T.Interval(60, 70, closed='right')] 10.4459 1.283 8.140 0.000 7.914 12.978

C(urbgrps)[T.Interval(70, 80, closed='right')] 13.4776 1.548 8.706 0.000 10.423 16.533

C(urbgrps)[T.Interval(80, 90, closed='right')] 13.9092 1.694 8.212 0.000 10.567 17.252

C(urbgrps)[T.Interval(90, 100, closed='right')] 16.2290 1.841 8.816 0.000 12.597 19.861

==============================================================================

Omnibus: 8.034 Durbin-Watson: 1.953

Prob(Omnibus): 0.018 Jarque-Bera (JB): 8.379

Skew: -0.515 Prob(JB): 0.0152

Kurtosis: 2.902 Cond. No. 7.24e+15

==============================================================================

Warnings:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The smallest eigenvalue is 4.02e-30. This might indicate that there are

strong multicollinearity problems or that the design matrix is singular.

lifeexpectancy

urbgrps

(0, 10] nan

(10, 20] 60.672500

(20, 30] 62.727136

(30, 40] 60.732182

(40, 50] 66.998450

(50, 60] 69.470652

(60, 70] 72.889647

(70, 80] 75.921364

(80, 90] 76.353000

(90, 100] 78.672733

Post-hoc test

Multiple Comparison of Means - Tukey HSD, FWER=0.05

=========================================================

group1 group2 meandiff p-adj lower upper reject

---------------------------------------------------------

(10, 20] (20, 30] 2.0546 0.9 -6.56 10.6693 False

(10, 20] (30, 40] 0.0597 0.9 -8.555 8.6743 False

(10, 20] (40, 50] 6.3259 0.3698 -2.4394 15.0913 False

(10, 20] (50, 60] 8.7982 0.0384 0.2499 17.3464 True

(10, 20] (60, 70] 12.2171 0.001 4.1569 20.2774 True

(10, 20] (70, 80] 15.2489 0.001 6.6342 23.8635 True

(10, 20] (80, 90] 15.6805 0.001 6.7344 24.6266 True

(10, 20] (90, 100] 18.0002 0.001 8.7032 27.2973 True

(20, 30] (30, 40] -1.995 0.9 -9.2327 5.2428 False

(20, 30] (40, 50] 4.2713 0.6536 -3.1452 11.6878 False

(20, 30] (50, 60] 6.7435 0.0826 -0.4151 13.9022 False

(20, 30] (60, 70] 10.1625 0.001 3.5944 16.7307 True

(20, 30] (70, 80] 13.1942 0.001 5.9565 20.432 True

(20, 30] (80, 90] 13.6259 0.001 5.9966 21.2551 True

(20, 30] (90, 100] 15.9456 0.001 7.9077 23.9835 True

(30, 40] (40, 50] 6.2663 0.1729 -1.1502 13.6828 False

(30, 40] (50, 60] 8.7385 0.0054 1.5798 15.8971 True

(30, 40] (60, 70] 12.1575 0.001 5.5893 18.7256 True

(30, 40] (70, 80] 15.1892 0.001 7.9514 22.4269 True

(30, 40] (80, 90] 15.6208 0.001 7.9916 23.2501 True

(30, 40] (90, 100] 17.9406 0.001 9.9026 25.9785 True

(40, 50] (50, 60] 2.4722 0.9 -4.8671 9.8115 False

(40, 50] (60, 70] 5.8912 0.1431 -0.8734 12.6558 False

(40, 50] (70, 80] 8.9229 0.0065 1.5064 16.3394 True

(40, 50] (80, 90] 9.3546 0.0068 1.5555 17.1536 True

(40, 50] (90, 100] 11.6743 0.001 3.475 19.8735 True

(50, 60] (60, 70] 3.419 0.7444 -3.0619 9.8999 False

(50, 60] (70, 80] 6.4507 0.1144 -0.7079 13.6094 False

(50, 60] (80, 90] 6.8823 0.1057 -0.6719 14.4366 False

(50, 60] (90, 100] 9.2021 0.011 1.2353 17.1689 True

(60, 70] (70, 80] 3.0317 0.8683 -3.5364 9.5999 False

(60, 70] (80, 90] 3.4634 0.8057 -3.5339 10.4606 False

(60, 70] (90, 100] 5.7831 0.2689 -1.6576 13.2238 False

(70, 80] (80, 90] 0.4316 0.9 -7.1976 8.0609 False

(70, 80] (90, 100] 2.7514 0.9 -5.2866 10.7893 False

(80, 90] (90, 100] 2.3197 0.9 -6.0725 10.7119 False

---------------------------------------------------------

Result

Research Question 01

The F-value of the ANOVA F-test is 3.625 and the corresponding p-value is 0.000634, which is less than 0.05. If H0 were true, an F-value this large would be very unlikely, so the risk of wrongly rejecting H0 is small. Hence we reject the null hypothesis and accept the alternate hypothesis, i.e. we conclude that mean alcconsumption differs across urban-rate groups.
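For readers who want to see where an F-value comes from, the sketch below computes a one-way ANOVA F statistic by hand on a tiny invented dataset (the numbers are not from Gapminder, and `one_way_f` is a name chosen for this illustration). F is the ratio of the between-group mean square to the within-group mean square; in the summary above the same degrees of freedom appear as Df Model = 8 (9 non-empty groups minus 1) and Df Residuals = 174 (183 observations minus 9 groups).

```python
def one_way_f(groups):
    """Return (F, df_between, df_within) for a list of groups of numbers."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Variation of the group means around the grand mean
    ss_between = sum(
        len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups
    )
    # Variation of the observations around their own group mean
    ss_within = sum(
        (x - sum(g) / len(g)) ** 2 for g in groups for x in g
    )

    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Toy data: three groups of three observations each
toy = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value, df_between, df_within = one_way_f(toy)
print(round(f_value, 3), df_between, df_within)  # 13.0 2 6
```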

From the results of the post-hoc test (Tukey HSD), the mean per-capita alcohol consumption differs significantly between the following pairs of groups:

· [20-30] and [60-70]

· [30-40] and [60-70]

· [20-30] and [80-90]
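The reject column in the Tukey HSD table follows from the confidence interval: a pair is flagged when the interval between lower and upper does not contain zero. The sketch below applies that rule to a few rows copied from the output above; the helper name `excludes_zero` is invented for this example.

```python
# (group1, group2, meandiff, lower, upper), copied from the Tukey HSD output
rows = [
    ("(20, 30]", "(60, 70]", 5.0137, 0.9255, 9.1019),
    ("(20, 30]", "(70, 80]", 4.0227, -0.399, 8.4445),
    ("(20, 30]", "(80, 90]", 5.257, 0.726, 9.7879),
    ("(30, 40]", "(60, 70]", 4.2206, 0.1847, 8.2565),
    ("(30, 40]", "(80, 90]", 4.4639, -0.0199, 8.9477),
]


def excludes_zero(lower: float, upper: float) -> bool:
    """True when the confidence interval lies entirely on one side of zero."""
    return lower > 0 or upper < 0


significant = [
    (g1, g2) for g1, g2, _, lower, upper in rows if excludes_zero(lower, upper)
]
print(significant)
```

The pair (30, 40] and (80, 90] is a near miss: its lower bound of -0.0199 just includes zero, which matches its adjusted p-value of 0.052.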

Research Question 02

The F-value of the ANOVA F-test is 15.32 and the corresponding p-value is 4.76e-17, which is less than 0.05. If H0 were true, an F-value this large would be very unlikely, so the risk of wrongly rejecting H0 is small. Hence we reject the null hypothesis and accept the alternate hypothesis, i.e. we conclude that mean life expectancy differs across urban-rate groups.

From the results of the post-hoc test (Tukey HSD), the mean life expectancy differs significantly between the following pairs of groups:

· [50-60] and [90-100]

· [20-30] and [90-100]

· [20-30] and [80-90]

· And many more

0 notes

Text

Chemistry homework help

These are some of the questions that need to be answered. If you decide to complete this assignment, I will screenshot and send the remaining 9 questions.

1. The equilibrium constant KP for the reaction 2NO2(g) ⇆ 2NO(g) + O2(g) is 203 at a certain temperature. Calculate PO2 if PNO = 0.411 atm and PNO2 = 0.385 atm.

PO2 = ___ atm
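A worked sketch for question 1, assuming the stated KP applies to the reaction exactly as written: the equilibrium expression is KP = (PNO)^2 · PO2 / (PNO2)^2, so PO2 = KP · (PNO2)^2 / (PNO)^2. The function name `p_o2` is chosen here for illustration.

```python
def p_o2(kp: float, p_no: float, p_no2: float) -> float:
    """Solve KP = p_no**2 * p_o2 / p_no2**2 for the O2 partial pressure (atm)."""
    return kp * p_no2 ** 2 / p_no ** 2


# Values from the question: KP = 203, PNO = 0.411 atm, PNO2 = 0.385 atm
print(round(p_o2(203, 0.411, 0.385), 1))  # about 178.1 atm
```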

2. Enter your answer in the provided box. For the reaction N2(g


0 notes

Text

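"Linsanity" sweeps the North! Jeremy Lin bought out by the Hawks: what joining the Raptors means

Although Jeremy Lin was not moved in any deal before last week's trade deadline, he was never the real answer for a Hawks team that is clearly not chasing a playoff spot. According to veteran reporter Adrian Wojnarowski, Lin has agreed with the Hawks to a buyout of his contract, which is in its final season and worth about $13.77 million, and will join the Toronto Raptors, currently second in the Eastern Conference, once the buyout is complete.

Under NBA rules, only players who have signed before March 1 can join a team's playoff run, so with the midseason trade deadline past, many teams are already active in the buyout market. Wayne Ellington, who was traded to the Suns before the deadline, was bought out by the Suns and will join the Pistons. Wesley Matthews, who moved to the Big Apple in the Mavericks-Knicks trade, also joined the Pacers after being bought out by the Knicks.

Lin, 30, has averaged 10.7 points and 3.5 assists for the Hawks this season and has been in good form recently, scoring in double figures in 12 of his 19 appearances since the start of 2019. For a backup combo guard he has played well, and his field-goal percentage of .466 this season is a career high. For rookie point guard Trae Young, having an experienced Lin beside him meant he did not have to face high-intensity competition right away, which has been the intangible asset Lin brought to the Hawks and to Young.

Lin and the Raptors share some history. Seven years ago, on February 14, in the middle of "Linsanity," Lin made his fifth start and delivered at the Raptors' home arena, the Air Canada Centre, hitting a game-winning three-pointer with 0.5 seconds left to give the Knicks a 90-87 win. Whenever people look back at the "Linsanity" highlights, it remains one of the classic shots of his career.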


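Just before the trade deadline, the Raptors sent Jonas Valanciunas, C.J. Miles, Delon Wright and a 2024 second-round pick to the Grizzlies for Marc Gasol. Beyond the swap of centers, they also gave up backcourt depth. The Raptors have relied heavily on their reserves over the past two years, and a deep bench has been the key to their rise in the East, so the combo-guard vacancy left by Wright will be filled by Lin. The Raptors' backcourt currently consists of starters Kyle Lowry and Danny Green and reserve Fred VanVleet, and with VanVleet reportedly set to miss more than three weeks with a left thumb injury, adding Lin is all the more necessary.

The Raptors are looking to add usable backcourt players for the playoffs. On their current record they should reach the postseason comfortably, which would give Lin the fourth playoff appearance of his career. He played in the playoffs in 2013, 2014 and 2016: with the Rockets in 2013 and 2014, and in 2016 as a member of the Hornets team that faced the Heat in the first round. In all three years his team failed to get past the first round, and across those 17 first-round playoff games he averaged 10.1 points and 3.1 assists, with a low field-goal percentage of .385.

Lin has completed his task for this stage with the Hawks, and the Raptors need to replace the backcourt depth they lost in the trade, so both sides have made decisions with their own futures in mind. Lin now arrives at the eighth team of his career. At age 30, joining the Raptors could well give him the best team record of his career and a strong chance of winning his first playoff series, adding a little more leverage for negotiations before he becomes a free agent this summer.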


0 notes