#AI detection aggregators


Text

Chapter 1. Introduction 1.1. Overview of AI Advancements 1.2. Importance of Big Tech in AI Development Chapter 2. Google’s AI Innovations 2.1. Personalized Podcasts from Search Data 2.2. Enhancing User Engagement 2.3. Impact on Information Accessibility Chapter 3. Microsoft’s Open Source Efforts 3.1. Introduction to Phi-4 Model 3.2. Collaboration on Hugging Face 3.3. Benefits for the AI…

#AI assistant features#AI avatar creation#AI chatbots#AI detection aggregators#AI for content creation#AI natural language#AI pricing insights#AI productivity tools#AI search engines#AI speech-to-text#AI system integration.#AI tool categories#AI tools directory#AI translation services#AI user reviews#AI video intelligence#AI writing tools#AI-driven finance tools#best AI tools#free AI tools

0 notes

Text

So, let me try and put everything together here, because I really do think it needs to be talked about.

Today, Unity announced that it intends to apply a fee to use its software. Then it got worse.

For those not in the know, Unity is the most popular free-to-use video game development tool. It offers a basic version for individuals who want to learn how to create games or create independently, alongside paid versions for corporations or people who want more features. It's decent enough at this job. It has issues, but for the price point I can't complain, and it's the ideal entry point into creating in this medium. It's a very important piece of software.

But speaking of tools, the CEO is a massive one. When he was the CEO of EA, he advocated for using what out-and-out sounds like emotional manipulation to coerce players into microtransactions.

"A consumer gets engaged in a property, they might spend 10, 20, 30, 50 hours on the game and then when they're deep into the game they're well invested in it. We're not gouging, but we're charging and at that point in time the commitment can be pretty high."

He also called game developers who don't discuss monetization early in the planning stages of development, quote, "fucking idiots".

So that sets the stage for what might be one of the most bald-faced greediest moves I've seen from a corporation in a minute. Most at least have the sense of self-preservation to hide it.

A few hours ago, Unity posted this announcement on the official blog.

Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November. We are introducing a Unity Runtime Fee that is based upon each time a qualifying game is downloaded by an end user. We chose this because each time a game is downloaded, the Unity Runtime is also installed. Also we believe that an initial install-based fee allows creators to keep the ongoing financial gains from player engagement, unlike a revenue share.

Now there are a few red flags to note in this pitch immediately.

Unity is planning on charging a fee on all games which use its engine.

This is a flat fee per number of installs.

They are using an always online runtime function to determine whether a game is downloaded.

There are just so many things wrong with this that it's hard to know where to start, not helped by this FAQ which doubled down on a lot of the major issues people had.

I guess let's start with what people noticed first. Because it's using a system baked into the software itself, Unity would not be differentiating between a "purchase" and a "download". If someone uninstalls and reinstalls a game, that's two downloads. If someone gets a new computer or a new console and downloads a game already purchased from their account, that's two downloads. If someone pirates the game, the studio will be asked to pay for that download.

Q: How are you going to collect installs? A: We leverage our own proprietary data model. We believe it gives an accurate determination of the number of times the runtime is distributed for a given project.

Q: Is software made in unity going to be calling home to unity whenever it's ran, even for enterprice licenses? A: We use a composite model for counting runtime installs that collects data from numerous sources. The Unity Runtime Fee will use data in compliance with GDPR and CCPA. The data being requested is aggregated and is being used for billing purposes.

Q: If a user reinstalls/redownloads a game / changes their hardware, will that count as multiple installs? A: Yes. The creator will need to pay for all future installs. The reason is that Unity doesn’t receive end-player information, just aggregate data.

Q: What's going to stop us being charged for pirated copies of our games? A: We do already have fraud detection practices in our Ads technology which is solving a similar problem, so we will leverage that know-how as a starting point. We recognize that users will have concerns about this and we will make available a process for them to submit their concerns to our fraud compliance team.

This is potentially related to a new system that will require Unity Personal developers to go online at least once every three days.

Starting in November, Unity Personal users will get a new sign-in and online user experience. Users will need to be signed into the Hub with their Unity ID and connect to the internet to use Unity. If the internet connection is lost, users can continue using Unity for up to 3 days while offline. More details to come, when this change takes effect.

It's unclear whether this requirement will be attached to any and all Unity games, though it would explain how they're theoretically able to track "the number of installs", and why the methodology for tracking these installs is so shit, as we'll discuss later.

Unity claims that it will only levy this fee on games which surpass a certain threshold of downloads and yearly revenue.

Only games that meet the following thresholds qualify for the Unity Runtime Fee:

Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs.

Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
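To make the two-part test concrete, here is a minimal Python sketch of the thresholds as quoted above. The function name, the plan keys, and the idea that revenue and install counts are simply known numbers are all assumptions for illustration; Unity has not said how it will actually measure either figure.

```python
# Thresholds as quoted in the announcement:
# plan -> (minimum revenue over the last 12 months in USD, minimum lifetime installs).
# The plan keys and this whole structure are hypothetical, for illustration only.
THRESHOLDS = {
    "personal": (200_000, 200_000),
    "plus": (200_000, 200_000),
    "pro": (1_000_000, 1_000_000),
    "enterprise": (1_000_000, 1_000_000),
}


def qualifies_for_runtime_fee(plan, revenue_last_12_months, lifetime_installs):
    """Return True only when BOTH the revenue and the install threshold are met."""
    min_revenue, min_installs = THRESHOLDS[plan]
    return revenue_last_12_months >= min_revenue and lifetime_installs >= min_installs


# A Personal-plan game over the revenue bar but under the install bar does not qualify.
print(qualifies_for_runtime_fee("personal", 250_000, 150_000))
# The same game after a surge of free installs does.
print(qualifies_for_runtime_fee("personal", 250_000, 400_000))
```

Because the test is an AND, anything that inflates installs without adding revenue only matters once the revenue bar is already cleared.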

They don't say how they're going to collect information on a game's revenue. Likely this is just to say that they're only interested in squeezing larger products (games like Genshin Impact and Honkai: Star Rail, Fate/Grand Order, Among Us, and Fall Guys) and not every 2 dollar puzzle platformer that drops on Steam. But these larger products also have the easiest time porting off of Unity and the most incentive to do so, meaning realistically those heaviest impacted are going to be the ones who just barely meet this threshold, most of them indie developers.

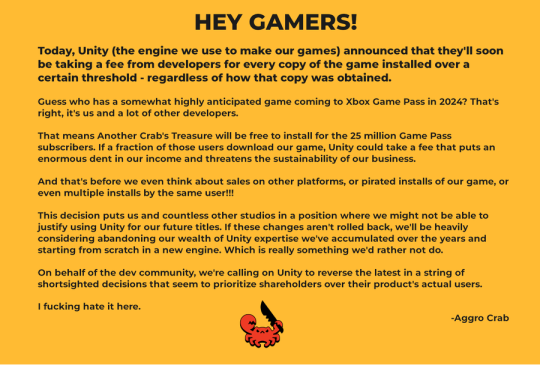

Aggro Crab Games, one of the first to properly break this story, points out that systems like the Xbox Game Pass, which is already pretty predatory towards smaller developers, will quickly inflate their "lifetime game installs". That means developers who even skim the threshold of that 200k revenue will be asked to pay a fee per install, not a percentage of said revenue.

[IMAGE DESCRIPTION: Hey Gamers!

Today, Unity (the engine we use to make our games) announced that they'll soon be taking a fee from developers for every copy of the game installed over a certain threshold - regardless of how that copy was obtained.

Guess who has a somewhat highly anticipated game coming to Xbox Game Pass in 2024? That's right, it's us and a lot of other developers.

That means Another Crab's Treasure will be free to install for the 25 million Game Pass subscribers. If a fraction of those users download our game, Unity could take a fee that puts an enormous dent in our income and threatens the sustainability of our business.

And that's before we even think about sales on other platforms, or pirated installs of our game, or even multiple installs by the same user!!!

This decision puts us and countless other studios in a position where we might not be able to justify using Unity for our future titles. If these changes aren't rolled back, we'll be heavily considering abandoning our wealth of Unity expertise we've accumulated over the years and starting from scratch in a new engine. Which is really something we'd rather not do.

On behalf of the dev community, we're calling on Unity to reverse the latest in a string of shortsighted decisions that seem to prioritize shareholders over their product's actual users.

I fucking hate it here.

-Aggro Crab - END DESCRIPTION]

That fee, by the way, is a flat fee. Not a percentage, not a royalty. This means that any games made in Unity expecting any kind of success are heavily incentivized to cost as much as possible.

[IMAGE DESCRIPTION: A table listing the various fees by number of Installs over the Install Threshold vs. version of Unity used, ranging from $0.01 to $0.20 per install. END DESCRIPTION]

Basic elementary school math tells us that if a game comes out for $1.99, they will be paying, at maximum, 10% of their revenue to Unity, whereas jacking the price up to $59.99 lowers that percentage to something closer to 0.3%. Obviously any company, especially any company in financial desperation, which a sudden anchor on all your revenue is going to create, is going to choose the latter.
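The arithmetic behind those percentages can be sketched in a few lines of Python. This assumes the top rate of $0.20 per install from the table described above and exactly one billable install per sale; the function name is made up for illustration.

```python
def fee_share_of_price(price_usd, fee_per_install_usd=0.20):
    """Fraction of one sale's price consumed by a flat per-install fee.

    Assumes a single billable install per purchase, which is the best case:
    reinstalls would push the share higher.
    """
    return fee_per_install_usd / price_usd


for price in (1.99, 59.99):
    print(f"${price:.2f}: {fee_share_of_price(price):.1%}")
# prints:
# $1.99: 10.1%
# $59.99: 0.3%
```

Since the fee is flat, the share scales inversely with price, and every extra install by the same buyer adds another $0.20 against the same single sale.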

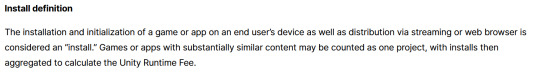

Furthermore, and following the trend of "fuck anyone who doesn't ask for money", Unity helpfully defines what an install is on their main site.

While I'm looking at this page as it exists now, it currently says

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

However, I saw a screenshot saying something different, and utilizing the Wayback Machine we can see that this phrasing was changed at some point in the few hours since this announcement went up. Instead, it reads:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming or web browser is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

Screenshot for posterity:

That would mean web browser games made in Unity would count towards this install threshold. You could legitimately drive the count up simply by continuously refreshing the page. The FAQ, again, doubles down.

Q: Does this affect WebGL and streamed games? A: Games on all platforms are eligible for the fee but will only incur costs if both the install and revenue thresholds are crossed. Installs - which involves initialization of the runtime on a client device - are counted on all platforms the same way (WebGL and streaming included).

And, what I personally consider to be the most suspect claim in this entire debacle, they claim that "lifetime installs" includes installs prior to this change going into effect.

Will this fee apply to games using Unity Runtime that are already on the market on January 1, 2024? Yes, the fee applies to eligible games currently in market that continue to distribute the runtime. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

Again, again, doubled down in the FAQ.

Q: Are these fees going to apply to games which have been out for years already? If you met the threshold 2 years ago, you'll start owing for any installs monthly from January, no? (in theory). It says they'll use previous installs to determine threshold eligibility & then you'll start owing them for the new ones. A: Yes, assuming the game is eligible and distributing the Unity Runtime then runtime fees will apply. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

That would involve billing companies for using their software before telling them of the existence of a bill. Holding their actions to a contract that they performed before the contract existed!
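The distinction the FAQ draws, lifetime installs for eligibility but only new installs for billing, can be sketched as follows. This is a simplified Python illustration, not Unity's method: it assumes the revenue threshold is already met, treats installs as a plain list of dates, and counts installs on or after January 1, 2024 as "new".

```python
from datetime import date

FEE_START = date(2024, 1, 1)  # effective date quoted in the announcement


def billable_installs(install_dates, install_threshold):
    """Count installs that would be billed under the quoted rules.

    Eligibility looks at ALL installs, including those before FEE_START.
    Billing counts only installs on or after FEE_START.
    Hypothetical helper: assumes the revenue threshold is already met.
    """
    if len(install_dates) < install_threshold:
        return 0
    return sum(1 for d in install_dates if d >= FEE_START)


# Three installs before the change, two after, with a threshold of four:
history = [date(2022, 5, 1)] * 3 + [date(2024, 2, 1)] * 2
print(billable_installs(history, install_threshold=4))
```

In this example the old installs are never billed themselves, but they are what pushes the game over the threshold, so the two new installs become billable immediately.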

Okay. I think that's everything. So far.

There is one thing that I want to mention before ending this post. Unfortunately it's a little conspiratorial, but it's so hard to believe that anyone genuinely thought this was a good idea that it's stuck in my brain as a significant possibility.

A few days ago it was reported that Unity's CEO sold 2,000 shares of his own company.

On September 6, 2023, John Riccitiello, President and CEO of Unity Software Inc (NYSE:U), sold 2,000 shares of the company. This move is part of a larger trend for the insider, who over the past year has sold a total of 50,610 shares and purchased none.

I would not be surprised if this decision gets reversed tomorrow, that it was literally only made for the CEO to short his own goddamn company, because I would sooner believe that this whole thing is some idiotic attempt at committing fraud than a real monetization strategy, even knowing how unfathomably greedy these people can be.

So, with all that said, what do we do now?

Well, in all likelihood you won't need to do anything. As I said, some of the biggest names in the industry would be directly affected by this change, and you can bet your bottom dollar that they're not just going to take it lying down. After all, the only way to stop a greedy CEO is with a greedier CEO, right?

(I fucking hate it here.)

And that's not mentioning the indie devs who are already talking about abandoning the engine.

[Links display tweets from the lead developer of Among Us saying it'd be less costly to hire people to move the game off of Unity and Cult of the Lamb's official twitter saying the game won't be available after January 1st in response to the news.]

That being said, I'm still shaken by all this. The fact that Unity is openly willing to go back and punish its developers for ever having used the engine in the past makes me question my relationship to it.

The news has given rise to the visibility of free, open source alternative Godot, which, if you're interested, is likely a better option than Unity at this point. Mostly, though, I just hope we can get out of this whole, fucking, environment where creatives are treated as an endless mill of free profits that's going to be continuously ratcheted up and up to drive unsustainable infinite corporate growth that our entire economy is based on for some fuckin reason.

Anyways, that's that, I find having these big posts that break everything down to be helpful.

#Unity#Unity3D#Video Games#Game Development#Game Developers#fuckshit#I don't know what to tag news like this

6K notes


Text

These days, when Nicole Yelland receives a meeting request from someone she doesn’t already know, she conducts a multi-step background check before deciding whether to accept. Yelland, who works in public relations for a Detroit-based non-profit, says she’ll run the person’s information through Spokeo, a personal data aggregator that she pays a monthly subscription fee to use. If the contact claims to speak Spanish, Yelland says, she will casually test their ability to understand and translate trickier phrases. If something doesn’t quite seem right, she’ll ask the person to join a Microsoft Teams call—with their camera on.

If Yelland sounds paranoid, that’s because she is. In January, before she started her current non-profit role, Yelland says she got roped into an elaborate scam targeting job seekers. “Now, I do the whole verification rigamarole any time someone reaches out to me,” she tells WIRED.

Digital imposter scams aren’t new; messaging platforms, social media sites, and dating apps have long been rife with fakery. In a time when remote work and distributed teams have become commonplace, professional communications channels are no longer safe, either. The same artificial intelligence tools that tech companies promise will boost worker productivity are also making it easier for criminals and fraudsters to construct fake personas in seconds.

On LinkedIn, it can be hard to distinguish a slightly touched-up headshot of a real person from a too-polished, AI-generated facsimile. Deepfake videos are getting so good that longtime email scammers are pivoting to impersonating people on live video calls. According to the US Federal Trade Commission, reports of job and employment related scams nearly tripled from 2020 to 2024, and actual losses from those scams have increased from $90 million to $500 million.

Yelland says the scammers that approached her back in January were impersonating a real company, one with a legitimate product. The “hiring manager” she corresponded with over email also seemed legit, even sharing a slide deck outlining the responsibilities of the role they were advertising. But during the first video interview, Yelland says, the scammers refused to turn their cameras on during a Microsoft Teams meeting and made unusual requests for detailed personal information, including her driver’s license number. Realizing she’d been duped, Yelland slammed her laptop shut.

These kinds of schemes have become so widespread that AI startups have emerged promising to detect other AI-enabled deepfakes, including GetReal Labs and Reality Defender. OpenAI CEO Sam Altman also runs an identity-verification startup called Tools for Humanity, which makes eye-scanning devices that capture a person’s biometric data, create a unique identifier for their identity, and store that information on the blockchain. The whole idea behind it is proving “personhood,” or that someone is a real human. (Lots of people working on blockchain technology say that blockchain is the solution for identity verification.)

But some corporate professionals are turning instead to old-fashioned social engineering techniques to verify every fishy-seeming interaction they have. Welcome to the Age of Paranoia, when someone might ask you to send them an email while you’re mid-conversation on the phone, slide into your Instagram DMs to ensure the LinkedIn message you sent was really from you, or request you text a selfie with a timestamp, proving you are who you claim to be. Some colleagues say they even share code words with each other, so they have a way to ensure they’re not being misled if an encounter feels off.

“What’s funny is, the low-fi approach works,” says Daniel Goldman, a blockchain software engineer and former startup founder. Goldman says he began changing his own behavior after he heard a prominent figure in the crypto world had been convincingly deepfaked on a video call. “It put the fear of god in me,” he says. Afterwards, he warned his family and friends that even if they hear what they believe is his voice or see him on a video call asking for something concrete—like money or an internet password—they should hang up and email him first before doing anything.

Ken Schumacher, founder of the recruitment verification service Ropes, says he’s worked with hiring managers who ask job candidates rapid-fire questions about the city where they claim to live on their resume, such as their favorite coffee shops and places to hang out. If the applicant is actually based in that geographic region, Schumacher says, they should be able to respond quickly with accurate details.

Another verification tactic some people use, Schumacher says, is what he calls the “phone camera trick.” If someone suspects the person they’re talking to over video chat is being deceitful, they can ask them to hold up their phone camera to their laptop. The idea is to verify whether the individual may be running deepfake technology on their computer, obscuring their true identity or surroundings. But it’s safe to say this approach can also be off-putting: Honest job candidates may be hesitant to show off the inside of their homes or offices, or worry a hiring manager is trying to learn details about their personal lives.

“Everyone is on edge and wary of each other now,” Schumacher says.

While turning yourself into a human captcha may be a fairly effective approach to operational security, even the most paranoid admit these checks create an atmosphere of distrust before two parties have even had the chance to really connect. They can also be a huge time suck. “I feel like something’s gotta give,” Yelland says. “I’m wasting so much time at work just trying to figure out if people are real.”

Jessica Eise, an assistant professor studying climate change and social behavior at Indiana University-Bloomington, says that her research team has been forced to essentially become digital forensics experts, due to the number of fraudsters who respond to ads for paid virtual surveys. (Scammers aren’t as interested in the unpaid surveys, unsurprisingly.) If the research project is federally funded, all of the online participants have to be over the age of 18 and living in the US.

“My team would check time stamps for when participants answered emails, and if the timing was suspicious, we could guess they might be in a different time zone,” Eise says. “Then we’d look for other clues we came to recognize, like certain formats of email address or incoherent demographic data.”
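The timestamp check Eise describes could be approximated along these lines. This is only a sketch in Python under loud assumptions: the function name, the 1am to 5am "night" window, and the idea of comparing against a participant's claimed UTC offset are all hypothetical, and nothing here reflects her team's actual procedure.

```python
from datetime import datetime, timedelta, timezone


def night_reply_fraction(utc_timestamps, claimed_utc_offset_hours,
                         night_start=1, night_end=5):
    """Fraction of replies sent between night_start and night_end (local hours).

    utc_timestamps must be timezone-aware datetimes. "Local" means the time
    zone the participant CLAIMS to live in. The window is an arbitrary
    assumption; a high fraction is a hint to look closer, not proof of fraud.
    """
    if not utc_timestamps:
        return 0.0
    claimed_tz = timezone(timedelta(hours=claimed_utc_offset_hours))
    at_night = sum(
        1 for ts in utc_timestamps
        if night_start <= ts.astimezone(claimed_tz).hour < night_end
    )
    return at_night / len(utc_timestamps)


# Replies at 08:00 and 09:00 UTC from someone claiming US Eastern time (UTC-5)
# land at 03:00 and 04:00 local time.
replies = [
    datetime(2024, 3, 1, 8, 0, tzinfo=timezone.utc),
    datetime(2024, 3, 2, 9, 0, tzinfo=timezone.utc),
]
print(night_reply_fraction(replies, claimed_utc_offset_hours=-5))
```

A signal like this would only ever be one clue among several, which matches how Eise describes combining it with email formats and demographic inconsistencies.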

Eise says the amount of time her team spent screening people was “exorbitant,” and that they’ve now shrunk the size of the cohort for each study and have turned to “snowball sampling,” or recruiting people they know personally to join their studies. The researchers are also handing out more physical flyers to solicit participants in person. “We care a lot about making sure that our data has integrity, that we’re studying who we say we’re trying to study,” she says. “I don’t think there’s an easy solution to this.”

Barring any widespread technical solution, a little common sense can go a long way in spotting bad actors. Yelland shared with me the slide deck that she received as part of the fake job pitch. At first glance, it seemed like a legit pitch, but when she looked at it again, a few details stood out. The job promised to pay substantially more than the average salary for a similar role in her location, and offered unlimited vacation time, generous paid parental leave, and fully-covered health care benefits. In today’s job environment, that might have been the biggest tipoff of all that it was a scam.

27 notes


Note

"chatgpt writing is bad because you can tell when it's chatgpt writing because chatgpt writing is bad". in reality the competent kids are using chatgpt well and the incompetent kids are using chatgpt poorly... like with any other tool.

It's not just like other tools. Calculators and computers and other kinds of automation don't require you to steal the hard work of other people who deserve recognition and compensation. I don't know why I have to keep reminding people of this.

It also uses an exorbitant amount of energy and water during an environmental crisis and it's been linked to declining cognitive skills. The competent kids are becoming less competent by using it and they're fucked when we require in-class essays.

Specifically, it can enhance your writing output and confidence but it decreases creativity, originality, critical thinking, and reading comprehension, and makes you prone to data bias. Remember, AI privileges the most common answers, which are often out of date and wrong when it comes to scientific and sociological data. This results in reproduction of racist and sexist ideas, because guess what's common on the internet? Racism and sexism!

Here's a source (it's a meta-analysis, so it aggregates data from a collection of studies. This means it has better statistical power than any single study, which could have been biased in a number of ways. Meta-analysis = more data points, more data points = higher accuracy).

This study also considers positives of AI by the way, as noted it can increase writing efficiency but the downsides and ethical issues don't make that worthwhile in my opinion. We can and should enhance writing and confidence in other ways.

Here's another source:

The issue here is that if you rely on AI consistently, certain skills start to atrophy. So what happens when you can't use it?

I'm not completely against all AI, there is legitimate possibility for ethical usage when it's trained on paid-for data sets and used for a specific purpose. I've seen good evidence for use in medical fields, and for enhancing language learning in certain ways. If we can find a way to reduce the energy and water consumption then cool.

But when you write essays with chatgpt you're just robbing yourself of an opportunity to exercise valuable cognitive muscles and you're also robbing millions of people of the fruit of their own intellectual and creative property. Also like, on a purely aesthetic level it has such boring prose, it makes you sound exactly like everyone else and I actually appreciate a distinctive voice in a piece of writing.

It also often fails to cite ideas that belong to other people, which can get you an academic violation for plagiarism even if your writing isn't identified as AI. And by the way, AI detection software is only going to keep getting better in tandem with AI.

All that said it really doesn't matter to me how good it gets at faking human or how good people get at using it, I'm never going to support it because again, it requires mass scale intellectual theft and (at least currently) it involves an unnecessary energy expenditure. Like it's really not that complicated.

At the end of the day I would much rather know that I did my work. I feel pride in my writing because I know I chose every word, and because integrity matters to me.

This is the last post I'm making about this. If you send me another ask I'll block you and delete it. This space is meant to be fun for me and I don't want to engage in more bullshit discourse here.

15 notes


Text

More and more plastic litter ends up in oceans every day. Satellite images can help detect accumulations of litter along shores and at sea so that it can be taken out. A research team has developed a new artificial intelligence model that recognizes floating plastics much more accurately in satellite images than before, even when the images are partly covered by clouds or weather conditions are hazy.

Our society relies heavily on plastic products, and the amount of plastic waste is expected to increase in the future. If not properly discarded or recycled, much of it accumulates in rivers and lakes. Eventually, it will flow into the oceans, where it can form aggregations of marine debris together with natural materials like driftwood and algae.

A new study from Wageningen University and EPFL researchers, recently published in iScience, has developed an artificial intelligence-based detector that estimates the probability of marine debris shown in satellite images. This could help to systematically remove plastic litter from the oceans with ships. Accumulations of marine debris are visible in freely available Sentinel-2 satellite images that capture land masses and coastal areas worldwide every 2–5 days. Because these amount to terabytes of data, the data needs to be analyzed automatically through artificial intelligence models like deep neural networks.


47 notes


Text

Dimensity 7300 : Unbelievable Speed and Power Unleashed

MediaTek launched the 4nm Dimensity 7300 and Dimensity 7300X high-end mobile device processors today. Best-in-class power economy and performance make Dimensity 7300 chipsets ideal for AI-enhanced computing, faster gaming, better photography, and effortless multitasking. Dimensity 7300X supports twin displays for flip-style foldable devices.

Both MediaTek Dimensity 7300 chipsets have octa-core CPUs with four Arm Cortex-A78 cores running at up to 2.5GHz and four Cortex-A55 cores. The 4nm process reduces A78 core power consumption by 25% compared with the Dimensity 7050. To speed up gaming, the chips pair the Arm Mali-G615 GPU with a number of MediaTek HyperEngine optimisations. The Dimensity 7300 series provides 20% higher FPS and 20% better energy efficiency than competing options. The new chips optimise 5G and Wi-Fi game connections, use clever resource optimisation, and support Dual-Link True Wireless Stereo Audio and Bluetooth LE Audio technology to significantly improve gaming experiences.

According to Dr. Yenchi Lee, Deputy General Manager of MediaTek’s Wireless Communications Business, “The MediaTek Dimensity 7300 chips will help integrate the newest AI and connectivity technologies so consumers can stream and game seamlessly. Furthermore, the dual display capability of the Dimensity 7300X allows OEMs to create creative new form factors.”

With compatibility for a 200MP primary camera and a premium-grade 12-bit HDR-ISP, the MediaTek Imagiq 950 is another enhanced photographic feature available with the Dimensity 7300 chipsets. New hardware engines provide accurate noise reduction (MCNR), face detection (HWFD), and video HDR in the Dimensity 7300. This allows users to take beautiful pictures and videos in any kind of lighting. Compared to the Dimensity 7050, live focus and photo remastering are 1.3X and 1.5X faster, respectively. In addition, 4K HDR video may be recorded with a dynamic range that is more than 50% broader than competing solutions, allowing users to capture more detail in their recordings.

The MediaTek APU 655 doubles the performance of the Dimensity 7050 while also greatly increasing the efficiency of AI tasks. Also, in order to reduce memory requirements for larger AI models and more effectively use memory bandwidth, the Dimensity 7300 chips support new mixed precision data types.

The Dimensity 7300 SoCs enable global HDR standards and remarkably detailed WFHD+ displays with 10-bit true colour thanks to MediaTek’s built-in MiraVision 955. This improves media streaming and playing. Additionally, OEMs find it simpler to satisfy the expanding market demand for cutting-edge form factors thanks to the Dimensity 7300X’s specialised support for dual display flip phones.

Dimensity 7300 and Dimensity 7300X important features

With MediaTek’s own optimisations and a full suite of R16 power-saving advancements, MediaTek 5G UltraSave 3.0+ technology offers 13–30% more power efficiency than competing options in typical 5G sub-6GHz connectivity settings.

Support for 3CC carrier aggregation delivers up to 3.27Gb/s 5G downlink speed, enabling better downlink rates in suburban and urban settings.

Support for multi-band Wi-Fi 6E allows for dependable and quick multi-gigabit wireless access.

Users will have more options with dual 5G SIM capability and dual VoNR.

MediaTek introduced the Dimensity 7300 series in May 2024. The Dimensity 7300 and 7300X chipsets bring high-end performance, energy efficiency, and AI to smartphones and foldable devices.

Constructed for Rapidity and Effectiveness

A powerful octa-core CPU powers the Dimensity 7300. Four Arm Cortex-A78 cores running at up to 2.5 GHz handle gaming and multitasking, while four energy-efficient Arm Cortex-A55 cores provide dependable performance for daily use. In comparison to the Dimensity 7050, this combination represents a major advancement.

The advanced 4nm manufacturing process is a feature of the 7300 series. This corresponds to a 25% decrease in power usage over the 6nm technique utilised in earlier generations. Users will benefit from longer battery life as a result, while manufacturers can create sleeker devices without compromising functionality.

Superpower in Graphics

Immersive mobile experiences require strong visuals, and the Dimensity 7300 meets this need with an Arm Mali-G615 GPU. This graphics processor runs even the most demanding games smoothly, and MediaTek’s HyperEngine technology stabilises the network and efficiently manages resources. MediaTek says the 7300 series appeals to mobile gamers thanks to its 20% higher frame rates (FPS) and improved energy efficiency.

AI Gains Strength

AI is increasingly changing the smartphone experience, enabling intelligent assistants, better photography, and facial recognition. The Dimensity 7300 follows this trend with the MediaTek APU 655. This AI processing unit doubles the performance of the Dimensity 7050 while also greatly improving the efficiency of AI tasks. The 7300 series also supports new mixed precision data types, which use memory bandwidth more effectively and lower the memory requirements of larger AI models. This will let future smartphones incorporate even more advanced AI features.
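To see why narrower data types lower memory requirements, here is a rough back-of-the-envelope sketch. The parameter count and bit widths are illustrative assumptions only; the article does not say which data types the APU 655 supports.

```python
def model_memory_gib(num_params, bits_per_param):
    """Memory needed just to store the weights, in GiB."""
    return num_params * bits_per_param / 8 / 2**30

# Hypothetical model size, chosen only for illustration.
num_params = 1_000_000_000

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {model_memory_gib(num_params, bits):.2f} GiB")
```

Halving the bits per weight halves both the storage and the data that has to move across the memory bus for each pass over the weights.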

Gorgeous Images

For a captivating mobile experience, a stunning display is essential; a strong processor is only half the story. The Dimensity 7300 series meets this demand with support for high-end screens. With support for WFHD+ resolutions at refresh rates of up to 120Hz, these chipsets deliver fluid and visually spectacular images. For an even smoother user experience, the 7300 series also supports Full HD+ screens at a higher refresh rate of 144Hz.

A Focus on Photography

Users now place a greater value on mobile photography, and the Dimensity 7300 series delivers. The chip has MediaTek’s Imagiq 950 image signal processor (ISP), which provides sophisticated photographic features. The 12-bit HDR pipeline supported by this ISP captures fine detail and brilliant colours. The Imagiq 950 also enables features like video HDR, face detection, and precise noise reduction, producing good photos and videos in a range of lighting conditions. The 7300 series stands out for its support for dual simultaneous video capture, which opens the door to more creative content.

A Set of Chips for Foldables

A 7300 variant called the Dimensity 7300X goes one step further by being designed with foldable phones in mind. One essential component of these cutting-edge devices is dual display compatibility, which this chipset provides. Thanks to the 7300X, manufacturers can design foldable phones with seamless experiences on both the cover and main displays.

Mobile’s Future

Mobile processing power has advanced significantly with the release of the Dimensity 7300 series. This chipset family, with its emphasis on efficiency, performance, AI capabilities, and support for cutting-edge technologies like foldable devices and high refresh rate screens, is positioned to have a significant impact on the direction of mobile technology. The Dimensity 7300 series offers makers a strong and adaptable foundation to develop next-generation smartphones that offer outstanding user experiences as smartphones continue to advance.

Read more on govindhtech.com

#dimensity7300x#MediaTekDimensity#AI#artificialintelligence#AITechnology#mobiletechnology#smartphone#aimodel#CPU#cpupower#news#technews#technology#technologynews#technologytrends#govindhtech


Text

What are the latest technological advancements shaping the future of fintech?

The financial technology (fintech) industry has witnessed an unprecedented wave of innovation over the past decade, reshaping how people and businesses manage money. As digital transformation accelerates, fintech new technologies are emerging, revolutionizing payments, lending, investments, and other financial services. These advancements, driven by fintech innovation, are not only enhancing user experience but also fostering greater financial inclusion and efficiency.

In this article, we will explore the most significant fintech trending technologies that are shaping the future of the industry. From blockchain to artificial intelligence, these innovations are redefining the boundaries of what fintech can achieve.

1. Blockchain and Cryptocurrencies

One of the most transformative advancements in fintech is the adoption of blockchain technology. Blockchain serves as the foundation for cryptocurrencies like Bitcoin, Ethereum, and stablecoins. Its decentralized, secure, and transparent nature has made it a game-changer in areas such as payments, remittances, and asset tokenization.

Key Impacts of Blockchain:

Decentralized Finance (DeFi): Blockchain is driving the rise of DeFi, which eliminates intermediaries like banks in financial transactions. DeFi platforms offer lending, borrowing, and trading services, accessible to anyone with an internet connection.

Cross-Border Payments: Blockchain simplifies and accelerates international transactions, reducing costs and increasing transparency.

Smart Contracts: These self-executing contracts are automating and securing financial agreements, streamlining operations across industries.

As blockchain adoption grows, businesses are exploring how to integrate this technology into their offerings to increase trust and efficiency.

2. Artificial Intelligence (AI) and Machine Learning (ML)

AI and ML are at the core of fintech innovation, enabling smarter and more efficient financial services. These technologies are being used to analyze vast amounts of data, predict trends, and automate processes.

Applications of AI and ML:

Fraud Detection and Prevention: AI models detect anomalies and fraudulent transactions in real-time, enhancing security for both businesses and customers.

Personalized Financial Services: AI-driven chatbots and virtual assistants are offering tailored advice, improving customer engagement.

Credit Scoring: AI-powered algorithms provide more accurate and inclusive credit assessments, helping underserved populations gain access to loans.

AI and ML are enabling fintech companies to deliver faster, more reliable services while minimizing operational risks.

3. Open Banking

Open banking is one of the most significant fintech trending technologies, promoting collaboration between banks, fintechs, and third-party providers. It allows customers to share their financial data securely with authorized parties through APIs (Application Programming Interfaces).

Benefits of Open Banking:

Enhanced Financial Management: Aggregated data helps users better manage their finances across multiple accounts.

Increased Competition: Open banking fosters innovation, as fintech startups can create solutions tailored to specific customer needs.

Seamless Payments: Open banking APIs enable instant and direct payments, reducing reliance on traditional methods.

Open banking is paving the way for a more connected and customer-centric financial ecosystem.

4. Biometric Authentication

Security is paramount in the financial industry, and fintech innovation has led to the rise of biometric authentication. By using physical characteristics such as fingerprints, facial recognition, or voice patterns, biometric technologies enhance security while providing a seamless user experience.

Advantages of Biometric Authentication:

Improved Security: Biometrics significantly reduce the risk of fraud by making it difficult for unauthorized users to access accounts.

Faster Transactions: Users can authenticate themselves quickly, leading to smoother digital payment experiences.

Convenience: With no need to remember passwords, biometrics offer a more user-friendly approach to security.

As mobile banking and digital wallets gain popularity, biometric authentication is becoming a standard feature in fintech services.

5. Embedded Finance

Embedded finance involves integrating financial services into non-financial platforms, such as e-commerce websites or ride-hailing apps. This fintech new technology allows businesses to offer services like loans, insurance, or payment options directly within their applications.

Examples of Embedded Finance:

Buy Now, Pay Later (BNPL): E-commerce platforms enable customers to purchase products on credit, enhancing sales and customer satisfaction.

In-App Payments: Users can make seamless transactions without leaving the platform, improving convenience.

Insurance Integration: Platforms offer tailored insurance products at the point of sale.

Embedded finance is creating new revenue streams for businesses while simplifying the customer journey.

6. RegTech (Regulatory Technology)

As financial regulations evolve, fintech innovation is helping businesses stay compliant through RegTech solutions. These technologies automate compliance processes, reducing costs and minimizing errors.

Key Features of RegTech:

Automated Reporting: Streamlines regulatory reporting requirements, saving time and resources.

Risk Management: Identifies and mitigates potential risks through predictive analytics.

KYC and AML Compliance: Simplifies Know Your Customer (KYC) and Anti-Money Laundering (AML) processes.

RegTech ensures that fintech companies remain agile while adhering to complex regulatory frameworks.

7. Cloud Computing

Cloud computing has revolutionized the way fintech companies store and process data. By leveraging the cloud, businesses can scale rapidly and deliver services more efficiently.

Benefits of Cloud Computing:

Scalability: Enables businesses to handle large transaction volumes without investing in physical infrastructure.

Cost-Effectiveness: Reduces operational costs by eliminating the need for on-premise servers.

Data Security: Advanced cloud platforms offer robust security measures to protect sensitive financial data.

Cloud computing supports the rapid growth of fintech companies, ensuring reliability and flexibility.

The Role of Xettle Technologies in Fintech Innovation

Companies like Xettle Technologies are at the forefront of fintech new technologies, driving advancements that make financial services more accessible and efficient. With a focus on delivering cutting-edge solutions, Xettle Technologies helps businesses integrate the latest fintech trending technologies into their operations. From AI-powered analytics to secure cloud-based platforms, Xettle Technologies is empowering organizations to stay competitive in an ever-evolving industry.

Conclusion

The future of fintech is being shaped by transformative technologies that are redefining how financial services are delivered and consumed. From blockchain and AI to open banking and biometric authentication, these fintech new technologies are driving efficiency, security, and inclusivity. As companies like Xettle Technologies continue to innovate, the industry will unlock even greater opportunities for businesses and consumers alike. By embracing these fintech trending advancements, organizations can stay ahead of the curve and thrive in a dynamic financial landscape.


Text

Mastering Neural Networks: A Deep Dive into Combining Technologies

How Can Two Trained Neural Networks Be Combined?

Introduction

In the ever-evolving world of artificial intelligence (AI), neural networks have emerged as a cornerstone technology, driving advancements across various fields. But have you ever wondered how combining two trained neural networks can enhance their performance and capabilities? Let’s dive deep into the fascinating world of neural networks and explore how combining them can open new horizons in AI.

Basics of Neural Networks

What is a Neural Network?

Neural networks, inspired by the human brain, consist of interconnected nodes or "neurons" that work together to process and analyze data. These networks can identify patterns, recognize images, understand speech, and even generate human-like text. Think of them as a complex web of connections where each neuron contributes to the overall decision-making process.

How Neural Networks Work

Neural networks function by receiving inputs, processing them through hidden layers, and producing outputs. They learn from data by adjusting the weights of connections between neurons, thus improving their ability to predict or classify new data. Imagine a neural network as a black box that continuously refines its understanding based on the information it processes.
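As a minimal sketch of "adjusting the weights" to learn from data, the snippet below trains a single linear neuron with gradient descent. The data, learning rate, and function name are illustrative assumptions, not part of any particular framework.

```python
def train_neuron(samples, lr=0.05, epochs=500):
    """Train one linear neuron (pred = w * x + b) with stochastic gradient descent."""
    w, b = 0.0, 0.0  # connection weight and bias, both adjusted during training
    for _ in range(epochs):
        for x, y in samples:
            pred = w * x + b      # forward pass
            error = pred - y      # how far off the prediction is
            w -= lr * error * x   # gradient of 0.5 * error**2 with respect to w
            b -= lr * error       # gradient of 0.5 * error**2 with respect to b
    return w, b

# Toy data following y = 2x; the neuron should recover w close to 2 and b close to 0.
samples = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w, b = train_neuron(samples)
print(round(w, 3), round(b, 3))
```

Real networks stack many such units with non-linear activations, but the learning loop has the same shape: predict, measure the error, nudge the weights.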

Types of Neural Networks

From simple feedforward networks to complex convolutional and recurrent networks, neural networks come in various forms, each designed for specific tasks. Feedforward networks are great for straightforward tasks, while convolutional neural networks (CNNs) excel in image recognition, and recurrent neural networks (RNNs) are ideal for sequential data like text or speech.

Why Combine Neural Networks?

Advantages of Combining Neural Networks

Combining neural networks can significantly enhance their performance, accuracy, and generalization capabilities. By leveraging the strengths of different networks, we can create a more robust and versatile model. Think of it as assembling a team where each member brings unique skills to tackle complex problems.

Applications in Real-World Scenarios

In real-world applications, combining neural networks can lead to breakthroughs in fields like healthcare, finance, and autonomous systems. For example, in medical diagnostics, combining networks can improve the accuracy of disease detection, while in finance, it can enhance the prediction of stock market trends.

Methods of Combining Neural Networks

Ensemble Learning

Ensemble learning involves training multiple neural networks and combining their predictions to improve accuracy. This approach reduces the risk of overfitting and enhances the model's generalization capabilities.
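A minimal sketch of the simplest ensemble, assuming each trained network outputs per-class probabilities for the same input (the numbers below are made up for illustration):

```python
def ensemble_average(predictions):
    """Average per-class probabilities from several models."""
    n_models = len(predictions)
    n_classes = len(predictions[0])
    return [sum(p[c] for p in predictions) / n_models for c in range(n_classes)]

# Hypothetical outputs of three networks for one input, over two classes.
model_outputs = [
    [0.6, 0.4],
    [0.3, 0.7],
    [0.2, 0.8],
]

avg = ensemble_average(model_outputs)
label = max(range(len(avg)), key=avg.__getitem__)  # index of the most probable class
print(avg, label)
```

Here the first network alone would pick class 0, but the averaged prediction picks class 1, which is how an ensemble can smooth over one model's mistake.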

Bagging

Bagging, or Bootstrap Aggregating, trains multiple versions of a model on different subsets of the data and combines their predictions. This method is simple yet effective in reducing variance and improving model stability.
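The bagging loop can be sketched as follows. To keep the example short, the "model" is a stand-in that just predicts the mean of its training targets; in practice each one would be a neural network trained on its own bootstrap sample.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items with replacement."""
    return [rng.choice(data) for _ in data]

def fit_mean_model(sample):
    """Stand-in 'model': predicts the mean of the targets it was trained on."""
    mean = sum(sample) / len(sample)
    return lambda: mean

def bagged_predict(data, n_models=25, seed=0):
    """Train n_models on different bootstrap samples and average their predictions."""
    rng = random.Random(seed)
    models = [fit_mean_model(bootstrap_sample(data, rng)) for _ in range(n_models)]
    return sum(m() for m in models) / n_models

targets = [3.0, 5.0, 4.0, 6.0, 100.0]  # illustrative data with one outlier
estimate = bagged_predict(targets)
print(estimate)
```

Because each model sees a slightly different resampling of the data, their individual quirks tend to average out, which is where the variance reduction comes from.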

Boosting

Boosting focuses on training sequential models, where each model attempts to correct the errors of its predecessor. This iterative process leads to a powerful combined model that performs well even on difficult tasks.
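Here is a small sketch of the boosting idea for regression, assuming one-dimensional inputs and using decision stumps as the weak models (a common choice; any weak learner could stand in). Each round fits a stump to the errors left by the models before it.

```python
def fit_stump(xs, residuals):
    """Pick the threshold split that minimizes squared error on the residuals."""
    best = None
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lmean = sum(left) / len(left)
        rmean = sum(right) / len(right) if right else 0.0
        err = sum((r - (lmean if x <= t else rmean)) ** 2
                  for x, r in zip(xs, residuals))
        if best is None or err < best[0]:
            best = (err, t, lmean, rmean)
    _, t, lmean, rmean = best
    return lambda x: lmean if x <= t else rmean

def boost(xs, ys, rounds=20, lr=0.5):
    """Sequentially add stumps, each trained on the current ensemble's errors."""
    stumps = []
    preds = [0.0] * len(xs)
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, preds)]  # what is still unexplained
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + lr * stump(x) for x, p in zip(xs, preds)]
    return lambda x: sum(lr * s(x) for s in stumps)

# Illustrative data: low values for x <= 3, high values afterwards.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]
model = boost(xs, ys)
print(round(model(2.0), 2), round(model(5.0), 2))
```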

Stacking

Stacking involves training multiple models and using a "meta-learner" to combine their outputs. This technique leverages the strengths of different models, resulting in superior overall performance.
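A minimal stacking sketch, assuming two already-trained models whose predictions on held-out data are available. The meta-learner here is deliberately tiny: it learns a single blending weight by least squares. Real meta-learners are usually richer, such as a regression or a small network.

```python
def fit_meta_learner(preds_a, preds_b, targets):
    """Fit y ~ w * a + (1 - w) * b by least squares; returns (combine, w)."""
    num = sum((a - b) * (y - b) for a, b, y in zip(preds_a, preds_b, targets))
    den = sum((a - b) ** 2 for a, b in zip(preds_a, preds_b))
    w = num / den if den else 0.5  # fall back to a plain average if models agree
    return (lambda a, b: w * a + (1 - w) * b), w

# Hypothetical held-out predictions: model A overshoots by 2, model B undershoots by 6.
targets = [10.0, 20.0, 30.0]
preds_a = [12.0, 22.0, 32.0]
preds_b = [4.0, 14.0, 24.0]

combine, w = fit_meta_learner(preds_a, preds_b, targets)
print(w, combine(12.0, 4.0))
```

The learned weight leans toward the more accurate model (A), and the blended prediction cancels the two models' opposite biases.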

Transfer Learning

Transfer learning is a method where a pre-trained neural network is fine-tuned on a new task. This approach is particularly useful when data is scarce, allowing us to leverage the knowledge acquired from previous tasks.

Concept of Transfer Learning

In transfer learning, a model trained on a large dataset is adapted to a smaller, related task. For instance, a model trained on millions of images can be fine-tuned to recognize specific objects in a new dataset.

How to Implement Transfer Learning

To implement transfer learning, we start with a pretrained model, freeze some layers to retain their knowledge, and fine-tune the remaining layers on the new task. This method saves time and computational resources while achieving impressive results.
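The freeze-then-fine-tune recipe can be sketched without any framework. In this toy version, `pretrained_features` stands in for the frozen layers (its form is an assumption made up for the example), and only the small "head" on top is trained on the new task.

```python
def pretrained_features(x):
    """Frozen layers: features from a (hypothetical) earlier task, never updated."""
    return [x, x * x]

def fine_tune_head(samples, lr=0.01, epochs=500):
    """Train only the head weights on top of the frozen features."""
    head = [0.0, 0.0]  # the only trainable parameters
    for _ in range(epochs):
        for x, y in samples:
            feats = pretrained_features(x)
            pred = sum(w * f for w, f in zip(head, feats))
            err = pred - y
            head = [w - lr * err * f for w, f in zip(head, feats)]
    return head

# New task with very little data: y = x^2.
new_task = [(-2.0, 4.0), (-1.0, 1.0), (1.0, 1.0), (2.0, 4.0)]
head = fine_tune_head(new_task)
print([round(w, 3) for w in head])
```

Because the frozen features already capture the useful structure, four examples are enough to fit the head. In frameworks such as PyTorch or Keras the same idea is expressed by marking the early layers as non-trainable before fine-tuning.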

Advantages of Transfer Learning

Transfer learning enables quicker training times and improved performance, especially when dealing with limited data. It’s like standing on the shoulders of giants, leveraging the vast knowledge accumulated from previous tasks.

Neural Network Fusion

Neural network fusion involves merging multiple networks into a single, unified model. This method combines the strengths of different architectures to create a more powerful and versatile network.

Definition of Neural Network Fusion

Neural network fusion integrates different networks at various stages, such as combining their outputs or merging their internal layers. This approach can enhance the model's ability to handle diverse tasks and data types.

Types of Neural Network Fusion

There are several types of neural network fusion, including early fusion, where networks are combined at the input level, and late fusion, where their outputs are merged. Each type has its own advantages depending on the task at hand.

Implementing Fusion Techniques

To implement neural network fusion, we can combine the outputs of different networks using techniques like averaging, weighted voting, or more sophisticated methods like learning a fusion model. The choice of technique depends on the specific requirements of the task.
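As a sketch of late fusion by weighted voting, assume each network outputs a label and has been assigned a trust weight (the labels and weights below are illustrative):

```python
from collections import defaultdict

def weighted_vote(votes, weights):
    """Late fusion: sum each network's weight behind the label it voted for."""
    scores = defaultdict(float)
    for label, weight in zip(votes, weights):
        scores[label] += weight
    return max(scores, key=scores.get)

votes = ["cat", "dog", "dog"]   # one vote per network
weights = [0.6, 0.25, 0.25]     # hypothetical trust in each network

print(weighted_vote(votes, weights))    # the heavily trusted network wins
print(weighted_vote(votes, [1, 1, 1]))  # equal weights reduce to majority voting
```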

Cascade Network

Cascade networks involve feeding the output of one neural network as input to another. This approach creates a layered structure where each network focuses on different aspects of the task.

What is a Cascade Network?

A cascade network is a hierarchical structure where multiple networks are connected in series. Each network refines the outputs of the previous one, leading to progressively better performance.
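Structurally, a cascade is just function composition. The sketch below uses two stand-in stages (a clamp that plays the role of a denoising network, and a threshold that plays the role of a classifier); both are placeholders for real trained networks.

```python
def cascade(*stages):
    """Chain stages so each one receives the previous stage's output."""
    def run(x):
        for stage in stages:
            x = stage(x)
        return x
    return run

def denoise(pixels):
    """Stand-in for stage 1: clamp out-of-range pixel values into [0, 1]."""
    return [min(max(p, 0.0), 1.0) for p in pixels]

def classify(pixels):
    """Stand-in for stage 2: label the cleaned image by mean brightness."""
    return "bright" if sum(pixels) / len(pixels) > 0.5 else "dark"

pipeline = cascade(denoise, classify)
print(pipeline([1.4, 0.9, -0.2, 0.8]))
```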

Advantages and Applications of Cascade Networks

Cascade networks are particularly useful in complex tasks where different stages of processing are required. For example, in image processing, a cascade network can progressively enhance image quality, leading to more accurate recognition.

Practical Examples

Image Recognition

In image recognition, combining CNNs with ensemble methods can improve accuracy and robustness. For instance, a network trained on general image data can be combined with a network fine-tuned for specific object recognition, leading to superior performance.

Natural Language Processing

In natural language processing (NLP), combining RNNs with transfer learning can enhance the understanding of text. A pre-trained language model can be fine-tuned for specific tasks like sentiment analysis or text generation, resulting in more accurate and nuanced outputs.

Predictive Analytics

In predictive analytics, combining different types of networks can improve the accuracy of predictions. For example, a network trained on historical data can be combined with a network that analyzes real-time data, leading to more accurate forecasts.

Challenges and Solutions

Technical Challenges

Combining neural networks can be technically challenging, requiring careful tuning and integration. Ensuring compatibility between different networks and avoiding overfitting are critical considerations.

Data Challenges

Data-related challenges include ensuring the availability of diverse and high-quality data for training. Managing data complexity and avoiding biases are essential for achieving accurate and reliable results.

Possible Solutions

To overcome these challenges, it’s crucial to adopt a systematic approach to model integration, including careful preprocessing of data and rigorous validation of models. Utilizing advanced tools and frameworks can also facilitate the process.

Tools and Frameworks

Popular Tools for Combining Neural Networks

Tools like TensorFlow, PyTorch, and Keras provide extensive support for combining neural networks. These platforms offer a wide range of functionalities and ease of use, making them ideal for both beginners and experts.

Frameworks to Use

Frameworks like Scikit-learn, Apache MXNet, and Microsoft Cognitive Toolkit offer specialized support for ensemble learning, transfer learning, and neural network fusion. These frameworks provide robust tools for developing and deploying combined neural network models.

Future of Combining Neural Networks

Emerging Trends

Emerging trends in combining neural networks include the use of advanced ensemble techniques, the integration of neural networks with other AI models, and the development of more sophisticated fusion methods.

Potential Developments

Future developments may include the creation of more powerful and efficient neural network architectures, enhanced transfer learning techniques, and the integration of neural networks with other technologies like quantum computing.

Case Studies

Successful Examples in Industry

In healthcare, combining neural networks has led to significant improvements in disease diagnosis and treatment recommendations. For example, combining CNNs with RNNs has enhanced the accuracy of medical image analysis and patient monitoring.

Lessons Learned from Case Studies

Key lessons from successful case studies include the importance of data quality, the need for careful model tuning, and the benefits of leveraging diverse neural network architectures to address complex problems.

Online Course

I have come across many online courses, and these are great platforms that can save you time and money.

1. Prag Robotics_ TBridge

2. Coursera

Best Practices

Strategies for Effective Combination

Effective strategies for combining neural networks include using ensemble methods to enhance performance, leveraging transfer learning to save time and resources, and adopting a systematic approach to model integration.

Avoiding Common Pitfalls

Common pitfalls to avoid include overfitting, ignoring data quality, and underestimating the complexity of model integration. By being aware of these challenges, we can develop more robust and effective combined neural network models.

Conclusion

Combining two trained neural networks can significantly enhance their capabilities, leading to more accurate and versatile AI models. Whether through ensemble learning, transfer learning, or neural network fusion, the potential benefits are immense. By adopting the right strategies and tools, we can unlock new possibilities in AI and drive advancements across various fields.

FAQs

What is the easiest method to combine neural networks?

The easiest method is ensemble learning, where multiple models are combined to improve performance and accuracy.

Can different types of neural networks be combined?

Yes, different types of neural networks, such as CNNs and RNNs, can be combined to leverage their unique strengths.

What are the typical challenges in combining neural networks?

Challenges include technical integration, data quality, and avoiding overfitting. Careful planning and validation are essential.

How does combining neural networks enhance performance?

Combining neural networks enhances performance by leveraging diverse models, reducing errors, and improving generalization.

Is combining neural networks beneficial for small datasets?

Yes, combining neural networks can be beneficial for small datasets, especially when using techniques like transfer learning to leverage knowledge from larger datasets.

#artificialintelligence#coding#raspberrypi#iot#stem#programming#science#arduinoproject#engineer#electricalengineering#robotic#robotica#machinelearning#electrical#diy#arduinouno#education#manufacturing#stemeducation#robotics#robot#technology#engineering#robots#arduino#electronics#automation#tech#innovation#ai


Text

The Difference Between Business Intelligence and Data Analytics

Introduction

In today’s hyper-digital business world, data flows through every corner of an organization. But the value of that data is only realized when it’s converted into intelligence and ultimately, action.

That’s where Business Intelligence (BI) and Data Analytics come in. These two often-interchanged terms form the backbone of data-driven decision-making, but they serve very different purposes.

This guide unpacks the nuances between the two, helping you understand where they intersect, how they differ, and why both are critical to a future-ready enterprise.

What is Business Intelligence?

Business Intelligence is the systematic collection, integration, analysis, and presentation of business information. It focuses primarily on descriptive analytics — what happened, when, and how.

BI is built for reporting and monitoring, not for experimentation. It’s your corporate dashboard, a rearview mirror that helps you understand performance trends and operational health.

Key Characteristics of BI:

Historical focus

Dashboards and reports

Aggregated KPIs

Data visualization tools

Limited predictive power

Examples:

A sales dashboard showing last quarter’s revenue

A report comparing warehouse efficiency across regions

A chart showing customer churn rate over time

What is Data Analytics?

Data Analytics goes a step further. It’s a broader umbrella that includes descriptive, diagnostic, predictive, and prescriptive approaches.

While BI focuses on “what happened,” analytics explores “why it happened,” “what might happen next,” and “what we should do about it.”

Key Characteristics of Data Analytics:

Exploratory in nature

Uses statistical models and algorithms

Enables forecasts and optimization

Can be used in real-time or batch processing

Often leverages machine learning and AI

Examples:

Predicting next quarter’s demand using historical sales and weather data

Analyzing clickstream data to understand customer drop-off in a sales funnel

Identifying fraud patterns in financial transactions
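The contrast can be made concrete with a toy series. In this sketch (the revenue figures and function names are illustrative assumptions), the BI-style question is answered by a simple aggregate, while the analytics-style question fits a trend and extrapolates it.

```python
def total_revenue(sales):
    """BI-style descriptive metric: what happened?"""
    return sum(sales)

def linear_forecast(sales):
    """Analytics-style estimate: fit a least-squares line and extrapolate one period."""
    n = len(sales)
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(sales) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, sales))
             / sum((x - x_mean) ** 2 for x in xs))
    intercept = y_mean - slope * x_mean
    return intercept + slope * n

quarterly = [100.0, 110.0, 120.0, 130.0]  # hypothetical revenue per quarter

print(total_revenue(quarterly))    # descriptive: revenue across the four quarters
print(linear_forecast(quarterly))  # predictive: estimate for the next quarter
```

A straight-line fit is the simplest possible forecast; real analytics work would typically add seasonality, external factors such as weather, and some measure of uncertainty.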

BI vs Analytics: Use Cases in the Real World

Let’s bring the distinction to life with practical scenarios.

Retail Example:

BI: Shows sales per store in Q4 across regions

Analytics: Predicts which product category will grow fastest next season based on external factors

Banking Example:

BI: Tracks number of new accounts opened weekly

Analytics: Detects anomalies in transactions suggesting fraud risk

Healthcare Example:

BI: Reports on patient visits by department

Analytics: Forecasts ER admission rates during flu season using historical and external data

Both serve a purpose, but together, they offer a comprehensive view of the business landscape.

Tools That Power BI and Data Analytics

Popular BI Tools:

Microsoft Power BI — Accessible and widely adopted

Tableau — Great for data visualization

Qlik Sense — Interactive dashboards

Looker — Modern BI for data teams

Zoho Analytics — Cloud-based and SME-friendly

Popular Analytics Tools:

Python — Ideal for modeling, machine learning, and automation

R — Statistical computing powerhouse

Google Cloud BigQuery — Great for large-scale data

SAS — Trusted in finance and healthcare

Apache Hadoop & Spark — For massive unstructured data sets

The Convergence of BI and Analytics

Modern platforms are increasingly blurring the lines between BI and analytics.

Tools like Power BI with Python integration or Tableau with R scripts allow businesses to blend static reporting with advanced statistical insights.

Cloud-based data warehouses like Snowflake and Databricks allow real-time querying for both purposes, from one central hub.

This convergence empowers teams to:

Monitor performance AND

Experiment with data-driven improvements

Skills and Teams: Who Does What?

Business Intelligence Professionals:

Data analysts, reporting specialists, BI developers

Strong in SQL, dashboard tools, storytelling

Data Analytics Professionals:

Data scientists, machine learning engineers, data engineers

Proficient in Python, R, statistics, modeling, and cloud tools

While BI empowers business leaders to act on known metrics, analytics helps technical teams discover unknowns.

Both functions require collaboration for maximum strategic impact.

Strategic Value for Business Leaders

BI = Operational Intelligence

Track sales, customer support tickets, cash flow, delivery timelines.

Analytics = Competitive Advantage

Predict market trends, customer behaviour, churn, or supply chain risk.

The magic happens when you use BI to steer, and analytics to innovate.

C-level insight:

CMOs use BI to measure campaign ROI, and analytics to refine audience segmentation

CFOs use BI for financial health tracking, and analytics for forecasting

CEOs rely on both to align performance with vision

How to Choose What Your Business Needs

Choose BI if:

You need faster, cleaner reporting

Business users need self-service dashboards

Your organization is report-heavy and reaction-focused

Choose Data Analytics if:

You want forward-looking insights

You need to optimize and innovate

You operate in a data-rich, competitive environment

Final Thoughts: Intelligence vs Insight

In the grand scheme, Business Intelligence tells you what’s going on, and Data Analytics tells you what to do next.

One is a dashboard; the other is a crystal ball.

As the pace of business accelerates, organizations can no longer afford to operate on gut instinct or lagging reports. They need the clarity of BI and the power of analytics together.

Because in a world ruled by data, those who turn information into insight, and insight into action, are the ones who win.


Text

AI Flash Sale Scraping for Walmart & Target USA

Introduction

In the fast-moving world of e-commerce, businesses can't afford to make decisions based on guesswork. From competitor pricing to product performance, real-time data fuels every smart retail strategy. Among Southeast Asia's leading online marketplaces, Shopee stands out with its vast product categories, dynamic pricing strategies, and active customer base. To stay ahead in this competitive environment, businesses increasingly turn to Shopee Product Data Extraction API to collect structured, reliable, and actionable data from the platform. Whether you're a seller, brand analyst, or price intelligence provider, integrating Shopee's data into your operations offers unparalleled benefits—from pricing accuracy to market visibility. This blog will explore how Shopee data extraction empowers smarter e-commerce operations, what data points can be collected, and why this practice is crucial for business growth.

For brands, aggregators, retail analysts, and e-commerce sellers, missing short-lived flash sales can mean missed opportunities, lost conversions, and poor price competitiveness.

That’s where Product Data Scrape steps in. With powerful AI Flash Sale Scraping for Walmart & Target USA, our system detects flash sales in real time across Walmart.com and Target.com, providing structured alerts, pricing deltas, and inventory changes to enable smarter, faster decisions.

The Growing Influence of Flash Sales on U.S. Retail

From “Rollback” offers at Walmart to “Deal of the Day” promotions on Target, the flash-sale ecosystem in the U.S. is growing faster than ever.

Why Flash Sales Matter:

Limited-time promotions lasting a few hours

Sudden discount drops of 10%–70%

Used to rotate stock and trigger impulse purchases

Critical for high-traffic events like Black Friday, Labor Day, or Memorial Day

Tracked closely by price comparison engines and deal forums

Traditional Scraping vs AI-Powered Flash Sale Detection

Most scrapers rely on fixed intervals to extract pricing data. But flash sales don’t follow a predictable schedule.

Here’s how AI scraping models from Product Data Scrape outperform legacy methods:

| Feature | Traditional Scraping | AI-Powered Scraping (Ours) |
| --- | --- | --- |
| Data Refresh Frequency | Static (e.g., every 6 hours) | Dynamic (adjusts based on behavior) |
| Flash Sale Detection | No | Yes – Real-time triggers |
| Inventory Status Tracking | Basic (yes/no) | Smart tracking (in-stock trends) |
| Discount Spike Detection | Missed often | AI-flagged pricing anomalies |
| Site Adaptability | Manual updates | Auto-adjusts to page layout changes |

How Our AI Models Work

Our proprietary AI engine monitors every page element that may indicate a flash deal:

Price Spike Detection: ML models flag sudden drops in pricing vs historical trends

Banner & Label Analysis: Detects “Hot Deal,” “Today Only,” “Rollback,” and “Clearance” labels

Inventory Signals: Analyzes cart availability and stock depletion velocity

Delivery Window Compression: AI notices when “1-hour delivery” appears — a flash sale trigger

Variant-level Differentiation: AI distinguishes when only specific SKUs/colors are on sale
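The price-drop signal in the list above can be approximated with a simple rule. This is only a sketch under stated assumptions: the 20% threshold, the use of a median baseline, and the function names are illustrative, not a description of the actual proprietary models.

```python
from statistics import median

def percent_drop(reference_price, current_price):
    """Discount relative to the reference price, in percent."""
    return (reference_price - current_price) / reference_price * 100

def is_flash_sale(price_history, current_price, min_drop_pct=20.0):
    """Flag a price that falls sharply below the recent median price."""
    baseline = median(price_history)
    return percent_drop(baseline, current_price) >= min_drop_pct

history = [249, 249, 245, 249, 249]  # hypothetical recent prices for one product

print(round(percent_drop(249, 179), 1))  # size of the drop, in percent
print(is_flash_sale(history, 179))       # a deep cut is flagged
print(is_flash_sale(history, 239))       # a small fluctuation is not
```

Using the median rather than the latest price keeps a single noisy observation from shifting the baseline. A production system would combine this with the label, inventory, and variant signals listed above.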

Sample Dataset: Flash Sale Detection Table

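| Timestamp | Platform | Product Name | Original Price | Sale Price | % Drop | Detected Label |
| --- | --- | --- | --- | --- | --- | --- |
| 11:02 AM EST | Walmart | Apple AirPods Pro (Gen 2) | $249 | $179 | 28.1% | Rollback |
| 11:08 AM EST | Target | Ninja Air Fryer XL | $149 | $99 | 33.5% | Today Only |
| 12:30 PM EST | Walmart | HP Chromebook 14" | $299 | $219 | 26.8% | Flash Deal |
| 1:10 PM EST | Target | Dyson V8 Absolute | $429 | $299 | 30.3% | Daily Deal |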

Real-time tracking powered by Product Data Scrape
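The % Drop column in the sample table follows directly from the two price columns. A small sketch of that arithmetic, with a helper name that is purely illustrative:

```python
def percent_drop(original_price, sale_price):
    """Return the discount as a percentage of the original price, to one decimal."""
    if original_price <= 0:
        raise ValueError("original_price must be positive")
    return round((original_price - sale_price) / original_price * 100, 1)


# Apple AirPods Pro (Gen 2): $249 -> $179
print(percent_drop(249, 179))  # 28.1
```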

Use Case: U.S. Consumer Electronics Brand

A mid-sized U.S. electronics brand needed visibility into Walmart flash sales to protect its pricing integrity and reduce gray market undercutting.

By using Product Data Scrape, the brand:

Detected over 72 unannounced flash sales in one month

Triggered automatic alerts to their MAP enforcement team

Realigned their own discount calendar based on Target USA Price Monitoring

Boosted competitive positioning during weekend campaigns

Achieved 21% higher conversion on matching products via price adjustments

Event-Based Monitoring: Black Friday, Prime Day, Labor Day

Product Data Scrape’s AI system intensifies monitoring during high-impact events using temporal models and keyword signal mapping.

High-Impact Events Tracked:

Black Friday – Hourly discount tracking for electronics, toys, apparel

Back-to-School Season – Flash laptop and backpack offers

Prime Day Counter-Deals – Walmart and Target matching Amazon flash discounts

Labor Day / Memorial Day – Appliance & home goods spikes

E-commerce Discount Intelligence is crucial in these windows, where over 50% of deals disappear within 4 hours.
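One way to picture event-sensitive monitoring is a polling interval that tightens on event days and after recent deal activity. The sketch below is an assumption about how such scheduling could work, not a description of the production system. The 6-hour baseline, hourly event tracking, and 15-minute floor echo figures used elsewhere in this article, while the halving rule is invented for illustration.

```python
from datetime import date


def poll_interval_minutes(today, event_days, recent_deals, base=360, floor=15):
    """Return minutes to wait before the next crawl of a product page.

    `event_days` is a set of dates treated as high-impact events (supplied
    by the caller); `recent_deals` is how many flash deals were seen lately.
    """
    interval = base
    if today in event_days:
        interval = 60  # hourly tracking during high-impact events
    # Each recently seen flash deal halves the wait, down to the floor.
    for _ in range(recent_deals):
        interval = max(floor, interval // 2)
    return interval


black_friday = date(2025, 11, 28)
print(poll_interval_minutes(black_friday, {black_friday}, recent_deals=2))  # 15
print(poll_interval_minutes(date(2025, 3, 4), {black_friday}, recent_deals=0))  # 360
```

With many deals vanishing within a few hours, a fixed 6-hour crawl can miss a sale entirely, which is why the interval shrinks when signals pile up.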

Deep Dive: How Flash Sales Look Different on Walmart vs Target

Feature | Walmart.com | Target.com
Label Terminology | “Rollback”, “Flash Deal”, “Hot Deal” | “Daily Deal”, “Today Only”, “Sale”
Price Fluctuation Pattern | Slight drops + deep cuts | Time-limited & sharply timed
Inventory Behavior | Flash deals tied to stock depletion | Often tied to delivery window urgency
Discount Depth | 10%–60% depending on product | Usually 20%–50% with loyalty boosts
Frequency of Sale Labels | High (visible across categories) | Moderate (highlighted products only)

AI scrapers trained on both platforms ensure Retail Price Monitoring Tools are always aligned with platform-specific behaviors.
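Platform-specific label handling can be approximated with a simple lookup keyed by retailer. The sketch below uses plain pattern matching rather than a trained model, so treat it as a simplified stand-in. The label lists come from the comparison above, while the function and dictionary names are hypothetical.

```python
import re

# Label vocabulary per platform, taken from the Walmart vs Target comparison.
PLATFORM_LABELS = {
    "walmart": ["Rollback", "Flash Deal", "Hot Deal"],
    "target": ["Daily Deal", "Today Only", "Sale"],
}


def detect_labels(platform, page_text):
    """Return the promo labels for `platform` that appear in `page_text`."""
    found = []
    for label in PLATFORM_LABELS.get(platform.lower(), []):
        # Word boundaries keep "Sale" from matching inside "Wholesale".
        pattern = r"\b" + re.escape(label) + r"\b"
        if re.search(pattern, page_text, re.IGNORECASE):
            found.append(label)
    return found


print(detect_labels("Walmart", "Rollback! Was $249, now $179"))  # ['Rollback']
print(detect_labels("Target", "Wholesale pricing available"))    # []
```

Keeping the vocabulary in data rather than code makes it easy to add a retailer or a new label without touching the matching logic.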

System Architecture Overview

Here's how AI Web Scraping for Retailers is implemented at Product Data Scrape:

1. Crawler Engine – Extracts page data, JS content, dynamic elements

2. AI Label Detector – Flags sales using pattern recognition (e.g., “Deal ends in X hrs”)

3. Discount Delta Engine – Compares current vs 30-day price history

4. Flash Sale Model – Predicts duration + depth of sale

5. API/Alert System – Sends JSON, CSV or Slack alerts in real time
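To make the later stages concrete, here is a hedged sketch of how a discount delta check against 30-day history might feed a JSON alert. The `Observation` class, the `build_alert` function, the payload fields, and the use of the median as a baseline are all assumptions for illustration. The actual engine and alert schema are not documented here.

```python
import json
from dataclasses import dataclass
from statistics import median


@dataclass
class Observation:
    platform: str
    product: str
    price: float
    label: str  # promo label found on the page, "" if none


def build_alert(obs, history_30d, min_drop_pct=10.0):
    """Return a JSON alert string, or None when nothing looks like a flash sale."""
    if not history_30d:
        return None
    baseline = median(history_30d)  # median resists one-off price glitches
    if baseline <= 0:
        return None
    drop_pct = (baseline - obs.price) / baseline * 100
    # Alert when either signal fires: a meaningful drop or a promo label.
    if drop_pct < min_drop_pct and not obs.label:
        return None
    return json.dumps({
        "platform": obs.platform,
        "product": obs.product,
        "baseline_price": baseline,
        "sale_price": obs.price,
        "drop_pct": round(drop_pct, 1),
        "label": obs.label or None,
    })


obs = Observation("Walmart", 'HP Chromebook 14"', 219.0, "Flash Deal")
print(build_alert(obs, [299.0] * 30))
```

The same dictionary could be written out as a CSV row or posted to a chat webhook. JSON is used here only because it is the simplest to show.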

Top Categories Where Flash Sales Are Common

Category | Flash Sale Frequency (Monthly) | Avg. Discount (%)
Electronics | 120+ | 25–50%
Kitchen Appliances | 90+ | 20–40%
Baby Products | 60+ | 15–30%
Furniture & Decor | 75+ | 25–45%
Health & Fitness | 50+ | 10–25%

Who Benefits from AI Flash Sale Scraping?

D2C Brands: Avoid undercutting, align price policies

Retail Intelligence Firms: Track industry-wide promo trends

AdTech Companies: Sync ad budgets with flash sale windows

Price Comparison Sites: Update prices every hour

Deal Communities: Automate deal curation and notifications

Real-Time Price Scraping USA empowers smarter campaigns, better decision-making, and higher sales velocity.

Case Study: Deal Aggregator Boosts Clicks by 42%

A U.S.-based deals website integrated Product Data Scrape’s flash sale API for Walmart and Target. By syncing updates every 15 minutes:

They posted time-sensitive deals before competitors

CTR (Click Through Rate) on email campaigns increased by 42%

Their subscriber base grew by 18% in just 30 days

Page views doubled during weekend deal events

The takeaway? Detecting flash sales automatically means more visibility and more revenue.

Competitive Benchmark: Why Product Data Scrape Wins

Feature | Product Data Scrape | Other Tools
AI Flash Sale Detection | Yes | No
Real-Time Walmart + Target Sync | Yes | Partial
JSON + Slack + CSV Delivery | Multi-Mode | Limited
Adaptive AI for Promo Labels | Yes | No
Event-Sensitive Monitoring | Seasonal + Daily | No
U.S.-Based Support & Deployment | Yes | Offshore

Final Thoughts

Flash sales aren’t just a marketing gimmick — they’re a pricing battleground. In 2025, where discounts can change every 15 minutes, only businesses with AI-powered scraping and real-time visibility can stay ahead.

With Product Data Scrape, you don’t just scrape — you detect, react, and optimize instantly.

Start winning the flash sale race with:

Walmart Flash Sale Scraping

Target USA Price Monitoring

AI Web Scraping for Retailers

Full-stack Retail Price Monitoring Tools

At Product Data Scrape, we strongly emphasize ethical practices across all our services, including Competitor Price Monitoring and Mobile App Data Scraping. Our commitment to transparency and integrity is at the heart of everything we do. With a global presence and a focus on personalized solutions, we aim to exceed client expectations and drive success in data analytics. Our dedication to ethical principles ensures that our operations are both responsible and effective.

Source: https://www.productdatascrape.com/ai-flash-sale-scraping-walmart-target-usa.php

#AIFlashSaleScrapingForWalmartAndTargetUSA#TargetUSAPriceMonitoring#AIWebScrapingForRetailers#WalmartFlashSaleScraping#RetailPriceMonitoringTools

The Future of Crypto Index: AI, Automation, and Decentralized Portfolios

As the cryptocurrency market evolves, so too do the tools investors use to navigate it. In 2025, one of the most powerful and user-friendly investment tools is the crypto index. But we’re just getting started. The next wave of innovation in this space will redefine how people build, manage, and grow their crypto portfolios.

From AI-powered automation to decentralized index protocols, the future of crypto indexing is smarter, faster, and more aligned with Web3 principles. In this article, we explore where crypto indices are heading and what it means for both everyday investors and institutions.

A Quick Recap: What Is a Crypto Index?

A crypto index is a curated portfolio of digital assets designed to represent a specific market segment, investment theme, or strategy. Think of it as the crypto equivalent of the S&P 500, but often more flexible and dynamic.

Crypto indices come in many forms:

Market cap-based (e.g., Top 10 cryptos)

Thematic (e.g., AI tokens, DeFi, Memecoins)

Strategy-based (e.g., momentum, risk-adjusted growth)

AI-powered (dynamically rebalanced based on real-time signals)
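For the market cap-based variety, the weighting rule is simple enough to sketch: each asset's weight is its market cap divided by the combined cap of the assets in the index. The function name and the sample figures below are hypothetical and do not reflect any real index or real market data.

```python
def market_cap_weights(market_caps, top_n=10):
    """Return {symbol: weight} for the `top_n` largest assets by market cap."""
    ranked = sorted(market_caps.items(), key=lambda item: item[1], reverse=True)
    top = ranked[:top_n]
    total = sum(cap for _, cap in top)
    if total <= 0:
        return {}
    return {symbol: cap / total for symbol, cap in top}


# Made-up caps (in billions) for three placeholder tokens
weights = market_cap_weights({"AAA": 600, "BBB": 300, "CCC": 100}, top_n=3)
print(weights)
```

Thematic and strategy-based indices follow the same shape, just with a different rule for choosing the assets and assigning weights.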

The Trends Reshaping Crypto Indexing

1. AI Will Power the Next Generation of Indices

AI-driven indices are already outperforming traditional passive ones in dynamic market conditions. Token Metrics, for example, uses machine learning to analyze 80+ data points per token—including sentiment, momentum, volatility, and technical indicators—to allocate capital intelligently.

In the future, AI indices will:

Operate on shorter rebalancing cycles (e.g., daily)

Integrate macro data (inflation, Fed policy, global trends)

Use on-chain analytics to detect smart money flows

Adapt strategies in real time to protect capital and maximize gains

This makes AI not just a nice-to-have, but a competitive edge in portfolio management.

2. Customizable Indices Will Become Standard

In the near future, users won’t be limited to predefined indices. Platforms will let users:

Select sectors (e.g., 40% AI, 30% DeFi, 30% RWA)

Set rebalancing rules (e.g., weekly, when trend shifts)

Integrate risk preferences (e.g., exclude low liquidity tokens)

Define goals (capital growth vs. income generation)

Tokenized “build-your-own” indices will be composable in DeFi and tradable as single assets—making portfolio construction accessible to everyone.
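A custom index with sector targets and a rebalancing rule could be expressed roughly as follows. This is a sketch under stated assumptions: the function name, the drift-threshold rule, and the dollar figures are illustrative, and a real platform would also need to account for fees, slippage, and liquidity.

```python
def rebalance_trades(holdings_usd, target_weights, drift_threshold=0.05):
    """Return USD to buy (+) or sell (-) per sector, or {} if within tolerance.

    A full rebalance is triggered once any sector drifts from its target
    weight by more than `drift_threshold` (an assumed rule).
    """
    if abs(sum(target_weights.values()) - 1.0) > 1e-9:
        raise ValueError("target weights must sum to 1")
    total = sum(holdings_usd.values())
    if total <= 0:
        return {}
    sectors = sorted(set(holdings_usd) | set(target_weights))
    drifts = [
        abs(holdings_usd.get(s, 0.0) / total - target_weights.get(s, 0.0))
        for s in sectors
    ]
    if max(drifts) <= drift_threshold:
        return {}
    return {
        s: round(target_weights.get(s, 0.0) * total - holdings_usd.get(s, 0.0), 2)
        for s in sectors
    }


# Targets of 40% AI, 30% DeFi, 30% RWA against a portfolio that has drifted
print(rebalance_trades(
    {"AI": 5000, "DeFi": 3000, "RWA": 2000},
    {"AI": 0.4, "DeFi": 0.3, "RWA": 0.3},
))  # {'AI': -1000.0, 'DeFi': 0.0, 'RWA': 1000.0}
```

Rebalancing every sector at once keeps buys and sells netting to zero, so no idle cash is left behind.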

3. Tokenization of Indices for On-Chain Trading

Just like ETFs in traditional finance, crypto indices will be tokenized. Imagine an ERC-20 token that represents a dynamic portfolio of 20 assets, rebalanced weekly via smart contract.

Tokenized indices will:

Be tradable on DEXes like Uniswap

Integrate with DeFi protocols for lending, staking, and yield

Enable cross-chain portability via bridges and L2s

Projects like Phuture and Index Coop already use this model. Expect it to become the norm as on-chain infrastructure matures.

4. Decentralized Index Protocols Will Replace Centralized Funds

Web3 users don’t want to give up custody or rely on black-box investment products. That’s why decentralized index protocols are rising.

Decentralized index investing means:

No centralized asset manager

Rules enforced by smart contracts

Governance by token holders (DAOs)

Transparent performance tracking

Platforms like Index Coop, Phuture, and Set Protocol already allow users to create and invest in on-chain portfolios governed by the community.

5. Indices Will Include Yield-Generating Strategies

Future indices won’t just hold tokens—they’ll generate income.

Example strategies:

Staking ETH, SOL, or AVAX inside an index

Lending stablecoins for passive yield

Providing liquidity via LP tokens

Real-world asset (RWA) yield from tokenized treasuries

These hybrid portfolios blur the lines between index funds and yield aggregators, offering capital growth and cash flow.

6. Cross-Chain and Multi-Chain Indices

Right now, most indices are built on Ethereum. But the future is multi-chain.

Expect indices that:

Include tokens from Solana, Avalanche, NEAR, Base, and more

Use cross-chain messaging (like LayerZero) for syncing

Automatically rebalance across chains based on liquidity

This opens up a broader universe of assets, greater flexibility, and more seamless integration with emerging ecosystems.

What This Means for Investors

📊 For Beginners

Expect more user-friendly platforms with pre-made index templates

Easy access to diversified, automated portfolios

Less research, more hands-free investing

🧠 For Advanced Users

Full customization of index logic and asset mix

On-chain transparency with tokenized portfolio shares

Smart contracts executing strategies you define

💼 For Institutions

Regulated AI index products (like Bitwise + Token Metrics hybrid models)

Multi-asset portfolios using secure custody solutions

Performance metrics for quarterly reporting

Future Use Case: AI Index Agent + Wallet Integration

Imagine a future where your wallet is connected to an AI investment agent that:

Monitors your financial goals

Adjusts your crypto index exposure in real time

Sends alerts: “AI Index has exited Memecoins due to negative momentum”

Automatically shifts allocations between risk-on and risk-off assets

With platforms like Token Metrics already integrating AI insights and trading signals, we’re closer than ever to this reality.

Challenges to Solve

Despite the promise, some hurdles remain:

Smart contract risk in on-chain indices

Liquidity fragmentation across L1s and L2s

Security of rebalancing logic

Index manipulation by illiquid or low-float tokens

However, these are solvable through governance models, liquidity partnerships, and better on-chain data feeds.

The Bottom Line: Crypto Indices Are Evolving—Fast

Crypto indices started as simple, passive tools to gain market exposure. In 2025 and beyond, they are transforming into autonomous, intelligent, and composable portfolios that will power the future of investing.

If you want to stay ahead of the curve:

Embrace AI-powered dynamic indices

Explore tokenized index assets

Use decentralized platforms to build your own strategies

Platforms like Token Metrics, Phuture, and Index Coop are leading this next wave—making it easier than ever to invest like a quant, without writing a single line of code.

How Predictive Analytics is Transforming Modern Healthcare

Predictive analytics has become a cornerstone of modern healthcare, fundamentally reshaping how hospitals and health systems approach patient care, resource management, and operational efficiency. By leveraging historical patient data, machine learning algorithms, and real-time analytics, healthcare providers are empowered to anticipate health risks and make proactive, data-driven decisions that can save lives and reduce costs.

Understanding Predictive Analytics in Healthcare

Predictive analytics refers to the use of statistical methods, data mining, and machine learning techniques to forecast future events based on historical and current data. In the context of healthcare, this involves examining vast amounts of medical data—such as electronic health records (EHRs), lab results, imaging, and even wearable device data—to predict clinical outcomes and patient behaviors.

For example, a predictive model might estimate the risk of hospital readmission for a heart failure patient based on vital signs, medication adherence, and past admissions. Similarly, it could identify patients likely to develop complications post-surgery or those at risk of chronic conditions such as diabetes or hypertension.
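To give a feel for what such a model computes, here is a toy logistic risk score built from the three kinds of inputs mentioned above. Every coefficient and feature name is hypothetical and chosen only for illustration. A real model would be fitted to historical patient data and clinically validated before use.

```python
import math

# Hypothetical coefficients for illustration only, not derived from real data.
INTERCEPT = -2.0
WEIGHTS = {
    "prior_admissions": 0.5,            # count of past admissions
    "missed_doses_per_week": 0.3,       # proxy for medication adherence
    "resting_heart_rate_over_90": 0.8,  # 1 if elevated, else 0 (vital sign flag)
}


def readmission_risk(features):
    """Return a probability-like score in (0, 1) from a logistic function."""
    score = INTERCEPT + sum(
        weight * features.get(name, 0.0) for name, weight in WEIGHTS.items()
    )
    return 1.0 / (1.0 + math.exp(-score))


low = readmission_risk({"prior_admissions": 0})
high = readmission_risk({"prior_admissions": 3, "missed_doses_per_week": 2})
print(round(low, 3), round(high, 3))
```

The logistic form is a common starting point because each coefficient can be read as the direction and strength of one risk factor.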