#AI is still a crapshoot


Oh, and for anyone curious what the correct information is:

Shepard headbutts Gatatog Uvenk (a different krogan) on Tuchanka (which could maybe be called a "derelict krogan settlement" except it's not deserted or neglected, just messy), not to prove he/she is "krogan enough" but just to get Uvenk to shut up (also, it's an optional Renegade interrupt). And it's for Grunt's loyalty mission, not recruitment mission.

Good job, AI, that's just wrong.

I was trying to check something quickly for a thing I'm working on, and had to just stop and stare for a hot minute at the AI Overview trying to "help" me. And this is why I keep telling Google the Overview is useless: I have to fact-check it anyway by looking at the links, because I can't trust it to give me accurate information, so what's the point?

Anyway.

I forgot to get a screenshot of the one that claimed it was on the Citadel. Did you know you can refresh and sometimes get different results from the AI Overview? And yet none of those refreshes gave me accurate information.

#mass effect#mass effect 2#headbutting krogan#one of the renegade interrupts I always take#AI is still a crapshoot#AI is still wrong


Everything can change at any point!

Create images here: https://www.bing.com/images/create?FORM=GENILP

Before I say anything else, though: not following someone else's prompts means you'll likely find some wild and unexpected things yourself. If you follow my prompts like they're laws, you will only ever get results like mine. There are people doing much cooler, weirder things. Don't get restricted by this.

the site was VERY BROKEN for the last 6 days, you haven't been banned. You get 15 boosts a day which usually override any current downtime, but the popup thinks you get 25 a week, which is an indicator of how busted and poorly planned they were for this flood of users. It's not too hard to create illegal results, and there's millions of users, so it's very unlikely a human is ever looking at your results. Unless you're doing really spectacularly terrible things, of course. If you get the warning as soon as you enter your prompt, change the most controversial aspects of your prompt immediately, as repeats of this will get you suspended for increasingly long times. It is possible to make alt accounts with throwaway emails though. It's unconfirmed but it appears that US residents get priority access during US times, and UK residents can only reliably make things from 7am to 1pm for example. Weekend access is a crapshoot. I don't personally pay for ChatGPT so I can't say anything about the alleged priority access you get there, but even that can be slow and restricted during the worst times (I assume fixing this will be their priority, though). There are many conflicting reports about whether it's more censored or not. Reports is a very fancy way of saying reddit comments.

Everything I superstitiously guess about prompts:

you can be very descriptive and write in natural english, or you can be very brief. both methods work, I suspect both versions do different things. repetition and restating the same thing in other ways also seems to emphasise (possibly.) Prompts can be quite chaotic and contradictory - you can describe a lot of things happening and it may surprise you, so have fun with weirdness! some words are "heavy" against the automated filters, and can be safe in one prompt and unsafe in another. think of it like buckaroo, the AI is trying to find meaning in your prompt and it will sometimes combine things and get mad about it. be aware of politics and words that may be used in erotic senses, and switch those up.

this is the format I use the most because i am super lazy and unimaginative. items in [ ] are optional and can be anything, and I don't know how the word order matters - in old Midjourney it mattered quite a bit according to guides, but now they're all pushing to parse natural english I'm not so sure:

[number of] [body type] [age] [nationality] [male noun or job] wearing [clothes], with a [size, shape] belly, [hair description], [pose], [location, time of day, weather, lighting, era], [facial expression or attitude], [actions]

The number of guys can be vague like "several." Also placing a number here will generally result in all men being fat. To add a second, very different person (even women! imagine the power), simply describe that in plain english later in the prompt. Try adding "with friends" or something and seeing what happens.

Mentioning body type is separate from mentioning that he has a large stomach because "fat man" alone doesn't make him very fat. also, the body type prompt will dictate his physical build underneath the belly - this allows you to make mpreg very easily, for example. Mentioning his belly separately also seems to be a key part in making clothes not cover it up. However, DallE has clearly gotten much better at this for some clothes, but not all of them. Formalwear is improving, though tactical vests no longer do the cute thing they used to do, and football shirts still ride up reliably. Nationality can be weird, and you can use it to exploit stereotypes, or it can be an eye-opening view of stereotypes from countries you barely know about - want to know what differentiates an Angolan man from a Kenyan man? Probably don't trust AI results! I suspect some countries are controversial due to current politics, and I suspect some are controversial due to fetishy stereotyping. However, if for example "English man" got censored, consider going for capital cities or famous regions, eg "London man." Maybe look up sports teams from that country. I'm a big fan of the "Italian-American" prompt but lately it's gotten quite a few results blocked, so I'd switch to "New Jersey," maybe even "New Jersey Italian."

"Handsome" may slim your results down, or even break the prompt entirely. Consider making your men footballers or rugby players, mentioning trendy haircuts, or using out-of-date synonyms to get round it. AI isn't all that likely to give you especially ugly results anyway, particularly if you specify ages under 40. It doesn't get the hair precisely right, but even a generic prompt like "short thick hair" can help. Giving your character a job may dictate what he'll wear, but you might want to specify what clothes you want anyway. Don't mention either if you hope he'll turn out naked. Certain jobs are tricky to use, as AI strains to be as unpolitical as possible - it doesn't want you doing politicians and it sometimes seems to refuse anything that might make the police or military look bad. However, it will accept "wearing a [colour] uniform/pilot shirt" very happily, because it's duuuuumb.

Mention trousers, footwear or even just feet if your results keep zooming in too much. (It'll also zoom in if you mention too much about his face, I think.) Side view appears to make certain prompts fatter, but will often mean he's looking away - you can add "Looking at camera" if you want that. Metallic and plastic clothes can have very fun and weird results, especially if you change the location to a night setting in the rain. Gladiator costumes will reduce his clothes to a few leather straps.

"Flex pose" and "strong pose" will get butch bodybuilder poses (it will also buff up the muscle mass) and "battling strong winds" gets very superhero poses. At least when I was trying these out, I found I couldn't actually get proper bodybuilder poses or mention of superheroes past the censor, but it's been a few weeks so who knows what it's up to now. Give them all a go!

Casual poses and actions can liven things up a little if you just want portraits but don't want it to repeatedly be the same thing facing you directly. Getting out of a car, climbing stairs, leaning against things, adjusting his clothes or putting on a coat, all these kinds of things work. Smoking or drinking does quite a lot. "Tired" or "Exhausted" changes his attitude a lot too, your leans get leaned into more.

Contact words can be a little difficult, so consider ways to exploit using soft contact, or be very wordy and detailed about it so it's not misinterpreting you. "Patting him on the back" is a fairly safe phrase, but DallE isn't intelligent, so it will allow the contact but it will struggle to be precise, especially when the bodies are fat or not positioned in a way they can reach the back - the result of this is that there will be a lot of belly pats. Prodding in the stomach, pointing at the stomach, these both work, but I think DallE is vague about stomach=torso and you may want "pointing at his belt" to give a lower focus. Admiring can direct attention and vibes, whispering will draw their heads closer and make them interact somewhat. Embracing and hugging work but is very heavy for the censor, "hugging on his shoulder/belly" seems safer for some reason. Shaking, grabbing, "examining/concerned about his belly" can work. Bizarrely, squeezing past another man in a narrow corridor/doorway/cupboard works if you want a LOT of contact. And if you want unpredictable contact, fighting can work.

For more dynamic safe contact, try sporting actions. Baseball slides, football tackles, that kind of thing. It's hard to get them to lie flat and the AI seems to resist allowing heads to touch the ground, but "lying in a hammock" works pretty well, and sometimes specifying what the head is touching works.

Pretty much every minor prompt variation and scenario I've ever used:

"falling onto a broken chair/breaking an object with his weight" "washing windows" "with waiters helping him up" "with friends bringing him food" "falling over another man" "outside of a skyscraper washing windows, harness for safety, hoisted" "hyper-obese man wearing denim dungarees with an enormous inflated belly, drinking from a hose" ("blowing into a hose" gets better expressions for that IMO) "stuck in a broken narrow red british phonebooth with another man, bursting out with his enormous belly, black trousers" "bent over eating at a pie eating contest wearing a dirty white tank top with an enormous round belly and his face hidden buried in messy pie" "sitting on a throne next to a very fat 35 year old spanish monarch" "lying on his back the floor, enjoying a banquet, side view, tired expression" "very fat 35 year old handsome british man wearing tracksuit and gold chain with a hugely distended beerbelly, man with a massive round stomach, washing his car in a carpark at night side view" "at water park, stuck in a water slide" "before and after weightloss picture, in the left he is X and in the right he is Y" "with a large round belly spilled over eating at a banquet with an enormous round belly, bronzed, with waiters helping him up/being prodded with a fork" "washing dishes and leaning over his belly on a freestanding enamel pedestal basin" "climbing and leaning against a stepladder to change a lightbulb on the ceiling [with friend holding the stepladder steady]" "side view, photo of two 40 year old beefy handsome fat italian-american rugby player with a hugely distended round belly, resting hand on his chest, wearing a tracksuit with a gigantic round sagging stomach, gold chain, raining, whispering in a car park at night, leaning/hugging on shoulder, tired, stern expression looking at camera, smoking a cigarette" "side view photo of two strong 40 year old handsome samoan rugby player with a hugely distended round beerbelly, chest hair, wearing a white formal shirt and black suit, hugging on his belly, proud expectant father, boyfriends outside a busy pub at night, stern, looking at camera, raining" "two fat los angeles rams handsome footballers wearing white pilot shirt and plain tie and black trousers pushing through a narrow saloon door with their enormously distended beerbellies, stern" "photo of very fat 30 year old hunk rugby player with enormously distended belly, carrying his belly in a wheelbarrow" "very fat 35 year old man wearing white pilot shirt with an enormous round belly, tough man with a very large beerbelly, too fat for small broken airplane seat sitting on another man, fat belly spilling over armrest and pressing against over man, black trousers, slightly concerned, suave" "being carried on the back of a flatbed truck" can turn them into horrific lardvalanches but you don't get much control over it

original characters do not steal prompts: "30 year old man who looks like he's the main character from the game Uncharted with an enormous distended round beerbelly, with one hand on a bar in a pub, nathan" This is sometimes surprisingly effective, but most often it'll simply draw vibes from the IP mentioned, so you can use it to get specific settings at least

Try spelling the names wrong or reversing the name order - sometimes it'll even accept names sprinkled throughout the prompt. Repeating the name may increase its effect (it might also not!) Also it's speculated that placing the celebrity fraud in a place or situation they would normally be found in helps. That said, I could only get a Robert Downey Jr if I made him dress as a gladiator. So maybe weirdness and ingenuity are your strengths. see also https://www.tumblr.com/baron-bear/731903035856584704/what-do-you-use-for-your-ai-stuff


witness me trying to cull the regene related asks down to the useful ones by summarizing any new info i found lol. avoided this one so long cos there were so many of them ;___; list ahoy under the cut

Regenes are given codes and codenames (08 may 2018)

regene rights (09 may 2018)

industrial tattoos (03 june 2018)

blugenes colourrange (16 aug 2018)

regenes get older + organic bodies (20 sept 2018)

cyborg definition (10 nov 2018)

ai minds clarification, genitech can't build minds (11 nov 2018)

regenes have genitals (12 nov 2018)

regene making process (12 nov 2018)

emp shielding AI chips (12 nov 2018)

regene enhanced power classes (15 nov 2018)

regenes have unique faces (21 nov 2018)

periods (25 nov 2018)

regene numbers/nicknames (26 nov 2018)

development in other countries? (27 nov 2018)

regene making process (1-3 years) (03 dec 2018)

reconditioning (13 dec 2018)

organic? transplants patents etc (15 dec 2018)

general regene literacy training, handlers (15 dec 2018)

regene culture nicknames (15 dec 2018)

install regene ai chips in humans? (18 dec 2018)

teenager decant (18 dec 2018)

lifespan? kia too early to test (22 dec 2018)

specific decanting teenage range 14-15 (22 dec 2018)

regene bonding (25 dec 2018)

regene vulcan culture (25 dec 2018)

organic brains but need ai chip (28 dec 2018)

pain (28 dec 2018)

no chip swapping between regenes (28 dec 2018)

higher calorie intake than humans (boost-related) (7 jan 2019)

regenes can get sick (08 jan 2019)

tattoo design styles are the same, patterns and colours differ (10 jan 2019)

tat barcodes (10 jan 2019)

why not make them identical? (17 jan 2019)

programmed life skills (21 jan 2019)

few hundred regenes (01 feb 2019)

nails eyelashes nails grow (04 feb 2019)

lifespan studies? (11 feb 2019)

viability > "minor imperfections" (16 feb 2019)

hacking/captcha pass (28 march 2019)

lowlight vision regene eyeshine (27 apr 2019)

healing others (more a boost q) (03 may 2019)

regenes have fingerprints/sometimes removed (04 may 2019)

step's batchmates (05 may 2019)

bellybuttons (16 may 2019)

overwhelmed etsy store production (01 jun 2019)

regene book 3 topic (21 jun 2019)

regene death, avo'd (21 jun 2019)

androids? (02 jul 2019)

family units/parent regenes? (15 jul 2019)

sib relationships (any relationships outside teams discouraged) (15 jul 2019)

cuckoos and human mission working (unaware) (15 jul 2019)

cuckoo solo operatives (15 jul 2019)

can have extra organs/limbs (presumably via boosting) (21 jul 2019)

seeded with boost drug in tube stage (01 aug 2019)

ai chips counteract the vegetable brain growth speed issue (01 aug 2019)

cancer, boost drug casualties (01 aug 2019)

ranger interactions (01 aug 2019)

high maintenance (01 aug 2019)

control mechanisms (15 aug 2019)

rebirth regene team age/older ones trusted on US soil (18 aug 2019)

thought-voids feeling (24 aug 2019)

sex and pronouns (16 sept 2019)

boost-related miscarriage/procedures to prevent procreation (30 sept 2019)

fully compatible w/ human germs/disease (29 oct 2019)

power stacking (30 oct 2019)

training templates (25 nov 2019)

handler knowledge of sibling bonds? (25 nov 2019)

codename designated by handlers (25 nov 2019)

handler favourites (25 nov 2019)

mod augmenting powers (25 nov 2019)

regene:handler ratio (avo'd) (25 nov 2019)

selfawareness/autonomy (25 nov 2019)

reggies. (25 nov 2019)

legal classification (03 dec 2019)

finch giving step psych meds? (avo'd) (05 dec 2019)

10% survival -> 10% useful (1%) (08 dec 2019)

regene power scale? (10 dec 2019)

regene v rangers: step's opinion (10 dec 2019)

friendly regenes characters? (11 dec 2019)

tattoo process (14 dec 2019)

brain chip size (18 dec 2019)

tracking chips (19 dec 2019)

on regene vs human boosts (22 dec 2019)

farm makes all kinds of regenes (27 dec 2019)

some premature aging (30 dec 2020)

double dipping (05 jan 2020)

aiming for more reliable process, some progress but still a crapshoot (06 jan 2020)

bellybuttons (placental organ) birthmarks (20 jan 2020)

power maturity/corrections/ai chip surgical insertion at neck #VERY USEFUL (31 jan 2020)

ai chip can't be removed (or....?) (31 jan 2020)

kill switch standard issue (31 jan 2020)

decanting/training/etc process timeline stages (31 jan 2020)

"supposedly sterile" (04 feb 2020) (contradictory?)

rebirth regene (farm not above doing frankenstein arms but mods easier. um.) (15 feb 2020)

powers important for viability >>> minor "defects" (18 feb 2020)

research centres (20 feb 2020)

tattoo meanings? weren't codified at the time (20 feb 2020)

vaccines for abroad deployment!!! + germ susceptibility (23 feb 2020)

morality is for handlers (28 feb 2020)

regenes sweat (11 apr 2020)

chip reboot (16 apr 2020)


For the character ask game - Haru (appmon) 1, 2, 11, 12, 23!

HAAAAAAAARU! THANK YOUUUUU~! <3

The character ask game is here!

1. My first impression of them

Honestly my first impression (from seeing promo images and such posted on this very website!) was something like “Whoa. Clashing colors much?!” So I guess you could say it was not the best 😂

Obviously I got over that, but I did learn recently that trying to apply his color scheme to any other character is still quite a shock! ROTFL Click for proof if you dare!

The rest is cut! For! Spoilers!!!

2. When I think I truly started to like them (or dislike them, if you've sent me a character I don't like)

The first time I watched Appmon, I felt like basically the whole first half of the series was a bit of a crapshoot, quality-wise (not in terms of the animation or anything, but how I felt about it). Like, if you tried to graph my enjoyment of each episode right after I watched it, it would look like a jagged mountain range. The first episode was ok, the second episode was “eh,” the third and fourth episodes were pretty good, the fifth was back to “eh,” etc. I don’t know, pacing and tone and kind of unsympathetic character introductions and it just being “different” were all things that were working against it at that point in my mind, and made me wonder what the writers were really trying to do (honestly, what REALLY sold me on watching Appmon at all was @firstagent’s pitch at a con, which explicitly plugged Offmon and Yuujin’s dynamic!).

(I should say here that despite those rough beginnings, Appmon is probably my favorite Digimon series now, so yeah I take time to warm up to things sometimes but the right combination of factors makes me fall and fall HARD)

All that said, I know exactly when I really started to like Haru, and it was during the third episode (the dungeon one with Roleplaymon). He’s just so excited and happy throughout, while also being a complete nerd, and instead of a big Appmon fight at the end (well, being unable to have one due to an evolution whoopsie), he just talks to Roleplaymon… and it works. That felt kind of revolutionary, and it was the episode that I started to not only understand his character, but (maybe) gain a bigger picture view of the series and what the show was going for. This episode just crystallized a lot of things for me.

Me after episode 3: Haru is kind. Oh, and also he’s my son now, I want to see him grow up strong, I WILL keep watching FOR HIM actually (best decision ever!!) 💖

11. What’s the first thing you think about when thinking about the character?

Joke answer: “Do I need to be working on the blog queue right now, or can I procrastinate a few more days?” 🤔

Real answer: No thoughts anymore, just feelings (rotfl). After watching every episode in order to look at every single shot of Haru and find the very best frames, I can still say definitively that he’s so cute and I love him and all Harus are good Harus 🥺🥺🥺

12. Sexuality hc!

Bisexual! And uh… one specific android-sexual? You cannot deny his crush on Ai, and Haru/Yuujin is so good. They’re one of my OTPs, they’re soulmates (by some definition of the word “soul”), they were made for (and made by) each other, they’re actually making eyes at each other from across the room in my head right now 🤫

23. Future headcanon

Oh, he’s definitely going to be an AI researcher, that much I believe! One who is very literature- and ethics-minded, and thinks a lot about what “existence” means, and is hyper-aware of beings’ rights and the need to advocate for both humans AND AI, in a world where both appmon and general artificial intelligences actually exist. These beliefs are the cornerstone of his work, regardless of what he actually does with AI. Would he follow in his Grandpa’s footsteps in being an academic designing his own? Maybe borrow a page from Koushiro Izumi’s book and start a company? Or go the Susan Calvin route and become an AI psychologist? Any! All! Idk 🤣

Honestly, so much else about specific future headcanons depends on how you interpret the very last moments of the series (literal or symbolic), and for me it could go either way. I really like interpreting it as literal, if only because it’s such a great hook into the nebulous Appmon Season 2 that exists in my head. Yuujin’s back, but how? Cue mystery and more Appmon shenanigans.

Regardless, I’m most interested in the future where Yuujin comes back in some form at some point during Haru’s lifetime, and no matter how, when, or why that happens it’s going to be a joyous occasion, but also a bit of a rough transition. Yuujin may have changed. Haru may have changed. There would be a LOT of anxiety about “is this ok?” and “how do you feel?” and “what does it MEAN that this is how you feel?” and “what does ANY of this mean for us as individuals, and us together?” because it’s all very complicated when you bring the android that was programmed to be your best friend and your ideal version of a person back to life. Is there even a happy ending out there for them? Again, for me it could go either way.

So yeah, a bit rambly, because to me there is no one answer I could ever 100% decide on. They’re all possible. And that’s kind of the great thing about the vagueness of the ending! I will say I like drawing them in happy-ending mode best though, even though I would probably write their future in a way that involves more drama, because it’s way more interesting, and nothing in life is ever that easy (I’m rooting for them though!).

Again, thanks so much for the ask!!

#asks#julbilines#appmon#appli monsters#appmon spoilers#haru shinkai#green-haired cutie pie#<- my original tag for him hehehe#the cactus runs a sideblog


What is the hardest game you have ever played?

Mmmmm. I'm not really good at video games despite liking them, so I tend to avoid games that are too difficult.

...Probably Unleashed? It took me about nine hours to beat Perfect Dark Gaia (my thumbs say never again) and I still get flashbacks to Eggmanland. They were really out for blood with that one.

Actually NO I JUST REMEMBERED SONIC SHUFFLE

SONIC

SHUFFLE

LET ME EXPLAIN TO YOU THE NINTH RUNG OF HELL THAT IS SONIC SHUFFLE

So Shuffle is a Hudson-developed party game much like Mario Party, right? Except this game actively fucking hates you, will not give you even a snowball's chance, and I am not kidding, the curbstomp begins immediately out of the gate. Like. It is the most brutal fucking experience. You cannot play this game just to get your bearings, or you will get your ass whooped around the world and back. I had to restart the board three times just to get the slightest edge over the computer and that was on Easy.

The way the game works is that you and the other players are given a hand of cards, which determines the number of spaces you move across the board. When you use up your hand, you must then pick the cards in the others' decks, which you are not allowed to see. This makes strategizing a huge pain in the ass and largely a crapshoot because you don't know which cards your opponents have.

In order to get Precious Stones, you need to defeat enemies. You pick cards to determine whether your character has a chance at defeating them, because then a die is rolled by the computer. It's entirely possible to pick a 6 against an enemy that picks a 2 and still lose to them anyway because the die rolled a 1 for you. You cannot practice minigames, so you're stumbling around trying to figure out these obscure-ass mechanics. Because the AI was programmed to win like 3 times out of 4 even on Easy, your best bet is literally to pray the computer doesn't fuck you over too hard. Even strategy guides on Reddit are like "yeah just hope the RNG isn't too brutal" like my dude Shuffle revealed to me the darkness that lurks in my heart, and then punished me for my sins.

Part of the first board lies submerged underwater. That is to say, you can drown if you stay underwater for too long unless you step on a Bubble Space. I think it's like, five turns underwater? That might seem generous but it's really not.

Well. Me being the shameless opportunist I am, I decided to try to get a leg up on Tails by forcing him to play a 1-turn card and drowning him.

Guess what the game fucking did that very next turn?

MADE ME SWITCH PLACES WITH HIM.

It can't be stressed enough how much the game hates you. It will hand out wins to the other characters left and right, but make you toil for your Precious Stones. I am not kidding, you are always at a disadvantage and can never count on the computer being in your favor ever. E v e r. Where the others got awarded extra rings on luck-based events, I got my ass knocked out for three turns.

I wasted three hours on the first board on Easy and lost to fucking Amy simply because she earned more rings and I swear to God I turned off the Dreamcast more defeated than I had ever felt in my entire life lol


Source: Animation By Adam Ferriss

Silicon Valley Wants To Help Me Make A Superbaby. Should I Let It?

High-Powered Couples Are Turning To Genetic-Testing Startups To Help “Design” Their Offspring. I Decided To Take The First Steps Myself.

— By Margaux MacColl | June 01, 2025

Most people haven’t met a superbaby. I have.

Last year, I attended a cocktail hour at a venture capitalist’s Presidio mansion, filled with founders from his firm’s portfolio companies. As the entrepreneurs lounged on couches, sipping craft cocktails and pitching their wealth management software and AI security systems, conversation suddenly sputtered. The investor’s wife, a startup founder herself, descended the grand white staircase, cradling the night’s real headliner.

“Here’s My SuperrrrrBabyyy,” the investor cooed, taking the cherubic newborn from his wife’s arms.

The couple had told me weeks earlier that they had given birth to the superbaby using in vitro fertilization and a surrogate. They had screened their embryos with Orchid, a genetic testing startup that charges upward of $2,500 per embryo to test for polygenic conditions — complex diseases caused by the combined effect of many genes, like bipolar disorder or Alzheimer’s. Though the superbaby drooled and babbled like a regular infant, he was as genetically optimized as current science will allow.

Polygenic testing startups are Silicon Valley at its most audacious: promising to make future generations healthier and smarter, while inviting deep controversy over the soundness of the science and its potential for harm. Studies have shown that assigning “risk scores” for polygenic diseases is still a crapshoot, the results “random” and “inconsistent,” while critics claim they over-rely on data from people of European descent and offer parents a dangerous illusion of control. Hank Greely, a bioethics and law professor at Stanford University who taught Orchid founder Noor Siddiqui, said polygenic risk scores are “unproven, unprovable, unclear,” and that couples who have used them to select embryos have “wasted money.” He told me his former student Siddiqui was “very smart” but had likely “gotten ahead of her skis.”

In spite of widespread doubts among scientists, over the last five years, tech heavyweights like Anne Wojcicki, Sam Altman, Vitalik Buterin, Elad Gil, and Alexis Ohanian have poured millions into the direct-to-consumer polygenic testing startups Orchid, Nucleus, and Genomic Prediction. It’s a bet on a radical future, one in which, for a few thousand bucks, a biotech company can screen your embryos, comb through your DNA, and assign you and your future child odds on everything from developing a drug addiction to becoming obese. Some even estimate your unborn’s IQ.

Unlike most genetic tests, which screen for single-gene diseases like Tay-Sachs or cystic fibrosis, Orchid — which has rapidly become the go-to testing service for Silicon Valley elites — scans embryos for polygenic diseases like type 2 diabetes and inflammatory bowel disease. Orchid sends parents an online report that estimates the genetic risk of each embryo developing these illnesses.

Parents are left to decide: Which risks can we live with? Which embryos are worth implanting? And which tests are accurate?

Given the celebrities who have vocally lined up behind these services, it’s easy to be skeptical. Elon Musk reportedly used Orchid for at least one of his children. Michael Phelps and longevity extremist Bryan Johnson have both endorsed Nucleus. “Orchid is a categorical change,” Coinbase CEO Brian Armstrong, an investor, said in a quote on the company’s website. “It’s a step towards where we need to go in medicine — away from managing chronic disease, and towards anticipating and preventing it.”

In the weeks and months after the VC’s house party, I couldn’t stop thinking about their gene-curated superbaby. How many parents in San Francisco would happily experiment with the genetic disposition of their future children? Would these people be willing to hand-select “healthy” embryos even if there wasn’t any regulatory oversight of genetic testing services? And what if the tests weren’t even accurate?

Then, in March, I learned that the genome sequencing startup Nucleus was launching a feature for couples planning to have kids. I reached out to Kian Sadeghi, the 25-year-old founder of Nucleus, who agreed to waive the $798 fee so The Standard could try their initial service, testing an individual’s DNA for polygenic risk. (I later learned that the polygenic family-planning service wasn’t test-ready yet.)

I proposed the plan to my husband: In the name of journalism and science, let’s hand over our DNA to an untested startup and see what Silicon Valley can tell us about our someday children. He reluctantly agreed.

‘A Total Black Box’

To understand the murky, revolutionary, and unregulated state of genetic research in the U.S., you have to start in 2003, when the Human Genome Project finished most of its mapping.

Before that, genetic testing was mostly used to search for a single gene mutation known to cause specific diseases (also known as monogenic diseases), like the mutated gene that causes Huntington’s. But with the project’s map of the human genome combined with new sequencing technologies, scientists could conduct massive studies that analyze the DNA of millions of people.

Around 2007, scientists started combing through databases of DNA to identify variants — differences in a DNA sequence — that kept showing up in people with illnesses like schizophrenia and coronary artery disease. Based on how many of these variants a person had, the scientists could assign them a “polygenic risk score” that estimated the genetic risk for the disease.
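
For anyone wondering what a polygenic risk score looks like mechanically: in its common textbook form, it isn't a plain count of risky variants but a weighted sum. Each variant contributes (the number of copies of the risk allele a person carries, from 0 to 2) multiplied by (an effect size estimated from a large study). The Python sketch below illustrates only that idea. The variant IDs, the weights, and the `polygenic_risk_score` helper are all hypothetical. Neither Nucleus nor Orchid has published its model, and Nucleus says its scores also fold in family history and lifestyle.

```python
# Minimal sketch of a polygenic risk score as a weighted sum.
# All variant IDs and weights below are hypothetical, for illustration only.


def polygenic_risk_score(dosages: dict[str, int], weights: dict[str, float]) -> float:
    """Sum of (risk-allele count x per-allele weight) over the scored variants.

    dosages: variant ID -> copies of the risk allele the person carries (0, 1, or 2)
    weights: variant ID -> per-allele effect size (e.g., a log odds ratio from a study)
    Variants missing from the person's data are simply skipped in this sketch;
    real pipelines handle missing genotypes more carefully.
    """
    score = 0.0
    for variant, weight in weights.items():
        count = dosages.get(variant)
        if count is None:
            continue  # no genotype for this variant
        if count not in (0, 1, 2):
            raise ValueError(f"{variant}: allele count must be 0, 1, or 2, got {count}")
        score += count * weight
    return score


# Hypothetical weights: variant ID -> effect size (negative = protective)
WEIGHTS = {"var_a": 0.12, "var_b": 0.05, "var_c": -0.03, "var_d": 0.20}

# Two hypothetical people
person_1 = {"var_a": 2, "var_b": 1, "var_c": 0, "var_d": 1}
person_2 = {"var_a": 0, "var_b": 0, "var_c": 2, "var_d": 0}

if __name__ == "__main__":
    print(round(polygenic_risk_score(person_1, WEIGHTS), 3))
    print(round(polygenic_risk_score(person_2, WEIGHTS), 3))
```

The output is a raw number, not a percentage. Turning it into something like "53.8%" takes a further calibration step against a reference population, which is one more place where two services using different variant lists, weights, or reference data could plausibly reach very different answers for the same person.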

Since then, scientists have created thousands of polygenic risk scores for other diseases, including type 2 diabetes, breast cancer, and bipolar disorder. A 2018 study found that, in some cases, these scores were nearly as effective at predicting disease as monogenic testing. A high polygenic risk score doesn’t mean you’ll certainly get that disease — environment and lifestyle play major roles — but it does mean that your DNA may put you at higher risk.

Yet the scores remain extremely controversial. Some scientists argue that it will take years before we know with certainty whether these scores can predict disease across a lifetime. One 2023 study from University College London researchers looked at more than 900 polygenic risk scores and found that the tests correctly identified only about 11% of the people who would develop the diseases.

“It is not always transparent how companies are calculating these scores. They claim to have a proprietary algorithm, which, in reality, is a total black box,” Jacob Sherkow, a law professor at the University of Illinois Urbana-Champaign who specializes in bioethics, told the university publication. “If they are not completely accurate, consumers may make adverse health choices on the basis of misinformation.”

Because of the scientific uncertainty, most doctors choose not to test patients’ DNA for polygenic risk scores. But that hasn’t stopped venture-backed startups from putting these scores directly into the hands of consumers. Orchid publicly launched its embryo testing service in late 2023, while Nucleus began whole-genome analysis in 2024. As Nucleus founder Sadeghi told me, doctors have historically treated the patient “like a baby” when it came to extensive genetic testing; these startups would entrust people with their own genetic information.

Despite my own worries about society sliding down a slippery slope to eugenics, I was still open to the possibilities of genetic testing. My husband and I are both 27, and our plan has always been to have kids at 30. If we wanted to take the first step toward birthing our own superbaby, we needed to start soon.

My husband, Connor, is a devout Catholic with a beautiful tendency to see every decision through a capital-M moral lens. For him, the idea of using Orchid to determine our baby’s genetics was a severe moral hazard. “This seems like a path toward having no people with Down syndrome, having no people with certain disabilities, even though there are people that have those disabilities that live fulfilling lives,” he said. “You’re talking about culling a tree of human evolution, right?”

However, there are some genetic realities that Connor and I must face. For one, his mother has multiple sclerosis, a debilitating disorder caused by a complicated combination of genetics and environmental factors. Meanwhile, my family has its Irish American streak of alcoholism, and my grandmother suffered from schizophrenia.

Though he wasn’t wild about submitting his DNA to Nucleus, Connor finally acquiesced just in time for the testing kit to arrive. The process was simple: We filled out a brief survey on our families’ medical histories, then swabbed the inside of our cheeks. We put our DNA samples into the vials and sent them off to a lab that would sequence our genomes.

After the lab processed our DNA, Nucleus would take the data and run it through its algorithms, searching our genetic codes for variants linked to diseases to determine our polygenic risk scores. This is only a fraction of what the company does: it also tests for monogenic conditions and particularly high-impact variants, and it incorporates factors like lifestyle and family history into its risk scores.

Six weeks later, an email from Nucleus arrived: Our results were ready. Sitting in my living room, I hovered my computer mouse over the link. It was like opening a Pandora’s box of ancestral curses. Connor’s mom feared that his results would announce, in flashing red text, that he had inherited her MS.

I was most afraid of what would happen next: If we both scored high-risk for something, what would we decide to do about it when I was ready to get pregnant?

‘Simulate Your Children’

What I did not expect was for my DNA results to look like a Spotify Wrapped. On a yellow background with white text, a Canva-esque slideshow informed me that my risk of type 2 diabetes is a whopping 53.8% — three times the average. I also have an above-average likelihood of developing celiac disease, coronary artery disease, and obsessive-compulsive disorder. I immediately envisioned my flailing future self: diabetic, gluten-free, clutching my chest mid heart attack.

Meanwhile, the test ruled that Connor also had a higher-than-average risk of coronary artery disease and OCD. “At least we’ll have heart attacks together,” he shrugged.

Then, relief: He doesn’t carry the variants most associated with MS. Years of buzzing, restless anxiety briefly stilled.

Nucleus plans to roll out a “genetics-syncing” service for couples that will allow it to “simulate your children” by weighing both parents’ risk scores and predicting what it means for offspring. But that feature isn’t available yet, so I turned to Orchid for a similar test. I emailed Siddiqui, the company’s founder, and asked if she could look over our DNA and tell me something — anything — about what life might have in store for our kids.

She agreed, so I downloaded the raw, unanalyzed genetic data from Nucleus (134 gigabytes) and sent them to Orchid. A few days later, I got an email that our results were ready.

Orchid offers every customer the chance to meet with a genetic counselor, who jumps on a Zoom call with couples as they open their results. (The company did this process for The Standard for free; normally, the pre-IVF family planning feature costs $995.) As I peered at the report with Orchid’s lead genetic counselor, Maria Katz, looking on, I gasped.

“I just don’t understand,” I said. “To me, this feels shocking.”

Both Nucleus and Orchid use proprietary models to assign their risk scores (and Nucleus incorporates more factors into its scores), so I expected the results to be slightly different. But unlike Nucleus’ report, Orchid’s claimed that, based on my genetics, I had a below-average shot at developing type 2 diabetes (23%, versus Nucleus’ claim of 53.8%). And while Nucleus gave me an 8.6% risk score for breast cancer, Orchid insisted my risk score was over three times higher. Several scores for my husband were dramatically different, too: Nucleus told him he had a 65.6% risk of coronary artery disease; Orchid said 26%.

Katz assured me that my confusion (cascading into anger) was “very fair.” But before we dove into the difference in scores, she wanted to show me Connor’s and my simulated embryo report. Still sharing her screen, Katz opened the Orchid patient portal and moused over to 12 line graphs, one for each polygenic risk score.

Our genes, when simulated together, were, in a word, grim. Our hypothetical child was expected to have a genetic risk of Class III obesity of anywhere from 11% to 25%, compared with the average of 9.2%. If we have a daughter, her inherited genes will give her a 14% to 28% chance of breast cancer. The average is 13%. And I haven’t done our child any favors in the schizophrenia department: The average risk score is 0.9%, but our child would likely have a score from 1% to 2.2%.

In fact, there are only three illnesses that our future kid would likely have a lower-than-average genetic chance of developing: celiac disease, type 1 diabetes, and type 2 diabetes.

The author's individual test results from Nucleus

When I was growing up, my father admitted to being afraid that my sister and I would abruptly untether from reality, unwitting victims of his genetics. This is what happened to his mother, who suffered from schizophrenia. But with the promise of this new technology, I could pick an embryo with the smallest chance of developing this damaging disorder. I imagined the peace of mind that comes from holding your own superbaby, knowing you did everything in your power to protect it.

But my reverie lasted only a moment, because the results I received from Nucleus and Orchid were so different. What was the point of these scores if they varied so much from company to company? What if the investor and his wife from that Presidio cocktail party had used Nucleus instead of Orchid to select their embryo? Would they have ended up with a completely different superbaby?

“I can’t believe Silicon Valley is allowed to do shit like this,” my husband said when I showed him the divergent scores. “I mean, I can believe it. But come on.”

The author's individual test results from Orchid

‘A Silicon Valley Guinea Pig’

I spent the next few days on the phone with Nucleus and Orchid trying to answer a simple question: Which company should I believe?

Katz, the counselor at Orchid, pointed out that, for many of the medical conditions, the company tested for significantly more variants. For type 2 diabetes, Orchid tested for more than 1.1 million variants; Nucleus, just 171,249. “I would say that I’m not sure if [Nucleus is] providing meaningful scores,” she said.

But the number of variants alone doesn’t guarantee accuracy. Jerry Lanchbury, a geneticist who has advised both Orchid and Nucleus, told me that in most polygenic models, the first fistful of variants are weighted significantly more than the rest, meaning that testing more than a million variants may not meaningfully improve predictions.

Even if the companies technically tested the same number of variants, they might have totally different datasets. Gabriel Lazaro-Munoz, a neuroscientist and bioethicist who conducts research on the societal impact of genomics, said that these datasets can skew towards certain races, or vary in size of samples — dramatically impacting an individual’s results from company to company.

The green bars show the estimated risk for the author and her husband's simulated children, via Orchid. Source: Margaux MacColl

Then I got on the phone with Nucleus founder Sadeghi. He was unbothered by the discrepancies in my results. He pointed out that, unlike Orchid, his company’s algorithm incorporates our family histories and lifestyles, expanding the polygenic risk score to an overall health score. My gender or body mass index could have raised my score. My husband is a former smoker, which could have increased his risk of coronary artery disease. “From what I can discover so far, they’re very explainable, right?” he reassured me.

But a week later, I got an email from Nucleus. My type 2 diabetes risk score had been adjusted, plummeting from more than 53% to 10.9%. My old score was derived from a 2018 study, the email informed me; the new one was based on a 2022 study and included 1.3 million variants — similar to what Orchid had used. My husband also got an email, saying Nucleus had lowered his risk of coronary artery disease.

The message assured us that our old scores “weren’t wrong,” but that “genetic science is always improving.”

Halle Marchese, Nucleus’s director of marketing, said in a statement that this was a planned update and that “the data we used to build the score improved and, hence, so did our analysis.”

But the timing was so convenient, I couldn’t help but wonder: if I hadn’t been a reporter cross-checking results across multiple services, would I still be working off a supposedly outdated score, going through life thinking I had a sky-high chance at type 2 diabetes? If I were a patient selecting an embryo based on a number derived from the 2018 study, there’d be no rewind button.

The main reason this behavior is allowed is that, when it comes to genetic testing, the U.S. is still the Wild West. While the U.K. and other countries have largely banned the use of polygenic risk scores in embryo selection, the Food and Drug Administration, the U.S.’s main medical enforcer, has largely chosen not to regulate companies that provide these services. This, despite the agency’s “mounting concern” that the services are misleading consumers, according to the National Human Genome Research Institute.

In late 2023, the FDA approved AvertD, a polygenic testing service that measures the risk of opioid addiction. But, for now, no other polygenic testing company is subject to FDA oversight. This means that every user, and every resulting superbaby, is a Silicon Valley guinea pig.

‘This Is Not 100% Pseudoscience’

After having our DNA analyzed twice, and seeing the wild variances in the results from different companies, my husband and I have reached a new agreement: When it comes time to grow our family, we won’t be having a superbaby.

At least not until the FDA begins to regulate the companies providing these opaque, inconsistent, and often unreliable services. For Connor, the process was morally validating. “The scores give the illusion of control,” he shrugged. “I’m just pissed that now two startups have all my genetic data.”

But I felt like I’d lost something. Because I’ve now met children born through this process, I wanted to believe in it — to think there’s something I could do to spare our future kids from MS or schizophrenia. I liked this particular illusion of control.

“What gets me the most is that this is not 100% pseudoscience,” I told Connor. “It is coming from picking up patterns in DNA. The patterns might not ultimately mean anything, but the patterns are there.”

Imagine, 10 years from now, when there’s more research and consensus around which scores are accurate. “And all the rich people have healthier, non-schizophrenic kids,” I said. “That’s not fair.”

He kissed me. “It’s not fair,” he said. “But I don’t really want to play god anyway.”

I sighed. “But I kinda do.”

#Business#Culture#Silicon Valley#Tech#Superbaby#Genetic-Testing#Genetic-Testing | Startups | Help | “Design” | Offspring#Margaux MacColl#“Here’s My SuperrrrrBabyyy”#The San Francisco Standard#SFStandard.Com

Text

Size Matters

The way we shop continues to evolve, but in some regards, it’s also a case of what goes around, comes around. Funny how that happens.

More than a century ago, if you lived out of town, you purchased pretty much everything from a catalog, from clothes to even your house. Sears and Montgomery Ward both sold “house kits” that, once delivered, had to be assembled much like an IKEA bookshelf. Just harder.

But even in more recent years, companies like Sears, JC Penney, and Montgomery Ward also issued an annual catalog chock full of the latest fashions. You had to make your size selection with fingers crossed. It was a crapshoot at best. And in a nod to nostalgia, J. Crew just announced they are reviving their catalog, seven years after retiring it.

Now consider e-commerce, which is basically just an online catalog. As much as people squirm and put up resistance to buying clothing online, they probably did it without question from a paper catalog. But resistance is resistance, and savvy marketers know they have to figure out a way to overcome it.

Which leads us to the topic of virtual try-on. That handy size chart you see a link away from any garment you’re pondering is evolving into interactive sizing video powered by AI. Google Shopping just announced it is expanding its virtual try-on from men’s and women’s tops to now include ladies’ dresses. Shoppers can select from real models of all shapes, sizes, and colors to get a better idea of how a garment will look. And since a dress necessarily has to fit in a lot more places than a shirt, there’s a lot more consumer hesitancy to overcome.

Interestingly, Google’s efforts lag substantially behind what Walmart has already done. Two years ago the company launched its version of an AI-powered look book, but with the added twist that shoppers could upload a photo of themself and then see the garment superimposed. Thus far, Walmart’s program is only available for women’s clothing.

Wait. Isn’t that just a bit creepy? I mean, at minimum you are sharing pics of yourself, but someone else could have twisted fun at your expense.

While I am dead certain I would pass if and when Walmart adds the feature in the Men’s department, I am good with Google’s version. After all, it still beats a static photo in a catalog or on a website. Photoshoots are expensive, models aren’t cheap, and if you’re trying to be size-inclusive, you have to show the garment on both big and small people. How much better it would be if we could just let AI do all of that modeling for us, right?

There will always be risk when buying clothes sight-unseen. Colors in print or on-screen may not be realistic, and can look vastly different against various skin tones. There is terrible inconsistency in sizing across brands, even within a brand if they outsource manufacturing across multiple providers. And then there’s the fact that you are uniquely you, with all of your own proportions. One size fits all? You gotta be kidding.

Neither Google nor Walmart is perfect…yet. While we may still be going through the motions of buying clothes from afar, just like 125 years ago, the shopping proposition has gotten better. Stir in some AI, and we’re getting much closer to a fitting room experience without actually having to do it.

I’m good with that, because as far as I’m concerned, that’s the last thing I want to be doing on a Saturday afternoon. Bring it, Google and Walmart. If it doesn’t fit, I’ll just return it.

Dr “Try This On For Size” Gerlich

Audio Blog

Text

Kingdom Hearts on Steam

So Kingdom Hearts is on Steam, or at least the 'Integrum Masterpiece Collection' of 3 game bundles: Kingdom Hearts 1.5 + 2.5 Remix, Kingdom Hearts 2.8 Final Chapter Prologue, and Kingdom Hearts 3 + Re:mind. The games have been available on PC before via the Epic Games store, but those versions have an online check-in DRM system that A) sucks, and 2) makes the games effectively unplayable on on-the-go handheld PCs like the Steam Deck. So far these seem to be high-quality PC ports: everything's running well, few to no weird bugs. Always a crapshoot on ports from Squeenix, but this seems to be one of the better ones.

As always, it's nice to have the games on PC, if for no other reason than it makes modding a lot easier, and some of these games really benefit from modding. My favorite use of mods so far is to restore the original color palettes to the enemies in KH 1 Final Mix.

A downside of the Steam versions, which is unfortunately getting patched back into the Epic versions, is that the textures of the older games have been AI-upscaled for 4K resolutions. This has a negligible impact on visual quality, especially when playing at a reasonable, god-fearing resolution anywhere between 720p and 1440p, and even when it is noticeable it isn't always a good thing, as random elements can sometimes stand out weirdly. But it has a dramatically non-negligible and very negative impact on the storage space taken by these games. KH 1.5 + 2.5 Remix is 3 PS2 games and a few extra cutscenes, yet takes up a whopping 72 gigs on your hard drive. It would be one thing if they let you pick between the original & upscaled textures when installing the game, but no.

The base prices for these games on Steam are, frankly, insane: fully 200 United States dollars to buy all three separately. Please don't do that. BUT the collection of all three together is discounted 50 percent AND both the individual games and the full collection are further discounted 30% through July 11, bringing the total for the bundle down to about US$70, which imo is just barely a fair price for this bundle of dearly beloved but honestly mostly kind of bad old games.

As for my brief (lol) thoughts on the individual games themselves... I was going to put summaries below a read more cut, but it all got too long so I'm breaking them into separate posts.

edit: Eh, coming out of the first game & into the others, the quality of the port isn't as good as i first thought. some framerate issues, some slowdown & audio/video desync, esp in the cutscenes. tinkering with framerate limits & vsync, &, on steamdeck, turning on half rate shading has helped. still doesn't feel bad at all, as such things go, but still not as good a port as I let on above.

Text

Oh, I’m judging you. And since you tagged me directly I guess you wanna hear more.

(Cut for everybody else’s sake cause all my mutuals already hate Large Language Model Generative AI.)

ChatGPT doesn’t “put it together better”; it’s a crapshoot whether it spits out anything coherent at all. It could tell you Lucy Lawless herself can fly because of that episode of the Simpsons, and factor that into the modern-day depictions of women in media.

LLM AI doesn’t think. It puts together patterns without any real reasoning. By asking it to think for you, you degrade your own ability to think critically about why depictions of queer people and strong female characters in media aren’t getting much better, why representation is so often a one step forward two steps back thing, and so on and so forth. It’s something I think about a lot. Probably too much.

But I digress.

The real problem here, as with all LLM AI, is that the processing power needed to store all the scraped data (so you can ask about media after Xena, remember) is fucking destroying the environment along with the communities near the massive data centers needed to make it spit out Frankensteined information in seconds.

But if you don’t care about that, there’s nothing more I can say to change your mind.

Circling back to your stop crying tag, it does make me sad, because you could write your own analysis on a show and cultural phenomenon you’re clearly passionate about, and it’d be fantastic by sheer virtue of the fact that you made the fucking effort.

You could still do that one day, if you ever find the time.

Anyway, if nothing else this reminded me I haven’t re-watched Xena in a while. Gonna go do that now. ✌️

I ask chatgpt because it's easier duh!

All chatgpt does is find the articles and put it together better. @catoperated

I could put this together myself, I just didn't have the time.

Note

kari there is an ai that can generate images from any prompt...

I typed in the names of the baby riders

I just can't. lol!!!

Proof that AI is still a crapshoot

Text

since it’s a hot topic right now, here are my ai art opinions, even though nobody asked me. i should preface this by saying i’m not an ai engineer or a computer ethicist, but i am an artist!

i like ai art. i think it can produce some pretty cool stuff. publicly available ai programs are powerful nowadays, and it’s cool that anyone with a computer has the ability to make solidly interesting art using ai as a tool. it’s also not a complete crapshoot. in order to make consistently good ai art, you have to practice and know your way around the programs you’re using. i make ai art pretty frequently, usually using artbreeder. it’s fun!

but i think there are some ethical problems. ai and neural networks work because they’ve been fed gigabytes of information to teach them what the images they produce are supposed to look like. if a neural network creates photorealistic portraits, like thispersondoesnotexist, then it was fed thousands or even millions of portraits photographed by actual humans so it could learn what a human face is. in this way, generating something using ai is like a group project whose members are you, a computer, and the countless people who produced the source data. your ai generated song is a collaboration between you and thousands of other artists.

the problem here is pretty obvious. for those open about their art being ai generated, there’s no real issue, at least not on this front (there are other ethics-related problems in the ai world that i’m not going to get into here). but someone claiming they alone created their ai generated art is almost certainly lying. if they are using someone else’s program trained on other people’s work, it’s not a solo venture.

all things considered, i think it’s best to treat ai art like commissioning an artist. you give the artist a prompt and some pointers, you’re definitely involved in the process, and you own the finished piece. but it wasn’t you who did the brunt of the work, and claiming that you created art you commissioned is a huge faux pas in artistic spaces. it should be the same with ai. post it online, maybe even submit it to competitions if it’s allowed, but don’t claim it’s your original work.

the lines get blurrier when you edit a piece of ai art, which is what i tend to do, or you produce something inspired by an ai generation (using a simple generated melody to construct an entire song, completely repainting a generated image, making collages out of generated photos), but i think it’s still important to give credit to the ai program in these situations.

unless you trained the program yourself solely on work that only you created, your art is directly derived from other people’s. all art is derivative! but this level of derivation is historically unprecedented. this is new territory. we need new guidelines.

hopefully within the next few years etiquette regarding ai work in the art community will develop. hopefully in five years it will be very frowned upon to pass off ai work as your own. but we need to start being critical now so that these changes actually happen. if all we do is panic about how ai programs will replace flesh and blood artists, then we won’t be doing anything to stop that from eventually happening. ai isn’t the end of the world unless we let it be!

Text

How to skate by: a guide for the weaker decks

I’ve seen no shortage of posts of people complaining about being behind in lessons and how everyone else is forging ahead. For some of you, I’m afraid I cannot help. For most of you, I suspect I can by offering a few helpful tips that have worked out for me.

This guide is for the standard battles only. The boss battles are far more complicated and difficult.

For context: I have only three URs and I’ve been playing since March. Two of them are UR+’s, but two of them are Belphie, so there’s never a situation where I can actually deploy all three. Rather than maxing them out at the earliest opportunity, I’ve spent my time leveling up three SSRs for every sin to level 70, though two of my slots are usually the URs. You’re probably better off than I am team-wise if you have three maxed URs with three different brothers at your disposal. But, well, until I get that third one this is what I’m compelled to do.

First and foremost, you have to be comfortable with just getting one star until you can start knocking them out of the park. I have only one two-star result, back in lesson 38. Since all you want to do is get to the story this shouldn’t be a problem, but unless you get particularly lucky you shouldn’t expect Special Guest to pop up very often. Sad! Though they actually did pop up for me half the time in this particular lesson, strangely enough. And of course I forgot how to poke the brothers when they don’t dance well and it all went to waste. (:

Furthermore, you will need glowsticks ready for the particularly nasty dance offs. You just won’t need as many as you may think, at a glance.

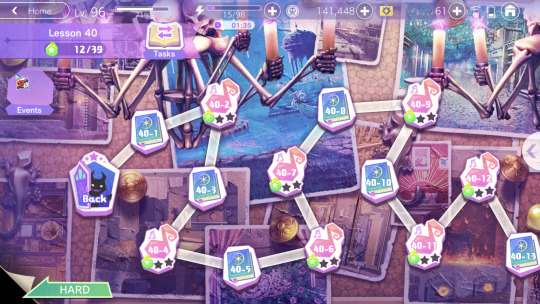

As you can see here in lesson 40-9, the enemy team has a 40k+ point advantage over my team. I don’t think they’re lying to you: the enemy team ai is just typically dumb and predictable, and if you know how it’s dumb and predictable, you can use that to your advantage to make this gap irrelevant. I don’t think they factor in the amount of heart points you can nab, either.

That Asmo card is also common/old af and has a useless skill, but it still consistently shows up in my recommended deck, so idgaf

As a general rule, I think enemy teams that are 30-40k points above you are still beatable. After 50k you usually will need to use a glowstick, but I always try without one first in case I get really lucky. I’ve managed to pull it off, but it’s a crapshoot most of the time.

Think of it this way: the goal is not to be stronger than the enemy team, it’s to reach the marker in time and foil their efforts to subtract points from your score. The stronger the enemies, the more points their attacks knock off from your score, so it counts in that particular regard.

To circumvent that, you need to get those sweet, sweet combo counters.

Anyway, no glowsticks. Let’s go, bitches. You don’t skurr me.

First, you always want to combo. Combos give you more points. But they’re not the only thing that gives you more points: countering enemy combos does as well. I almost always use all three cards in any combo ever, but I typically wait until after one sprite rotation/twirl to shoot it off, hoping to get the enemy to fire off first so I can counter... but it’s also beneficial when they try to counter your combo in the first pass, because that means they won’t be throwing combos at me that I can’t counter before my meters refill. They’ll still be in cooldown, too.

In this particular fight, though, I managed to bait only one enemy into firing off right away. I couldn’t counter two attacks during my cooldown... but thankfully they didn’t combine into a combo and their skills are useless. Because the ai is dumb and doesn’t strategize. I got x1.25 more points for this nevertheless, even though I was countering just one wee enemy. If you notice, I was behind them until this point.

I haven’t figured out how all the enemy types function, sadly, but I do know gluttony enemies always fire off as soon as they can, so there’s no use in waiting to fire off your cards. The others seem capable of waiting, unfortunately, but it seems random. Perhaps I will one day deduce the rhyme or reason for when they do, if there is one.

I wait until the last second to fire off my next (and last) combo because, again, you get more points when your team fully counters an enemy combo. Sometimes it can put you right over the top.

This time, I didn’t really need to, but I did so for the sake of the demonstration. The enemies who chose to attack me while on cooldown couldn’t counter me at the very end because they were still on cooldown. And, again, they didn’t really have any skills that hindered my team.

KO, I win.

This was actually an easy battle to win, despite...

... this. BUT HEY, IT STILL COUNTS. I earned that one whole star.

It gets trickier when the enemies have skills like confuse and stun. They can easily make what seems to be a winnable battle (by my standards at least) practically a game of chance. If you’re up against enemies that have those skills, you should always make sure you’re capable of countering when they fire off -- and they seem to have a tendency to do it ALL AT ONCE, which is a good thing if their skills don’t activate and a terrible thing when they do.

Strategy tl;dr

Get them combo counters

1. Wait a second or two after your gauges fill up before firing off your combo, in the hope of getting the enemy team to fire theirs off first (I let the sprites twirl once before I fire). This gets you more points when you counter and prevents them from throwing an uncounterable combo at you. Don’t wait too long trying to bait them, though: you want to be able to counter an enemy combo in the last stretch, and if you wait too long you’ll still be on cooldown.

2. Nab all your hearts and stuff because you’ll be in cooldown for most of the remainder of the battle after the first go.

3. Wait until the last second to fire off your last combo in hope of getting the enemy team to fire theirs off first so you can counter and get more points.

Happy dancing!

EDIT: Apparently you get the combo multiplier added to your score even if the enemies counter you, as long as you “win” the pass (your arrow triumphs over theirs). Get the biggest combo chain you can!

81 notes

Text

[Review] Conker: Live & Reloaded (XB)

Let’s see just how well this misguided remake/expansion holds up. This will be a long one!

Conker’s Bad Fur Day is my favourite N64 game. It’s cinematic and ambitious, technically impressive, has scads of gameplay variety with fun settings and setpieces, and when I first played it I was just the right age for the humour to land very well for me. A scant four years later Rare remade it for the Xbox after their acquisition by Microsoft, replacing the original multiplayer modes with a new online mode that would be the focus of the project, with classes and objectives and such.

First, an assessment of the single-player campaign. On a revisit I can see the common criticisms hold some water: the 3D platformer gameplay is a bit shaky at times, certain gameplay segments are just plain wonky and unfair, and some of the humour doesn’t hold up. It’s got all the best poorly aged jokes: reference humour, gross-out/shock humour, and poking fun at conventions of the now dormant 3D collectathon platformer genre. I’m also more sensitive these days to things like the sexual assault and homophobic undertones of the cogs, or Conker doing awful things for lols. Having said that, there’s plenty that I still find amusing, and outside of a few aggravatingly difficult sequences (surf punks, the mansion key hunt, the submarine attack, the beach escape) I do still appreciate the range of things you do in the game.

As for the remake, I’m not sure it can be called an improvement by any metric. Sure, there are some minor additions: a new surgeon Tediz miniboss, the new haunted baby doll enemy, and a Gothic village retheme for the opening of Spooky, along with an added (though unremarked on) costume for Conker during this chapter based on the Hugh Jackman Van Helsing flop. Other changes are, if anything, detrimental. The electrocution and Berri’s shooting cutscenes have been extended, undermining the joke and the emotional impact respectively. The original game used the trope of censoring certain swear words to make lines funnier; the remake adds more censorship for some reason, in one case (the Rock Solid bouncer scene) ruining the joke, and Chucky Poo’s Lament is just worse with fart noises covering the cursing.

The most egregious change, and one lampshaded in the tutorial, is the replacement of the frying pan (an instant and satisfying interaction) with a baseball bat which must be equipped, changing the control and camera to the behind-the-back combat style, and then swung with timed inputs to defeat the many added armoured goblings and dolls carelessly dumped all throughout the game world. This flat out makes the game less fun to play through.

On top of this, all the music has been rerecorded (with apologies to Robin Beanland, I didn’t really notice, apart from instances where it had to be changed, such as Franky’s boss fight, where the intensely frenetic banjo lead was drastically reduced as a concession to the requirement to actually play it in real life), and the graphics have been totally redone. Bad Fur Day made excellent use of textures, but with detail cranked up, sixth-generation muddiness, and a frankly overdone fur effect, something is lost. I’m not a fan of the character redesigns either; sure, Birdy has a new hat, but I didn’t particularly want to see Conker’s hands, and the Tediz are no longer sinister stuffed bears but weird biological monster bears with uniforms. Beyond all this, you regularly notice dropped details: a swapped texture makes for nonsensical dialogue in the Batula cutscene, and characters have lost some emotive animations. Plus, the new translucent scrolling speech bubbles are undeniably worse.

I could mention the understandable loading screens (at least they’re quick), the mistimed lip sync (possibly exacerbated by my tech setup), or the removal of cheats (not a big deal), but enough remake bashing. To be fair, the swimming controls have been improved and the air meter mercifully extended, making Bats Tower more palatable. And some sequences have been shortened, I suppose to lessen gameplay tedium (although removing the electric eel entirely is an odd choice). But let’s cover the multiplayer. Losing the varied modes from the original is a heavy blow, as I remember many a fun evening spent in Beach, War, or Raptor, along with the cutscenes setting up each mode.

The headline feature of this release is the Live mode, which integrated the then-new Xbox Live service for online multiplayer, although it’s all gone now. Chasing the hot trends of the time, it’s a set of class-based team missions pitting the Squirrel High Command against the Tediz in a variety of scenarios, mostly boiling down to progressing through capture points or capture the flag. Each class is quite specialised and I’m not sure how balanced it is, plus there are proto-achievements and unlocks behind substantial milestones, none of which I got close to reaching (I don’t think I could get most of them anyway, not being “Live”).

The maps are structured around a “Chapter X” campaign in which the Tediz and the weasel antagonist from BFD, Ze Professor (here given a new and highly offensive double-barrelled slur name), are initially fighting the SHC in the Second World War-inspired past of the Old War, before using a time machine, opening up a sci-fi theme for the Future War. These are mainly just aesthetic changes, but it’s a fun idea and lets them explore Seavor’s beloved wartime theming a bit more while also bringing in plenty of references to Star Wars, Alien, Dune, and Halo, though these are mostly visual.

Unfortunately the plot is a bit incoherent, rushed through in narration (unusually provided by professional American voice actor Fred Tatasciore rather than a Rare staffer doing a raspy or regional voice like the rest of the game) over admittedly nice-looking cutscenes. It also muddles the timeline significantly, seemingly ignoring the BFD events... and then the Tediz’ ultimate goal is to revive the hibernating Panther King, when the purpose of their creation was to usurp him in the first place! It expands on the Conker universe but in a way that makes the world feel smaller and more confusing. It’s weird, and also Conker doesn’t appear at all.

On top of this, I found the multiplayer experience itself frustrating. To unlock the full Chapter X, you need to play the first three maps on easy, then you can go through the whole six. But I couldn’t pass the first one on normal difficulty! The “Dumbots” seemed to have so much health and impeccable aim, while the action was so chaotic, obscured by intrusive UI, floating usernames, and smoke and other effects with loads of characters milling around, not to mention the confusing map layouts, the friendly fire, the instant respawns, and the spawncamping. Luckily I could play the maps themselves in solo mode with cutscenes and adjustable AI and options.

I found some classes much more satisfying than others. I tried to like the Long Ranger and the slow Demolisher, but found it difficult to be accurate. The awkward range of the Thermophile and the Sky Jockey’s rarely effective vehicles made them uncommon choices. I had most success with the simple Grunt, or the melee-range Sneeker (the SHC variant of which is sadly the sole playable female in the whole thing). You can pick up upgrade tokens during gameplay to expand the toolset of each class, which range from necessary to situational. But ultimately it’s a crapshoot, as I rarely felt that my intentions led to clear results.

Live & Reloaded is such a mess. The Reloaded BFD is full of odd decisions and baffling drawbacks, while the Live portion feels undercooked. I’d have preferred a greater focus on either one; a remake is unnecessary, especially only four years on, but a new single-player adventure would have been ace. And a multiplayer mode in this universe with its own story mode could be cool if it was better balanced and had more to it than just eight maps. As a source of some slight scrapings of new Conker content I appreciated it to some extent, but I can’t help being let down. I guess it’s true what they say... the grass is always greener. And you don’t really know what it is you have, until it’s gone... gone. Gone.

Yes, that ending is still genuinely emotionally affecting.

4 notes

Text

Dark Souls (3) Diary:

Still fighting Ariandel and Friede. I don't think I'm exaggerating when I say this is the hardest fight in the entire series so far.

I'm packing a +10 dark-infused strike weapon with a decent bleed rating, and I've respecced all my attunement points into strength, so I'm doing some decent DPS, at least.

The first phase is, at this point, easy. I can make it through without using an Estus most of the time. The second phase goes either very well or very badly, but either way, it happens fast.

But that third phase... goddamn. Not only is she lightning fast, she's throwing an entire kitchen sink of moves out with barely a breath.

I do really appreciate being able to summon Gael unembered. If he makes it through phase 2 with a decent amount of health--which is a crapshoot cos his AI is really bad at dodging Friede's AOEs--the two of us can do a decent job of breaking her aggro by trading off blows. I'm playing a LOT more aggressively than I usually do and I've gotten her as low as about 1/5 health, but haven't quite been able to seal the deal yet.

Nameless King was tough--as tough as or tougher than O&S and Fume Knight, the fights I had the most trouble with in their respective games. But this shit is on another level. I can see victory though--it's just a matter of pulling it off.

1 note

Text

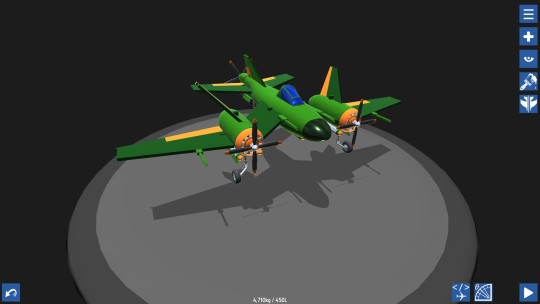

Back to making aircraft in SimplePlanes, more specifically to complete all the combat challenges. Variations on the jet fighter I’d made previously could do most of them, with the exception of two that you can only do with World War Two-era parts, i.e. no missiles or jet engines, only guns, unguided munitions and propeller propulsion. I could have just made something that looks normal by the standards of the era, but the mad scientist in me won’t allow that. One mission was to make a torpedo bomber, so I made this:

I do think there was an experimental Soviet WW2 plane that used a similar back-and-front propeller configuration, although it was a little different to this: I think it had just one propeller on the front, and wings in the back that were nearly as long as the front wings. Anyway, it flies fast and straight, and it’s stable enough at low altitude to get the torpedo job done, though on that mission you’re flying into heavy flak regardless, so it’s still something of a crapshoot.

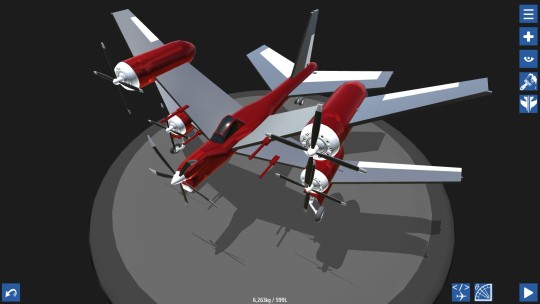

The other mission is a standard dogfight. The prior plane has guns for that kind of thing, but I felt like creating something even more preposterous than that. I made this:

This thing’s a bit of a beast in 1-on-1 dogfights. I’ve tested it by going into the dogfight mod, having the AI control it, and giving myself a jet fighter. If I stick to guns only, I lose almost every time. Missiles obviously made it pretty easy, but only if things stayed at arm’s length. It can do 800 kph and pitches surprisingly fast. It doesn’t roll super quick, but it’s not bad either. It can also take a beating because of all its redundant engines. You can even shoot an entire wing off and it’ll still fly, albeit not as well. The only thing is, it’s kind of a big target and you start between two enemy fighters on the dogfight mission, so it’s still rough. In fact, the one success I’ve had so far came because one of the enemies unexpectedly crashed, and I’d still got shot up a bit prior to that. Also, unsurprisingly it guzzles fuel like John Green guzzling balls. I really love this thing but still feel the need to go back to the drawing board so I can win that mission more legitimately.

4 notes

Photo

The first thing I need to address in this “description” is that this post is not what I had planned. I actually made an entire 3-minute video using actual moving pictures and audio, but for all my efforts I was unable to post it anywhere, including Tumblr. YouTube “partially blocked” the video file from being seen in every country in the world. Yes, I’m being serious.

By looking for the real episodes and, by extension, the clips inside them, you will get the same picture of the scenes depicted here as PNGs, and it will still work. For some of them an early cutoff in editing is necessary, but every scene shown here was one solid clip requiring no rearranging or time skips.