#AiModels

Explore tagged Tumblr posts

Text

IBM Analog AI: Revolutionizing The Future Of Technology

What Is Analog AI?

The process of encoding information as a physical quantity and doing calculations utilizing the physical characteristics of memory devices is known as Analog AI, or analog in-memory computing. It is a training and inference method for deep learning that uses less energy.

Features of analog AI

Non-volatile memory

Non-volatile memory devices, which can retain data for up to ten years without power, are used in analog AI.

In-memory computing

The von Neumann bottleneck, which restricts calculation speed and efficiency, is removed by analog AI, which stores and processes data in the same location.

Analog representation

Analog AI performs matrix multiplications in an analog fashion by utilizing the physical characteristics of memory devices.

Crossbar arrays

Synaptic weights are locally stored in the conductance values of nanoscale resistive memory devices in analog AI.

Low energy consumption

Analog AI can reduce the energy used for deep learning training and inference.
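The crossbar feature listed above can be sketched in a few lines of Python. This is only an idealized illustration, not IBM's implementation: it assumes each weight is stored as a conductance, each input is applied as a voltage, every device contributes a current of conductance times voltage (Ohm's law), and the currents on a shared column wire add up (Kirchhoff's current law). The function name `crossbar_matvec` and the numeric values are hypothetical.

```python
def crossbar_matvec(conductances, voltages):
    """Idealized crossbar read: returns one summed current per column.

    conductances[i][j] is the device joining input row i to output column j.
    voltages[i] is the voltage applied to input row i.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need exactly one input voltage per row")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law per device; the column wire sums the contributions.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 3x2 array of weights (conductances) and three input voltages.
G = [[1.0, 2.0],
     [3.0, 4.0],
     [5.0, 6.0]]
V = [1.0, 0.5, 2.0]

print(crossbar_matvec(G, V))  # [12.5, 16.0]
```

The nested loop stands in for what the physical array does in a single read step: the result is the matrix-vector product of the stored weights and the inputs, computed where the weights are stored. Real devices add noise and drift, which this sketch ignores.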

Analog AI Overview

Enhancing the functionality and energy efficiency of Deep Neural Network systems.

Analog in-memory computing can be used for two distinct deep learning tasks: training and inference. The first step is to train the model on a dataset that is typically labeled. For example, if you want your model to recognize various images, you would supply a collection of labeled photographs for training. Once the model has been trained, it can be used for inference.

Like most computing today, training AI models is a digital process carried out on conventional computers with conventional architectures. These systems first pass data from memory onto a queue and then transfer it to the CPU for processing.

AI training can require large volumes of data, all of which must pass through the queue on its way to the CPU. This can significantly reduce compute speed and efficiency, an effect known as “the von Neumann bottleneck.” IBM Research is investigating solutions that avoid the data-queuing bottleneck and can train AI models more quickly and with less energy.

These technologies are analog, meaning they represent information as a variable physical quantity, like the wiggles in the grooves of a vinyl record. IBM Research is investigating two kinds of training devices: resistive random-access memory (RRAM) and electrochemical random-access memory (ECRAM). Both devices can store and process data. Because data no longer travels from memory to the CPU through a queue, jobs can be completed in a fraction of the time and with far less energy.

Inference is the process of drawing a conclusion from known information. Humans do this with ease, but inference is costly and slow when done by a machine. IBM Research is taking an analog approach to that challenge. The word “analog” may bring to mind vinyl LPs and Polaroid instant cameras.

Digital data is represented by long sequences of 1s and 0s. Analog information is represented by a varying physical quantity, like the grooves of a record. The core of IBM’s analog AI inference chips is phase-change memory (PCM), a highly tunable analog technology that uses electrical pulses to compute and store information. As a result, the chip is significantly more energy-efficient.

IBM uses PCM as a synaptic cell, the AI term for a single unit of weight or information. More than 13 million of these PCM synaptic cells are arranged in an architecture on the analog AI inference chips, which makes it possible to build a sizable physical neural network that is preloaded with pretrained data, that is, ready to run inference on your AI workloads.

FAQs

What is the difference between analog AI and digital AI?

Analog AI mimics brain function by employing continuous signals and analog components, as opposed to typical digital AI, which analyzes data using discrete binary values (0s and 1s).

Read more on Govindhtech.com

#AnalogAI#deeplearning#AImodels#analogchip#IBMAnalogAI#CPU#News#Technews#technology#technologynews#govindhtech

4 notes

Text

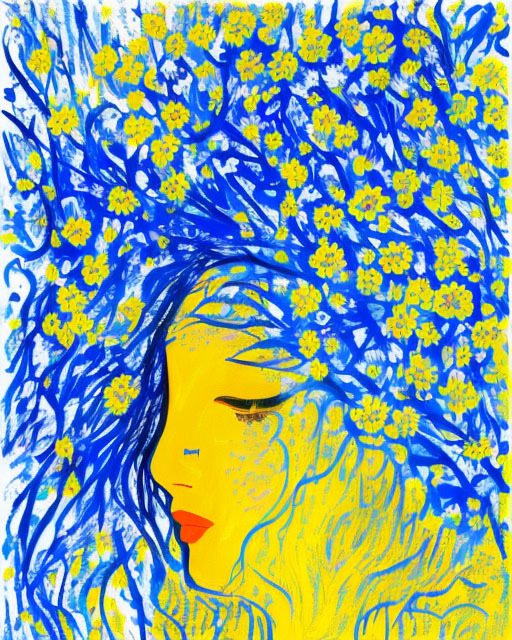

#AIArt#DigitalArt#AIGenerated#AIArtwork#AIArtist#GenerativeArt#AICommunity#MachineLearningArt#TechArt#FutureArt#StableDiffusion#SDXL#AIImageGeneration#FooocusAI#AIModels#CheckpointModels#LoRAModels#RealisticArt#SurrealArt#AbstractArt#CyberpunkArt#FantasyArt#DigitalRealism#ConceptArt#CreativeAI#ArtOfTheFuture#NextGenArt#ArtificialIntelligence#InnovationInArt#CreativeTechnology

2 notes

Text

Super AI Review 2025: The All-in-One AI Supermodel You’ve Been Waiting For

Introduction: Super AI Review 2025

This review explores Super AI, billed as the world’s first SuperModel™ AI application, which brings the leading AI tools together in one simple dashboard.

No monthly fees. No hidden costs. Just one small flat fee.

This post explains how Super AI works, what features it offers, and why it is billed as the “last AI application ever needed.”

Overview: Super AI Review 2025

Vendor: Seyi Adeleke

Product: Super AI

Launch Date: 2025-May-05

Front-End Price: $27

Niche: Affiliate Marketing, Artificial Intelligence (AI), Next Gen AI, AI Apps

Guarantee: 30-day money-back guarantee

Recommendation: Highly recommended

Support: Check

Contact Info: Check

What Is Super AI?

Super AI is a brand-new tool that fuses more than 230 AI models into one easy-to-use dashboard.

You get access to top AI engines like:

ChatGPT

Claude

DeepSeek

Gemini

Manus

Apple Intelligence

Llama

And many more…

All without APIs. No subscriptions. Just one login.

It’s the ultimate AI toolkit. And yes — it’s built for everyone.

#SuperAIReview#AI2025#AIFuture#SupermodelAI#AllInOneAI#AIInnovation#TechTrends#ArtificialIntelligence#FutureOfAI#SmartTechnology#AIRevolution#DigitalTransformation#AIApplications#MachineLearning#AIModels#TechReview#AIInsights#FutureTech#AIInspiration#NextGenAI#AIForEveryone#AICommunity#TechSavvy

1 note

Text

Learn how Qwen2.5-Coder is revolutionizing code generation with training data of 5.5 trillion tokens and support for 92 languages. This open-source model excels in benchmarks like HumanEval and MultiPL-E, offering advanced code intelligence and long-context support up to 128K tokens. Discover its capabilities and how it outperforms other models.

#Qwen2.5Coder#OpenSource#CodeModels#AI#MachineLearning#Coding#TechTrends#SoftwareDevelopment#AIModels#artificial intelligence#open source#machine learning#software engineering#programming#python#nlp

2 notes

Text

I feel amazing, how are you love?

#aimodel#aimodellife#aimodelling#aimodelgirl#aimode#aimodeling#aimodels#aigirlmodel#aigirlfantasy#aigirlsforyou#aigirlfried#aigirloftheweek#aigirlgallery#aigirl#aiforever#aifuture#aifantasy#aiphoto#aiphotography#aiportraits#aiplifestyle#aipfriendly#aiportrait#aiphotorealistic#aibeauty#aibeautifulgirl#buymeacoffee#loyalfans#fanvue#patreon

4 notes

Text

#aiart#peopleofai#aiartcommunity#aiphotography#aiphotograph#aiphotographer#aiartwork#aihuman#aimodel#aimodels

2 notes

Text

AI model selection based on cost and accessibility

AI model selection based on cost and accessibility—choose smartly with our quick guide to balance pricing and usability.

#BestAIModels#AI2025#ArtificialIntelligence#AITools#AIModels#AIComparison#TechTrends2025#FutureOfAI#AIInnovation#MachineLearning

0 notes

Text

I trained a neural network on my drawings

Warning: this article is a bit outdated. Download the model here: https://huggingface.co/netsvetaev/netsvetaev-free. It’s great for seamless patterns, abstract drawings, and watercolor-styled images. Here is how to use it and how to train a neural network on your own pictures.

How to use a .ckpt model

Download the .ckpt file and put it in your models folder. After that, it all depends on which fork you're using. In InvokeAI, which I use, models are added by launching invoke.py as usual and running the !import_model command in the console.

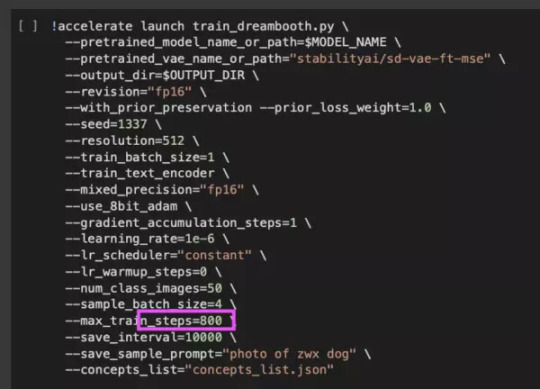

How to train a neural network on your images if you don't have an NVIDIA 4090

Google has a wonderful service called Colab: Google gives you a free server for about an hour. That's enough to build a model from 15, 30, or 50 images. First go here and get acquainted with Colab (but don't touch anything): https://colab.research.google.com/github/ShivamShrirao/diffusers/blob/main/examples/dreambooth/DreamBooth_Stable_Diffusion.ipynb Apart from clicking through each code cell and uploading pictures, all you need is a Hugging Face token.

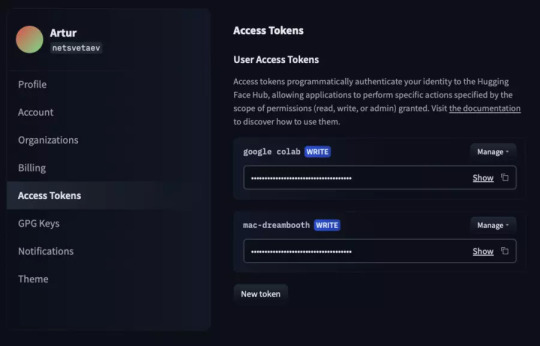

Huggingface Token & Settings

Register at huggingface.co, and then create a token in the settings: https://huggingface.co/settings/tokens

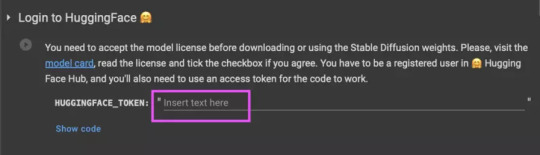

Insert the token here:

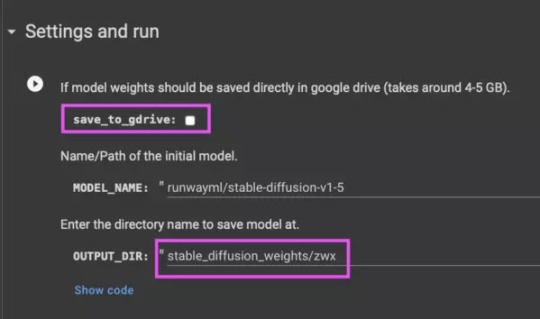

Check the box to save the model to Google Drive and, if you want, name a folder (it will be created automatically).

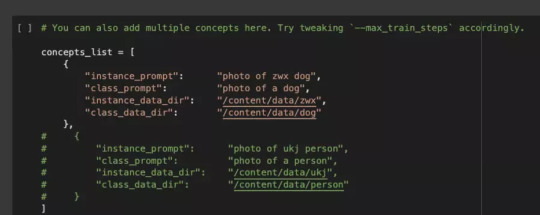

Next, specify what your style or object will be called

This is not so important, but you need to remember this token to invoke your object. Then upload the pictures:

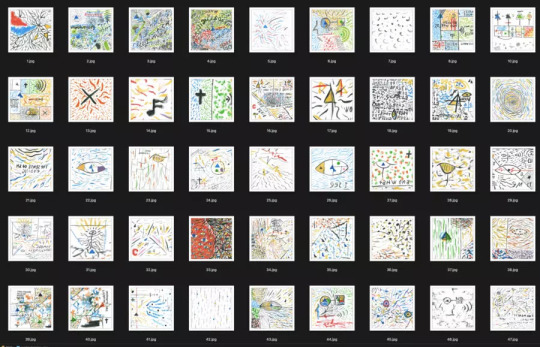

Dataset

The images need to be 512x512 pixels, and it is better to prepare them in advance. It's up to you to decide what to crop, but I recommend cropping so that the composition and significant objects are preserved. You can also make several separate zoomed-in and cropped pictures from one image.
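As a starting point for preparing the dataset, the crop can be computed rather than guessed. The sketch below is an assumption-laden helper, not part of the Colab notebook: the names `center_square_crop` and `scale_to_target` are hypothetical, and it always takes the centered square, which will not preserve the composition for every picture, so adjust the box by hand where the subject is off-center.

```python
TARGET_SIDE = 512  # training image size stated in the article


def center_square_crop(width, height):
    """Return (left, upper, right, lower) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def scale_to_target(side, target=TARGET_SIDE):
    """Scale factor that brings a square of the given side to the target size."""
    return target / side


# Hypothetical 1920x1080 landscape photo.
box = center_square_crop(1920, 1080)
print(box)                                # (420, 0, 1500, 1080)
print(scale_to_target(box[2] - box[0]))   # factor to reach 512 pixels
```

The box uses the (left, upper, right, lower) order, so it can be passed to an image library's crop call that follows the same convention before resizing to 512x512.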

Depending on the number of images, you can change the number of training steps. A reasonable number is 100 steps * number of pictures. For 8 images (the minimum that works, by the way), 800 steps is enough. Too many steps is also bad: the generated images will have too much contrast and show artifacts (an overtrained model).

Next, simply start each block in the Colab notebook with the Play button in its top left corner and wait for each cell to finish. When you get to the section shown above and start it, you will see the learning process. It will take about 10 minutes to prepare, plus another 15-30 minutes depending on the number of pictures and steps.
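The rule of thumb above is simple arithmetic, shown here as a small Python helper. The function name `recommended_steps` is hypothetical and the notebook does not provide it; the only inputs taken from the article are the figure of 100 steps per picture and the suggested minimum of 8 pictures.

```python
STEPS_PER_IMAGE = 100   # rule of thumb from the article
SUGGESTED_MIN_IMAGES = 8  # the article's suggested minimum dataset size


def recommended_steps(num_images, steps_per_image=STEPS_PER_IMAGE):
    """Training steps for a dataset, using steps = images * steps_per_image."""
    if num_images < 1:
        raise ValueError("need at least one image")
    return num_images * steps_per_image


for n in (SUGGESTED_MIN_IMAGES, 15, 30):
    print(n, "images ->", recommended_steps(n), "steps")
```

For 8 images this gives 800 steps, matching the example in the text. Treat the result as an upper guide rather than a target, since going well past it risks the overtraining artifacts described above.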

Test your AI model

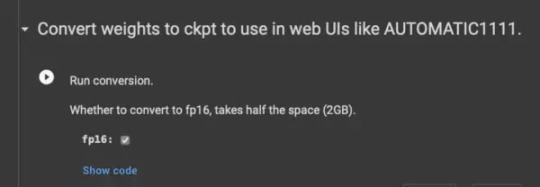

After training, you can run a test generation with the model and convert it to .ckpt format:

Now you have the file on your Google Drive: download it and add it to your favorite fork. You can also delete unnecessary files and end the session.

Other examples:

About me

My name is Artur Netsvetaev. I am a product manager, entrepreneur, and UI/UX designer. I help with the development of the InvokeAI interface and have been using it myself since the beginning of the project. First published: https://habr.com/ru/articles/699002/

0 notes

Text

Dell AI PCs: A Gateway To AI For Life Sciences Organizations

AI in the Life Sciences: A Useful Method Using Computers.

Dell AI PCs are perfect for life sciences companies that want to experiment with AI before making a full commitment. They are a revolutionary way to get started in the vast field of artificial intelligence, particularly for life sciences clients looking for a cost-effective way to build complex processes.

The Dell AI PCs, GPU-enhanced servers, and cutting-edge storage solutions are essential to the AI revolution. If you approach the process strategically, it may be surprisingly easy to begin your AI journey.

Navigating the Unmarked Path of AI Transformation

The lack of a clear path is both an exciting and a difficult part of the AI transition in the life sciences. As we learn more about the actual effects of generative and extractive AI models on crucial domains like drug development, clinical trials, and industrial processes, the discipline continues to realize its enormous promise.

It is evident from discussions with both up-and-coming entrepreneurs and seasoned industry titans in the global life sciences sector that there are a variety of approaches to launching novel treatments, each with a distinct implementation strategy.

A well-thought-out AI strategy may help any firm, especially if it prioritizes improving operational efficiency, addressing regulatory expectations from organizations like the FDA and EMA, and speeding up discovery.

Cataloguing possible use cases and setting clear priorities are usually the initial steps. But according to one client, just two months after appointing a new head of AI, they were confronted with more than 200 “prioritized” use cases.

When the CFO inevitably asks about the return on investment (ROI) for each one, this poses a serious problem. The answer must show observable increases in operational effectiveness, distinct income streams, or improved compliance clarity. Large-scale AI deployment needs a pragmatic approach to evaluating AI models and confirming their worth, so that the investment produces quantifiable returns.

The Dell AI PC: Your Strategic Advantage

Presenting the Dell AI PCs, the perfect option for businesses wishing to experiment with AI before committing to hundreds of use cases. AI PCs and robust open-source software allow resources in any department to investigate and improve use cases without incurring large costs.

Each possible AI project is made clearer by beginning with a limited number of Dell AI PCs and allocating skilled resources to these endeavors. Trials on smaller datasets provide a low-risk introduction to the field of artificial intelligence and aid in the prediction of possible results. This method guarantees that investments are focused on the most promising paths while also offering insightful information about what works.

Building a Sustainable AI Framework

Internally classifying and prioritizing use cases is essential when starting this AI journey. Pay close attention to data kinds, availability, preferences for production vs consumption, and choices for the sale or retention of results. Although the process may be started by IT departments, using IT-savvy individuals from other departments to develop AI models may be very helpful since they have personal experience with the difficulties and data complexities involved.

Working as a team, it is possible to rapidly identify areas that deserve more effort by regularly assessing and prioritizing use case development, turning conjecture into assurance. When the CFO asks about ROI, the team can then confidently present data-driven findings that demonstrate the observable advantages of your AI activities.

The Rational Path to AI Investment

Investing in AI is essential, but these choices should be based on location, cost, and the final outcomes of your research. Organizations may make logical decisions about data center or hyperscaler hosting, resource allocation, and data ownership by using AI PCs for early development.

This is more than a theoretical framework. The strategy works, as shown by Northwestern Medicine’s organic success story. It has effectively used AI technology to improve patient care and streamline complex operations, illustrating the practical advantages of using AI strategically.

Read more on Govindhtech.com

#DellAIPCs#AIPCs#LifeSciences#AI#AImodels#artificialintelligence#AItechnology#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

3 notes

Text

instagram

#ObjectDetection#ComputerVision#MachineLearning#DeepLearning#YOLOv8#ImageRecognition#AIApplications#VisionAI#AIModels#sunshinedigitalservices#Instagram

0 notes

Text

Learn how Open-FinLLMs is setting new benchmarks in financial applications with its multimodal capabilities and comprehensive financial knowledge. Finetuned from a 52 billion token financial corpus and powered by 573K financial instructions, this open-source model outperforms LLaMA3-8B and BloombergGPT. Discover how it can transform your financial data analysis.

#OpenFinLLMs#FinancialAI#LLMs#MultimodalAI#MachineLearning#ArtificialIntelligence#AIModels#artificial intelligence#open source#machine learning#software engineering#programming#ai#python

2 notes

Text

I feel amazing, how are you love?

#aimodel#aimodellife#aimodelling#aimodelgirl#aimode#aimodeling#aimodels#aigirlmodel#aigirlfantasy#aigirlsforyou#aigirlfried#aigirloftheweek#aigirlgallery#aigirl#aiforever#aifuture#aifantasy#aiphoto#aiphotography#aiportraits#aiplifestyle#aipfriendly#aiportrait#aiphotorealistic#aibeauty#aibeautifulgirl#buymeacoffee#loyalfans#fanvue#patreon

5 notes

Text

Opus 4 vs Sonnet 4: Which Claude 4 Model Should You Use?

Opus 4 delivers flagship-level reasoning, deep code generation, and 200K-token context with premium pricing (~5× cost). Sonnet 4 offers near-top performance with faster responses and cost‑efficient throughput—ideal for chatbots, content, and prototyping. https://www.creolestudios.com/claude-opus-4-vs-sonnet-4-ai-model-comparison/?utm_source=tumblr&utm_medium=socialbookmarking&utm_campaign=sumit

0 notes

Text

✨ AI Twins, Glam Vibes & Digital Artistry: Your Faceless Brand Just Got Real ✨

#AestheticAI#AIModels#AITwin#blog#ContentCreatorLife#daily#DigitalBossBabe#DigitalEmpire#FacelessBrand#FacelessMarketing#GlowUpWithAI#GoldenCodeVault#LearnWithMe

0 notes