#Application Database Migration

Reliable Data Migration Consultants in USA

When businesses in the USA face the challenge of transferring data between platforms, data migration consultants offer vital support. At RalanTech, our consultants specialize in securely migrating complex databases with zero data loss and minimal downtime. Whether moving to the cloud or upgrading legacy systems, we ensure that your critical information stays intact and accessible.

Our expert team uses strategic methodologies tailored to your organization's infrastructure. From small businesses to large enterprises, our consulting services are scalable, ensuring every migration is completed efficiently. RalanTech’s consultants deliver not just a transition but a transformation, through reliable and repeatable processes.

#database migrations#Cloud Database Migration#Application Database Migration#ERP Database Migration#Data Center Migration

Transform Your Database Performance with Simple Logic!

Challenges:

- Database crashes caused by heavy BLOB data 📉
- Slow performance impacting daily operations 🕒
- Limited server capacity leading to bottlenecks 🚧

Our Solution:

- Offloaded BLOB data to a shared Linux server for improved efficiency 🖥️
- Enhanced application code for seamless data fetching 🛠️
- Migrated the database to a new, optimized server for better performance 🚀

The Results:

- 99% improved stability ✨
- Faster query times ⏩
- Optimized server performance, enabling critical tasks to run smoothly 🎯
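The post doesn't say how the BLOB offloading was implemented. A common version of the idea keeps only metadata and a file reference in the database and writes the bytes to a shared filesystem. The Python sketch below assumes exactly that. `sqlite3` stands in for the production database (the post's tags mention MySQL), a local directory stands in for the shared Linux server, and the table and function names are hypothetical.

```python
import hashlib
import sqlite3
from pathlib import Path

# Hypothetical schema: the table keeps metadata plus a path; the bytes live on a file share.
SCHEMA = """
CREATE TABLE IF NOT EXISTS attachments (
    id     INTEGER PRIMARY KEY,
    name   TEXT NOT NULL,
    sha256 TEXT NOT NULL,
    path   TEXT NOT NULL  -- relative to the share's mount point
)
"""


def save_attachment(conn: sqlite3.Connection, share: Path, name: str, data: bytes) -> int:
    """Write the bytes to the share and insert only a reference row."""
    digest = hashlib.sha256(data).hexdigest()
    # Content-addressed layout (ab/abcdef...) spreads files across subdirectories
    # and lets identical uploads share one file.
    relative = Path(digest[:2]) / digest
    target = share / relative
    target.parent.mkdir(parents=True, exist_ok=True)
    if not target.exists():
        target.write_bytes(data)
    cur = conn.execute(
        "INSERT INTO attachments (name, sha256, path) VALUES (?, ?, ?)",
        (name, digest, relative.as_posix()),
    )
    conn.commit()
    return cur.lastrowid


def load_attachment(conn: sqlite3.Connection, share: Path, attachment_id: int) -> bytes:
    """Look up the reference in the database, then read the bytes from the share."""
    row = conn.execute(
        "SELECT path FROM attachments WHERE id = ?", (attachment_id,)
    ).fetchone()
    if row is None:
        raise KeyError(attachment_id)
    return (share / row[0]).read_bytes()
```

Storing the path relative to the share, not as an absolute path, lets the mount point differ between servers. The database rows stay small because they never hold the file bytes.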

Say goodbye to database crashes and hello to high performance! Let Simple Logic elevate your database optimization today. 🌟

💻 Explore insights on the latest in #technology on our Blog Page 👉 https://simplelogic-it.com/blogs/

🚀 Ready for your next career move? Check out our #careers page for exciting opportunities 👉 https://simplelogic-it.com/careers/

👉 Contact us here: https://simplelogic-it.com/contact-us/

#Data#Database#DatabaseService#DataBasePerformance#SlowPerformance#Applications#DataFetching#Linux#Migration#BLOB#MySQL#Stability#SimpleLogicIT#MakingITSimple#MakeITSimple#SimpleLogic#ITServices#ITConsulting

clip studio paint is driving me up a wall

do you use clip studio paint on windows? are you fucking tired of running out of storage space because you download a lot of materials and brushes? are your materials files stuck on your local c drive and you don't know how to move them?

making this tutorial because for some reason all other tutorials miss what you're supposed to do after changing the directory. btw, i use windows. fuck ya macos (i don't know how to help you guys)

1. close all clip studio programs and open clip studio

2. go to the settings icon in the top right corner and hover over it. click on location of materials (p)

remember the address of the current location of materials.

3. create a new folder in your alternate drive/storage space. do not add anything in it. do not copy anything in it. simply write that new folder's address in here. click save changes.

now if you're like me and downloaded a ton of stuff, it's gonna take a while to load. it's transferring your document and material folders over. let it run. when it's complete, acknowledge and accept the popup.

4. CRUCIAL: after this step, delete the old "Document" and "Material" folders from the old directory (the address you memorized) (likely located in some address that has AppData (newer versions) in it or Documents (older versions)) (if you can't find this, turn hidden folders on in file explorer, AppData is a hidden folder).

when you try to exit out of this clip studio instance, it will also remind you to remove old files before closing the application. do NOT delete the CLIPStudioCommon folder. LEAVE the DB and other folders there.

before:

after:

5. after deleting the old folders, exit out of the clip studio instance. and you're done.

now you should enjoy like, a good 10 more gb of storage. at least for me. i'm enjoying that. also i use csp latest version 1 version (like 1.13 something idk) (i'll upgrade when they give me a free upgrade).

i forget if this is available for earlier versions but i did migrate csp across devices a lot, and that was a bunch of manually copying folders, which won't work for transferring between drives like this tutorial.

by the way if you're doing something like manually copying full folders over (like the old method of transferring csp between devices), you may find that your materials/organization structure is gone. you can go through clip studio and run both "Organize Materials" and "Organize Material Folders" to restore them to that tab in clip studio paint. at least when i was fucking around trying to find out how to put my materials on a different drive, i went through several iterations of backing up and copying back over 13.4 gb of data because i'd mess up One step.

disclaimer: i have not tried downloading new materials yet. i swear to fucking god they better download in my other drive. i am sick and tired of running out of storage. imagine being an engineer debugging code and then files on your computer start to disappear because your C drive ran out of space. happened to me!!!

no i don't know what will happen if you try to put these materials folders on a flash drive. i think that counts as an "external drive" and there's some warning for that in the middle of this process. i feel like there's nothing wrong with that so long as you always plug that drive in when you use clip studio products (or CSP at least).

if you encounter problems in the middle of this, i don't know how to solve them. i saw some people encounter problems when transferring the data over after changing the address and i don't know where their issues were (this issue seems to be because they installed CSP on D). this seems to be an article summarizing transferring csp across devices (both cloud and the old manual way)

discussion below:

my theory is that the DB folders are like a "database" that holds addresses towards those files and therefore need to stay in the C drive for. some godawful reason. i think i tried installing csp on my D drive before, but it would automatically create a new folder in users/documents in my C drive (in older versions).

after weakly changing this directory and not giving a shit for a while (idk when updates come out. it must've been a year or a few), they changed this address to Users/AppData/something/blah blah

i spend too much time trying to manage storage on my computer, it's not healthy. anyways, kudos to TreeSize (the program) for telling me that this entire time clip studio paint fucking updated and changed my meticulous structure (placing files wherever there was space) to its shit default structure.

oh and i don't think there's a solution here but it's kind of informative on clip studio's organization structure?

first wacom, now csp. sai1 didn't work with my huion either. maybe i'm forever doomed to be cucked by japanese art products. come to think of it, micron pens hate me too.

nobody ever let me touch copics.

#clip studio paint#clip studio paint tutorial#art reference#talks#literally gotta save this for MYSELF tbh#csp

Cloud Migration and Integration: A Strategic Shift Toward Scalable Infrastructure

In today’s digital-first business environment, cloud computing is no longer just a technology trend—it’s a foundational element of enterprise strategy. As organizations seek greater agility, scalability, and cost-efficiency, cloud migration and integration have emerged as critical initiatives. However, transitioning to the cloud is far from a lift-and-shift process; it requires thoughtful planning, seamless integration, and a clear understanding of long-term business objectives.

What Is Cloud Migration and Why Does It Matter?

Cloud migration involves moving data, applications, and IT processes from on-premises infrastructure or legacy systems to cloud-based environments. These environments can be public, private, or hybrid, depending on the organization’s needs. While the move offers benefits such as cost reduction, improved performance, and on-demand scalability, the true value lies in enabling innovation through flexible technology infrastructure.

But migration is only the first step. Cloud integration—the process of configuring applications and systems to work cohesively within the cloud—is equally essential. Without integration, businesses may face operational silos, inconsistent data flows, and reduced productivity, undermining the very purpose of migration.

Key Considerations in Cloud Migration

A successful cloud migration depends on more than just transferring workloads. It involves analyzing current infrastructure, defining the desired end state, and selecting the right cloud model and service providers. Critical factors include:

Application suitability: Not all applications are cloud-ready. Some legacy systems may need reengineering or replacement.

Data governance: Moving sensitive data to the cloud demands a strong focus on compliance, encryption, and access controls.

Downtime management: Minimizing disruption during the migration process is essential for business continuity.

Security architecture: Ensuring that cloud environments are resilient against threats is a non-negotiable part of migration planning.

Integration for a Unified Ecosystem

Once in the cloud, seamless integration becomes the linchpin for realizing operational efficiency. Organizations must ensure that their applications, databases, and platforms communicate efficiently in real time. This includes integrating APIs, aligning with enterprise resource planning (ERP) systems, and enabling data exchange across multiple cloud platforms.

Hybrid and Multi-Cloud Strategies

Cloud strategies have evolved beyond single-provider solutions. Many organizations now adopt hybrid (combining on-premises and cloud infrastructure) or multi-cloud (using services from multiple cloud providers) approaches. While this enhances flexibility and reduces the risk of vendor lock-in, it adds complexity to integration and governance.

To address this, organizations need a unified approach to infrastructure orchestration, monitoring, and automation. Strong integration frameworks and middleware platforms become essential in stitching together a cohesive IT ecosystem.

Long-Term Value of Cloud Transformation

Cloud migration and integration are not one-time projects—they are ongoing transformations. As business needs evolve, cloud infrastructure must adapt through continuous optimization, cost management, and performance tuning.

Moreover, integrated cloud environments serve as the foundation for emerging technologies like artificial intelligence, data analytics, and Internet of Things (IoT), enabling businesses to innovate faster and more efficiently.

By treating cloud migration and integration as strategic investments rather than tactical moves, organizations position themselves to stay competitive, agile, and future-ready.

#CloudMigration#CloudIntegration#DigitalTransformation#HybridCloud#MultiCloud#CloudComputing#InfrastructureModernization#ITStrategy#BusinessContinuity

Vibecoding a production app

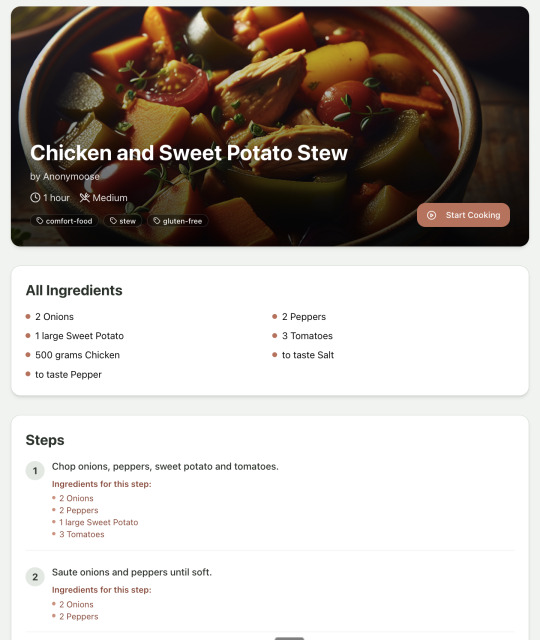

TL;DR I built and launched a recipe app with about 20 hours of work - recipeninja.ai

Background: I'm a startup founder turned investor. I taught myself (bad) PHP in 2000, and picked up Ruby on Rails in 2011. I'd guess 2015 was the last time I wrote a line of Ruby professionally. I've built small side projects over the years, but nothing with any significant usage. So it's fair to say I'm a little rusty, and I never really bothered to learn front end code or design.

In my day job at Y Combinator, I'm around founders who are building amazing stuff with AI every day and I kept hearing about the advances in tools like Lovable, Cursor and Windsurf. I love building stuff and I've always got a list of little apps I want to build if I had more free time.

About a month ago, I started playing with Lovable to build a word game based on Articulate (it's similar to Heads Up or Taboo). I got a working version, but I quickly ran into limitations - I found it very complicated to add a Supabase backend, and it kept rewriting large parts of my app logic when I only wanted to make cosmetic changes. It felt like a toy - not ready to build real applications yet.

But I kept hearing great things about tools like Windsurf. A couple of weeks ago, I looked again at my list of app ideas to build and saw "Recipe App". I've wanted to build a hands-free recipe app for years. I love to cook, but the problem with most recipe websites is that they're optimized for SEO, not for humans. So you have pages and pages of descriptive crap to scroll through before you actually get to the recipe. I've used the recipe app Paprika to store my recipes in one place, but honestly it feels like it was built in 2009. The UI isn't great for actually cooking. My hands are covered in food and I don't really want to touch my phone or computer when I'm following a recipe.

So I set out to build what would become RecipeNinja.ai

For this project, I decided to use Windsurf. I wanted a Rails 8 API backend and React front-end app and Windsurf set this up for me in no time. Setting up homebrew on a new laptop, installing npm and making sure I'm on the right version of Ruby is always a pain. Windsurf did this for me step-by-step. I needed to set up SSH keys so I could push to GitHub and Heroku. Windsurf did this for me as well, in about 20% of the time it would have taken me to Google all of the relevant commands.

I was impressed that it started using the Rails conventions straight out of the box. For database migrations, it used the Rails command-line tool, which then generated the correct file names and used all the correct Rails conventions. I didn't prompt this specifically - it just knew how to do it. It one-shotted pretty complex changes across the React front end and Rails backend to work seamlessly together.

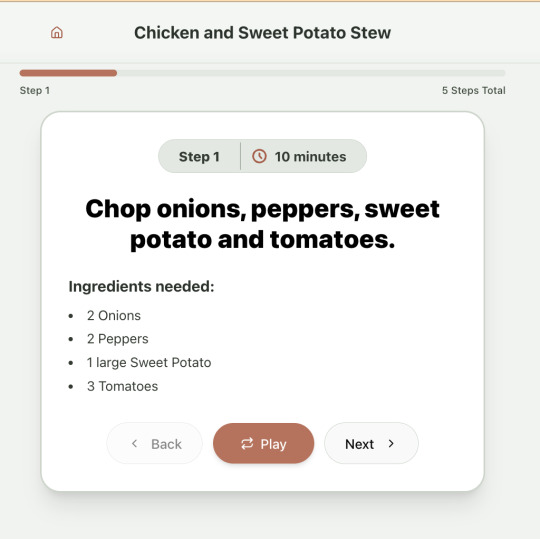

To start with, the main piece of functionality was to generate a complete step-by-step recipe from a simple input ("Lasagne"), generate an image of the finished dish, and then allow the user to progress through the recipe step-by-step with voice narration of each step. I used OpenAI for the LLM and ElevenLabs for voice. "Grandpa Spuds Oxley" gave it a friendly southern accent.

Recipe summary:

And the recipe step-by-step view:

I was pretty astonished that Windsurf managed to integrate both the OpenAI and ElevenLabs APIs without me doing very much at all. After we had a couple of problems with the OpenAI Ruby library, it quickly fell back to a raw Ruby HTTP client implementation, but I honestly didn't care. As long as it worked, I didn't really mind if it used 20 lines of code or two lines of code. And Windsurf was pretty good about enforcing reasonable security practices. I wanted to call ElevenLabs directly from the front end while I was still prototyping stuff, and Windsurf objected very strongly, telling me that I was risking exposing my private API credentials to the Internet. I promised I'd fix it before I deployed to production and it finally acquiesced.

I decided I wanted to add "Advanced Import" functionality where you could take a picture of a recipe (this could be a handwritten note or a picture from a favourite recipe book) and RecipeNinja would import the recipe. This took a handful of minutes.

Pretty quickly, a pattern emerged; I would prompt for a feature. It would read relevant files and make changes for two or three minutes, and then I would test the backend and front end together. I could quickly see from the JavaScript console or the Rails logs if there was an error, and I would just copy paste this error straight back into Windsurf with little or no explanation. 80% of the time, Windsurf would correct the mistake and the site would work. Pretty quickly, I didn't even look at the code it generated at all. I just accepted all changes and then checked if it worked in the front end.

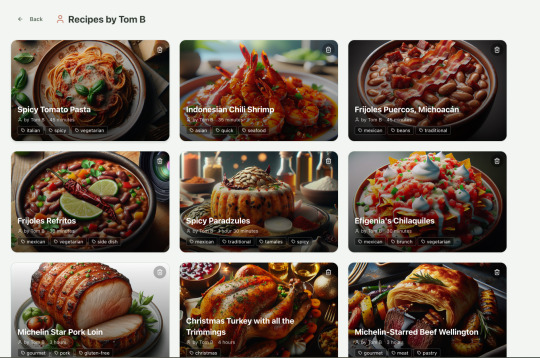

After a couple of hours of work on the recipe generation, I decided to add the concept of "Users" and include Google Auth as a login option. This would require extensive changes across the front end and backend - a database migration, a new model, new controller and entirely new UI. Windsurf one-shotted the code. It didn't actually work straight away because I had to configure Google Auth to add `localhost` as a valid origin domain, but Windsurf talked me through the changes I needed to make on the Google Auth website. I took a screenshot of the Google Auth config page and pasted it back into Windsurf and it caught an error I had made. I could login to my app immediately after I made this config change. Pretty mindblowing. You can now see who's created each recipe, keep a list of your own recipes, and toggle each recipe to public or private visibility. When I needed to set up Heroku to host my app online, Windsurf generated a bunch of terminal commands to configure my Heroku apps correctly. It went slightly off track at one point because it was using old Heroku APIs, so I pointed it to the Heroku docs page and it fixed it up correctly.

I always dreaded adding custom domains to my projects - I hate dealing with registrars and configuring DNS to point at the right nameservers. But Windsurf told me how to configure my GoDaddy domain name DNS to work with Heroku, telling me exactly what buttons to press and what values to paste into the DNS config page. I pointed it at the Heroku docs again and Windsurf used the Heroku command line tool to add the "Custom Domain" add-ons I needed and fetch the right Heroku nameservers. I took a screenshot of the GoDaddy DNS settings and it confirmed it was right.

I can see that very soon, tools like Cursor & Windsurf will integrate something like Browser Use so that an AI agent will do all this browser-based configuration work with zero user input.

I'm also impressed that Windsurf will sometimes start up a Rails server and use curl commands to check that an API is working correctly, or start my React project and load up a web preview and check the front end works. This functionality didn't always seem to work consistently, and so I fell back to testing it manually myself most of the time.

When I was happy with the code, it wrote git commits for me and pushed code to Heroku from the in-built command line terminal. Pretty cool!

I do have a few niggles still. Sometimes it's a little over-eager - it will make more changes than I want, without checking with me that I'm happy or the code works. For example, it might try to commit code and deploy to production, and I need to press "Stop" and actually test the app myself. When I asked it to add analytics, it went overboard and added 100 different analytics events in pretty insignificant places. When it got trigger-happy like this, I reverted the changes and gave it more precise commands to follow one by one.

The one thing I haven't got working yet is automated testing that's executed by the agent before it decides a task is complete; there's probably a way to do it with custom rules (I have spent zero time investigating this). It feels like I should be able to have an integration test suite that is run automatically after every code change, and then any test failures should be rectified automatically by the AI before it says it's finished.

Also, the AI should be able to tail my Rails logs to look for errors. It should spot things like slow or repetitive database queries and automatically optimize my Active Record queries to make my app perform better. At the moment I'm copy-pasting in excerpts of the Rails logs, and then Windsurf quickly figures out that I've got an N+1 query problem and fixes it. Pretty cool.
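An N+1 query problem happens when code loads a list with one query and then issues one more query per row to load an association. The sketch below illustrates the idea with Python's built-in `sqlite3`. It uses a hypothetical recipes/tags schema (not the app's actual one) and counts executed statements with `Connection.set_trace_callback`. The preloaded version mirrors the two-query strategy that Active Record's `includes`/`preload` commonly uses: one query for the parent rows and one `IN (...)` query for their associations.

```python
import sqlite3
from collections import defaultdict

TAGS_FOR_ONE_RECIPE = """
    SELECT t.name FROM tags t JOIN recipe_tags rt ON rt.tag_id = t.id
    WHERE rt.recipe_id = ? ORDER BY t.name
"""


def build_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical recipes/tags/recipe_tags schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE recipes (id INTEGER PRIMARY KEY, title TEXT);
        CREATE TABLE tags (id INTEGER PRIMARY KEY, name TEXT UNIQUE);
        CREATE TABLE recipe_tags (recipe_id INTEGER, tag_id INTEGER);
        INSERT INTO recipes VALUES (1, 'Lasagne'), (2, 'Pad Thai'), (3, 'Tacos');
        INSERT INTO tags VALUES (1, 'Italian'), (2, 'Thai'), (3, 'Mexican'), (4, 'Quick');
        INSERT INTO recipe_tags VALUES (1, 1), (2, 2), (2, 4), (3, 3), (3, 4);
    """)
    return conn


def tags_n_plus_one(conn):
    """1 query for the recipes, then 1 more per recipe: N+1 in total."""
    result = {}
    for recipe_id, title in conn.execute("SELECT id, title FROM recipes ORDER BY id").fetchall():
        result[title] = [name for (name,) in conn.execute(TAGS_FOR_ONE_RECIPE, (recipe_id,))]
    return result


def tags_preloaded(conn):
    """2 queries regardless of N: the recipes, then all of their tags in one IN (...) lookup."""
    recipes = conn.execute("SELECT id, title FROM recipes ORDER BY id").fetchall()
    if not recipes:
        return {}
    ids = [recipe_id for recipe_id, _ in recipes]
    placeholders = ", ".join("?" for _ in ids)
    rows = conn.execute(
        "SELECT rt.recipe_id, t.name FROM tags t JOIN recipe_tags rt ON rt.tag_id = t.id "
        f"WHERE rt.recipe_id IN ({placeholders}) ORDER BY t.name",
        ids,
    )
    tags_by_recipe = defaultdict(list)
    for recipe_id, name in rows:
        tags_by_recipe[recipe_id].append(name)
    return {title: tags_by_recipe[recipe_id] for recipe_id, title in recipes}


def count_statements(conn, fn):
    """Run fn(conn) and return (result, number of SQL statements SQLite executed)."""
    executed = []
    conn.set_trace_callback(executed.append)
    try:
        return fn(conn), len(executed)
    finally:
        conn.set_trace_callback(None)
```

Both functions return the same tags. With three recipes, however, the first runs four statements and the second runs two, and the gap grows linearly with the number of rows on the page.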

Refactoring is also kind of painful. I've ended up with several files that are 700-900 lines long and contain duplicate functionality. For example, list recipes by tag and list recipes by user are basically the same.

Recipes by user:

This should really be identical to list recipes by tag, but Windsurf has implemented them separately.

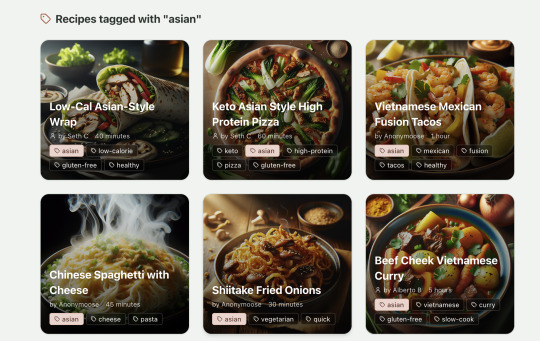

Recipes by tag:

If I ask Windsurf to refactor these two pages, it randomly changes stuff like renaming analytics events, rewriting user-facing alerts, and changing random little UX stuff, when I really want to keep the functionality exactly the same and only move duplicate code into shared modules. Instead, to successfully refactor, I had to ask Windsurf to list out ideas for refactoring, then prompt it specifically to refactor these things one by one, touching nothing else. That worked a little better, but it still wasn't perfect.

Sometimes, adding minor functionality to the Rails API changes the entire API response, rather than just adding a couple of fields. For example, it will occasionally change Index Recipes to nest responses in an object (`{ "recipes": [ ] }`) instead of just returning an array, which breaks the frontend. Then another minor change will revert it. This is where adding tests to identify and prevent these kinds of API changes would be really useful. When I ask Windsurf to fix these API changes, it will instead change the front end to accept the new API JSON format and also leave the old implementation in for "backwards compatibility". This ends up with a tangled mess of code that isn't really necessary. But I'm vibecoding so I didn't bother to fix it.
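A small contract check on the Index Recipes response, run after every change, is one way to catch the array-versus-object flip-flop. This is a framework-agnostic sketch. It assumes the frontend expects a bare JSON array, and the required field names (`id`, `title`, `public`) are hypothetical placeholders for whatever the real serializer emits.

```python
import json
from typing import Dict, List

# Hypothetical contract: Index Recipes returns a bare JSON array of summaries
# with these fields. Replace with whatever the real serializer emits.
REQUIRED_FIELDS: Dict[str, type] = {"id": str, "title": str, "public": bool}


def contract_violations(body: str) -> List[str]:
    """Return human-readable problems with a response body; [] means it conforms."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError as exc:
        return [f"body is not valid JSON: {exc}"]
    if not isinstance(payload, list):
        # Catches the { "recipes": [ ] } regression before the frontend does.
        return [f"expected a top-level array, got {type(payload).__name__}"]
    problems: List[str] = []
    for i, item in enumerate(payload):
        if not isinstance(item, dict):
            problems.append(f"item {i} is not an object")
            continue
        for name, expected in REQUIRED_FIELDS.items():
            if name not in item:
                problems.append(f"item {i} is missing {name!r}")
            elif not isinstance(item[name], expected):
                problems.append(f"item {i}: {name!r} should be {expected.__name__}")
    return problems
```

In practice, the body would come from a request to the running dev server inside whatever test command the agent runs before declaring a task done. Failing loudly on any shape change also removes the temptation to "accept both formats for backwards compatibility".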

Then there were some changes that just didn't work at all. Trying to implement PostHog analytics in the front end seemed to break my entire app multiple times. I tried to add user voice commands ("Go to the next step"), but this conflicted with the ElevenLabs voice recordings. Having really good git discipline makes vibe coding much easier and less stressful. If something doesn't work after 10 minutes, I can just run `git reset --hard HEAD`. I've not lost very much time, and it frees me up to try more ambitious prompts to see what the AI can do. Less technical users who aren't familiar with git have lost months of work when the AI goes off on a vision quest and the inbuilt revert functionality doesn't work properly. It seems like adding more native support for version control could be a massive win for these AI coding tools.

Another complaint I've heard is that the AI coding tools don't write "production" code that can scale. So I decided to put this to the test by asking Windsurf for some tips on how to make the application more performant. It identified I was downloading 3 MB image files for each recipe, and suggested a Rails feature for adding lower resolution image variants automatically. Two minutes later, I had thumbnail and midsize variants that decreased the loading time of each page by 80%. Similarly, it identified inefficient N+1 Active Record queries and rewrote them to be more efficient. There are a ton more performance features that come built into Rails - caching would be the next thing I'd probably add if usage really ballooned.
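The size math behind "thumbnail" and "midsize" variants is simple: scale the image to fit inside a bounding box, keep the aspect ratio, and never upscale. That is roughly what Active Storage's `resize_to_limit` variant option does. The Python sketch below only computes target dimensions and never touches pixels. The variant names match the post, but the box sizes are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical bounding boxes (width, height) for each variant.
VARIANTS: Dict[str, Tuple[int, int]] = {"thumbnail": (300, 300), "midsize": (1200, 1200)}


def fit_within(width: int, height: int, max_width: int, max_height: int) -> Tuple[int, int]:
    """Shrink (width, height) to fit inside the box, keeping aspect ratio; never enlarge."""
    if min(width, height, max_width, max_height) <= 0:
        raise ValueError("dimensions must be positive")
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def variant_sizes(width: int, height: int) -> Dict[str, Tuple[int, int]]:
    """Target dimensions for every configured variant of one source image."""
    return {name: fit_within(width, height, *box) for name, box in VARIANTS.items()}
```

With these boxes, a 4032×3024 phone photo maps to 300×225 and 1200×900. That is about 0.55% and 8.9% of the original pixel count, which is why listing pages get so much lighter when they request the thumbnail.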

Before going to production, I kept my promise to move my ElevenLabs API keys to the backend. Almost as an afterthought, I asked Windsurf to cache the voice responses so that I'd only make an ElevenLabs API call once for each recipe step; after that, the audio file was stored in S3 using Rails Active Storage and served without costing me more credits. Two minutes later, it was done. Awesome.
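This sketch shows the "pay for text-to-speech once per step" idea. It assumes a cache key built from recipe ID, step ID and voice ID, plus a force-regeneration flag, matching the feature list further down. `synthesize` stands in for the ElevenLabs request and a plain dict stands in for S3/Active Storage. Neither is the real API, and `VoiceCache` is a hypothetical name.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class VoiceCache:
    """Pay for text-to-speech once per (recipe, step, voice); serve stored audio afterwards."""

    # Stand-in for the TTS API call: (text, voice_id) -> audio bytes.
    synthesize: Callable[[str, str], bytes]
    # Stand-in for object storage such as S3; maps cache key -> audio bytes.
    store: Dict[str, bytes] = field(default_factory=dict)

    @staticmethod
    def key(recipe_id: str, step_id: int, voice_id: str) -> str:
        return f"{recipe_id}/{step_id}/{voice_id}"

    def audio_for(self, recipe_id: str, step_id: int, voice_id: str,
                  text: str, force: bool = False) -> bytes:
        k = self.key(recipe_id, step_id, voice_id)
        if force or k not in self.store:
            self.store[k] = self.synthesize(text, voice_id)  # the only paid call
        return self.store[k]
```

The key deliberately ignores the step text, so editing a step needs `force=True`. A key that also includes a hash of the text would avoid that, at the cost of orphaned audio files.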

At the end of a vibecoding session, I'd write a list of 10 or 15 new ideas for functionality that I wanted to add the next time I came back to the project. In the past, these lists would've built up over time and never gotten done. Each task might've taken me five minutes to an hour to complete manually. With Windsurf, I was astonished how quickly I could work through these lists. Changes took one or two minutes each, and within 30 minutes I'd completed my entire to-do list from the day before. It was astonishing how productive I felt. I can create the features faster than I can come up with ideas.

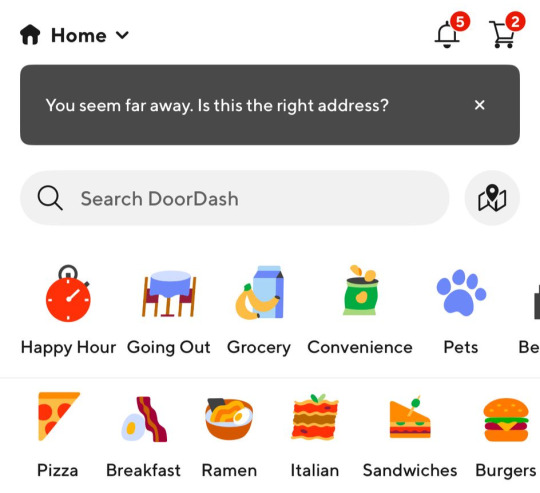

Before launching, I wanted to improve the design, so I took a quick look at a couple of recipe sites. They were much more visual than my site, and so I simply told Windsurf to make my design more visual, emphasizing photos of food. Its first try was great. I showed it to a couple of friends and they suggested I should add recipe categories - "Thai" or "Mexican" or "Pizza" for example. They showed me the DoorDash app, so I took a screenshot of it and pasted it into Windsurf. My prompt was "Give me a carousel of food icons that look like this". Again, this worked in one shot. I think my version actually looks better than DoorDash 🤷♂️

DoorDash:

My carousel:

I also saw I was getting a console error from a missing favicon. I always struggled to make favicons for previous sites because I could never figure out where they were supposed to go or what file format they needed. I got OpenAI to generate me a little recipe ninja icon with a transparent background and I saved it into my project directory. I asked Windsurf what file format I needed and it listed out nine different sizes and file formats. Seems annoying. I wondered if Windsurf could just do it all for me. It quickly wrote a series of Bash commands to create a temporary folder, resize the image and create the nine variants I needed. It put them into the right directory and then cleaned up the temporary directory. I laughed in amazement. I've never been good at bash scripting and I didn't know if it was even possible to do what I was asking via the command line. I guess it is possible.

After launching and posting on Twitter, a few hundred users visited the site and generated about 1000 recipes. I was pretty happy! Unfortunately, the next day I woke up and saw that I had a $700 OpenAI bill. Someone had been abusing the site and costing me a lot of OpenAI credits by creating a single recipe over and over again - "Pasta with Shallots and Pineapple". They did this 12,000 times. Obviously, I had not put any rate limiting in.

Still, I was determined not to write any code. I explained the problem and asked Windsurf to come up with solutions. Seconds later, I had 15 pretty good suggestions. I implemented several (but not all) of the ideas in about 10 minutes and the abuse stopped dead in its tracks. I won't tell you which ones I chose in case Mr Shallots and Pineapple is reading. The app's security is not perfect, but I'm pretty happy with it for the scale I'm at. If I continue to grow and get more abuse, I'll implement more robust measures.
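The post deliberately doesn't say which measures were chosen, so this is not that implementation. One common first line of defence is a per-client sliding-window limit on the expensive endpoint (here, recipe generation). The sketch below is a minimal in-process version. The class name, limits and client key are all hypothetical, and the clock is injectable so the logic can be tested without waiting.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Sliding-window log: allow at most `limit` calls per `window` seconds per key."""

    def __init__(self, limit: int, window: float, clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str) -> bool:
        now = self.clock()
        hits = self.hits[key]
        # Forget requests that are older than the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: reject without calling the LLM
        hits.append(now)
        return True
```

A request handler would call `allow(...)` with the user ID (or the IP address for anonymous traffic) before starting a generation. Rejected calls aren't recorded, so a blocked client regains access as soon as its old requests age out. With several Heroku dynos or worker processes, the counts need to live in shared storage such as Redis or the database. Rails 7.2 and later also include a controller-level `rate_limit` macro.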

Overall, I am astonished how productive Windsurf has made me in the last two weeks. I'm not a good designer or frontend developer, and I'm a very rusty Rails dev. I got this project into production 5 to 10 times faster than it would've taken me manually, and the level of polish on the front end is much higher than I could've achieved on my own. Over and over again, I would ask for a change and be astonished at the speed and quality with which Windsurf implemented it. I just sat laughing as the computer wrote code.

The next thing I want to change is making the recipe generation process much more immediate and responsive. Right now, it takes about 20 seconds to generate a recipe and for a new user it feels like maybe the app just isn't doing anything.

Instead, I'm experimenting with using WebSockets to show a streaming response as the recipe is created. This gives the user immediate feedback that something is happening. It would also make editing the recipe really fun - you could ask it to "add nuts" to the recipe, and see as the recipe dynamically updates 2-3 seconds later. You could also say "Increase the quantities to cook for 8 people" or "Change from imperial to metric measurements".

I have a basic implementation working, but there are still some rough edges. I might actually go and read the code this time to figure out what it's doing!

I also want to add a full voice agent interface so that you don't have to touch the screen at all. Halfway through cooking a recipe, you might ask "I don't have cilantro - what could I use instead?" or say "Set a timer for 30 minutes". That would be my dream recipe app!

Tools like Windsurf or Cursor aren't yet as useful for non-technical users - they're extremely powerful and there are still too many ways to blow your own face off. I have a fairly good idea of the architecture that I want Windsurf to implement, and I could quickly spot when it was going off track or choosing a solution that was inappropriately complicated for the feature I was building. At the moment, a technical background is a massive advantage for using Windsurf. As a rusty developer, it made me feel like I had superpowers.

But I believe within a couple of months, when things like log tailing and automated testing and native version control get implemented, it will be an extremely powerful tool for even non-technical people to write production-quality apps. The AI will be able to make complex changes and then verify those changes are actually working. At the moment, it feels like it's making a best guess at what will work and then leaving the user to test it. Implementing better feedback loops will enable a truly agentic, recursive, self-healing development flow. It doesn't feel like it needs any breakthrough in technology to enable this. It's just about adding a few tool calls to the existing LLMs. My mind races as I try to think through the implications for professional software developers.

Meanwhile, the LLMs aren't going to sit still. They're getting better at a frightening rate. In the last week, I spoke to several very capable software engineers who are Y Combinator founders. About a quarter of them told me that 95% of their code is written by AI. In six or twelve months, I just don't think software engineering is going to exist in the same way as it does today. The cost of creating high-quality, custom software is quickly trending towards zero.

You can try the site yourself at recipeninja.ai

Here's a complete list of functionality. Of course, Windsurf just generated this list for me 🫠

RecipeNinja: Comprehensive Functionality Overview

Core Concept: the app appears to be a cooking assistant application that provides voice-guided recipe instructions, allowing users to cook hands-free while following step-by-step recipe guidance.

Backend (Rails API) Functionality

User Authentication & Authorization

Google OAuth integration for user authentication

User account management with secure authentication flows

Authorization system ensuring users can only access their own private recipes or public recipes

Recipe Management

Recipe Model Features:

Unique public IDs (format: "r_" + 14 random alphanumeric characters) for security

User ownership (user_id field with NOT NULL constraint)

Public/private visibility toggle (default: private)

Comprehensive recipe data storage (title, ingredients, steps, cooking time, etc.)

Image attachment capability using Active Storage with S3 storage in production
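The feature list says public IDs are "r_" plus 14 random alphanumeric characters. The sketch below generates and validates IDs in that format. It assumes the alphabet is A-Z, a-z and 0-9, which is consistent with the example ID `r_xlxG4bcTLs9jbM` further down but not confirmed. Python's `secrets` module stands in for whatever the Rails app actually uses, and the function names are hypothetical.

```python
import re
import secrets
import string

ALPHABET = string.ascii_letters + string.digits  # 62 symbols (assumed alphabet)
PUBLIC_ID = re.compile(r"r_[A-Za-z0-9]{14}")


def new_public_id(prefix: str = "r_", length: int = 14) -> str:
    """Prefix plus `length` characters chosen with a cryptographically secure RNG."""
    return prefix + "".join(secrets.choice(ALPHABET) for _ in range(length))


def is_public_id(value: str) -> bool:
    """True if `value` has the r_ + 14 alphanumeric characters format."""
    return PUBLIC_ID.fullmatch(value) is not None
```

Fourteen characters from a 62-symbol alphabet give about 83 bits of randomness (14 × log2 62). That makes IDs impractical to guess or enumerate, unlike sequential integer IDs. A unique index on the column is still the right backstop against the astronomically unlikely collision.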

Recipe Tagging System:

Many-to-many relationship between recipes and tags

Tag model with unique name attribute

RecipeTag join model for the relationship

Helper methods for adding/removing tags from recipes

Recipe API Endpoints:

CRUD operations for recipes

Pagination support with metadata (current_page, per_page, total_pages, total_count)

Default sorting by newest first (created_at DESC)

Filtering recipes by tags

Different serializers for list view (RecipeSummarySerializer) and detail view (RecipeSerializer)
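The sketch below shows how the listed pagination metadata can be computed, assuming newest-first ordering by `created_at` as stated. It works on an in-memory list for clarity. In the real API, the same thing would be `ORDER BY created_at DESC` with `LIMIT`/`OFFSET` in SQL. The default page size is a hypothetical value, and how the metadata is attached to the response is left open.

```python
import math
from typing import Any, Dict, List, Tuple

Recipe = Dict[str, Any]


def paginate(recipes: List[Recipe], page: int = 1, per_page: int = 20) -> Tuple[List[Recipe], Dict[str, int]]:
    """Newest first by `created_at`, then one page plus the metadata fields listed above."""
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be >= 1")
    ordered = sorted(recipes, key=lambda r: r["created_at"], reverse=True)
    total_count = len(ordered)
    start = (page - 1) * per_page
    meta = {
        "current_page": page,
        "per_page": per_page,
        "total_pages": math.ceil(total_count / per_page),
        "total_count": total_count,
    }
    return ordered[start:start + per_page], meta
```

For example, 45 recipes at 20 per page give `total_pages` of 3, and page 3 holds the five oldest recipes.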

Voice Generation

Voice Recording System:

VoiceRecording model linked to recipes

Integration with ElevenLabs API for text-to-speech conversion

Caching of voice recordings in S3 to reduce API calls

Unique identifiers combining recipe_id, step_id, and voice_id

Force regeneration option for refreshing recordings
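The caching behaviour described above (a key built from recipe_id, step_id, and voice_id, plus a force-regeneration option) can be sketched as a small memoizing wrapper. This Python version is hypothetical: the key format, the `VoiceRecordingCache` class, and the `synthesize` callable are assumptions standing in for the app's S3 storage and its text-to-speech API client, neither of which is shown in the post.

```python
from typing import Callable, Dict


def recording_key(recipe_id: str, step_id: int, voice_id: str) -> str:
    """Combine the three identifiers into one cache key (hypothetical format)."""
    return f"{recipe_id}/{step_id}/{voice_id}"


class VoiceRecordingCache:
    """Memoizes text-to-speech output; a dict stands in for S3 here."""

    def __init__(self, synthesize: Callable[[str, str], bytes]) -> None:
        self._synthesize = synthesize  # stand-in for the external TTS API call
        self._store: Dict[str, bytes] = {}
        self.api_calls = 0

    def fetch(
        self, recipe_id: str, step_id: int, voice_id: str, text: str, force: bool = False
    ) -> bytes:
        key = recording_key(recipe_id, step_id, voice_id)
        # Call the external API only on a cache miss or a forced regeneration.
        if force or key not in self._store:
            self._store[key] = self._synthesize(text, voice_id)
            self.api_calls += 1
        return self._store[key]


def fake_synthesize(text: str, voice_id: str) -> bytes:
    """Demo stand-in for a real text-to-speech request."""
    return f"{voice_id}:{text}".encode("utf-8")


if __name__ == "__main__":
    cache = VoiceRecordingCache(fake_synthesize)
    cache.fetch("r_xlxG4bcTLs9jbM", 1, "voice_a", "Preheat the oven.")
    cache.fetch("r_xlxG4bcTLs9jbM", 1, "voice_a", "Preheat the oven.")  # cache hit
    cache.fetch("r_xlxG4bcTLs9jbM", 1, "voice_a", "Preheat the oven.", force=True)
    print(cache.api_calls)  # 2
```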

Audio Processing:

Using streamio-ffmpeg gem for audio file analysis

Active Storage integration for audio file management

S3 storage for audio files in production

Recipe Import & Generation

RecipeImporter Service:

OpenAI integration for recipe generation

Conversion of text recipes into structured format

Parsing and normalization of recipe data

Import from photos functionality

Frontend (React) Functionality

User Interface Components

Recipe Selection & Browsing:

Recipe listing with pagination

Near-real-time updates via a 10-second polling mechanism

Tag filtering functionality

Recipe cards showing summary information (without images)

"View Details" and "Start Cooking" buttons for each recipe

Recipe Detail View:

Complete recipe information display

Recipe image display

Tag display with clickable tags

Option to start cooking from this view

Cooking Experience:

Step-by-step recipe navigation

Voice guidance for each step

Keyboard shortcuts for hands-free control:

Arrow keys for step navigation

Space for play/pause audio

Escape to return to recipe selection

URL-based step tracking (e.g., /recipe/r_xlxG4bcTLs9jbM/classic-lasagna/steps/1)
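The URL-based step tracking and arrow-key navigation above can be illustrated by parsing and rebuilding the example path. The frontend itself is React, so this Python version only sketches the logic; the regular expression, `StepLocation`, `navigate`, and the browser key names "ArrowRight"/"ArrowLeft" are assumptions, not code from the app.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical pattern derived from the example URL in the feature list.
STEP_PATH = re.compile(
    r"/recipe/(?P<public_id>r_[A-Za-z0-9]{14})/(?P<slug>[a-z0-9-]+)/steps/(?P<step>\d+)"
)


class StepLocation(NamedTuple):
    public_id: str
    slug: str
    step: int  # 1-based, as in the example URL


def parse_step_path(path: str) -> Optional[StepLocation]:
    """Return the recipe ID, slug, and step number, or None if the path doesn't match."""
    match = STEP_PATH.fullmatch(path)
    if match is None:
        return None
    return StepLocation(match["public_id"], match["slug"], int(match["step"]))


def build_step_path(loc: StepLocation) -> str:
    return f"/recipe/{loc.public_id}/{loc.slug}/steps/{loc.step}"


def navigate(loc: StepLocation, key: str, total_steps: int) -> StepLocation:
    """Move one step on ArrowRight/ArrowLeft, clamped to the recipe's range."""
    if key == "ArrowRight":
        return loc._replace(step=min(loc.step + 1, total_steps))
    if key == "ArrowLeft":
        return loc._replace(step=max(loc.step - 1, 1))
    return loc  # other keys leave the step unchanged


if __name__ == "__main__":
    loc = parse_step_path("/recipe/r_xlxG4bcTLs9jbM/classic-lasagna/steps/1")
    print(build_step_path(navigate(loc, "ArrowRight", total_steps=8)))
    # /recipe/r_xlxG4bcTLs9jbM/classic-lasagna/steps/2
```

Keeping the step in the URL means a page refresh or a shared link lands on the same step.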

State Management & Data Flow

Recipe Service:

API integration for fetching recipes

Support for pagination parameters

Tag-based filtering

Caching mechanisms for recipe data

Image URL handling for detailed views

Authentication Flow:

Google OAuth integration using environment variables

User session management

Authorization header management for API requests

Progressive Web App Features

PWA capabilities for installation on devices

Responsive design for various screen sizes

Favicon and app icon support

Deployment Architecture

Two-App Structure:

cook-voice-api: Rails backend on Heroku

cook-voice-wizard: React frontend/PWA on Heroku

Backend Infrastructure:

Ruby 3.2.2

PostgreSQL database (Heroku PostgreSQL addon)

Amazon S3 for file storage

Environment variables for configuration

Frontend Infrastructure:

React application

Environment variable configuration

Static buildpack on Heroku

SPA routing configuration

Security Measures:

HTTPS enforcement

Rails credentials system

Environment variables for sensitive information

Public ID system to mask database IDs

This comprehensive overview covers the major functionality of the Cook Voice application based on the available information. The application appears to be a sophisticated cooking assistant that combines recipe management with voice guidance to create a hands-free cooking experience.

New regulations on the screening of non-EU nationals at the bloc’s external borders, which come into force this week, could have major implications for migrants and asylum seekers’ privacy rights, campaigners warn.

Ozan Mirkan Balpetek, Advocacy and Communications Coordinator for Legal Centre Lesvos, based on the Greek island of Lesvos on the so-called Balkan Route for migrants seeking to reach Western Europe, says the new pact “will significantly expand the Eurodac database [European Asylum Dactyloscopy Database], creating overlaps with other databases, such as international criminal records accessible to police forces”.

“Specific provisions of the pact directly undermine GDPR regulations that protect personal data from being improperly processed,” Balpetek told BIRN. “The pact only expands existing rights violations, including data breaches. Consequently, information shared by asylum seekers can be used against them during the asylum process, potentially leading to further criminalization of racialized communities,” he added.

The European Council confirmed the deal in May, and it should start being implemented in June 2026.

This legislation sets out new procedures for managing the arrival of irregular migrants, processing asylum applications, determining the EU country responsible for these applications, and devising strategies to handle migration crises.

The pact promises a “robust” screening at the borders to differentiate between those people deemed in need of international protection and those who are not.

The screening and border procedures will mandate extensive data collection and automatic exchanges, resulting in a regime of mass surveillance of migrants. Reforms to the Eurodac Regulation will mandate the systematic collection of migrants’ biometric data, now including facial images, which will be retained in databases for up to 10 years. The reform also lowers the minimum age for storing data in the system to six.

Amnesty International in Greece said the new regulation “will set back European asylum law for decades to come”.

“These proposals come hand in hand with mounting efforts to shift responsibility for refugee protection and border control to countries outside of the EU – such as recent deals with Tunisia, Egypt, and Mauritania – or attempts to externalize the processing of asylum claims to Albania,” the human rights organisation told BIRN.

“These practices risk trapping people in states where their human rights will be in danger, render the EU complicit in the abuses that may follow, and compromises Europe’s ability to uphold human rights beyond the bloc,” it added.

NGOs working with people in need have been warning for months that the pact will systematically violate fundamental principles, resulting in a proliferation of rights violations in Europe.

Jesuit Refugee Services, including its arm in Croatia, said in April in a joint statement that it “cannot support a system that will enable the systematic detention of thousands of people, including children, at the EU’s external borders.

“The proposed legislation will exponentially increase human suffering while offering no real solutions to current system deficiencies,” JRS said.

Despite criticism, the European Parliament adopted the regulation in April.

Individuals who do not meet the entry requirements will be registered and undergo identification, security, and health checks. These checks are to be completed within seven days at the EU’s external borders and within three days for those apprehended within the EU.

Under the new solidarity mechanism, at least 30,000 asylum applicants are to be relocated across the EU each year; member states can either accept relocated applicants or contribute at least 20,000 euros per asylum applicant to a joint EU fund.

After screening, individuals will be swiftly directed into one of three procedures: Border Procedures, Asylum Procedures, or Returns Procedures.

every now and again I start to think that maybe Windows was right to recommend a whole key/value database for system and application configuration, but then I just migrated my weechat config between servers with `scp -r ~/.weechat awful.cloud:.weechat` and it worked with almost zero apparent issues, and I'm like, right, they were wrong and are actively hurting their users.

I'm still convinced that the registry is part of why Windows gets really slow cold boot times even on modern hardware.

Cloud Agnostic: Achieving Flexibility and Independence in Cloud Management

As businesses increasingly migrate to the cloud, they face a critical decision: which cloud provider to choose? While AWS, Microsoft Azure, and Google Cloud offer powerful platforms, the concept of "cloud agnostic" is gaining traction. Cloud agnosticism refers to a strategy where businesses avoid vendor lock-in by designing applications and infrastructure that work across multiple cloud providers. This approach provides flexibility, independence, and resilience, allowing organizations to adapt to changing needs and avoid reliance on a single provider.

What Does It Mean to Be Cloud Agnostic?

Being cloud agnostic means creating and managing systems, applications, and services that can run on any cloud platform. Instead of committing to a single cloud provider, businesses design their architecture to function seamlessly across multiple platforms. This flexibility is achieved by using open standards, containerization technologies like Docker, and orchestration tools such as Kubernetes.

Key features of a cloud agnostic approach include:

Interoperability: Applications must be able to operate across different cloud environments.

Portability: The ability to migrate workloads between different providers without significant reconfiguration.

Standardization: Using common frameworks, APIs, and languages that work universally across platforms.

Benefits of Cloud Agnostic Strategies

Avoiding Vendor Lock-In: The primary benefit of being cloud agnostic is avoiding vendor lock-in. Once a business builds its entire infrastructure around a single cloud provider, it can be challenging to switch or expand to other platforms. This could lead to increased costs and limited innovation. With a cloud agnostic strategy, businesses can choose the best services from multiple providers, optimizing both performance and costs.

Cost Optimization: Cloud agnosticism allows companies to choose the most cost-effective solutions across providers. As cloud pricing models are complex and vary by region and usage, a cloud agnostic system enables businesses to leverage competitive pricing and minimize expenses by shifting workloads to different providers when necessary.

Greater Resilience and Uptime: By operating across multiple cloud platforms, organizations reduce the risk of downtime. If one provider experiences an outage, the business can shift workloads to another platform, ensuring continuous service availability. This redundancy builds resilience, ensuring high availability in critical systems.

Flexibility and Scalability: A cloud agnostic approach gives companies the freedom to adjust resources based on current business needs. This means scaling applications horizontally or vertically across different providers without being restricted by the limits or offerings of a single cloud vendor.

Global Reach: Different cloud providers have varying levels of presence across geographic regions. With a cloud agnostic approach, businesses can leverage the strengths of various providers in different areas, ensuring better latency, performance, and compliance with local regulations.
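At the application level, the failover idea under "Greater Resilience and Uptime" reduces to trying providers in priority order. A minimal Python sketch, assuming each provider is wrapped in a callable that raises a hypothetical `ProviderUnavailable` error when its cloud is down; the provider names and demo functions are placeholders. Real failover also depends on DNS or load-balancer routing and on data being replicated to the standby provider, which this does not show.

```python
from typing import Callable, List, Sequence, Tuple


class ProviderUnavailable(Exception):
    """Raised by a provider call when that cloud is unreachable (hypothetical)."""


def run_with_failover(
    providers: Sequence[Tuple[str, Callable[[], str]]]
) -> Tuple[str, str]:
    """Try each provider in order; return (provider_name, result) from the first success."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call()
        except ProviderUnavailable as exc:
            errors.append(f"{name}: {exc}")  # remember why this provider was skipped
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def unreachable_provider() -> str:
    """Demo stand-in for a provider in the middle of an outage."""
    raise ProviderUnavailable("simulated outage")


def healthy_provider() -> str:
    """Demo stand-in for a provider that responds normally."""
    return "served"


if __name__ == "__main__":
    print(run_with_failover([("provider-a", unreachable_provider),
                             ("provider-b", healthy_provider)]))
    # ('provider-b', 'served')
```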

Challenges of Cloud Agnosticism

Despite the advantages, adopting a cloud agnostic approach comes with its own set of challenges:

Increased Complexity: Managing and orchestrating services across multiple cloud providers is more complex than relying on a single vendor. Businesses need robust management tools, monitoring systems, and teams with expertise in multiple cloud environments to ensure smooth operations.

Higher Initial Costs: The upfront costs of designing a cloud agnostic architecture can be higher than those of a single-provider system. Developing portable applications and investing in technologies like Kubernetes or Terraform requires significant time and resources.

Limited Use of Provider-Specific Services: Cloud providers often offer unique, advanced services (such as machine learning tools, proprietary databases, and analytics platforms) that may not be easily portable to other clouds. Being cloud agnostic could mean missing out on some of these specialized services, which may limit innovation in certain areas.

Tools and Technologies for Cloud Agnostic Strategies

Several tools and technologies make cloud agnosticism more accessible for businesses:

Containerization: Docker and similar containerization tools allow businesses to encapsulate applications in lightweight, portable containers that run consistently across various environments.

Orchestration: Kubernetes is a leading tool for orchestrating containers across multiple cloud platforms. It ensures scalability, load balancing, and failover capabilities, regardless of the underlying cloud infrastructure.

Infrastructure as Code (IaC): Tools like Terraform and Ansible enable businesses to define cloud infrastructure using code. This makes it easier to manage, replicate, and migrate infrastructure across different providers.

APIs and Abstraction Layers: Using APIs and abstraction layers helps standardize interactions between applications and different cloud platforms, enabling smooth interoperability.
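The "APIs and Abstraction Layers" point above can be made concrete with a small interface that application code depends on, plus one adapter per provider. In this hypothetical Python sketch, `ObjectStore`, `save_report`, and both backends are illustrative names; the in-memory and local-disk classes stand in for adapters that would wrap real SDKs such as boto3 for Amazon S3 or the Google Cloud Storage client library.

```python
import tempfile
from abc import ABC, abstractmethod
from pathlib import Path
from typing import Dict


class ObjectStore(ABC):
    """Provider-neutral storage interface that application code depends on."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Stand-in backend; a real adapter would wrap one provider's SDK."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class LocalDiskStore(ObjectStore):
    """Second stand-in backend that keeps objects as files under a root directory."""

    def __init__(self, root: Path) -> None:
        self._root = Path(root)

    def put(self, key: str, data: bytes) -> None:
        path = self._root / key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self._root / key).read_bytes()


def save_report(store: ObjectStore, name: str, body: str) -> None:
    """Application code: works unchanged with any ObjectStore backend."""
    store.put(f"reports/{name}.txt", body.encode("utf-8"))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for store in (InMemoryStore(), LocalDiskStore(Path(tmp))):
            save_report(store, "q1", "hello")
            print(type(store).__name__, store.get("reports/q1.txt"))
```

Swapping providers then means writing one new adapter rather than touching every call site, which is the portability the section describes.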

When Should You Consider a Cloud Agnostic Approach?

A cloud agnostic approach is not always necessary for every business. Here are a few scenarios where adopting cloud agnosticism makes sense:

Businesses operating in regulated industries that need to maintain compliance across multiple regions.

Companies that require high availability and fault tolerance across different cloud platforms for mission-critical applications.

Organizations with global operations that need to optimize performance and cost across multiple cloud regions.

Businesses that aim to avoid long-term vendor lock-in and maintain flexibility for future growth and scaling needs.

Conclusion

Adopting a cloud agnostic strategy offers businesses unparalleled flexibility, independence, and resilience in cloud management. While the approach comes with challenges such as increased complexity and higher upfront costs, the long-term benefits of avoiding vendor lock-in, optimizing costs, and enhancing scalability are significant. By leveraging the right tools and technologies, businesses can achieve a truly cloud-agnostic architecture that supports innovation and growth in a competitive landscape.

Embrace the cloud agnostic approach to future-proof your business operations and stay ahead in the ever-evolving digital world.

Comparing Laravel And WordPress: Which Platform Reigns Supreme For Your Projects? - Sohojware

Choosing the right platform for your web project can be a daunting task. Two popular options, Laravel and WordPress, cater to distinct needs and offer unique advantages. This in-depth comparison by Sohojware, a leading web development company, will help you decipher which platform reigns supreme for your specific project requirements.

Understanding Laravel

Laravel is a powerful, open-source PHP web framework designed for the rapid development of complex web applications. It enforces a clean and modular architecture, promoting code reusability and maintainability. Laravel offers a rich ecosystem of pre-built functionalities and tools, enabling developers to streamline the development process.

Here's what makes Laravel stand out:

MVC Architecture: Laravel adheres to the Model-View-Controller (MVC) architectural pattern, fostering a well-organized and scalable project structure.

Object-Oriented Programming: By leveraging object-oriented programming (OOP) principles, Laravel promotes code clarity and maintainability.

Built-in Features: Laravel boasts a plethora of built-in features like authentication, authorization, caching, routing, and more, expediting the development process.

Artisan CLI: Artisan, Laravel's powerful command-line interface (CLI), streamlines repetitive tasks like code generation, database migrations, and unit testing.

Security: Laravel prioritizes security by incorporating features like CSRF protection and secure password hashing, safeguarding your web applications.

However, Laravel's complexity might pose a challenge for beginners due to its steeper learning curve compared to WordPress.

Understanding WordPress

WordPress is a free and open-source content management system (CMS) that powers a large share of the web. It offers a user-friendly interface and a vast library of plugins and themes, making it ideal for creating websites and blogs without extensive coding knowledge.

Here's why WordPress is a popular choice:

Ease of Use: WordPress boasts an intuitive interface, allowing users to create and manage content effortlessly, even with minimal technical expertise.

Flexibility: A vast repository of themes and plugins extends WordPress's functionality, enabling customization to suit diverse website needs.

SEO Friendliness: WordPress is widely regarded as SEO-friendly, with features that can help improve your website's search ranking.

Large Community: WordPress enjoys a massive and active community, providing abundant resources, tutorials, and support.

While user-friendly, WordPress might struggle to handle complex functionalities or highly customized web applications.

Choosing Between Laravel and WordPress

The optimal platform hinges on your project's specific requirements. Here's a breakdown to guide your decision:

Laravel is Ideal For:

Complex web applications that require a high degree of customization.

Projects demanding powerful security features.

Applications with a large user base or intricate data structures.

Websites that require a high level of performance and scalability.

WordPress is Ideal For:

Simple websites and blogs.

Projects with a primary focus on content management.

E-commerce stores with basic product management needs (using WooCommerce plugin).

Websites requiring frequent content updates by non-technical users.

Sohojware, a well-versed web development company in the USA, can assist you in making an informed decision. Our team of Laravel and WordPress experts will assess your project's needs and recommend the most suitable platform to ensure your web project's success.

In conclusion, both Laravel and WordPress are powerful platforms, each catering to distinct project needs. By understanding their strengths and limitations, you can make an informed decision that empowers your web project's success. Sohojware, a leading web development company in the USA, possesses the expertise to guide you through the selection process and deliver exceptional results, regardless of the platform you choose. Let's leverage our experience to bring your web vision to life.

FAQs about Laravel and WordPress Development by Sohojware

1. Which platform is more cost-effective, Laravel or WordPress?

While WordPress itself is free, ongoing maintenance and customization might require development expertise. Laravel projects typically involve developer costs, but these can be offset by the long-term benefits of a custom-built, scalable application. Sohojware can provide cost-effective solutions for both Laravel and WordPress development.

2. Does Sohojware offer support after project completion?

Sohojware offers comprehensive post-development support for both Laravel and WordPress projects. Our maintenance and support plans ensure your website's continued functionality, security, and performance.

3. Can I migrate my existing website from one platform to another?

Website migration is feasible, but the complexity depends on the website's size and architecture. Sohojware's experienced developers can assess the migration feasibility and execute the process seamlessly.

4. How can Sohojware help me with Laravel or WordPress development?

Sohojware offers a comprehensive range of Laravel and WordPress development services, encompassing custom development, theme and plugin creation, integration with third-party applications, and ongoing maintenance.

5. Where can I find more information about Sohojware's Laravel and WordPress development services?

You can find more information about Sohojware's Laravel and WordPress development services by visiting our website at https://sohojware.com/ or contacting our sales team directly. We'd happily discuss your project requirements and recommend the most suitable platform to achieve your goals.

Exploring Essential Laravel Development Tools for Building Powerful Web Applications

Laravel has emerged as one of the most popular PHP frameworks, providing developers with a robust and efficient platform for building web applications. Central to the success of Laravel projects are the development tools that streamline the development process, enhance productivity, and ensure code quality. In this article, we will delve into the best Laravel development tools that every developer should be acquainted with.

1. Composer: Composer is a dependency manager for PHP that lets you declare the libraries your project depends on and manages them for you. Laravel itself relies heavily on Composer for package management, making it an essential tool for Laravel developers. With Composer, you can easily add, remove, or update packages, keeping your Laravel project up to date with the latest dependencies.

2. Artisan: Artisan is the command-line interface included with Laravel, providing many helpful commands for scaffolding, running migrations, generating controllers and models, and much more. Laravel developers use Artisan to automate repetitive tasks and streamline development workflows, increasing efficiency and productivity.

3. Laravel Debugbar: Debugging is an essential part of software development, and Laravel Debugbar simplifies it by providing detailed insights into the application's performance, queries, views, and more. It is a handy tool for identifying and resolving problems during development, ensuring your Laravel application runs smoothly.

4. Laravel Telescope: Like Laravel Debugbar, Laravel Telescope is a debugging assistant for Laravel applications, offering real-time insights into requests, exceptions, database queries, and more. With its intuitive dashboard, developers can monitor the application's behavior, identify performance bottlenecks, and optimize accordingly.

5. Laravel Mix: Laravel Mix provides a fluent API for defining webpack build steps for your Laravel application. It simplifies asset compilation and preprocessing tasks such as compiling Sass or Less files, concatenating and minifying JavaScript files, and handling versioning. Laravel Mix significantly streamlines frontend development, letting developers focus on building great user experiences.

6. Laravel Horizon: Laravel Horizon is a dashboard and configuration system for Laravel's Redis queues, offering insights into job throughput, runtime metrics, and more. It lets developers monitor and manage queued jobs efficiently, ensuring optimal performance and scalability for Laravel applications that rely on background processing.

7. Laravel Envoyer: Laravel Envoyer is a deployment tool designed for Laravel applications, enabling zero-downtime deployments. It automates the deployment process, from pushing code changes to multiple servers to executing deployment scripts, minimizing the risk of errors and ensuring smooth deployments.

8. Laravel Dusk: Laravel Dusk is an end-to-end browser testing tool for Laravel applications, built on top of ChromeDriver and the php-webdriver library. It lets developers write expressive, reliable browser tests, ensuring that critical user interactions and workflows behave as expected across different browsers and environments.

9. Laravel Valet: Laravel Valet provides a lightweight development environment for Laravel applications on macOS, integrating seamlessly with tools like MySQL, NGINX, and PHP. It simplifies setup, letting developers focus on writing code instead of configuring their development environment.

In conclusion, mastering the essential Laravel development tools mentioned above is important for building robust, efficient, and scalable web applications with Laravel. Whether it's managing dependencies, debugging issues, optimizing performance, or streamlining deployment workflows, these tools empower Laravel developers to deliver outstanding solutions that meet the demands of modern web development. Embracing these tools will certainly improve your Laravel development experience and accelerate your journey toward becoming a skilled Laravel developer.

Laravel development services offer a myriad of benefits for businesses seeking efficient and scalable web solutions. With its robust features, Laravel streamlines development processes, enhancing productivity and reducing time-to-market. From built-in security features to seamless database migrations, Laravel ensures smooth performance and maintenance. Its modular structure allows for easy customization, making it a preferred choice for creating dynamic and high-performance web applications.

The Debate of the Decade: Node.js or Ruby on Rails for Your Backend?

New, cutting-edge web development frameworks and tools have become available in recent years. While this variety is great for developers and company owners alike, it comes with drawbacks: it creates a lot of confusion and slows down development at a time when quick, effective answers are essential. This is why debates about whether Ruby on Rails or Node.js is superior continue to rage, and which one suits which kind of project remains a hotly contested question. Nivida Web Solutions is a top-tier web development company in Vadodara. Nivida Web Solutions is the place to go if you want to make a beautiful website that gets people talking.

Identifying the optimal option for your work is challenging, so this piece breaks things down for you by comparing and contrasting two widely used web development technologies: Ruby on Rails (RoR), a web framework, and Node.js, a JavaScript runtime. Let's start with a quick overview of each.

Node.js:

Node.js makes it possible to run JavaScript, traditionally a client-side language, on the server. It is a server-side JavaScript runtime built on Chrome's V8 engine, which compiles JavaScript into machine code that the hardware can execute directly. This is a large part of why Node.js performs efficiently under normal operating conditions.

The Node.js ecosystem stands out for the many open-source libraries available through npm (the Node Package Manager). Node.js's built-in modules, such as those for the file system, operating-system information, and cryptography, make it suitable for tasks ranging from managing computer resources to handling security-sensitive data. Are you prepared to make your mark in the online world? If you want to improve your online reputation, team up with Nivida Web Solutions, the best web development company in Gujarat.

Key Features:

· Cross-Platform Interoperability

· V8 Engine

· Microservice Development and Swift Deployment

· Easy to Scale

· Dependable Technology

Ruby on Rails:

Ruby on Rails (RoR) is a back-end framework commonly used for web applications. Developers appreciate Rails because it provides a solid foundation on which the other elements of a website can be built. A custom-made website can greatly enhance your visibility on the web. If you're looking for a trustworthy web development company in India, look no further than Nivida Web Solutions.

Ruby on Rails' cutting-edge features, such as automatic table generation, database migrations, and view scaffolding, are a big reason for the framework's widespread adoption.

Key Features:

· MVC Structure

· Active Record

· Convention Over Configuration (CoC)

· Automatic Deployment

· The Boom of Mobile Apps

· Sharing Data in Databases

Node.js vs. RoR:

· Libraries:

Ruby on Rails libraries are distributed as gems through RubyGems, while Node.js uses npm (Node Package Manager). Both provide ready-made libraries and packages that help programmers avoid duplicating their work, and both make packages straightforward to install with strict version control.

· Performance:

Node.js is widely praised for its speed. Because it runs asynchronous, non-blocking code and is powered by Google's V8 engine, it is a go-to choice for I/O-intensive projects. Some comparisons claim Ruby on Rails is as much as 20 times less efficient than Node.js, though such figures depend heavily on the workload and benchmark used.

· Scalability:

Node.js is often considered easier to scale than Ruby on Rails thanks to its built-in cluster module, which lets a single application fork multiple worker processes (typically one per CPU core) that share the same server port.

· Architecture:

The Node.js ecosystem has a wealth of useful components, but JavaScript was not originally designed for backend work, and critics argue this limits it when it comes to modern architectural approaches. Ruby on Rails, by contrast, is a full framework that aims to streamline building out a website's infrastructure by eliminating common setup problems.

· The learning curve:

Ruby has a low barrier to entry because it is an easy language to learn. The learning curve for Node.js is considered even gentler: JavaScript veterans will have the easiest time, but developers familiar with other languages should have no trouble either.

Final Thoughts:

Both Node.js and RoR have been tried and tested in real-world scenarios. Ruby on Rails is great for fast-paced development teams, whereas Node.js excels at building real-time web apps and single-page applications.

If you are in need of a back-end developer, Nivida Web Solutions, a unique web development agency in Gujarat, can assist you in creating a product that will both meet and exceed the needs of your target audience.

Top 10 Laravel Development Companies in the USA in 2024

Laravel is a widely-used open-source PHP web framework designed for creating web applications using the model-view-controller (MVC) architectural pattern. It offers developers a structured and expressive syntax, as well as a variety of built-in features and tools to enhance the efficiency and enjoyment of the development process.

Key components of Laravel include:

1. Eloquent ORM (Object-Relational Mapping): Laravel simplifies database interactions by enabling developers to work with database records as objects through a powerful ORM.

2. Routing: Laravel provides a straightforward and expressive method for defining application routes, simplifying the handling of incoming HTTP requests.

3. Middleware: This feature allows for the filtering of HTTP requests entering the application, making it useful for tasks like authentication, logging, and CSRF protection.

4. Artisan CLI (Command Line Interface): Laravel comes with Artisan, a robust command-line tool that offers commands for tasks such as database migrations, seeding, and generating boilerplate code.

5. Database Migrations and Seeding: Laravel's migration system enables version control of the database schema and easy sharing of changes across the team. Seeding allows for populating the database with test data.

6. Queue Management: Laravel's queue system permits deferred or background processing of tasks, which can enhance application performance and responsiveness.

7. Task Scheduling: Laravel provides a convenient way to define scheduled tasks within the application.
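The migration system described in item 5 rests on a simple idea: each schema change is a named, ordered step, and the database records which steps have already run. The Python/SQLite sketch below only illustrates that idea; it is not Laravel's implementation, whose migrations are PHP classes run through Artisan and whose bookkeeping table has a different schema. The names imitate Laravel's timestamped migration file naming, and `migrate` and `MIGRATIONS` are hypothetical.

```python
import sqlite3
from typing import Callable, List, Tuple

# Each migration: (ordered, timestamped name, function applying one schema change).
Migration = Tuple[str, Callable[[sqlite3.Connection], object]]

MIGRATIONS: List[Migration] = [
    (
        "2024_01_01_000000_create_users_table",
        lambda db: db.execute(
            "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE)"
        ),
    ),
    (
        "2024_01_02_000000_add_name_to_users_table",
        lambda db: db.execute("ALTER TABLE users ADD COLUMN name TEXT"),
    ),
]


def migrate(db: sqlite3.Connection, migrations: List[Migration]) -> List[str]:
    """Apply pending migrations in order and return the names that ran."""
    db.execute("CREATE TABLE IF NOT EXISTS migrations (name TEXT PRIMARY KEY)")
    applied = {row[0] for row in db.execute("SELECT name FROM migrations")}
    ran: List[str] = []
    for name, up in migrations:
        if name in applied:
            continue  # already applied to this database: skip it
        up(db)
        db.execute("INSERT INTO migrations (name) VALUES (?)", (name,))
        db.commit()  # persist the bookkeeping row after each migration
        ran.append(name)
    return ran


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(migrate(conn, MIGRATIONS))  # both names on a fresh database
    print(migrate(conn, MIGRATIONS))  # [] on the second run
```

Because a second run is a no-op, every teammate can apply the same shared list of changes to their own database and end up with the same schema.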

What are the reasons to opt for Laravel Web Development?

Laravel makes web development easier, developers more productive, and web applications more secure and scalable, making it one of the most important frameworks in web development.

There are multiple compelling reasons to choose Laravel for web development:

1. Clean and Organized Code: Laravel provides a sleek and expressive syntax, making writing and maintaining code simple. Its well-structured architecture follows the MVC pattern, enhancing code readability and maintainability.

2. Extensive Feature Set: Laravel comes with a wide range of built-in features and tools, including authentication, routing, caching, and session management.

3. Rapid Development: With built-in templates, ORM (Object-Relational Mapping), and powerful CLI (Command Line Interface) tools, Laravel empowers developers to build web applications quickly and efficiently.

4. Robust Security Measures: Laravel incorporates various security features such as encryption, CSRF (Cross-Site Request Forgery) protection, authentication, and authorization mechanisms.

5. Thriving Community and Ecosystem: Laravel boasts a large and active community of developers who provide extensive documentation, tutorials, and forums for support.

6. Database Management: Laravel's migration system allows developers to manage database schemas effortlessly, enabling version control and easy sharing of database changes across teams. Seeders facilitate the seeding of databases with test data, streamlining the testing and development process.

7. Comprehensive Testing Support: Laravel offers robust testing support, including integration with PHPUnit for writing unit and feature tests. This makes it easier to test applications thoroughly, reducing the risk of bugs and issues in production.

8. Scalability and Performance: Laravel provides features that support scaling, such as multiple database connections with read/write splitting, queue management, and caching. These help applications handle increased traffic and scale effectively.
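Point 6 above describes migrations as version control for the database schema. Laravel keeps a table (named `migrations` by default) that stores each applied migration's name and a batch number, so `php artisan migrate` runs only the migrations that have not been applied yet. The Python sketch below, using the standard-library `sqlite3` module, imitates that bookkeeping under simplifying assumptions: the `MIGRATIONS` list, the raw SQL strings, and the `migrate` function are hypothetical stand-ins, not Laravel's implementation, which defines schema changes in PHP migration classes.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical migrations, listed in the order they should run.
# The timestamp-style names only mimic Laravel's migration file naming.
MIGRATIONS: List[Tuple[str, str]] = [
    ("2024_01_01_000000_create_users_table",
     "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    ("2024_01_02_000000_add_email_to_users_table",
     "ALTER TABLE users ADD COLUMN email TEXT"),
]


def migrate(conn: sqlite3.Connection, migrations: List[Tuple[str, str]]) -> List[str]:
    """Run every migration not yet recorded in the bookkeeping table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS migrations "
        "(migration TEXT PRIMARY KEY, batch INTEGER NOT NULL)"
    )
    applied = {row[0] for row in conn.execute("SELECT migration FROM migrations")}
    # Each run that applies something gets the next batch number.
    row = conn.execute("SELECT COALESCE(MAX(batch), 0) FROM migrations").fetchone()
    batch = row[0] + 1
    ran: List[str] = []
    for name, sql in migrations:
        if name in applied:
            continue  # already applied to this database, so skip it
        with conn:  # commit after each migration so progress is kept
            conn.execute(sql)
            conn.execute(
                "INSERT INTO migrations (migration, batch) VALUES (?, ?)", (name, batch)
            )
        ran.append(name)
    return ran


conn = sqlite3.connect(":memory:")
print(migrate(conn, MIGRATIONS))  # names of both migrations (first run)
print(migrate(conn, MIGRATIONS))  # []
```

The second run applies nothing. That idempotence is what lets a team share the same migration files and replay them safely on every developer's database.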

Top 10 Laravel Development Companies in the USA in 2024

Laravel is widely used by leading web development companies. It stands out among other web application development frameworks for its advanced features and development tools, which speed up web development. This article lists the top 10 Laravel development companies in 2024 to help you choose a suitable Laravel development company in the USA for your project.

IBR Infotech

IBR Infotech excels in providing high-quality Laravel web development services through its team of skilled Laravel developers. Their dedicated Laravel development team can help enhance your online visibility by turning your ideas into reality accurately and effectively, customising solutions to your business requirements. As a well-known Laravel web development company, IBR Infotech provides bespoke Laravel solutions to customers worldwide, including in the United States, United Kingdom, Europe, and Australia, with prompt delivery and competitive pricing.

Additional Information:

GoodFirms rating: 5.0

Avg. hourly rate: $25 - $49/hr

No. of employees: 10 - 49

Founded: 2014

Verve Systems

Elevate your enterprise with Verve Systems' Laravel development expertise. They craft scalable, user-centric web applications using the powerful Laravel framework. Their solutions enhance consumer experience through intuitive interfaces and ensure security and performance for your business.

Additional Information:

GoodFirms rating: 5.0

Avg. hourly rate: $25/hr

No. of employees: 50 - 249

Founded: 2009

KrishaWeb

KrishaWeb is a world-class Laravel development company that offers tailor-made web solutions to its clients. Whether you have a website concept, want an AI-integrated application, or need a fully fledged enterprise Laravel application, they can help you.

Additional Information:

GoodFirms rating: 5.0

Avg. hourly rate: $50 - $99/hr

No. of employees: 50 - 249

Founded: 2008

Bacancy

Bacancy is a top-rated Laravel development company in India, the USA, Canada, and Australia. They follow an Agile SDLC methodology to build enterprise-grade solutions with the Laravel framework, using Ajax-enabled widgets, the Model-View-Controller pattern, and built-in tools to create robust, reliable, and scalable web solutions.

Additional Information:

GoodFirms rating: 4.8

Avg. hourly rate: $25 - $49/hr

No. of employees: 250 - 999

Founded: 2011

Elsner

Elsner Technologies is a Laravel development company with deep expertise in Laravel, one of the most popular PHP frameworks available today. With their Laravel web development services, you can expect professional and highly imaginative web and mobile applications.

Additional Information:

GoodFirms rating: 5.0

Avg. hourly rate: < $25/hr

No. of employees: 250 - 999

Founded: 2006

Logicspice

Logicspice is an expert, professional Laravel web development service provider, catering to enterprises of diverse sizes and industries. It builds on Laravel, an open-source PHP framework known for speeding up the creation of secure, scalable, and feature-rich web applications.

Additional Information:

GoodFirms rating: 5.0

Avg. hourly rate: < $25/hr

No. of employees: 50 - 249

Founded: 2006

Sapphire Software Solutions

Sapphire Software Solutions, a leading Laravel development company in the USA, specialises in customised Laravel development and enterprise solutions. With a reputation for excellence, they deliver top-notch services tailored to your unique business needs.

Additional Information:

GoodFirms rating: 5.0

Avg. hourly rate: N/A

No. of employees: 50 - 249

Founded: 2002

iGex Solutions

iGex Solutions offers Laravel development services backed by 14+ years of industry experience. The company has more than 10 expert Laravel developers, reports over 100 satisfied clients, and promotes 100% client satisfaction at an affordable Laravel development cost.

Additional Information:

GoodFirms rating: 4.7

Avg. hourly rate: < $25/hr

No. of employees: 10 - 49

Founded: 2009

Hidden Brains

Hidden Brains is a leading Laravel web development company that builds high-performance Laravel applications by taking advantage of the framework's features. As a reputed Laravel application development company, they aim to deliver web applications that accomplish your goals and help you stay ahead of the competition.

Additional Information:

GoodFirms rating: 4.9

Avg. hourly rate: < $25/hr

No. of employees: 250 - 999

Founded: 2003

Matellio

Matellio offers a wide range of custom Laravel web development services to meet the unique needs of its global clientele. Its expert Laravel developers have extensive experience creating robust, reliable, and feature-rich applications.

Additional Information:

GoodFirms rating: 4.8

Avg. hourly rate: $50 - $99/hr

No. of employees: 50 - 249

Founded: 2014

What advantages does Laravel offer for your web application development?

Laravel, a popular PHP framework, offers several advantages for web application development:

Elegant Syntax

Modular Packaging

MVC Architecture Support

Database Migration System

Blade Templating Engine

Authentication and Authorization

Artisan Console

Testing Support

Community and Documentation

Conclusion:

I hope you found the information provided in the article to be enlightening and that it offered valuable insights into the top Laravel development companies.

These reputable Laravel development companies have a proven track record of creating customised solutions for various sectors, meeting client requirements with precision.

Over time, these Laravel development companies have successfully completed numerous projects and are well equipped to help advance your business.

Before finalising your choice of a Laravel web development partner, it is essential to request a detailed cost estimate and carefully examine their portfolio of past work.



Charting the Course to SAP HANA Cloud

The push to move SAP HANA to the cloud stems from the need for agility and responsiveness in a dynamic business climate. The cloud promises lower infrastructure costs, robust data analytics, and the flexibility to respond quickly to changing needs. However, for many companies, moving from on-premises SAP HANA to the cloud means navigating concerns about data security, performance, and potentially giving up control of business-critical ERP systems.

SAP HANA Enterprise Cloud: A Tailored Offering

In light of these challenges, SAP introduced SAP HANA Enterprise Cloud (HEC), a private cloud service designed specifically for mission-critical workloads. SAP promotes HEC as making no compromises on performance, integration, security, failover, or disaster recovery. It emphasizes versatility, strong customer support, and end-to-end coverage, from strategic planning to application management. The offering is intended to deliver the cloud’s agility and innovation under SAP’s direct guidance and expertise.

Actual Delivery of HANA Enterprise Cloud

Despite SAP’s messaging, HEC is delivered by a consortium of third-party providers, including HPE, IBM, CenturyLink, Dimension Data, and Virtustream. SAP works with these partners, who bid on projects that are often awarded to the lowest bidder, to draw on their specialized capabilities while upholding SAP’s standards.

Weighing the Pros and Cons

Partnering with competent vendors ensures clients receive secure, best-practice SAP hosting and support. HEC’s comprehensive solution integrates licensing, infrastructure, and support with touted scalability and integration.

However, several customer challenges emerge. Firstly, relying on SAP-branded cloud hosting and SAP managed services may erode the anticipated cost savings. Secondly, the lack of direct engagement with third-party providers raises concerns about entrusting critical ERP operations to unseen partners. This arrangement reduces visibility into, and control over, SAP HANA migration and management.

Furthermore, the absence of a direct relationship between SAP HANA users and cloud suppliers may complicate support, especially for urgent issues warranting rapid response. While SAP’s ecosystem aims to guarantee quality and security, intermediation can hinder the timely resolution of critical situations, affecting system uptime and operations.

SAP HANA Cloud: A Strategic Decision

As SAP systems become increasingly vital, migrating SAP HANA is not simply a technical or operational choice but a strategic one. SAP HANA is more than a database or software suite: it is a competitive advantage that, when optimized, can drive significant innovation and success. This migration requires meticulous planning, execution, and governance to ensure the transition strengthens rather than compromises SAP HANA’s strategic value.

In this context, selecting the right cloud model and service providers are critical decisions. Companies must scrutinize partners beyond cost, evaluating their track records, SAP skills, security protocols, and ability to deliver personalized, responsive service.

The Future SAP HANA Cloud Trajectory

As we advance into 2024, the SAP data, cloud, and analytics landscape continues to evolve. Innovations in cloud technology, security, and service offerings create new opportunities for migration planning. Firms must stay updated on cloud service advancements, SAP’s strategic direction, and cloud migration best practices to navigate this transition successfully.

To accomplish this, companies should:

Collaborate cross-functionally to align SAP HANA cloud plans with broader business goals and technology roadmaps.

Ensure chosen cloud environments and suppliers meet rigorous data security, privacy, and regulatory standards.

Assess infrastructure ability to support SAP HANA performance requirements and scale amid fluctuating demands.

Institute clear governance and support structures for effective issue resolution throughout and post-migration.

Transitioning SAP HANA to the cloud is complex but ultimately rewarding, unlocking efficiency, agility, and innovation when executed deliberately. By weighing the strategic, operational, and technical factors, businesses can carry out this migration smoothly and fully realize SAP HANA’s potential to drive future growth.


Choosing the Right Control Panel for Your Hosting: Plesk vs cPanel Comparison

Whether you're a business owner or an individual creating a website, choosing a control panel for your web hosting is crucial. Though often overlooked, the control panel plays a vital role in managing web server features. This article compares two popular control panels, cPanel and Plesk, to help you make an informed decision based on your requirements and technical knowledge.

Understanding Control Panels

A control panel is a tool that allows users to manage various features of their web server directly. It simplifies tasks like adjusting DNS settings, managing databases, handling website files, installing third-party applications, implementing security measures, and providing FTP access. The two most widely used control panels are cPanel and Plesk, both offering a plethora of features at affordable prices.

Plesk: A Versatile Control Panel

Plesk is a web hosting control panel compatible with both Linux and Windows systems. It provides a user-friendly interface, offering access to all web server features efficiently.

cPanel: The Trusted Classic

cPanel is one of the oldest and most trusted web hosting control panels, providing everything needed to manage, customize, and access web files effectively.

Comparing Plesk and cPanel

User Interface:

Plesk: Offers a user-friendly interface with a primary menu on the left and feature boxes on the right, similar to the WordPress admin dashboard.