#Automated Data Collection Systems Great Lakes


Unleashing Efficiency: The Rise of Industrial Automation Software Solutions

In the age of digital transformation, industrial sectors across the globe are undergoing a fundamental shift. One of the most significant developments driving this evolution is the rise of Industrial Automation Software Solutions. These intelligent systems are redefining how industries operate, streamline processes, and respond to real-time data — all while reducing human error and maximizing productivity.

Industrial automation is no longer just a competitive advantage; it’s quickly becoming a necessity for modern manufacturing, logistics, energy, and production-based businesses. At the core of this transformation lies powerful software designed to integrate machinery, manage workflows, and monitor operations with precision and efficiency.

One of the key strengths of Industrial Automation Software Solutions is their ability to centralize control over complex industrial processes. From programmable logic controllers (PLCs) to supervisory control and data acquisition (SCADA) systems, this software enables seamless communication between machines, sensors, and operators. The result is a smarter, more connected operation that can adapt quickly to changing demands.

Beyond basic automation, these solutions offer deep analytics capabilities. Using machine learning and AI, the software can detect patterns, predict equipment failures, and recommend proactive maintenance — ultimately helping businesses avoid costly downtime. This predictive approach not only increases reliability but also extends the lifespan of expensive equipment.
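As a rough illustration of the pattern-detection idea, the sketch below flags sensor readings that jump far outside recent behavior. It uses a simple rolling z-score rule rather than the machine-learning models described above, and the window size, threshold, and vibration values are hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the mean of the
    preceding `window` readings by more than `threshold` standard deviations."""
    history = deque(maxlen=window)  # sliding window of recent readings
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma == 0:
                is_anomaly = value != mu  # flat history: any change stands out
            else:
                is_anomaly = abs(value - mu) > threshold * sigma
            if is_anomaly:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Hypothetical vibration readings (mm/s) with one spike at index 7.
vibration = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 6.5, 2.1, 2.0]
print(flag_anomalies(vibration))  # [7]
```

In practice a flagged reading would open a maintenance ticket rather than stop the line; the threshold trades missed failures against false alarms.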

Flexibility is another crucial advantage. Most modern Industrial Automation Software Solutions are highly customizable and scalable, allowing companies to tailor systems to their unique needs and expand functionality as they grow. Whether an organization is automating a single production line or an entire facility, the right software can be scaled appropriately without significant disruptions.

In today’s globalized economy, remote access and cloud integration are more important than ever. Many automation platforms now offer web-based dashboards and mobile apps, giving managers and technicians the ability to monitor and control systems from virtually anywhere. This level of accessibility promotes faster response times and better decision-making, even across multiple locations.

Security, too, has become a top priority. As more industrial systems connect to the internet, they become more vulnerable to cyber threats. Industrial Automation Software Solutions are now being developed with robust cybersecurity features, including encrypted communication, multi-layered access control, and real-time threat monitoring.
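To make "multi-layered access control" more concrete, here is a minimal role-based access control sketch, assuming a hypothetical set of roles and actions for an automation system. It denies by default, so an unknown role can do nothing.

```python
from enum import Enum, auto


class Action(Enum):
    VIEW = auto()
    ACK_ALARM = auto()
    CHANGE_SETPOINT = auto()
    UPDATE_FIRMWARE = auto()


# Hypothetical role-to-permission mapping; each role extends the one below it.
ROLE_PERMISSIONS = {
    "viewer": {Action.VIEW},
    "operator": {Action.VIEW, Action.ACK_ALARM},
    "engineer": {Action.VIEW, Action.ACK_ALARM, Action.CHANGE_SETPOINT},
    "admin": set(Action),  # every defined action
}


def is_allowed(role: str, action: Action) -> bool:
    """Deny by default: roles missing from the mapping get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("operator", Action.CHANGE_SETPOINT))  # False
```

A real deployment would combine a check like this with authentication and audit logging; the role names here are illustrative only.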

These solutions also play a key role in sustainability efforts. By optimizing energy usage, reducing waste, and ensuring consistent product quality, industrial automation supports greener operations. Businesses not only improve efficiency but also meet environmental standards more effectively.

As industries continue to adopt smart technologies and prepare for Industry 4.0, demand for reliable and intelligent automation software is expected to keep growing. Investing in Industrial Automation Software Solutions is no longer just about keeping up with competitors; it's about laying the foundation for a resilient, future-ready operation.

Whether it's enhancing productivity, improving safety, or driving innovation, automation software is reshaping what’s possible in the industrial world. The future of manufacturing and production isn’t just automated — it’s intelligent, adaptive, and incredibly powerful.

#Traceability Software Great Lakes Region#Machine Vision Integration Great Lakes#Great Lakes Industrial Marking Solutions#Factory Monitoring Software Great Lakes Region#Lean Manufacturing Solutions Great Lakes#Automated Data Collection Systems Great Lakes#Industrial Traceability Solutions Detroit#Machine Vision Systems Detroit#Detroit Barcode Verification Services#Laser Marking Services Detroit MI#Production Management Software Detroit#Detroit Manufacturing Systems#product traceability software Detroit#industrial marking systems Detroit#Laser Marking Services Auburn Hills#Industrial Traceability Solutions Michigan#Barcode Verification Systems Auburn Hills#Machine Vision Systems Michigan#Auburn Hills Laser Part Marking#Industrial Marking Solutions Michigan#Traceability Software Auburn Hills#Turnkey Laser Systems Michigan#Auburn Hills Barcode Reader Solutions#Advanced Laser Marking Auburn Hills MI#laser marking systems#laser marking machine#laser part marking#uv laser marking machine#uv laser marking#dot peen marking machine


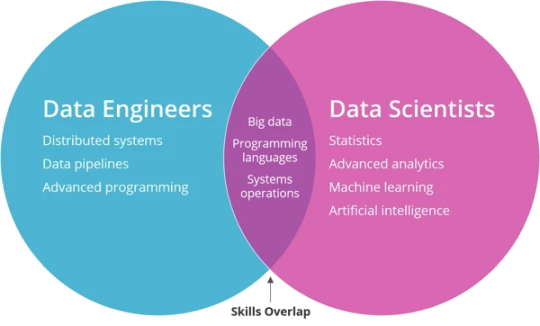

Big Data and Data Engineering

Big Data and Data Engineering are essential concepts in modern data science, analytics, and machine learning.

They focus on the processes and technologies used to manage and process large volumes of data.

Here’s an overview:

1. What is Big Data?

Big Data refers to extremely large datasets that cannot be processed or analyzed using traditional data processing tools or methods.

It typically has the following characteristics:

Volume: Huge amounts of data (petabytes or more).

Variety: Data comes in different formats (structured, semi-structured, unstructured).

Velocity: The speed at which data is generated and processed.

Veracity: The quality and accuracy of data.

Value: Extracting meaningful insights from data.

Big Data is often associated with technologies and tools that allow organizations to store, process, and analyze data at scale.

2. Data Engineering Overview

Data Engineering is the process of designing, building, and managing the systems and infrastructure required to collect, store, process, and analyze data.

The goal is to make data easily accessible for analytics and decision-making.

Key areas of Data Engineering:

Data Collection:

Gathering data from various sources (e.g., IoT devices, logs, APIs).

Data Storage:

Storing data in data lakes, databases, or distributed storage systems.

Data Processing:

Cleaning, transforming, and aggregating raw data into usable formats.

Data Integration:

Combining data from multiple sources to create a unified dataset for analysis.
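A minimal sketch of data integration, assuming two hypothetical sources (a CRM export and a billing export) that share a `customer_id` key. Real pipelines must also reconcile differing field names and types; here, fields from a later source simply win on conflict.

```python
def integrate(*sources, key="customer_id"):
    """Merge rows from several sources into one record per key
    (like a full outer join). On conflicting fields, later sources win."""
    merged = {}
    for source in sources:
        for row in source:
            merged.setdefault(row[key], {}).update(row)
    return [merged[k] for k in sorted(merged)]


crm = [{"customer_id": 1, "name": "Ana"}, {"customer_id": 2, "name": "Luis"}]
billing = [{"customer_id": 1, "total_usd": 120.0},
           {"customer_id": 3, "total_usd": 40.0}]
for record in integrate(crm, billing):
    print(record)
```

Customers that appear in only one source are kept with the fields that source provides, which makes gaps visible to later quality checks.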

3. Big Data Technologies and Tools

The following tools and technologies are commonly used in Big Data and Data Engineering to manage and process large datasets:

Data Storage:

Data Lakes: Large storage systems that can handle structured, semi-structured, and unstructured data. Examples include Amazon S3, Azure Data Lake, and Google Cloud Storage.

Distributed File Systems:

Systems that allow data to be stored across multiple machines. The best-known example is Hadoop HDFS. (Apache Cassandra, often mentioned alongside it, is a distributed NoSQL database rather than a file system.)
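The core idea of a distributed file system, splitting a file into blocks and storing copies of each block on several machines, can be sketched as follows. HDFS uses 128 MB blocks and a replication factor of 3 by default, but its real placement policy is rack-aware; the round-robin assignment and node names below are simplifying assumptions.

```python
def place_blocks(file_size_mb, nodes, block_size_mb=128, replication=3):
    """Split a file into fixed-size blocks and assign each block to
    `replication` distinct nodes, rotating the starting node per block."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    num_blocks = -(-file_size_mb // block_size_mb)  # ceiling division
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


# A 300 MB file becomes 3 blocks (128 + 128 + 44 MB) on hypothetical nodes.
nodes = ["node1", "node2", "node3", "node4"]
for block, replicas in place_blocks(300, nodes).items():
    print(block, replicas)
```

Because each block lives on three different nodes, losing any single node still leaves two copies of every block.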

Databases:

Relational databases (e.g., MySQL, PostgreSQL) and NoSQL databases (e.g., MongoDB, Cassandra, HBase).

Data Processing:

Batch Processing: Handling large volumes of data in scheduled, discrete chunks.

Common tools:

Apache Hadoop (MapReduce framework). Apache Spark (offers both batch and stream processing).
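The MapReduce model behind Hadoop splits a batch job into a map step, a shuffle that groups intermediate pairs by key, and a reduce step. A single-process word-count sketch shows the shape of the computation; a real framework runs each phase in parallel across a cluster.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Emit (word, 1) for every word in one input record.
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    # Group intermediate values by key, as the framework does between phases.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Combine all values for one key into a single result.
    return key, sum(values)


def word_count(lines):
    intermediate = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(intermediate).items())


print(word_count(["big data big", "data engineering"]))
# {'big': 2, 'data': 2, 'engineering': 1}
```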

Stream Processing:

Handling real-time data flows.

Common tools:

Apache Kafka (message broker). Apache Flink (streaming data processing). Apache Storm (real-time computation).
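A common stream-processing operation is aggregating events over fixed, non-overlapping time windows (tumbling windows), which engines such as Flink support natively. This sketch assumes events arrive in timestamp order as hypothetical `(epoch_seconds, event_type)` pairs; production engines also handle late and out-of-order events.

```python
from collections import Counter


def tumbling_windows(events, window_seconds=60):
    """Yield (window_start, counts_by_type) each time a window closes.
    Assumes `events` arrive in timestamp order."""
    current_start, counts = None, Counter()
    for ts, event_type in events:
        start = ts - ts % window_seconds  # align timestamp to its window
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)  # previous window is complete
            counts = Counter()
        current_start = start
        counts[event_type] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last window at end of input


events = [(0, "click"), (30, "click"), (59, "view"), (61, "click"), (125, "view")]
for window_start, counts in tumbling_windows(events):
    print(window_start, counts)
```

On an unbounded stream there is no "end of input", so the last window would instead be closed by a timer or watermark.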

ETL (Extract, Transform, Load):

Tools like Apache NiFi, Airflow, and AWS Glue are used to automate data extraction, transformation, and loading processes.
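The three ETL steps can be illustrated end to end with only the Python standard library, assuming a hypothetical CSV of orders and an in-memory SQLite database standing in for the target system. Rows with a missing amount are dropped here; other pipelines might quarantine them instead.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: one row is missing its amount, currencies are inconsistent.
RAW_CSV = """order_id,amount,currency
1,10.50,usd
2,,usd
3,7.25,USD
"""


def extract(text):
    # Extract: parse the raw CSV into dictionaries.
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    # Transform: drop incomplete rows, cast types, standardize currency codes.
    clean = []
    for row in rows:
        if not row["amount"]:
            continue
        clean.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return clean


def load(rows, conn):
    # Load: write the cleaned rows into the target table.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())  # (2, 17.75)
```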

Data Orchestration & Workflow Management:

Apache Airflow is a platform for programmatically authoring, scheduling, and monitoring workflows. Kubernetes and Docker are used to deploy and scale applications in data pipelines.
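Orchestrators like Airflow model a pipeline as a directed acyclic graph (DAG) of tasks. Airflow has its own operators and `>>` dependency syntax; the sketch below is not that API, only Python's standard `graphlib` module (3.9+) showing how a DAG of hypothetical tasks determines what can run in parallel and in what order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}


def parallel_stages(dag):
    """Group tasks into stages; tasks within a stage have no mutual dependencies.
    Raises graphlib.CycleError if the graph contains a cycle."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all tasks whose dependencies are done
        stages.append(ready)
        sorter.done(*ready)  # pretend they ran successfully
    return stages


for stage in parallel_stages(pipeline):
    print(stage)
```

Both extract tasks land in the first stage because neither depends on the other, which is exactly the parallelism a scheduler can exploit.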

Data Warehousing & Analytics:

Amazon Redshift, Google BigQuery, Snowflake, and Azure Synapse Analytics are popular cloud data warehouses for large-scale data analytics.

Apache Hive is a data warehouse built on top of Hadoop to provide SQL-like querying capabilities.

Data Quality and Governance:

Tools like Great Expectations, Deequ, and AWS Glue DataBrew help ensure data quality by validating, cleaning, and transforming data before it’s analyzed.
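Data-quality tools express checks as rules applied to a dataset before it is used. As a plain-Python sketch of the same idea (not the API of Great Expectations or Deequ), the function below checks a hypothetical batch of orders for null keys, duplicate keys, and out-of-range amounts; the 0 to 10,000 range is an assumed business rule.

```python
def validate(rows):
    """Return human-readable failures; an empty list means the batch passed."""
    failures = []
    seen_ids = set()
    for i, row in enumerate(rows):
        order_id = row.get("order_id")
        if order_id is None:
            failures.append(f"row {i}: order_id is null")
        elif order_id in seen_ids:
            failures.append(f"row {i}: duplicate order_id {order_id}")
        else:
            seen_ids.add(order_id)
        amount = row.get("amount")
        if amount is None or not (0 <= amount <= 10_000):
            failures.append(f"row {i}: amount {amount!r} outside [0, 10000]")
    return failures


batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 1, "amount": 5.0},
    {"order_id": None, "amount": -3.0},
]
for failure in validate(batch):
    print(failure)
```

A pipeline would typically stop or quarantine the batch when the list is non-empty instead of loading it.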

4. Data Engineering Lifecycle

The typical lifecycle in Data Engineering involves the following stages:

Data Ingestion: Collecting and importing data from various sources into a central storage system.

This could include real-time ingestion using tools like Apache Kafka or batch-based ingestion using Apache Sqoop.

Data Transformation (ETL/ELT): After ingestion, raw data is cleaned and transformed.

This may include:

Data normalization and standardization.

Removing duplicates and handling missing data.

Aggregating or merging datasets.

Common tools include Apache Spark, AWS Glue, and Talend.
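The transformation steps listed above can be sketched on a small hypothetical batch of temperature readings. Filling missing values with a constant and min-max scaling are only two of several possible choices; mean imputation or z-score standardization are common alternatives.

```python
def deduplicate(rows):
    """Drop exact duplicate records, keeping the first occurrence."""
    seen, unique = set(), []
    for row in rows:
        fingerprint = tuple(sorted(row.items()))  # hashable, key-order independent
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(row)
    return unique


def fill_missing(rows, field, default):
    """Replace None in `field` with `default`, leaving other values untouched."""
    return [{**row, field: default if row.get(field) is None else row[field]} for row in rows]


def min_max_normalize(values):
    """Rescale values to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # avoid division by zero for constant input
    return [(v - lo) / (hi - lo) for v in values]


readings = [
    {"sensor_id": 1, "temp_c": 20.0},
    {"sensor_id": 1, "temp_c": 20.0},  # duplicate delivery
    {"sensor_id": 2, "temp_c": None},  # missing value
    {"sensor_id": 3, "temp_c": 30.0},
]
clean = fill_missing(deduplicate(readings), "temp_c", default=25.0)
print(min_max_normalize([r["temp_c"] for r in clean]))  # [0.0, 0.5, 1.0]
```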

Data Storage:

After transformation, the data is stored in a format that can be easily queried.

This could be in a data warehouse (e.g., Snowflake, Google BigQuery) or a data lake (e.g., Amazon S3).

Data Analytics & Visualization:

After the data is stored, it is ready for analysis. Data scientists and analysts use tools like SQL, Jupyter Notebooks, Tableau, and Power BI to create insights and visualize the data.

Data Deployment & Serving:

In some use cases, data is deployed to serve real-time queries using tools like Apache Druid or Elasticsearch.
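Search-oriented serving systems like Elasticsearch are built on Apache Lucene's inverted index, which maps each term to the documents containing it. A toy version, assuming simple whitespace tokenization and hypothetical log messages, looks like this:

```python
from collections import defaultdict


def build_index(docs):
    """Map each lowercase term to the sorted ids of documents that contain it."""
    postings = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            postings[term].add(doc_id)
    return {term: sorted(ids) for term, ids in postings.items()}


def search_all(index, query):
    """Return ids of documents containing every query term (AND semantics)."""
    term_sets = [set(index.get(term, [])) for term in query.lower().split()]
    if not term_sets:
        return []
    return sorted(set.intersection(*term_sets))


logs = {
    1: "Sensor alarm on line 3",
    2: "Line 3 restarted",
    3: "Sensor calibrated",
}
index = build_index(logs)
print(search_all(index, "sensor line"))  # [1]
```

Queries only touch the posting lists of their terms, which is why lookups stay fast as the number of documents grows.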

5. Challenges in Big Data and Data Engineering

Data Security & Privacy:

Ensuring that data is secure, encrypted, and complies with privacy regulations (e.g., GDPR, CCPA).

Scalability:

As data grows, the infrastructure needs to scale to handle it efficiently.

Data Quality:

Ensuring that the data collected is accurate, complete, and relevant.

Data Integration:

Combining data from multiple systems with differing formats and structures can be complex.

Real-Time Processing:

Managing data that flows continuously and needs to be processed in real-time.

6. Best Practices in Data Engineering

Modular Pipelines: Design data pipelines as modular components that can be reused and updated independently.

Data Versioning: Keep track of versions of datasets and data models to maintain consistency.
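One lightweight way to version datasets is to derive the version id from the content itself, so identical data always gets the same id and any change produces a new one. This sketch assumes the dataset fits in memory as JSON-serializable rows; the 12-character truncation is an arbitrary choice for readability.

```python
import hashlib
import json


def dataset_version(rows):
    """Derive a short, deterministic version id from a dataset's content."""
    # sort_keys makes the id independent of dict key order within each row.
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


v1 = dataset_version([{"id": 1, "temp_c": 20.0}])
v2 = dataset_version([{"temp_c": 20.0, "id": 1}])  # same content, different key order
v3 = dataset_version([{"id": 1, "temp_c": 21.0}])  # one value changed
print(v1 == v2, v1 == v3)  # True False
```

Row order still affects the id; sort the rows first if order is not meaningful for your data.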

Data Lineage: Track how data moves and is transformed across systems.

Automation: Automate repetitive tasks like data collection, transformation, and processing using tools like Apache Airflow or Luigi.

Monitoring: Set up monitoring and alerting to track the health of data pipelines and ensure data accuracy and timeliness.
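Pipeline monitoring often starts with two checks: freshness (did the last run succeed recently?) and volume (did it produce a plausible number of rows?). The thresholds in this sketch are hypothetical and would be tuned per pipeline; a real setup would route the returned alerts to a paging or chat system.

```python
from datetime import datetime, timedelta, timezone


def check_pipeline(last_success, row_count, now,
                   max_age=timedelta(hours=1), min_rows=1):
    """Return alert messages for a pipeline run; an empty list means healthy."""
    alerts = []
    age = now - last_success
    if age > max_age:
        alerts.append(f"stale: last successful run was {age} ago")
    if row_count < min_rows:
        alerts.append(f"low volume: only {row_count} rows loaded")
    return alerts


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(check_pipeline(now - timedelta(minutes=30), 5000, now))  # []
print(check_pipeline(now - timedelta(hours=3), 0, now))
```

Using timezone-aware timestamps avoids false "stale" alerts when servers run in different time zones.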

7. Cloud and Managed Services for Big Data

Many companies are now leveraging cloud-based services to handle Big Data:

AWS:

Offers tools like AWS Glue (ETL), Redshift (data warehousing), S3 (storage), and Kinesis (real-time streaming).

Azure:

Provides Azure Data Lake, Azure Synapse Analytics, and Azure Databricks for Big Data processing.

Google Cloud:

Offers BigQuery, Cloud Storage, and Dataflow for Big Data workloads.

Data Engineering plays a critical role in enabling efficient data processing, analysis, and decision-making in a data-driven world.


The Future of Water Management: A Deep Dive into Water Level Automation

Introduction

Water is a precious resource that is vital for our survival, and efficient water management is crucial to ensure its sustainable use. With the increasing stress on global water resources due to population growth and climate change, innovative solutions are needed to optimize water usage. One such solution that holds immense promise is water level automation. In this blog, we will explore the future of water management through the lens of water level automation.

The Growing Need for Water Management

Water is essential for various purposes, including drinking, agriculture, industrial processes, and ecological balance. As our population continues to grow and climate change disrupts traditional weather patterns, the need for effective water management becomes increasingly urgent.

In many regions, water scarcity is a pressing issue, with water levels in reservoirs, rivers, and aquifers fluctuating unpredictably. Mismanagement of water resources can lead to devastating consequences such as droughts, water shortages, and even conflicts over water rights. To address these challenges, we must turn to technology for innovative solutions, and water level automation offers a promising path forward.

What is Water Level Automation?

Water level automation refers to the use of technology to monitor and control water levels in various systems, such as reservoirs, tanks, and irrigation systems. This automation relies on a combination of sensors, actuators, and control systems to ensure that water is distributed efficiently and wastage is minimized.

Key Components of Water Level Automation

Sensors: Water level sensors are the heart of any automation system. These sensors can be ultrasonic, pressure-based, or float switches, and they measure water levels accurately in real time.

Control Systems: Control systems process the data collected by the sensors and make decisions about when to open or close valves, pumps, or gates to regulate water flow.

Actuators: Actuators are the devices that physically control the flow of water. They can be valves, pumps, or gates that respond to the commands of the control system.
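The three components can be tied together in a short sketch, assuming an ultrasonic sensor mounted at a known height above the tank floor and a pump that fills the tank. The sensor's echo time is converted to a level, and an on/off controller with hysteresis (separate start and stop levels) decides the pump command so the pump does not switch rapidly around a single setpoint. The heights and thresholds are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 °C; real sensors compensate for temperature


def level_from_echo(echo_time_s, sensor_height_m):
    """Sensor: convert an ultrasonic round-trip echo time into a water level.
    The pulse travels to the surface and back, so the distance is half the path."""
    distance_to_surface_m = SPEED_OF_SOUND_M_S * echo_time_s / 2
    return max(0.0, sensor_height_m - distance_to_surface_m)


class FillPumpController:
    """Control system: start the pump at or below `low_m`, stop it at or above
    `high_m`, and keep the previous state in between (hysteresis)."""

    def __init__(self, low_m, high_m):
        if low_m >= high_m:
            raise ValueError("low_m must be below high_m")
        self.low_m, self.high_m = low_m, high_m
        self.pump_on = False

    def update(self, level_m):
        if level_m <= self.low_m:
            self.pump_on = True
        elif level_m >= self.high_m:
            self.pump_on = False
        return self.pump_on  # the command sent to the actuator


controller = FillPumpController(low_m=1.0, high_m=2.5)
for echo_s in (0.0070, 0.0120, 0.0090, 0.0020):
    level = level_from_echo(echo_s, sensor_height_m=3.0)
    print(f"level {level:.2f} m -> pump {'ON' if controller.update(level) else 'OFF'}")
```

The gap between 1.0 m and 2.5 m is what prevents rapid on/off cycling, which would otherwise waste energy and wear out the pump.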

Advantages of Water Level Automation

Resource Optimization: Water level automation ensures that water is used efficiently, reducing wastage and conserving this precious resource.

Energy Efficiency: By automating pumps and valves, water level automation minimizes the energy required to maintain water levels, saving both energy and operational costs.

Data-Driven Decision Making: The data collected by the system provides valuable insights for better decision-making and long-term planning.

Reduced Labor Requirements: Automation reduces the need for constant manual monitoring and adjustment, freeing up human resources for more complex tasks.

Applications of Water Level Automation

Agriculture: Automated irrigation systems can help farmers optimize water usage, resulting in better crop yields and water conservation.

Municipal Water Supply: Water utilities can manage reservoirs and distribution systems more effectively, ensuring a stable water supply for communities.

Industrial Processes: Industries that require precise water levels, such as those in manufacturing or mining, can benefit from automation to reduce production downtime and save water.

Environmental Monitoring: Water level automation is crucial for monitoring and managing the water levels of natural ecosystems, such as wetlands and lakes.

Challenges and Considerations

While water level automation holds great potential, it is not without challenges. Initial setup costs, the need for maintenance, and potential cybersecurity risks are considerations that must be addressed. Additionally, the technology needs to be accessible and affordable for smaller communities and developing regions to truly make a global impact.

The Future of Water Level Automation

As technology continues to advance, the future of water level automation looks promising. Integration with the Internet of Things (IoT), artificial intelligence, and machine learning will enable even more sophisticated control and predictive capabilities. This will make water management more precise, efficient, and adaptable to changing conditions.

Conclusion

Water level automation represents a vital step towards ensuring the sustainable use of water resources in an ever-changing world. As the global population grows and climate change continues to impact our environment, efficient water management is not only a practical solution but a moral imperative. By embracing technology and innovation, we can look forward to a future where water is managed intelligently, ensuring a reliable supply for all while preserving this precious resource for generations to come.


The Privacy Paradox in the Information Economy and the Age of Digital Sovereignty

Reflections and Learnings After Collecting and Exploiting Personal Data of Millions of Users in Latin America.

Introduction

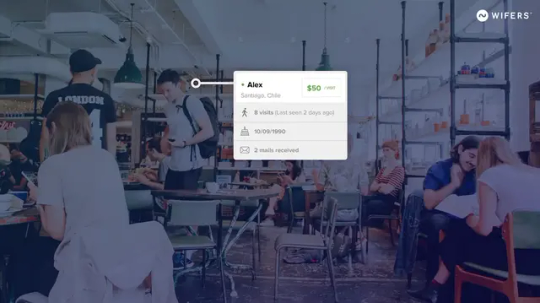

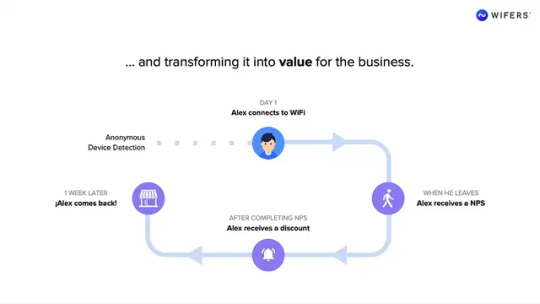

Wifers, the company I co-founded in Argentina in 2016, grew out of previous experiences combined with a unique industry context. Having previously led e-commerce and digital identity projects, I was convinced that the next step would be a convergence between the digital and physical worlds. The vision was to transfer the knowledge and tools that digital businesses had acquired during the e-commerce boom a few years earlier to physical stores, which still represented over 90% of total commerce. By using hardware and software to collect information at the point of sale, we would be able to bridge the gap between online and offline commerce.

Initially, our focus was on generating a simple and affordable solution for small bistros to capture information from customers visiting their stores using Wi-Fi access points. In subsequent iterations, we offered these businesses the opportunity to target and automate their customer communications, aiming to enhance customer engagement and retention. Finally, once we had a significant network of locations, we embarked on a data analysis challenge leveraging behavioral insights, customer preferences, recurrence, and walk-through metrics at the point of sale. We could even detect when a social media influencer walked in. By the beginning of 2020, our solution had been deployed in hundreds of stores across six countries in the region and our client base expanded to include pharmaceutical companies, multinational corporations and governments.

During that period, the industry underwent significant changes, including advancements in privacy and personal data protection regulations, updates in mobile operating systems and multiple devaluations of the Argentine peso. Ultimately, the pandemic forced us to stop our operations, interrupting an acquisition process with a US company that we had been negotiating with for over a year. The operational and strategic decisions that left us valuable lessons deserve a separate article. For now, I would like to share some insights on privacy in the information economy. The case of Wifers and similar companies is relevant because such businesses were enabled by three simultaneous phenomena: the privacy paradox, opacity vs. transparency, and regulatory gaps.

Presenting Wifers at Start-Up Chile G21 Demo Day (Santiago de Chile, 2019)

The Privacy Paradox

In his 1944 book “The Great Transformation”, Hungarian-American economist Karl Polanyi described the commoditization of essential elements of society that propelled the rise of industrial capitalism:

The idea of taking “human activity” outside the market, bringing it into the market, labeling it as labor and assigning it a price;

The idea of bringing elements of “nature” such as lakes, trees, and land into the market, calling it real estate and assigning it a price;

And the idea of “exchange”, which, subordinate to the market, has become the concept of money.

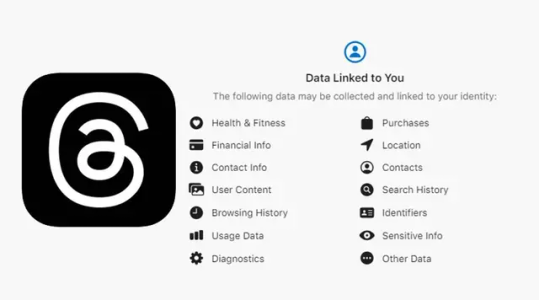

The great discovery of the 21st century is the notion that we can bring “human experiences” into the market, call them data, and then buy, sell, or create new markets for targeted advertising, personalization and profit generation. For several years now, data has been the new commodity fueling the rise of surveillance capitalism, a new economic paradigm based on the intrusion into individuals’ privacy through digital technologies. The rapid and widespread adoption of these technologies and data collection practices has made it challenging for individuals to fully understand and control how their privacy is being invaded. Take the example of “Threads”, recently launched by Meta. How many users who were pulled from Instagram to this new app are aware of the amount of data it is collecting? How many are wondering why it really needs all this information for an ordinary public messaging application?

The data collected by the Threads app could include your sexual orientation, race and ethnicity, biometric data, trade union membership, pregnancy status, politics and religious beliefs.

Even acknowledging this, why do individuals feel compelled to trade their personal data for access to certain products and services? The privacy paradox describes this disconnect between people’s concerns about privacy and their actual behavior, which has been attributed to several factors: asymmetry of power (individuals feel helpless or resigned against large corporations and believe their privacy is already compromised), convenience (people overlook privacy concerns in exchange for benefits and personalized services), lack of awareness and understanding of data collection practices, social influence and norms (seeing others willingly share personal information without negative consequences influences them to do the same) and timing (people prioritize immediate gratification over long-term privacy considerations when making decisions).

“The sharing of personal information might be perceived as a loss of psychological ownership that threatens individual’s emotional attachment to their data.”

Back in 1944, Polanyi argued that the unrestricted marketization of key commodities can have detrimental social and environmental consequences, contending that society must establish protective measures and institutions to counterbalance the potentially harmful effects of unregulated markets. In the information economy, understanding the privacy paradox is crucial for policymakers, organizations and individuals, and it highlights the urgent need for transparent data practices, user-friendly privacy policies and improved education regarding privacy risks.

Opacity vs. Transparency

Despite having fully capitalized on the privacy paradox, we always upheld ethical practices when it came to storing, managing, and being transparent with user data policies for those connecting to our systems. Throughout my period as the company’s director, no data was compromised, sold or transferred to third parties, either directly or indirectly. I firmly believed that if we were the ones processing the information, we should be the ones capable of monetising it in the most transparent and ethical way possible.

However, increased data volume meant more value for the company, driving the constant need to find new ways of extracting information from individuals through new sources and processing techniques. In order to achieve many of our functionalities, we needed to rapidly and seamlessly collect data on millions of people, and many of these data collection techniques operated opaquely, in the background. For instance, users were often unaware that we could detect their devices in stores, even when not connected to our Wi-Fi networks or when their devices were locked, despite it being stated in our terms and conditions.

The dilemma of opacity and transparency is not new in the history of information systems but becomes particularly sensitive when a company’s existence is directly tied to the quantity and quality of the data it utilizes. Products like Threads and Wifers can take advantage of opacity to find new data collection opportunities because their business relies on it. Maintaining transparency policies requires significant effort. In the information economy, the pursuit of data as a commodity can blur ethical boundaries and lead companies to overlook this in order to maximize profits, potentially resulting in privacy violations, data misuse and other significant problems.

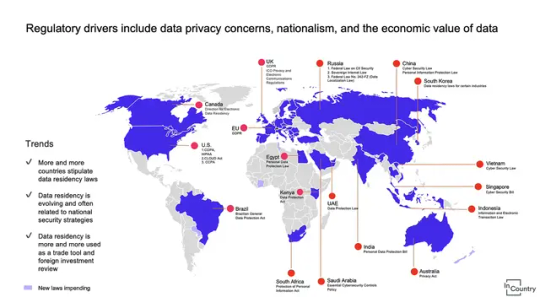

Regulatory Gaps

In a familiar pattern from previous experiences, we entered the market early. Not only did we secure our first sales before companies even realised they needed our technology, but we also operated silently for a significant period of time, with a small organizational structure and seemingly invisible technology. This ability to remain unobserved provided us with a competitive advantage, but it was also facilitated by the context. These transactions existed in a regulatory vacuum, devoid of oversight, audits, or comprehensive protection measures.

Across the numerous locations and countries where we operated in Latin America, there existed (and still exists) no clear-cut regulatory framework that establishes the boundaries within which companies can collect and exploit personal data belonging to their customers. Our business model would have encountered very different challenges in the United States or Europe, where online privacy and personal data are more tightly regulated (the European Union adopted its General Data Protection Regulation in 2016). Instead, we thrived for years in a green field facilitated by regulatory gaps.

The progress made in data legislation worldwide in 2021 represents a significant milestone in the ongoing fight for internet freedom and individual protection (InCountry, 2021)

Fortunately, privacy regulations are evolving slowly but surely. Leading the way is the European Union with its robust General Data Protection Regulation (GDPR), setting a high standard for data protection and inspiring other jurisdictions to update or establish their own data privacy laws. In an environment where privacy concerns are growing, the evolving landscape of privacy regulations worldwide may signify a shift towards consumer protection.

The age of digital sovereignty

Companies like Wifers were possible a few years ago, but may not be viable today. It is no coincidence that Threads has not yet been launched in Europe. The resistance against surveillance capitalism, fueled by the dominance of digital advertising giants in the Web 2 era and marked by the Facebook-Cambridge Analytica scandal, has raised awareness and sparked a strong desire among consumers and developers to challenge and weaken the data aggregation practices of corporations and governments.

More educated and privacy-conscious consumers, developers creating more transparent alternatives and regulated environments are paving the way for a transformative shift in the digital landscape. As this new paradigm takes shape, a vision is emerging for Web 3 as a decentralized future that respects privacy, protects autonomy, and challenges the current distribution of power. Some of these alternatives could truly disrupt the dominance of major players and restore digital sovereignty to individuals. For example, promising technologies like Self-Sovereign Identity (SSI) or decentralized identity are offering new ways to protect personal data and reshape the landscape in technical, commercial, legal, and social dimensions.

The path ahead is long, but the destination is clear. Our personal information and experiences must belong to us once again, and the internet should fulfill its promise as a tool for connection and democratization.


AI CITY Will Pave the Way For the Cities of Tomorrow

Cities often represent growth in mankind’s collective wisdom. The goal of every great city is to be built with the highest quality resources available and make use of the newest and most innovative technologies.

In the ancient city of Rome in the 1st century AD, the use of early hydro technologies allowed the population to flourish. Hydro power was one of the most widely used examples, generating mechanical energy through massive waterwheels. Furthermore, a highly developed water supply and drainage system provided ample clean water every day. They would collect the water and store it in large reservoirs by redirecting subsurface water from nearby rivers and lakes into artificial channels called ‘aqueducts’. This system was so effective that their water supply actually exceeded population demand. It was able to support households, gardening, water shows, mill grinding and the great Roman fountains we still see in use today. The Romans could even use the waterways as escape routes or in complex military strategies.

These innovations were magnificent. They laid the foundation for the incredibly rich, vibrant cities we live in today. Over 2,000 years later, our idea of innovation has shifted. Instead of aqueducts, we are working with the invisible networks of 5G, big data, Internet of Things (IoT) and artificial intelligence (AI).

Let’s set the stage. Morning sunbeams stretch slowly over a city silhouette, as the light reaches into every corner of this X-Tech city. The residential roads are bordered by lush greenery as dewdrops slide from the highest tree leaves onto solar-panel-laden rooftops, and then to their final resting place in the grass. There is a heartbeat, not just within the surrounding nature, but within the structures themselves. As the dewdrops fall, the solar panels adjust themselves, ever so slightly, so water can slide into the gutters and reach the plants below. As sunlight hits the houses, bedroom windows adjust their opacity to allow the natural light to wake sleepy residents. Once light has filled the room, an AI virtual housekeeper selects your breakfast, matches your outfit with the weather, and presents a full schedule of your day. After breakfast, you step into your intelligent, fully automated vehicle and begin your intercity commute, browsing global market news (recommended by an algorithm, of course!).

This is not some sci-fi pipe dream, but a glance at the plans for an upcoming AI CITY. As early as 1992, Singapore proposed a plan known as the "Intelligent Island" in an attempt to create a country powered by information technology. Subsequently, the Korean government proposed the "U-Korea" strategy in 2004, hoping to use the Internet and wireless sensors to transform Korea into a smart society.

In 2008, IBM first proposed the "Smarter Planet" initiative, and then formally rolled out the concept of "Smarter Cities" in 2010. Since then, there has been momentum toward creating "smart cities" around the world.

In 2013, the Ministry of Industry and Information Technology of China led the establishment of the China Smart City Industry Alliance and planned to invest RMB 500 billion in the next few years to build smart cities. In 2015, the US government proposed a new smart city initiative to actively deploy smart grids, smart transportation and broadband.

Cities are effectively giant machines that must run 24/7. People need this machine in excellent working order every day to protect and transport people and resources for different purposes, big and small. These tasks range from keeping public order to repairing broken manhole covers. Smart cities seek to connect all these details of city life to the Internet and the cloud, applying IoT, AI, 5G, big data and other new information and communication technologies (ICT) to optimize the operational efficiency of these giant machines.

The AI CITY is the next generation of smart city, and its scope is more sophisticated than merely connecting devices. The key innovation lies in the role of artificial intelligence in its infrastructure, which will allow devices to operate on their own. This will ultimately enable the city to better manage its people and resources.

Europe and the United States had an early start in the development of smart cities. In London, investments in smart city infrastructure such as the Internet of Things, big data, cloud computing, artificial intelligence and blockchain have reached GBP 1.3 trillion. These investments were largely used in building smart grids, smart streets, smart transportation and other municipal projects. As an example, let’s look at London’s West End. Using GIS, CAD and 3D virtual technology, the area has been transformed into a hub of data-generating buildings. Around 45,000 buildings within an area of nearly 20 sq. km in the West End now use internet-connected, data-generating devices. It’s a prime example of an urban geo-information system offering optimized views into landscape design, traffic control, sustainability, emergency management and more.

New York has also benefited from smart urban technology. The city implemented a smart grid project in which Con Edison, an electric utility company, automated and upgraded more than one-third of New York's power grid to prevent any single accident from damaging the entire grid.

Turning to the East, the number of smart city developments there has outpaced that in the West. Both Japan and South Korea have used smart city technology to focus on resource allocation in their most densely populated cities. Seoul has established an integrated transportation system that uses smart cameras in subways to measure passenger volume and adjust the speed and frequency of trains in real time. It has also installed sensors that monitor important train components to prevent malfunctions proactively. In Yokohama, the Smart Environment Project has deployed advanced energy management systems in households and commercial buildings to "visualize" energy. Solar power solutions and home energy management systems were installed to display crucial energy data, so municipal officials can now track power generation, power consumption and power sales.

Currently, China is developing smart cities faster than any other country and has the largest concentration of smart communities and AI technology in the world. More than 40% of global smart city projects are currently under construction in China. In Chongqing, a metropolis in southwest China, Terminus, an innovator in artificial intelligence, the Internet of Things and robotics, is building a smart city with a total gross floor area of 2,500,000 sqm. The company plans to use its suite of AI and IoT technologies to improve the sustainability, operations and visitor experience of the city. It will also empower traditional industries with new ICT technologies to create the world's first model of an AI CITY, one that incorporates all types of businesses and diversified industrial chains.

The Catalyst for AI CITY Implementation: Competition among the World's Top Tech Companies

Although the government plays an important role in planning and building an AI CITY, the true drivers of smart city innovation are the world's top technology companies.

IBM, the first major player in the smart city space, applied functional modules such as smart healthcare, smart transportation, smart education, smart energy and smart telecommunications to hundreds of cities across the globe. It collected large quantities of data and integrated them into each city's main operations management center.

However, because cloud computing and AI technologies were still immature at the time, IBM was not able to roll out its “Smarter Cities” initiative effectively. Municipal governments frequently turned down its solutions because the immense investment costs outweighed the expected benefits of the new technology.

As a world-renowned network provider, Cisco has taken a different approach to smart cities. Instead of undertaking mammoth projects that integrate smart technology across entire cities, Cisco focuses on establishing smaller smart communities and driving change from the bottom up. In Copenhagen, it helped the city work towards carbon neutrality; in Hamburg, it helped establish a fully digital network and IT strategy that enabled integrated management of land and marine transportation.

Meanwhile, Siemens, a leading global industrial manufacturer, has been working on smart grid solutions that help cities optimize their energy storage. Centering its strategy on smart grids allows for meaningful connections between smart buildings, electric transportation, smart meters, power generation and more. It also lets the company integrate and upgrade existing systems instead of building entirely new systems from scratch, which is an effective way to test new IoT technologies without creating tremendous risk for the city's existing infrastructure.

The AI CITY ecosystem in China has benefitted tremendously from these existing projects. Chinese companies have been able to learn from the development of these global projects and combine the existing frameworks with emerging technologies like 5G, big data, cloud computing, AI and intelligent hardware R&D. This has provided an edge when constructing new smart city projects domestically.

Terminus, a leading global smart service provider, is one company taking full advantage of this opportunity. Terminus aims to shape the next generation of technology with AI and IoT and has emerged as an industry integrator, with a product suite that spans service robots and sensors, edge smart products and cloud platforms. Terminus’ AI CITY solution is scene-oriented: it draws on the company's many years of experience in the AIoT industry to bring solutions for social management, energy efficiency improvement, smart parks, cultural innovation and smart finance to communities and cities. It has implemented and maintained more than 8,000 smart scenes in 84 cities.

Terminus’ AI CITY smart solutions are used to enhance traditional offline spaces. Shopping malls, for example, have several areas where operations could be better optimized. Terminus’ smart solutions help malls find these areas by collecting multi-dimensional data on people, goods, stores and orders, and they provide digital operational tools for each functional unit of the mall. This gives customers a more reliable and enjoyable shopping experience. As an added benefit, by tapping into rich data, the mall can achieve higher sales conversion, higher per capita sales and higher inventory turnover.

Terminus’ intelligent fire protection solution tells another positive story in favour of developing smart technologies. Terminus leverages artificial intelligence to create a system of automated fire prevention and control, which lowers the risk to firefighters and law enforcement while strengthening preventive measures against structure fires. Terminus uses GPS, GIS, BIM and other location information systems to map fire stations and fire law enforcement units in real time, so responders can reach the scene more efficiently by dispatching the closest available units. The solution also provides tools for data queries, statistics, general analysis, decision-making and intelligent identification of fire origins. The information is stored in data warehouses that can be mined and analyzed as needed.

Unlike the bottom-up strategies that other technology companies have used to promote smart city construction, Terminus has taken a top-down approach for scaling their solutions. Terminus aims to build science and technology industry parks by integrating its industrial resources. It also aims to enrich and improve its solutions by exploring diverse use cases. By continuing to grow within local smart city operations, Terminus has seized an opportunity to shape core frameworks for future smart cities. It stands to profit from the growing demand for smart technologies, while also contributing to a more sustainable world.

What Else Can We Do to Find the Way to AI CITY

The map to a sustainable AI CITY is unfolding at an unprecedented rate. Two players will influence the continued development of smart cities: governments and industry-leading companies.

For governments, it is essential to support the planning and development of an AI CITY. Most importantly, they must consider the opinions and well-being of their citizens. London is an excellent example: the Greater London Authority developed an online community called "Talk London" that provides a safe space for online discussion, voting, Q&A, surveys and more on impactful topics, ranging from the private rental market to the safety of cyclists around large trucks. This process allows London's citizens to participate in government decision-making, and as a result, the policies the government formulates often reflect the real needs of its people more accurately. By comparison, Google's Toronto smart city plan recently came under fire for going against the needs of local residents.

In closing, it would seem industry-leading companies will be tasked with shouldering more social responsibility. There is a clear commitment from these entities to contribute to technological progress, cultivate highly skilled professionals, and grow their brand. However, there is a crucial role to be played in popularizing and building out legitimate use cases for these new cities. These companies must extend the range of their considerations beyond just technology to those that will be using it. Innovation comes with the innate responsibility to better the lives of people around the globe. If the smart city and AI CITY models can embrace this notion, the future will be brighter than ever before.

https://www.terminusgroup.com/ai-city-will-pave-the-way-for-the-cities-of-tomorr.html


Maximize Efficiency with Track-Pac Traceability Solution

Stay ahead in production with customizable tools built for precision. Discover how Track-Pac Traceability Solution can help!



How to Pass Microsoft Azure Foundation Exam AZ-900 (Part 2 of 3)

The Microsoft Azure Foundation Exam AZ-900, or its AWS equivalent, is usually the first cloud certificate that someone new to the cloud starts with. Both cover basic cloud concepts and give you a solid understanding of the respective services. As the passing score for the AZ-900 is fairly high (700 on Microsoft's scale of 1 to 1,000), it is advisable to study thoroughly for the exam. This post is one of three that together provide the key information about Azure services that you need to pass the Azure Foundation Exam AZ-900.

The following structure is taken from the latest exam syllabus for the Azure Foundation 2021 and indicates the weight of each chapter in the exam. For each chapter, I have written down a very brief summary of key concepts and information that are typically asked for during the exam. The summary is a great resource to check and finalize your studies for the exam. However, if you are new to the topic, you should first start by going through the official Microsoft Azure training materials.

This is part 2 of the three-part series on the Microsoft Azure Foundation exam AZ-900, and it covers the third and fourth topics from the outline below:

1. Describe Cloud Concepts (20-25%)

2. Describe Core Azure Services (15-20%)

3. Describe core solutions and management tools on Azure (10-15%)

3.1 Describe core solutions available in Azure

3.2 Describe Azure management tools

4. Describe general security and network security features (10-15%)

4.1 Describe Azure security features

4.2 Describe Azure network security

5. Describe identity, governance, privacy, and compliance features (20-25%)

6. Describe Azure cost management and Service Level Agreements (10-15%)

3. Describe core solutions and management tools on Azure (10-15%)

3.1 Describe core solutions available in Azure

Virtual Machines

A virtual machine is an IaaS service. Administrators have full control over the operating system and can install any application on it. For example, a virtual machine can have a VPN installed that encrypts all traffic from the virtual machine to a host on the Internet. Administrators can also move a virtual machine between subscriptions.

Scale sets help to handle increased demand, and load balancers help to distribute user traffic among identical virtual machines. Azure Virtual Machine Scale Sets are used to host and manage a group of identical virtual machines.

To avoid an outage when a single data center fails, you need to deploy across multiple availability zones. At least two virtual machines are needed to ensure 99.99% uptime. If a virtual machine is stopped (deallocated), there are no compute costs, but you still pay for its storage.
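To make the 99.99% figure concrete, the short sketch below converts an availability percentage into the downtime it permits, alongside two other common SLA percentages for comparison. It assumes a 365-day year and a 30-day month for simplicity; the official SLA terms define how Microsoft actually measures availability, so treat the results as approximations rather than contractual numbers.

```python
# Approximate the maximum downtime an availability SLA allows.
# Simplifying assumptions: a 365-day year and a 30-day month.
MINUTES_PER_YEAR = 365 * 24 * 60
MINUTES_PER_MONTH = 30 * 24 * 60


def allowed_downtime_minutes(sla_percent, period_minutes):
    """Minutes of downtime permitted by `sla_percent` over `period_minutes`."""
    return period_minutes * (1 - sla_percent / 100)


for sla in (99.9, 99.95, 99.99):
    per_year = allowed_downtime_minutes(sla, MINUTES_PER_YEAR)
    per_month = allowed_downtime_minutes(sla, MINUTES_PER_MONTH)
    print(f"{sla}% -> {per_year:.1f} min/year, {per_month:.1f} min/month")
```

At 99.99%, that is roughly 52.6 minutes per year, or about 4.3 minutes in a 30-day month.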

Containers

Containers are more lightweight than virtual machines. Instead of virtualizing a complete operating system, they package only the application's images and libraries and use the underlying operating system of the host. Multiple containers are managed with Azure Kubernetes Service (AKS), which is an IaaS solution.

Storage

Data disks for virtual machines are backed by blob storage. Blob storage prices depend on the region. Storage costs depend on the amount of data stored, but also on the number of read and write operations. Data transfers between regions also incur costs.
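The cost drivers listed above (stored capacity, read and write operations, and transfers between regions) can be sketched as a toy monthly cost model. All rates in `HypotheticalRates` are made-up placeholders, not Azure prices; real prices vary by region, redundancy option and access tier, so the region only enters this model through the rates you pass in.

```python
from dataclasses import dataclass


@dataclass
class StorageUsage:
    stored_gb: float        # average amount of data stored during the month
    read_ops: int           # number of read operations
    write_ops: int          # number of write operations
    inter_region_gb: float  # data transferred to another Azure region


@dataclass
class HypotheticalRates:
    # Placeholder prices for illustration only, NOT real Azure prices.
    # Real prices differ per region, redundancy option and access tier.
    per_gb_month: float = 0.02
    per_10k_reads: float = 0.004
    per_10k_writes: float = 0.05
    per_gb_transfer: float = 0.02


def monthly_cost(usage: StorageUsage, rates: HypotheticalRates) -> float:
    """Sum the three cost components: capacity, operations and transfer."""
    capacity = usage.stored_gb * rates.per_gb_month
    operations = (usage.read_ops / 10_000) * rates.per_10k_reads + \
                 (usage.write_ops / 10_000) * rates.per_10k_writes
    transfer = usage.inter_region_gb * rates.per_gb_transfer
    return round(capacity + operations + transfer, 2)


usage = StorageUsage(stored_gb=500, read_ops=5_000_000,
                     write_ops=1_000_000, inter_region_gb=100)
print(monthly_cost(usage, HypotheticalRates()))
```

Because the components are priced separately, a workload that stores little data but performs millions of operations can cost noticeably more than its capacity alone would suggest.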

The file service of an Azure Storage account (Azure Files) can be used to map a network drive from on-premises computers to Azure storage.

Cool storage and archive storage can be used for data that is infrequently accessed.

Further Azure Services

Azure SQL Database is a PaaS service. Companies using the service do not control the underlying server that hosts it in Azure

Azure Web App is a PaaS solution, managed through the Azure portal (https://portal.azure.com). One does not have full access to the underlying machine hosting the web application

Azure DevOps is an integration solution for the deployment of code. It provides a continuous integration and delivery toolset

Azure DevTest Labs quickly provides development and test environments, for example 50 customized virtual machines per week

Azure Event Grid collects events from multiple sources and routes them to applications

Azure Databricks is an Apache Spark-based big data analytics service, often used for machine learning

Azure Machine Learning Studio can be used to build, test, and deploy predictive analytics solutions

Azure Logic Apps is a platform to create workflows

Azure Data Lake is a storage repository that holds large amounts of data in its native, raw format

Azure Data Lake Analytics helps to transform data and provide valuable insights on the data itself

Azure SQL Data Warehouse (now part of Azure Synapse Analytics) is a centralized repository of integrated data from one or more sources. It requires no administration of the underlying infrastructure and provides low-latency access to the data

Cosmos DB is a globally distributed, multi-model database service. It can host tables and JSON documents in Azure without requiring administration of the underlying infrastructure

Azure Synapse Analytics is an analytics service that brings together enterprise data warehousing and big data analytics

Azure HDInsight is a managed, full-spectrum, open-source analytics service. It can be used for frameworks such as Apache Hadoop and Apache Spark

Azure Functions and Azure Logic Apps are serverless platforms. Logic Apps focuses on workflows, automation, integration, and orchestration, while Azure Functions simply executes code

Azure App Service hosts web apps and other web-based applications. It does not require you to manage the underlying infrastructure

Azure Marketplace is an online store that offers applications and services either built on or designed to integrate with Azure

IoT Central provides a fully managed SaaS solution that makes it easy to connect, monitor, and manage IoT assets at scale

IoT Hub can be used to monitor and control billions of Internet of Things assets

IoT Edge is an IoT solution that can be used to analyze data on edge devices rather than in the cloud

Azure Time Series Insights provides data exploration and telemetry tools to help refine operational analysis

Azure Cognitive Services provides prebuilt AI capabilities that simplify building intelligent applications

3.2 Describe Azure management tools

Azure Application Insights monitors web applications and detects and diagnoses anomalies in them

The Azure CLI, Azure PowerShell, and the Azure portal can be used on Windows 10, Ubuntu, and macOS machines

Cloud Shell runs in a web browser, so it can be used from an Android device or a macOS computer; a local installation of PowerShell Core 6.0 is not required

Windows PowerShell and Command Prompt can be used to install the CLI on a computer

4. Describe general security and network security features (10-15%)

4.1 Describe Azure security features

The Azure Firewall protects the network infrastructure

The Azure DDoS Protection provides protection against distributed denial of service attacks

Network Security Groups restrict inbound and outbound traffic. They are used to secure Azure environments

Azure Multi-Factor Authentication provides an extra level of security when users log into the Azure Portal. It is available for administrative and non-administrative user accounts

Azure Key Vault can be used to store secrets such as certificates and database passwords

Azure Information Protection encrypts documents and email messages

Azure AD Identity Protection can require users who try to sign in from an anonymous IP address to change their password

Authentication is the process of verifying a user's credentials

4.2 Describe Azure network security

A Network Security Group can filter network traffic to and from Azure resources in an Azure virtual network. It can also enforce traffic restrictions so that, for example, a database server can only communicate with the web server

An Azure Virtual Network can provide an isolated environment for hosting of virtual machines

A Virtual Network Gateway is needed to connect an on-premises data center to an Azure Virtual Network using a Site-to-Site connection

A Local Network Gateway represents the on-premises VPN device in Azure
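The database-to-web-server restriction described in this section can be illustrated with a toy model of how NSG inbound rules are evaluated: rules are checked in priority order (lower number first) and the first matching rule decides. This is a simplified sketch, not the Azure API; the subnet prefixes, the `DenyOtherInbound` rule and the use of `10.0.0.0/16` as a stand-in for the `VirtualNetwork` service tag are assumptions, and real rules also match on protocol, destination address and source port.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class InboundRule:
    name: str
    priority: int              # lower number = evaluated first
    source_prefix: str         # CIDR block the traffic must come from
    dest_port: Optional[int]   # None means "any port"
    allow: bool


def evaluate(rules: list, source_ip: str, dest_port: int) -> tuple:
    """Return (rule_name, allowed) for the first rule that matches."""
    for rule in sorted(rules, key=lambda r: r.priority):
        source_ok = ip_address(source_ip) in ip_network(rule.source_prefix)
        port_ok = rule.dest_port is None or rule.dest_port == dest_port
        if source_ok and port_ok:
            return rule.name, rule.allow  # first match wins; later rules are ignored
    return "no match", False


# Hypothetical NSG on the database subnet: only the web subnet may reach SQL (port 1433).
db_nsg = [
    InboundRule("AllowWebToSql", 100, "10.0.1.0/24", 1433, True),
    InboundRule("DenyOtherInbound", 4000, "0.0.0.0/0", None, False),
    # Simplified versions of two built-in default rules:
    InboundRule("AllowVnetInBound", 65000, "10.0.0.0/16", None, True),
    InboundRule("DenyAllInBound", 65500, "0.0.0.0/0", None, False),
]

print(evaluate(db_nsg, "10.0.1.5", 1433))   # web server -> allowed
print(evaluate(db_nsg, "10.0.2.7", 1433))   # other subnet -> denied
```

Without the custom `DenyOtherInbound` rule, traffic from another subnet in the same virtual network would fall through to the simplified `AllowVnetInBound` default and be allowed, which is why an explicit deny rule is usually added for this kind of restriction.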


How data science helps startups post-2020

Technology has advanced rapidly in recent years, prompting businesses to use it to the fullest for their growth. However, technology also generates a significant amount of data, and getting valuable, actionable insights from that data has become difficult. This is where data science comes into the picture for businesses across verticals. In startups in particular, data scientists often need to build the data architecture from scratch.

Data scientists have to perform multiple tasks in a startup like identifying key business metrics, understanding customer behaviour, developing data products, and testing them for their efficiency. Let us understand in detail how data science can help startups in 2020.

Why is data science important even for non-tech startups?

With increasing competition in the business space, it has become more important than ever for organizations to master the art of personalization. Serving customers at the start of the journey is a challenge, but scaling up the business later is even harder. Data science can help entrepreneurs ramp up their personalization efforts. Retail buyer personas can be identified and created based on shopping history. This lets businesses show new customers what other people with similar preferences have viewed, which helps in making informed recommendations.

How can startups implement data science in their business?

Rather than being integrated at the organizational level, data science should be integrated at the team level, including sales, marketing, product and other teams. Businesses should give data to their data scientists in an appropriate format so they can work efficiently.

The process will be ineffective if companies simply dump data into their data scientists' systems. With data consolidated in a single format, for example in a data lake, data scientists can work more efficiently and productively. Let us look at the various stages of data science in startups.

Data Extraction and Tracking

As the first step, it is important to collect the data that will be analyzed later. Before proceeding, you must identify the customer persona and user base. If you run an ed-tech startup and want to develop an app, you must estimate how likely it is to be used: how many users will install the app, how many active sessions there will be, and how much users are likely to spend.

This makes it important to collect data on these parameters to identify the user base of your app. It is also essential to track specific attributes of product usage. Doing so will help you identify the users who are most likely to opt out of the service and find ways to prevent that.
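A minimal sketch of this kind of tracking, assuming a hypothetical event log of `(user_id, event_name, date)` tuples: it counts sessions per user and flags users who have been inactive for longer than a threshold as churn risks. The 14-day threshold and the event names are assumptions for illustration; a real churn model would draw on many more attributes.

```python
from collections import defaultdict
from datetime import date

# Hypothetical tracking events: (user_id, event_name, event_date).
events = [
    ("u1", "app_install", date(2020, 1, 1)),
    ("u1", "session_start", date(2020, 1, 2)),
    ("u1", "session_start", date(2020, 1, 20)),
    ("u2", "app_install", date(2020, 1, 3)),
    ("u2", "session_start", date(2020, 1, 4)),
    ("u3", "app_install", date(2020, 1, 5)),
]


def summarize_users(events, today, inactive_days=14):
    """Count sessions per user and flag users inactive for more than `inactive_days`."""
    sessions = defaultdict(int)
    last_seen = {}
    for user, event_name, day in events:
        if event_name == "session_start":
            sessions[user] += 1
        last_seen[user] = max(last_seen.get(user, day), day)

    summary = {}
    for user, seen in last_seen.items():
        idle = (today - seen).days
        summary[user] = {
            "sessions": sessions[user],
            "days_inactive": idle,
            "churn_risk": idle > inactive_days,
        }
    return summary


for user, stats in summarize_users(events, today=date(2020, 1, 25)).items():
    print(user, stats)
```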

Creating Data Pipelines

The second step is to build a data pipeline that moves and processes the collected data so that relevant insights can be drawn from it. This step needs careful design, because all later analysis by data scientists relies on the data coming out of the pipeline. The pipeline is usually connected to a database, either a SQL database or a Hadoop platform.
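The extract-transform-load pattern behind such a pipeline can be sketched with Python's standard library alone, using an in-memory SQLite database as a stand-in for the SQL database mentioned above. The CSV content, table name and cleaning rules are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from an app's event tracking.
RAW_CSV = """user_id,event,amount
u1,purchase,19.99
u2,purchase,
u1,purchase,5.01
u3,signup,
"""


def extract(text):
    """Extract: parse raw CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: keep valid purchases and convert amounts to numbers."""
    cleaned = []
    for row in rows:
        if row["event"] != "purchase" or not row["amount"]:
            continue  # skip non-purchase events and rows with missing amounts
        cleaned.append((row["user_id"], float(row["amount"])))
    return cleaned


def load(conn, records):
    """Load: write the cleaned records into a SQL table."""
    conn.execute("CREATE TABLE IF NOT EXISTS purchases (user_id TEXT, amount REAL)")
    conn.executemany("INSERT INTO purchases VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
totals = conn.execute(
    "SELECT user_id, ROUND(SUM(amount), 2) FROM purchases GROUP BY user_id ORDER BY user_id"
).fetchall()
print(totals)
```

Keeping the three stages as separate functions makes each one easy to test and to swap out, for example replacing SQLite with a production database in the load step.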

Product Health Analysis

Data scientists need to analyze the metrics that indicate a product's health. Transforming raw data into usable data that shows the health of the product is a vital function of data scientists, and these metrics let businesses track the product's performance. Several tools help in this process: ETL (extract, transform, load) processes and R are common examples, while KPIs (key performance indicators) are the key metrics used to analyze product performance.
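As one illustration of turning raw activity data into a product-health metric, the sketch below computes daily active users (DAU), monthly active users (MAU) over a trailing 30-day window, and their ratio, often called stickiness. These particular KPIs and the sample activity log are assumptions chosen for illustration; the article does not prescribe specific metrics.

```python
from datetime import date, timedelta

# Hypothetical activity log: day -> set of users active on that day.
activity = {
    date(2020, 3, 1): {"u1", "u2", "u3"},
    date(2020, 3, 15): {"u1", "u4"},
    date(2020, 3, 30): {"u1", "u2"},
}


def dau(activity, day):
    """Daily active users: distinct users active on `day`."""
    return len(activity.get(day, set()))


def mau(activity, day, window=30):
    """Monthly active users: distinct users active in the `window` days ending on `day`."""
    users = set()
    for offset in range(window):
        users |= activity.get(day - timedelta(days=offset), set())
    return len(users)


def stickiness(activity, day):
    """DAU/MAU ratio; values closer to 1 mean users return more often."""
    monthly = mau(activity, day)
    return dau(activity, day) / monthly if monthly else 0.0


day = date(2020, 3, 30)
print(dau(activity, day), mau(activity, day), stickiness(activity, day))
```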

Exploratory Data Analysis

Exploring the data and gaining crucial insights is the next step after establishing a data pipeline. In this exploratory analysis, data scientists examine the shape of the data and the relationships between different features.

Creating predictive models

With the help of machine learning, data scientists can make predictions and classify the data. One of the best tools to forecast the behaviour of a user is predictive modelling. It helps businesses identify how well the users will respond to their product. Startups offering recommendation systems can create a predictive model to recommend products and services based on a user’s browsing history.
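A full predictive model is beyond a short example, but the idea of recommending products from browsing history can be sketched with a simple item co-occurrence count ("people who viewed X also viewed Y"). This is a basic heuristic swapped in for illustration, not a trained machine learning model, and the browsing histories are hypothetical.

```python
from collections import Counter
from itertools import permutations

# Hypothetical browsing histories: user -> products viewed.
histories = {
    "u1": ["laptop", "mouse", "keyboard"],
    "u2": ["laptop", "mouse"],
    "u3": ["laptop", "monitor"],
    "u4": ["mouse", "mousepad"],
}


def build_cooccurrence(histories):
    """Count how often each pair of products was viewed by the same user."""
    co = {}
    for items in histories.values():
        for a, b in permutations(set(items), 2):
            co.setdefault(a, Counter())[b] += 1
    return co


def recommend(co, viewed, k=2):
    """Score unseen products by co-occurrence with what the user viewed."""
    scores = Counter()
    for item in viewed:
        scores.update(co.get(item, Counter()))
    for item in viewed:
        scores.pop(item, None)  # do not recommend products already viewed
    # Sort by score, then alphabetically so ties are broken deterministically.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]


co = build_cooccurrence(histories)
print(recommend(co, ["laptop"]))
```

Counting co-occurrences is cheap and easy to explain, which makes it a reasonable baseline before investing in a learned model.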

Building Products

Building products centred on data can help startups improve their offerings. This happens when data scientists move from training models to deploying them, using different tools to create new data products. Operational issues cannot always be identified in advance; applying the data specifications to an actual product helps surface and handle such data-related issues, which benefits the startup.

Product Experimentation

Before introducing changes in a product, startups must analyze whether the changes will be beneficial and whether customers will accept and embrace them. A/B testing is one of the most common experimentation tools: by running a hypothesis test that compares the different versions, you can draw a statistical conclusion. One limitation of A/B testing is that it can be difficult to control which users end up in each group.
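The hypothesis test mentioned above is often a two-proportion z-test on conversion rates. The sketch below implements it with the normal approximation, which assumes reasonably large, randomly assigned groups; the conversion counts are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare the conversion rates of variants A and B.

    Returns the z statistic and the two-sided p-value, using the pooled
    proportion under the null hypothesis that both rates are equal.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Standard normal CDF: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value


# Hypothetical experiment: 5,000 users per variant.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

Here the p-value falls below the conventional 0.05 threshold, so the difference between a 4% and a 5% conversion rate would usually be treated as statistically significant for groups of this size.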

Data science and automation

Data science makes it easy to automate strenuous and repetitive tasks. The resulting cost savings can be redirected to other areas, helping startups use their resources productively. So automation will not reduce jobs; instead, it gives startups the chance to get a better ROI from their employees. With increased productivity, startups can scale their operations faster, and the available capital can support growth and increase their operational capacity.

Final Thoughts

Data science can help startups improve their product offerings and scale operations. Its importance is huge, as data is the lifeline of startups. As the demand for skilled data scientists is rising, Great Learning can help you learn data science. A data science program will help you understand big data analytics, predictive analytics, neural networks, and much more.

These data science courses are an excellent learning resource and can give you a comprehensive learning experience. You can opt for a Python data science course, a data scientist course, or data science online training. Get in touch with us today to learn more about the courses and the admission process.

0 notes

Text

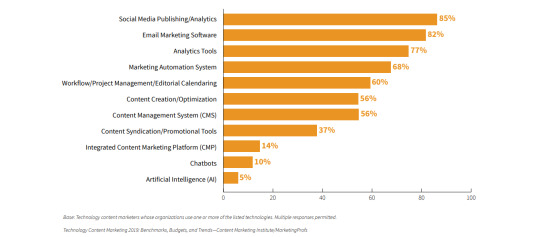

Data Science & AI Trends In India To Watch Out For In 2020 | By Analytics India Magazine & AnalytixLabs

The year 2019 was a strong year for analytics adoption, as the domestic analytics industry grew significantly. There has been a visible shift towards intelligent automation, AI and machine learning that is changing the face of all major sectors, from new policies by the Indian Government to micro-adoption by startups and SMEs. While customer acquisition, investment in enterprise-grade data infrastructure and personalised products were among the trends of 2018, this year our industry interactions suggested that the democratisation of AI and the AI push into hardware and software are the most talked about.

Our annual data science and AI trends report for 2020 explores the key strategic shifts that enterprises are most likely to make in the coming year to stay relevant and intelligent. This year we collaborated with AnalytixLabs, a leading Applied AI & Data Science training institute, to bring out the key trends.

Some of the areas that have seen remarkable developments are deep learning, RPA and neural networks, which in turn are affecting all major industries such as marketing, sales, banking and finance. Among the most popular trends cited by our respondents was the rise of robotic process automation and hyper-automation, which has begun to use machine learning tools to work effectively. The rise of explainable AI is another development likely to climb the popularity charts in the coming year, along with the growing importance of data lakes and the rise of hyperscale data centres. Other trends, like advancements in conversational AI and augmented analytics, are here to stay. Semantic AI, enterprise knowledge graphs, hybrid clouds, self-service analytics, real-time analytics and multilingual text processing were some of the other trends respondents expect to rise in 2020.

01. The Rise Of Hyper-Automation

"2019 has seen rising adoption of Robotic Process Automation (RPA) across various industries. Intelligence infused in automation through data science and analytics is leading to an era of hyper-automation that enables optimization and modernisation. It is cost-effective too but may have risks. 2020 will see enterprises evaluating risks and control mechanisms associated with hyper-automation." Anjani Kommisetti, Country Manager, India & SAARC

"Hyper-automation uses a combination of various ML, automation tools and packaged software to work simultaneously and in perfect sync. These include RPA, intelligent business management software and AI, to take the automation of human roles and organizational processes to the next level. Hyper automation requires a mix of devices to support this process to recreate exactly where the human employee is involved with a project, after which it can carry out the decision-making process independently." Suhale Kapoor, Executive VP & Co-founder, Absolutdata

"Automation is going to increase in multitudes. Over 30% of data-based tasks will become automated. This will result in higher rates of productivity and analysts will have broader access to data. Automation will additionally assist decision makers to take better decisions for their customers with the help of correct analytics." Vishal Shah, Head of Data Sciences, Digit Insurance

02. Humanized Artificial Intelligence Products

"We will see AI getting deeper into homes and lifestyle, and human interaction would begin to increase in the coming year. This means a reliable AI engine. We have already seen some voice-based technology making a comfortable place in homes. Now, with Jio Fiber coming home and Jio disrupting the telecom sector, it will be interesting to see how the data can be leveraged to improve/develop devices that are more human than products." Tanuja Pradhan, Head- Special Projects, Consumer Insights & New Commerce Analytics, Jio

"Rise of AI has been sensationalised in the media as a battle between man and machine and there are numerous numbers flying around on impact on job loss for millions of workers globally. However, less than 10% of roles will really get automated in the near future. Most of the impact is rather on non-value-added tasks which will free-up time for humans to invest in more meaningful activities. We are seeing more and more companies realising this now and investing in reskilling workforce to co-exist with and take advantage of technology." Abhinav Singhal, Director, tk Innovations, Thyssenkrupp

"The effects of data analysis on vast amounts of data have now reached a tipping point, bringing us landmark achievements. We all know Shazam, the famous musical service where you can record sound and get info about the identified song. More recently, this has been expanded to more use cases, such as clothes where you shop simply by analyzing a photo, and identifying plants or animals. In 2020, we’ll see more use-cases for “shazaming” data in the enterprise, e.g. pointing to a data-source and getting telemetry such as where it comes from, who is using it, what the data quality is, and how much of the data has changed today. Algorithms will help analytic systems fingerprint data, find anomalies and insights, and suggest new data that should be analyzed with it. This will make data and analytics leaner and enable us to consume the right data at the right time." Dan Sommer, Market Intelligence Lead, Qlik

03. Advancements in Natural Language Processing & Conversational AI

"Data scientists form the backbone of an organisation’s success and employers have set the bar high while hiring these unicorns. With voice search and voice assistants becoming the next paradigm shift in AI, organisations are now possessing a massive amount of audio data, which means those with NLP skills have an edge over others. While this has always been a part of data science, it has gained more steam than ever due to the advancements in voice searches and text analysis for finding relevant information from documents." Sourabh Tiwari, CIO, Meril Group of Companies

"NLP is becoming a necessary element for companies looking to improve their data analytics capabilities by enhancing visualized dashboards and reports within their BI systems. In several cases, it is facilitating interactions via Q&A/chat mediums to get real-time answers and useful visualizations in response to data-specific questions. It is predicted that natural-language generation and artificial intelligence will be standard features of 90% of advanced business intelligence platforms including those which are backed by cloud platforms. Its increasing use across the market indicates that, by bringing in improved efficiency and insights, NLP will be instrumental in optimizing data exploration in the years to come." Suhale Kapoor, Executive VP & Co-founder, Absolutdata

"The advent of transformers for solving sequence-to-sequence tasks has revamped natural language processing and understanding use-cases, dramatically. For instance, the BERT framework built using transformers is being widely used for the development of natural language applications like Bolo, demonstrating the applicability of AI for education. AI in education is here to stay." Deepika Sandeep, Practice Head, AI & ML, Bharat Light & Power

"2019 was undeniably the year of personal assistants. Though Google Assistant and Siri have seen many winters since their launch, 2019 saw Amazon Alexa and Google Home making their way into our personal space, and in some cases they have already become an integral part of some households. Ongoing research in the area of computational linguistics will definitely change the way we communicate with machines in the coming years." Ritesh Mohan Srivastava, Advanced Analytics Leader, Novartis

04. Explainable Artificial Intelligence (XAI)

"Decisions and predictions made by artificial intelligence are becoming complex and critical, especially in areas of fraud detection, preventive medical science and national security. Trusting a neural network has become increasingly difficult owing to the complexity of its work. Data scientists train and test a model for accuracy and positive predictive value. However, they hesitate to use it in areas of fraud detection, security and medicine. Models inherently lack transparency and offer no explanation of what decision is made or why something can go wrong. Artificial intelligence can no longer be a black box, and data scientists need to understand the impact, application and decisions the algorithm is making. XAI will be an exciting new trend in 2020. Its model-agnostic nature allows it to be applied to answer some critical questions in data science." Pramod Singh, Chief Analytics Officer & VP, Envestnet|Yodlee

"Another area that has been taking shape over the last few years is Explainable AI. While the data science community is divided on how much explainability should be built into ML models, top-level decision makers are extremely keen to get as much insight as possible into the so-called AI mind. As the business need for explainability increases, people will build methods to peep into AI models to get a better sense of their decision-making abilities. Companies will also consider surfacing some of these explanations to their users in an effort to build more user confidence and trust in the company’s models. Look out for this area in the next 5 years." Bhavik Gandhi, Sr. Director, Data Science & Analytics, Shaadi.com

05. Augmented Analytics & Artificial Intelligence

"Augmented Analytics is the merger of statistical and linguistic technology. It is connected to the ability to work with Big Data and transform it into smaller, usable subsets that are more informative. It makes use of Machine Learning and Natural Language Processing algorithms to extract insights. Data scientists spend 80% of their time on data collection and data preparation. The final goal of augmented analytics is to completely replace this standard process with AI, taking care of the entire analysis process from data collection to business recommendations to decision makers." Kavita D. Chiplunkar, Head, Data Science, Infinite-Sum Modelling Inc.

"Augmented assistance to exploit human-algorithm synergy will be a big trend in the coming years. While decision support systems have been around for a long time, we believe that advancements in conversational systems will propel digital workers into a totally different realm. We witnessed early progress in ChatOps in 2019, and 2020 should see the development of similar technology for diverse personas like Database Admins, Data Stewards and Governance Officers." Sameep Mehta, Senior Manager, Data & AI Research, IBM Research India

"With the proliferation of AI-based solutions comes the need to show how they deliver value. This is giving rise to the evolution of explainable “white box” algorithms and the development of frameworks that allow for the encoding of domain expertise and a strong emphasis on data storytelling." Zabi Ulla S, Sr. Director Advanced Analytics, Course5 Intelligence

"The increasing amount of big data that enterprises have to deal with today, from collection to analysis to interpretation, makes it nearly impossible to cover every conceivable permutation and combination manually. Augmented analytics is stepping in to ensure crucial insights aren’t missed, while also unearthing hidden patterns and removing human bias. Its widespread implementation will make valuable data more widely accessible, not just to data and analytics experts, but to key decision-makers across business functions." Suhale Kapoor, Executive Vice President & Co-founder, Absolutdata

06. Innovations In Data Storage Technologies

"The data explosion grows every year, and 2019 was no different. To manage this ever-increasing data, software-defined storage (SDS) saw an exponential rise, not just to attain agility but also to make data more secure, which has been a boon to SMEs. 2020 will see the SME/SMB sectors rising on the wave of intelligent transformation, making intelligent choices and reducing the total cost of ownership." Vivek Sharma, MD India, Lenovo DCG

"Hyperscale data centre construction has dominated the data centre industry in 2019 and provided enterprises with an opportunity to adopt Data Centre Infrastructure Management (DCIM) solutions, befitting their modern business and environment. With the help of DCIM solutions, 2020 will see enterprises designing smart data centres enabling operators to integrate proactive sustainability and efficiency measures." Anjani Kommisetti, Country Manager, India & SAARC, Raritan

"There is a rise of new innovations in data collection and storage technologies that will directly impact how we store and process data and do data science. Graph database systems, in particular, will greatly expedite data science model building, scale analytics at rapid speed and provide greater flexibility, allowing users to insert new data into a graph without changing its overall structure." Zabi Ulla S, Sr. Director, Advanced Analytics, Course5 Intelligence

"Data Science and Data Engineering are working more closely than ever, and T-shaped data scientists are very popular! With an increasing need for data scientists to deploy their algorithms and models, they need to work closely with engineering teams to ensure the right computation power, storage, RAM, streaming abilities, etc. are made available. A lot of organisations have created multi-disciplinary teams to achieve this objective." Abhishek Kothari, Co-founder, Flexi Loans

07. Data Privacy Getting Mainstream

"Consumers have finally matured to the need for robust data privacy as well as data protection in the products they use, and app developers cannot ignore that expectation anymore. In 2020, we can expect much more investment towards this facet of the business as well as find entirely new companies coming up to cater to this requirement alone." Shantanu Bhattacharya, Data Scientist, Locus

"Data security will be the most challenging trend. Most AI-driven businesses are in nascent stages and have grown too fast. Businesses will have to relook at data security and build safer, more robust infrastructure. The data and analytics industry will face this challenge in 2020 due to a lack of orientation towards data security in India. The focus so far has been on growth; 2020 will bring the focus to sustaining this growth by securing data and building sustainability." Dr Mohit Batra, Founder & CEO, Marketmojo.com

"As governments start to dive deeper into data and technology, more and more sensitive information will be unearthed. More importantly, we see an increasing trend in collaboration between governments and the private sector for the design and delivery of public goods. To make the most of this phase of innovation, it will be critical for governments at all levels not only to articulate how they see the contours of data sharing and usage (in India, we currently have a draft Personal Data Protection Bill) but also how these nitty-gritties are embedded in the day-to-day working of governments and decision makers." Poornima Dore, Head, Data-driven Governance, Tata Trusts

08. Increasing Awareness On Ethical Use Of Artificial Intelligence

"The analytics community is starting to awaken to the profound ways our algorithms will impact society, and is now attempting to develop guidelines on ethics for our increasingly automated world. The EU has developed principles for ethical AI, as have the IEEE, Google, Microsoft, the OECD, and other countries and corporations. We don’t have the perfect answers yet for concerns around privacy, bias or criminal misuse, but it’s good to see at least an attempt in the right direction." Abhinav Singhal, Director, tk Innovations, Thyssenkrupp

"Artificial Intelligence comes with great challenges, such as AI bias, accelerated hacking, and AI terrorism. The success of using AI for good depends upon trust, and that trust can only be built over time with the utmost adherence to ethical principles and practices. As we plough ahead into the 2020s, the only way we can realistically see AI and automation take the world of business by storm is if they are smartly regulated. This begins with incentivising further advancements and innovation in the tech, which means regulating applications rather than the tech itself. Whilst there is a great deal of unwarranted fear around AI and its potential consequences, we should be optimistic about a future where AI is ethical and useful." Asheesh Mehra, Co-founder and Group CEO of AntWorks

09. Quantum Computing & Data Science

"Quantum computers perform calculations based on the probability of an object's state before it is measured, rather than just 1s or 0s, which means they have the potential to process exponentially more data than conventional computers. In a quantum system, qubits (quantum bits) store much more data and can run complex computations within seconds. Quantum computing in data science can allow companies to test and refine enormous datasets for various business use cases. Quantum computers can quickly detect, analyze, integrate and diagnose patterns in large, scattered datasets." Vivek Zakarde, Segment Head - Technology (Head BI & DWH), Reliance General Insurance Company Ltd.

"While still in its very nascent stages, quantum computing holds a promise that no one can ignore. The ability to do 10,000 years of computations in 200 seconds, coupled with the exabytes of data that we generate daily, can allow data scientists to train massive, super-complex models that accomplish complex tasks with human or superhuman levels of accuracy. Eight to ten years down the line, we will be seeing models trained on quantum computers, and for that we need AI that works on quantum computers; this area will grow a lot in the coming years." Bhavik Gandhi, Sr Director, Data Science & Analytics, Shaadi.com

10. Saving The Data Lakes

"While Data Lakes may have solved the problem of data centralization, they have in turn become an unmanaged dump yard of data. As the veracity of data becomes suspect, analytics development has slowed down. Pseudo-anonymization to check the quality of incoming data, strong governance and lineage processes to ensure integrity, and a marketplace approach to consumption will emerge as the next frontier for enterprises in their journey to becoming data-driven. Further, smart data discovery will enable the uncovering of patterns and trends, maximizing organizations’ ROI by breaking down information silos." Saurav Chakravorty, Principal Data Scientist, Brillio

"Data Lakes will become more mainstream as the technology matures and consolidates. External data will become one of the main data sources, and the Data Lake will be the de facto choice for forming the base of a single customer view. It will help improve the customer journey, thereby increasing efficiency." Vishal Shah, Head of Data Science, Digit Insurance

5 Ways to Improve Your B2B Content Marketing Strategy in 2020

There’s no point in us saying that ‘content is King’. We all know this. And practically all businesses are incorporating content marketing — in one form or another — into their promotional and advertising efforts.

The problem is that the content marketing landscape is changing… and we’re not keeping up.

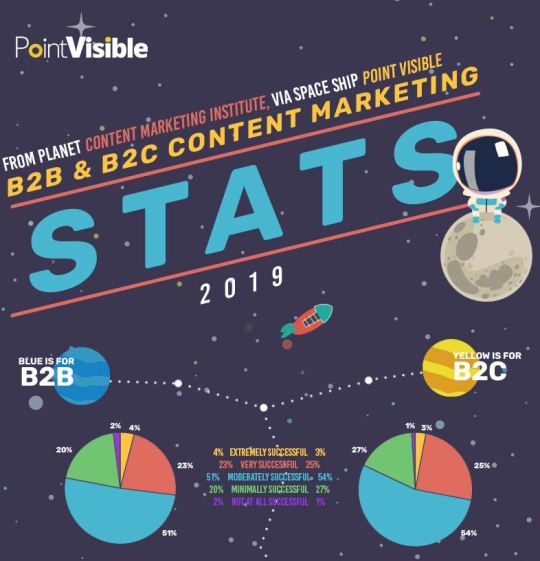

Consider this. Content marketing statistics show that only around 30% of all marketers lead really successful content marketing campaigns.

Why is that the case, if we know that everyone is putting so much effort and trust into content marketing?

The answer is simple: they’re failing to adapt as the marketing landscape evolves.

With 2020 on its way, now is the perfect time to take a look at which parts of your content marketing strategy missed the mark in 2019.

Today we'll explore emerging trends in content marketing that could help us all to create more effective strategies for the new year.

So let’s take a look at five ways to improve your content marketing strategy in 2020.

1. Performance Monitoring

Tracking key performance indicators… it seems like a no-brainer, right?

Yet according to the numbers, only around 50% of marketers actually measured their content marketing return on investment in 2018.

What’s particularly worrying about this is that it implies that some organizations could have been placing their time, money, and human resources into lengthy campaigns that weren’t achieving the desired result.

In 2020, there must be a greater focus on monitoring campaigns and tailoring them as deemed necessary.

Perhaps the reason why 50% of marketers don’t measure their success is that they think of content marketing as being more of a science than an art. It’s easily done.

After all, we’re beginning to understand a lot more about what works, and what doesn’t. But content marketing is an art.

There’s no hard and fast rule about what marketers should do, and it’s natural that there’ll be a little trial and error involved. Next year, brands should be working to monitor, track, reflect on, and adjust their campaigns.

Here are some ways that make it easier to keep a close eye on the overall success of your campaign:

Analyze audience behavior on your site. Are your readers clicking through to other areas of your website, or taking some sort of action towards conversion, such as making an inquiry? If not, it suggests that the quality of your leads isn’t quite as good as it could be.

Determine your value. Tools such as Google Analytics can be useful in determining the sales ‘value’ of each page and, more importantly, of the content on that particular page. These tracking tools help you create a route map, highlighting which pages are responsible for converting sales-qualified leads (SQLs).