#Cloud-native


Text

AI-Powered Sports Broadcasting: Transforming Athletic Storytelling

Sports broadcasting has always been about capturing lightning in a bottle—seizing those split-second moments of athletic brilliance before they disappear into memory. Traditionally, audiences have experienced these moments through a narrow lens, limited by conventional production constraints. But imagine if that single perspective could multiply into countless viewpoints. Picture experiencing these moments with unprecedented intimacy and personalization. Envision every competitor's journey being documented, regardless of their position in the field. This transformation isn't a distant dream—it's the present reality emerging from the convergence of artificial intelligence and live sports production.

The partnership between TVU Networks and Red Bull Media House for the Wings for Life World Run exemplifies this broadcasting evolution. This distinctive marathon format features participants being pursued by a "Catcher Car" across multiple global locations simultaneously, with the final runner caught declared the winner. The event's massive scale—thousands of simultaneous participants worldwide—creates unprecedented challenges for traditional broadcasting approaches.

The 2025 Wings for Life World Run showcased TVU Networks' AI-enhanced production workflow. Its cloud-based infrastructure processed feeds from diverse sources, including professional broadcast equipment and consumer smartphones. The key technology, TVU Search, used facial recognition and race number detection to identify athletes and compile footage of them almost instantly. Instead of following only the lead runners, production teams could find and assemble footage of any participant, anywhere in the world, in near real time. This capability shows how AI can democratize sports coverage and create more personalized and inclusive viewing experiences.
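To make the idea of compiling footage by race number concrete, here is a minimal sketch in Python. It assumes that some upstream recognition step has already produced detections (which feed, which time range, which bib number); the `Detection` type and both function names are hypothetical and are not part of TVU Search or any TVU API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One sighting of a runner in one feed (hypothetical record shape)."""
    feed: str       # e.g. a broadcast camera or a smartphone feed
    start_s: float  # start of the sighting, seconds from race start
    end_s: float    # end of the sighting
    bib: str        # race number read from the runner's bib


def build_index(detections):
    """Group detections by bib number, ordered by start time."""
    index = defaultdict(list)
    for detection in detections:
        index[detection.bib].append(detection)
    for clips in index.values():
        clips.sort(key=lambda d: d.start_s)
    return index


def compile_story(index, bib):
    """Return a chronological clip list for one runner (empty if unseen)."""
    return [(d.feed, d.start_s, d.end_s) for d in index.get(bib, [])]
```

With an index like this, a producer-facing tool could look up any participant by bib number rather than scrubbing through every feed by hand.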

AI integration in sports broadcasting has evolved beyond experimental applications into a rapidly expanding industry sector. Innovative companies are developing sophisticated algorithms that automate labor-intensive processes, generate novel content formats, and provide viewers with unprecedented control over their entertainment experience.

TVU Networks leads the charge in utilizing AI and cloud technologies for creating more flexible and economical broadcast operations. Their comprehensive solution portfolio tackles modern sports production challenges, spanning remote production capabilities to content management and distribution systems. TVU Search demonstrates AI's potential to revolutionize post-production through automated indexing and content search functionality using facial recognition, brand detection, and speech-to-text conversion. The cloud-based ecosystem enables distributed production teams to collaborate seamlessly in real-time, minimizing on-site crew requirements and expensive satellite infrastructure, thereby reducing costs and environmental impact. Solutions like the TVU Anywhere application transform smartphones into broadcast-quality cameras, enabling smaller leagues and specialized sports to create professional-grade live content.

EVS, the industry standard for live sports production and instant replay systems, now incorporates AI to enhance their market-leading replay technology. Their XtraMotion system employs machine learning to create ultra-slow-motion replays from any camera perspective, including those not captured with high-speed equipment. This provides broadcasters expanded creative possibilities for spectacular slow-motion replay sequences from multiple viewpoints. EVS also leverages AI for sports officiating enhancement through their Xeebra system, utilized for video assistant refereeing in soccer and other sports. The AI-assisted Video Offside Line feature helps referees make quicker and more precise offside determinations. Additionally, EVS explores AI applications for automated highlight generation through real-time game data and video feed analysis.

Stats Perform, the premier sports data and analytics provider, harnesses AI to discover fresh insights and create compelling fan content. Their OptaAI platform utilizes natural language generation for automated written content creation, including game previews, recaps, and player profiles, enabling broadcasters and media organizations to produce quality content at significantly reduced costs compared to manual creation. Through historical data analysis, Stats Perform's AI models generate game outcome and player performance predictions that integrate seamlessly into broadcasts. Computer vision technology analyzes video feeds to extract player tracking data, ball trajectory information, and tactical formations, creating sophisticated analytics and visualizations for deeper game understanding.

WSC Sports has positioned itself as the automated video highlight industry leader. Their AI-powered platform analyzes live broadcasts in real-time, automatically identifying and clipping pivotal moments including goals, touchdowns, and slam dunks, enabling broadcasters to deliver highlights to fans almost instantaneously. The platform's strength lies in creating personalized highlights for individual fans by analyzing user data and preferences, automatically generating customized highlight reels based on personal favorites. WSC Sports facilitates easy distribution of these personalized highlights across multiple platforms, from social media and websites to mobile applications and over-the-top services.

Grabyo, a cloud-based video production platform, has integrated AI to streamline broadcaster and media company workflows. Similar to WSC Sports, Grabyo uses AI for automatic key moment identification and clipping from live streams for rapid highlight creation. Their platform automatically logs and tags content with comprehensive metadata, simplifying search and retrieval of specific clips. As a cloud-native solution, Grabyo enables seamless collaboration between remote production teams, particularly valuable for sports broadcasters covering multi-location events.

While these companies currently lead the market, rapid innovation ensures the landscape continues evolving. The next innovation wave will likely emphasize hyper-personalization, with broadcasts completely customized to individual viewers, offering choices of camera angles, commentary teams, and displayed graphics and statistics. As AI technology advances, we can anticipate AI-powered systems producing high-quality live sports broadcasts with minimal human intervention, enabling coverage of broader sports and events, from grassroots to professional levels. The convergence of AI, augmented reality, and virtual reality will create immersive experiences, such as viewing games from favorite players' perspectives or having interactive graphics overlaid on playing fields. AI will also generate new revenue opportunities, from personalized advertising to innovative interactive content formats.

The most commercially significant trend involves AI-driven personalization creating entirely new revenue models. Fox Sports' natural language query system allows fans to request specific highlight types—"Show me all Hail Mary passes" or "Find diving catches from the third quarter"—transforming passive viewing into interactive exploration. Amazon Prime Video's Prime Insights provides personalized metadata and predictive analytics, while platforms like Pulselive use Amazon Personalize to achieve 20% increases in video consumption through AI-powered content recommendations. These systems learn individual viewing patterns, team preferences, and engagement behaviors to create customized experiences.

The advertising implications are substantial. AI-driven targeted advertising achieves higher engagement rates through real-time campaign optimization, while dynamic ad insertion adapts content based on viewer demographics, location, and viewing history. Advanced analytics create new data monetization opportunities, with sports organizations generating revenue from insights and predictions extending far beyond traditional broadcasting.

The AI broadcasting market is projected to reach $27.63 billion by 2030, growing at 21.1% annually, but these figures only partially capture the transformation. The real revolution lies in AI's democratization of high-quality sports production and the creation of entirely new content categories. By 2026-2027, we can expect AI systems to adapt dynamically to game pace and crowd sentiment, automatically adjusting camera angles, graphics packages, and even commentary tone based on real-time emotional analysis. Automated highlight generation will extend beyond individual plays to create narrative-driven content following story arcs across entire seasons.

Integration with augmented and virtual reality will create immersive viewing experiences where AI curates personalized camera angles, statistical overlays, and social interaction opportunities. For lower-tier events and niche sports, AI represents complete paradigm transformation. Fully autonomous broadcasting systems will enable professional-quality coverage for events that could never justify traditional production costs. High school athletics, amateur leagues, and emerging sports will gain access to broadcast capabilities rivaling professional productions.

Implementation costs remain substantial—comprehensive AI broadcasting systems require $50,000 to $500,000+ investments—and integration with existing infrastructure presents ongoing challenges. Quality control concerns persist, particularly for live environments where AI failures have immediate consequences. The industry faces legitimate questions about job displacement, with traditional camera operators, editors, and production assistants confronting increasingly automated workflows. However, experience suggests AI creates new roles even as it eliminates others. AI systems require human oversight, creative direction, and technical expertise that didn't exist five years ago.

Regulatory considerations around AI-generated content, deepfakes, and automated decision-making will require industry-wide standards and transparency measures. The EU AI Act, which entered into force in 2024 and whose obligations phase in over the following years, already affects sports media applications, with requirements for accountability and explainability in AI systems.

Reflecting on the rapid evolution from the TVU Networks case study to broader industry transformation, one thing becomes clear: AI in sports broadcasting isn't approaching—it's already here. Successful implementations at major events like the Olympics, Masters, and professional leagues demonstrate that AI systems are ready for mainstream adoption across all sports broadcasting levels. The convergence of cloud infrastructure, machine learning, computer vision, and 5G connectivity creates opportunities that seemed like science fiction just a few years ago. We're not merely automating existing workflows; we're creating entirely new sports content forms that enhance fan engagement while reducing production costs and environmental impact.

Organizations embracing this transformation will flourish, while those resisting will find themselves increasingly irrelevant in a market rewarding innovation, efficiency, and fan-centric experiences. The AI revolution in sports broadcasting isn't just changing content production—it's redefining what sports broadcasting can become. The future belongs to those who can harness these tools while maintaining the human creativity and storytelling that make sports broadcasting compelling. The race is underway, and the finish line is already visible.

0 notes

Text

The concerted effort of maintaining application resilience

New Post has been published on https://thedigitalinsider.com/the-concerted-effort-of-maintaining-application-resilience/


Back when most business applications were monolithic, ensuring their resilience was by no means easy. But given the way apps run in 2025 and what’s expected of them, maintaining monolithic apps was arguably simpler.

Back then, IT staff had a finite set of criteria on which to improve an application’s resilience, and the rate of change to the application and its infrastructure was a great deal slower. Today, the demands we place on apps are different, more numerous, and subject to a faster rate of change.

There are also simply more applications. According to IDC, there are likely to be a billion more in production by 2028, many of them running on cloud-native code and mixed infrastructure. With greater technological complexity and higher expectations of responsiveness and quality, ensuring resilience has become a far more complex task.

Multi-dimensional elements determine app resilience, dimensions that fall into different areas of responsibility in the modern enterprise: Code quality falls to development teams; infrastructure might be down to systems administrators or DevOps; compliance and data governance officers have their own needs and stipulations, as do cybersecurity professionals, storage engineers, database administrators, and a dozen more besides.

Because the definition of resilience depends on who’s asking, and each group brings its own tools, it’s small wonder that dozens of resilience tools are typically in play at any one time in the modern enterprise.

Determining resilience across the whole enterprise’s portfolio, therefore, is near-impossible. Monitoring software is silo-ed, and there’s no single pane of reference.

IBM’s Concert Resilience Posture simplifies the complexities of multiple dashboards, normalizes the different quality judgments, breaks down data from different silos, and unifies the disparate purposes of monitoring and remediation tools in play.

Speaking ahead of TechEx North America (4-5 June, Santa Clara Convention Center), Jennifer Fitzgerald, Product Management Director, Observability, at IBM, took us through the Concert Resilience Posture solution, its aims, and its ethos. On the latter, she differentiates it from other tools:

“Everything we’re doing is grounded in applications – the health and performance of the applications and reducing risk factors for the application.”

The app-centric approach means the bringing together of the different metrics in the context of desired business outcomes, answering questions that matter to an organization’s stakeholders, like:

Will every application scale?

What effects have code changes had?

Are we over- or under-resourcing any element of any application?

Is infrastructure supporting or hindering application deployment?

Are we safe and in line with data governance policies?

What experience are we giving our customers?

Jennifer says IBM Concert Resilience Posture is “a new way to think about resilience – to move it from a manual stitching [of other tools] or a ton of different dashboards.” Although the definition of resilience can be elusive and shifts with the criteria in play, Jennifer says that at its core it comprises eight non-functional requirements (NFRs):

Observability

Availability

Maintainability

Recoverability

Scalability

Usability

Integrity

Security

NFRs are important everywhere in the organization, and there are perhaps only two or three that are the sole remit of one department – security falls to the CISO, for example. But ensuring the best quality of resilience in all of the above is critically important right across the enterprise. It’s a shared responsibility for maintaining excellence in performance, potential, and safety.

What IBM Concert Resilience Posture gives organizations, different from what’s offered by a collection of disparate tools and beyond the single-pane-of-glass paradigm, is proactivity. Proactive resilience comes from its ability to give a resilience score, based on multiple metrics, with a score determined by the many dozens of data points in each NFR. Companies can see their overall or per-app scores drift as changes are made – to the infrastructure, to code, to the portfolio of applications in production, and so on.
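As an illustration of how a single score could be built from data points grouped by NFR, and how drift between two snapshots could be measured, here is a minimal Python sketch. It is not IBM's scoring method: the 0-100 scale, the simple averaging, the equal default weights, and all function names are assumptions made for the example.

```python
from statistics import mean

# The eight NFRs named in the article.
NFRS = [
    "observability", "availability", "maintainability", "recoverability",
    "scalability", "usability", "integrity", "security",
]


def nfr_score(data_points):
    """Average the data points for one NFR (assumed to be on a 0-100 scale)."""
    return mean(data_points)


def resilience_score(metrics, weights=None):
    """Weighted mean of the per-NFR scores; equal weights unless given."""
    weights = weights or {nfr: 1.0 for nfr in NFRS}
    total_weight = sum(weights[nfr] for nfr in NFRS)
    weighted = sum(nfr_score(metrics[nfr]) * weights[nfr] for nfr in NFRS)
    return weighted / total_weight


def drift(before, after, weights=None):
    """Change in overall score between two snapshots (negative = worse)."""
    return resilience_score(after, weights) - resilience_score(before, weights)
```

Computing the same score before and after a code or infrastructure change is what makes a drift figure possible; a real product would presumably use far richer inputs and weighting than this.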

“The thought around resilience is that we as humans aren’t perfect. We’re going to make mistakes. But how do you come back? You want your applications to be fully, highly performant, always optimal, with the required uptime. But issues are going to happen. A code change is introduced that breaks something, or there’s more demand on a certain area that slows down performance. And so the application resilience we’re looking at is all around the ability of systems to withstand and recover quickly from disruptions, failures, spikes in demand, [and] unexpected events,” she says.

IBM’s acquisition history points to some of the complementary elements of the Concert Resilience Posture solution: Instana for full-stack observability and Turbonomic for resource optimization, for example. But the whole is greater than the sum of the parts. There’s an AI-powered continuous assessment of all elements that make up an organization’s resilience, so there’s one place where decision-makers and IT teams can assess, manage, and configure the full stack’s resilience profile.

The IBM portfolio of resilience-focused solutions helps teams see when and why loads change and therefore where resources are wasted. It’s possible to ensure that necessary resources are allocated only when needed, and systems automatically scale back when they’re not. That sort of business- and cost-centric capability is at the heart of app-centric resilience, and means that a company is always optimizing its resources.
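The idea of allocating resources only when needed and scaling back otherwise can be sketched with a simple proportional rule, similar in spirit to the one used by common horizontal autoscalers. This is an illustrative assumption, not a description of how Turbonomic or any IBM product decides; the function name, the 60% target, and the replica bounds are all hypothetical.

```python
def desired_replicas(current, utilization_pct, target_pct=60,
                     min_replicas=1, max_replicas=20):
    """Scale the replica count so average utilization approaches the target.

    Uses integer percentages and integer ceiling division so the result
    does not depend on floating-point rounding.
    """
    # Ceiling of (current * utilization_pct / target_pct).
    raw = -(-current * utilization_pct // target_pct)
    # Clamp to the allowed range so we never scale to zero or without bound.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas running at 90% against a 60% target would scale out to six, while ten replicas idling at 30% would scale back to five.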

Overarching all aspects of app performance and resilience is the element of cost. Throwing extra resources at an under-performing application (or its supporting infrastructure) isn’t a viable solution in most organizations. With IBM, organizations get the ability to scale and grow, to add or iterate apps safely, without necessarily having to invest in new provisioning, either in the cloud or on-premises. Plus, they can see how any changes impact resilience. It’s about making the best use of what’s available and winning back capacity, all while getting the best performance, responsiveness, reliability, and uptime across the enterprise’s application portfolio.

Jennifer says, “There’s a lot of different things that can impact resilience and that’s why it’s been so difficult to measure. An application has so many different layers underneath, even in just its resources and how it’s built. But then there’s the spider web of downstream impacts. A code change could impact multiple apps, or it could impact one piece of an app. What is the downstream impact of something going wrong? And that’s a big piece of what our tools are helping organizations with.”

You can read more about IBM’s work to make today’s and tomorrow’s applications resilient.

#2025#acquisition#ADD#ai#AI-powered#America#app#application deployment#application resilience#applications#approach#apps#assessment#billion#Business#business applications#change#CISO#Cloud#Cloud-Native#code#Companies#complexity#compliance#continuous#convention#cybersecurity#data#Data Governance#data pipeline

0 notes

Text

Cloud-Native Applications: Efficient Development and Deployment

In today's dynamic IT landscape, cloud-native applications are becoming increasingly important. They enable companies to respond quickly to changing market conditions and to develop innovative solutions. In this article, we will cover the fundamentals and architectural principles of cloud-native applications as well as the optimization of development and deployment in the cloud…

#Automatisierung#Cloud-Native#Containerisierung#DevOps#Innovation#Managed Services#Softwareentwicklung

0 notes

Link

Migrating your enterprise service bus (ESB) integration to a cloud-native pattern can be a significant undertaking. However, the potential benefits, like increased agility, scalability, and resiliency, are well worth it. This kind of migration involves rearchitecting your integration solution to leverage cloud services and take advantage of cloud-native principles. But why migrate from an ESB? ...

0 notes

Text

Delivering Global Solutions with Microservices, Cloud-Native APIs and Inner Source

The Challenge: The high-level requirement was to deliver a global digital voucher solution across various retail machines, consumer devices, and regions.

However, there were additional requirements. We needed to support small regional marketing teams, who needed a simple, flexible solution that was easy to use. Our small development team also had to provide operational support.

We also had to consider the non-functional requirements, such as usability, compatibility, security, and performance.

Usability was a concern for both internal users and customers. We wanted to create a user-friendly solution that would make it easy for customers to access and redeem their digital vouchers without any confusion. Analytics and management of the digital vouchers were another concern: we also wanted the solution to provide valuable insights and feedback to the marketers, so that they could optimize their campaigns and increase their conversions.

The Solution: We built cloud-based microservices with clear and robust APIs, following MACH architecture. MACH is a modern approach to software development that stands for Microservices, API-first, Cloud-native, and Headless.

Microservices are small independent services that communicate over well-defined APIs. This way, we could create, update, and deploy features faster and easier, without affecting the whole system. Building cloud-native APIs enabled us to scale, adapt, and reuse product components.

We chose AWS as our cloud provider. We benefited from its global infrastructure, which provided high network availability and ensured a performant solution across the globe. By using AWS cloud services for storage, databases, and security, we reduced the overhead and maintenance costs of our solution and shortened our time to market.

Following an API-first approach, we created well-defined contracts for each API so that dependent development teams could work in parallel and shorten implementation timelines. This approach was beneficial internally too. We improved development maturity by enforcing coding procedures and defining benchmarks for performance and availability. We also followed Agile methodology, which allowed us to fail fast and learn from our mistakes. We iterated quickly and responded swiftly to customer feedback.
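To illustrate how an agreed contract lets dependent teams work in parallel, here is a minimal Python sketch. The request and response shapes, the `VoucherApi` protocol, and the in-memory stub are all hypothetical names invented for this example; they are not the project's actual API.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class RedeemRequest:
    """Hypothetical request shape agreed between teams."""
    voucher_code: str
    device_id: str
    region: str


@dataclass(frozen=True)
class RedeemResponse:
    """Hypothetical response shape agreed between teams."""
    redeemed: bool
    reason: str


class VoucherApi(Protocol):
    """The contract: any implementation must offer this method."""
    def redeem(self, request: RedeemRequest) -> RedeemResponse: ...


class InMemoryVoucherApi:
    """Stub that satisfies the contract so client teams can build against it
    before the real service exists."""

    def __init__(self, valid_codes):
        self._unused = set(valid_codes)

    def redeem(self, request: RedeemRequest) -> RedeemResponse:
        if request.voucher_code in self._unused:
            # Each voucher can be redeemed only once.
            self._unused.remove(request.voucher_code)
            return RedeemResponse(redeemed=True, reason="ok")
        return RedeemResponse(redeemed=False, reason="invalid_or_used")
```

Client teams can code and test against the stub while the service team builds the real implementation behind the same contract.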

In just over three months, we launched our first version in the US market. We were able to expand to the next market within a week. Because each cloud-based service was isolated and provided clear functionality, MACH architecture helped us extend the solution quickly and efficiently, both with new capabilities and with brand-new implementations.

We learned from each experience and shared the learnings. We used data analysis and user testing to improve our solution and meet the expectations of our customers.

We also embraced inner source, a software development approach that applies the principles and practices of open source to internal projects. Inner source helps us collaborate and innovate with different teams and projects around the world.

This was a demanding project with tight deadlines and tough business constraints. Delivering it was a remarkable achievement that required teamwork and coordination across borders and time zones.

0 notes

Text

Xicom | Cloud-Native App Development Company in USA

The adoption of cloud-native mobile apps has been a significant game-changer in the app development industry. In a bid to remain competitive and meet changing user demands, businesses are embracing this innovative approach. Nonetheless, building cloud-native mobile apps presents distinct challenges and benefits, as is the case with any technological advancement. Consequently, there is a need for a shift in both technology comprehension and application design/deployment approaches to leverage this transformation fully. By embracing cloud-native mobile apps, we are paving the way for the future of software and app development, a future that promises unprecedented scalability, flexibility, and efficiency.

For More Information Visit Us: https://www.xicom.biz/blog/building-cloud-native-mobile-apps-the-future-of-app-development/

#android developer#app development#mobile app development#software#app development services#automotive#hire app developer#hire mobile app developer#xicom technologies#mobile app developers#cloud-native mobile apps#cloud-native#mobile app

0 notes

Text

Day hike grid

Lava plug rock formation 🪨

#photography#nature#pnw photography#my post#beautiful#nature photography#travel#naturecore#nature hikes#hike#hiking#travelcore#travel the world#sunny day#summer#vacation#washington#canada#pnw native plants#river#fairy aesthetic#fairycore#trees and forests#trees#moss#rock formations#the great pnw#green#watercore#clouds

887 notes


Text

lingering thought clouds...

214 notes


Text

Good News - June 8-14

Like these weekly compilations? Tip me at $Kaybarr1735! And if you tip me and give me a way to contact you, at the end of the month I'll send you a link to all of the articles I found but didn't use each week!

1. Rare foal born on estate for first time in 100 years

“The Food Museum at Abbot's Hall in Stowmarket, Suffolk, is home to a small number of Suffolk Punch horses - a breed considered critically endangered by the Rare Breeds Survival Trust. A female foal was born on Saturday and has been named Abbots Juno to honour the last horse born at the museum in 1924. [...] Juno is just one of 12 fillies born so far this year in the country and she could potentially help produce more of the breed in the future.”

2. The cement that could turn your house into a giant battery

“[Scientists] at Massachusetts Institute of Technology (MIT) have found a way of creating an energy storage device known as a supercapacitor from three basic, cheap materials – water, cement and a soot-like substance called carbon black. [... Supercapacitors] can charge much more quickly than a lithium ion battery and don't suffer from the same levels of degradation in performance. [... Future applications of this concrete might include] roads that store solar energy and then release it to recharge electric cars wirelessly as they drive along a road [... and] energy-storing foundations of houses.”

3. New road lights, fewer dead insects—insect-friendly lighting successfully tested

“Tailored and shielded road lights make the light source almost invisible outside the illuminated area and significantly reduces the lethal attraction for flying insects in different environments. [...] The new LED luminaires deliver more focused light, reduce spill light, and are shielded above and to the side to minimize light pollution. [... In contrast,] dimming the conventional lights by a factor of 5 had no significant effect on insect attraction.”

4. When LGBTQ health is at stake, patient navigators are ready to help

“[S]ome health care systems have begun to offer guides, or navigators, to get people the help they need. [... W]hether they're just looking for a new doctor or taking the first step toward getting gender-affirming care, "a lot of our patients really benefit from having someone like me who is there to make sure that they are getting connected with a person who is immediately going to provide a safe environment for them." [... A navigator] also connects people with LGBTQ community organizations, social groups and peer support groups.”

5. Tech company to help tackle invasive plant species

“Himalayan balsam has very sugary nectar which tempts bees and other pollinators away from native plants, thereby preventing them from producing seed. It outcompetes native plant species for resources such as sunlight, space and nutrients. [...] The volunteer scheme is open to all GWT WilderGlos users who have a smartphone and can download the Crowdorsa app, where they can then earn up to 25p per square meter of Balsam removed.”

6. [Fish & Wildlife] Service Provides Over $14 Million to Benefit Local Communities, Clean Waterways and Recreational Boaters

“The U.S. Fish and Wildlife Service is distributing more than $14 million in Clean Vessel Act grants to improve water quality and increase opportunities for fishing, shellfish harvests and safe swimming in the nation’s waterways. By helping recreational boaters properly dispose of sewage, this year’s grants will improve conditions for local communities, wildlife and recreational boaters in 18 states and Guam.”

7. Bornean clouded leopard family filmed in wild for first time ever

“Camera traps in Tanjung Puting National Park in Indonesian Borneo have captured a Bornean clouded leopard mother and her two cubs wandering through a forest. It's the first time a family of these endangered leopards has been caught on camera in the wild, according [to] staff from the Orangutan Foundation who placed camera traps throughout the forest to learn more about the elusive species.”

8. Toy library helps parents save money 'and the planet'

“Started in 2015 by Annie Berry, South Bristol's toy library aims to reduce waste and allow more children access to more - and sometimes expensive - toys. [...] Ms Berry partnered with the St Philips recycling centre on a pilot project to rescue items back from landfill, bringing more toys into the library. [...] [P]eople use it to support the environment, take out toys that they might not have the space for at home or be able to afford, and allow children to pick non-gender specific toys.”

9. Chicago Receives $3M Grant to Inventory Its Trees and Create Plan to Manage City’s Urban Forest

“The Chicago Park District received a $1.48 million grant [“made available through the federal Inflation Reduction Act”] to complete a 100% inventory of its estimated 250,000 trees, develop an urban forestry management plan and plant 200 trees in disadvantaged areas with the highest need. As with the city, development of the management plan is expected to involve significant community input.”

10. Strong Public Support for Indigenous Co-Stewardship Plan for Bears Ears National Monument

“[The NFW has a] plan to collaboratively steward Bears Ears National Monument to safeguard wildlife, protect cultural resources, and better manage outdoor recreation. The plan was the result of a two-year collaboration among the five Tribes of the Bears Ears Inter-Tribal Coalition and upholds Tribal sovereignty, incorporates Traditional Ecological Knowledge, and responsibly manages the monument for hunting, fishing, and other outdoor recreation while ensuring the continued health of the ecosystem.”

June 1-7 news here | (all credit for images and written material can be found at the source linked; I don’t claim credit for anything but curating.)

#hopepunk#good news#nature#horse#rare breed#energy storage#clean energy#biodiversity#street lights#lgbtq#health#native plants#invasive species#incentive#fws#water#fishing#swimming#clouded leopard#indonesia#library#kids toys#interdependence#bristol#uk#funding#native#outdoor recreation#animals#wildlife

752 notes


Text

March 2025

#noseysilverfox#photography#pine trees#trees#parks#nature#spring#clouds#cloudy weather#photography on tumblr#atmospheric#photo on tumblr#photoblog#nature photography#native plants#plant photography#flora fauna#plant#фотоблог#природа#парк#сосны#весна#после дождя#фотографии природы#фотография#флора#русский tumblr#эстетичныефото#весна 2025

101 notes

Text

Cloud-Native Broadcasting: The New Frontier

Imagine this: It's the middle of the night, you're orchestrating a live stream of an international music festival, and suddenly your main mixing board decides it's time for an unscheduled intermission. A few years ago, this would have been a career-defining disaster. Today? It's barely a hiccup. Welcome to the era of cloud-native broadcasting, where adaptability is the name of the game, and the sky's the limit.

I've been in this field long enough to remember when "streaming" meant wading through a river, and "buffering" was something you did to your car. But let me tell you, the technological tsunami that's crashed through our industry lately makes those old analog-to-digital shifts look like ripples in a pond.

In this article, we're going to pull back the curtain on the cloud-native revolution that's redefining the broadcast landscape. We'll explore the core technologies making it all possible, examine how industry leaders are harnessing these innovations, and speculate on what the future might hold. So, strap in and prepare for takeoff as we explore the new frontier of broadcasting.

The Pillars of Cloud-Native Broadcasting

Before we dive into what companies are doing, let's break down the foundational technologies that are driving this transformation. If these concepts are new to you, don't worry - I'll explain them in terms that make sense for media professionals.

Remember when setting up a new piece of broadcast equipment meant hours of tweaking and troubleshooting? Virtualization is changing all that. Think of it as creating a digital twin of physical hardware. It allows us to run multiple virtual machines on a single physical server, maximizing resource utilization and flexibility.

For us in the broadcast world, this means we can deploy complex media processing applications with unprecedented agility. Need to launch a new encoding workflow for a major live event? With virtualization, it's as simple as clicking a button. The era of wrestling with hardware-specific configurations is rapidly becoming a relic of the past.

But it's not just about ease of deployment. Virtualization also enables more efficient resource allocation. In the past, we often had to over-provision hardware to handle peak loads. With virtual machines, we can scale resources up or down dynamically, potentially leading to significant infrastructure cost savings.
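To make the scale-up-or-down idea concrete, here's a minimal sketch in Python. The function name, the streams-per-instance figure, and the example loads are all made up for illustration; a real deployment would normally lean on its cloud provider's autoscaling features rather than hand-rolled logic like this.

```python
import math


def instances_needed(active_streams, streams_per_instance, min_instances=1):
    """Return how many virtual encoder instances to run for the current load.

    All parameters are hypothetical: `streams_per_instance` is whatever one
    virtual machine can comfortably process in your own environment.
    """
    if streams_per_instance <= 0:
        raise ValueError("streams_per_instance must be positive")
    # Round up so a partially filled instance still gets provisioned,
    # and never drop below the configured minimum.
    return max(min_instances, math.ceil(active_streams / streams_per_instance))


# A quiet overnight period versus a peak live event (illustrative numbers).
print(instances_needed(3, 4))   # 1
print(instances_needed(42, 4))  # 11
```

The point is simply that capacity follows the load instead of being fixed at the peak, which is where the potential savings come from.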

Now, if virtualization supplies the instruments of our cloud orchestra, orchestration is the conductor. As our broadcasting workflows become increasingly complex, involving dozens or even hundreds of interconnected microservices, we need a way to manage and coordinate all these moving parts.

This is where orchestration platforms come into play. These systems handle the intricate details of deploying, scaling, and managing our virtualized applications. They ensure that all the components of our broadcasting pipeline work together seamlessly, from ingest to transcoding to delivery.

But here's where it gets really exciting for us in the broadcast world: intelligent resource allocation. Orchestration platforms can be configured to understand the unique requirements of media workflows. They can ensure that interdependent services are placed optimally to minimize latency - crucial for live production. They can also dynamically allocate computing resources based on the complexity of media processing tasks. Handling a 4K HDR stream? The system can automatically provision more powerful nodes to handle the load.
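Here's a rough sketch of what "provision more powerful nodes for heavier streams" could look like as a decision rule. Everything in it is an assumption for illustration: the tier names, the thresholds, and the HDR overhead factor are invented, and a real orchestration platform would express this through its own scheduling configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamProfile:
    width: int
    height: int
    fps: int
    hdr: bool


def pick_node_tier(profile):
    """Map a stream's rough processing cost to a hypothetical node tier."""
    # Pixel throughput is used here as a crude proxy for processing cost.
    pixels_per_second = profile.width * profile.height * profile.fps
    if profile.hdr:
        pixels_per_second *= 1.5  # assumed extra cost for HDR processing
    # Thresholds and tier names below are illustrative, not vendor values.
    if pixels_per_second >= 400_000_000:
        return "gpu-large"
    if pixels_per_second >= 100_000_000:
        return "cpu-large"
    return "cpu-standard"


print(pick_node_tier(StreamProfile(3840, 2160, 60, hdr=True)))   # gpu-large
print(pick_node_tier(StreamProfile(1920, 1080, 60, hdr=False)))  # cpu-large
print(pick_node_tier(StreamProfile(1280, 720, 30, hdr=False)))   # cpu-standard
```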

Now, let's talk about something that doesn't always get the spotlight it deserves: the network. In the realm of cloud-based broadcasting, the network is everything. It's the nervous system that connects all the parts of our production workflow.

The challenges here are significant. We're not just talking about pushing files around anymore. We're dealing with real-time, high-bitrate media streams that need to be moved around with minimal latency. This is where technologies like Software-Defined Networking (SDN) come into play. SDN allows us to programmatically control network behavior, making our infrastructure more agile and responsive to the unique demands of media workflows.

And let's not forget about emerging network technologies. As these technologies become more widespread, they're opening up new possibilities for remote production. Imagine being able to send high-quality, low-latency video feeds from a live event back to your production hub without the need for expensive satellite uplinks. That's the kind of game-changer we're looking at with advanced networking integration.

Now, let's talk about something really exciting: immersive media production. This is where cloud-native technologies are really pushing the envelope.

Take volumetric video, for example. This technology allows for the creation of three-dimensional video that viewers can explore from any angle. The computational demands for this kind of technology are enormous, but cloud-native architectures make it feasible.

Or consider object-based broadcasting. The challenge here isn't just in producing the content - it's in delivering it efficiently to viewers. Cloud-based solutions are enabling innovative approaches like object-based audio and personalized video streams that adapt to viewer preferences and device capabilities.

Industry Trailblazers: Harnessing the Power of the Cloud

Now that we've covered the foundational technologies, let's look at how some of the major players in our industry are putting these innovations to work. I've had the chance to work with or closely observe many of these companies, and the progress they're making is truly remarkable.

It's impossible to talk about cloud-based media solutions without mentioning AWS. They've built a comprehensive suite of services that cover virtually every aspect of the media production and delivery pipeline.

Their MediaLive service is a powerhouse for real-time video processing. It supports adaptive bitrate (ABR) streaming, which is crucial for delivering high-quality experiences across a range of devices and network conditions. What's really powerful is how seamlessly it integrates with other AWS services. You can ingest a live feed, transcode it, package it, and deliver it to viewers worldwide, all within the AWS ecosystem.
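If ABR is new to you, the core idea fits in a few lines. The sketch below is generic player-side selection logic, not the MediaLive API; the ladder values and the headroom factor are assumptions I picked for illustration.

```python
# A hypothetical bitrate ladder: (rendition name, bitrate in kbps), highest first.
LADDER = [
    ("1080p", 6000),
    ("720p", 3000),
    ("480p", 1500),
    ("240p", 400),
]


def choose_rendition(measured_kbps, headroom=0.8):
    """Pick the best rendition that fits within the measured bandwidth.

    `headroom` keeps a safety margin so small throughput dips do not cause
    rebuffering; 0.8 is an assumed value, not a standard.
    """
    budget = measured_kbps * headroom
    for name, kbps in LADDER:
        if kbps <= budget:
            return name
    # Nothing fits: fall back to the lowest rendition rather than stalling.
    return LADDER[-1][0]


print(choose_rendition(10000))  # 1080p
print(choose_rendition(4000))   # 720p
print(choose_rendition(300))    # 240p
```

The encoder's job is to produce every rung of the ladder; the player then moves between rungs as network conditions change.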

For file-based workflows, there's MediaConvert. It supports a wide range of codecs and formats, including advanced compression technologies for more efficient delivery. One feature I find particularly interesting is their content-aware encoding. This approach adjusts the encoding parameters based on the complexity of the content, potentially saving on storage and delivery costs without sacrificing quality.
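To illustrate the principle behind content-aware encoding, here's a deliberately simplified sketch. It is not how MediaConvert works internally; the complexity score, the scaling range, and the function name are all assumptions made for the example.

```python
def target_bitrate_kbps(base_kbps, complexity, floor=0.5, ceiling=1.5):
    """Scale a base bitrate by a content complexity score between 0 and 1.

    Hypothetical model: simple content (a static talking head) scores near 0
    and gets fewer bits; complex content (fast sports action) scores near 1
    and gets more. `floor` and `ceiling` are assumed scaling bounds.
    """
    if not 0.0 <= complexity <= 1.0:
        raise ValueError("complexity must be between 0 and 1")
    # Linear interpolation between the floor and ceiling multipliers.
    scale = floor + (ceiling - floor) * complexity
    return round(base_kbps * scale)


print(target_bitrate_kbps(4000, 0.0))  # 2000
print(target_bitrate_kbps(4000, 0.5))  # 4000
print(target_bitrate_kbps(4000, 1.0))  # 6000
```

The savings come from the low-complexity end: content that looks fine at half the bitrate no longer pays for bits it doesn't need.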

And let's not forget about their content delivery network. The integration between their CDN and media services is seamless, making it relatively straightforward to set up global content delivery for your streams. They've also put a lot of work into security features, including robust encryption and sophisticated access controls.

TVU Networks is doing some really interesting work at the intersection of cloud computing, AI, and live broadcasting. Their approach is built around a microservices architecture, which gives them a lot of flexibility in terms of how they deploy and scale their solutions.

One product that's caught my eye is their cloud-based media hub. It's a solution that allows you to ingest multiple sources into their ecosystem and then manipulate those sources within their other applications. This kind of flexibility is crucial in today's fast-paced production environments.

What's really impressive about TVU's approach is their focus on hybrid workflows. Their solutions can be deployed on existing on-premise hardware, giving broadcasters a lot of flexibility in terms of how they manage their transition to the cloud. I've seen this approach work well in large-scale productions like major sporting events.

TVU is also doing some interesting work with AI integration. They've been incorporating AI into their solutions for several years, using it to streamline tasks and expand coverage capabilities. As someone who's seen a lot of hype around AI, it's refreshing to see a company applying it in practical, value-adding ways.

Vizrt has long been a leader in real-time graphics and studio automation, and they're making significant strides in bringing these capabilities to the cloud. Their latest engine is a cloud-based real-time rendering and compositing solution that's opening up new possibilities for distributed production workflows.

One aspect of their latest engine that I find particularly interesting is its integration with advanced networking technologies. This opens up new possibilities for low-latency remote production, potentially allowing for more flexible and cost-effective setups for live events.

Vizrt's control application for graphics systems is another product worth mentioning. It's a cloud-native solution that supports collaborative workflows for distributed teams. In a world where remote work is becoming increasingly common, tools like this are going to be crucial.

Blackbird is tackling one of the most challenging aspects of cloud-based production: video editing. Their cloud-native video editing platform is designed from the ground up for remote and collaborative workflows.

What sets Blackbird apart is their proprietary technology, which is optimized for cloud-based editing. This allows for professional-grade editing directly in a web browser, which is pretty impressive when you think about it. They've also put a lot of work into intelligent bandwidth management, which helps ensure a smooth editing experience even in less-than-ideal network conditions.

One feature I particularly like is their edge technology. This is essentially a local processing node that can be deployed on-premise, providing improved performance while still integrating seamlessly with their cloud infrastructure. It's a great example of the kind of hybrid approach that I think we'll see more of in the coming years.

Amagi is making waves with their cloud-based broadcast and streaming infrastructure solutions. Their playout platform is a software-defined solution that's giving broadcasters unprecedented flexibility in channel management.

What's really powerful about their playout solution is how it integrates with major cloud providers. This allows broadcasters to leverage the global reach of these providers, potentially launching channels in new markets with minimal infrastructure investment.

Amagi's ad insertion platform is also worth mentioning. It's a server-side solution that allows for dynamic ad placement, enabling more personalized viewer experiences. In a world where targeted advertising is becoming increasingly important, solutions like this are going to be crucial.
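For readers who haven't seen server-side ad insertion up close, the sketch below shows the basic stitching step. It is not Amagi's implementation: the segment names and the regional inventory are invented, and a real HLS playlist carries more metadata (durations, sequence numbers) than these bare lists. `#EXT-X-DISCONTINUITY` is the HLS tag that marks a break in the stream's encoding or timeline.

```python
DISCONTINUITY = "#EXT-X-DISCONTINUITY"


def choose_ads(viewer_region, inventory):
    """Pick ad segments for a viewer, falling back to a default set."""
    return inventory.get(viewer_region, inventory["default"])


def stitch_playlist(content, ads, break_after):
    """Insert ad segments after `break_after` content segments.

    The stitching happens on the server, so the viewer's player receives
    one continuous playlist and never requests the ads separately.
    """
    if not 0 <= break_after <= len(content):
        raise ValueError("break_after is outside the content range")
    if not ads:
        return list(content)
    return (
        content[:break_after]
        + [DISCONTINUITY] + ads + [DISCONTINUITY]
        + content[break_after:]
    )


# Hypothetical inventory and segment names, purely for illustration.
inventory = {"uk": ["ad_uk_1.ts"], "default": ["ad_generic_1.ts"]}
playlist = stitch_playlist(
    ["c1.ts", "c2.ts", "c3.ts"], choose_ads("uk", inventory), break_after=2
)
print(playlist)
```

Because each viewer can be handed a different ad list at the same break point, this is what makes per-viewer personalization possible.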

The Horizon: Challenges and Opportunities

As we look to the future, it's clear that cloud-native technologies are going to play an increasingly central role in broadcasting. But as with any major technological shift, there are both challenges and opportunities ahead.

We're only scratching the surface of what AI and machine learning can do for our industry. I expect we'll see more AI-driven tools for automated content creation, real-time analytics, and intelligent resource allocation. Imagine an AI system that can automatically adjust your production workflow based on real-time viewer engagement data. That's the kind of capability we're moving towards.

As powerful as cloud computing is, there are still scenarios where latency is a critical issue. This is where edge computing comes in. By bringing computing resources closer to where they're needed, edge computing can enable lower latency and more efficient processing for live production workflows. I expect we'll see tighter integration between edge and cloud infrastructures in the coming years.

The evolution of network technologies is going to be a game-changer for our industry. It will enable new possibilities for remote production and immersive experiences. But we're not stopping there - work is already underway on next-generation network technologies. As these networks evolve, they'll open up new frontiers in what's possible with live video production.

As cloud-based production tools proliferate, there's an increasing need for standardization and improved interoperability. No broadcaster wants to be locked into a single vendor's ecosystem. I expect we'll see industry initiatives aimed at developing common standards and improving interoperability between different cloud-based production tools and platforms.

As more of our content and production workflows move to the cloud, ensuring the security and privacy of media assets will remain a critical focus area. This isn't just about preventing unauthorized access - it's also about ensuring compliance with an increasingly complex landscape of data protection regulations.

Finally, let's talk about sustainability. The environmental impact of cloud computing is becoming an increasingly important consideration. I expect we'll see innovations in energy-efficient technologies and sustainable practices in video production. This isn't just about being good corporate citizens - in many markets, it's becoming a regulatory requirement.

As we conclude our virtual tour of the cloud-native broadcasting universe, one thing is abundantly clear: we're entering uncharted territory. The technologies we've explored – from virtualization to AI-driven workflows – aren't just incremental improvements. They're the foundation of a new broadcasting paradigm, one where the only limit is our creativity (and maybe our internet bandwidth).

For those of us who've been in the trenches of the industry for years, this shift is both thrilling and, admittedly, a bit daunting. It's like we've been playing chess for decades, and suddenly someone's introduced a three-dimensional board. But here's the crucial point: embracing this change isn't just an option, it's a necessity for staying relevant in the future of broadcasting.

The companies we've highlighted – AWS, TVU Networks, Vizrt, Blackbird, and Amagi – they're not just adapting to this new landscape. They're actively shaping it, redefining the very foundations of our industry. And for every broadcaster and content creator out there, the message is clear: innovate or risk obsolescence.

But here's the aspect that truly excites me as a broadcasting enthusiast: we're just at the beginning. The cloud-native revolution we're witnessing is like the opening scene of an epic saga. The real adventures, the plot twists, the groundbreaking innovations – they're all still to come.

So, my fellow broadcast pioneers, as we stand on the cusp of this new era, I offer this advice: stay curious, remain adaptable, and keep your imagination boundless. Because in the world of cloud-native broadcasting, the most exhilarating chapter… is the one we're about to write together.

0 notes

Text

Greetings from Konza Prairie in the Flint Hills of eastern Kansas! This is one of the largest remnant tallgrass prairies in North America.

#prairie#Konza Prairie#tallgrass prairie#Kansas#ecology#panorama#grasslands#native plants#native ecology#hiking#sky#clouds#trails#Flint Hills

67 notes

Text

South Australia, Sunrise 💙💜

#australia#southern aesthetic#rural australia#aesthetic#nature#art#photography#sunrise#nautical#expressing#love#artist#life#thoughts#horizon#country aesthetic#winter#pretty#clouds#pink sky#sky photography#my own#my photo#landscape#no edit#native#a e s t h e t i c#golden hour#good omens#beautiful views

29 notes

Text

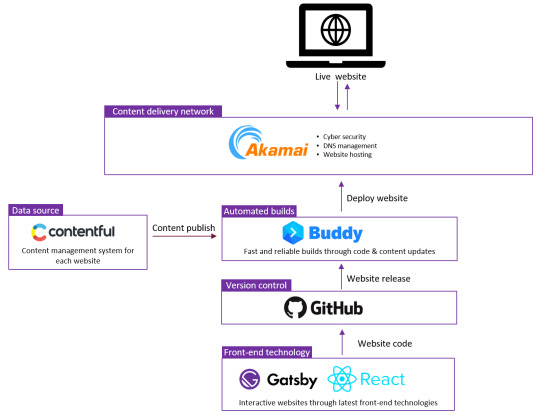

JAMstack, Buddy, and Contentful: A Winning Combination for Scalable and Reliable Websites

The Challenge: We had to build and manage multiple international websites with a small team of five, while ensuring scalability (with localisation at its core), reliability (with easy content and code changes), and efficiency (with minimal operational overhead).

The business ambition was to launch 10 new websites within the first year with a limited budget and no additional team members. Our project plan was to use the central development team to build the core components, own the technical operation of the websites, and train the local teams who would content-manage their own localised websites.

Our future vision was to empower local teams to expand their websites with local components, so the central solution had to be modular and scalable by nature.

The Solution: JAMstack, a web development architecture that uses JavaScript, APIs, and Markup to create fast, secure, and scalable websites and applications. JAMstack allowed us to build a headless website that was decoupled from its back-end, so that it could be served directly from a CDN (Content Delivery Network). This architecture reduced the need for dynamic servers and databases and improved reliability and scalability, which in turn helped us keep operational and maintenance costs much lower than for traditional websites.
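The "Markup" part of JAMstack is easiest to see with a small sketch of the build step: content is turned into static HTML ahead of time, so the CDN only ever serves files. Our actual build used Gatsby; the Python below only illustrates the idea, and the entry fields (`locale`, `slug`, `title`, `body`) and the output path layout are assumptions.

```python
from html import escape


def render_page(entry):
    """Turn one content entry into a complete static HTML document."""
    return (
        "<!doctype html>\n"
        f'<html lang="{escape(entry["locale"])}">\n'
        f"<head><title>{escape(entry['title'])}</title></head>\n"
        f"<body><h1>{escape(entry['title'])}</h1><p>{escape(entry['body'])}</p></body>\n"
        "</html>\n"
    )


def build_site(entries):
    """Map output paths to pre-rendered HTML, one file per entry.

    Hypothetical layout: <locale>/<slug>/index.html. A real build would
    write these files to disk and upload them to the CDN.
    """
    return {
        f"{entry['locale']}/{entry['slug']}/index.html": render_page(entry)
        for entry in entries
    }


pages = build_site([
    {"locale": "en-GB", "slug": "about", "title": "About us", "body": "Hello"},
    {"locale": "de-DE", "slug": "about", "title": "Über uns", "body": "Hallo"},
])
print(sorted(pages))
```

Nothing in this path needs a server or database at request time, which is where the reliability and cost benefits come from.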

We chose Contentful as our headless CMS, as it fit the picture perfectly with its RESTful APIs and easy-to-handle JSON responses. Contentful gave us the flexibility and control to manage our content across different regions and languages, and to integrate with various services and features from third-party providers via APIs.
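As an illustration of why JSON made localisation manageable, here is a minimal sketch of resolving localised fields with a fallback. It assumes a payload shape in which each field maps locale codes to values; the field names, locale codes, and the `localize` helper are hypothetical and not part of Contentful's SDK.

```python
def localize(fields, locale, fallback="en-GB"):
    """Flatten locale-keyed fields into plain values for one locale.

    Assumed input shape: {"title": {"en-GB": "...", "de-DE": "..."}, ...}.
    If a field has no value for `locale`, the `fallback` locale is used;
    fields missing in both are left out.
    """
    resolved = {}
    for name, by_locale in fields.items():
        if locale in by_locale:
            resolved[name] = by_locale[locale]
        elif fallback in by_locale:
            resolved[name] = by_locale[fallback]
    return resolved


entry_fields = {
    "title": {"en-GB": "Our story", "de-DE": "Unsere Geschichte"},
    "strapline": {"en-GB": "Made with care"},  # not yet translated
}
print(localize(entry_fields, "de-DE"))
```

A fallback like this let a local team launch before every string was translated, without the central team changing any code.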

We used Gatsby and React to build the front-end, with the code managed through GitHub. Gatsby and React let us create dynamic, interactive web pages with high performance and strong SEO, both crucial for the project because these were brand websites with a keen focus on design and findability. GitHub hosted our version-controlled code and helped us collaborate and track changes.

We used Buddy, the DevOps automation platform, to build the CI/CD pipelines that automated our workflows and integrated with our tools. Buddy has a visual pipeline editor and a dashboard that show us the status and performance of our projects, and allow us to manage our settings.

The Trick: Leveraging MACH architecture, starting with Headless. MACH stands for Microservices, API-first, Cloud-native, and Headless; it is a modern approach to software development that helps us build a scalable, modular, and composable architecture. MACH architecture gives us the freedom and flexibility to choose the best tools and services for our needs, and enables us to adapt to market changes.

With the help of these technologies and tools, we were able to automate a big portion of the operation, ensuring that the team could manage the ambitious scale. By using the same infrastructure and codebase for every single website, we could avoid duplication, inconsistency, and maintenance issues. We also achieved faster delivery, better quality, and higher customer satisfaction.
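One way to picture "the same infrastructure and codebase for every single website" is a shared set of defaults with small per-site overrides. The sketch below is an illustration only; the site IDs, keys, and component names are invented and do not reflect our actual configuration.

```python
# Shared defaults owned by the central team (hypothetical values).
DEFAULTS = {
    "theme": "brand",
    "locale": "en-GB",
    "components": ["hero", "product-grid", "footer"],
}

# Per-site overrides: only what differs from the defaults is listed.
SITES = {
    "uk": {},
    "de": {"locale": "de-DE"},
    "fr": {
        "locale": "fr-FR",
        "components": ["hero", "product-grid", "store-locator", "footer"],
    },
}


def site_config(site_id):
    """Merge one site's overrides on top of the shared defaults."""
    # Shallow merge: an overridden key replaces the default value entirely.
    return {**DEFAULTS, **SITES[site_id]}


for site_id in SITES:
    print(site_id, site_config(site_id)["locale"])
```

Launching another website then means adding one small entry rather than copying a codebase, which is how a team of five could keep up with the rollout.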

#MACH#JAMstack#contentful#Buddy#headless#cloud-native#website#international#leadership#software delivery

0 notes

Text

off the surface of darkness, light reflects

from its depths, life persists

#personal#art#photography#mine#nature#nature hikes#nature photography#lilly pads#water#sky#clouds#plants#native plants#swamp#poetry

23 notes

Text

• Angel Cloud •

#artists on tumblr#own characters#oc art#borzoi#dog#canine#anthro#it seems this is the first full-fledged post with my character. I've drawn her before#but I haven't given any information about her.#So let's get to know each other.. This is Angel Cloud.#You probably already guessed from her clothes that she doesn't live with us at the same time. This is the 19th century#France.#Maria Theresa's former maid of honor leaves her native country after the events of the 1830 July Revolution (fr. Trois Glorieuses).#After leaving her native harbor#she and her brother flee to England.#later I will introduce you to her twin brother Nicholas#the English Duke of Linor#the beautiful maid of the Duke of Devonshire#and a young teacher at Cambridge University. Just give me some time.

74 notes
