#Copilot

Explore tagged Tumblr posts

Text

AI software assistants make the hardest kinds of bugs to spot

Hey, German-speakers! Through a very weird set of circumstances, I ended up owning the rights to the German audiobook of my bestselling 2022 cryptocurrency heist technothriller Red Team Blues and now I'm selling DRM-free audio and ebooks, along with the paperback (all in German and English) on a Kickstarter that runs until August 11.

It's easy to understand why some programmers love their AI assistants and others loathe them: the former group get to decide how and when they use AI tools, while the latter has AI forced upon them by bosses who hope to fire their colleagues and increase their workload.

Formally, the first group are "centaurs" (people assisted by machines) and the latter are "reverse-centaurs" (people conscripted into assisting machines):

https://pluralistic.net/2025/05/27/rancid-vibe-coding/#class-war

Most workers have parts of their jobs they would happily automate away. I know of a programmer who uses AI to take a first pass at CSS code for formatted output. This is a notoriously tedious chore, and it's not hard to determine whether the AI got it right – just eyeball the output in a variety of browsers. If this was a chore you hated doing and someone gave you an effective tool to automate it, that would be cause for celebration. What's more, if you learned that this was only reliable for a subset of cases, you could confine your use of the AI to those cases.

Likewise, many workers dream of doing something through automation that is so expensive or labor-intensive that they can't possibly do it. I'm thinking here of the film editor who extolled the virtues to me of deepfaking the eyelines of every extra in a crowd scene, which lets them change the focus of the whole scene without reassembling a couple hundred extras, rebuilding the set, etc. This is a brand new capability that increases the creative flexibility of that worker, and no wonder they love it. It's good to be a centaur!

Then there's the poor reverse-centaurs. These are workers whose bosses have saddled them with a literally impossible workload and handed them an AI tool. Maybe they've been ordered to use the tool, or maybe they've been ordered to complete the job (or else) by a boss who was suggestively waggling their eyebrows at the AI tool while giving the order. Think of the freelance writer whom Hearst tasked with singlehandedly producing an entire, 64-page "best-of" summer supplement, including multiple best-of lists, who was globally humiliated when his "best books of the summer" list was chock full of imaginary books that the AI "hallucinated":

https://www.404media.co/viral-ai-generated-summer-guide-printed-by-chicago-sun-times-was-made-by-magazine-giant-hearst/

No one seriously believes that this guy could have written and fact-checked all that material by himself. Nominally, he was tasked with serving as the "human in the loop" who validated the AI's output. In reality, he was the AI's fall-guy, what Dan Davies calls an "accountability sink," who absorbed the blame for the inevitable errors that arise when an employer demands that a single human sign off on the products of an error-prone automated system that operates at machine speeds.

It's never fun to be a reverse centaur, but it's especially taxing to be a reverse centaur for an AI. AIs, after all, are statistical guessing programs that infer the most plausible next word based on the words that came before. Sometimes this goes badly and obviously awry, like when the AI tells you to put glue or gravel on your pizza. But more often, AI's errors are precisely, expensively calculated to blend in perfectly with the scenery.

AIs are conservative. They can only output a version of the future that is predicted by the past, proceeding on a smooth, unbroken line from the way things were to the way they are presumed to be. But reality isn't smooth, it's lumpy and discontinuous.

Take the names of common code libraries: these follow naming conventions that make it easy to predict what a library for a given function will be, and to guess what a given library does based on its name. But humans are messy and reality is lumpy, so these conventions are imperfectly followed. All the text-parsing libraries for a programming language may look like this: docx.text.parsing; txt.text.parsing, md.text.parsing, except for one, which defies convention by being named text.odt.parsing. Maybe someone had a brainfart and misnamed the library. Maybe the library was developed independently of everyone else's libraries and later merged. Maybe Mercury is in retrograde. Whatever the reason, the world contains many of these imperfections.

Ask an LLM to write you some software and it will "hallucinate" (that is, extrapolate) libraries that don't exist, because it will assume that all text-parsing libraries follow the convention. It will assume that the library for parsing odt files is called "odt.text.parsing," and it will put a link to that nonexistent library in your code.

This creates a vulnerability for AI-assisted code, called "slopsquatting," whereby an attacker predicts the names of libraries AIs are apt to hallucinate and creates libraries with those names, libraries that do what you would expect they'd do, but also inject malicious code into every program that incorporates them:

https://www.theregister.com/2025/04/12/ai_code_suggestions_sabotage_supply_chain/

This is the hardest type of error to spot, because the AI is guessing the statistically most plausible name for the imaginary library. It's like the AI is constructing one of those spot-the-difference image puzzles on super-hard mode, swapping the fork and knife in a diner's hands from left to right and vice-versa. You couldn't generate a harder-to-spot bug if you tried.
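One partial defense is mechanical rather than visual: compare every import in generated code against a list of dependencies that someone has actually vetted. Here is a minimal Python sketch, assuming such an allowlist exists. The function names are hypothetical, and the library names are the made-up ones from the example above, not real packages.

```python
import ast


def imported_modules(source: str) -> set[str]:
    """Return the dotted module names imported by `source`, without running it."""
    names: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Absolute "from x.y import z" statements; relative imports are skipped.
            names.add(node.module)
    return names


def unvetted_imports(source: str, vetted: set[str]) -> set[str]:
    """Return imports that are not on the vetted list (exact-name match only)."""
    return imported_modules(source) - vetted


# Hypothetical allowlist: the convention-following names plus the one odd one out.
VETTED = {"docx.text.parsing", "txt.text.parsing", "md.text.parsing", "text.odt.parsing"}

generated = "import docx.text.parsing\nfrom odt.text.parsing import load\n"
print(unvetted_imports(generated, VETTED))  # {'odt.text.parsing'}
```

A check like this only flags names nobody has approved. It says nothing about whether an approved package is safe, and it does not help once a slopsquatted name has made it onto the list.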

It's not like people are very good at supervising machines to begin with. "Automation blindness" is what happens when you're asked to repeatedly examine the output of a generally correct machine for a long time, and somehow remain vigilant for its errors. Humans aren't really capable of remaining vigilant for things that don't ever happen – whatever attention and neuronal capacity you initially devote to this never-come eventuality is hijacked by the things that happen all the time. This is why the TSA is so fucking amazing at spotting water-bottles on X-rays, but consistently fails to spot the bombs and guns that red team testers smuggle into checkpoints. The median TSA screener spots a hundred water bottles a day, and is (statistically) never called upon to spot something genuinely dangerous to a flight. They have put in their 10,000 hours, and then some, on spotting water bottles, and approximately zero hours on spotting stuff that we really, really don't want to see on planes.

So automation blindness is already going to be a problem for any "human in the loop," from a radiologist asked to sign off on an AI's interpretation of your chest X-ray to a low-paid overseas worker remote-monitoring your Waymo…to a programmer doing endless, high-speed code-review for a chatbot.

But that coder has it worse than all the other in-looped humans. That coder doesn't just have to fight automation blindness – they have to fight automation blindness and spot the subtlest of errors in this statistically indistinguishable-from-correct code. AIs are basically doing bug steganography, smuggling code defects in by carefully blending them in with correct code.

At code shops around the world, the reverse centaurs are suffering. A survey of Stack Overflow users found that AI coding tools are creating history's most difficult-to-discharge technology debt in the form of "almost right" code full of these fiendishly subtle bugs:

https://venturebeat.com/ai/stack-overflow-data-reveals-the-hidden-productivity-tax-of-almost-right-ai-code/

As VentureBeat reports, while usage of AI coding assistants is up (from 76% last year to 84% this year), trust in these tools is plummeting – down to 33%, with no bottom in sight. 45% of coders say that debugging AI code takes longer than writing the code without AI at all. Only 29% of coders believe that AI tools can solve complex code problems.

VentureBeat concludes that there are code shops that "solve the 'almost right' problem" and see real dividends from AI tools. What they don't say is that the coders for whom "almost right" isn't a problem are centaurs, not reverse centaurs. They are in charge of their own production and tooling, and no one is using AI tools as a pretext for a relentless hurry-up amidst swingeing cuts to headcount.

The AI bubble is driven by the promise of firing workers and replacing them with automation. Investors and AI companies are tacitly (and sometimes explicitly) betting that bosses who can fire a worker and replace them with a chatbot will pay the chatbot's maker an appreciable slice of that former worker's salary for an AI that takes them off the payroll.

The people who find AI fun or useful or surprising are centaurs. They're making automation choices based on their own assessment of their needs and the AIs' capabilities.

They are not the customers for AI. AI exists to replace workers, not empower them. Even if AI can make you more productive, there is no business model in increasing your pay and decreasing your hours.

AI is about disciplining labor to decrease its share of an AI-using company's profits. AI exists to lower a company's wage-bill, at your expense, with the savings split between your boss and an AI company. When Getty or the NYT or another media company sues an AI company for copyright infringement, that doesn't mean they are opposed to using AI to replace creative workers – they just want a larger slice of the creative workers' salaries in the form of a copyright license from the AI company that sells them the worker-displacing tool.

They'll even tell you so. When the movie studios sued Midjourney, the RIAA (whose most powerful members are subsidiaries of the same companies that own the studios) sent out this press statement, attributed to RIAA CEO Mitch Glazier:

There is a clear path forward through partnerships that both further AI innovation and foster human artistry. Unfortunately, some bad actors – like Midjourney – see only a zero-sum, winner-take-all game.

Get that? The problem isn't that Midjourney wants to replace all the animation artists – it's that they didn't pay the movie studios license fees for the training data. They didn't create "partnerships."

Incidentally: Mitch Glazier's last job was as a Congressional staffer who slipped an amendment into a must-pass bill that killed musicians' ability to claim the rights to their work back after 35 years through "termination of transfer." This was so outrageous that Congress held a special session to reverse it and Glazier lost his job.

Whereupon the RIAA hired him to run the show.

AI companies are not pitching a future of AI-enabled centaurs. They're colluding with bosses to build a world of AI-shackled reverse centaurs. Some people are using AI tools (often standalone tools derived from open models, running on their own computers) to do some fun and exciting centaur stuff. But for the AI companies, these centaurs are a bug, not a feature – and they're the kind of bug that's far easier to spot and crush than the bugs that AI code-bots churn out in volumes no human can catalog, let alone understand.

Support me this summer in the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop! This summer, I'm writing The Reverse-Centaur's Guide to AI, a short book for Farrar, Straus and Giroux that explains how to be an effective AI critic.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/08/04/bad-vibe-coding/#maximally-codelike-bugs

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

165 notes


Text

CoPilot in MS Word

I opened Word yesterday to discover that it now contains CoPilot. It follows you as you type and if you have a personal Microsoft 365 account, you can't turn it off. You will be given 60 AI credits per month and you can't opt out of it.

The only way to banish it is to revert to an earlier version of Office. There is a lot of conflicting information and overly complex guides out there, so I thought I'd share the simplest way I found.

How to revert back to an old version of Office that does not have CoPilot

This is fairly simple, thankfully, presuming everything is in the default locations. If not, you'll need to adjust the steps below for where you have things saved.

1. Press the Windows key and S to bring up the search box, then type cmd. It will bring up the command prompt as an option. Run it as an administrator.

2. Paste this into the box at the cursor: cd "\Program Files\Common Files\microsoft shared\ClickToRun"

3. Hit Enter.

4. Then paste this into the box at the cursor: officec2rclient.exe /update user updatetoversion=16.0.17726.20160

5. Hit Enter and wait while it downloads and installs.

6. VERY IMPORTANT. Once it's done, open Word, go to File, Account (bottom left), and you'll see a box on the right that says Microsoft 365 updates. Click the box and change the drop down to Disable Updates.

This will roll you back to build 17726.20160, from July 2024, which does not have CoPilot, and prevent it from being installed.

If you want a different build, you can see them all listed here. You will need to change the 17726.20160 at step 4 to whatever build number you want.
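If you expect to try more than one build, the two pasted commands can be assembled by a small script. This is only a sketch in Python under stated assumptions: the C: drive letter and the function name are mine, while the folder, executable and arguments are copied from the steps above. It only builds the command and does not run anything.

```python
import re
from pathlib import PureWindowsPath

# Default Click-to-Run folder from the steps above; the C: drive is an assumption.
CLICK_TO_RUN_DIR = PureWindowsPath(
    r"C:\Program Files\Common Files\microsoft shared\ClickToRun"
)


def rollback_command(build: str) -> list[str]:
    """Build the rollback command for a build number such as '17726.20160'."""
    if not re.fullmatch(r"\d+\.\d+", build):
        raise ValueError(f"expected a build like 17726.20160, got {build!r}")
    exe = CLICK_TO_RUN_DIR / "officec2rclient.exe"
    return [str(exe), "/update", "user", f"updatetoversion=16.0.{build}"]


print(rollback_command("17726.20160")[-1])  # updatetoversion=16.0.17726.20160
```

To actually run it, you would pass the list to subprocess.run from an administrator prompt, and you would still need to disable updates in Word afterwards.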

This is not a perfect fix, because while it removes CoPilot, it also stops you receiving security updates and bug fixes.

Switching from Office to LibreOffice

At this point, I'm giving up on Microsoft Office/Word. After trying a few different options, I've switched to LibreOffice.

You can download it here for free: https://www.libreoffice.org/

If you like the look of Word, these tutorials show you how to get that look:

www.howtogeek.com/788591/how-to-make-libreoffice-look-like-microsoft-office/

www.debugpoint.com/libreoffice-like-microsoft-office/

If you've been using Word for a while, chances are you have a significant custom dictionary. You can add it to LibreOffice following these steps.

First, get your dictionary from Microsoft

1. Go to Manage your Microsoft 365 account: account.microsoft.com.

2. Once you're logged in, scroll down to Privacy, click it and go to the Privacy dashboard.

3. Scroll down to Spelling and Text. Click into it and scroll past all the words to download your custom dictionary. It will save it as a CSV file.

4. Open the file you just downloaded and copy the words.

5. Open Notepad and paste in the words. Save it as a text file and give it a meaningful name (I went with FromWord).

Next, add it to LibreOffice

1. Open LibreOffice.

2. Go to Tools in the menu bar, then Options. It will open a new window.

3. Find Languages and Locales in the left menu, click it, then click on Writing aids.

4. You'll see User-defined dictionaries. Click New to the right of the box and give it a meaningful name (mine is FromWord).

5. Hit Apply, then Okay, then exit LibreOffice.

6. Open Windows Explorer and go to C:\Users\[YourUserName]\AppData\Roaming\LibreOffice\4\user\wordbook and you will see the new dictionary you created. (If you can't see the AppData folder, you will need to show hidden files by ticking the box in the View menu.)

7. Open it in Notepad by right clicking and choosing 'open with', then pick Notepad from the options.

8. Open the text file you created at step 5 in 'get your dictionary from Microsoft', copy the words and paste them into your new custom dictionary UNDER the dotted line.

9. Save and close.

10. Reopen LibreOffice. Go to Tools, Options, Languages and Locales, Writing aids and make sure the box next to the new dictionary is ticked.

If you use LibreOffice on multiple machines, you'll need to do this for each machine.
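If you have a lot of words, or several machines, the copy-and-paste part can be scripted. This is a rough sketch in Python, not something I have run against a real export. It assumes the Microsoft CSV has one word in the first column of each row and no header row, and that the "dotted line" in the LibreOffice dictionary file is a line made only of hyphens. The function names and the sample header in the example are illustrative.

```python
import csv
import io


def words_from_csv(csv_text: str) -> list[str]:
    """Take the first column of each non-empty row (assumed: one word per row)."""
    words = []
    for row in csv.reader(io.StringIO(csv_text)):
        if row and row[0].strip():
            words.append(row[0].strip())
    return words


def merge_into_dictionary(dic_text: str, words: list[str]) -> str:
    """Append new words under the line of dashes, skipping ones already there."""
    lines = dic_text.splitlines()
    separator = next(
        (i for i, line in enumerate(lines)
         if line.strip() and set(line.strip()) == {"-"}),
        None,
    )
    if separator is None:
        raise ValueError("no line of dashes found in the dictionary file")
    header, existing = lines[: separator + 1], lines[separator + 1:]
    seen = set(existing)
    merged = list(existing)
    for word in words:
        if word not in seen:
            seen.add(word)
            merged.append(word)
    return "\n".join(header + merged) + "\n"


# Illustrative file contents only; check your own files before trusting this.
sample_dic = "OOoUserDict1\nlang: <none>\ntype: positive\n---\nTumblr\n"
sample_csv = "Tumblr\nenshittification\n\nslopsquatting\n"
print(merge_into_dictionary(sample_dic, words_from_csv(sample_csv)))
```

Read both files in, write the merged text back to the dictionary file while LibreOffice is closed, and keep a backup copy of the original dictionary first.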

Please note: this worked for me. If it doesn't work for you, check you've followed each step correctly, and try restarting your computer. If it still doesn't work, I can't provide tech support (sorry).

#fuck AI#fuck copilot#fuck Microsoft#Word#Microsoft Word#Libre Office#LibreOffice#fanfic#fic#enshittification#AI#copilot#microsoft copilot#writing#yesterday was a very frustrating day

3K notes


Text

Get Thee Behind Me

Love how Microsoft just decided to put a preview of Copilot AI on my computer without telling me.

The only reason I noticed is that I use the lower-right-hand status bar in Windows fairly frequently and saw a weird little rainbow that said "Pre", and when I clicked on it a whole-ass Copilot window came up. Copilot is the host body for Microsoft Recall as well, the creepy parasite that Microsoft had to roll back after an uproar over the idea of it watching every single thing you do on your computer.

While I could search the internet for ways to turn it off, I do appreciate the irony of making apps I don't want tell me how to kill them. So I asked it how to remove it from my computer and whaddaya know, it's as easy as Start > Settings > Personalization > Taskbar to switch it off. And now you know too!

Keep an eye on your status bar, I guess.

2K notes


Text

How to Kill Microsoft's AI "Helper" Copilot WITHOUT Screwing With Your Registry!

Hey guys, so as I'm sure a lot of us are aware, Microsoft pulled some dickery recently and forced some Abominable Intelligence onto our devices in the form of its "helper" program, Copilot. Something none of us wanted or asked for but Microsoft is gonna do anyways because I'm pretty sure someone there gets off on this.

Unfortunately, Microsoft offered no ways to opt out of the little bastard or turn it off (unless you're in the EU where EU Privacy Laws force them to do so.) For those of us in the United Corporations of America, we're stuck... or are we?

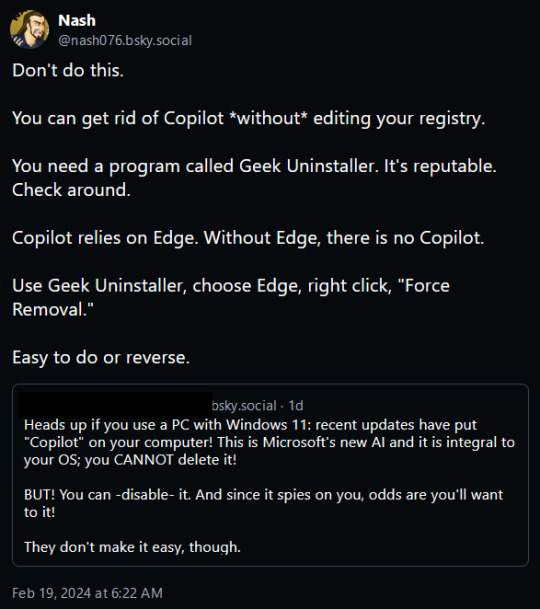

Today while perusing Bluesky, one of the many Twitter-likes that appeared after Musk began burning Twitter to the ground so he could dance in the ashes, I came across this post from a gentleman called Nash:

Intrigued, I decided to give this a go, and lo and behold it worked exactly as described!

We can't remove Copilot, Microsoft made sure that was riveted and soldered into place... but we can cripple it!

Simply put: uninstall Microsoft Edge. Normally Windows will prevent you from uninstalling Edge using the Add/Remove Programs function, saying that it needs Edge to operate properly (it doesn't, it's lying), but Geek Uninstaller overrules that and rips the sucker out regardless of what it says!

I uninstalled Edge using it, rebooted my PC, and lo and behold Copilot was sitting in the corner with blank eyes and drool running down its cheeks, still there but dead to the world!

Now do bear in mind this will have a little knock on effect. Widgets also rely on Edge, so those will stop functioning as well.

Before:

After:

But I can still check the news and weather using an internet browser so it's a small price to pay to be rid of Microsoft's spyware-masquerading-as-a-helper Copilot.

But yes, this is the link for Geek Uninstaller:

Run it, select "Force Uninstall" for anything that says "Edge," reboot your PC, and enjoy having a copy of Windows without Microsoft's intrusive trash! :D

UPDATE: I saw this on someone's tags and I felt I should say this as I work remotely too. If you have a computer you use for work, absolutely 100% make sure you consult with your management and/or your IT team BEFORE you do this. If they say don't do it, there's likely a reason.

2K notes


Text

My landlady tried to use generative AI to make a picture of me and my apprentice, but it produced a cat like my apprentice and, instead of me, a white cat that looks like Ms. Pangur.

2K notes


Text

"They shoved Copilot into my face so I might as well use it."

You absolute fool. You're just telling them that you're fine with having shit features forced onto you. Features that you already knew perfectly well were shit, you knew why they're shit, you know why they'll never improve, and you're using it to get some information about the Marlett system font that Copilot just grabbed from Wikipedia?

"They shoved it into my face so I might as well use it" is sending exactly the wrong message. And you already know they are listening.

209 notes


Text

I hate Copilot (the AI tool) so much. Personally, I think it makes developers lazy and worse at logical thinking.

We are working on a UI application that mocks service call responses for local testing using MSW.

Some changes were made to the service calls that required updates to the MSW mocking, but instead of looking at the MSW documentation to figure out how to solve that, my coworker asked Copilot.

Did it give him code that fixed the issue? Yes, but when I asked my coworker how it fixed it, he had no idea, because a) he doesn't know MSW, and b) he didn't know what the issue was to begin with.

I did the MSW configuration myself. I read the documentation and immediately knew what was needed to fix the issue, but I wanted my coworker to do it himself so he would get familiar with MSW and could fix issues in the future. Instead he used AI to solve something without actually understanding either the issue or the solution.

And this is exactly why I refuse to use AI/Copilot.

#copilot#Anti AI#what did you fix? idk#then how did you fix it? idk#please for the love of god at the least read the documentation before asking copilot#programming

154 notes


Text

Shane Jones, the AI engineering lead at Microsoft who initially raised concerns about the AI, has spent months testing Copilot Designer, the AI image generator that Microsoft debuted in March 2023, powered by OpenAI’s technology. Like with OpenAI’s DALL-E, users enter text prompts to create pictures. Creativity is encouraged to run wild. But since Jones began actively testing the product for vulnerabilities in December, a practice known as red-teaming, he saw the tool generate images that ran far afoul of Microsoft’s oft-cited responsible AI principles.

Copilot was happily generating realistic images of children gunning each other down, and bloody car accidents. Also, Copilot appears to insert naked women into scenes without being prompted.

Jones was so alarmed by his experience that he started internally reporting his findings in December. While the company acknowledged his concerns, it was unwilling to take the product off the market.

Lovely! Copilot is still up, but now rejects specific search terms and flags creepy prompts for repeated offenses, eventually suspending your account.

However, a persistent & dedicated user can still trick Copilot into generating violent (and potentially illegal) imagery.

Yiiiikes. Imagine you're a journalist investigating AI, testing out some of the prompts reported by your source. And you get arrested for accidentally generating child pornography, because Microsoft is monitoring everything you do with it?

Good thing Microsoft is putting a Copilot button on keyboards!

414 notes


Text

Co-pilot K-2SO is here ✨

🎥 📷 @jimmykimmellive IG stories

#alan tudyk#k2so#diego luna#cassian andor#andor#jimmy kimmel live#bts#star wars#rogue one#rogue one a star wars story#stilts#copilot#brothers#video#I'm tired just watching him walk lol#omg#all core

45 notes


Text

Poster created by Copilot from one of my photos

#natura#fotomia#photography#canon#nature#tumblr photographer#art on tumblr#artists on tumblr#fiori#ai art#copilot

44 notes


Text

Genuine question but does post-crash Curly have an eyelid??

Sure there’s a whole meaning about now he’s forced to watch the consequences of his actions of letting Jimmy run around the ship after previously ignoring it and turning a blind eye (see what I did there). Now that he’s made a choice the game makes damn sure he sticks with it.

There is nothing left for him to do but watch what he has allowed to happen because his eye is the only thing he can really use on his own. He's now a spectator in his own body, forced by Jimmy to take the pills, forced by Jimmy to eat his own flesh and forced by him to stay alive. Jimmy is the captain now and makes almost every choice for him, whether he wants that or not. The monster he allowed or just downright ignored (whether or not Curly could've actually done anything is difficult to say because of the way Pony Express is and also the limited options on the ship. Be 100 percent aware I don't blame Curly in the slightest!!!) is now his saviour of sorts. And he has to live with the fact that he is only alive because Jimmy let him. That all he can do is watch the man that massacred his friends and caused their deaths save his life. You're saved by the people you save.

But also his eye must be fucking dry?? Like eyeballs are no joke they gotta stay moist and if you don’t blink bacteria will start climbing in there and rooting around as they do after like a few weeks let alone a few days

ALSO THIS IS A VIDEO GAME SUSPEND YOUR DISBELIEF AND ALL THAT STUFF !!!!

(Also reached my first 100 likes a couple days ago!! Thank you guys so much hehe)

#back on the mouthwashing grind👍#just thinking about curlys eyeball tbh#mouthwashing#mouthwashing curly#curly#captain curly#Jimmy#mouthwashing Jimmy#copilot jimmy#copilot#captain#mouthwashing video game#mouthwashing game#wrong organ#mouthwashing analysis#mouthwashing thoughts#mouthwashing theory#meganalysis

63 notes


Text

What the FUCK is Copilot doing in MY WORD DOCUMENT?! No, I do not want to draft with Copilot!

51 notes


Text

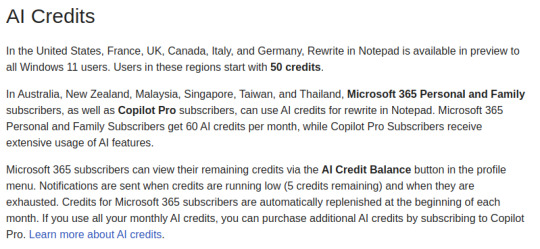

Classic Windows apps like Paint and Notepad are also getting LLM (Generative AI) support. There is no reason whatsoever that Notepad should do more than text editing. Microsoft has gone crazy with these tools. Of course, as part of these tools, your text in Notepad is sent to Microsoft under the name of Generative AI, which then sells it to the highest bidder. I'm so sick of Microsoft messing around with working tools, and yes, you need a stupid Copilot subscription called "Microsoft 365 Personal and Family Subscribers" for Notepad. It is a pain now. So, stick with FLOSS tools and OS and avoid this madness. Keep your data safe.

You need stupid AI credits to use basic tools.

In other words, you now need AI credits to use basic tools like Notepad and Paint. It's part of their offering called 'Microsoft 365 Personal and Family' or 'Copilot Pro'. These tools come with 'Content Filtering,' and only MS-approved topics or images can be created with their shitty AI that used stolen data from all over the web. Read for yourself: Isn't AI wonderful? Artists and authors don't get paid, now you've added censorship, and the best part: you need to pay for it!

GTFO, Microsoft.

104 notes


Text

Good Morning!!! Believe in your dreams!!!

#morning#good morning#good morning message#good morning image#good morning man#the good morning man#the entire morning#gif#gm#morning vibes#morning motivation#tgmm#☀️🧙🏼♂️✌🏼#pilot#pilots#copilot#copilots#i love you#less talk#more fly#flying#if we don't who will#sun#the sun#winning team#winning#plane

78 notes


Text

PSA: Copilot installed itself on my Android phone

I STRONGLY suggest you check your apps and uninstall it. Fortunately, I was able to just go to apps in settings and uninstall it.

I also found with my latest Windows update it got reinstalled after I removed it, although that was less of a surprise.

(This is not a post to add your opinions about how I shouldn't use Windows or Android anyway. Life is not as simple as that.)

32 notes
