#DBMSBasics

Explore tagged Tumblr posts

Text

Indexing and Query Optimization Techniques in DBMS

In the world of database management systems (DBMS), optimizing performance is a critical aspect of ensuring that data retrieval is efficient, accurate, and fast. As databases grow in size and complexity, the need for effective indexing strategies and query optimization becomes increasingly important. This blog explores the key techniques used to enhance database performance through indexing and query optimization, providing insights into how these techniques work and their impact on data retrieval processes.

Database Managment System

Understanding Indexing in DBMS

Indexing is a technique used to speed up the retrieval of records from a database. An index is essentially a data structure that improves the speed of data retrieval operations on a database table at the cost of additional writes and storage space. It works much like an index in a book, allowing quick access to the desired information.

Types of Indexes

Primary Index: This is created automatically when a primary key is defined on a table. It organizes the data rows in the table based on the primary key fields.

Secondary Index: Also known as a non-clustered index, this type of index is created explicitly on fields that are frequently used in queries but are not part of the primary key.

Clustered Index: This type of index reorders the physical order of the table and searches on the basis of the key values. There can only be one clustered index per table since it dictates how data is stored.

Composite Index: An index on multiple columns of a table. It can be useful for queries that filter on multiple columns at once.

Unique Index: Ensures that the indexed fields do not contain duplicate values, similar to a primary key constraint.

Benefits of Indexing

Faster Search Queries: Indexes significantly reduce the amount of data that needs to be searched to find the desired information, thus speeding up query performance.

Efficient Sorting and Filtering: Queries that involve sorting or filtering operations benefit from indexes, as they can quickly identify the subset of rows that meet the criteria.

Reduced I/O Operations: By narrowing down the amount of data that needs to be processed, indexes help in reducing the number of disk I/O operations.

Drawbacks of Indexing

Increased Storage Overhead: Indexes consume additional disk space, which can be significant for large tables with multiple indexes.

Slower Write Operations: Insertions, deletions, and updates can be slower because the index itself must also be updated.

Query Optimization

Query Optimization in DBMS

Query optimization is the process of choosing the most efficient means of executing a SQL statement. A DBMS generates multiple query plans for a given query, evaluates their cost, and selects the most efficient one.

Steps in Query Optimization

Parsing: The DBMS first parses the query to check for syntax errors and to convert it into an internal format.

Query Rewrite: The DBMS may rewrite the query to a more efficient form. For example, subqueries can be transformed into joins.

Plan Generation: The query optimizer generates multiple query execution plans using different algorithms and access paths.

Cost Estimation: Each plan is evaluated based on estimated resources like CPU time, memory usage, and disk I/O.

Plan Selection: The plan with the lowest estimated cost is chosen for execution.

Techniques for Query Optimization

Join Optimization: Reordering joins and choosing efficient join algorithms (nested-loop join, hash join, etc.) can greatly improve performance.

Index Selection: Using the right indexes can reduce the number of scanned rows, hence speeding up query execution.

Partitioning: Dividing large tables into smaller, more manageable pieces can improve query performance by reducing the amount of data scanned.

Materialized Views: Precomputing and storing complex query results can speed up queries that use the same calculations repeatedly.

Caching: Storing the results of expensive operations temporarily can reduce execution time for repeated queries.

Best Practices for Indexing and Query Optimization

Analyze Query Patterns: Understand the commonly executed queries and pattern of data access to determine which indexes are necessary.

Monitor and Tune Performance: Use tools and techniques to monitor query performance and continuously tune indexes and execution plans.

Balance Performance and Resources: Consider the trade-off between read and write performance when designing indexes and query plans.

Regularly Update Statistics: Ensure that the DBMS has up-to-date statistics about data distribution to make informed decisions during query optimization.

Avoid Over-Indexing: While indexes are beneficial, too many indexes can degrade performance. Only create indexes that are necessary.

Indexing and Query

Conclusion

Indexing and query optimization are essential components of effective database management. By understanding and implementing the right strategies, database administrators and developers can significantly enhance the performance of their databases, ensuring fast and accurate data retrieval. Whether you’re designing new systems or optimizing existing ones, these techniques are vital for achieving efficient and scalable database performance.

FAQs

What is the main purpose of indexing in a DBMS?

The primary purpose of indexing is to speed up the retrieval of records from a database by reducing the amount of data that needs to be scanned.

How does a clustered index differ from a non-clustered index?

A clustered index sorts and stores the data rows of the table based on the index key, whereas a non-clustered index stores a logical order of data that doesn’t affect the order of the data within the table itself.

Why can too many indexes be detrimental to database performance?

Excessive indexes can slow down data modification operations (insert, update, delete) because each index must be maintained. They also consume additional storage space.

What is a query execution plan, and why is it important?

A query execution plan is a sequence of operations that the DBMS will perform to execute a query. It is important because it helps identify the most efficient way to execute the query.

Can materialized views improve query performance, and how?

Yes, materialized views can enhance performance by precomputing and storing the results of complex queries, allowing subsequent queries to retrieve data without recomputation.

HOME

#QueryOptimization#IndexingDBMS#DatabasePerformance#LearnDBMS#DBMSBasics#SQLPerformance#DatabaseManagement#DataRetrieval#TechForStudents#InformationTechnology#AssignmentHelp#AssignmentOnClick#assignment help#aiforstudents#machinelearning#assignmentexperts#assignment service#assignmentwriting#assignment

0 notes

Text

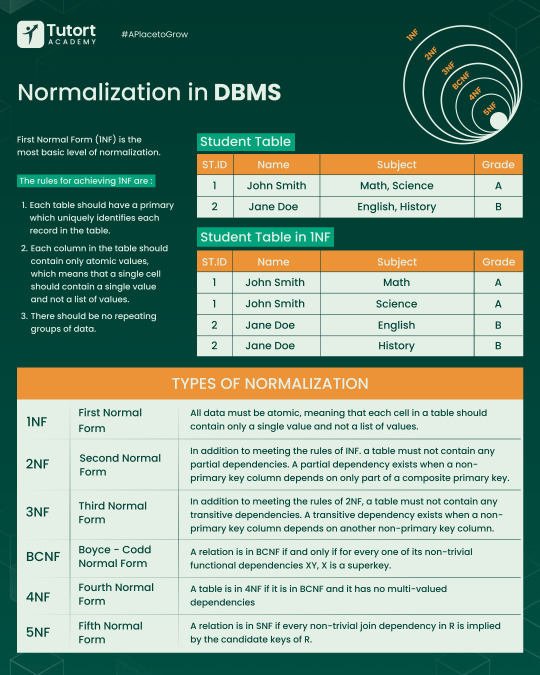

Break Up to Make Up: Normalization in DBMS Demystified!

In the world of data science for working professionals, messy databases are the real villains. That’s where Normalization in DBMS shines—it structures your data, eliminates redundancy, and improves integrity. Think of it as spring-cleaning your tables! From 1NF to 3NF and beyond, normalization ensures your system design course online covers efficient data storage like a pro. At TutorT Academy, we simplify these core concepts so you can ace interviews and build scalable systems with confidence.

#DBMSBasics #NormalizationExplained #DataScienceForWorkingProfessionals #SystemDesignCourseOnline #TutorTAcademy

0 notes

Text

Normalization in DBMS: Simplifying 1NF to 5NF

Database Management Systems (DBMS) are essential for storing, retrieving, and managing data efficiently. However, without a structured approach, databases can suffer from redundancy and anomalies, leading to inefficiencies and potential data integrity issues. This is where normalization comes into play. Normalization is a systematic method of organizing data in a database to reduce redundancy and improve data integrity. In this article, we will explore the different normal forms from 1NF to 5NF, understand their significance, and provide examples to illustrate how they help in avoiding redundancy and anomalies.

Normalization in DBMS

Understanding Normal Forms

Normalization involves decomposing a database into smaller, more manageable tables without losing data integrity. The different levels of normalization are called normal forms, each with specific criteria that need to be met. Let’s delve into each normal form and understand its importance.

First Normal Form (1NF)

The First Normal Form (1NF) is the foundation of database normalization. A table is in 1NF if:

All the attributes in a table are atomic, meaning each column contains indivisible values.

Each column contains values of a single type.

Each column must contain unique values or values that are part of a primary key.

Example of 1NF

Consider a table storing information about students and their subjects:

StudentID

Name

Subjects

1

Alice

Math, Science

2

Bob

English, Art

This table violates 1NF because the Subjects column contains multiple values. To transform it into 1NF, we need to split these values into separate rows:

StudentID

Name

Subject

1

Alice

Math

1

Alice

Science

2

Bob

English

2

Bob

Art

Second Normal Form (2NF)

A table is in the Second Normal Form (2NF) if:

It is in 1NF.

All non-key attributes are fully functionally dependent on the primary key.

In simpler terms, there should be no partial dependency of any column on the primary key.

Example of 2NF

Consider a table storing information about student enrollments:

EnrollmentID

StudentID

CourseID

Instructor

1

1

101

Dr. Smith

2

1

102

Dr. Jones

Here, Instructor depends only on CourseID, not on the entire primary key (EnrollmentID). To achieve 2NF, we split the table:

StudentEnrollments Table:

EnrollmentID

StudentID

CourseID

1

1

101

2

1

102

Courses Table:

CourseID

Instructor

101

Dr. Smith

102

Dr. Jones

Third Normal Form (3NF)

A table is in Third Normal Form (3NF) if:

It is in 2NF.

There are no transitive dependencies, i.e., non-key attributes should not depend on other non-key attributes.

Example of 3NF

Consider a table with student addresses:

StudentID

Name

Address

City

ZipCode

1

Alice

123 Main St

Gotham

12345

2

Bob

456 Elm St

Metropolis

67890

Here, City depends on ZipCode, not directly on StudentID. To achieve 3NF, we separate the dependencies:

Students Table:

StudentID

Name

Address

ZipCode

1

Alice

123 Main St

12345

2

Bob

456 Elm St

67890

ZipCodes Table:

ZipCode

City

12345

Gotham

67890

Metropolis

Understanding Normal Forms

Boyce-Codd Normal Form (BCNF)

A table is in Boyce-Codd Normal Form (BCNF) if:

It is in 3NF.

Every determinant is a candidate key.

BCNF is a stricter version of 3NF, dealing with certain anomalies not addressed by the latter.

Example of BCNF

Consider a table with employee project assignments:

EmployeeID

ProjectID

Task

1

101

Design

2

102

Build

Suppose an employee can work on multiple projects, and each project can have multiple tasks. If Task depends only on ProjectID, it violates BCNF. To achieve BCNF, decompose the table:

EmployeeProjects Table:

EmployeeID

ProjectID

1

101

2

102

ProjectTasks Table:

ProjectID

Task

101

Design

102

Build

Fourth Normal Form (4NF)

A table is in Fourth Normal Form (4NF) if:

It is in BCNF.

It has no multi-valued dependencies.

Multi-valued dependencies occur when one attribute in a table uniquely determines another attribute, independent of other attributes.

Example of 4NF

Consider a table with student courses and projects:

StudentID

CourseID

ProjectID

1

101

P1

1

102

P2

If CourseID and ProjectID are independent of each other, this violates 4NF. To achieve 4NF, separate the multi-valued dependencies:

StudentCourses Table:

StudentID

CourseID

1

101

1

102

StudentProjects Table:

StudentID

ProjectID

1

P1

1

P2

Fifth Normal Form (5NF)

A table is in Fifth Normal Form (5NF) if:

It is in 4NF.

It cannot have any join dependencies that are not implied by candidate keys.

5NF is primarily concerned with eliminating anomalies during complex join operations.

Example of 5NF

Consider a table with suppliers, parts, and projects:

SupplierID

PartID

ProjectID

1

A

X

1

B

Y

If SupplierID, PartID, and ProjectID are independent, the table needs to be decomposed to eliminate anomalies:

SupplierParts Table:

SupplierID

PartID

1

A

1

B

SupplierProjects Table:

SupplierID

ProjectID

1

X

1

Y

PartProjects Table:

PartID

ProjectID

A

X

B

Y

Normal Forms

Conclusion

Normalization is a crucial process in database design that helps eliminate redundancy and anomalies, ensuring data integrity and efficiency. By understanding the principles of each normal form from 1NF to 5NF, database designers can create structured and optimized databases. It’s important to balance normalization with practical considerations, as over-normalization can lead to complex queries and decreased performance.

FAQs

Why is normalization important in databases? Normalization is important because it reduces data redundancy, improves data integrity, and makes the database more efficient and easier to maintain.

What are the common anomalies avoided by normalization? Normalization helps avoid insertion, update, and deletion anomalies, which can compromise data integrity and lead to inconsistencies.

Can a database be over-normalized? Yes, over-normalization can lead to complex queries and decreased performance. It’s crucial to balance normalization with practical application requirements.

Is every table required to be in 5NF? Not necessarily. While 5NF eliminates all possible redundancies, many databases stop at 3NF or BCNF, which sufficiently addresses most redundancy and anomaly issues.

How do I decide which normal form to apply? The choice of normal form depends on the specific requirements of the database and application. Generally, it's best to start with 3NF or BCNF and assess if further normalization is needed based on the complexity and use case.

HOME

#DBMSNormalization#DatabaseNormalization#1NFto5NF#LearnDBMS#DatabaseDesign#DataModeling#SQLBasics#TechForStudents#InformationTechnology#DBMSBasics#AssignmentHelp#AssignmentOnClick#assignment#machinelearning#aiforstudents#assignmentwriting#assignment service#assignment help#assignmentexperts

0 notes

Text

Understanding ER Modeling and Database Design Concepts

In the world of databases, data modeling is a crucial process that helps structure the information stored within a system, ensuring it is organized, accessible, and efficient. Among the various tools and techniques available for data modeling, Entity-Relationship (ER) diagrams and database normalization stand out as essential components. This blog will delve into the concepts of ER modeling and database design, demonstrating how they contribute to creating an efficient schema design.

ER Modeling

What is an Entity-Relationship Diagram?

An Entity-Relationship Diagram, or ERD, is a visual representation of the entities, relationships, and data attributes that make up a database. ERDs are used as a blueprint to design databases, offering a clear understanding of how data is structured and how entities interact with one another.

Key Components of ER Diagrams

Entities: Entities are objects or things in the real world that have a distinct existence within the database. Examples include customers, orders, and products. In ERDs, entities are typically represented as rectangles.

Attributes: Attributes are properties or characteristics of an entity. For instance, a customer entity might have attributes such as CustomerID, Name, and Email. These are usually represented as ovals connected to their respective entities.

Relationships: Relationships depict how entities are related to one another. They are represented by diamond shapes and connected to the entities they associate. Relationships can be one-to-one, one-to-many, or many-to-many.

Cardinality: Cardinality defines the numerical relationship between entities. It indicates how many instances of one entity are associated with instances of another entity. Cardinality is typically expressed as (1:1), (1:N), or (M:N).

Primary Keys: A primary key is an attribute or set of attributes that uniquely identify each instance of an entity. It is crucial for ensuring data integrity and is often underlined in ERDs.

Foreign Keys: Foreign keys are attributes that establish a link between two entities, referencing the primary key of another entity to maintain relationships.

Steps to Create an ER Diagram

Identify the Entities: Start by listing all the entities relevant to the database. Ensure each entity represents a significant object or concept.

Define the Relationships: Determine how these entities are related. Consider the type of relationships and the cardinality involved.

Assign Attributes: For each entity, list the attributes that describe it. Identify which attribute will serve as the primary key.

Draw the ER Diagram: Use graphical symbols to represent entities, attributes, and relationships, ensuring clarity and precision.

Review and Refine: Analyze the ER Diagram for completeness and accuracy. Make necessary adjustments to improve the model.

The Importance of Normalization

Normalization is a process in database design that organizes data to reduce redundancy and improve integrity. It involves dividing large tables into smaller, more manageable ones and defining relationships among them. The primary goal of normalization is to ensure that data dependencies are logical and stored efficiently.

Normal Forms

Normalization progresses through a series of stages, known as normal forms, each addressing specific issues:

First Normal Form (1NF): Ensures that all attributes in a table are atomic, meaning each attribute contains indivisible values. Tables in 1NF do not have repeating groups or arrays.

Second Normal Form (2NF): Achieved when a table is in 1NF, and all non-key attributes are fully functionally dependent on the primary key. This eliminates partial dependencies.

Third Normal Form (3NF): A table is in 3NF if it is in 2NF, and all attributes are solely dependent on the primary key, eliminating transitive dependencies.

Boyce-Codd Normal Form (BCNF): A stricter version of 3NF where every determinant is a candidate key, resolving anomalies that 3NF might not address.

Higher Normal Forms: Beyond BCNF, there are Fourth (4NF) and Fifth (5NF) Normal Forms, which address multi-valued dependencies and join dependencies, respectively.

Benefits of Normalization

Reduced Data Redundancy: By storing data in separate tables and linking them with relationships, redundancy is minimized, which saves storage and prevents inconsistencies.

Improved Data Integrity: Ensures that data modifications (insertions, deletions, updates) are consistent across the database.

Easier Maintenance: With a well-normalized database, maintenance tasks become more straightforward due to the clear organization and relationships.

Benefits of Normalization

ER Modeling and Normalization: A Symbiotic Relationship

While ER modeling focuses on the conceptual design of a database, normalization deals with its logical structure. Together, they form a comprehensive approach to database design by ensuring both clarity and efficiency.

Steps to Integrate ER Modeling and Normalization

Conceptual Design with ERD: Begin with an ERD to map out the entities and their relationships. This provides a high-level view of the database.

Logical Design through Normalization: Use normalization steps to refine the ERD, ensuring that the design is free of redundancy and anomalies.

Physical Design Implementation: Translate the normalized ERD into a physical database schema, considering performance and storage requirements.

Common Challenges and Solutions

Complexity in Large Systems: For extensive databases, ERDs can become complex. Using modular designs and breaking down ERDs into smaller sub-diagrams can help.

Balancing Normalization with Performance: Highly normalized databases can sometimes lead to performance issues due to excessive joins. It's crucial to balance normalization with performance needs, possibly denormalizing parts of the database if necessary.

Maintaining Data Integrity: Ensuring data integrity across relationships can be challenging. Implementing constraints and triggers can help maintain the consistency of data.

Common Challenges and Solutions

Conclusion

Entity-Relationship Diagrams and normalization are foundational concepts in database design. Together, they ensure that databases are both logically structured and efficient, capable of handling data accurately and reliably. By integrating these methodologies, database designers can create robust systems that support complex data requirements and facilitate smooth data operations.

FAQs

What is the purpose of an Entity-Relationship Diagram?

An ER Diagram serves as a blueprint for database design, illustrating entities, relationships, and data attributes to provide a clear structure for the database.

Why is normalization important in database design?

Normalization reduces data redundancy and enhances data integrity by organizing data into related tables, ensuring consistent and efficient data storage.

What is the difference between ER modeling and normalization?

ER modeling focuses on the conceptual design and relationships within a database, while normalization addresses the logical structure to minimize redundancy and dependency issues.

Can normalization impact database performance?

Yes, while normalization improves data integrity, it can sometimes lead to performance issues due to increased joins. Balancing normalization with performance needs is essential.

How do you choose between different normal forms?

The choice depends on the specific needs of the database. Most databases aim for at least 3NF to ensure a balance between complexity and efficiency, with higher normal forms applied as necessary.

HOME

#ERModeling#DatabaseDesign#LearnDBMS#EntityRelationship#DataModeling#DBMSBasics#DatabaseConcepts#TechForStudents#InformationTechnology#DataArchitecture#AssignmentHelp#AssignmentOnClick#assignment help#assignment service#aiforstudents#machinelearning#assignmentexperts#assignment#assignmentwriting

0 notes

Text

DBMS Architecture Explained: 1-Tier, 2-Tier, and 3-Tier Models

In today's digitally driven world, managing data efficiently is crucial for businesses of all sizes. Database Management Systems (DBMS) play a pivotal role in organizing, storing, and retrieving data. Understanding the architecture of DBMS is vital for anyone involved in IT, from developers to system administrators. This blog delves into the three primary DBMS architectures: 1-Tier, 2-Tier, and 3-Tier models, explaining how databases are structured across client-server environments and application layers.

Database Managemnet System

The Importance of DBMS Architecture

Before diving into the specifics of each model, it's essential to grasp why DBMS architecture matters. The architecture determines how data is stored, accessed, and manipulated. It influences system performance, scalability, security, and user interaction. Choosing the appropriate DBMS architecture can lead to efficient data management and improved system performance, whereas an ill-suited architecture can result in bottlenecks, increased costs, and security vulnerabilities.

1-Tier Architecture

The 1-Tier architecture is the simplest form of a DBMS. It is a single-tiered approach where the database is directly accessible to the user without any intermediate application layer. This model is typically used for personal or small-scale applications where the database and user interface reside on the same machine.

Characteristics of 1-Tier Architecture

Simplicity: The 1-Tier architecture is straightforward, making it easy to implement and manage.

Direct Access: Users have direct access to the database, allowing for quick data retrieval and updates.

Limited Scalability: Due to its simplicity, this model is not suitable for large-scale applications.

Security Concerns: Direct access can pose security risks, as there is no separation between the user interface and the database.

Use Cases

1-Tier architecture is often employed in standalone applications, educational purposes, or during the development phase of a project where the simplicity of direct interaction with the database is beneficial.

2-Tier Architecture

The 2-Tier architecture adds a layer of abstraction between the user interface and the database. This model consists of a client application (the first tier) and a database server (the second tier). The client communicates directly with the database server, sending queries and receiving data.

Characteristics of 2-Tier Architecture

Client-Server Model: The separation between the client application and the database server allows for better organization and management.

Improved Security: The database is not directly accessible by users, reducing security risks.

Moderate Scalability: Suitable for small to medium-sized applications, but scalability is limited compared to the 3-Tier model.

Performance: Can experience bottlenecks if multiple clients access the database simultaneously.

Use Cases

2-Tier architecture is commonly used in small to medium-sized enterprises and applications that require a balance between simplicity and functionality, such as desktop applications that connect to a central database server.

3-Tier Architecture

The 3-Tier architecture introduces an additional layer between the client and the database server, known as the application server. This model consists of three layers: the client (or presentation layer), the application server (or business logic layer), and the database server (or data layer).

Characteristics of 3-Tier Architecture

Separation of Concerns: Each layer has distinct responsibilities, enhancing modularity and maintainability.

Enhanced Security: The database server is not directly accessible from the client, providing an additional security layer.

High Scalability: The architecture supports large-scale applications and can handle numerous client requests efficiently.

Flexibility: Changes in one layer do not affect others, allowing for easier updates and maintenance.

Use Cases

3-Tier architecture is ideal for enterprise-level applications, web applications, and systems requiring high scalability and security, such as e-commerce platforms and online banking systems.

Comparing the Architectures

Feature

1-Tier

2-Tier

3-Tier

Complexity

Low

Medium

High

Scalability

Limited

Moderate

High

Security

Low

Moderate

High

Use Case

Personal, Small

Small to Medium Enterprises

Large Enterprises, Web Apps

Maintenance

Simple

Moderate

Complex

DBMS Architecture

Choosing the Right Architecture

When deciding on a DBMS architecture, consider factors such as the size of the application, expected user load, security requirements, and future scalability needs. For small-scale applications, a 1-Tier or 2-Tier architecture might suffice. However, for large-scale, complex applications requiring robust security and scalability, a 3-Tier architecture is often the best choice.

Choosing the Right Architecture

Conclusion

Understanding the different DBMS architectures is crucial for designing efficient and secure database systems. Whether it's the simplicity of the 1-Tier model, the balanced approach of the 2-Tier model, or the robust scalability and security of the 3-Tier model, each architecture has its strengths and ideal use cases. By aligning the architecture with the application's requirements, businesses can ensure efficient data management and enhance overall system performance.

FAQs

What is the primary advantage of a 3-Tier architecture over a 2-Tier architecture?

The primary advantage of a 3-Tier architecture is its enhanced scalability and security. It separates the presentation, application logic, and data layers, allowing independent updates and maintenance while offering better protection against unauthorized access.

Can a 1-Tier architecture be used for web applications?

While technically possible, it's not recommended. Web applications typically require higher security and scalability than what a 1-Tier architecture can provide. A 3-Tier architecture is more suitable for web applications.

What are common challenges faced with 2-Tier architecture?

Common challenges include limited scalability and potential performance bottlenecks when multiple clients access the database simultaneously. It also requires more careful management of security compared to a 3-Tier system.

Is it possible to upgrade from a 1-Tier to a 3-Tier architecture?

Yes, it's possible, but it requires a significant redesign of the application. This includes separating the application logic from the user interface and introducing an application server to handle business logic.

How does DBMS architecture impact data security?

DBMS architecture significantly impacts data security. Multi-tier architectures, such as 2-Tier and 3-Tier, offer better security by isolating the database from direct user access and providing an additional layer for implementing security protocols.

Home

#DBMSArchitecture#DatabaseArchitecture#1Tier2Tier3Tier#LearnDBMS#DatabaseManagement#DBMSBasics#DataStorage#TechForStudents#InformationTechnology#DBMSTutorial#AssignmentHelp#AssignmentOnClick#assignment help#aiforstudents#machinelearning#assignmentexperts#assignment service#assignment#assignmentwriting

1 note

·

View note

Text

instagram

#DBMS#LearnDBMS#DatabaseManagement#DBMSBasics#DataStorage#TechForBeginners#DatabaseSystems#DataManagement#TechForStudents#InformationTechnology#AssignmentHelp#AssignmentOnClick#assignment help#machinelearning#aiforstudents#assignmentexperts#assignment service#assignment#assignmentwriting#Instagram

1 note

·

View note