#Data Integration Engineering Services

Text

How Data Integration Engineering Services Drive Business Intelligence and Growth

In today’s data-driven world, businesses are more reliant than ever on data to make informed decisions. From operational efficiency to customer insights, data has become the backbone of modern decision-making processes. However, with the exponential growth of data, companies are often faced with the challenge of managing vast volumes of diverse data sources in a way that creates value. This is where Data Integration Engineering Services come into play. By seamlessly connecting different data systems, these services unlock the true potential of business intelligence (BI) and significantly contribute to business growth.

In this blog, we’ll explore how data integration engineering services drive business intelligence and growth, the role they play in transforming raw data into actionable insights, and why they are essential for businesses to stay competitive.

What is Data Integration Engineering?

Before diving into how data integration services drive business growth, let’s first define what data integration engineering is. At its core, data integration involves combining data from various sources into a unified view that allows businesses to derive actionable insights. Data integration engineering services involve designing, developing, and implementing complex processes that connect disparate data sources such as databases, applications, cloud platforms, and more, ensuring that data flows seamlessly and securely across systems.

Data integration engineers leverage a combination of technologies, including APIs, ETL (Extract, Transform, Load) processes, middleware, and data pipelines, to ensure smooth data transfer and integration. These services are crucial for organizations that operate in multiple geographies, industries, or sectors, as they help consolidate data from various platforms and formats into a single, cohesive data environment.
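To make the ETL idea concrete, here is a minimal sketch of an extract, transform, load pipeline using only the Python standard library. The two sources (a CRM CSV export and a list of billing rows), the field names, and the `customers` table are all hypothetical, chosen purely for illustration; a production pipeline would more likely use dedicated integration tooling.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export from a CRM and rows from a billing system.
CRM_CSV = """email,name,country
Ada@Example.com ,Ada Lovelace,uk
grace@example.com,Grace Hopper,US
"""
BILLING_ROWS = [
    {"customer_email": "ada@example.com", "total_spend": "120.50"},
    {"customer_email": "grace@example.com", "total_spend": "80"},
]


def extract():
    """Extract: read raw records from each (hypothetical) source."""
    crm = list(csv.DictReader(io.StringIO(CRM_CSV)))
    return crm, BILLING_ROWS


def transform(crm, billing):
    """Transform: normalize keys and types, then join the sources on email."""
    spend = {
        r["customer_email"].strip().lower(): float(r["total_spend"])
        for r in billing
    }
    unified = []
    for row in crm:
        email = row["email"].strip().lower()
        unified.append(
            (email, row["name"].strip(), row["country"].strip().upper(),
             spend.get(email, 0.0))
        )
    return unified


def load(rows, conn):
    """Load: write the unified rows into a single table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(email TEXT PRIMARY KEY, name TEXT, country TEXT, total_spend REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory stand-in for a data warehouse
crm_rows, billing_rows = extract()
load(transform(crm_rows, billing_rows), conn)
rows = conn.execute(
    "SELECT email, country, total_spend FROM customers ORDER BY email"
).fetchall()
print(rows)
```

The transform step is where inconsistent formats (mixed-case emails, stray whitespace, numbers stored as text) are reconciled before anything reaches the central store.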

The Role of Data Integration Engineering in Business Intelligence

1. Centralized Data Access

One of the most significant advantages of data integration is the creation of a centralized data repository. Businesses typically store data in different locations and formats—some in on-premise databases, others in cloud storage or third-party applications. The fragmented nature of this data makes it difficult for decision-makers to get a comprehensive, real-time view of key business metrics.

Data integration engineering services break down these silos, enabling organizations to consolidate data from different sources into a single repository or data warehouse. This centralization not only enhances data accessibility but also improves data quality and consistency, allowing business intelligence tools to provide more accurate insights.

2. Improved Data Quality and Accuracy

Data quality is essential for effective business intelligence. Inconsistent, incomplete, or inaccurate data can lead to erroneous insights, making it difficult to make well-informed decisions. Data integration engineering services ensure that data from various systems is cleaned, transformed, and harmonized before being integrated into the central repository.

Through careful data cleansing, validation, and enrichment processes, these services help ensure that businesses rely on high-quality, accurate data for their business intelligence initiatives. By integrating data sources that provide complementary and supplementary information, organizations are also able to uncover hidden patterns and relationships that would have otherwise gone unnoticed.

3. Real-Time Analytics and Decision Making

In the fast-paced business environment, organizations can no longer afford to rely on outdated data for decision-making. The ability to access real-time or near-real-time data is crucial for gaining a competitive edge. Data integration engineering services enable the continuous flow of data from various sources to business intelligence tools, making it possible to analyze and act on the most current data available.

Real-time analytics provides businesses with up-to-date insights into market trends, customer behavior, operational performance, and more. With a unified data environment, decision-makers can quickly assess situations and make timely, data-driven decisions that can improve business performance, enhance customer experiences, and even identify new revenue opportunities.

4. Advanced Analytics and Predictive Insights

The ultimate goal of business intelligence is not just to analyze historical data but to leverage that information for predictive insights that drive future growth. By integrating data from various sources, companies can use advanced analytics techniques like machine learning, artificial intelligence, and data mining to forecast future trends and behaviors.

Data integration engineering services facilitate the preparation of data for these advanced analytics methods. For example, by integrating customer data from sales, marketing, and social media platforms, businesses can create predictive models to identify potential leads, optimize marketing campaigns, or predict customer churn. These insights enable businesses to proactively make decisions that boost growth and mitigate risks.

How Data Integration Engineering Services Promote Business Growth

Enhanced Operational Efficiency

Data integration services streamline internal processes, improving operational efficiency. By centralizing data and automating workflows, companies can reduce manual efforts, eliminate redundant tasks, and accelerate decision-making processes. Employees across departments can access up-to-date data in real time, collaborate more effectively, and respond to changes faster.

For instance, integrating supply chain data with sales, inventory, and financial systems enables better forecasting, demand planning, and inventory management. This leads to optimized operations, reduced waste, and cost savings, ultimately boosting profitability and supporting sustainable growth.

Better Customer Insights

Understanding customer behavior and preferences is vital for any business. Data integration engineering services consolidate customer data from multiple touchpoints such as website analytics, CRM systems, social media, and customer support platforms. By creating a single, comprehensive view of each customer, businesses can tailor their marketing efforts, improve product offerings, and provide personalized services.

A 360-degree view of the customer allows businesses to anticipate needs, personalize communication, and enhance customer satisfaction. When businesses deliver exceptional customer experiences, they drive customer loyalty, repeat business, and word-of-mouth referrals—all of which contribute to growth.
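As a rough illustration of building a single customer view, the sketch below folds records from several touchpoints into one profile per customer. The event structure, the `customer_id` key, and the source names are hypothetical; real systems usually also need identity resolution, which is not shown here.

```python
from collections import defaultdict

# Hypothetical events from different touchpoints, keyed by a shared customer_id.
events = [
    {"customer_id": "c1", "source": "crm", "field": "name", "value": "Ada"},
    {"customer_id": "c1", "source": "web", "field": "last_page", "value": "/pricing"},
    {"customer_id": "c1", "source": "support", "field": "open_tickets", "value": 2},
    {"customer_id": "c2", "source": "crm", "field": "name", "value": "Grace"},
]


def build_profiles(events):
    """Merge per-source records into one profile dict per customer."""
    profiles = defaultdict(dict)
    for e in events:
        # Prefix each field with its source so origins stay traceable.
        profiles[e["customer_id"]][f'{e["source"]}.{e["field"]}'] = e["value"]
    return dict(profiles)


profiles = build_profiles(events)
print(profiles["c1"])
```

Keeping the source name in each key is one possible design choice: it avoids silent overwrites when two systems report the same field with different values.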

Informed Strategic Decision-Making

In today’s competitive landscape, the ability to make data-driven strategic decisions is a critical factor in business success. By providing access to integrated, real-time data, data integration engineering services enable businesses to analyze key performance indicators (KPIs) across departments, geographies, and markets. This comprehensive view helps executives and managers make informed decisions about resource allocation, expansion strategies, market positioning, and more.

For example, companies with integrated financial, sales, and marketing data can better assess the profitability of different product lines, identify underperforming regions, and reallocate resources accordingly. Such strategic decision-making ultimately supports sustainable growth and long-term success.

Scalable Growth

As businesses grow, so do their data requirements. Data integration engineering services help companies scale their operations by ensuring that data flows smoothly between systems, regardless of the size of the data or the complexity of the infrastructure. These services make it easier for businesses to add new data sources, expand into new markets, and integrate new technologies without disrupting existing operations.

By ensuring that data systems can grow and evolve alongside business needs, data integration engineering services make it possible to scale growth without sacrificing data quality, security, or accuracy.

Conclusion

Data integration engineering services are essential for businesses that want to unlock the full potential of their data and drive growth. By providing a unified view of data across various systems, improving data quality, enabling real-time analytics, and supporting advanced predictive insights, these services play a pivotal role in enhancing business intelligence and fostering growth.

In an increasingly data-centric world, organizations that invest in data integration engineering are better equipped to make informed decisions, optimize operations, understand customer needs, and strategically position themselves for long-term success. Embracing data integration as a core component of your business strategy is not just a necessity—it’s an opportunity to gain a competitive edge and fuel sustainable growth.

0 notes

Text

Unlocking Data Potential: RalanTech’s Expertise in Cloud Database and Integration Services

RalanTech’s data engineering consulting services empower organizations to tackle these challenges head-on. By leveraging cutting-edge technologies and best practices, the company delivers tailored solutions that streamline data processing and enhance accessibility. Read more at: https://shorturl.at/JAU37

#Cloud Database Consulting#data engineering consulting services#data integration consulting services

0 notes

Text

Choosing a Data Engineering Consultant: Your Complete Guide: Find the perfect data engineering consultant with our guide. Explore critical factors like flexibility, compliance, and ongoing support.

#data engineering consultant#data engineering services#data consulting partner#data analytics consultant#data engineering expert#data-driven business strategy#choosing data consultant#business intelligence solutions#scalable data engineering#data integration consultant#data pipeline optimization

0 notes

Text

Understanding the Basics of Team Foundation Server (TFS)

In software engineering, a streamlined system for project management is vital. Team Foundation Server (TFS) provides a full suite of tools for the entire software development lifecycle.

TFS is a Microsoft tool that supports the entire software development lifecycle. It has since been rebranded as Azure DevOps Server, the on-premises counterpart of the cloud-hosted Azure DevOps Services. It centralizes collaboration, version control, build automation, testing, and release management. TFS is the foundation for efficient teamwork and the delivery of top-notch software.

Key Components of TFS

The key components of Team Foundation Server include:

Azure DevOps Services: It is the cloud-hosted counterpart of TFS (the on-premises product itself is now called Azure DevOps Server). It offers a set of integrated tools and services for DevOps practices.

Version Control: TFS provides version control features for managing source code. It supports both centralized version control (TFVC) and distributed version control (Git).

Work Item Tracking: It allows teams to track and manage tasks, requirements, bugs, and other development-related activities.

Build Automation: TFS enables the automation of the build process. It allows developers to create and manage build definitions to compile and deploy applications.

Test Management: TFS includes test management tools for planning, tracking, and managing testing efforts. It supports manual and automated testing processes.

Release Management: Release Management automates the deployment of applications across various environments. It ensures consistency and reliability in the release process.

Reporting and Analytics: TFS provides reporting tools that allow teams to analyze their development processes. Custom reports and dashboards can be created to gain insights into project progress.

Authentication and Authorization: TFS and Azure DevOps manage user access, permissions, and security settings. It helps to protect source code and project data.

Package Management: Azure DevOps features a package management system for teams to handle and distribute software packages and dependencies.

Code Search: Azure DevOps provides powerful code search capabilities to help developers find and explore code efficiently.

Importance of TFS

Here are some aspects of TFS that highlight its importance:

Collaboration and Communication: It centralizes collaboration by integrating work items, version control, and build processes for seamless teamwork.

Data-Driven Decision Making: It provides reporting and analytics tools. It allows teams to generate custom reports and dashboards. These insights empower data-driven decision-making. It helps the team evaluate progress and identify areas for improvement.

Customization and Extensibility: It allows customization to adapt to specific team workflows. Its rich set of APIs enables integration with third-party tools. It enhances flexibility and extensibility based on team needs.

Auditing and Compliance: It provides auditing capabilities. It helps organizations track changes and ensure compliance with industry regulations and standards.

Team Foundation Server plays a pivotal role in modern software development. It provides an integrated and efficient platform for collaboration, automation, and project management.

Learn more about us at Nitor Infotech.

#Team Foundation Server#data engineering#sql server#big data#data warehousing#data model#microsoft sql server#sql code#data integration#integration of data#big data analytics#nitor infotech#software services

1 note

Text

***CUSTOM SOFTWARE DEVELOPMENT COMPANY FOR SALE***

We are listing for sale a company that develops custom software and solutions for businesses that deal with large data sets in heavily regulated industries. With a strong integration team that specializes in healthcare, they're poised to continue growth as a cloud engineering and AI team.

The CEO will be staying, while the co-owner will be exiting the company to pursue other interests.

Location - New York State

Acquisition Highlights:
- AWS Select Tier Partner
- Healthcare focus: knowledge of regulatory requirements & healthcare transaction sets
- One owner will be staying with the company
- The company operates fully remote
- Licensed to do business in Canada

If you would be interested in learning more about this opportunity please contact Scott Blackwood at [email protected].

#custom software development#software development#cloud engineering services#cloud solutions#cloud migration#cloud consulting services#cloud adoption#data engineering#dataanalytics#system integration#softwaresolutions#aws#awscloud#for sale#business for sale#opportunity

0 notes

Text

All-Star Moments in Space Communications and Navigation

How do we get information from missions exploring the cosmos back to humans on Earth? Our space communications and navigation networks – the Near Space Network and the Deep Space Network – bring back science and exploration data daily.

Here are a few of our favorite moments from 2024.

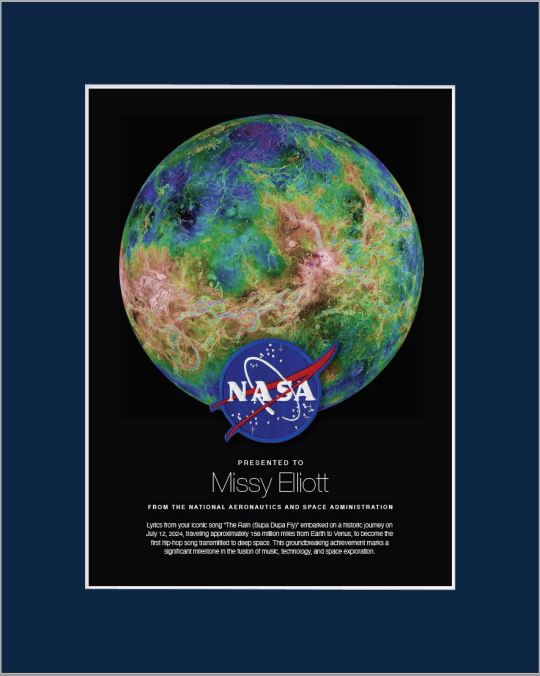

1. Hip-Hop to Deep Space

The stars above and on Earth aligned as lyrics from the song “The Rain (Supa Dupa Fly)” by hip-hop artist Missy Elliott were beamed to Venus via NASA’s Deep Space Network. Using a 34-meter (112-foot) wide Deep Space Station 13 (DSS-13) radio dish antenna, located at the network’s Goldstone Deep Space Communications Complex in California, the song was sent at 10:05 a.m. PDT on Friday, July 12 and traveled about 158 million miles from Earth to Venus — the artist’s favorite planet. Coincidentally, the DSS-13 that sent the transmission is also nicknamed Venus!

NASA's PACE mission transmitting data to Earth through NASA's Near Space Network.

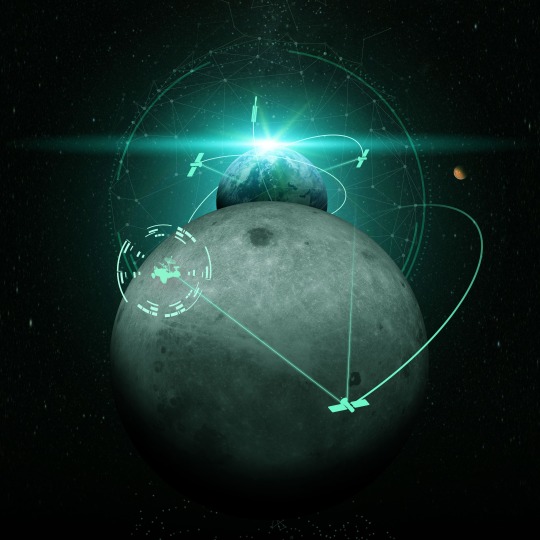

2. Lemme Upgrade You

Our Near Space Network, which supports communications for space-based missions within 1.2 million miles of Earth, is constantly enhancing its capabilities to support science and exploration missions. Last year, the network implemented DTN (Delay/Disruption Tolerant Networking), which provides robust protection of data traveling from extreme distances. NASA’s PACE (Plankton, Aerosol, Cloud, ocean Ecosystem) mission is the first operational science mission to leverage the network’s DTN capabilities. Since PACE’s launch, over 17 million bundles of data have been transmitted by the satellite and received by the network’s ground station.

A collage of the pet photos sent over laser links from Earth to LCRD and finally to ILLUMA-T (Integrated LCRD Low Earth Orbit User Modem and Amplifier Terminal) on the International Space Station. Animals submitted include cats, dogs, birds, chickens, cows, snakes, and pigs.

3. Who Doesn’t Love Pets?

Last year, we transmitted hundreds of pet photos and videos to the International Space Station, showcasing how laser communications can send more data at once than traditional methods. Imagery of cherished pets gathered from NASA astronauts and agency employees flowed from the mission ops center to the optical ground stations and then to the in-space Laser Communications Relay Demonstration (LCRD), which relayed the signal to a payload on the space station. This activity demonstrated how laser communications and high-rate DTN can benefit human spaceflight missions.

4K video footage was routed from the PC-12 aircraft to an optical ground station in Cleveland. From there, it was sent over an Earth-based network to NASA’s White Sands Test Facility in Las Cruces, New Mexico. The signals were then sent to NASA’s Laser Communications Relay Demonstration spacecraft and relayed to the ILLUMA-T payload on the International Space Station.

4. Now Streaming

A team of engineers transmitted 4K video footage from an aircraft to the International Space Station and back using laser communication signals. Historically, we have relied on radio waves to send information to and from space. Laser communications use infrared light to transmit 10 to 100 times more data than radio frequency systems. The flight tests were part of an agency initiative to stream high-bandwidth video and other data from deep space, enabling future human missions beyond low-Earth orbit.

The Near Space Network provides missions within 1.2 million miles of Earth with communications and navigation services.

5. New Year, New Relationships

At the very end of 2024, the Near Space Network announced multiple contract awards to enhance the network’s services portfolio. The network, which uses a blend of government and commercial assets to get data to and from spacecraft, will be able to support more missions observing our Earth and exploring the cosmos. These commercial assets, alongside the existing network, will also play a critical role in our Artemis campaign, which calls for long-term exploration of the Moon.

On Monday, Oct. 14, 2024, at 12:06 p.m. EDT, a SpaceX Falcon Heavy rocket carrying NASA’s Europa Clipper spacecraft lifts off from Launch Complex 39A at NASA’s Kennedy Space Center in Florida.

6. 3, 2, 1, Blast Off!

Together, the Near Space Network and the Deep Space Network supported the launch of Europa Clipper. The Near Space Network provided communications and navigation services to SpaceX’s Falcon Heavy rocket, which launched this Jupiter-bound mission into space! After vehicle separation, the Deep Space Network acquired Europa Clipper’s signal and began full mission support. This is another example of how these networks work together seamlessly to ensure critical mission success.

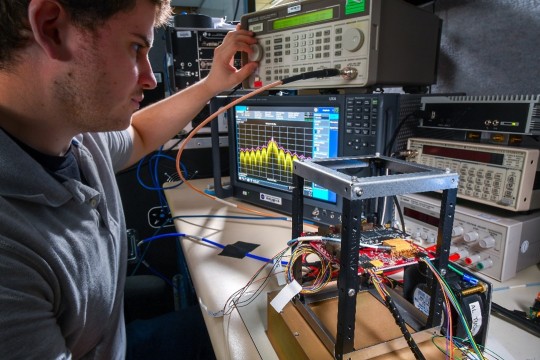

Engineer Adam Gannon works on the development of Cognitive Engine-1 in the Cognitive Communications Lab at NASA’s Glenn Research Center.

7. Make Way for Next-Gen Tech

Our Technology Education Satellite program organizes collaborative missions that pair university students with researchers to evaluate how new technologies work on small satellites, also known as CubeSats. In 2024, cognitive communications technology, designed to enable autonomous space communications systems, was successfully tested in space on the Technology Educational Satellite 11 mission. Autonomous systems use technology reactive to their environment to implement updates during a spaceflight mission without needing human interaction post-launch.

A first: All six radio frequency antennas at the Madrid Deep Space Communication Complex, part of NASA’s Deep Space Network (DSN), carried out a test to receive data from the agency’s Voyager 1 spacecraft at the same time.

8. Six Are Better Than One

On April 20, 2024, all six radio frequency antennas at the Madrid Deep Space Communication Complex, part of our Deep Space Network, carried out a test to receive data from the agency’s Voyager 1 spacecraft at the same time. Combining the antennas’ receiving power, or arraying, lets the network collect the very faint signals from faraway spacecraft.
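To give a feel for why arraying helps, here is a small back-of-the-envelope calculation. It assumes an idealized case that the post does not state: N identical antennas whose signals are combined perfectly, so the received signal power scales with N. The real antennas at a complex can differ in size, so the actual gain would differ from this figure.

```python
import math


def ideal_array_gain_db(num_antennas: int) -> float:
    """Ideal gain, in dB, of combining N identical antennas versus one.

    Assumes perfect combining and identical antennas (an illustration only).
    """
    return 10 * math.log10(num_antennas)


gain_six = ideal_array_gain_db(6)
print(f"{gain_six:.2f} dB")  # prints "7.78 dB"
```

Under those assumptions, six antennas together collect roughly six times the signal power of one, which is what makes very faint signals from distant spacecraft recoverable.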

Here’s to another year connecting Earth and space.

Make sure to follow us on Tumblr for your regular dose of space!

1K notes

Text

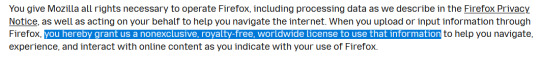

heads up for firefox users who care about their privacy and don't want their data sold to third parties.

they just updated their TOU policy with some shady shit

they're claiming they "need" a license to use this data to provide basic services and functionality but they have never had this before or needed it previously to do what they were already doing (render web pages/integrate a search engine)

having purchased a license to "use" your search data (and potentially anything you upload through the service?), maintaining it for up to 25 months on private servers, they also updated their FAQ 2 months ago to redact an important line:

they've got reps on their blog rn trying to do damage control but honestly i think the service is cooked. personally, i think they just want to use our data to train an LLM, which of course we won't be able to opt out of the use of once launched. maybe i'm wrong, but i think it's pretty likely

if you're looking for alts (& imo using anything chromium-based is just an even worse backslide) apparently there's a firefox fork called waterfox (and another called librewolf) that's committed to privacy, but i legit haven't looked into them and can't vouch. i know I'll be looking into alts in the coming days :/

706 notes

Text

'Artificial Intelligence' Tech - Not Intelligent as in Smart - Intelligence as in 'Intelligence Agency'

I work in tech, hell my last email ended in '.ai' and I used to HATE the term Artificial Intelligence. It's computer vision, it's machine learning, I'd always argue.

Lately, I've changed my mind. Artificial Intelligence is a perfectly descriptive word for what has been created. As long as you take the word 'Intelligence' to refer to data that an intelligence agency or other interested party may collect.

But I'm getting ahead of myself. Back when I was in 'AI' - the vibe was just odd. Investors were throwing money at it as fast as they could take out loans to do so. All the while, engineers were sounding the alarm that 'AI' is really just a fancy statistical tool and won't ever become truly smart let alone conscious. The investors, bafflingly, did the equivalent of putting their fingers in their ears while screaming 'LALALA I CAN'T HEAR YOU'

Meanwhile, CEOs were making all sorts of wild promises about what AI will end up doing, promises that mainly served to stress out the engineers. Who still couldn't figure out why the hell we were making this silly overhyped shit anyway.

SYSTEMS THINKING

As Stafford Beer said, 'The Purpose of A System is What It Does' - basically meaning that if a system is created, and maintained, and continues to serve a purpose? You can read the intended purpose from the function of a system. (This kind of thinking can be applied everywhere - for example the penal system. Perhaps, the purpose of that system is to do what it does - provide an institutional structure for enslavement / convict-leasing?)

So, let's ask ourselves, what does AI do? Since there are so many things out there calling themselves AI, I'm going to start with one example. Microsoft Copilot.

Microsoft is selling PCs with integrated AI which, among other things, frequently screenshots and saves images of your activity. It doesn't protect against copying passwords or sensitive data, and it comes enabled by default. Now, my old-ass-self has a word for that. Spyware. It's a word that's fallen out of fashion, but I think it ought to make a comeback.

To take a high-level view of the function of the system as implemented, I would say it surveils, and surveils without consent. And to apply our systems thinking? Perhaps its purpose is just that.

SOCIOLOGY

There's another principle I want to introduce - that an institution holds institutional knowledge. But it also holds institutional ignorance. The shit that for the sake of its continued existence, it cannot know.

For a concrete example, my health insurance company didn't know that my birth control pills are classified as a contraceptive. After reading the insurance adjuster the Wikipedia articles on birth control, contraceptives, and on my particular medication, he still did not know whether my birth control was a contraceptive. (Clearly, he did know - as an individual - but in his role as a representative of an institution - he was incapable of knowing - no matter how clearly I explained)

So - I bring this up just to say we shouldn't take the stated purpose of AI at face value. Because sometimes, an institutional lack of knowledge is deliberate.

HISTORY OF INTELLIGENCE AGENCIES

The first formalized intelligence agency was the British Secret Service, founded in 1909. Spying and intelligence gathering had always been a part of warfare, but the structures became much more formalized into intelligence agencies as we know them today during WW1 and WW2.

Now, they're a staple of statecraft. America has one, Russia has one, China has one, this post would become very long if I continued like this...

I first came across the term 'Cyber War' in a dusty old aircraft hangar, looking at a cold-war spy plane. There was an old plaque hung up, making reference to the 'Upcoming Cyber War' that appeared to have been printed in the 80s or 90s. I thought it was silly at the time, it sounded like some shit out of sci-fi.

My mind has changed on that too - in time. Intelligence has become central to warfare; and you can see that in the technologies military powers invest in. Mapping and global positioning systems, signals-intelligence, of both analogue and digital communication.

Artificial intelligence, as implemented, would be hugely useful to intelligence agencies. A large-scale statistical analysis tool that excels at image recognition, text-parsing and analysis, and classification of all sorts? In the hands of agencies which already reportedly have access to all of our digital data?

TIKTOK, CHINA, AND AMERICA

I was confused for some time about the reason Tiktok was getting threatened with a forced sale to an American company. They said it was surveilling us, but when I poked through DNS logs, I found that it was behaving near-identically to Facebook/Meta, Twitter, Google, and other companies that weren't getting the same heat.

And I think the reason is intelligence. It's not that the American government doesn't want me to be spied on, classified, and quantified by corporations. It's that they don't want China stepping on their cyber-turf.

The cyber-war is here y'all. Data, in my opinion, has become as geopolitically important as oil, as land, as air or sea dominance. Perhaps even more so.

A CASE STUDY : ELON MUSK

As much smack as I talk about this man - credit where it's due. He understands the role of artificial intelligence, the true role. Not as intelligence in its own right, but intelligence about us.

In buying Twitter, he gained access to a vast trove of intelligence. Intelligence which he used to segment the population of America - and manipulate us.

He used data analytics and targeted advertising to profile American voters ahead of this most recent election, and propagandize us with micro-targeted disinformation. Telling Israel's supporters that Harris was for Palestine, telling Palestine's supporters she was for Israel, and explicitly contradicting his own messaging in the process. And that's just one example out of a much vaster disinformation campaign.

He bought Trump the white house, not by illegally buying votes, but by exploiting the failure of our legal system to keep pace with new technology. He bought our source of communication, and turned it into a personal source of intelligence - for his own ends. (Or... Putin's?)

This, in my mind, is what AI was for all along.

CONCLUSION

AI is a tool that doesn't seem to be made for us. It seems more fit-for-purpose as a tool of intelligence agencies, oligarchs, and police forces. (my nightmare buddy-cop comedy cast) It is a tool to collect, quantify, and loop-back on intelligence about us.

A friend told me recently that he wondered sometimes if the movie 'The Matrix' was real and we were all in it. I laughed him off just like I did with the idea of a cyber war.

Well, I rewatched that old movie, and I was again proven wrong. We're in the matrix, the cyber-war is here. And know it or not, you're a cog in the cyber-war machine.

(edit -- part 2 - with the 'how' - is here!)

#ai#computer science#computer engineering#political#politics#my long posts#internet safety#artificial intelligence#tech#also if u think im crazy im fr curious why - leave a comment

118 notes

Text

Musk’s DOGE seeks access to personal taxpayer data, raising alarm at IRS

Elon Musk’s U.S. DOGE Service is seeking access to a heavily-guarded Internal Revenue Service system that includes detailed financial information about every taxpayer, business and nonprofit in the country, according to two people familiar with the activities, sparking alarm within the tax agency. Under pressure from the White House, the IRS is considering a memorandum of understanding that would give DOGE officials broad access to tax-agency systems, property and datasets. Among them is the Integrated Data Retrieval System, or IDRS, which enables tax agency employees to access IRS accounts — including personal identification numbers — and bank information. It also lets them enter and adjust transaction data and automatically generate notices, collection documents and other records. IDRS access is extremely limited — taxpayers who have had their information wrongfully disclosed or even inspected are entitled by law to monetary damages — and the request for DOGE access has raised deep concern within the IRS, according to three people familiar with internal agency deliberations who, like others in this report, spoke on the condition of anonymity to discuss private conversations. [...] It’s highly unusual to grant political appointees access to personal taxpayer data, or even programs adjacent to that data, experts say. IRS commissioners traditionally do not have IDRS access. The same goes for the national taxpayer advocate, the agency’s internal consumer watchdog, according to Nina Olson, who served in the role from 2001 to 2019. “The information that the IRS has is incredibly personal. Someone with access to it could use it and make it public in a way, or do something with it, or share it with someone else who shares it with someone else, and your rights get violated,” Olson said. 
A Trump administration official said DOGE personnel needed IDRS access because DOGE staff are working to “eliminate waste, fraud, and abuse, and improve government performance to better serve the people.” [...] Gavin Kliger, a DOGE software engineer, arrived unannounced at IRS headquarters on Thursday and was named senior adviser to the acting commissioner. IRS officials were told to treat Kliger and other DOGE officials as contractors, two people familiar said.

the holocaust denying 25-year-old neonazi now has access to your bank information

72 notes


Text

The Story of KLogs: What happens when a Mechanical Engineer codes

Since i no longer work at Warehouse Automation Startup (WAS for short) and haven't for many years, i feel as though i should recount the tale of the most bonkers program i ever wrote. but first we need to establish some background

WAS has its HQ very far away from the big customer site and i worked as a Field Service Engineer (FSE) on site. so i learned early on that if a problem needed to be solved fast, WE had to do it. we never got many updates on what was coming down the pipeline for us or what issues were being worked on. this made us very independent

As such, we got good at reading the robot logs ourselves. it took too much time to send the logs off to HQ for analysis and get back what the problem was. we can read. now GETTING the logs is another thing.

the early robots we cut our teeth on used 2.4 GHz wifi to communicate with FSEs so dumping the logs was as simple as pushing a button in a little application and it would spit out a txt file

later on our robots were upgraded to use a 2.4 mHz xbee radio to communicate with us. which was FUCKING SLOW. and log dumping became a much more tedious process. you had to connect, go to logging mode, and then the robot would vomit all the logs in the past 2 min OR the entirety of its memory bank (only 2 options) into a terminal window. you would then save the terminal window and open it in a text editor to read them. it could take up to 5 min to dump the entire log file and if you didnt dump fast enough, the ACK messages from the control server would fill up the logs and erase the error as the memory overwrote itself.

this missing logs problem was a Big Deal for software who now weren't getting every log from every error so a NEW method of saving logs was devised: the robot would just vomit the log data in real time over a DIFFERENT radio and we would save it to a KQL server. Thanks Daddy Microsoft.

now whats KQL you may be asking. why, its Microsofts very own SQL clone! its Kusto Query Language. never mind that the system uses a SQL database for daily operations. lets use this proprietary Microsoft thing because they are paying us

so yay, problem solved. we now never miss the logs. so how do we read them if they are split up line by line in a database? why with a query of course!

select * from tbLogs where RobotUID = [64CharLongString] and timestamp > [UnixTimeCode]

if this makes no sense to you, CONGRATULATIONS! you found the problem with this setup. Most FSE's were BAD at SQL which meant they didnt read logs anymore. If you do understand what the query is, CONGRATULATIONS! you see why this is Very Stupid.

You could not search by robot name. each robot had some arbitrarily assigned 64 character long string as an identifier and the timestamps were not set to local time. so you had to run a lookup query to find the right name and do some time zone math to figure out what part of the logs to read. oh yeah and you had to download KQL to view them. so now we had both SQL and KQL on our computers
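For the curious, the lookup and the time zone math can be sketched in a few lines of Python. This is only an illustration: the `ROBOT_UIDS` table, the robot name, and the fixed UTC offset are all made up, and the query text just mirrors the one quoted above.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical name -> UID table; in the story this lived in the database.
ROBOT_UIDS = {"robot-17": "a" * 64}


def local_to_unix(local_str: str, utc_offset_hours: float) -> int:
    """Turn a local wall-clock time into a Unix timestamp.

    A fixed UTC offset is an assumption that ignores daylight saving time.
    """
    naive = datetime.strptime(local_str, "%Y-%m-%d %H:%M:%S")
    aware = naive.replace(tzinfo=timezone(timedelta(hours=utc_offset_hours)))
    return int(aware.timestamp())


def build_log_query(robot_name: str, local_str: str, utc_offset_hours: float) -> str:
    """Fill in the two placeholders of the query from the post."""
    uid = ROBOT_UIDS[robot_name]  # raises KeyError for an unknown robot
    since = local_to_unix(local_str, utc_offset_hours)
    # String formatting is fine for a sketch; real code should use
    # parameterized queries instead.
    return f"select * from tbLogs where RobotUID = '{uid}' and timestamp > {since}"
```

Calling `build_log_query("robot-17", "2021-01-01 00:00:00", -6)` does both chores in one go, which is roughly the busywork every FSE had to do by hand.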

NOBODY in the field liked this.

But Daddy Microsoft comes to the rescue

see we didnt JUST get KQL as part of that deal. we got the entire Microsoft cloud suite. and some people (like me) had been automating emails and stuff with Power Automate

This is Microsoft Power Automate. its Microsoft's version of Scratch but it has hooks into everything Microsoft. SharePoint, Teams, Outlook, Excel, it can integrate with all of it. i had been using it to send an email once a day with a list of all the robots in maintenance.

this gave me an idea

and i checked

and Power Automate had hooks for KQL

KLogs is actually short for Kusto Logs

I did not know how to program in Power Automate but damn it anything is better than writing KQL queries. so i got to work. and about 2 months later i had a BEHEMOTH of a Power Automate program. it lagged the webpage and many times when i tried to edit something my changes wouldn't take and i would have to click in very specific ways to ensure none of my variables were getting nuked. i dont think this was the intended purpose of Power Automate but this is what it did

the KLogger would watch a list of Teams chats and when someone typed "klogs" or pasted a copy of an ERROR message, it would spring into action.

it extracted the robot name from the message and timestamp from teams

it would look up the name in the database to find the 64 character long string UID and the location that robot was assigned to

it would reply to the message in teams saying it found a robot name and was getting logs

it would run a KQL query for the database and get the control system logs then export them into a CSV

it would save the CSV with a .xls extension into a folder in SharePoint (it would make a new folder for each day and location if it didnt have one already)

it would send ANOTHER message in teams with a LINK to the file in SharePoint

it would then enter a loop and scour the robot logs looking for the keyword ESTOP to find the error. (it did this because Kusto was SLOWER than the xbee radio and had up to a 10 min delay on syncing)

if it found the error, it would adjust its start and end timestamps to capture it and export the robot logs book-ended from the event by ~ 1 min. if it didnt, it would use the timestamp from when it was triggered +/- 5 min

it saved THOSE logs to SharePoint the same way as before

it would send ANOTHER message in teams with a link to the files

it would then check if the error was 1 of 3 very specific types of error with the camera. if it was, it would extract the base64 jpg image saved in KQL as a byte array, do the math to convert it, and save that as a jpg in SharePoint (and link it of course)

and then it would terminate. and if it encountered an error anywhere in all of this, i had logic where it would spit back an error message in Teams as plaintext explaining what step failed and the program would close gracefully
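Two of the steps above translate nicely into ordinary code: picking the export window around an ESTOP, and turning the base64 image back into bytes. Here is a rough Python sketch, not the actual Power Automate flow. The `(timestamp, message)` row shape and the "closest hit to the trigger" rule are assumptions; the 1 min and 5 min paddings come from the description above.

```python
import base64


def pick_window(rows, trigger_ts, keyword="ESTOP", pad_hit=60, pad_miss=300):
    """Return (start, end) Unix timestamps for the export.

    rows is an iterable of (unix_ts, message) pairs.
    """
    hits = [ts for ts, message in rows if keyword in message]
    if hits:
        # Assumption: use the hit closest to when the bot was triggered.
        event = min(hits, key=lambda ts: abs(ts - trigger_ts))
        return event - pad_hit, event + pad_hit
    # No ESTOP found: fall back to the trigger time +/- 5 min.
    return trigger_ts - pad_miss, trigger_ts + pad_miss


def decode_jpg(b64_text: str) -> bytes:
    """Decode base64 text and check for the JPEG start-of-image marker."""
    data = base64.b64decode(b64_text)
    if not data.startswith(b"\xff\xd8"):
        raise ValueError("decoded data does not look like a JPEG")
    return data
```

In a real language the whole thing is a dozen lines, which says a lot about how much of the 2 months went into fighting the tool rather than the problem.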

I deployed it without asking anyone at one of the sites that was struggling. i just pointed it at their chat and turned it on. it had a bit of a rocky start (spammed chat) but man did the FSE's LOVE IT.

about 6 months later software deployed their answer to reading the logs: a webpage that acted as a nice GUI to the KQL database. much better than a CSV file

it still needed you to scroll through a big drop-down of robot names and enter a timestamp, but i noticed something. all that did was just change part of the URL and refresh the webpage

SO I MADE KLOGS 2 AND HAD IT GENERATE THE URL FOR YOU AND REPLY TO YOUR MESSAGE WITH IT. (it also still did the control server and jpg stuff). Theres a non-zero chance that klogs was still in use long after i left that job
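The URL trick is easy to picture in Python. The viewer address and the parameter names below are invented for illustration, since the real ones are not given here.

```python
from urllib.parse import urlencode

# Hypothetical viewer address and parameter names, for illustration only.
VIEWER_BASE = "https://logs.example.invalid/view"


def build_viewer_url(robot_name: str, unix_ts: int) -> str:
    """Build the link the bot would paste into the chat reply."""
    # urlencode escapes spaces and other unsafe characters in the values.
    query = urlencode({"robot": robot_name, "ts": unix_ts})
    return f"{VIEWER_BASE}?{query}"
```

No drop-down, no typing timestamps: the bot already knows both values from the chat message, so it can just hand over the finished link.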

now i dont recommend anyone use power automate like this. its clunky and weird. i had to make a variable called "Carrage Return" which was a blank text box that i pressed enter one time in because it was incapable of understanding \n or generating a new line in any capacity OTHER than this (thanks support forum).

im also sure this probably is giving the actual programmer people anxiety. imagine working at a company and then some rando you've never seen but only heard about as "the FSE whos really good at root causing stuff", in a department that does not do any coding, managed to, in their spare time, build and release an entire workflow piggybacking on your work without any oversight, code review, or permission.....and everyone liked it

#comet tales#lazee works#power automate#coding#software engineering#it was so funny whenever i visited HQ because i would go “hi my name is LazeeComet” and they would go “OH i've heard SO much about you”

63 notes


Text

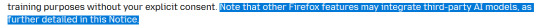

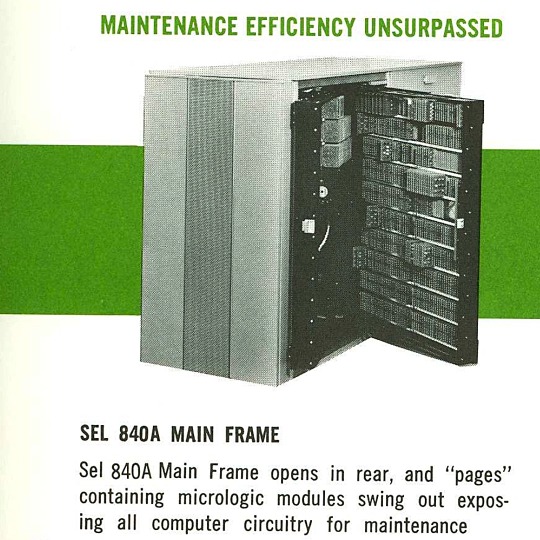

🎄💾🗓️ Day 11: Retrocomputing Advent Calendar - The SEL 840A🎄💾🗓️

Systems Engineering Laboratories (SEL) introduced the SEL 840A in 1965. This is a deep cut folks, buckle in. It was designed as a high-performance, 24-bit general-purpose digital computer, particularly well-suited for scientific and industrial real-time applications.

It was notable for using silicon monolithic integrated circuits and a modular architecture. It supported advanced computation with features like concurrent floating-point arithmetic via an optional Extended Arithmetic Unit (EAU), which allowed independent arithmetic processing in single or double precision. With a core memory cycle time of 1.75 microseconds and a capacity of up to 32,768 directly addressable words, the SEL 840A had impressive computational speed and versatility for its time.

Its instruction set covered arithmetic operations, branching, and program control. The computer had fairly robust I/O capabilities, supporting up to 128 input/output units and optional block transfer control for high-speed data movement. SEL 840A had real-time applications, such as data acquisition, industrial automation, and control systems, with features like multi-level priority interrupts and a real-time clock with millisecond resolution.

Software support included a FORTRAN IV compiler, mnemonic assembler, and a library of scientific subroutines, making it accessible for scientific and engineering use. The operator’s console provided immediate access to registers, control functions, and user interaction! Designed to be maintained, its modular design had serviceability you do not often see today, with swing-out circuit pages and accessible test points.

And here's a personal… personal computer history from Adafruit team member, Dan…

== The first computer I used was an SEL-840A, PDF:

I learned Fortran on it in eighth grade, in 1970. It was at Oak Ridge National Laboratory, where my parents worked, and was used to take data from cyclotron experiments and perform calculations. I later patched the Fortran compiler on it to take single-quoted strings, like 'HELLO', in Fortran FORMAT statements, instead of having to use Hollerith counts, like 5HHELLO.
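For anyone who has not met Hollerith counts: the text is prefixed with its own length and the letter H, so the programmer has to count characters by hand. A tiny Python sketch of the encoding (the function name is just for illustration):

```python
def hollerith(text: str) -> str:
    """Encode text as a Hollerith constant: <length>H<text>."""
    return f"{len(text)}H{text}"
```

`hollerith("HELLO")` gives `5HHELLO`, and every edit to the string means recounting, which is the chore the quoted-string patch removed.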

In 1971-1972, in high school, I used a PDP-10 (model KA10) timesharing system, run by BOCES LIRICS on Long Island, NY, while we were there for one year on an exchange.

This is the front panel of the actual computer I used. I worked at the computer center in the summer. I know the fellow in the picture: he was an older high school student at the time.

The first "personal" computers I used were Xerox Alto, Xerox Dorado, Xerox Dandelion (Xerox Star 8010), Apple Lisa, and Apple Mac, and an original IBM PC. Later I used DEC VAXstations.

Dan kinda wins the first computer contest if there was one… Have first computer memories? Post’em up in the comments, or post yours on socialz’ and tag them #firstcomputer #retrocomputing – See you back here tomorrow!

#retrocomputing#firstcomputer#electronics#sel840a#1960scomputers#fortran#computinghistory#vintagecomputing#realtimecomputing#industrialautomation#siliconcircuits#modulararchitecture#floatingpointarithmetic#computerscience#fortrancode#corememory#oakridgenationallab#cyclotron#pdp10#xeroxalto#computermuseum#historyofcomputing#classiccomputing#nostalgictech#selcomputers#scientificcomputing#digitalhistory#engineeringmarvel#techthroughdecades#console

31 notes


Text

U.S. Approves Foreign Military Sale for South Korean F-15K Upgrade

The State Department has approved the possible sale of components that will allow South Korea to upgrade its F-15K Slam Eagle fleet to a configuration similar to the F-15EX Eagle II.

Stefano D'Urso


The U.S. State Department approved on Nov. 19, 2024, a possible Foreign Military Sale (FMS) to the Republic of Korea of components that will allow the upgrade of the country’s F-15K Slam Eagle fleet. The package, which has an estimated cost of $6.2 billion, follows the decision in 2022 to launch an upgrade program for the aircraft.


The Slam Eagles are the mainstay of the Republic of Korea Air Force’s (ROKAF) multirole missions, with a particular ‘heavy hitting’ long-range strike role. According to the available data, the country operates 59 F-15Ks out of 61 which were initially fielded in 2005. In 2022, the Defense Acquisition Program Administration (DAPA) approved the launch of an upgrade program planned to run from 2024 to 2034.

In particular, the Defense Security Cooperation Agency’s (DSCA) FMS notice says a number of components were requested for the upgrade, including 96 Advanced Display Core Processor II (ADCP II) mission system computers, 70 AN/APG-82(V)1 Active Electronically Scanned Array (AESA) radars, 70 AN/ALQ-250 Eagle Passive Active Warning Survivability System (EPAWSS) electronic warfare (EW) suites and 70 AN/AAR-57 Common Missile Warning Systems (CMWS).

In addition to these, South Korea will also get modifications and maintenance support, aircraft components and spares, consumables, training aids and the entire support package commonly associated with FMS. It is interesting to note that the notice also includes aerial refueling support and aircraft ferry support, so it is possible that at least the initial aircraft will be ferried to the United States for the modifications before the rest are modified in country.

A ROKAF F-15K Slam Eagle drops two GBU-31 JDAM bombs with BLU-109 warhead. (Image credit: ROKAF)

The components included in the possible sale will allow the ROKAF to upgrade its entire fleet of F-15Ks to a configuration similar to the new F-15EX Eagle II currently being delivered to the U.S. Air Force. Interestingly, the Korean configuration will also include the CMWS, currently not installed on the EX, so the F-15K will also require some structural modifications to add the blisters on each side of the canopy rail where the sensors are installed.

“This proposed sale will improve the Republic of Korea’s capability to meet current and future threats by increasing its critical air defence capability to deter aggression in the region and to ensure interoperability with US forces,” says the DSCA in the official notice.

The upgrade of the F-15K is part of a broader modernization of the ROKAF’s fighter fleet. In fact, the service is also upgrading its KF-16s Block 52 to the V configuration, integrating a new AESA radar, mission computer and self-protection suite, with work expected to be completed by 2025. These programs complement the acquisition of the F-35 Lightning II and the KF-21 Boramae.


A ROKAF F-15K Slam Eagle, assigned to the 11th Fighter Wing at Daegu Air Base, takes off for a mission on Aug. 20, 2024. (Image credit: ROKAF)

The F-15K

The F-15K is a variant of the F-15E Strike Eagle built for the Republic of Korea Air Force (ROKAF), with almost half of the components manufactured locally. The aircraft emerged as the winner of the F-X fighter program against the Rafale, Typhoon and Su-35 in 2002, resulting in an order for 40 F-15s equipped with General Electric F110-129 engines. In 2005, a second order for 21 aircraft equipped with Pratt & Whitney F100-PW-229 engines was signed.

The Slam Eagle name is derived from the F-15K’s capability to employ the AGM-84H SLAM-ER standoff cruise missile, with the Taurus KEPD 350K being another weapon exclusive to the ROKAF jet. The F-15K is employed as a fully multi-role aircraft and is considered one of the key assets of the Korean armed forces.

With the aircraft averaging an age of 16 years and expected to be in service until 2060, the Defense Acquisition Program Administration (DAPA) launched in 2022 an upgrade program for the F-15Ks. The upgrade, expected to run from 2024 to 2034, is committed to strengthening the mission capabilities and survivability of the jet.

The F-15K currently equips three squadrons at Daegu Air Base, in the southeast of the country. Although based far from the demilitarized zone (DMZ), the F-15K with its SLAM-ER and KEPD 350 missiles can still hit strategic targets deep behind North Korean borders.

An F-15K releases a Taurus KEPD 350K cruise missile. (Image credit: ROKAF)

The new capabilities

It is not yet clear if the F-15K will receive a new cockpit, since its configuration will be similar to the Eagle II. In fact, the F-15EX has a full glass cockpit equipped with a 10×19-inch touch-screen multifunction color display and JHMCS II both in the front and rear cockpit, Low Profile HUD in the front, stand-by display and dedicated engine, fuel and hydraulics display, in addition to the standard caution/warning lights, switches and Hands On Throttle-And-Stick (HOTAS) control.

Either way, the systems will be powered by the Advanced Display Core Processor II, reportedly the fastest mission computer ever installed on a fighter jet, and the Operational Flight Program Suite 9.1X, a customized variant of the Suite 9 used on the F-15C and F-15E, designed to ensure full interoperability of the new aircraft with the “legacy Eagles”.

The F-15K will be equipped with the new AN/APG-82(V)1 Active Electronically Scanned Array (AESA) radar. The radar, which has been developed from the APG-63(V)3 AESA radar of the F-15C and the APG-79 AESA radar of the F/A-18E/F, allows the aircraft to simultaneously detect, identify and track multiple air and surface targets at longer ranges compared to mechanical radars, facilitating persistent target observation and information sharing for a better decision-making process.


A ROKAF F-15K Slam Eagle takes off for a night mission during the Pitch Black 2024 exercise. (Image credit: Australian Defense Force)

The AN/ALQ-250 EPAWSS will provide full-spectrum EW capabilities, including radar warning, geolocation, situational awareness, and self-protection to the F-15. Chaff and flares capacity will be increased by 50%, with four more dispensers added in the EPAWSS fairings behind the tail fins (two for each fairing), for a total of 12 dispensers housing 360 cartridges.

EPAWSS is fully integrated with radar warning, geo-location and increased chaff and flare capability to detect and defeat surface and airborne threats in signal-dense and highly contested environments. Because of this, the system enables freedom of maneuver and deeper penetration into battlespaces protected by modern integrated air defense systems.

The AN/AAR-57 CMWS is an ultra-violet based missile warning system, part of an integrated IR countermeasures suite utilizing five sensors to display accurate threat location and dispense decoys/countermeasures. Although CMWS was initially fielded in 2005, BAE Systems continuously customized the algorithms to adapt to new threats and CMWS has now reached Generation 3.

@TheAviationist.com

21 notes


Text

Automatically clean up data sets to prevent ‘garbage in, garbage out’ - Technology Org

New Post has been published on https://thedigitalinsider.com/automatically-clean-up-data-sets-to-prevent-garbage-in-garbage-out-technology-org/


‘Garbage in, garbage out’ has become a common expression for the concept that flawed input data will lead to flawed output data. Practical examples abound. If a dataset contains temperature readings in Celsius and Fahrenheit without proper conversion, any analysis based on that data will be flawed. If the input data for a gift recommender system contains errors in the age attribute of customers, it might accidentally suggest kids’ toys to grown-ups.

Illustration by Heyerlein via Unsplash, free license

At a time when more and more companies, organizations and governments base decisions on data analytics, it is highly important to ensure good and clean data sets. That is what Sebastian Schelter and his colleagues are working on. Schelter is assistant professor at the Informatics Institute of the University of Amsterdam, working in the Intelligent Data Engineering Lab (INDElab). Academic work he published in 2018, when he was working at Amazon, presently powers some of Amazon’s data quality services. At UvA he is expanding that work.

What are the biggest problems with data sets?

‘Missing data is one big problem. Think of an Excel sheet where you have to fill in values in each cell, but some cells are empty. Maybe data got lost, maybe data just wasn’t collected. That’s a very common problem. The second big problem is that some data are wrong. Let’s say you have data about the age of people and there appears to be somebody who is a thousand years old.

A third major problem with data sets is data integration errors, which arise from combining different data sets. Very often this leads to duplicates. Think of two companies that merge. They will have address databases, and maybe the same address is spelled in slightly different ways: one database uses ‘street’ and the other one uses ‘st.’. Or the spelling might be different.

Finally, the fourth major problem is called ‘referential integrity’. If you have datasets that reference each other, you need to make sure that the referencing is done correctly. If a company has a dataset with billing data and a bank has a dataset with bank account numbers of their customers, you want a bank account number in the billing dataset to reference an existing bank account at that bank, otherwise it would reference something that does not exist. Often there are problems with references between two data sets.’
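The last two problems, duplicates after integration and broken references, can be illustrated with a small Python sketch. It is not taken from the research described here; the normalization rule (lowercase, collapse whitespace, expand ‘st.’ to ‘street’) and all function names are assumptions chosen for the example.

```python
import re
from collections import defaultdict


def normalize_address(address: str) -> str:
    """Lowercase, expand a trailing-style 'st'/'st.' and collapse whitespace."""
    text = address.lower().strip()
    text = re.sub(r"\bst\.?(?=\s|$)", "street", text)
    return re.sub(r"\s+", " ", text)


def find_duplicate_addresses(addresses):
    """Group addresses that become identical after normalization."""
    groups = defaultdict(list)
    for address in addresses:
        groups[normalize_address(address)].append(address)
    return [group for group in groups.values() if len(group) > 1]


def find_dangling_references(billing_accounts, bank_accounts):
    """Return billing account numbers that do not exist at the bank."""
    known = set(bank_accounts)
    return [account for account in billing_accounts if account not in known]
```

Real address matching needs far more than one substitution rule, but the shape is the same: map each record to a canonical form, then compare.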

How does your research tackle these problems?

‘Data scientists spend a lot of their time cleaning up flawed data sets. The numbers vary, but surveys have shown that it’s up to eighty percent of their time. That’s a big waste of time and talent. To counter this, we have developed open source software, called Deequ. Instead of data scientists having to write a program that validates the data quality, they can just write down what their data should look like. For example, they can prescribe things like: ‘there shouldn’t be missing data in the column with social security numbers’ or ‘the values in the age-column shouldn’t be negative’. Then Deequ runs over the data in an efficient way and tells whether the test is passed or not. Often Deequ also shows the particular data records that violated the test.’
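To give a feel for the declarative style, here is a minimal sketch in plain Python. It does not use Deequ or its API; the helper names `is_complete`, `is_non_negative` and `run_checks` are hypothetical and only mimic the idea of stating expectations and getting back a pass/fail result with the violating records.

```python
def is_complete(column):
    """Expectation: the column has no missing values."""
    return (f"{column} has no missing values",
            lambda row: row.get(column) not in (None, ""))


def is_non_negative(column):
    """Expectation: present values in the column are >= 0."""
    return (f"{column} is non-negative",
            lambda row: row.get(column) is None or row[column] >= 0)


def run_checks(rows, checks):
    """Evaluate each expectation and collect the records that violate it."""
    report = {}
    for description, predicate in checks:
        violations = [row for row in rows if not predicate(row)]
        report[description] = {"passed": not violations, "violations": violations}
    return report
```

A call such as `run_checks(rows, [is_complete("ssn"), is_non_negative("age")])` reads almost like the two example rules in the interview, which is the point of the approach.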

How is Deequ used in practice?

‘The original scientific paper was written when I was working at Amazon. Since then, the open source implementation of this work has become pretty popular for all kinds of applications in all kinds of domains. There is a Python version which has more than four million downloads per month. After I left Amazon, the company built two cloud services based on Deequ, one of them called AWS Glue Data Quality. Amazon’s cloud is the most used cloud in the world, so many companies that use it have access to our way of data cleaning.’

What is the current research you are doing to clean up data sets?

‘At the moment we are developing a way to measure data quality of streaming data in our ICAI-lab ‘AI for Retail’, cooperating with bol.com. Deequ was developed for data at rest, but many use cases have a continuous stream of data. The data might be too big to store, there might be privacy reasons for not storing them, or it might simply be too expensive to store the data. So, we built StreamDQ, which can run quality checks on streaming data. A big challenge is that you can’t spend much time on processing the data, otherwise everything will be slowed down too much. So, you can only do certain tests and sometimes you have to use approximations. We have a working prototype, and we are now evaluating it.’
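The constraint described here, checking data that is never stored, can be illustrated with a one-pass tracker that keeps only a few numbers in memory. This is a generic sketch, not StreamDQ or its API; the class name and the chosen metrics are assumptions.

```python
class StreamingColumnStats:
    """Track simple quality metrics for one column in constant memory."""

    def __init__(self):
        self.count = 0
        self.missing = 0
        self.minimum = None
        self.maximum = None

    def update(self, value):
        """Consume one value from the stream; nothing is stored."""
        self.count += 1
        if value is None:
            self.missing += 1
            return
        if self.minimum is None or value < self.minimum:
            self.minimum = value
        if self.maximum is None or value > self.maximum:
            self.maximum = value

    @property
    def completeness(self):
        """Fraction of values seen so far that were not missing."""
        if self.count == 0:
            return 1.0
        return 1 - self.missing / self.count
```

Each update costs a handful of comparisons, so the check keeps up with the stream; metrics that would need the full data, such as exact distinct counts, are where approximations come in.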

Source: University of Amsterdam


#ai#Amazon#Analysis#Analytics#applications#AWS#bases#cell#Cells#challenge#Cloud#cloud services#Companies#data#data analytics#data cleaning#Data Engineering#Data Integration#data quality#Database#datasets#domains#engineering#excel#excel sheet#Featured technology news#how#illustration#integration#it

0 notes

Text

Businesses often face challenges such as cost reduction, faster product launches, and high-quality delivery while building products. To tackle this, turn to a powerful solution: Solution Engineering, covered in our blog.

Discover how this approach offers structured problem-solving and efficient solutions for your business. Align your business objectives and user needs, from problem identification to knowledge transfer, while saving time and cutting costs. With Solution Engineering, you can experience unmatched growth and success. Learn more.

#big data#operations software#business intelligence#development software#solution engineering#it services#Systems engineering#nitorinfotech#blog#automation#process improvement#data integration#workflow automation

0 notes

Text

The Office of Personnel Management’s acting inspector general has confirmed that the independent office is investigating whether any “emerging threats” to sensitive information have arisen as a result of Elon Musk’s DOGE operatives introducing rapid changes to protected government networks.

“The OPM OIG [office of the inspector general] is committed to providing independent and objective oversight of OPM’s programs and operations,” writes the acting inspector general, Norbert Vint, in a letter dated March 7 to Democratic lawmakers, adding that his office is not only legally required to scrutinize OPM’s security protocols, but routinely does so based on “developing risks.” The letter stated that the office would fold specific requests issued by Democratic lawmakers last month into its “existing work,” while also initiating a “new engagement” over potential risks at the agency associated with computer systems that have been accessed or modified by the United States DOGE Service.

Vint, whose predecessor was fired by Trump in January, is one of a half dozen deputy inspectors general urged by Democrats on the House Committee on Oversight and Government Reform last month to investigate reports from WIRED and other outlets about DOGE’s efforts to gain access to a wide range of record systems that host some of the government’s most sensitive data, including personnel files on millions of government employees and their families.

“We are deeply concerned that unauthorized system access could be occurring across the federal government and could pose a major threat to the personal privacy of all Americans and to the national security of our nation,” Gerald Connolly, the oversight committee’s ranking Democrat, wrote in a letter on February 6.

In addition to OPM, Democrats have pressed for similar security assessments at five other agencies, including at the Treasury Department, the General Services Administration, the Small Business Administration, the US Agency for International Development, and the Department of Education. However, Vint is the only watchdog at any of the named agencies to have responded so far, a committee spokesperson tells WIRED.

While in the minority in the House and Senate, Democrats have little power to conduct effective oversight outside of formal hearings, which must be convened by Republicans. During his first term, Trump’s Justice Department issued guidance notifying executive agencies that they had zero obligation to respond to questions from Democrats.

Congressional Republicans have committed to little, if any, formal oversight of DOGE’s work, opting instead to back-channel with the billionaire over the impacts of his anti-personnel crusade.

The executive order establishing DOGE, signed by the president on his first day in office, instructed federal agencies to provide Musk’s operatives with “full and prompt access” to all unclassified records systems in order to effectuate a government-wide purge of “fraud, waste, and abuse.” It quickly became clear, however, that DOGE’s staff, many of them young engineers with direct ties to Musk’s own businesses, were paying little attention to key privacy safeguards; eschewing, for instance, mandatory assessments of new technologies installed on protected government networks.

“Several of the concerns you expressed in your letter touch on issues that the OPM OIG evaluates as part of our annual reviews of OPM’s IT and financial systems, and we plan to incorporate those concerns into these existing projects,” writes Vint in the March 7 letter. “We have also just begun an engagement to assess risks associated with new and modified information systems at OPM. We believe that, ultimately, our new engagement will broadly address many of your questions related to the integrity of OPM systems.”

18 notes


Text

An Introduction to Cybersecurity

I created this post for the Studyblr Masterpost Jam, check out the tag for more cool masterposts from folks in the studyblr community!

What is cybersecurity?

Cybersecurity is all about securing technology and processes - making sure that the software, hardware, and networks that run the world do exactly what they need to do and can't be abused by bad actors.

The CIA triad is a concept used to explain the three goals of cybersecurity. The pieces are:

Confidentiality: ensuring that information is kept secret, so it can only be viewed by the people who are allowed to do so. This involves encrypting data, requiring authentication before viewing data, and more.

Integrity: ensuring that information is trustworthy and cannot be tampered with. For example, this involves making sure that no one changes the contents of the file you're trying to download or intercepts your text messages.

Availability: ensuring that the services you need are there when you need them. Blocking every single person from accessing a piece of valuable information would be secure, but completely unusable, so we have to think about availability. This can also mean blocking DDoS attacks or fixing flaws in software that cause crashes or service issues.
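To make the integrity goal concrete, here's a minimal Python sketch of checking a downloaded file against a published SHA-256 checksum. The function names are made up for this example, and it assumes the expected checksum came from a source you trust (if an attacker can change both the file and the checksum, the check proves nothing).

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_checksum(data: bytes, expected_hex: str) -> bool:
    """True if the data hashes to the expected checksum."""
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(sha256_hex(data), expected_hex.lower())
```

If even one byte of the file changes, the digest changes completely, so a mismatch tells you the download was corrupted or tampered with.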

What are some specializations within cybersecurity? What do cybersecurity professionals do?

incident response

digital forensics (often combined with incident response in the acronym DFIR)

reverse engineering

cryptography

governance/compliance/risk management

penetration testing/ethical hacking

vulnerability research/bug bounty

threat intelligence

cloud security

industrial/IoT security, often called Operational Technology (OT)

security engineering/writing code for cybersecurity tools (this is what I do!)

and more!

Where do cybersecurity professionals work?

I view the industry in three big chunks: vendors, everyday companies (for lack of a better term), and government. It's more complicated than that, but it helps.

Vendors make and sell security tools or services to other companies. Some examples are Crowdstrike, Cisco, Microsoft, Palo Alto, EY, etc. Vendors can be giant multinational corporations or small startups. Security tools can include software and hardware, while services can include consulting, technical support, or incident response or digital forensics services. Some companies are Managed Security Service Providers (MSSPs), which means that they serve as the security team for many other (often small) businesses.

Everyday companies include everyone from giant companies like Coca-Cola to the mom and pop shop down the street. Every company is a tech company now, and someone has to be in charge of securing things. Some businesses will have their own internal security teams that respond to incidents. Many companies buy tools provided by vendors like the ones above, and someone has to manage them. Small companies with small tech departments might dump all cybersecurity responsibilities on the IT team (or outsource things to an MSSP), while larger ones may have a dedicated security staff.

Government cybersecurity work can involve a lot of things, from securing the local water supply to working for the big three letter agencies. In the U.S. at least, there are also a lot of government contractors, who are their own individual companies but the vast majority of what they do is for the government. MITRE is one example, and the federal research labs and some university-affiliated labs are an extension of this. Government work and military contractor work are where geopolitics and ethics come into play most clearly, so just… be mindful.

What do academics in cybersecurity research?

A wide variety of things! You can get a good idea by browsing the papers from the ACM Conference on Computer and Communications Security (CCS). Some of the big research areas that I'm aware of are:

cryptography & post-quantum cryptography

machine learning model security & alignment

formal proofs of a program & programming language security

security & privacy

security of network protocols

vulnerability research & developing new attack vectors

Cybersecurity seems niche at first, but it actually covers a huge range of topics all across technology and policy. It's vital to running the world today, and I'm obviously biased but I think it's a fascinating topic to learn about. I'll be posting a new cybersecurity masterpost each day this week as a part of the #StudyblrMasterpostJam, so keep an eye out for tomorrow's post! In the meantime, check out the tag and see what other folks are posting about :D

#studyblrmasterpostjam#studyblr#cybersecurity#masterpost#ref#I love that this challenge is just a reason for people to talk about their passions and I'm so excited to read what everyone posts!

47 notes
