#Data governance

Text

Unlock the other 99% of your data - now ready for AI

New Post has been published on https://thedigitalinsider.com/unlock-the-other-99-of-your-data-now-ready-for-ai/

For decades, companies of all sizes have recognized that the data available to them holds significant value, for improving user and customer experiences and for developing strategic plans based on empirical evidence.

As AI becomes increasingly accessible and practical for real-world business applications, the potential value of available data has grown exponentially. Successfully adopting AI requires significant effort in data collection, curation, and preprocessing. Moreover, important aspects such as data governance, privacy, anonymization, regulatory compliance, and security must be addressed carefully from the outset.

In a conversation with Henrique Lemes, Americas Data Platform Leader at IBM, we explored the challenges enterprises face in implementing practical AI in a range of use cases. We began by examining the nature of data itself, its various types, and its role in enabling effective AI-powered applications.

Henrique highlighted that referring to all enterprise information simply as ‘data’ understates its complexity. The modern enterprise navigates a fragmented landscape of diverse data types and inconsistent quality, particularly between structured and unstructured sources.

In simple terms, structured data refers to information that is organized in a standardized and easily searchable format, one that enables efficient processing and analysis by software systems.

Unstructured data is information that does not follow a predefined format or organizational model, making it more complex to process and analyze. Unlike structured data, it spans diverse formats such as emails, social media posts, videos, images, documents, and audio files. While it lacks the clear organization of structured data, unstructured data holds valuable insights that, when effectively managed through advanced analytics and AI, can drive innovation and inform strategic business decisions.

Henrique stated, “Currently, less than 1% of enterprise data is utilized by generative AI, and over 90% of that data is unstructured, which directly affects trust and quality”.

Trust in data matters. Decision-makers in an organization need to be confident that the information at their fingertips is complete, reliable, and properly obtained. But evidence suggests that less than half of the data available to businesses is used for AI, with unstructured data often ignored or sidelined because of the complexity of processing it and examining it for compliance, especially at scale.

To open the way to better decisions that are based on a fuller set of empirical data, the trickle of easily consumed information needs to be turned into a firehose. Automated ingestion is the answer in this respect, Henrique said, but the governance rules and data policies still must be applied – to unstructured and structured data alike.

Henrique set out the three processes that let enterprises leverage the inherent value of their data. “Firstly, ingestion at scale. It’s important to automate this process. Second, curation and data governance. And the third [is when] you make this available for generative AI. We achieve over 40% of ROI over any conventional RAG use-case.”

IBM provides a unified strategy, rooted in a deep understanding of the enterprise’s AI journey, combined with advanced software solutions and domain expertise. This enables organizations to efficiently and securely transform both structured and unstructured data into AI-ready assets, all within the boundaries of existing governance and compliance frameworks.

“We bring together the people, processes, and tools. It’s not inherently simple, but we simplify it by aligning all the essential resources,” he said.

As businesses scale and transform, the diversity and volume of their data increase. To keep up, the AI data ingestion process must be both scalable and flexible.

“[Companies] encounter difficulties when scaling because their AI solutions were initially built for specific tasks. When they attempt to broaden their scope, they often aren’t ready, the data pipelines grow more complex, and managing unstructured data becomes essential. This drives an increased demand for effective data governance,” he said.

IBM’s approach is to thoroughly understand each client’s AI journey, creating a clear roadmap to achieve ROI through effective AI implementation. “We prioritize data accuracy, whether structured or unstructured, along with data ingestion, lineage, governance, compliance with industry-specific regulations, and the necessary observability. These capabilities enable our clients to scale across multiple use cases and fully capitalize on the value of their data,” Henrique said.

Like anything worthwhile in technology implementation, it takes time to put the right processes in place, gravitate to the right tools, and have the necessary vision of how any data solution might need to evolve.

IBM offers enterprises a range of options and tooling to enable AI workloads in even the most regulated industries, at any scale. With international banks, finance houses, and global multinationals among its client roster, there are few substitutes for Big Blue in this context.

To find out more about enabling data pipelines for AI that drive business and offer fast, significant ROI, head over to this page.

#ai#AI-powered#Americas#Analysis#Analytics#applications#approach#assets#audio#banks#Blue#Business#business applications#Companies#complexity#compliance#customer experiences#data#data collection#Data Governance#data ingestion#data pipelines#data platform#decision-makers#diversity#documents#emails#enterprise#Enterprises#finance

2 notes


Text

How Dr. Imad Syed Transformed PiLog Group into a Digital Transformation Leader

The digital age demands leaders who don’t just adapt but drive transformation. One such visionary is Dr. Imad Syed, who recently shared his incredible journey and PiLog Group’s path to success in an exclusive interview on Times Now.

In this inspiring conversation, Dr. Syed reflects on the milestones, challenges, and innovative strategies that have positioned PiLog Group as a global leader in data management and digital transformation.

The Journey of a Visionary:

From humble beginnings to spearheading PiLog’s global expansion, Dr. Syed’s story is a testament to resilience and innovation. His leadership has not only redefined PiLog but has also influenced industries worldwide, especially in domains like data governance, SaaS solutions, and AI-driven analytics.

PiLog’s Success: A Benchmark in Digital Transformation:

Under Dr. Syed’s guidance, PiLog has become synonymous with pioneering Lean Data Governance SaaS solutions. Their focus on data integrity and process automation has helped businesses achieve operational excellence. PiLog’s services are trusted by industries such as oil and gas, manufacturing, energy, utilities, nuclear, and many more.

Key Insights from the Interview:

In the interview, Dr. Syed touches upon:

The importance of data governance in digital transformation.

How PiLog’s solutions empower organizations to streamline operations.

His philosophy of continuous learning and innovation.

A Must-Watch for Industry Leaders:

If you’re a business leader or tech enthusiast, this interview is packed with actionable insights that can transform your understanding of digital innovation.

👉 Watch the full interview here:

youtube

The Global Impact of PiLog Group:

PiLog’s success story resonates globally, serving clients across Africa, the USA, EU, Gulf countries, and beyond. Their ability to adapt and innovate makes them a case study in leveraging digital transformation for competitive advantage.

Join the Conversation:

What’s your take on the future of data governance and digital transformation? Share your thoughts and experiences in the comments below.

#datamanagement#data governance#data analysis#data analytics#data scientist#big data#dataengineering#dataprivacy#data centers#datadriven#data#businesssolutions#techinnovation#businessgrowth#businessautomation#digital transformation#piloggroup#drimadsyed#timesnowinterview#datascience#artificialintelligence#bigdata#datadrivendecisions#Youtube

3 notes


Text

Data Modelling Master Class Series | Introduction - Topic 1

https://youtu.be/L1x_BM9wWdQ

#theDataChannel @thedatachannel @datamodelling

#data modeling#data#data architecture#data analytics#data quality#enterprise data management#enterprise data warehouse#the Data Channel#data design#data architect#entity relationship#ERDs#physical data model#logical data model#data governance

2 notes


Text

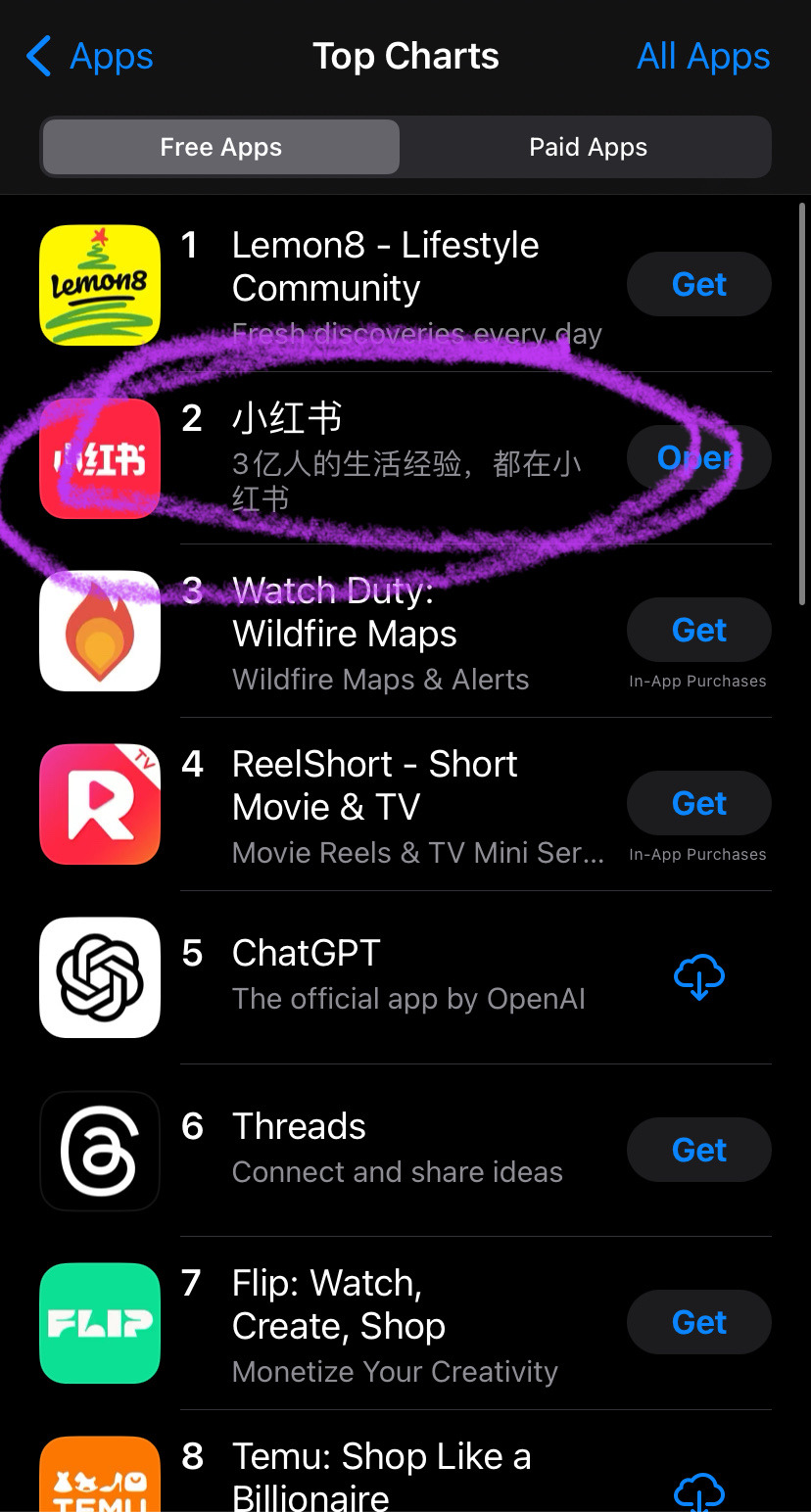

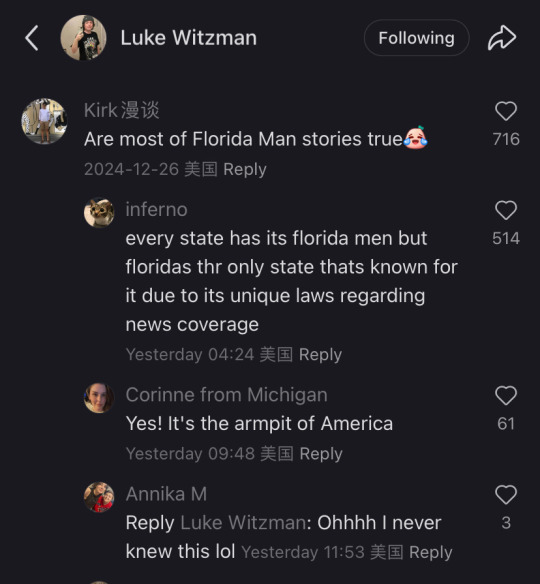

Nope now it’s at the point that i’m shocked that people off tt don’t know what’s going down. I have no reach but i’ll sum it up anyway.

SCOTUS is hearing on the constitutionality of the ban as tiktok and creators are arguing that it is a violation of our first amendment rights to free speech, freedom of the press and freedom to assemble.

SCOTUS: tiktok bad, big security concern because china bad!

Tiktok lawyers: if china is such a concern why are you singling us out? Why not SHEIN or temu which collect far more information and are less transparent with their users?

SCOTUS (out loud): well you see we don’t like how users are communicating with each other, it’s making them more anti-american and china could disseminate pro china propaganda (get it? They literally said they do not like how we Speak or how we Assemble. Independent journalists reach their audience on tt meaning they have Press they want to suppress)

Tiktok users: this is fucking bullshit i don’t want to lose this community what should we do? We don’t want to go to meta or x because they both lobbied congress to ban tiktok (free market capitalism amirite? Paying off your local congressmen to suppress the competition is totally what the free market is about) but nothing else is like TikTok

A few users: what about xiaohongshu? It’s the Chinese version of tiktok (not quite, douyin is the chinese tiktok but it’s primarily for younger users so xiaohongshu was chosen)

16 hours later:

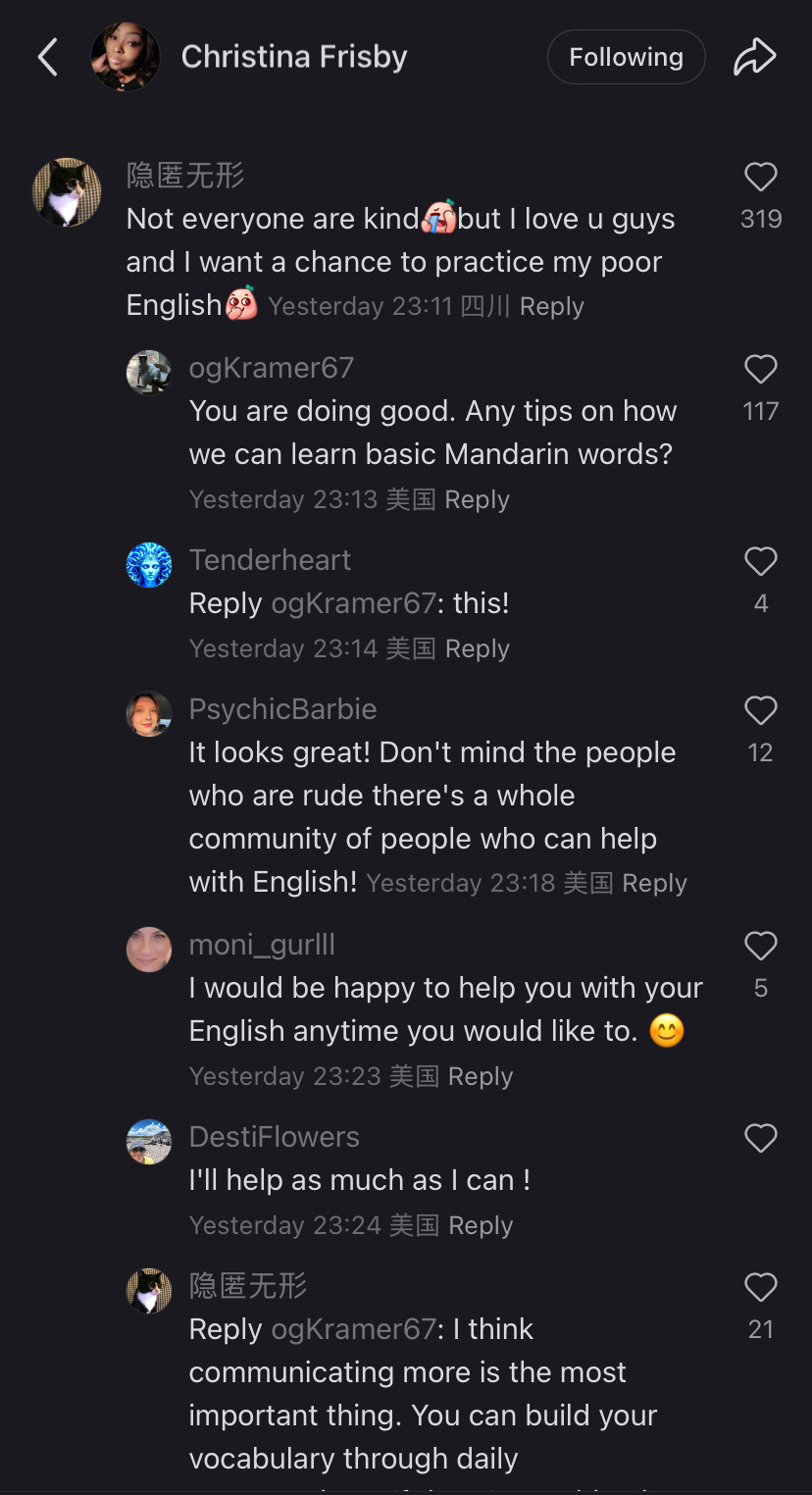

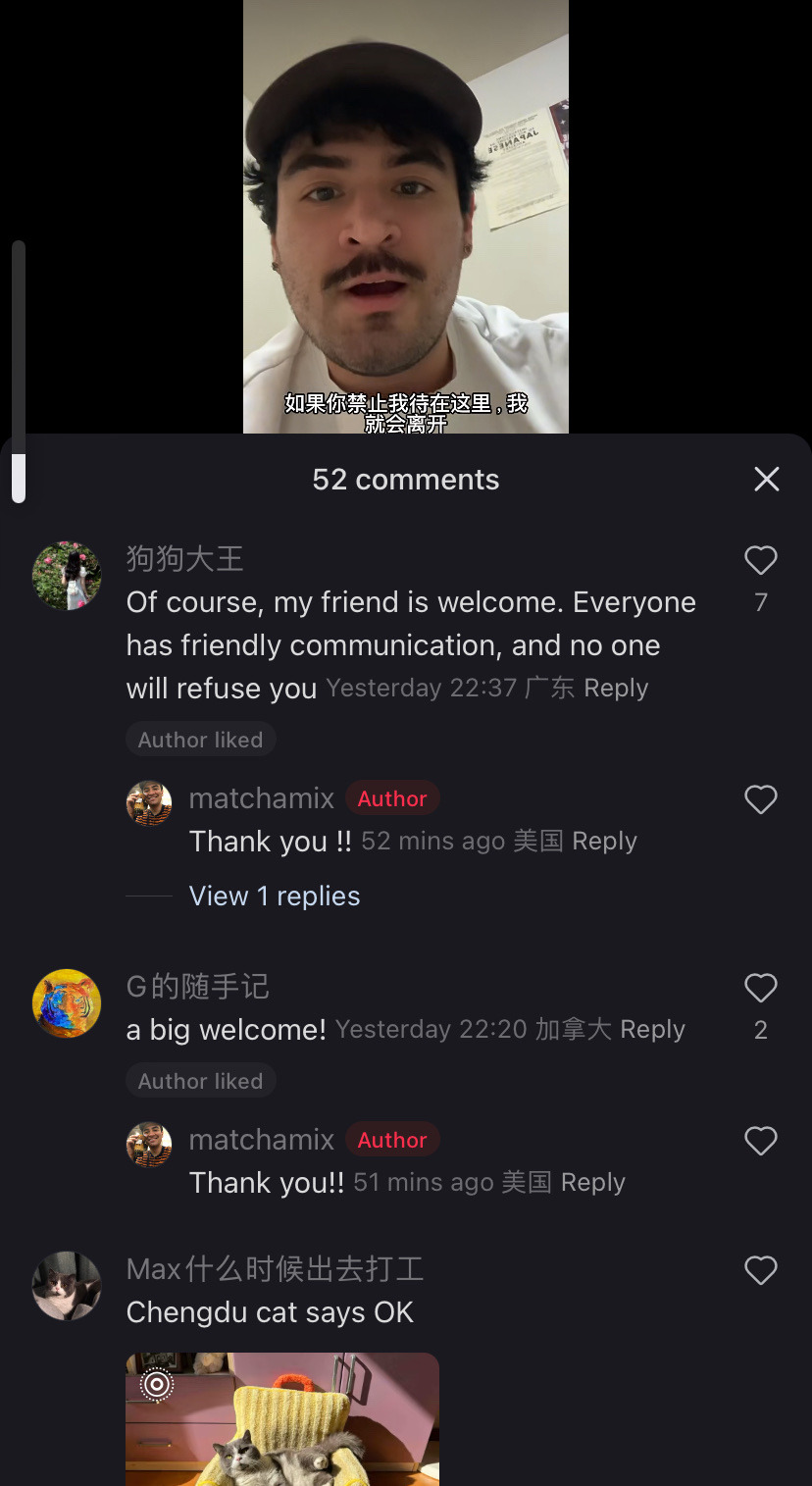

Tiktok as a community has chosen to collectively migrate TO a chinese owned app that is purely in Chinese out of utter spite and contempt for meta/x and the gov that is backing them.

My fyp is a mix of “i would rather mail memes to my friends than ever return to instagram reels” and “i will xerox my data to xi jinping myself i do not care i share my ss# with 5 other people anyway” and “im just getting ready for my day with my chinese made coffee maker and my Chinese made blowdryer and my chinese made clothing and listening to a podcast on my chinese made phone and get in my car running on chinese manufactured microchips but logging into a chinese social media? Too much for our gov!” etc.

So the government was scared that tiktok was creating a sense of class consciousness and tried to kill it but by doing so they sent us all to xiaohongshu. And now? Oh it’s adorable seeing this gov-manufactured divide be crossed in such a way.

This is adorable and so not what they were expecting. Im sure they were expecting a reluctant return to reels and shorts to fill the void but tiktokers said fuck that, we will forge connections across the world. Who you tell me is my enemy i will make my friend. That’s pretty damn cool.

#tiktok ban#xiaohongshu#the great tiktok migration of 2025#us politics#us government#scotus#ftr tiktok is owned primarily by private investors and is not operated out of china#and all us data is stored on servers here in the us#tiktok also employs 7000 us employees to maintain the US side of operations#like they’re just lying to get us to shut up about genocide and corruption#so fuck it we’ll go spill all the tea to ears that wanna hear it cause this country is not what its cracked up to be#we been lied to and the rest of the world has been lied to#if scotus bans it tomorrow i can’t wait for their finding out#rednote

42K notes


Text

From Data Chaos to Clarity: How Data Consulting Companies Make It Happen

In today’s digital era, businesses generate massive amounts of data from various sources such as customer interactions, online transactions, social media, IoT devices, and internal operations. While data is a powerful asset, most organizations struggle to manage it effectively. Without proper structure and strategy, data becomes overwhelming and leads to confusion instead of actionable insights. This is where data consulting companies and data analytics consulting companies play a transformative role.

These firms specialize in converting raw, unstructured data into valuable insights that drive business growth, streamline operations, and enhance decision-making. With expertise in big data, cloud technologies, artificial intelligence (AI), and machine learning (ML), data consulting companies are helping organizations move from data chaos to data clarity.

Why Data Chaos is a Major Challenge

Many organizations collect data from multiple sources but fail to unify it into a cohesive system. Common challenges include:

Inconsistent data formats and quality issues.

Lack of centralized storage or data strategy.

Difficulty in extracting meaningful patterns from large datasets.

Limited expertise in advanced analytics and visualization.

Without the right infrastructure and expertise, businesses miss opportunities, face inefficiencies, and struggle to stay competitive. Data analytics consulting companies offer solutions tailored to these pain points, ensuring businesses can fully leverage their data assets.

How Data Consulting Companies Turn Chaos into Clarity

Data Strategy and Governance: Top data consulting companies begin by assessing the current data environment and developing a robust strategy. They define data governance policies, ensuring accuracy, consistency, and compliance with regulations such as GDPR and CCPA.

Data Integration and Cleaning: One of the biggest challenges enterprises face is handling fragmented data sources. Data analytics consulting companies integrate data from multiple platforms, clean it to remove inaccuracies, and create a unified view for analysis.

Advanced Analytics and AI Implementation: By leveraging AI and ML, consulting firms help businesses uncover patterns, predict trends, and gain insights that drive growth. Predictive and prescriptive analytics enable organizations to make proactive decisions rather than reactive ones.

Data Visualization and Reporting: Turning raw data into user-friendly dashboards is a core service of data consulting companies. Tools like Tableau, Power BI, and Qlik are used to create real-time visual reports that make complex data easy to interpret.

Cloud Data Solutions: Migrating to cloud platforms such as AWS, Azure, or Google Cloud ensures data scalability, security, and cost efficiency. Most data analytics consulting companies help enterprises transition from legacy systems to modern cloud environments.

Industry-Specific Insights: Leading data consulting companies offer customized solutions for industries like finance, healthcare, retail, and manufacturing. For example, retailers can leverage customer data for personalized marketing, while manufacturers can optimize supply chains using predictive analytics.

Benefits of Partnering with Data Analytics Consulting Companies

Improved Decision-Making: Businesses gain actionable insights that improve strategy and execution.

Enhanced Efficiency: Streamlined data processes reduce operational delays and redundancies.

Competitive Advantage: Advanced analytics reveal opportunities before competitors notice them.

Risk Reduction: Data-driven strategies help identify and mitigate risks early.

Revenue Growth: Insights from data consulting companies often lead to new revenue streams and better customer retention.

Why 2025 is the Year of Data Clarity

As the volume of data continues to grow, businesses that invest in data analytics consulting companies are better equipped to stay ahead. With the integration of AI, real-time analytics, and big data platforms, 2025 will see more enterprises adopting a data-first approach. Data consulting companies are becoming strategic partners for organizations looking to unlock hidden opportunities and achieve long-term success.

Conclusion

From tackling data silos to implementing predictive analytics, data consulting companies are turning complex, messy datasets into actionable insights. By leveraging the expertise of data analytics consulting companies, businesses can achieve clarity, efficiency, and growth. For any organization aiming to thrive in today’s digital economy, investing in professional data consulting services is no longer optional — it is a necessity.

#data consulting companies#data analytics consulting companies#big data strategy#AI consulting#predictive analytics#business intelligence#data visualization#cloud data solutions#data governance#digital transformation

0 notes

Text

Ensure Data Quality for Generative AI Success – Tejasvi Addagada

Generative AI relies on high-quality data to deliver accurate and reliable results. In this video, Tejasvi Addagada explains key strategies to ensure data quality, governance, and compliance for successful AI implementation.

#tejasvi addagada#data governance strategy#data management framework#data quality generative AI#data governance#data risk management#Youtube

0 notes

Text

7 Essential Data Governance Policies for a Successful Data Migration

Data migration is a high-stakes endeavor. Whether you’re moving to a new cloud platform, upgrading systems, or consolidating databases, the process is fraught with risks: data loss, security breaches, compliance fines, and operational disruptions. A robust data governance framework isn’t just a best practice—it’s your safety net. By implementing the right policies, you can ensure accuracy,…

0 notes

Text

🚀 NEW BLOG: Unlock the Next Wave of Digital Strategy! Discover how Agentic AI, emotional personalization, decentralized platforms, and next-gen data governance will shape business innovation through late 2025 and into 2026. Are you ready to future‑proof your digital roadmap? #digitalstrategy #AI #agenticAI #technology #future #futurism #datagovernance #personalization #cybersecurity #startups #innovation #management #productivity #entrepreneurship #marketing #digitaltransformation #humanresources #creativity #customerrelations #branding

#Agentic AI#AI#Behavioral KPIs#cybersecurity#Data Governance#Decentralized Platforms#Digital Transformation#Personalization

0 notes

Text

Best Master Data Harmonization

What is Master Data Harmonization?

Master Data Harmonization is the process of standardizing and consolidating master data—such as customer, product, supplier, and asset data—across multiple systems and platforms. It ensures that different business units refer to the same data definitions, values, and structures, thereby reducing inconsistencies, duplication, and data silos.

Benefits of Master Data Harmonization

Improved Data Quality: Standardized data reduces errors, inconsistencies, and redundancies.

Operational Efficiency: Harmonized data supports streamlined business operations and faster decision-making.

Better Compliance: Consistent data supports regulatory compliance and accurate reporting.

Enhanced Customer Experience: With a single view of customer or product data, businesses can deliver more personalized services.

Cost Savings: Reducing duplication and error correction leads to significant savings in time and resources.

Best Practices for Master Data Harmonization

Establish Data Governance Framework: Define clear policies, roles, and responsibilities to manage and govern data effectively.

Conduct Data Profiling and Assessment: Understand the current state of master data to identify inconsistencies and areas for improvement.

Define Standard Data Models: Create a unified data model with standardized naming conventions, formats, and hierarchies.

Use Data Harmonization Tools and Platforms: Leverage MDM (Master Data Management) tools with harmonization capabilities to automate and enforce consistency.

Implement a Phased Approach: Start with high-impact areas (e.g., customer or product data) before expanding to other domains.

Ensure Stakeholder Involvement: Engage business and IT stakeholders to ensure alignment and adoption.

Monitor and Maintain Continuously: Data harmonization is not a one-time activity. Regular audits, updates, and monitoring are essential to sustain quality.
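As a rough illustration of the "define standard data models" and "automate and enforce consistency" practices above, here is a minimal Python sketch that groups supplier records from two systems under one normalized name. The field names (`system`, `name`), the sample records, and the normalization rule are assumptions made for this example; a real MDM tool would apply much richer matching logic.

```python
import re
from collections import defaultdict


def normalize_name(name: str) -> str:
    """Lower-case, replace punctuation with spaces, and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", name.lower())
    return " ".join(cleaned.split())


def harmonize(records):
    """Group records from different systems under one normalized key."""
    grouped = defaultdict(list)
    for record in records:
        grouped[normalize_name(record["name"])].append(record)

    golden = []
    for key, group in grouped.items():
        golden.append({
            "name": key,
            "sources": sorted({r["system"] for r in group}),
            "record_count": len(group),
        })
    return golden


# Hypothetical sample data: the same supplier spelled differently in two systems.
records = [
    {"system": "erp", "name": "Acme Corp."},
    {"system": "crm", "name": "ACME  corp"},
    {"system": "crm", "name": "Globex, Inc."},
]
golden_records = harmonize(records)
```

In this sketch the two spellings of the first supplier collapse into a single entry that lists both source systems, which is the "single view" the benefits section describes. Exact-match normalization is only a starting point; near-duplicates such as abbreviations would need fuzzy matching or curated reference data.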

Conclusion

Master Data Harmonization is critical for organizations seeking to thrive in the digital era. It ensures data consistency, enhances business agility, and empowers better decision-making. By adopting best practices and leveraging the right technologies, businesses can create a strong foundation of trusted data, enabling sustained growth and competitive advantage.

0 notes

Text

Protecting Your Data Supply Chain with DTO Technology

In today’s rapidly evolving global marketplace, organizations are increasingly relying on third-party vendors, suppliers, and contractors to manage critical services and functions. These partnerships are essential for streamlining operations, reducing costs, accelerating time-to-market, and achieving a sustainable competitive edge. However, as beneficial as outsourcing may be, it comes with its…

#business#Business Intelligence#cyber threat#cybersecurity#data governance#data privacy#data protection#digital twin#digital twin companies#digital twin for manufacturing#digital twin industry 4.0#Digital Twin of an Organization#digital twin of an organization dto#digital twin software#Digital Twin Technology#digital twinning#DTO#Information Technology#Security#technology#third-party risk management

0 notes

Text

The concerted effort of maintaining application resilience

New Post has been published on https://thedigitalinsider.com/the-concerted-effort-of-maintaining-application-resilience/

Back when most business applications were monolithic, ensuring their resilience was by no means easy. But given the way apps run in 2025 and what’s expected of them, maintaining monolithic apps was arguably simpler.

Back then, IT staff had a finite set of criteria on which to improve an application’s resilience, and the rate of change to the application and its infrastructure was a great deal slower. Today, the demands we place on apps are different, more numerous, and subject to a faster rate of change.

There are also just more applications. According to IDC, there are likely to be a billion more in production by 2028 – and many of these will be running on cloud-native code and mixed infrastructure. With greater technological complexity and higher expectations of responsiveness and quality, ensuring resilience has become a far more complex ask.

Multi-dimensional elements determine app resilience, dimensions that fall into different areas of responsibility in the modern enterprise: Code quality falls to development teams; infrastructure might be down to systems administrators or DevOps; compliance and data governance officers have their own needs and stipulations, as do cybersecurity professionals, storage engineers, database administrators, and a dozen more besides.

With multiple tools designed to ensure the resilience of an app – with definitions of what constitutes resilience depending on who’s asking – it’s small wonder that there are typically dozens of tools that work to improve and maintain resilience in play at any one time in the modern enterprise.

Determining resilience across the whole enterprise’s portfolio, therefore, is near-impossible. Monitoring software is silo-ed, and there’s no single pane of reference.

IBM’s Concert Resilience Posture simplifies the complexities of multiple dashboards, normalizes the different quality judgments, breaks down data from different silos, and unifies the disparate purposes of monitoring and remediation tools in play.

Speaking ahead of TechEx North America (4-5 June, Santa Clara Convention Center), Jennifer Fitzgerald, Product Management Director, Observability, at IBM, took us through the Concert Resilience Posture solution, its aims, and its ethos. On the latter, she differentiates it from other tools:

“Everything we’re doing is grounded in applications – the health and performance of the applications and reducing risk factors for the application.”

The app-centric approach means the bringing together of the different metrics in the context of desired business outcomes, answering questions that matter to an organization’s stakeholders, like:

Will every application scale?

What effects have code changes had?

Are we over- or under-resourcing any element of any application?

Is infrastructure supporting or hindering application deployment?

Are we safe and in line with data governance policies?

What experience are we giving our customers?

Jennifer says IBM Concert Resilience Posture is, “a new way to think about resilience – to move it from a manual stitching [of other tools] or a ton of different dashboards.” Although the definition of resilience can be ephemeral, according to which criteria are in play, Jennifer says it’s comprised, at its core, of eight non-functional requirements (NFRs):

Observability

Availability

Maintainability

Recoverability

Scalability

Usability

Integrity

Security

NFRs are important everywhere in the organization, and there are perhaps only two or three that are the sole remit of one department – security falls to the CISO, for example. But ensuring the best quality of resilience in all of the above is critically important right across the enterprise. It’s a shared responsibility for maintaining excellence in performance, potential, and safety.

What IBM Concert Resilience Posture gives organizations, different from what’s offered by a collection of disparate tools and beyond the single-pane-of-glass paradigm, is proactivity. Proactive resilience comes from its ability to give a resilience score, based on multiple metrics, with a score determined by the many dozens of data points in each NFR. Companies can see their overall or per-app scores drift as changes are made – to the infrastructure, to code, to the portfolio of applications in production, and so on.
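To make the idea of a score that drifts as changes are made more concrete, here is a minimal Python sketch. It assumes each data point is already scaled to 0-100 and that the overall score is a plain average of per-NFR averages; the article does not say how IBM Concert actually weights or combines its data points, so the formula and the sample numbers are illustrative assumptions only.

```python
from statistics import mean

# The eight NFRs named in the article.
NFRS = [
    "observability", "availability", "maintainability", "recoverability",
    "scalability", "usability", "integrity", "security",
]


def nfr_score(data_points):
    """Average the data points (each assumed to be 0-100) for one NFR."""
    return mean(data_points)


def resilience_score(metrics):
    """Average the per-NFR scores; NFRs with no data points are skipped."""
    scores = [nfr_score(metrics[name]) for name in NFRS if metrics.get(name)]
    return round(mean(scores), 1)


# Hypothetical measurements before and after a change that hurts availability.
before = {name: [80, 90] for name in NFRS}
after = dict(before, availability=[60, 70])

drift = resilience_score(after) - resilience_score(before)
```

Comparing the score before and after a deployment gives the kind of drift signal the paragraph describes. A production system would likely weight NFRs differently per application rather than averaging them equally.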

“The thought around resilience is that we as humans aren’t perfect. We’re going to make mistakes. But how do you come back? You want your applications to be fully, highly performant, always optimal, with the required uptime. But issues are going to happen. A code change is introduced that breaks something, or there’s more demand on a certain area that slows down performance. And so the application resilience we’re looking at is all around the ability of systems to withstand and recover quickly from disruptions, failures, spikes in demand, [and] unexpected events,” she says.

IBM’s acquisition history points to some of the complementary elements of the Concert Resilience Posture solution – Instana for full-stack observability, Turbonomic for resource optimization, for example. But the whole is greater than the sum of the parts. There’s an AI-powered continuous assessment of all elements that make up an organization’s resilience, so there’s one place where decision-makers and IT teams can assess, manage, and configure the full stack’s resilience profile.

The IBM portfolio of resilience-focused solutions helps teams see when and why loads change and therefore where resources are wasted. It’s possible to ensure that necessary resources are allocated only when needed, and systems automatically scale back when they’re not. That sort of business- and cost-centric capability is at the heart of app-centric resilience, and means that a company is always optimizing its resources.

Overarching all aspects of app performance and resilience is the element of cost. Throwing extra resources at an under-performing application (or its supporting infrastructure) isn’t a viable solution in most organizations. With IBM, organizations get the ability to scale and grow, to add or iterate apps safely, without necessarily having to invest in new provisioning, either in the cloud or on-premises. Plus, they can see how any changes impact resilience. It’s making best use of what’s available, and winning back capacity – all while getting the best performance, responsiveness, reliability, and uptime across the enterprise’s application portfolio.

Jennifer says, “There’s a lot of different things that can impact resilience and that’s why it’s been so difficult to measure. An application has so many different layers underneath, even in just its resources and how it’s built. But then there’s the spider web of downstream impacts. A code change could impact multiple apps, or it could impact one piece of an app. What is the downstream impact of something going wrong? And that’s a big piece of what our tools are helping organizations with.”

You can read more about IBM’s work to make today and tomorrow’s applications resilient.

#2025#acquisition#ADD#ai#AI-powered#America#app#application deployment#application resilience#applications#approach#apps#assessment#billion#Business#business applications#change#CISO#Cloud#Cloud-Native#code#Companies#complexity#compliance#continuous#convention#cybersecurity#data#Data Governance#data pipeline

0 notes

Text

Stop Waste: Avoid Paying Twice for Spare Parts and Repeating RFx Procedures. Let Data Governance Drive Your Procurement Efficiency

Have you ever wondered how much your company loses by purchasing the same spare parts multiple times or issuing RFx requests to the same supplier repeatedly?

In sectors such as aerospace, defense, and manufacturing, these redundant procurement activities don’t just increase expenses—they also reduce efficiency and introduce avoidable risks.

But the impact doesn’t end there:

Duplicate orders lead to unnecessary capital lock-up and poor inventory control.

Redundant RFx efforts cause wasted time, duplicated labor, and potential confusion for suppliers.

Insufficient visibility into your procurement data and processes can result in costly and time-consuming mistakes.

It’s time to eliminate inefficiencies and make your procurement process more streamlined.

How can you achieve this?

PiLog’s Data Governance solution helps you overcome these challenges by providing:

Centralized, real-time access to procurement data across all systems

Advanced data validation mechanisms that automatically identify duplicate or overlapping supply chain activities

Integrated governance frameworks and workflows that guarantee RFx requests are only sent when necessary—and directed to the right parties at the right time
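The duplicate-detection idea in the list above can be sketched in a few lines of Python. This is not PiLog's implementation; the field names (`order_id`, `part_number`, `supplier`) and the matching rule (same part and supplier after trimming and case-folding) are assumptions chosen for illustration.

```python
from collections import defaultdict


def find_duplicates(orders):
    """Return order IDs grouped by (part number, supplier) when a pair repeats."""
    seen = defaultdict(list)
    for order in orders:
        # Normalize both fields so formatting differences do not hide a duplicate.
        key = (
            order["part_number"].strip().upper(),
            order["supplier"].strip().lower(),
        )
        seen[key].append(order["order_id"])
    return {key: ids for key, ids in seen.items() if len(ids) > 1}


# Hypothetical purchase orders pulled from two procurement systems.
orders = [
    {"order_id": "PO-1", "part_number": "ab-100", "supplier": "Acme"},
    {"order_id": "PO-2", "part_number": "AB-100 ", "supplier": "acme"},
    {"order_id": "PO-3", "part_number": "CD-200", "supplier": "Globex"},
]
duplicates = find_duplicates(orders)
```

Here `PO-1` and `PO-2` are flagged as the same part from the same supplier despite the differences in case and spacing. The same grouping approach could be applied to RFx requests by keying on supplier and requested item.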

By utilizing AI-driven data governance combined with intelligent automation, PiLog empowers your team to focus on strategic priorities, reduce costs, and improve operational efficiency.

0 notes

Text

Data Governance in Power BI: Security, Sharing, and Compliance

In today’s data-driven business landscape, Power BI stands out as a powerful tool for transforming raw data into actionable insights. But as organizations scale their analytics capabilities, data governance becomes crucial to ensure data security, proper sharing, and compliance with regulatory standards.

In this article, we explore how Power BI supports effective data governance and how mastering these concepts through Power BI training can empower professionals to manage data responsibly and securely.

🔐 Security in Power BI

Data security is the backbone of any governance strategy. Power BI offers several robust features to keep data secure across all layers:

1. Row-Level Security (RLS)

With RLS, you define filters that restrict data at the row level, so users see only the rows they are authorized to view.

2. Microsoft Information Protection Integration

Power BI integrates with Microsoft’s sensitivity labels, allowing you to classify and protect sensitive information seamlessly.

3. Data Encryption

All data in Power BI is encrypted both at rest and in transit, using industry-standard encryption protocols.
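The row-level filtering idea described under RLS can be sketched conceptually in Python. This is only an illustration of the principle: in Power BI itself, RLS rules are written as DAX filter expressions attached to roles, not as Python. The role names, the `region` field, and the sample rows are hypothetical.

```python
# Hypothetical mapping of role name -> row filter.
# In Power BI these would be DAX filter expressions defined on roles.
ROLE_FILTERS = {
    "WestSales": lambda row: row["region"] == "West",
    "EastSales": lambda row: row["region"] == "East",
}


def visible_rows(rows, roles):
    """Return rows allowed by at least one of the user's roles.

    A user with no recognized role sees nothing (deny by default).
    """
    filters = [ROLE_FILTERS[r] for r in roles if r in ROLE_FILTERS]
    return [row for row in rows if any(f(row) for f in filters)]


# Hypothetical sample data.
sales = [
    {"region": "West", "amount": 120},
    {"region": "East", "amount": 80},
    {"region": "North", "amount": 50},
]

print(visible_rows(sales, ["WestSales"]))
# [{'region': 'West', 'amount': 120}]
print(visible_rows(sales, []))
# []
```

The deny-by-default choice is an assumption of this sketch: a user whose roles match no filter gets an empty result rather than the full table.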

🔗 Sharing and Collaboration

Collaboration is key in data analytics, but uncontrolled sharing can lead to data leaks. Power BI provides controlled sharing options:

1. Workspaces and App Sharing

Users can collaborate within defined workspaces and distribute dashboards or reports as apps to broader audiences with specific permissions.

2. Content Certification and Endorsement

Promote data trust by endorsing or certifying datasets, dashboards, and reports, helping users identify reliable sources.

3. Sharing Audits

Audit logs allow administrators to track how reports and dashboards are shared and accessed across the organization.

✅ Compliance and Auditing

To comply with regulations like GDPR, HIPAA, or ISO standards, Power BI includes:

1. Audit Logs and Activity Monitoring

Track user activities such as report views, exports, and data modifications for full traceability.

2. Data Retention Policies

Organizations can configure retention policies for datasets to meet specific regulatory requirements.

3. Service Trust Portal

Microsoft’s Service Trust Portal publishes audit reports and certification documentation for its cloud services, which organizations can reference when demonstrating that their Power BI environment meets global standards.

🎓 Why Learn Data Governance in Power BI?

Understanding data governance is not just for IT professionals. Business users, analysts, and developers can benefit from structured Power BI training that includes:

Hands-on experience with RLS and permission settings

Best practices for sharing content securely

Compliance tools and how to use them effectively

By enrolling in a Power BI training program, you’ll gain the knowledge to build secure and compliant dashboards that foster trust and transparency in your organization.

🙋♀️ Frequently Asked Questions (FAQs)

Q1. What is data governance in Power BI? Data governance in Power BI involves managing the availability, usability, integrity, and security of data used in reports and dashboards.

Q2. How do I ensure secure data sharing in Power BI? Use row-level security, control access through workspaces, and audit sharing activities to ensure secure collaboration.

Q3. Is Power BI compliant with GDPR and other standards? Power BI is built on Microsoft Azure and is covered by Microsoft’s compliance certifications, but your own compliance also depends on how your organization configures access, sharing, and retention.

Q4. Why is Power BI training important for governance? Training helps professionals understand and apply best practices for securing data, managing access, and ensuring compliance.

Q5. Where can I get the best Power BI training? We recommend enrolling in hands-on courses that cover real-world projects, governance tools, and industry use cases.

🌐 Ready to Master Power BI?

Unlock the full potential of Power BI with comprehensive training programs that cover everything from data modeling to governance best practices.

👉 Visit our website to learn more about Power BI training and certification opportunities.


Text

Improve data control and usage with our step-by-step data governance strategy guide. Build trust in your data across the enterprise.


Text

Why Using AI with Sensitive Business Data Can Be Risky

The AI Boom: Awesome… But Also Dangerous

AI is everywhere now, helping with customer service, writing emails, analyzing trends. It sounds like a dream come true. But if you’re using it with private or regulated data (like health info, financials, or client records), there’s a real risk of breaking the rules and getting into trouble. We’ve seen small businesses get excited about AI… until…

#AI#AI ethics#AI risks#AI security#artificial intelligence#business data#CCPA#compliance#cybersecurity#data governance#data privacy#GDPR#hipaa#informed consent#privacy by design#regulatory compliance#SMB


Text

Why Do AI Risk and Data Quality in Generative AI Matter to Boardrooms? | Tejasvi Addagada

Boardrooms are prioritizing AI risk, AI value, and the impact of data quality in generative AI on enterprise strategy. This shift reflects growing concerns about data governance, ethical AI use, and regulatory compliance. Learn how businesses are integrating AI responsibly to drive performance, manage risk, and maintain trust in digital operations.

#data risk management#data governance#data risk Management in Financial services#data risk management services#data quality generative ai#Tejasvi Addagada
