#data quality generative AI

Explore tagged Tumblr posts

Text

Boost Data Quality with Generative AI for Better Governance- Tejasvi Addagada

How Data Quality Generative AI enhances governance by automating data validation, reducing errors, and ensuring compliance. Learn proven strategies that integrate AI with privacy enhancing technologies and a strong data governance strategy. Improve accuracy, reduce risk, and unlock data's full potential with smart, scalable AI solutions for modern businesses.

#tejasvi addagada#Data Quality Generative AI#data governance strategy#privacy enhancing technologies#data risk management

0 notes

Text

Privacy Enhancing Technologies | Tejasvi Aadagada

Tejasvi Addagada is a thought leader in Privacy-Enhancing Technologies (PETs), driving innovation to safeguard data in an increasingly digital world. His expertise spans advanced cryptographic techniques, data anonymization, and secure multi-party computation, enabling organizations to harness data utility while maintaining strict privacy compliance. Through his strategic vision, Tejasvi is redefining how businesses implement PETs to mitigate risks, enhance data security, and build trust in digital ecosystems. His approach combines robust technical knowledge with a pragmatic understanding of regulatory landscapes, positioning him at the forefront of privacy technology advancements.

#privacy enhancing technologies#data quality generative ai#data management#data analytics#data strategy

1 note

·

View note

Text

When I say I hate generative AI, I don't mean, I hate gen AI unless it amuses me or is convenient for me. I don't mean, I hate gen AI only when it involves the art formats I participate in and everything else is fair game. I mean, I HATE GEN AI.

#rage rage#it's a waste of water#so much data used for llms is taken without permission#it's so much easier for misinfo to spread#artists are losing job opportunities#online communities are getting swamped with ai slop#and yet one of the most common criticisms i see of ai#is that the output is low quality#my dudes ai could generate an image of a bunch of perfectly rendered five fingered hands#and what i said above would still apply#if anything the low quality factor is a point in ai's favor

3 notes

·

View notes

Text

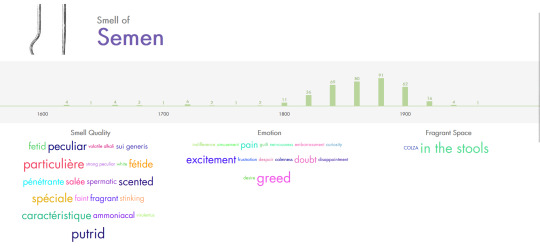

doing research

#you know the regular kind#also this database is so shit#it uses LLMs to scrape data from books to associate smells with feelings and qualities etc#99% of smells have the same vague descriptions like perfumed scented odorous fragrant#which is a shame bc the project in general seems really interesting#and some parts seem to be well done at least at a glance#it really just feels like they had to hop on the ai train to get funding

2 notes

·

View notes

Text

i am pretty excited for the miku nt update early access tomorrow. the demonstrations have sounded pretty solid so far and tbh i am super intrigued by the idea of hybrid concatenative+ai vocal synthesis, i wanna see what people doooo with it. show me it nowwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwww

#im assuming it'll be out sometime in japanese afternoon time. but i will be asleep so i have to wait until tomorrow <3#but im so intrigued....... synthv did a different thing a bajillion years ago where they like#trained ai voicebanks off of their concatenative data? it never went anywhere because of quality issues?#but i still think theres some potential in that. and i think nt2 might be the first commercial release thats#sample based with ai assistance? correct me if im wrong though i could be forgetting stuff#but i dunno.... im intrigued.... i would love to see another go at kaito in theory#BUT crypton is like afraid of his v1 hint of chest voice so i dunno how much id like the direction theyre going in#and that really is my biggest issue with later versions of kaito he's like all nasal#like the opposite issue genbu has LOL genbus all chest no head#(smacks phone against the pavement gif)#although all chest is easier to deal with because if i want a hiiiiint of a nasal-y heady tone i can fudge it with gender#plus he has those secret falsetto phonemes. the secret falsetto phonemes.#its harder to make a falsetto-y voice sound chestier with more warmth than the other way around#people can do pretty wonderful things with kaito v3 and sp though. but i still crave that v1 HJKFLDSJHds#but yeah i dunno! i imagine they wont bother with new NTs for the other guys after miku v6 but i would be curious#i am still not personally sold on v6 in general yet. but maybe vx will change that LOL#the future of vocal synthesizers is so exciting..... everything is happening all the time

6 notes

·

View notes

Text

If taxonomy alignment is so important to data quality, are we paying enough attention to it?

0 notes

Text

The Evolution of Generative AI in 2025: From Novelty to Necessity

New Post has been published on https://thedigitalinsider.com/the-evolution-of-generative-ai-in-2025-from-novelty-to-necessity/

The Evolution of Generative AI in 2025: From Novelty to Necessity

The year 2025 marks a pivotal moment in the journey of Generative AI (Gen AI). What began as a fascinating technological novelty has now evolved into a critical tool for businesses across various industries.

Generative AI: From Solution Searching for a Problem to Problem-Solving Powerhouse

The initial surge of Gen AI enthusiasm was driven by the raw novelty of interacting with large language models (LLMs), which are trained on vast public data sets. Businesses and individuals alike were rightfully captivated with the ability to type in natural language prompts and receive detailed, coherent responses from the public frontier models. The human-esque quality of the outputs from LLMs led many industries to charge headlong into projects with this new technology, often without a clear business problem to solve or any real KPI to measure success. While there have been some great value unlocks in the early days of Gen AI, it is a clear signal we are in an innovation (or hype) cycle when businesses abandon the practice of identifying a problem first, and then seeking a workable technology solution to solve it.

In 2025, we expect the pendulum to swing back. Organizations will look to Gen AI for business value by first identifying problems that the technology can address. There will surely be many more well funded science projects, and the first wave of Gen AI use cases for summarization, chatbots, content and code generation will continue to flourish, but executives will start holding AI projects accountable for ROI this year. The technology focus will also shift from public general-purpose language models that generate content to an ensemble of narrower models which can be controlled and continually trained on the distinct language of a business to solve real-world problems which impact the bottom line in a measurable way.

2025 will be the year AI moves to the core of the enterprise. Enterprise data is the path to unlock real value with AI, but the training data needed to build a transformational strategy is not on Wikipedia, and it never will be. It lives in contracts, customer and patient records, and in the messy unstructured interactions that often flow through the back office or live in boxes of paper.. Getting that data is complicated, and general purpose LLMs are a poor technology fit here, notwithstanding the privacy, security and data governance concerns. Enterprises will increasingly adopt RAG architectures, and small language models (SLMs) in private cloud settings, allowing them to leverage internal organizational data sets to build proprietary AI solutions with a portfolio of trainable models. Targeted SLMs can understand the specific language of a business and nuances of its data, and provide higher accuracy and transparency at a lower cost point – while staying in line with data privacy and security requirements.

The Critical Role of Data Scrubbing in AI Implementation

As AI initiatives proliferate, organizations must prioritize data quality. The first and most crucial step in implementing AI, whether using LLMs or SLMs, is to ensure that internal data is free from errors and inaccuracies. This process, known as “data scrubbing,” is essential for the curation of a clean data estate, which is the lynchpin for the success of AI projects.

Many organizations still rely on paper documents, which need to be digitized and cleaned for day to day business operations. Ideally, this data would flow into labeled training sets for an organization’s proprietary AI, but we are early days in seeing that happen. In fact, in a recent survey we conducted in collaboration with the Harris Poll, where we interviewed more than 500 IT decision-makers between August-September, found that 59% of organizations aren’t even using their entire data estate. The same report found that 63% of organizations agree that they have a lack of understanding of their own data and this is inhibiting their ability to maximize the potential of GenAI and similar technologies. Privacy, security and governance concerns are certainly obstacles, but accurate and clean data is critical, even slight training errors can lead to compounding issues which are challenging to unwind once an AI model gets it wrong. In 2025, data scrubbing and the pipelines to ensure data quality will become a critical investment area, ensuring that a new breed of enterprise AI systems can operate on reliable and accurate information.

The Expanding Impact of the CTO Role

The role of the Chief Technology Officer (CTO) has always been crucial, but its impact is set to expand tenfold in 2025. Drawing parallels to the “CMO era,” where customer experience under the Chief Marketing Officer was paramount, the coming years will be the “generation of the CTO.”

While the core responsibilities of the CTO remain unchanged, the influence of their decisions will be more significant than ever. Successful CTOs will need a deep understanding of how emerging technologies can reshape their organizations. They must also grasp how AI and the related modern technologies drive business transformation, not just efficiencies within the company’s four walls. The decisions made by CTOs in 2025 will determine the future trajectory of their organizations, making their role more impactful than ever.

The predictions for 2025 highlight a transformative year for Gen AI, data management, and the role of the CTO. As Gen AI moves from being a solution in search of a problem to a problem-solving powerhouse, the importance of data scrubbing, the value of enterprise data estates and the expanding impact of the CTO will shape the future of enterprises. Organizations that embrace these changes will be well-positioned to thrive in the evolving technological landscape.

#2025#ai#ai model#AI systems#ai use cases#Artificial Intelligence#Business#chatbots#Cloud#code#code generation#Collaboration#content#CTO#customer experience#data#Data Governance#Data Management#data privacy#data privacy and security#data quality#decision-makers#emerging technologies#enterprise#enterprise AI#Enterprises#Evolution#executives#focus#Future

0 notes

Text

What is AI-Ready Data? How to Get Your There?

Data powers AI systems, enabling them to generate insights, predict outcomes, and transform decision-making. However, AI’s impact hinges on the quality and readiness of the data it consumes. A recent Harvard Business Review report reveals a troubling trend: approximately 80% of AI projects fail, largely due to poor data quality, irrelevant data, and a lack of understanding of AI-specific data requirements.

As AI technologies are projected to contribute up to $15.7 trillion to the global economy by 2030, the emphasis on AI-ready data is more urgent than ever. Investing in data readiness is not merely technical; it’s a strategic priority that shapes AI’s effectiveness and a company’s competitive edge in today’s data-driven landscape.

(Source: Statista)

Achieving AI-ready data requires addressing identified gaps by building strong data management practices, prioritizing data quality enhancements, and using technology to streamline integration and processing. By proactively tackling these issues, organizations can significantly improve data readiness, minimize AI project risks, and unlock AI’s full potential to fuel innovation and growth.

In this article, we’ll explore what constitutes AI-ready data and why it is vital for effective AI deployment. We will also examine the primary obstacles to data readiness, the characteristics that define AI-ready data, and the practices for data preparation. Furthermore, well discuss how to align data with specific use-case requirements. By understanding these elements, businesses can ensure their data is not only AI-ready but optimized to deliver substantial value.

Key Takeaways:

AI-ready data is essential for maximizing the efficiency and impact of AI applications, as it ensures data quality, structure, and contextual relevance.

Achieving AI-ready data requires addressing data quality, completeness, and consistency, which ultimately enhances model accuracy and decision-making.

AI-ready data enables faster, more reliable AI deployment, reducing time-to-market and increasing operational agility across industries.

Building AI-ready data involves steps like cataloging relevant datasets, assessing data quality, consolidating data sources, and implementing governance frameworks.

AI-ready data aligns with future technologies like generative AI, positioning businesses to adapt to advancements and leverage scalable, next-generation solutions.

What is AI-Ready Data?

AI-ready data refers to data that is meticulously prepared, organized, and structured for optimized use in artificial intelligence applications. This concept goes beyond simply accumulating large data volumes; it demands data that is accurate, relevant, and formatted specifically for AI processes. With AI-ready data, every element is curated for compatibility with AI algorithms, ensuring data can be swiftly analyzed and interpreted.

High quality: AI-ready data is accurate, complete, and free from inconsistencies. These factors ensure that AI algorithms function without bias or error.

Relevant Structure: It is organized according to the AI model’s needs, ensuring seamless integration and enhancing processing efficiency.

Contextual Value: Data must provide contextual depth, allowing AI systems to extract and interpret meaningful insights tailored to specific use cases.

In essence, AI-ready data isn’t abundant, it’s purposefully refined to empower AI-driven solutions and insights.

Key Characteristics of AI-Ready Data

High Quality

For data to be truly AI-ready, it must demonstrate high quality across all metrics—accuracy, consistency, and reliability. High-quality data minimizes risks, such as incorrect insights or inaccurate predictions, by removing errors and redundancies. When data is meticulously validated and free from inconsistencies, AI models can perform without the setbacks caused by “noisy” or flawed data. This ensures AI algorithms work with precise inputs, producing trustworthy results that bolster strategic decision-making.

Structure Format

While AI systems can process unstructured data (e.g., text, images, videos), structured data vastly improves processing speed and accuracy. Organized in databases or tables, structured data is easier to search, query, and analyze, significantly reducing the computational burden on AI systems. With AI-ready data in structured form, models can perform complex operations and deliver insights faster, supporting agile and efficient AI applications. For instance, structured financial or operational data enables rapid trend analysis, fueling responsive decision-making processes.

Comprehensive Coverage

AI-ready data must cover a complete and diverse spectrum of relevant variables. This diversity helps AI algorithms account for different scenarios and real-world complexities, enhancing the model’s ability to make accurate predictions.

For example, an AI model predicting weather patterns would benefit from comprehensive data, including temperature, humidity, wind speed, and historical patterns. With such diversity, the AI model can better understand patterns, make reliable predictions, and adapt to new situations, boosting overall decision quality.

Timeline and Relevance

For data to maintain its AI readiness, it must be current and pertinent to the task. Outdated information can lead AI models to make erroneous predictions or irrelevant decisions, especially in dynamic fields like finance or public health. AI-ready data integrates recent updates and aligns closely with the model’s goals, ensuring that insights are grounded in present-day realities. For instance, AI systems for fraud detection rely on the latest data patterns to identify suspicious activities effectively, leveraging timely insights to stay a step ahead of evolving threats.

Data Integrity and Security

Security and integrity are foundational to trustworthy AI-ready data. Data must remain intact and safe from breaches to preserve its authenticity and reliability. With robust data integrity measures—like encryption, access controls, and validation protocols—AI-ready data can be protected from unauthorized alterations or leaks. This security not only preserves the quality of the AI model but also safeguards sensitive information, ensuring compliance with privacy standards. In healthcare, for instance, AI models analyzing patient data require stringent security to protect patient privacy and trust.

Key Drivers of AI-Ready Data

Understanding the drivers behind the demand for AI-ready data is essential. Organizations can harness the power of AI technologies better by focusing on these factors.

Vendor-Provided Models

Many AI models, especially in generative AI, come from external vendors. To fully unlock their potential, businesses must optimize their data. Pre-trained models thrive on high-quality, structured data. By aligning their data with these models’ requirements, organizations can maximize results and streamline AI integration. This compatibility ensures that AI-ready data empowers enterprises to achieve impactful outcomes, leveraging vendor expertise effectively.

Data Availability and Quality

Quality data is indispensable for effective AI performance. Many companies overlook data challenges unique to AI, such as bias and inconsistency. To succeed, organizations must ensure that AI-ready data is accurate, representative, and free of bias. Addressing these factors establishes a strong foundation, enabling reliable, trustworthy AI models that perform predictably across use cases.

Disruption of Traditional Data Management

AI’s rapid evolution disrupts conventional data management practices, pushing for dynamic, innovative solutions. Advanced strategies like data fabrics and augmented data management are becoming critical for optimizing AI-ready data. Techniques like knowledge graphs enhance data context, integration, and retrieval, making AI models smarter. This shift reflects a growing need for data management innovations that fuel efficient, AI-driven insights.

Bias and Hallucination Mitigation

New solutions tackle AI-specific challenges, such as bias and hallucination. Effective data management structures and prepares AI-ready data to minimize these issues. By implementing strong data governance and quality control, companies can reduce model inaccuracies and biases. This proactive approach fosters more reliable AI models, ensuring that decisions remain unbiased and data-driven.

Integration of Structured and Unstructured Data

Generative AI blurs the line between structured and unstructured data. Managing diverse data formats is crucial for leveraging generative AI’s potential. Organizations need strategies to handle and merge various data types, from text to video. Effective integration enables AI-ready data to support complex AI functionalities, unlocking powerful insights across multiple formats.

5 Steps to AI-Ready Data

The ideal starting point for public-sector agencies to advance in AI is to establish a mission-focused data strategy. By directing resources to feasible, high-impact use cases, agencies can streamline their focus to fewer datasets. This targeted approach allows them to prioritize impact over perfection, accelerating AI efforts.

While identifying these use cases, agencies should verify the availability of essential data sources. Building familiarity with these sources over time fosters expertise. Proper planning can also support bundling related use cases, maximizing resource efficiency by reducing the time needed to implement use cases. Concentrating efforts on mission-driven, high-impact use cases strengthens AI initiatives, with early wins promoting agency-wide support for further AI advancements.

Following these steps can ensure agencies select the right datasets that meet AI-ready data standards.

Step 1: Build a Use Case Specific Data Catalog

The chief data officer, chief information officer, or data domain owner should identify relevant datasets for prioritized use cases. Collaborating with business leaders, they can pinpoint dataset locations, owners, and access protocols. Tailoring data discovery to agency-specific systems and architectures is essential. Successful data catalog projects often include collaboration with system users and technical experts and leverage automated tools for efficient data discovery.

For instance, one federal agency conducted a digital assessment to identify datasets that drive operational efficiency and cost savings. This process enabled them to build a catalog accessible to data practitioners across the agency.

Step 2: Assess Data Quality and Completeness

AI success depends on high-quality, complete data for prioritized use cases. Agencies should thoroughly audit these sources to confirm their AI-ready data status. One national customs agency did this by selecting priority use cases and auditing related datasets. In the initial phases, they required less than 10% of their available data.

Agencies can adapt AI projects to maximize impact with existing data, refining approaches over time. For instance, a state-level agency improved performance by 1.5 to 1.8 times using available data and predictive analytics. These initial successes paved the way for data-sharing agreements, focusing investment on high-impact data sources.

Step 3: Aggregate Prioritized Data Sources

Selected datasets should be consolidated within a data lake, either existing or purpose-built on a new cloud-based platform. This lake serves analytics staff, business teams, clients, and contractors. For example, one civil engineering organization centralized procurement data from 23 resource planning systems onto a single cloud instance, granting relevant stakeholders streamlined access.

Step 4: Evaluate Data Fit

Agencies must evaluate AI-ready data for each use case based on data quantity, quality, and applicability. Fit-for-purpose data varies depending on specific use case requirements. Highly aggregated data, for example, may lack the granularity needed for individual-level insights but may still support community-level predictions.

Analytics teams can enhance fit by:

Selecting data relevant to use cases.

Developing a reusable data model with the necessary fields and tables.

Systematically assessing data quality to identify gaps.

Enriching the data model iteratively, adding parameters or incorporating third-party data.

A state agency, aiming to support care decisions for vulnerable populations, found their initial datasets incomplete and in poor formats. They improved quality through targeted investments, transforming the data for better model outputs.

Step 5: Governance and Execution

Establishing a governance framework is essential to secure AI-ready data and ensure quality, security, and metadata compliance. This framework doesn’t require exhaustive rules but should include data stewardship, quality standards, and access protocols across environments.

In many cases, existing data storage systems can meet basic security requirements. Agencies should assess additional security needs, adopting control standards such as those from the National Institute of Standards and Technology. For instance, one government agency facing complex security needs for over 150 datasets implemented a strategic data security framework. They simplified the architecture with a use case–level security roadmap and are now executing a long-term plan.

For public-sector success, governance and agile methods like DevOps should be core to AI initiatives. Moving away from traditional development models is crucial, as risk-averse cultures and longstanding policies can slow progress. However, this transition is vital to AI-ready data initiatives, enabling real-time improvements and driving impactful outcomes.

Challenges to AI-Ready Data

While AI-ready data promises transformative potential, achieving it poses significant challenges. Organizations must recognize and tackle these obstacles to build a strong, reliable data foundation.

Data Silos

Data silos arise when departments store data separately, creating isolated information pockets. This fragmentation hinders the accessibility, analysis, and usability essential for AI-ready data.

Impact: AI models thrive on a comprehensive data view to identify patterns and make predictions. Silos restrict data scope, resulting in biased models and unreliable outputs.

Solution: Build a centralized data repository, such as a data lake, to aggregate data from diverse sources. Implement cross-functional data integration to dismantle silos, ensuring AI-ready data flows seamlessly across the organization.

Data Inconsistency

Variations in data formats, terms, and values across sources disrupt AI processing, creating confusion and inefficiencies.

Impact: Inconsistent data introduces errors and biases, compromising AI reliability. For example, a model with inconsistent gender markers like “M” and “Male” may yield flawed insights.

Solution: Establish standardized data formats and definitions. Employ data quality checks and validation protocols to catch inconsistencies. Utilize governance frameworks to uphold consistency across the AI-ready data ecosystem.

Data Quality

Poor data quality—like missing values or errors—undermines the accuracy and reliability of AI models.

Impact: Unreliable data leads to skewed predictions and biased models. For instance, missing income data weakens a model predicting purchasing patterns, impacting its effectiveness.

Solution: Use data cleaning and preprocessing to resolve quality issues. Apply imputation techniques for missing values and data enrichment to fill gaps, reinforcing AI-ready data integrity.

Data Privacy and Security

Ensuring data privacy and security is crucial, especially when managing sensitive information under strict regulations.

Impact: Breaches and privacy lapses damage reputations and erodes trust, while legal penalties strain resources. AI-ready data demands rigorous security to safeguard sensitive information.

Solution: Implement encryption, access controls, and data masking to secure AI-ready data. Adopt privacy-enhancing practices, such as differential privacy and federated learning, for safer model training.

You can read about the pillars of AI security.

Skill Shortages

Developing and maintaining an AI-ready data infrastructure requires specialized skills in data science, engineering, and AI.

Impact: Without skilled professionals, organizations struggle to govern data, manage quality, and design robust AI solutions, stalling progress toward AI readiness.

Solution: Invest in hiring and training for data science and engineering roles. Collaborate with external consultants or partner with AI and data management experts to bridge skill gaps.

Why is AI-Ready Data Important?

Accelerated AI Development

AI-ready data minimizes the time data scientists spend on data cleaning and preparation, shifting their focus to building and optimizing models. Traditional data preparation can be tedious and time-consuming, especially when data is unstructured or lacks consistency. With AI-ready data, data is pre-cleaned, labeled, and structured, allowing data scientists to jump straight into analysis. This efficiency translates into a quicker time-to-market, helping organizations keep pace in a rapidly evolving AI landscape where every minute counts.

Improved Model Accuracy

The accuracy of AI models hinges on the quality of the data they consume. AI-ready data is not just clean; it’s relevant, complete, and up-to-date. This enhances model precision, as high-quality data reduces biases and errors. For instance, if a retail company has AI-ready data on customer preferences, its models will generate more accurate recommendations, leading to higher customer satisfaction and loyalty. In essence, AI-ready data helps unlock better predictive accuracy, ensuring that organizations make smarter, data-driven decisions.

Streamlined MLOps for Consistent Performance

Machine Learning Operations (MLOps) ensure that AI models perform consistently from development to deployment. AI-ready data plays a vital role here by ensuring that both historical data (used for training) and real-time data (used in production) are aligned and in sync. This consistency supports smoother transitions between training and deployment phases, reducing model degradation over time. Streamlined MLOps mean fewer interruptions in production environments, helping organizations implement AI faster, and ensuring that models remain robust and reliable in the long term.

Cost Reduction Through Optimized Data Protection

AI projects can be costly, especially when data preparation takes a significant portion of a project’s budget. AI-ready data cuts down the need for extensive manual preparation, enabling engineers to invest time in high-value tasks. This shift not only reduces labor costs but also shortens project timelines, which is particularly advantageous in competitive industries where time-to-market can impact profitability. In essence, the more AI-ready a dataset, the less costly the AI project becomes, allowing for more scalable AI implementations.

Improved Data Governance and Compliance

In a regulatory environment, data governance is paramount, especially as AI decisions become more scrutinized. AI-ready data comes embedded with metadata and lineage information, ensuring that data’s origin, transformations, and usage are documented. This audit trail is crucial when explaining AI-driven decisions to stakeholders, including customers and regulators. Proper governance and transparency are not just compliance necessities—they build trust and enhance accountability, positioning the organization as a responsible AI user.

Future-Proofing for GenAI

With the rapid advancement in generative AI (GenAI), organizations need to prepare now to capitalize on future AI applications. Forward-thinking companies are already developing GenAI-ready data capabilities, setting the groundwork for rapid adoption of new AI technologies.

AI-ready data ensures that, as the AI landscape evolves, the organization’s data is compatible with new AI models, reducing rework and accelerating adoption timelines. This preparation creates a foundation for scalability and adaptability, enabling companies to lead rather than follow in AI evolution.

Reducing Data Preparation Time for Data Scientists

It’s estimated that data scientists spend around 39% of their time preparing data, a staggering amount given their specialized skill sets. By investing in AI-ready data, companies can drastically reduce this figure, allowing data scientists to dedicate more energy to model building and optimization. When data is already clean, organized, and ready to use, data scientists can direct their expertise toward advancing AI’s strategic goals, accelerating innovation, and increasing overall productivity.

Conclusion

In the data-driven landscape, preparing AI-ready data is essential for any organization aiming to leverage artificial intelligence effectively. AI-ready data is not just about volume; it’s about curating data that is accurate, well-structured, secure, and highly relevant to specific business objectives. High-quality data enhances the predictive accuracy of AI models, ensuring reliable insights that inform strategic decisions. By investing in robust data preparation processes, organizations can overcome common AI challenges like biases, errors, and data silos, which often lead to failed AI projects.

Moreover, AI-ready data minimizes the time data scientists spend on tedious data preparation, enabling them to focus on building and refining models that drive innovation. For businesses, this means faster time-to-market, reduced operational costs, and improved adaptability to market changes. Effective data governance and security measures embedded within AI-ready data also foster trust, allowing organizations to meet regulatory standards and protect sensitive information.

As AI technology continues to advance, having a foundation of AI-ready data is crucial for scalability and flexibility. This preparation not only ensures that current AI applications perform optimally but also positions the organization to quickly adopt emerging AI innovations, such as generative AI, without extensive rework. In short, prioritizing AI-ready data today builds resilience and agility, paving the way for sustained growth and a competitive edge in the future.

Source URL: https://www.techaheadcorp.com/blog/what-is-ai-ready-data-how-to-get-your-there/

0 notes

Text

AI Consulting Business in Construction: Transforming the Industry

The construction industry is experiencing a profound transformation due to the integration of artificial intelligence (AI). The AI consulting business is at the forefront of this change, guiding construction firms in optimizing operations, enhancing safety, and improving project outcomes. This article explores various applications of AI in construction, supported by examples and statistics that…

#AI Consulting Business#AI in Construction#AI Technologies#artificial intelligence#Big Data Analytics#Construction Automation#construction efficiency#construction industry#Construction Safety#construction sustainability#Data Science#Generative Design#IoT Technologies#Labor Optimization#Machine Learning#Predictive Analytics#project management#quality control#Robotics#Safety Monitoring

0 notes

Text

Generative AI | High-Quality Human Expert Labeling | Apex Data Sciences

Apex Data Sciences combines cutting-edge generative AI with RLHF for superior data labeling solutions. Get high-quality labeled data for your AI projects.

#GenerativeAI#AIDataLabeling#HumanExpertLabeling#High-Quality Data Labeling#Apex Data Sciences#Machine Learning Data Annotation#AI Training Data#Data Labeling Services#Expert Data Annotation#Quality AI Data#Generative AI Data Labeling Services#High-Quality Human Expert Data Labeling#Best AI Data Annotation Companies#Reliable Data Labeling for Machine Learning#AI Training Data Labeling Experts#Accurate Data Labeling for AI#Professional Data Annotation Services#Custom Data Labeling Solutions#Data Labeling for AI and ML#Apex Data Sciences Labeling Services

1 note

·

View note

Note

You’ve probably been asked this before, but do you have a specific view on ai-generated art. I’m doing a school project on artificial intelligence and if it’s okay, i would like to cite you

I mean, you're welcome to cite me if you like. I recently wrote a post under a reblog about AI, and I did a video about it a while back, before the full scale of AI hype had really started rolling over the Internet - I don't 100% agree with all my arguments from that video anymore, but you can cite it if you please.

In short, I think generative AI art

Is art, real art, and it's silly to argue otherwise, the question is what KIND of art it is and what that art DOES in the world. Generally, it is boring and bland art which makes the world a more stressful, unpleasant and miserable place to be.

AI generated art is structurally and inherently limited by its nature. It is by necessity averages generated from data-sets, and so it inherits EVERY bias of its training data and EVERY bias of its training data validators and creators. It naturally tends towards the lowest common denominator in all areas, and it is structurally biased towards reinforcing and reaffirming the status quo of everything it is turned to.

It tends to be all surface, no substance. As in, it carries the superficial aesthetic of very high-quality rendering, but only insofar as it reproduces whatever signifiers of "quality" are most prized in its weighted training data. It cannot understand the structures and principles of what it is creating. Ask it for a horse and it does not know what a "horse" is, all it knows is what parts of it training data are tagged as "horse" and which general data patterns are likely to lead an observer to identify its output also as "horse." People sometimes describe this limitation as "a lack of soul" but it's perhaps more useful to think of it as a lack of comprehension.

Due to this lack of comprehension, AI art cannot communicate anything - or rather, the output tends to attempt to communicate everything, at random, all at once, and it's the visual equivalent of a kind of white noise. It lacks focus.

Human operators of AI generative tools can imbue communicative meaning into the outputs, and whip the models towards some sort of focus, because humans can do that with literally anything they turn their directed attention towards. Human beings can make art with paint spatters and bits of gum stuck under tennis shoes, of course a dedicated human putting tons of time into a process of trial and error can produce something meaningful with genAI tools.

The nature of genAI as a tool of creation is uniquely limited and uniquely constrained, a genAI tool can only ever output some mixture of whatever is in its training data (and what's in its training data is biased by the data that its creators valued enough to include), and it can only ever output that mixture according to the weights and biases of its programming and data set, which is fully within the control of whoever created the tool in the first place. Consequently, genAI is a tool whose full creative capacity is always, always, always going to be owned by corporations, the only entities with the resources and capacity to produce the most powerful models. And those models, thus, will always only create according to corporate interest. An individual human can use a pencil to draw whatever the hell they want, but an individual human can never use Midjourney to create anything except that which Midjourney allows them to create. GenAI art is thus limited not only by its mathematical tendency to bias the lowest common denominator, but also by an ideological bias inherited from whoever holds the leash on its creation. The necessary decision of which data gets included in a training set vs which data gets left out will, always and forever, impose de facto censorship on what a model is capable of expressing, and the power to make that decision is never in the hands of the artist attempting to use the tool.

tl;dr genAI art has a tendency to produce ideologically limited and intrinsically censored outputs, while defaulting to lowest common denominators that reproduce and reinforce status quos.

... on top of which its promulgation is an explicit plot by oligarchic industry to drive millions of people deeper into poverty and collapse wages in order to further concentrate wealth in the hands of the 0.01%. But that's just a bonus reason to dislike it.

2K notes

·

View notes

Text

Enhance Data Quality with Generative AI- Tejasvi Addagada

How generative AI can enhance data quality by identifying patterns, correcting inconsistencies, and improving data reliability. In this article, Tejasvi Addagada explores the role of generative AI in modern data management, privacy-enhancing technologies, and frameworks that ensure accurate, secure, and actionable data. Learn how businesses can reduce risk, boost compliance, and unlock value through smarter data strategies.

#tejasvi addagada#data quality generative AI#data risk management#data management books#data governance strategy#Tejasvi Addagada

0 notes

Text

Not certain if this has already been posted about here, but iNaturalist recently uploaded a blog post stating that they had received a grant from Google to incorporate new forms of generative AI into their 'computer vision' model.

I'm sure I don't need to tell most of you why this is a horrible idea, that does away with much of the trust gained by the thus far great service that is iNaturalist. But, to elaborate on my point, to collaborate with Google on tools such as these is a slap in the face to much of the userbase, including a multitude of biological experts and conservationists across the globe.

They claim that they will work hard to make sure that the identification information provided by the AI tools is of the highest quality, which I do not entirely doubt from this team. I would hope that there is a thorough vetting process in place for this information (Though, if you need people to vet the information, what's the point of the generative AI over a simple wiki of identification criteria). Nonetheless, if you've seen Google's (or any other tech company's) work in this field in the past, which you likely have, you will know that these tools are not ready to explain the nuances of species identification, as they continue to provide heavy amounts of complete misinformation on a daily basis. Users may be able to provide feedback, but should a casual user look to the AI for an explanation, many would not realize if what they are being told is wrong.

Furthermore, while the data is not entirely my concern, as the service has been using our data for years to train its 'computer vision' model into what it is today, and they claim to have ways to credit people in place, it does make it quite concerning that Google is involved in this deal. I can't say for certain that they will do anything more with the data given, but Google has proven time and again to be highly untrustworthy as a company.

Though, that is something I'm less concerned by than I am by the fact that a non-profit so dedicated to the biodiversity of the earth and the naturalists on it would even dare lock in a deal of this nature. Not only making a deal to create yet another shoehorned misinformation machine, that which has been proven to use more unclean energy and water (among other things) than it's worth for each unsatisfactory and untrustworthy search answer, but doing so with one of the greediest companies on the face of the earth, a beacon of smog shining in colors antithetical to the iNaturalist mission statement. It's a disgrace.

In conclusion, I want to believe in the good of iNaturalist. The point stands, though, that to do this is a step in the worst possible direction. Especially when they, for all intents and purposes, already had a system that works! With their 'computer vision' model providing basic suggestions (if not always accurate in and of itself), and user suggested IDs providing further details and corrections where needed.

If you're an iNaturalist user who stands in opposition to this decision, leave a comment on this blog post, and maybe we can get this overturned.

[Note: Yes, I am aware there is good AI used in science, this is generative AI, which is a different thing entirely. Also, if you come onto this post with strawmen or irrelevant edge-cases I will wring your neck.]

2K notes

·

View notes

Text

#machine learning#cogito ai#data annotation#data quality#cogitotech usa#content#generative ai#llms#RLHF

0 notes

Text

Google is now the only search engine that can surface results from Reddit, making one of the web’s most valuable repositories of user generated content exclusive to the internet’s already dominant search engine. If you use Bing, DuckDuckGo, Mojeek, Qwant or any other alternative search engine that doesn’t rely on Google’s indexing and search Reddit by using “site:reddit.com,” you will not see any results from the last week. DuckDuckGo is currently turning up seven links when searching Reddit, but provides no data on where the links go or why, instead only saying that “We would like to show you a description here but the site won't allow us.” Older results will still show up, but these search engines are no longer able to “crawl” Reddit, meaning that Google is the only search engine that will turn up results from Reddit going forward. Searching for Reddit still works on Kagi, an independent, paid search engine that buys part of its search index from Google. The news shows how Google’s near monopoly on search is now actively hindering other companies’ ability to compete at a time when Google is facing increasing criticism over the quality of its search results. And while neither Reddit or Google responded to a request for comment, it appears that the exclusion of other search engines is the result of a multi-million dollar deal that gives Google the right to scrape Reddit for data to train its AI products.

July 24 2024

2K notes

·

View notes

Text

See, here's the thing about generative AI:

I will always, always prefer to read the beginner works of a young writer that could use some editing advice, over anything a predictive text generator can spit out no matter how high of a "quality" it spits out.

I will always be more interested in reading a fanfiction or original story written by a kid who doesn't know you're meant to separate different dialogues into their own paragraphs, over anything a generative ai creates.

I will happily read a story where dialogue isn't always capitalized and has some grammar mistakes that was written by a person over anything a computer compiles.

Why?

Because *why should I care about something someone didn't even care enough to write themselves?*

Humans have been storytellers since the dawn of humankind, and while it presents itself in different ways, almost everyone has stories they want to tell, and it takes effort and care and a desire to create to put pen to paper or fingers to keyboard or speech to text to actually start writing that story out, let alone share it for others to read!

If a kid writes a story where all the dialogue is crammed in the same paragraph and missing some punctuation, it's because they're still learning the ropes and are eager to share their imagination with the world even if its not perfect.

If someone gets generative AI to make an entire novel for them, copying and pasting chunks of text into a document as it generates them, then markets that "novel" as being written by a real human person and recruits a bunch of people to leave fake good reviews on the work praising the quality of the book to trick real humans into thinking they're getting a legitimate novel.... Tell me, why on earth would anyone actually want to read that "novel" outside of morbid curiosity?

There's a few people you'll see in the anti-ai tags complaining about "people being dangerously close to saying art is a unique characteristic of the divine human soul" and like...

... Super dramatic wording there to make people sound ridiculous, but yeah, actually, people enjoy art made by humans because humans who make art are sharing their passion with others.

People enjoy art made by animals because it is fascinating and fun to find patterns in the paint left by paw prints or the movements of an elephants trunk.

Before Generative AI became the officially sanctioned "Plagiarism Machine for Billionaires to Avoid Paying Artists while Literally Stealing all those artists works" people enjoyed random computer-generated art because, like animals, it is fascinating and fun to see something so different and alien create something that we can find meaning in.

But now, when Generative AI spits out a work that at first appears to be a veritable masterpiece of art depicting a winged Valkyrie plunging from the skies with a spear held aloft, you know that anything you find beautiful or agreeable in this visual media has been copied from an actual human artist who did not consent or doesn't even know that their art has been fed into the Plagiarism Machine.

Now, when Generative AI spits out a written work featuring fandom-made tropes and concepts like Alpha Beta Omega dyanamics, you know that you favorite fanfiction website(s) have probably all been scraped and that the unpaid labours of passion by millions of people, including minors, have been scraped by the Plagiarism Machine and can now be used to make money for anyone with the time and patience to sit and have the Plagarism Machine generate stories a chunk at a time and then go on to sell those stories to anyone unfortunate enough to fall for the scam,

all while you have no way to remove your works from the existing training data and no way to stop any future works you post be put in, either.

Generative AI wouldn't be a problem if it was exclusively trained on Public Domain works for each country and if it was freely available to anyone in that country (since different countries have different copyright laws)

But its not.

Because Generative AI is made by billionaires who are going around saying "if you posted it on the Internet at any point, it is fair game for us to take and profit off," and anyone looking to make a quick buck can start churning out stolen slop and marketing it online on trusted retailers, including generating extremely dangerous books like foraging guides or how to combine cleaning chemicals for a spotless home, etc.

Generative AI is nothing but the works of actual humans stolen by giant corporations looking for profit, even works that the original creators can't even make money off of themselves, like fanfiction or fanart.

And I will always, always prefer to read "fanfiction written by a 13 year old" over "stolen and mashed together works from Predictive Text with a scifi name slapped on it", because at least the fanfiction by a kid actually has *passion and drive* behind its creation.

405 notes

·

View notes