#Datasal


Text

Sunday 1 October Mixtape 379 “Analogic Surfing EXCLUSIVE”

Retro Space Electronic Idm Wednesdays, Fridays & Sundays. Support the artists and labels. Don't forget to tip so future shows can bloom.

Jumble Hole Clough-Surfing the Sargasso Sea 00:31

Oberu-Analogic 03:35

London Clay-Presence Unknown 05:56

Lars Leonhard-Raytracing 09:49

Higher Intelligence Agency-Delta 16:31

Carbon Based Lifeforms, Karin My-Bloom, Pt. 1 22:34

John Scott Shepherd-Trans Space 27:32

Cate Brooks-Flowstate 30:40

Datasal-Besök 34:39

Sordid Sound System-Sharawadji 41:03

The Irresistible Force-Blue My Mind 46:50

The British Stereo Collective-Mystery Fields 54:00

Cartas de Japón-Nueva Atlantis 55:41

Dohnavùr-The Kindness Of Others - Concretism Remix 1:04:06

Violet Mist-Celestial Drift 1:07:20

S U R V I V E-Hourglass 1:10:22

Runningonair-Passage Of Days 1:17:26

Metamatics-Jakemond 1:26:52

Sick Robot-1980 1:32:12

Curved Light-VIII (Lapis) 1:38:14

James Bernard-End of an Era (Comit Remix) 1:45:55

Michael Brückner-A Secret (Part 3) 1:51:12

Mioclono, John Talabot, Velmondo-Myoclonic Sequences 1:57:20

#Jumble Hole Clough#Woodford Halse#Oberu#London Clay#Castles in Space Subscription Library#Castles In Space#Lars Leonhard#Cyclical Dreams#Higher Intelligence Agency#Carbon Based Lifeforms#Karin My#John Scott Shepherd#Cate Brooks#Café Kaput#Datasal#Höga Nord Rekords#Sordid Sound System#Invisible Inc.#The Irresistible Force#Liquid Sound Design#The British Stereo Collective#Cartas de Japón#Dohnavùr#Concretism#Violet Mist#S U R V I V E#Runningonair#Metamatics#Hydrogen Dukebox Records#Sick Robot

2 notes


Text

Assassin's Creed Syndicate - Sanctity: Doña Aimée Isabelle Henriette Artois-Cotoner, La Condesa de Tortosa.

_______________________________________________

_______________________________________________

-Portrait of Lady Aimée Artois, 1860.-

Aimée Isabelle Henriette Cotoner (née Artois) was a French philanthropist, socialite, musician and fashion icon of the Spanish and French courts in the 19th century.

.

The youngest daughter of Frederic Alphonse D'Artois, Comte d'Eu, and his wife, Marie Johanna von Lamberg, she was the granddaughter of Charles Ferdinand D'Artois, Duke of Berry, and great-granddaughter of Charles X of France.

.

Born in 1830, the very year of the July Monarchy and of intense political tension in her country and her family. Although Aimée descended from an absolutist royal family, her father and mother were much more aligned with a constitutional monarchy, which eventually made them allies of the French and Austrian Brotherhoods of Assassins.

.

Born and raised in Eu, Normandy, she was kept away from the chaos of the court for most of her life. Receiving the best education her father could provide, she grew up to be a refined, eloquent and clever young woman, a lover of the arts in their many forms.

.

Despite her family's political connections to the Brotherhood, Aimée never showed interest in taking part in such business. She feared what the connection to this other secret society could bring to her loved ones (her family had once already been aligned with the French Rite of the Templar Order), and her worries tripled when her older sister, Félicité, married a Master Assassin of the Dutch Brotherhood and became an active ally to their cause.

.

She herself would delve deeper into this world after marrying Carlos Rafael Cotoner y Moncada, Conde de Tortosa. Although the marriage was arranged, love bloomed between the couple, who would go on to have five children, even if only two were born.

.

As the wife and mother of Spanish Master Assassins, her role in the Creed would grow stronger. The years spent among the Tenets would shape her world view into one closer to that of the Assassins; however, she never truly accepted or approved of her husband's and daughter's roles in this, for she always lived in fear of losing them in this secret war.

.

A loving mother, a caring wife, a noblewoman of the House of Bourbon, descendant of the very Capetian Dynasty, proud of her family and legacy... A woman who left her mark on history for her donations to charities and her work in the development of arts and music.

Hélix Data Base / Entry of the Countess of Tortosa

Art by @gabmik

#assassin's creed#assassin's creed: syndicate#assassin's creed oc#assassin's creed syndicate oc#my oc#Aimée Artois#Countess Aimée Isabelle Henriette Artois-Cotoner#Condesa de Tortosa#AC D'Artois Family#AC Cotoner Family#French Noblewoman OC#Victorian Era#French Dove 🕊️🤍#Friend's art#My AC OC#Friend's gift#Solange and Serena's mother#Desirée's younger sister#Carlos' wife#My beloved french lady <3#Go check Mika out everyone!!!#GO GIVE HER ART ALL THE LOVE SHE DESERVES 🫵🏽🫵🏽🫵🏽#Character Aesthetic#Aimée Aesthetic#OC database#fan helix datase#Assassin's Creed Sanctity#assassin's creed fanfiction#Assassin's Creeed fanfic#My AU

14 notes


Text

The blog post titled "Twitter Advanced Search: How To Utilize This Ultimate Search Tool?" on TrackMyHashtag.com offers a comprehensive guide to leveraging Twitter's Advanced Search feature for precise and efficient tweet discovery. It explains how to use various search filters to find specific tweets, accounts, or hashtags, making it an invaluable tool for digital marketers, researchers, and social media enthusiasts. The article provides step-by-step instructions for accessing and utilizing the Advanced Search on both web and mobile platforms, detailing how to filter tweets by words, phrases, accounts, dates, and more. Additionally, it highlights the benefits of using these advanced search techniques to monitor brand mentions, track campaign performance, and conduct competitor analysis. By mastering Twitter's Advanced Search, users can enhance their social media strategies and gain deeper insights into their target audience's behavior and preferences.

1 note


Text

Power Automate Web Scraping – A No-Code Approach to Data Extraction

Web scraping has become an essential tool for businesses looking to extract valuable insights from the internet. While traditional scraping methods require coding expertise, Power Automate web scraping offers a simple, no-code approach to automate data extraction efficiently. In this guide, we explore how Microsoft Power Automate streamlines web scraping and why it’s a game-changer for professionals and businesses alike.

What is Power Automate Web Scraping?

Power Automate is a Microsoft tool that automates repetitive tasks, including web scraping. With its built-in UI Flows and Desktop Flows, Power Automate allows users to extract data from web pages without writing complex scripts. Whether you need product pricing, stock updates, or real estate data, this solution simplifies web scraping for everyone.

How to Use Microsoft Power Automate for Web Scraping

Using Power Automate for web scraping involves creating an automated flow that navigates a webpage, extracts the required data, and saves it to a structured format like Excel or a database. Here’s a basic outline:

Set Up Power Automate – Install and configure Power Automate Desktop.

Create a New Desktop Flow – Use the Web Recorder feature to record interactions with the website.

Extract Data Elements – Select the specific text, tables, or images you need from the web page.

Save the Data – Export the scraped data into Excel, CSV, or a database for further analysis.

Schedule Automation – Run your web scraping flow automatically at set intervals.
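For readers curious about what such a flow does under the hood, the extract-and-save steps can be sketched in a few lines of code. This is plain Python using only the standard library, not Power Automate itself; the `TableExtractor` class and the sample `page` markup are hypothetical and exist only for illustration.

```python
import csv
import io
from html.parser import HTMLParser

class TableExtractor(HTMLParser):
    """Collect the text of every table cell, grouped by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th"):
            if not self.rows:          # tolerate a cell with no enclosing row
                self.rows.append([])
            self._in_cell = True
            self.rows[-1].append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.rows[-1][-1] += data.strip()

# Hypothetical page content; a real flow would load this from a website.
page = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Kettle</td><td>24.99</td></tr>
  <tr><td>Toaster</td><td>31.50</td></tr>
</table>
"""

parser = TableExtractor()
parser.feed(page)

# Save the extracted rows as CSV text (written to memory here, not to disk).
buffer = io.StringIO()
csv.writer(buffer, lineterminator="\n").writerows(parser.rows)
csv_text = buffer.getvalue()
```

The recorder in a desktop flow does the same two things visually: it picks out the elements to extract and writes them to a structured file.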

For a more detailed breakdown, visit our in-depth guide on web scraping using Microsoft Power Automate.

Why Choose Power Automate for Web Scraping?

1. No Coding Required

Power Automate’s drag-and-drop interface makes it accessible for non-developers.

2. Seamless Integration

It easily integrates with Microsoft applications like Excel, SharePoint, and Power BI.

3. Automated Workflows

You can set up scheduled scrapes to keep your datasets updated automatically.

4. Cost-Effective Solution

Compared to third-party scraping tools, Power Automate is a budget-friendly option.

Alternative Web Scraping Solutions

While Power Automate is an excellent choice for basic web scraping, businesses with advanced data needs may explore dedicated scraping solutions such as:

Blinkit Sales Dataset – Get valuable insights from Blinkit’s grocery sales data.

Flipkart Dataset – Extract product listings and pricing from Flipkart.

Car Extract – Scrape automobile data for market analysis.

Zillow Scraper – Automate real estate data extraction for property insights.

Final Thoughts

Power Automate web scraping provides a simple yet effective way to extract data without the complexities of coding. Whether you need structured data for market research, competitor analysis, or business intelligence, this tool can significantly improve your workflow. However, if you require more advanced scraping capabilities, dedicated web scraping services might be a better fit.

Need assistance with web scraping? Our experts at Actowiz Solutions can help! Explore our web scraping solutions to find the best fit for your needs.

#web scraping using Microsoft Power Automate#Blinkit Sales Datase#Flipkart Dataset#Car Extract#web scraping solutions

0 notes

Text

Introducing Data Reduction In Real Time For Edge Computing

What is Data reduction?

The process by which an organization aims to reduce the quantity of data it stores is known as data reduction.

In order to more effectively retain huge volumes of originally obtained data as reduced data, data reduction methods aim to eliminate the redundancy present in the original data set.

It should be emphasized right away that “data reduction” does not always mean that information is lost. In many cases, data reduction simply indicates that data is now being kept more intelligently, sometimes after optimization and then being reassembled with related data in a more useful arrangement.

Furthermore, data deduplication, the process of eliminating duplicate copies of the same data for the sake of streamlining, is not the same as data reduction. More precisely, data reduction accomplishes its objectives by combining elements of several distinct processes, including data consolidation and deduplication.

A more thorough analysis of the data

When discussing data in the context of data reduction, we often refer to it in its singular form rather than the more common plural form. Determining the precise physical size of individual data points is one facet of data reduction, for instance.

Data reduction initiatives involve a significant degree of data science. The material can be complicated and difficult to express succinctly, which is why the term interpretability was coined: it describes the capacity of a person of average intellect to comprehend a given machine learning model.

Because this data is being viewed from a near-microscopic perspective, it can be difficult to understand what some of these terms mean. In data reduction, we often talk about data in its most “micro” sense, although we typically describe data in its “macro” form. More precisely, most conversations on this subject require both macro-level and micro-level discussion.

Advantages of data reduction

A business usually sees significant financial benefits when it decreases the amount of data it is carrying, because using less storage space lowers storage expenses.

Other benefits of data reduction techniques include improved data efficiency. Once data reduction is accomplished, the resultant data may be used more easily by artificial intelligence (AI) techniques in a number of ways, such as complex data analytics applications that can significantly simplify decision-making processes.

Successful usage of storage virtualization, for instance, helps to coordinate server and desktop environments, increasing their overall dependability and efficiency.

Data reduction initiatives are crucial in data mining operations. Before being mined and used for data analysis, data must be as clean and well prepared as feasible.

Types of data reduction

Some strategies that businesses might use to reduce data include the following.

Dimensionality reduction

This whole idea is based on the concept of data dimensionality. The quantity of characteristics (or features) attributed to a single dataset is known as its dimensionality. There is a trade-off involved, though, in that the more dimensionality a dataset has, the more storage it requires. Additionally, data tends to be sparser the greater the dimensionality, which makes outlier analysis more difficult.

By reducing the “noise” in the data and facilitating improved data visualization, dimensionality reduction combats that. The wavelet transform technique, which aids in picture compression by preserving the relative distance between objects at different resolution levels, is a perfect illustration of dimensionality reduction.

Another potential data transformation that may be used in combination with machine learning is feature extraction, which converts the original data into numerical features. A large collection of variables is reduced to a smaller set while keeping most of the information from the large set. This is different from principal component analysis (PCA), another method of decreasing the dimensionality of large data sets.
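As a rough illustration of PCA, the sketch below reduces two-dimensional points to a single coordinate along their direction of greatest variance. It uses the closed-form angle for a 2x2 covariance matrix, so it only covers the two-dimensional case; the function names and the sample `points` are assumptions made for this example.

```python
import math

def pca_2d_to_1d(points):
    """Project 2-D points onto their first principal component."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Entries of the 2x2 covariance matrix.
    sxx = sum(cx * cx for cx, _ in centered) / n
    syy = sum(cy * cy for _, cy in centered) / n
    sxy = sum(cx * cy for cx, cy in centered) / n

    # Direction of largest variance (closed form for the 2x2 case).
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    axis = (math.cos(theta), math.sin(theta))

    # One score per point replaces the original pair of coordinates.
    scores = [cx * axis[0] + cy * axis[1] for cx, cy in centered]
    return scores, axis, (mean_x, mean_y)

def reconstruct(scores, axis, mean):
    """Map 1-D scores back to approximate 2-D points."""
    return [(mean[0] + s * axis[0], mean[1] + s * axis[1]) for s in scores]

points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
scores, axis, mean = pca_2d_to_1d(points)
approx = reconstruct(scores, axis, mean)
```

Storing `scores`, `axis` and `mean` takes roughly half the numbers of the original points, at the cost of a small reconstruction error when the points do not lie exactly on a line.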

Numerosity reduction

Choosing a smaller, less data-intensive format to describe data is the alternative approach. Numerosity reduction may be divided into two categories: parametric and non-parametric. Regression and other parametric techniques focus on model parameters rather than the actual data. In a similar vein, a log-linear model that emphasizes data subspaces may be used. On the other hand, non-parametric techniques (such as histograms, which illustrate the distribution of numerical data) do not depend on models in any manner.
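The two categories can be sketched side by side. In this minimal example, the parametric route keeps only the slope and intercept of a least-squares line, while the non-parametric route keeps a count per histogram bin. The helper names and the sample values are hypothetical.

```python
from collections import Counter

def fit_line(xs, ys):
    """Parametric reduction: keep only slope and intercept of a least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def histogram(values, bin_width):
    """Non-parametric reduction: keep only a count per bin (keyed by bin start)."""
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))

xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]
slope, intercept = fit_line(xs, ys)   # two numbers stand in for five points

ages = [21, 22, 25, 31, 34, 38, 39, 45]
age_hist = histogram(ages, bin_width=10)   # {20: 3, 30: 4, 40: 1}
```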

Data cube aggregation

Data may be stored visually using data cubes. The phrase “data cube” is almost misleading in its implied singularity, because it refers to a large, multidimensional cube made up of smaller, structured cuboids.

With regard to measures and dimensions, each cuboid represents a portion of the entire data contained within that data cube. Therefore, data cube aggregation is the process of combining data into a multidimensional cube visual shape, which minimizes data size by providing it with a special container designed for that use.
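A small sketch may make the cuboid idea more concrete. Assuming a hypothetical sales table with three dimensions (year, region, product) and one measure (units sold), each call to `aggregate` below builds one cuboid by summing the measure over the dimensions that are dropped.

```python
from collections import defaultdict

# Hypothetical fact rows: (year, region, product, units_sold)
sales = [
    (2023, "north", "widget", 10),
    (2023, "north", "gadget", 4),
    (2023, "south", "widget", 7),
    (2024, "north", "widget", 12),
    (2024, "south", "gadget", 5),
]
DIMENSIONS = ("year", "region", "product")

def aggregate(rows, keep):
    """Build one cuboid: sum the measure over every dimension not in `keep`."""
    idx = [DIMENSIONS.index(d) for d in keep]
    cuboid = defaultdict(int)
    for row in rows:
        key = tuple(row[i] for i in idx)
        cuboid[key] += row[-1]
    return dict(cuboid)

by_year_region = aggregate(sales, keep=("year", "region"))
by_year = aggregate(sales, keep=("year",))   # {(2023,): 21, (2024,): 17}
total = aggregate(sales, keep=())            # {(): 38}
```

Each coarser cuboid holds fewer cells than the one before it, which is where the reduction in data size comes from.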

Data discretization

Data discretization, which creates a linear collection of data values based on a specified set of intervals that each correspond to a given data value, is another technique used for data reduction.
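As a minimal sketch of the idea, the function below replaces each continuous value with the label of the interval it falls into. The cut points, labels and temperature readings are made up for illustration.

```python
from bisect import bisect_right

def discretize(values, edges, labels):
    """Map each value to the label of the interval it falls in.

    `edges` are the interior cut points in ascending order, so
    len(labels) must equal len(edges) + 1. A value equal to a cut
    point is placed in the interval that starts at that cut point.
    """
    if len(labels) != len(edges) + 1:
        raise ValueError("need exactly one more label than cut points")
    return [labels[bisect_right(edges, v)] for v in values]

temperatures = [3.5, 12.0, 18.4, 25.1, 31.7]
bands = discretize(
    temperatures,
    edges=[10, 20, 30],
    labels=["cold", "mild", "warm", "hot"],
)
# bands == ["cold", "mild", "mild", "warm", "hot"]
```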

Data compression

A variety of encoding techniques may be used to successfully compress data and restrict file size. Generally speaking, data compression methods are divided into two categories: lossless compression and lossy compression. With lossless compression, the whole original data may be recovered if necessary, while the data size is decreased using encoding methods and algorithms.

In contrast to lossless compression, lossy compression employs different techniques to compress data, and although the treated data may be valuable, it will not be an identical replica.
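The difference can be shown with Python's standard `zlib` module. In this sketch the lossless path restores the input byte for byte, while the lossy path is imitated by rounding the values before encoding, which is only a stand-in for real lossy codecs. The sensor `readings` are invented for the example.

```python
import zlib

readings = [20.134, 20.137, 20.131, 20.139, 20.135] * 200
raw = ",".join(f"{r:.3f}" for r in readings).encode("utf-8")

# Lossless: the decompressed bytes are identical to the input.
packed = zlib.compress(raw, level=9)
restored = zlib.decompress(packed)
assert restored == raw

# Lossy (illustrative): round before encoding; the fine detail is gone for good.
rounded = ",".join(f"{r:.1f}" for r in readings).encode("utf-8")
packed_lossy = zlib.compress(rounded, level=9)

print(len(raw), len(packed), len(packed_lossy))
```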

Data preprocessing

Prior to going through the data analysis and data reduction procedures, certain data must be cleansed, handled, and processed. The conversion of analog to digital data may be a component of that change. Another type of data preprocessing is binning, which uses median values to normalize different kinds of data and guarantee data integrity everywhere.
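One common form of binning, smoothing by bin medians, can be sketched as follows: the values are sorted, split into equal-sized bins, and each value is replaced by the median of its bin. The function name and the sample `prices` are assumptions for this example.

```python
from statistics import median

def smooth_by_bin_median(values, bin_size):
    """Sort values, split into equal-frequency bins, replace each with its bin median."""
    ordered = sorted(values)
    smoothed = []
    for start in range(0, len(ordered), bin_size):
        bin_values = ordered[start:start + bin_size]
        smoothed.extend([median(bin_values)] * len(bin_values))
    return smoothed

prices = [4, 8, 9, 15, 21, 21, 24, 25, 26]
smoothed_prices = smooth_by_bin_median(prices, bin_size=3)
# smoothed_prices == [8, 8, 8, 21, 21, 21, 25, 25, 25]
```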

Read more on Govindhtech.com

#Datareduction#machinelearning#AI#datasize#dataanalysis#data#Numerosityreduction#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

0 notes

Text

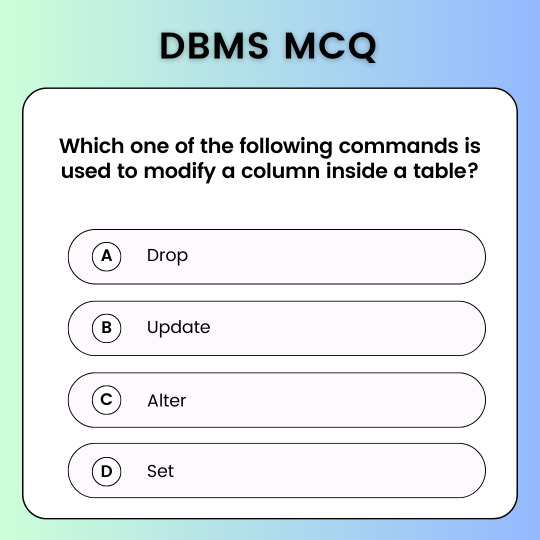

Test Your Knowledge: DBMS Quiz Challenge!!! 📝🧠

Which one of the following commands is used to modify a column inside a table?🤔

For more interesting quizzes, check the link below! 📚

For the explanation of the right answer, you can check Q.No. 54 of the above link. 📖

0 notes

Video

youtube

How I Wasted Time and Money Because of Data Silos 💸

In today's data-driven world, information is power. But data trapped in silos can be a critical roadblock for companies. 🚧 What exactly are data silos? They emerge when teams and systems hoard data in isolated repositories. This restricts flow and accessibility across the organization.

⛔️ The result? Inconsistencies, duplications, and gaps in the data landscape prevent a comprehensive perspective. 👀 Without the full picture, identifying key patterns and opportunities becomes difficult.

❌ In this video, we dive into the far-reaching dangers of neglecting data silos, including:

Poor customer experiences 🙁

Security vulnerabilities 🚨

Wasted time and resources ⏰

Slowed innovation 💡

Risks of non-compliance 📝

But don't worry, these challenges can be overcome with the right data strategy and technology.

💪 We discuss solutions like:

Implementing a unified data fabric 🔗

Harnessing data virtualization and automation ⚡️

Consolidating analytics platforms

📊 This video is based on the insightful article "Data Silos: Unveiling the Hidden Dangers of Neglecting Digital Data" (source: https://bit.ly/3qvP4QY). Join us as we unveil the hidden dangers of data silos and how to start breaking down barriers to data accessibility and value. The future of your business depends on it!

0 notes

Text

Actors Strike

So with the Advent of AI Actors, voices, and how many artists have in their contracts: "We literally own your ass for longer than you'll be alive and your family and estate will get nothing when you die, but we (the company) will live forever."

A contract that I have never signed myself, So I wouldn't know how that feels.

Anyway, with the Advent of AI Actors, the bottleneck is in the *quality* of the produced media. You wouldn't know this, but streaming services and DataCaps have limited the quality of any type of movie or TV show quite a bit. And Anime and vtubers specifically, they either stream to a lot of people "audio only" or their lack of depth and flat color schemes drops the datasize quite a bit, which makes it easier for audiences to participate. (And watch)

Now, theatrical releases, and cable releases get to stream at HD4K ezpz. And while some streaming services offer 4K, there's no guarantee every piece of media is offered at that quality, and there's no guarantee that if it is offered at that quality, that it won't just be upscaled.

Now some people may say "the human eye can't even tell the higher quality--technology has surpassed human limitations!" But that's not true, we thought that every step of the way, starting with color tv where it was "realer than real" and then cable tv, and vhs, and DVD. But audio, video, and technology enthusiasts have since pushed the boundaries with "lossless" recordings that your average user doesn't typically play around with (even though you can record videos on your phone at better quality than you can download from the Internet or upload to TikTok.)

AI's limitation is that it cannot upscale into newer cleaner formats. It will never be able to, it needs the detail that it doesn't have access to (that it is currently doing ad libs with) think of zoom on your camera, when it is zoomed out there's missing detail, but if you're interested in moles, you can zoom right in on it and get every hairy detail.

And as technology advances, so will our ability be to capture and stream those details.

But you might be thinking; well telecom companies won't raise DataCaps, and nobody uses physical media anymore so we're free to go with less-than DVD quality, probably for decades to come.

But then what incentive do viewers have to go to the movie theaters then when there isn't a better quality to watch in theaters than the 380p (with buffering) that you can get from your phone?

Well people will buy a bigger TV then (To stream that *same* 380p through Comcast, because you hit your datacap thanks to Fortnite and Roblox downloads, because why does a landline have a data cap anyway!?)

I'm not denying there is a technological bottleneck here, advocates of the free and open internet have been talking about it being a problem for decades. But the leading argument against for decades was "Nobody uses the Internet anyway".

At the very last second you wanna do a UTurn? It's too late for that, now you get to sit in traffic waiting on buffering like it's 1999 all over again.

What this means for the movie industry: is that nobody will want to buy the media you've created and expected a long-term paycheck from after that bottleneck is cleared up. That means that all your investments now will lose out to anybody with a YouTube channel that records in 4k and scales their videos down.

Because people will be able to tell there are digital actors, just like they were able to tell in Final Fantasy: The Spirits Within, even though all the headlines talked about "This is so realistic! Nobody will be able to tell that animation isn't real again!"

6 notes


Text

The US lawyer starts a large job to get through the California's laws

Exclusive: United States For District of California Bill Essayi is taking action to distract “a sacred space in Gold. Starting “an Angel A Angel,” a team of job team from ice, HSS, Dea, FBI, ATF, and The movement of the limitTo take care of the careless DATASE DATASE DATASE Every day to see illegally constructed bonds in laws that doAJ can pay for a valid Feel. “They have made it impossible to do…

0 notes

Text

Artificial Intelligence In Pathology Market Analysis and Key Developments to 2033

Introduction

The integration of Artificial Intelligence (AI) into the field of pathology is revolutionizing diagnostic practices, enhancing accuracy, and expediting clinical workflows. Pathology, which involves studying diseases through laboratory examination of tissues, cells, and bodily fluids, is pivotal in diagnosing a myriad of health conditions, including cancers, infectious diseases, and chronic illnesses. With advancements in AI technologies, the pathology market is experiencing significant transformations, driven by the demand for precision medicine, improved diagnostic accuracy, and efficiency.

Market Overview

The global Artificial Intelligence in pathology market is witnessing robust growth, fueled by technological innovations and the increasing adoption of digital pathology solutions. AI-powered tools, such as machine learning algorithms, computer vision, and deep learning models, are being leveraged to automate tasks like image analysis, pattern recognition, and data interpretation. This automation not only enhances diagnostic accuracy but also reduces the workload on pathologists.

Download a Free Sample Report:-https://tinyurl.com/2sfhyrtj

Market Size and Growth

As of 2023, the AI in pathology market was valued at approximately USD XX billion and is projected to grow at a compound annual growth rate (CAGR) of XX% from 2024 to 2032. Factors contributing to this growth include rising cancer prevalence, advancements in digital imaging, and a growing focus on reducing diagnostic errors.

Key Drivers of the Market

Rising Prevalence of Chronic Diseases: With increasing incidences of cancer and other chronic diseases, there is a heightened demand for accurate and efficient diagnostic tools.

Technological Advancements: Innovations in AI, such as convolutional neural networks (CNNs) and natural language processing (NLP), are enhancing pathology workflows.

Shortage of Pathologists: The global shortage of pathologists is driving the need for AI-powered tools that can assist in diagnostics.

Government Initiatives: Supportive policies and funding for digital health technologies are boosting the adoption of AI in pathology.

Market Segmentation

By Component

Software

Services

By Application

Cancer Diagnostics

Infectious Diseases

Others

By End-User

Hospitals

Diagnostic Laboratories

Research Centers

Regional Analysis

North America

North America is leading the market due to advanced healthcare infrastructure, high adoption rates of technology, and supportive regulatory frameworks.

Europe

The European market is also witnessing significant growth, with increasing investments in digital pathology and AI technologies.

Asia-Pacific

The Asia-Pacific region is expected to witness the fastest growth, driven by rising healthcare investments and improving diagnostic facilities.

Industry Trends

Integration of AI with Digital Pathology: Combining AI with digital pathology platforms enhances diagnostic precision and streamlines workflows.

Collaborations and Partnerships: Companies are increasingly collaborating to integrate AI tools with laboratory information systems (LIS).

Regulatory Approvals: The market is witnessing an increase in AI tools receiving regulatory approvals, enhancing their credibility and adoption.

Challenges

Data Privacy Concerns: Handling of medical data through AI systems raises privacy and security concerns.

High Implementation Costs: The initial setup and integration of AI tools can be costly.

Regulatory and Compliance Issues: Adhering to stringent healthcare regulations remains a challenge for market players.

Future Outlook

The AI in pathology market is poised for exponential growth, with advancements in machine learning and predictive analytics. The focus will increasingly shift towards personalized medicine, where AI can provide tailored diagnostic insights. Additionally, the development of AI algorithms capable of handling complex datasets will further enhance the efficiency and accuracy of pathology services.

Conclusion

The adoption of AI in the pathology market is transforming the healthcare sector by improving diagnostic accuracy, enhancing patient outcomes, and optimizing clinical workflows. As technological advancements continue and healthcare providers recognize the benefits of AI, the market is expected to thrive, offering lucrative opportunities for stakeholders. With continued investments and innovations, AI-driven pathology solutions will become a cornerstone of modern diagnostics by 2032.

Read Full Report:-https://www.uniprismmarketresearch.com/verticals/healthcare/artificial-intelligence-in-pathology.html

0 notes


Text

How to protect your art from AI training

protecting our art from AI: a journey™️

us: posts art online AI: is for me? 👉👈 us: NO 🛡️

what we've learned about keeping our art safe:

→ watermarking is your bestie

→ metadata matters

→ some platforms actually help???

the basics that are working for us:

⭐ DWT watermarking (fancy but worth it)

⭐ smart platform choices

⭐ metadata tricks

full guide below. Because protecting our art shouldn't be rocket science 🚀 (image credit: digimarc corporation)

0 notes

Text

Your guide to LLMOps

New Post has been published on https://thedigitalinsider.com/your-guide-to-llmops/


Navigating the field of large language model operations (LLMOps) is more important than ever as businesses and technology sectors intensify their use of these advanced tools.

LLMOps is a niche technical domain and a fundamental aspect of modern artificial intelligence frameworks, influencing everything from model design to deployment.

Whether you’re a seasoned data scientist, a machine learning engineer, or an IT professional, understanding the multifaceted landscape of LLMOps is essential for harnessing the full potential of large language models in today’s digital world.

In this guide, we’ll cover:

What is LLMOps?

How does LLMOps work?

What are the benefits of LLMOps?

LLMOps best practices

What is LLMOps?

Large language model operations, or LLMOps, are techniques, practices, and tools that are used in operating and managing LLMs throughout their entire lifecycle.

These operations comprise language model training, fine-tuning, monitoring, and deployment, as well as data preparation.

What is the current LLMOps landscape?

LLMs. What opened the way for LLMOps.

Custom LLM stack. A wider array of tools that can fine-tune and implement proprietary solutions from open-source regulations.

LLM-as-a-Service. The most popular way of delivering closed-based models, it offers LLMs as an API through its infrastructure.

Prompt execution tools. By managing prompt templates and creating chain-like sequences of relevant prompts, they help to improve and optimize model output.

Prompt engineering tech. Instead of the more expensive fine-tuning, these technologies allow for in-context learning, which doesn’t use sensitive data.

Vector databases. These retrieve contextually relevant data for specific commands.
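To give a feel for what a vector database does when it retrieves contextually relevant data, here is a minimal in-memory sketch that ranks stored texts by cosine similarity to a query vector. The three-dimensional embeddings are hypothetical; in practice they would come from an embedding model, and a real vector database would use an index rather than a full scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 3-dimensional embeddings; real ones come from an embedding model.
store = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "api rate limits": [0.0, 0.2, 0.9],
}

def retrieve(query_vector, k=1):
    """Return the k stored texts most similar to the query vector."""
    ranked = sorted(
        store,
        key=lambda text: cosine_similarity(store[text], query_vector),
        reverse=True,
    )
    return ranked[:k]

top = retrieve([0.8, 0.2, 0.1], k=2)
# top == ["refund policy", "shipping times"]
```

The retrieved texts would then be placed into the prompt so the model can answer with that context.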

The fall of centralized data and the future of LLMs

Gregory Allen, Co-Founder and CEO at Datasent, gave this presentation at our Generative AI Summit in Austin in 2024.

What are the key LLMOps components?

Architectural selection and design

Choosing the right model architecture. Involving data, domain, model performance, and computing resources.

Personalizing models for tasks. Pre-trained models can be customized for lower costs and time efficiency.

Hyperparameter optimization. This improves model performance by finding the best combination of hyperparameters. For example, you can use random search, grid search, and Bayesian optimization.

Tweaking and preparation. Unsupervised pre-training and transfer learning lower training time and enhance model performance.

Model assessment and benchmarking. It’s always good practice to benchmark models against industry standards.
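Two of the search strategies mentioned under hyperparameter optimization, grid search and random search, can be sketched in a few lines. The `validation_loss` function below is a hypothetical stand-in for training a model and measuring its loss, and the hyperparameter names and ranges are assumptions; Bayesian optimization is not shown.

```python
import itertools
import random

def validation_loss(learning_rate, batch_size):
    """Hypothetical stand-in for training a model and measuring its loss."""
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 32) / 32

def grid_search(grid):
    """Try every combination in the grid and keep the best one."""
    names = list(grid)
    candidates = (
        dict(zip(names, combo)) for combo in itertools.product(*grid.values())
    )
    return min(candidates, key=lambda params: validation_loss(**params))

def random_search(n_trials, seed=0):
    """Sample configurations at random and keep the best one."""
    rng = random.Random(seed)
    trials = [
        {
            "learning_rate": 10 ** rng.uniform(-4, -1),  # log-uniform sample
            "batch_size": rng.choice([8, 16, 32, 64, 128]),
        }
        for _ in range(n_trials)
    ]
    return min(trials, key=lambda params: validation_loss(**params))

grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [16, 32, 64]}
best_grid = grid_search(grid)
best_random = random_search(n_trials=20)
```

Grid search is exhaustive but grows quickly with each added hyperparameter, while random search covers wide ranges with a fixed budget of trials.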

Data management

Organizing, storing, and versioning data. The right database and storage solutions simplify data storage, retrieval, and modification during the LLM lifecycle.

Data gathering and processing. As LLMs run on diverse, high-quality data, models might need data from various domains, sources, and languages. Data needs to be cleaned and pre-processed before being fed into LLMs.

Data labeling and annotation. Supervised learning needs consistent and reliable labeled data; when domain-specific or complex instances need expert judgment, human-in-the-loop techniques are beneficial.

Data privacy and control. Involves pseudonymization, anonymization techniques, data access control, model security considerations, and compliance with GDPR and CCPA.

Data version control. LLM iteration and performance improvement are simpler with a clear data history; you’ll find errors early by versioning models and thoroughly testing them.

Deployment platforms and strategies

Model maintenance. Showcases issues like model drift and flaws.

Optimizing scalability and performance. Models might need to be horizontally scaled with more instances or vertically scaled with additional resources within high-traffic settings.

On-premises or cloud deployment. Cloud deployment is flexible, easy to use, and scalable, while on-premises deployment could improve data control and security.

LLMOps vs. MLOps: What’s the difference?

Machine learning operations, or MLOps, are practices that simplify and automate machine learning workflows and deployments. MLOps are essential for releasing new machine learning models with both data and code changes at the same time.

There are a few key principles of MLOps:

1. Model governance

Governance means managing all aspects of machine learning to increase efficiency. It is vital to institute a structured process for reviewing, validating, and approving models before launch. This also includes considering ethical and fairness concerns.

2. Version control

Tracking changes in machine learning assets allows you to reproduce results and roll back to older versions when needed. Code reviews apply to all machine learning training code and models, and each is versioned for ease of auditing and reproduction.

3. Continuous X

Tests and code deployments are run continuously across machine learning pipelines. Within MLOps, ‘continuous’ relates to four activities that happen simultaneously whenever anything is changed in the system:

Continuous integration

Continuous delivery

Continuous training

Continuous monitoring

4. Automation

Through automation, there can be consistency, repeatability, and scalability within machine learning pipelines. Factors like model training code changes, messaging, and application code changes can initiate automated model training and deployment.

MLOps have a few key benefits:

Improved productivity. Deployments can be standardized for speed by reusing machine learning models across various applications.

Faster time to market. Model creation and deployment can be automated, resulting in faster go-to-market times and reduced operational costs.

Efficient model deployment. Continuous integration and continuous delivery (CI/CD) pipelines limit model performance degradation and help to retain quality.

LLMOps are MLOps with technology and process upgrades tuned to the individual needs of LLMs. LLMs change machine learning workflows and requirements in distinct ways:

1. Performance metrics

When evaluating LLMs, there are several standard scores and benchmarks to take into account, like Recall-Oriented Understudy for Gisting Evaluation (ROUGE) and Bilingual Evaluation Understudy (BLEU).

2. Cost savings

Hyperparameter tuning in LLMs is vital to cutting the computational power and cost needs of both inference and training. LLMs start with a foundational model before being fine-tuned with new data for domain-specific refinements, allowing them to deliver higher performance with fewer costs.

3. Human feedback

LLM operations are typically open-ended, meaning human feedback from end users is essential to evaluate performance. Having these feedback loops in LLMOps pipelines streamlines assessment and provides data for future fine-tuning cycles.

4. Prompt engineering

Models that follow instructions can use complicated prompts or instructions, which are important to receive consistent and correct responses from LLMs. Through prompt engineering, you can lower the risk of prompt hacking and model hallucination.

5. Transfer learning

LLM models start with a foundational model and are then fine-tuned with new data, allowing for cutting-edge performance for specific applications with fewer computational resources.

6. LLM pipelines

These pipelines integrate various LLM calls to other systems like web searches, allowing LLMs to conduct sophisticated activities like a knowledge base Q&A. LLM application development tends to focus on creating pipelines, not new models.
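Returning to the performance metrics in item 1, the idea behind ROUGE and BLEU can be illustrated with unigram overlap. This is a minimal sketch, not the full metrics: real BLEU combines n-grams up to length 4 with a brevity penalty, and ROUGE has several variants. The function names are hypothetical.

```python
from collections import Counter


def unigram_overlap(candidate: str, reference: str) -> int:
    """Count words shared by both texts, clipped by how often each occurs."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per word.
    return sum((cand & ref).values())


def rouge1_recall(candidate: str, reference: str) -> float:
    """Share of reference words that the candidate recovered (recall-oriented)."""
    ref_len = len(reference.split())
    return unigram_overlap(candidate, reference) / ref_len if ref_len else 0.0


def bleu1_precision(candidate: str, reference: str) -> float:
    """Share of candidate words that appear in the reference (precision-oriented)."""
    cand_len = len(candidate.split())
    return unigram_overlap(candidate, reference) / cand_len if cand_len else 0.0


reference = "the cat sat on the mat"
print(rouge1_recall("the cat", reference))    # short answer: low recall
print(bleu1_precision("the cat", reference))  # but every word is correct
```

A short but correct answer scores high on precision and low on recall, which is why the two families of metrics are usually reported together.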

How does LLMOps work?

LLMOps involve a few important steps:

1. Selection of foundation model

Foundation models, which are LLMs pre-trained on big datasets, are used for downstream operations. Training models from scratch can be very expensive and time-consuming; big companies often develop proprietary foundation models, which are larger and have better performance than open-source ones. They do, however, have more expensive APIs and lower adaptability.

Proprietary model vendors:

OpenAI (GPT-3, GPT-4)

AI21 Labs (Jurassic-2)

Anthropic (Claude)

Open-source models:

LLaMA

Stable Diffusion

Flan-T5

2. Downstream task adaptation

After selecting the foundation model, you can use LLM APIs, which don’t always say what input leads to what output. It might take iterations to get the LLM API output you need, and LLMs can hallucinate if they don’t have the right data. Model A/B testing or LLM-specific evaluation is often used to test performance.

You can adapt foundation models to downstream activities:

Model assessment

Prompt engineering

Using embeddings

Fine-tuning pre-trained models

Using external data for contextual information

3. Model deployment and monitoring

LLM-powered apps must closely monitor API model changes, as LLM behavior can change significantly across different versions.

What are the benefits of LLMOps?

Scalability

You can achieve more streamlined management and scalability of data, which is vital when overseeing, managing, controlling, or monitoring thousands of models for continuous deployment, integration, and delivery.

LLMOps does this by reducing model latency for a more responsive user experience. Model monitoring within a continuous integration, deployment, and delivery environment can simplify scalability.

LLM pipelines are easy to reproduce, which encourages collaboration across data teams, reduces conflict, and speeds up release cycles.

LLMOps can manage large amounts of requests simultaneously, which is important in enterprise applications.

Efficiency

LLMOps allow for streamlined collaboration between machine learning engineers, data scientists, stakeholders, and DevOps. This leads to a more unified platform for knowledge sharing and communication, as well as model development and deployment, which allows for faster delivery.

You can also cut down on computational costs by optimizing model training. This includes choosing suitable architectures and using model pruning and quantization techniques, for example.

With LLMOps, you can also access more suitable hardware resources like GPUs, allowing for efficient monitoring, fine-tuning, and resource usage optimization. Data management is also simplified, as LLMOps facilitate strong data management practices for high-quality dataset sourcing, cleaning, and usage in training.

Model performance can be improved through high-quality, domain-relevant training data, which helps LLMOps sustain strong performance. Hyperparameters can also be tuned, and DataOps integration can help keep data flowing smoothly.

You can also speed up iteration and feedback loops through task automation and fast experimentation.

Risk reduction

Advanced, enterprise-grade LLMOps can be used to enhance privacy and security as they prioritize protecting sensitive information.

With transparency and faster responses to regulatory requests, you’ll be able to comply with organization and industry policies much more easily.

Other LLMOps benefits

Data labeling and annotation

GPU acceleration for REST API model endpoints

Prompt analytics, logging, and testing

Model inference and serving

Data preparation

Model review and governance

LLMOps best practices

These practices are a set of guidelines to help you manage and deploy LLMs efficiently and effectively. They cover several aspects of the LLMOps life cycle:

Exploratory Data Analysis (EDA)

Involves iteratively sharing, exploring, and preparing data for the machine learning lifecycle in order to produce editable, repeatable, and shareable datasets, visualizations, and tables.

Stay up-to-date with the latest practices and advancements by engaging with the open-source community.

Data management

Appropriate software that can handle large volumes of data allows for efficient data recovery throughout the LLM lifecycle. Making sure to track changes with versioning is essential for seamless transitions between versions. Data must also be protected with access controls and transit encryption.

Data deployment

Tailor pre-trained models to conduct specific tasks for a more cost-effective approach.

Continuous model maintenance and monitoring

Dedicated monitoring tools are able to detect drift in model performance. Real-world feedback for model outputs can also help to refine and re-train the models.

Ethical model development

Discovering, anticipating, and correcting biases within training model outputs to avoid distortion.

Privacy and compliance

Ensure that operations follow regulations like CCPA and GDPR by having regular compliance checks.

Model fine-tuning, monitoring, and training

A responsive user experience relies on optimized model latency. Having tracking mechanisms for both pipeline and model lineage helps efficient lifecycle management. Distributed training helps to manage vast amounts of data and parameters in LLMs.

Model security

Conduct regular security tests and audits, checking for vulnerabilities.

Prompt engineering

Make sure to set prompt templates correctly for reliable and accurate responses. This also minimizes the probability of prompt hacking and model hallucinations.
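As an illustration of a prompt template, here is a minimal sketch using only Python's standard library. The template wording, the `SUPPORT_PROMPT` name, and the `build_prompt` helper are all hypothetical; production systems often use a dedicated templating or orchestration library instead.

```python
from string import Template

# A fixed template keeps instructions stable; only the marked fields vary.
SUPPORT_PROMPT = Template(
    "You are a support assistant. Answer using only the context below.\n"
    "Context: $context\n"
    "Question: $question\n"
    "If the context does not contain the answer, reply 'I don't know'."
)


def build_prompt(context: str, question: str) -> str:
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return SUPPORT_PROMPT.substitute(
        context=context.strip(),
        question=question.strip(),
    )


print(build_prompt("LLMOps adapts MLOps practices to LLMs.", "What is LLMOps?"))
```

Keeping the instructions in one place means every request carries the same guardrails, and the explicit fallback instruction gives the model an alternative to guessing.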

LLM pipelines or chains

You can link several LLM external system interactions or calls to allow for complex tasks.

Computational resource management

Specialized GPUs help with extensive calculations on large datasets, allowing for faster and more data-parallel operations.

Disaster redundancy and recovery

Ensure that data, models, and configurations are regularly backed up. Redundancy allows you to handle system failures without any impact on model availability.

#2024#access control#ai#ai skills#ai summit#AI21#amp#Analysis#Analytics#anthropic#API#APIs#application development#applications#approach#apps#architecture#artificial#Artificial General Intelligence#Artificial Intelligence#assessment#assets#automation#benchmark#benchmarking#benchmarks#career#ccpa#CEO#change

0 notes

Text

youtube

Jewel Studio brings your jewelry designs to life! We offer a comprehensive suite of services including: High-Quality Jewelry Scanning & Rendering: Create stunning, photorealistic images and 360° views of your pieces. Fast & Efficient Video Production: Showcase your jewelry in captivating videos optimized for faster streaming and smaller file sizes. Data Size Optimization: Reach a wider audience with videos that load quickly and seamlessly on any device. Stand out from the competition with Jewel Studio's innovative solutions. Contact us to know more about Jewel studio! Visit website: www.jewelstudio.tech #jewelstudio #jewellery #datasize #jewelrydesigner #detascanning #jewelryscanning #fasterstreaming

0 notes

Text

Data visualization in data analytics is essential for effectively communicating insights derived from data. It transforms complex data sets into visual formats like charts, graphs, and dashboards, making it easier for stakeholders to understand and act upon the information. By leveraging tools such as Tableau, Power BI, and Matplotlib, data visualization enhances clarity, highlights trends, and supports data-driven decision-making. Effective data visualization requires a balance of technical skills and design principles to ensure that the visual representation accurately conveys the intended message, ultimately driving better business outcomes and strategic insights.

0 notes

Text

Quotes from the book Data Science on AWS

Data Science on AWS

Antje Barth, Chris Fregly

As input data, we leverage samples from the Amazon Customer Reviews Dataset [https://s3.amazonaws.com/amazon-reviews-pds/readme.html]. This dataset is a collection of over 150 million product reviews on Amazon.com from 1995 to 2015. Those product reviews and star ratings are a popular customer feature of Amazon.com. Star rating 5 is the best and 1 is the worst. We will describe and explore this dataset in much more detail in the next chapters.

*****

Let’s click Create Experiment and start our first Autopilot job. You can observe the progress of the job in the UI as shown in Figure 1-11.

--

Amazon AutoML experiments

*****

...When the Feature Engineering stage starts, you will see SageMaker training jobs appearing in the AWS Console as shown in Figure 1-13.

*****

Autopilot built to find the best performing model. You can select any of those training jobs to view the job status, configuration, parameters, and log files.

*****

The Model Tuning creates a SageMaker Hyperparameter tuning job as shown in Figure 1-15. Amazon SageMaker automatic model tuning, also known as hyperparameter tuning (HPT), is another functionality of the SageMaker service.

*****

You can find an overview of all AWS instance types supported by Amazon SageMaker and their performance characteristics here: https://aws.amazon.com/sagemaker/pricing/instance-types/. Note that those instances start with ml. in their name.

Optionally, you can enable data capture of all prediction requests and responses for your deployed model. We can now click on Deploy model and watch our model endpoint being created. Once the endpoint shows up as In Service

--

Once Autopilot finds the best hyperparameters, you can deploy the model to save it for later

*****

Here is a simple Python code snippet to invoke the endpoint. We pass a sample review (“I loved it!”) and see which star rating our model chooses. Remember, star rating 1 is the worst and star rating 5 is the best.
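The book's snippet is not reproduced in these quotes. As a stand-in, here is a minimal sketch of invoking a SageMaker endpoint through the boto3 `sagemaker-runtime` client. The endpoint name, the `text/csv` content type, and the assumption that the endpoint returns the predicted star rating as plain text are all illustrative guesses, not details from the book.

```python
import csv
import io


def predict_star_rating(runtime_client, endpoint_name: str, review: str) -> str:
    """Send one review to a deployed endpoint and return the raw prediction text."""
    # Serialize as a single-column CSV row so commas in the review stay quoted.
    buffer = io.StringIO()
    csv.writer(buffer).writerow([review])

    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",  # assumed input format of the endpoint
        Accept="text/csv",
        Body=buffer.getvalue().encode("utf-8"),
    )
    # The response body is a stream; read and decode it.
    return response["Body"].read().decode("utf-8").strip()


# Usage (requires AWS credentials and a deployed endpoint; name is hypothetical):
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   print(predict_star_rating(runtime, "my-autopilot-endpoint", "I loved it!"))
```

Passing the client in as a parameter keeps the function easy to exercise with a stub before pointing it at a real endpoint.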

*****

If you prefer to interact with AWS services in a programmatic way, you can use the AWS SDK for Python boto3 [https://boto3.amazonaws.com/v1/documentation/api/latest/index.html], to interact with AWS services from your Python development environment.

*****

In the next section, we describe how you can run real-time predictions from within a SQL query using Amazon Athena.

*****

Amazon Comprehend. As input data, we leverage a subset of Amazon’s public customer reviews dataset. We want Amazon Comprehend to classify the sentiment of a provided review. The Comprehend UI is the easiest way to get started. You can paste in any text and Comprehend will analyze the input in real-time using the built-in model. Let’s test this with a sample product review such as “I loved it! I will recommend this to everyone.” as shown in Figure 1-23.

*****

Comprehend Custom is another example of automated machine learning that enables the practitioner to fine-tune Comprehend’s built-in model to a specific dataset

*****

We will introduce you to Amazon Athena and show you how to leverage Athena as an interactive query service to analyze data in S3 using standard SQL, without moving the data. In the first step, we will register the TSV data in our S3 bucket with Athena, and then run some ad-hoc queries on the dataset. We will also show how you can easily convert the TSV data into the more query-optimized, columnar file format Apache Parquet.

--

Always convert the data in S3 to Parquet

*****

One of the biggest advantages of data lakes is that you don’t need to pre-define any schemas. You can store your raw data at scale and then decide later in which ways you need to process and analyze it. Data Lakes may contain structured relational data, files, and any form of semi-structured and unstructured data. You can also ingest data in real time.

*****

Each of those steps involves a range of tools and technologies, and while you can build a data lake manually from the ground up, there are cloud services available to help you streamline this process, i.e. AWS Lake Formation.

Lake Formation helps you to collect and catalog data from databases and object storage, move the data into your Amazon S3 data lake, clean and classify your data using machine learning algorithms, and secure access to your sensitive data.

*****

From a data analysis perspective, another key benefit of storing your data in Amazon S3 is that it shortens the “time to insight” dramatically, as you can run ad-hoc queries directly on the data in S3, and you don’t have to go through complex ETL (Extract-Transform-Load) processes and data pipeli

*****

Amazon Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. Athena is serverless, so you don’t need to manage any infrastructure, and you only pay for the queries you run.

*****

With Athena, you can query data wherever it is stored (S3 in our case) without needing to move the data to a relational database.

*****

Athena and Redshift Spectrum can use to locate and query data.

*****

Athena queries run in parallel over a dynamic, serverless cluster which makes Athena extremely fast -- even on large datasets. Athena will automatically scale the cluster depending on the query and dataset -- freeing the user from worrying about these details.

*****

Athena is based on Presto, an open source, distributed SQL query engine designed for fast, ad-hoc data analytics on large datasets. Similar to Apache Spark, Presto uses high RAM clusters to perform its queries. However, Presto does not require a large amount of disk as it is designed for ad-hoc queries (vs. automated, repeatable queries) and therefore does not perform the checkpointing required for fault-tolerance.

*****

Apache Spark is slower than Athena for many ad-hoc queries.

*****

For longer-running Athena jobs, you can listen for query-completion events using CloudWatch Events. When the query completes, all listeners are notified with the event details including query success status, total execution time, and total bytes scanned.

*****

With a functionality called Athena Federated Query, you can also run SQL queries across data stored in relational databases (such as Amazon RDS and Amazon Aurora), non-relational databases (such as Amazon DynamoDB), object storage (Amazon S3), and custom data sources. This gives you a unified analytics view across data stored in your data warehouse, data lake and operational databases without the need to actually move the data.

*****

You can access Athena via the AWS Management Console, an API, or an ODBC or JDBC driver for programmatic access. Let’s have a look at how to use Amazon Athena via the AWS Management Console.

*****

When using LIMIT, you can better-sample the rows by adding TABLESAMPLE BERNOULLI(10) after the FROM. Otherwise, you will always return the data in the same order that it was ingested into S3 which could be skewed towards a single product_category, for example. To reduce code clutter, we will just use LIMIT without TABLESAMPLE.
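The difference between a plain LIMIT and Bernoulli sampling can be simulated in a few lines of Python. This is a sketch of the sampling idea only, not of how Athena executes the query; the row layout and function names are made up for illustration.

```python
import random


def limit(rows, n):
    """Like LIMIT n: take the first n rows in stored order."""
    return rows[:n]


def bernoulli_sample(rows, percent, seed=None):
    """Like TABLESAMPLE BERNOULLI(percent): keep each row independently
    with the given probability, regardless of its position."""
    rng = random.Random(seed)
    return [row for row in rows if rng.random() * 100 < percent]


# Rows stored in ingestion order: one category first, then another.
rows = [{"product_category": "Books"}] * 900 + [{"product_category": "Toys"}] * 100

first_rows = limit(rows, 10)
sampled = bernoulli_sample(rows, percent=10, seed=42)

print({r["product_category"] for r in first_rows})  # only the first category
print(len(sampled))                                 # roughly 10% of all rows
```

Because every row has the same chance of selection, the sample draws from the whole table rather than from whatever happened to be ingested first.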

*****

In a next step, we will show you how you can easily convert that data now into the Apache Parquet columnar file format to improve the query performance. Parquet is optimized for columnar-based queries such as counts, sums, averages, and other aggregation-based summary statistics that focus on the column values vs. row information.

*****

selected for DATABASE and then choose “New Query” and run the following “CREATE TABLE AS” (short CTAS) SQL statement:

CREATE TABLE IF NOT EXISTS dsoaws.amazon_reviews_parquet

WITH (format = 'PARQUET', external_location = 's3://data-science-on-aws/amazon-reviews-pds/parquet', partitioned_by = ARRAY['product_category']) AS

SELECT marketplace,

*****

One of the fundamental differences between data lakes and data warehouses is that while you ingest and store huge amounts of raw, unprocessed data in your data lake, you normally only load some fraction of your recent data into your data warehouse. Depending on your business and analytics use case, this might be data from the past couple of months, a year, or maybe the past 2 years. Let’s assume we want to have the past 2 years of our Amazon Customer Reviews Dataset in a data warehouse to analyze year-over-year customer behavior and review trends. We will use Amazon Redshift as our data warehouse for this.

*****

Amazon Redshift is a fully managed data warehouse which allows you to run complex analytic queries against petabytes of structured data. Your queries are distributed and parallelized across multiple nodes. In contrast to relational databases which are optimized to store data in rows and mostly serve transactional applications, Redshift implements columnar data storage which is optimized for analytical applications where you are mostly interested in the data within the individual columns.

*****

Redshift Spectrum, which allows you to directly execute SQL queries from Redshift against exabytes of unstructured data in your Amazon S3 data lake without the need to physically move the data. Amazon Redshift Spectrum automatically scales the compute resources needed based on how much data is being retrieved, so queries against Amazon S3 run fast, regardless of the size of your data.

*****

We will use Amazon Redshift Spectrum to access our data in S3, and then show you how you can combine data that is stored in Redshift with data that is still in S3.

This might sound similar to the approach we showed earlier with Amazon Athena, but note that in this case we show how your Business Intelligence team can enrich their queries with data that is not stored in the data warehouse itself.

*****

So with just one command, we now have access and can query our S3 data lake from Amazon Redshift without moving any data into our data warehouse. This is the power of Redshift Spectrum.

But now, let’s actually copy some data from S3 into Amazon Redshift. Let’s pull in customer reviews data from the year 2015.

*****

You might ask yourself now, when should I use Athena, and when should I use Redshift? Let’s discuss.

*****

Amazon Athena should be your preferred choice when running ad-hoc SQL queries on data that is stored in Amazon S3. It doesn’t require you to set up or manage any infrastructure resources, and you don’t need to move any data. It supports structured, unstructured, and semi-structured data. With Athena, you are defining a “schema on read” -- you basically just log in, create a table and you are good to go.

Amazon Redshift is targeted for modern data analytics on large, petabyte-scale sets of structured data. Here, you need to have a predefined “schema on write”. Unlike serverless Athena, Redshift requires you to create a cluster (compute and storage resources), ingest the data and build tables before you can start to query, but caters to performance and scale. So for any highly-relational data with a transactional nature (data gets updated), workloads which involve complex joins, and latency requirements to be sub-second, Redshift is the right choice.

*****

But how do you know which objects to move? Imagine your S3 data lake has grown over time, and you might have billions of objects across several S3 buckets in S3 Standard storage class. Some of those objects are extremely important, while you haven’t accessed others maybe in months or even years. This is where S3 Intelligent-Tiering comes into play.

Amazon S3 Intelligent-Tiering automatically optimizes your storage cost for data with changing access patterns by moving objects between the frequent-access tier optimized for frequent use of data, and the lower-cost infrequent-access tier optimized for less-accessed data.

*****

Amazon Athena offers ad-hoc, serverless SQL queries for data in S3 without needing to setup, scale, and manage any clusters.

Amazon Redshift provides the fastest query performance for enterprise reporting and business intelligence workloads, particularly those involving extremely complex SQL with multiple joins and subqueries across many data sources including relational databases and flat files.

*****

To interact with AWS resources from within a Python Jupyter notebook, we leverage the AWS Python SDK boto3, the Python DB client PyAthena to connect to Athena, and SQLAlchemy as a Python SQL toolkit to connect to Redshift.

*****

easy-to-use business intelligence service to build visualizations, perform ad-hoc analysis, and build dashboards from many data sources - and across many devices.

*****

We will also introduce you to PyAthena, the Python DB Client for Amazon Athena, that enables us to run Athena queries right from our notebook.

*****

There are different cursor implementations that you can use. While the standard cursor fetches the query result row by row, the PandasCursor will first save the CSV query results in the S3 staging directory, then read the CSV from S3 in parallel down to your Pandas DataFrame. This leads to better performance than fetching data with the standard cursor implementation.

*****

We need to install SQLAlchemy, define our Redshift connection parameters, query the Redshift secret credentials from AWS Secrets Manager, and obtain our Redshift Endpoint address. Finally, create the Redshift Query Engine.

# Ins

*****

Create Redshift Query Engine

from sqlalchemy import create_engine

engine = create_engine('postgresql://{}:{}@{}:{}/{}'.format(redshift_username, redshift_pw, redshift_endpoint_address, redshift_port, redshift_database))

*****

Detect Data Quality Issues with Apache Spark

*****

Data quality can halt a data processing pipeline in its tracks. If these issues are not caught early, they can lead to misleading reports (ie. double-counted revenue), biased AI/ML models (skewed towards/against a single gender or race), and other unintended data products.

To catch these data issues early, we use Deequ, an open source library from Amazon that uses Apache Spark to analyze data quality, detect anomalies, and even “notify the Data Scientist at 3am” about a data issue. Deequ continuously analyzes data throughout the complete, end-to-end lifetime of the model from feature engineering to model training to model serving in production.

*****

Learning from run to run, Deequ will suggest new rules to apply during the next pass through the dataset. Deequ learns the baseline statistics of our dataset at model training time, for example - then detects anomalies as new data arrives for model prediction. This problem is classically called “training-serving skew”. Essentially, a model is trained with one set of learned constraints, then the model sees new data that does not fit those existing constraints. This is a sign that the data has shifted - or skewed - from the original distribution.
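The baseline-then-compare idea behind detecting training-serving skew can be sketched in plain Python. This is not Deequ's API; the function names, the chosen statistics, and the z-score threshold are assumptions made for illustration.

```python
from statistics import mean, pstdev


def learn_baseline(values):
    """Record summary statistics of a feature at training time."""
    return {
        "mean": mean(values),
        "std": pstdev(values),
        "min": min(values),
        "max": max(values),
    }


def detect_skew(baseline, new_values, z_threshold=3.0):
    """Return human-readable issues found in newly arriving data."""
    issues = []

    # Check 1: has the average moved far from the training average?
    if baseline["std"] > 0:
        z = abs(mean(new_values) - baseline["mean"]) / baseline["std"]
        if z > z_threshold:
            issues.append(f"mean shifted by {z:.1f} baseline standard deviations")

    # Check 2: do any values fall outside the range seen during training?
    outside = [v for v in new_values if v < baseline["min"] or v > baseline["max"]]
    if outside:
        issues.append(f"{len(outside)} value(s) outside the training range")

    return issues


baseline = learn_baseline([1, 2, 3, 4, 5, 4, 3, 5, 4, 5])  # star ratings at training time
print(detect_skew(baseline, [4, 5, 5, 3]))   # consistent with training data
print(detect_skew(baseline, [9, 10, 8]))     # ratings on an unexpected scale
```

A real deployment would track many more constraints (completeness, uniqueness, distribution shape) and run them on a cluster, as the quotes describe.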

*****

Since we have 130+ million reviews, we need to run Deequ on a cluster vs. inside our notebook. This is the trade-off of working with data at scale. Notebooks work fine for exploratory analytics on small data sets, but are not suitable for processing large data sets or training large models. We will use a notebook to kick off a Deequ Spark job on a cluster using SageMaker Processing Jobs.

*****

You can optimize expensive SQL COUNT queries across large datasets by using approximate counts.

*****

HyperLogLog

Counting is a big deal in analytics. We always need to count users (daily active users), orders, returns, support calls, etc. Maintaining super-fast counts in an ever-growing dataset can be a critical advantage over competitors.

Both Redshift and Athena support HyperLogLog (HLL), a type of “cardinality-estimation” or COUNT DISTINCT algorithm designed to provide highly accurate counts (<2% error) in a small fraction of the time (seconds) requiring a tiny fraction of the storage (1.2KB) to store 130+ million separate counts.
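To make the cardinality-estimation idea concrete, here is a minimal, teaching-oriented HyperLogLog sketch in pure Python. It is not how Redshift or Athena implement HLL; the class name, the SHA-1 hash, and the default of 4,096 registers are choices made for this example.

```python
import hashlib
import math


class HyperLogLog:
    """Estimate the number of distinct items using a fixed array of small registers."""

    def __init__(self, p: int = 12):
        self.p = p                      # number of index bits
        self.m = 1 << p                 # number of registers (4096 for p=12)
        self.registers = [0] * self.m
        # Bias-correction constant, valid for m >= 128.
        self.alpha = 0.7213 / (1 + 1.079 / self.m)

    def add(self, item) -> None:
        digest = hashlib.sha1(str(item).encode("utf-8")).digest()
        h = int.from_bytes(digest[:8], "big")          # 64-bit hash value
        index = h >> (64 - self.p)                     # first p bits pick a register
        rest = h & ((1 << (64 - self.p)) - 1)          # remaining bits
        # Position of the first 1-bit in the remaining bits (1-based).
        rank = (64 - self.p) - rest.bit_length() + 1
        if rank > self.registers[index]:
            self.registers[index] = rank

    def count(self) -> float:
        raw = self.alpha * self.m * self.m / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        # For small cardinalities, linear counting is more accurate.
        if raw <= 2.5 * self.m and zeros:
            return self.m * math.log(self.m / zeros)
        return raw


hll = HyperLogLog()
for i in range(50_000):
    hll.add(f"user-{i}")
    hll.add(f"user-{i}")  # duplicates do not change the estimate
print(round(hll.count()))  # close to 50000, within a few percent
```

The memory footprint depends only on the number of registers, not on how many items are added, which is what makes the approach attractive for ever-growing datasets.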

*****

Existing data warehouses move data from storage nodes to compute nodes during query execution. This requires high network I/O between the nodes - and reduces query performance.

Figure 3-23 below shows a traditional data warehouse architecture with shared, centralized storage.

*****

certain “latent” features hidden in our data sets and not immediately-recognizable by a human. Netflix’s recommendation system is famous for discovering new movie genres beyond the usual drama, horror, and romantic comedy. For example, they discovered very specific genres such as “Gory Canadian Revenge Movies,” “Sentimental Movies about Horses for Ages 11-12,” “

*****

Figure 6-2 shows more “secret” genres discovered by Netflix’s Viewing History Service - code named, “VHS,” like the popular video tape format from the 80’s and 90’s.

*****

Feature creation combines existing data points into new features that help improve the predictive power of your model. For example, combining review_headline and review_body into a single feature may lead to more-accurate predictions than using them separately.

*****

Feature transformation converts data from one representation to another to facilitate machine learning. Transforming continuous values such as a timestamp into categorical “bins” such as hourly, daily, or monthly helps to reduce dimensionality. Two common statistical feature transformations are normalization and standardization. Normalization scales all values of a particular data point between 0 and 1, while standardization transforms the values to a mean of 0 and standard deviation of 1. These techniques help reduce the impact of large-valued data points such as number of reviews (represented in 1,000’s) over small-valued data points such as helpful_votes (represented in 10’s.) Without these techniques, the mod
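The two transformations can be written out directly. This is a minimal sketch in plain Python with hypothetical function names; libraries such as scikit-learn provide equivalent, more robust scalers.

```python
from statistics import mean, pstdev


def normalize(values):
    """Min-max scaling: map values linearly onto the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:                       # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Z-score scaling: shift to mean 0 and scale to standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


review_counts = [1000, 2000, 3000]     # large-valued feature
helpful_votes = [10, 20, 30]           # small-valued feature

print(normalize(review_counts))        # [0.0, 0.5, 1.0]
print(normalize(helpful_votes))        # [0.0, 0.5, 1.0]
```

After scaling, both features occupy the same range, so neither dominates purely because of its units.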

*****

One drawback to undersampling is that your training dataset size is sampled down to the size of the smallest category. This can reduce the predictive power of your trained models. In this example, we reduced the number of reviews by 65% from approximately 100,000 to 35,000.
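Undersampling to the smallest category can be sketched as follows. The row layout, the `star_rating` key, and the function name are illustrative assumptions.

```python
import random
from collections import defaultdict


def undersample(rows, label_key, seed=0):
    """Randomly drop rows until every class has as many rows as the smallest class."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for row in rows:
        by_label[row[label_key]].append(row)

    smallest = min(len(group) for group in by_label.values())

    balanced = []
    for group in by_label.values():
        # sample() draws without replacement, so no row is duplicated.
        balanced.extend(rng.sample(group, smallest))
    return balanced


rows = (
    [{"star_rating": 5}] * 50
    + [{"star_rating": 1}] * 20
    + [{"star_rating": 3}] * 7
)
balanced = undersample(rows, "star_rating")
print(len(rows), "->", len(balanced))  # 77 -> 21
```

The shrinkage in the printed sizes is exactly the drawback described above: balance is bought by discarding most of the majority-class data.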

*****

Oversampling will artificially create new data for the under-represented class. In our case, star_rating 2 and 3 are under-represented. One common oversampling technique is called Synthetic Minority Oversampling Technique (SMOTE). Oversampling techniques use statistical methods such as interpolation to generate new data from your current data. They tend to work better when you have a larger data set, so be careful when using oversampling on small datasets with a low number of minority class examples. Figure 6-10 shows SMOTE generating new examples for the minority class to improve the imbalance.
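The interpolation step at the heart of SMOTE can be sketched in a few lines. This is a simplified illustration with a hypothetical function name, assuming numeric feature tuples and at least two minority examples; for real work, a maintained implementation such as the one in the imbalanced-learn library is a better choice.

```python
import math
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points, each lying on the line segment between
    a random minority point and one of its k nearest minority neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Nearest neighbors of the base point within the minority class.
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # how far to move from base toward the neighbor
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbor)))
    return synthetic


minority = [(1.0, 1.0), (2.0, 1.5), (1.5, 2.0), (2.5, 2.5)]
for point in smote_like(minority, n_new=3):
    print(point)
```

With very few minority examples, every synthetic point is a blend of the same handful of rows, which is why the passage above advises caution on small datasets.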

*****

Each of the three phases should use a separate and independent dataset - otherwise “leakage” may occur. Leakage happens when data is leaked from one phase of modeling into another through the splits. Leakage can artificially inflate the accuracy of your model.

Time-series data is often prone to leakage across splits. Companies often want to validate a new model using “back-in-time” historical information before pushing the model to production. When working with time-series data, make sure your model does not peek into the future accidentally. Otherwise, these models may appear more accurate than they really are.
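One way to keep a model from seeing the future is to split on a cutoff date instead of splitting at random. Here is a minimal sketch; the `review_date` field and the function name are assumptions for illustration.

```python
from datetime import date


def time_based_split(rows, cutoff, date_key="review_date"):
    """Train on everything strictly before the cutoff, evaluate on the rest."""
    train = [r for r in rows if r[date_key] < cutoff]
    holdout = [r for r in rows if r[date_key] >= cutoff]
    return train, holdout


rows = [
    {"review_date": date(2014, 11, 3), "star_rating": 5},
    {"review_date": date(2015, 2, 14), "star_rating": 2},
    {"review_date": date(2014, 6, 21), "star_rating": 4},
    {"review_date": date(2015, 7, 9), "star_rating": 1},
]

train, holdout = time_based_split(rows, cutoff=date(2015, 1, 1))
print(len(train), len(holdout))  # 2 2
```

Every training row predates every holdout row, so the evaluation mimics how the model would be used after deployment.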

*****

We will use TensorFlow and a state-of-the-art Natural Language Processing (NLP) and Natural Language Understanding (NLU) neural network architecture called BERT. Unlike previous generations of NLP models such as Word2Vec, BERT captures the bi-directional (left-to-right and right-to-left) context of each word in a sentence. This allows BERT to learn different meanings of the same word across different sentences. For example, the meaning of the word “bank” is different between these two sentences: “A thief stole money from the bank vault” and “Later, he was arrested while fishing on a river bank.”

For each review_body, we use BERT to create a feature vector within a previously-learned, high-dimensional vector space of 30,000 words or “tokens.” BERT learned these tokens by training on millions of documents including Wikipedia and Google Books.

Let’s use a variant of BERT called DistilBert. DistilBert is a light-weight version of BERT that is 60% faster, 40% smaller, and preserves 97% of BERT’s language understanding capabilities. We use a popular Python library called Transformers to perform the transformation.

*****

Feature stores can cache “hot features” into memory to reduce model-training times. A feature store can provide governance and access control to regulate and audit our features. Lastly, a feature store can provide consistency between model training and model predicting by ensuring the same features for both batch training and real-time predicting.

Customers have implemented feature stores using a combination of DynamoDB, Elasticsearch, and S3. DynamoDB and Elasticsearch track metadata such as file format (e.g. csv, parquet), BERT-specific data (e.g. maximum sequence length), and other summary statistics (e.g. min, max, standard deviation). S3 stores the underlying features such as our generated BERT embeddings. This feature store reference architecture is shown in Figure 6-22.
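As a rough illustration of the kind of metadata record such a store might track, the sketch below computes summary statistics for one feature and bundles them with the file format and maximum sequence length. The function name, field names, and S3 path are hypothetical; this is not a DynamoDB or Elasticsearch schema, only a plain dictionary that could be written to either.

```python
import json
import statistics


def build_feature_metadata(feature_name, values, file_format, max_seq_length, s3_uri):
    """Build a JSON-serializable metadata record for one stored feature."""
    return {
        "feature_name": feature_name,
        "file_format": file_format,        # e.g. "csv" or "parquet"
        "max_seq_length": max_seq_length,  # BERT-specific setting
        "s3_uri": s3_uri,                  # where the feature data itself lives
        "min": min(values),
        "max": max(values),
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),   # sample standard deviation
    }


metadata = build_feature_metadata(
    feature_name="review_body_length",                    # hypothetical feature
    values=[0.5, 1.5, 2.5, 3.5],                          # made-up values
    file_format="parquet",
    max_seq_length=128,                                   # assumed value
    s3_uri="s3://example-bucket/features/review_body/",   # placeholder path
)

print(json.dumps(metadata, indent=2))
```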

*****

Our training scripts almost always include pip installing Python libraries from PyPI or downloading pre-trained models from third-party model repositories (or “model zoos”) on the internet. By creating dependencies on external resources, our training job is now at the mercy of these third-party services. If one of these services is temporarily down, our training job may not start.

To improve availability, it is recommended that we remove as many external dependencies as possible by copying these resources into our Docker images - or into our own S3 bucket. This has the added benefit of reducing network utilization and starting our training jobs faster.

*****

Bring Your Own Container

The most customizable option is “bring your own container” (BYOC). This option lets you build and deploy your own Docker container to SageMaker. This Docker container can contain any library or framework. While we maintain complete control over the details of the training script and its dependencies, SageMaker manages the low-level infrastructure for logging, monitoring, environment variables, S3 locations, etc. This option is targeted at more specialized or systems-focused machine learning folks.

*****

GloVe goes one step further and uses recurrent neural networks (RNNs) to encode the global co-occurrence of words vs. Word2Vec’s local co-occurrence of words. An RNN is a special type of neural network that learns and remembers longer-form inputs such as text sequences and time-series data.

FastText continues the innovation and builds word embeddings using combinations of lower-level character embeddings using character-level RNNs. This character-level focus allows FastText to learn non-English language models with relatively small amounts of data compared to other models. Amazon SageMaker offers a built-in, pay-as-you-go SageMaker algorithm called BlazingText which is an implementation of FastText optimized for AWS. This algorithm was shown in the Built-In Algorithms section above.

*****

ELMo preserves the trained model and uses two separate Long Short-Term Memory (LSTM) networks: one to learn from left-to-right and one to learn from right-to-left. Neither LSTM uses both the previous and next words at the same time, however. Therefore, ELMo does not learn a true bidirectional contextual representation of the words and phrases in the corpus, but it performs very well nonetheless.

*****

Without this bi-directional attention, an algorithm would potentially create the same embedding for the word “bank” in the following two sentences: “A thief stole money from the bank vault” and “Later, he was arrested while fishing on a river bank.” Note that the word “bank” has a different meaning in each sentence. This is easy for humans to distinguish because of our life-long, natural “pre-training”, but this is not easy for a machine without similar pre-training.

*****

To be more concrete, BERT is trained by forcing it to predict masked words in a sentence. For example, if we feed in the contents of this book, we can ask BERT to predict the missing word in the following sentence: “This book is called Data ____ on AWS.” Obviously, the missing word is “Science.” This is easy for a human who has been pre-trained on millions of documents since birth, but not easy fo

*****

Neural networks are designed to be re-used and continuously trained as new data arrives into the system. Since BERT has already been pre-trained on millions of public documents from Wikipedia and the Google Books Corpus, the vocabulary and learned representations are transferable to a large number of NLP and NLU tasks across a wide variety of domains.

While training BERT from scratch requires a lot of data and compute, it allows BERT to learn a representation of the custom dataset using a highly-specialized vocabulary. Companies like LinkedIn have pre-trained BERT from scratch to learn language representations specific to their domain including job titles, resumes, companies, and business news. The default pre-trained BERT models were not good enough for their NLP/NLU tasks. Fortunately, LinkedIn has plenty of data and compute

*****

The choice of instance type and instance count depends on your workload and budget. Fortunately, AWS offers many different instance types including AI/ML-optimized instances with terabytes of RAM and gigabits of network bandwidth. In the cloud, we can easily scale our training job to tens, hundreds, or even thousands of instances with just one line of code.

Let’s select 3 instances of the powerful p3.2xlarge - each with 8 vCPUs, 61GB of CPU RAM, 1 NVIDIA Volta V100 GPU, and 16GB of GPU RAM. Empirically, we found this combination to perform well with our specific training script and dataset - and within our budget for this task.

instance_type='ml.p3.2xlarge'

instance_count=3

*****

TIP: You can specify instance_type='local' to run the script either inside your notebook or on your local laptop. In both cases, your script will execute inside of the same open source SageMaker Docker container that runs in the managed SageMaker service. This lets you test locally before incurring any cloud cost.

*****

Also, it’s important to choose parallelizable algorithms that benefit from multiple cluster instances. If your algorithm is not parallelizable, you should not add more instances as they will not be used. And adding too many instances may actually slow down your training job by creating too much communication overhead between the instances. Most neural network-based algorithms like BERT are parallelizable and benefit from a distributed cluster.
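The trade-off between parallel speed-up and communication overhead can be illustrated with a toy cost model. This is only a sketch under strong assumptions: the compute is taken to divide perfectly across instances, each additional instance is assumed to add a fixed communication cost, and the numbers (3,600 seconds of compute, 60 seconds of overhead per peer) are invented rather than measured.

```python
def estimated_training_time(compute_seconds, instance_count, overhead_per_peer_seconds):
    """Toy model: evenly divided compute plus overhead that grows with cluster size."""
    compute = compute_seconds / instance_count
    communication = overhead_per_peer_seconds * (instance_count - 1)
    return compute + communication


# Hypothetical workload: 1 hour of single-instance compute, 60 s overhead per peer.
times = {
    count: estimated_training_time(3600, count, 60)
    for count in (1, 2, 4, 8, 16, 32)
}
best = min(times, key=times.get)

for count, seconds in times.items():
    print(f"{count:>2} instances: {seconds:7.1f} s")
print("fastest cluster size in this toy model:", best)
```

Under these made-up numbers the estimated time falls until 8 instances and then rises again, which mirrors the point above: past some cluster size, coordination costs outweigh the extra compute. Real training jobs need to be measured rather than modeled this simply.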
