#DevOps Community

Explore tagged Tumblr posts

Text

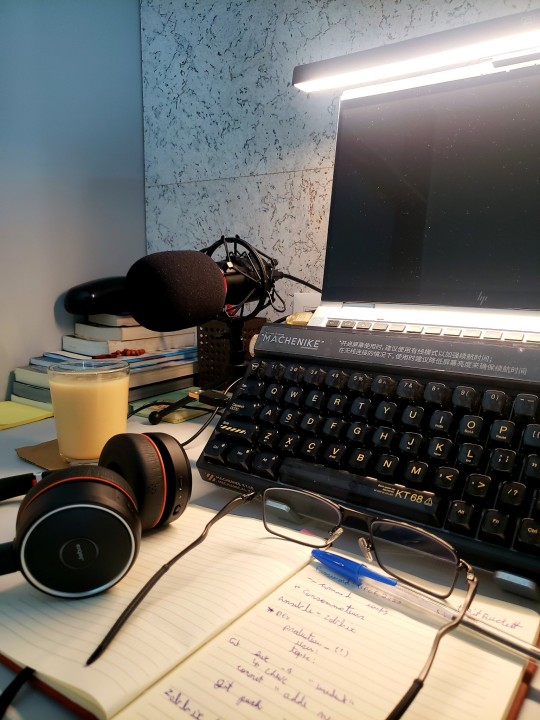

I found myself surrounded by companions daring to tackle a real software architecture challenge. We shared a good wine and cheese to conquer it together! (Unfortunately, there’s always something waiting to be dealt with on Monday.)

The glow of my Neovim terminal in Monokai theme reflects my rhythm — a guy who doesn’t stop on weekends but knows that balance isn’t about being all-in, all day.

The setup screams character: a seamless fusion of productivity and comfort. Lazygit commands at my fingertips, a Ghibli-esque avatar paired with Neofetch adding a touch of Tumblr aesthetic, and a playlist of Korean indie OSTs playing in the background to add depth to every keystroke.

This is how I drive — in code, creativity, and moments that are unapologetically mine.

#study blog#study aesthetic#studyblr#programmer#software development#student#studyblr community#studyblr europe#studyblr aesthetic#dark academia#dark aesthetic#night sky#student life#student university#developer#coding#programming#software#softwareengineering#software engineer#software engineering#software developers#devops#studyblr brazil#studies#study inspiration#study spot#self improvement#aesthetic

63 notes


Text

📅 Daily I/O Log – 2025.05.08

🧠 Morning Mindset

Book: Think & Grow Rich

Takeaway:

- Lack of persistence is one of the major causes of failure.
- Lack of persistence is a weakness common to the majority of men, a weakness which may be overcome by effort.
- The starting point of all achievement is desire. Keep this constantly in mind.
- If you find yourself lacking in persistence, this weakness may be remedied by building a stronger fire under your desires.

How to Develop Persistence

a) A definite purpose backed by burning desire for its fulfillment.

b) A definite plan, expressed in continuous action.

c) A mind closed tightly against all negative and discouraging influences, including negative suggestions of relatives, friends, and acquaintances.

d) A friendly alliance with one or more persons who will encourage one to follow through with both plan and purpose.

💪🏽 Fitness Focus

Yoga -> Yin Yoga for Lung Cleanser ( L1 | 33m )

💻 Code Challenges

Platform: LeetCode

Challenge: 2626. Array Reduce Transformation, 2629. Function Composition

🎓 Course Progress

➤ Personal Branding: Stand Out and Succeed • Focus: Note Taking • Module 2: People as Brands

➤ Full-Stack JavaScript Developer • Focus: Note Taking • Module 2: Introduction to Development (Introduction to Software Development)

#daily log#productivity#self improvement#personal growth#studyblr#mindset#devlog#coding#codewars#programming#devops#online learning#course progress#edtech#career development#morning routine#goal setting#discipline#high performance habits#tumblr study community#trailynne#trai lynne

2 notes


Text

EverGreen Technologies #1

Learn cloud… stay sound… software is everything

View On WordPress

#ai#cloud#cloud computing#communication#devops#evergreen#machine learning#skills#technology#vaignanik

0 notes

Text

Guy Piekarz, CEO of Panjaya – Interview Series

New Post has been published on https://thedigitalinsider.com/guy-piekarz-ceo-of-panjaya-interview-series/


Guy Piekarz is the CEO of Panjaya, bringing extensive experience in building and scaling innovative technology services. A seasoned entrepreneur and operator, he has a proven track record of driving zero-to-one initiatives and developing scalable solutions at both startups and Apple. With a deep understanding of AI and video technology, Piekarz leads Panjaya in its mission to revolutionize multilingual video content through seamless AI-powered dubbing.

Panjaya is an AI-driven company specializing in video translation and dubbing at scale. Since its founding in 2022, the company has expanded with an executive team bringing expertise from Apple, Meta, and Vimeo.

Panjaya’s technology enables seamless dubbing, translation, and lip-syncing of videos into multiple languages while preserving the natural look and feel of the original content. With a focus on breaking language barriers in visual storytelling, the company aims to help creators and educators connect with global audiences effortlessly.

What lessons did you take from your experience building and selling Matcha to Apple that you’re applying to Panjaya?

Building and selling Matcha to Apple gave me a deep understanding of the importance of user-focused product development, strategic partnerships, and agility in a dynamic market. During my time at Apple, I saw firsthand the challenges of scaling content for global audiences, especially in localization and video translation. The inefficiencies of traditional dubbing inspired me to find a more innovative solution, which led me to Panjaya. Today, we’re applying those lessons by developing intuitive, AI-driven localization tools, collaborating with leaders like TED and JFrog, and staying adaptable to meet the evolving needs of a global audience – principles that drive everything we do as we work to redefine video localization.

What are the most significant inefficiencies in traditional dubbing and subtitling, and how is Panjaya addressing them?

Outdated solutions like closed captioning or traditional dubbing are often distracting and disrupt the viewer’s experience, because they fail to synchronize the nuances of communication, such as body language, facial expressions, and gestures, with the translated audio. Recognizing the need for an approach that goes beyond mere audio translation, Panjaya set out to offer localized video content that resonates naturally and authentically with audiences around the world.

Unlike other platforms that focus solely on audio or lip synchronization, Panjaya synchronizes audio with the full spectrum of body language – pauses, emphasis, gestures, and other body movements. This ensures a more natural, emotionally resonant connection with audiences, making Panjaya’s solutions unique in their ability to retain the integrity of the original content.

With AI dubbing, lip-syncing, and voice cloning, how do you ensure authenticity and cultural resonance across diverse audiences?

Panjaya is focused on deep-real technology, meaning we aim to represent speakers accurately and authentically. We work exclusively with trusted partners and businesses, ensuring that our technology is used ethically to enhance communication rather than mislead or misrepresent.

Panjaya is working with industry leaders like TED and JFrog. Can you share a success story that highlights the platform’s impact?

TED, a global platform for sharing ideas, has seen extraordinary results using Panjaya to adapt their talks for international audiences, including:

115% increase in views of dubbed talks

2x increase in video completion rates

69% increase in non-English browser views

30% increase in social sharing

Over 10M views and 400K interactions on highlight reels

JFrog, a DevOps and software distribution leader, hosts SwampUP, an annual conference showcasing industry advancements and thought leadership. Recognizing the importance of engaging their global audience, JFrog had already localized written content—but with video becoming the dominant medium, expanding accessibility was the next logical step. By leveraging Panjaya, JFrog seamlessly provided multilingual conference talks, ensuring their French and German-speaking users could fully engage with key product announcements and insights, driving deeper connection and broader reach.

What challenges do you foresee as AI dubbing technology becomes more widely adopted, and how is Panjaya preparing to address them?

One challenge is ensuring the output accounts for cultural context and nuance. That’s why we enable a human-in-the-loop approach—keeping it fast and seamless, with AI handling the heavy lifting while humans provide quick, intuitive refinements to ensure the final product feels natural and true to the speaker’s intent, while accounting for cultural differences.

Scalability is also something we think about constantly. As more industries adopt AI-driven solutions, we’ve invested heavily in R&D to make sure Panjaya can handle the complexities of real-world scenarios, from multi-speaker events to varying camera angles. For us, it’s all about staying ahead of these challenges while ensuring the technology is impactful, ethical, and accessible for everyone.

How do you see AI reshaping the future of video content creation and global accessibility?

Traditional barriers to global communication—language, time, and resources—are rapidly disappearing. AI-powered localization ensures higher accuracy and quality at scale, making it faster and more cost-effective for organizations to engage global audiences. With Panjaya’s platform, localization goes beyond words—capturing facial expressions, gestures, and emotional delivery to preserve the speaker’s true intent. This synchronization makes content feel native in any language, allowing organizations to connect with international audiences as if speaking directly to them.

Beyond accessibility, AI also enables deeper personalization—adapting tone, pacing, and delivery style to resonate with different cultural expectations. Instead of a one-size-fits-all approach, creators can tailor video content to feel more authentic and culturally relevant, driving the next generation of global storytelling.

While real-time AI localization isn’t here yet, it’s on the horizon—bringing us even closer to a world where language is no longer a barrier to connection.

What role does human oversight play in Panjaya’s AI dubbing process to maintain quality and nuance?

Our platform is built with collaboration in mind. Our dubbing studio offers intuitive tools that allow human reviewers to fine-tune speech rhythm, tone, and pacing, ensuring every detail aligns with the original speaker’s intent. Our human-in-the-loop approach ensures that the final output doesn’t just translate words but captures the heart of the message, making the content feel as impactful in the target language as it does in the original.

How does Panjaya ensure ethical use of its technology, particularly in preventing misuse like misinformation or impersonation?

It’s an issue we discuss and think about a lot. We are deeply committed to the ethical use of our technology. Our mission is to empower storytellers to seamlessly adapt video content for global audiences, but never at the expense of integrity or trust.

To prevent misuse, we take a number of steps. First, we only work with organizations that provide contractual guarantees regarding the content they translate. Second, we take a firm stance against misuse—our platform must never be used to impersonate individuals, spread misinformation, or cause harm. To uphold this, we are actively developing both policy and technological safeguards, including content detection and blocking measures, as well as tools to identify content generated through our platform. We are continuously refining our approach to ensure AI-driven localization remains a force for responsible, authentic communication.

What does success look like for Panjaya in the next five years, and how do you plan to achieve it?

Success for Panjaya means becoming the go-to video localization solution for any company with a global audience—or one looking to expand globally. Our focus is twofold: authenticity and scalability. On the authenticity front, we’re advancing AI to capture emotional tone, pacing, and cultural nuance so that every localized video feels as natural and impactful as the original.

For scalability, we’re tackling increasingly complex use cases—handling more speakers, diverse backgrounds, and dynamic video formats. At the same time, we’re prioritizing seamless integration into existing video creation workflows, ensuring effortless adoption through partnerships with leading video management and localization platforms. By pushing the boundaries of AI-driven localization, we aim to redefine how businesses communicate across languages—making global storytelling truly frictionless. Our work with TED is a great example of how these workflow integrations are being used.

The global video market is rapidly evolving. What trends do you see shaping its future, and how is Panjaya positioned to capitalize on them?

The global video market is being shaped by two major trends: the rapid growth of video as a primary communication tool across all levels—from individual creators to large enterprises—and the increasing need for businesses to expand globally to drive growth. As video consumption surges, companies must ensure their content resonates across languages and cultures. AI-powered localization is becoming essential—not just for translation, but for preserving authenticity, tone, and emotional impact at scale.

Panjaya is strategically positioned to capitalize on these trends by seamlessly integrating into existing video creation workflows, making global expansion effortless for businesses. Our technology is built for scale, allowing companies to localize content efficiently without compromising quality. Most importantly, we ensure that brands maintain authenticity—preserving their voice, tone, and message across languages and cultures to create video experiences that resonate worldwide.

As the market continues to demand more advanced localization and personalization capabilities, we’re not just following these trends—we’re driving them, enabling organizations to connect meaningfully with audiences worldwide.

Thank you for the great interview; readers who wish to learn more should visit Panjaya.

#2022#Accessibility#accounting#Accounts#adoption#ai#AI-powered#amp#Announcements#apple#approach#audio#barrier#brands#browser#Building#Capture#CEO#challenge#Collaboration#communication#Companies#conference#content#content creation#creators#detection#development#Devops#driving

0 notes

Text

Code Generation Revolution: New Era in App Development

Explore how the code generation revolution is transforming app development, empowering developers with speed and innovation in this new era.

App development is changing dramatically. Code generation tools are becoming increasingly popular, heralding a new era as companies and developers look for more effective ways to produce high-quality applications. This blog explores the idea of code generation, including its advantages, difficulties, and revolutionary…

View On WordPress

#AIinTech#App Development#Automation#Code Generation#Dev Community#DevOps#Low Code#No Code#Software Engineering#Tech Revolution

0 notes

Text

How To Become a Successful Freelance Developer & Other Tech

In this article, we are going through a detailed roadmap for tech professionals looking to transition into freelancing. We cover the essential steps to launch and maintain a successful freelance career in the technology sector. From identifying your niche and building a compelling portfolio to developing pricing strategies, acquiring clients, and managing your freelance business, this guide…

#freelance Amazon seller consultant#freelance Android developer#freelance animator#freelance app developer#freelance blog writer#freelance branding consultant#freelance business analyst#freelance business consultant#freelance CAD designer#freelance cloud engineer#freelance community manager#freelance content writer#freelance copywriter#freelance CRM consultant#freelance customer service#freelance cybersecurity consultant#freelance data analyst#freelance data entry specialist#freelance DevOps specialist#freelance digital marketing#freelance dropshipping consultant#freelance eBay seller consultant#freelance ecommerce developer#freelance education consultant#freelance email copywriter#freelance email marketing#freelance environmental consultant#freelance ERP consultant#freelance Etsy seller consultant#freelance event planner

0 notes

Text

6 Proven Tips for Effective DevOps Collaboration and Communication

Unlock seamless collaboration and communication in DevOps with these 6 proven tips. Learn to foster synergy across teams, streamline workflows, and enhance productivity. Embrace transparency, automate repetitive tasks, and prioritize feedback loops. Cultivate a culture of trust and accountability while leveraging cutting-edge tools and methodologies. Elevate your DevOps game today!

Also read collaboration and communication in DevOps

0 notes

Text

How to Install Azure DevOps Server 2022

View On WordPress

#Azure#Azure CI/CD#Azure DevOps#Azure DevOps Server 2022#Community Edition#Database#Porject Collection#Project#SQL Database#Team Project Collection#Windows#Windows Server

0 notes

Text

I'm speaking at the Data.TLV Summit 2023 in Israel

📢I'm #Speaking at the #DataTLV #Summit - the biggest #Data #Community event in #Israel ! Don't miss out! #Event #MadeiraData #SQLServer #DevOps #SSDT #RishonLezion

The Data TLV Summit in Israel is the single most biggest, largest, most amazingest annual summit in Israel for data enthusiasts, made by the community, for the community. The summit is taking place on November 2, 2023, and I will be delivering my most popular session there: Development Lifecycle Basics for DBAs!

View On WordPress

0 notes

Text

Normal night in the midnight.

#coding#programmer#programming#software development#study aesthetic#student#study blog#studyblr#developer#software#study motivation#studyspo#studying#study art#studyaesthetic#study study study#self improvement#studyblr community#studyblr aesthetic#study life#student aesthetic#dark aesthetic#dark academia#stublr br#studentlife#student life#web development#devops#infrastructure#softwarengineer

18 notes


Text

📅 Daily I/O Log – 2025.05.07

🧠 Morning Mindset

Book: Atomic Habits

Takeaway:

- The 3rd Law of Behavior Change is: make it easy.
- The most effective form of learning is practice, not planning.
- Focus on taking action, not being in motion.
- Habit formation is the process by which a behavior becomes progressively more automatic through repetition.
- The amount of time you have been performing a habit is not as important as the number of times you have performed it.

💪🏽 Fitness Focus

Cardio Step Routine

Yoga

💻 Code Challenges

Platform: HackerRank

Challenge: 3 Month Preparation Kit - Week 1 - Camel Case 4

🎓 Course Progress

➤ Personal Branding: Stand Out and Succeed • Focus: Study • Module 2: People as Brands

➤ Full-Stack JavaScript Developer • Focus: Study • Module 2: Introduction to Development (Introduction to Software Development)

#daily log#productivity#self improvement#personal growth#studyblr#mindset#devlog#coding#codewars#programming#devops#online learning#course progress#edtech#career development#morning routine#goal setting#discipline#high performance habits#tumblr study community#trailynne#trai lynne

0 notes

Photo

Deploy a Laravel project with Docker Swarm

This guide covers three major steps:

- Set up a Laravel project with Docker Compose
- Deploy the stack to the swarm
- Create gitlab-ci

Set up a Laravel project with Docker Compose

We will explore the process of deploying a Laravel project using Docker Swarm and setting up a CI/CD pipeline to automate the deployment process. Let’s start by containerizing a Laravel project with Docker Compose. We need three separate service containers:

- An app service running PHP 7.4-FPM
- A db service running MySQL 8.0
- An nginx service that uses the app service to parse PHP code

Step 1. Set the environment variables in the project

The root directory of the project contains a .env file. Update these variables:

    DB_CONNECTION=mysql
    DB_HOST=db
    DB_PORT=3306
    DB_DATABASE=experience
    DB_USERNAME=experience_user
    DB_PASSWORD=your-password

Step 2. Set up the application’s Dockerfile

We need to build a custom image for the application container, so we create a new Dockerfile for it:

    FROM php:7.4-fpm

    # Install system dependencies
    RUN apt-get update && apt-get install -y \
        git \
        curl \
        libpng-dev \
        libonig-dev \
        libxml2-dev \
        zip \
        unzip

    # Clear cache
    RUN apt-get clean && rm -rf /var/lib/apt/lists/*

    # Install PHP extensions
    RUN docker-php-ext-install pdo_mysql mbstring exif pcntl bcmath gd

    # Get latest Composer
    COPY --from=composer:latest /usr/bin/composer /usr/bin/composer

    # Set working directory
    WORKDIR /var/www

Step 3. Set up the Nginx config and the database dump file

In the root directory, create a new directory called docker-compose. Inside it we need two more directories, an nginx directory and a mysql directory, so we have these two paths in our project:

    laravel-project/docker-compose/nginx/
    laravel-project/docker-compose/mysql/

In the nginx directory, create a file called experience.conf and write the Nginx config in it:

    server {
        listen 80;
        index index.php index.html;
        error_log /var/log/nginx/error.log;
        access_log /var/log/nginx/access.log;
        root /var/www/public;
        location ~ \.php$ {
            try_files $uri =404;
            fastcgi_split_path_info ^(.+\.php)(/.+)$;
            fastcgi_pass app:9000;
            fastcgi_index index.php;
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_param PATH_INFO $fastcgi_path_info;
        }
        location / {
            try_files $uri $uri/ /index.php?$query_string;
            gzip_static on;
        }
    }

In the mysql directory, create a file called init_db.sql (the MySQL image only runs .sh, .sql, and compressed .sql files from its init directory) and write the MySQL initialization in it:

    DROP TABLE IF EXISTS `places`;

    CREATE TABLE `places` (
      `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT,
      `name` varchar(255) COLLATE utf8mb4_unicode_ci NOT NULL,
      `visited` tinyint(1) NOT NULL DEFAULT '0',
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB AUTO_INCREMENT=12 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_unicode_ci;

    INSERT INTO `places` (name, visited) VALUES ('Berlin',0),('Budapest',0),('Cincinnati',1),('Denver',0),('Helsinki',0),('Lisbon',0),('Moscow',1);

Step 4. Create the multi-container environment with Docker Compose

We need to build three containers that share networks and data volumes, so create a docker-compose file in the root directory of the project.

To create a network that connects the services, we define it in the docker-compose file like this:

    networks:
      experience:
        driver: bridge

App service:

    app:
      build:
        context: ./
        dockerfile: Dockerfile
      image: travellist
      container_name: experience-app
      restart: unless-stopped
      working_dir: /var/www/
      volumes:
        - ./:/var/www
      networks:
        - experience

DB service:

    db:
      image: mysql:8.0
      container_name: experience-db
      restart: unless-stopped
      environment:
        MYSQL_DATABASE: ${DB_DATABASE}
        MYSQL_ROOT_PASSWORD: ${DB_PASSWORD}
        MYSQL_PASSWORD: ${DB_PASSWORD}
        MYSQL_USER: ${DB_USERNAME}
        SERVICE_TAGS: dev
        SERVICE_NAME: mysql
      volumes:
        - ./docker-compose/mysql:/docker-entrypoint-initdb.d
      networks:
        - experience

Nginx service:

    nginx:
      image: nginx:alpine
      container_name: experience-nginx
      restart: unless-stopped
      ports:
        - 8100:80
      volumes:
        - ./:/var/www
        - ./docker-compose/nginx:/etc/nginx/conf.d/
      networks:
        - experience

So our complete docker-compose file looks like this:

    version: "3.7"
    services:
      app:
        build:
          context: ./
          dockerfile: Dockerfile
        image: travellist
        container_name: experience-app
        restart: unless-stopped
        working_dir: /var/www/
        volumes:
          - ./:/var/www
        networks:
          - experience

      db:
        image: mysql:8.0
        container_name: experience-db
        restart: unless-stopped
        environment:
          MYSQL_DATABASE: ${DB_DATABASE}
          MYSQL_ROOT_PASSWORD: ${DB_PASSWORD}
          MYSQL_PASSWORD: ${DB_PASSWORD}
          MYSQL_USER: ${DB_USERNAME}
          SERVICE_TAGS: dev
          SERVICE_NAME: mysql
        volumes:
          - ./docker-compose/mysql:/docker-entrypoint-initdb.d
        networks:
          - experience

      nginx:
        image: nginx:alpine
        container_name: experience-nginx
        restart: unless-stopped
        ports:
          - 8100:80
        volumes:
          - ./:/var/www
          - ./docker-compose/nginx:/etc/nginx/conf.d/
        networks:
          - experience

    networks:
      experience:
        driver: bridge

Step 5.
Running the application with Docker Compose

Now we can build the app image with this command:

    $ docker-compose build app

When the build is finished, we can run the environment in background mode with:

    $ docker-compose up -d

Output:

    Creating experience-db    ... done
    Creating experience-app   ... done
    Creating experience-nginx ... done

To show information about the state of your active services, run:

    $ docker-compose ps

In these 5 simple steps, we have successfully run our application. We now have a docker-compose file for our application, which we need in order to use Docker Swarm. Let’s start.

Initialize Docker Swarm

After installing Docker on your server (attention: to install Docker, be sure to follow the official documentation: install docker), check the Docker information with this command:

    $ docker info

You should see “Swarm: inactive” in the output. To activate swarm mode, use this command:

    $ docker swarm init

The Docker engine targeted by this command becomes a manager in the newly created single-node swarm.

What we want to use are the services of this swarm. We want to update a service, such as app, through Docker Swarm. The advantage is that there is no need to take the app service down, update it, and then bring it back up. With one command, we give Docker the new image for the service and tell it to update. Docker can then bring up the new task without first taking down the old one and gradually move the load from the old task to the new one (this requires the start-first update order; Swarm's default is stop-first, which stops the old task before starting the new one).

When running Docker Engine in swarm mode, we can use docker stack deploy to deploy a complete application stack to the swarm. The deploy command accepts a stack description in the form of a Compose file. So we bring down our Docker Compose environment with this command:

    $ docker-compose down

And create our stack.

If everything is OK so far, take a rest.

Deploy the stack to the swarm

    $ docker stack deploy --compose-file docker-compose.yml <stack-name>

For example:

    $ docker stack deploy --compose-file docker-compose.yml staging

You will probably see this in the output:

    Creating network staging_experience
    Creating service staging_nginx
    failed to create service staging_nginx: Error response from daemon: The network staging_experience cannot be used with services. Only networks scoped to the swarm can be used, such as those created with the overlay driver.

This is because of “driver: bridge”. To deploy a service in swarm mode, the network must use the overlay driver. If you remove this line from your docker-compose file, the network is created with the overlay driver automatically when the stack is deployed. So the networks section of our docker-compose file becomes:

    networks:
      experience:

Run the command above again:

    $ docker stack deploy --compose-file docker-compose.yml staging

You will probably still see this error:

    failed to create service staging_nginx: Error response from daemon: The network staging_experience cannot be used with services. Only networks scoped to the swarm can be used, such as those created with the overlay driver.

List the networks in Docker:

    $ docker network ls

Output:

    NETWORK ID     NAME                 DRIVER    SCOPE
    30f94ae1c94d   staging_experience   bridge    local

The network still has local scope because the first deployment attempt created it that way, so we must remove it:

    $ docker network rm staging_experience

After that, run the command again:

    $ docker stack deploy --compose-file docker-compose.yml staging

Output:

    Creating network staging_experience
    Creating service staging_app
    Creating service staging_db
    Creating service staging_nginx

Now check the stack:

    $ docker stack ls

Output:

    NAME      SERVICES
    staging   3

And get the service list:

    $ docker service ls

In the output, if REPLICAS shows 0/1, something is wrong with your service. To check the status of a service, run, for example:

    $ docker service ps staging_app

And to check the details of a service, run, for example:

    $ docker service logs staging_app

The output of this command shows you what the problem with your service is.

To update a service with a new image, the command you need is:

    $ docker service update --image "<your-image>" "<name-of-your-service>" --force

That's it, your Docker Swarm is ready for zero-downtime deployment :)))

The last step for a complete zero-downtime deployment process is to create a pipeline in GitLab.

Create gitlab-ci

In this step we create a pipeline in GitLab to build, test, and deploy the project, so we have three stages:

    stages:
      - Build
      - Test
      - Deploy

Let’s be clear about what we need and what happens in this step. We want to update the Laravel project and push our change to GitLab, build a new image from the change and test it, and after that log in to the host server, pull the updated image there, and update the project's service.
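The last step of that flow, updating the service on the server, might look like the sketch below. It assumes the start-first update order is wanted, so that Swarm starts the new task before stopping the old one. The helper name `rolling_update_cmd` and the example image reference are hypothetical, and the function only prints the command so it can be reviewed before it is run.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): print the service update
# command instead of running it, so it can be reviewed first.
rolling_update_cmd() {
  image="$1"    # full image reference, e.g. the $IMAGE_TAG built by CI
  service="$2"  # swarm service name, e.g. staging_app
  # --update-order start-first asks Swarm to start the new task before
  # stopping the old one.
  printf 'docker service update --update-order start-first --image %s %s\n' \
    "$image" "$service"
}

# Example values are assumptions for illustration only.
rolling_update_cmd "registry.gitlab.com/group/project:develop-abc1234" "staging_app"
```

Piping the printed line to `sh` on a swarm manager would run it; with a single replica, the node needs enough spare capacity to hold both tasks for a moment.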
To log in to the server, we need to define some variables in GitLab. In your repository, go to Settings -> CI/CD -> Variables, click Add variable, and add these variables:

CI_REGISTRY:

    https://registry.gitlab.com

DOCKER_AUTH_CONFIG:

    {
      "auths": {
        "registry.gitlab.com": {
          "auth": "<auth-key>"
        }
      }
    }

auth-key is the base64 encoding of “gitlab-username:gitlab-password”.

SSH_KNOWN_HOSTS, which looks like:

    192.168.1.1 ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCGUCqCK3hNl+4TIbh3+Af3np+v91AyW4+BxXRtHBC2Y/uPJXF2jdR6IHlSS/0RFR3hOY+8+5a/r8O1O9qTPgxG8BSIm9omb8YxF2c4Sz/USPDK3ld2oQxbBg5qdhRN28EvRbtN66W3vgYIRlYlpNyJA+b3HQ/uJ+t3UxP1VjAsKbrBRFBth845RskSr1V7IirMiOh7oKGdEfXwlOENxOI7cDytxVR7h3/bVdJdxmjFqagrJqBuYm30

You can see how to generate an SSH key in this post: generate sshkey

SSH_PRIVATE_KEY:

SSH_REMOTE_HOST: root@

These are your variables in GitLab.

Now back to gitlab-ci. In the root directory of the project, create a new file named .gitlab-ci.yml, then set up the build stage, the test stage, and finally the deploy stage, like this:

    stages:
      - Build
      - Test
      - Deploy

    variables:
      IMAGE_TAG: $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_SLUG-$CI_COMMIT_SHORT_SHA

    build:
      stage: Build
      image: docker:20.10.16
      services:
        - docker:dind
      script:
        - docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
        - docker build --pull -f Dockerfile -t $IMAGE_TAG .
        - docker push $IMAGE_TAG

    preparation:
      stage: Test
      image: $IMAGE_TAG
      needs:
        - build
      script:
        - composer install
      artifacts:
        expire_in: 1 day
        paths:
          - ./vendor
      cache:
        key: ${CI_COMMIT_REF_SLUG}-composer
        paths:
          - ./vendor

    unit-test:
      stage: Test
      image: $IMAGE_TAG
      services:
        - name: mysql:8
          alias: mysql-test
      needs:
        - preparation
      variables:
        APP_KEY: ${APP_KEY}
        MYSQL_ROOT_PASSWORD: ${MYSQL_ROOT_PASSWORD}
        MYSQL_DATABASE: ${MYSQL_DATABASE}
        DB_HOST: ${DB_HOST}
        DB_USERNAME: ${DB_USERNAME}
        DB_PASSWORD: ${DB_PASSWORD}
      script:
        - php vendor/bin/phpunit

    staging-deploy:
      stage: Deploy
      extends:
        - .deploy-script
      variables:
        APP: "stackdemo_app"
        STACK: "travellist-staging"
      only:
        - develop
      needs:
        - unit-test
      environment:
        name: stage

    .remote-docker:
      variables:
        DOCKER_HOST: ssh://${SSH_REMOTE_HOST}
      image: docker:20.10.16
      before_script:
        - eval $(ssh-agent -s)
        - echo $IMAGE_TAG
        - echo "$SSH_PRIVATE_KEY" | tr -d '\r' | ssh-add -
        - mkdir -p ~/.ssh
        - chmod 700 ~/.ssh
        - echo "HOST *" > ~/.ssh/config
        - echo "StrictHostKeyChecking no" >> ~/.ssh/config
        - echo -n $CI_REGISTRY_PASSWORD | docker login -u $CI_REGISTRY_USER --password-stdin $CI_REGISTRY

    .deploy-script:
      extends:
        - .remote-docker
      script:
        - cp $develop_config /root/project/core
        - docker pull $IMAGE_TAG
        - docker service update --image "$IMAGE_TAG" "$APP" --force
      dependencies: []

Change something in your project, push it to GitLab, and wait to see all the pipeline stages pass. And this is beautiful.

https://dev.to/holyfalcon/deploy-laravel-project-with-docker-swarm-5oi
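The DOCKER_AUTH_CONFIG value described in this guide can be produced with a short shell sketch. It assumes a POSIX shell with the `base64` utility available. The helper names `make_auth_key` and `make_docker_auth_config` are hypothetical, and the credentials shown are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: base64-encode "username:password" for the "auth" field.
make_auth_key() {
  # printf avoids the trailing newline that echo would add to the input;
  # tr strips any line wrapping that base64 adds to long output.
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Hypothetical helper: wrap the key in the JSON shape used for DOCKER_AUTH_CONFIG.
make_docker_auth_config() {
  printf '{"auths":{"registry.gitlab.com":{"auth":"%s"}}}\n' \
    "$(make_auth_key "$1" "$2")"
}

# Placeholder credentials; substitute real ones locally and never commit them.
make_docker_auth_config "gitlab-username" "gitlab-password"
```

Base64 is an encoding, not encryption, so the resulting value should be stored as a masked CI/CD variable.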

0 notes

Text

Exciting Mock interview with DevOps/AWS engineer #devops #cloud #aws #devopsengineer #cloudengineer

Interviewer: Welcome to this exciting mock interview for the role of a DevOps/AWS Engineer! Today, we have an enthusiastic candidate eager to showcase their skills. Let’s begin! Candidate: Thank you! I’m thrilled to be here. Interviewer: Great to have you. Let’s start with a classic question: What attracted you to the field of DevOps and working with AWS? Candidate: DevOps combines my passion…


#Automation#AWS#CI/CD#cloud engineering#cloud technology#collaboration#communication#continuous integration#cost optimization#cross-functional teams#DevOps#disaster recovery#high availability#IaC#incident management#infrastructure as code#mock interview#performance optimization#scalability#security#tech trends

0 notes

Text

The role of MLSecOps in the future of AI and ML

New Post has been published on https://thedigitalinsider.com/the-role-of-mlsecops-in-the-future-of-ai-and-ml/


Having just spent some time reviewing and learning more about MLSecOps (a fantastic course on LinkedIn by Diana Kelley), I wanted to share my thoughts. In the rapidly evolving landscape of technology, the integration of Machine Learning (ML) and Artificial Intelligence (AI) has revolutionized numerous industries.

However, this transformative power also comes with significant security challenges that organizations must address. Enter MLSecOps, a holistic approach that combines the principles of Machine Learning, Security, and DevOps to ensure the seamless and secure deployment of AI-powered systems.

The state of MLSecOps today

As organizations continue to harness the power of ML and AI, many are still playing catch-up when it comes to implementing robust security measures. A recent survey found that only 34% of organizations have a well-defined MLSecOps strategy in place. This gap highlights the pressing need for a more proactive and comprehensive approach to securing AI-driven systems.

Key challenges in existing MLSecOps implementations

1. Lack of visibility and transparency: Many organizations struggle to gain visibility into the inner workings of their ML models, making it difficult to identify and address potential security vulnerabilities.

2. Insufficient monitoring and alerting: Traditional security monitoring and alerting systems are often ill-equipped to detect and respond to the unique risks posed by AI-powered applications.

3. Inadequate testing and validation: Rigorous testing and validation of ML models are crucial to ensuring their security, yet many organizations fall short in this area.

4. Siloed approaches: The integration of ML, security, and DevOps teams is often a significant challenge, leading to suboptimal collaboration and ineffective implementation of MLSecOps.

5. Compromised ML models: If an organization’s ML models are compromised, the consequences can be severe, including data breaches, biased decision-making, and even physical harm.

6. Securing the supply chain: Ensuring the security and integrity of the supply chain that supports the development and deployment of ML models is a critical, yet often overlooked, aspect of MLSecOps.
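To make challenge 2 a little more concrete, here is a minimal sketch of one ML-specific signal that a conventional security monitor would not track: a sustained shift in the model's prediction confidence. The `ConfidenceMonitor` class, its baseline, its tolerance, and its window size are all hypothetical names and values chosen for illustration; a real deployment would likely combine several such signals.

```python
from collections import deque
from statistics import mean


class ConfidenceMonitor:
    """Flag when the rolling mean of model confidence leaves a baseline band.

    Hypothetical helper for illustration; baseline and tolerance are assumed
    to come from validation data collected before deployment.
    """

    def __init__(self, baseline: float, tolerance: float, window: int = 50):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)

    def observe(self, confidence: float) -> bool:
        """Record one prediction confidence; return True if an alert should fire."""
        self.scores.append(confidence)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough observations to judge yet
        return abs(mean(self.scores) - self.baseline) > self.tolerance


if __name__ == "__main__":
    monitor = ConfidenceMonitor(baseline=0.9, tolerance=0.1, window=5)
    stream = [0.9] * 5 + [0.5] * 5  # healthy traffic, then a suspicious drop
    for step, score in enumerate(stream):
        if monitor.observe(score):
            print(f"alert at step {step}")
```

A drop in confidence does not prove an attack, but it is a cheap trigger for a closer look at recent inputs.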

The imperative for embracing MLSecOps

The importance of MLSecOps cannot be overstated. As AI and ML continue to drive innovation and transformation, the need to secure these technologies has become paramount. Adopting a comprehensive MLSecOps approach offers several key benefits:

1. Enhanced security posture: MLSecOps enables organizations to proactively identify and mitigate security risks inherent in ML-based systems, reducing the likelihood of successful attacks and data breaches.

2. Improved model resilience: By incorporating security testing and validation into the ML model development lifecycle, organizations can ensure the robustness and reliability of their AI-powered applications.

3. Streamlined deployment and maintenance: The integration of DevOps principles in MLSecOps facilitates the continuous monitoring, testing, and deployment of ML models, ensuring they remain secure and up-to-date.

4. Increased regulatory compliance: With growing data privacy and security regulations, a robust MLSecOps strategy can help organizations maintain compliance and avoid costly penalties.

Potential reputational and legal implications

The failure to implement effective MLSecOps can have severe reputational and legal consequences for organizations:

1. Reputational damage: A high-profile security breach or incident involving compromised ML models can severely damage an organization’s reputation, leading to loss of customer trust and market share.

2. Legal and regulatory penalties: Noncompliance with data privacy and security regulations can result in substantial fines and legal liabilities, further compounding the financial impact of security incidents.

3. Liability concerns: If an organization’s AI-powered systems cause harm due to security vulnerabilities, the organization may face legal liabilities and costly lawsuits from affected parties.

Key steps to implementing effective MLSecOps

1. Establish cross-functional collaboration: Foster a culture of collaboration between ML, security, and DevOps teams to ensure a holistic approach to securing AI-powered systems.

2. Implement comprehensive monitoring and alerting: Deploy advanced monitoring and alerting systems that can detect and respond to security threats specific to ML models and AI-driven applications.

3. Integrate security testing into the ML lifecycle: Incorporate security testing, including adversarial attacks and model integrity checks, into the development and deployment of ML models.

4. Leverage automated deployment and remediation: Automate the deployment, testing, and remediation of ML models to ensure they remain secure and up-to-date.

5. Embrace explainable AI: Prioritize the development of interpretable and explainable ML models to enhance visibility and transparency, making it easier to identify and address security vulnerabilities.

6. Stay ahead of emerging threats: Continuously monitor the evolving landscape of AI-related security threats and adapt your MLSecOps strategy accordingly.

7. Implement robust incident response and recovery: Develop and regularly test incident response and recovery plans so the organization can respond quickly and effectively to compromised ML models.

8. Educate and train employees: Provide comprehensive training to all relevant stakeholders, including developers, security personnel, and end-users, to ensure a unified understanding of MLSecOps principles and best practices.

9. Secure the supply chain: Implement robust security measures to ensure the integrity of the supply chain that supports the development and deployment of ML models, including third-party dependencies and data sources.

10. Form violet teams: Establish dedicated “violet teams” (a combination of red and blue teams) to proactively search for and address vulnerabilities in ML-based systems, further strengthening the organization’s security posture.
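The model integrity checks in step 3 and the supply chain controls in step 9 can start very small. The sketch below assumes the SHA-256 digest of a model artifact is recorded at release time and checked again before the model is loaded; the function names and the `model.bin` file name are hypothetical, and only Python's standard library is used.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Compare the file's digest with the pinned value in constant time."""
    return hmac.compare_digest(sha256_of_file(path), expected_sha256.lower())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model_path = Path(tmp) / "model.bin"  # hypothetical artifact name
        model_path.write_bytes(b"pretend these are model weights")
        pinned = sha256_of_file(model_path)  # recorded at release time

        print(verify_artifact(model_path, pinned))  # unchanged file
        model_path.write_bytes(b"tampered weights")
        print(verify_artifact(model_path, pinned))  # modified file
```

A digest only shows that the file has not changed since it was pinned. It says nothing about whether the original training data or dependencies were trustworthy, which is why the supply chain step covers those separately.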

The future of MLSecOps: Towards a proactive and intelligent approach

As the field of MLSecOps continues to evolve, we can expect to see the emergence of more sophisticated and intelligent security solutions. These may include:

1. Autonomous security systems: AI-powered security systems that can autonomously detect, respond to, and remediate security threats in ML-based applications.

2. Federated learning and secure multi-party computation: Techniques that enable secure model training and deployment across distributed environments, enhancing the privacy and security of ML systems.

3. Adversarial machine learning: The development of advanced techniques to harden ML models against adversarial attacks, ensuring their resilience in the face of malicious attempts to compromise their integrity.

4. Continuous security validation: The integration of security validation as a continuous process, with real-time monitoring and feedback loops to ensure the ongoing security of ML models.
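Hardening a model against adversarial attacks starts with being able to generate them. As a rough illustration, the sketch below applies one step of the fast gradient sign method (FGSM), a well-known attack, to a toy logistic regression model. The weights, input, and epsilon are made-up values, and the pure-Python model is an assumption chosen to keep the example dependency-free.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x) -> float:
    """Probability of the positive class under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(value: float) -> int:
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, label, epsilon):
    """One FGSM step: shift each feature by epsilon in the loss-increasing direction.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to feature x_i is (p - label) * w_i.
    """
    p = predict(weights, bias, x)
    return [xi + epsilon * sign((p - label) * w) for w, xi in zip(weights, x)]


if __name__ == "__main__":
    weights, bias = [2.0, -1.0], 0.0  # hypothetical trained parameters
    x, label = [1.0, 0.5], 1          # an input the model classifies correctly

    x_adv = fgsm(weights, bias, x, label, epsilon=0.6)
    print("clean prediction:      ", predict(weights, bias, x))
    print("adversarial prediction:", predict(weights, bias, x_adv))
```

Running adversarial inputs like these through a model as part of a regular test suite is one way to turn the continuous security validation idea above into a repeatable check.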

By embracing the power of MLSecOps, organizations can navigate the complex and rapidly evolving landscape of AI-powered technologies with confidence, ensuring the security and resilience of their most critical systems, while mitigating the potential reputational and legal risks associated with security breaches.


#Adversarial attacks#ai#ai skills#AI-powered#applications#approach#artificial#Artificial Intelligence#autonomous#Blue#breach#challenge#Collaboration#Community#compliance#comprehensive#compromise#computation#continuous#course#data#Data Breaches#data privacy#data privacy and security#deployment#developers#development#Devops#employees#Explainable AI

0 notes

Text

Essentials You Need to Become a Web Developer

HTML, CSS, and JavaScript Mastery

Text Editor/Integrated Development Environment (IDE): Popular choices include Visual Studio Code and Sublime Text.

Version Control/Git: Git tracks changes to your code, and platforms like GitHub, GitLab, and Bitbucket let you collaborate with others and contribute to open-source projects.

Responsive Web Design Skills: Learn a CSS framework like Bootstrap and layout techniques like Flexbox, and master media queries.

Understanding of Web Browsers: Familiarize yourself with browser developer tools for debugging and testing your code.

Front-End Frameworks: React, Angular, and Vue.js are powerful tools for building dynamic and interactive web applications.

Back-End Development Skills: Understand server-side languages and runtimes (e.g., Node.js, Python, Ruby, PHP) and databases (e.g., MySQL, MongoDB).

Web Hosting and Deployment Knowledge: Platforms like Heroku, Vercel, Netlify, or AWS can help simplify this process.

Basic DevOps and CI/CD Understanding

Soft Skills and Problem-Solving: Effective communication, teamwork, and problem-solving skills

Confidence in Yourself: Confidence is a powerful asset. Believe in your abilities, and don't be afraid to take on challenging projects. The more you trust yourself, the more you'll be able to tackle complex coding tasks and overcome obstacles with determination.

#code#codeblr#css#html#javascript#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#html css#learn to code

2K notes


Text

How I’d get freelance clients (starting from 0)

If I were starting out as a freelancer with zero clients and zero experience, here’s what I would do. First, I would specify my offering by listing out my skills. Based on these skills, I would then list the services I could offer. For each service, I would write down a list of deliverables. Let me give you an example: if I had skills in UI/UX design, my services might include responsive web…

#freelance Amazon seller consultant#freelance Android developer#freelance animator#freelance app developer#freelance blog writer#freelance branding consultant#freelance business analyst#freelance business consultant#freelance CAD designer#freelance cloud engineer#freelance community manager#freelance content writer#freelance copywriter#freelance CRM consultant#freelance customer service#freelance cybersecurity consultant#freelance data analyst#freelance data entry specialist#freelance DevOps specialist#freelance digital marketing#freelance dropshipping consultant#freelance eBay seller consultant#freelance ecommerce developer#freelance education consultant#freelance email copywriter#freelance email marketing#freelance environmental consultant#freelance ERP consultant#freelance Etsy seller consultant#freelance event planner

0 notes