# Drives and automation

Explore tagged Tumblr posts

Text

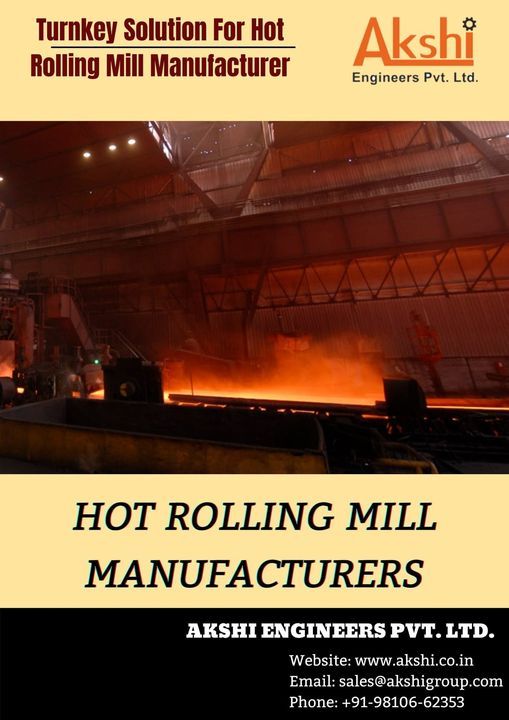

Innovations in Roll Design Revolutionizing Efficiency and Quality in Egypt's Steel Industry

In the heart of Egypt's thriving steel industry, a silent revolution is underway that promises to reshape the landscape of efficiency and product excellence. At the forefront of this transformation are pioneers like Akshi Engineers Pvt. Ltd., who are engineering a new era of innovation in roll design, propelling Egyptian rolling mills towards unprecedented heights of performance and quality.

Akshi Engineers Pvt. Ltd.: Illuminating the Path of Progress

As a prominent Rolling Mill Drives & Automation manufacturer, Akshi Engineers Pvt. Ltd. has cast a luminous trail in Egypt's steel sector. With an unwavering commitment to innovation, they stand as a testament to the synergy between technology and tradition, driving the industry's evolution.

Precision Roll Designs: Forging the Backbone of Quality

At the core of this transformation are the ingenious advancements in roll design. The meticulous engineering of rolls has evolved from mere mechanical components to precision instruments that orchestrate the symphony of steel production. Akshi Engineers Pvt. Ltd., along with other Rolling Mill Companies in Egypt, has led the charge in crafting rolls that endure immense pressures and temperatures while maintaining dimensional accuracy. This innovation lays the foundation for consistently high-quality steel products.

Elevating Mill Stand Designs: Where Stability Meets Flexibility

Mill stands, often the unsung heroes of hot rolling mills, have undergone a renaissance in design. The delicate equilibrium between stability and flexibility is now a hallmark of innovation. The efforts of Mill Stand Companies in Egypt have resulted in stands that seamlessly withstand the relentless forces of rolling while providing the agility to adjust and meet exact product specifications.

Powering Efficiency: The Gearbox Revolution

Against the backdrop of the rolling spectacle, the other unsung heroes of efficiency quietly hum – the gearboxes. Gearbox Manufacturers in Egypt have unleashed a new era of precision and control. Modern gearboxes, resembling masterful clockwork, harness optimal torque and speed control, allowing mills to adapt swiftly to varying product demands without compromising efficiency. This dynamism not only enhances production versatility but also champions energy conservation.
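
The torque-and-speed claim rests on the basic trade-off every reduction gearbox makes. As a minimal sketch, assuming an idealized single-ratio gearbox (the helper name `gearbox_output` and the 97% default efficiency are illustrative assumptions, not figures from any manufacturer), output speed is input speed divided by the ratio and output torque is input torque multiplied by the ratio, less losses:

```python
def gearbox_output(input_rpm: float, input_torque_nm: float,
                   ratio: float, efficiency: float = 0.97) -> tuple[float, float]:
    """Idealized reduction gearbox (hypothetical helper, not a vendor API).

    Speed falls by the gear ratio; torque rises by the same ratio,
    minus mechanical losses captured by `efficiency` (0 < efficiency <= 1).
    """
    if ratio <= 0 or not 0 < efficiency <= 1:
        raise ValueError("ratio must be positive and efficiency in (0, 1]")
    output_rpm = input_rpm / ratio
    output_torque_nm = input_torque_nm * ratio * efficiency
    return output_rpm, output_torque_nm


# Hypothetical motor: 1500 rpm and 100 N·m driving a 10:1 reduction.
rpm, torque = gearbox_output(1500, 100, 10)
print(f"{rpm:.0f} rpm, {torque:.0f} N·m")  # 150 rpm, 970 N·m
```

Changing the ratio is how a drive trades speed for torque; power (torque times angular speed) is conserved apart from the efficiency loss, which is why 150 rpm × 970 N·m comes out at 97% of 1500 rpm × 100 N·m.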

Turnkey Solutions for TMT Bar Mills: Meeting Market Dynamics

The Egyptian steel industry, mirroring the nation's rapid development, demands versatile and high-quality products. This is where turnkey solutions come into play. As the demand for TMT bars soars in Egypt's construction and infrastructure sectors, the expertise of turnkey-solution providers for TMT bar mills is evident. They ensure that every step, from initial mill design to ongoing maintenance, is orchestrated with precision, culminating in the consistent production of top-tier TMT bars.

Shaping a Sustainable Tomorrow

Innovations in roll design aren't just about efficiency and quality; they're about sustainability. The shift towards energy-efficient practices, streamlined heating techniques, and waste reduction strategies has gained momentum. As the steel industry embraces Industry 4.0 principles, Egypt's rolling mills stand at the cusp of a paradigm shift – one that integrates real-time data analytics and predictive maintenance to fine-tune production processes and elevate sustainability.

Charting the Course Ahead

The tale of Egypt's rolling mills is a narrative of resilience and innovation. Akshi Engineers Pvt. Ltd. and other industry leaders are the architects of this transformation, steering the course towards efficiency and excellence. Their relentless pursuit of precision roll designs, dynamic mill stands, and adaptable gearboxes has propelled the nation's steel industry to the forefront of progress.

As the sun sets over Egypt's steel landscape, it casts a radiant glow upon a sector that is not only adapting to change but embracing it with open arms. The harmony between tradition and innovation resonates, reminding us that rolling mills are not mere machines but the heartbeat of a nation's growth. Egypt's steel story continues, driven by the innovations in roll design that are illuminating a path toward an unparalleled future.

Source Url: https://raginitiwari.blogspot.com/2023/08/innovations-in-roll-design.html

#hot rolling mill manufacturer#twinchannel#rollingmillmanufacturers#rolling mill stand#gear boxes manufacturer#Drives and automation#turnkey solutions for tmt bar mill

0 notes

Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption
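
The per-query figure adds up quickly. A back-of-the-envelope sketch: only the 0.36–1 cent range comes from the text; the one million users and 24 revisions per user are hypothetical stand-ins for "by the millions" and "dozens of times":

```python
def giveaway_cost_usd(users: int, queries_per_user: int, cents_per_query: float) -> float:
    """Dollar cost of serving free queries at a given per-query cost in cents."""
    return users * queries_per_user * cents_per_query / 100


# Hypothetical: one million free users, each revising a prompt 24 times.
low = giveaway_cost_usd(1_000_000, 24, 0.36)
high = giveaway_cost_usd(1_000_000, 24, 1.0)
print(f"${low:,.0f} to ${high:,.0f}")  # $86,400 to $240,000
```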

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.
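
The arithmetic behind that last sentence can be sketched in a few lines. All salary and AI-cost figures here are hypothetical placeholders, and the function names are illustrative; the point is only that each extra reviewer eats into the savings until they go negative:

```python
def net_savings(replaced_salary: float, ai_cost: float,
                reviewers: int, reviewer_salary: float) -> float:
    """Yearly savings from swapping one worker for an AI plus human reviewers.

    A negative result means the "human in the loop" setup costs more
    than the worker it replaced.
    """
    return replaced_salary - ai_cost - reviewers * reviewer_salary


def break_even_reviewers(replaced_salary: float, ai_cost: float,
                         reviewer_salary: float) -> float:
    """Number of reviewers (possibly fractional) at which savings reach zero."""
    return (replaced_salary - ai_cost) / reviewer_salary


# Illustrative figures only: a $100k coder, $20k/yr of AI, $60k reviewers.
print(net_savings(100_000, 20_000, 1, 60_000))  # 20000
print(net_savings(100_000, 20_000, 2, 60_000))  # -40000
print(round(break_even_reviewers(100_000, 20_000, 60_000), 2))  # 1.33
```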

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaritized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
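
"Next-word-guessing" can be illustrated, in drastically simplified form, by a bigram model: count which word follows which in the training text, then always pick the most frequent follower. Real chatbots use large neural networks over sub-word tokens rather than raw word counts, so treat this as a toy sketch of the statistical idea only; the corpus and function names are made up:

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, how often each other word immediately follows it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def guess_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


# Made-up three-sentence "training data".
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)
print(guess_next(model, "the"))  # cat (it followed "the" twice)
print(guess_next(model, "sat"))  # on
```

The model always answers with whatever was most common in its data. Asked what follows "the" in a sentence that was really about a dog, it still says "cat": confidently, plausibly, and wrong.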

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/
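
To see how small the gap between a hallucinated name and a real one can be, here is a sketch that compares requested package names against a hypothetical allowlist using Python's standard `difflib`. It assumes the genuine package is `huggingface_hub` (which distributes Hugging Face's official CLI); the allowlist and function names are illustrative, and passing such a check says nothing about whether an approved package is actually safe:

```python
import difflib
import re

# Hypothetical allowlist of packages a team has already vetted.
APPROVED = {"huggingface_hub", "numpy", "requests", "torch"}


def normalize(name: str) -> str:
    """PEP 503-style normalization: case-insensitive, '-', '_' and '.' equivalent."""
    return re.sub(r"[-_.]+", "-", name).lower()


def check_dependencies(names: list[str]) -> dict[str, str]:
    """Flag names not on the allowlist, suggesting the closest approved name."""
    approved = sorted(normalize(n) for n in APPROVED)
    report = {}
    for name in names:
        key = normalize(name)
        if key in approved:
            report[name] = "ok"
            continue
        hints = difflib.get_close_matches(key, approved, n=1)
        report[name] = f"UNAPPROVED (close to {hints[0]})" if hints else "UNAPPROVED"
    return report


for name, verdict in check_dependencies(["numpy", "huggingface-cli"]).items():
    print(name, "->", verdict)
# numpy -> ok
# huggingface-cli -> UNAPPROVED (close to huggingface-hub)
```

`difflib` rates "huggingface-cli" and "huggingface-hub" as 80% similar: only three characters differ, which is exactly why a tired reviewer reads straight past it.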

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th-century magic lantern technology, to which millions of people ascribed wild capabilities, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

857 notes

Text

it's quite offputting to me when ppl can't disentangle their hatred for capitalism from a hatred for... new technological innovation? the ways in which capitalism has shaped the development of certain technologies has been deeply negative, not to mention that imperialism ensures that new technology is usually produced via extractive relationships with both the planet + ppl in the global south.

but this weird tying of capitalist impact on innovation (+the idea of what is/is not innovation) to hatred of innovation itself (or even more disturbing valorization of "the good old days"/implications that technology is causing social degeneracy) is baffling to me. perhaps it is impossible to achieve specific technologies without unconscionable resource extraction practices, in which case they should not be pursued. but so many ppl act like there is something inherently morally suspect in pursuit of tech such as autonomous vehicles or AI or automation, independent of the material conditions that produced them/that they may produce.

tesla is evil because they exploit ppl for profit + participate in an economy built on the exploitation of the global south + use 'innovation' as a marketing tool to mask serious safety concerns. they're not evil bcuz they want to make vehicles that move on their own. there are actually a great deal of fantastic applications for vehicles which move on their own? equating technology with moral decay is not a radical position; you need a material analysis of why technological innovation has become characterized by harmful practices.

#just saw a truly tragic article abt a death related to self driving car#entire comments section SWAMPED with the implication that any attempts to create self driving cars is INHERENTLY EVIL#like. actually fully automated public transportation would be dead fucking useful#public transit is not currently fully automated + is extremely limited in many cities#im so tired of ppl who need the technology to also be BAD to call out the practices which produced it#things do not need to be black/white for u to understand that exploiting others is morally wrong...

307 notes

Text

I kid you not, they referenced Knight Rider in SpongeBob of all shows!

However, this talking boat acts more like KARR than KITT and is a major asshole! But don't worry, the sass is on par with how a Knight Rider vehicle should be!

#knight rider#knight rider reference#spongebob#spongebon squarepants#drive happy#coupe#kitt#k.i.t.t.#knight industries two thousand#k.a.r.r.#karr#knight automated roving robot

27 notes

Text

He runs the kitchen in hq, which is about as stressful as you'd expect.

#spidersona#atsv#spiderman across the spiderverse#digital art#my art#oc#original character#csp#clip studio paint#character design#spider-gourmand#i do have a spidersona thats like an actual persona but ive redesigned them like 30 times n they still dont feel right#but gourmand? perfect man.#some Lore:#I imagine that a lot of tasks are automated but humans are still needed to supervise plan prep etc#defends the kitchen staff with his life whether theyre a volunteer or a regular#bc some spiders are assholes. other spiders are white boys who make the same jokes#about food they dont understand and it drives rene up a wall#he finds the multiverse very fascinating food-wise. for as much he complains about the job hes never turned#down a request from a spider for a dish specific to their home dimension#he believes strongly in the need to help yourself before you can help other people#which is why he gets absolutely pissed whenever spiders skip meals#what the fuck do you mean youll use your lunch break for another mission? who told you that? siddown.

125 notes

Text

i hate backing up files bc digital media is so paranoia-inducing bc the file could just corrupt or disappear or the hard drive could die at any moment

#trying to backup my pet photos off instagram n its a nightmare to even download the pics#i also have the existential issue of having a bunch of art on my ipad which would be super time consuming to backup#n which cant be automated#il prob have to get around to it one day but ugh#google drive is also an annoyance bc i keep some files there for the convenience of editing on the go#bc i often think of an idea n dont have time to get on my computer or its the middle of the night#but then i have to manually download the new copy to my computer to backup n its in a different place from other files of the same project#dont even get me started on my fucking notes app#everything on my ipad survived the move to a new device once but i place too much faith that it will again. but theres just so much shit#thatd be so hard n time consuming to back up

4 notes

Text

i love my coworkers so much, but if i have to watch them set up any of the recorded events (streaming, broadcast records, physical records, literally anything) i will turn inside out.

#insane to me that there are so many different paths to the same outcome but even MORE insane to me#how wrong everyone else is about them.#i'm being facetious literally whatever works for you godspeed but please. i don't want to see it.#this extends to having to look at someone else's stream setup#which has unfortunately become so so much of my job#just. why have u done it this way there is NO precedent for these actions im biting u#this is like. one coworker in particular. she's the reason i was hired here at all and she did most of my initial training#but then we trained on the new automation simultaneously and dear god. did we get completely different things out of that training#which is fine! except#that she has trained every part time person for the last six years and they have all taken turns driving me absolutely insane#would u like to take eight steps where u could have taken one? boy do i have a training experience for you#i hate training im so glad i dont have to train (night shift) but SOMETIMES

4 notes

Text

My stance on AI is not that art or writing inherently must be made by a human to be soulful or good or whatnot but that the point of being alive is not to avoid doing anything ever.

#personally PERSONALLY I understand on the conceptual level why people want to automate hard tasks BUT on an emotional level on an intrinsic#‘this is how I view the world level’ i just have never understood the human race’s fascination with making life less life per life#the experience is the point? if a point could ever even claim to be made?#ik there’s this inclination towards skipping what we view as unpleasant like oh I’ll drive instead of walking to save time#oh I’ll just send a text instead of talking to someone#and to a degree these innovations allow us to do things we wouldn’t be able to in some circumstances#such as reaching a store before it closes by car I#that you wouldn’t be able to get to by foot in the same time#BUT I firmly believe if the option exists to do something the slow way then it’s going to be better#even if you don’t enjoy the process of it like you do other things like hobbies or joys#doing things that are boring and tedious and a little painful are GOOD FOR YOU#LEARN TO EXIST IN DISCOMFORT AND BOREDOM AND REVEL IN MUNDANITY LIFE IS NOT JUST ABOUT DOING ENJOYABLE THINGS#An equal amount of life is doing things that are neutral or negative and idk why people seem not to be able to stand that? it’s beautiful#it’s life it’s living it’s just as good as whatever it is you do for joy just in a different manner#anyways AI is like the worst perversion of that like yeah I don’t want to write my emails but I’m going to do it anyways it’s my life and#I want to live it fully! YES EVEN THE BORING PARTS YES EVEN THE EMAILS THE WRETCHED EMAILS#anyways don’t let a ghost of a computer steal your life write your own emails

2 notes

Text

AUTOMATIC AUTOMATION DEFENSIVE SYSTEMS USED AGAINST DEFINITE AND KNOWN TIME TRAVELERS AND THEIR ASSOCIATES

#AUTOMATIC AUTOMATION DEFENSIVE SYSTEMS#AUTOMATON#AUTOMATIC AUTOMATION DEFENSIVE SYSTEMS USED AGAINST DEFINITE AND KNOWN TIME TRAVELERS AND THEIR ASSOCIATES#robots#self-driving cars#deep learning#machine learning#drones#artificial intelligence#technology#culture#history#TIME TRAVEL#TEXT#TXT#txt#text

6 notes

Text

#ok so#the interview was for a cafe inside a big bank campus#all of my communication so far had been automated but they gave me an address and a name#i had to check in with someone to get into the main campus area#and then there were a bunch of confusing buildings and parking lots#but i made it to the building of the address they gave me#you have to scan a card to get in#i don't have a card#i see the cafe but theres no front entrance to it#theres two other buildings on the sides of it so i went to the doors and there was no one there or anything#i have no phone number to call#finally (thanks to my dad bc hes been here) i find a little call button by the door#i press that and wait . nothing#i see someone inside explaining to someone ELSE how to get somewhere#and she clearly saw me but didn't say anything to me and im wayyyyy too anxious at this point to say anything to her#and i was hoping someone was coming bc i pressed the button#i wait another minute#a guy comes out but not bc i pressed the button. he was just a guy#and he asked if i needed help getting in#and i told him i had the interview and i wasnt sure where to go#he said i would probably need a temporary badge but he wasn't sure exactly#and asked if i wanted him to walk me to the building for that#which was very nice but at this point i was TWENTY MINUTES LATE and very very anxious so i said no thank you#and went back to my car. and left.#i walked back while on the phone with my mom so no one else would try to talk to me#i got myself a little treat because OHHHHH MY GODDDDD#im gonna drive home blaring some music#maybe see about emailing them ?#BUT. GOOOOODDD LORD#WHY DIDNT THEY GIVE ME MORE INFORMATION?????? WHAT ELSE WAS I SUPPOSED TO DO?????? OH MY GOD IM GONNA THROW UP

5 notes

Video

At the Drive-In - One-Armed Scissor (2000)

25 notes

Text

You know I imagine having a series of pre-recorded prompts at a drive thru window could be a great accessibility tool available to workers but I also do not imagine that's the reason bojangles did that

#they had to prompt me a couple of times because im so used to ignoring the initial pre-recorded/automated voice at drive thru speakers that#i didnt realize it was actually prompting me to order fdhDHD

2 notes

Text

do i even need to caption this

#he is automobile.#he drives auto#automism???#theme park ocs#oblivion#gardaland#rollercoaster oc#oblivion the black hole#oblivion: the black hole#oc meme#memes#oc#shitpost#my art#digital art

15 notes

Text

I'm still a bit skeptical that self-driving cars are safer than human drivers in general (though perhaps they are in specific situations). That said, not surprised that self-driving abilities have been slowly but steadily improving, esp as AI and related tech have been quickly advancing.

"The study compared Waymo’s liability claims to human driver baselines, which are based on Swiss Re’s data from over 500,000 claims and over 200 billion miles of exposure. It found that the Waymo Driver demonstrated better safety performance when compared to human-driven vehicles, with an 88% reduction in property damage claims and 92% reduction in bodily injury claims. In real numbers, across 25.3 million miles, the Waymo Driver was involved in just nine property damage claims and two bodily injury claims. Both bodily injury claims are still open and described in the paper. For the same distance, human drivers would be expected to have 78 property damage and 26 bodily injury claims."
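
The quoted percentages can be checked against the quoted counts. This sketch uses only the numbers in the excerpt (9 vs. 78 property damage claims, 2 vs. 26 bodily injury claims, over 25.3 million miles); it verifies the arithmetic, not the study's methodology:

```python
# Figures quoted above: Waymo claims over 25.3 million miles vs. the
# human-driver baseline expected over the same distance.
MILES_MILLIONS = 25.3
claims = {
    "property damage": {"waymo": 9, "human_expected": 78},
    "bodily injury": {"waymo": 2, "human_expected": 26},
}


def reduction(observed: int, expected: int) -> float:
    """Fractional reduction of observed claims relative to the expected baseline."""
    return 1 - observed / expected


for kind, c in claims.items():
    r = reduction(c["waymo"], c["human_expected"])
    per_m = c["waymo"] / MILES_MILLIONS
    base_per_m = c["human_expected"] / MILES_MILLIONS
    print(f"{kind}: {r:.0%} reduction "
          f"({per_m:.2f} vs {base_per_m:.2f} claims per million miles)")
# property damage: 88% reduction (0.36 vs 3.08 claims per million miles)
# bodily injury: 92% reduction (0.08 vs 1.03 claims per million miles)
```

The counts are small, so the percentages are sensitive: two more bodily injury claims (4 instead of 2) would drop that figure from 92% to about 85%.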

2 notes

Photo

decided to draw me and a couple friends' automation cars, we ended up doing some races and challenges, and despite all odds, my SUV proved to have the superior performance!

#furry art#furry#art#car#suv#hatchback#compact#initial d#vehicle#automation#video games#driving#night

12 notes
