#EC2

Explore tagged Tumblr posts

Text

64 vCPU/256 GB RAM/2 TB SSD EC2 instance with #FreeBSD or Debian Linux as OS 🔥

39 notes


Text

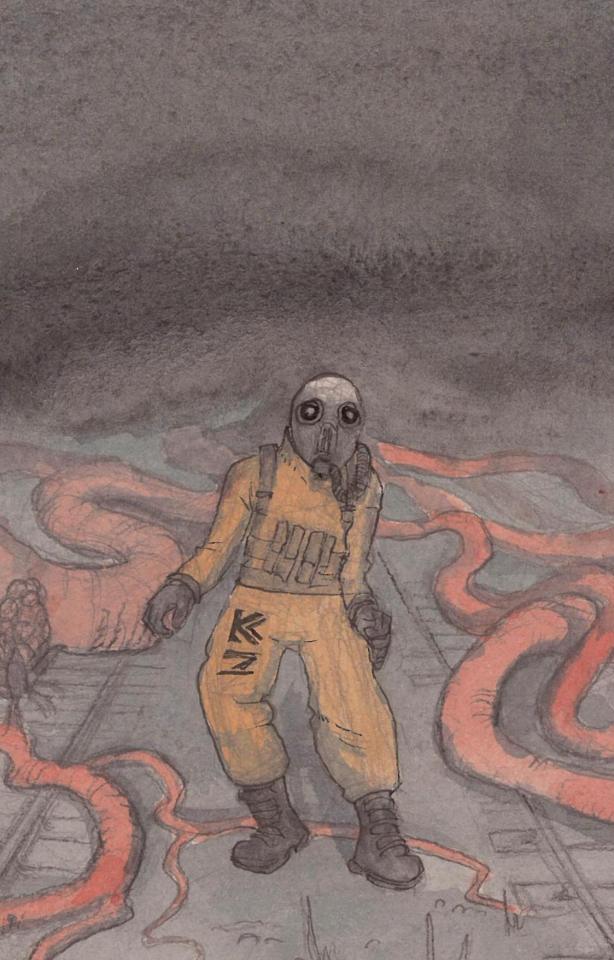

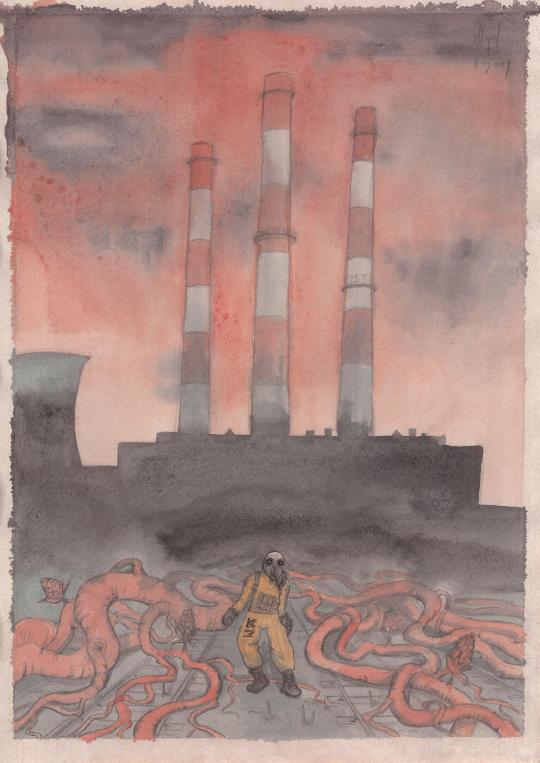

these lines fr live in my head rent-free. can't express how much I love it. perhaps one of my favorite panels in both volumes

4 notes


Text

"I float through physical thoughts

I stare down the abyss of organic dreams

All bets off,

I plunge Only to find that self is shed."

Meshuggah, "Shed" (album "Catch33", 2005).

youtube

#mikopol#watercolor#science fiction#dark#architecture#łódź#poland#europe#power station#elektrownia#industrial#relic#urbex#hazmat suit#horror#alien life#zona#elektrociepłownia#ec2#shed#meshuggah#metal#music#catch33#darkness#surreal#dream#stalker#Youtube

18 notes


Text

what the hell. like what the FUCK. liek whahahfhwhcbnrjgtrugirjghdfhvjdv (← going insane over branzy lore)

23 notes


Video

youtube

Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
- What are IAM roles?
- Why should you use IAM roles instead of hardcoding access keys?
- How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
- Launching an EC2 instance and attaching the IAM role.
- Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
- Step-by-step commands to upload files to an S3 bucket.
- Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
- Retrieving objects stored in your S3 bucket using AWS CLI.
- How to test and verify successful downloads.

5. Deleting Files in S3:
- Securely deleting files from an S3 bucket.
- Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
- Using least privilege policies in IAM roles.
- Encrypting files in transit and at rest.
- Monitoring and logging using AWS CloudTrail and S3 access logs.
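As a rough illustration of the least-privilege point above, the sketch below builds an IAM policy document scoped to a single bucket. The bucket name and the helper `s3_least_privilege_policy` are hypothetical and not taken from the video; the action names (`s3:ListBucket`, `s3:GetObject`, `s3:PutObject`, `s3:DeleteObject`) are standard S3 permissions.

```python
import json


def s3_least_privilege_policy(bucket: str, prefix: str = "") -> dict:
    """Build an IAM policy document limited to one bucket and an optional key prefix.

    Hypothetical helper for illustration; the bucket name is supplied by the caller.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself, not to the objects in it.
                "Sid": "ListOneBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Upload, download, and delete apply to object ARNs under the prefix.
                "Sid": "ReadWriteDeleteObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    # "example-tutorial-bucket" is a placeholder name.
    print(json.dumps(s3_least_privilege_policy("example-tutorial-bucket"), indent=2))
```

With a policy this narrow, listing the one bucket (`aws s3 ls s3://<bucket>`) should work, while a bare `aws s3 ls` across all buckets would additionally need `s3:ListAllMyBuckets`.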

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

Step 1: Create an S3 Bucket
- Navigate to the S3 console and create a new bucket with a unique name.
- Configure bucket permissions for private or public access as needed.

Step 2: Configure IAM Role
- Create an IAM role with an S3 access policy.
- Attach the role to your EC2 instance to avoid hardcoding credentials.

Step 3: Launch and Connect to an EC2 Instance
- Launch an EC2 instance with the IAM role attached.
- Connect to the instance using SSH.

Step 4: Install AWS CLI and Configure
- Install AWS CLI on the EC2 instance if not pre-installed.
- Verify access by running `aws s3 ls` to list available buckets.

Step 5: Perform File Operations
- Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
- Download files: Use `aws s3 cp` to download files from S3 to EC2.
- Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

Step 6: Cleanup
- Delete test files and terminate resources to avoid unnecessary charges.
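The file operations in the steps above can be sketched as a small Python helper that only assembles the `aws s3 cp` and `aws s3 rm` command lines. The bucket name, keys, and function names are hypothetical; nothing is executed here, so the sketch can be read without AWS access.

```python
import shlex


def s3_uri(bucket: str, key: str) -> str:
    """Return the s3:// URI for an object key in a bucket."""
    return f"s3://{bucket}/{key}"


def upload_cmd(local_path: str, bucket: str, key: str) -> list[str]:
    """Command line that copies a local file from the instance to S3."""
    return ["aws", "s3", "cp", local_path, s3_uri(bucket, key)]


def download_cmd(bucket: str, key: str, local_path: str) -> list[str]:
    """Command line that copies an object from S3 to the instance."""
    return ["aws", "s3", "cp", s3_uri(bucket, key), local_path]


def delete_cmd(bucket: str, key: str) -> list[str]:
    """Command line that deletes a single object from the bucket."""
    return ["aws", "s3", "rm", s3_uri(bucket, key)]


if __name__ == "__main__":
    # Placeholder bucket and file names, for illustration only.
    bucket = "example-tutorial-bucket"
    for cmd in (
        upload_cmd("app.log", bucket, "logs/app.log"),
        download_cmd(bucket, "logs/app.log", "app-copy.log"),
        delete_cmd(bucket, "logs/app.log"),
    ):
        print(shlex.join(cmd))
```

On an instance with the role attached, each list could be handed to `subprocess.run(cmd, check=True)`; the CLI would then pick up the role's temporary credentials without any stored access keys.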

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.

#youtube#aws iam#iam role aws#aws#aws permission#aws iam roles#aws cloud#aws s3#identity & access management#aws iam policy#Download#and Delete Files in Amazon#IAMrole#AWS#cloudolus#S3#EC2

2 notes


Text

Best AWS Course in Electronic City Bangalore

1. Getting Started with Cloud Computing and AWS Course in Electronic City Bangalore

Cloud computing has revolutionized the way today’s businesses function, and Amazon Web Services (AWS) stands at the forefront of this technological shift, driving innovation and efficiency across industries. As a leading cloud service provider, AWS offers a wide array of services that empower organizations to scale their operations efficiently. With Bangalore emerging as a tech hub, many professionals are keen to harness these powerful tools for career advancement, making AWS training in Electronic City, Bangalore a valuable stepping stone toward a successful cloud career.

If you’re on the lookout for top-notch AWS training in Electronic City, Bangalore, you're not alone. Many aspiring cloud practitioners are turning to specialized institutes that provide hands-on experience and expert guidance. This blog will delve into what makes eMexo Technologies one of the best AWS training providers in Electronic City, Bangalore. Whether you’re new to cloud computing or looking to enhance your skills, there’s something here for everyone ready to take their career to new heights!

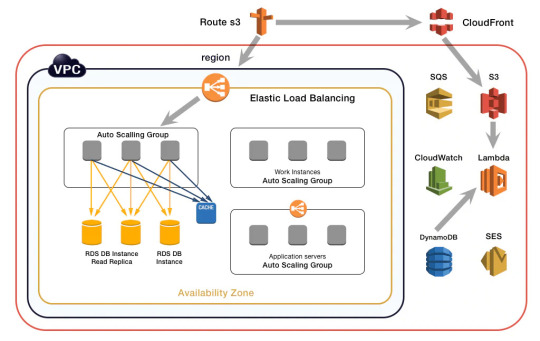

2. Explore Key AWS Services in Our AWS Training Program at Electronic City, Bangalore

The AWS Course in Electronic City Bangalore includes an in-depth understanding of core services that form the backbone of cloud computing. These services encompass computing capabilities, data storage options, database administration, and network infrastructure. Each service integrates seamlessly to deliver flexibility and scalability for various business applications.

Hands-on training is vital for mastering these AWS services. At eMexo Technologies, a leading AWS Training Institute in Electronic City Bangalore, students work on real-world projects that reinforce theoretical concepts with practical application.

Learners gain practical experience with Amazon EC2 for computing, S3 for scalable storage, and RDS for managed databases. Real-time labs and use cases provide the skills employers seek in today’s competitive job market.

The AWS ecosystem can be complex, but structured instruction simplifies the journey. With expert trainers by your side, you’ll learn not just how to use AWS—but how to use it well.

3. Master the Art of Creating Scalable Cloud Solutions with Our AWS Certification Training in Electronic City Bangalore

Designing and deploying scalable architectures is a key focus of the AWS Certification Course in Electronic City Bangalore. These skills are essential for creating cloud systems that can handle changing workloads with ease and efficiency.

AWS offers robust services like Auto Scaling and Elastic Load Balancing, which are covered extensively in the course. These tools enable dynamic resource allocation to maintain performance during traffic spikes—crucial for any growing business.

You’ll also explore microservices architecture and container orchestration using Amazon ECS and EKS, ensuring your deployments are resilient and scalable. Best practices for high availability and fault tolerance using multiple Availability Zones are taught to help you build strong, reliable systems.

4. Cloud Monitoring, Security, and Cost Control – Taught at the Best AWS Training in Electronic City Bangalore

Maintaining performance and cost-efficiency is critical in AWS. In this module, part of the Best AWS Training in Electronic City Bangalore, you’ll explore essential tools like Amazon CloudWatch for monitoring and AWS IAM for secure access control.

Security fundamentals are emphasized, ensuring you understand how to manage access, encrypt data, and comply with industry standards. Gaining expertise in these skills is crucial for anyone aiming to succeed as an AWS professional.

You'll also dive deep into cost optimization techniques—gain hands-on experience with AWS Cost Explorer, and discover how to automate processes that help cut down unnecessary spending. These lessons are especially useful for professionals managing real-world cloud budgets.

At eMexo Technologies, a respected AWS Training Center in Electronic City Bangalore, students gain both the knowledge and the tools to maintain a secure, efficient, and well-managed cloud environment.

5. AWS Training and Placement in Electronic City Bangalore: Exam Prep and Career Guidance

Preparing for certification and finding the right job go hand-in-hand. That’s why the AWS Training and Placement in Electronic City Bangalore at eMexo Technologies includes both technical training and career development support.

You’ll benefit from certification-specific resources including mock exams, study materials, and real-time project experience—all aligned with current AWS exam formats.

In addition to technical prep, we offer career-building services such as resume writing, mock interviews, and job placement assistance. These features make our program one of the most comprehensive options for an AWS certification course in Electronic City, Bangalore.

Graduates of our program walk away not just certified—but confident. Whether you're starting fresh or upskilling for your next promotion, eMexo Technologies is the AWS Training Institute in Electronic City Bangalore that helps you get there.

Final Thoughts: If you're aiming for top-tier AWS training in Electronic City, Bangalore, eMexo Technologies is the smart choice.

The cloud computing industry is evolving rapidly, and AWS skills are in high demand. With eMexo Technologies, you're choosing a trusted AWS Training Center in Electronic City Bangalore that combines expert instruction, practical experience, and career support into one powerful training solution.

Whether you're interested in the AWS Course in Electronic City Bangalore, preparing for certification, or seeking job placement, we’re here to help. Take the first step toward a future-ready career—join our AWS Training and Placement in Electronic City Bangalore today!

📞 Contact: +91 9513216462 / [email protected]

📍 Location: Electronic City, Bangalore

🌐 Learn more and register here!

https://www.emexotechnologies.com/courses/aws-solution-architect-certification-training-course/

#AWS#AWSTraining#AWSCourse#LearnAWS#AWSDeveloper#AWSSkills#AWSDevelopment#EC2#S3#IAM#CloudFormation#Lambda#AWSCLI#AWSConsole#RDS#DynamoDB#DevOps#Terraform#Kubernetes#CloudComputing#CloudTraining#ITTraining#CloudDevelopment#CareerGrowth#AWSTrainingCenterInBangalore#AWSTrainingInElectronicCityBangalore#AWSCourseInElectronicCityBangalore#AWSTrainingInstitutesInElectronicCityBangalore#AWSClassesInElectronicCityBangalore#BestAWSTrainingInElectronicCityBangalore

0 notes

Text

I think the choice in the first single shows that Hayden is aware of this. In the EPs the narrator is always at least semi neutral. And obv PD is from the perspective of Ethel.

"Spoilers" for the verses of punish under the cut. But like... She performed it live and put these lyrics up on genius herself. Y'all have been warned.

If the verses in punish are to be taken literally, this is from the perspective of a rapist. I think whether they are or aren't meant to be taken literally, this album concept is gonna turn off a lot of people. I think Hayden is very aware of that and that it was potentially intentional.

The response to the announcement of Perverts online, specifically on TikTok, has confused me. I did not expect to see so many people surprised and even disgusted by the simple title of the album. Claiming that it's gone a step too far, or it's going to be hard to repost or interact with an album called 'Perverts' is very telling of how few people have actually analyzed or sincerely engaged with hayden's previous works. Of course it's okay to lightheartedly enjoy a piece of media, but an unconventional album title should be nothing surprising based on the topics hayden has an affinity for discussing. Perverts is going to be heavy and it's going to be dark, but so was Preacher's Daughter, Inbred, Golden Age, and Carpet Beds. The simplification of these works deeply saddens me. Why should anyone's main focus when enjoying a piece of media be the thought of how much online interaction it will get when you post about it?

2K notes


Text

Duchy, London EC2: ‘The small plates concept, once so edgy, shows no sign of relenting’ – restaurant review | Restaurants

I felt a compulsion to go to Duchy, in east London, because I had dined at its predecessor, Leroy, in 2018, as well as its genesis, Ellory, in 2015. These three different restaurants share DNA. Yes, 10 years have passed, but very little in the pared-back, pan-European anchovies-on-a-plate-for-£12 dining scene has moved on. No-frills decor, bare-brick walls, earnest small plates, staff with…

1 note


Text

Pinterest Boosts Service Reliability by Overcoming AWS EC2 Network Throttling

Pinterest, a platform synonymous with creativity and inspiration, relies heavily on robust infrastructure to deliver seamless user experiences. However, like many tech giants, it faced significant challenges with AWS EC2 network throttling, which threatened service reliability. Overcoming AWS EC2 network throttling became a critical mission for Pinterest’s engineering team to ensure uninterrupted access for millions of users. This blog explores how Pinterest tackled this issue, optimized its cloud infrastructure, and boosted service reliability while maintaining scalability and performance.

Understanding AWS EC2 Network Throttling

AWS EC2 (Elastic Compute Cloud) is a cornerstone of modern cloud computing, offering scalable virtual servers. However, one limitation that organizations like Pinterest encounter is network throttling, where AWS imposes bandwidth limits on EC2 instances to manage resource allocation. These limits can lead to performance bottlenecks, especially for high-traffic platforms handling massive data transfers.

What Causes Network Throttling in AWS EC2?

Network throttling in AWS EC2 occurs when an instance exceeds its allocated network bandwidth, which is determined by the instance type and size. For example, smaller instances like t2.micro have lower baseline network performance compared to larger ones like m5.large. When traffic spikes or data-intensive operations occur, throttling can result in packet loss, increased latency, or degraded user experience.
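One way to see whether an instance has actually been throttled is to read the allowance counters that the ENA driver exposes through `ethtool -S`. The sketch below parses that output and reports which counters are non-zero. Note the assumptions: the post does not say Pinterest used these counters, the instance must use an ENA driver recent enough to report them, and the sample text and its values are made up for illustration.

```python
# Counter names reported by the ENA driver for exceeded network allowances.
ALLOWANCE_COUNTERS = (
    "bw_in_allowance_exceeded",
    "bw_out_allowance_exceeded",
    "pps_allowance_exceeded",
    "conntrack_allowance_exceeded",
    "linklocal_allowance_exceeded",
)


def parse_allowance_counters(ethtool_output: str) -> dict[str, int]:
    """Extract the allowance counters from the text printed by `ethtool -S <iface>`."""
    counters: dict[str, int] = {}
    for line in ethtool_output.splitlines():
        name, sep, value = line.strip().partition(":")
        if sep and name in ALLOWANCE_COUNTERS:
            counters[name] = int(value.strip())
    return counters


def exceeded(counters: dict[str, int]) -> list[str]:
    """Return the names of counters that have recorded at least one throttling event."""
    return sorted(name for name, count in counters.items() if count > 0)


if __name__ == "__main__":
    # Hypothetical sample output; the numbers are illustrative, not measured.
    sample = """NIC statistics:
         bw_in_allowance_exceeded: 0
         bw_out_allowance_exceeded: 1842
         pps_allowance_exceeded: 0
         conntrack_allowance_exceeded: 0
         linklocal_allowance_exceeded: 0
    """
    print(exceeded(parse_allowance_counters(sample)))
```

The counters are cumulative, so in practice one would compare two readings taken some time apart rather than rely on a single snapshot.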

Why Pinterest Faced This Challenge

Pinterest’s platform, with its image-heavy content and real-time user interactions, demands significant network resources. As user engagement grew, so did the strain on EC2 instances, leading to throttling issues that impacted service reliability. The need to address this became urgent to maintain Pinterest’s reputation for fast, reliable access to visual content.

Strategies Pinterest Employed to Overcome Throttling

To tackle AWS EC2 network throttling, Pinterest adopted a multi-faceted approach that combined infrastructure optimization, advanced monitoring, and strategic resource allocation. These strategies not only mitigated throttling but also enhanced overall system performance.

Optimizing Instance Types for Network Performance

One of Pinterest’s first steps was to reassess its EC2 instance types. The team identified that certain workloads were running on instances with insufficient network bandwidth.

Upgrading to Network-Optimized Instances

Pinterest migrated critical workloads to instance families with enhanced networking, such as the C5 or R5 series, which use the Elastic Network Adapter (ENA). These instances provide higher baseline bandwidth than older generations, reducing the likelihood of throttling during peak traffic.

Rightsizing Instances for Workloads

Rather than over-provisioning resources, Pinterest’s engineers conducted thorough workload analysis to match instance types to specific tasks. For example, compute-intensive tasks were assigned to C5 instances, while memory-intensive operations leveraged R5 instances, ensuring optimal resource utilization.

Implementing Advanced Traffic Management

Effective traffic management was crucial for overcoming AWS EC2 network throttling. Pinterest implemented several techniques to distribute network load efficiently.

Load Balancing with AWS Elastic Load Balancer (ELB)

Pinterest utilized AWS ELB to distribute incoming traffic across multiple EC2 instances. By spreading requests evenly, ELB prevented any single instance from hitting its network limits, reducing the risk of throttling. The team also configured Application Load Balancers (ALB) for more granular control over HTTP/HTTPS traffic.

Auto-Scaling for Dynamic Traffic Spikes

To handle sudden surges in user activity, Pinterest implemented auto-scaling groups. These groups automatically adjusted the number of active EC2 instances based on real-time demand, ensuring sufficient network capacity during peak periods like holiday seasons or viral content surges.
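The scaling decision can be pictured with a simplified proportional rule: size the group so that the per-instance load returns to a target value. This is only a sketch of the idea, not the algorithm AWS Auto Scaling uses and not Pinterest's configuration; the function name, the metric, and the numbers are hypothetical.

```python
import math


def desired_instances(
    current: int,
    observed_per_instance: float,
    target_per_instance: float,
    min_size: int,
    max_size: int,
) -> int:
    """Scale the group proportionally to how far the observed load is from the target.

    The result is clamped to the group's minimum and maximum size.
    """
    if current <= 0 or target_per_instance <= 0:
        raise ValueError("current and target_per_instance must be positive")
    raw = math.ceil(current * observed_per_instance / target_per_instance)
    return max(min_size, min(max_size, raw))


if __name__ == "__main__":
    # Hypothetical example: 10 instances at 900 Mbps each, target 600 Mbps each.
    print(desired_instances(10, 900.0, 600.0, min_size=2, max_size=40))
```

Using network throughput per instance as the scaling metric, rather than CPU, is the design choice that ties scaling to the throttling problem the post describes.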

Enhancing Monitoring and Alerting

Proactive monitoring was a game-changer for Pinterest in identifying and addressing throttling issues before they impacted users.

Real-Time Metrics with Amazon CloudWatch

Pinterest leveraged Amazon CloudWatch to monitor network performance metrics such as NetworkIn and NetworkOut, alongside packet loss. Custom dashboards provided real-time insights into instance health, enabling engineers to detect throttling events early and take corrective action.

Custom Alerts for Throttling Thresholds

The team set up custom alerts in CloudWatch to notify them when network usage approached throttling thresholds. This allowed Pinterest to proactively scale resources or redistribute traffic, minimizing disruptions.
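The arithmetic behind such an alert is simple: CloudWatch's NetworkOut metric reports bytes, so a sum over a period can be converted to an average rate and compared with a fraction of the instance's baseline bandwidth. The sketch below assumes a hypothetical baseline figure and an 80% warning fraction; neither comes from the post.

```python
def average_mbps(total_bytes: int, period_seconds: int) -> float:
    """Convert a byte count summed over a period into an average rate in Mbps."""
    return total_bytes * 8 / period_seconds / 1_000_000


def near_limit(
    total_bytes: int,
    period_seconds: int,
    baseline_mbps: float,
    warn_fraction: float = 0.8,
) -> bool:
    """Return True when the average rate reaches the warning fraction of the baseline."""
    return average_mbps(total_bytes, period_seconds) >= baseline_mbps * warn_fraction


if __name__ == "__main__":
    # Hypothetical reading: 7.5 GB sent in 60 seconds on a 1000 Mbps baseline.
    print(average_mbps(7_500_000_000, 60), near_limit(7_500_000_000, 60, 1000.0))
```

An average over a full period can hide short bursts, so a threshold like this is best treated as an early warning rather than proof that no throttling occurred.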

Leveraging VPC Endpoints and Direct Connect

To further reduce network bottlenecks, Pinterest optimized its AWS network architecture.

Using VPC Endpoints for Internal Traffic

Pinterest implemented AWS VPC Endpoints to route traffic to AWS services like S3 or DynamoDB privately, bypassing public internet routes. This reduced external network dependency, lowering the risk of throttling for data-intensive operations.

AWS Direct Connect for Stable Connectivity

For critical workloads requiring consistent, high-bandwidth connections, Pinterest adopted AWS Direct Connect. This dedicated network link between Pinterest’s on-premises infrastructure and AWS ensured stable, low-latency data transfers, further mitigating throttling risks.

The Role of Caching and Content Delivery

Pinterest’s image-heavy platform made caching and content delivery optimization critical components of its strategy to overcome network throttling.

Implementing Amazon CloudFront

Pinterest integrated Amazon CloudFront, a content delivery network (CDN), to cache static assets like images and videos at edge locations worldwide. By serving content closer to users, CloudFront reduced the network load on EC2 instances, minimizing throttling incidents.

In-Memory Caching with Amazon ElastiCache

To reduce database query loads, Pinterest used Amazon ElastiCache for in-memory caching. By storing frequently accessed data in Redis or Memcached, Pinterest decreased the network traffic between EC2 instances and databases, improving response times and reducing throttling risks.
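The caching idea described here is commonly implemented as a cache-aside lookup: check the cache first and only go to the database on a miss. The sketch below uses an in-process dictionary as a stand-in for Redis or Memcached, and the `loader` callback is a hypothetical placeholder for the database query; it illustrates the pattern, not Pinterest's implementation.

```python
import time
from typing import Callable


class CacheAside:
    """Cache-aside lookup with a time-to-live, backed by a plain dict."""

    def __init__(
        self,
        loader: Callable[[str], str],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader  # called only on a miss (stands in for a DB query)
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[str, float]] = {}  # key -> (value, expiry time)

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # hit: no trip to the backing store
        value = self._loader(key)  # miss or expired: load and remember
        self._store[key] = (value, now + self._ttl)
        return value


if __name__ == "__main__":
    cache = CacheAside(lambda key: f"row-for-{key}", ttl_seconds=30.0)
    print(cache.get("pin:42"), cache.get("pin:42"))
```

Every hit avoids one round trip between the application instance and the database, which is where the reduction in network traffic comes from.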

Measuring the Impact of Pinterest’s Efforts

After implementing these strategies, Pinterest saw significant improvements in service reliability and user experience.

Reduced Latency and Improved Uptime

By optimizing instance types and leveraging advanced traffic management, Pinterest reduced latency by up to 30% during peak traffic periods. Uptime metrics also improved, with fewer incidents of downtime caused by network throttling.

Cost Efficiency Without Compromising Performance

Pinterest’s rightsizing efforts ensured that resources were used efficiently, avoiding unnecessary costs. By choosing the right instance types and implementing auto-scaling, the company maintained high performance while keeping infrastructure costs in check.

Enhanced User Satisfaction

With faster load times and fewer disruptions, Pinterest users reported higher satisfaction. The platform’s ability to handle traffic spikes during major events, like seasonal campaigns, strengthened its reputation as a reliable service.

Lessons Learned from Pinterest’s Approach

Pinterest’s success in overcoming AWS EC2 network throttling offers valuable lessons for other organizations facing similar challenges.

Prioritize Proactive Monitoring

Real-time monitoring and alerting are essential for identifying potential throttling issues before they impact users. Tools like CloudWatch can provide the insights needed to stay ahead of performance bottlenecks.

Balance Scalability and Cost

Choosing the right instance types and implementing auto-scaling can help organizations scale resources dynamically without overspending. Rightsizing workloads ensures cost efficiency while maintaining performance.

Leverage AWS Ecosystem Tools

AWS offers a suite of tools like ELB, CloudFront, and VPC Endpoints that can significantly reduce network strain. Integrating these tools into your architecture can enhance reliability and performance.

Future-Proofing Pinterest’s Infrastructure

As Pinterest continues to grow, its engineering team remains focused on future-proofing its infrastructure. Ongoing efforts include exploring serverless architectures, such as AWS Lambda, to offload certain workloads from EC2 instances. Additionally, Pinterest is investing in machine learning to predict traffic patterns and optimize resource allocation proactively.

Adopting Serverless for Flexibility

By incorporating serverless computing, Pinterest aims to reduce its reliance on EC2 instances for specific tasks, further minimizing the risk of network throttling. Serverless architectures can scale automatically, providing a buffer against sudden traffic spikes.

Predictive Analytics for Resource Planning

Using machine learning models, Pinterest is developing predictive analytics to forecast user activity and allocate resources accordingly. This proactive approach ensures that the platform remains resilient even during unexpected surges.

Overcoming AWS EC2 network throttling was a pivotal achievement for Pinterest, enabling the platform to deliver reliable, high-performance services to its global user base. By optimizing instance types, enhancing traffic management, leveraging caching, and adopting proactive monitoring, Pinterest not only addressed throttling but also set a foundation for future scalability. These strategies offer a blueprint for other organizations navigating similar challenges in the cloud, proving that with the right approach, even the most complex infrastructure hurdles can be overcome.

#Pinterest#ServiceReliability#AWS#EC2#NetworkThrottling#CloudComputing#TechSolutions#Infrastructure#PerformanceOptimization#Scalability

0 notes

Text

Software Development Engineer, EC2 Instance Networking

Job title: Software Development Engineer, EC2 Instance Networking
Company: Amazon
Job description: DESCRIPTION Do you want to shape the future of virtualized (SDN) networking in one of the world’s biggest public… performance of bare metal networking while maintaining all the benefits of the cloud, including delivering features…
Expected salary: $129300 per year
Location: Sunnyvale, CA
Job date:…

0 notes

Text

PROJECT 6 - AWS End-To-End Data Engineering in 15 min | EC2 - Slack - Airflow - VS Code

In this video, you’ll learn as simply as possible what AWS EC2 is and carry out your fourth project. You will combine AWS EC2, … source

0 notes

Text

just realized that like. branzy committed significantly fewer war crimes in echocraft s2 than he did in s3. and im like. [side-eyes ec2 finale]. huh. hm. huh.

#*the archivist speaks#[vi]#branzy#echocraft#ec2#Something about. whatever the hell was going in those last 5 episodes Changed him.#im not sure if it was the possession or the trauma or the portal#but like. There was Something About That.

16 notes


Text

Hello everyone, guess who has two thumbs and was fired from work today

( Meeeee :3 )

I'm drunk and also the best programmer in the world but what can you do

The two IT directors who hired me three years ago quit and both tried to poach me after they left

(One successfully lol so at least I've got another bun in the oven for now)

And then the new IT director started and everything changed, morale has never been lower, and I was ready to go but still needed the money.

But I was def blind sided by today

I just spent all weekend migrating our MarCom EC2 servers from AL2 to AL2023 along with their respective WordPress sites, SSLs, and databases.

I was very proud and no one acknowledged it :I So i guess I'm happy to be out of there.

But still. Sad day to be veryattractive.

( they cut off my credentials as I walked out the door, but I still have the api keys to their master database, I should delete all the students debts )

( they have back ups of all the databases anyway that i don't have access to anymore, it would be a lost cause )

1 note


Text

Comprehensive AWS Server Guide with Programming Examples | Learn Cloud Computing

Explore AWS servers with this detailed guide. Learn about EC2, Lambda, ECS, and more. Includes step-by-step programming examples and expert tips for developers and IT professionals.

Amazon Web Services (AWS) is a 🌐 robust cloud platform. It offers a plethora of services. These services range from 💻 computing power and 📦 storage to 🧠 machine learning and 🌐 IoT. In this guide, we will explore AWS servers comprehensively, covering key concepts, deployment strategies, and practical programming examples. This article is designed for 👩‍💻 developers, 🛠️ system administrators, and…

#AWS#AWSDeveloper#AWSGuide#AWSLambda#CloudComputing#CloudInfrastructure#CloudSolutions#DevOps#EC2#ITProfessionals#programming#PythonProgramming#Serverless

0 notes

Text

Future-dated Capacity Reservations For AWS EC2 On-Demand

Announcing future-dated Capacity Reservations for Amazon EC2 On-Demand capacity

Amazon Elastic Compute Cloud (EC2) is used for databases, virtual desktops, big data processing, web hosting, HPC, and live event broadcasting. Because some of these workloads are critical, customers asked for the ability to reserve capacity for them.

In 2018, EC2 On-Demand Capacity Reservations (ODCRs) were introduced to let users reserve capacity flexibly. Customers have since used Capacity Reservations (CRs) to run vital services such as processing financial transactions, hosting consumer websites, and live-streaming sporting events.

Today, AWS announced that CRs can also be used to reserve capacity for upcoming workloads. Many customers anticipate future events, such as product releases, large migrations, or end-of-year sales events like Diwali or Cyber Monday. Since these events are crucial, customers want to be sure they have the capacity when and where they need it.

Until now, CRs were only available just-in-time. They helped customers reserve capacity for such events, but customers had to either plan carefully to provision CRs just-in-time at the start of the event, or provision the capacity ahead of time and pay for it before it was needed.

CRs can now be planned and scheduled up to 120 days ahead of time. To begin, you specify the amount of capacity you require, the start date, your delivery preference, and the minimum amount of time you commit to using the capacity reservation. Scheduling a capacity reservation carries no upfront charge. After evaluating and approving the request, Amazon EC2 activates the reservation on the start date, and you can launch instances right away.

Getting started with future-dated Capacity Reservations

To reserve future-dated capacity, select Capacity Reservations in the Amazon EC2 console, choose Create On-Demand Capacity Reservation, and then choose Get started.

To create a capacity reservation, specify the instance type, platform, Availability Zone, tenancy, and number of instances you want to reserve.

In the Capacity Reservation details section, use the Capacity Reservation starts option to choose a future start date and your commitment duration.

You can also choose to end the capacity reservation at a specific time or manually. If you choose Manually, the reservation has no end date. It stays in your account and continues to be billed until you cancel it. Choose Create to reserve this capacity.

After you create the request, it appears in the dashboard with an Assessing status. During this phase, which typically takes five days, AWS systems try to determine whether your request can be supported. As soon as the systems determine that the request is supportable, the status changes to Scheduled. In rare cases, your request may not be supportable.

On the start date you chose, the capacity reservation becomes Active, the total instance count increases to the requested amount, and you can launch instances right away.

After activation, you must hold the reservation for at least the commitment duration you specified. Once the commitment period has passed, you can keep the reservation and continue using it, or cancel it if you no longer need it.
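The timing rules in this walkthrough can be checked with a few lines of date arithmetic. The sketch below validates that a start date falls inside the 120-day scheduling window mentioned above and works out when the commitment period ends. The function name and the example dates are hypothetical, and the check is an illustration rather than anything AWS runs.

```python
from datetime import date, timedelta

MAX_SCHEDULE_DAYS = 120  # scheduling window stated in the post


def plan_reservation(today: date, start: date, commitment_days: int) -> dict:
    """Check the start date against the scheduling window and compute the commitment end."""
    lead_days = (start - today).days
    if lead_days <= 0:
        raise ValueError("start date must be in the future")
    if lead_days > MAX_SCHEDULE_DAYS:
        raise ValueError(f"start date is more than {MAX_SCHEDULE_DAYS} days ahead")
    if commitment_days <= 0:
        raise ValueError("commitment must be at least one day")
    return {
        "lead_days": lead_days,
        # Earliest date on which the reservation could be cancelled.
        "commitment_ends": start + timedelta(days=commitment_days),
    }


if __name__ == "__main__":
    # Hypothetical dates for illustration.
    print(plan_reservation(date(2025, 1, 1), date(2025, 3, 1), commitment_days=14))
```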

Things to consider

Here are some things to know about future-dated Capacity Reservations:

Evaluation: Amazon EC2 takes several factors into account when assessing your request. In addition to the anticipated supply, Amazon EC2 considers the size of your request, how long you intend to hold the capacity, and how early you create the capacity reservation relative to your start date. To improve Amazon EC2’s ability to fulfill your request, create your reservation at least 56 days (8 weeks) before the start date. Requests must be for at least 100 vCPUs, and currently only the C, M, R, T, and I instance types are supported. For most requests, a minimum commitment of 14 days is recommended.

Notification: AWS recommends monitoring the status of your request through the console or Amazon EventBridge. You can use these notifications to send an email or SMS update or to trigger an automation.

Cost: Future-dated capacity reservations are billed in the same way as standard CRs. You are charged the equivalent On-Demand rate whether or not you run instances in the reserved capacity. For example, if you create a future-dated CR for 20 instances and run 15 instances, you are charged for 15 active instances and 5 unused instances in the reservation, including during the minimum commitment period. Savings Plans apply to both the unused reservation and the instances running on the reservation.
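The billing example above (20 reserved, 15 running) works out as follows. The hourly rate in the sketch is a made-up placeholder, not a real EC2 price, and the helper name is hypothetical; the point is only that the total depends on the reserved count, not on how many instances are running.

```python
def reservation_bill(reserved: int, running: int, hourly_rate: float, hours: float) -> dict:
    """Split a capacity reservation's charge into used and unused portions.

    Only instances running inside the reservation are considered here.
    """
    if running > reserved:
        raise ValueError("running instances exceed the reserved count")
    unused = reserved - running
    return {
        "used_instances": running,
        "unused_instances": unused,
        "used_cost": running * hourly_rate * hours,
        "unused_cost": unused * hourly_rate * hours,
        "total_cost": reserved * hourly_rate * hours,
    }


if __name__ == "__main__":
    # 0.5 per instance-hour is a hypothetical rate chosen for easy arithmetic.
    print(reservation_bill(reserved=20, running=15, hourly_rate=0.5, hours=24))
```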

Now available

EC2 future-dated Capacity Reservations are now available in all AWS Regions where Amazon EC2 Capacity Reservations are available. Try Amazon EC2 Capacity Reservations in the Amazon EC2 console.

Read more on Govindhtech.com

#AWSEC2#CapacityReservations#AWSsystems#CRs#EC2#databases#cloudcomputing#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


EC2 Auto Recovery: Ensuring High Availability In AWS

Understanding EC2 Auto Recovery: Ensuring High Availability for Your AWS Instances

Amazon Web Services (AWS) offers a wide range of services to ensure the high availability and resilience of your applications. One such feature is EC2 Auto Recovery, a valuable tool that helps you maintain the health and uptime of your EC2 instances by automatically recovering instances that become impaired due to underlying hardware issues. This blog will guide you through the essentials of EC2 Auto Recovery, including its benefits, how it works, and how to set it up.

1. What is EC2 Auto Recovery?

EC2 Auto Recovery is a feature that automatically recovers your Amazon EC2 instances when they become impaired due to problems with the underlying hardware or with software on the physical host. When an instance fails its system status check, the recovery process restarts it on healthy hardware. This minimizes downtime and helps keep your applications available and reliable.

2. Benefits of EC2 Auto Recovery

Increased Availability: Auto Recovery helps maintain the availability of your applications by quickly recovering impaired instances.

Reduced Manual Intervention: By automating the recovery process, it reduces the need for manual intervention and the associated operational overhead.

Cost-Effective: Auto Recovery is a cost-effective solution as it leverages the existing infrastructure without requiring additional investment in high availability setups.

3. How EC2 Auto Recovery Works

Amazon CloudWatch tracks the status checks of your EC2 instance. If the instance fails its system status check, for example because of an underlying hardware failure or a software problem on the host, the Auto Recovery feature kicks in. It performs the following actions:

Stops the Impaired Instance: The impaired instance is stopped to detach it from the unhealthy hardware.

Starts the Instance on Healthy Hardware: The instance is then started on new, healthy hardware. It keeps its instance ID, private IP address, Elastic IP addresses, and all attached Amazon EBS volumes. Data held only in memory is lost, as it would be after a reboot.

4. Setting Up EC2 Auto Recovery

Setting up EC2 Auto Recovery involves configuring a CloudWatch alarm that monitors the status of your EC2 instance and triggers the recovery process when necessary. Here are the steps to set it up:

Step 1: Create a CloudWatch Alarm

Open the Amazon CloudWatch console.

In the navigation pane, click on Alarms, and then click Create Alarm.

Select Create a new alarm.

Choose the EC2 namespace and select the StatusCheckFailed_System metric.

Select the instance you want to monitor and click Next.

Step 2: Configure the Alarm

Set the Threshold type to Static.

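Define the Threshold value so that the alarm triggers when the system status check fails. The metric reports 1 on failure and 0 otherwise, so a condition such as "greater than or equal to 1" works.

Set the alarm action to Recover this instance.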

Provide a name and description for the alarm and click Create Alarm.
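The same alarm can also be created from code instead of the console. The sketch below uses boto3's put_metric_alarm call; the alarm name, the 60-second period, the two evaluation periods, and the helper function names are assumptions chosen for illustration, not values from the steps above. The recover action is expressed as the ARN arn:aws:automate:REGION:ec2:recover.

```python
def build_recovery_alarm_params(instance_id: str, region: str) -> dict:
    """Build keyword arguments for CloudWatch's put_metric_alarm.

    Hypothetical helper: the alarm name, period, and evaluation periods
    are illustrative choices, not required values.
    """
    return {
        "AlarmName": f"auto-recover-{instance_id}",
        "AlarmDescription": "Recover the instance when the system status check fails",
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed_System",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        "Period": 60,                # seconds per evaluation period
        "EvaluationPeriods": 2,      # two failing periods in a row
        "Threshold": 1.0,            # metric is 1 on failure, 0 otherwise
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # Built-in EC2 recover action for the given region.
        "AlarmActions": [f"arn:aws:automate:{region}:ec2:recover"],
    }


def create_recovery_alarm(instance_id: str, region: str) -> None:
    """Create the alarm. Requires boto3 and AWS credentials."""
    import boto3  # imported here so the builder above works without boto3

    cloudwatch = boto3.client("cloudwatch", region_name=region)
    cloudwatch.put_metric_alarm(**build_recovery_alarm_params(instance_id, region))


if __name__ == "__main__":
    # Placeholder instance ID and region; substitute your own before
    # calling create_recovery_alarm.
    params = build_recovery_alarm_params("i-0123456789abcdef0", "us-east-1")
    print(params["AlarmActions"])
```

Keeping the parameters in a separate function makes it easy to review or log exactly what will be sent before any alarm is created.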

5. Best Practices for Using EC2 Auto Recovery

Tagging Instances: Use tags to organize and identify instances that have Auto Recovery enabled, making it easier to manage and monitor them.

Monitoring Alarms: Regularly monitor CloudWatch alarms to ensure they are functioning correctly and triggering the recovery process when needed.

Testing Recovery: Periodically test the Auto Recovery process to ensure it works as expected and to familiarize your team with the process.

Using IAM Roles: Ensure that appropriate IAM roles and policies are in place to allow CloudWatch to perform recovery actions on your instances.
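As one way to act on the "Monitoring Alarms" practice, the sketch below filters alarm records for those that carry the recover action and are not in the OK state. It assumes the records have the shape of the MetricAlarms entries returned by boto3's describe_alarms (AlarmName, StateValue, AlarmActions); the function name and the sample data are made up for illustration.

```python
def recovery_alarms_needing_attention(metric_alarms: list) -> list:
    """Return names of recovery alarms whose state is not OK.

    Hypothetical helper. Each item is expected to look like an entry of
    the "MetricAlarms" list in a describe_alarms response.
    """
    flagged = []
    for alarm in metric_alarms:
        actions = alarm.get("AlarmActions", [])
        # Recover actions use ARNs ending in ":ec2:recover".
        has_recover = any(action.endswith(":ec2:recover") for action in actions)
        if has_recover and alarm.get("StateValue") != "OK":
            flagged.append(alarm["AlarmName"])
    return flagged


# Made-up sample records in the describe_alarms shape.
sample = [
    {"AlarmName": "web-1", "StateValue": "OK",
     "AlarmActions": ["arn:aws:automate:us-east-1:ec2:recover"]},
    {"AlarmName": "web-2", "StateValue": "INSUFFICIENT_DATA",
     "AlarmActions": ["arn:aws:automate:us-east-1:ec2:recover"]},
    {"AlarmName": "cpu-high", "StateValue": "ALARM",
     "AlarmActions": ["arn:aws:sns:us-east-1:123456789012:ops"]},
]
print(recovery_alarms_needing_attention(sample))
# prints ['web-2']
```

In practice the input would come from boto3.client("cloudwatch").describe_alarms()["MetricAlarms"]. An alarm stuck in INSUFFICIENT_DATA is worth checking, since it may mean the instance it watches no longer exists.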

Conclusion

EC2 Auto Recovery is a powerful feature that enhances the availability and reliability of your applications running on Amazon EC2 instances. By automating the recovery process for impaired instances, it helps reduce downtime and operational complexity. Setting up Auto Recovery is straightforward and involves configuring CloudWatch alarms to monitor the health of your instances. By following best practices and regularly monitoring your alarms, you can ensure that your applications remain resilient and available even in the face of hardware or software issues.

By leveraging EC2 Auto Recovery, you can focus more on developing and optimizing your applications, knowing that AWS is helping to maintain their availability and reliability.
