#Gated Recurrent Unit (GRU)

Explore tagged Tumblr posts

Text

coincidence? i think not

#coincidence? i think not#machine learning#gru#gated recurrent unit#i am talking#i have an mlp on too...#despicable me

2 notes

·

View notes

Text

Discover the fundamentals of artificial intelligence through our Convolutional Neural Network (CNN) mind map. This visual aid demystifies the complexities of CNNs, pivotal for machine learning and computer vision. Ideal for enthusiasts keen on grasping image processing. Follow Softlabs Group for more educational resources on AI and technology, enriching your learning journey.

#Recurrent layer#Hidden state#Long Short-Term Memory (LSTM)#Gated Recurrent Unit (GRU)#Sequence modeling

0 notes

Text

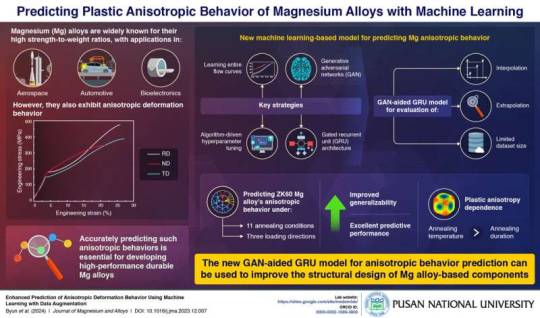

Researchers employ artificial intelligence to unlock the secrets of magnesium alloy anisotropy

Magnesium (Mg) alloys have been popularly used for designing aerospace and automotive parts owing to their high strength-to-weight ratio. Their biocompatibility and low density also make these alloys ideal for use in biomedical and electronic equipment. However, Mg alloys are known to exhibit plastic anisotropic behavior. In other words, their mechanical properties vary depending on the direction of the applied load. To ensure that the performance of these Mg alloys is unaffected by this anisotropic behavior, a better understanding of the anisotropic deformations and the development of models for their analysis is needed. According to Metal Design & Manufacturing (MEDEM) Lab led by Associate Professor Taekyung Lee from Pusan National University, Republic of Korea, machine learning (ML) might hold answers to this prediction problem. In their recent breakthrough, the team proposed a novel approach called "Generative adversarial networks (GAN)-aided gated recurrent unit (GRU)."

Read more.

#Materials Science#Science#Artificial intelligence#Magnesium#Anisotropy#Machine learning#Computational materials science#Pusan National University

6 notes

·

View notes

Link

0 notes

Text

How RNNs Imitate Memory: A Friendly Guide to Sequence Modeling

In today’s fast-moving world of artificial intelligence and machine learning, understanding how models process sequences of data is essential. Whether it’s predicting the next word in a sentence, transcribing speech, or forecasting stock prices, Recurrent Neural Networks (RNNs) play a crucial role. But how exactly do these models manage to "remember" past information, and why are they so powerful when it comes to handling sequential data? Let’s break it down in simple terms.

What Are RNNs and Why Do They Matter?

At their core, Recurrent Neural Networks are a type of neural network designed specifically to work with sequences. This sets them apart from traditional feedforward networks, which treat each input independently. RNNs, however, take into account what has come before — almost like they have a built-in short-term memory. This allows them to understand the order of things and how past events influence the present, making them perfect for tasks where timing and sequence matter.

How Do RNNs Mimic Memory?

RNNs don’t literally have memory like a human brain, but they do a good job of approximating it. Here’s how:

1. Passing Information Forward

Imagine reading a sentence one word at a time. With each word, you remember the previous ones to make sense of the sentence. RNNs do something similar by passing information from one step to the next using what's called a hidden state.

This hidden state is updated every time the model processes a new input. So at each time step, the network not only looks at the current input but also considers what it "remembers" from before. The formula might look technical, but in essence, it's just constantly refreshing its understanding of context.

2. Maintaining Continuity

Because of this hidden state, RNNs can handle data where one piece depends on what came before — like understanding a sentence, predicting the next value in a time series, or generating music. They essentially maintain a thread of continuity, similar to how our brains follow conversations or narratives.

3. Handling Longer Sequences

Standard RNNs can struggle with long-term memory due to issues like the vanishing gradient problem, which makes it difficult for them to retain information over long sequences. That’s where advanced models like Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU) come in. These architectures introduce gates that help the network decide what to keep and what to forget — much like how we might focus on important details and disregard irrelevant ones.

Where Do We See RNNs in Action?

The practical applications of RNNs are everywhere:

Chatbots and virtual assistants rely on RNNs to maintain context and generate coherent replies.

Speech-to-text systems use them to process audio signals in sequence, converting speech into accurate text.

Financial forecasting and weather prediction models use RNNs to look at historical data and predict future trends.

Even video analysis applications use RNNs to understand sequences of frames and recognize patterns over time.

Why Learning RNNs and Sequence Modeling Matters

While it’s fascinating to read about RNNs, working with them in real-world projects brings a completely new level of understanding. Building models, tuning hyperparameters, and dealing with real data challenges are skills best learned through practical, hands-on training.

If you’re eager to dive into this field and you're in India — especially around Kolkata — the Machine Learning Course in Kolkata is an excellent place to start.

Learn from Experts at the Boston Institute of Analytics, Kolkata

The Boston Institute of Analytics (BIA) is known globally for providing industry-relevant training in machine learning, AI, and data science. Their Machine Learning Course in Kolkata is designed to help aspiring data professionals gain practical knowledge and hands-on experience.

Here’s what you can expect from their program:

Hands-on projects using real-world data sets that help you move beyond theory.

In-depth modules covering neural networks, RNNs, LSTMs, GRUs, and other advanced architectures.

Training in popular tools and libraries like Python, TensorFlow, Keras, and PyTorch.

Access to experienced instructors who are active in the data science and AI industry.

Strong placement support and career guidance to help you make the transition into a data-driven career.

Trust, Authority, and Experience Matter

When you choose to learn something as complex and future-focused as machine learning and deep learning, it’s important to do so from a credible, trusted institution. The Boston Institute of Analytics has built its reputation through:

An impressive track record of alumni placed in companies like Google, Amazon, and Deloitte.

Strong industry partnerships and endorsements.

Transparent, practical, and well-structured courses that are globally recognized.

This ensures that when you complete their program, you’re not just gaining knowledge — you're gaining the confidence to apply it in real-world scenarios.

The Future of Sequence Modeling: Endless Possibilities

As AI continues to grow, sequence modeling will only become more relevant. Technologies that understand time, order, and context are key to unlocking new levels of human-computer interaction. Whether it’s smarter voice assistants, real-time language translation, or predictive healthcare analytics, RNNs and their evolved forms (like LSTMs and GRUs) will continue to be at the heart of these innovations.

Final Thoughts

RNNs are powerful because they mimic a type of memory, enabling machines to understand sequences and patterns that unfold over time. From simple tasks like predicting the next word in a sentence to complex applications like forecasting stock prices or analyzing video footage — they’re everywhere.

But more importantly, they’re accessible. With the right training, anyone with curiosity and commitment can learn how to use these models. If you’re looking to start your journey in AI and machine learning, enrolling in the Data Science Course could be the perfect first step.

#data science course#data science training#ai training program#online data science course#Best Data Science Institute#Data Science Program#Best Data Science Programs#Machine Learning Course in Kolkata

0 notes

Text

Deep Learning for Time Series Prediction: LSTM & GRU Guide

1. Introduction 1.1. Overview and Importance Time-series prediction is a critical task across various domains, from finance to healthcare, involving forecasting future values based on historical data. Deep learning, particularly with LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) models, has emerged as a powerful approach, offering superior performance over traditional methods by…

0 notes

Text

Recurrent Neural Network Courses:

In the field of deep learning, recurrent neural networks (RNNs) have emerged as a key component, especially for processing sequential input such as text, audio, and time series. RNNs possess loops that enable them to retain information over time steps, which sets them apart from standard feed forward neural networks and makes them particularly effective for jobs requiring context. This article will explore the role that RNNs play in deep learning, including how to train them efficiently and which courses are the best to become proficient in them.

Neural networks of the RNN class are very good at handling data sequences, which makes them perfect for time series prediction, machine translation, and natural language processing (NLP). RNNs' "memory their ability to retain data from past inputs in their hidden states and use that information to affect subsequent outputs is its primary characteristic.

Why Use RNNs in Deep Learning?

Sequential data is frequently essential for deep learning. RNNs can capture dependencies across time in a variety of applications, including interpreting phrase context, assessing a series of photographs, and forecasting market prices based on historical trends. They are therefore especially well-suited for tasks involving sequential patterns and context. But problems like vanishing gradients make vanilla RNNs unreliable on lengthy sequences, which might impede learning. Thankfully, more sophisticated versions have been developed to get around these restrictions, such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU).

Recurrent Neural Network Training:

When training RNNs, there are a few different problems than with standard neural networks. Back propagation Through Time (BPTT), a technique for propagating error gradients through time, is used in the process of modifying the weights based on sequential input data. Optimization is challenging, though, because traditional back propagation frequently encounters problems like vanishing or ballooning gradients, particularly with lengthy sequences.

The following are some crucial factors to take into account when training RNNs:

i)Selecting the Correct Architecture:

When handling lengthy sequences or intricate dependencies, LSTM and GRU networks frequently outperform vanilla RNNs.

ii)Optimization Strategies:

While learning rate schedules and batch normalization can enhance convergence, gradient clipping can help reduce the effects of expanding gradient issues.

iii)Regularization:

Especially when working with large datasets, dropout and other regularization techniques help prevent overfitting.

iv)Hardware Points to Remember:

RNN training can be computationally demanding; therefore, making use of GPUs and distributed computing frameworks such as PyTorch or TensorFlow can greatly accelerate the training process.

Top Courses on Recurrent Neural Networks:

Numerous Best online courses are available to help you become proficient with RNNs; they include both theoretical information and real-world, practical experience. Here are a few highly suggested items:

i)Andrew Ng's Deep Learning Specialization:

A thorough introduction to deep learning is provided by this course, which also includes a thorough module on sequence models that covers RNN in deep learning, LSTMs, and GRUs. TensorFlow is used in both theoretical and hands-on Python coding projects in Andrew Ng's course.

ii)An Introduction to Recurrent Neural Networks:

For those who are new to RNNs, this course is a fantastic place to start. It goes over the fundamentals of RNN theory, shows you how to use Keras to create RNNs in Python, and contains a number of projects, including sentiment analysis and text generation.

iii) Deep learning and advanced NLP:

While it covers more ground than simply RNNs and touches on more complex architectures like Transformer models, Stanford's NLP with deep learning course is a great resource for anyone interested in learning how RNNs fit into the larger picture of NLP. Comprehensive coverage of GRU and LSTM networks is included.

iv)PyTorch for AI and Deep Learning:

For individuals who would rather use PyTorch than TensorFlow, this course is perfect. It uses PyTorch to teach RNNs and other sequence models, with real-world examples including time series data prediction and character-level language model implementation.

In summary,

Deep learning has advanced significantly, thanks in large part to recurrent neural networks, particularly in fields where sequential data processing is necessary. However, it takes both theoretical knowledge and AI-Applications to properly teach them and comprehend their subtleties. Anyone may learn RNNs and use them to solve a wide range of challenging issues, from predictive analytics to language processing, if they enroll in the appropriate courses.

Investing through SkillDux in RNN courses can provide you with a thorough understanding of sequence models and the skills necessary to effectively address real-world problems, regardless of your level of experience.

0 notes

Text

Large Language Models feel the direction of time

16.09.24 - Researchers have found that AI large language models, like GPT-4, are better at predicting what comes next than what came before in a sentence. This “Arrow of Time” effect could reshape our understanding of the structure of natural language, and the way these models understand it. Large language models (LLMs) such as GPT-4 have become indispensable for tasks like text generation, coding, operating chatbots, translation and others. At their heart, LLMs work by predicting the next word in a sentence based on the previous words – a simple but powerful idea that drives much of their functionality. But what happens when we ask these models to predict backward — to go “backwards in time” and determine the previous word from the subsequent ones? The question led Professor Clément Hongler at EPFL and Jérémie Wenger of Goldsmiths (London) to explore whether LLMs could construct a story backward, starting from the end. Working with Vassilis Papadopoulos, a machine learning researcher at EPFL, they discovered something surprising: LLMs are consistently less accurate when predicting backward than forward. A fundamental asymmetry The researchers tested LLMs of different architectures and sizes, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) neural networks. Every one of them showed the “Arrow of Time” bias, revealing a fundamental asymmetry in how LLMs process text. Hongler explains: “The discovery shows that while LLMs are quite good both at predicting the next word and the previous word in a text, they are always slightly worse backwards rather than forward: their performance at predicting the previous word is always a few percent worse than at predicting the next word. This phenomenon is universal across languages, and can be observed with any large language model.” The work is also connected to the work of Claude Shannon, the father of Information Theory, in his seminal 1951 paper. Shannon explored whether predicting the next letter in a sequence was as easy as predicting the previous one. He discovered that although both tasks should theoretically be equally difficult, humans found backward prediction more challenging – though the performance difference was minimal. Intelligent agents “In theory, there should be no difference between the forward and backward directions, but LLMs appear to be somehow sensitive to the time direction in which they process text,” says Hongler. “Interestingly, this is related to a deep property of the structure of language that could only be discovered with the emergence of Large Language Models in the last five years.” The researchers link this property to the presence of intelligent agents processing information, meaning that it could be used as a tool to detect intelligence or life, and help design more powerful LLMs. Finally, it could point out new directions to the long-standing quest to understand the passage of time as an emergent phenomenon in physics. The work was presented at the prestigious International Conference on Machine Learning (2024) and is currently available on arXiv. From theater to math The study itself has a fascinating backstory, which Hongler relates: “In 2020, with Jérémie [Wenger], we were collaborating with The Manufacture theater school to make a chatbot that would play alongside actors to do improv; in improv, you often want to continue the story, while knowing what the end should look like. “In order to make stories that would finish in a specific manner, we got the idea to train the chatbot to speak ‘backwards’, allowing it to generate a story given its end – e.g., if the end is ‘they lived happily ever after’, the model could tell you how it happened. So, we trained models to do that, and noticed they were a little worse backwards than forwards. “With Vassilis [Papadopoulos], we later realized that this was a profound feature of language, and that it… http://actu.epfl.ch/news/large-language-models-feel-the-direction-of-time (Source of the original content)

1 note

·

View note

Link

Explaining how Gated Recurrent Neural Networks workContinue reading on Towards Data Science » #AI #ML #Automation

0 notes

Text

How is deep learning used in speech recognition?

Deep learning speechsynthesis:-Application of deep learning models to generate natural-sounding human speech from text

Key Techniques:-Utilizesdeep neural networks (DNN) trained with a large amount of recorded speech and text data

BreakthroughModels:-WaveNet by DeepMind, char2wav by Mila, Tacotron , and Tacotron2 by Google, VoiceLoop by Facebook

AcousticFeatures:-Typically use spectrograms or mel-spectrograms for modeling raw audio waveforms

Speech recognition is afield that involves converting spoken language into written text, enabling various applications such as voice assistants, dictation systems, and machine translation. Deep learning has significantly contributed to theadvancement of speech recognition, offering various architectures and techniques to improve accuracy and robustness.

Deep learning architectures for speech recognition include Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and Transformers. RNNs are particularly suited for speech recognition tasks due to their ability to handle sequential data. Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) are popular variants of RNNs that address the vanishing gradient problem, enabling them to learn long-term dependencies in speech data.

Convolutional Neural Networks (CNNs) are another deep learning architecture successfully applied to speech recognition tasks. CNNs are particularly effective in extracting local features from spectrogram images, commonly used as input representations in speech recognition.

Transformers are a morerecent deep learning architecture with promising results in speech recognition tasks. Transformers are particularly effective in handling long-range dependencies in speech data, which is a common challenge in speech recognition tasks.

Deep learning techniquesfor speech recognition include Connectionist Temporal Classification (CTC), Attention Mechanisms, and End-to-End Deep Learning. CTC is a popular technique for speech recognition that allows for the direct mapping of input sequences to output sequences without the need for explicit alignment. Attention Mechanisms are another deep learning technique that has been successfully applied to speech recognition tasks, enabling models to focus on relevant parts of the input sequence for each output. End-to-end deep Learning is a more recent technique that involves training a single deep learning model to perform all steps of the speech recognition process, from feature extraction to decoding.

Deep learning hassignificantly improved the accuracy and robustness of speech recognition systems, enabling various applications such as voice assistants, dictation systems, and machine translation. However, there are still challenges to be addressed, such as handling noisy environments, dealing with different accents and dialects, and ensuring privacy and security.

In summary, deep learninghas revolutionized speech recognition, offering various architectures and techniques to improve accuracy and robustness. Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and Transformers are popular deep learning architectures for speech recognition tasks, while Connectionist Temporal Classification (CTC), Attention Mechanisms, and End-to-End Deep Learning are popular deep learning techniques for speech recognition. Despite the significant progress made in speech recognition, there are still challenges to be addressed, such as handling noisy environments, dealing with different accents and dialects, and ensuring privacy and security.

There are some courses inmany courses like this in which one of the Top Engineering college in Jaipur Which is Arya College of Engineering & I.T.

0 notes

Text

Study on Neural Network-Guided Sparse Recovery for Interrupted-Sampling Repeater Jamming Suppression | Chapter 14 | Novel Perspectives of Engineering Research Vol. 8

ISRJ (interrupted-sampling repeater jamming) is a novel sort of DRFM-based jamming for linear frequency modulation (LFM) transmissions. ISRJ can obtain radar coherent processing gain by intercepting the radar signal slice and retransmitting it numerous times, resulting in the formation of multiple false target groups following pulse compression (PC), posing significant risks to radar imaging and target detection. However, in the time-frequency (TF) domain, the features of an ISRJ fragment interception can be exploited to discriminate from the true target signal. This study investigates the feasibility of exploiting the discontinuous distribution characteristics of ISRJ in the TF domain relative to the real target to create an adaptive interference suppression approach using a neural network. This work proposes a new method for ISRJ suppression based on the distribution characteristic of the echo signal and the coherence of ISRJ to radar signal. The position of the real target is calculated using a gated recurrent unit neural network (GRU-Net) in this method, and the real target may therefore be reconstructed by adaptive filtering in the sparse representation of the echo signal based on the target locating result. The reconstruction result comprises only the true target, and ISRJ's bogus target groups are totally suppressed. The projected GRU-Net has a target locating accuracy of up to. Simulations have demonstrated the efficacy of the proposed strategy. Author(S) Details Zijian Wang Aerospace Information Research Institute, Chinese Academy of Sciences, Beijing 100190, China and School of Electronic, Electrical and Communication Engineering, University of Chinese Academy of Sciences, Beijing 101408, China. Wenbo Yu Aerospace Information Research Institute, Chinese Academy of Sciences, Beijing 100190, China and School of Electronic, Electrical and Communication Engineering, University of Chinese Academy of Sciences, Beijing 101408, China. Zhongjun Yu Aerospace Information Research Institute, Chinese Academy of Sciences, Beijing 100190, China and School of Electronic, Electrical and Communication Engineering, University of Chinese Academy of Sciences, Beijing 101408, China. Yunhua Luo Aerospace Information Research Institute, Chinese Academy of Sciences, Beijing 100190, China. Jiamu Li Aerospace Information Research Institute, Chinese Academy of Sciences, Beijing 100190, China and School of Electronic, Electrical and Communication Engineering, University of Chinese Academy of Sciences, Beijing 101408, China. View Book:- https://stm.bookpi.org/NPER-V8/article/view/6120

#Interrupted-Sampling Repeater Jamming (ISRJ)#time-frequency (TF)#analysis#gated recurrent unit neural network (GRU-Net)#sparse representation#target locating#jamming suppression

0 notes

Text

Gated Recurrent Units (GRUs) are a type of recurrent neural network (RNN) architecture that was introduced as an alternative to traditional RNNs and Long Short-Term Memory (LSTM) units. Like other RNN variants, GRUs are designed to process sequential data and capture dependencies over time.

1 note

·

View note

Text

BME646 and ECE60146: Homework 8 solved

1 Introduction This homework has the following goals: 1. To gain insights into the workings of Recurrent Neural Networks. These are neural networks with feedback. You need such networks for language modeling, sequence-to-sequence learning (as in automatic translation systems), time-series data prediction, etc. 2. To understand how the gating mechanisms in GRU (Gated Recurrent Unit) helps combat…

View On WordPress

0 notes

Text

Evolution of Generative Artificial Intelligence for Text (ChatGPT)

Many companies and research organizations that are pioneers in AI have been actively contributing to the growth of generative AI content by bringing in & applying different AI models to fine-tune the precision of the output.

Before discussing the applications of generative AI in text and large language models, let’s see how the concept has evolved over the decades.

RNN sequencing

After researchers proposed the seq2seq algorithm, which is a class of Recurrent Neural Networks (RNN), it was later adopted & developed by companies like Google, Facebook, Microsoft, etc., to solve Large Language Problems.

The element-by-element sequencing model revolutionized how machines conversed with humans, yet, it had limitations and drawbacks like grammar mistakes and bad semantic sense.

LSTM

RNN suffered from a problem called Vanishing Gradient. LSTM (Long Short Term Memory) and GRU (Gated Recurring Unit) were introduced to address this issue.

Though in structure, they remain the same, LSTM preserves the context/information present in the initial part of the statement by preventing the issue of Vanishing Gradient. To retain the part of the statement, it introduced cell state and cell gates with layers such as forget gate, input gate, and output gate.

Transformer model

While LSTM was a rock star during its time in the NLP evolution, it had issues such as slow training and lack of contextual awareness due to a one-directional process. Bi-directional LSTM learned the context in forward & backward directions and concatenated them. Still, it was not ahead and back together, and it struggled to perform tasks such as text summarization and Q&A that deal with long sequences. Enter, Transformers. This popular model was introduced with improved training efficiency. Also, the model could parallelly process the sequences, based on which many text training algorithms were developed.

UNILM

Unified language model was developed from a transformer model, BERT – Bi-directional Encoder Representations. In this model, every output element is connected to every input element, and the language co-relation between the words was dynamically calculated. AI content improved with the tuning of algorithms and extensive training.

T5

Text to Text Transfer Transformer, with text as input, generates target text. This is an enhanced language translation model. It had a bi-directional encoder and a left-right decoder pre-trained on a mix of unsupervised and supervised tasks.

BART

Bi-directional & auto regressive transformers, a model structure proposed in 2020 by Facebook. Consider it as a generalization of BERT and GPT. It combines ideas from both the encoder and decoder. It had a bi-directional encoder and a left-to-right decoder.

GPT: Generative Pre-trained Transformer

GPT is the first autoregressive model based on Transformer architecture. Evolved as GPT, GPT2, GPT3, GPT 3.5 (aka GPT 3 Davinci-003) pre-trained model, which was fine-tuned & released to the public as ChatGPT (based on InstructGPT) by OpenAI.

The backbone of the model is Reinforcement Learning from Human Feedback (RLHF). It’s continuously human-trained for text, audio, and video.

This version of GPT converses like a human to a great extent, which is why this bot has a lot of hype. With all the tremendous efforts that went into scaling AI content, companies are striving to make it more human-like.

More Large Language and Generative AI models were built and released by Google (BARD based on Language Model for Dialogue Applications (LaMDA), HuggingFace (BLOOM), and the latest from Meta Research LLaMA, which was open-sourced.

Application of ChatGPT and Generative AI models

With companies expanding their investment in data analytics to use the power of data to derive critical insights, we must discuss AI bots’ role in data analytics.

As we read in the earlier paragraph on various applications of Generative AI, different models come into play for the same. We continue to see ChatGPT experiences from people of various backgrounds and industries. How and where can enterprises use ChatGPT?

As you know, ChatGPT is Language Model. Its application is predominantly in “Text” and tasks that require human-like conversation, taking notes in a meeting, composing an email, writing content, and increasing developers’ productivity.

Key challenges in using open-source AI bots for data analysis

Most data analysis projects deal with sensitive data. Large organizations sign agreements on data privacy protection with customers and the government that prevents them from disclosing sensitive information to open-source tools.

That’s why organizations must understand what kind of support the data engineering team looks for from AI bots and ensure no sensitive information is disclosed.

A known risk: AI models have continuously evolved to ensure improved accuracy. This implies that there is definitely room for errors. The open-source conversational bots, even if well-trained to perform certain activities, hold no responsibility for the output it provides. You need the right eye to ensure the AI gets the correct data, understands it, and does what it should.

Responsible governance & corporate policies

Technology is fast evolving and has the entire world working on it such that innovations, new tools, and upgrades are happening in the flash of an eye. It’s so compelling to try new tools for critical tasks. But, every organization must ensure the right policies are in place to responsibly handle booms or sensations like ChatGPT.

0 notes

Link

0 notes

Text

Long short-term memory from scratch

Long short-term memory (LSTM) is a type of recurrent neural network (RNN) that is designed to remember long-term dependencies. In other words, it can remember information from multiple inputs and use them to make decisions. This makes LSTMs particularly useful for tasks such as language modeling and machine translation, where the context of the words being used must be remembered over long sequences. In this tutorial, we will build an LSTM from scratch using Python and NumPy. We will use the same structure as a traditional RNN, but with some modifications to the activation functions and weights used. We will also look at how to train our model using backpropagation and gradient descent. By the end of this tutorial, you should have a basic understanding of how to build an LSTM from scratch in Python. First, let's start by defining some basic components of our model: - Inputs: These are the values that our model will receive as input at each time step. The number of inputs should be equal to the number of features in our dataset. - Hidden layers: These are hidden layers of neurons that take in inputs and produce outputs at each time step. - Activation functions: These are mathematical functions that determine how much influence each input has on a neuron's output. Common activation functions used in LSTMs include sigmoid, hyperbolic tangent (tanh), ReLU, and softmax. - Recurrent weights: These are weights that connect neurons across different time steps and help propagate information from one time step to another. - Output layer: This layer produces a single output value based on all the inputs and hidden layers at a given time step. Examples of long short-term memory. - Gated Recurrent Unit (GRU): A type of LSTM that uses gates to control the flow of information and reduce the number of parameters required for training. - Peephole Connections: Connections between each node in an LSTM cell and the output from the previous time-step, allowing the cell to access information from further in the past. - Forget Gate: A gate in an LSTM cell that controls how much information is stored in a memory cell and how much is forgotten. - Input Gate: A gate in an LSTM cell that determines how much new input is allowed into a memory cell at each time-step. - Output Gate: A gate in an LSTM cell that determines which parts of the memory cell will be used as output at each time-step. - Memory Cell: The central component of an LSTM network, responsible for storing and retrieving information over long periods of time. - Time-Steps: Each step through an LSTM network, where information from previous steps is combined with new input to update memory cells and generate outputs. - Vanishing Gradient Problem: The problem faced by RNNs where error gradients become too small or vanish over long-time intervals, preventing accurate learning over long periods of time. - Backpropagation Through Time (BPTT): An algorithm used to train RNNs by applying backpropagation over multiple time steps or layers at once, allowing for faster training times than traditional methods for deep networks with many layers/time steps. - Truncated Backpropagation Through Time (TBPTT): An algorithm used to train RNNs by only backpropagating through a certain number of time steps before resetting error gradients, helping prevent vanishing gradient problems while still allowing for fast training times on large datasets with many layers/time steps Once we have these components defined, we can start building our LSTM model using NumPy and Python code. We'll start by defining two classes - one for our LSTM cells and one for our overall model - which will store all of our parameters such as weights, biases, activations, etc.. Then we'll define two separate functions - one for forward propagation (where we process input data) and one for backpropagation (where we adjust weights/biases through gradient descent). Finally, we'll train our model using batches of training data until it reaches an acceptable level of accuracy on unseen test data sets. Once our model is trained correctly, it should be able to accurately predict outputs based on inputs it has seen before (or similar ones). This makes it an effective tool for tasks such as language modeling or machine translation where context must be remembered over long sequences. Read the full article

0 notes