
Graph Database Market - Forecast (2024-2030)

Overview

The Graph Database Market is forecast to reach $3.21 billion by 2025, growing at a CAGR of 22.6% during the forecast period 2020-2025. The growth of the Graph Database Market can be attributed to the increasing number of application areas for graph databases, the growing use of connected data to optimize marketing performance, the rising adoption of graph database software in the healthcare sector, and the need for faster response times and greater accuracy when discovering new data correlations. Rising adoption of cloud-based solutions to enhance business processes will further fuel market growth. Increasing use of virtualization in big data analytics and technological advancements in graph databases are expected to create lucrative opportunities for the market during the forecast period.


Key Takeaways

Large enterprises are expected to drive the adoption of graph database tools, as implementing these tools and services simplifies risk and compliance management tasks.

As most organizations have digitalized their businesses, the supply chain management application is expected to grow at the highest CAGR, owing to the rising need for real-time, location-based insights across the entire supply chain.

Growing dependence on connected data for fraud detection across industries such as e-commerce, banking, financial services, and insurance contributes to the growth of the global graph database market.

The transportation & logistics industry is expected to witness the highest CAGR in the graph database market during the forecast period, due to the rising adoption of graph database technology for fast computation, for localized door-to-door delivery of goods between buyers and sellers, and for scaling businesses to cover the supply chain.

Implementation challenges and a lack of technical expertise are expected to create hurdles for the Graph Database Market.

Component Type - Segment Analysis

The software segment is expected to account for the majority share of the Graph Database Market during the forecast period 2020-2025, owing to rising demand for software: massive volumes of data are generated continuously, which makes it difficult for enterprises to manage that data with traditional relational database infrastructure. These organizations need to manage their data efficiently and effectively to enhance productivity and maintain business continuity. The proliferation of big data has pushed vendors to design graph database software that helps Information Technology (IT) teams simplify and manage their decision-making processes. For instance, in July 2019, Dgraph raised $11.5 million for scalable graph database solutions. Graph database tools are thus becoming prevalent among data-sensitive organizations coping with ever-increasing data volumes, which signals an opportunity for the growth of the Graph Database Market.


End-user - Segment Analysis

Among end users, the healthcare and life sciences sector is expected to dominate the Graph Database Market, growing at a CAGR of 12.48% during the forecast period 2020-2025. Graph database software plays a key role in healthcare and life sciences for recording patient information and making it available to patients and healthcare providers. Healthcare organizations and institutions use graph database tools to improve operational efficiency and safety, reduce costs, and raise the quality of patient care, which is fueling adoption in the sector. Industry regulations such as HIPAA and HITECH provide guidelines for healthcare data privacy and the secure transmission of electronic health data. Healthcare-focused start-ups are leveraging graph database technology to meet unmet customer needs. For instance, Zephyr Health, a health-focused start-up, uses graph database technology as an important component of its service offerings. Furthermore, graph database technology lets healthcare organizations exploit the connections within their existing data, such as linked genomic data or patient treatment information, which creates opportunities for the graph database market.

Geography - Segment Analysis

North America dominated the graph database market with a 42% share in 2019, and this trend is expected to continue over the forecast period 2020-2025. The emergence of technology-based enterprises and industries has created significant growth opportunities for graph database vendors in this region, as organizations rely heavily on data, which drives the adoption of graph database tools and related technologies. An increasing number of manufacturing firms, the proliferation of Internet of Things (IoT) devices, and the emergence of AI will further fuel market growth in the region. The rapid generation of data across industry verticals and increasing investment in new technologies such as Machine Learning (ML) are expected to propel demand for graph database tools during the forecast period. Increasing investments by graph database vendors in this region will further fuel market growth. For instance, in September 2019, TigerGraph, a California-based software developer, raised $32 million for graph database tools.


Drivers – Graph Database Market

Real-time big data mining with visualization of results to drive the Graph Database Market.

Graph databases ease the distribution of data across data centers or cloud regions, making business applications ready to scale and able to deliver real-time insights and experiences. They also help present insights through data visualizations and provide real-time predictions on streaming data. Hence, graph databases are used to enhance business processes, improve decision-making, and automate, optimize, and direct decisions on demand to reach business goals. There is a growing consensus that graph databases offer the most direct path from the value found in data to business decisions.

Penetration of connected data to optimize marketing performance is expected to drive the Graph Database Market.

Graph databases are primarily designed for highly interconnected data. They work best when the data is connected and is best represented by how it links or refers to other data; social networks are a typical application of graph database technology. For highly connected data, graph database technology offers several benefits over relational databases, including better performance, greater flexibility, and easier data modeling, which are expected to create lucrative opportunities for the graph database market. Highly connected data can require many joins, which are generally expensive: after more than seven self/recursive joins, a relational database management system slows down considerably compared with a graph database.
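To make the join argument concrete: in a relational schema, each extra hop of a "friends of friends" query usually costs another self-join on a relationships table, whereas a graph store can follow stored links directly from node to node. The following minimal Python sketch assumes a small in-memory adjacency list; the `graph` data and the `within_hops` helper are hypothetical illustrations, not the API of Neo4j, TigerGraph, or any other product.

```python
from collections import deque

# Hypothetical social graph stored as an adjacency list: each person maps
# directly to the people they are connected to (no join table needed).
graph = {
    "alice": ["bob", "carol"],
    "bob": ["alice", "dave"],
    "carol": ["alice", "erin"],
    "dave": ["bob", "frank"],
    "erin": ["carol"],
    "frank": ["dave"],
}

def within_hops(graph, start, max_hops):
    """Return {node: hop distance} for nodes reachable from `start` in 1..max_hops hops.

    Breadth-first traversal: each hop only follows links from nodes already
    reached, instead of joining the whole relationships table again.
    """
    seen = {start}
    reached = {}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbour in graph.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                reached[neighbour] = depth + 1
                queue.append((neighbour, depth + 1))
    return reached

print(within_hops(graph, "alice", 2))  # {'bob': 1, 'carol': 1, 'dave': 2, 'erin': 2}
```

Each extra hop touches only the neighbors of nodes already reached; real-world performance still depends heavily on the database engine, indexing, and the shape of the data.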

Challenges – Graph Database Market

Lack of standardization and of programming ease to hamper market growth.

Although graph databases are technically NoSQL databases, in practice many of them are difficult to deploy across a low-cost cluster and have to run on a single machine, which is why performance can degrade rapidly across a network. Another potential drawback is that there is no standard query language comparable to SQL (Structured Query Language) for retrieving data from graph databases, so developers may have to write their queries in Java, which means employing expensive programmers, or use SPARQL or one of the other query languages developed to support graph databases. These challenges may hinder the growth of the Graph Database Market.


Market Landscape

The Graph Database Market is dominated by major solution providers such as IBM (US), Microsoft (US), Oracle (US), AWS (US), Neo4j (US), OrientDB (UK), TIBCO (US), Franz (US), OpenLink Software (US), MarkLogic (US), TigerGraph (US), DataStax (US), Ontotext (Bulgaria), Stardog (US), Cray (US), ArangoDB (US), Bitnine (US), Objectivity (US), Memgraph (UK), Cambridge Semantics (US), Fluree (US), and Blazegraph (US). Investments, product launches, and partnerships are the key strategies adopted by players in the Graph Database Market.

Product Launches / Collaborations / Investments

In November 2018, Neo4j raised $80 million for next-generation graph databases to grow its developer tools and support popular use cases, particularly graph-enabled artificial intelligence (AI) and machine learning (ML) systems.

In April 2019, Neo4j and Google Cloud announced a strategic partnership that will offer the Neo4j graph database delivered as a seamless experience integrated with GCP console, billing, and support services.

In July 2019, TigerGraph launched its PartnerGraph Program to meet growing demand for graph analytics in AI and machine learning. The new program enables partners to leverage TigerGraph's scalable graph database and analytics platform.


Data Analysis: Turning Information into Insight

In today's digital age, data has become a vital asset for businesses, researchers, governments, and individuals alike. However, raw data on its own holds little value until it is interpreted and understood. This is where data analysis comes into play. Data analysis is the systematic process of inspecting, cleaning, transforming, and modeling data with the goal of discovering useful information, drawing conclusions, and supporting decision-making.


What is Data Analysis?

At its core, data analysis involves extracting meaningful insights from datasets. These datasets can range from small, structured spreadsheets to large, unstructured data lakes. The primary aim is to make sense of data in order to answer questions, solve problems, or identify trends and patterns that are not immediately apparent.

Data analysis is used in virtually every industry, from healthcare and finance to marketing and education. It enables organizations to make evidence-based decisions, improve operational efficiency, and gain competitive advantages.

Types of Data Analysis

There are several types of data analysis, each serving a distinct purpose:

1. Descriptive Analysis

Descriptive analysis answers the question: “What happened?” It summarizes raw data into digestible formats such as averages, percentages, or counts. For instance, a store might analyze last month's sales to determine which products performed best.
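Descriptive analysis often comes down to grouping and aggregating. The minimal sketch below uses invented sales records (the products and figures are hypothetical) to produce counts, totals, percentages, and an average, and ranks products by revenue.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical last-month sales records: (product, units sold, unit price).
sales = [
    ("mugs", 30, 8.0),
    ("t-shirts", 12, 25.0),
    ("mugs", 20, 8.0),
    ("posters", 5, 15.0),
    ("t-shirts", 8, 25.0),
]

units = defaultdict(int)
revenue = defaultdict(float)
for product, qty, price in sales:
    units[product] += qty            # count: total units per product
    revenue[product] += qty * price  # sum: total revenue per product

total_revenue = sum(revenue.values())
# Rank products by revenue and report each one's percentage of the total.
for product in sorted(revenue, key=revenue.get, reverse=True):
    share = 100 * revenue[product] / total_revenue
    print(f"{product}: {units[product]} units, ${revenue[product]:.2f} ({share:.1f}% of revenue)")

# Average: mean units per sales record.
print(f"Average units per order: {mean(qty for _, qty, _ in sales):.1f}")
```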

2. Diagnostic Analysis

This type of analysis explores the reasons behind past outcomes. It answers: “Why did it happen?” For example, if a company sees a sudden drop in website traffic, diagnostic analysis can help pinpoint whether it was caused by a technical problem, changes in search engine rankings, or competitor actions.
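One common diagnostic technique is to break an overall change into its components and see which one accounts for most of it. The sketch below assumes hypothetical weekly visit counts per traffic source, before and during a drop; the sources and numbers are invented for illustration.

```python
# Hypothetical weekly website visits, broken down by traffic source,
# for the week before the drop and the week of the drop.
before = {"organic search": 12000, "direct": 5000, "referral": 2500, "paid": 3000}
after = {"organic search": 6500, "direct": 4900, "referral": 2400, "paid": 3100}

total_change = sum(after.values()) - sum(before.values())
print(f"Total change: {total_change:+d} visits")

# Attribute the overall change to each source, largest decline first.
changes = {source: after[source] - before[source] for source in before}
for source, delta in sorted(changes.items(), key=lambda item: item[1]):
    share = delta / total_change  # fraction of the total change explained by this source
    print(f"{source:>15}: {delta:+6d} visits ({share:.0%} of the total change)")
```

In this invented data, nearly all of the drop comes from organic search, which would point the investigation toward search ranking or indexing rather than, say, paid campaigns.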

3. Predictive Analysis

Predictive analysis uses historical data to forecast future outcomes. It answers: “What is likely to happen?” It relies on statistical models and machine learning algorithms that identify patterns and predict future trends, such as customer churn or product demand.

4. Prescriptive Analysis

Prescriptive analysis provides data-driven recommendations. It answers: “What should we do?” This is the most advanced type of analysis and often combines insights from predictive analysis with optimization and simulation techniques to guide decision-making.
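A prescriptive step often means scoring candidate decisions against predicted scenarios and recommending the best one. The toy sketch below assumes three demand scenarios with made-up probabilities, plus an assumed unit cost and price, and picks the order quantity with the highest expected profit by brute-force search; real problems typically use dedicated optimization solvers or simulation.

```python
# Hypothetical demand scenarios for next month, e.g. from a predictive model:
# (units demanded, probability).
scenarios = [(80, 0.25), (100, 0.5), (120, 0.25)]
unit_cost, unit_price = 6.0, 10.0  # assumed cost to stock and selling price

def expected_profit(order_qty):
    """Expected profit if we stock `order_qty` units; unsold units are a loss."""
    profit = 0.0
    for demand, prob in scenarios:
        sold = min(order_qty, demand)
        profit += prob * (sold * unit_price - order_qty * unit_cost)
    return profit

# Evaluate candidate decisions and recommend the one with the best expected outcome.
candidates = range(60, 141, 10)
best = max(candidates, key=expected_profit)
print(f"Recommended order: {best} units (expected profit ${expected_profit(best):.2f})")
```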

The Data Analysis Process

The data analysis process typically follows these steps:

1. Define the Objective

Before diving into the data, it's essential to clearly understand the question or problem at hand. A well-defined objective guides the entire analysis and ensures that efforts are aligned with the desired outcome.

2. Collect Data

Data can come from numerous sources, including databases, surveys, sensors, APIs, or social media. It's important to make sure that the data is relevant, timely, and of sufficient quality.

3. Clean and Prepare Data

Raw data is often messy: it may contain missing values, duplicates, inconsistencies, or errors. Data cleaning involves addressing these problems. Preparation may include formatting, normalization, or creating new variables.
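The cleaning and preparation tasks listed above can be sketched in plain Python. The records are hypothetical, and mean imputation is only one of several ways to handle missing values; in practice, pandas methods such as `drop_duplicates` and `fillna` are commonly used for the same jobs.

```python
# Hypothetical raw survey records with typical quality problems:
# a duplicate row, inconsistent casing/whitespace, and a missing value.
raw = [
    {"id": 1, "city": "Boston ", "age": "34"},
    {"id": 2, "city": "boston", "age": ""},
    {"id": 1, "city": "Boston ", "age": "34"},  # duplicate of id 1
    {"id": 3, "city": "Denver", "age": "51"},
]

def clean(records):
    seen_ids = set()
    cleaned = []
    for rec in records:
        if rec["id"] in seen_ids:  # drop duplicates by id
            continue
        seen_ids.add(rec["id"])
        cleaned.append({
            "id": rec["id"],
            "city": rec["city"].strip().title(),             # fix inconsistent formatting
            "age": int(rec["age"]) if rec["age"] else None,  # convert type, mark missing
        })
    # Impute missing ages with the mean of the known ages (one option among many).
    known = [r["age"] for r in cleaned if r["age"] is not None]
    fill = sum(known) / len(known) if known else None
    for r in cleaned:
        if r["age"] is None:
            r["age"] = fill
    return cleaned

def add_scaled_age(records):
    """Preparation: create a new variable with age min-max normalized to 0-1."""
    ages = [r["age"] for r in records]
    lo, hi = min(ages), max(ages)
    for r in records:
        r["age_scaled"] = (r["age"] - lo) / (hi - lo) if hi > lo else 0.0
    return records

data = add_scaled_age(clean(raw))
for row in data:
    print(row)
```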

4. Analyze the Data

Common tools for this step include Excel, SQL, Python, R, and specialized software such as Tableau, Power BI, and SAS.

5. Interpret Results

Analysis isn't just about numbers; it's about meaning. Interpreting results involves drawing conclusions, explaining findings, and linking insights back to the original objective.

6. Communicate Findings

Insights must be communicated effectively to stakeholders. Visualizations such as charts, graphs, dashboards, and reports play a vital role in telling the story behind the data.

7. Make Decisions and Take Action

The ultimate aim of data analysis is to inform decisions. Whether it's optimizing a marketing campaign, improving customer service, or refining a product, actionable insights turn data into real-world results.

Tools and Technologies for Data Analysis

A wide selection of tools is available for data analysis, each suited to different tasks and skill levels:

Excel: Great for small datasets and quick analysis. Offers functions, pivot tables, and charts.

Python: Powerful for complex data manipulation and modeling. Popular libraries include Pandas, NumPy, Matplotlib, and Scikit-learn.

R: A statistical programming language widely used for statistical analysis and data visualization.

SQL: Essential for querying and managing data stored in relational databases.

Tableau & Power BI: User-friendly business intelligence tools that turn data into interactive visualizations and dashboards.
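For a feel of what SQL querying looks like, the sketch below uses Python's built-in `sqlite3` module so it runs without a database server. The `orders` table and its contents are hypothetical; the `GROUP BY` aggregation itself is standard SQL and works similarly in most relational databases.

```python
import sqlite3

# In-memory relational database with a hypothetical orders table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("North", 120.0), ("South", 80.0), ("North", 60.0), ("West", 200.0)],
)

# A typical analytical query: order count, total, and average order value per region.
rows = conn.execute(
    """
    SELECT region, COUNT(*) AS orders, SUM(amount) AS total, AVG(amount) AS average
    FROM orders
    GROUP BY region
    ORDER BY total DESC
    """
).fetchall()

for region, n, total, avg in rows:
    print(f"{region}: {n} orders, total {total:.2f}, average {avg:.2f}")
conn.close()
```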

Applications of Data Analysis

Healthcare: Analyzing patient data to improve treatment plans, predict outbreaks, and manage resources.

Finance: Detecting fraud, managing risk, and guiding investment strategies.

Retail: Personalizing marketing campaigns, managing inventory, and optimizing pricing.

Sports: Enhancing performance through player data and game analysis.

Public Policy: Informing decisions on education, transportation, and economic development.

Challenges in Data Analysis

Data Quality: Incomplete, outdated, or incorrect data can lead to misleading conclusions.

Data Privacy: Handling sensitive data requires strict adherence to privacy regulations such as GDPR.

Skill Gaps: There is a growing demand for skilled data analysts who can interpret complex datasets.

Integration: Combining data from disparate sources can be technically difficult.

Bias and Misinterpretation: Poorly designed analysis can introduce bias or lead to wrong assumptions.

The Future of Data Analysis

As data continues to grow exponentially, the field of data analysis is evolving rapidly. Emerging trends include:

Artificial Intelligence (AI) & Machine Learning: Automating analysis and producing predictive models at scale.

Real-Time Analytics: Enabling decisions based on live data streams for faster response.

Data Democratization: Making data accessible and understandable to everyone in an organization.


Why Tableau is Essential in Data Science: Transforming Raw Data into Insights

Data science is all about turning raw data into valuable insights. But numbers and statistics alone don’t tell the full story—they need to be visualized to make sense. That’s where Tableau comes in.

Tableau is a powerful tool that helps data scientists, analysts, and businesses see and understand data better. It simplifies complex datasets, making them interactive and easy to interpret. But with so many tools available, why is Tableau a must-have for data science? Let’s explore.

1. The Importance of Data Visualization in Data Science

Imagine you’re working with millions of data points from customer purchases, social media interactions, or financial transactions. Analyzing raw numbers manually would be overwhelming.

That’s why visualization is crucial in data science:

Identifies trends and patterns – Instead of sifting through spreadsheets, you can quickly spot trends in a visual format.

Makes complex data understandable – Graphs, heatmaps, and dashboards simplify the interpretation of large datasets.

Enhances decision-making – Stakeholders can easily grasp insights and make data-driven decisions faster.

Saves time and effort – Instead of writing lengthy reports, an interactive dashboard tells the story in seconds.

Without tools like Tableau, data science would be limited to experts who can code and run statistical models. With Tableau, insights become accessible to everyone—from data scientists to business executives.

2. Why Tableau Stands Out in Data Science

A. User-Friendly and Requires No Coding

One of the biggest advantages of Tableau is its drag-and-drop interface. Unlike Python or R, which require programming skills, Tableau allows users to create visualizations without writing a single line of code.

Even if you’re a beginner, you can:

✅ Upload data from multiple sources

✅ Create interactive dashboards in minutes

✅ Share insights with teams easily

This no-code approach makes Tableau ideal for both technical and non-technical professionals in data science.

B. Handles Large Datasets Efficiently

Data scientists often work with massive datasets—whether it’s financial transactions, customer behavior, or healthcare records. Traditional tools like Excel struggle with large volumes of data.

Tableau, on the other hand:

Can process millions of rows without slowing down

Optimizes performance using advanced data engine technology

Supports real-time data streaming for up-to-date analysis

This makes it a go-to tool for businesses that need fast, data-driven insights.

C. Connects with Multiple Data Sources

A major challenge in data science is bringing together data from different platforms. Tableau seamlessly integrates with a variety of sources, including:

Databases: MySQL, PostgreSQL, Microsoft SQL Server

Cloud platforms: AWS, Google BigQuery, Snowflake

Spreadsheets and APIs: Excel, Google Sheets, web-based data sources

This flexibility allows data scientists to combine datasets from multiple sources without needing complex SQL queries or scripts.

D. Real-Time Data Analysis

Industries like finance, healthcare, and e-commerce rely on real-time data to make quick decisions. Tableau’s live data connection allows users to:

Track stock market trends as they happen

Monitor website traffic and customer interactions in real time

Detect fraudulent transactions instantly

Instead of waiting for reports to be generated manually, Tableau delivers insights as events unfold.

E. Advanced Analytics Without Complexity

While Tableau is known for its visualizations, it also supports advanced analytics. You can:

Forecast trends based on historical data

Perform clustering and segmentation to identify patterns

Integrate with Python and R for machine learning and predictive modeling
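Tableau's documentation describes its built-in forecasting as being based on exponential smoothing. The sketch below is not Tableau's implementation; it is a minimal illustration of simple exponential smoothing, the most basic model in that family, using invented weekly sales and an assumed smoothing factor `alpha`.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    alpha (0 < alpha <= 1) controls how strongly recent values are weighted.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialize the level with the first observation
    levels = [level]
    for value in series[1:]:
        # New level = weighted blend of the latest value and the previous level.
        level = alpha * value + (1 - alpha) * level
        levels.append(level)
    return levels

# Hypothetical weekly sales figures.
sales = [100, 120, 110, 130]
levels = simple_exponential_smoothing(sales, alpha=0.5)
# Simple exponential smoothing gives a flat forecast equal to the last level.
print(f"Next-week forecast: {levels[-1]:.2f}")
```

Simple exponential smoothing has no trend or seasonal component, so its forecast is flat; richer variants add those terms.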

This means data scientists can combine deep analytics with intuitive visualization, making Tableau a versatile tool.

3. How Tableau Helps Data Scientists in Real Life

Tableau has been adopted across many industries to make data science more impactful and accessible. Here are some real-life scenarios:

A. Healthcare Analytics

Hospitals and research institutions use Tableau to:

✅ Monitor patient recovery rates and predict disease outbreaks

✅ Analyze hospital occupancy and resource allocation

✅ Identify trends in patient demographics and treatment outcomes

B. Finance and Banking

Banks and investment firms rely on Tableau to:

✅ Detect fraud by analyzing transaction patterns

✅ Track stock market fluctuations and make informed investment decisions

✅ Assess credit risk and loan performance

C. Marketing and Customer Insights

Companies use Tableau to:

✅ Track customer buying behavior and personalize recommendations

✅ Analyze social media engagement and campaign effectiveness

✅ Optimize ad spend by identifying high-performing channels

D. Retail and Supply Chain Management

Retailers leverage Tableau to:

✅ Forecast product demand and adjust inventory levels

✅ Identify regional sales trends and adjust marketing strategies

✅ Optimize supply chain logistics and reduce delivery delays

These applications show why Tableau is a must-have for data-driven decision-making.

4. Tableau vs. Other Data Visualization Tools

There are many visualization tools available, but Tableau consistently ranks as one of the best. Here’s why:

Tableau vs. Excel – Excel struggles with big data and lacks interactivity; Tableau handles large datasets effortlessly.

Tableau vs. Power BI – Power BI is great for Microsoft users, but Tableau offers more flexibility across different data sources.

Tableau vs. Python (Matplotlib, Seaborn) – Python libraries require coding skills, while Tableau simplifies visualization for all users.

This makes Tableau the go-to tool for both beginners and experienced professionals in data science.

5. Conclusion

Tableau has become an essential tool in data science because it simplifies data visualization, handles large datasets, and integrates seamlessly with various data sources. It enables professionals to analyze, interpret, and present data interactively, making insights accessible to everyone—from data scientists to business leaders.

If you’re looking to build a strong foundation in data science, learning Tableau is a smart career move. Many data science courses now include Tableau as a key skill, as companies increasingly demand professionals who can transform raw data into meaningful insights.

In a world where data is the driving force behind decision-making, Tableau ensures that the insights you uncover are not just accurate—but also clear, impactful, and easy to act upon.


Data mining

1. What Is Data Mining?

Data mining is the process of extracting and discovering patterns in large datasets using methods at the intersection of machine learning, statistics, and database systems. It is an interdisciplinary subfield of computer science and statistics whose overall goal is to extract information (with intelligent methods) from a dataset and transform it into a comprehensible structure for further use. Data mining is the analysis step of the KDD (Knowledge Discovery in Databases) process.

2. What Is the KDD Process?

KDD stands for "Knowledge Discovery in Databases". It is a multi-step process of finding knowledge in large datasets that emphasizes the high-level application of particular data mining methods. It is of interest to researchers in machine learning, pattern recognition, databases, statistics, artificial intelligence, knowledge acquisition for expert systems, and data visualization. The picture below defines the different steps of the KDD process; each step has an input and an output entity. The KDD process always starts from data.

3. What Are the Different Steps of the KDD Process?

The overall process of finding and interpreting patterns in data involves the repeated application of the following steps, shown in the graph above:

Selection: we create a target dataset by selecting part of the overall dataset as a sample, then focusing on a subset of variables on which discovery is to be performed. The result of this step is a subset of the data considered as a sample.

Preprocessing: this step takes the target dataset as input, cleans it by removing noise, and then restructures it. The output is a preprocessed dataset ready to be transformed in the next step.

Data transformation: this step takes the preprocessed data as input, tries to find useful features depending on the goal of the task, and reduces dimensionality so that data mining can be performed effectively.

Data mining: in this phase we decide whether the goal of the KDD process is classification, regression, clustering, etc., and discover the patterns of interest.

Interpretation: interpreting the mined patterns and consolidating the discovered knowledge.

4. What Are Data Mining Tasks?

Several tasks are defined within the KDD sub-process, especially in the data mining step. There are two types of data mining:

Predictive mining: predictive data mining analyzes data to predict future events, values, or trends. It supports forward-looking analysis and better decisions, for example predicting the future customers of a given service, forecasting the price of oil and gas on the world market, anticipating the next international pandemic, or anticipating future political conflicts. There are four types of predictive data mining tasks:

Classification analysis: It assigns data items to predefined categories (classes) and can be used to retrieve critical and pertinent data and metadata. Email providers offer the best-known example: they use algorithms to determine whether or not a message is legitimate.

Regression analysis: It tries to express the interdependence of variables. Forecasting and prediction are common applications.

Time series analysis: It analyzes a series of well-defined data points recorded at regular time intervals.

Prediction analysis: It is related to time series analysis, but the time dimension is not restricted.
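As an illustration of classification, the sketch below uses k-nearest neighbors, one simple algorithm among many; it is not a description of how any email provider actually filters messages. The two features (link count and exclamation-mark count), the training data, and the `knn_classify` helper are hypothetical.

```python
from collections import Counter
from math import dist

# Hypothetical labelled training emails, each reduced to two features:
# (number of links, number of exclamation marks).
training = [
    ((0, 0), "legitimate"),
    ((1, 1), "legitimate"),
    ((2, 0), "legitimate"),
    ((8, 6), "spam"),
    ((10, 9), "spam"),
    ((7, 8), "spam"),
]

def knn_classify(features, k=3):
    """Label a new email by majority vote of its k nearest training examples."""
    nearest = sorted(training, key=lambda item: dist(item[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(knn_classify((9, 7)))  # spam
print(knn_classify((1, 0)))  # legitimate
```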

Descriptive mining: descriptive data mining describes data and makes it more readable for humans. It is used to extract information from previous events and data, to discover interesting patterns and associations, to extract correlations and relationships between features, and to find new rules and regularities in the data. There are four types of descriptive data mining tasks:

Clustering analysis: It is the process of determining which data sets are similar to one another. For example, to increase conversion rates, clusters of customers with similar buying habits can be grouped together with similar products.

Summarization analysis: It involves methods for obtaining a concise description of a dataset. For example, summarizing a large number of items related to Christmas season sales provides a general description of the data, which can be extremely useful to sales and marketing managers.

Association rules analysis: This method helps discover interesting relationships between variables in large databases. Retail is a typical example: data mining can reveal that chocolate sales rise as a holiday approaches, so stores stock up on chocolate in advance.

Sequence discovery analysis: It is about finding the order in which events occur. For instance, a customer may frequently buy shaving gel before buying a razor; knowing that order, the store owner can arrange the items accordingly.
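Association rules are usually scored by support (how often the items appear together across all transactions) and confidence (how often the consequent appears when the antecedent does). This minimal sketch computes both for item pairs in hypothetical baskets; full algorithms such as Apriori also prune candidate itemsets, which is omitted here. Unlike sequence discovery, plain association rules ignore the order of purchases.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets (one set of items per transaction).
baskets = [
    {"shaving gel", "razor"},
    {"shaving gel", "razor", "soap"},
    {"shaving gel", "soap"},
    {"chocolate", "gift wrap"},
    {"shaving gel", "razor", "chocolate"},
]

# Count single items and unordered item pairs across all baskets.
item_counts = Counter(item for basket in baskets for item in basket)
pair_counts = Counter(
    pair for basket in baskets for pair in combinations(sorted(basket), 2)
)

def rule_stats(antecedent, consequent):
    """Support and confidence of the rule antecedent -> consequent."""
    pair = tuple(sorted((antecedent, consequent)))
    support = pair_counts[pair] / len(baskets)
    confidence = pair_counts[pair] / item_counts[antecedent]
    return support, confidence

support, confidence = rule_stats("shaving gel", "razor")
print(f"shaving gel -> razor: support {support:.0%}, confidence {confidence:.0%}")
```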


Top Reasons Why Your Business Should Hire a Data Visualization Consulting Company

Data visualization consulting companies have changed the way businesses thrive in today's data-driven world. Are you looking for the same for your venture? Before getting started, let's explore the advantages you are likely to gain from working with professional data consultants.

Undeniably, businesses generate and collect massive amounts of data and information every day. Organizing customer behavior analytics, marketing metrics, operational performance, and financial forecasts can be tricky and overwhelming.

However, do you know the real challenge? It isn't just collecting data! It's making sense of it. Professional data visualization consulting companies bring the right approach to support businesses across dimensions.

Advantages of Working with a Professional Data Visualization Consulting Company

These specialized firms help businesses in many areas. They transform raw data into clear, compelling visuals that deliver actionable insights. These experts are valuable to start-ups and established enterprises alike. Here is how:

They Transform Complex Data into Actionable Insights

Raw data in spreadsheets or databases rarely seems useful at first glance. Data engineering consultants turn numbers and information into charts, graphs, dashboards, and interactive visuals, making information accessible and understandable.

Ultimately, it helps decision-makers to identify trends quickly, detect anomalies, monitor KPIs in real time and make informed business decisions.

They Help Save Time and Resources

Generating meaningful data visualizations requires both technical expertise and design sensibility. It also requires time, which most internal teams may not have in abundance.

A professional consulting company brings ready-made tools, frameworks, and experienced specialists who can deliver high-quality work efficiently. Businesses can shorten the learning curve for internal staff and focus on core operations.

Access to Expert Knowledge and Tools

Knowledge is the key! Professional consultants specialize in platforms like Tableau, Power BI, Looker, D3.js, and more. At the same time, they stay updated with the latest features and best practices. They produce visualizations that are not just pretty, but also technically accurate and optimized for your data infrastructure.

They Offer Customized Solutions for Unique Business Needs

Further, every business comes with its own unique data challenges. A data visualization consulting firm offers customized solutions aligned with your goals, industry, and users. For instance, a SaaS company could benefit from user engagement trend analysis. Good consultants invest time in understanding your business model and tailoring visualizations accordingly.

They Elevate Collaboration Across Teams

With visualization, data becomes a universal language. It can be understood by everyone in the company, regardless of technical expertise. Sales, marketing, finance, operations, and executive teams can all interpret the same visuals and collaborate more effectively. Interactive dashboards further empower users to explore data on their own, fostering curiosity and innovation.

They Ensure Data Accuracy and Consistency

Poor visualizations can misrepresent data, which can lead to bad decisions and hurt overall performance. Marketing analytics consulting services follow established data storytelling principles. They maintain high standards for accuracy and consistency. This reduces the risk of misinformation and builds trust in the data across the organization.

Scalability and Future-Readiness

As your business grows, so does your data complexity. A data visualization consulting company doesn’t just solve immediate problems; it also helps future-proof your analytics infrastructure.

This long-term vision ensures your investment in data visualization continues to deliver value as your business evolves.

Conclusion

Needless to say, data visualization is no longer a luxury in the present competitive business environment. It's a necessity for modern business decision-making. Therefore, hiring a data visualization consulting company empowers organizations to unlock the full potential of their data.

These firms bring significant benefits to enterprises: they help save time, improve accuracy, and drive strategic insights. Whether you're struggling with messy spreadsheets or trying to communicate insights across departments, a consulting partner can bridge the gap between data and action. In a world where data is power, visualizing it effectively could be your biggest competitive advantage.

Looking to hire a reputed data consulting company for your business? Get in touch with Kaliper for comprehensive digital solutions that help organizations take their business to new heights and explore new opportunities.

Unlock Data-Driven Success with Tableau Services

In today’s fast-paced business world, data is power. But raw numbers alone aren’t enough—companies need tools to turn data into clear, actionable insights. That’s where Tableau Services shine.

As a leading business intelligence platform (part of Salesforce), Tableau offers tools and support to help organizations visualize, analyze, and share data effortlessly. Let’s explore how Tableau Services can transform your business.

What Are Tableau Services?

Tableau Services include software, training, and expert support designed to simplify data management. Key tools include:

Tableau Desktop: Build interactive dashboards.

Tableau Cloud/Server: Share insights securely online or on-premises.

Tableau Prep: Clean and organize data quickly.

Paired with training courses, certifications, and 24/7 support, these services empower teams at all skill levels to make smarter decisions.

Top Benefits of Tableau Services

1. Easy Data Visualization: Tableau’s drag-and-drop interface lets anyone create charts, graphs, and maps without writing code. For example, a retailer can track sales trends across regions in minutes using colorful dashboards.

2. Works for Everyone: Whether you’re a data expert or a beginner, Tableau adapts to your skills. Non-technical users can build reports, while analysts use SQL or Python for deeper dives.

3. Real-Time Insights: Monitor live data to act fast. A logistics company could track deliveries or inventory levels in real time, adjusting routes to save costs.

4. Connect Any Data Source: Tableau links to spreadsheets, databases (like Google BigQuery), and apps (like Salesforce). Combine all your data into one dashboard for a unified view.

5. Team Collaboration: Share dashboards securely with teams or clients. Marketing teams, for instance, can update executives on campaign performance instantly.

6. Grows with Your Business: From startups to global firms, Tableau scales smoothly. Start with a single license and expand to enterprise-level solutions as needed.

7. Advanced Analytics: Predict trends, like future customer demand, using AI-driven tools. Healthcare providers can forecast patient needs to improve care.

8. Save Time and Money: Automate data tasks to reduce manual work. Cloud options cut IT costs, letting small businesses focus on growth.

9. Top-Notch Security: Protect sensitive data with features like role-based access and encryption, which is ideal for the finance and healthcare industries.

10. Learn and Improve: Access free courses, certifications, and a global user community. Get expert help to tailor Tableau to your goals.

Who Uses Tableau Services?

Retail: Optimize pricing using sales and customer data.

Healthcare: Improve patient care with treatment analytics.

Finance: Detect fraud and manage risk securely.

Education: Track student performance to allocate resources better.

Why Choose Tableau?

User-Friendly: Designed for all skill levels.

Trusted: Backed by Salesforce and used by over 1 million teams worldwide.

Flexible: Cloud, desktop, or server options fit any need.

How to Get Started

Free Trial: Test Tableau Cloud or Desktop on their website.

Plans: Choose subscriptions based on your team size.

Support: Partner with consultants for setup and training.

Final Thoughts

Tableau Services turn complex data into clear insights, helping businesses act faster, save costs, and stay competitive. Whether you’re analyzing sales trends or improving patient care, Tableau’s tools make data work for you.

Ready to unlock your data’s potential? Explore Tableau’s official website today—or try a free trial to see the difference yourself!

0 notes

Text

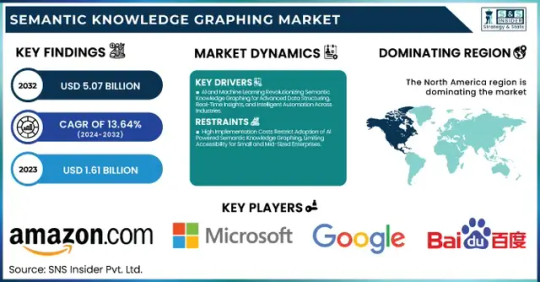

Semantic Knowledge Graphing Market Size, Share, Analysis, Forecast, and Growth Trends to 2032: Transforming Data into Knowledge at Scale

The Semantic Knowledge Graphing Market was valued at USD 1.61 billion in 2023 and is expected to reach USD 5.07 billion by 2032, growing at a CAGR of 13.64% from 2024 to 2032.
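As a rough consistency check on these figures, the compound annual growth rate implied by a start value and an end value is (end / start)^(1 / years) - 1. The short Python sketch below recomputes it, assuming nine compounding periods between the 2023 base year and 2032; it is an illustration only, not the report's own methodology.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted above (USD billion); 2023 -> 2032 is assumed to be 9 periods.
implied = cagr(1.61, 5.07, 9)
print(f"Implied CAGR: {implied:.2%}")  # roughly 13.6%
print(f"Projected 2032 value at 13.64%: {project(1.61, 0.1364, 9):.2f}")
```

With the rounded figures quoted above, the implied rate comes out at about 13.6%, which appears consistent with the stated 13.64% once rounding of the published values is taken into account.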

The Semantic Knowledge Graphing Market is rapidly evolving as organizations increasingly seek intelligent data integration and real-time insights. With the growing need to link structured and unstructured data for better decision-making, semantic technologies are becoming essential tools across sectors like healthcare, finance, e-commerce, and IT. This market is seeing a surge in demand driven by the rise of AI, machine learning, and big data analytics, as enterprises aim for context-aware computing and smarter data architectures.

The Semantic Knowledge Graphing Market is poised for strategic transformation. This evolving landscape is being shaped by an urgent need to solve complex data challenges with semantic understanding. Companies are leveraging semantic graphs to build context-rich models, enhance search capabilities, and create more intuitive AI experiences. As the digital economy thrives, semantic graphing offers a foundation for scalable, intelligent data ecosystems, allowing seamless connections between disparate data sources.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6040

Market Key Players:

Amazon.com Inc. (Amazon Neptune, AWS Graph Database)

Baidu, Inc. (Baidu Knowledge Graph, PaddlePaddle)

Facebook Inc. (Facebook Graph API, DeepText)

Google LLC (Google Knowledge Graph, Google Cloud Dataproc)

Microsoft Corporation (Azure Cosmos DB, Microsoft Graph)

Mitsubishi Electric Corporation (Maisart AI, MELFA Smart Plus)

NELL (Never-Ending Language Learner, NELL Knowledge Graph)

Semantic Web Company (PoolParty Semantic Suite, Semantic Middleware)

YAGO (YAGO Knowledge Base, YAGO Ontology)

Yandex (Yandex Knowledge Graph, Yandex Cloud ML)

IBM Corporation (IBM Watson Discovery, IBM Graph)

Oracle Corporation (Oracle Spatial and Graph, Oracle Cloud AI)

SAP SE (SAP HANA Graph, SAP Data Intelligence)

Neo4j Inc. (Neo4j Graph Database, Neo4j Bloom)

Databricks Inc. (Databricks GraphFrames, Databricks Delta Lake)

Stardog Union (Stardog Knowledge Graph, Stardog Studio)

OpenAI (GPT-based Knowledge Graphs, OpenAI Embeddings)

Franz Inc. (AllegroGraph, Allegro CL)

Ontotext AD (GraphDB, Ontotext Platform)

Glean (Glean Knowledge Graph, Glean AI Search)

Market Analysis

The Semantic Knowledge Graphing Market is transitioning from a niche segment to a critical component of enterprise IT strategy. Integration with AI/ML models has shifted semantic graphs from backend enablers to core strategic assets. With open data initiatives, industry-standard ontologies, and a push for explainable AI, enterprises are aggressively adopting semantic solutions to uncover hidden patterns, support predictive analytics, and enhance data interoperability. Vendors are focusing on APIs, graph visualization tools, and cloud-native deployments to streamline adoption and scalability.

Market Trends

AI-Powered Semantics: Use of NLP and machine learning in semantic graphing is automating knowledge extraction and relationship mapping.

Graph-Based Search Evolution: Businesses are prioritizing semantic search engines to offer context-aware, precise results.

Industry-Specific Graphs: Tailored graphs are emerging in healthcare (clinical data mapping), finance (fraud detection), and e-commerce (product recommendation).

Integration with LLMs: Semantic graphs are increasingly being used to ground large language models with factual, structured data.

Open Source Momentum: Tools like RDF4J, Neo4j, and GraphDB are gaining traction for community-led innovation.

Real-Time Applications: Event-driven semantic graphs are now enabling real-time analytics in domains like cybersecurity and logistics.

Cross-Platform Compatibility: Vendors are prioritizing seamless integration with existing data lakes, APIs, and enterprise knowledge bases.

Market Scope

Semantic knowledge graphing holds vast potential across industries:

Healthcare: Improves patient data mapping, drug discovery, and clinical decision support.

Finance: Enhances fraud detection, compliance tracking, and investment analysis.

Retail & E-Commerce: Powers hyper-personalized recommendations and dynamic customer journeys.

Manufacturing: Enables digital twins and intelligent supply chain management.

Government & Public Sector: Supports policy modeling, public data transparency, and inter-agency collaboration.

These use cases represent only the surface of a deeper transformation, where data is no longer isolated but intelligently interconnected.

Market Forecast

As AI continues to integrate deeper into enterprise functions, semantic knowledge graphs will play a central role in enabling contextual AI systems. Rather than just storing relationships, future graphing solutions will actively drive insight generation, data governance, and operational automation. Strategic investments by leading tech firms, coupled with the rise of vertical-specific graphing platforms, suggest that semantic knowledge graphing will become a staple of digital infrastructure. Market maturity is expected to rise rapidly, with early adopters gaining a significant edge in predictive capability, data agility, and innovation speed.

Access Complete Report: https://www.snsinsider.com/reports/semantic-knowledge-graphing-market-6040

Conclusion

The Semantic Knowledge Graphing Market is no longer just a futuristic concept—it's the connective tissue of modern data ecosystems. As industries grapple with increasingly complex information landscapes, the ability to harness semantic relationships is emerging as a decisive factor in digital competitiveness.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#Semantic Knowledge Graphing Market#Semantic Knowledge Graphing Market Share#Semantic Knowledge Graphing Market Scope#Semantic Knowledge Graphing Market Trends

Global Personal Care Ingredients Market Analysis: Key Players, Revenue Trends, and Demand Forecast

The global personal care ingredients market is expected to reach USD 15.12 billion by 2027, according to a recent analysis by Emergen Research. This growth can be attributed to changes in consumer lifestyles and purchasing behavior. Rising awareness of hygiene and health has driven sales of personal care products made with high-quality ingredients. Increasing investment in research and development by manufacturers seeking to produce eco-friendly and sustainable products is also driving the market for personal care ingredients. A growing desire for self-care and health-promoting products among consumers is further contributing to demand.

The Personal Care Ingredients Market report carefully investigates the present market scenario and its fundamental growth prospects. It includes an organized database of market dynamics that helps analysts estimate the global market growth rate over the projected timeline. The report, published by Emergen Research, is a detail-oriented compilation of the crucial aspects of the Personal Care Ingredients market, including the key players’ product offerings, the application range of these products, the major market segments, leading market contenders, their company profiles, pricing strategies, production capacities, revenue generation schemes, technological advancements, and more.

Claim Your FREE Sample Copy with Table of Contents: https://www.emergenresearch.com/request-sample/437

The leading market contenders listed in the report are: Dow, BASF SE, Clariant AG, Solvay S.A., Ashland Global, Evonik Industries AG, Lubrizol Corporation, Nouryon, Lonza Group AG, Croda International

The research study examines historical data between 2018 and 2020 to draw forecasts until 2030. This timeline makes the report a valuable resource for readers, investors, and stakeholders looking for key insights in readily accessible documents, with information presented in the form of tables, charts, and graphs. Visit the full report and table of contents for the Personal Care Ingredients Market: https://www.emergenresearch.com/industry-report/personal-care-ingredients-market

Market Overview: The report segments the Personal Care Ingredients market by product type, application, end-user industry, and the key regions of the world where the market has already established its presence. It offers insights into the supply-demand ratio and the production and consumption volume of each segment. Segments covered in this report are:

Application Outlook (Volume: Kilo Tons) (Revenue, USD Billion; 2017–2027)

Hair Care

Make-up

Skin Care

Oral Care

Others

Ingredient Type Outlook (Volume: Kilo Tons) (Revenue, USD Billion; 2017–2027)

Emulsifiers

Conditioning Polymers

Surfactants

Rheology Modifiers

Emollients

Others

Regional Outlook (Volume: Kilo Tons) (Revenue, USD Billion; 2017–2027)

North America: U.S., Canada

Europe: Germany, U.K., France, BENELUX, Rest of Europe

Asia Pacific: China, Japan, South Korea, Rest of APAC

Latin America: Brazil, Rest of LATAM

Middle East & Africa: Saudi Arabia, UAE, Rest of MEA

The research report offers a comprehensive regional analysis of the market with regard to production and consumption patterns, import/export, market size and share in terms of volume and value, supply and demand dynamics, and the presence of prominent players in each market. Get an impressive discount on this report: https://www.emergenresearch.com/request-discount/437

Regional Analysis Covers:

North America (U.S., Canada)

Europe (U.K., Italy, Germany, France, Rest of EU)

Asia Pacific (India, Japan, China, South Korea, Australia, Rest of APAC)

Latin America (Chile, Brazil, Argentina, Rest of Latin America)

Middle East & Africa (Saudi Arabia, U.A.E., South Africa, Rest of MEA)

Key reasons to buy the Global Personal Care Ingredients Market report:

The latest report comprehensively studies the global Personal Care Ingredients market size and provides useful inference on numerous aspects of the market, such as the current business trends, market share, product offerings, and product share.

The report offers an insightful analysis of the regional outlook of the market.

It offers a detailed account of the end-use applications of the products & services offered by this industry.

The report holistically covers the latest developments in this industry and lists the most effective business strategies implemented by market rivals for business expansion.

Request customization to your specific requirements: https://www.emergenresearch.com/request-for-customization/437

About Us:

Emergen Research is a market research and consulting company that provides syndicated research reports, customized research reports, and consulting services. Our solutions focus on helping you locate, target, and analyze shifts in consumer behavior across demographics and industries, so that clients can make smarter business decisions. We offer market intelligence studies ensuring relevant and fact-based research across multiple industries, including Healthcare, Touch Points, Chemicals, Types, and Energy. We consistently update our research offerings to keep our clients aware of the latest market trends. Emergen Research has a strong base of experienced analysts from varied areas of expertise. Our industry experience and ability to develop concrete solutions to any research problem give our clients the ability to secure an edge over their respective competitors.

Contact Us:

Eric Lee

Corporate Sales Specialist

Emergen Research | Web: www.emergenresearch.com

Direct Line: +1 (604) 757-9756

Visit for More Insights: https://www.emergenresearch.com/insights

Explore Our Custom Intelligence services | Growth Consulting Services

Trending Titles: Geocell Market | Pancreatic Cancer Treatment Market

Latest Report: Ceramic Tiles Market | Life Science Analytics Market

"Knowledge Graphs: Unlocking Insights Through Structured Data"

A knowledge graph is a powerful tool used to organize and structure vast amounts of information in a way that enables machines and humans to understand relationships between different entities. By capturing and connecting data points such as people, places, concepts, and events, knowledge graphs map out relationships and context, making complex data more accessible and actionable. This technology is widely used in applications such as search engines, artificial intelligence (AI), and recommendation systems, where understanding connections and context is crucial for delivering precise insights.

One of the key benefits of knowledge graphs is their ability to provide context. Unlike traditional relational databases, which store data in separate tables linked by keys, knowledge graphs explicitly represent how data elements relate to one another. These explicit, semantic relationships between entities allow for more intuitive queries, advanced data analytics, and better decision-making. For instance, when a user searches for a particular topic, a knowledge graph can not only return related facts but also show how those facts connect to other subjects, creating a deeper understanding.
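To make the idea of entities and relationships concrete, here is a minimal Python sketch of a knowledge graph stored as (subject, predicate, object) triples, with helpers that list every fact touching an entity and answer a simple pattern query. The class, relation names, and sample facts are hypothetical and chosen for illustration; real deployments typically rely on an RDF store queried with SPARQL or on a property-graph database.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Toy in-memory knowledge graph built from (subject, predicate, object) triples."""

    def __init__(self) -> None:
        self.triples: set[tuple[str, str, str]] = set()
        # Index edges in both directions so neighbor lookups avoid full scans.
        self.outgoing: dict[str, set[tuple[str, str]]] = defaultdict(set)
        self.incoming: dict[str, set[tuple[str, str]]] = defaultdict(set)

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self.triples.add((subject, predicate, obj))
        self.outgoing[subject].add((predicate, obj))
        self.incoming[obj].add((predicate, subject))

    def related(self, entity: str) -> list[tuple[str, str, str]]:
        """Return every triple in which `entity` appears, sorted for stable output."""
        facts = [(entity, p, o) for p, o in self.outgoing.get(entity, ())]
        facts += [(s, p, entity) for p, s in self.incoming.get(entity, ())]
        return sorted(facts)

    def query(self, predicate: str, obj: str) -> list[str]:
        """Return all subjects linked to `obj` by `predicate`."""
        return sorted(s for p, s in self.incoming.get(obj, ()) if p == predicate)


kg = KnowledgeGraph()
kg.add("Marie Curie", "born_in", "Warsaw")
kg.add("Marie Curie", "field", "Physics")
kg.add("Pierre Curie", "field", "Physics")
kg.add("Warsaw", "located_in", "Poland")

print(kg.query("field", "Physics"))  # ['Marie Curie', 'Pierre Curie']
print(kg.related("Warsaw"))
```

Indexing edges in both directions is what lets the graph answer "what is connected to X?" without scanning every record, which is the kind of relationship-first query described above.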

In the realm of search engines, Google’s Knowledge Graph is a prime example of this technology in action. It enhances search results by providing contextual information about entities directly on the search page, reducing the need for users to click through multiple links to gather information. Knowledge graphs also power recommendation engines on platforms like Amazon and Netflix, analyzing user preferences and behavior to suggest the most relevant products, movies, or services. This personalized approach leads to improved user experiences and engagement.

The growing use of AI and machine learning has made knowledge graphs even more valuable, as they can be used to train algorithms and improve the accuracy of predictions. In industries like healthcare, finance, and e-commerce, knowledge graphs are facilitating better decision-making by connecting disparate data sources and revealing hidden patterns. As more organizations recognize the potential of knowledge graphs, their applications will continue to expand, ultimately enabling businesses to harness data in smarter, more impactful ways.

#KnowledgeGraph #DataDrivenInsights #AIandData #SemanticWeb #SmartData #MachineLearning #AIRevolution

How do analysts find data trends?

Data analysts use a structured approach to discover trends within data, which involves collecting, processing, analyzing, and interpreting data to extract meaningful insights. The process begins with data collection from various sources such as databases, surveys, sensors, or business systems. This data can be structured (like SQL databases) or unstructured (such as logs or social media content).

Once the data is gathered, cleaning and preprocessing are essential to remove errors, duplicates, and irrelevant values. Tools like Excel, Python (with libraries such as Pandas and NumPy), or R are widely used for this step. Clean data makes trend analysis more accurate.
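A minimal pandas sketch of this cleaning step, assuming a small sales table with hypothetical `region`, `date`, and `sales` columns. It standardizes text, turns unparseable dates into missing values, and drops incomplete rows, exact duplicates, and negative sales. Which values count as errors (here, negative sales) is an assumption that always depends on the dataset.

```python
import pandas as pd

raw = pd.DataFrame(
    {
        "region": ["North", "north ", "South", "South", "East", None, "West"],
        "date": ["2024-01-05", "2024-01-05", "2024-01-06", "2024-01-06",
                 "not a date", "2024-01-07", "2024-01-07"],
        "sales": [120.0, 120.0, 95.5, 95.5, 80.0, 60.0, -10.0],
    }
)


def clean_sales(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Standardize text so "north " and "North" are treated as the same region.
    out["region"] = out["region"].str.strip().str.title()
    # Unparseable dates become NaT instead of raising an error.
    out["date"] = pd.to_datetime(out["date"], errors="coerce")
    # Drop rows missing required fields, then exact duplicate rows.
    out = out.dropna(subset=["region", "date", "sales"]).drop_duplicates()
    # Treat negative sales as data-entry errors (an assumption for this example).
    out = out[out["sales"] >= 0]
    return out.reset_index(drop=True)


cleaned = clean_sales(raw)
print(cleaned)
```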

The next step is exploratory data analysis (EDA), where analysts use statistical techniques to understand patterns. Visualizations play a key role in EDA: charts, histograms, scatter plots, and line graphs help identify relationships and anomalies. Tools like Tableau, Power BI, or Matplotlib in Python help build these visuals.

Analysts then apply trend analysis methods, such as moving averages, regression analysis, and time series analysis, to detect patterns over time. They may segment data by categories (e.g., region, product, customer type) to spot trends that may not be visible in aggregate data.
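The sketch below illustrates two of those methods, a moving average and a least-squares linear trend, applied separately to each region. The monthly figures and column names are invented for illustration, and `numpy.polyfit` is just one convenient way to fit the line.

```python
import numpy as np
import pandas as pd

# Hypothetical monthly sales for two regions (illustrative numbers only).
months = pd.date_range("2024-01-01", periods=6, freq="MS")
df = pd.DataFrame(
    {
        "month": list(months) * 2,
        "region": ["North"] * 6 + ["South"] * 6,
        "sales": [100, 104, 110, 113, 120, 125, 90, 88, 87, 85, 82, 80],
    }
)


def trend_summary(group: pd.DataFrame, window: int = 3) -> pd.Series:
    group = group.sort_values("month")
    # A rolling mean smooths month-to-month noise.
    smoothed = group["sales"].rolling(window=window).mean()
    # Least-squares line through the raw points; slope = average change per month.
    x = np.arange(len(group))
    slope, _intercept = np.polyfit(x, group["sales"].to_numpy(dtype=float), deg=1)
    return pd.Series({"latest_moving_avg": smoothed.iloc[-1], "slope_per_month": slope})


# Segment by region so opposite trends are not blended together.
summary = {region: trend_summary(g) for region, g in df.groupby("region")}
for region, stats in summary.items():
    print(region, stats.round(2).to_dict())
```

Segmenting first matters here: pooled together, a rising region and a falling region can partly cancel out and blur both trends.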

To confirm these trends, analysts often run hypothesis tests or apply predictive models to forecast future behavior. Contextual knowledge about the domain (e.g., finance, marketing, healthcare) helps interpret the trends accurately and make informed business decisions.
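One simple, assumption-light way to check whether a shift is more than noise is a permutation test. This NumPy sketch, using invented before/after figures, estimates how often randomly relabeled data would produce a difference in means at least as large as the observed one. The choice of test is illustrative; t-tests or regression-based tests are equally common in practice.

```python
import numpy as np


def permutation_test(before, after, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns the observed difference (after - before) and an estimated p-value:
    the share of random relabelings whose |difference| is at least as large.
    """
    rng = np.random.default_rng(seed)
    before = np.asarray(before, dtype=float)
    after = np.asarray(after, dtype=float)
    observed = after.mean() - before.mean()
    pooled = np.concatenate([before, after])
    n_before = len(before)
    count = 0
    for _ in range(n_resamples):
        shuffled = rng.permutation(pooled)
        diff = shuffled[n_before:].mean() - shuffled[:n_before].mean()
        if abs(diff) >= abs(observed):
            count += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    p_value = (count + 1) / (n_resamples + 1)
    return observed, p_value


# Hypothetical weekly sign-ups before and after a website redesign.
before = [52, 48, 50, 49, 51, 47, 53, 50]
after = [58, 61, 57, 60, 59, 62, 56, 60]
diff, p = permutation_test(before, after)
print(f"difference in means: {diff:.2f}, estimated p-value: {p:.4f}")
```

A small p-value suggests the shift is unlikely to be random variation alone; domain context is still needed to explain why it happened.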

Finally, findings are compiled into dashboards or reports for stakeholders, enabling strategic planning and action.

To build these skills and learn practical techniques, enrolling in an offline data analytics course can provide hands-on experience with real-world datasets and expert guidance.

The Orb, the ID, and the New Crypto Leviathan: Sam Altman’s World Is Just Getting Started

Let’s be clear: what Sam Altman is building isn’t just a crypto project. It’s a biometric-backed, AI-conscious, multi-pronged attempt to rewrite how identity, commerce, and personhood operate in the digital age. And with the U.S. launch of World—formerly Worldcoin—Altman’s vision has crossed a major threshold.

We are now witnessing the rollout of the first truly global, biometric-linked identity system backed by crypto incentives, deployed via Silicon Valley gloss and VC firepower, and wrapped in the language of inclusion and "trust."

You may want to look away, but you shouldn't. Because whether you love it or loathe it, World is signaling a new phase in the arms race between real humans, AI imposters, and the systems meant to mediate them.

From Orb to Oracle

World’s foundational premise is deceptively simple: in a world soon flooded with AI-generated noise, we must verify that a real human is on the other end. The solution? A literal eye scan via a silvery “Orb” that captures your biometric signature, checks it against a zero-knowledge database, and hands you a World ID and some WLD tokens in return.

There’s a sci-fi surrealism to it all: scan your iris at a pod in a San Francisco Apple-style showroom and walk out with crypto in your wallet and a digital passport to “prove you are human” online.

The rollout in six U.S. cities—Austin, LA, Miami, San Francisco, Nashville, and Atlanta—will accelerate adoption dramatically, especially now that World plans to deploy a mini-Orb, designed to fit in cafes, college campuses, and Razer gaming stores.

This is more than a gimmick. It’s a strategy for scaling to a billion users.

Why the U.S. Launch Matters

This isn’t just a geographic expansion—it’s a political signal. Under President Trump’s second administration, the U.S. has swung back toward a pro-crypto, anti-regulatory posture. That’s opened the floodgates for projects like World, which had previously been scrutinized, if not outright banned, in countries like Kenya, Brazil, and Hong Kong.

Altman is making a bet that the U.S. will serve as the legitimizing ground for biometric-backed identity networks. With 26 million users already and 12 million “unique humans” verified, World now aims to dominate the U.S. identity layer before regulators catch up.

And catch up they must—because this isn’t just about stopping bots. It’s about controlling the keys to what it means to be “real” in a digital-first economy.

Visa, Tinder, and Prediction Markets: World’s Ecosystem Play

World isn’t stopping at identity. It’s building an ecosystem that fuses payments, dating, prediction markets, and digital interaction.

A World-branded Visa debit card will soon let users spend their WLD and other digital assets wherever Visa is accepted. For merchants, it’s seamless fiat. For users, it’s crypto utility in the real world.

A partnership with Match Group (Tinder, Hinge, etc.) launches in Japan, allowing users to verify dating profiles with World ID. Altman’s team is pitching this as a trust layer for online relationships—an antidote to scam bots and fake profiles.

Integration with prediction market Kalshi brings verified humans into forecasting and betting—turning identity into a precondition for epistemic trust.

In other words, World isn’t just creating a token or a tool—it’s constructing a network of verified humans engaging in commerce, communication, and digital life.

What Facebook did for social graphs, World aims to do for identity. But unlike Facebook, World wants to be borderless, pseudonymous, and programmable.

Surveillance by Another Name?

Of course, not everyone is sold. Privacy advocates are rightfully alarmed. The idea of scanning irises and tying them to crypto wallets sets off Orwellian alarm bells—especially in jurisdictions where surveillance tech is already rampant.

World insists it doesn’t store biometric data and uses zero-knowledge proofs to confirm personhood. But as any technologist knows, the gap between “we don’t store your data” and “we never could” is often wide and legally squishy.

Moreover, World’s rollout in emerging markets came with the promise of “free money” in exchange for scanning—leading some critics to call it biometric colonialism. Now that the same system is landing in wealthy Western cities, the optics have shifted, but the fundamental trade-off remains: give us your body, we’ll give you digital access.

Altman’s defenders argue that this is exactly what’s needed: a voluntary, cryptographic proof-of-humanity system that can fight AI spam, fraud, and manipulation. They may be right. But let’s not pretend the stakes are small.

When you control identity, you control access. And when you link that to payments, apps, social graphs, and reputational layers—you’re not just solving “bots.” You’re building a civilizational gatekeeper.

The Real Fight: Identity as Public Infrastructure or Private Platform?

This is the big philosophical fight hiding beneath the press releases: Should identity be public infrastructure or a privately owned, VC-backed product?

World is choosing the latter. It’s for-profit, tokenized, and heavily funded by the same actors who brought us Web2 dominance—Andreessen Horowitz, Bain, Coinbase Ventures, even Sam Bankman-Fried before his fall.

That doesn’t mean it will fail. In fact, it may succeed because of that capital. But we shouldn’t confuse adoption with legitimacy.

In 2026, the Orb Mini will hit the streets. In 2025, the Visa card launches. In 2024, it was still called Worldcoin and struggling with bans. The velocity of iteration is staggering. Altman has effectively done for biometric identity what OpenAI did for generative text—dragged it from sci-fi to center stage.

World Will Become the Android of Identity

Mark this: World will not be the only identity network. Competing models—like ZK credentials on Ethereum, passportless proof-of-human systems like Humanity Protocol, and state-backed digital IDs—will multiply. But World has something they don’t: capital, UX, and momentum.

Expect World to become the Android OS of the identity layer—a semi-open platform adopted not because it’s loved, but because it’s ubiquitous, cheap, and convenient. Others may prefer Apple-like sovereignty or local alternatives, but for millions—perhaps billions—World will be “good enough.”

And once it’s integrated into dating, payments, gaming, and social apps? You won’t even think about whether you're using it. You’ll just be in it.

The question is whether we’ve had enough public debate before that moment arrives.

Everything you just read is free, but if you value sharp, independent crypto commentary like this, consider supporting us. We don’t run subscriptions, ads, or paywalls. We rely on your donations to keep publishing boldly and freely. Donate via our Ko-Fi page: https://ko-fi.com/thedailydecrypt. Thank you for keeping this voice alive.

© 2025 InSequel Digital. ALL RIGHTS RESERVED. No part of this publication may be reproduced, distributed, or transmitted in any form without prior written permission. The content is provided for informational purposes only and does not constitute legal, tax, investment, financial, or other professional advice.

Data Analysis: Unlocking the Power of Data for Smarter Decisions

Introduction

In the digital age, data has become one of the most valuable resources. With the sheer volume of data generated every second, the ability to analyze and derive meaningful insights from it is a game-changer for businesses, governments, and individuals alike. Data analysis plays a critical role in transforming raw information into actionable knowledge, guiding strategic decisions, optimizing operations, and uncovering hidden patterns. In this comprehensive guide, we explore the concept of data analysis, its types, techniques, tools, real-world applications, and more.

What is Data Analysis?

Data analysis is the process of examining, cleaning, transforming, and modeling data to discover useful information, inform conclusions, and support decision-making. Whether it’s identifying market trends, predicting customer behavior, or evaluating performance, data analysis helps organizations stay competitive and innovative.

Types of Data Analysis

Descriptive Analysis

Summarizes past data to understand what has happened.

Common tools: averages, percentages, visualizations (charts, graphs).

Example: Analyzing sales data from the last quarter.

Diagnostic Analysis

Explores data to determine why something happened.

Utilizes techniques like correlation, regression, and drill-down.

Example: Investigating why sales dropped in a specific region.

Predictive Analysis

Uses historical data to make forecasts about future events.

Employs machine learning, statistical modeling, and algorithms.

Example: Predicting future customer churn based on past behavior.

Prescriptive Analysis

Recommends actions based on data insights.

Integrates AI and optimization models.

Example: Suggesting the best pricing strategy to increase profits.

Exploratory Data Analysis (EDA)

Helps identify patterns, anomalies, and relationships in data sets.

Often used in early stages of analysis to guide further investigation.
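The descriptive and diagnostic types above can be illustrated in a few lines of pandas. This sketch uses made-up store figures with hypothetical column names: it summarizes revenue per region (what happened) and then computes the correlation between discount rate and revenue in each region as a first diagnostic clue (why it might have happened).

```python
import pandas as pd

# Made-up quarterly records: one row per store.
sales = pd.DataFrame(
    {
        "region": ["East", "East", "East", "West", "West", "West"],
        "discount_pct": [0, 5, 10, 0, 5, 10],
        "revenue": [200, 230, 260, 180, 178, 183],
    }
)

# Descriptive: what happened? Total and average revenue per region.
descriptive = sales.groupby("region")["revenue"].agg(["sum", "mean"])
print(descriptive)

# Diagnostic: is revenue associated with discounting? Pearson correlation per region.
diagnostic = {
    region: group["discount_pct"].corr(group["revenue"])
    for region, group in sales.groupby("region")
}
print(diagnostic)
```

Correlation only points to a possible explanation; confirming a cause usually takes further analysis, such as controlled comparisons or a regression that accounts for other factors.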

Steps in the Data Analysis Process

Data Collection

Gathering data from multiple sources (databases, APIs, surveys).

Data Cleaning

Removing errors, duplicates, and inconsistencies.

Data Transformation

Converting data into a usable format (normalization, encoding).

Data Modeling

Applying statistical and machine learning models to analyze data.

Interpretation and Reporting

Visualizing data and presenting findings to stakeholders.
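The cleaning and transformation steps can be sketched in plain Python. The records, field names, and the `clean` and `min_max_normalize` helpers below are hypothetical. Min-max scaling is shown as one common normalization technique, and real pipelines often use a library such as pandas instead.

```python
# Hypothetical raw records, as they might arrive from several sources
raw_records = [
    {"id": 1, "region": "North", "sales": 120.0},
    {"id": 2, "region": " south", "sales": 80.0},
    {"id": 2, "region": " south", "sales": 80.0},   # exact duplicate
    {"id": 3, "region": "East", "sales": None},      # missing value
    {"id": 4, "region": "WEST", "sales": 200.0},
]


def clean(records):
    """Drop duplicate ids and rows with missing sales; standardize region names."""
    seen_ids = set()
    cleaned = []
    for rec in records:
        if rec["id"] in seen_ids or rec["sales"] is None:
            continue
        seen_ids.add(rec["id"])
        cleaned.append({**rec, "region": rec["region"].strip().title()})
    return cleaned


def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # avoid division by zero when all values are equal
    return [(v - lo) / (hi - lo) for v in values]


cleaned = clean(raw_records)
normalized_sales = min_max_normalize([r["sales"] for r in cleaned])

for rec, norm in zip(cleaned, normalized_sales):
    print(rec["id"], rec["region"], rec["sales"], round(norm, 3))
```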

Popular Tools for Data Analysis

Microsoft Power BI

Great for data visualization and business intelligence.

Tableau

Known for creating interactive and shareable dashboards.

Python (Pandas, NumPy, Matplotlib)

Ideal for coding-based data analysis and machine learning.

R Programming

Preferred for statistical computing and graphics.

Excel

Widely used for basic data manipulation and visualization.

KNIME and Weka

Open-source tools for machine learning and advanced analytics.

Applications of Data Analysis

Business and Marketing

Targeted advertising, customer segmentation, sales forecasting.

Healthcare

Patient data analysis, disease prediction, hospital resource management.

Finance

Risk analysis, fraud detection, portfolio management.

Education

Performance tracking, curriculum improvement, student engagement.

Government

Policy development, public safety, smart city planning.

Benefits of Data Analysis

Improved decision-making

Increased operational efficiency

Enhanced customer experiences

Cost reduction

Innovation and competitive advantage

Challenges in Data Analysis

Data privacy and security

Handling large and unstructured data

Data integration from multiple sources

Ensuring data quality and accuracy

Review: Is Data Analysis Worth It?

Absolutely. Businesses that invest in data analysis can gain a significant edge over competitors. From making informed decisions to understanding customer preferences, data analysis is a cornerstone of success in the modern world. It empowers organizations to move from gut-based decisions to evidence-based strategies.

FAQs About Data Analysis

What skills are needed for a data analyst?

Statistical analysis, programming (Python/R), SQL, Excel, critical thinking, and communication skills.

Is data analysis a good career?

Yes, it's in high demand across industries with competitive salaries and growth potential.

Can I learn data analysis online?

Absolutely. Platforms like Coursera, edX, and Udemy offer excellent courses.

How does data analysis differ from data science?

Data analysis focuses on interpreting existing data to answer specific questions, while data science is broader, covering advanced modeling, machine learning, and building predictive systems.

What is big data analytics?

It involves analyzing massive, complex data sets that traditional tools can't handle, often in real time.

Conclusion

Data analysis is a powerful discipline that continues to evolve with technology. Whether you're a business owner looking to improve operations, a marketer seeking deeper customer insights, or a student pursuing a tech career, understanding data analysis is a valuable asset. The ability to collect, clean, and interpret data is not just a technical skill but a strategic necessity in today’s data-driven world.

Stay informed, stay analytical, and unlock the full potential of your data with tools and techniques that make data analysis both an art and a science.

Visit us at diglip7.com for more insightful articles on digital marketing, data science, and technology trends!


Data Analysis Insights: Trends and Patterns at DICS Laxmi Nagar

Data Analysis at DICS Laxmi Nagar: Empowering Future Analysts

Introduction

Data analysis is the backbone of decision-making in the modern world. Whether in business, healthcare, finance, marketing, or technology, organizations rely on data to drive strategic decisions and gain a competitive edge. At DICS Laxmi Nagar, we provide top-notch training in data analysis, equipping students with essential skills to collect, process, and interpret data effectively.

Our comprehensive Data Analysis Program focuses on industry-relevant tools and techniques, ensuring students develop hands-on expertise in Excel, SQL, Python, Power BI, and Tableau. With a blend of theoretical concepts and real-world applications, we prepare students to become proficient data analysts who can transform raw data into valuable insights.

What is Data Analysis?

Data analysis involves examining, cleaning, transforming, and interpreting data to discover useful patterns and insights. The process includes:

Data Collection: Gathering relevant data from various sources.

Data Cleaning: Removing inconsistencies and errors for accurate analysis.

Exploratory Data Analysis (EDA): Identifying trends and patterns in data.

Statistical Analysis: Applying statistical methods to interpret data.

Data Visualization: Presenting insights using charts, graphs, and dashboards.

Predictive Analysis: Using machine learning models to forecast trends.

By mastering these techniques, professionals can enhance business decision-making, optimize operations, and drive innovation.

Why Learn Data Analysis?

Data-driven decision-making is essential for every industry today. Companies use data analytics to understand consumer behavior, forecast sales, improve marketing strategies, and streamline operations. Some of the key benefits of learning data analysis include:

✔ High Demand: Data analysts are in demand across multiple sectors, including IT, finance, healthcare, and e-commerce.

✔ Career Growth: Opens opportunities in business intelligence, data science, financial analytics, and research.

✔ Better Decision-Making: Helps organizations make data-backed strategic decisions.

✔ Competitive Salaries: Data analysts earn lucrative salaries with numerous career prospects.

What We Offer at DICS Laxmi Nagar

At DICS Laxmi Nagar, our Data Analysis Course is designed to provide students with a strong foundation in data analytics. Here’s what makes our program unique:

1. Industry-Oriented Curriculum

Our curriculum covers essential topics, including:

🔹 Data cleaning and preparation techniques

🔹 Statistical analysis and hypothesis testing

🔹 Data visualization with Power BI & Tableau

🔹 SQL for database management and querying

🔹 Python programming for data manipulation and analysis

🔹 Machine learning basics for predictive analytics

2. Hands-On Training & Real-World Projects

We emphasize practical learning by incorporating real-world case studies and projects. Students work on datasets from different industries, gaining exposure to actual business challenges.

3. Expert Faculty & Mentorship

Our experienced instructors guide students through every step of the learning process, offering insights into industry trends and best practices.

4. Placement Assistance & Career Guidance

We provide job placement support, resume-building sessions, and interview preparation to help students secure jobs at top companies.

Career Opportunities in Data Analysis

After completing our Data Analysis Course, students can explore roles such as:

Data Analyst

Business Intelligence Analyst

Financial Analyst

Marketing Analyst

Healthcare Data Analyst

Research Analyst

Conclusion

At DICS Laxmi Nagar, we are committed to providing students with the best training in data analysis. Our goal is to bridge the gap between theoretical learning and real-world applications, ensuring students are job-ready in the field of analytics.

Join us today and take the first step toward a successful career in data analysis!


Graph Database Market Dynamics, Trends, and Growth Factors 2032

The Graph Database Market size was valued at US$ 2.8 billion in 2023 and is expected to reach US$ 15.94 billion by 2032, growing at a CAGR of 21.32% over the forecast period 2024-2032.
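The CAGR ties the start and end values together as end = start * (1 + CAGR) ** years. A quick check in Python, assuming 2023 to 2032 counts as nine years of compounding, shows that the quoted figures are consistent with each other. The `cagr` and `project` helpers are illustrative names, not part of the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted above, in US$ billions; 2023 -> 2032 is assumed to be 9 years of growth
implied = cagr(2.8, 15.94, 2032 - 2023)
print(f"Implied CAGR: {implied:.2%}")
print(f"2032 value at 21.32%: {project(2.8, 0.2132, 9):.2f}")
```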

The graph database market is growing rapidly due to the rising need to handle complex, interconnected data. Businesses across various industries are using graph databases to model relationships in their data, improve decision-making, and gain deeper insights. The adoption of AI, machine learning, and real-time analytics is further driving demand for graph-based data management solutions.

The graph database market continues to evolve as organizations seek efficient ways to manage highly connected data structures. Unlike traditional relational databases, which reconstruct relationships at query time through joins, graph databases store relationships directly as edges between nodes, which typically makes queries that traverse many relationships faster. The surge in big data, social media analytics, fraud detection, and recommendation engines is fueling widespread adoption across industries such as finance, healthcare, e-commerce, and telecommunications.
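To make the relationship-handling point concrete: if each node keeps a direct list of its neighbors, a "friends of friends" query just follows those lists hop by hop, whereas a relational schema would typically need one more self-join per hop. The `follows` data and `within_hops` helper below are hypothetical, and the in-memory dictionary is a simplification, not how any particular graph database actually stores data.

```python
from collections import deque

# Toy "follows" graph stored as adjacency lists: each node points straight at its neighbors
follows = {
    "alice": ["bob", "carol"],
    "bob": ["dave"],
    "carol": ["dave", "erin"],
    "dave": ["frank"],
    "erin": [],
    "frank": [],
}


def within_hops(graph, start, max_hops):
    """Return every node reachable from `start` in 1..max_hops edge traversals (BFS)."""
    seen = {start}
    reached = set()
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                reached.add(neighbor)
                queue.append((neighbor, depth + 1))
    return reached


print(sorted(within_hops(follows, "alice", 2)))
```

Each additional hop costs only the edges actually followed from the current frontier, which is the intuition behind graph databases' advantage on deep relationship queries.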

Get a Sample Copy of This Report: https://www.snsinsider.com/sample-request/3615

Market Key Players:

Oracle Corporation

Ontotext

OrientDB

Hewlett Packard Enterprise

Microsoft Corporation

Teradata Corporation

Stardog Union Inc.

Amazon Web Services

Market Trends Driving Growth

1. Rising Demand for AI and Machine Learning Integration

Graph databases play a crucial role in AI and machine learning by enabling more accurate predictions, knowledge graphs, and advanced data analytics. Businesses are integrating graph technology to enhance recommendation systems, cybersecurity, and fraud prevention.

2. Increased Adoption in Fraud Detection and Risk Management

Financial institutions and e-commerce platforms are utilizing graph databases to detect fraudulent transactions in real time. By mapping and analyzing relationships between entities, these databases can uncover hidden patterns that indicate suspicious activities.
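One common graph technique behind this is finding connected components. Accounts that share a device, phone number, or card end up in the same cluster, and unusually large clusters can be flagged for review. The sketch below uses a union-find structure. The account data, identifier format, and the threshold of three accounts are illustrative assumptions, not a production fraud rule.

```python
from collections import defaultdict

# Hypothetical accounts and the identifiers they used (devices, phone numbers, cards)
account_attributes = {
    "acct_1": {"device:A", "phone:555-0101"},
    "acct_2": {"device:A"},
    "acct_3": {"phone:555-0101", "card:9911"},
    "acct_4": {"card:9911"},
    "acct_5": {"device:Z"},
}


def linked_groups(accounts):
    """Group accounts connected through any shared identifier (union-find)."""
    parent = {acct: acct for acct in accounts}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a, b):
        parent[find(a)] = find(b)

    # Remember the first account seen with each identifier; merge later accounts into it
    first_owner = {}
    for acct, attrs in accounts.items():
        for attr in attrs:
            if attr in first_owner:
                union(acct, first_owner[attr])
            else:
                first_owner[attr] = acct

    groups = defaultdict(set)
    for acct in accounts:
        groups[find(acct)].add(acct)
    return list(groups.values())


# Flag clusters of 3+ accounts sharing identifiers as possible fraud rings for manual review
suspicious = [g for g in linked_groups(account_attributes) if len(g) >= 3]
print(suspicious)
```

Note that acct_2 and acct_4 share nothing directly; they are linked only through a chain of shared identifiers, which is the kind of hidden pattern that relationship-based analysis surfaces.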

3. Growth of Personalized Recommendation Engines

Tech giants like Amazon, Netflix, and Spotify are frequently cited as users of graph-based techniques in their recommendation engines. By analyzing user behavior and the connections between users and products, companies can deliver highly personalized experiences that enhance customer satisfaction.
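A simple form of graph-based recommendation walks from a user to the items they bought, then to other buyers of those items, and then to what those buyers also purchased, counting how often each candidate item is reached. The purchase data and the `recommend` function are hypothetical, and real recommendation engines combine many more signals than co-purchases.

```python
from collections import Counter, defaultdict

# Hypothetical user -> purchased-items edges (a bipartite user/item graph)
purchases = {
    "u1": {"laptop", "mouse"},
    "u2": {"laptop", "mouse", "keyboard"},
    "u3": {"laptop", "monitor"},
    "u4": {"phone", "case"},
}

# Reverse edges: item -> users who bought it
buyers = defaultdict(set)
for user, items in purchases.items():
    for item in items:
        buyers[item].add(user)


def recommend(user, top_n=2):
    """Score items reachable via user -> item -> other buyer -> their other items."""
    owned = purchases[user]
    scores = Counter()
    for item in owned:                                   # hop 1: user -> items bought
        for other in buyers[item] - {user}:              # hop 2: item -> other buyers
            for candidate in purchases[other] - owned:   # hop 3: buyer -> items not yet owned
                scores[candidate] += 1
    return [candidate for candidate, _ in scores.most_common(top_n)]


print(recommend("u1"))
```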

4. Expansion in Healthcare and Life Sciences

Graph databases are revolutionizing healthcare by mapping patient records, drug interactions, and genomic data. Researchers and healthcare providers can leverage these databases to improve diagnostics, drug discovery, and personalized medicine.

5. Surge in Knowledge Graph Applications

Enterprises are increasingly using knowledge graphs to organize information drawn from vast amounts of unstructured data into connected entities and relationships that can be queried. This trend is particularly beneficial for search engines, virtual assistants, and enterprise data management systems.
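At its simplest, a knowledge graph can be represented as (subject, predicate, object) triples queried by pattern matching, which is the idea behind query languages such as SPARQL. The tiny in-memory store and `match` helper below are an illustrative sketch with made-up relation names, not a real triple-store API.

```python
# A tiny knowledge graph stored as (subject, predicate, object) triples (illustrative only)
triples = {
    ("Aspirin", "treats", "Headache"),
    ("Aspirin", "interacts_with", "Warfarin"),
    ("Ibuprofen", "treats", "Headache"),
    ("Warfarin", "is_a", "Anticoagulant"),
}


def match(pattern, store):
    """Return matching triples, sorted; None in the pattern acts as a wildcard."""
    return sorted(
        t for t in store
        if all(p is None or p == v for p, v in zip(pattern, t))
    )


# "What treats a headache?"
print([s for s, _, _ in match((None, "treats", "Headache"), triples)])

# "What kind of drug does Aspirin interact with?" (two chained lookups)
for _, _, drug in match(("Aspirin", "interacts_with", None), triples):
    print(drug, [o for _, _, o in match((drug, "is_a", None), triples)])
```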

Make an Enquiry About This Report: https://www.snsinsider.com/enquiry/3615

Market Segmentation:

By Component

Software

Services

By Deployment

Cloud

On-Premise

By Type

Relational (SQL)

Non-Relational (NoSQL)

By Application

Identity and Access Management

Customer Analytics

Recommendation Engine

Master Data Management

Privacy and Risk Compliance

Fraud Detection and Risk Management

Others

By Analysis Type

Community Analysis

Connectivity Analysis

Centrality Analysis

Path Analysis
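Two of these analysis types are easy to sketch on a small undirected graph: path analysis with breadth-first search, which finds a path with the fewest hops, and centrality analysis with degree centrality, the fraction of other nodes each node connects to directly. The edge list and helper names are hypothetical. The same BFS traversal can also serve connectivity analysis by checking which nodes are reachable at all.

```python
from collections import deque

# Hypothetical undirected network, e.g. sites linked by direct connections
edges = [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D"), ("D", "E")]

graph = {}
for u, v in edges:
    graph.setdefault(u, set()).add(v)
    graph.setdefault(v, set()).add(u)


def shortest_path(graph, start, goal):
    """Path analysis: BFS returns a fewest-hops path from start to goal, or None."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:  # walk back through predecessors
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in sorted(graph[node]):  # sorted for deterministic output
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


def degree_centrality(graph):
    """Centrality analysis: share of the other nodes each node links to directly."""
    n = len(graph)
    return {node: len(nbrs) / (n - 1) for node, nbrs in graph.items()}


print(shortest_path(graph, "A", "E"))
print(max(degree_centrality(graph).items(), key=lambda kv: kv[1]))
```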

Market Analysis and Growth Projections

The shift towards real-time data analytics and the increasing complexity of enterprise data management are key growth drivers. Leading database providers such as Neo4j, Amazon Neptune, and TigerGraph are investing in scalable and high-performance solutions to cater to growing demand.

Key industries driving adoption include:

Banking and Finance: Graph databases enhance fraud detection, risk analysis, and regulatory compliance.

Healthcare and Biotech: Used for genomic sequencing, drug discovery, and personalized treatment plans.

Retail and E-commerce: Enhancing customer engagement through personalized recommendations.

Cybersecurity: Detecting anomalies and cyber threats through advanced network analysis.

Despite its rapid growth, the market faces challenges such as data privacy concerns, high implementation costs, and the need for specialized skills. However, continuous advancements in cloud computing and database-as-a-service (DBaaS) solutions are helping businesses overcome these barriers.

Regional Analysis

1. North America Leading the Market

North America dominates the graph database market, driven by the presence of major tech companies, financial institutions, and government initiatives in AI and big data analytics. The U.S. and Canada are investing heavily in advanced data infrastructure.

2. Europe Experiencing Steady Growth

Europe is witnessing strong adoption, particularly in industries like healthcare, finance, and government sectors. Regulations such as GDPR are pushing organizations to adopt more efficient data management solutions.

3. Asia-Pacific Emerging as a High-Growth Region

Asia-Pacific is experiencing rapid growth due to increased digital transformation in China, India, and Japan. The rise of e-commerce, AI-driven applications, and cloud adoption are key factors driving demand.

4. Latin America and Middle East & Africa Showing Potential

Although these regions have a smaller market share, there is growing interest in graph databases for financial security, telecommunications, and government data management initiatives.

Key Factors Fueling Market Growth