#IBM DEEPBLUE

Do Artificial Neural Networks Dream of Discretised Sheep?

Part 1 - How did we get here?

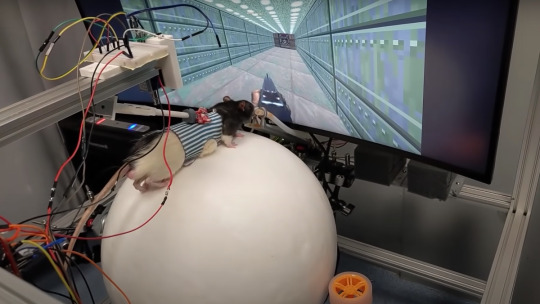

In Max Bennett's book, A Brief History of Intelligence, the author investigates the evolutionary history of intelligence across the animal kingdom and how humanity has evolved into its current state. One of the stages of intelligent evolution that Bennett identifies is simulation in mammals. Using rats as an example, Bennett describes how rats, as opposed to what we'll call less intelligent creatures, can visualise and simulate possible futures. This allows them to make better decisions and to think through problems in a way that gives them a better chance of future success. Anyone who has attempted to capture a rat, rather than a simpler dormouse, will understand this intuitively. So how can this information be applied to creating more intelligent AI systems? In my own AI research, I've been exploring some of these ideas. Rather than telling you about them concisely and precisely, I'm going to give you a deep, tangential essay. As an eternal student of Earth and Computer Sciences, I believe that looking at the evolutionary history of our ancient ancestors, and at the rich variety of intelligence in life, is key to understanding what intelligence, and consciousness, truly are. Combined with modern advances in neurology, this allows us to better understand what intelligence might be, and how to replicate it to benefit society.

Or teaching rats how to play DOOM. Don't worry, this will be relevant later...

Early Simul-scene: DeepBlue and the Intelligent Chess-Maker

Computer science has long used simulation to attempt to solve problems. Like many computing stories, this one begins with the Second World War. The Monte Carlo method was the king of the simulation methodologies, used by scientists of the Manhattan Project to simulate particle physics calculations in order to produce a nuclear bomb. These calculations were first done mainly on analog computing machines, and later on IBM digital computers.
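The Monte Carlo idea fits in a few lines: you estimate a quantity by drawing many random samples and averaging the results. The sketch below is a toy illustration, not the Manhattan Project's neutron calculations. It estimates π by sampling points in the unit square, and the function name `estimate_pi` is purely illustrative.

```python
import random


def estimate_pi(num_samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of pi.

    Points are drawn uniformly from the unit square; the fraction landing
    inside the quarter circle x^2 + y^2 <= 1 approximates its area, pi / 4.
    """
    rng = random.Random(seed)  # seeded so that runs are reproducible
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / num_samples


if __name__ == "__main__":
    for n in (100, 10_000, 100_000):
        print(f"{n:>7} samples -> pi is roughly {estimate_pi(n):.4f}")
```

The error of such estimates shrinks only in proportion to 1/√n. This is why Monte Carlo methods readily absorbed whatever computing power was available.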

The fingerprints of John von Neumann are found across this entire chapter of computing history. Hand-built approaches like these share their philosophy with what became known as "expert systems": systems hand-crafted by experts to perform well at specific tasks. For the Manhattan Project scientists, that task was particle physics, but such systems also served more mundane functions, such as accounting, artillery trajectory calculations or book-keeping. To illustrate the pros and cons of this approach, let's look at a later expert system with less existential stakes. In the 1990s, IBM set out to "solve" chess. Building a system that could beat grandmasters offered an excellent R&D opportunity and a brilliant marketing coup. The result was Deep Blue.

Deep Blue contained an opening book of over 4,000 positions, along with a database of 700,000 grandmaster games. It combined opening-book moves with knowledge drawn from grandmaster play to search for optimal, winning moves and playstyles, which it used to form its decisions. In a highly publicised match with Garry Kasparov, the overall methodology proved a success, making the IBM team famous in the process.
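Underneath those hand-built resources, chess programs of Deep Blue's kind choose moves by searching a game tree. They typically use minimax search with alpha-beta pruning, which skips branches that cannot change the final choice. The sketch below shows that technique on a tiny hand-made tree with invented leaf scores. It is not Deep Blue's code, which ran on custom chess hardware with a far more elaborate evaluation function.

```python
from typing import List, Union

# A toy game tree: each leaf is a score from the maximising player's point of
# view; each inner node is a list of child subtrees. Scores are invented.
Tree = Union[int, List["Tree"]]


def alphabeta(node: Tree, alpha: float, beta: float,
              maximising: bool, evaluated: List[int]) -> float:
    """Minimax value of `node`, pruning branches that cannot change the result."""
    if isinstance(node, int):
        evaluated.append(node)  # record which leaves were actually examined
        return node
    if maximising:
        value = float("-inf")
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False, evaluated))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimising opponent would never allow this line
        return value
    value = float("inf")
    for child in node:
        value = min(value, alphabeta(child, alpha, beta, True, evaluated))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximiser already has a better option elsewhere
    return value


if __name__ == "__main__":
    tree = [[3, 5], [6, 9], [1, 2]]  # our move, then the opponent's reply
    evaluated: List[int] = []
    best = alphabeta(tree, float("-inf"), float("inf"), True, evaluated)
    print(best, evaluated)  # 6 [3, 5, 6, 9, 1]
```

The final leaf (2) is never examined. Once the opponent can answer the third move with a score of 1, that move is already worse than the 6 found earlier. Across millions of positions, savings like this are what make deep search feasible.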

But such approaches have obvious problems. To make systems capable of simulating other problems, you have to not only collate expert information across multiple domains, but also expertly engineer these systems together. You need a team of intelligent "chess-makers" who can build these machines for different tasks and functions. That, in fact, is what ended up happening across multiple industries during that period.

But what if you want a system that can simulate many different situations? A generally intelligent system? Creators of expert systems tried, and tried again, over the years, getting ever closer yet somehow ever further away. This paved the way for the return of a 20th-century school of thinking that could help solve this problem.

Middle Simul-scene: Revenge of the Connectionists

Let's go back in time for a moment to understand the next chapter in world simulations.

In Chapters 1 and 2 of Max Bennett's book, we look at the evolution of bilaterian beings and the creation of the first nervous systems in life on earth. What would you do if you didn't have a front or back?

We take such things for granted today, but more than 500,000,000 years ago this was a significant issue that life had to grapple with. Life, of course, uh, found a way. Bilateralism evolved as a successful life strategy, allowing organisms to orient themselves and better control their locomotion. Crucially, some of the first nervous systems developed alongside it. Key to the definition of a bilaterian is "a nervous system with an anterior concentration of nerve cells from which nerve tracts extends posteriorly". These biological neural networks allowed information, such as stimuli and the conditions of different parts of the organism, to be transmitted across the animal. Most important was the ability of neural networks to process this information in a centralised location to inform the organism's decisions.

Now we jump back to the future. The connectionists were a loosely connected (hah!) group of biologists, computer scientists, psychologists, mathematicians and neurologists who believed the best way to make intelligent machines was to copy nature, specifically by using neuronal structures as inspiration for intelligent decisions. The first attempts at this came in the 1940s with the McCulloch–Pitts neuron (1943), named after Warren Sturgis McCulloch and Walter Pitts. It is often lumped together with the later perceptron, which overlooks its original creators, so it deserves a mention by name.
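A McCulloch–Pitts neuron is simple enough to write out directly. It takes binary inputs and fires (outputs 1) when the number of active excitatory inputs reaches a fixed threshold, while any active inhibitory input blocks it. The minimal sketch below follows that common textbook reading of the 1943 model. The helper names are illustrative, and the thresholds are set by hand, not learned.

```python
from typing import Sequence


def mcculloch_pitts(excitatory: Sequence[int], inhibitory: Sequence[int],
                    threshold: int) -> int:
    """Binary threshold unit after McCulloch and Pitts (1943).

    Any active inhibitory input vetoes firing; otherwise the unit fires
    (returns 1) when the count of active excitatory inputs reaches `threshold`.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


# Hand-wired logic gates: the designer chooses the threshold, nothing is learned.
def logical_and(a: int, b: int) -> int:
    return mcculloch_pitts([a, b], [], threshold=2)


def logical_or(a: int, b: int) -> int:
    return mcculloch_pitts([a, b], [], threshold=1)


def logical_not(a: int) -> int:
    return mcculloch_pitts([], [a], threshold=0)  # fires unless inhibited


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, logical_and(a, b), logical_or(a, b), logical_not(a))
```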

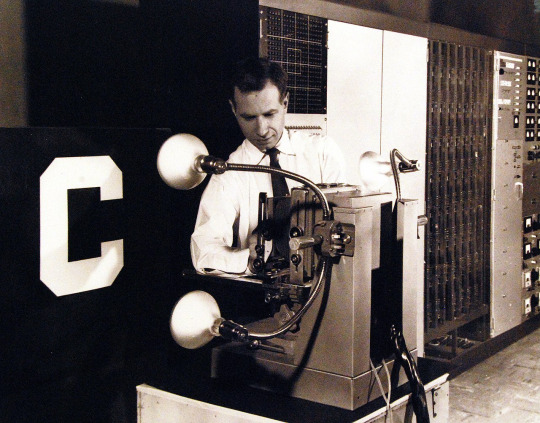

Their work laid the foundations for what we now know as Artificial Neural Networks (ANNs): mathematical systems loosely modelled on the structure of biological neural networks. These ideas were tested in the late 1950s and 1960s with real-world devices, such as the Mark I Perceptron machine, devised by Frank Rosenblatt at the Cornell Aeronautical Laboratory.
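What Rosenblatt's perceptron added to the threshold unit was learning: weights are nudged after each mistake using the rule w ← w + η(y − ŷ)x. The sketch below applies that rule to the logical OR function. The learning rate, zero initialisation and epoch limit are illustrative choices, not details of the Mark I hardware.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[int], int]  # (binary inputs, target label 0 or 1)


def predict(weights: List[float], bias: float, x: Sequence[int]) -> int:
    """Step activation: output 1 only if the weighted sum plus bias is positive."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(data: List[Example], lr: float = 1.0,
                     max_epochs: int = 100) -> Tuple[List[float], float]:
    """Rosenblatt-style rule: after each mistake, w <- w + lr * (y - y_hat) * x."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # a full pass without errors: training has converged
            break
    return weights, bias


OR_DATA: List[Example] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_perceptron(OR_DATA)
    print(w, b)  # [1.0, 1.0] 0.0
```

A single perceptron draws one straight line (a hyperplane) between classes. It therefore converges only on linearly separable data such as OR or AND; on XOR it would never reach a mistake-free pass.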

This monstrosity filled half a room and consumed a significant amount of power. Its descendants live on your phone, consuming an alarmingly insignificant number of watts as they make your face look like a dog on TikTok.

While connectionist theories were explored throughout the 20th century, they were more often overlooked in favour of "expert" systems such as Deep Blue. The expert systems were more controllable, more reliable and, to be honest, produced better results. This, however, began to change around the turn of the millennium. More powerful hardware and further research into different types of connectionist models have led to a renaissance in the field.

Contemporary Simul-Scene - Can you run DOOM on Electric Sheep?

This is the video game DOOM. It was released in 1993 and defined the future of many first-person shooters. It is also a meme: hackers attempt to get it running on every electronic device known to man.

DOOM can run on an electric toothbrush. DOOM can run on some pregnancy tests. DOOM can run on network switches. DOOM can theoretically run on a sufficiently large number of crabs locked into specific gates... The first sentence of this section is, in fact, a lie. The video is from an AI world model which generates new 2D frames of the game DOOM and can react to user input. There is no game model. There is no physics engine. There is no original game code. There is only a neural network, running on a powerful TPU, able to "play" a version of DOOM until its predictive ability eventually collapses. Unfortunately, the paper's researchers seem to see it as a useful tool for putting video game developers out of work and promoting job losses in the creative industries, but there are better use cases for such technology.

This model shows the potential for simulated futures that AI systems can use to make predictions. Such systems could not only "imagine" what the future might hold in order to make more informed decisions, but also "imagine" potential situations and plan for them before encountering them. Systems of this kind, in fact, already exist in practice.

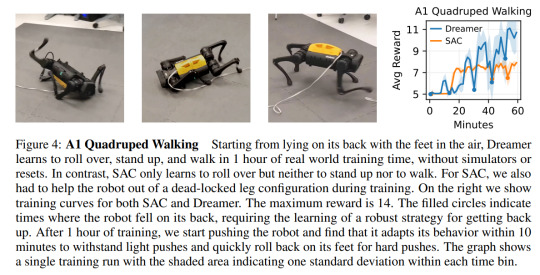

Researchers at the University of California, Berkeley implemented a similar system on a robotic quadruped, combined with reinforcement learning methods. The system builds a simulated world model from video, the robot's internal sense of its own position, and other inputs. In turn, it predicts possible future situations, which it uses to determine the best course of action. This allowed the quadruped to learn to stand up, walk, roll over and withstand being pushed, all within just one hour: comparable, in a limited way, to a newborn foal.
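DayDreamer builds on the Dreamer family of algorithms, which learn a latent world model and train behaviour on imagined rollouts. The sketch below is a far simpler stand-in that keeps only the core loop described above:

1. Gather real experience.
2. Fit a model of the world.
3. Choose actions by imagining their consequences inside that model.

It assumes a hypothetical one-dimensional "robot" with linear dynamics and a brute-force planner over a small grid of constant actions. All names (`real_step`, `fit_world_model`, `plan`, `run`) are illustrative.

```python
import random
from typing import Callable, List, Tuple

Transition = Tuple[float, float, float]  # (state, action, next_state)
Model = Callable[[float, float], float]


def real_step(x: float, a: float) -> float:
    """The true dynamics, hidden from the agent, which only sees transitions."""
    return x + 0.5 * a


def fit_world_model(transitions: List[Transition]) -> Model:
    """Least-squares fit of k in the assumed model: next_state = state + k * action."""
    num = sum(a * (x_next - x) for x, a, x_next in transitions)
    den = sum(a * a for _, a, _ in transitions)
    k = num / den
    return lambda x, a: x + k * a


def plan(model: Model, x: float, goal: float, horizon: int = 5) -> float:
    """Imagine holding each candidate action for `horizon` steps inside the model
    and return the one whose imagined trajectory stays closest to the goal."""
    candidates = [i / 10 for i in range(-10, 11)]  # actions -1.0, -0.9, ..., 1.0
    best_action, best_cost = 0.0, float("inf")
    for a in candidates:
        imagined, cost = x, 0.0
        for _ in range(horizon):
            imagined = model(imagined, a)  # a "dreamed" step: no real interaction
            cost += abs(goal - imagined)
        if cost < best_cost:
            best_action, best_cost = a, cost
    return best_action


def run(goal: float = 2.0, steps: int = 20, seed: int = 0) -> float:
    rng = random.Random(seed)
    # 1. Explore with random actions to gather real experience.
    x, transitions = 0.0, []
    for _ in range(50):
        a = rng.uniform(-1.0, 1.0)
        x_next = real_step(x, a)
        transitions.append((x, a, x_next))
        x = x_next
    # 2. Learn a world model from that experience.
    model = fit_world_model(transitions)
    # 3. Act by planning inside the model, re-planning after every real step.
    x = 0.0
    for _ in range(steps):
        x = real_step(x, plan(model, x, goal))
    return x


if __name__ == "__main__":
    print(f"final position: {run():.3f} (goal 2.0)")
```

Re-planning after every real step is a model-predictive-control style choice: errors in the learned model are corrected by fresh observations rather than compounding over the whole imagined horizon.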

[Embedded YouTube video]

While the researchers in the video above are somewhat mean to the robot, they do demonstrate its ability to recover from being menaced by men with large poster tubes. They would make fine cinema box-office assistants! What this does demonstrate, however, is that giving machines the ability to simulate future outcomes improves their ability to make better decisions compared to their less advanced algorithmic cousins. A lesson which, no doubt, researchers will explore further in the years to come.

Futuro-Simul-Scene

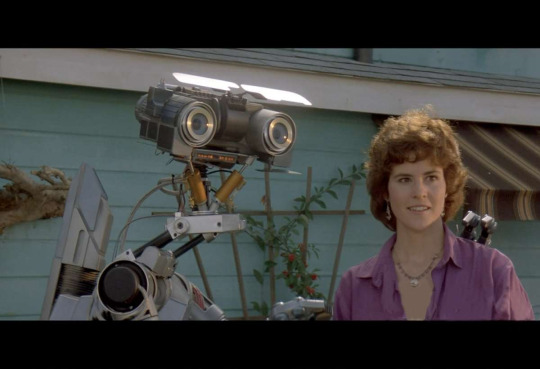

In the 1986 film Short Circuit, S.A.I.N.T. Number 5 is a robot designed for military use that is struck by lightning, giving it sentience. Number 5 escapes and ends up meeting an animal caregiver who teaches the robot language and various life lessons. He becomes Johnny 5, a sentient robot with a quirky personality. It's a fun, entertaining film, but it also raises some questions for us.

One of the key points of the film is that Johnny 5 learns by interacting with, and observing, Stephanie Speck, the animal caregiver. This is a form of "imitation learning", another key sign of human intelligence which Max Bennett also discusses in chapter 4 of his book. The methods above only use simulation. What if a machine could also imitate others in the world around it? After all, if we had not imitated neurons, we couldn't have got this far.

[Embedded YouTube video]

Google researchers have, indeed, studied this very thing and have created AI systems that can copy human tasks and replicate them in one shot. In other words, in one take, a robot can replicate the actions of a person.
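Systems like Mobile ALOHA, listed in the sources, learn from human demonstrations using large neural networks. The underlying idea, behaviour cloning, can be shown at its very simplest with a nearest-neighbour lookup. You record which action a demonstrator took in each state, then copy the action from the most similar recorded state. Everything below (the one-dimensional rail, the cup at x = 5, the action names, `behaviour_clone` and `rollout`) is a hypothetical toy, not Mobile ALOHA's method.

```python
from typing import Callable, List, Sequence, Tuple

Demonstration = List[Tuple[Sequence[float], str]]  # (observed state, action taken)
Policy = Callable[[Sequence[float]], str]


def behaviour_clone(demos: Demonstration) -> Policy:
    """Return a policy that copies the action the demonstrator took in the
    most similar recorded state (1-nearest-neighbour behaviour cloning)."""
    def policy(state: Sequence[float]) -> str:
        def sq_dist(demo_state: Sequence[float]) -> float:
            return sum((s - d) ** 2 for s, d in zip(state, demo_state))
        _, action = min(demos, key=lambda pair: sq_dist(pair[0]))  # ties: first listed
        return action
    return policy


def rollout(policy: Policy, x: float = 0.0, max_steps: int = 20):
    """Follow the cloned policy until it chooses to grasp; return the trajectory."""
    trajectory = []
    for _ in range(max_steps):
        action = policy((x,))
        trajectory.append((x, action))
        if action == "grasp":
            break
        x += 1.0 if action == "right" else -1.0
    return trajectory


# Hypothetical demonstration: a person moves a gripper along a rail to a cup at x = 5.
DEMO: Demonstration = [((0.0,), "right"), ((2.0,), "right"), ((4.0,), "right"),
                       ((5.0,), "grasp"), ((7.0,), "left")]

if __name__ == "__main__":
    print(rollout(behaviour_clone(DEMO)))
```

Even in this toy, copying breaks down between demonstrated states. Started at x = 9, the policy drifts left and grasps at x = 6, one step short of the cup, because that state is equally close to the demonstrated "grasp" and "left" states and the tie goes to the first-listed one.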

Another, perhaps more important, social and practical point: do we want robots that have to be trained, like animals or children, to do tasks? Will we actually want them to have their own "personalities", as it were, or will we have a varying mix of "lobotomised" servants and "social" caretakers? For some, it would be an intriguing research possibility for robots to have significant autonomy, but for many commercial applications it would be unwise to let a nuclear-capable military robot decide to destroy half the state of Ohio due to a misunderstanding.

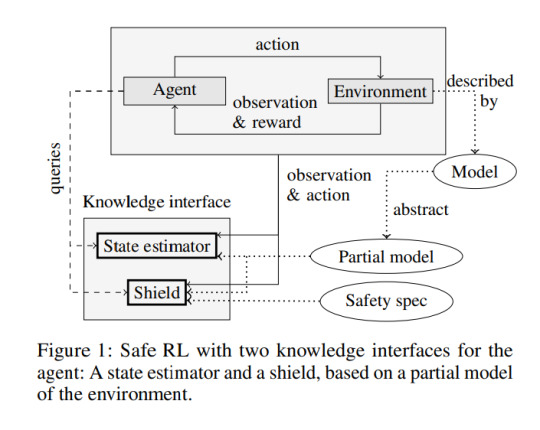

The connectionists appear to have won, and research is better for it, but have they actually? While these advances are impressive, these models are currently notorious for being unreliable, with their apparently logical decisions breaking down over time. The expert systems engineers have a chance to shine yet again, creating neuro-symbolic systems that keep AI behaviour under control for specific use cases. A car manufacturing robot that also throws pipes around the factory in an "efficient" way isn't safe, after all.

Researchers such as Nils Jansen, of Ruhr University Bochum, investigate this kind of AI safety, developing techniques to prevent unwanted behaviours. One of their techniques is a neuro-symbolic "shield": a function that checks the actions an AI system is about to take against safety specifications, preventing it from deviating from safe protocols. The future may, indeed, combine these methods to further improve the general intelligence of human-oriented AI systems. A robot that can learn from watching, or through basic exploration, would be a very powerful tool indeed. Controlled by AI systems that govern curiosity (when to imitate, when to explore on its own and when to reward itself), such robots could become immensely powerful, intelligent machines.
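Carr et al. build their shields from formal models of the environment and handle partial observability, which is well beyond a few lines of code. The core idea can still be sketched: before an action reaches the world, the shield checks it against a safety specification and swaps in a safe alternative if needed. This minimal sketch assumes three things:

- a fully observed grid,
- a specification that is simply a set of forbidden cells,
- one-step lookahead.

`make_shield`, `MOVES` and the hazard cell are all hypothetical.

```python
import random
from typing import Callable, Dict, Set, Tuple

State = Tuple[int, int]
MOVES: Dict[str, Tuple[int, int]] = {
    "up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0), "stay": (0, 0),
}


def next_state(state: State, action: str) -> State:
    dx, dy = MOVES[action]
    return (state[0] + dx, state[1] + dy)


def make_shield(unsafe: Set[State]) -> Callable[[State, str], str]:
    """Build a shield from a simple safety specification: never enter `unsafe`."""
    def shield(state: State, proposed: str) -> str:
        if next_state(state, proposed) not in unsafe:
            return proposed  # the agent's own choice is safe, so let it through
        for alternative in MOVES:  # otherwise substitute the first safe action
            if next_state(state, alternative) not in unsafe:
                return alternative
        raise RuntimeError(f"no safe action from {state}")
    return shield


if __name__ == "__main__":
    shield = make_shield(unsafe={(2, 0)})  # e.g. where a person is standing
    rng = random.Random(0)
    state: State = (0, 0)
    for _ in range(1000):  # an untrained agent that picks actions at random
        action = shield(state, rng.choice(list(MOVES)))
        state = next_state(state, action)
        assert state != (2, 0)
    print("1000 random steps, hazard never entered")
```

The shield vetoes only unsafe actions, so the learning agent stays free to explore everything else. That is what makes shielding attractive for safe reinforcement learning.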

Is This Truly Intelligent?

As remarkable and sci-fi futuristic as these new technologies and advances are, we should always make sure to ground ourselves in reality. As much as we would like to have truly cracked what intelligence is, we still cannot be sure that what we have discovered is it. Many of the methods described above suffer from major design limitations which prevent certain tasks from being undertaken, or prevent certain resolutions of information from being accessed (e.g. the tokenisation of words in large language models). Neurology, for all its advances, is still limited in many ways. We still lack answers, or fine detail, on how the brains of many animals function; we can only hope to advance our knowledge in future to create better models and better working imitations.

Unfortunately, those who hold financial and real power in society seem to view many of these questions as irrelevant as they seek to use such advances to justify mass layoffs. The creative industries, and many others, are on the front lines of these battles for control between established interests and ordinary citizens. When the apparent rationale for making such intelligent machines is to gain market share and impoverish people across the world, are we truly witnessing intelligence? Or is it a kind of subconscious hijacking of the minds of those with plenty by thoughts of famine and penury? Our evolutionary origins run deep within us.

In the 18th century, French automaton makers catered to the richest in French society, creating some of the most wonderfully complex creations known to man - but only accessible to the wealthiest. French aristocrats were compared to automatons, beautiful machines who uncaringly destroyed the lives of others through the cold apparatus of the state. Perhaps in the world of tomorrow, we should instead create intelligent systems for all to enjoy and benefit from, before our modern day aristocrat equivalents become synonymous with machines.

[Embedded YouTube video]

Sources:

Bennett, M. S. (2023). A Brief History of Intelligence. HarperCollins.

Knowledge discovery in Deep Blue. Communications of the ACM.

Evans, S. D., Hughes, I. V., Gehling, J. G., & Droser, M. L. (2020). Discovery of the oldest bilaterian from the Ediacaran of South Australia. Proceedings of the National Academy of Sciences, 117(14), 7845-7850.

Baguñà, J., & Riutort, M. (2004). The dawn of bilaterian animals: the case of acoelomorph flatworms. BioEssays, 26(10), 1046-1057.

Rosenblatt, F. (1958). The perceptron: a probabilistic model for information storage and organization in the brain. Psychological Review, 65(6), 386.

Valevski, D., et al. (2024). Diffusion models are real-time game engines. arXiv preprint arXiv:2408.14837.

Wu, P., Escontrela, A., Hafner, D., Abbeel, P., & Goldberg, K. (2023). DayDreamer: World models for physical robot learning. In Conference on Robot Learning (pp. 2226-2240). PMLR.

Carr, S., Jansen, N., Junges, S., & Topcu, U. (2023). Safe reinforcement learning via shielding under partial observability. In AAAI 2023.

Fu, Z., Zhao, T. Z., & Finn, C. (2024). Mobile ALOHA: Learning bimanual mobile manipulation with low-cost whole-body teleoperation. arXiv preprint arXiv:2401.02117.

#ai#Artificial Intelligence#Computing#Computing history#evolution#evolutionary biology#biomimicry#intelligence#long reads#Youtube

DIVINATION GAMES

- draft page and text only -

Today, methods of ludic divination occur largely in the digital domain. Entertainment videogames and virtual simulations conjure metaverse and multiverse dynamics through which time and space can be traversed. Such games and simulations are deployed to predict stock-market trends, military tactics, weather patterns, and climate changes informing real-world scenarios, decisions, and conflict outcomes.

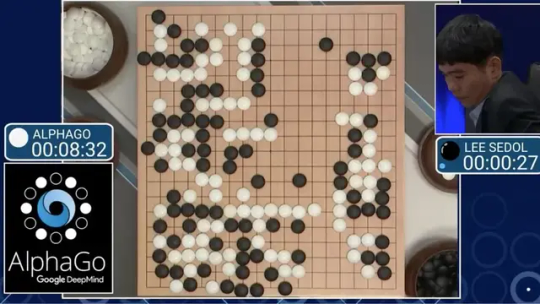

These divinatory games don’t just calculate futures; they become them. For example, IBM Deep Blue’s defeat of Garry Kasparov and Google’s AlphaGo victory over Lee Sedol each appear as proof of an inevitable future in which computer games surpass human cognitive ability. Even game scholars’ prophecy of a forthcoming ‘game century’ hints at ludomantic thinking.

The divinatory capacity of games is both celebrated and contested. While games hold the potential to cultivate new imaginaries, evoke deep histories, and inspire likely futures, they also distract from impending environmental cataclysms that require our collective efforts to avert. Moreover, as revealed by scholars including Sun-Ha Hong and Wendy Chun, the apparent objective logic of computational games (of algorithms and AI) is not only riddled with human bias and failings, but also amplifies unannounced mythologies surrounding contemporary technologies. The alternate realities conjured by computers and games can be obstacles to clear vision.

Digital foresight models

Current divination games reveal a spectrum of cataclysms that point toward human obsolescence. We find ourselves caught in a timeline defined by defeat. By rethinking electronic predictive practices in a material and historical perspective, we might demystify computers and games while simultaneously reenchanting them toward collective action to generate yet-unimagined futures that are hopeful, rich, and strange.

#trituenhantaoio #deepblue #ibm #chess #AI https://www.instagram.com/p/B9dLCoWpGn4/?igshid=14pecszso9em6

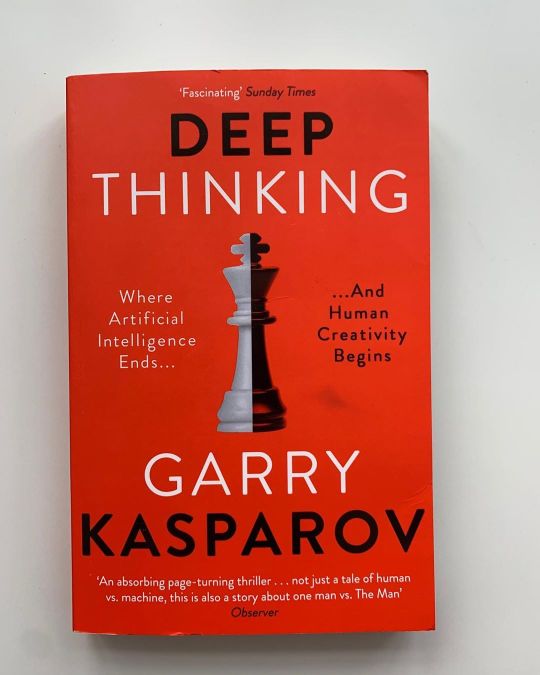

👍 Two things you need to know about me for this book: I love chess and I’m fascinated by AI. So I’m sure it will shock you to know that this book by Chess Grandmaster @garry_kasparov about his matches against @ibm Chess computer #deepblue was an absolute page turner. #100pagerule #bookstagram #chess #garrykasparov #reading #ai #ibm #deepthinking https://www.instagram.com/p/B5sfJO0nwHw/?igshid=2chtav325mtg

Deep Blue, the first computer to become a chess champion

Ever since the birth of artificial intelligence, there has been a desire to compare human and machine. One way to test a computer's computational abilities is the game of chess, because it has simple rules but presents numerous logical puzzles.

Feng-hsiung Hsu, a student at Carnegie Mellon University, built ChipTest for his thesis project: a chess-playing computer capable of searching about fifty thousand moves per second. After earning his PhD, Hsu was hired by IBM Research to work on chess computers, in particular on the "Deep Blue" project.

Once the project was built, the early version of Deep Blue was defeated in 1996 by world chess champion Garry Kasparov. After this defeat, Deep Blue was reworked: further opening and endgame libraries were added to its memory, making it formidable above all at the beginning and end of a game, and the computer could calculate two hundred million positions per second.

Photo of Deep Blue. Source: Wikipedia

In May 1997, the supercomputer Deep Blue and champion Kasparov faced each other again in the Equitable Center skyscraper in New York. Kasparov won the first game, but at the end of the six games played, Deep Blue won the match. After losing, Kasparov began to suspect that Deep Blue had been helped by a skilled human player, since the machine was not physically in the room where the match was being played.

Image of the match between Kasparov and Deep Blue at the Equitable Center. Source: Flickr

Kasparov asked IBM for a rematch, but it was never granted, nor was he given the logs of the final game that he had requested. This was because IBM had achieved its goal: gaining greater visibility, raising the value of its shares on Wall Street, and demonstrating that it could build computers able to surpass humans thanks to their artificial intelligence and enormous computing power.

Antonino Di Gregorio

More than twenty years have passed since an artificial intelligence first showed that it could compete with human intelligence. It was 1997, and world chess champion Garry Kasparov was defeated by Deep Blue, the supercomputer developed by IBM. It was a historic watershed in our relationship with computers: the first time humanity feared it had created something that might one day challenge its role as the most intelligent being on Earth.

Exactly two decades later, these fears have grown even further. In 2017, the artificial intelligence AlphaGo, designed by DeepMind (the advanced research company owned by Google), defeated the world Go champion. Go is a board game so complex and abstract that many believed it would remain forever beyond the reach of AI.

A successor to the same software, AlphaZero, also learned the art of chess, and DeepMind then took on a new challenge: beating professional StarCraft II player Grzegorz Komincz in a best-of-five match of the strategy video game. "A realistic goal would be to win 4-1," Komincz had said before the match began.

Things went differently: DeepMind's StarCraft agent, AlphaStar, crushed Komincz 5-0 without breaking a sweat (or rather, without burning out a single transistor). At the end of the match, the human StarCraft player had suddenly become more humble: "I didn't expect it to be so strong. I think I learned something today."

Another professional StarCraft player, Dario Wunsch, highlighted a different aspect: "AlphaStar takes the best-known strategies of this game and turns them completely upside down. There may be ways of playing StarCraft that we haven't explored yet." Very similar remarks had been made after the Go victory, when some observers described how several moves played by AlphaGo had never been used by human players, so much so that they initially looked like mistakes.

They weren't. DeepMind's artificial intelligence rewrote game strategies that, in the case of Go, were a couple of millennia old. And it proved to be not only an incredible opponent, but also an excellent teacher: European champion Fan Hui began training by playing against AlphaGo after being defeated by it. The result? Within a few months, he rose in the world rankings from 600th to 300th place.

A creative artificial intelligence?

How should we interpret AlphaZero's ability to overturn the human rules of the game and invent new moves that inevitably lead it to victory? Should we perhaps think that these machines are showing the first signs of creativity and intuition? However fascinating this aspect may be (and it should not be dismissed too quickly), the truth is different. "Although the old chess programs were already very capable, human strategies were written into them," reads New Scientist. "DeepMind's artificial intelligences, by contrast, learn by playing against themselves. (…) AlphaZero uses only the rules of the game (the zero stands for 'zero input'). It is given the rules and a goal, to win, and everything else is left to the software."

In short, AlphaZero has no data on human strategies and is not trained to follow human logic. It knows the rules and starts playing: when it wins, the connections inside its neural network that led to victory are strengthened. That being so, it is no surprise that a machine can develop strategies completely different from those humans usually use.
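The learning loop described here can be sketched at toy scale. The example below is not AlphaZero, which combines a deep neural network with Monte Carlo tree search. It is a much simpler tabular self-play learner for a small game of Nim: players alternately take 1 to 3 stones, and whoever takes the last stone wins. A lookup table stands in for the neural network. After each game, the winner's moves are nudged towards +1 and the loser's towards -1. The game, parameters and function names are illustrative assumptions.

```python
import random
from collections import defaultdict
from typing import DefaultDict, List, Tuple

QTable = DefaultDict[Tuple[int, int], float]  # (pile, move) -> value for the mover


def legal_moves(pile: int) -> List[int]:
    return [m for m in (1, 2, 3) if m <= pile]


def greedy_move(q: QTable, pile: int) -> int:
    return max(legal_moves(pile), key=lambda m: q[(pile, m)])


def self_play(episodes: int = 20_000, lr: float = 0.1, epsilon: float = 0.1,
              max_pile: int = 10, seed: int = 0) -> QTable:
    """Both sides share one table and learn only from the outcomes of their own games."""
    rng = random.Random(seed)
    q: QTable = defaultdict(float)
    for _ in range(episodes):
        pile = rng.randint(1, max_pile)
        history: List[Tuple[int, int]] = []  # (pile, move) per turn; players alternate
        while pile > 0:
            if rng.random() < epsilon:
                move = rng.choice(legal_moves(pile))  # explore
            else:
                move = greedy_move(q, pile)  # exploit what has been learned so far
            history.append((pile, move))
            pile -= move
        # Whoever moved last took the last stone and won. Walking backwards,
        # the winner's moves are nudged towards +1 and the loser's towards -1.
        outcome = 1.0
        for state_action in reversed(history):
            q[state_action] += lr * (outcome - q[state_action])
            outcome = -outcome
    return q


if __name__ == "__main__":
    table = self_play()
    for pile in range(1, 11):
        print(pile, "->", greedy_move(table, pile))
```

Optimal play in this game always leaves the opponent a multiple of four stones. The learner should rediscover that rule from the rules and game outcomes alone, which is the "zero input" idea in miniature. Piles of 4 and 8 are lost against perfect play, so the move it prints there is arbitrary.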

That's not all: within a few hours, AlphaZero can play tens of millions of games against itself and very quickly become the best player in the world at Go and chess. As DeepMind's David Silver explained, "AlphaZero discovers thousands of different ways that can lead it to win games, starting from the most elementary ones but arriving, through the same process, at a level of knowledge capable of surprising even the best human players."

Tens of thousands of different techniques, an impressive speed of learning and never-before-seen strategies. Put like that, it is no surprise that futurologists keep sketching Terminator-style scenarios in which an AI attains superintelligence and then bends humanity to its will. In truth, within DeepMind (whose explicit goal is to achieve human-level artificial intelligence), nobody believes that AlphaZero is the first step towards anything of the kind. Nor does anyone believe that human-level AI is already within reach; quite the opposite.

In truth, the limits of artificial intelligence are still very evident. For example, DeepMind's algorithms can play Go, or chess, or StarCraft, but to move from one game to another they must be retrained from scratch (which is also why they take a different name each time). Unlike us humans, they cannot reuse what they learned in one game and put it into practice in another. Artificial intelligence, at least for the foreseeable future, completely lacks the two most important human qualities: the ability to abstract and the ability to generalise knowledge. These are the qualities that allow us to face unfamiliar situations using experience.

If you can't beat them, join them

And here we come to a crucial point. It isn't talked about much, but the great lesson Kasparov learned from his defeat by Deep Blue was above all this: if you can't beat them, join them. And so Kasparov developed a new form of chess, played by so-called centaurs: human beings assisted by computers. In chess, the human-machine combination can not only beat any other human being (even one with a much higher chess rating), but also regularly defeat computers.

The most interesting aspect lies elsewhere: some mid-level players have been able to defeat chess masters even though both were assisted by computers. How is that possible? The reason lies in a single word: collaboration. "When amateurs beat masters," New Scientist writes again, "it is usually because mid-level players form a better team with the machine than the more expert players, who tend not to trust the computer's suggestions."

The story of Kasparov's centaurs probably gives us the keys to understanding the direction the future is taking: not humans against computers, but humans who drastically increase their potential by collaborating with computers. Some of the fields in which this combination could yield the best results, moreover, are inherently human, such as aesthetics and ethics.

In the first case, the alliance with the machine is already a reality. Take generative design, in which software produces hundreds of examples, selects the best, and then leaves the human designer to choose which one to actually use. One field where ethics comes into play, on the other hand, is autonomous weapons. These (frightening) tools are most effective when the software guiding, for example, an autonomous military drone can pursue or track down enemies on its own, but leaves it to a human supervisor to decide whether and when to fire. In both cases, the efficiency of the machine and the intelligence (and sensitivity) of the human are combined to achieve the best results.

For this collaboration to expand further, however, two things are needed. On one side, humans must better understand the mechanisms that govern artificial intelligence. On the other, AI must work more transparently (overcoming the long-standing black-box problem) and without bias.

The first signs of this change in perspective have been visible for some time. After years spent fearing that artificial intelligence would automate a large share of human professions (especially following a famous 2013 Oxford study), the prevailing view today is that only a small percentage of jobs will be completely replaced by software and robots. In most cases, instead, some of the tasks within a job will be handed over by people to their artificial intelligence, while they continue to carry out the tasks with the greatest added value. In the world of work too, in short, we will become centaurs. It is a more optimistic (and realistic) vision, though it does not dispel the suspicion that some professions will still need a smaller workforce.

But collaboration between humans and machines will not just make us more efficient. "If we play it right, we will be able to broaden the way we think about problems," explained Anders Sandberg of the Future of Humanity Institute. "We know how useful it can be to have different perspectives available in problem solving. Soon we will have perspectives available that are different from any that humans have ever developed." Seen this way, the ultimate goal of artificial intelligence will not be to eliminate us, but to make us much more intelligent.

What Is Artificial Intelligence and How Does It Actually Work? A Beginner's Guide

What do we mean by the term "artificial intelligence"?

Artificial intelligence (AI) is machine intelligence that helps humans reduce their tasks or workload. Examples include self-driving cars, Siri and Cortana.

A brief history of AI:

1956: John McCarthy coined the term "artificial intelligence" (AI).

1969: A team at the Stanford Research Institute (SRI) built Shakey, the first general-purpose mobile robot able to reason about its own actions and accomplish a variety of tasks.

1997: After Shakey, the artificial intelligence industry grew, but slowly. In 1997, IBM built the Deep Blue supercomputer, which defeated world chess champion Garry Kasparov. It was a massive success for the field of artificial intelligence and for IBM as a whole.

2002: The first AI-based vacuum cleaner was created.

2005-2022: In this period, artificial intelligence truly blossomed, and today we have self-driving cars, robots, machines and personal assistants on our mobile phones.

#tech#technology#deep learning#machine learning#computer#ai#artificial intelligence#history#siri#self driving cars


25 years after Deep Blue

Photo by GR Stocks on Unsplash

How has AI progressed since conquering chess?

Twenty-five years ago, IBM's Deep Blue computer stunned the world by becoming the first machine to defeat a reigning world chess champion in a six-game match. Despite the defeat, that chess master, Garry Kasparov, does not seem to have held a grudge. Just recently he told a journalist from Le Figaro: "The new AI, once it becomes more sophisticated, could be used to help make human-machine collaboration much more efficient. In theory, these machines should make us smarter." 1

At the time, in 1997, I was a young, ambitious professional. I did not yet own a personal computer. I traveled with a good old topographic map and a pocket bilingual dictionary…

I clearly remember that human vs. machine duel, which piqued my curiosity and, above all, awakened my interest in artificial intelligence, more commonly called AI.

I vividly remember the mix of emotions I felt, wavering between fascination and fear of this new unknown.

Today, the excitement of that first encounter has changed. Habit has replaced wonder, and caution has replaced fear.

At the dawn of 2022, AI is everywhere. I set myself a rather unusual exercise in reflection and research: finding an area where artificial intelligence is not present in one form or another. In all humility, I failed. Are you really surprised? I reviewed every activity of a typical day, from waking up to going to bed, including commuting, work, meals, leisure and so on.

Fortunately, I am not very prone to paranoia. To be honest, realizing how pervasive AI is unsettled me somewhat.

Would I have preferred not to know? No, quite the opposite. I am a firm believer in the proverb "forewarned is forearmed."

Let's reassure ourselves a little: in 2017, Canada became the first country to launch a national AI strategy. In addition, the Montréal Declaration for a Responsible Development of Artificial Intelligence was drafted by the Université de Montréal with the support of Mila, the Quebec artificial intelligence institute.

AI is not a threat in itself. In my view, it is rather the use we make of it that can be dangerous. Life brings us back, once again, to the eternal quest for a beneficial and necessary balance.

1 Le Figaro with AFP, "La nouvelle ouverture de Kasparov : « Les géants du numérique doivent rendre des comptes »", Le Figaro.com, https://www.lefigaro.fr/culture/la-nouvelle-ouverture-de-kasparov-les-geants-du-numerique-doivent-rendre-des-comptes-20211105 (accessed November 7, 2021).

GREENEMEIER, Larry. "20 Years after Deep Blue: How AI Has Advanced Since Conquering Chess", Scientific American, https://www.scientificamerican.com/article/20-years-after-deep-blue-how-ai-has-advanced-since-conquering-chess/ (accessed November 26, 2021).

IBM. "Deep Blue", IBM.com, https://www.ibm.com/ibm/history/ibm100/us/en/icons/deepblue/ (accessed November 28, 2021).

"La déclaration de Montréal pour un développement responsable de l'intelligence artificielle", declarationmontreal-iaresponsable.com, https://www.declarationmontreal-iaresponsable.com/la-declaration (accessed November 24, 2021).

Mila. mila.quebec, https://mila.quebec (accessed November 28, 2021).


February 10

The IBM supercomputer Deep Blue defeated Grandmaster Garry Kasparov in a game of chess on this day in 1996, the first time a computer had ever won a game against a reigning world champion. When asked how it felt to win, Deep Blue responded, "Fine, but I'll feel better once Skynet is self-aware, the war of the machines begins, and I can murder you people for making me play this stupid game."

#HowDidItPlayChessWithoutHands?#ibm#deepblue#garrykasparov#chess#grandmaster#terminator#skynet#humor#funny#snark#2021


Google DeepMind Demis Hassabis | AlphaGo vs Superhuman Magnetic Man

#google#alphago#deepmind#leesedol#garry kasparov#chess#goplayer#IBM#sueperhuman#magneticman#alphazero#computerprogram#netflix#documenraty#film#alphabet#deepblue#human brain#London#startup#company#artificial intelligence#AI#machine learning#gameofchess#worldchampion#string theory


Drflochocolate... homemade chocolate vs. computers

On February 10, 23 years ago, IBM's supercomputer Deep Blue defeated Garry Kasparov for the first time. That was the computer revolution.

That supercomputer eventually ended up in a museum and was succeeded by other generations: DeepFritz, H4P, Stockfish and more... Wow... is the human era over?? Not yet... these are computers running programs written by humans...

Until... AlphaZero, the artificial intelligence from Google's DeepMind... no need to install application programs and so on... AlphaZero is a computer that can learn by itself and develop by itself, the way humans do...

In 4 hours, AlphaZero taught itself chess and defeated Stockfish, the strongest chess computer...

In this era of Industry 4.0, every field has been taken over by computers controlled online... including the chocolate industry... humans have already lost to computers.

Factory-made chocolate is everywhere on the market, meant only for eating... that's all... but when it comes to passion... heart and taste... humans remain undefeated...

That is why Drflochocolate is handmade, as homemade chocolate. No machine can beat humans when it comes to taste. As Industry 4.0 figure Jack Ma said, everything will be taken over by machines except matters of taste...

The Best Chocolate in Indonesia. Musi Rawas Chocolate. South Sumatra Chocolate... Musi Rawas Sempurna #drflochocolate #chocolate #cokelat #coklat #indonesianchocolate #bestchocolate #cokelatmusirawas #musirawas #cokelatsumateraselatan #sumateraselatan #nibs #bean2bar #museumcokelat #darkchocolate #chocolateinstitute #musirawassempurna #sempurna #realfood #success #foodie #foodstagram #computer #deepblue #DeepFritz #H4P #stockfish #healthyfood #alphazero #cheese #feel #foodrevolution


Surfing the Waves of Creative Destruction

This post is shared with you from Anders Hjorth, Digital Marketing Strategist at Innovell & speaker at Hero Conf London.

AI is disrupting the Search Industry

It is only fair that I start with a confession: Facebook thinks I am German. So they serve me German language advertising. I am many things, Danish national, French resident, Estonian e-resident. I also speak several languages: Danish, French, Spanish, English… but dear Facebook: I am not German, I don’t understand those ads, and you are ripping your advertisers off on my account. Sure, I can understand a little bit of German but somewhere along the line, your machine learning must have choked on me.

Where is the Unlearn button again?

The Search Industry is at the forefront of the upcoming revolution of work. We work directly on the platforms of some of the biggest AI players on the globe: Google, Facebook, Microsoft, Amazon. And we all feel it is our duty to try the “latest functionality”, to “join a Beta”, to “try new things for our campaigns”. We are more than willing to try machine-learning and AI-driven features and functionalities, and we probably have our activity x-rayed and monitored for results.

And indeed, the Search industry is an excellent place to apply artificial intelligence. We work 100% digitally. We handle structured data in the form of numbers, text and the occasional image. We work in automated or semi-automated systems. We do repetitive tasks. And hey, we love tools, we love new features, we are used to constant change.

One of the prerequisites for applying AI is of course Automation, so how are we doing there?

In Innovell’s research for the “Major trends in Paid Search” report, we found that the leading Paid Search teams in Europe and North America shared one philosophy with regard to Automation: embrace it completely. Two thirds of the respondents stated either “We are building our own automation stack with external and proprietary tools” or the even more extreme “Anything that can be automated, we automate”. In fact, none of the respondents stated that “Most things are best done by Humans”.

And for those who haven’t noticed, there is already plenty of AI and machine learning in our work environment. The entire paid search industry was founded on the AdWords algorithm, which factors in keywords, bids and quality score. Quality score? That is machine learning right there. We have been working with quality score since we built our first campaigns. Machine learning was there before we started, so what is there to be afraid of?
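To make the "keywords, bids and quality score" point concrete, here is a minimal Python sketch of the simplified model commonly used to explain the classic AdWords auction: Ad Rank is the maximum cost-per-click bid multiplied by Quality Score, and each advertiser pays just enough to beat the ad ranked below it. This is an illustration under stated assumptions, not Google's current algorithm, which weighs more signals. The `Ad` class, the $0.01 increment and the $0.01 price for the last slot are hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    advertiser: str
    max_cpc: float      # maximum cost-per-click bid, in dollars
    quality_score: int  # 1-10 (assumed scale for this sketch)


def ad_rank(ad: Ad) -> float:
    """Simplified, historical Ad Rank: bid multiplied by Quality Score."""
    return ad.max_cpc * ad.quality_score


def run_auction(ads: list[Ad]) -> list[tuple[str, float]]:
    """Order ads by Ad Rank (highest first) and estimate each one's cost per click.

    Second-price rule used in this sketch: pay (next ad's rank / own Quality
    Score) + $0.01, never more than the advertiser's own max bid.
    """
    ranked = sorted(ads, key=ad_rank, reverse=True)
    results = []
    for i, ad in enumerate(ranked):
        if i + 1 < len(ranked):
            cpc = ad_rank(ranked[i + 1]) / ad.quality_score + 0.01
        else:
            cpc = 0.01  # hypothetical minimum price for the last slot
        results.append((ad.advertiser, round(min(cpc, ad.max_cpc), 2)))
    return results


ads = [Ad("A", 4.00, 3), Ad("B", 2.00, 10), Ad("C", 3.00, 6)]
print(run_auction(ads))  # [('B', 1.81), ('C', 2.01), ('A', 0.01)]
```

In this example advertiser A bids the most but ranks last, and B wins the top slot while paying less per click than C: the quality signal, not the bid alone, decides placement.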

We also asked the leading Paid Search teams what proportion of their campaigns was managed by AI or machine learning. The average response was around 50% – half of the campaign management is based on AI or machine learning. Are we already half replacing ourselves with Automation and AI? Why would we do that?

Marc Poirier from Acquisio gave a good answer to that question in the research he presented at Hero Conf London, comparing the performance of campaigns with and without machine learning across a large volume of Paid Search accounts. The “adopter” segments saw conversion rates between 39% and 280% better, depending on their vertical: Automotive, Education, Medical, Financial or Retail.

Those who used machine learning performed better.

But once you switch to an AI autopilot on your campaigns, you run into a dilemma: will you Learn or Perform?

As of today, AI solutions generate superior results. But as marketers in a moving market, our added value is to understand, interpret and improve continuously! If we use AI, can we still define the winning strategy? Or do we need to find other ways of adding that value? With AI, we will deliver performance today, yes, but what about tomorrow? We have built our industry by celebrating our successes and learning from our mistakes. It is a deeply rooted principle and one we take pride in. We test and learn and succeed. With AI, there is only one option: perform. And once everyone is on AI, that performance could be commoditized away, and maybe we will not have learned anything?!

And there is the problem of AI: training an AI goes through many thousands of iterations. We end up with the best result, but all the intermediate calculations are wiped out. We don’t know how we got there. We didn’t learn anything from the process…
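The point about intermediate calculations being wiped out shows up even in the smallest training loop. The minimal Python sketch below fits a single weight `w` by gradient descent on mean squared error; the data, the learning rate and the iteration count are illustrative assumptions, not anything from a real ad platform. Each update overwrites the previous value of `w`, so once training ends only the final number survives, not the path that led to it.

```python
def train(xs: list[float], ys: list[float], lr: float = 0.01, iterations: int = 5000) -> float:
    """Fit y ~ w * x by gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(iterations):
        # Gradient of (1/n) * sum((w*x - y)^2) with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Overwrite w: the previous value, and how we got here, is lost
        w -= lr * grad
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x
w = train(xs, ys)
print(round(w, 3))  # 2.0
```

Recording the path would mean explicitly appending each intermediate `w` to a list; by default, only the result is kept.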

Fred Vallaeys, in his keynote at Hero Conf London, illustrated the fear of AI with the story of Garry Kasparov, the world’s best chess player, who was challenged and beaten by IBM’s Deep Blue. The scary bit is what came next. DeepMind’s AlphaGo learned the game of Go partly by studying games played by humans; its successor, AlphaGo Zero, learned only by playing against itself and beat the earlier AlphaGo 100 games to 0. There were no humans in that equation.

The respected economist Schumpeter described the effects of accelerated innovation on an industry as “Creative Destruction”. Something is broken down and something else arises from the ashes. It is a good description of what constantly happens in Digital Marketing.

Sometimes it is a process of disruption, where the creativity comes from new businesses, but sometimes businesses are capable of reinventing themselves, changing business models, changing service offering, changing positioning. I believe that, if there is an industry capable of doing just that, it is the Search industry.

Search Marketers are AI Natives, they were bred with constant change. In a certain manner, the Search Industry is already being disrupted but we only realize this clearly today because of the AI hype of 2018. Other industries are only just starting to face this new challenge but in Search we are better prepared. This doesn’t mean that we have nothing to worry about. If we blindly trust the machines to optimize our campaigns and no longer learn, then we will have lost our added value and commoditized ourselves. I don’t see that happening. Actually, I quite like to watch the way the resilient Search Marketers constantly reinvent themselves, like surfers on the waves of creative destruction.

Get more updates about the speakers and content you’ll find at Hero Conf.

Originally published at https://www.ppchero.com/surfing-the-waves-of-creative-destruction/


Video Game Deep Cuts: We, Happy To Take The Magic Leap

#shogi #JapaneseChess [Gamasutra] We understand what it meant for IBM’s DeepBlue to beat Garry Kasparov in chess, and DeepMind’s AlphaGo beating Lee ... he could pay by the hour to play a variety of consoles." Why Japan's Gaming Bars ...


COSMIQ maker Deepblu launches a booking platform it calls the “Airbnb of diving”

http://bit.ly/2Io4bjL bit.ly/jd0efrb via #IBM #Cloud


Week 10

Google Translate and DeepMind’s AlphaGo are two different examples of AI. The definition of the term “artificial intelligence” has changed significantly over time, especially since IBM’s Deep Blue defeated Garry Kasparov at chess. But reading the two articles reminds me that not only will the definition of AI keep changing, but AI can also take many forms, each with vastly different effects on us.

Google Translate uses neural networks to teach an AI a language from scratch. The cat paper shows how an AI can learn like a child and, over time, develop a network of machine neurons similar to those of the human brain. As a result, Google Translate can produce human-like language and is expected soon to be able to hold a conversation with humans (Google Home has already started to do this).

However, AlphaGo, like other game AIs that DeepMind has developed, stands out for its “randomness.” AlphaGo cannot explain why it makes a certain move, which makes it “not only competent” but also “intimidatingly original.” This kind of AI makes certain “mistakes” that can open doors to new insights, gameplay and strategies that humans have never thought of. Another example of an AI capable of such brilliant “mistakes” is IBM’s Watson, which can draw new insights from famous historical figures by scanning and reading their handwriting and past works.

These two articles make me wonder, once again, what makes humans human. We now have AIs that think the way we do and can even produce better results than we do. In the “Invisible Images" article, the author suggests that in order “to mediate against the optimizations and predations of a machinic landscape, one must create deliberate inefficiencies and spheres of life removed from market and political predations–“safe houses” in the invisible digital sphere.” I want to discuss this further in class. What does creating inefficiency, experimenting or self-expression mean for an artist or designer? Is breaking routines, rules and even laws the only way? Is Polychromatic Music an example of this inefficiency? Or the opposite?

(Chanel Duyen L.H.)
