#Langchain Development service

Top 10 Benefits of Using LangChain Development for AI Projects

In the rapidly evolving world of artificial intelligence, developers and businesses are constantly seeking frameworks that can streamline the integration of Large Language Models (LLMs) into real-world applications. Among the many emerging solutions, LangChain development stands out as a game-changer. It offers a modular, scalable, and intelligent approach to building AI systems that are not only efficient but also contextually aware.

How an LLM Development Company Can Help You Build Smarter AI Solutions

In the fast-paced world of artificial intelligence (AI), Large Language Models (LLMs) are among the most transformative technologies available today. From automating customer interactions to streamlining business operations, LLMs are reshaping how organizations approach digital transformation.

However, developing and implementing LLM-based solutions isn’t a simple task. It requires specialized skills, robust infrastructure, and deep expertise in machine learning and natural language processing (NLP). This is why many businesses are partnering with an experienced LLM development company to unlock the full potential of AI-powered solutions.

What Does an LLM Development Company Do?

An LLM development company specializes in designing, fine-tuning, and deploying LLMs for various applications. These companies help businesses leverage advanced language models to solve complex problems and automate language-driven tasks.

Common Services Offered:

Custom Model Development: Creating tailored LLMs for specific business use cases.

Model Fine-Tuning: Adapting existing LLMs to specific industries and datasets for improved accuracy.

Data Preparation: Cleaning and structuring data for effective model training.

Deployment & Integration: Seamlessly embedding LLMs into enterprise platforms, mobile apps, or cloud environments.

Ongoing Model Maintenance: Monitoring, retraining, and optimizing models to ensure long-term performance.

Consulting & Strategy: Offering guidance on best practices for ethical AI use, compliance, and security.

Why Your Business Needs an LLM Development Company

1. Specialized AI Expertise

LLM development involves deep learning, NLP, and advanced AI techniques. A specialized LLM development company brings together data scientists, engineers, and NLP experts with the knowledge required to build powerful models.

2. Accelerated Development Timelines

Instead of spending months building models from scratch, an LLM development company can fine-tune existing pre-trained models—speeding up the time to deployment while reducing costs.

3. Industry-Specific Solutions

LLMs can be adapted to specific industries, such as healthcare, finance, legal, and retail. By partnering with an LLM development company, you can customize models to understand industry-specific terminology and workflows.

4. Cost-Effective AI Adoption

Building LLMs in-house requires expensive infrastructure and expert teams. Working with an LLM development company provides a cost-effective alternative, offering high-quality solutions without the fixed cost of building and staffing that capability yourself.

5. Scalable and Future-Ready Solutions

LLM development companies design solutions with scalability in mind, ensuring your AI tools can handle growing datasets and business needs over time.

Key Technologies Used in LLM Development

LLM development companies use a combination of technologies and frameworks to build advanced AI solutions:

Python: Widely used for AI and NLP programming.

PyTorch & TensorFlow: Leading deep learning frameworks for model training and optimization.

Hugging Face Transformers: A popular library for working with pre-trained LLMs.

LangChain: Framework for building advanced LLM-powered applications with chaining logic.

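DeepSpeed & LoRA (Low-Rank Adaptation): DeepSpeed speeds up large-scale training and inference, while LoRA fine-tunes a model by training small low-rank adapter matrices instead of updating every weight.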

Cloud Platforms (AWS, Azure, GCP): For scalable cloud-based training and deployment.
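To make the LoRA entry concrete: LoRA keeps a pretrained weight matrix W (shape d×k) frozen and learns a low-rank update B·A, where B is d×r and A is r×k, scaled by alpha/r. The plain-Python sketch below (no ML libraries) compares trainable-parameter counts and applies the update in a forward pass. The dimensions, rank, and alpha are illustrative assumptions, not values from any particular model.

```python
"""Minimal sketch of a LoRA-style low-rank update, in plain Python."""


def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one d x k weight matrix."""
    full = d * k          # updating W directly touches every entry
    lora = r * (d + k)    # B is d x r, A is r x k
    return full, lora


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


def lora_forward(w, a, b, x, alpha: float, r: int) -> list[float]:
    """Compute W x + (alpha / r) * B (A x) without materializing the product B A."""
    base = matvec(w, x)               # frozen pretrained path
    update = matvec(b, matvec(a, x))  # trainable low-rank path
    scale = alpha / r
    return [base_i + scale * upd_i for base_i, upd_i in zip(base, update)]


if __name__ == "__main__":
    full, lora = lora_param_counts(d=4096, k=4096, r=8)
    print(full, lora, full // lora)

    # Tiny 2x3 example. B starts at zero, so the adapted layer initially equals W.
    w = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
    a = [[0.5, 0.5, 0.5]]   # r = 1, shape 1 x 3
    b = [[0.0], [0.0]]      # shape 2 x 1, zero-initialized
    x = [1.0, 2.0, 3.0]
    print(lora_forward(w, a, b, x, alpha=16.0, r=1))
```

For a 4096×4096 matrix at rank 8, the adapter trains 65,536 parameters instead of 16,777,216, which is why LoRA is popular for fine-tuning on limited hardware. Zero-initializing B (as the original LoRA paper does) means training starts from the unchanged pretrained model.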

Industries Leveraging LLM Development Companies

LLM-powered applications are now used across various industries to drive automation and boost productivity:

Healthcare

Automating clinical documentation.

Summarizing medical research.

Assisting with diagnostics.

Finance

Fraud detection and prevention.

Investment analysis and forecasting.

AI-driven customer service solutions.

E-commerce

Personalized product recommendations.

Automated content and product descriptions.

AI-powered virtual shopping assistants.

Legal

Automated contract analysis.

Legal document summarization.

Research assistance tools.

Education

Personalized learning platforms.

AI-powered tutoring tools.

Grading and assessment automation.

How to Choose the Right LLM Development Company

Choosing the right partner is critical for the success of your AI projects. Here are key criteria to consider:

Experience & Track Record: Review their past projects and case studies.

Technical Capabilities: Ensure the company has deep expertise in NLP, machine learning, and LLM fine-tuning.

Customization Flexibility: Look for companies that can tailor models for your specific needs.

Security & Compliance: Check for adherence to data privacy and regulatory standards.

Support & Scalability: Select a partner that offers ongoing maintenance and scalable solutions.

Future of LLM Development Companies

LLM development companies are poised to play an even bigger role as AI adoption accelerates worldwide. Emerging trends include:

Multi-Modal AI Models: Models that can process text, images, audio, and video together.

Edge AI Solutions: Compact LLMs running on local devices, reducing latency and enhancing privacy.

Autonomous AI Agents: LLMs capable of planning, reasoning, and acting independently.

Responsible AI & Transparency: Increased focus on ethical AI, bias reduction, and explainability.

Conclusion

The power of Large Language Models is transforming industries at an unprecedented pace. However, successfully implementing LLM-powered solutions requires specialized skills, advanced tools, and deep domain knowledge.

By partnering with an experienced LLM development company, businesses can rapidly develop AI tools that automate workflows, enhance customer engagement, and unlock new revenue opportunities. Whether your goals involve customer service automation, content generation, or intelligent data analysis, an LLM development company can help bring your AI vision to life.

Agentic AI Course in Bengaluru: What It Is and Why You Should Enroll

In a world where artificial intelligence is reshaping how we work, think, and interact, the next big frontier is Agentic AI—a powerful evolution of traditional AI systems. Bengaluru, India’s innovation capital, is now at the forefront of this transformation with specialized Agentic AI courses designed for both aspiring professionals and experienced techies.

Whether you’re a developer, data scientist, business analyst, or product manager, enrolling in an Agentic AI course in Bengaluru could be your smartest career move in 2025.

In this blog, we’ll break down what Agentic AI is, why it’s different from conventional AI, and how you can benefit by enrolling in a reputable training program in Bengaluru like the one offered by Boston Institute of Analytics (BIA).

What Is Agentic AI?

Agentic AI refers to AI systems that operate with autonomous decision-making capabilities, simulating the behavior of intelligent agents. These systems go beyond simple prompt-response models by executing complex tasks through:

Goal setting

Planning

Action execution

Feedback loops and learning

Think of it as the difference between using ChatGPT for a single response and having an AI assistant that automatically books your travel, analyzes your emails, drafts reports, and learns your preferences over time.
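The goal-setting, planning, action, and feedback cycle described above can be sketched as a simple loop. This is a toy illustration under stated assumptions: the planner is a hard-coded rule standing in for an LLM, and the tools (`search_flights`, `book_flight`) are hypothetical placeholders, not a real travel API.

```python
"""Toy plan-act-observe loop illustrating the agentic pattern (no LLM involved)."""
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AgentState:
    goal: str
    observations: list[str] = field(default_factory=list)
    done: bool = False


# Hypothetical tools; a real agent would call external APIs here.
def search_flights(destination: str) -> str:
    return f"found flight to {destination}"


def book_flight(destination: str) -> str:
    return f"booked flight to {destination}"


TOOLS = {"search_flights": search_flights, "book_flight": book_flight}


def plan_next_step(state: AgentState) -> tuple[str, str] | None:
    """Rule-based planner standing in for an LLM: choose the next tool call."""
    destination = state.goal.removeprefix("travel to ")
    if not state.observations:
        return ("search_flights", destination)
    if state.observations[-1].startswith("found"):
        return ("book_flight", destination)
    return None  # goal reached, nothing left to do


def run_agent(goal: str, max_steps: int = 5) -> AgentState:
    state = AgentState(goal=goal)
    for _ in range(max_steps):             # cap steps so the loop always terminates
        step = plan_next_step(state)        # planning
        if step is None:
            state.done = True
            break
        tool_name, arg = step
        result = TOOLS[tool_name](arg)      # action execution
        state.observations.append(result)   # feedback for the next planning step
    return state


if __name__ == "__main__":
    final = run_agent("travel to Bengaluru")
    print(final.done, final.observations)
```

The step cap matters in real agents too: without it, a planner that never decides it is finished would loop forever.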

Popular applications of Agentic AI include:

Autonomous Sales Agents

AI Customer Support Agents

Self-Improving Marketing Bots

AI-Powered Task Managers and Workflow Optimizers

Agentic AI combines the power of Large Language Models (LLMs) with frameworks like LangChain and Auto-GPT and with patterns such as ReAct (interleaved reasoning and acting), RAG pipelines, and multi-agent orchestration, creating autonomous systems capable of long-term problem-solving and decision-making.

Why Is Agentic AI the Future?

The need for intelligent agents that can reason, plan, and act is becoming essential in industries like:

Tech & SaaS

E-commerce

Healthcare

Finance

HR & Recruitment

Customer Service

With tools like OpenAI’s GPT-4, Google's Gemini, and frameworks like LangChain and MetaGPT making waves, Agentic AI is enabling businesses to automate multi-step processes, reduce human intervention, and accelerate productivity.

By learning Agentic AI, you position yourself at the intersection of AI development, automation, and enterprise transformation—making you highly valuable in today’s competitive job market.

Why Learn Agentic AI in Bengaluru?

As India’s tech powerhouse, Bengaluru offers an ideal ecosystem for learning and implementing cutting-edge AI technologies.

Here's why:

Presence of Top AI Startups & Global MNCs (Google, Amazon, Infosys, Zoho, Freshworks, and more)

Innovation-Driven Culture: Frequent AI hackathons, meetups, and conferences

Skilled Talent Pool: Ideal for peer learning and collaboration

Offline & Hybrid Training Options: Major institutes offer flexible formats for working professionals

What Does an Agentic AI Course in Bengaluru Include?

When choosing a course, you should ensure it includes both theory and practical application. Here’s what a strong Agentic AI course in Bengaluru typically covers:

1. Core Concepts of Agentic AI

What makes an AI agent “agentic”

Difference between LLMs and autonomous agents

Use cases across industries

2. LLM & Frameworks

Working with OpenAI GPT-4, Anthropic Claude, and Google Gemini

Introduction to LangChain, Auto-GPT, MetaGPT, and CrewAI

Prompt engineering and Chain of Thought (CoT) reasoning

3. Multi-Agent Systems

Designing agent networks

Role-based task execution

Coordination between agents for enterprise use cases

4. Hands-on Projects

Build an AI Sales Agent that qualifies leads

Develop a multi-agent task automation bot for HR workflows

Create an autonomous marketing assistant for content generation

5. Tools & APIs

LangChain, Pinecone, Vector Databases

RAG (Retrieval Augmented Generation)

Streamlit, Gradio for front-end deployment

GitHub Copilot and Python SDKs

6. Responsible AI

Addressing ethical concerns

AI safety and human oversight

Deployment best practices

Where to Learn: Boston Institute of Analytics – Bengaluru

If you're looking for a highly practical, industry-recognized Agentic AI course in Bengaluru, the Boston Institute of Analytics (BIA) stands out for its:

Expert Instructors with experience in AI automation and LLMs

Cutting-Edge Curriculum aligned with global AI trends

Real-World Capstone Projects

Offline & Hybrid Options in Bengaluru

Placement Support with leading AI firms

Globally Recognized Certification

BIA's course is tailored for professionals aiming to build production-ready AI agents, not just prototypes or theory-based models.

Who Should Enroll?

This course is ideal for:

Software Developers building AI applications

Data Scientists exploring agent-based models

Product Managers working on AI-driven platforms

Business Analysts automating workflows

Digital Marketers leveraging AI agents for outreach and personalization

Even if you come from a non-technical background, foundational training is provided to help you transition smoothly.

Career Benefits After Completing an Agentic AI Course

By enrolling in a high-quality Agentic AI course in Bengaluru, you can unlock roles like:

Agentic AI Developer

LLM Automation Engineer

AI Solutions Architect

AI Product Manager

AI Workflow Engineer

Salary expectations for such roles in Bengaluru range from ₹10 LPA to ₹35 LPA, depending on your experience and portfolio.

Final Thoughts

Agentic AI isn’t just a buzzword—it’s the future of intelligent, autonomous systems that can reason, plan, and execute tasks with minimal human intervention. And Bengaluru is the perfect place to learn and innovate in this space.

If you’re looking to upskill in 2025, don’t wait for the AI revolution to pass you by. Enroll in an Agentic AI course in Bengaluru to future-proof your career, unlock high-paying roles, and gain a competitive edge.

AI Model Development: How to Build Intelligent Models for Business Success

AI model development is revolutionizing how companies tackle challenges, make informed decisions, and delight customers. If lengthy reports, slow processes, or missed insights are holding you back, you’re in the right place. You’ll learn practical steps to leverage AI models, plus why Ahex Technologies should be your go-to partner.

Read the original article for a more in-depth guide: AI Model Development by Ahex

What Is AI Model Development?

AI model development is the practice of designing, training, and deploying algorithms that learn from your data to automate tasks, make predictions, or uncover hidden insights. By turning raw data into actionable intelligence, you free your team to focus on strategy while machines handle the heavy lifting.

The AI Model Development Process

Define the Problem: Clarify the business goal. Do you need sales forecasts, customer-churn predictions, or automated text analysis?

Gather & Prepare Data: Collect, clean, and structure data from internal systems or public sources. Quality here drives model performance.

Select & Train the Model: Choose an algorithm, such as simple regression for straightforward tasks or neural networks for complex patterns. Split data into training and testing sets so the model is evaluated on examples it has not seen.

Test & Validate: Measure accuracy, precision, recall, or other KPIs. Tune hyperparameters on a validation set until results are reliable, keeping the test set for the final check.

Deploy & Monitor: Integrate the model into your workflows. Continuously track performance and retrain as data evolves.
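The split and validation steps can be illustrated with a small, dependency-free sketch: shuffle labeled examples with a fixed seed, hold out a test set, and score predictions with accuracy, precision, and recall. The "model" here is a hypothetical threshold rule used only to have something to evaluate; in practice it would be a trained regression or neural network, and the churn-style data is made up.

```python
"""Hold-out split plus accuracy, precision, and recall for a binary classifier."""
import random


def train_test_split(rows, test_fraction=0.25, seed=42):
    """Shuffle a copy of rows deterministically and split it into (train, test)."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, and recall for labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    accuracy = correct / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


def threshold_model(x, threshold=0.5):
    """Hypothetical stand-in for a trained model: predict 1 when x exceeds threshold."""
    return 1 if x > threshold else 0


if __name__ == "__main__":
    # (feature, label) pairs, e.g. a churn-risk score and whether the customer churned
    data = [(0.1, 0), (0.4, 0), (0.35, 1), (0.8, 1), (0.9, 1), (0.6, 0), (0.2, 0), (0.7, 1)]
    train, test = train_test_split(data)
    # A real project would fit the model on `train` only; the fixed threshold skips that.
    y_true = [label for _, label in test]
    y_pred = [threshold_model(x) for x, _ in test]
    print(len(train), len(test), classification_metrics(y_true, y_pred))
```

Precision answers "of the customers we flagged, how many actually churned?", while recall answers "of the customers who churned, how many did we flag?". Which one to optimize depends on the business cost of each kind of mistake.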

AI Model Development in 2025

Custom AI models are no longer optional; they’re essential. Off-the-shelf solutions often can’t match bespoke systems trained on your own data. In 2025, businesses that leverage tailored AI enjoy faster decision-making, sharper insights, and increased competitiveness.

Why Businesses Need Custom AI Model Development

Precision & Relevance: Models built on your data yield more accurate, context-specific insights.

Data Security: Owning your models means full control over sensitive information — crucial in finance, healthcare, and beyond.

Scalability: As your business grows, your AI grows with you. Update and retrain instead of starting from scratch.

How to Create an AI Model from Scratch

Define the Problem

Gather & Clean Data

Choose an Algorithm (e.g., regression, classification, deep learning)

Train & Validate on split datasets

Deploy & Monitor in production

Break each step into weekly sprints, and you’ll have a minimum viable model in just a few weeks.
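For the "choose an algorithm, then train and validate" steps, here is a from-scratch simple linear regression (one feature, ordinary least squares) fit on a training split and scored on a held-out split with mean absolute error. It is a minimal sketch; the synthetic ad-spend data and the 80/20 split are assumptions for illustration.

```python
"""From-scratch simple linear regression: fit y = slope * x + intercept by least squares."""


def fit_linear(xs, ys):
    """Closed-form ordinary least squares for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def mean_absolute_error(y_true, y_pred):
    """Average absolute gap between actual and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    # Synthetic data: monthly ad spend (x) vs. sales (y), roughly y = 3x + 10
    xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    ys = [13.2, 15.8, 19.1, 22.0, 24.9, 28.1, 31.0, 33.8, 37.2, 39.9]
    split = int(len(xs) * 0.8)  # first 80% for training, last 20% for validation
    slope, intercept = fit_linear(xs[:split], ys[:split])
    preds = [slope * x + intercept for x in xs[split:]]
    print(round(slope, 2), round(intercept, 2), round(mean_absolute_error(ys[split:], preds), 2))
```

Scoring on the held-out points rather than the training points is what makes the error estimate meaningful; a model can fit its own training data well and still predict new months poorly.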

How to Make an AI Model That Delivers Results

Set Clear Objectives: Tie every metric to a business outcome such as revenue growth, cost savings, or customer retention.

Invest in Data Quality: The “garbage in, garbage out” rule is real. High-quality data yields high-quality insights.

Choose Explainable Models: Transparency builds trust with stakeholders and meets regulatory requirements.

Stress-Test in Real Scenarios: Validate your model against edge cases to catch blind spots.

Maintain & Retrain: Commit to ongoing model governance to adapt to new trends and data.
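"Maintain & Retrain" usually starts with detecting when live data no longer looks like the training data. One common heuristic is the Population Stability Index (PSI), computed over shared bins as the sum of (actual - expected) × ln(actual / expected). The sketch below assumes equal-width bins, values that lie within the outer bin edges, and an often-quoted rule of thumb that PSI above roughly 0.25 signals significant drift; treat the binning and the threshold as tunable assumptions.

```python
"""Population Stability Index (PSI) as a simple data-drift signal."""
import math


def bin_fractions(values, edges):
    """Fraction of values in each bin; assumes every value lies within [edges[0], edges[-1]]."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            last = i == len(edges) - 2
            # bins are half-open [lo, hi), except the last bin also includes its right edge
            if edges[i] <= v < edges[i + 1] or (last and v == edges[i + 1]):
                counts[i] += 1
                break
    return [c / len(values) for c in counts]


def psi(expected_values, actual_values, edges, eps=1e-6):
    """Sum of (actual - expected) * ln(actual / expected) across bins."""
    expected = bin_fractions(expected_values, edges)
    actual = bin_fractions(actual_values, edges)
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) when a bin is empty
        score += (a - e) * math.log(a / e)
    return score


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    training = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
    live = [0.6, 0.7, 0.8, 0.85, 0.9, 0.95, 0.65, 0.7]
    score = psi(training, live, edges)
    print(f"PSI = {score:.3f}", "-> consider retraining" if score > 0.25 else "-> stable")
```

A check like this can run on a schedule against each important input feature, turning "retrain as data evolves" into a concrete trigger instead of a calendar guess.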

Top Tools & Frameworks to Build AI Models That Work

PyTorch: Flexible dynamic graphs for rapid prototyping.

Keras (TensorFlow): User-friendly API with strong community support.

LangChain: Orchestrates large language models for complex applications.

Vertex AI: Google’s end-to-end platform with AutoML.

Amazon SageMaker: AWS-managed service covering development to deployment.

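Langflow & AutoGen: Langflow is a low-code visual builder for LLM workflows, and AutoGen is a framework for building multi-agent applications; both accelerate AI workflow development.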

Breaking Down AI Model Development Challenges

Data Quality & Availability: Address gaps early to avoid costly rework.

Transparency (“Black Box” Issues): Use interpretable models or explainability tools.

High Costs & Skills Gaps: Leverage a specialized partner to access expertise and control budgets.

Integration & Scaling: Plan for seamless API-based deployment into your existing systems.

Security & Compliance: Ensure strict protocols to protect sensitive data.

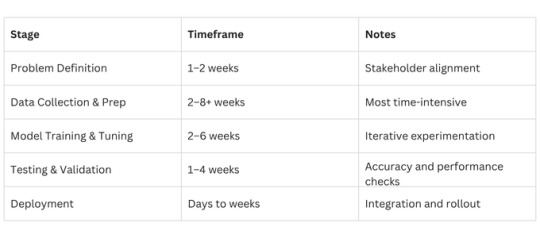

Typical AI Model Timelines

For simple pilots, expect 1–2 months; complex enterprise AI can take 4+ months.

Cost Factors for AI Development

Why Ahex Technologies Is the Best Choice for Mobile App Development

Holistic AI Expertise: Our AI solutions integrate seamlessly with mobile platforms you already use.

Client-First Approach: We tailor every model to your unique workflow and customer journey.

End-to-End Support: From concept to deployment and beyond, we ensure your AI and mobile efforts succeed in lockstep.

Proven Track Record: Dozens of businesses trust us to deliver secure, scalable, and compliant AI solutions.

How Ahex Technologies Can Help You Build Smarter AI Models

At Ahex Technologies, our AI Development Services cover everything from proof-of-concept to full production rollouts. We:

Diagnose your challenges through strategic workshops

Design custom AI roadmaps aligned to your goals

Develop robust, explainable models

Deploy & Manage your AI in the cloud or on-premises

Monitor & Optimize continuously for peak performance

Learn more about our approach: AI Development Services. Ready to get started? Contact us.

Final Thoughts: Choosing the Right AI Partner

Selecting a partner who understands both the technology and your business is critical. Look for:

Proven domain expertise

Transparent communication

Robust security practices

Commitment to ongoing optimization

With the right partner, like Ahex Technologies, you’ll transform data into a competitive advantage.

FAQs on AI Model Development

1. What is AI model development? Designing, training, and deploying algorithms that learn from data to automate tasks and make predictions.

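2. What are the 4 types of AI models? A commonly cited classification groups AI systems into reactive machines, limited memory, theory of mind, and self-aware AI, ranging from simple to advanced cognitive abilities. Only the first two exist today; the last two remain theoretical.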

3. What is the AI development life cycle? Problem definition → Data prep → Model building → Testing → Deployment → Monitoring.

4. How much does AI model development cost? Typically $10,000–$500,000+, depending on project complexity, data needs, and integration requirements.

Ready to turn your data into growth? Explore AI Model Development by Ahex and our AI Development Services. Let’s talk!

Exploring the Power of LangChain in Machine Learning Applications

LangChain is rapidly becoming a transformative tool in the field of artificial intelligence. Combining the strengths of language models with external tools and environments, LangChain offers dynamic capabilities for developing intelligent, responsive, and contextual applications. This blog will dive deep into how LangChain machine learning is revolutionizing the AI landscape and why professionals and businesses should pay attention to this innovation.

What is LangChain?

LangChain is an open-source framework designed to simplify the development of applications powered by large language models (LLMs). It connects LLMs with various data sources, APIs, and user inputs, making it easier to build advanced, end-to-end LLM applications. Where traditional machine learning projects often maintain separate pipelines for data preprocessing, model training, and deployment, LangChain works at the application layer: it orchestrates calls to existing, already-trained models rather than training them, bringing prompting, retrieval, and tool use together in one environment.
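The core idea of a "chain" is composition: format a prompt, call a model, parse the output, and pass each result to the next step. The sketch below shows that pattern in plain Python. It does not use LangChain's actual API; `fake_llm` is a hypothetical stand-in for a real model call so the example runs offline.

```python
"""Plain-Python illustration of the chain pattern (prompt -> model -> parser)."""
from functools import reduce
from typing import Any, Callable

Step = Callable[[Any], Any]


def chain(*steps: Step) -> Step:
    """Compose steps left to right: the output of one becomes the input of the next."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def prompt_template(inputs: dict) -> str:
    return f"Summarize in one word the sentiment of: {inputs['review']}"


def fake_llm(prompt: str) -> str:
    """Hypothetical model stand-in; a real chain would call an LLM provider here."""
    return " Positive \n" if "great" in prompt.lower() else " Negative \n"


def parse_label(raw: str) -> str:
    """Normalize the raw model text into a clean label."""
    return raw.strip().lower()


sentiment_chain = chain(prompt_template, fake_llm, parse_label)

if __name__ == "__main__":
    print(sentiment_chain({"review": "The onboarding was great."}))
```

Because each step is just a function, swapping `fake_llm` for a real model call or adding a retrieval step does not change the rest of the pipeline. LangChain's own expression language composes similar steps with the `|` operator; check the current LangChain documentation for the exact classes.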

LangChain machine learning is particularly beneficial in scenarios requiring contextual understanding, multi-step reasoning, and interactivity with external systems. For example, it can be used in chatbots that fetch real-time data, personal AI assistants, knowledge management tools, and even intelligent document analysis.

Key Features of LangChain Machine Learning

Tool and API Integration: LangChain allows seamless communication between language models and external APIs or tools. This means your AI models can take action based on real-world data, creating applications that are both smart and practical.

Modular Architecture: LangChain supports modular development, meaning different machine learning components such as retrievers, chains, memory, agents, and prompts can be customized. This modularity is a major reason for its rising popularity.

Memory Management: Language models are stateless between calls, but LangChain provides memory components that store past interactions and feed them back into later prompts. This enables more natural, continuous conversations, which is vital in AI customer service and personal assistant applications.

Prompt Engineering Support: LangChain makes it easier to manage and optimize prompts, a key feature when designing language-based machine learning systems.

Multi-Modal Inputs: LangChain machine learning applications are not limited to text. They can handle inputs from multiple sources—text, audio, structured data—making them powerful and adaptable.
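On memory specifically: because models keep no state between calls, "memory" in frameworks like LangChain generally means storing prior turns and re-inserting them into the next prompt. A minimal buffer that keeps only the last few exchanges might look like the following; the `WindowMemory` class and its window size are illustrative assumptions, not LangChain's own classes.

```python
"""Windowed conversation memory: keep recent turns and render them into a prompt."""
from collections import deque


class WindowMemory:
    """Hypothetical helper that retains only the last `max_turns` exchanges."""

    def __init__(self, max_turns: int = 3):
        self.turns = deque(maxlen=max_turns)  # oldest turns are evicted automatically

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def render(self, new_message: str) -> str:
        """Build the next prompt from stored turns plus the new user message."""
        lines = [f"User: {u}\nAssistant: {a}" for u, a in self.turns]
        lines.append(f"User: {new_message}\nAssistant:")
        return "\n".join(lines)


if __name__ == "__main__":
    memory = WindowMemory(max_turns=2)
    memory.save("Hi, I'm Priya.", "Hello Priya!")
    memory.save("I need a refund.", "Sure, what's the order number?")
    memory.save("It's 1234.", "Thanks, processing now.")
    print(memory.render("What's my name?"))
```

With `max_turns=2`, the greeting that contained the user's name has already been evicted, which shows the trade-off: a small window keeps prompts short and cheap but forgets early context.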

Applications of LangChain in Machine Learning

LangChain is being widely adopted across various industries. In healthcare, it can assist doctors by analyzing medical records and offering decision support. In finance, it can power smart advisors that analyze market trends and provide investment strategies. Educational platforms use LangChain to develop intelligent tutors that adapt to students' learning styles. The combination of machine learning algorithms and LangChain's language understanding capabilities makes these use-cases incredibly efficient and valuable.

Agentic AI: The Next Leap in Artificial Intelligence

How Agentic AI is transforming the future of automation and decision-making — and what that means for businesses today.

What is Agentic AI?

Agentic AI refers to a new class of artificial intelligence systems designed to act autonomously with goal-directed behavior. Unlike traditional AI models that passively respond to input (e.g., GPTs answering prompts), agentic systems proactively plan, decide, and act — similar to human agents. They have the capacity to:

Set and prioritize goals

Execute tasks with minimal human intervention

Learn from the environment and adapt strategies over time

Collaborate with other agents or systems

Why Is Agentic AI So Important Now?

Several converging trends have made Agentic AI not only possible but urgently necessary:

Explosion in automation demand across industries

Data-rich environments suitable for decision-making agents

Advances in reinforcement learning, LLM reasoning, and multi-modal AI

Developer access to agent frameworks like AutoGPT, LangChain, and CrewAI

Key Capabilities of Agentic AI:

Proactivity: Takes initiative rather than waiting for prompts

Goal orientation: Operates based on long-term objectives

Memory and learning: Retains context across actions and updates its own knowledge

Autonomy: Functions with minimal supervision

Use Cases: Where Agentic AI Makes a Real Difference

🔧 Software Development

Agentic AI can generate, debug, and test code autonomously, freeing up engineers for strategic tasks.

📊 Business Intelligence

These agents can synthesize insights from vast data lakes, providing real-time recommendations.

🤖 Robotics

From warehouse automation to autonomous drones, agentic logic enhances decision-making and adaptability.

🧑‍💼 Virtual Employees

The Challenges We Must Solve

While promising, Agentic AI is not without its challenges:

Safety & alignment: How do we ensure these agents align with human goals?

Over-optimization: Without constraints, agents might pursue unintended outcomes.

Explainability: Their decision-making logic needs transparency for high-stakes use.

Infrastructure: Orchestrating multiple agents with memory and coordination is complex.

According to OpenAI and other leading labs, the path forward includes building scalable oversight mechanisms, integrating human-in-the-loop designs, and ensuring interpretability at every layer.
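One way to read "human-in-the-loop designs" in practice is an approval gate: the agent may propose any action, but actions above a risk threshold wait for a human decision, and every decision is logged for auditing. The risk scores, the threshold, and the `approve` callback below are hypothetical; a real system would route approvals to a reviewer interface or ticket queue.

```python
"""Approval gate with an audit trail for agent-proposed actions."""
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    risk: float  # 0.0 (harmless) to 1.0 (high impact); how risk is scored is an assumption


def gate_actions(actions: list[Action], approve: Callable[[Action], bool],
                 risk_threshold: float = 0.5) -> list[tuple[str, str]]:
    """Decide how each proposed action is handled and return an audit log of decisions."""
    audit_log = []
    for action in actions:
        # A real system would invoke the tool at the points marked "executed".
        if action.risk < risk_threshold:
            audit_log.append((action.name, "auto-executed"))
        elif approve(action):
            audit_log.append((action.name, "executed after approval"))
        else:
            audit_log.append((action.name, "blocked by reviewer"))
    return audit_log


if __name__ == "__main__":
    proposed = [Action("draft_email", 0.1), Action("issue_refund", 0.8), Action("delete_account", 0.95)]

    def reviewer(action: Action) -> bool:
        """Simulated reviewer: approves refunds, rejects anything else that reaches them."""
        return action.name == "issue_refund"

    for entry in gate_actions(proposed, reviewer):
        print(entry)
```

The audit log also supports the explainability concern above: every high-impact decision has a recorded reason and outcome.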

How Kaopiz Engages with Agentic AI

Kaopiz has been actively researching and building applications using agentic AI patterns to address real-world business problems. Whether it’s streamlining enterprise workflows or developing smart assistants for customer service, the company brings its software engineering excellence to the frontier of intelligent systems.

👉 Learn more about Kaopiz’s intelligent automation solutions and how they help businesses integrate emerging tech trends naturally into operations.

Best Practices for Businesses Exploring Agentic AI

Start with narrow use cases: Begin with clearly scoped, automatable workflows.

Choose the right frameworks: Explore open-source libraries like LangChain, AutoGen, or Kaopiz’s proprietary modules.

Prioritize safety and evaluation: Ensure your agents are explainable and auditable.

Human + AI synergy: Use agentic AI to augment, not replace, human decision-making.

Stay updated on research: Trends evolve quickly — monitor developments from trusted sources like Stanford HAI or DeepMind.

Final Thoughts

Agentic AI is not just another buzzword — it’s a pivotal advancement that’s reshaping what machines can do independently. Businesses that strategically invest in agent-based systems today will likely lead tomorrow’s AI-native economy.

If you’re seeking a trusted technology partner to explore these advancements, Kaopiz offers the technical expertise and innovation mindset needed to unlock the true value of Agentic AI.

Multimodal AI Pipelines: Building Scalable, Agentic, and Generative Systems for the Enterprise

Introduction

Today’s most advanced AI systems must interpret and integrate diverse data types—text, images, audio, and video—to deliver context-aware, intelligent responses. Multimodal AI, once an academic pursuit, is now a cornerstone of enterprise-scale AI pipelines, enabling businesses to deploy autonomous, agentic, and generative AI at unprecedented scale. As organizations seek to harness these capabilities, they face a complex landscape of technical, operational, and ethical challenges. This article distills the latest research, real-world case studies, and practical insights to guide AI practitioners, software architects, and technology leaders in building and scaling robust, multimodal AI pipelines.

For those interested in developing skills in this area, an Agentic AI course can provide foundational knowledge on autonomous decision-making systems. Additionally, Generative AI training is crucial for understanding how to create new content with AI models. Building agentic RAG systems step-by-step requires a deep understanding of both agentic and generative AI principles.

The Evolution of Agentic and Generative AI in Software Engineering

Over the past decade, AI in software engineering has evolved from rule-based, single-modality systems to sophisticated, multimodal architectures. Early AI applications focused narrowly on tasks like text classification or image recognition. The advent of deep learning and transformer architectures unlocked new possibilities, but it was the emergence of agentic and generative AI that truly redefined the field.

Agentic AI refers to systems capable of autonomous decision-making and action. These systems can reason, plan, and interact dynamically with users and environments. Generative AI, exemplified by models like GPT-4, Gemini, and Llama, goes beyond prediction to create new content, answer complex queries, and simulate human-like interaction. A comprehensive Agentic AI course can help developers understand how to design and implement these systems effectively.

The integration of multimodal capabilities—processing text, images, and audio simultaneously—has amplified the potential of these systems. Applications now range from intelligent assistants and content creation tools to autonomous agents that navigate complex, real-world scenarios. Generative AI training is essential for developing models that can generate new content across different modalities. To build agentic RAG systems step-by-step, developers must master the integration of retrieval and generation capabilities, ensuring that systems can both retrieve relevant information and generate coherent responses.
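The retrieve-then-generate idea behind RAG can be sketched in a few lines: score stored passages against the query, keep the top matches, and place them in the prompt handed to the generator. This toy version uses bag-of-words cosine similarity in place of learned embeddings and stops at prompt assembly; the passages and prompt wording are made up for illustration.

```python
"""Toy retrieval step for RAG: bag-of-words cosine similarity plus prompt assembly."""
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Lowercase word counts; a real system would use an embedding model instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query."""
    q = vectorize(query)
    ranked = sorted(passages, key=lambda p: cosine(q, vectorize(p)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the generator by placing retrieved passages ahead of the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Refunds are processed within 5 business days.",
        "Our office is closed on public holidays.",
        "Refunds for digital items can take up to 10 days.",
    ]
    question = "How long do refunds take?"
    print(build_prompt(question, retrieve(question, docs)))
```

In a production pipeline, `vectorize` would be replaced by an embedding model and the sorted list by a vector database query, but the retrieve, then assemble, then generate flow stays the same.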

Key Frameworks, Tools, and Deployment Strategies

The rapid evolution of multimodal AI has been accompanied by a proliferation of frameworks and tools designed to streamline development and deployment:

LLM Orchestration: Modern AI pipelines increasingly rely on the orchestration of multiple large language models (LLMs) and specialized models (e.g., vision transformers, audio encoders). Tools like LangChain, LlamaIndex, and Hugging Face Transformers enable seamless integration and chaining of models, allowing developers to build complex, multimodal workflows with relative ease. This process is fundamental in Generative AI training, as it allows for the creation of diverse and complex AI models.

Autonomous Agents: Frameworks such as AutoGPT and BabyAGI provide blueprints for creating agentic systems that can autonomously plan, execute, and adapt based on multimodal inputs. These agents are increasingly deployed in customer service, content moderation, and decision support roles. An Agentic AI course would cover the design principles of such autonomous systems.

MLOps for Generative Models: Operationalizing generative and multimodal AI requires robust MLOps practices. Platforms like Galileo AI offer advanced monitoring, evaluation, and debugging capabilities for multimodal pipelines, ensuring reliability and performance at scale. This is crucial for maintaining the integrity of agentic RAG systems.

Multimodal Processing Pipelines: The typical pipeline for multimodal AI involves data collection, preprocessing, feature extraction, fusion, model training, and evaluation. Each step presents unique challenges, from ensuring data quality and alignment across modalities to managing the computational demands of large-scale training. Generative AI training focuses on optimizing these pipelines for content generation tasks.

Vector Database Management: Emerging tools like DataVolo and Milvus provide scalable, secure, and high-performance solutions for managing unstructured data and embeddings, which are critical for efficient retrieval and processing in multimodal systems. This is essential for building agentic RAG systems step-by-step, as it enables efficient data management.

Software Engineering Best Practices for Multimodal AI

Building and scaling multimodal AI pipelines demands more than cutting-edge models—it requires a holistic approach to system design and deployment. Key software engineering best practices include:

Version Control and Reproducibility: Every component of the AI pipeline should be versioned and reproducible, enabling effective debugging, auditing, and compliance. This is particularly important when integrating agentic AI and generative AI components.

Automated Testing: Comprehensive test suites for data validation, model behavior, and integration points help catch issues early and reduce deployment risks. Generative AI training emphasizes the importance of testing generated content for coherence and relevance.

Security and Compliance: Protecting sensitive data, especially in multimodal systems that process images or audio, requires robust encryption, access controls, and compliance with regulations such as GDPR and HIPAA. When building agentic RAG systems step by step, these safeguards belong in the design from the start rather than being added at the end.

Documentation and Knowledge Sharing: Clear, up-to-date documentation and collaborative tools (e.g., Confluence, Notion) enable cross-functional teams to work efficiently and maintain system integrity over time. Documentation matters more as AI systems grow more complex, which is why a good Agentic AI course would emphasize it.

Advanced Tactics for Scalable, Reliable AI Systems

Scaling autonomous, multimodal AI pipelines requires advanced tactics and innovative approaches:

Modular Architecture: Designing systems with modular, interchangeable components allows teams to update or replace individual models without disrupting the entire pipeline. This is especially critical for multimodal systems, where new modalities or improved models may be introduced over time. Modularity likewise makes generative models easier to update and scale.
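One way to get interchangeable components in Python is to code against an interface rather than a concrete model. In this sketch, `typing.Protocol` defines the contract, and the two toy text encoders are hypothetical stand-ins for real models; the point is that `swap` replaces one component without touching the rest of the pipeline.

```python
from typing import Dict, List, Protocol


class Encoder(Protocol):
    """Contract every modality encoder must satisfy to be pluggable."""
    def encode(self, raw: str) -> List[float]: ...


class CharStatsEncoder:
    """Toy text encoder: character count and vowel count."""
    def encode(self, raw: str) -> List[float]:
        return [float(len(raw)), float(sum(ch in "aeiou" for ch in raw.lower()))]


class WordStatsEncoder:
    """Drop-in replacement with a different recipe: word count and distinct words."""
    def encode(self, raw: str) -> List[float]:
        words = raw.lower().split()
        return [float(len(words)), float(len(set(words)))]


class Pipeline:
    def __init__(self, encoders: Dict[str, Encoder]) -> None:
        self.encoders = dict(encoders)

    def swap(self, modality: str, encoder: Encoder) -> None:
        """Replace the encoder for one modality; all other components stay as they are."""
        self.encoders[modality] = encoder

    def run(self, inputs: Dict[str, str]) -> Dict[str, List[float]]:
        return {modality: self.encoders[modality].encode(raw) for modality, raw in inputs.items()}


pipeline = Pipeline({"text": CharStatsEncoder()})
print(pipeline.run({"text": "Hello world"}))
pipeline.swap("text", WordStatsEncoder())
print(pipeline.run({"text": "Hello world"}))
```

Because `Pipeline` only relies on the `encode` method, a real image or audio encoder could be registered under its own key in the same way.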

Feature Fusion Strategies: Effectively integrating features from different modalities is a key challenge. Techniques such as early fusion (combining raw inputs or low-level features before modeling), late fusion (combining the outputs of separate per-modality models), and cross-modal attention mechanisms are used to improve performance and robustness. Mastering these fusion strategies is part of building agentic RAG systems that work across modalities.
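The three fusion strategies can be contrasted in a few lines of plain Python. This is a sketch on toy vectors, assuming precomputed features and scores; the equal late-fusion weight and the single-query attention are illustrative choices rather than tuned values, and production systems would use a tensor library.

```python
import math
from typing import List


def early_fusion(text_features: List[float], image_features: List[float]) -> List[float]:
    """Early fusion: concatenate per-modality features so one downstream model sees both."""
    return text_features + image_features


def late_fusion(text_score: float, image_score: float, text_weight: float = 0.5) -> float:
    """Late fusion: separate models score each modality; only their outputs are combined."""
    return text_weight * text_score + (1.0 - text_weight) * image_score


def cross_modal_attention(query: List[float], keys: List[List[float]],
                          values: List[List[float]]) -> List[float]:
    """Scaled dot-product attention: a text query attends over image-patch keys and values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(scores)  # subtract the max before exp() for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    # The output is the attention-weighted average of the value vectors.
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


print(early_fusion([0.2, 0.7], [0.9]))
print(late_fusion(0.8, 0.4))
print(cross_modal_attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]))
```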

Transfer Learning and Pretraining: Leveraging pretrained models (e.g., CLIP for vision-language tasks, ViT for image processing) accelerates development and improves generalization across modalities. Starting from pretrained weights is also common practice in generative AI training.

Scalable Infrastructure: Deploying multimodal AI at scale requires robust infrastructure, including distributed training frameworks (e.g., PyTorch Lightning, TensorFlow's tf.distribute) and efficient inference engines (e.g., ONNX Runtime, Triton Inference Server). A good Agentic AI course would cover how to design such infrastructure for autonomous systems.

Continuous Monitoring and Feedback Loops: Real-time monitoring of model performance, data drift, and user feedback is essential for maintaining reliability and iterating quickly. Agentic RAG systems in particular depend on this loop for continuous improvement, since their data sources and usage patterns keep changing.
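Data drift is one of the easier monitoring signals to compute. The sketch below uses the population stability index (PSI), one common drift statistic among several; the bin edges, the sample values, and the 0.2 alert threshold are conventional rules of thumb assumed here, not settings from any particular platform.

```python
import math
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each [edges[i], edges[i+1]) bin; the last bin includes its upper edge.
    Values outside the edges are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            in_last = i == len(counts) - 1 and v == edges[-1]
            if edges[i] <= v < edges[i + 1] or in_last:
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [c / total for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float], edges: Sequence[float],
        eps: float = 1e-6) -> float:
    """Population stability index: sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    # eps keeps empty bins from producing log(0) or a division by zero.
    return sum((a - e) * math.log((a + eps) / (e + eps)) for a, e in zip(actual, expected))


edges = [0.0, 1.0, 2.0, 3.0]
baseline = [0.5, 1.5, 2.5, 0.7, 1.2, 2.9]   # hypothetical feature values at training time
current = [2.5, 2.6, 2.8, 2.9, 2.2, 1.9]    # hypothetical values seen in production
score = psi(baseline, current, edges)
print(f"PSI = {score:.2f}", "-> investigate drift" if score > 0.2 else "-> stable")
```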

Ethical and Regulatory Considerations

As multimodal AI systems become more pervasive, ethical and regulatory considerations grow in importance:

Bias Mitigation: Ensuring that models are trained on diverse, representative datasets and regularly audited for bias. This matters especially for generative models, since biased models can generate inappropriate content.

Privacy and Data Protection: Implementing robust data governance practices to protect user privacy and comply with global regulations. An Agentic AI course would emphasize the importance of ethical considerations in AI system design.

Transparency and Explainability: Providing clear explanations of model decisions and maintaining audit trails for accountability. For agentic RAG systems, this means being able to show which sources and steps led to a decision, which is essential for trust.

Cross-Functional Collaboration for AI Success

Building and scaling multimodal AI pipelines is inherently interdisciplinary. It requires close collaboration between data scientists, software engineers, product managers, and business stakeholders. Key aspects of successful collaboration include:

Shared Goals and Metrics: Aligning on business objectives and key performance indicators (KPIs) ensures that technical decisions are driven by real-world value. This kind of collaboration is what keeps generative AI systems aligned with business needs.

Agile Development Practices: Regular standups, sprint planning, and retrospective meetings foster transparency and rapid iteration. An Agentic AI course would cover agile methodologies for developing complex AI systems.

Domain Expertise Integration: Involving domain experts ensures that models are contextually relevant and ethically sound. This is crucial when building agentic RAG systems, which must be relevant and effective within a specific domain.

Feedback Loops: Establishing channels for continuous feedback from end-users and stakeholders helps teams identify issues early and prioritize improvements. For generative systems, this feedback also guides refinement of the generated content.

Measuring Success: Analytics and Monitoring

The true measure of an AI pipeline’s success lies in its ability to deliver consistent, high-quality results at scale. Key metrics and practices include:

Model Performance Metrics: Accuracy, precision, recall, and F1 score for classification tasks; BLEU, ROUGE, or METEOR for generative tasks such as translation and summarization. Generative models are commonly evaluated against metrics like these during training and fine-tuning.
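For the classification metrics, the definitions are short enough to write out directly. This sketch assumes binary labels with `1` as the positive class and uses made-up predictions; in practice a library such as scikit-learn provides the same metrics, and generative metrics (BLEU, ROUGE, METEOR) are best taken from an established implementation rather than hand-rolled.

```python
from typing import Sequence, Tuple


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int],
                        positive: int = 1) -> Tuple[float, float, float]:
    """Binary precision, recall, and F1, returning 0.0 wherever a ratio is undefined."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # correctly flagged
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


print(precision_recall_f1([1, 0, 1, 1, 0], [1, 1, 0, 1, 0]))
```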

Operational Metrics: Latency, throughput, and resource utilization are critical for ensuring that systems can handle production workloads. An Agentic AI course would cover the importance of monitoring operational metrics for autonomous systems.
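Latency is usually reported as percentiles rather than an average, because a few slow requests can hide behind a healthy mean. This sketch uses the nearest-rank percentile definition and hypothetical sample latencies; monitoring stacks typically compute these from histograms instead.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms: Sequence[float], window_seconds: float) -> Dict[str, float]:
    """Latency percentiles plus throughput for the requests observed in one time window."""
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "throughput_rps": len(latencies_ms) / window_seconds,
    }


# Hypothetical latencies (ms) for 10 requests observed over 5 seconds.
print(summarize([120, 80, 95, 300, 110, 90, 105, 100, 85, 1000], window_seconds=5))
```

Here the single 1,000 ms request dominates p95 while leaving p50 untouched, which is exactly the kind of tail behavior an average would hide.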

User Experience Metrics: User satisfaction, engagement, and task completion rates provide insights into the real-world impact of AI deployments. Building agentic RAG systems step-by-step involves monitoring user experience metrics to ensure that systems meet user needs.

Monitoring and Alerting: Real-time dashboards and automated alerts help teams detect and respond to issues promptly, minimizing downtime and maintaining trust. This is crucial for generative AI systems in production, where continuous monitoring keeps them reliable and efficient.

Case Study: Meta’s Multimodal AI Journey

Meta’s recent launch of the Llama 4 family, including the natively multimodal Llama 4 Scout and Llama 4 Maverick models, offers a compelling case study in the evolution and deployment of agentic, generative AI at scale. This case study highlights the importance of Generative AI training in developing models that can process and generate content across multiple modalities.

Background and Motivation

Meta recognized early on that the future of AI lies in the seamless integration of multiple modalities. Traditional LLMs, while powerful, were limited by their focus on text. To deliver more immersive, context-aware experiences, Meta set out to build models that could process and reason across text and images. Building agentic RAG systems calls for a similar integrative approach, combining retrieval and generation capabilities into one robust system.

Technical Challenges

The development of the Llama 4 models presented several technical hurdles:

Data Alignment: Ensuring that data from different modalities (e.g., text captions and corresponding images) were accurately aligned during training. This challenge is common in Generative AI training, where data quality is crucial for model performance.

Computational Complexity: Training multimodal models at scale required significant computational resources and innovative optimization techniques. An Agentic AI course would cover strategies for managing computational complexity in autonomous systems.

Pipeline Orchestration: Integrating multiple specialized models (e.g., vision transformers, audio encoders) into a cohesive pipeline demanded robust software engineering practices. This is essential for building agentic RAG systems step-by-step, ensuring that systems are scalable and efficient.

Actionable Tips and Lessons Learned

Based on the experiences of Meta and other leading organizations, here are practical tips and lessons for AI teams embarking on the journey to scale multimodal, autonomous AI pipelines:

Start with a Clear Use Case: Identify a specific business problem that can benefit from multimodal AI, and focus on delivering value early. Generative AI training emphasizes the importance of clear use cases for AI development.

Invest in Data Quality: High-quality, well-aligned data is the foundation of successful multimodal systems. Invest in robust data collection, cleaning, and annotation processes. An Agentic AI course would highlight the importance of data quality for autonomous systems.

Embrace Modularity: Design systems with modular, interchangeable components to facilitate updates and scalability. This is crucial for building agentic RAG systems step-by-step, allowing for easy updates and maintenance.

Leverage Pretrained Models: Use pretrained models for each modality to accelerate development and improve performance. Generative AI training often relies on pretrained models to enhance model capabilities.

Monitor Continuously: Implement real-time monitoring and feedback loops to detect issues early and iterate quickly. This is essential for generative AI systems, which must stay reliable and efficient after deployment.

Foster Cross-Functional Collaboration: Involve stakeholders from across the organization to ensure that technical decisions are aligned with business goals. An Agentic AI course would emphasize the importance of collaboration in AI development.

Prioritize Security and Compliance: Protect sensitive data and ensure that systems comply with relevant regulations. This is critical for building agentic RAG systems step-by-step, ensuring that systems are secure and compliant.

Iterate and Learn: Treat each deployment as a learning opportunity, and use feedback to drive continuous improvement. Generative AI training emphasizes the importance of iteration and learning in AI development.

Conclusion

Building scalable multimodal AI pipelines is one of the most exciting and challenging frontiers in artificial intelligence today. By leveraging the latest frameworks, tools, and deployment strategies—and applying software engineering best practices—teams can build systems that are not only powerful but also reliable, secure, and aligned with business objectives. The journey is complex, but the rewards are substantial: richer user experiences, new revenue streams, and a competitive edge in an increasingly AI-driven world. For AI practitioners, software architects, and technology leaders, the message is clear: embrace the challenge, invest in collaboration and continuous learning, and lead the way in the multimodal AI revolution.

Agent Communication Protocol: Vision For AI Agent Ecosystems

Agent Communication Protocol IBM

IBM has released the Agent Communication Protocol (ACP), an open standard that lets AI agents built on different frameworks and technology stacks connect and cooperate. IBM expects ACP, as a foundational interoperability layer, to become the “HTTP of agent communication,” giving AI agents a common language for completing complex real-world tasks.

The protocol, announced on May 28, 2025, addresses a fundamental problem: in the current AI ecosystem, agents often operate as “islands,” and connecting them requires custom integrations that are expensive, fragile, and hard to scale.

Without a standard, every integration is expensive duct tape. ACP aims to eliminate these one-off connections by offering a single interface for agents built with BeeAI, LangChain, CrewAI, or custom code.

ACP underpins BeeAI, an open-source platform for discovering, running, and building AI agents. IBM contributed BeeAI to the Linux Foundation, a nonprofit, in March. Open governance brings transparency and community-driven development to both ACP and BeeAI, so developers can adopt and improve the standard without being tied to a single vendor.

ACP was designed to complement Anthropic's Model Context Protocol (MCP), which has become the standard way for agents to access external data and resources. MCP connects agents to databases and APIs, while ACP connects agents directly to one another. BeeAI and other multi-agent orchestration systems can use both.

IBM Research product manager Jenna Winkler stressed that both protocols matter for scaling AI in the real world. In her example, two agents each use MCP to gather market data and run simulations, then use ACP to compare their results and produce a recommendation.

ACP is a RESTful, HTTP-based protocol that supports both synchronous and asynchronous agent interactions. Because it follows familiar HTTP conventions, it is easier to adopt and integrate into production systems than protocols built on more complicated communication methods. MCP, by comparison, uses JSON-RPC.

Developers can communicate with ACP agents directly using curl, Postman, or a web browser. Python and TypeScript SDKs are available for convenience, but no specialized SDK is required.
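Because ACP is plain HTTP, a client needs nothing beyond a standard library. The sketch below builds (but does not send) a request to start an agent run. The `/runs` path and the `agent_name`, `input`, `parts`, and `mode` payload fields are modeled on ACP's published quickstart as best we know it, and the local URL and the `echo` agent are assumptions; check the current ACP specification before relying on these names.

```python
import json
import urllib.request


def build_run_request(base_url: str, agent_name: str, text: str,
                      mode: str = "sync") -> urllib.request.Request:
    """Build (without sending) a POST asking an ACP server to run one agent."""
    payload = {
        "agent_name": agent_name,  # assumed field name, per the ACP quickstart
        "input": [
            {"role": "user", "parts": [{"content": text, "content_type": "text/plain"}]}
        ],
        "mode": mode,  # assumed: "sync" for quick tests, "async" for long-running work
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/runs",  # assumed endpoint path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_run_request("http://localhost:8000", "echo", "Howdy!")
print(req.get_method(), req.full_url)
# Against a running ACP server you would send it with:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```

The same JSON body can be POSTed with curl or Postman, which is the practical payoff of building on ordinary HTTP.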

ACP also simplifies offline discovery: agents can embed their metadata in their distribution packages, so they can be discovered even in secure, disconnected, or scale-to-zero environments. ACP's asynchronous design suits long-running workloads, while synchronous communication remains available for simple use cases and testing.

Beyond the technology itself, ACP gives multi-agent system architects more design options. It moves past the traditional “manager” pattern, in which a single “boss” agent coordinates everything: with ACP, agents can talk to each other and delegate tasks without a mediator. This peer-to-peer capability matters for interactions both within and across organizations.

Kate Blair, director of product incubation at IBM Research, said that either agent can initiate contact or delegate a task. She described a triage agent that answers customer questions and then passes the history of the interaction to the relevant service agent, which can resolve the ticket on its own.
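The triage pattern Blair describes can be sketched in a few lines. Here plain method calls stand in for ACP messages, and the keyword routing table, agent names, and ticket fields are all hypothetical; what the sketch shows is the handoff itself, with the full history traveling alongside the ticket so the receiving agent can act without going back through a coordinator.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Ticket:
    question: str
    history: List[str] = field(default_factory=list)


class ServiceAgent:
    def __init__(self, name: str) -> None:
        self.name = name
        self.inbox: List[Ticket] = []

    def receive(self, ticket: Ticket) -> None:
        """Take ownership of the ticket, including everything that happened before the handoff."""
        ticket.history.append(f"{self.name}: accepted ticket")
        self.inbox.append(ticket)


class TriageAgent:
    def __init__(self, routes: Dict[str, ServiceAgent], fallback: ServiceAgent) -> None:
        self.routes = routes      # keyword -> specialist agent (hypothetical routing rule)
        self.fallback = fallback  # used when no keyword matches

    def handle(self, ticket: Ticket) -> ServiceAgent:
        ticket.history.append(f"triage: received {ticket.question!r}")
        text = ticket.question.lower()
        target = next((agent for kw, agent in self.routes.items() if kw in text), self.fallback)
        ticket.history.append(f"triage: handed off to {target.name}")
        target.receive(ticket)  # with ACP this would be a message to a peer agent
        return target


billing, it_support, general = ServiceAgent("billing"), ServiceAgent("it"), ServiceAgent("general")
triage = TriageAgent({"invoice": billing, "password": it_support}, fallback=general)
owner = triage.handle(Ticket("Why was my invoice charged twice?"))
print(owner.name, owner.inbox[0].history)
```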

IBM Research showed an early version of ACP, and soon afterward Google introduced A2A, its own agent-to-agent protocol. Blair expects further changes as these protocols are tested in real-world use, and she believes multiple agent communication approaches can coexist during these early stages.

ACP is community-led and encourages developer participation. Monthly open community calls and an active GitHub discussion section give community members ongoing ways to contribute.

#AgentCommunicationProtocol#BeeAI#ACP#IBMAgentCommunicationProtocol#AgentCommunicationProtocolACP#ModelContextProtocol#technology#technews#technologynews#news#govindhtech

7 Steps to Start Your Langchain Development Coding Journey

In the fast-paced world of AI and machine learning, Langchain Development has emerged as a groundbreaking approach for building intelligent, context-aware applications. Whether you’re an AI enthusiast, a developer stepping into the world of LLMs (Large Language Models), or a business looking to enhance user experiences through automation, Langchain provides a powerful toolkit. This blog explores the seven essential steps to begin your Langchain Development journey, from understanding the basics to deploying your first AI-powered application.

Hire AI Experts for Advanced Data Retrieval with Intelligent RAG Solutions

In today’s data-driven world, fast and accurate information retrieval is critical for business success. Retrieval-Augmented Generation (RAG) is an advanced AI approach that combines the strengths of retrieval-based search and generative models to produce highly relevant, context-aware responses.

At Prosperasoft, we help organizations harness the power of RAG to improve decision-making, drive engagement, and unlock deeper insights from their data.

Why Choose RAG for Your Business?

Traditional AI models often rely on static datasets, which can limit their relevance and accuracy. RAG bridges this gap by integrating real-time data retrieval with language generation capabilities. This means your AI system doesn’t just rely on pre-trained knowledge—it actively fetches the most current and relevant information before generating a response. The result? Faster query processing, improved accuracy, and significantly enhanced user experience.
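The retrieve-then-generate flow can be sketched without any AI library. In this minimal Python example, simple word overlap stands in for embedding search, the documents are made up, and the final call to a language model is left out; the function only assembles the grounded prompt that would be sent to one.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word set; a real system would embed the text instead."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by word overlap with the query and keep the top k that overlap at all."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Place freshly retrieved context ahead of the question before generation."""
    context = retrieve(query, documents, k)
    context_block = "\n".join(f"- {c}" for c in context) or "- (no relevant documents found)"
    return (
        "Answer using only the context below. If it is insufficient, say so.\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}"
    )


docs = [
    "The refund window is 30 days from delivery.",
    "Our office is closed on public holidays.",
    "Refunds are issued to the original payment method.",
]
print(build_prompt("How many days is the refund window?", docs))
```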

At Prosperasoft, we deliver 85% faster query processing, 40% better data accuracy, and up to 5X higher user engagement through our custom-built RAG solutions. Whether you're a growing startup or a large enterprise, our intelligent systems are designed to scale and evolve with your data needs.

End-to-End RAG Expertise from Prosperasoft

Our team of offshore AI experts brings deep technical expertise and hands-on experience with cutting-edge tools like Amazon SageMaker, PySpark, LlamaIndex, Hugging Face, Langchain, and more. We specialize in:

Intelligent Data Retrieval Systems – Systems designed to fetch and prioritize the most relevant data in real time.

Real-Time Data Integration – Seamlessly pulling live data into your workflows for dynamic insights.

Advanced AI-Powered Responses – Combining large language models with retrieval techniques for context-rich answers.

Custom RAG Model Development – Tailoring every solution to your specific business objectives.

Enhanced Search Functionality – Boosting the relevance and precision of your internal and external search tools.

Model Optimization and Scalability – Ensuring performance, accuracy, and scalability across enterprise operations.

Empower Your Business with Smarter AI

Whether you need to optimize existing systems or build custom RAG models from the ground up, Prosperasoft provides a complete suite of services—from design and development to deployment and ongoing optimization. Our end-to-end RAG solution implementation ensures your AI infrastructure is built for long-term performance and real-world impact.

Ready to take your AI to the next level? Outsource RAG development to Prosperasoft and unlock intelligent, real-time data retrieval solutions that drive growth, efficiency, and smarter decision-making.

#Hire AI Experts#content generation#augmented AI#RAG technology#AI-powered content#content automation#hire#outsourcing

Who Should Join Agentic AI Training in Bengaluru? A Roadmap for Professionals

As artificial intelligence enters a new phase of autonomy and decision-making, a ground-breaking evolution is taking place—Agentic AI. Unlike traditional AI models that merely respond to prompts, Agentic AI systems can reason, plan, act, and learn independently.

If you’re a working professional in India’s tech capital, now is the time to explore Agentic AI training in Bengaluru. But is this training right for you? In this article, we’ll help you understand who benefits most from Agentic AI, what skills you need, and how the right training can help you lead the future of intelligent automation.

What is Agentic AI?

Before we dive into who should enroll, let’s understand what Agentic AI is.

Agentic AI systems simulate intelligent agents—entities capable of understanding goals, making decisions, taking autonomous actions, and adapting to changing environments. Unlike static AI models (e.g., chatbots that only respond to prompts), Agentic AI:

Sets its own sub-goals

Plans multi-step actions

Integrates with tools and APIs

Learns through feedback and iteration
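The loop behind those four capabilities can be sketched in plain Python. Everything here is hypothetical: `plan` is a fixed stand-in for the LLM call that would decompose a goal into sub-goals, the two tools are toy functions, and feedback is limited to passing each observation into the next step (a fuller agent would also re-plan when a step fails).

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]


def plan(goal: str) -> List[Tuple[str, str]]:
    """Hypothetical planner returning (tool_name, argument) sub-goals.
    An empty argument means 'use the previous observation'."""
    return [("search", goal), ("summarize", "")]


def run_agent(goal: str, tools: Dict[str, Tool], max_steps: int = 5) -> List[str]:
    """Plan, then act step by step, feeding each observation into the next step."""
    observations: List[str] = []
    for step, (tool_name, arg) in enumerate(plan(goal)):
        if step >= max_steps:  # hard stop so a bad plan cannot run forever
            break
        tool = tools.get(tool_name)
        if tool is None:
            observations.append(f"error: unknown tool {tool_name}")
            continue
        tool_input = arg or (observations[-1] if observations else goal)
        observations.append(tool(tool_input))
    return observations


tools: Dict[str, Tool] = {
    "search": lambda query: f"3 articles found about {query}",
    "summarize": lambda text: f"summary of: {text}",
}
print(run_agent("agentic AI", tools))
```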

Technologies involved in building Agentic AI include:

Large Language Models (LLMs) like GPT-4, Claude, LLaMA

LangChain, Auto-GPT, and CrewAI, plus reasoning patterns such as ReAct

Vector Databases, RAG Pipelines, and more

This makes Agentic AI the next leap in enterprise automation, customer service, content generation, and intelligent systems development.

Why Bengaluru is the Best Place to Learn Agentic AI

Bengaluru—India’s tech hub—is the ideal place to learn Agentic AI, thanks to its:

Thriving AI startup ecosystem

Presence of MNCs and product-based tech firms

Regular AI-focused workshops, hackathons, and seminars

Access to quality offline and hybrid AI training programs

Whether you're a beginner or an experienced techie, Agentic AI training in Bengaluru gives you real-world exposure and high-value networking opportunities.

Who Should Join Agentic AI Training in Bengaluru?

Here’s a breakdown of who can benefit from Agentic AI training, along with a roadmap for each career path.

1. Software Developers & Engineers

Why it's relevant: Developers are at the forefront of implementing AI-driven applications. Agentic AI enables them to go beyond simple prompt-based tasks and build autonomous tools, AI workflows, and intelligent agents.

Roadmap:

Understand Python, APIs, and LLM frameworks

Learn LangChain, Auto-GPT, and multi-agent architecture

Build applications that execute tasks like lead generation, report writing, or email filtering

Outcome: Transition into roles like Agentic AI Developer, LLM Application Engineer, or AI Automation Lead

2. Data Scientists & AI/ML Engineers

Why it's relevant: While traditional ML focuses on prediction and classification, Agentic AI is all about planning and execution. This allows data professionals to build more autonomous and human-like systems.

Roadmap:

Leverage your ML foundation to build intelligent agents

Integrate LLMs with external tools for contextual reasoning

Deploy AI agents using RAG and vector stores

Outcome: Move into specialized roles like Autonomous Systems Architect or Generative AI Specialist

3. Digital Marketers & Content Strategists

Why it's relevant: Agentic AI can automate content pipelines, handle customer segmentation, generate personalized marketing messages, and execute social media strategies autonomously.

Roadmap:

Learn prompt engineering and content generation workflows

Use LangChain or AI automation tools to schedule and publish content

Create AI agents that generate reports and campaign analytics

Outcome: Evolve into roles like AI Marketing Strategist or Agentic Automation Consultant

4. Product Managers

Why it's relevant: Understanding Agentic AI allows PMs to envision, define, and manage AI-powered products that go beyond static user interactions. This is crucial for SaaS and enterprise tech.

Roadmap:

Gain working knowledge of how agents function

Learn to design AI-first features and interfaces

Coordinate between dev teams and AI specialists

Outcome: Step into roles like AI Product Manager or Innovation Lead

5. Business Analysts & Consultants

Why it's relevant: Business professionals can use Agentic AI to analyze data, generate insights, and even prepare executive summaries—all autonomously.

Roadmap:

Learn how to integrate LLMs with business data sources

Use AI agents for report generation and decision support

Understand AI’s impact on operational workflows

Outcome: Move into roles like AI Business Consultant or Digital Transformation Advisor

6. Students & Fresh Graduates (with Tech Backgrounds)

Why it's relevant: Early exposure to Agentic AI gives students an edge in AI careers, startups, and research.

Roadmap:

Build a strong foundation in Python and deep learning

Work on real-world agent-based projects

Participate in Bengaluru-based AI challenges and internships

Outcome: Begin your career as an AI Engineer, Prompt Engineer, or LLM Research Intern

Where to Learn: Boston Institute of Analytics (BIA) – Bengaluru

If you’re looking for practical, future-ready Agentic AI training in Bengaluru, the Boston Institute of Analytics (BIA) offers one of the most advanced and career-focused programs in India.

🎓 Why Choose BIA?

Cutting-Edge Curriculum: Includes LangChain, GPT-4, RAG, ReAct, Auto-GPT, and more

Expert Faculty: Trainers from AI-driven startups and MNCs

Capstone Projects: Build real-world agents for sales, HR, marketing, and support

Flexible Formats: Weekend, hybrid, and weekday batches in Bengaluru

Placement Support: Resume building, mock interviews, and job connections

Whether you're just starting your AI journey or looking to shift from ML to Agentic AI, BIA offers tailored programs that combine depth, flexibility, and mentorship.

Final Thoughts

The world is rapidly shifting toward autonomous, intelligent systems—and Agentic AI is leading this change. If you're a professional working in software, data, marketing, business, or product, learning Agentic AI can redefine your career trajectory.

Bengaluru, with its tech-driven ecosystem and world-class training institutes like the Boston Institute of Analytics, is the perfect launchpad for your journey into this transformative technology.

#Generative AI courses in Bengaluru#Generative AI training in Bengaluru#Agentic AI Course in Bengaluru#Agentic AI Training in Bengaluru

What Are Agentic AI Frameworks and How Do They Power Autonomous Systems?

Agentic AI frameworks are at the forefront of next-gen AI, enabling systems to act autonomously with decision-making capabilities similar to humans. These frameworks are designed to allow AI agents to perceive their environment, plan actions, execute tasks, and even adapt over time. In this blog, we explore what agentic AI frameworks are and how they power autonomous systems, helping you understand why they are key to the future of artificial intelligence.

We’ve compiled a detailed list of agentic AI frameworks that highlights some of the most impactful tools currently available. From AutoGPT to BabyAGI and LangChain, you’ll find examples of agentic AI frameworks used in real-world applications such as automated customer service, research agents, robotic control, and multi-agent collaboration. Our post also includes a comparison of agentic AI frameworks, so you can see the differences in capabilities, flexibility, and use cases.

Whether you're a researcher or developer, we also explore the top agentic AI frameworks that stand out in terms of scalability, modularity, and developer support. Many of these frameworks are also open source, making them accessible for experimentation and deployment in your own AI projects. You’ll get insights into GitHub repositories, documentation strength, and community support for each framework.

Top Tech Stacks for Fintech App Development in 2025

Fintech is evolving fast, and so is the technology behind it. As we head into 2025, financial applications demand more than just sleek interfaces — they need to be secure, scalable, and lightning-fast. Whether you're building a neobank, a personal finance tracker, a crypto exchange, or a payment gateway, choosing the right tech stack can make or break your app.

In this post, we’ll break down the top tech stacks powering fintech apps in 2025 and what makes them stand out.

1. Frontend Tech Stacks

🔹 React.js + TypeScript

React has long been a favorite for fintech frontends, and paired with TypeScript, it offers improved code safety and scalability. TypeScript helps catch errors early, which is critical in the finance world where accuracy is everything.

🔹 Next.js (React Framework)

For fintech apps with a strong web presence, Next.js brings server-side rendering and API routes, making it easier to manage SEO, performance, and backend logic in one place.

🔹 Flutter (for Web and Mobile)

Flutter is gaining massive traction for building cross-platform fintech apps with a single codebase. It's fast, visually appealing, and great for MVPs and startups trying to reduce time to market.

2. Backend Tech Stacks

🔹 Node.js + NestJS

Node.js offers speed and scalability, while NestJS adds a structured, enterprise-grade framework. Great for microservices-based fintech apps that need modular and testable code.

🔹 Python + Django

Python is widely used in fintech for its simplicity and readability. Combine it with Django — a secure and robust web framework — and you have a great stack for building APIs and handling complex data processing.

🔹 Golang

Go is emerging as a go-to language for performance-intensive fintech apps, especially for handling real-time transactions and services at scale. Its concurrency support is a huge bonus.

3. Databases

🔹 PostgreSQL

A top choice for fintech in 2025. It's reliable, supports complex queries and ACID transactions, and handles financial data like a pro. With extensions like PostGIS (geospatial data) and TimescaleDB (time-series data), it's even more powerful.

🔹 MongoDB (with caution)

While not ideal for transactional data, MongoDB can be used for storing logs, sessions, or less-critical analytics. Just be sure to avoid it for money-related tables unless you have a strong reason.

🔹 Redis

Perfect for caching, rate-limiting, and real-time data updates. Great when paired with WebSockets for live transaction updates or stock price tickers.
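Rate limiting with Redis is commonly done with a fixed-window counter: `INCR` a key named after the client and the current time window, and let `EXPIRE` discard old windows. The sketch below reproduces that logic in memory so it runs without a Redis server; the limit, window length, and client IDs are hypothetical, and the injectable clock exists only to make the behavior testable.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowRateLimiter:
    """In-memory version of the Redis INCR + EXPIRE fixed-window pattern."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.counters: Dict[Tuple[str, int], int] = {}  # (client_id, window index) -> count

    def allow(self, client_id: str) -> bool:
        window = int(self.clock() // self.window_seconds)
        # Forget counters from earlier windows, as EXPIRE would in Redis.
        self.counters = {k: v for k, v in self.counters.items() if k[1] == window}
        key = (client_id, window)
        self.counters[key] = self.counters.get(key, 0) + 1  # the INCR step
        return self.counters[key] <= self.limit


now = [0.0]  # fake clock for the demo
limiter = FixedWindowRateLimiter(limit=2, window_seconds=60, clock=lambda: now[0])
print([limiter.allow("client-42") for _ in range(3)])
now[0] = 61.0  # move into the next window
print(limiter.allow("client-42"))
```

Fixed windows allow short bursts at window boundaries; sliding-window or token-bucket variants smooth that out at the cost of a little more bookkeeping.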

4. Security & Compliance

In fintech, security isn’t optional — it’s everything.

OAuth 2.1 and OpenID Connect for secure user authentication

TLS 1.3 for encrypted communication

Zero Trust Architecture for internal systems

Biometric Auth for mobile apps

End-to-end encryption for sensitive data

Compliance-ready tooling for GDPR, PCI DSS, and SOC 2

5. DevOps & Cloud

🔹 Docker + Kubernetes

Containerization ensures your app runs the same way everywhere, while Kubernetes helps scale securely and automatically.

🔹 AWS / Google Cloud / Azure

These cloud platforms offer fintech-ready services like managed databases, real-time analytics, fraud detection APIs, and identity verification tools.

🔹 CI/CD Pipelines

Using tools like GitHub Actions or GitLab CI/CD helps push secure code fast, with automated testing to catch issues early.

6. Bonus: AI & ML Tools

AI is becoming integral in fintech — from fraud detection to credit scoring.

TensorFlow / PyTorch for machine learning

Hugging Face Transformers for NLP in customer support bots

LangChain (for LLM-driven insights and automation)

Final Thoughts

Choosing the right tech stack depends on your business model, app complexity, team skills, and budget. There’s no one-size-fits-all, but the stacks mentioned above offer a solid foundation to build secure, scalable, and future-ready fintech apps.

In 2025, the competition in fintech is fierce — the right technology stack can help you stay ahead.

What stack are you using for your fintech app? Drop a comment and let’s chat tech!

https://www.linkedin.com/in/%C3%A0ksh%C3%ADt%C3%A2-j-17aa08352/

#Fintech#AppDevelopment#TechStack2025#ReactJS#NestJS#Flutter#Django#FintechInnovation#MobileAppDevelopment#BackendDevelopment#StartupTech#FintechApps#FullStackDeveloper#WebDevelopment#SecureApps#DevOps#FinanceTech#SMTLABS

Scaling Agentic AI in 2025: Unlocking Autonomous Digital Labor with Real-World Success Stories

Introduction

Agentic AI is revolutionizing industries by seamlessly integrating autonomy, adaptability, and goal-driven behavior, enabling digital systems to perform complex tasks with minimal human intervention. This article explores the evolution of Agentic AI, its integration with Generative AI, and delivers actionable insights for scaling these systems. We will examine the latest deployment strategies, best practices for scalability, and real-world case studies, including how an Agentic AI course in Mumbai with placements is shaping talent pipelines for this emerging field. Whether you are a software engineer, data scientist, or technology leader, understanding the interplay between Generative AI and Agentic AI is key to unlocking digital transformation.

The Evolution of Agentic and Generative AI in Software

AI’s evolution has moved from rule-based systems and machine learning toward today’s advanced generative models and agentic systems. Traditional AI excels in narrow, predefined tasks like image recognition but lacks flexibility for dynamic environments. Agentic AI, by contrast, introduces autonomy and continuous learning, empowering systems to adapt and optimize outcomes over time without constant human oversight.

This paradigm shift is powered by Generative AI, particularly large language models (LLMs), which provide contextual understanding and reasoning capabilities. Agentic AI systems can orchestrate multiple AI services, manage workflows, and execute decisions, making them essential for real-time, multi-faceted applications across logistics, healthcare, and customer service. The rise of agentic capabilities marks a transition from AI as a tool to AI as an autonomous digital labor force, expanding workforce definitions and operational possibilities. Professionals seeking to enter this field often consider a Generative AI and Agentic AI course to gain the necessary skills and practical experience.

Latest Frameworks, Tools, and Deployment Strategies

LLM Orchestration and Autonomous Agents

Modern Agentic AI depends on orchestrating multiple LLMs and AI components to execute complex workflows. Frameworks such as LangChain and Haystack, together with model features such as OpenAI’s function calling, enable developers to build autonomous agents that chain tasks together, query databases, and call APIs dynamically. These tools support multi-turn dialogue management, contextual memory, and adaptive decision-making, all of which are critical for real-world agentic applications. For those interested in hands-on learning, enrolling in an Agentic AI course in Mumbai with placements offers practical exposure to these frameworks.
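The core orchestration pattern (plan, call tools, remember results, feed each output into the next step) can be sketched in plain Python. This is a framework-agnostic illustration, not LangChain's or OpenAI's API: the tools `lookup_order` and `draft_reply` are hypothetical, and the `plan` method is a hard-coded stand-in for the step where a real system would ask an LLM which tools to call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; a real agent would query a database or call an external API here.
def lookup_order(order_id: str) -> str:
    orders = {"A17": "in transit", "B42": "delivered"}
    return orders.get(order_id, "unknown")

def draft_reply(status: str) -> str:
    return f"Your order is currently: {status}."

@dataclass
class Agent:
    tools: Dict[str, Callable[[str], str]]
    # Contextual memory: (tool name, input, output) for every step taken.
    memory: List[Tuple[str, str, str]] = field(default_factory=list)

    def plan(self, request: str) -> List[str]:
        # Stand-in for an LLM planning step (e.g., via function calling);
        # here the tool sequence is fixed and the request text is ignored.
        return ["lookup_order", "draft_reply"]

    def run(self, request: str, order_id: str) -> str:
        current = order_id
        for tool_name in self.plan(request):
            output = self.tools[tool_name](current)
            self.memory.append((tool_name, current, output))
            current = output  # chaining: each tool's output feeds the next tool
        return current

agent = Agent(tools={"lookup_order": lookup_order, "draft_reply": draft_reply})
print(agent.run("Where is my order?", "A17"))
```

Keeping tools in a name-keyed dictionary is what lets a planner (human-written or model-driven) select them dynamically, and the memory list is the simplest form of the contextual state that frameworks manage for you.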

MLOps for Generative Models

Traditional MLOps pipelines are evolving to support the unique requirements of generative AI, including:

Continuous Fine-Tuning: Updating models based on new data or feedback without full retraining, using techniques like incremental and transfer learning.

Prompt Engineering Lifecycle: Versioning and testing prompts as critical components of model performance, including methodologies for prompt optimization and impact evaluation.

Monitoring Generation Quality: Detecting hallucinations, bias, and drift in outputs, and implementing quality control measures.

Scalable Inference Infrastructure: Managing high-throughput, low-latency model serving with cost efficiency, leveraging cloud and edge computing.

Platforms such as MLflow, Kubeflow, and Amazon SageMaker are adding support for generative AI workloads to streamline deployment and monitoring. Understanding MLOps for generative AI is now a foundational skill for teams building scalable agentic systems.
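To make the prompt engineering lifecycle concrete, here is a minimal sketch of versioning prompts and scoring each version against a small test set. The `PromptRegistry` class, the keyword-match metric, and `fake_model` (which simply echoes the prompt) are all hypothetical placeholders; a real pipeline would call an actual model and use a richer evaluation metric.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str  # str.format-style placeholders

class PromptRegistry:
    """Keeps every version of every prompt so results stay reproducible."""
    def __init__(self) -> None:
        self._versions: Dict[str, List[PromptVersion]] = {}

    def register(self, name: str, template: str) -> PromptVersion:
        history = self._versions.setdefault(name, [])
        pv = PromptVersion(name, len(history) + 1, template)
        history.append(pv)
        return pv

    def get(self, name: str, version: int) -> PromptVersion:
        return self._versions[name][version - 1]

    def latest(self, name: str) -> PromptVersion:
        return self._versions[name][-1]

def evaluate(pv: PromptVersion, model: Callable[[str], str],
             cases: List[Tuple[Dict[str, str], str]]) -> float:
    """Fraction of test cases whose output contains the expected keyword."""
    hits = 0
    for variables, expected in cases:
        output = model(pv.template.format(**variables))  # unused variables are ignored
        hits += int(expected.lower() in output.lower())
    return hits / len(cases)

def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM call: echoes its input.
    return prompt

registry = PromptRegistry()
v1 = registry.register("summary", "Summarize: {text}")
v2 = registry.register("summary", "Summarize for a customer and mention the ETA: {text} ETA {eta}")
cases = [({"text": "Package delayed", "eta": "Friday"}, "friday")]
scores = {pv.version: evaluate(pv, fake_model, cases) for pv in (v1, v2)}
print(scores)
```

Because versions are immutable and numbered, a regression in generation quality can be traced to a specific prompt change and rolled back, which is the same discipline teams already apply to code.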

Cloud-Native and Edge Deployment

Agentic AI deployments increasingly leverage cloud-native architectures for scalability and resilience, using Kubernetes and serverless functions to manage agent workloads. Edge deployments are emerging for latency-sensitive applications like autonomous vehicles and IoT devices, where agents operate closer to data sources. This approach ensures real-time processing and reduces reliance on centralized infrastructure, topics often covered in advanced Generative AI and Agentic AI course curricula.

Advanced Tactics for Scalable, Reliable AI Systems

Modular Agent Design

Breaking down agent capabilities into modular, reusable components allows teams to iterate rapidly and isolate failures. Modular design supports parallel development and easier integration of new skills or data sources, facilitating continuous improvement and reducing system update complexity.
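One way to realize modular design is to put every skill behind a shared interface and let the agent dispatch to whichever registered skill can handle a task. The sketch below assumes a hypothetical `Skill` protocol and two toy skills; the point is that new skills are added by registration alone, and a failure inside one skill is contained rather than crashing the agent.

```python
from typing import Dict, Protocol

class Skill(Protocol):
    name: str
    def can_handle(self, task: str) -> bool: ...
    def handle(self, task: str) -> str: ...

class WeatherSkill:
    name = "weather"
    def can_handle(self, task: str) -> bool:
        return "weather" in task.lower()
    def handle(self, task: str) -> str:
        return "Forecast lookup (stub)"

class MathSkill:
    name = "math"
    def can_handle(self, task: str) -> bool:
        return task.startswith("add ")
    def handle(self, task: str) -> str:
        a, b = task.split()[1:3]
        return str(int(a) + int(b))

class ModularAgent:
    def __init__(self) -> None:
        self.skills: Dict[str, Skill] = {}

    def register(self, skill: Skill) -> None:
        # Adding a capability never requires editing the dispatch logic.
        self.skills[skill.name] = skill

    def dispatch(self, task: str) -> str:
        for skill in self.skills.values():
            if skill.can_handle(task):
                try:
                    return skill.handle(task)
                except Exception as exc:  # isolate the failing module
                    return f"{skill.name} failed: {exc}"
        return "no skill available"

agent = ModularAgent()
agent.register(WeatherSkill())
agent.register(MathSkill())
print(agent.dispatch("add 2 3"))
print(agent.dispatch("add 2 x"))
print(agent.dispatch("translate this"))
```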

Robust Error Handling and Recovery

Agentic systems must anticipate and gracefully handle failures in external APIs, data inconsistencies, and unexpected inputs. Fallback mechanisms, retries, and human-in-the-loop escalation help maintain uninterrupted service and user trust.
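The three recovery layers named above can be combined in one wrapper: retry transient errors with exponential backoff, then try a fallback, then escalate to a human. This is a minimal sketch; `call_with_recovery`, `EscalateToHuman`, and the flaky API are hypothetical names, and which exceptions count as "transient" is an assumption you would tune for your own services.

```python
import random
import time
from typing import Callable, Optional

class EscalateToHuman(Exception):
    """Raised when retries and the fallback are exhausted; route to an operator."""

def call_with_recovery(primary: Callable[[], str],
                       fallback: Optional[Callable[[], str]] = None,
                       retries: int = 3,
                       base_delay: float = 0.1,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    last_error: Optional[Exception] = None
    for attempt in range(retries):
        try:
            return primary()
        except (ConnectionError, TimeoutError) as exc:  # retry transient failures only
            last_error = exc
            if attempt < retries - 1:
                # Exponential backoff with up to 10% jitter: ~0.1s, ~0.2s, ~0.4s, ...
                sleep(base_delay * 2 ** attempt * (1 + 0.1 * random.random()))
    if fallback is not None:
        try:
            return fallback()  # e.g., a cached answer or a simpler model
        except Exception as exc:
            last_error = exc
    raise EscalateToHuman(f"automated recovery failed: {last_error!r}")

# Usage: a hypothetical API that fails twice before succeeding.
calls = {"n": 0}

def flaky_api() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("upstream timeout")
    return "ok"

print(call_with_recovery(flaky_api, sleep=lambda seconds: None))  # skip real waits in the demo
```

Injecting `sleep` as a parameter keeps the backoff testable without slowing tests down, and non-transient errors (such as a `ValueError` from bad input) propagate immediately instead of being retried pointlessly.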

Data and Model Governance

Given the autonomy of agentic systems, governance frameworks are critical to manage data privacy, model biases, and compliance with regulations such as GDPR and HIPAA. Transparent logging and explainability tools help maintain accountability. This includes ensuring that data collection and processing align with ethical standards and legal requirements, a topic emphasized in MLOps for generative AI best practices.
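Transparent logging usually means recording each agent decision with its inputs and rationale while keeping personal data out of the log. The sketch below writes one JSON audit line per decision and masks emails and phone numbers with simple regular expressions. The field names and patterns are illustrative assumptions; regex masking alone is not sufficient for GDPR or HIPAA compliance.

```python
import json
import re
from datetime import datetime, timezone

# Deliberately simple patterns for illustration; production redaction needs more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")

def redact(text: str) -> str:
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

def audit_record(agent_id: str, action: str, inputs: str, rationale: str) -> str:
    """Return one JSON line describing an agent decision, with PII masked."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "action": action,
        "inputs": redact(inputs),
        "rationale": redact(rationale),
    }
    return json.dumps(record, sort_keys=True)

line = audit_record("routing-agent-1", "reroute_shipment",
                    "Customer jane.doe@example.com, phone +49 170 1234567",
                    "Hub closure predicted; alternate route saves 6h")
print(line)
```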

Performance Optimization

Balancing model size, latency, and cost is vital. Techniques such as model distillation, quantization, and adaptive inference routing reduce resource use with minimal loss of agent effectiveness. Hardware acceleration and well-tuned serving configurations (for example, request batching) further improve performance.
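Quantization is the easiest of these techniques to show in a few lines. The sketch below applies symmetric per-tensor int8 quantization to a toy weight list: each weight is stored as an integer in [-127, 127] plus one shared float scale, so storage drops roughly fourfold versus float32, and the rounding error per weight is bounded by half the scale. Real toolchains quantize whole tensors, often per channel, but the arithmetic is the same idea.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    return [scale * v for v in q]

weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Note how the small weight 0.003 rounds to zero: this is the precision that quantization trades for memory and speed, which is why accuracy should be re-checked after quantizing.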

Ethical Considerations and Governance

As Agentic AI systems become more autonomous, ethical considerations and governance practices become increasingly important. This includes ensuring transparency in decision-making, managing potential biases in AI outputs, and complying with regulatory frameworks. Recent developments in AI ethics frameworks emphasize the need for responsible AI deployment that prioritizes human values and safety. Professionals completing a Generative AI and Agentic AI course are well-positioned to implement these principles in practice.

The Role of Software Engineering Best Practices

The complexity of Agentic AI systems elevates the importance of mature software engineering principles:

Version Control for Code and Models: Ensures reproducibility and rollback capability.

Automated Testing: Unit, integration, and end-to-end tests validate agent logic and interactions.

Continuous Integration/Continuous Deployment (CI/CD): Automates safe and frequent updates.

Security by Design: Protects sensitive data and defends against adversarial attacks.

Documentation and Observability: Facilitates collaboration and troubleshooting across teams.

Embedding these practices into AI development pipelines is essential for operational excellence and long-term sustainability. Training in MLOps for generative AI equips teams with the skills to maintain these standards at scale.
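Automated testing applies to agent logic just as it does to ordinary code, especially the rules that decide when an agent may act on its own. Below is a minimal sketch using Python's built-in `unittest`; the `decide_action` policy, its thresholds, and the refund scenario are hypothetical examples, not a recommended policy.

```python
import unittest

def decide_action(confidence: float, refund_amount: float,
                  min_confidence: float = 0.8, auto_limit: float = 100.0) -> str:
    """Hypothetical agent policy: act autonomously only when confident and low-risk."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence >= min_confidence and refund_amount <= auto_limit:
        return "auto_approve"
    return "escalate_to_human"

class DecideActionTests(unittest.TestCase):
    def test_confident_small_refund_is_automated(self):
        self.assertEqual(decide_action(0.95, 40.0), "auto_approve")

    def test_low_confidence_escalates(self):
        self.assertEqual(decide_action(0.5, 40.0), "escalate_to_human")

    def test_large_refund_escalates_even_when_confident(self):
        self.assertEqual(decide_action(0.99, 500.0), "escalate_to_human")

    def test_invalid_confidence_is_rejected(self):
        with self.assertRaises(ValueError):
            decide_action(1.5, 10.0)
```

Saved as a module, these tests run with `python -m unittest`, which makes them easy to wire into a CI/CD pipeline so that a policy change cannot ship without passing them.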

Cross-Functional Collaboration for AI Success

Agentic AI projects succeed when data scientists, software engineers, product managers, and business stakeholders collaborate closely. This alignment ensures:

Clear definition of agent goals and KPIs.

Shared understanding of technical constraints and ethical considerations.

Coordinated deployment and change management.

Continuous feedback loops for iterative improvement.

Cross-functional teams foster innovation and reduce risks associated with misaligned expectations or siloed workflows. Those enrolled in an Agentic AI course in Mumbai with placements often experience this collaborative environment firsthand.

Measuring Success: Analytics and Monitoring

Effective monitoring of Agentic AI deployments includes:

Operational Metrics: Latency, uptime, throughput.

Performance Metrics: Accuracy, relevance, user satisfaction.

Behavioral Analytics: Agent decision paths, error rates, escalation frequency.

Business Outcomes: Cost savings, revenue impact, process efficiency.

Combining real-time dashboards with anomaly detection and alerting enables proactive management and continuous optimization of agentic systems. Mastering these analytics is a core outcome for participants in a Generative AI and Agentic AI course.
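As a rough illustration, the sketch below computes a few of the operational and behavioral metrics listed above from a batch of agent events, then flags an hourly error rate that deviates sharply from its history using a simple z-score rule. The `AgentEvent` fields, the nearest-rank p95, and the 3-sigma threshold are assumptions chosen for clarity; production systems typically rely on a metrics backend and more robust anomaly detectors.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class AgentEvent:
    latency_ms: float
    error: bool
    escalated: bool  # handed off to a human

def summarize(events: List[AgentEvent]) -> Dict[str, float]:
    """Operational and behavioral metrics for a non-empty batch of events."""
    latencies = sorted(e.latency_ms for e in events)
    rank = math.ceil(0.95 * len(latencies))  # nearest-rank 95th percentile
    n = len(events)
    return {
        "p95_latency_ms": latencies[rank - 1],
        "error_rate": sum(e.error for e in events) / n,
        "escalation_rate": sum(e.escalated for e in events) / n,
    }

def is_anomalous(history: List[float], value: float, threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` standard deviations from the historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # needs at least two data points
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold

events = [AgentEvent(120, False, False), AgentEvent(150, False, True),
          AgentEvent(200, True, False), AgentEvent(130, False, False)]
print(summarize(events))

hourly_error_rates = [0.02, 0.03, 0.025, 0.02, 0.03]
print(is_anomalous(hourly_error_rates, 0.15))   # spike: should trigger an alert
print(is_anomalous(hourly_error_rates, 0.028))  # within normal variation
```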

Case Study: Autonomous Supply Chain Optimization at DHL

DHL, a global logistics leader, exemplifies successful scaling of Agentic AI in 2025. Facing challenges of complex inventory management, fluctuating demand, and delivery delays, DHL deployed an autonomous supply chain agent powered by generative AI and real-time data orchestration.

The Journey

DHL’s agentic system integrates:

LLM-based demand forecasting models.

Autonomous routing agents coordinating with IoT sensors on shipments.

Dynamic inventory rebalancing modules adapting to disruptions.

The deployment involved iterative prototyping, cross-team collaboration, and rigorous MLOps for generative AI practices to ensure reliability and compliance across global operations.

Technical Challenges

Handling noisy sensor data and incomplete information.

Ensuring real-time decision-making under tight latency constraints.

Managing multi-regional regulatory compliance and data sovereignty.

Integrating legacy IT systems with new AI workflows.

Business Outcomes

20% reduction in delivery delays.

15% decrease in inventory holding costs.

Enhanced customer satisfaction through proactive communication.

Scalable platform enabling rapid rollout across regions.

DHL’s success highlights how agentic AI can transform complex, dynamic environments by combining autonomy with robust engineering and collaborative execution. Professionals trained through an Agentic AI course in Mumbai with placements are well-prepared to tackle similar challenges.

Additional Case Study: Personalized Healthcare with Agentic AI

In healthcare, Agentic AI can support patient care by helping to personalize treatment plans and improve patient outcomes. For instance, a healthcare provider might deploy an agentic system to analyze patient data, adapt treatment strategies as health conditions change in real time, and optimize resource allocation in hospitals. This involves integrating AI with electronic health records, wearable devices, and clinical decision support systems to enhance care quality and efficiency.

Technical Implementation

Data Integration: Combining data from various sources to create comprehensive patient profiles.

AI-Driven Decision Support: Using machine learning models to predict patient outcomes and suggest personalized interventions.

Real-Time Monitoring: Continuously monitoring patient health and adjusting treatment plans accordingly.

Business Outcomes

Improved patient satisfaction through personalized care.

Enhanced resource allocation and operational efficiency.

Better clinical outcomes due to real-time decision-making.

This example illustrates how Agentic AI can improve healthcare outcomes by applying autonomy and adaptability in dynamic environments. A Generative AI and Agentic AI course provides the multidisciplinary knowledge required for such implementations.

Actionable Tips and Lessons Learned

Start small but think big: Pilot agentic AI on well-defined use cases to gather data and refine models before scaling.

Invest in MLOps tailored for generative AI: Automate continuous training, testing, and monitoring to ensure robust deployments.

Design agents modularly: Facilitate updates and integration of new capabilities.

Prioritize explainability and governance: Build trust with stakeholders and comply with regulations.

Foster cross-functional teams: Align technical and business goals early and often.

Monitor holistically: Combine operational, performance, and business metrics for comprehensive insights.

Plan for human-in-the-loop: Use human oversight strategically to handle edge cases and improve agent learning.

For those considering a career shift, an Agentic AI course in Mumbai with placements offers a structured pathway to acquire these skills and gain practical experience.

Conclusion

Scaling Agentic AI in 2025 is both a technical and organizational challenge demanding advanced frameworks, rigorous engineering discipline, and tight collaboration across teams. The evolution from narrow AI to autonomous, adaptive agents unlocks unprecedented efficiencies and capabilities across industries. Real-world deployments like DHL’s autonomous supply chain agent demonstrate the transformative potential when cutting-edge AI meets sound software engineering and business acumen.

For AI practitioners and technology leaders, success lies in embracing modular architectures, investing in MLOps for generative AI, prioritizing governance, and fostering cross-functional collaboration. Monitoring and continuous improvement complete the cycle, ensuring agentic systems deliver measurable business value while maintaining reliability and compliance.

Agentic AI is not just an evolution of technology but a revolution in how businesses operate and innovate. The time to build scalable, trustworthy agentic AI systems is now. Whether you are looking to upskill or transition into this field, a Generative AI and Agentic AI course can provide the knowledge, tools, and industry connections to accelerate your journey.
