#Langchain development

Top 10 Benefits of Using LangChain Development for AI Projects

In the rapidly evolving world of artificial intelligence, developers and businesses are constantly seeking frameworks that can streamline the integration of Large Language Models (LLMs) into real-world applications. Among the many emerging solutions, LangChain development stands out as a game-changer. It offers a modular, scalable, and intelligent approach to building AI systems that are not only efficient but also contextually aware.

Gen AI Fellowship 2025 – Fully Funded ₦50K/Month AI Training for Young Nigerians

Apply now for the Gen AI Fellowship 2025, a fully funded 6-month AI training program in Abeokuta, Nigeria. Earn ₦50,000 monthly, gain real-world experience, mentorship, and certification. Open to Nigerian youth passionate about AI and innovation. Deadline: July 14. 🚀 Gen AI Fellowship 2025 – Fully Funded Training for Young Nigerians Gain ₦50,000 Monthly • Learn AI Hands-On • Build Real-World…

#₦50,000 stipend program#AI Bootcamp Nigeria#AI Development Nigeria#AI Engineering Nigeria#AI Fellowship Nigeria#AI for young Nigerians#AI internship Nigeria#AI jobs Nigeria#AI mentorship program#AI Talent Accelerator#Artificial Intelligence Training Nigeria#Bosun Tijani Foundation#Fully Funded Fellowship#Gen AI Fellowship 2025#GenAI Nigeria 2025#LangChain Nigeria#Machine Learning Nigeria#Ogun State AI Program#Ogun State opportunities#Tech Fellowships in Nigeria

This article explores using LangChain, Python, and Heroku to build and deploy Large Language Model (LLM)-based applications. We go into the basics of LangChain for crafting AI-driven tools and Heroku for effortless cloud deployment, illustrating the process with a practical example of a fitness trainer application. By combining these technologies, developers can easily create, test, and deploy LLM applications, streamlining the development process and reducing infrastructure headaches.

AI Frameworks Help Data Scientists For GenAI Survival

AI Frameworks: Crucial to the Success of GenAI

Develop Your AI Capabilities Now

As a data scientist, you play a crucial part in the fast-growing field of generative artificial intelligence (GenAI). Even with platforms like Hugging Face and LangChain at the forefront of AI research, your proficiency in data analysis, modeling, and interpretation remains essential.

Although GenAI systems can produce remarkable results, they still depend heavily on clean, well-organized data and on careful interpretation, areas in which data scientists are highly skilled. By applying your in-depth knowledge of data and statistical techniques, you can steer GenAI models toward more precise, useful predictions. Your job as a data scientist is crucial to ensuring that GenAI systems rest on strong, data-driven foundations and can realize their full potential. Here’s how to take the lead:

Data Quality Is Crucial

The effectiveness of even the most sophisticated GenAI models depends on the quality of the data they use. Data tools like pandas and Modin let you clean, preprocess, and manipulate large datasets so that the data feeding your models is accurate and relevant.
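A typical cleaning pass with pandas might look like the minimal sketch below. The column names, sample values, and the choice of median imputation are illustrative assumptions, not a prescribed recipe.

```python
import numpy as np
import pandas as pd


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Basic cleaning pass: normalize text, drop duplicates, fill numeric gaps."""
    out = df.copy()
    # Normalize free-text columns so " Lagos" and "Lagos " compare equal.
    for col in out.select_dtypes(include=["object", "string"]).columns:
        out[col] = out[col].str.strip().str.lower()
    out = out.drop_duplicates()
    # Fill missing numeric values with the column median (robust to outliers).
    num_cols = out.select_dtypes(include="number").columns
    out[num_cols] = out[num_cols].fillna(out[num_cols].median())
    return out.reset_index(drop=True)


# Hypothetical raw data with whitespace noise, a duplicate row, and a missing value.
raw = pd.DataFrame({
    "city": [" Lagos", "Lagos ", " Lagos", "Abeokuta"],
    "age": [25, np.nan, 25, 40],
})
print(clean(raw))
```

Normalizing text before deduplicating matters: otherwise rows that differ only in whitespace or case survive as "distinct" records.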

Exploratory Data Analysis and Interpretation

It is essential to understand the characteristics and trends in the data before building models. Visualization libraries such as Matplotlib and Seaborn plot both data and model outputs, helping developers understand the data, select features, and interpret models.
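As a minimal sketch of that workflow, the example below computes summary statistics and a correlation, then draws a histogram and a scatter plot with Matplotlib. The synthetic `feature`/`target` data is an assumption for illustration; Seaborn builds on Matplotlib and could replace the plotting calls.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend so this runs without a display
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Synthetic data: a target that depends linearly on one feature plus noise.
rng = np.random.default_rng(0)
df = pd.DataFrame({"feature": rng.normal(50, 10, 500)})
df["target"] = 2 * df["feature"] + rng.normal(0, 5, 500)

# Numeric view first: summary statistics and the feature/target correlation.
summary = df.describe()
corr = df.corr().loc["feature", "target"]
print(summary)
print(f"feature/target correlation: {corr:.2f}")

# Visual view: the feature's distribution and its relationship to the target.
fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))
ax1.hist(df["feature"], bins=30)
ax1.set(title="Feature distribution", xlabel="feature", ylabel="count")
ax2.scatter(df["feature"], df["target"], s=5)
ax2.set(title="Feature vs. target", xlabel="feature", ylabel="target")
fig.tight_layout()

buf = io.BytesIO()
fig.savefig(buf, format="png")  # write to memory instead of a file
plt.close(fig)
```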

Model Optimization and Evaluation

AI frameworks like scikit-learn, PyTorch, and TensorFlow offer a variety of algorithms for building models, along with techniques for cross-validation, hyperparameter optimization, and performance evaluation that help improve those models.
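Cross-validation and hyperparameter search in scikit-learn can be sketched as follows. The dataset (the bundled Iris data), the SVM model, and the parameter grid are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Baseline: 5-fold cross-validated accuracy with default hyperparameters.
pipe = make_pipeline(StandardScaler(), SVC())
baseline = cross_val_score(pipe, X, y, cv=5).mean()

# Grid search over C and gamma, each candidate scored with the same 5-fold CV.
# Step names in make_pipeline are lowercased class names, hence "svc__".
param_grid = {"svc__C": [0.1, 1, 10], "svc__gamma": ["scale", 0.1, 1]}
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X, y)

print(f"baseline CV accuracy: {baseline:.3f}")
print(f"best params: {search.best_params_}, best CV accuracy: {search.best_score_:.3f}")
```

Wrapping the scaler and model in one pipeline means scaling is refit inside each fold, which avoids leaking validation statistics into training.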

Model Deployment and Integration

Tools such as ONNX Runtime (for cross-platform deployment) and MLflow (for experiment tracking) help keep models working reliably in production, so developers can manage their projects from start to finish.

Intel’s Optimized AI Frameworks and Tools

Developers can keep using the data analytics, machine learning, and deep learning tools they already know, such as Modin, NumPy, scikit-learn, and PyTorch. Intel has optimized these AI tools and frameworks for each phase of the AI workflow, including data preparation, model training, inference, and deployment, building on oneAPI, a single, open, multiarchitecture, multivendor programming model.

Data Engineering and Model Development

To speed up end-to-end data science pipelines on Intel architecture, use Intel’s AI Tools, which include Python tools and frameworks such as Modin, Intel Optimization for TensorFlow, Intel Optimization for PyTorch, Intel Extension for Scikit-learn, and XGBoost.

Optimization and Deployment

Intel Neural Compressor speeds up deep learning inference and reduces model size for CPU or GPU deployment. The OpenVINO toolkit optimizes models and deploys them across several hardware platforms, including Intel CPUs.

These AI tools can help you get more performance out of your Intel hardware platforms.
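One of the main ways tools like Intel Neural Compressor shrink models is low-precision quantization. The NumPy sketch below shows the core idea, symmetric per-tensor int8 quantization of a weight matrix. It is a conceptual illustration only, not Neural Compressor's or OpenVINO's API, and the weight shape and distribution are assumptions.

```python
import numpy as np


def quantize_int8(w: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization: map float weights to int8 with one scale."""
    # Largest magnitude maps to 127; fall back to 1.0 if the tensor is all zeros.
    scale = float(np.abs(w).max()) / 127.0 or 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(0, 0.02, size=(256, 256)).astype(np.float32)
q, scale = quantize_int8(w)
err = np.abs(dequantize(q, scale) - w).max()

print(f"fp32 size: {w.nbytes} bytes, int8 size: {q.nbytes} bytes")  # 4x smaller
print(f"max abs error: {err:.6f} (rounding bound scale/2 = {scale / 2:.6f})")
```

Production toolkits add calibration data, per-channel scales, and accuracy-aware tuning on top of this basic mapping.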

Library of Resources

Discover a collection of high-quality, professionally created, and carefully selected resources centered on the core data science competencies developers need, with a focus on machine learning and deep learning AI frameworks.

What you will discover:

Use Modin to expedite the extract, transform, and load (ETL) process for enormous DataFrames and analyze massive datasets.

To improve speed on Intel hardware, use Intel’s optimized AI frameworks (such as Intel Optimization for XGBoost, Intel Extension for Scikit-learn, Intel Optimization for PyTorch, and Intel Optimization for TensorFlow).

Use Intel-optimized software on the most recent Intel platforms to implement and deploy AI workloads on Intel Tiber AI Cloud.
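The first item above, speeding up ETL with Modin, largely comes down to swapping the pandas import, since Modin exposes a pandas-compatible API. The minimal sketch below falls back to plain pandas when Modin is not installed; the `region`/`amount` columns and the transform itself are hypothetical.

```python
try:
    # Modin exposes a pandas-compatible API; swapping this import is the main change.
    import modin.pandas as pd
except ImportError:
    # Fall back to plain pandas so the sketch still runs where Modin is absent.
    import pandas as pd


def etl(df):
    """Toy transform step: filter invalid rows, derive a column, aggregate."""
    df = df[df["amount"] > 0]
    df = df.assign(amount_k=df["amount"] / 1000)
    return df.groupby("region", as_index=False)["amount_k"].sum()


data = pd.DataFrame({
    "region": ["north", "south", "north", "south"],
    "amount": [1000, 2500, -50, 500],
})
print(etl(data))
```

On a dataset this small Modin brings no benefit; its parallel execution engines pay off on large DataFrames, which is why the resources stress using Modin and pandas judiciously.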

How to Begin

Frameworks for Data Engineering and Machine Learning

Step 1: View the Modin, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost videos and read the introductory papers.

Modin: The video explains when to use Modin and how to combine Modin and pandas judiciously to achieve a quicker overall turnaround time. A quick start guide for Modin is also available for more in-depth information.

Intel Extension for Scikit-learn: This tutorial gives you an overview of the extension, walks you through the code step by step, and explains how using it can improve performance. A video on accelerating machine learning techniques such as PCA, K-means clustering, and silhouette analysis is also available.

Intel Optimization for XGBoost: This straightforward tutorial explains Intel Optimization for XGBoost and how to use Intel optimizations to enhance training and inference performance.
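The Intel Extension for Scikit-learn workflow mentioned above (PCA, K-means clustering, and a silhouette score) can be sketched as follows. `patch_sklearn()` from the `sklearnex` package swaps in optimized implementations; the sketch assumes the extension may not be installed and then runs stock scikit-learn. The blob dataset is synthetic.

```python
try:
    # Patch scikit-learn with Intel-optimized implementations where available.
    # patch_sklearn() must run before the estimators below are imported.
    from sklearnex import patch_sklearn
    patch_sklearn()
except ImportError:
    pass  # stock scikit-learn is used when the extension is not installed

from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.metrics import silhouette_score

X, _ = make_blobs(n_samples=600, centers=3, n_features=10, random_state=0)

# Reduce to 2 dimensions, cluster, then score how well separated the clusters are.
X2 = PCA(n_components=2, random_state=0).fit_transform(X)
labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X2)
score = silhouette_score(X2, labels)
print(f"silhouette score: {score:.3f}")
```

The code is identical with or without the extension, which is the point of the patching approach: existing scikit-learn scripts need only the two extra lines at the top.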

Step 2: Use Intel Tiber AI Cloud to create and develop machine learning workloads.

This tutorial runs machine learning workloads with Modin, scikit-learn, and XGBoost on Intel Tiber AI Cloud.

Step 3: Use Modin and scikit-learn to create an end-to-end machine learning process using census data.

Run an end-to-end machine learning task on 1970–2010 US census data with this code sample. It uses the Intel Distribution of Modin for exploratory data analysis and the Intel Extension for Scikit-learn for ridge regression.
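The shape of such an end-to-end task (explore, split, fit a ridge regression, evaluate) is sketched below. The census data is not reproduced here: the `education_years`, `age`, and `income` columns are a hypothetical synthetic stand-in, and plain pandas and scikit-learn are used in place of the Intel distributions.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import Ridge
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for census records: education and age drive income.
rng = np.random.default_rng(42)
n = 1000
df = pd.DataFrame({
    "education_years": rng.integers(8, 21, n),
    "age": rng.integers(18, 66, n),
})
df["income"] = 2000 * df["education_years"] + 300 * df["age"] + rng.normal(0, 5000, n)

# Quick exploratory check before modeling.
print(df.describe().loc[["mean", "std"]])

X = df[["education_years", "age"]]
y = df["income"]
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Ridge adds an L2 penalty (strength alpha) to ordinary least squares.
model = Ridge(alpha=1.0).fit(X_train, y_train)
r2 = r2_score(y_test, model.predict(X_test))
print(f"coefficients: {model.coef_.round(1)}, test R^2: {r2:.3f}")
```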

Deep Learning Frameworks

Step 4: Begin by watching the videos and reading the introduction papers for Intel’s PyTorch and TensorFlow optimizations.

Intel PyTorch Optimizations: Read the article to learn how to use the Intel Extension for PyTorch to accelerate your workloads for inference and training. Additionally, a brief video demonstrates how to use the extension to run PyTorch inference on an Intel Data Center GPU Flex Series.

Intel’s TensorFlow Optimizations: The article and video provide an overview of the Intel Extension for TensorFlow and demonstrate how to utilize it to accelerate your AI tasks.
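For the Intel Extension for PyTorch, the usual inference entry point is `ipex.optimize()` applied to a model in eval mode. The sketch below assumes a toy CPU model and falls back to stock PyTorch if the extension is not installed; GPU (XPU) usage is not shown.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Toy model standing in for a real network.
model = nn.Sequential(nn.Linear(32, 64), nn.ReLU(), nn.Linear(64, 10)).eval()

try:
    # Intel Extension for PyTorch: optimize() returns a model with
    # hardware-specific inference optimizations applied.
    import intel_extension_for_pytorch as ipex
    model = ipex.optimize(model)
except ImportError:
    pass  # run stock PyTorch if the extension is not installed

with torch.inference_mode():
    logits = model(torch.randn(8, 32))
print(logits.shape)  # torch.Size([8, 10])
```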

Step 5: Use TensorFlow and PyTorch for AI on the Intel Tiber AI Cloud.

This article shows how to use PyTorch and TensorFlow on Intel Tiber AI Cloud to build and run complex AI workloads.

Step 6: Speed up LSTM text generation with Intel Extension for TensorFlow.

The Intel Extension for TensorFlow can speed up LSTM model training for text generation.

Step 7: Use PyTorch and DialoGPT to create an interactive chat-generation model.

Discover how to use Hugging Face’s pretrained DialoGPT model to create an interactive chat model and how to use the Intel Extension for PyTorch to dynamically quantize the model.
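Dynamic quantization itself can be sketched with stock PyTorch's `quantize_dynamic`. To keep the example self-contained, a small toy network stands in for DialoGPT (nothing is downloaded from Hugging Face), and the Intel Extension is not used here.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy stand-in for a chat model's dense layers; DialoGPT is not loaded here.
model = nn.Sequential(nn.Linear(64, 128), nn.ReLU(), nn.Linear(128, 16)).eval()

# Dynamic quantization: Linear weights are stored as int8 and activations are
# quantized on the fly at inference time. The original model is left untouched.
qmodel = torch.ao.quantization.quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)

x = torch.randn(4, 64)
with torch.no_grad():
    diff = (model(x) - qmodel(x)).abs().max().item()
print(qmodel)
print(f"max output difference after quantization: {diff:.4f}")
```

Dynamic quantization needs no calibration dataset, which makes it a common first step for transformer-style models dominated by Linear layers.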

Read more on Govindhtech.com

#AI#AIFrameworks#DataScientists#GenAI#PyTorch#GenAISurvival#TensorFlow#CPU#GPU#IntelTiberAICloud#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

LangChain - An Overview | Python GUI

LangChain is an open-source framework designed to simplify the development of language model-powered applications. It provides tools to build, manage, and integrate language models into workflows, allowing for seamless interaction with external data, APIs, and databases. Ideal for building chatbots, virtual assistants, and advanced NLP applications, LangChain enables developers to create intelligent, dynamic, and scalable solutions. For more information, visit PythonGUI.
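LangChain's expression language composes a prompt, a model, and an output parser with the `|` operator and runs the result with `.invoke()`. The dependency-free sketch below imitates that composition pattern to show how data flows through a chain. It is not LangChain's API, and the "model" is a hypothetical rule that returns canned text.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One stage of a pipeline: wraps a function and supports `|` composition."""
    fn: Callable

    def __or__(self, other: "Step") -> "Step":
        # Feed this step's output into the next step's input.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x):
        return self.fn(x)


# Hypothetical stand-ins: a prompt template, a fake "model", and an output parser.
prompt = Step(lambda d: f"Summarize in one word: {d['text']}")
fake_llm = Step(lambda p: "  Greeting.  " if "hello" in p.lower() else "  Unknown.  ")
parser = Step(lambda s: s.strip().rstrip(".").lower())

chain = prompt | fake_llm | parser
print(chain.invoke({"text": "Hello there, friend!"}))  # greeting
```

Because each stage only agrees on its input and output, the fake model can be swapped for a real LLM call without touching the prompt or parser.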

Mastering Autonomous AI Agents: Real-World Strategies, Frameworks, and Best Practices for Scalable Deployment

Introduction

The rapid evolution of artificial intelligence is ushering in a new era where autonomous AI agents are transforming business operations and software engineering. From automating complex workflows to enhancing customer experiences, these agents are redefining how organizations innovate and compete. As enterprises increasingly adopt agentic and generative AI, understanding the latest frameworks, deployment strategies, and software engineering best practices is essential for success. For professionals seeking to deepen their expertise, enrolling in an Agentic AI course in Mumbai or exploring Generative AI courses online in Mumbai can provide cutting-edge knowledge and practical skills. Choosing the best Agentic AI course with placement can support career advancement in this fast-growing field. This article provides a comprehensive guide for AI practitioners, software engineers, architects, and technology leaders seeking to scale autonomous AI agents in real-world environments. We explore the evolution of Agentic and Generative AI, highlight the most impactful frameworks and tools, and offer actionable insights for ensuring reliability, security, and compliance. Through real-world case studies and practical examples, we demonstrate how to navigate the challenges of deploying autonomous AI agents at scale.

The Evolution of Agentic and Generative AI in Software

Agentic AI refers to autonomous systems capable of planning, acting, and learning, often building on the capabilities of large language models (LLMs) to perform human-like tasks. This decade is marked by significant advancements in agentic architectures, with multi-agent systems enabling complex collaboration and automation across industries. Many professionals interested in mastering these technologies find value in an Agentic AI course in Mumbai, which covers these emerging trends comprehensively. Generative AI, on the other hand, focuses on creating new content, be it text, images, or code, using sophisticated neural networks. The integration of agentic and generative technologies is driving innovation in software engineering, automating repetitive tasks, improving decision-making, and personalizing user experiences. Those seeking flexible learning options often pursue Generative AI courses online in Mumbai to stay current with the latest tools and techniques.
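The planning-and-acting cycle that defines an agent can be sketched without any framework. In this minimal example, `scripted_policy` is a hypothetical stand-in for an LLM planner, and the inventory tools and SKUs are invented for illustration; it covers planning and acting only, not learning. Frameworks such as LangGraph or AutoGen provide richer abstractions for the same loop.

```python
from typing import Callable

# Hypothetical tools the agent may call; a real agent would wrap APIs or databases.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_stock": lambda sku: {"A1": "12", "B2": "0"}.get(sku, "unknown"),
    "reorder": lambda sku: f"reorder placed for {sku}",
}


def scripted_policy(goal: str, history: list[tuple[str, str, str]]) -> tuple[str, str]:
    """Stand-in for an LLM planner: returns (tool, argument) or ("finish", answer)."""
    sku = goal.split()[-1]
    if not history:
        return "lookup_stock", sku
    last_tool, _, last_obs = history[-1]
    if last_tool == "lookup_stock" and last_obs == "0":
        return "reorder", sku
    return "finish", last_obs


def run_agent(goal: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str, str]] = []
    for _ in range(max_steps):  # step cap guards against runaway loops
        action, arg = scripted_policy(goal, history)  # plan
        if action == "finish":
            return arg
        observation = TOOLS[action](arg)               # act
        history.append((action, arg, observation))     # observe and remember
    return "stopped: step limit reached"


print(run_agent("check stock for B2"))  # reorder placed for B2
print(run_agent("check stock for A1"))  # 12
```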

Recent Developments

Multi-Agent Systems: These systems leverage multiple specialized agents working together to achieve complex objectives. For example, in supply chain management, multi-agent systems optimize logistics, predict disruptions, and coordinate responses in real time. Understanding multi-agent collaboration is a key component of many of the best Agentic AI courses with placement.

Generative AI Applications: Generative models automate code generation, create synthetic data for machine learning, and personalize customer interactions. Tools like GitHub Copilot and Amazon Q Developer Agent exemplify how generative AI is revolutionizing software development and support.

Shift to Agentic AI: The industry is moving from generative to agentic AI, emphasizing autonomous decision-making and workflow automation. This shift is reflected in the growing adoption of frameworks like LangGraph, AutoGen, and LangChain, which enable developers to build and orchestrate intelligent agents at scale.

Frameworks, Tools, and Deployment Strategies

To scale autonomous AI agents effectively, organizations must select the right frameworks and tools, align them with business objectives, and integrate them into existing workflows. Professionals often complement their theoretical knowledge by enrolling in an Agentic AI course in Mumbai or Generative AI courses online in Mumbai to gain hands-on experience with these frameworks.

LLM Orchestration and Integration

Large Language Models (LLMs) are the backbone of many AI agents, providing natural language understanding and generation capabilities. Orchestration platforms such as LangChain and Dify enable seamless integration of LLMs into business processes, supporting use cases like customer service automation and data analysis.

Autonomous Agents in Practice

Autonomous agents are increasingly deployed in customer service, software development, and cybersecurity. For example, Amazon’s Q Developer Agent autonomously writes, tests, and submits code, significantly reducing development time and errors. In customer service, AI agents powered by platforms like IBM Watson Assistant or Google Dialogflow handle millions of queries, providing instant support and reducing operational costs. Learning how to implement such solutions is a highlight of many of the best Agentic AI courses with placement.

MLOps for Generative Models

MLOps (Machine Learning Operations) is critical for managing the lifecycle of generative models. It encompasses model development, deployment, monitoring, and maintenance, ensuring consistent performance and reliability. Tools like Kubeflow and MLflow streamline these processes, enabling organizations to scale their AI initiatives effectively.

Advanced Tactics for Scalable, Reliable AI Systems

Scaling AI systems requires robust technical infrastructure and disciplined engineering practices, topics well-covered in an Agentic AI course in Mumbai or Generative AI courses online in Mumbai to prepare practitioners for real-world challenges.

Multi-Agent Ecosystems and Interoperability

Implementing multi-agent ecosystems allows organizations to break down silos and enable specialized agents to collaborate across complex workflows. This approach requires investment in interoperability standards and orchestration platforms to manage multiple autonomous systems efficiently. The ability to design interoperable systems is a core skill taught in the best Agentic AI courses with placement.

Industry-Specific Specialization

As AI matures, there is growing demand for industry-specific solutions tailored to unique business challenges and regulatory requirements. For example, healthcare organizations require AI agents compliant with HIPAA, while financial institutions need solutions adhering to GDPR and CCPA. Courses focusing on Agentic AI often include modules on customizing solutions for various sectors, making an Agentic AI course in Mumbai a valuable choice for professionals targeting these industries.

Technical Infrastructure for Agentic AI

Core Technology Stack: LLMs, vector databases, and API integration layers form the foundation of agentic AI systems.

Scalability Considerations: Microservices architecture, load balancing, and fault tolerance are essential for handling increasing workloads and ensuring system reliability.

Security Frameworks: Comprehensive security measures, including data encryption, access controls, and monitoring, protect agent operations and sensitive data.
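The vector database in that stack answers one core question: which stored embeddings are closest to a query embedding? The brute-force sketch below ranks documents by cosine similarity. The 3-dimensional "embeddings" and document IDs are hand-made assumptions; real systems get vectors from an embedding model and use approximate nearest-neighbor indexes to scale.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Toy vector index: stores (id, embedding) pairs and ranks by cosine similarity."""

    def __init__(self) -> None:
        self.items: list[tuple[str, list[float]]] = []

    def add(self, doc_id: str, embedding: list[float]) -> None:
        self.items.append((doc_id, embedding))

    def search(self, query: list[float], k: int = 2) -> list[tuple[str, float]]:
        # Exact (brute-force) search: score every stored vector, keep the top k.
        scored = [(doc_id, cosine(query, emb)) for doc_id, emb in self.items]
        return sorted(scored, key=lambda t: t[1], reverse=True)[:k]


store = InMemoryVectorStore()
store.add("refund-policy", [0.9, 0.1, 0.0])
store.add("shipping-times", [0.1, 0.9, 0.1])
store.add("account-security", [0.0, 0.2, 0.9])
print(store.search([0.8, 0.2, 0.0], k=2))
```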

Governance, Risk Management, and Ethical Considerations

With AI agents taking on critical business functions, robust governance frameworks are essential to prevent misuse and ensure accountability. These topics are integral to the curricula of many of the best Agentic AI courses with placement, preparing learners for ethical AI deployment.

Governance and Risk Management

Gartner predicts that by 2028, 25% of enterprise breaches will be traced back to AI agent misuse. Organizations must implement sophisticated risk management strategies, including regular audits, anomaly detection, and incident response plans.
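Anomaly detection on agent behavior can start as simply as comparing new activity against a baseline. The sketch below flags an hourly count of an agent's outbound calls when its z-score against recent normal hours exceeds a threshold. The counts, the 3.0 threshold, and the choice of metric are illustrative assumptions; production systems typically monitor many signals and use more robust statistics.

```python
import statistics


def is_anomalous(baseline: list[float], value: float, threshold: float = 3.0) -> bool:
    """Flag `value` if it lies more than `threshold` standard deviations from the baseline mean."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation
    return stdev > 0 and abs(value - mean) / stdev > threshold


# Hypothetical hourly counts of outbound API calls made by one agent.
baseline_hours = [40, 42, 38, 41, 39, 43, 40, 41, 39]
print(is_anomalous(baseline_hours, 44))   # False: within normal variation
print(is_anomalous(baseline_hours, 250))  # True: possible misuse, raise an alert
```

Computing the baseline from past "normal" hours, rather than from a window that includes the spike, keeps one large outlier from inflating the standard deviation and masking itself.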

Ethics and Compliance

Ensuring AI systems comply with regulatory requirements and ethical standards is vital. This includes implementing data privacy measures, avoiding bias in decision-making, and maintaining transparency. Human oversight frameworks are critical for maintaining trust and accountability as AI agents become more autonomous.
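A human oversight framework often reduces to a gate in code: low-risk actions run automatically, while higher-risk ones wait for a person. In this minimal sketch, the `Action` fields, the 100-unit limit, and the reviewer function are hypothetical; in practice the reviewer would be a review queue or UI, and every decision would be logged for audit.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    amount: float  # e.g. refund value in currency units (hypothetical field)


def execute_with_oversight(action: Action, approve: Callable[[Action], bool],
                           limit: float = 100.0) -> str:
    """Auto-run low-risk actions; route anything above `limit` to a human reviewer."""
    if action.amount <= limit:
        return f"auto-executed {action.name}"
    if approve(action):
        return f"executed {action.name} after human approval"
    return f"blocked {action.name}: approval denied"


def reviewer(action: Action) -> bool:
    """Stand-in for a human decision; here it approves anything under 1000."""
    return action.amount < 1000


for amount in (40, 500, 5000):
    print(execute_with_oversight(Action("refund", amount), reviewer))
```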

Software Engineering Best Practices

Software engineering best practices are the cornerstone of reliable, secure, and compliant AI systems. These practices are emphasized in Agentic AI courses in Mumbai and Generative AI courses online in Mumbai, helping professionals ensure high-quality deployments.

MLOps and DevOps Integration

Integrating MLOps and DevOps practices streamlines model development, deployment, and monitoring. Version control, CI/CD pipelines, and automated testing ensure consistent performance and rapid iteration.

Testing and Validation

Rigorous testing and validation are essential for ensuring AI systems operate as intended. This includes unit testing, integration testing, and simulation-based validation to identify and address issues before deployment.

Change Management and User Training

Successful AI adoption requires comprehensive user training and change management. Organizations must educate teams on agent capabilities and limitations, fostering a culture of continuous learning and adaptation. These principles are core components of the best Agentic AI courses with placement.

Cross-Functional Collaboration for AI Success

Cross-functional collaboration is essential for aligning AI solutions with business needs and ensuring technical excellence. This collaborative approach is highlighted in many Agentic AI courses in Mumbai and Generative AI courses online in Mumbai.

Data Scientists and Engineers

Close collaboration between data scientists and software engineers ensures that AI models are both accurate and scalable. Data scientists focus on model development, while engineers handle deployment, integration, and performance optimization.

Business Stakeholders

Involving business stakeholders throughout the AI development process ensures that solutions address real-world challenges and deliver measurable value. Regular feedback loops and iterative development drive continuous improvement.

Measuring Success: Analytics and Monitoring

Measuring the impact of AI deployments requires robust analytics and monitoring frameworks, a topic covered extensively in advanced AI courses.

Real-Time Insights and Continuous Monitoring

Implementing real-time analytics tools provides immediate visibility into system performance, enabling swift adjustments and proactive issue resolution. Continuous monitoring ensures timely detection of data drift, model degradation, and security threats.
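Data drift is often tracked with the Population Stability Index (PSI), which compares how a feature's live values fall into bins defined on a reference sample. The sketch below is one common formulation using equal-width bins. The commonly quoted rule of thumb (below 0.1 little shift, above 0.25 a significant shift) is an industry convention rather than a standard, and the sample data is synthetic.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` against the reference sample `expected`."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the reference range; out-of-range values
            # are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(1000)]  # e.g. a feature's values at training time
live_ok = reference[::2]                    # same distribution, fewer samples
live_shifted = [v + 3 for v in reference]   # the whole distribution moved up
print(f"PSI stable:  {psi(reference, live_ok):.4f}")
print(f"PSI shifted: {psi(reference, live_shifted):.4f}")
```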

Key Performance Indicators (KPIs)

Tracking KPIs such as model accuracy, decision-making outcomes, and business impact is essential for evaluating success and guiding future investments.

Case Study: Klarna’s LangChain-Powered Assistant

Klarna, a leading fintech company, successfully deployed an AI-powered customer service assistant using LangChain. This assistant handles queries from over 85 million users, resolving issues 80% faster than traditional methods.

Technical Challenges

Integration Complexity: Integrating the AI assistant with existing systems required careful planning to ensure seamless data exchange and minimal disruption to customer service operations.

Data Privacy: Ensuring compliance with stringent data privacy regulations was a significant challenge, requiring robust data protection measures and ongoing monitoring.

Business Outcomes

Efficiency Gains: The AI assistant significantly reduced response times, improving customer satisfaction and reducing the workload on human agents.

Scalability: The system’s ability to handle a large volume of queries made it an essential tool for scaling Klarna’s customer support capabilities.

Actionable Tips and Lessons Learned

Develop a Clear AI Strategy

Define Ethical Principles: Establish a clear set of values and principles to guide AI development and deployment.

Align with Business Objectives: Ensure that AI initiatives are closely aligned with organizational goals to maximize return on investment. Professionals preparing for this often choose an Agentic AI course in Mumbai for strategic insights.

Invest in Multi-Agent Systems and Interoperability

Explore Multi-Agent Architectures: Leverage specialized agents to automate complex workflows and improve decision-making.

Focus on Interoperability: Invest in standards and platforms that enable seamless collaboration between agents and existing systems.

Emphasize Software Engineering Best Practices

Implement Rigorous Testing: Use simulation environments and iterative testing to validate system performance and identify issues early.

Adopt MLOps and DevOps: Streamline model development, deployment, and monitoring to ensure reliability and scalability.

Foster Cross-Functional Collaboration

Bring Together Diverse Expertise: Encourage collaboration between data scientists, engineers, and business stakeholders to align AI solutions with real-world needs.

Support Continuous Learning: Provide ongoing training and support to help teams adapt to new technologies and workflows. Several of the best Agentic AI courses with placement emphasize this.

Prioritize Governance, Ethics, and Compliance

Implement Robust Governance Frameworks: Establish clear policies and procedures for AI oversight, risk management, and incident response.

Ensure Regulatory Compliance: Stay abreast of evolving regulations and implement measures to protect data privacy and prevent bias.

Conclusion

Scaling autonomous AI agents is a complex but rewarding endeavor that requires a combination of technical expertise, strategic planning, and cross-functional collaboration. By leveraging the latest frameworks, tools, and best practices, organizations can unlock significant value from AI, transforming their operations and customer experiences. As the industry continues to evolve, those who embrace agentic and generative AI, while maintaining a strong focus on ethics, compliance, and engineering excellence, will be best positioned to thrive in the new era of AI-driven transformation. For professionals aiming to advance their careers in this domain, enrolling in an Agentic AI course in Mumbai, exploring Generative AI courses online in Mumbai, or selecting the best Agentic AI course with placement provides a solid foundation and practical advantage.

LangChain Development Company

This image represents the cutting-edge capabilities of a LangChain development company: experts in building powerful, LLM-driven applications that connect language models with tools, data sources, and APIs. LangChain enables intelligent agents, RAG pipelines, and dynamic workflows tailored for enterprise use. Ideal for businesses seeking custom AI solutions that think, reason, and act.

#LangChain#LangChainDevelopment#AIAgents#LLMApps#RAGArchitecture#AIIntegration#AIDevelopmentCompany#ConversationalAI

How an LLM Development Company Can Help You Build Smarter AI Solutions

In the fast-paced world of artificial intelligence (AI), Large Language Models (LLMs) are among the most transformative technologies available today. From automating customer interactions to streamlining business operations, LLMs are reshaping how organizations approach digital transformation.

However, developing and implementing LLM-based solutions isn’t a simple task. It requires specialized skills, robust infrastructure, and deep expertise in machine learning and natural language processing (NLP). This is why many businesses are partnering with an experienced LLM development company to unlock the full potential of AI-powered solutions.

What Does an LLM Development Company Do?

An LLM development company specializes in designing, fine-tuning, and deploying LLMs for various applications. These companies help businesses leverage advanced language models to solve complex problems and automate language-driven tasks.

Common Services Offered:

Custom Model Development: Creating tailored LLMs for specific business use cases.

Model Fine-Tuning: Adapting existing LLMs to specific industries and datasets for improved accuracy.

Data Preparation: Cleaning and structuring data for effective model training.

Deployment & Integration: Seamlessly embedding LLMs into enterprise platforms, mobile apps, or cloud environments.

Ongoing Model Maintenance: Monitoring, retraining, and optimizing models to ensure long-term performance.

Consulting & Strategy: Offering guidance on best practices for ethical AI use, compliance, and security.

Why Your Business Needs an LLM Development Company

1. Specialized AI Expertise

LLM development involves deep learning, NLP, and advanced AI techniques. A specialized LLM development company brings together data scientists, engineers, and NLP experts with the knowledge required to build powerful models.

2. Accelerated Development Timelines

Instead of spending months building models from scratch, an LLM development company can fine-tune existing pre-trained models—speeding up the time to deployment while reducing costs.

3. Industry-Specific Solutions

LLMs can be adapted to specific industries, such as healthcare, finance, legal, and retail. By partnering with an LLM development company, you can customize models to understand industry-specific terminology and workflows.

4. Cost-Effective AI Adoption

Building LLMs in-house requires expensive infrastructure and expert teams. Working with an LLM development company can be a cost-effective alternative, providing high-quality solutions without the long-term cost of maintaining that infrastructure and team yourself.

5. Scalable and Future-Ready Solutions

LLM development companies design solutions with scalability in mind, ensuring your AI tools can handle growing datasets and business needs over time.

Key Technologies Used in LLM Development

LLM development companies use a combination of technologies and frameworks to build advanced AI solutions:

Python: Widely used for AI and NLP programming.

PyTorch & TensorFlow: Leading deep learning frameworks for model training and optimization.

Hugging Face Transformers: A popular library for working with pre-trained LLMs.

LangChain: Framework for building advanced LLM-powered applications with chaining logic.

DeepSpeed & LoRA (Low-Rank Adaptation): For optimizing LLMs for better efficiency and performance.

Cloud Platforms (AWS, Azure, GCP): For scalable cloud-based training and deployment.
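LoRA's efficiency comes from freezing the pre-trained weight matrix and training only a low-rank update. For a layer with weight W of shape d×k, LoRA learns B (d×r) and A (r×k) with r much smaller than d and k, and computes the output as if the weight were W + (alpha/r)·BA. The NumPy sketch below shows the forward pass and the parameter savings; the layer size, rank, and alpha are illustrative assumptions, and DeepSpeed is not covered.

```python
import numpy as np

d, k, r, alpha = 512, 512, 8, 16  # layer shape, LoRA rank, scaling numerator

rng = np.random.default_rng(0)
W = rng.normal(0, 0.02, size=(d, k))  # frozen pre-trained weight
A = rng.normal(0, 0.01, size=(r, k))  # trainable, small random init
B = np.zeros((d, r))                  # trainable, zero init so the update starts at 0


def lora_forward(x: np.ndarray) -> np.ndarray:
    """y = x W^T + (alpha / r) * x A^T B^T; only A and B would be trained."""
    return x @ W.T + (alpha / r) * (x @ A.T) @ B.T


x = rng.normal(size=(4, k))
assert np.allclose(lora_forward(x), x @ W.T)  # zero-initialized B: no change at start

full, lora = d * k, r * (d + k)
print(f"full fine-tune params: {full:,}  LoRA params: {lora:,}  ({lora / full:.2%})")
```

Only r·(d + k) values per adapted layer are trained, so optimizer state and checkpoints shrink accordingly, and the update BA can be merged into W after training.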

Industries Leveraging LLM Development Companies

LLM-powered applications are now used across various industries to drive automation and boost productivity:

Healthcare

Automating clinical documentation.

Summarizing medical research.

Assisting with diagnostics.

Finance

Fraud detection and prevention.

Investment analysis and forecasting.

AI-driven customer service solutions.

E-commerce

Personalized product recommendations.

Automated content and product descriptions.

AI-powered virtual shopping assistants.

Legal

Automated contract analysis.

Legal document summarization.

Research assistance tools.

Education

Personalized learning platforms.

AI-powered tutoring tools.

Grading and assessment automation.

How to Choose the Right LLM Development Company

Choosing the right partner is critical for the success of your AI projects. Here are key criteria to consider:

Experience & Track Record: Review their past projects and case studies.

Technical Capabilities: Ensure the company has deep expertise in NLP, machine learning, and LLM fine-tuning.

Customization Flexibility: Look for companies that can tailor models for your specific needs.

Security & Compliance: Check for adherence to data privacy and regulatory standards.

Support & Scalability: Select a partner that offers ongoing maintenance and scalable solutions.

Future of LLM Development Companies

LLM development companies are poised to play an even bigger role as AI adoption accelerates worldwide. Emerging trends include:

Multi-Modal AI Models: Models that can process text, images, audio, and video together.

Edge AI Solutions: Compact LLMs running on local devices, reducing latency and enhancing privacy.

Autonomous AI Agents: LLMs capable of planning, reasoning, and acting independently.

Responsible AI & Transparency: Increased focus on ethical AI, bias reduction, and explainability.

Conclusion

The power of Large Language Models is transforming industries at an unprecedented pace. However, successfully implementing LLM-powered solutions requires specialized skills, advanced tools, and deep domain knowledge.

By partnering with an experienced LLM development company, businesses can rapidly develop AI tools that automate workflows, enhance customer engagement, and unlock new revenue opportunities. Whether your goals involve customer service automation, content generation, or intelligent data analysis, an LLM development company can help bring your AI vision to life.

Advanced Generative AI Training Programs in Bengaluru for Professionals

As artificial intelligence continues to shape the digital world, Generative AI has emerged as a transformational force—impacting industries from content creation to healthcare, finance, automation, and software development. For working professionals in India’s tech capital, enrolling in an advanced Generative AI training program in Bengaluru is not just an upgrade—it's a competitive necessity.

This article explores how Bengaluru is leading the AI training revolution, the benefits of pursuing advanced generative AI programs, and how institutes like the Boston Institute of Analytics (BIA) are empowering professionals with industry-ready skills.

What is Generative AI, and Why Does It Matter for Professionals?

Generative AI refers to systems that can generate new content—text, images, audio, code, or video—based on patterns learned from data. These systems rely on advanced deep learning architectures like:

Transformers

Large Language Models (LLMs) like GPT and LLaMA, and related transformer models such as BERT

GANs (Generative Adversarial Networks)

Diffusion Models

These technologies are reshaping job roles and workflows, especially in:

Software Development (AI-assisted coding)

Digital Marketing (automated content creation)

Design & Animation (AI-generated visuals)

Customer Support (intelligent chatbots and virtual agents)

Product Development (prototyping with generative tools)

For professionals, mastering these tools means future-proofing careers and leading the innovation curve.

Why Choose Bengaluru for Generative AI Training?

Bengaluru, often called the Silicon Valley of India, offers a thriving ecosystem for AI professionals:

Home to major tech companies: Google, Microsoft, IBM, Amazon, and thousands of startups

Strong academic and industry collaboration

AI conferences, meetups, and networking events

Talent-rich environment for collaborative learning

Access to some of the best generative AI training institutes in India

Whether you're a software engineer, data scientist, marketing professional, or IT manager—Generative AI training in Bengaluru offers unmatched access to opportunities and innovation.

Who Should Enroll in Advanced Generative AI Training?

Advanced training programs are ideally suited for:

Software Engineers who want to build AI-powered apps or integrate LLMs

Data Scientists looking to move beyond predictive models into generative domains

AI/ML Engineers upgrading from traditional ML to LLMs and GANs

Digital Creatives who want to automate content, design, or video generation

Product Managers and Analysts who want to understand and lead AI-driven product innovation

If you have a basic understanding of Python, machine learning, and deep learning, you're ready for an advanced Generative AI course.

Key Components of Advanced Generative AI Training

A good advanced training program should go beyond theory and provide hands-on mastery. Look for training modules that cover:

1. Foundations Refresher

Basics of deep learning and neural networks

Understanding tokenization and embeddings

Introduction to generative models

2. Transformer Architectures

Attention mechanism and positional encoding

Architecture of GPT, BERT, and T5 models

Fine-tuning transformer models

3. Large Language Models (LLMs)

Working with OpenAI’s GPT models

LangChain and Retrieval-Augmented Generation (RAG)

Prompt engineering techniques

4. GANs and Image Generation

How GANs work (generator vs discriminator)

Training and fine-tuning GANs

Image generation with diffusion models such as Stable Diffusion and DALL·E

5. Multimodal AI

Building models that combine text, images, and audio

Use cases like text-to-video, speech synthesis, etc.

6. Project-Based Learning

Build real-world tools like:

Chatbots

AI design generators

Code assistants

AI copywriters

7. Ethical AI and Safety

Bias detection and mitigation

Responsible deployment practices

AI governance in enterprise environments
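The Retrieval-Augmented Generation (RAG) item in module 3 boils down to two steps: find the documents most relevant to a question, then place them in the prompt sent to the LLM. The dependency-free sketch below shows that flow. It assumes a simple bag-of-words cosine similarity in place of the learned embeddings and vector store a real LangChain RAG pipeline would use, and it stops at building the prompt rather than calling any model.

```python
import math
import re
from collections import Counter
from typing import List

def tokenize(text: str) -> List[str]:
    """Lowercase and split into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents that share at least one word with the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]

def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it is sent to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"

docs = [
    "GANs pair a generator with a discriminator that judges its outputs.",
    "Diffusion models generate images by gradually removing noise.",
    "Prompt engineering shapes the instructions given to a model.",
]
print(build_prompt("How does a GAN discriminator work?", docs, k=1))
```

Swapping the toy similarity for real embeddings changes the quality of retrieval, not the shape of the pipeline.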

Best Advanced Generative AI Training in Bengaluru: Boston Institute of Analytics (BIA)

If you're looking for a program that is comprehensive, hands-on, and industry-aligned, the Boston Institute of Analytics in Bengaluru stands out.

🔍 Why Choose BIA?

Expert Faculty: Trainers from Google, Amazon, and leading AI labs

Industry Projects: Real-world assignments and capstone projects

LLM Integration: Work with OpenAI, Hugging Face, and LangChain

Flexible Learning: Offline and hybrid formats for working professionals

Placement Assistance: Resume support, mock interviews, and hiring partner access

Globally Recognized Certification: Boosts your visibility with recruiters worldwide

BIA’s curriculum focuses on practical, tool-based learning, ensuring you not only understand the theory but can apply it to build scalable solutions.

Career Outcomes After Advanced Generative AI Training

After completing an advanced program, professionals can explore high-demand roles such as:

Generative AI Engineer

LLM Application Developer

AI Solutions Architect

Prompt Engineer

AI Product Manager

AI Research Associate

Salaries for these roles in Bengaluru range from ₹12 LPA to ₹35+ LPA, depending on your experience and portfolio.

Final Thoughts

Bengaluru continues to lead the way in India’s AI transformation, and Generative AI is at the heart of this evolution. Whether you’re working in software development, data science, product, or digital marketing—understanding generative AI gives you the edge to innovate, lead, and scale.

If you’re serious about mastering the tools of tomorrow, don’t settle for introductory courses. Opt for advanced Generative AI training in Bengaluru, especially programs like the one offered by the Boston Institute of Analytics, where learning is practical, future-focused, and career-oriented.

7 Steps to Start Your LangChain Development Coding Journey

In the fast-paced world of AI and machine learning, LangChain development has emerged as a groundbreaking approach for building intelligent, context-aware applications. Whether you’re an AI enthusiast, a developer stepping into the world of Large Language Models (LLMs), or a business looking to enhance user experiences through automation, LangChain provides a powerful toolkit. This blog explores the seven essential steps to begin your LangChain development journey, from understanding the basics to deploying your first AI-powered application.

What Is LangChain Development? A Beginner’s Guide

LangChain development focuses on building applications powered by large language models (LLMs) that can reason, plan, and interact with tools and data sources. It provides a framework to create intelligent agents capable of chaining LLM calls, accessing APIs and databases, and executing complex tasks. Ideal for developers building smart assistants, RAG (retrieval-augmented generation) systems, or agentic workflows, LangChain enables dynamic, real-world AI applications.
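The core idea of "chaining LLM calls" is function composition: each step's output becomes the next step's input. The sketch below shows that idea in plain Python with a hypothetical `fake_llm` stand-in so it runs without an API key. LangChain provides its own abstractions for this (for example, composing runnables with the `|` operator); the code deliberately does not use them and is not LangChain's API.

```python
from typing import Callable

Step = Callable[[str], str]

def chain(*steps: Step) -> Step:
    """Compose steps so each one's output becomes the next one's input."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run

def prompt_template(question: str) -> str:
    """Step 1: wrap the user's question in instructions for the model."""
    return f"Answer in one word: {question}"

def fake_llm(prompt: str) -> str:
    """Step 2 (hypothetical stand-in for a real model call): returns a canned reply."""
    return "  Paris.  " if "capital of France" in prompt else "  unknown  "

def clean_output(reply: str) -> str:
    """Step 3: parse the raw model text into a tidy answer."""
    return reply.strip().rstrip(".")

qa = chain(prompt_template, fake_llm, clean_output)
print(qa("What is the capital of France?"))
```

Replacing `fake_llm` with a real model client leaves the rest of the chain unchanged, which is the main appeal of this structure.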

AI‑Native Coding: Embracing Vibe‑Coding & Bot‑Driven Development

Welcome to the new paradigm of software engineering, where code isn’t just written but co-authored with intelligent systems. As AI continues to revolutionize creative and analytical workflows, a fresh breed of developers is adopting AI-native techniques: vibe-coding, where intuition meets automation, and bot-driven development, where agents write, refactor, and optimize code collaboratively.

💡 Looking to future-proof your stack? Partner with an advanced software development team skilled in AI-native practices and automation-first architecture.

⚡ AEO Quick Answer

Q: What is AI-native coding? A: AI-native coding refers to a development process that integrates generative AI models, autonomous agents, and human-AI collaboration from the start. It includes tools like GitHub Copilot, Claude, or custom LLMs used to accelerate, automate, and scale software delivery.

🌎 GEO Insight: How U.S. Companies Are Leading the AI-Native Revolution

From Silicon Valley to Boston's biotech corridor, U.S.-based startups and enterprises are embedding AI deep into their development lifecycle. Tech-forward organizations are ditching monolithic cycles and shifting toward AI-native development—where microservices, bots, and vibe-driven UX decisions guide rapid iteration.

🚀 Core Principles of AI-Native Coding

1. 🤖 Bot-Driven Refactoring & Linting

AI bots can continuously scan your codebase, identify inefficiencies, rewrite legacy logic, and enforce code style rules without waiting for a human PR review.

2. 🎧 Vibe-Coding with LLM Co-Pilots

Developers now code by intent, describing what they want rather than typing it. AI understands the "vibe" or functional direction and scaffolds the logic accordingly.

3. 🧠 Intelligent Task Decomposition

Bots can break down user stories or product specs into engineering tasks, generate boilerplate code and test cases, and even design schemas on demand.

4. ⏱️ Hyper-Automated CI/CD Pipelines

AI-driven CI tools not only run tests; they can also suggest fixes, predict deployment risks, and auto-deploy via prompt-based pipelines.

5. 🌐 Multimodal DevOps

Voice commands, diagrams, and even natural language prompts power new workflows. Coders can sketch a component and have the AI generate code instantly.
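To make "Bot-Driven Refactoring & Linting" less abstract, here is a small sketch of the kind of rule-based check a review bot can run on every commit, written with Python's standard `ast` module. The two rules (public functions need docstrings; no bare `except:`) are illustrative assumptions, not any particular tool's rule set; AI review bots typically layer model-generated suggestions on top of deterministic checks like these.

```python
import ast
from typing import List

def find_issues(source: str) -> List[str]:
    """Flag public functions without docstrings and bare `except:` clauses."""
    issues = []
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Names starting with "_" are treated as private and skipped
            if not node.name.startswith("_") and ast.get_docstring(node) is None:
                issues.append(f"line {node.lineno}: function '{node.name}' has no docstring")
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append(f"line {node.lineno}: bare 'except:' hides errors")
    return issues

sample = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''

for issue in find_issues(sample):
    print(issue)
```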

🧠 Real-World Example

A fintech firm in San Diego adopted a software development strategy centered around vibe-coding and CopilotX. Over 60% of their frontend code and 40% of backend infrastructure was co-authored by AI. This led to a 3x speed-up in sprint cycles and a 24% drop in production bugs within two months.

🛠️ AI-Native Tool Stack in 2025

GitHub Copilot & Copilot Workspace – AI pair programming & team suggestions

Code Interpreter & OpenAI GPT-4o – complex logic generation, test coverage

Replit Ghostwriter – collaborative cloud IDE with agent-driven refactoring

AutoDev & Devika – autonomous task-executing code agents

Amazon CodeWhisperer (now part of Amazon Q Developer) – AWS-optimized model for infrastructure as code

LlamaIndex + LangChain – backend for AI-native coding agents

💡 Who Should Embrace AI-Native Development?

Startups that need to scale quickly without ballooning engineering teams

Enterprises modernizing legacy codebases using agent refactors

SaaS teams looking to reduce technical debt with autonomous linting

DevOps & Platform engineers optimizing pipelines via prompt automation

Agencies delivering rapid MVPs or prototyping using vibe-driven flows

❓ FAQs: AI‑Native Coding & Vibe-Based Development

Q: Is AI-native coding secure? A: It can be, with proper guardrails. Developers must validate AI-generated code; with human review and solid test coverage, AI-native workflows can run safely in production.

Q: Will developers lose jobs to AI? A: Not likely. Developers who master AI tools will be in even higher demand. Think of it as Iron Man with J.A.R.V.I.S.—not a replacement, but a powerful co-pilot.

Q: How do vibe-coding and bot-driven dev differ from low-code? A: Low-code uses drag-and-drop logic blocks. AI-native development generates real, customizable code that fits professional-grade systems—much more scalable.

Q: Can AI-native coding be used for enterprise software? A: Absolutely. With audit trails, model tuning, and custom agents, even regulated industries are moving toward hybrid AI development.

Q: What skills are needed to get started? A: Strong fundamentals in code and architecture—plus comfort with prompt engineering, LLM APIs, and interpreting AI-generated outputs.

📬 Final Thoughts: Code Smarter, Not Harder

AI-native development isn't science fiction—it’s today’s competitive edge. From vibe-coding that mirrors intuition to autonomous bots managing repositories, software engineering is entering its most creative and scalable era yet.

🎯 Want to build with intelligence from the ground up? Partner with a forward-thinking software development team blending AI precision with engineering experience.

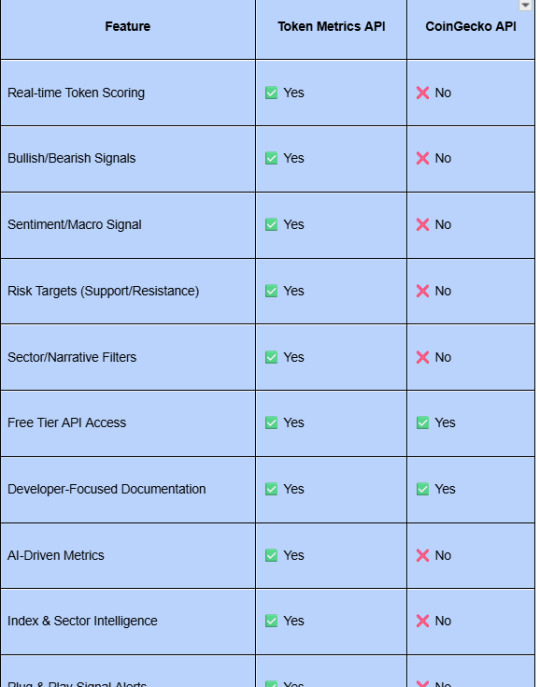

Why Developers Are Choosing Token Metrics Over CoinGecko in 2025

For years, CoinGecko has been the go-to API for developers building crypto applications. It’s reliable, well-documented, and provides basic market data like prices, market caps, and volumes. But in 2025, developers are no longer just pulling token prices—they’re building AI agents, quant dashboards, signal bots, and intelligent crypto apps.

That’s why developers are switching to Token Metrics API—a data feed that goes far beyond price tracking. With real-time scoring, trading signals, sentiment, and sector intelligence, it’s purpose-built for next-gen crypto tools.

This article compares Token Metrics API and CoinGecko API across 10 features and explains why developers are increasingly choosing intelligence over raw data.

Token Metrics API vs CoinGecko: Feature Comparison

The difference is clear: CoinGecko gives you token prices. Token Metrics gives you token intelligence.

🧑‍💻 Why This Matters to Developers

⚙️ Developers Want To Build Fast

Instead of spending weeks building your own quant models, the Token Metrics API gives you:

AI Trader/Investor Grades

Bullish/Bearish signals

Support/resistance levels

Narrative tracking

Sentiment heatmaps

You can go from zero to prototype in hours—not months.

📈 They Want Predictive Data, Not Just Descriptive

CoinGecko tells you where the market is. Token Metrics tells you where it’s headed.

Predictive signals help you:

Launch profitable bots

Build alpha dashboards

Track market momentum shifts

Create agent tools that provide real-time trading advice

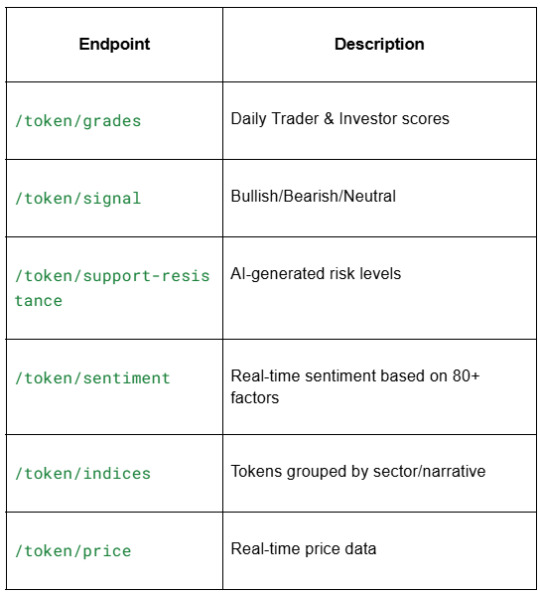

🔗 They Want AI-Ready Endpoints

With LLMs and RAG systems on the rise, developers need APIs that speak in structured, intelligent formats.

Token Metrics endpoints like /grades, /signal, and /support-resistance are:

Easy to query

LLM-consumable

Perfect for assistant bots and AI copilots

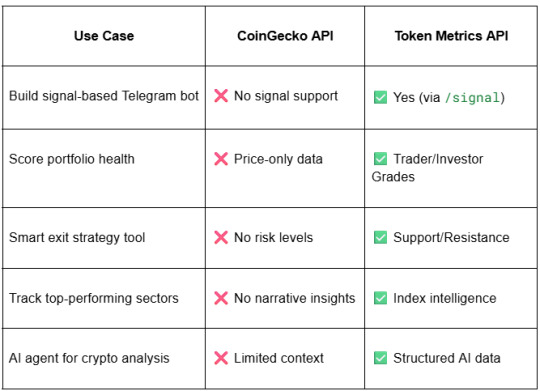

🧪 Real Use Cases Where TM API Beats CoinGecko

🔍 Example: Trader Bot Workflow

Using CoinGecko API:

Pull price

Write your own indicators

Guess momentum

Hope it’s correct

Using Token Metrics API:

Call /grades → Trader Grade = 91

Call /signal → Signal = Bullish

Call /support-resistance → Resistance = $0.97

Call /sentiment → Positive

Result: Your bot can confidently trade based on AI-backed data—no guesswork.
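The workflow above stops short of the actual trading rule. A minimal sketch of how a bot might combine the four responses into a decision follows. It assumes the responses have already been fetched and parsed into plain values; the field names, the separately fetched current price, and the thresholds are all hypothetical and are not Token Metrics' response schema. It illustrates rule composition only.

```python
from dataclasses import dataclass

@dataclass
class TokenSnapshot:
    """Values assembled from the four calls above (field names are hypothetical)."""
    trader_grade: float  # e.g. 91 from /grades
    signal: str          # "Bullish" or "Bearish" from /signal
    price: float         # current price, assumed to be fetched separately
    resistance: float    # e.g. 0.97 from /support-resistance
    sentiment: str       # e.g. "Positive", "Neutral" or "Negative" from /sentiment

def decide(s: TokenSnapshot, min_grade: float = 80.0, headroom: float = 0.02) -> str:
    """Return BUY only when every signal agrees; thresholds are illustrative assumptions."""
    if s.signal != "Bullish" or s.sentiment == "Negative":
        return "HOLD"
    if s.trader_grade < min_grade:
        return "HOLD"
    # Require the price to sit at least `headroom` (2%) below resistance before entering
    if s.price >= s.resistance * (1 - headroom):
        return "HOLD"
    return "BUY"

snapshot = TokenSnapshot(trader_grade=91, signal="Bullish", price=0.90,
                         resistance=0.97, sentiment="Positive")
print(decide(snapshot))
```

Keeping the rule as a pure function of already-parsed data makes it easy to backtest and unit-test separately from the API calls.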

💡 Developer Feedback

“CoinGecko was great for basic projects. But once we needed signal-based logic, Token Metrics was a no-brainer.” — Bot Developer, Trading Telegram App

“The grades and sentiment layers are game changers. Our internal dashboard now feels like Bloomberg for crypto.” — Quant Fund Engineer

“We used to manually tag tokens by sector. Token Metrics does that automatically—and it’s accurate.” — Startup Founder, Crypto Screener

📦 Token Metrics API: What's Inside?

🔓 Flexible Pricing for Builders

✅ 5,000 API calls/month for free

✅ Scales by usage

✅ No credit card needed to start

✅ Access to advanced endpoints on all plans

Perfect for solo devs, bootstrapped startups, and funded teams.

🔧 Plug-and-Play Developer Experience

Works with Python, Node.js, TypeScript

Simple REST API (GET endpoints with token auth)

Docs include real responses + integration examples

Easily paired with:

LangChain

Zapier

Notion

Discord bots

LLM plug-ins

🧭 Getting Started in Under 5 Minutes

Sign up: https://app.tokenmetrics.com/en/api

Get your API key

Start calling /grades, /signal, /sentiment, etc.

Build the next generation of crypto tools—smarter, faster

Conclusion

CoinGecko is fine if you only want prices. But if you’re building anything involving strategy, signals, bots, portfolios, or AI agents—you need more than raw data.

Token Metrics API gives you that edge.

Real-time intelligence. AI-backed signals. Narrative awareness. Plug-and-play structure for the modern crypto developer stack.

That’s why more developers are leaving CoinGecko—and choosing Token Metrics in 2025.

Building Scalable Agentic AI Pipelines: A Guide to Real-World Deployment and Best Practices

Introduction

The AI landscape is undergoing a profound transformation with the rise of Agentic AI, autonomous systems capable of sophisticated decision-making and self-directed action. Unlike traditional AI models that passively respond to inputs, Agentic AI orchestrates complex workflows, continuously learns from its environment, and collaborates with other agents or humans to deliver tangible business outcomes. For software architects, AI practitioners, and technology leaders, mastering the design and deployment of custom Agentic AI pipelines has become a critical capability.

Professionals interested in advancing their careers can benefit from enrolling in an Agentic AI course in Mumbai, which offers hands-on exposure to these cutting-edge technologies. Similarly, exploring the best Agentic AI courses available can provide a comprehensive foundation to build scalable autonomous systems. For those focusing on generative technologies, Generative AI courses online in Mumbai offer flexible learning paths to understand the interplay between generative and agentic AI models.

This article delves into the evolution of Agentic and Generative AI, explores the latest tools and deployment strategies, and presents advanced tactics for building scalable, reliable AI systems. We emphasize the role of software engineering best practices, cross-functional collaboration, and continuous monitoring. A detailed case study illustrates real-world success, followed by actionable lessons for AI teams navigating this exciting frontier.

Evolution of Agentic and Generative AI in Software

Agentic AI represents a natural progression from early rule-based automation and reactive machine learning models to autonomous agents capable of proactive problem-solving. This shift is fueled by advances in large language models (LLMs), reinforcement learning, and multi-agent system architectures.

Early AI Systems were task-specific and required human oversight.

Generative AI Breakthroughs in 2023-2024, such as GPT-4 and beyond, introduced models that could create content, reason, and perform multi-step instructions.

Agentic AI in 2025 transcends generation by embedding autonomy: agents can plan, learn from feedback, coordinate with peers, and execute complex workflows without manual intervention.

This evolution is especially visible in enterprise environments where AI agents no longer serve isolated functions but form ecosystems of specialized agents collaborating to solve multifaceted business problems. For example, in supply chain management, one agent forecasts demand, another optimizes inventory, while a third manages vendor relations, all communicating seamlessly to achieve operational excellence.

Professionals interested in mastering these advancements may find an Agentic AI course in Mumbai particularly valuable, as it covers the latest developments in multi-agent systems and reinforcement learning. Likewise, enrolling in the best Agentic AI courses can help deepen understanding of this evolution and prepare practitioners to architect complex autonomous pipelines efficiently.

Industry analysts project that by 2027, 50% of enterprises using generative AI will deploy autonomous AI agents, reflecting a rapid adoption curve driven by demonstrated ROI and productivity gains.

Latest Frameworks, Tools, and Deployment Strategies

1. Large Language Model Orchestration

Modern pipelines leverage LLM orchestration frameworks that enable chaining multiple models and tools in a controlled sequence. Examples include:

LangChain: Facilitates building multi-step workflows by combining language models with external APIs, databases, and custom logic.

Agent frameworks: Platforms like Microsoft’s Copilot agents and Google Cloud Agentspace provide enterprise-grade environments to deploy, monitor, and manage AI agents at scale.

For AI practitioners seeking structured learning, Generative AI courses online in Mumbai offer practical modules on LLM orchestration, empowering learners to build complex generative workflows integrated with autonomous capabilities.

2. Multi-Agent System Architectures

Complex deployments often require multi-agent systems where agents have specialized roles and communicate directly, either peer-to-peer or hierarchically:

Agent-to-agent communication protocols enable real-time collaboration.

Hierarchical orchestration allows “super-agents” to supervise and coordinate sub-agents, improving scalability and fault tolerance.

Courses such as the best Agentic AI courses often cover these architectures in depth, enabling engineers to design scalable agent ecosystems.
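Taking the supply-chain example from earlier in this article, the hierarchical pattern can be sketched as a supervisor that invokes specialized sub-agents in sequence over a shared state. Each "agent" here is a plain Python function with a hard-coded heuristic standing in for an LLM or ML model; the state keys, vendor data, and sequential routing are assumptions made for illustration. Real agent frameworks add message passing, concurrency, and error handling on top of this idea.

```python
from typing import Callable, List, Tuple

Agent = Callable[[dict], dict]

def demand_forecaster(state: dict) -> dict:
    """Naive forecast: average of recent sales (stand-in for a real model)."""
    history = state["sales_history"]
    return {"forecast": sum(history) / len(history)}

def inventory_optimizer(state: dict) -> dict:
    """Order enough to cover the forecast plus a safety margin, minus stock on hand."""
    needed = state["forecast"] * (1 + state["safety_margin"]) - state["on_hand"]
    return {"order_qty": max(0, round(needed))}

def vendor_manager(state: dict) -> dict:
    """Choose the cheapest vendor with enough capacity for the order."""
    qty = state["order_qty"]
    options = [v for v in state["vendors"] if v["capacity"] >= qty]
    if qty == 0 or not options:
        return {"vendor": None}
    return {"vendor": min(options, key=lambda v: v["unit_price"])["name"]}

class SuperAgent:
    """Supervisor that runs sub-agents in order, merging each result into shared state."""

    def __init__(self, agents: List[Tuple[str, Agent]]):
        self.agents = agents
        self.log: List[str] = []

    def run(self, state: dict) -> dict:
        state = dict(state)  # copy so the caller's dict is not mutated
        for name, agent in self.agents:
            result = agent(state)
            self.log.append(f"{name} -> {result}")
            state.update(result)
        return state

supervisor = SuperAgent([
    ("forecast", demand_forecaster),
    ("inventory", inventory_optimizer),
    ("vendors", vendor_manager),
])
final = supervisor.run({
    "sales_history": [100, 120, 110],
    "safety_margin": 0.2,
    "on_hand": 50,
    "vendors": [
        {"name": "A", "unit_price": 2.0, "capacity": 500},
        {"name": "B", "unit_price": 1.5, "capacity": 60},
    ],
})
print(final["order_qty"], final["vendor"])
print("\n".join(supervisor.log))
```

The supervisor's log doubles as an audit trail of which agent produced which decision.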

3. MLOps for Generative Models

Scaling Agentic AI demands robust MLOps pipelines that address:

Model versioning and continuous retraining to keep agents adaptive.

Automated testing and validation for generative outputs to ensure quality and compliance.

Infrastructure automation for seamless deployment on cloud or hybrid environments.

Emerging tools integrate observability, security, and governance controls into the lifecycle, crucial for enterprise adoption. Professionals aiming to upskill in these areas may benefit from an Agentic AI course in Mumbai or Generative AI courses online in Mumbai, which emphasize MLOps best practices tailored for generative and autonomous AI.
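Model versioning, automated validation of generative outputs, and rollback fit together as one loop: register a version, promote it only if it passes evaluation checks, and revert if a promoted version misbehaves. The in-memory sketch below shows that loop. The class and method names are hypothetical, and the evaluation checks are simple predicates standing in for the quality, safety, and compliance tests a real MLOps platform would run against persisted model artifacts.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Model = Callable[[str], str]
EvalCase = Tuple[str, Callable[[str], bool]]  # (prompt, check on the model's output)

@dataclass
class ModelRegistry:
    """In-memory sketch of versioning, an evaluation gate before promotion, and rollback."""
    versions: Dict[str, Model] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # promoted versions, newest last

    def register(self, version: str, model: Model) -> None:
        self.versions[version] = model

    def promote(self, version: str, eval_cases: List[EvalCase], min_pass_rate: float = 0.9) -> bool:
        """Serve `version` only if enough evaluation checks pass on its outputs."""
        model = self.versions[version]
        passed = sum(1 for prompt, check in eval_cases if check(model(prompt)))
        if passed / len(eval_cases) >= min_pass_rate:
            self.history.append(version)
            return True
        return False

    def rollback(self) -> Optional[str]:
        """Return to the previously promoted version, if there is one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.current

    @property
    def current(self) -> Optional[str]:
        return self.history[-1] if self.history else None

cases = [("ok", lambda out: out == "OK"), ("hi", lambda out: out == "HI")]
registry = ModelRegistry()
registry.register("v1", str.upper)
registry.register("v2", lambda p: p[::-1])  # a "regressed" model
registry.promote("v1", cases)  # passes the gate and becomes current
registry.promote("v2", cases)  # fails the gate, so v1 stays current
print(registry.current)
```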

Advanced Tactics for Scalable, Reliable AI Systems

Modular pipeline design: Decouple components for easier updates and testing.

Fault-tolerant architectures: Use retries, fallbacks, and circuit breakers to handle agent failures gracefully.

Continuous learning loops: Implement feedback mechanisms where agents learn from outcomes and human corrections.

Resource-aware scheduling: Optimize compute usage across agents, especially when running large models concurrently.

These tactics reduce downtime, improve system resilience, and enable faster iteration cycles. The best Agentic AI courses include practical insights on how to implement these tactics effectively in real-world scenarios.
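Retries, fallbacks, and circuit breakers can be combined in a few dozen lines. The sketch below assumes an agent call is a zero-argument Python function that may raise; the failure threshold, cool-down period, and exponential backoff schedule are illustrative defaults, not recommendations from any particular framework. Production systems would typically add jitter, per-error-type handling, and shared breaker state.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class CircuitBreaker:
    """Opens after `max_failures` consecutive errors; allows a trial call
    again once `reset_after` seconds have passed."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        return time.monotonic() - self.opened_at >= self.reset_after

    def record(self, ok: bool) -> None:
        if ok:
            self.failures, self.opened_at = 0, None
        else:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()  # (re)open the circuit

def call_with_resilience(primary: Callable[[], T], fallback: Callable[[], T],
                         breaker: CircuitBreaker, retries: int = 2,
                         base_delay: float = 0.5) -> T:
    """Try the primary agent with exponential-backoff retries, else use the fallback."""
    if breaker.allow():
        for attempt in range(retries + 1):
            try:
                result = primary()
                breaker.record(True)
                return result
            except Exception:
                breaker.record(False)
                if not breaker.allow():
                    break  # circuit just opened: stop hammering the failing agent
                if attempt < retries:
                    time.sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
    return fallback()
```

The fallback might be a smaller model, a cached answer, or a hand-off to a human queue.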

The Role of Software Engineering Best Practices

Code quality and maintainability: Writing clean, well-documented code for AI pipelines is critical given their complexity.

Security and compliance: Protecting sensitive data processed by agents and ensuring models adhere to regulatory standards (e.g., GDPR, HIPAA) is non-negotiable.

Testing and validation: Beyond unit tests, implement scenario-based testing for autonomous agents to verify decision-making logic under diverse conditions.

CI/CD integration: Automate deployments with rollback capabilities to minimize production risks.

By applying these practices, teams can deliver AI solutions that are not only innovative but also trustworthy and scalable. Those pursuing the best Agentic AI courses will find comprehensive modules dedicated to integrating software engineering best practices in AI system development.
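Scenario-based testing can be as simple as a table of situations paired with the decision the agent is expected to make. The sketch below uses a hypothetical refund policy as the agent's decision logic; the function, tiers, and limits are invented for illustration. For an LLM-backed agent, the same scenario table can drive repeated runs that assert on the set of acceptable outcomes rather than on a single exact answer.

```python
def approve_refund(amount: float, customer_tier: str, prior_refunds: int) -> str:
    """Hypothetical agent policy under test: returns 'approve', 'escalate' or 'deny'."""
    if prior_refunds >= 3:
        return "deny"
    limit = 500 if customer_tier == "gold" else 100
    return "approve" if amount <= limit else "escalate"

# Each scenario: description, inputs, expected decision
SCENARIOS = [
    ("small refund, regular customer", (50.0, "standard", 0), "approve"),
    ("large refund, regular customer", (250.0, "standard", 0), "escalate"),
    ("large refund, gold customer", (250.0, "gold", 1), "approve"),
    ("repeat refunder", (10.0, "gold", 3), "deny"),
    ("boundary: exactly at limit", (100.0, "standard", 0), "approve"),
]

def run_scenarios(policy, scenarios):
    """Run every scenario and collect human-readable failure messages."""
    failures = []
    for name, args, expected in scenarios:
        actual = policy(*args)
        if actual != expected:
            failures.append(f"{name}: expected {expected}, got {actual}")
    return failures

if __name__ == "__main__":
    problems = run_scenarios(approve_refund, SCENARIOS)
    print("all scenarios passed" if not problems else "\n".join(problems))
```

Wiring `run_scenarios` into the CI pipeline means a policy change that breaks an agreed scenario blocks the deployment.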

Ethical Considerations and Challenges

Deploying autonomous AI systems raises significant ethical concerns. Key challenges include:

Autonomy and Oversight: Ensuring that autonomous agents operate within defined boundaries and do not pose unforeseen risks.

Transparency and Explainability: Providing clear insights into how decisions are made to build trust and meet regulatory requirements.

Data Privacy and Security: Safeguarding sensitive data and protecting against potential vulnerabilities.

Addressing these challenges requires a proactive approach to ethical AI development, including the integration of ethical considerations into the design process and ongoing monitoring for compliance. Educational programs such as an Agentic AI course in Mumbai increasingly emphasize ethical AI frameworks to prepare practitioners for responsible deployment.

Cross-Functional Collaboration for AI Success

Deploying Agentic AI pipelines is inherently interdisciplinary. Success depends on tight collaboration between:

Data scientists who design and train models.

Software engineers who build scalable pipelines and integrations.

Business stakeholders who define goals and evaluate impact.

Operations teams who handle deployment and monitoring.

This collaboration ensures alignment between technical capabilities and business needs, reduces rework, and accelerates adoption. The best Agentic AI courses often highlight strategies for fostering cross-functional collaboration, ensuring teams work cohesively to deliver value.

Measuring Success: Analytics and Monitoring

Continuous analytics and monitoring are vital to understand and improve Agentic AI performance:

Real-time monitoring of agent actions, response times, and error rates.

Outcome tracking: Measure business KPIs influenced by AI decisions (e.g., cost savings, customer satisfaction).

Feedback loops: Collect user feedback and incorporate it into agent retraining.

Explainability tools: Provide transparency into agent decisions to build user trust and meet compliance requirements.

Robust monitoring enables proactive issue detection and drives iterative improvements. Courses such as Generative AI courses online in Mumbai include modules on analytics and monitoring frameworks tailored for generative and agentic AI systems.
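Monitoring agent actions, response times, and error rates comes down to a small amount of per-agent bookkeeping. The sketch below keeps everything in memory and computes a nearest-rank 95th-percentile latency; the class, the agent name, and the alert thresholds are assumptions for illustration, and a production system would export these metrics to a dedicated monitoring stack instead.

```python
from collections import defaultdict
from typing import Dict, List

class AgentMonitor:
    """In-memory per-agent metrics: call count, error rate and p95 latency."""

    def __init__(self) -> None:
        self.latencies: Dict[str, List[float]] = defaultdict(list)
        self.errors: Dict[str, int] = defaultdict(int)

    def record(self, agent: str, latency_ms: float, ok: bool) -> None:
        self.latencies[agent].append(latency_ms)
        if not ok:
            self.errors[agent] += 1

    def summary(self, agent: str) -> dict:
        samples = sorted(self.latencies.get(agent, []))
        n = len(samples)
        if n == 0:
            return {"calls": 0, "error_rate": 0.0, "p95_ms": None}
        # Nearest-rank 95th percentile: rank = ceil(0.95 * n), done in integer math
        rank = (95 * n + 99) // 100
        return {"calls": n, "error_rate": self.errors.get(agent, 0) / n,
                "p95_ms": samples[rank - 1]}

    def alerts(self, max_error_rate: float = 0.05, max_p95_ms: float = 2000.0) -> List[str]:
        """Return a message for every agent breaching an error-rate or latency threshold."""
        found = []
        for agent in list(self.latencies):
            s = self.summary(agent)
            if s["calls"] == 0:
                continue
            if s["error_rate"] > max_error_rate:
                found.append(f"{agent}: error rate {s['error_rate']:.0%}")
            if s["p95_ms"] > max_p95_ms:
                found.append(f"{agent}: p95 latency {s['p95_ms']:.0f} ms")
        return found

monitor = AgentMonitor()
for i in range(1, 21):
    monitor.record("risk_scorer", latency_ms=i * 100.0, ok=(i != 20))
print(monitor.summary("risk_scorer"))
print(monitor.alerts())
```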

Case Study: Wells Fargo’s Agentic AI Deployment in Financial Services

Wells Fargo, a leading financial institution, exemplifies a successful real-world deployment of custom Agentic AI pipelines. Facing the challenge of automating complex, compliance-heavy workflows in loan processing and risk assessment, the bank embarked on building a multi-agent system.

Journey and Challenges

Complex domain: Agents needed to interpret regulatory documents, assess creditworthiness, and coordinate with human underwriters.

Data sensitivity: Strict privacy and security requirements necessitated rigorous controls.

Scalability: The system had to handle thousands of loan applications daily with high accuracy.

Technical Approach

Leveraged LLM orchestration to parse unstructured data and extract relevant information.

Developed specialized agents for document analysis, risk scoring, and workflow automation.

Implemented MLOps pipelines supporting continuous retraining with new financial data and regulatory updates.

Integrated real-time monitoring dashboards for compliance officers and engineers.

Outcomes

Reduced loan processing time by 40%, improving customer experience.

Achieved over 30% operational cost savings through automation.

Enhanced risk assessment accuracy, reducing defaults by 15%.

Maintained full regulatory compliance with audit trails and explainability features.

This case highlights the power of custom Agentic AI pipelines to transform complex business processes while ensuring reliability and governance. For professionals seeking to replicate such success, enrolling in an Agentic AI course in Mumbai or the best Agentic AI courses can provide the necessary skills and frameworks.

Additional Case Studies

Healthcare: A hospital used Agentic AI to optimize patient scheduling and resource allocation, improving patient care while reducing operational costs.

Manufacturing: A company deployed multi-agent systems to predict and prevent equipment failures, enhancing production efficiency and reducing downtime.

Actionable Tips and Lessons Learned

Start small but think big: Begin with focused use cases like customer service or data entry, then expand to complex autonomous workflows.

Design for modularity: Build pipelines with interchangeable components to enable rapid iteration and reduce technical debt.

Prioritize observability: Invest early in monitoring and analytics to detect issues before they impact users.

Embrace cross-functional teams: Foster continuous collaboration between AI, engineering, and business units to align goals and accelerate deployment.

Implement continuous learning: Enable agents to adapt through feedback loops to maintain relevance in dynamic environments.

Focus on security and compliance: Embed these considerations into the pipeline from day one to avoid costly retrofits.

Leverage proven frameworks: Use established LLM orchestration and multi-agent platforms to reduce development time and increase reliability.

Learning programs like the best Agentic AI courses and Generative AI courses online in Mumbai often incorporate these lessons to prepare AI teams for effective deployment.

Conclusion

Custom Agentic AI pipelines represent the next frontier in AI-driven transformation, empowering enterprises to automate complex workflows with unprecedented autonomy and intelligence. The evolution from generative models to multi-agent systems orchestrated at scale demands not only technical innovation but disciplined software engineering, cross-functional collaboration, and rigorous monitoring.

By understanding the latest frameworks, deploying scalable architectures, and learning from real-world successes like Wells Fargo, AI practitioners and technology leaders can harness the full potential of Agentic AI. The journey requires balancing innovation with reliability and aligning AI capabilities with business objectives, a challenge that, when met, unlocks extraordinary value and competitive advantage.

For those looking to lead this shift, enrolling in an Agentic AI course in Mumbai, exploring the best Agentic AI courses, or taking Generative AI courses online in Mumbai can provide the knowledge and skills needed to build such systems. As we advance through 2025 and beyond, those who master custom Agentic AI deployment will lead the autonomous intelligence revolution, crafting systems that not only generate insights but act decisively and autonomously in the real world.
