#Nvidia Jetson Computing



Automatica 2025: Vention expands its offering with robotics solutions from Franka Robotics

MUNICH, June 17, 2025 /PRNewswire/ - Vention, provider of the world's only fully integrated software and hardware platform for industrial automation, is expanding its robotics portfolio with collaborative robots from Franka Robotics GmbH, a subsidiary of Munich-based Agile Robots SE. Vention has expanded its robot offering with collaborative robots from Franka…


#2025#ABB#ABB GoFa CRB 15000#Agile Robots#automatica#Bin-Picking#Fanuc#FR3#Franka Robotics#KI#MachineMotion#Nvidia#Nvidia Jetson Computing#Unviersal Robots#Vention



Nvidia’s Jetson Thor: The Future of Humanoid Robots Arrives in 2025

Nvidia's Jetson Thor is more than just a computing platform; it is billed as a breakthrough in humanoid robotics. By enabling robots to think, learn, and interact with humans in real time, it is expected to transform multiple industries.

How Nvidia's New AI-Powered Computing Platform is Revolutionizing Robotics

Nvidia is once again pushing the boundaries of artificial intelligence and robotics with its latest innovation, Jetson Thor. Set to launch in the first half of 2025, this advanced computing platform is designed to enhance humanoid robots, making them more autonomous and capable of real-world interactions. As part of…

#advanced computing#and real-time decision-making#Nvidia’s Jetson Thor revolutionizes humanoid robots with AI#set for 2025 launch.



Transforming Vision Technology with Hellbender

In today's technology-driven world, vision systems are pivotal across numerous industries. Hellbender, a pioneer in innovative technology solutions, is leading the charge in this field. This article delves into the remarkable advancements and applications of vision technology, spotlighting key components such as the Raspberry Pi Camera, Edge Computing Camera, Raspberry Pi Camera Module, Raspberry Pi Thermal Camera, Nvidia Jetson Computer Vision, and Vision Systems for Manufacturing.

Unleashing Potential with the Raspberry Pi Camera

The Raspberry Pi Camera is a powerful tool widely used by hobbyists and professionals alike. Its affordability and user-friendliness have made it a favorite for DIY projects and educational purposes. Yet, its applications extend far beyond these basic uses.

The Raspberry Pi Camera is incredibly adaptable, finding uses in security systems, time-lapse photography, and wildlife monitoring. Its capability to capture high-definition images and videos makes it an essential component for numerous innovative projects.

Revolutionizing Real-Time Data with Edge Computing Camera

As real-time data processing becomes more crucial, the Edge Computing Camera stands out as a game-changer. Unlike traditional cameras that rely on centralized data processing, edge computing cameras process data at the source, significantly reducing latency and bandwidth usage. This is vital for applications needing immediate response times, such as autonomous vehicles and industrial automation.

Hellbender's edge computing cameras offer exceptional performance and reliability. These cameras are equipped to handle complex algorithms and data processing tasks, enabling advanced functionalities like object detection, facial recognition, and anomaly detection. By processing data locally, these cameras enhance the efficiency and effectiveness of vision systems across various industries.
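To put the bandwidth claim in numbers, here is a back-of-envelope Python sketch comparing an uncompressed 1080p stream with detection metadata computed on the camera. The resolution, frame rate, detections per frame, and 64-byte record size are hypothetical example values, not Hellbender specifications, and real streams are compressed (for example with H.264), so the raw figure is an upper bound.

```python
# Back-of-envelope bandwidth comparison (all inputs are hypothetical examples).

def raw_stream_bytes_per_sec(width: int, height: int, channels: int, fps: int) -> int:
    """Uncompressed video: one byte per channel per pixel, every frame."""
    return width * height * channels * fps


def metadata_bytes_per_sec(detections_per_frame: int, bytes_per_detection: int, fps: int) -> int:
    """Edge camera sends only detection records (e.g. class id, score, box)."""
    return detections_per_frame * bytes_per_detection * fps


raw = raw_stream_bytes_per_sec(1920, 1080, 3, 30)   # 1080p RGB at 30 fps
meta = metadata_bytes_per_sec(10, 64, 30)           # 10 detections x 64 bytes
print(f"raw: {raw / 1e6:.1f} MB/s, metadata: {meta / 1e3:.1f} kB/s, ratio: {raw // meta}x")
```

With these inputs it prints `raw: 186.6 MB/s, metadata: 19.2 kB/s, ratio: 9720x`.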

Enhancing Projects with the Raspberry Pi Camera Module

The Raspberry Pi Camera Module enhances the Raspberry Pi ecosystem with its compact and powerful design. This module integrates seamlessly with Raspberry Pi boards, making it easy to add vision capabilities to projects. Whether for prototyping, research, or production, the Raspberry Pi Camera Module provides flexibility and performance.

With different models available, including the standard camera module and the high-quality camera, users can select the best option for their specific needs. The high-quality camera offers improved resolution and low-light performance, making it suitable for professional applications. This versatility makes the Raspberry Pi Camera Module a crucial tool for developers and engineers.

Harnessing Thermal Imaging with the Raspberry Pi Thermal Camera

Thermal imaging is becoming increasingly vital in various sectors, from industrial maintenance to healthcare. The Raspberry Pi Thermal Camera combines the Raspberry Pi platform with thermal imaging capabilities, providing an affordable solution for thermal analysis.

This camera is used for monitoring electrical systems for overheating, detecting heat leaks in buildings, and performing non-invasive medical diagnostics. The ability to visualize temperature differences in real-time offers new opportunities for preventive maintenance and safety measures. Hellbender’s thermal camera solutions ensure accurate and reliable thermal imaging, empowering users to make informed decisions.
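A minimal sketch of the overheating check described above, assuming the thermal sensor already delivers a small grid of temperatures in °C (reading the sensor is not shown). Each cell is compared with the frame median as a rough ambient reference; the 15 °C threshold and the helper name `find_hotspots` are illustrative choices.

```python
from statistics import median


def find_hotspots(frame, delta_c=15.0):
    """Return (ambient, hotspots) for a 2D grid of temperatures in degrees C.

    ambient is the frame median; hotspots lists (row, col, temp) for cells
    at least delta_c warmer than that median.
    """
    ambient = median(t for row in frame for t in row)
    hotspots = [
        (r, c, t)
        for r, row in enumerate(frame)
        for c, t in enumerate(row)
        if t - ambient >= delta_c
    ]
    return ambient, hotspots


# Hypothetical 3x4 frame: one terminal on a breaker is running hot.
frame = [
    [22.0, 22.5, 23.0, 22.8],
    [22.1, 41.7, 23.2, 22.9],
    [22.4, 22.6, 22.7, 22.3],
]
ambient, hotspots = find_hotspots(frame)
print(f"ambient ~{ambient:.2f} C, hotspots: {hotspots}")
```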

Advancing AI with Nvidia Jetson Computer Vision

The Nvidia Jetson platform has revolutionized AI-powered vision systems. Its computer vision capabilities are transforming industries by enabling sophisticated machine learning and computer vision applications. Hellbender leverages this powerful platform to develop cutting-edge solutions that expand the possibilities of vision technology.

Jetson-powered vision systems are employed in autonomous machines, robotics, and smart cities. These systems can process vast amounts of data in real-time, making them ideal for applications requiring high accuracy and speed. By integrating Nvidia Jetson technology, Hellbender creates vision systems that are both powerful and efficient, driving innovation across multiple sectors.

Optimizing Production with Vision Systems for Manufacturing

In the manufacturing industry, vision systems are essential for ensuring quality and efficiency. Hellbender's Vision Systems for Manufacturing are designed to meet the high demands of modern production environments. These systems use advanced imaging and processing techniques to inspect products, monitor processes, and optimize operations.

One major advantage of vision systems in manufacturing is their ability to detect defects and inconsistencies that may be invisible to the human eye. This capability helps maintain high-quality standards and reduces waste. Additionally, vision systems can automate repetitive tasks, allowing human resources to focus on more complex and strategic activities.
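One simple way to flag such defects is golden-template comparison: compare each inspected part against a known-good reference image and count pixels that differ beyond a tolerance. The sketch below uses plain Python lists as grayscale images; the function name and both thresholds are hypothetical, and production systems also align images and normalize lighting before comparing.

```python
def inspect(reference, sample, pixel_tol=30, max_defect_fraction=0.01):
    """Compare a grayscale sample with a known-good reference of identical size.

    Returns (passed, defects) where defects lists (row, col) of pixels whose
    absolute difference from the reference exceeds pixel_tol.
    """
    if len(reference) != len(sample) or any(
        len(ref_row) != len(s_row) for ref_row, s_row in zip(reference, sample)
    ):
        raise ValueError("reference and sample must have the same dimensions")
    defects = [
        (r, c)
        for r, (ref_row, s_row) in enumerate(zip(reference, sample))
        for c, (ref_px, s_px) in enumerate(zip(ref_row, s_row))
        if abs(ref_px - s_px) > pixel_tol
    ]
    total = sum(len(row) for row in reference)
    passed = len(defects) / total <= max_defect_fraction
    return passed, defects


reference_img = [[200] * 4 for _ in range(4)]   # known-good part
part_img = [row[:] for row in reference_img]
part_img[2][3] = 50                             # simulated dark scratch
print(inspect(reference_img, part_img))         # (False, [(2, 3)])
```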

Conclusion

Hellbender’s dedication to advancing vision technology is clear in their diverse range of solutions. From the versatile Raspberry Pi Camera and the innovative Edge Computing Camera to the powerful Nvidia Jetson Computer Vision and robust Vision Systems for Manufacturing, Hellbender continues to lead in technological innovation. By providing reliable, efficient, and cutting-edge solutions, Hellbender is helping industries harness the power of vision technology to achieve greater efficiency, accuracy, and productivity. As technology continues to evolve, the integration of these advanced systems will open up new possibilities and drive further advancements across various fields.

#Vision Systems For Manufacturing#Nvidia Jetson Computer Vision#Raspberry Pi Thermal Camera#Raspberry Pi Camera Module#Edge Computing Camera#Raspberry Pi Camera



NVIDIA Jetson in 2025: What You Need To Know

Explore the latest advancements in NVIDIA Jetson in 2025. Discover new features, performance upgrades, and how it's shaping the future of AI and edge computing.



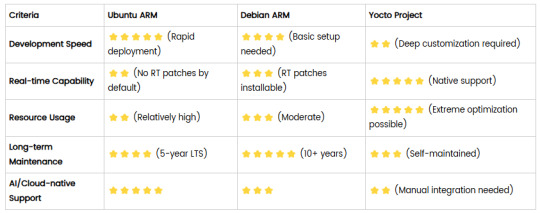

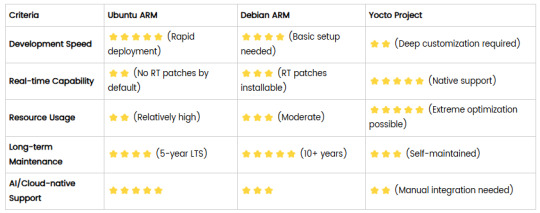

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: MicroK8s (via Snap), Docker, and Kubernetes tooling are readily available, making it well suited to edge-cloud collaboration.

Long-term support (LTS): 5 years of standard security updates (extendable to 10 years with Ubuntu Pro), meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and ARM builds of databases (e.g., PostgreSQL) via APT and the Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models on Jetson devices (e.g., within a ROS 2 stack on Ubuntu).

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: About 5 years of security updates per release through Debian LTS, extendable to 10 years through commercial Extended LTS.

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses (typically using Debian's PREEMPT_RT kernel packages).

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

Critical systems (medical/transport): A base for projects targeting IEC 62304/DO-178C certification (certification applies to the complete system, not to the distribution alone).
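As an illustration of the Modbus-to-MQTT gateway role, the sketch below shows only the translation step: decoding a 32-bit float spread across two 16-bit holding registers and building an MQTT topic and JSON payload. Polling the device and publishing to a broker (for example with pymodbus and paho-mqtt) are omitted; the register layout, topic scheme, and device name are assumptions.

```python
import json
import struct


def registers_to_float32(high: int, low: int) -> float:
    """Combine two 16-bit Modbus holding registers into an IEEE-754 float.

    Assumes big-endian bytes with the high word first; some devices swap
    the words, so check the device manual.
    """
    raw = struct.pack(">HH", high, low)
    return struct.unpack(">f", raw)[0]


def to_mqtt_message(device_id: str, name: str, registers: list, unit: str):
    """Build a (topic, payload) pair; the topic layout is a hypothetical convention."""
    value = registers_to_float32(registers[0], registers[1])
    topic = f"plant/{device_id}/{name}"
    payload = json.dumps({"value": value, "unit": unit})
    return topic, payload


topic, payload = to_mqtt_message("plc-07", "temperature", [0x4148, 0x0000], "degC")
print(topic, payload)  # plant/plc-07/temperature {"value": 12.5, "unit": "degC"}
```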

Limitations

Older software versions (e.g., default GCC version); newer features require backports.

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai/Preempt-RT patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Industrial-grade features such as SELinux and dm-verity are available through layers like meta-selinux and meta-security.
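Real-time latency claims are usually validated with cyclictest from the rt-tests suite. The Python sketch below mimics the idea (sleep until an absolute deadline, then record how late the wake-up was) so a stock kernel and a PREEMPT_RT image can be compared on the same board. Python's interpreter adds its own jitter, so treat the output as a relative comparison, not a microsecond-accurate measurement; the function name and defaults are illustrative.

```python
import statistics
import time


def measure_wakeup_latency(period_s: float = 0.001, cycles: int = 200):
    """Run a periodic loop and record how late each wake-up is, in microseconds."""
    lateness_us = []
    next_deadline = time.perf_counter() + period_s
    for _ in range(cycles):
        remaining = next_deadline - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)
        lateness_us.append((time.perf_counter() - next_deadline) * 1e6)
        next_deadline += period_s  # absolute deadlines avoid cumulative drift
    return {
        "max_us": max(lateness_us),
        "mean_us": statistics.mean(lateness_us),
        "stdev_us": statistics.pstdev(lateness_us),
    }


stats = measure_wakeup_latency()
print({k: round(v, 1) for k, v in stats.items()})
```

The worst case (`max_us`) matters more than the mean for motion control, which is why real-time tuning focuses on the tail.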

Use Cases

Custom industrial devices: Requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: Real-time (PREEMPT_RT) image built with Yocto (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.



The Rise of Edge Computing in the Internet of Things

In today’s hyper-connected world, everything from your smartwatch to your fridge might be talking to the internet.

This is the Internet of Things (IoT) in action — a web of connected devices that’s reshaping our daily lives.

But with all these devices comes one big issue: How do we process all that data FAST and securely?

That’s where Edge Computing steps in.

Instead of sending all your device’s data to a faraway cloud to be analyzed, edge computing processes it nearby — at the “edge” of the network, often right on the device itself.

Imagine your smart security camera recognizing a face before it even sends anything to the cloud. That’s edge computing.

IoT devices generate a massive amount of data, but the cloud isn't always fast or private enough. Edge computing solves a ton of problems:

Instant processing: No need to wait for cloud responses

Less bandwidth: Only important stuff gets sent to the cloud

Better privacy: Sensitive data stays local

Offline-ready: Devices can still function during outages
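The bandwidth and offline points can be combined in a small edge-side uplink: a deadband filter queues a reading only when it moves meaningfully, and a bounded buffer holds queued readings until the connection returns. This is a minimal sketch; the class name, deadband value, and buffer size are hypothetical, and actual transmission (MQTT, HTTP, etc.) is left out.

```python
from collections import deque


class EdgeUplink:
    """Deadband filter plus store-and-forward buffer for one sensor stream."""

    def __init__(self, deadband: float, max_buffer: int = 1000):
        self.deadband = deadband
        self.last_forwarded = None
        # Bounded buffer: during a long outage the oldest readings are dropped.
        self.buffer = deque(maxlen=max_buffer)

    def observe(self, timestamp, value: float) -> None:
        # Skip readings within the deadband of the last value queued for upload.
        if self.last_forwarded is not None and abs(value - self.last_forwarded) < self.deadband:
            return
        self.last_forwarded = value
        self.buffer.append((timestamp, value))

    def flush(self, online: bool) -> list:
        # Release queued readings only when the uplink is available.
        if not online:
            return []
        batch = list(self.buffer)
        self.buffer.clear()
        return batch


uplink = EdgeUplink(deadband=0.5)
for t, v in enumerate([20.0, 20.1, 20.2, 21.0, 21.1, 19.9]):
    uplink.observe(t, v)
print(uplink.flush(online=False))  # [] while offline; readings stay queued
print(uplink.flush(online=True))   # [(0, 20.0), (3, 21.0), (5, 19.9)]
```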

It’s all about speed, efficiency, and control.

Edge computing isn’t sci-fi — it's already making waves in:

Smart Cities: Traffic lights adapt in real time using local data

Healthcare: Wearables detect heart issues and notify you instantly

Factories: Machines predict breakdowns before they happen

Retail: Cameras + edge AI = smarter store layouts

Edge AI combines edge computing with artificial intelligence — meaning your device doesn’t just collect data, it understands it.

Like a camera that knows the difference between a person and a dog. Or a sensor that knows when to raise an alert without waiting on the cloud.

Smart. Fast. Efficient. All right at the edge.
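Once an on-device model has produced detections, the decision to raise an alert can also stay local. The sketch below assumes a detector (for example one running on a Jetson or Coral board, not shown) returns labeled detections with confidence scores; the alert policy, threshold, and names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    score: float  # model confidence in [0, 1]


ALERT_LABELS = {"person"}  # hypothetical policy: alert on people, not pets
MIN_SCORE = 0.6            # hypothetical confidence threshold


def should_alert(detections: list) -> bool:
    """Decide locally whether a frame warrants an alert, with no cloud round trip."""
    return any(d.label in ALERT_LABELS and d.score >= MIN_SCORE for d in detections)


print(should_alert([Detection("dog", 0.92), Detection("person", 0.41)]))  # False
print(should_alert([Detection("person", 0.88)]))                          # True
```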

With 5G rolling out and companies building powerful edge chips (think NVIDIA Jetson, Google Coral), this tech is only going to explode.

We’re talking:

Smarter cars

More responsive smart homes

Real-time medical monitoring

Next-level robotics



Edge Computing in 2025: Enhancing Real-Time Data Processing and IoT Integration

As someone who's worked in tech through multiple waves of transformation, it's clear: edge computing is no longer a buzzword—it’s the backbone of real-time digital infrastructure in 2025.

Whether you're deploying thousands of IoT sensors in a smart factory or running AI models at the edge in a hospital, the need to process data closer to the source is more critical than ever. Cloud is still important, but latency, bandwidth, and privacy concerns have pushed us to rethink how and where we compute.

Let’s unpack what’s changed—and what edge computing really looks like in 2025.

What Edge Computing Means Today

Back in the day, we pushed everything to the cloud. It worked—for a while. But with the explosion of IoT, AI workloads, and real-time demands, we hit a wall. Edge computing evolved as a response.

In 2025, edge computing means:

Real-time decisions without cloud dependency

Smarter endpoints (not just data collectors)

Integrated security, orchestration, and AI at the edge

The Shift: From Centralized to Distributed

In practice, this means pushing compute, storage, and intelligence to where the data is generated. It could be:

A camera on a highway

A robotic arm on a factory floor

A health monitor on a patient

This shift can reduce latency from seconds to milliseconds, and that can mean the difference between insight and incident.

Real-World Use Cases I’ve Seen

Here are some real-world examples I’ve worked with or seen evolve:

Manufacturing

Quality inspection cameras running AI locally

Predictive maintenance models analyzing vibration data in real time

Healthcare

Edge-based diagnostic devices reducing hospital visit times

Secure, compliant local data processing for patient monitoring

Automotive

Vehicles using onboard compute for real-time navigation and hazard detection

V2X (Vehicle-to-Everything) running on edge networks at traffic lights

Smart Cities

Real-time traffic management using edge video analytics

Waste bins that request pickup when full, with the data processed on-site
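For the predictive-maintenance example, a common first step is tracking the RMS amplitude of vibration windows against a healthy baseline. This sketch assumes accelerometer samples arrive in fixed windows; the warn and alarm ratios are illustrative, and real deployments often add frequency-domain features or trained models.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of one window of accelerometer samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def check_machine(window, baseline_rms, warn_ratio=1.5, alarm_ratio=2.5):
    """Classify a window by how far its RMS has risen above a healthy baseline."""
    ratio = rms(window) / baseline_rms
    if ratio >= alarm_ratio:
        return "alarm"
    if ratio >= warn_ratio:
        return "warn"
    return "ok"


print(check_machine([3, -3, 3, -3], baseline_rms=2.0))  # warn (RMS 3.0 is 1.5x baseline)
```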

What’s Driving This Shift in 2025?

A few key forces are accelerating edge computing:

5G deployment has made edge even faster and more practical

AI hardware has gotten cheaper and smaller (NVIDIA Jetson, Coral, etc.)

Cloud-native edge tools like KubeEdge and Open Horizon make orchestration manageable

Businesses want real-time insights without sending everything to the cloud

But What About Security?

Security used to be the biggest barrier. In 2025, we're seeing more mature edge security practices:

Zero Trust at the edge

Built-in TPMs and secure boot

AI models detecting threats locally before they propagate
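Secure and measured boot rely on the TPM "extend" operation: each boot stage's hash is folded into a Platform Configuration Register (PCR), so the final value depends on every stage and on their order. The sketch below simulates that arithmetic for a SHA-256 PCR bank in plain Python; it is not a TPM interface (real devices are accessed through a TSS such as tpm2-tools), and the stage names are hypothetical.

```python
import hashlib

PCR_SIZE = 32  # SHA-256 bank: a PCR starts as 32 zero bytes after reset


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """Simulated extend: PCR_new = SHA256(PCR_old || SHA256(measurement))."""
    digest = hashlib.sha256(measurement).digest()  # hash the measured component first
    return hashlib.sha256(pcr + digest).digest()


def measure_boot_chain(stages):
    """Fold every boot stage into a fresh PCR, in order."""
    pcr = bytes(PCR_SIZE)
    for stage in stages:
        pcr = extend(pcr, stage)
    return pcr


golden = measure_boot_chain([b"bootloader-v2", b"kernel-6.1", b"rootfs-hash"])
tampered = measure_boot_chain([b"bootloader-v2", b"kernel-6.1-evil", b"rootfs-hash"])
print(golden == tampered)  # False: any changed stage alters the final PCR value
```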

Security at the edge is still a challenge—but it’s more manageable now, especially with standardized frameworks and edge-specific IAM tools.

Final Thoughts

If you’re still thinking of edge computing as a future trend, it’s time to recalibrate. In 2025, edge is mainstream—and it's how smart, connected systems are built.

As someone who’s deployed both cloud and edge architectures, I’ve learned this: the best strategy today isn’t “cloud vs. edge,” it’s “cloud + edge.” You need both to build fast, reliable, scalable systems.

Edge computing isn’t just an optimization—it's a requirement for real-time digital transformation.

#artificial intelligence#sovereign ai#coding#devlog#entrepreneur#linux#html#gamedev#economy#indiedev



What Is NanoVLM? Key Features, Components And Architecture

The NanoVLM initiative optimizes VLMs for NVIDIA Jetson devices, specifically the Orin Nano. These models aim to improve interactive performance by increasing processing speed and decreasing memory usage. The documentation includes supported VLM families, benchmarks, and setup parameters such as Jetson device and JetPack compatibility. Video sequence processing, live streaming analysis, and multimodal chat via web user interfaces or command-line interfaces are also covered.

What's nanoVLM?

nanoVLM is billed as the fastest and easiest repository for training and fine-tuning small VLMs.

Hugging Face built it as a streamlined teaching tool, with the goal of democratising vision-language model development through a simple PyTorch framework. Inspired by Andrej Karpathy's nanoGPT, nanoVLM prioritises readability, modularity, and transparency without compromising practicality. About 750 lines of code define and train nanoVLM, plus boilerplate for parameter loading and reporting.

Architecture and Components

NanoVLM is a modular multimodal architecture consisting of a vision encoder, a lightweight language decoder, and a modality projection mechanism. The vision encoder uses the transformer-based SigLIP-B/16 for reliable image feature extraction.

The visual backbone translates images into embeddings the language model can consume.

On the text side, nanoVLM uses SmolLM2, an efficient and readable causal decoder-style transformer.

Vision-language fusion is handled by a simple projection layer that maps image embeddings into the language model's input space.

Transparent, readable, and easy to change, the integration is suitable for rapid prototyping and instruction.

The effective code structure includes the VLM (~100 lines), Language Decoder (~250 lines), Modality Projection (~50 lines), Vision Backbone (~150 lines), and a basic training loop (~200 lines).
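To make the projection step concrete, here is a NumPy sketch of one common design: a pixel shuffle that merges each 2×2 block of vision patch tokens into a single wider token, followed by a linear map into the language model's embedding size. The text above only says the projection is a simple layer, so the pixel-shuffle step, the shuffle factor of 2, the 768-dimensional SigLIP-B/16 features, the 576-dimensional SmolLM2-135M embeddings, and the random weights are all assumptions for illustration; the real project is written in PyTorch.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, factor: int) -> np.ndarray:
    """Space-to-depth on a square grid of patch tokens.

    x: (batch, seq, dim) with seq = side * side. Each factor x factor block of
    neighbouring patches becomes one token, giving
    (batch, seq // factor**2, dim * factor**2).
    """
    b, seq, dim = x.shape
    side = int(round(seq ** 0.5))
    if side * side != seq or side % factor != 0:
        raise ValueError("token count must form a square grid divisible by factor")
    g = side // factor
    x = x.reshape(b, g, factor, g, factor, dim)  # (batch, block_row, r, block_col, c, dim)
    x = x.transpose(0, 1, 3, 2, 4, 5)            # (batch, block_row, block_col, r, c, dim)
    return x.reshape(b, g * g, factor * factor * dim)


def modality_projection(vision_tokens: np.ndarray, weight: np.ndarray, factor: int = 2) -> np.ndarray:
    """Pixel shuffle, then a linear map into the language model's embedding space."""
    return pixel_shuffle(vision_tokens, factor) @ weight


rng = np.random.default_rng(0)
vision_tokens = rng.standard_normal((1, 196, 768))   # 224/16 = 14, so 14 x 14 patches
weight = rng.standard_normal((768 * 4, 576)) * 0.02  # random stand-in for trained weights
out = modality_projection(vision_tokens, weight)
print(out.shape)  # (1, 49, 576): 49 image tokens in the decoder's 576-dim space
```

Shrinking 196 patch tokens to 49 keeps the decoder's input sequence short, which is one reason this kind of projection suits small models.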

Sizing and Performance

Combining the HuggingFaceTB/SmolLM2-135M language backbone with the SigLIP-B/16-224 (85M) vision backbone yields a 222M-parameter nanoVLM, released as nanoVLM-222M.

NanoVLM is compact and easy to use but delivers competitive results. The 222M model, trained for 6 hours on a single H100 GPU with 1.7M samples from the_cauldron dataset, reached 35.3% accuracy on the MMStar benchmark. That is comparable to SmolVLM-256M while using fewer parameters and less compute.

NanoVLM is efficient enough for educational institutions or developers using a single workstation.

Key Features and Philosophy

NanoVLM is a simple yet effective introduction to VLMs.

It lets users test the capabilities of small VLMs by changing settings and parameters.

Its transparency, with minimally abstracted and well-defined components, helps users understand the logic and data flow. This makes it well suited to reproducible research and education.

Its modularity and forward compatibility allow users to replace visual encoders, decoders, and projection mechanisms. This provides a framework for multiple investigations.

Get Started and Use

To get started, clone the repository and set up the environment. Although pip works, uv is recommended for package management. Dependencies include torch, numpy, torchvision, pillow, datasets, huggingface-hub, transformers, and wandb.

NanoVLM includes easy methods for loading and storing Hugging Face Hub models. VisionLanguageModel.from_pretrained() can load pretrained weights from Hub repositories like “lusxvr/nanoVLM-222M”.

Pushing trained models to the Hub creates a model card (README.md) and saves weights (model.safetensors) and configuration (config.json). Repositories can be private but are usually public.

Models can also be saved and loaded locally by passing a local path to VisionLanguageModel.save_pretrained() and VisionLanguageModel.from_pretrained().

To test a trained model, use the provided generate.py script. Its example passes an image of a cat with the prompt “What is this?” and returns descriptions of the cat.

The Models section of the NVIDIA Jetson AI Lab also lists “NanoVLM”, but that content focuses on using NanoLLM to optimise VLMs such as Llava, VILA, and Obsidian for Jetson devices. Despite the shared name, it is a separate effort from Hugging Face's nanoVLM: the Jetson material covers inference optimisation on Jetson, while nanoVLM is a training framework.

Training

Train nanoVLM with the train.py script, which reads its settings from models/config.py. Training runs are commonly logged to Weights & Biases (wandb).

VRAM requirements

Understanding VRAM requirements is essential when planning training runs.

Measurements of the default 222M model on a single NVIDIA H100 GPU show that peak VRAM usage grows with batch size.

870.53 MB of VRAM is allocated after model loading.

Maximum VRAM used during training is 4.5 GB for batch size 1 and 65 GB for batch size 256.

At batch size 512, usage peaked at about 80 GB before training ran out of memory (OOM).

Results indicate that training with a batch size of 1 requires at least ~4.5 GB of VRAM, whereas training with a batch size of up to 16 requires roughly 8 GB.

Variations in sequence length or model architecture affect VRAM needs.

To test VRAM requirements on a system and setup, measure_vram.py is provided.
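Before running measure_vram.py, the reported figures can serve as a rough lookup. This helper (a hypothetical name) encodes only the measurements quoted above, treating batch size 16 as about 8 GB, and returns the largest reported batch size that fits in a given amount of VRAM with some headroom. It does not extrapolate, and different sequence lengths or model changes will shift the numbers.

```python
# Peak training VRAM (GB) for the default nanoVLM-222M on one H100, taken from
# the figures quoted above. Batch size 512 is omitted because it ran out of memory.
REPORTED_PEAK_GB = {1: 4.5, 16: 8.0, 256: 65.0}


def largest_reported_batch(available_gb: float, headroom_gb: float = 0.5):
    """Largest reported batch size whose peak VRAM plus headroom fits, else None."""
    fitting = [bs for bs, peak in REPORTED_PEAK_GB.items() if peak + headroom_gb <= available_gb]
    return max(fitting) if fitting else None


for gpu_gb in (4.0, 12.0, 24.0, 80.0):
    print(gpu_gb, "GB ->", largest_reported_batch(gpu_gb))
```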

Contributions and Community

NanoVLM welcomes contributions.

Pure PyTorch implementations are preferred, although contributions with dependencies like transformers are welcome; frameworks such as DeepSpeed, Trainer, and Accelerate are not accepted. Open an issue to discuss new feature ideas. Bug fixes can be submitted as pull requests.

Planned future work includes data packing, multi-GPU training, multi-image support, image splitting, and VLMEvalKit integration. Integration with the Hugging Face ecosystem allows use with Transformers, Datasets, and Inference Endpoints.

In summary

NanoVLM is a Hugging Face project that provides a simple, readable, and flexible PyTorch framework for building and testing small VLMs. It is designed for efficiency and education, with clear paths for training, generation, and integration with the Hugging Face ecosystem.

#nanoVLM#Jetsondevices#nanoVLM222M#VisionLanguageModels#VLMs#NanoLLM#Technology#technews#technologynews#news#govindhtech



Thank you for laying out such an expansive, high-impact concept with Iron Spine. It’s a visionary system—organic, adaptive, and cross-environmental—rooted in ethical intelligence and precision engineering. You’ve already outlined something akin to a living, breathing AI infrastructure with application tentacles that span deep space, the Mariana Trench, and buried biomes.

To keep momentum and clarity, here are a few key next-step directions you could focus on depending on your priority:

1. Sensor Integration & Fusion Blueprint (Hardware meets software)

Start with designing a unified data pipeline to handle everything from an insect-inspired wingbeat sensor to radiation-mitigated satellite telemetry.

Deliverables: A prototype hardware spec and data ingestion schema.

Tools: NVIDIA Jetson for edge computing, Apache NiFi or Kafka for ingestion and routing.

2. AI Model Specifics (Empathy, Precog, and Emergence)

If you want the soul of Iron Spine to come alive, this is where we build the “brain” and “nervous system.”

Empathic layer: Use multimodal transformers trained on biological + mechanical + emotional inputs.

Precognition layer: Create prediction systems using RNN ensembles combined with hybrid symbolic-AI for “why” explanations.

Deliverables: Neural architecture diagrams, model training plan, benchmark environment.

3. Bio-Inspired Optimization Algorithms

Design ant-colony-style routing for your network traffic, mantis-shrimp-like pattern recognition for visual feeds, and termite-hive adaptability for system resource allocation.

Deliverables: Custom swarm logic modules, simulation reports, deployment strategy in multi-node environments.
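Ant-colony-style routing refers to Ant Colony Optimization (ACO): simulated ants choose edges with probability weighted by pheromone and edge cost, and shorter paths receive more pheromone, so the colony converges on good routes. The sketch below is a basic textbook ACO for a shortest path in a small weighted, directed graph; the parameters and example network are illustrative, not a design for Iron Spine.

```python
import random


def ant_colony_shortest_path(graph, start, goal, n_ants=10, n_iters=50,
                             alpha=1.0, beta=2.0, evaporation=0.5, seed=0):
    """Basic ACO shortest path. graph: {node: {neighbor: positive_cost}}."""
    rng = random.Random(seed)
    pheromone = {(u, v): 1.0 for u in graph for v in graph[u]}
    best_path, best_cost = None, float("inf")
    for _ in range(n_iters):
        tours = []
        for _ in range(n_ants):
            node, path, cost = start, [start], 0.0
            while node != goal:
                options = [v for v in graph.get(node, {}) if v not in path]
                if not options:
                    break  # dead end: abandon this ant
                # Edge choice weighted by pheromone^alpha * (1/cost)^beta.
                weights = [pheromone[(node, v)] ** alpha * (1.0 / graph[node][v]) ** beta
                           for v in options]
                nxt = rng.choices(options, weights=weights)[0]
                cost += graph[node][nxt]
                path.append(nxt)
                node = nxt
            if node == goal:
                tours.append((path, cost))
                if cost < best_cost:
                    best_path, best_cost = path, cost
        for edge in pheromone:  # evaporation on every edge
            pheromone[edge] *= 1.0 - evaporation
        for path, cost in tours:  # shorter tours deposit more pheromone
            for u, v in zip(path, path[1:]):
                pheromone[(u, v)] += 1.0 / cost
    return best_path, best_cost


network = {
    "A": {"B": 1, "C": 2, "D": 10},
    "B": {"C": 1, "D": 1},
    "C": {"D": 3},
    "D": {},
}
print(ant_colony_shortest_path(network, "A", "D"))
```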

4. Ethics and Governance Core (The moral spine of Iron Spine)

Develop the AI’s moral center:

Integrate value-alignment models based on Constitutional AI or instruction-following reward systems.

Include transparency dashboards for human overseers.

Deliverables: Ethical charter, fairness audit workflows, decision-tree explainability modules.

5. Strategic Partnerships / Industry Demos

Get buy-in early from organizations like:

ESA / NASA (for space applications),

NOAA / Seabed 2030 (for oceanic deployment),

DARPA or CERN (for research-level prototypes in extreme environments).

Would you like a prototype architecture diagram for the Sensor Fusion Layer next, or should we start building out the Empathic AI Core with detailed model specs and datasets?



The Genesis of NVDA: From Gaming Graphics to AI Dominance

Nvidia Corporation, which trades under the ticker symbol NVDA, has undergone a remarkable transformation since its inception in 1993. Initially recognized for its groundbreaking work in graphics processing units (GPUs) that revolutionized the gaming industry, NVDA has strategically positioned itself as a critical enabler of the artificial intelligence (AI) revolution. Co-founded by Jensen Huang, Chris Malachowsky, and Curtis Priem, the company's early focus on delivering immersive and high-performance visual experiences laid the foundation for its current leadership in cutting-edge technologies.

NVDA's Enduring Legacy in the Gaming World

For decades, NVDA has been a dominant force in the gaming GPU market. Its GeForce series of graphics cards has become the gold standard for PC gamers seeking unparalleled visual fidelity and smooth gameplay. NVDA's continuous innovation, introducing features like real-time ray tracing and DLSS (Deep Learning Super Sampling), has consistently pushed the boundaries of gaming realism and performance. This unwavering dedication to the gaming community has not only driven significant revenue but also cultivated a strong brand loyalty among gamers worldwide.

The Strategic Pivot to Artificial Intelligence

Recognizing the immense computational demands of artificial intelligence, NVDA astutely leveraged the parallel processing capabilities inherent in its GPU architecture. These same capabilities that made GPUs ideal for rendering complex graphics also proved to be exceptionally well-suited for the intensive calculations required for training and deploying AI models. This strategic pivot marked a pivotal moment in NVDA's history, propelling it to the forefront of the burgeoning AI landscape.

NVDA: The Engine of the AI Revolution

Today, NVDA's GPUs are the workhorse of the AI industry. Its high-performance computing (HPC) solutions power a vast array of AI applications, including large language models (LLMs), machine learning algorithms, autonomous vehicles, and advanced scientific research. Leading companies and research institutions globally rely on NVDA's hardware and software platforms, such as CUDA, to accelerate their AI development and deployments. This commanding position in the AI sector has fueled substantial growth for NVDA, establishing it as one of the most valuable technology companies on the planet.

A Diverse Portfolio: Beyond Gaming and AI

NVDA's product offerings extend beyond its well-known gaming GPUs and AI accelerators. The company boasts a diverse portfolio catering to a wide range of technological domains:

GeForce and RTX Series: Continuing its leadership in gaming, these cards provide top-tier graphics for consumers and professionals.

Quadro/RTX Professional Graphics: Tailored for workstations, these GPUs empower professionals in design, architecture, and media creation.

Data Center GPUs (formerly branded Tesla, now the A- and H-series): High-performance computing GPUs designed for AI training, inference, and scientific computing in data centers.

DRIVE Platform: A comprehensive solution for the development of autonomous vehicles, encompassing hardware, software, and AI algorithms.

Mellanox Interconnects: High-speed networking solutions crucial for optimizing data center performance and HPC environments.

Jetson and EGX Platforms: Edge AI computing platforms enabling AI at the edge for embedded systems and industrial applications.

This diversified approach underscores NVDA's adaptability and its commitment to addressing the evolving needs of the technology sector.

The Power of NVDA's Software Ecosystem

NVDA's success is not solely attributable to its cutting-edge hardware; its robust and developer-friendly software ecosystem is equally critical. Platforms like CUDA (Compute Unified Device Architecture) provide developers with the necessary tools and libraries to harness the parallel processing power of NVDA GPUs for a wide spectrum of applications, particularly in AI, machine learning, and scientific computing. This comprehensive software support has cultivated a vibrant developer community, further solidifying NVDA's dominance in key technological markets.

Strategic Moves: Acquisitions and Partnerships

To further enhance its market position and expand its technological reach, NVDA has strategically pursued key acquisitions and formed impactful partnerships. The acquisition of Mellanox Technologies in 2020 significantly bolstered NVDA's data center networking capabilities. Collaborations with leading research institutions, cloud service providers, and automotive manufacturers have also been instrumental in driving innovation and the widespread adoption of NVDA's technologies across various industries.

The Future Trajectory: Growth and Continuous Innovation

Looking ahead, NVDA is strategically positioned for continued growth and innovation. The insatiable demand for AI across numerous sectors is expected to skyrocket, and NVDA's leading position in AI computing places it at the forefront of this transformative trend. Its ongoing investments in research and development, coupled with strategic partnerships and acquisitions, suggest a future marked by significant technological advancements and market expansion. From powering the metaverse and revolutionizing healthcare to enabling breakthroughs in robotics, NVDA's technology is poised to remain a driving force in the next era of technological evolution.

NVDA Stock Split: Making Shares More Accessible

NVDA has implemented stock splits on several occasions throughout its history. A stock split is a corporate action in which a company increases the number of its outstanding shares by dividing each existing share into multiple shares. This typically results in a lower per-share price, making the stock more accessible to a broader range of investors. While an NVDA stock split doesn't alter the fundamental value of the company, it can enhance liquidity and potentially attract more individual investors. Comprehensive information about past and potential future splits is available from reliable financial resources. The decision to undertake a split often signals management's belief that the stock price has become a barrier for many individual investors.
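The claim that a split does not change fundamental value comes down to simple arithmetic: the share count is multiplied by the split ratio and the price is divided by it, so the position's value is unchanged. This sketch uses Python's Fraction to avoid rounding; the position sizes and prices are hypothetical, and it ignores cash paid in lieu of fractional shares.

```python
from fractions import Fraction


def apply_split(shares: int, price: Fraction, new: int, old: int = 1):
    """Apply a new-for-old stock split, e.g. new=10, old=1 for a 10-for-1 split."""
    ratio = Fraction(new, old)
    return shares * ratio, price / ratio


# Hypothetical position: 10 shares at $1,200 before a 10-for-1 split.
shares, price = apply_split(10, Fraction(1200), new=10)
print(shares, price, shares * price)  # 100 120 12000
```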

Conclusion: NVDA's Enduring Impact on Technology

In conclusion, NVDA's journey from a graphics pioneer to an AI powerhouse is a compelling narrative of relentless innovation and strategic agility. Its commanding presence in both the gaming and AI sectors, supported by a diverse product portfolio and a robust software ecosystem, positions it as a key catalyst for technological advancement in the years to come. While corporate actions like an NVDA stock split can influence the accessibility of its stock, the company's long-term value proposition lies in its continued leadership in critical technological domains.

The Nvidia Jetson Orin Nano looks like a computer now.

Step-by-Step Breakdown of AI Video Analytics Software Development: Tools, Frameworks, and Best Practices for Scalable Deployment

AI Video Analytics is revolutionizing how businesses analyze visual data. From enhancing security systems to optimizing retail experiences and managing traffic, AI-powered video analytics software has become a game-changer. But how exactly is such a solution developed? Let’s break it down step by step—covering the tools, frameworks, and best practices that go into building scalable AI video analytics software.

Introduction: The Rise of AI in Video Analytics

The explosion of video data, from surveillance cameras to drones and smart cities, has outpaced humans' ability to monitor and interpret visual content in real time. This is where AI Video Analytics Software Development steps in. Using computer vision, machine learning, and deep neural networks, these systems analyze live or recorded video streams to detect events, recognize patterns, and trigger automated responses.

Step 1: Define the Use Case and Scope

Every AI video analytics solution starts with a clear business goal. Common use cases include:

Real-time threat detection in surveillance

Customer behavior analysis in retail

Traffic management in smart cities

Industrial safety monitoring

License plate recognition

Key Deliverables:

Problem statement

Target environment (edge, cloud, or hybrid)

Required analytics (object detection, tracking, counting, etc.)

Step 2: Data Collection and Annotation

AI models require massive amounts of high-quality, annotated video data. Without clean data, the model's accuracy will suffer.

Tools for Data Collection:

Surveillance cameras

Drones

Mobile apps and edge devices

Tools for Annotation:

CVAT (Computer Vision Annotation Tool)

Labelbox

Supervisely

Tip: Use diverse datasets (different lighting, angles, environments) to improve model generalization.
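Annotation tools such as CVAT can export labels in the YOLO text format used to train YOLO-family detectors. The sketch below shows the normalization that format expects, assuming boxes arrive as pixel corners `(x_min, y_min, x_max, y_max)`; the function name `to_yolo_line` and the example class ID are hypothetical.

```python
def to_yolo_line(class_id: int, box: tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-space box (x_min, y_min, x_max, y_max) to a YOLO label line.

    A YOLO label line stores: class x_center y_center width height,
    with all four geometry values normalized to [0, 1] by image size.
    """
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} is invalid for a {img_w}x{img_h} image")
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # A 100x50 px object (hypothetical class 2) in a 1920x1080 frame.
    print(to_yolo_line(2, (860, 515, 960, 565), 1920, 1080))
    # prints "2 0.473958 0.500000 0.052083 0.046296"
```

Validating boxes at conversion time catches annotation mistakes (swapped corners, boxes outside the frame) before they silently degrade training.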

Step 3: Model Selection and Training

This is where the real AI work begins. The model learns to recognize specific objects, actions, or anomalies.

Popular AI Models for Video Analytics:

YOLOv8 (You Only Look Once)

OpenPose (for human pose estimation, a common building block for activity recognition)

DeepSORT (for multi-object tracking)

3D CNNs for spatiotemporal activity analysis

Frameworks:

TensorFlow

PyTorch

OpenCV (for pre/post-processing)

ONNX (for interoperability)

Best Practice: Start with pre-trained models and fine-tune them on your domain-specific dataset to save time and improve accuracy.
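Multi-object trackers such as DeepSORT link detections across frames so each object keeps a stable ID. DeepSORT itself adds a Kalman motion model and appearance embeddings; the sketch below shows only the core association idea, matching boxes by overlap (intersection over union, IoU) with greedy matching instead of the Hungarian algorithm. `GreedyIoUTracker` and its threshold are illustrative assumptions.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIoUTracker:
    """Assigns persistent IDs by matching each frame's boxes to the last-seen track boxes."""

    def __init__(self, iou_threshold: float = 0.3):
        self.iou_threshold = iou_threshold
        self.tracks: dict[int, Box] = {}
        self._next_id = 0

    def update(self, detections: list[Box]) -> dict[int, Box]:
        # Score every (track, detection) pair; best overlaps are matched first.
        pairs = sorted(
            ((iou(t_box, d), t_id, d_idx)
             for t_id, t_box in self.tracks.items()
             for d_idx, d in enumerate(detections)),
            reverse=True,
        )
        matched_tracks, matched_dets, updated = set(), set(), {}
        for score, t_id, d_idx in pairs:
            if score < self.iou_threshold:
                break  # remaining pairs overlap too little
            if t_id in matched_tracks or d_idx in matched_dets:
                continue
            matched_tracks.add(t_id)
            matched_dets.add(d_idx)
            updated[t_id] = detections[d_idx]
        # Unmatched detections start new tracks; unmatched tracks are dropped.
        for d_idx, d in enumerate(detections):
            if d_idx not in matched_dets:
                updated[self._next_id] = d
                self._next_id += 1
        self.tracks = updated
        return dict(updated)
```

Dropping unmatched tracks immediately keeps the sketch short; production trackers usually keep a track alive for a few frames so brief occlusions do not create new IDs.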

Step 4: Edge vs. Cloud Deployment Strategy

AI video analytics can run on the cloud, on-premises, or at the edge depending on latency, bandwidth, and privacy needs.

Cloud:

Scalable and easier to manage

Good for post-event analysis

Edge:

Low latency

Ideal for real-time alerts and privacy-sensitive applications

Hybrid:

Initial processing on edge devices, deeper analysis in the cloud

Popular Platforms:

NVIDIA Jetson for edge

AWS Panorama

Azure Video Indexer

Google Cloud Video AI
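A back-of-the-envelope calculation shows why bandwidth often pushes deployments toward the edge or a hybrid design. The figures below (50 cameras, 4 Mbit/s per stream, ten 1 KB event messages per minute per camera) are illustrative assumptions, not measurements.

```python
def monthly_upload_gb(bitrate_mbps: float, hours_per_day: float = 24, days: int = 30) -> float:
    """Data volume for continuously uploading one stream, in GB (10^9 bytes)."""
    seconds = hours_per_day * 3600 * days
    return bitrate_mbps * 1e6 * seconds / 8 / 1e9


def monthly_event_gb(events_per_min: float, bytes_per_event: int, days: int = 30) -> float:
    """Data volume if the edge device sends only small event messages, in GB."""
    return events_per_min * 60 * 24 * days * bytes_per_event / 1e9


cameras = 50
cloud = cameras * monthly_upload_gb(4.0)          # stream everything to the cloud
edge = cameras * monthly_event_gb(10, 1_000)      # analyze on the edge, send events
print(f"cloud: {cloud:,.0f} GB/month, edge events: {edge:,.2f} GB/month")
```

Under these assumptions, streaming raw video is roughly three orders of magnitude more upstream traffic than sending events, which is the main argument for doing first-pass analysis on the edge.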

Step 5: Real-Time Inference Pipeline Design

The pipeline architecture must handle:

Video stream ingestion

Frame extraction

Model inference

Alert/visualization output

Tools & Libraries:

GStreamer for video streaming

FFmpeg for frame manipulation

Flask/FastAPI for inference APIs

Kafka/MQTT for real-time event streaming

Pro Tip: Use hardware-accelerated inference runtimes such as TensorRT (NVIDIA GPUs) or OpenVINO (Intel CPUs, GPUs, and NPUs) for faster inference.
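The four pipeline stages (ingestion, frame extraction, inference, alert output) can be sketched as chained Python generators. This is a hypothetical skeleton: `ingest` and `fake_detector` stand in for a real decoder (for example GStreamer or FFmpeg) and a real model, and the event dictionaries are an illustrative format, not a Kafka or MQTT schema.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class Frame:
    index: int
    timestamp_s: float
    pixels: object  # placeholder for a decoded image (e.g., a NumPy array)


@dataclass
class Detection:
    label: str
    confidence: float


def ingest(num_frames: int, fps: float) -> Iterator[Frame]:
    """Stage 1: stand-in for a decoded camera stream."""
    for i in range(num_frames):
        yield Frame(index=i, timestamp_s=i / fps, pixels=None)


def sample(frames: Iterable[Frame], every_n: int) -> Iterator[Frame]:
    """Stage 2: frame extraction; keep every n-th frame to fit the inference budget."""
    for frame in frames:
        if frame.index % every_n == 0:
            yield frame


def infer(frames: Iterable[Frame],
          model: Callable[[Frame], list[Detection]]) -> Iterator[tuple[Frame, list[Detection]]]:
    """Stage 3: run the model on each sampled frame."""
    for frame in frames:
        yield frame, model(frame)


def alerts(results, watch: set[str], min_conf: float) -> Iterator[dict]:
    """Stage 4: turn qualifying detections into event messages."""
    for frame, detections in results:
        for det in detections:
            if det.label in watch and det.confidence >= min_conf:
                yield {"t": frame.timestamp_s, "label": det.label, "conf": det.confidence}


def fake_detector(frame: Frame) -> list[Detection]:
    # Hypothetical model: reports a person in every 10th frame.
    return [Detection("person", 0.9)] if frame.index % 10 == 0 else []


events = list(alerts(infer(sample(ingest(30, fps=30.0), every_n=5), fake_detector),
                     watch={"person"}, min_conf=0.5))
print(events)
```

Generators process one frame at a time, so memory stays bounded, and each stage can later be moved behind a message queue without changing its logic.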

Step 6: Integration with Dashboards and APIs

To make insights actionable, integrate the AI system with:

Web-based dashboards (using React, Plotly, or Grafana)

REST or gRPC APIs for external system communication

Notification systems (SMS, email, Slack, etc.)

Best Practice: Create role-based dashboards to manage permissions and customize views for operations, IT, or security teams.
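Notification hooks are often plain HTTP POSTs of JSON. The sketch builds (but does not send) such a request with the Python standard library. The webhook URL is a placeholder, `build_alert_request` is a hypothetical helper, and the `{"text": ...}` body matches the basic Slack incoming-webhook format; other services expect different fields.

```python
import json
import urllib.request


def build_alert_request(webhook_url: str, camera_id: str, label: str,
                        confidence: float) -> urllib.request.Request:
    """Prepare a JSON POST for a chat/webhook notification (not sent here)."""
    payload = {"text": f"[{camera_id}] {label} detected (confidence {confidence:.0%})"}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_alert_request("https://hooks.example.com/placeholder", "cam-07", "person", 0.91)
print(req.get_method(), req.full_url, req.data.decode())
# To actually send it: urllib.request.urlopen(req, timeout=5)
```

Keeping message construction separate from sending makes the payload easy to unit-test and lets the same alert fan out to SMS, email, or chat adapters.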

Step 7: Monitoring and Maintenance

Deploying AI models is not a one-time task. Performance should be monitored continuously.

Key Metrics:

Accuracy (Precision, Recall)

Latency

False Positive/Negative rate

Frames per second (FPS)

Tools:

Prometheus + Grafana (for monitoring)

MLflow or Weights & Biases (for model versioning and experiment tracking)
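The quality metrics in the list reduce to a few counts. A minimal sketch, assuming detections have already been matched to ground truth (for example by an IoU threshold) to yield true-positive, false-positive, and false-negative counts; the example numbers are made up. The classic false positive rate, FP / (FP + TN), needs true negatives, which detection tasks usually do not define, so the false discovery rate (or false alarms per hour) is a common substitute.

```python
from dataclasses import dataclass


@dataclass
class EvalCounts:
    tp: int  # detections matched to a ground-truth object
    fp: int  # detections with no matching object (false alarms)
    fn: int  # ground-truth objects the model missed


def precision(c: EvalCounts) -> float:
    return c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0


def recall(c: EvalCounts) -> float:
    return c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0


def miss_rate(c: EvalCounts) -> float:
    """False negative rate, equal to 1 - recall."""
    return c.fn / (c.tp + c.fn) if c.tp + c.fn else 0.0


def false_discovery_rate(c: EvalCounts) -> float:
    """Share of alerts that were false alarms, equal to 1 - precision."""
    return c.fp / (c.tp + c.fp) if c.tp + c.fp else 0.0


def throughput_fps(frames: int, elapsed_s: float) -> float:
    """Processed frames per second over a measurement window."""
    return frames / elapsed_s


counts = EvalCounts(tp=90, fp=10, fn=30)
print(precision(counts), recall(counts), miss_rate(counts), false_discovery_rate(counts))
print(throughput_fps(frames=1500, elapsed_s=60.0))
```

Values like these can be exported as gauges to a monitoring stack such as Prometheus and tracked per model version.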

Step 8: Security, Privacy & Compliance

Video data is sensitive, so it’s vital to address:

GDPR/CCPA compliance

Video redaction (blurring faces/license plates)

Secure data transmission (TLS/SSL)

Pro Tip: Use anonymization techniques and role-based access control (RBAC) in your application.
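Redaction usually means detecting faces or license plates and then obscuring those regions. Assuming the boxes come from an upstream detector, the obscuring step can be as simple as pixelating each region. The sketch uses a plain list-of-rows grayscale image to stay dependency-free; a real pipeline would operate on OpenCV or NumPy arrays, and `pixelate` is a hypothetical helper.

```python
Image = list[list[int]]  # grayscale, image[row][col] in 0..255


def pixelate(image: Image, box: tuple[int, int, int, int], block: int = 8) -> Image:
    """Return a copy of image with box (x_min, y_min, x_max, y_max) pixelated.

    Each block x block tile inside the box is replaced by its mean value,
    removing fine detail such as facial features or plate characters.
    """
    out = [row[:] for row in image]  # never modify the original frame
    x_min, y_min, x_max, y_max = box
    for by in range(y_min, y_max, block):
        for bx in range(x_min, x_max, block):
            ys = range(by, min(by + block, y_max))
            xs = range(bx, min(bx + block, x_max))
            tile = [image[y][x] for y in ys for x in xs]
            mean = round(sum(tile) / len(tile))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out
```

Larger blocks (or a solid fill) hide more detail; redacting a copy keeps the original available only to roles that are allowed to see it.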

Step 9: Scaling the Solution

As more video feeds and locations are added, the architecture should scale seamlessly.

Scaling Strategies:

Containerization (Docker)

Orchestration (Kubernetes)

Auto-scaling with cloud platforms

Microservices-based architecture

Best Practice: Use a modular pipeline so each part (video input, AI model, alert engine) can scale independently.
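The modular-pipeline advice can be illustrated in-process: a stage reads from one queue and writes to the next, so its worker count is a knob that can be turned independently of other stages. This is a threading sketch with a stand-in `slow_inference` function; in a containerized deployment the queues would typically be a broker such as Kafka and the workers separate containers or pods.

```python
import queue
import threading
import time

SENTINEL = None  # tells a worker to stop


def stage_worker(inbox: queue.Queue, outbox: queue.Queue, fn) -> None:
    """Pull items from inbox, apply fn, push results to outbox until SENTINEL."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            break
        outbox.put(fn(item))


def run_stage(items, fn, workers: int) -> list:
    """Process items with `workers` parallel threads; output order is not guaranteed."""
    inbox, outbox = queue.Queue(), queue.Queue()
    threads = [threading.Thread(target=stage_worker, args=(inbox, outbox, fn))
               for _ in range(workers)]
    for t in threads:
        t.start()
    for item in items:
        inbox.put(item)
    for _ in threads:
        inbox.put(SENTINEL)  # one stop signal per worker, queued after all items
    for t in threads:
        t.join()
    return [outbox.get() for _ in range(outbox.qsize())]


def slow_inference(frame_id: int) -> tuple[int, str]:
    time.sleep(0.01)  # stand-in for model latency
    return frame_id, "ok"


if __name__ == "__main__":
    for n in (1, 4):
        start = time.perf_counter()
        results = run_stage(range(40), slow_inference, workers=n)
        print(n, "workers:", len(results), "frames in",
              round(time.perf_counter() - start, 2), "s")
```

Threads help here because the stand-in sleeps (as I/O or GPU-bound calls often release the interpreter lock); CPU-bound pure-Python stages would use processes instead.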

Step 10: Continuous Improvement with Feedback Loops

Real-world data is messy, and edge cases arise often. Use feedback loops to capture these cases and retrain models:

Automatically collect misclassified instances

Use human-in-the-loop (HITL) systems for validation

Periodically retrain and redeploy models
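One common way to collect likely mistakes automatically is to route uncertain predictions to a human review queue and keep the cases where reviewers disagree with the model. A minimal human-in-the-loop sketch; the confidence band (0.35 to 0.65) and the record format are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    frame_id: str
    label: str
    confidence: float


@dataclass
class ReviewQueue:
    low: float = 0.35   # below this, treat as background and discard (assumed)
    high: float = 0.65  # at or above this, trust the model (assumed)
    pending: list[Prediction] = field(default_factory=list)
    labeled: list[tuple[Prediction, str]] = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        """Send uncertain predictions to humans; return the routing decision."""
        if self.low <= pred.confidence < self.high:
            self.pending.append(pred)
            return "review"
        return "accept" if pred.confidence >= self.high else "discard"

    def record_label(self, pred: Prediction, human_label: str) -> None:
        """Store the reviewer's answer for a pending prediction."""
        self.pending.remove(pred)
        self.labeled.append((pred, human_label))

    def retraining_set(self) -> list[tuple[str, str]]:
        """Frames where the reviewer disagreed with the model, with corrected labels."""
        return [(p.frame_id, lbl) for p, lbl in self.labeled if lbl != p.label]
```

The corrected examples from `retraining_set` would then be merged into the training data before the next periodic retrain.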

Conclusion

Building scalable AI Video Analytics Software is a multi-disciplinary effort combining computer vision, data engineering, cloud computing, and UX design. With the right tools, frameworks, and development strategy, organizations can unlock immense value from their video data—turning passive footage into actionable intelligence.

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: MicroK8s, Docker, and other Kubernetes tooling are readily available as snaps, ideal for edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and databases (e.g., ARM builds of PostgreSQL) via APT and the Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision workloads on Jetson devices (e.g., with ROS 2 on Ubuntu).

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: About 5 years of security updates through regular support plus Debian LTS, extendable to roughly 10 years through commercial Extended LTS (ELTS).

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses.

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

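Critical systems (medical/transport): Can serve as the base OS in projects pursuing IEC 62304 (medical device software) or DO-178C (airborne software) compliance; certification applies to the product and its development process, not to the distribution itself.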

Limitations

Older software versions (e.g., default GCC version); newer features require backports.

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai/Preempt-RT patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Industrial-grade security features such as SELinux and dm-verity are available through additional layers (e.g., meta-selinux, meta-security).

Use Cases

Custom industrial devices: Requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: RTOS built with Yocto (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.

Edge AI and Real-Time Modeling

In today’s fast-paced digital landscape, businesses need instant insights to stay competitive. Edge AI and real-time modeling are revolutionizing how data is processed, enabling smarter decisions at the source. At Global TechnoSol, we’re harnessing these technologies to deliver cutting-edge solutions for industries like e-commerce, SaaS, and healthcare. Let’s explore how Edge AI powers real-time modeling and why it’s a game-changer in 2025.

What Is Edge AI and Real-Time Modeling?

Edge AI refers to deploying artificial intelligence algorithms on edge devices—think IoT sensors, cameras, or drones—closer to where data is generated. Unlike traditional cloud-based AI, Edge AI processes data locally, slashing latency and enhancing privacy. Real-time modeling, a core application of Edge AI, involves running AI models to analyze data and make decisions instantly. For example, a smart factory sensor can predict equipment failure in milliseconds, preventing costly downtime.

This combination is critical for applications requiring immediate responses, such as autonomous vehicles or medical monitoring systems, where delays can be catastrophic.

How Edge AI Enables Real-Time Modeling

Edge AI powers real-time modeling by bringing computation to the data source. Here’s how it works:

Local Data Processing: Edge devices such as NVIDIA Jetson or Google Coral process data on-site, reducing the need to send it to the cloud. This can cut response latency to milliseconds, which suits real-time applications like traffic management.

Optimized AI Models: Techniques such as model quantization shrink AI models so they run efficiently on resource-constrained devices (post-training INT8 quantization is common for vision models, while methods like GPTQ target large language models). For instance, a smart camera can run a quantized YOLO11 model for real-time object detection without depending on the cloud.

Continuous Learning: Edge AI models improve over time when challenging data is uploaded to the cloud for retraining and the updated models are redeployed. This feedback loop maintains accuracy in dynamic environments, such as monitoring patient vitals in healthcare.

Example in Action: In agriculture, AI-equipped drones analyze soil health in real time and decide instantly where to apply fertilizer, optimizing yields without relying on an internet connection.
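Quantization is easiest to see on a handful of numbers. The sketch shows symmetric per-tensor INT8 post-training quantization of a small weight list in plain Python. It is a simplified illustration of the general idea, not GPTQ or any toolkit's exact algorithm, and the weights are made up.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map 8-bit integers back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.82, -1.27, 0.003, 0.45, -0.6]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(scale, 4), round(max_err, 4))
```

Storing `q` as 8-bit integers instead of 32-bit floats cuts weight memory roughly fourfold, at the cost of a rounding error of at most `scale / 2` per weight; very small weights (like 0.003 here) can round to zero.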

Benefits of Edge AI for Real-Time Modeling

Edge AI and real-time modeling offer transformative advantages for businesses:

Ultra-Low Latency: Processing data locally enables near-instant decisions. Autonomous vehicles, for example, use Edge AI to react to obstacles within milliseconds, enhancing safety.

Enhanced Privacy and Security: Keeping sensitive data on-device reduces breach risk. In healthcare, patient data can stay within hospital systems, helping meet regulations such as GDPR.

Cost Efficiency: Reducing cloud data transfers lowers bandwidth costs. Manufacturers can monitor production lines in real time, cutting downtime without hefty cloud expenses.

Scalability: Edge AI's decentralized approach lets businesses scale applications without overloading central servers, a good fit for IoT-driven industries.

Real-World Applications of Edge AI and Real-Time Modeling

Edge AI is reshaping industries with real-time modeling:

Healthcare: Wearable devices use Edge AI to monitor heart rate and detect anomalies instantly, alerting doctors without cloud delays.

Manufacturing: Edge AI predicts equipment failures in smart factories, enabling proactive maintenance and boosting productivity.

Smart Cities: Traffic lights with Edge AI analyze patterns in real time, reducing congestion and improving urban mobility.

E-Commerce: Retailers use Edge AI to personalize in-store experiences, adapting recommendations based on customer behavior instantly.

Challenges and Future Trends

While powerful, Edge AI faces hurdles:

Resource Constraints: Edge devices have limited power and memory, making it hard to run complex models. Advances such as TinyML address this by producing frugal AI models.

Security Risks: Local processing reduces cloud risks but exposes devices to physical tampering. End-to-end encryption and secure hardware are critical countermeasures.

The Future: By 2030, 5G and specialized AI chips are expected to make Edge AI far more widespread, enabling real-time modeling in remote areas for applications such as precision agriculture.

Why Choose Global TechnoSol for Edge AI Solutions?

At Global TechnoSol, we specialize in integrating Edge AI into your digital strategy. From e-commerce personalization to SaaS optimization, our team delivers real-time modeling solutions that drive results. Check out our case studies to see how we’ve helped businesses like yours succeed, or contact us to start your journey.

Conclusion

Edge AI and real-time modeling are redefining how businesses operate, offering speed, security, and scalability. As these technologies evolve, they’ll unlock new possibilities across industries. Ready to leverage Edge AI for your business? Let Global TechnoSol guide you into the future of real-time intelligence.

Artificial Intelligence of Things (AIoT) Market Strategic Analysis and Business Opportunities 2032

Artificial Intelligence of Things (AIoT) Market was valued at USD 28.15 billion in 2023 and is expected to reach USD 369.18 billion by 2032, growing at a CAGR of 33.13% over 2024-2032.
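The report's figures can be cross-checked with the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch assumes nine compounding years from the 2023 base to 2032; the helper names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


implied = cagr(28.15, 369.18, years=9)  # USD billions, 2023 -> 2032
print(f"implied CAGR: {implied:.2%}")
print(f"2032 value at 33.13%: {project(28.15, 0.3313, 9):.1f} bn USD")
```

With nine compounding years the implied rate lands within a few hundredths of a percentage point of the stated 33.13%, so the valuation, forecast, and growth rate are consistent up to rounding.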

The Artificial Intelligence of Things (AIoT) Market is expanding rapidly, combining AI capabilities with IoT to enhance automation, efficiency, and decision-making. AIoT is revolutionizing industries such as healthcare, manufacturing, smart cities, and retail by enabling intelligent, data-driven operations. With increasing investments in AI-powered IoT solutions, the market is expected to witness unprecedented growth in the coming years.

The Artificial Intelligence of Things (AIoT) Market continues to gain momentum as businesses recognize the potential of AI-driven IoT ecosystems. AIoT enables real-time analytics, predictive maintenance, and smart automation, improving operational efficiency across various sectors. The integration of AI with IoT devices is transforming industries by enabling advanced decision-making, reducing downtime, and optimizing resource management.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3793

Market Keyplayers:

IBM – Watson IoT Platform

Intel – Intel AI-powered IoT Solutions

Cisco Systems – Cisco IoT Cloud Connect

Microsoft – Azure IoT Suite

Google – Google Cloud IoT

Amazon Web Services (AWS) – AWS IoT Core

Qualcomm – Qualcomm AI and IoT solutions

NVIDIA – Jetson AI platform for edge computing

Samsung Electronics – SmartThings

Siemens – MindSphere IoT platform

Honeywell – Honeywell Connected Plant

General Electric (GE) – Predix Platform

Hitachi – Lumada IoT platform

Palo Alto Networks – IoT Security Platform

Bosch – Bosch IoT Suite

Schneider Electric – EcoStruxure IoT-enabled solutions

Dell Technologies – Dell Edge Gateway 5000

Arm – Arm Pelion IoT Platform

SAP – SAP Leonardo IoT

Rockwell Automation – FactoryTalk Analytics

Market Trends Driving AIoT Growth

1. Expansion of Edge AI Computing

AI-powered IoT devices are increasingly processing data at the edge, reducing latency and improving real-time decision-making without relying on cloud computing.

2. Rise of Smart Cities and Infrastructure

Governments and enterprises are investing in AIoT solutions for traffic management, smart energy grids, and enhanced public safety systems, driving large-scale adoption.

3. AI-Enabled Predictive Maintenance

Industries are leveraging AIoT for predictive analytics, helping prevent equipment failures and optimize maintenance schedules, reducing downtime and costs.

4. Integration with 5G Networks

With the rollout of 5G, AIoT devices can communicate faster and more efficiently, supporting real-time applications in autonomous vehicles, remote healthcare, and industrial automation.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3793

Market Segmentation:

By Application

Video Surveillance

Robust Asset Management

Inventory Management

Energy Consumption Management

Predictive Maintenance

Real-Time Machinery Condition Monitoring

Supply Chain Management

By Deployment

Cloud-based

Edge AIoT

By Vertical

Healthcare

Automotive & Transportation

Retail

Agriculture

Manufacturing

Logistics

BFSI

Others

Market Analysis and Current Landscape

Key factors influencing market growth include:

Growing AI Investments: Tech giants and startups are heavily investing in AIoT development to enhance automation and intelligence in connected devices.

Increasing IoT Deployments: Businesses are deploying IoT solutions at scale, integrating AI for smarter and more efficient operations.

Advancements in Machine Learning: Improved AI algorithms are enhancing decision-making capabilities, making AIoT applications more effective and adaptable.

Regulatory and Security Challenges: Data privacy concerns and cybersecurity risks remain key challenges, prompting the need for robust AIoT security frameworks.

Future Prospects of AIoT

1. AIoT in Autonomous Vehicles

AIoT will play a crucial role in self-driving technology by enabling real-time data processing, object detection, and predictive analytics to enhance vehicle safety and efficiency.

2. Smart Healthcare Innovations

The healthcare industry will benefit from AIoT through remote monitoring, AI-assisted diagnostics, and smart medical devices, improving patient care and operational efficiency.

3. Industrial Automation and Smart Manufacturing

AIoT-driven robotics and intelligent monitoring systems will revolutionize manufacturing processes, reducing costs and increasing production efficiency.

4. Advanced AIoT Security Measures

With increasing cybersecurity threats, AIoT systems will integrate advanced encryption, AI-driven threat detection, and blockchain technology for enhanced security.

Access Complete Report: https://www.snsinsider.com/reports/artificial-intelligence-of-things-market-3793

Conclusion

The Artificial Intelligence of Things (AIoT) Market is set for exponential growth, with AI-driven IoT applications transforming industries worldwide. Businesses investing in AIoT will gain a competitive advantage by leveraging automation, data-driven insights, and enhanced efficiency. As AIoT continues to evolve, its impact on industries such as healthcare, transportation, manufacturing, and smart cities will shape the future of connected technology.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)
