#On-prem Database Migration


5 Signs You’re Overpaying for Cloud — and How to Stop It

In today’s digital-first business environment, cloud computing has become a necessity. But as more organizations migrate to the cloud, many are waking up to a tough reality: they’re significantly overpaying for cloud services.

If you’re relying on AWS, Azure, GCP, or other providers to run your infrastructure, chances are you’re spending more than you need to. The complexity of cloud billing, unused resources, and lack of visibility can quickly balloon your monthly invoices.

1. You’re Running Unused or Idle Resources

One of the most common (and silent) culprits of cloud overspending is idle or underutilized resources.

The Problem:

You spin up instances or containers for testing, dev environments, or special projects — and then forget about them. These resources continue running 24/7, racking up costs, even though no one is using them.

The Fix:

Implement auto-scheduling tools to shut down non-production environments during off-hours. Regularly audit your infrastructure for zombie instances, unattached volumes, and dormant databases.
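
As a rough illustration of such an audit, here is a minimal Python sketch. It assumes you have already exported an inventory with an environment label and an average CPU figure per resource. The `Instance` fields, the 5% threshold, and the sample names are all hypothetical and not tied to any provider's API.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    environment: str         # e.g. "prod", "dev", "test"
    avg_cpu_percent: float   # average CPU over the review window
    attached: bool = True    # False for an orphan such as an unattached volume


def find_idle(instances, cpu_threshold=5.0):
    """Return names of non-production resources that look idle or orphaned."""
    flagged = []
    for inst in instances:
        if inst.environment == "prod":
            continue  # production is never flagged automatically
        if not inst.attached or inst.avg_cpu_percent < cpu_threshold:
            flagged.append(inst.name)
    return flagged


# Hypothetical inventory used only for illustration.
inventory = [
    Instance("web-prod-1", "prod", 2.0),
    Instance("dev-api", "dev", 1.2),
    Instance("test-runner", "test", 40.0),
    Instance("old-volume", "dev", 0.0, attached=False),
]
idle = find_idle(inventory)
print(idle)
```

A list like this is a starting point for review, not a delete list: a low CPU average can also describe a resource that is simply waiting for its next scheduled job.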

Tools like Cloudtopiaa’s automated resource analyzer can help identify and eliminate these wasted assets in real-time.

2. Your Cloud Bill Is Increasing Without Business Growth

Have you noticed your cloud costs increasing month-over-month, but your user base, traffic, or application load hasn’t significantly changed?

The Problem:

This is a classic sign of inefficient scaling, poor resource planning, or architectural issues like inefficient queries or chatty services.

The Fix:

Conduct a detailed cloud cost audit to understand which services or workloads are spiking. Use cost allocation tags to track spend by department, team, or service. Monitor usage trends, and align them with business performance indicators.
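
To make the tagging idea concrete, the sketch below groups billing line items by a cost allocation tag. The record layout (`cost`, `tags`) and the `team` tag key are assumptions for illustration; real billing exports differ by provider.

```python
from collections import defaultdict


def spend_by_tag(line_items, tag_key):
    """Sum cost per value of one tag; items missing the tag go to 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical line items, as if parsed from a billing export.
line_items = [
    {"service": "compute", "cost": 120.0, "tags": {"team": "payments"}},
    {"service": "storage", "cost": 30.0, "tags": {"team": "payments"}},
    {"service": "compute", "cost": 75.5, "tags": {"team": "search"}},
    {"service": "database", "cost": 50.0, "tags": {}},
]
totals = spend_by_tag(line_items, "team")
print(totals)
```

The size of the "untagged" bucket is itself a useful signal: if it is large, tagging enforcement is the first thing to fix.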

🔍 Cloudtopiaa provides intelligent cloud billing breakdowns and predictive analytics to help businesses map their spend against real ROI.

3. You’re Using the Wrong Instance Types or Pricing Models

Most cloud providers offer a range of pricing models: on-demand, reserved instances, spot pricing, and more. Using the wrong type can mean paying 2–3x more than necessary.

The Problem:

If you’re only using on-demand instances — even for long-term workloads — you’re missing out on serious savings. Similarly, choosing oversized instances for minimal workloads leads to resource waste.

The Fix:

Use rightsizing tools to analyze your compute needs and adjust instance types accordingly.

Shift stable workloads to Reserved Instances (RI) or Savings Plans.

For temporary or flexible jobs, consider Spot Instances at a fraction of the cost.
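
The trade-off between on-demand and committed pricing comes down to utilization. The sketch below compares the two for one instance, assuming a commitment is billed for every hour of the month whether or not the instance runs. The hourly rates are made-up numbers, not real price-list values.

```python
HOURS_PER_MONTH = 730  # common approximation: 8760 hours / 12 months


def cheaper_option(on_demand_rate, committed_rate, hours_used):
    """Compare paying per used hour with paying a lower rate for every hour."""
    on_demand_cost = on_demand_rate * hours_used
    committed_cost = committed_rate * HOURS_PER_MONTH
    choice = "committed" if committed_cost < on_demand_cost else "on-demand"
    return choice, on_demand_cost, committed_cost


# Hypothetical rates in dollars per hour.
always_on = cheaper_option(0.10, 0.06, hours_used=730)
part_time = cheaper_option(0.10, 0.06, hours_used=200)
print(always_on[0], part_time[0])
```

With these example rates the break-even point is 438 hours a month (0.06 × 730 / 0.10). A workload that runs less than that is cheaper on demand, which is why commitments suit stable workloads and not occasional ones.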

Cloudtopiaa’s smart instance advisor recommends optimal combinations of pricing models tailored to your workload patterns.

4. Lack of Visibility and Accountability in Cloud Spending

Cloud sprawl is real. Without proper governance, it’s easy to lose track of who’s spinning up what — and why.

The Problem:

No centralized visibility leads to fragmented billing, making it hard to know which teams, projects, or environments are responsible for overspending.

The Fix:

Implement cloud cost governance policies, and enforce tagging across all cloud assets. Establish a FinOps strategy that aligns IT, finance, and engineering teams around budgeting, forecasting, and reporting.

📊 With Cloudtopiaa’s dashboards, you get a 360° view of your cloud costs — by team, project, environment, or region.

5. You’re Not Optimizing for Multi-Cloud or Hybrid Environments

If you’re using more than one cloud provider — or a mix of on-prem and cloud — chances are you’re not optimizing across platforms.

The Problem:

Vendor lock-in, duplicated services, and lack of unified visibility lead to inefficiencies and overspending.

The Fix:

Evaluate cross-cloud redundancy and streamline services.

Use a multi-cloud cost management platform to monitor usage and optimize spending across providers.

Consolidate billing and centralize decision-making.

Cloudtopiaa supports multi-cloud monitoring and provides a unified interface to manage and optimize spend across AWS, Azure, and GCP.

How Cloudtopiaa Helps You Stop Overpaying for Cloud

At Cloudtopiaa, we specialize in helping companies reduce cloud spending without sacrificing performance or innovation.

Features that Save You Money:

Automated Cost Analysis: Identify inefficiencies in real time.

Rightsizing Recommendations: Resize VMs and services based on actual usage.

Billing Intelligence: Break down your cloud bills into actionable insights.

Smart Tagging & Governance: Gain control over who’s spending what.

Multi-Cloud Optimization: Get the best value from AWS, Azure, and GCP.

Practical Steps to Start Saving Today

Audit Your Cloud Resources: Identify unused or underused services.

Set Budgets & Alerts: Monitor spend proactively.

Optimize Workloads: Use automation tools to scale resources.

Leverage Discounted Pricing Models: Match pricing strategies with workload behavior.

Use a Cloud Optimization Partner: Tools like Cloudtopiaa do the heavy lifting for you.

Final Thoughts

Cloud isn’t cheap — but cloud mismanagement is far more expensive.

By recognizing the signs of overspending and taking proactive steps to optimize your cloud usage, you can save thousands — if not millions — each year.

Don’t let complex billing and unmanaged infrastructure drain your resources. Let Cloudtopiaa help you regain control, reduce waste, and spend smarter on the cloud.

📌 Visit Cloudtopiaa.com today to schedule your cloud cost optimization assessment.


What to Expect from Oracle Consulting Services?

In a world where technology evolves faster than ever, businesses are under pressure to modernize, integrate, and innovate—while keeping their core systems running smoothly. Whether you're migrating to the cloud, building low-code applications, or optimizing enterprise workflows, having the right guidance can make or break your success.

That’s where Oracle Consulting Services comes in.

Oracle’s ecosystem is vast—ranging from Oracle Cloud Infrastructure (OCI), APEX, Fusion Apps, E-Business Suite, to powerful analytics and database solutions. But without the right expertise, unlocking the full value of these technologies can be a challenge.

In this blog, we’ll break down what to expect from Oracle Consulting Services, what they offer, and how they can accelerate your business transformation.

What Are Oracle Consulting Services?

Oracle Consulting Services provide expert guidance, implementation support, and strategic advisory for organizations working within the Oracle technology stack. These services are delivered by certified professionals with deep experience in Oracle products and industry best practices.

Consultants can work across all stages of your project—from discovery and planning, through to deployment, training, and ongoing support.

1. Strategic Assessment and Roadmapping

Oracle consultants begin by understanding your current landscape:

What systems are you using (e.g., Oracle Forms, EBS, on-prem databases)?

What are your pain points and business goals?

Where do you want to go next—Cloud, APEX, Fusion, analytics?

Based on this, they deliver a tailored IT roadmap that aligns your Oracle investments with your long-term strategy.

2. End-to-End Implementation and Integration

Whether you're deploying a new Oracle solution or modernizing an existing one, consultants help with:

Solution architecture and setup

Custom application development (e.g., APEX or VBCS)

Integrations with 3rd party or legacy systems

Data migration and transformation

Oracle consultants follow proven methodologies that reduce risk, improve delivery timelines, and ensure system stability.

3. Security, Performance & Compliance Optimization

Security and performance are top concerns, especially in enterprise environments. Oracle consultants help you:

Configure IAM roles and network security on OCI

Apply best practices for performance tuning on databases and apps

Implement compliance and governance frameworks (e.g., GDPR, HIPAA)

4. Modernization and Cloud Migrations

Oracle Consulting Services specialize in lifting and shifting or rebuilding apps for the cloud. Whether you’re moving Oracle Forms to APEX, or EBS to Fusion Cloud Apps, consultants provide:

Migration strategy & execution

Platform re-engineering

Cloud-native redesign for scalability

5. Training and Knowledge Transfer

A successful Oracle implementation isn’t just about code—it's about empowering your team. Consultants provide:

User training sessions

Developer enablement for APEX, Fusion, or OCI

Documentation and transition support

Whether you're planning a migration to Oracle APEX, exploring OCI, or integrating Fusion Apps, Oracle Consulting Services can help you move with confidence.


From Migration to Optimization: End-to-End Cloud Solutions with an AWS Partner

In the digital-first era, businesses are embracing cloud transformation not just to modernize IT, but to gain a competitive edge, drive innovation, and achieve operational efficiency. However, the journey from on-premise infrastructure to the cloud is complex and requires more than just technical lift-and-shift. It demands deep cloud expertise, strategic planning, and continuous optimization.

This is where partnering with an AWS (Amazon Web Services) certified provider becomes invaluable. From cloud migration to long-term performance tuning, an AWS Partner delivers end-to-end cloud solutions tailored to your business goals, ensuring speed, scalability, and security every step of the way.

In this article, we explore the full lifecycle of cloud transformation with an AWS Partner—from initial migration to post-deployment optimization—and why businesses across industries trust this collaborative approach to power their digital future.

Why AWS?

Before diving into the role of an AWS Partner, it's essential to understand why AWS is the platform of choice for over a million active customers globally.

Comprehensive Service Portfolio: AWS offers 200+ fully featured services including computing, storage, networking, analytics, machine learning, and security.

Scalability & Flexibility: Easily scale workloads up or down based on demand.

Global Reach: With data centers in 30+ geographic regions, AWS enables global deployment and low-latency access.

Security & Compliance: Robust security tools and compliance frameworks for industries like finance, healthcare, and government.

Cost Optimization Tools: Pay-as-you-go pricing and various cost control mechanisms.

However, unlocking the full potential of AWS requires experience and strategic guidance. This is where certified AWS Partners come in.

The Role of an AWS Partner

An AWS Partner is an organization accredited through the AWS Partner Network (APN) for demonstrating deep expertise in AWS technologies. They help businesses of all sizes design, architect, migrate, manage, and optimize their AWS cloud environments.

Key Benefits of Working with an AWS Partner:

Access to certified AWS architects and engineers

Industry-specific cloud solutions and compliance knowledge

Proven frameworks for migration, governance, and automation

24/7 managed support and ongoing optimization

Accelerated time-to-market for digital initiatives

Whether you're a startup, SME, or enterprise, an AWS Partner becomes your trusted cloud advisor throughout the journey.

Phase 1: Cloud Readiness Assessment

The cloud journey starts with a readiness assessment. An AWS Partner begins by analyzing your existing IT landscape, business goals, and compliance requirements.

This includes:

Inventory of current workloads and applications

Cost-benefit analysis for migration

Identifying quick wins and mission-critical systems

Developing a tailored cloud roadmap

Outcome: A well-defined strategy for transitioning to the cloud with minimal disruption.

Phase 2: Secure and Seamless Cloud Migration

Cloud migration is more than just moving data—it’s about transforming how your business operates. An AWS Partner follows best practices and AWS migration methodologies to ensure a secure, seamless transition.

Common Migration Strategies (the 6 R’s):

Rehosting – Lift-and-shift approach to move applications as-is

Replatforming – Making minor tweaks to optimize performance

Refactoring – Redesigning applications to leverage cloud-native capabilities

Repurchasing – Switching to SaaS alternatives

Retiring – Decommissioning outdated systems

Retaining – Keeping some apps on-prem for specific reasons
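
One way to read the six strategies above is as an ordered set of questions asked about each application. The sketch below encodes that reading in Python. The attribute names and the order of the checks are assumptions made for illustration, not an official AWS decision procedure.

```python
def suggest_strategy(app):
    """Map a dict of yes/no facts about an application to one of the 6 R's."""
    if app.get("obsolete"):
        return "Retire"        # decommission outdated systems
    if app.get("must_stay_on_prem"):
        return "Retain"        # keep on-prem for specific reasons
    if app.get("saas_alternative"):
        return "Repurchase"    # switch to a SaaS product
    if app.get("needs_cloud_native_redesign"):
        return "Refactor"      # redesign for cloud-native capabilities
    if app.get("needs_minor_tuning"):
        return "Replatform"    # small tweaks to optimize performance
    return "Rehost"            # default: lift and shift as-is


# Hypothetical portfolio entries.
portfolio = {
    "legacy-reporting": {"obsolete": True},
    "crm": {"saas_alternative": True},
    "billing-db": {"needs_minor_tuning": True},
    "intranet": {},
}
plan = {name: suggest_strategy(facts) for name, facts in portfolio.items()}
print(plan)
```

In practice the answers come out of the readiness assessment, and a single application may move through more than one strategy over time, for example rehosting first and refactoring later.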

Tools and Frameworks Used:

AWS Migration Hub

AWS Application Discovery Service

AWS Database Migration Service

AWS Application Migration Service (the successor to CloudEndure Migration)

An AWS Partner ensures minimal downtime, data integrity, and a phased approach aligned with business priorities.

Phase 3: Modernization & Cloud-Native Development

Once your workloads are on AWS, an AWS Partner helps you modernize them to leverage cloud-native capabilities fully.

This includes:

Breaking down monolithic apps into microservices

Deploying containers using Amazon ECS or EKS

Building serverless applications with AWS Lambda

Leveraging DevOps pipelines for CI/CD automation

Integrating AI/ML, IoT, or big data analytics solutions

Modernization enhances agility, scalability, and resilience, giving you a competitive advantage in your market.

Phase 4: Cloud Optimization & Cost Management

Cloud success isn’t just about migration; it’s about continuous optimization. AWS Partners use tools like AWS Trusted Advisor and AWS Cost Explorer to monitor and optimize your environment.

Key Optimization Areas:

Right-sizing EC2 instances and storage volumes

Auto-scaling workloads based on demand

Reserved Instances and Savings Plans for long-term savings

Monitoring and alerts via Amazon CloudWatch

Security and patch management to reduce vulnerabilities

This proactive approach leads to lower costs, improved performance, and enhanced security.

Phase 5: Managed Services & Ongoing Support

Post-deployment, AWS Partners offer managed services to handle the day-to-day operations of your cloud infrastructure.

Managed services include:

24/7 monitoring and incident response

Regular backups and disaster recovery planning

IT Security audits and compliance checks

SLA-driven support and issue resolution

Cloud lifecycle reporting and dashboards

This ensures business continuity, peace of mind, and freedom to focus on innovation rather than maintenance.

Industry-Specific Solutions with AWS Partners

AWS Partners often specialize in vertical-specific cloud solutions:

Healthcare: HIPAA-compliant workloads, secure patient data storage

Finance: PCI-DSS compliance, real-time analytics, fraud detection

Retail: Scalable eCommerce platforms, omnichannel customer experiences

Education: Virtual learning environments, student data management

Government: Highly secure and regulated cloud environments

This industry-focused expertise ensures your solution not only works technically but aligns with your business goals and regulatory standards.

Choosing the Right AWS Partner

Not all partners are the same. When selecting an AWS Partner, look for:

AWS certifications and partner tier (e.g., Advanced, Premier)

Proven customer success stories and case studies

Specializations in security, DevOps, migration, or specific industries

Local presence and support capabilities (especially for compliance)

A great AWS Partner acts as an extension of your team, not just a vendor.

Future-Proofing Your Cloud Strategy

Technology continues to evolve rapidly. A skilled AWS Partner keeps you ahead of the curve with:

Ongoing optimization and innovation workshops

Security posture assessments

Cloud-native redesigns and app modernization

Data analytics and AI adoption

Sustainability-focused cloud planning

Cloud transformation is a journey, not a one-time event. An AWS Partner ensures your environment is always aligned with emerging trends, business goals, and market demands.

Final Thoughts

The path from cloud migration to full optimization requires more than tools—it requires a strategic, guided approach that minimizes risk and maximizes return on investment.

Partnering with a certified AWS Partner ensures:

A smooth, secure, and scalable migration process

Modernized applications that drive agility and innovation

Ongoing support and optimization to control costs and improve performance

In an increasingly digital world, working with an AWS Partner isn’t just smart—it’s essential.

Ready to accelerate your cloud journey?

Let a trusted AWS Partner guide you from migration to optimization—and beyond.



Demand for SAP HANA has been strong and growing in recent years, especially with the increasing adoption of cloud-based solutions, AI integration, and real-time data processing. Here are some key aspects of its scope:

1. High Demand for SAP HANA Professionals

Many enterprises are migrating from traditional databases to SAP HANA for faster analytics and real-time processing.

Skills in SAP S/4HANA, SAP BW/4HANA, and SAP HANA Cloud are in high demand.

2. Cloud and Hybrid Deployments

SAP HANA Cloud is gaining traction as companies prefer cloud solutions over on-premise deployments.

Hybrid models (on-prem + cloud) are also being widely adopted, increasing job opportunities in cloud migration and management.

3. Integration with AI & Machine Learning

SAP is embedding AI and ML capabilities into its HANA ecosystem, creating new opportunities for AI-driven analytics and automation.

Roles like SAP HANA Data Scientist and AI Consultant are emerging.

4. Demand Across Industries

Industries like banking, healthcare, retail, and manufacturing are using SAP HANA for real-time decision-making and predictive analytics.

Governments and large enterprises rely on SAP HANA for handling big data efficiently.

5. Growing SAP S/4HANA Adoption

Many companies are moving to SAP S/4HANA, creating demand for migration experts and SAP consultants.

The SAP deadline for ending ECC support in 2027 is pushing companies to upgrade, increasing job opportunities.

Career Opportunities in SAP HANA

SAP HANA Consultant

SAP HANA Developer

SAP S/4HANA Functional Consultant

SAP HANA Cloud Architect

SAP HANA Administrator

Conclusion

SAP HANA has a bright future with opportunities in cloud computing, AI, and data analytics. Professionals with SAP HANA expertise, especially in cloud migration and AI integration, will have excellent career growth in the coming years.


Website: Anubhav Online Trainings | UI5, Fiori, S/4HANA Trainings


Exploring the Role of Azure Data Factory in Hybrid Cloud Data Integration

Introduction

In today’s digital landscape, organizations increasingly rely on hybrid cloud environments to manage their data. A hybrid cloud setup combines on-premises data sources, private clouds, and public cloud platforms like Azure, AWS, or Google Cloud. Managing and integrating data across these diverse environments can be complex.

This is where Azure Data Factory (ADF) plays a crucial role. ADF is a cloud-based data integration service that enables seamless movement, transformation, and orchestration of data across hybrid cloud environments.

In this blog, we’ll explore how Azure Data Factory simplifies hybrid cloud data integration, key use cases, and best practices for implementation.

1. What is Hybrid Cloud Data Integration?

Hybrid cloud data integration is the process of connecting, transforming, and synchronizing data between:

✅ On-premises data sources (e.g., SQL Server, Oracle, SAP)

✅ Cloud storage (e.g., Azure Blob Storage, Amazon S3)

✅ Databases and data warehouses (e.g., Azure SQL Database, Snowflake, BigQuery)

✅ Software-as-a-Service (SaaS) applications (e.g., Salesforce, Dynamics 365)

The goal is to create a unified data pipeline that enables real-time analytics, reporting, and AI-driven insights while ensuring data security and compliance.

2. Why Use Azure Data Factory for Hybrid Cloud Integration?

Azure Data Factory (ADF) provides a scalable, serverless solution for integrating data across hybrid environments. Some key benefits include:

✅ 1. Seamless Hybrid Connectivity

ADF supports more than 90 data connectors, including on-prem, cloud, and SaaS sources.

It enables secure data movement using Self-Hosted Integration Runtime to access on-premises data sources.

✅ 2. ETL & ELT Capabilities

ADF allows you to design Extract, Transform, and Load (ETL) or Extract, Load, and Transform (ELT) pipelines.

Supports Azure Data Lake, Synapse Analytics, and Power BI for analytics.

✅ 3. Scalability & Performance

Being serverless, ADF automatically scales resources based on data workload.

It supports parallel data processing for better performance.

✅ 4. Low-Code & Code-Based Options

ADF provides a visual pipeline designer for easy drag-and-drop development.

It also supports custom transformations using Azure Functions, Databricks, and SQL scripts.

✅ 5. Security & Compliance

Uses Azure Key Vault for secure credential management.

Supports private endpoints, network security, and role-based access control (RBAC).

Complies with GDPR, HIPAA, and ISO security standards.

3. Key Components of Azure Data Factory for Hybrid Cloud Integration

1️⃣ Linked Services

Acts as a connection between ADF and data sources (e.g., SQL Server, Blob Storage, SFTP).

2️⃣ Integration Runtimes (IR)

Azure IR: For data movement between cloud data stores.

Self-Hosted IR: For on-premises to cloud integration.

Azure-SSIS IR: To run SQL Server Integration Services (SSIS) packages in ADF.

3️⃣ Data Flows

Mapping Data Flow: No-code transformation engine.

Wrangling Data Flow: Excel-like Power Query transformation.

4️⃣ Pipelines

Orchestrate complex workflows using different activities like copy, transformation, and execution.

5️⃣ Triggers

Automate pipeline execution using schedule-based, event-based, or tumbling window triggers.
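
Of the three trigger types, the tumbling window is the least self-explanatory: it fires once for each fixed-size, contiguous, non-overlapping time slice. The Python sketch below only illustrates how such windows are laid out. It does not call ADF, and the function name and sample dates are assumptions.

```python
from datetime import datetime, timedelta


def tumbling_windows(start, end, size):
    """Return (window_start, window_end) pairs that tile [start, end) with no gaps or overlap."""
    windows = []
    window_start = start
    while window_start + size <= end:
        windows.append((window_start, window_start + size))
        window_start += size
    return windows


# Three one-hour windows between midnight and 03:00.
windows = tumbling_windows(
    datetime(2024, 1, 1, 0, 0),
    datetime(2024, 1, 1, 3, 0),
    timedelta(hours=1),
)
for window_start, window_end in windows:
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Because each window ends exactly where the next begins, a pipeline run that filters source rows by its own window processes every record once, which is what makes this trigger type a good fit for incremental loads and backfills.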

4. Common Use Cases of Azure Data Factory in Hybrid Cloud

🔹 1. Migrating On-Premises Data to Azure

Extracts data from SQL Server, Oracle, or SAP and moves it to Azure SQL or Synapse Analytics.

🔹 2. Real-Time Data Synchronization

Syncs on-prem ERP, CRM, or legacy databases with cloud applications.

🔹 3. ETL for Cloud Data Warehousing

Moves structured and unstructured data to Azure Synapse or Snowflake for analytics.

🔹 4. IoT and Big Data Integration

Collects IoT sensor data, processes it in Azure Data Lake, and visualizes it in Power BI.

🔹 5. Multi-Cloud Data Movement

Transfers data between Amazon S3, Google BigQuery, and Azure Blob Storage.

5. Best Practices for Hybrid Cloud Integration Using ADF

✅ Use Self-Hosted IR for Secure On-Premises Data Access

✅ Optimize Pipeline Performance using partitioning and parallel execution

✅ Monitor Pipelines using Azure Monitor and Log Analytics

✅ Secure Data Transfers with Private Endpoints & Key Vault

✅ Automate Data Workflows with Triggers & Parameterized Pipelines
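
The partitioning and parallel execution advice can be pictured with a small Python sketch: split a key range into contiguous chunks and copy the chunks concurrently. This is a generic illustration of the idea, not ADF's own mechanism. `copy_chunk` is a placeholder that only counts rows, and the id range is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def partition_range(low, high, parts):
    """Split the inclusive id range [low, high] into at most `parts` contiguous chunks."""
    total = high - low + 1
    size = -(-total // parts)  # ceiling division
    return [(start, min(start + size - 1, high)) for start in range(low, high + 1, size)]


def copy_chunk(bounds):
    """Placeholder: a real copy would read rows with id between the two bounds."""
    chunk_low, chunk_high = bounds
    return chunk_high - chunk_low + 1


chunks = partition_range(1, 1000, 4)
with ThreadPoolExecutor(max_workers=4) as pool:
    rows_copied = sum(pool.map(copy_chunk, chunks))
print(chunks, rows_copied)
```

Even chunks only help when the key is evenly distributed. A skewed key leaves one worker doing most of the work, so it is worth checking the distribution before choosing the partition column.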

6. Conclusion

Azure Data Factory plays a critical role in hybrid cloud data integration by providing secure, scalable, and automated data pipelines. Whether you are migrating on-premises data, synchronizing real-time data, or integrating multi-cloud environments, ADF simplifies complex ETL processes with low-code and serverless capabilities.

By leveraging ADF’s integration runtimes, automation, and security features, organizations can build a resilient, high-performance hybrid cloud data ecosystem.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


The Evolution of Cloud Computing Services in India: A Deep Dive into Sumcircle Technologies

Cloud computing has revolutionized the way businesses operate globally, and India is no exception. As one of the fastest-growing digital economies, India is embracing cloud solutions across industries to enhance scalability, efficiency, and innovation. Among the leaders in this transformative journey is Sumcircle Technologies, a pioneering company providing cutting-edge cloud computing services tailored to meet diverse business needs.

Understanding Cloud Computing

Cloud computing refers to the delivery of computing services, including storage, servers, databases, networking, software, and analytics, over the internet (“the cloud”). It eliminates the need for businesses to own and maintain physical data centers or servers, enabling them to focus on their core operations while leveraging the power of technology.

The key benefits of cloud computing include:

Cost Efficiency: Reduced infrastructure costs.

Scalability: On-demand resources to meet dynamic business needs.

Flexibility: Remote access to applications and data.

Security: Enhanced data protection mechanisms.

Innovation: Accelerated deployment of new applications.

The Growth of Cloud Computing in India

India’s cloud computing market is on an exponential growth trajectory, projected to reach $13 billion by 2026. This surge is driven by:

The rapid adoption of digital technologies.

Government initiatives such as “Digital India.”

Increasing penetration of 5G technology.

A burgeoning startup ecosystem.

The rise of remote work and hybrid workplaces.

Sumcircle Technologies: Empowering Businesses with Cloud Solutions

Sumcircle Technologies has positioned itself as a trusted partner for businesses embarking on their cloud journey. With a comprehensive suite of services, the company caters to startups, SMEs, and large enterprises across various industries. Here’s how Sumcircle is making a difference:

1. Cloud Infrastructure as a Service (IaaS)

Sumcircle provides robust infrastructure solutions, enabling businesses to rent virtualized computing resources. This includes servers, storage, and networking capabilities that scale with organizational growth.

Use Case: A retail startup utilized Sumcircle’s IaaS to handle high website traffic during seasonal sales, ensuring seamless customer experiences.

2. Platform as a Service (PaaS)

Sumcircle’s PaaS offerings empower developers to build, test, and deploy applications efficiently without worrying about underlying infrastructure.

Use Case: A fintech company leveraged Sumcircle’s PaaS to develop and launch a secure mobile payment app, reducing time-to-market by 40%.

3. Software as a Service (SaaS)

Sumcircle delivers software solutions hosted on the cloud, ranging from CRM tools to productivity applications.

Use Case: An educational institution implemented Sumcircle’s SaaS-based learning management system, facilitating online classes and improving student engagement.

4. Cloud Migration Services

Migrating to the cloud can be daunting, but Sumcircle ensures a seamless transition with minimal downtime. The company’s experts assess existing infrastructure, plan migration strategies, and execute them flawlessly.

Use Case: A healthcare provider transitioned to Sumcircle’s cloud platform, achieving enhanced data security and compliance with HIPAA regulations.

5. Managed Cloud Services

For businesses seeking ongoing support, Sumcircle offers managed cloud services, ensuring optimal performance, regular updates, and 24/7 monitoring.

Use Case: An e-commerce company outsourced its cloud management to Sumcircle, allowing its internal team to focus on business growth.

6. Hybrid and Multi-Cloud Solutions

Sumcircle enables businesses to integrate multiple cloud platforms or combine on-premises systems with cloud environments for maximum flexibility and resilience.

Use Case: A manufacturing firm adopted a hybrid model with Sumcircle, maintaining sensitive data on-premises while leveraging cloud analytics for operational insights.

Industry-Specific Cloud Solutions

One of Sumcircle’s distinguishing features is its ability to deliver industry-specific solutions.

Retail: Personalized customer experiences with cloud-based analytics.

Healthcare: Secure storage and real-time access to patient data.

Finance: Scalable platforms for digital banking and financial services.

Education: Virtual classrooms and collaborative learning tools.

Why Choose Sumcircle Technologies?

With a customer-centric approach and a commitment to excellence, Sumcircle Technologies stands out in the competitive cloud computing landscape. Here’s why businesses trust Sumcircle:

Expertise: A team of certified professionals with extensive experience.

Innovation: Continuous adoption of emerging technologies to deliver superior solutions.

Customization: Tailored services to align with specific business objectives.

Support: Dedicated customer support ensuring quick resolution of issues.

Security: Advanced measures to safeguard sensitive data and comply with regulations.

The Road Ahead: Trends in Cloud Computing

As technology evolves, several trends are shaping the future of cloud computing:

Edge Computing: Bringing data processing closer to the source for real-time insights.

Artificial Intelligence and Machine Learning: Enhancing cloud capabilities with AI-driven analytics.

Serverless Computing: Simplifying deployment by abstracting infrastructure management.

Sustainability: Green cloud solutions focusing on energy efficiency.

Sumcircle Technologies is poised to lead in these areas, ensuring its clients remain at the forefront of innovation.

Conclusion

Cloud computing is not just a technology trend; it’s a business imperative. With its comprehensive services and customer-first approach, Sumcircle Technologies is empowering Indian businesses to harness the full potential of the cloud. From startups aiming to scale quickly to enterprises seeking operational efficiencies, Sumcircle is the partner of choice for a seamless cloud journey.

As India continues its digital transformation, companies like Sumcircle are driving progress, one cloud solution at a time. Whether you’re new to the cloud or looking to optimize your existing infrastructure, Sumcircle Technologies offers the expertise and solutions you need to thrive in a connected world. Contact us today for more details.


Migrating SQL Server On-Prem to the Cloud: A Guide to AWS, Azure, and Google Cloud

Taking your on-premises SQL Server databases to the cloud opens a world of benefits such as scalability, flexibility, and often, reduced costs. However, the journey requires meticulous planning and execution. We will delve into the migration process to three of the most sought-after cloud platforms: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), providing you with…


On-prem Database Migration to AWS using DMS

The Sreenidhi Institute of Science and Technology (SNIST) is a technical institute located in Hyderabad, Telangana, India. It is one of the top colleges in Telangana. The institution is affiliated with the Jawaharlal Nehru Technological University, Hyderabad (JNTUH). In 2010-11 it attained autonomous status, the first college under JNTUH to do so. The institution has been proposed for Deemed University status from 2020-21.

SNIST was established in 1997 with the approval of All India Council for Technical Education, Government of Andhra Pradesh, and is affiliated to Jawaharlal Nehru Technological University, Hyderabad. SNIST is sponsored by the Sree Education Society of the Sree Group of Industries.

It runs undergraduate and postgraduate programs and is engaged in research activity leading to Ph.D. Sreenidhi is recognized by the Department of Scientific and Industrial Research as a scientific and industrial research organization.

The institution was accredited by the NBA of AICTE within 5 years of its existence. It has received World Bank assistance under TEQIP.

The Challenge:

We faced several challenges while migrating the MySQL databases from on-prem to AWS using AWS Database Migration Service (DMS), in a MySQL-to-MySQL migration.

Sreenidhi has a large number of databases on its on-prem server, which complicated moving the workload to AWS. DMS has some very useful features, however, so we completed the migration quickly and efficiently.

Problems with the on-prem DB server:

The customer's workload ran on-prem, but the DB server was not functioning well: it would go down suddenly and time out, and the customer was unable to manage the on-prem DB workload. The heavy application server workload kept increasing the DB server's storage use. Taking manual backups was a problem, and so was storing them. The customer was worried about how they would get their data back if a disaster occurred. They also had problems with patch management, automatic DB version upgrades, storing snapshots, and so on.

Business impact on the customer:

The customer was receiving complaints from its own customers because the application server was failing due to DB server problems. In some cases bills did not go out for that reason, and a significant amount of money was lost through the application.

The customer was also incurring high costs: DB server administration, scaling, and managing growing storage capacity. Most important, the customer's servers were being heavily affected.

These are some of the reasons that forced our customer to move to the AWS cloud:

Need for extra IT support

Adherence to industry compliance

Increased maintenance costs

Increased risk of data loss

Some other challenges while migrating the workload:

The migration was homogeneous (MySQL to MySQL), so we did not run into many problems while migrating. We still had to answer the following questions:

How large is the database?

How many tables are there in each database?

Do all the existing tables have primary keys?

Are there any identity columns?

Are tables truncated, and how frequently?

Are there temporary tables?

Is there any column-level encryption?

How often are DDL statements executed?

How hot (busy) is the source database?

What kinds of users, roles, and permissions exist on the source database?

How can the database be accessed (firewall, VPN)?

These are the points we had to consider before migrating the database to AWS, and working through them was very helpful for the migration.
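Several of the questions above (database size, table counts, missing primary keys) can be answered from the MySQL `information_schema`. The following is a minimal sketch, not the tooling used in this project: the query is one common way to list tables without a primary key, and the `TableInfo` records and table names are hypothetical examples.

```python
from dataclasses import dataclass

# One common way to list base tables without a primary key in MySQL.
# The %s placeholder is the schema (database) name, supplied by the DB driver.
TABLES_WITHOUT_PK_SQL = """
SELECT t.table_name
FROM information_schema.tables AS t
LEFT JOIN information_schema.table_constraints AS c
       ON c.table_schema = t.table_schema
      AND c.table_name = t.table_name
      AND c.constraint_type = 'PRIMARY KEY'
WHERE t.table_type = 'BASE TABLE'
  AND t.table_schema = %s
  AND c.constraint_name IS NULL
"""


@dataclass
class TableInfo:
    """Hypothetical per-table facts gathered during the assessment."""
    name: str
    row_count: int
    has_primary_key: bool


def assessment_summary(tables):
    """Summarize table count, total rows, and tables lacking a primary key."""
    return {
        "table_count": len(tables),
        "total_rows": sum(t.row_count for t in tables),
        "missing_primary_key": sorted(
            t.name for t in tables if not t.has_primary_key
        ),
    }


# Example with made-up tables.
tables = [
    TableInfo("students", 1000, True),
    TableInfo("audit_log", 50, False),
]
summary = assessment_summary(tables)
```

Tables flagged under `missing_primary_key` deserve a closer look before migration, since change replication generally relies on a key to identify rows.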

Provided Partner Solution:

Database Migration Service and how it works.

The diagram below helps explain how DMS works.

[Diagram: how AWS DMS works]

We applied the same approach to migrate the on-prem database to AWS, which proved very cost-effective.

Sreenidhi has an on-prem database that we needed to migrate using DMS. We ran a proof of concept (POC) before migrating the database workload to Amazon RDS.

The client has many databases on this server, so we sometimes got stuck in the on-prem environment. We needed to understand the structure of the database server, how it behaves under normal conditions, and how much load it can handle.

Migration Actions-

Migration Discovery/Assessment:

During Migration Assessment, we review the architecture of the existing application, produce an assessment report that includes a network diagram with all the application layers, identifies the application and database components that are not automatically migrated, and estimates the effort for manual conversion work. Although migration analysis tools exist to expedite the evaluation, the bulk of the assessment is conducted by internal staff or with help from AWS Professional Services. This effort is usually 2% of the whole migration effort. One of the key tools in the assessment analysis is the Database Migration Assessment Report. This report provides important information about the conversion of the schema from your source database to your target RDS database instance. More specifically, the Assessment Report does the following:

Identifies schema objects (e.g., tables, views, stored procedures, triggers, etc.) in the source database and the actions that are required to convert them (Action Items) to the target database (including fully automated conversion, small changes like the selection of data types or attributes of tables, and rewrites of significant portions of the stored procedure).

Recommends the best target engine, based on the source database and the features used

Recommends other AWS services that can substitute for missing features

Recommends unique features available in Amazon RDS that can save the customer licensing and other costs

Migration:

We made a plan to migrate the database to AWS.

Prepared the source database server (MySQL):

Created a user with the proper permissions to be used for replication.

Modified mysql.cnf to allow replication.

Allowed access to this instance through the firewall/security groups.
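Before starting a task, it can help to verify that the source preparation steps above actually took effect. The sketch below checks a dictionary of MySQL server variables (such as the output of `SHOW VARIABLES`) against settings that are commonly required for ongoing replication from a self-managed MySQL source. The exact list is an assumption for illustration and should be confirmed against the AWS DMS documentation for your MySQL version.

```python
# Settings commonly required on a self-managed MySQL source for ongoing
# replication. Assumed for illustration; confirm against the AWS DMS docs.
REQUIRED_SETTINGS = {
    "log_bin": "ON",            # binary logging enabled
    "binlog_format": "ROW",     # row-based binary log
    "binlog_row_image": "FULL",
}


def check_replication_settings(variables):
    """Return a list of problems found; an empty list means the check passed.

    `variables` maps MySQL variable names to their values as strings.
    """
    problems = []
    for name, expected in REQUIRED_SETTINGS.items():
        actual = str(variables.get(name, "")).upper()
        if actual != expected:
            problems.append(
                f"{name} should be {expected}, found {actual or 'unset'}"
            )
    # Replication needs a non-zero server ID.
    if int(variables.get("server_id", 0)) < 1:
        problems.append("server_id should be 1 or greater")
    return problems


# Example: a server still using MIXED logging with no server ID set.
issues = check_replication_settings(
    {"log_bin": "ON", "binlog_format": "MIXED",
     "binlog_row_image": "FULL", "server_id": "0"}
)
```

Running such a check as part of the POC makes configuration gaps visible before the replication instance is created.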

After preparing the source database server, we prepared the AWS infrastructure for the migration:

Created a VPC

Created a subnet

Created a route table

Created an IAM user with appropriate access

Specified the RDS storage and instance type

Created the target Amazon RDS database:

Here we specified the RDS instance with its size, networking, storage, and network performance.

Created a replication instance:

We created a replication instance, which replicates all the content of the source database to the target database.

Created the target and source endpoints:

Refreshed the source endpoint schema.

Created a migration task:

We created the source endpoint, the target endpoint, and the replication instance before creating the migration task. Each endpoint is defined by:

Endpoint type

Engine type

Server name

Port number

Encryption protocols

Credentials

Determined how the task should handle large binary objects (LOBs) on the source.

Specified the migration task settings, and enabled and ran premigration task assessments before running the task.
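A DMS migration task is configured largely through two JSON documents: table mappings (which schemas and tables to include) and task settings (including LOB handling). The sketch below builds both as plain Python dictionaries. The schema names are hypothetical, and the LOB values are illustrative assumptions rather than the settings used in this project.

```python
import json


def selection_rules(schemas):
    """Build DMS table-mapping selection rules including every table
    in each of the given schemas."""
    rules = []
    for i, schema in enumerate(schemas, start=1):
        rules.append({
            "rule-type": "selection",
            "rule-id": str(i),
            "rule-name": f"include-{schema}",
            "object-locator": {"schema-name": schema, "table-name": "%"},
            "rule-action": "include",
        })
    return {"rules": rules}


def lob_settings(max_lob_kb=32):
    """Task settings for limited-size LOB mode: LOBs larger than
    max_lob_kb (in KB) are truncated."""
    return {
        "TargetMetadata": {
            "SupportLobs": True,
            "FullLobMode": False,
            "LimitedSizeLobMode": True,
            "LobMaxSize": max_lob_kb,
        }
    }


# Hypothetical schema names, serialized as the JSON strings a task expects.
table_mappings = json.dumps(selection_rules(["students", "library"]))
task_settings = json.dumps(lob_settings(64))
```

With boto3, these strings would typically be passed as the `TableMappings` and `ReplicationTaskSettings` arguments of `create_replication_task`, alongside the endpoint and replication instance ARNs.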

Monitored the migration task

A migration task can be monitored using AWS DMS events and notifications, task status, Amazon CloudWatch alarms and logs, AWS CloudTrail logs, and database logs. We used these AWS tools.

Monitoring replication tasks using Amazon CloudWatch:

We used Amazon CloudWatch alarms and events to track the migration more closely.

The AWS DMS console shows basic CloudWatch statistics for each task, including the task status, percent complete, elapsed time, and table statistics. We selected the replication task and then selected the Task monitoring tab.

The AWS DMS console shows performance statistics for each table, including the number of inserts, deletions, and updates, when you select the Table statistics tab.

Benefits after migration:

Minimal downtime:

Compared to the on-prem DB, the customer experiences very little downtime on Amazon RDS.

Supports widely used databases:

On AWS the customer can choose among many types of database server, at minimal cost and with good efficiency.

Fast and easy setup:

A migration task can be set up within a few minutes in the AWS Management Console.

The customer can now quickly set up any database they need in the future.

Low cost:

The customer's costs are lower than with the on-prem database. The customer now pays based on the amount of log storage and the computational power needed for the transfer.

Reliable:

DMS is a self-healing service and automatically restarts if an interruption occurs. DMS also provides the option of setting up Multi-AZ (Availability Zone) replication for disaster recovery.

Link

We at Infometry have helped Fortune 500 customers with implementation and cloud analytics leveraging Snowflake. Infometry specializes in Snowflake migration and consulting. We are one of the most recognized implementation partners for Snowflake, with a global footprint. Migration to Snowflake with Infometry ensures: greater scalability, ease of implementation, certified and trained consultants, faster insights into your data, a transparent transition, and unmatched support. Contact us: [email protected]

Text

How are organizations winning with Snowflake?

Cloud has evolved considerably over the last decade, giving confidence to organizations still relying on legacy systems for their analytical ventures. There is an abundance of choices for organizations with immediate or specific data management requirements.

This blog is for anyone or any organization looking for data warehousing options in the cloud. Here we look at Snowflake, a cloud data platform, and how nicely it fits if you are thinking of migrating to a new cloud data warehouse.

The cloud data warehouse market is a very competitive space, but it is also characterized by the specialized offerings of different players. Azure SQL Data Warehouse, AWS Redshift, and Google BigQuery are among the many alternatives available in a rapidly advancing data warehousing market, whose value is estimated at over 18 billion USD.

To help get you there, let's look at some of the key ways to establish a sustainable and adaptive enterprise data warehouse with Snowflake solutions.

#1 Rebuilding

Numerous customers moving from on-prem to cloud ask, "Can I leverage my present infrastructure standards and best practices, such as user and database management, DevOps, and security?" This brings up a valid concern about building policies from scratch, but it's essential to adapt to new technological advancements and new business opportunities. And that may, in fact, require some rebuilding. If you took an engine from a 1985 Ford and installed it in a 2019 Ferrari, would you expect the same performance?

It's essential to make choices not because "that's how we've always done it," but because those choices will help you adopt new technology and bring empowerment and agility to business processes and applications. Major areas to review include policies, user management, sandbox setups, data loading practices, ETL frameworks, tools, and codebase.

#2 Right Data Modelling

Snowflake serves multiple purposes: data mart, data lake, data warehouse, database, and ODS. It also supports numerous modeling techniques, such as Snowflake, Star, BEAM, and Data Vault.

Snowflake can also support "schema on write" and "schema on read." This sometimes creates confusion about how to position Snowflake properly.

The solution is to let your usage patterns drive your data model. Think about how you foresee your business applications and data consumers leveraging data assets in Snowflake. This will help you clarify your organization and resources to get the best result from Snowflake.

Here's an example. In complex use cases, it's usually a good practice to develop composite solutions involving:

Layer1 as Data Lake to ingest all the raw structured and semi-structured data.

Layer2 as ODS to store staged and validated data.

Layer3 as Data Warehouse for storing cleansed, categorized, normalized and transformed data.

Layer4 as Data Mart to deliver targeted data assets to end consumers and applications.

#3 Ingestion and integration

Snowflake adapts seamlessly to various data integration patterns, including batch (e.g., fixed schedule), near real-time (e.g., event-based), and real-time (e.g., streaming). To find the best pattern, collate your data loading use cases. Organizations often combine all the patterns: data received on a fixed schedule goes through a static batch process, and data delivered on demand uses dynamic patterns. Assess your data sourcing needs and delivery SLAs to map them to a proper ingestion pattern.

Also, account for your future use cases. For instance: "data X" is received by 11am daily, so it's good to schedule a batch workflow running at 11am, right? But what if it is instead ingested by an event-based workflow? Won't this deliver data faster, improve your SLA, convert a static dependency into an automated mechanism, and avoid extra effort when delays happen? Try to think through as many different scenarios as you can.

Once integration patterns are known, ETL tooling comes next. Snowflake supports many integration partners and tools such as Informatica, Talend, Matillion, Polestar solutions, Snaplogic, and more. Many of them have also built a native connector for Snowflake. Snowflake also supports no-tool integration using open source languages such as Python.

To choose the right integration platform, evaluate these tools against your processing requirements, data volume, and usage. Also, examine whether a tool can process in memory and perform SQL push down (leveraging the Snowflake warehouse for processing). The push-down technique is a great help in Big Data use cases, as it eliminates the bottleneck of the tool's memory.

#4 Managing Snowflake

Here are a few things to know after Snowflake is up and running:

Security practices. Establish strong security practices for your organization: leverage Snowflake role-based access control (RBAC) over Discretionary Access Control (DAC). Snowflake also supports SSO and federated authentication, integrating with third-party services such as Active Directory and Okta.

Access management. Identify user groups, privileges, and needed roles to define a hierarchical structure for your applications and users.

Resource monitors. Snowflake offers near-infinitely scalable compute and storage. The tradeoff is that you must establish monitoring and control protocols to keep your operating budget under control. The two primary considerations here are:

Snowflake Cloud Data Warehouse configuration. It's typically best to create separate Snowflake warehouses for each user, group, business area, or application. This helps manage billing and chargeback when required. For further governance, assign roles specific to warehouse actions (monitor, access, update, create) so that only designated users can alter or create the warehouse.

Billing alerts help with monitoring and taking the right actions at the right time. Define Resource Monitors to help monitor your cost and avoid billing overage. You can customize these alerts and actions based on different threshold scenarios. Actions range from simple email warnings to suspending a warehouse.
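As an illustration of threshold-based actions, the sketch below generates a Snowflake `CREATE RESOURCE MONITOR` statement from a list of (percent, action) pairs. The monitor name, credit quota, and thresholds are hypothetical, and the helper itself is an assumption for illustration, not part of any Snowflake client library.

```python
# Actions a Snowflake resource monitor trigger can take.
VALID_ACTIONS = {"NOTIFY", "SUSPEND", "SUSPEND_IMMEDIATE"}


def resource_monitor_sql(name, credit_quota, triggers):
    """Build a CREATE RESOURCE MONITOR statement.

    `triggers` is a list of (percent_of_quota, action) pairs.
    """
    clauses = []
    for percent, action in sorted(triggers):
        if action not in VALID_ACTIONS:
            raise ValueError(f"unknown action: {action}")
        clauses.append(f"ON {percent} PERCENT DO {action}")
    return (
        f"CREATE RESOURCE MONITOR {name} "
        f"WITH CREDIT_QUOTA = {credit_quota} "
        f"TRIGGERS {' '.join(clauses)};"
    )


# Hypothetical policy: warn by email at 80%, suspend the warehouse at 100%.
sql = resource_monitor_sql(
    "analytics_budget", 100, [(100, "SUSPEND"), (80, "NOTIFY")]
)
print(sql)
```

The resulting monitor would then be attached to a warehouse with `ALTER WAREHOUSE ... SET RESOURCE_MONITOR = ...`, so that each business area's warehouse carries its own budget.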

Final Thoughts

If you have an IoT solutions database or a diverse data ecosystem, you will need a cloud-based data warehouse that gives scalability, ease of use, and infinite expansion. And you will require a data integration solution that is optimized for cloud operation. Using Stitch to extract and load data makes migration simple, and users can run transformations on data stored within Snowflake.

As a Snowflake Partner, we help organizations assess their data management requirements and quantify their storage needs. If you have an on-premises DW, our data and cloud experts help you migrate without any downtime or loss of data or logic. Further, our Snowflake solutions enable data analysis and visualization for quick decision-making to maximize the return on your investment.

Text

How Cloud Storage Can Accelerate Innovation in your Organization?

With an explosion in data, many organizations are reaching a point where they have to evaluate their data storage options. Take Netflix, for example. According to The Verge, the entertainment website has more than 209 million subscribers in over 130 countries and 5800 titles on its platform. Netflix’s success cannot be solely attributed to the type of content it delivers but also how seamlessly its platform functions. You rarely have to face the dreaded buffering wheel on the streaming site. So, how does Netflix manage that? What goes on behind the scenes? Is cloud storage the recipe for its success?

In 2008, Netflix was reliant on relational databases in its own data center, until one of its data centers broke down, halting Netflix’s operations for three days. Now, this was when Netflix was a small organization, but Netflix had the foresight to see it was growing fast, and it had to come up with a way to deal with exponential growth in data. So, it decided to move to the cloud. The migration has given Netflix the scalability and velocity to add new features and content without worrying about potential technological limitations.

Netflix is one of the many organizations that are increasingly opting for cloud storage. In 2020, Etsy moved 5.5 petabytes of data from about 2,000 servers to Google Cloud. According to Etsy, the migration allowed it to shift 15% of its engineering team from daily infrastructure management to improving customer experience.

Why are More Businesses Abandoning On-Prem Storage for Cloud Storage?

According to 451 Research, 90% of companies have already made the transition, which raises the question: what is pushing these companies to move towards the cloud, and has on-premises storage been rendered obsolete?

Let’s explore some benefits that cloud storage has to offer to modern-day businesses:

1. Security and Data protection

Deloitte surveyed 500 IT leaders, of whom 58% considered data protection the top driver in moving to the cloud. As hacking attempts get more sophisticated, it is becoming increasingly difficult for companies to protect data in-house.

Third-party cloud services such as Amazon S3, Google Cloud, or Microsoft Azure come with extensive security options. Amazon S3, for example, comes with encryption features and lets users block public access with S3 Block Public Access.

2. Data modernization

Simply put, data modernization is moving data from legacy to modern systems. Since most companies are just moving to the cloud, cloud migration is often used synonymously with data modernization. Why is data modernization important, and why do companies opt for it?

Data modernization allows an organization to process data efficiently. Today, organizations rely heavily on data for making decisions, but this becomes a huge hassle when data is stored in legacy systems and is difficult to retrieve.

Text

Exchange Server ON-Prem Backup Solution

Currently we have an IBM Lotus Domino 8.x server, and its backup is done by Symantec Backup Exec 2014 running on Windows Server 2012 R2. Backup Exec uses its dedicated Domino Agent to back up NSF databases daily to an IBM LTO-8 drive; each tape is then archived for at least 3 weeks before being rotated back. We are now planning to migrate to Exchange (on-prem). The whole setup is virtualized on VMware 6.5.x…

Text

7 Best WordPress Backup Plugins (Pros and Cons)

Regular WordPress backups are the best thing you can do to protect your website. Backups give you peace of mind and can save you in catastrophic situations, such as when your website is hacked or accidentally crashes.

There are several free and paid WordPress backup plugins, most of which are very easy to use. In this article, we will share the 7 best backup plugins for WordPress.

Important: Many WordPress hosting providers offer limited backup services, but we advise users never to rely on them alone. Ultimately, it is your responsibility to maintain regular backups of your website. If you haven’t created a backup of your WordPress site yet, you should choose one of these 7 best WordPress backup plugins and get started right away.

1 . UpdraftPlus

UpdraftPlus, the best WordPress backup plugin

UpdraftPlus is the most popular free WordPress backup plugin available on the internet. It is used on over 2 million websites.

With UpdraftPlus, you can make a complete backup of your WordPress site and save it to the cloud or download it to your computer.

The plugin supports scheduled backups and on-demand backups. You also have the option to select the files you want to back up.

Backups can be automatically uploaded to Dropbox, Google Drive, S3, Rackspace, FTP, SFTP, email, and several other cloud storage services (see the step-by-step guide on how to use UpdraftPlus to back up and restore your website).

With UpdraftPlus, in addition to backing up your WordPress site, you can easily restore your backup from the WordPress admin panel.

UpdraftPlus also has a premium version with website migration or duplication, database search and replace, multisite support, and several other features. The premium version also gives you access to priority support.

Price: Free ($70 for UpdraftPlus Premium Personal)

Summary: UpdraftPlus is the most popular WordPress backup plugin on the market. It has over 2 million active installations and an average rating of 4.9 out of 5 stars. The free version is feature-rich, but we recommend upgrading to UpdraftPlus Premium to unlock all the powerful backup features.

2. VaultPress (Jetpack backup)

Jetpack backup

Jetpack Backup is a popular WordPress backup plugin by Automattic, the company created by WordPress co-founder Matt Mullenweg.

This plugin was originally released under the name VaultPress, which is what MoneyDeck uses, but it has now been redesigned and renamed Jetpack Backup. We have begun to switch some sites to the latest Jetpack Backup platform.

The Jetpack Backup plugin provides a real-time automated solution for cloud backup without slowing down your website. You can easily set up Jetpack Backup and restore from backups with just a few clicks.

Jetpack’s top plan also provides security analysis and some other powerful features.

There are some drawbacks to using Jetpack Backup for beginners.

First, you pay per site, so if you have a lot of WordPress sites, the recurring costs can add up.

Second, checkout is quite complicated, and you need to install the Jetpack plugin to purchase a subscription. Fortunately, you can manually disable all unwanted Jetpack features except backups to keep your site from slowing down.

Finally, backups are stored for only 30 days on the lower plan. If you need an unlimited backup archive, you’ll have to pay $49.95 per month per site. This is significantly more expensive for beginners than the other solutions listed here.

The MoneyDeck website continues to use VaultPress (the previous version of Jetpack Backup) because it was kept at the previous price.

Given Automattic’s excellent reputation, Jetpack Backup is still worth the higher price. That’s why we pay for the more expensive real-time backup plan for new sites like All in One SEO: it is an e-commerce store and requires maximum protection.

Price: Starting at $9.95/month for daily backup plans and $49.95/month for real-time backup plans.

Summary: Jetpack Backup is a premium-priced backup service. If you’re already using Jetpack for other features such as Jetpack CDN for photos, social media promotion, or Elasticsearch, we recommend buying the full package for the best value. If you’re just looking for real-time cloud backups for WordPress, you can also look at BlogVault on our list, which is more affordable for beginners.

3. BackupBuddy

WordPress BackupBuddy backup plugin

BackupBuddy is one of the most popular premium backup plugins for WordPress, used on over 500,000 WordPress sites. It makes it easy to schedule daily, weekly, and monthly backups.

BackupBuddy allows you to automatically save backups to cloud storage services such as Dropbox, Amazon S3, Rackspace Cloud, FTP, Stash, and even email.

If you are using the Stash service, you can also make real-time backups. The biggest advantage of using BackupBuddy is that it is not a subscription-based service, so there is no monthly fee. You can use the plugin on the number of sites covered by your plan.

You also get access to the premium support forums, regular updates, and 1GB of BackupBuddy Stash storage for storing backups. With the iThemes Sync feature, you can manage up to 10 WordPress sites from a single panel. You can also use BackupBuddy to mirror, migrate, and restore websites.

Price: $80 for the Blogger plan (1 site license)

Summary: BackupBuddy is a cost-effective premium WordPress backup solution. It includes a complete set of features needed to back up, restore, and move your WordPress site. Simply put, it is a powerful alternative to UpdraftPlus and VaultPress.

4. BlogVault

BlogVault is another popular backup service for WordPress. It is a software as a service (SaaS) solution rather than just a WordPress plugin. It creates separate offsite backups on the BlogVault servers, so there is zero load on your server.

BlogVault automatically backs up your site daily and also lets you create unlimited on-demand backups. It features a smart incremental backup that synchronizes only incremental changes to minimize server load. This keeps your website performing well. In addition to backup, it helps you restore your website easily.

The lower plan keeps a 90-day backup archive and the higher plan keeps a 365-day archive, so you can recover your website from a crash.

It also has a built-in test website feature that makes it easy to test your website. It also provides an easy option to migrate your website to another host.

BlogVault’s features are very promising for small businesses, and its real-time backup plan is fairly affordable compared to Jetpack Backup (half the price). However, the cost per site is higher when compared to self-hosted plugins such as UpdraftPlus and BackupBuddy.

Price: $89 per year for the personal plan (1 site license, daily backups). $249 per year for real-time backups.

Summary: BlogVault is an easy-to-use WordPress backup solution. It creates offsite backups, so your website server is not overloaded by backups. The price is very affordable for small businesses that need real-time backups but don’t want to pay the premium price for Jetpack Backup.

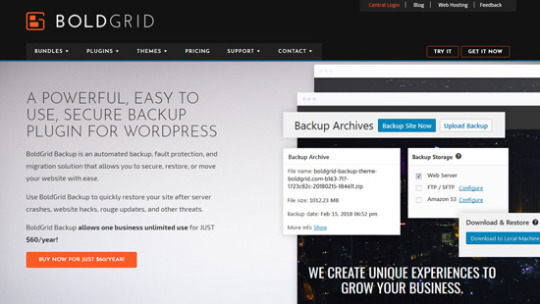

5. BoldGrid Backup

BoldGrid Backup is an automated WordPress backup solution by BoldGrid, a WordPress-powered website builder. It allows you to easily back up your site, restore your site after a crash, or move your site when you change hosts. You can set up automatic backups or create manual backups with just one click.

BoldGrid Backup includes an automatic crash protection feature that automatically backs up your site before updating. If the update fails, your WordPress site is automatically rolled back to the latest backup.

This is a great feature that protects users from update errors. With BoldGrid Backup, you can store up to 10 backup files on your dashboard and even more on remote storage such as Amazon S3, FTP, and SFTP.

Price: $60 per year (including all BoldGrid premium tools and services)

Summary: BoldGrid Backup is a simple WordPress backup plugin that you can use to back up your website. The advantage of using this plugin is a bundle of other powerful tools available when you buy this plugin.

6. BackWPup

Free WordPress Backup Plugin BackWPup

BackWPup is a free plugin that allows you to create complete WordPress backups and store them in the cloud (Dropbox, Amazon S3, Rackspace, etc.), on FTP, in email, or on your computer.

It is very easy to use, and you can schedule automatic backups according to how frequently your website is updated.

Restoring a WordPress site from a backup is also very easy. The BackWPup Pro version has priority support, the ability to save backups to Google Drive, and other great features.

Price: Free (Premium plan is also available)

Summary: BackWPup is used by over 600,000 websites and is a great alternative to the other backup plugins on the list. The premium version of the plugin adds more powerful features, such as a standalone app for quickly and easily restoring your website from a backup.

7. Duplicator

WordPress Duplicate Duplicator

As the name implies, Duplicator is a popular WordPress plugin used to migrate WordPress sites. However, it also has a backup function.

This is not an ideal WordPress backup solution for frequently maintained websites, as it does not allow you to create automated scheduled backups.

Price: Free

Summary: Duplicator lets you create manual backups of your WordPress site easily. If your web host creates regular backups, you can use this plugin to create backups to use in a staging environment. It’s a great site migration plugin.

Final thoughts

All the WordPress backup plugins on our list have their strengths and weaknesses, but they all provide full backup of WordPress files and full database backup capabilities.

There are two reasons to use Jetpack Backup: it is very easy to use, and it provides real-time incremental backups.

This means that instead of backing up all your files daily or hourly, it backs up only what was actually changed, within minutes of the update. This is ideal for large websites like ours because it allows efficient use of server resources.
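The idea behind an incremental backup can be sketched in a few lines: keep a fingerprint of every file from the last backup, and upload only the files whose fingerprint changed. This is a minimal illustration of the general technique, assuming file contents are available in memory; it is not how Jetpack or any other plugin listed here is actually implemented, and the file names are made up.

```python
import hashlib


def file_digests(files):
    """Map each path to the SHA-256 digest of its content.

    `files` maps a path to the file's bytes.
    """
    return {
        path: hashlib.sha256(data).hexdigest()
        for path, data in files.items()
    }


def incremental_changes(previous, current):
    """Compare two digest maps.

    Returns (changed, deleted): paths that are new or modified since the
    previous backup, and paths that no longer exist.
    """
    changed = sorted(p for p, d in current.items() if previous.get(p) != d)
    deleted = sorted(p for p in previous if p not in current)
    return changed, deleted


# Example: one file edited and one file added since the last backup.
last_backup = file_digests({"index.php": b"v1", "style.css": b"a"})
site_now = file_digests({"index.php": b"v2", "style.css": b"a", "new.js": b"x"})
changed, deleted = incremental_changes(last_backup, site_now)
```

Only the paths in `changed` need to be transferred, which is why incremental backups put far less load on the server than full backups.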

However, if you have a small or medium-sized website and don’t like paying monthly fees, we recommend the popular UpdraftPlus plugin. It includes many powerful features such as backup encryption, encrypted backup transport, and numerous cloud storage options.

No matter which WordPress backup plugin you choose, don’t store your backups on the same server as your website.

By doing this, you put all your eggs in one basket. In the event of a server hardware failure or, in the worst case, a hack, you would lose your backups along with your site, which defeats the purpose of setting up regular backups. Therefore, we strongly recommend backing up to a third-party storage service such as Dropbox, Amazon S3, or Google Drive.

That’s all. We hope this list will help you choose the best WordPress backup plugin for your website. You may also want to see the step-by-step WordPress Security Guide for beginners and the comparison of the best email marketing services for small businesses.

If you like this article, subscribe to the YouTube channel for WordPress video tutorials. You can also find us on Twitter and Facebook.

Text

Serverless SQL Database - Aurora

The latest AWS exam-prep article is available at https://cloudemind.com/aurora-serverless-intro/ - Cloudemind.com

Như các bạn đã biết Aurora là AWS fully managed service đưa ra thuộc nhóm dịch vụ RDS (Relational Database Service), hay còn gọi là dịch vụ cơ sở dữ liệu có quan hệ (khóa chính, khóa ngoại). Aurora là loại hình dịch vụ mà mình yêu thích nhất, thú thật ban đầu là vì cái tên, cách mà AWS đặt tên các loại hình dịch vụ của mình rất dễ thương và personalize.

Aurora dịch ra tiếng Việt có nghĩa là ánh bình minh buổi sớm hay còn có thể hiểu là Nữ thần Rạng Đông.

Refresher: RDS viết tắt của Relational Database Service. Hiện tại Amazon RDS hỗ trợ 06 loại engines: MySQL, MSSQL, PostgreSQL, Oracle, MariaDB, và Aurora.

Riêng với Aurora hỗ trợ hai loại engines: Aurora for MySQL, và Aurora for Postgres.

Về phía sử dụng, khách hàng có rất nhiều option chuyển đổi CSDL từ on-prem lên AWS cloud như sau:

Cách 01: Khách hàng chuyển DB theo dạng lift and shift bộ combo EC2 và EBS. Đây là cách KH vẫn cài đặt OS, DB service và chuyển đổi dữ liệu lên AWS. Option này giúp bạn có độ tùy chỉnh cao nhưng bù lại bạn cần manage về mặt hạ tầng nhiều hơn.

Cách 02: Replatform chuyển đổi CSDL ở on-premise lên AWS Cloud thông qua RDS MSSQL, MySQL, PostgreSQL, Oracle. Riêng Oracle có hai loại là BYOL (Bring Your Own License) và licensed instance. Chuyển đổi CSDL lên cloud ở mức độ chỉn chu hơn, tận dụng sức mạnh AWS RDS, auto scaling, multi-az…

Cách 03: Chuyển đổi CSDL lên Native Cloud Service như Aurora. Tận dụng sức mạnh triệt để của Cloud Native Service. Bù lại ứng dụng có thể phải xem xét refactor, cần xem xét sự tương thích giữa App và DB.

Theo kinh nghiệm cá nhân những bạn làm product mới, nên xem xét sử dụng các dịch vụ native của AWS để tận dụng sức mạnh của cloud về khả năng scalability, elasticity và easy of use. Aurora là một trong những dịch vụ mình thấy rất đáng để dùng. Strong Recommended!

Samsung cũng đã migrate hơn 1 tỷ người dùng của họ lên AWS và CSDL họ dùng chính là Aurora. Và hiệu quả giúp Samsung giảm 44% chi phí Database hàng tháng.

Samsung AWS Migration Use Case – 1 Billion Users – https://aws.amazon.com/solutions/case-studies/samsung-migrates-off-oracle-to-amazon-aurora/

Note: Not every engine or version supports Serverless, so if you choose an Aurora engine (Postgres or MySQL) and do not see the Serverless option, pick a different version.

For example, the latest version currently supporting Serverless is Aurora PostgreSQL version 10.7.

The idea behind Aurora Serverless is pay as you go: the database scales in ACUs (Aurora Capacity Units), a unit that bundles CPU and RAM in a fixed ratio. With Aurora Standard you normally pick an instance type at creation (for example, T3); here you set a minimum and maximum ACU instead.

Currently the minimum Aurora Serverless capacity is 02 ACU (equivalent to 04 GB RAM, the configuration of a t3.medium) and the maximum is 384 ACU (equivalent to 768 GB RAM). Once these bounds are declared, the system can scale elastically within that range on its own: under light load it consumes few ACUs, and under heavy load it uses more.
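To make the ACU numbers above concrete, here is a minimal Python sketch. It assumes the 1 ACU ≈ 2 GB RAM ratio implied by the figures in this post (2 ACU ≈ 4 GB, 384 ACU ≈ 768 GB). The helper names `acu_to_memory_gb` and `clamp_capacity` are made up for illustration; the real service picks capacity on its own, so this only shows what the declared min/max range means.

```python
# Assumption taken from the figures in the post: 1 ACU ~ 2 GB of RAM.
GB_PER_ACU = 2
MIN_ACU = 2     # minimum capacity quoted in the post
MAX_ACU = 384   # maximum capacity quoted in the post


def acu_to_memory_gb(acu: int) -> int:
    """Approximate memory in GB for a given number of ACUs."""
    return acu * GB_PER_ACU


def clamp_capacity(requested_acu: int,
                   min_acu: int = MIN_ACU,
                   max_acu: int = MAX_ACU) -> int:
    """Keep a requested capacity inside the declared [min, max] range.

    Hypothetical helper: it does not model how Aurora decides to scale,
    only the bounds that you configure.
    """
    if min_acu > max_acu:
        raise ValueError("min_acu must not exceed max_acu")
    return max(min_acu, min(requested_acu, max_acu))


if __name__ == "__main__":
    for load in (1, 16, 500):
        acu = clamp_capacity(load)
        print(f"needs {load} ACU -> runs at {acu} ACU "
              f"(~{acu_to_memory_gb(acu)} GB RAM)")
```

A load that would need less than the minimum still runs (and is billed) at the minimum, and a load above the maximum is capped, which is why choosing the two bounds matters.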

For billing, Aurora Serverless has 03 dimensions: storage, capacity, and IO. That seems quite reasonable. For developers it keeps things very simple, both during development and in production. The benefits of Aurora Serverless can be grouped into a few main points:

1. Simpler: You no longer have to manage multiple instances or the DB's processing capacity.

2. Scalability: The DB can grow and shrink both CPU and RAM without interruption. This is great: you no longer have to predict how much capacity to provision for the DB.

3. Cost effective: Instead of provisioning one huge DB, you can provision a small one and let it grow automatically with load. Sounds pretty slick, right?

4. HA Storage: Highly available storage. Aurora currently keeps 06 replicas of the data to guard against data loss.
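The three billing dimensions mentioned above (storage, capacity, IO) can be sketched as a small cost model. This is only an illustration: the `Rates` values below are placeholders I made up, not real AWS prices, and `monthly_cost` is a hypothetical helper. Check the official pricing page for actual numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Unit prices. The values used below are placeholders, NOT AWS prices."""
    per_acu_hour: float     # capacity: price of one ACU for one hour
    per_gb_month: float     # storage: price of one GB stored for a month
    per_million_io: float   # IO: price of one million I/O requests


def monthly_cost(acu_hours: float, storage_gb: float,
                 io_requests: int, rates: Rates) -> float:
    """Add up the three billing dimensions: capacity + storage + IO."""
    capacity = acu_hours * rates.per_acu_hour
    storage = storage_gb * rates.per_gb_month
    io = io_requests / 1_000_000 * rates.per_million_io
    return capacity + storage + io


if __name__ == "__main__":
    rates = Rates(per_acu_hour=0.5, per_gb_month=0.25, per_million_io=0.125)

    # A dev database at 2 ACU, used 8 hours a day on 22 working days...
    office_hours = monthly_cost(2 * 8 * 22, 100, 4_000_000, rates)
    # ...versus the same 2 ACU kept running for a full 30-day month.
    always_on = monthly_cost(2 * 24 * 30, 100, 4_000_000, rates)

    print(f"office hours only: {office_hours:.2f}")
    print(f"always on:         {always_on:.2f}")
```

Only the capacity term changes between the two scenarios; storage and IO are billed the same either way, so the saving comes from the hours when the database is not running.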

This is a really nice improvement for developers and businesses alike. Some common use cases for Aurora Serverless:

Applications with spiky access and unstable load (heavy at times, light at others).

Newly developed applications whose sizing you cannot yet predict.

Dev/test environments with variable workloads. A dev environment typically goes unused outside working hours, for example, and Aurora Serverless can shut down automatically when it is not in use.

Multi-tenant applications deployed as SaaS. Aurora Serverless can manage DB capacity for each application that accesses it.

Cost savings and simple operations.

If you use Aurora Serverless, share your experience with everyone ^^

Regards,

Kevin

See more: https://cloudemind.com/aurora-serverless-intro/

Text

SBTB 2021 Program is Up!

Scale By the Bay (SBTB) is in its 9th year.

See the 2021 Scale By the Bay Program

When we started, Big Data was Hadoop, with Spark and Kafka quite new and uncertain. Deep Learning was in the lab, and distributed systems were managed by a menagerie of sysadmin tools such as Ansible, Salt, Puppet and Chef. Docker and Kubernetes were in the future, but Mesos had proven itself at Twitter, and a young startup called Mesosphere was bringing it to the masses. Another thing proven at Twitter, as well as in Kafka and Spark, was Scala, but the golden era of functional programming in industry was still ahead of us.

AI was still quite unglamorous Machine Learning, Data Mining, Analytics, and Business Intelligence.

But the key themes of SBTB were already there:

Thoughtful Software Engineering

Software Architectures and Data Pipelines

Data-driven Applications at Scale

The overarching idea of SBTB is that all great scalable systems are a combination of all three. The notions pioneered by Mesos became Kubernetes and its CNCF ecosystem. Scala took hold in industry alongside Haskell, OCaml, Clojure, and F#. New languages like Rust and Dhall emerged with similar ideas and ideals. Data pipelines were formed around APIs, REST and GraphQL, and tools like Apache Kafka. ML became AI, and every scaled business application became an AI application.

SBTB tracks the evolution of the state of the art in all three of its tracks, nicknamed Functional, Cloud, and Data. The core idea is still making distributed systems solve complex business problems at web scale, doable by small teams of inspired and happy software engineers. Happiness comes from learning, technology choices automating away the mundane, and a scientific approach to the field. We see the arc of learning elevating through the years, as functional programming concepts drive deep into category theory, type systems are imposed on the deep learning frameworks and tensors, middleware is abstracted via GraphQL formalisms, compute is made serverless, AI hits the road as model deployment, and so on. Let's visit some of the highlights of this evolution in the 2021 program.

FP for ML/AI

As more and more decisions are entrusted to AI, the need to understand what happens in deep learning systems becomes ever more urgent. While Python remains the Data Science API of choice, the underlying libraries are written in C++. The Hasktorch team shares its approach to exposing PyTorch capabilities in Haskell, building up to transformers with gradual typing. The clarity of composable representations of deep learning systems will warm many a heart tested by industry experience, where types ensure safety and clarity.

AI

We learn how Machine Learning is used to predict financial time series. We consider the bias in AI and hardware vs software directions of its acceleration. We show how an AI platform can be built from scratch using OSS tools. Practical AI deployments are covered by DVC experiments. We look at the ways Transformers are transforming Autodesk. We see how Machine Learning is becoming reproducible with MLOps at Microsoft. We even break AI dogma with Apache NLPCraft.

Cloud

Our cloud themes include containers with serverless functions, a serverless query engine, event-driven patterns for microservices, and a series of practical stacks. We review the top CNCF projects to watch. Evergreen, formidable challenges like data center migration to the cloud at Workday scale are presented by the lead engineers who made it happen. Fine points of scalability are explored beyond auto-scaling. We look at stateful reactive streams with Akka and Kafka, and the ways to retrofit your Java applications with reactive pipelines for more efficiency. See how Kubernetes can spark joy for your developers.

Core OSS Frameworks

As always, we present the best practices for deploying OSS projects that our communities adopted before the rest -- Spark, Kafka, Druid -- integrating them into data pipelines and tuning them for the best performance and ML integration at scale. We cover multiple aspects of tuning Spark performance and of using PySpark with location and graph data. We rethink the whole ML ecosystem with Spark. We elucidate patterns of Kafka deployments for building microservice architectures.

Software Engineering

Programming language highlights include the Scala 3 transition illuminated by Dean Wampler and Bill Venners, Meaning for the Masses from Twitter, purity spanning frontend to backend, type safety for tensor calculus in Haskell and Scala, Rust for WebAssembly, a categorical view of ADTs, distributed systems and tracing in Swift, complex codebase troubleshooting, dependent and linear types, declarative backends, efficient arrays in Scala 3, and GraalVM for optimizing ML serving. We are also diving into Swift for distributed systems with its core team.

Other Topics

We look at multidimensional clustering, the renaissance of relational databases, cloud SQL and data lakes, location and graph data, meshes, and other themes.

There are fundamental challenges that will face the industry for years to come, such as the AI bias we rigorously explore, hardware and software codevelopment for AI acceleration, and moving large enterprise codebases from on-prem to the cloud, as we see with Workday.

The companies presenting include Apple, Workday, Nielsen, Uber, Google Brain, Nvidia, Domino Data Labs, Autodesk, Twitter, Microsoft, IBM, Databricks, and many others.

Reserve your pass today
