#PHP Cron Cron job load balancing


The Advantages of Automation Through Web Development: Efficiency, Scalability, and Innovation

In the digital age, automation has become a driving force behind business transformation, and web development plays a pivotal role in enabling this shift. By leveraging modern web technologies, businesses can automate repetitive tasks, streamline workflows, and enhance productivity, freeing up valuable time and resources for more strategic initiatives. From backend scripting to frontend interactivity, web development offers a wide range of tools and frameworks that empower organizations to build automated systems tailored to their unique needs. The benefits of automation through web development are vast, encompassing improved efficiency, scalability, and innovation.

One of the most significant advantages of automation is its ability to reduce manual effort and minimize human error. Through server-side scripting languages like Python, Node.js, and PHP, developers can create automated workflows that handle tasks such as data processing, report generation, and email notifications. For example, an e-commerce platform can use a cron job to automatically update inventory levels and send restock alerts to suppliers, ensuring that products are always available for customers. Similarly, webhooks can be used to trigger actions in real-time, such as sending a confirmation email when a user completes a purchase. These automated processes not only save time but also enhance accuracy, reducing the risk of costly mistakes.
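Here is a minimal sketch of the inventory cron job described above, written in Python (one of the languages the paragraph names). It assumes a hypothetical in-memory product list with a per-product reorder level. The check itself is a plain function that a scheduler such as cron runs periodically. A real store would read stock levels from its database and pass the resulting alerts to an email sender.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    stock: int
    reorder_level: int    # hypothetical threshold at or below which a restock alert is sent
    supplier_email: str


def restock_alerts(products):
    """Return (supplier_email, message) pairs for every product at or below its reorder level.

    A crontab entry such as `0 * * * * python3 restock.py` (hypothetical script name)
    would run this at the top of every hour.
    """
    alerts = []
    for p in products:
        if p.stock <= p.reorder_level:
            alerts.append(
                (p.supplier_email, f"Restock {p.sku}: {p.stock} left (threshold {p.reorder_level})")
            )
    return alerts


if __name__ == "__main__":
    catalog = [
        Product("TSHIRT-M", 3, 10, "supplier-a@example.com"),
        Product("MUG-01", 50, 20, "supplier-b@example.com"),
    ]
    for to, msg in restock_alerts(catalog):
        print(to, msg)  # a real job would send an email here
```

Keeping the decision logic separate from the scheduling and email delivery makes the job easy to test without waiting for cron to fire.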

Automation also enables businesses to scale their operations more effectively. By developing cloud-based applications and utilizing microservices architecture, organizations can create modular systems that can be easily scaled up or down based on demand. For instance, a SaaS company can use containerization tools like Docker and orchestration platforms like Kubernetes to automate the deployment and scaling of its web applications, ensuring optimal performance even during peak usage periods. Additionally, load balancing and auto-scaling features provided by cloud providers like AWS and Azure allow businesses to handle increased traffic without manual intervention, ensuring a seamless user experience.

The integration of APIs (Application Programming Interfaces) is another key aspect of automation in web development. APIs enable different systems and applications to communicate with each other, facilitating the automation of complex workflows. For example, a CRM system can integrate with an email marketing platform via an API, automatically syncing customer data and triggering personalized email campaigns based on user behavior. Similarly, payment gateway APIs can automate the processing of online transactions, reducing the need for manual invoicing and reconciliation. By leveraging APIs, businesses can create interconnected ecosystems that operate efficiently and cohesively.

Web development also plays a crucial role in enhancing user experiences through automation. JavaScript frameworks like React, Angular, and Vue.js enable developers to build dynamic, interactive web applications that respond to user inputs in real-time. Features like form autofill, input validation, and dynamic content loading not only improve usability but also reduce the burden on users by automating routine tasks. For example, an online booking system can use AJAX (Asynchronous JavaScript and XML) to automatically update available time slots as users select dates, eliminating the need for page reloads and providing a smoother experience.

The rise of artificial intelligence (AI) and machine learning (ML) has further expanded the possibilities of automation in web development. By integrating AI-powered tools, businesses can automate complex decision-making processes and deliver personalized experiences at scale. For instance, an e-commerce website can use recommendation engines to analyze user behavior and suggest products tailored to individual preferences. Similarly, chatbots powered by natural language processing (NLP) can handle customer inquiries, provide support, and even process orders, reducing the workload on human agents. These technologies not only enhance efficiency but also enable businesses to deliver more value to their customers.

Security is another area where automation through web development can make a significant impact. Automated security tools can monitor web applications for vulnerabilities, detect suspicious activities, and respond to threats in real-time. For example, web application firewalls (WAFs) can automatically block malicious traffic, while SSL/TLS certificates can be automatically renewed to ensure secure communication. Additionally, CI/CD pipelines (Continuous Integration and Continuous Deployment) can automate the testing and deployment of code updates, reducing the risk of introducing vulnerabilities during the development process.

In conclusion, automation through web development offers a multitude of benefits that can transform the way businesses operate. By reducing manual effort, enhancing scalability, and enabling innovative solutions, automation empowers organizations to achieve greater efficiency and competitiveness. As web technologies continue to evolve, the potential for automation will only grow, paving the way for smarter, more responsive, and more secure digital ecosystems. Whether through backend scripting, API integrations, or AI-driven tools, web development remains at the heart of this transformative journey, driving progress and innovation across industries.

Make order from us: @ChimeraFlowAssistantBot

Our portfolio: https://www.linkedin.com/company/chimeraflow


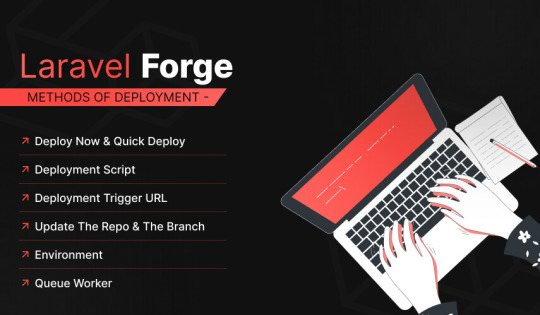

Auto Deployment with Laravel using Forge/Envoyer

We know most readers love to build web applications with the Laravel framework, and deploying these applications is a crucial step. However, while many know server management, only some are particularly fond of configuring and maintaining servers. Luckily, Laravel Forge and Envoyer are available to assist everyone!

When you are ready to deploy your Laravel application to production, there are some essential steps you can take to ensure your application runs as efficiently as possible. This blog covers the key topics to ensure your Laravel application is deployed appropriately.

Before diving in, here is a brief overview of what Envoyer and Forge do for deployment.

Envoyer is a deployment tool used to deploy PHP applications, and the best thing about this tool is zero downtime during deployment. This means that neither your application nor your customers will notice that a new version has been pushed.

A basic overview of the Laravel framework:

The Laravel framework is a free, open-source PHP framework that provides a set of tools and resources to build modern PHP applications. It comprises a complete ecosystem that draws on its built-in features, a range of extensions, and compatible packages. Laravel's growth and popularity have escalated in recent years, with many developers adopting it as their framework of choice for a streamlined development process.

What is deployment in Laravel?

A deployment is a process in which your code is downloaded from your source control provider to your server. This makes it ready for the world to access.

Managing servers is easy with Laravel Forge: a new website can be online quickly, queues and cron jobs are easily set up, and a more advanced setup using a network of servers and load balancers can be configured. Envoyer, on the other hand, manages deployments. This blog will give you an insight into both Forge and Envoyer.

Laravel Forge

Laravel Forge is a tool to manage your servers, and the first step is creating an account. Next, you connect a server provider; several cloud providers are supported out of the box, including Linode, Vultr, Hetzner, and Amazon. You can also manage a custom VPS.

Deploy Now and Quick Deploy

The Quick Deploy feature of Forge allows you to quickly deploy your projects when you push to your source control provider. When you push to the configured quick deploy branch, Laravel Forge will pull your latest code from source control and run the application’s configured deployment script.

Deployment Trigger URL

This option lets you integrate your app with a third-party service or a custom deployment script: when the URL receives a request, the deployment script is triggered.
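For example, a CI job could call the trigger URL once its tests pass. Below is a minimal Python sketch using only the standard library. The URL is a hypothetical placeholder (Forge shows the real one, including its secret token, on the site's deployment page). The sketch also assumes that a plain POST request is enough to start the deployment.

```python
from urllib.request import Request, urlopen

# Hypothetical placeholder: copy the real trigger URL from Forge and keep it secret,
# since anyone holding it can start a deployment.
TRIGGER_URL = "https://forge.example.com/deploy/REPLACE_WITH_REAL_TRIGGER_PATH"


def build_deploy_request(url: str) -> Request:
    """Build (but do not send) an empty POST request to a deployment trigger URL."""
    return Request(url, data=b"", method="POST")


def trigger_deploy(url: str = TRIGGER_URL, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status code."""
    with urlopen(build_deploy_request(url), timeout=timeout) as resp:
        return resp.status
```

Splitting request construction from sending keeps the URL handling testable without touching the network.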

Update the Repo and the Branch

You can use these options if you need to install a newer version of the same project from a different repository or to update the branch in Laravel Forge. If you update the branch, you may also have to update the branch name in the deployment script.

Environment

Laravel Forge automatically generates an environment file for the application, and some details, like database credentials, are added to it automatically. If the app uses an API, you can safely place the API key in the environment file. Even when running a generic PHP web app, you can access the environment variables using the getenv() function.
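A Laravel environment file is a list of `KEY=VALUE` lines. The sketch below shows, in Python, roughly how such a file can be read and combined with real environment variables, in the same spirit as PHP's getenv(). It is deliberately minimal and assumes simple values only: there is no variable interpolation, no escape sequences, and no multi-line values, so it is not a full dotenv implementation.

```python
import os


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]  # strip matching surrounding quotes
        values[key.strip()] = value
    return values


def get_setting(name, env_file_values, default=None):
    """Prefer a real environment variable (like getenv()), then the parsed file, then a default."""
    return os.environ.get(name, env_file_values.get(name, default))
```

Giving real environment variables precedence lets a server override a value without editing the file.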

Queue Worker

Starting a queue worker in Forge is the same as running the queue:work Artisan command. Laravel Forge manages queue workers using a process monitor called Supervisor, which keeps the process running permanently. You can create multiple queues based on queue priority or any other classification that you find helpful.
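In Laravel, priority is expressed by listing queues in order, e.g. `php artisan queue:work --queue=high,default`. With that ordering, the worker processes jobs on `high` before jobs on `default`. The Python sketch below illustrates that draining order only; it is not Laravel's implementation. It re-checks the queues in priority order before every job, so a newly arrived high-priority job would be taken before any remaining lower-priority work.

```python
from collections import deque


def next_job(queues, order):
    """Return (queue_name, job) from the first non-empty queue in priority order, or None."""
    for name in order:
        q = queues.get(name)
        if q:  # an empty deque is falsy
            return name, q.popleft()
    return None


def drain(queues, order):
    """Process jobs until every listed queue is empty; returns the processing order."""
    processed = []
    while (item := next_job(queues, order)) is not None:
        processed.append(item)  # a real worker would run the job here
    return processed
```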

Project Creation in Envoyer

The first step is to create an account on Envoyer and log in; a free trial is available. Via your profile page > Integrations, you can link a source control system such as Bitbucket or GitHub. Enter the access token for the service you are using, and just like that, you're ready to create your first project.

First Deployment:

Envoyer needs to be able to communicate with your Forge server, which is done via an SSH key. You will find the SSH key under the 'key' button on the servers tab in Envoyer. Enter this key in the SSH tab for your server in Laravel Forge.

The final step is to add the environment file. Click the 'Manage Environment' button on the server tab in Envoyer. You then enter an encryption key, which Envoyer uses to encrypt your environment file, since that file contains access tokens and passwords.

Conclusion

This blog gave you an exclusive insight into the Laravel framework and deployment with Forge and Envoyer.

Laravel Forge and Envoyer are incredible tools that make deployment a cakewalk. Both tools have tons of features and an easy-to-use UI that lets you create and provision servers and deploy applications without hassle.

Numerous web app development companies are available in the market. However, clients looking to build a customized app search for lucenta solutions. We code your imagination with the 4D technique, i.e., Determine, Design, Develop, and Deliver. With proficient team members, we can overcome every obstacle on our path to success. Your satisfaction is our prime mantra!


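Load balancing PHP Crons ~ Defcon Pixey/Pixel Sleep States – To load balance PHP crons, the approach is much the same as in C: use the sleep() function to pause between units of work. PHP's sleep() takes its argument in seconds (use usleep() for microseconds); in C, POSIX sleep() also takes seconds, while the Windows Sleep() call takes milliseconds.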
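Here is a rough Python sketch of the same idea. It assumes the goal is to stop many servers' cron jobs from hitting shared resources at the same moment. Each host gets a deterministic start-up delay ("splay"), and the job pauses between batches of work. Python's `time.sleep()` also takes seconds (as a float), so it plays the role of PHP's sleep() here. The function names are illustrative, not from the original post.

```python
import hashlib
import time


def splay_seconds(host: str, window: int = 60) -> int:
    """Deterministic per-host delay in [0, window) so servers don't all start at once."""
    digest = hashlib.sha256(host.encode()).digest()
    return int.from_bytes(digest[:4], "big") % window


def run_in_batches(items, batch_size, pause, handle, sleep=time.sleep):
    """Handle items batch by batch, sleeping `pause` seconds between batches to spread load."""
    for start in range(0, len(items), batch_size):
        if start:
            sleep(pause)  # no pause before the first batch
        for item in items[start:start + batch_size]:
            handle(item)
```

A cron script could call `time.sleep(splay_seconds(socket.gethostname()))` before starting its work. Passing `sleep` as a parameter lets tests record the pauses instead of waiting.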


Deploy Magento on MDS & HeatWave

Magento is an open-source e-commerce platform written in PHP using several other PHP frameworks such as Laminas and Symfony. Magento source code is distributed under the Open Software License v3.0. Deploying Magento is not always easy, as it often requires registration; however, the source code is also available on GitHub.

Magento has supported MySQL 8.0 since version 2.4.0 (July 28th, 2020). Magento's documentation contains a warning about GTID support: this is no longer a problem since MySQL 8.0.13! Also, MDS always uses the latest version of MySQL.

Requirements

To deploy Magento on Oracle Cloud Infrastructure (OCI) using MySQL Database Service (MDS) and HeatWave, you need:

an Internet connection

an OCI account (you can get a free trial on https://www.oracle.com/mysql)

and… nothing else 😉

Once ready, the OCI dashboard is similar to this:

Deployment

The easiest way to deploy a full-stack architecture on OCI is to use Resource Manager and a Stack. The complexity is handled by the stack itself, and Terraform automatically deploys all the required resources.

You can get a stack that deploys the Magento architecture with all the required resources from my GitHub repository: https://github.com/lefred/oci-magento-mds

The zip file is what you need if you want to deploy it manually. If you want to deploy everything automatically, you just need to click on the button. If you click on it, you will launch the Stack Creation wizard, and you first need to accept the Oracle Terms of Use. Once accepted, all information will be pre-filled and you will just need to fill in some mandatory variables:

Architectures

With this stack, you can deploy different architectures. It's up to you to decide which one matches your needs.

Architecture 1 – default

The default architecture is this one: as you can see, there is only one Magento webserver (compute instance) using one MySQL Database Service instance and one OpenDistro for ElasticSearch compute instance.
MDS must always be on a private subnet.

Architecture 2 – multiple webservers

With this deployment, a single database and OpenDistroES work as the backend for multiple Magento webservers. This is common when you want a load balancer in front of your Magento servers serving the same site.

Architecture 3 – multiple isolated sites

As you can see, in this third and final architecture, you can deploy multiple completely isolated Magento sites. They each use their own MDS instance and their own OpenDistroES instance.

You can deploy one of these architectures simply from the Stack Creation screen. It's also possible to use an existing infrastructure if you already have a VCN, an Internet Gateway, subnets, security lists, etc. and want to use them. When you choose to deploy multiple webservers, you need to fill in this form too:

Deploy!

When everything is filled in as you want, you can directly deploy all the resources. Now, the apply job will run for some time (approximately 10 to 15 minutes), and you can follow all the steps in the log console. Important information will also be printed at the end. You can also retrieve those output variables from the menu on the left:

Created Resources

You can also verify what has been created for you, like the instances for example:

Magento

We can copy the public IP of the Magento webserver into our browser, and we will see Magento's empty home page. If you are familiar with Magento, you know that you now need the Magento Admin URI to access the administration dashboard. This URI can be found in the Stack's log output. If you add the URI to the public IP in your browser, you will reach the sign-in form:

Cron Job

You will then see that the cron jobs are not enabled. To enable the cron jobs, you need to access the webserver via SSH.

SSH to Compute Instances

In OCI, to access a compute instance in the public subnet via SSH, we need the IP but also a key.
Usually, when you deploy your resources manually, you are asked to paste, upload, or generate such a key. Resource Manager generated one for us. We need to retrieve that key and save it on our computer. As usual, we can find it in the Stack's outputs. We will paste it into a file called oci.key and use it:

    [fred@imac ~/keys] $ chmod 600 oci.key
    [fred@imac ~/keys] $ ssh -i oci.key opc@130.xx.xx.xxx
    The authenticity of host '130.xx.xx.xxx (130.xx.xx.xxx)' can't be established.
    ECDSA key fingerprint is SHA256:+gVvfYsXMfqoUEHuw6myhIfm9ov748jN+Vf20zr573o.
    Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
    Warning: Permanently added '130.xx.xx.xxx' (ECDSA) to the list of known hosts.
    Activate the web console with: systemctl enable --now cockpit.socket
    Last login: Mon Mar 15 11:38:21 2021 from 132.xxx.xxx.xxx
    [opc@magentoserver1 ~]$

We can now enable the cron job using the magento command-line utility:

    [opc@magentoserver1 ~]$ cd /var/www/html
    [opc@magentoserver1 html]$ sudo -u apache bin/magento cron:install
    Crontab has been generated and saved

The magento utility can also be used to retrieve the admin URI:

    [opc@magentoserver1 html]$ sudo -u apache bin/magento info:adminuri
    Admin URI: /admin_1s35px

Magento Sample Data

As I'm not a Magento developer, I will add some sample data. I will use Magento 2's official sample data using git:

    [opc@magentoserver1 ~]$ sudo dnf -y install git
    ...
    [opc@magentoserver1 ~]$ cd /var/www/html/
    [opc@magentoserver1 html]$ sudo -u apache git clone https://github.com/magento/magento2-sample-data
    ...
    [opc@magentoserver1 html]$ sudo -u apache php -f magento2-sample-data/dev/tools/build-sample-data.php -- --ce-source="/var/www/html/"
    All symlinks you can see at files: /var/www/html/magento2-sample-data/dev/tools/exclude.log

It's recommended to also increase the maximum memory allowed for PHP processes:

    [opc@magentoserver1 html]$ sudo sed -i 's/memory_limit\s*=.*/memory_limit=512M/g' /etc/php.ini
    [opc@magentoserver1 html]$ sudo systemctl reload httpd

When done, we can finish the sample data installation:

    [opc@magentoserver1 html]$ sudo -u apache bin/magento setup:upgrade
    ...
    block_html: 1
    full_page: 1
    Nothing to import.
    [opc@magentoserver1 html]$ sudo -u apache bin/magento cache:flush

Let's verify whether something has changed on the site (we again use the public IP in the browser):

MySQL HeatWave

HeatWave is a flagship technology that is only available in MDS and that considerably accelerates queries that are too long or too complex.

The first requirement to use HeatWave is to have an MDS instance shape compatible with it. I recommend always using a HeatWave-compatible shape for MDS, even if you don't plan to use HeatWave directly. In this example, the shape used is MySQL.HeatWave.VM.Standard.E3. You can directly see that the shape is compatible with HeatWave. If you have such a shape, you can immediately enable HeatWave on it (if not, you will have to deploy a new instance) and follow the wizard. As soon as the HeatWave nodes are ready, you will see them as active in OCI's dashboard:

Using MySQL HeatWave

Now, from the Magento compute instance, we can use MySQL Shell to connect to our MDS instance and verify whether HeatWave is ready:

    SQL> SHOW GLOBAL STATUS LIKE 'rapid_plugin_bootstrapped';
    +---------------------------+-------+
    | Variable_name             | Value |
    +---------------------------+-------+
    | rapid_plugin_bootstrapped | YES   |
    +---------------------------+-------+
    1 row in set (0.0018 sec)

It seems it's all correct.
We also have status variables:

    SQL> show status like 'rapid%' ;
    +---------------------------------+------------+
    | Variable_name                   | Value      |
    +---------------------------------+------------+
    | rapid_change_propagation_status | ON         |
    | rapid_cluster_ready_number      | 2          |
    | rapid_cluster_status            | ON         |
    | rapid_core_count                | 16         |
    | rapid_heap_usage                | 67109005   |
    | rapid_load_progress             | 100.000000 |
    | rapid_net_authentication        | ON         |
    | rapid_plugin_bootstrapped       | YES        |
    | rapid_preload_stats_status      | Available  |
    | rapid_query_offload_count       | 0          |
    | rapid_service_status            | ONLINE     |
    +---------------------------------+------------+

And Performance_Schema tables:

    SQL> show tables like 'rpd%';
    +-------------------------------------+
    | Tables_in_performance_schema (rpd%) |
    +-------------------------------------+
    | rpd_column_id                       |
    | rpd_columns                         |
    | rpd_exec_stats                      |
    | rpd_nodes                           |
    | rpd_preload_stats                   |
    | rpd_query_stats                     |
    | rpd_table_id                        |
    | rpd_tables                          |
    +-------------------------------------+

Choosing tables to be used with HeatWave

It's necessary to tell MySQL which tables can be used by HeatWave, that is, which tables have their data offloaded into HeatWave's in-memory cluster. For this Magento site, I will use the catalog tables. This is an example using catalog_product_index_price:

    SQL> ALTER TABLE catalog_product_index_price SECONDARY_ENGINE = RAPID;
    SQL> ALTER TABLE catalog_product_index_price SECONDARY_LOAD;

I used a script to perform those statements on all catalog% tables.

It's time to browse our Magento shop a bit and then verify whether some queries used HeatWave. To do this, we can use the rapid_query_offload_count status variable in MySQL:

    SQL> SHOW STATUS LIKE 'rapid_query_offload%' ;
    +---------------------------+-------+
    | Variable_name             | Value |
    +---------------------------+-------+
    | rapid_query_offload_count | 3     |
    +---------------------------+-------+
    1 row in set (0.0015 sec)

We can see that 3 queries used HeatWave already.
And of course, it's possible to see which ones, using the Performance_Schema table called rpd_query_stats:

    SQL> SELECT query_text, JSON_PRETTY(QEXEC_TEXT) FROM performance_schema.rpd_query_stats\G
    *************************** 1. row ***************************
    query_text: SELECT MAX(count) AS `count` FROM ( SELECT count(value_table.value_id) AS `count` FROM `catalog_product_entity_varchar` AS `value_table` GROUP BY `entity_id`, `store_id`) AS `max_value`
    json_pretty(QEXEC_TEXT): {
      "timings": {
        "queryEndTime": "2021-03-17 09:26:29.208009",
        "queryStartTime": "2021-03-17 09:26:29.016203",
        "joinOrderStartTime": "2021-03-17 09:26:29.016116"
      },
    ...

With HeatWave in MDS, we can also compare those queries. Let's have a look:

    SQL> SELECT MAX(count) AS `count` FROM (SELECT count(value_table.value_id) AS `count` FROM `catalog_product_entity_varchar` AS `value_table` GROUP BY `entity_id`, `store_id`) AS `max_value`;
    +-------+
    | count |
    +-------+
    |    10 |
    +-------+
    1 row in set (0.0507 sec)

    SQL> SET SESSION use_secondary_engine=OFF;
    SQL> SELECT MAX(count) AS `count` FROM (SELECT count(value_table.value_id) AS `count` FROM `catalog_product_entity_varchar` AS `value_table` GROUP BY `entity_id`, `store_id`) AS `max_value`;
    +-------+
    | count |
    +-------+
    |    10 |
    +-------+
    1 row in set (2.3843 sec)

As you can see, there is already a big difference for such a small query!

Maintenance

For some operations, like reindexing everything, Magento runs some DDLs that are not supported while HeatWave is active.
So if you encounter errors similar to this one:

    SQLSTATE[HY000]: General error: 3890 DDLs on a table with a secondary engine defined are not allowed., query was: TRUNCATE TABLE `catalog_category_product_index_store1_replica`
    Product Categories index process unknown error

you only need to disable HeatWave for the duration of the maintenance:

    SQL> ALTER TABLE catalog_product_index_store1 SECONDARY_ENGINE NULL;

And this time the maintenance can happen without error:

    $ sudo -u apache bin/magento indexer:reindex catalog_product_price
    Product Price index has been rebuilt successfully in 00:14:03

When finished, you can enable HeatWave again:

    SQL> ALTER TABLE catalog_product_index_store1 SECONDARY_ENGINE = RAPID;
    SQL> ALTER TABLE catalog_product_index_store1 SECONDARY_LOAD;

As you can see, it's very easy to deploy Magento on OCI using Resource Manager, and Magento benefits immediately from HeatWave.

You can also check this post on video: https://lefred.be/content/deploy-magento-on-mds-heatwave/
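The post mentions a script that ran the two ALTER TABLE statements on every catalog% table, but the script itself isn't shown. The Python sketch below is hypothetical, not the author's script. It assumes the table names come from something like `SHOW TABLES LIKE 'catalog%'`, and it only builds the SQL text for both the load step and the maintenance step above, which you would then run with your MySQL client. HeatWave may still reject individual tables (for example, ones without a primary key).

```python
def _quote(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def heatwave_load_statements(tables, prefix="catalog"):
    """ALTER TABLE statements that mark each matching table for RAPID and load it."""
    statements = []
    for name in tables:
        if not name.startswith(prefix):  # mirrors LIKE 'catalog%'
            continue
        statements.append(f"ALTER TABLE {_quote(name)} SECONDARY_ENGINE = RAPID;")
        statements.append(f"ALTER TABLE {_quote(name)} SECONDARY_LOAD;")
    return statements


def heatwave_unload_statements(tables):
    """Before DDL-heavy maintenance such as a full reindex: remove the secondary engine."""
    return [f"ALTER TABLE {_quote(name)} SECONDARY_ENGINE NULL;" for name in tables]
```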


Building a Webgame - How We Designed a Stock Market Simulator Out of Nothing and PHP - Part 1

http://topicsofnote.com/?p=9063&utm_source=SocialAutoPoster&utm_medium=Social&utm_campaign=Tumblr

The development of a web-based game such as a stock simulator starts with an idea. Not just 'I want to make a stock market sim' but the idea 'I want to make a different and new stock market sim, one that is better and more unique than anything already out there'.

Then comes research: seeing what other market sims are already out there and working, and seeing how they work from an end user's point of view. There are stock market sims that run using Java and server-based engines, such as the Hollywood Stock Exchange; sims that run using SSI and ASP modules; and open-source stock sims such as the Futures Exchange. We looked at all of them and determined where the opening lay in the market and where demand should be.

We found a large void in prediction markets and futures exchanges for stock market games and simulators when it came to television. There were plenty of websites where you could vote for your show's popularity, and other websites where you could even vote on reality TV shows and try your luck at predicting who was next to go. But no site existed that treated TV as a stock market, so we decided that would be our niche game.

TV is a growth market: every year more TV sets are sold than the year before. TV studios work tirelessly to create new and different shows to put over the airwaves and cable networks to entertain hundreds of millions of people in North America alone. All of those viewers have an opinion about what they like and don't like, which makes it a market unlike any other.

We sat down and wrote out a list of what a TV stock market would be able to do ...

Buy and sell stocks in TV shows, TV channels, studios, and stars

Short sell and cover the same stocks (short selling and covering are the reverse of a buy or sell: if you short a stock, you hope the price drops so you can make money on the drop.)

Rate or vote for popular TV shows

Provide easy-to-use, seamless registration

Design the market system for extreme modularity so we can add new features as we develop them without interrupting the market itself.

That was our original list of features. At the end of the article series, I'll show a list of the current features and show how the market system has grown in complexity but still retains all of the basic modularity we built into it.

The most important thing to start with was to build a system to buy and sell shares, to track the actual buy and sell process, and to enable the market itself to adjust prices as stock is bought and sold.

We decided to go with MySQL for the database, and to implement a cron job to do the background calculations and market balancing.

By using the PHP language, we could more easily implement MySQL access and also allow more flexibility toward the goal of keeping the Stocks Online application as modular as possible.

MySQL and PHP have... been modified during their lifecycles to work together as close to seamlessly as possible, so it made sense to use the two components to build our stock system, as it would allow easier maintenance and future growth.

Starting from a basic math formula of

Buy cost = Stock price x Number of shares + commission

We developed a simple buy and sell system that would enable the player to buy or sell shares, with the system automatically calculating the commission and adding it to the transaction.
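As a small Python illustration of that formula, the sketch below uses the 1.5% commission rate that the post quotes in its expanded algorithm. Two assumptions are made because the post does not say: commission is charged on the whole subtotal, and a sale deducts commission rather than adding it. `Decimal` keeps the money arithmetic exact.

```python
from decimal import Decimal, ROUND_HALF_UP

COMMISSION_RATE = Decimal("0.015")  # 1.5%, the rate quoted in the post's expanded algorithm
CENT = Decimal("0.01")


def buy_cost(price: Decimal, shares: int) -> Decimal:
    """Stock price x number of shares, plus commission on that amount, rounded to cents."""
    subtotal = price * shares
    return (subtotal + subtotal * COMMISSION_RATE).quantize(CENT, rounding=ROUND_HALF_UP)


def sell_proceeds(price: Decimal, shares: int) -> Decimal:
    """Assumed mirror image of a buy: the commission is deducted instead of added."""
    subtotal = price * shares
    return (subtotal - subtotal * COMMISSION_RATE).quantize(CENT, rounding=ROUND_HALF_UP)
```

For example, 100 shares at $10.00 cost $1,015.00 to buy and return $985.00 when sold.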

We knew that we wanted to limit the number of shares a single player could buy, so we set a ceiling of 25,000 shares per stock. This would ensure no one player could hold a monopoly on shares, and it also limited the effect of a single bulk buy or sell.

What started as a simple formula and tracking system quickly ballooned into a complex algorithm that now looked something like this:

    Number of shares available = (max shares allowed - number of shares held by player)
    IF player shares are less than max allowed THEN process buy transaction
        Buy cost = current price * number of shares + commission (1.5%)
    Transaction complete - do market calculations
        Stock price changes by +.01 per 5,000 shares or fraction thereof
    Cron runs, checking transactions and adjusting .01 for every 10,000 shares moved
    Cron also checks whether more than 50,000 shares have moved; if so, the stock's adjustment slows to .01 per cron cycle so that a run on the stock does not occur.
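Here is one possible reading of those steps as runnable Python. It is an interpretation, not the game's actual PHP code. The ceiling and tick sizes come from the post. "Per 10,000 shares moved" is taken to mean per full 10,000, and the throttle above 50,000 shares is applied literally as a single 0.01 step per cron cycle. The sell side mirrors the buy side with a negative direction, as the post describes.

```python
from decimal import Decimal

MAX_SHARES_PER_PLAYER = 25_000  # ceiling per player per stock, from the post
TICK = Decimal("0.01")


def shares_available(held: int) -> int:
    """How many more shares of one stock this player may buy."""
    return max(MAX_SHARES_PER_PLAYER - held, 0)


def immediate_price_change(shares: int, direction: int) -> Decimal:
    """Price move right after a trade: 0.01 per 5,000 shares or fraction thereof.

    direction is +1 for a buy and -1 for a sell.
    """
    blocks = -(-shares // 5_000)  # integer ceiling division
    return TICK * blocks * direction


def cron_adjustment(net_shares_moved: int) -> Decimal:
    """Per-cycle drift applied by the cron job.

    0.01 per full 10,000 shares moved; once more than 50,000 shares have moved,
    the move is throttled to a single 0.01 per cycle.
    """
    sign = 1 if net_shares_moved >= 0 else -1
    moved = abs(net_shares_moved)
    steps = 1 if moved > 50_000 else moved // 10_000
    return TICK * steps * sign
```

For example, buying 5,001 shares moves the price up two ticks immediately, while a cycle that saw 25,000 net shares bought drifts it up a further 0.02.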

To make sure this happened correctly, we had to add several MySQL table entries for the cron job and the system to track stock transactions. That way, not only could we keep a history of transactions and stock movements, but the system could also cross-check itself to stay balanced in the event of data corruption or a bad stock transaction.
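Here is a minimal sketch of that cross-check idea. It uses Python's built-in `sqlite3` module as a stand-in for MySQL, and the table layout is hypothetical. Every trade is written to a transaction log and to a holdings table inside one database transaction. A reconciliation query then flags any holding that no longer matches the sum of its logged trades.

```python
import sqlite3

SCHEMA = """
CREATE TABLE holdings (player_id INTEGER, stock TEXT, shares INTEGER,
                       PRIMARY KEY (player_id, stock));
CREATE TABLE transactions (id INTEGER PRIMARY KEY AUTOINCREMENT,
                           player_id INTEGER, stock TEXT, shares INTEGER);
"""


def record_trade(conn, player_id, stock, shares):
    """Log the trade and update holdings atomically (negative shares for a sale)."""
    with conn:  # commits on success, rolls back on error
        conn.execute("INSERT INTO transactions (player_id, stock, shares) VALUES (?, ?, ?)",
                     (player_id, stock, shares))
        cur = conn.execute("UPDATE holdings SET shares = shares + ? WHERE player_id = ? AND stock = ?",
                           (shares, player_id, stock))
        if cur.rowcount == 0:
            conn.execute("INSERT INTO holdings (player_id, stock, shares) VALUES (?, ?, ?)",
                         (player_id, stock, shares))


def find_mismatches(conn):
    """Rows (player_id, stock, held, logged) where holdings disagree with the log."""
    return conn.execute("""
        SELECT h.player_id, h.stock, h.shares, COALESCE(SUM(t.shares), 0)
        FROM holdings h
        LEFT JOIN transactions t ON t.player_id = h.player_id AND t.stock = h.stock
        GROUP BY h.player_id, h.stock, h.shares
        HAVING h.shares != COALESCE(SUM(t.shares), 0)
    """).fetchall()
```

A cron job could run `find_mismatches` each cycle and rebuild or flag any row it returns.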

The sell transaction was pretty much the same process, but with negatives instead of positives.

We also implemented a voting system whereby players could vote for their favourite TV shows, adjusting the value of that show's stock up or down depending on their vote. If you liked the show, you voted yes; if you didn't like it, you voted no. We set up a random generator to pull 10 show names from the database every time the vote page was loaded so that no show would get more weight than any other, ensuring an even spread of votes. The vote system also rewarded players by adding game money to their account for every vote clicked. If you voted for all 10 choices, you earned $10k. Greed therefore became a factor: vote to get more money to play with.
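Here is a Python sketch of the vote page logic under stated assumptions. The reward per vote ($1,000) is inferred from "$10k for all 10 votes". The size of the price nudge per vote is a hypothetical value, since the post doesn't give it. `random.sample` picks distinct shows uniformly, which gives the even spread the post describes.

```python
import random

VOTES_PER_PAGE = 10
REWARD_PER_VOTE = 1_000  # $10k for all 10 votes implies $1,000 per vote (inferred)
PRICE_STEP = 0.01        # hypothetical nudge per vote; the post doesn't give the amount


def ballot(show_names, rng=random):
    """Pick up to 10 distinct shows uniformly at random, so no show is favoured."""
    return rng.sample(show_names, min(VOTES_PER_PAGE, len(show_names)))


def apply_votes(prices, balance, votes):
    """Apply yes/no votes to show prices and pay the voter; returns the new balance.

    `votes` maps show name -> True (liked) or False (disliked); `prices` is updated in place.
    """
    for show, liked in votes.items():
        delta = PRICE_STEP if liked else -PRICE_STEP
        prices[show] = round(prices[show] + delta, 2)
        balance += REWARD_PER_VOTE
    return balance
```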

Building the player portfolio page was just a matter of setting up a loop to pull each stock held, one after another, and display them on the page with their purchase price, current price, price change, and so on.

The next article will explain how we built the stock ticker and other features, and the future directions the Stocks Online application will go in.

Source by Tim Morrison


How to Migrate from Magento 1 to Magento 2 in Five Easy Steps

Everyone in the e-commerce world is in a frenzy. Now that the June 2020 deadline is near, businesses are scrambling to move from Magento 1 to the latest Magento 2 version. Even though more than 250,000 websites run on Magento, only a handful of them run on the current Magento 2 version. If you are planning to move to Magento 2, you have made the right decision. Planning the migration helps avoid downtime and data loss. Making strategic decisions and creating a solid Magento migration plan will keep your store up and running without any issues.

How do you move your Store from Magento 1 to Magento 2?

Here’s a step by step procedure for migrating your store to Magento 2:

1. Hardware and Software Compatibility

Once you are sure of how you want your new Magento 2 store to look and function, you must consider the software and hardware requirements. Remember, Magento 2 won't function efficiently if you run it on outdated software.

1. Operating systems – Linux x86-64
2. Web servers – Apache 2.2 or 2.4 and nginx 1.x
3. Database – MySQL 5.6, 5.7 or MariaDB 10.0, 10.1, 10.2, Percona 5.7 and other binary-compatible MySQL technologies
4. Supported PHP versions – 7.1.3+ or 7.2.0+
5. Required PHP extensions – bcmath, ctype, curl, dom, gd, hash, intl, iconv, mbstring, mcrypt, openssl, PDO/MySQL, SimpleXML, soap, spl, libxml, xsl, zip, json
6. Verify PHP OPcache and PHP configuration settings, including memory_limit
7. A valid SSL certificate for HTTPS
8. System tools – bash, gzip, lsof, mysql, mysqldump, nice, php, sed, tar
9. Mail server – a Mail Transfer Agent (MTA) or an SMTP server
10. RAM – if you are running Magento 2 on a system with less than 2GB of RAM, your upgrade might fail.

2. Install Magento 2 Software

This is where the actual migration process starts. Download the latest Magento 2 Open Source software from the official website. If the required hardware and software are in place, installation will be quick and easy, and a “Success” message will appear on the screen. If there are any issues, install the missing software. Once installation is complete, it’s time to start migrating your store to Magento 2.

Before you begin the migration, back up your database and files to avoid data loss. To catch errors before they affect your live store, you can also use a staging environment to test-drive the entire process.
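A minimal backup sketch, assuming a MySQL database and a Magento 1 install on the same host. The function name, database name, user and paths are hypothetical placeholders. It only builds the `mysqldump` and `tar` command lines, so you can review them before running anything.

```python
from datetime import date


def backup_commands(db_name, db_user, magento_root, backup_dir, today=None):
    """Build (but do not run) commands that back up a Magento 1 store.

    All names and paths passed in are placeholders for your own setup.
    """
    stamp = (today or date.today()).isoformat()
    dump_file = f"{backup_dir}/{db_name}-{stamp}.sql"
    archive = f"{backup_dir}/magento1-files-{stamp}.tar.gz"
    dump_cmd = [
        "mysqldump",
        "--single-transaction",  # consistent InnoDB snapshot without locking tables
        "--routines",            # include stored procedures and functions
        "--triggers",            # include triggers
        "-u", db_user,
        "-p",                    # bare -p: mysqldump prompts for the password
        f"--result-file={dump_file}",
        db_name,
    ]
    # Archive the whole Magento 1 directory (code, media, app/etc/local.xml).
    tar_cmd = ["tar", "-czf", archive, "-C", magento_root, "."]
    return dump_cmd, tar_cmd
```

Each list can be passed to `subprocess.run(cmd, check=True)` on the Magento 1 server. Restoring the dump into the staging environment is also a good way to confirm that the backup actually works.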

3. Install and Configure Data Migration Tool

The official Data Migration Tool makes it easy to transfer data from Magento 1 to Magento 2. It is a command-line interface (CLI) tool that lets you migrate store settings and configuration, bulk data, and incremental data updates as required. Once you install and configure the tool, start by migrating the settings from Magento 1 to Magento 2. Stop all Magento 1.x cron jobs during the migration so they do not modify data while it is being transferred. You can also use the Data Migration Tool to migrate products, wishlist products, customer orders, customers, categories, ratings, reviews and more.
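The tool runs as `bin/magento` commands from the Magento 2 root: settings first, then bulk data, then incremental (delta) updates. The helper below is hypothetical and only records that order. The `config_xml` argument is the tool's `config.xml` for your Magento 1 version, found under `vendor/magento/data-migration-tool/etc/`; the exact subdirectory depends on your editions and version.

```python
def migration_commands(config_xml, include_delta=True):
    """Return Data Migration Tool commands in the order they are usually run.

    Run each one from the Magento 2 root directory.
    """
    steps = [
        # 1. Store settings and configuration.
        ["bin/magento", "migrate:settings", config_xml],
        # 2. Bulk data: products, customers, orders, reviews and so on.
        ["bin/magento", "migrate:data", config_xml],
    ]
    if include_delta:
        # 3. Incremental updates for data added to Magento 1 after step 2.
        steps.append(["bin/magento", "migrate:delta", config_xml])
    return steps
```

The delta step is what keeps the new store in sync while Magento 1 keeps taking orders. That is why it is stopped only at cutover, once the Magento 2 store has been tested.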

Avoid creating new products, attributes or categories in the Magento 2 store before migrating data: the tool will overwrite these new entities, and you will have to redo the work. Run a test migration first so that you do not miss any important element.

4. Migrate Themes, Extensions and Customizations

Check whether your Magento 1 theme is compatible with Magento 2 standards. If it is not, you will have to buy a new theme from the Magento Marketplace or build a custom theme that meets Magento 2 requirements. A Magento 2 compatible theme is essential because the old Magento 1 XML structure won’t work with the new version.

You must also consider third-party extensions. Make a list of the extensions that are essential to your store. Discuss your requirements with a Magento extension developer who can port them, or buy equivalent extensions that are compatible with Magento 2.

Customizations are less of a worry. A Magento code migration tool can convert custom Magento 1.x code to Magento 2, saving time and reducing errors. You can use it to migrate specific files such as layout XML files, config XML files and PHP files. However, you will likely still need to do some editing manually.

5. Run Tests Before You Go Live

After migrating all store data, themes, extensions and customizations, run multiple tests to confirm that the website works properly. Check every aspect of the site in detail. Migrating from Magento 1 to Magento 2 is not a simple task, and any mistake can lead to additional downtime and data loss.
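Automated checks do not replace clicking through the store, but a quick smoke test can catch broken pages after each migration run. This is a minimal sketch using only the Python standard library. The page paths are examples of common Magento 2 storefront routes; swap in the pages that matter to your store, and point it at your own staging URL.

```python
from urllib.error import HTTPError
from urllib.request import urlopen

# Example storefront paths; replace with the pages that matter in your store.
SMOKE_PATHS = ["/", "/customer/account/login/", "/checkout/cart/"]


def fetch_status(url, timeout=10):
    """Return the HTTP status code for url, or None if the request failed."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status  # status after any redirects were followed
    except HTTPError as err:
        return err.code  # server answered with an error status (404, 500, ...)
    except OSError:
        return None  # DNS failure, refused connection, timeout, etc.


def smoke_test(base_url, paths=SMOKE_PATHS, fetch=fetch_status):
    """Return (path, status) pairs for every page that did not answer 200."""
    failures = []
    for path in paths:
        status = fetch(base_url.rstrip("/") + path)
        if status != 200:
            failures.append((path, status))
    return failures
```

The `fetch` parameter lets you swap in a fake function when testing the checker itself. An empty result from `smoke_test("https://staging.example.com")` means every listed page answered 200.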

If you have made any manual changes to the data, errors in the incremental data migration are more likely, so test your Magento 2 store for problems. Once you are sure the new store is working properly, put your Magento 1 system in maintenance mode, stop the incremental updates and start the Magento 2 cron jobs. Finally, point your DNS records and load balancers at the Magento 2 store to make it go live.

All the best!

If you are new to Magento or simply want the migration done faster, hire a Magento migration expert. A professional can help you migrate from Magento 1 to Magento 2 safely and without stress, keeping data loss and downtime to a minimum.


Vultr is Now Supported on Forge

News / October 02, 2017

Laravel Forge announced last week that Vultr now has first-party support. You can now add Vultr as a server provider by entering your API credentials in the Forge Server Providers configuration.

Vultr, founded in 2014, is a very competitively priced cloud option, with servers for as little as $2.50 a month and fifteen datacenters around the world. It joins Linode, DigitalOcean and AWS as Forge’s fourth provider.

Forge lets you painlessly create and manage PHP 7.1 servers that include MySQL, Redis, Memcached and everything else you need to run Laravel applications. You can also easily manage SSL certificates, queue workers, cron jobs and load balancers, among other features.

Forge has two plans, both with a five-day trial: a Growth plan and a Business plan. Both plans allow unlimited sites and deployments, and the Business plan adds the ability to share servers with teammates and priority support. Give Forge a try today with Vultr!

via Laravel News http://ift.tt/2xKj0vk


Free Premium Hosting For Lifetime

Free Pro Plan

1 Website

Free cPanel

1500MB / 3000MB Free Space

200 GB Bandwidth

3 Email Accounts

2 Databases

Free Website Builder

PHP & MYSQL

SEO Tools

Load Balancer

Free CDN

Basic SSL

High Speed Email

99.9% Uptime

Free Domain (.tk, .ga for 1 year)

Latest PHP Version 7.x

Script Installer

Cron jobs

IP Deny Manager

Backup/Restore

Multi PHP

No Ads

Unlimited Free Plan

Unlimited Disk…
