#Polynomial interpolation

Text

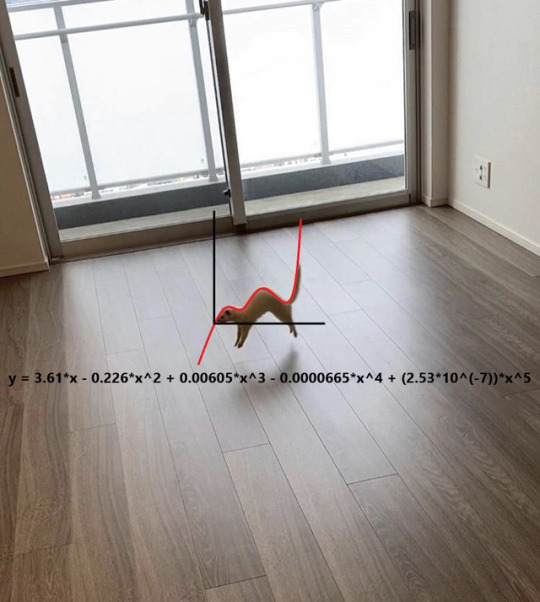

Understanding growth patterns of mung beans (Vigna radiata) through polynomial interpolation: Implications for cultivation practices

Abstract: This study explores the germination and growth patterns of mung bean seeds (Vigna radiata) in a controlled environment by fitting the data with polynomial interpolation. Mung beans are popular in Asian diets, appear in many dishes, and are highly nutritious. The study is meant to describe the germination and growth pattern of mung beans over six successive days,…

0 notes

Text

to my fellow stem nerds who are trying to figure out this “art” thing later in life, through learning to draw, to write, etc.:

art is not this perfect process where each step leads to the next and to the next in a nice orderly fashion, which can be scary, if, like me, you’re not used to that

instead, approach art as an iterative process, like an interpolation algorithm

(for those who never took Numerical Analysis, it’s the process of writing a function to graph a series of points or to approximate a function as a polynomial)

the first run through the algorithm (your pencil sketch or first draft) is going to be shit, AND THATS EXACTLY WHAT ITS SUPPOSED TO DO AND EVERY MATHEMATICIAN EXPECTS THAT

because then: you feed that first run back into the algorithm, for your second iteration (use the sketch as a guide for outlining, or use the first draft to edit or template into the second draft)

continue feeding the prev iteration into the algorithm until you reach the iteration with your desired accuracy (keep drawing, keep writing until it’s there)

and just like with interpolation, each round gets closer than the last, so you just gotta keep going. whatever’s not perfect in this round will be fixed in a later one

does it feel inefficient? hell to the freaking yes. but so is interpolation, especially if you already know the “correct” function, e.g. sin(x), and you’re trying to approximate it as a polynomial. no matter how many thousands, millions, or billions of iterations you do, that polynomial is never going to match sin(x)

BUT that’s not the point: approximating a known function like sin(x) is the simplest way to tell a computer how to graph it
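here's a tiny sketch of that sin(x) point. one swap to flag: it uses Taylor (Maclaurin) polynomials instead of interpolation, because they're the shortest thing to write down. the names `sin_taylor` and `max_error` are made up for this example. each extra term shrinks the worst-case gap on [-pi, pi], but the gap never reaches zero:

```python
import math

def sin_taylor(x, terms):
    """Sum the first `terms` nonzero terms of the Maclaurin series for sin(x)."""
    total = 0.0
    for k in range(terms):
        total += (-1) ** k * x ** (2 * k + 1) / math.factorial(2 * k + 1)
    return total

def max_error(terms, samples=200):
    """Largest |polynomial - sin| on an even grid over [-pi, pi]."""
    xs = [-math.pi + 2 * math.pi * i / samples for i in range(samples + 1)]
    return max(abs(sin_taylor(x, terms) - math.sin(x)) for x in xs)

# Each "iteration" (more terms) gets closer, like each draft of a sketch.
for terms in (1, 2, 4, 8):
    print(terms, max_error(terms))
```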

in the same way, the point of art is not to be perfect. it’s the simplest way for us humans to bring our ideas into the world, annoyingly imperfect process and all

7 notes

Note

you have been polynomial interpolated

I think i wouldve noticed that

5 notes

Text

Polynomial and Piecewise Linear Interpolation MATH2070 Lab #7

Introduction: We saw in the last lab that the interpolating polynomial could get worse (in the sense that values at intermediate points are far from the function) as its degree increased. This means that our strategy of using equally spaced data for high degree polynomial interpolation is a bad idea. In this lab, we will test other possible strategies for spacing the data and then we will look at…
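The effect described in the introduction can be reproduced with a short sketch. Everything here is an assumption for illustration, not taken from the lab: the test function 1/(1 + 25x^2) (the classic Runge example), the interval [-1, 1], and Chebyshev nodes as one possible alternative spacing.

```python
import math

def lagrange_eval(xs, ys, x):
    """Evaluate the interpolating polynomial through (xs, ys) at x (Lagrange form)."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total

def runge(x):
    # Assumed test function; the lab may use a different one.
    return 1.0 / (1.0 + 25.0 * x * x)

def equispaced(n):
    """n + 1 equally spaced nodes on [-1, 1]."""
    return [-1.0 + 2.0 * k / n for k in range(n + 1)]

def chebyshev(n):
    """n + 1 Chebyshev nodes on [-1, 1], clustered toward the endpoints."""
    return [math.cos((2 * k + 1) * math.pi / (2 * (n + 1))) for k in range(n + 1)]

def max_error(nodes, f=runge, samples=400):
    """Largest interpolation error on an even grid over [-1, 1]."""
    ys = [f(x) for x in nodes]
    grid = [-1.0 + 2.0 * i / samples for i in range(samples + 1)]
    return max(abs(lagrange_eval(nodes, ys, x) - f(x)) for x in grid)

for n in (5, 10, 20):
    print(n, max_error(equispaced(n)), max_error(chebyshev(n)))
```

With equally spaced nodes the error grows as the degree goes from 10 to 20, while with the Chebyshev nodes it shrinks.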

0 notes

Text

Evaluate 2-D Hermite series on the Cartesian product of x and y with 3d array of coefficient using NumPy in Python

In this article, we will discuss how to evaluate a 2-D Hermite series on the Cartesian product of x and y with a 3-D array of coefficients in Python and NumPy. numpy.polynomial.hermite.hermgrid2d method: Hermite polynomials are a classical family of orthogonal polynomials that appear in approximation theory. To evaluate such a series, NumPy provides a function called hermite.hermgrid2d, which evaluates a 2-D Hermite series at every point of the Cartesian product of x and y. This function converts the parameters x and y to arrays only if they are tuples or lists; otherwise, they are left unchanged and, […]
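A minimal sketch of the call, assuming NumPy is installed. The coefficient values and sample points are made up for illustration. With a 3-D coefficient array, `c[i, j, k]` is the coefficient of H_i(x) * H_j(y) in the k-th series, and the result has shape `c.shape[2:] + x.shape + y.shape`.

```python
import numpy as np
from numpy.polynomial.hermite import hermgrid2d

# Hypothetical coefficients: three 2-D series, each of degree 1 in x and in y.
c = np.arange(12, dtype=float).reshape(2, 2, 3)
x = np.array([1.0, 2.0])
y = np.array([0.0, 1.0, 3.0])

# Evaluate every series at every (x[a], y[b]) pair of the Cartesian product.
values = hermgrid2d(x, y, c)
print(values.shape)  # one 2x3 grid per series
print(values)
```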

0 notes

Text

Back to the Farm

In addition to Flag Day and Army Day, which turned out to be a bit of a fustercluck this year, this is World Blood Donor Day, National Bourbon Day, National Rosé Day, World Gin Day, Doll Day, International Bath Day, International Knit in Public Day, Monkey Around Day, National Strawberry Shortcake Day, and World Juggling Day.

On this day in 1822, Charles Babbage proposed a “difference engine” in a paper to the Royal Astronomical Society. A difference engine is an automatic mechanical calculator designed to tabulate polynomial functions. The name is derived from the method of finite differences, a way to interpolate or tabulate functions by using a small set of polynomial coefficients. Some of the most common mathematical functions used in engineering, science and navigation are built from logarithmic and trigonometric functions, which can be approximated by polynomials, so a difference engine can compute many useful tables.
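For the curious, the method of finite differences can be sketched in a few lines of Python. This is only an illustration of the idea, not a model of Babbage's machine; the polynomial x^2 + x + 41 and the function names are chosen for the example. Once the starting differences are known, every further table entry needs additions only.

```python
def initial_differences(poly, degree, start=0):
    """Return [p(start), first difference, second difference, ...]."""
    row = [poly(start + k) for k in range(degree + 1)]
    diffs = []
    while row:
        diffs.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs

def tabulate(diffs, count):
    """Produce `count` successive values of the polynomial using additions only."""
    state = list(diffs)
    values = []
    for _ in range(count):
        values.append(state[0])
        # Each register absorbs the difference stored in the next one.
        for i in range(len(state) - 1):
            state[i] += state[i + 1]
    return values

def p(x):
    return x * x + x + 41

table = tabulate(initial_differences(p, degree=2), count=8)
print(table)
```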

June 14 is the birthday of actor and singer Burl Ives (1909), actors Gene Barry and Sam Wanamaker (1919), journalist and politician Pierre Salinger (1925), singer-songwriter Boy George (1961), and tennis player Steffi Graf (1969).

The skies were blue this morning but the temperature remained cool, starting with a low of 44 degrees and reaching a high of just 70.

My blood sugar declined nicely, to 166. I would like to think the bit of walking done yesterday and the decent dinner helped.

Following our morning coffee and online brain games, Nancy and I had oatmeal for breakfast. Then we showered and dressed, and a little while later we headed out to Marcola. We wandered around the family farm with Kalen and her friend Iris and a total of four dogs. I took my new camera along and engaged in a bit of photographic fun.

Also present were Kurt, Kyle and Aaron, who were working on cutting hay and fiddling with the various related machines. Seran showed up with Sophie a little while later. Eventually Kalen, Iris, Nancy and I walked back to the dome. Nancy and I munched on chips and cheese while we visited a bit more.

Then Nancy and I headed for home, stopping briefly to take a few pictures of some big rolled hay bales along the way. We swung by Albertsons for some heavy cream and chicken bouillon, items necessary for making tonight’s dinner. We also got an iced mocha at Old Crow.

After taking a nap, I spent some time on Flickr. My account received 2,505 views for the day, and I uploaded three shots from the farm walk. Nancy had a glass of wine.

For our dinner, we heated the leftover rice, cooked some green beans, and stirred up an easy version of chicken piccata using a bit of chicken that had been put aside from that used for the Tuscan recipe we finished off yesterday. That turned out to be quite tasty.

Following the clean-up and a brief respite, we turned to our streaming for the evening. We began with Thursday’s Colbert, featuring guest John C. Reilly. Then we watched the final two episodes of “Sherlock & Daughter,” which left an opening for a possible sequel.

Tonight’s low may reach 44 degrees again, but tomorrow’s high promises to get into the 70s, with sunshine almost the entire day.

I will return to church tomorrow to handle the service stream, and Rector Ann will be back at work, both presiding and preaching. Sunday evening, of course, I will get to my recovery meeting. Between those occasions, there’s no telling what trouble we might get into tomorrow.

0 notes

Text

Linearinterpolationcalculator is a professional-grade interpolation calculator with linear, polynomial, and cubic spline methods. Interactive visualization, step-by-step solutions, and CSV export for engineers and scientists.

1 note

Video

Q 4(a) | Lagrange Interpolation Polynomial | DU BSc (H) Numerical Analys...

#youtube #NumericalAnalysis #LagrangeInterpolation #DU4thSem #BScMaths #Interpolation #PreviousYearQuestion #DU2023 #Mathematics #SemesterExam #MathsByQuestion

0 notes

Text

How do you handle missing data in a dataset?

Handling missing data is a crucial step in data preprocessing, as incomplete datasets can lead to biased or inaccurate analysis. There are several techniques to deal with missing values, depending on the nature of the data and the extent of missingness.

1. Identifying Missing Data

Before handling missing values, it is important to detect them using functions like .isnull() in Python’s Pandas library. Understanding the pattern of missing data (random or systematic) helps in selecting the best strategy.

2. Removing Missing Data

If the missing values are minimal (e.g., less than 5% of the dataset), you can remove the affected rows using dropna().

If entire columns contain a significant amount of missing data, they may be dropped if they are not crucial for analysis.
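The row-removal rule can be sketched in plain Python, with no Pandas required. The 5% threshold comes from the text above; the sample rows and the helper names `missing_fraction` and `drop_incomplete` are hypothetical.

```python
def missing_fraction(rows):
    """Fraction of rows that contain at least one missing (None) value."""
    flagged = sum(1 for row in rows if any(v is None for v in row.values()))
    return flagged / len(rows)

def drop_incomplete(rows):
    """Keep only rows where every field is present."""
    return [row for row in rows if all(v is not None for v in row.values())]

# Hypothetical dataset: 25 readings, one of them missing.
rows = [{"id": i, "temp": 20.0 + i} for i in range(25)]
rows[7]["temp"] = None

fraction = missing_fraction(rows)
# Drop rows only when few are affected; otherwise keep them for imputation.
cleaned = drop_incomplete(rows) if fraction < 0.05 else rows
print(fraction, len(cleaned))
```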

3. Imputation Techniques

Mean/Median/Mode Imputation: For numerical data, replacing missing values with the mean or median of the column keeps every row in the dataset; the mode plays the same role for categorical data.

Forward or Backward Fill: For time-series data, forward filling (ffill()) or backward filling (bfill()) propagates values from previous or next entries.

Interpolation: Using methods like linear or polynomial interpolation estimates missing values based on trends in the dataset.

Predictive Modeling: More advanced techniques use machine learning models like K-Nearest Neighbors (KNN) or regression to predict and fill missing values.
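To make the first three imputation techniques concrete, here is a small pure-Python sketch of what each one computes. The sample series and function names are invented for illustration; in Pandas the corresponding tools would be fillna(), ffill(), and interpolate().

```python
from statistics import mean

def mean_impute(values):
    """Replace each missing value with the mean of the observed values."""
    fill = mean([v for v in values if v is not None])
    return [fill if v is None else v for v in values]

def forward_fill(values):
    """Propagate the last observed value forward into the gaps."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled

def linear_interpolate(values):
    """Fill interior gaps along the straight line between the neighbouring known points."""
    out = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (values[right] - values[left]) / (right - left)
        for i in range(left + 1, right):
            out[i] = values[left] + step * (i - left)
    return out

series = [10.0, None, None, 16.0, 18.0, None, 22.0]
print(mean_impute(series))
print(forward_fill(series))
print(linear_interpolate(series))
```

On this steadily rising series, linear interpolation recovers the trend, while mean imputation and forward fill flatten it. That is why the choice of method depends on the nature of the data.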

4. Using Algorithms That Handle Missing Data

Some implementations of tree-based algorithms, such as decision trees and random forests, can handle missing values internally without imputation.

By applying these techniques, data quality is improved, leading to more accurate insights. To master such data preprocessing techniques, consider enrolling in the best data analytics certification, which provides hands-on training in handling real-world datasets.

0 notes

Text

Research Problem: Construction and Validation of a Spectral Operator for the Riemann Hypothesis Integrating Quantum Computing, Machine Learning, and Differential Methods

General Description

The Riemann Hypothesis (RH) states that all nontrivial zeros of the Riemann zeta function have a real part equal to \( \frac{1}{2} \). The Hilbert-Pólya conjecture suggests that the imaginary parts of these zeros correspond to the eigenvalues of a Hermitian operator \( H \). The central challenge is to explicitly construct a differential operator \( H \) whose eigenvalues align with the zeros of the zeta function, ensuring that it is self-adjoint and statistically compatible with Random Matrix Theory (RMT).

Previous studies indicate that 12th-order differential operators, adjusted via polynomial interpolation, can generate eigenvalues consistent with the zeros of the Riemann zeta function. However, fundamental questions remain unresolved:

Mathematical justification for the choice of the differential order of the operator.

Formal proof of the self-adjointness of \( H \) in a suitable functional space.

Refinement of the potential function \( V(x) \) to ensure higher spectral precision.

Generalization of the model to automorphic \( L \)-functions.

Development of a robust computational framework for large-scale validation.

This research problem seeks to integrate quantum computing, machine learning, and advanced spectral methods to address these issues and significantly advance the formulation of a spectral model for the RH.

Objectives of the Problem

The objective is to construct, validate, and test a spectral operator \( H \) that models the zeros of the zeta function, ensuring:

Mathematical Construction of the Operator \( H \)

Rigorously determine the differential order of the operator and justify the theoretical choice.

Explore alternatives such as pseudo-differential operators for greater flexibility.

Formalize the Hermitian and self-adjoint properties of \( H \) using functional analysis.

Refinement of the Potential \( V(x) \)

Move beyond pure polynomial interpolation and integrate hybrid methods such as:

Fourier-Wavelet transformations to capture local spectral structures.

Deep learning (neural networks) for iterative refinement of \( V(x) \).

Quantum Variational Methods to minimize deviations between eigenvalues and zeta function zeros.

Statistical Validation and RMT Comparison

Test whether the eigenvalues of \( H \) follow the distribution of the Gaussian Unitary Ensemble (GUE).

Apply statistical tests such as Kolmogorov-Smirnov, Anderson-Darling, and two-point correlation analysis.

Expand computational testing to \( 10^{12} \) zeros of the zeta function.

Generalization and Applications

Extend the methodology to automorphic \( L \)-functions and investigate similar patterns.

Explore connections with noncommutative geometry and quantum spectral theory.

Implement a scalable computational model using parallel computing and GPUs.

Formulation of the Problem

Given a differential operator of order \( n \):

\[ H = -\frac{d^n}{dx^n} + V(x), \]

where \( V(x) \) is an adjustable potential, we seek to answer the following questions:

What is the optimal choice of \( n \) that provides the best approximation to the zeros of the zeta function?

Evaluate different differential orders (\( n = 4, 6, 8, 12, 16 \)).

Test pseudo-differential operators such as \( H = |\nabla|^\alpha + V(x) \).

Can the operator \( H \) be rigorously proven to be self-adjoint?

Demonstrate that \( H \) is Hermitian and defined in an appropriate Hilbert space.

Use the Kato-Rellich theorem to establish that \( H \) is self-adjoint, which guarantees a real spectrum.

How can the refinement of the potential \( V(x) \) be improved to better capture the zeta zeros?

Apply neural networks to optimize \( V(x) \) dynamically.

Use Fourier-Wavelet methods to smooth out spurious oscillations.

Do the eigenvalues of \( H \) statistically follow the GUE distribution?

Apply rigorous statistical tests to validate the hypothesis.

Can the model be generalized to other automorphic \( L \)-functions?

Extend the approach to different \( L \)-functions.
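For the self-adjointness question above, a natural first step is to check that \( H \) is symmetric. The following is a sketch under assumptions that are not stated in the problem: \( V \) is real-valued, \( n \) is even (as in all the orders listed above), and \( f, g \) lie in a domain where every boundary term from integration by parts vanishes.

```latex
\begin{aligned}
\langle f, H g \rangle
  &= \int \overline{f(x)} \left( -g^{(n)}(x) + V(x)\, g(x) \right) dx \\
  &= -(-1)^{n} \int \overline{f^{(n)}(x)}\, g(x)\, dx
     + \int \overline{V(x) f(x)}\, g(x)\, dx
     && \text{(integrate by parts $n$ times)} \\
  &= \langle H f, g \rangle
     && \text{(when $n$ is even)}
\end{aligned}
```

Symmetry alone is not self-adjointness: the domain of \( H \) must also coincide with the domain of its adjoint, which is where a result such as Kato-Rellich would be needed. For odd \( n \) the sign flips and \( -\frac{d^n}{dx^n} \) is not symmetric.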

Methodology

The solution to this problem will be structured into four phases:

Phase 1: Construction and Justification of the Operator \( H \)

Mathematical modeling of the differential operator and exploration of alternatives.

Theoretical demonstration of self-adjointness and Hermitian properties.

Definition of the appropriate functional domain for \( H \).

Phase 2: Refinement of the Potential \( V(x) \)

Development of a hybrid model for \( V(x) \) using:

Polynomial interpolation + Fourier-Wavelet analysis.

Machine Learning for adaptive refinement.

Quantum Variational Methods for spectral optimization.

Phase 3: Computational and Statistical Validation

Computational implementation for calculating the eigenvalues of \( H \).

Comparison with zeta function zeros up to \( 10^{12} \).

Application of statistical tests to verify adherence to GUE.

Phase 4: Generalization and Applications

Testing for automorphic \( L \)-functions.

Exploring connections with noncommutative geometry.

Developing a scalable computational framework.
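The statistical check in Phase 3 could be prototyped as below. This is a sketch under loud assumptions: it does not use eigenvalues of \( H \) at all. As stand-in data it draws eigenvalue gaps from random 2x2 Hermitian (GUE) matrices, and as the reference distribution it uses the GUE Wigner surmise, which is exact for the 2x2 case and only an approximation for large matrices. All function names are hypothetical.

```python
import math
import random

def wigner_cdf(s):
    """CDF of the GUE Wigner surmise p(s) = (32 / pi^2) s^2 exp(-4 s^2 / pi)."""
    return math.erf(2 * s / math.sqrt(math.pi)) - (4 * s / math.pi) * math.exp(-4 * s * s / math.pi)

def gue_2x2_spacing(rng):
    """Eigenvalue gap of a random Hermitian matrix [[a, b + ic], [b - ic, d]]."""
    a, d = rng.gauss(0, 1), rng.gauss(0, 1)
    b, c = rng.gauss(0, math.sqrt(0.5)), rng.gauss(0, math.sqrt(0.5))
    return math.sqrt((a - d) ** 2 + 4 * (b * b + c * c))

def ks_statistic(samples, cdf):
    """Kolmogorov-Smirnov distance between the empirical CDF and `cdf`."""
    xs = sorted(samples)
    n = len(xs)
    return max(max((i + 1) / n - cdf(x), cdf(x) - i / n) for i, x in enumerate(xs))

rng = random.Random(0)
spacings = [gue_2x2_spacing(rng) for _ in range(5000)]

# Rescale to unit mean spacing, the usual normalization before comparing with RMT.
mean_gap = sum(spacings) / len(spacings)
unit_spacings = [s / mean_gap for s in spacings]

d_stat = ks_statistic(unit_spacings, wigner_cdf)
print(d_stat)
```

A small KS distance here only confirms that the test harness behaves as expected on data known to follow the surmise. Applying it to the spectrum of \( H \) would require replacing `spacings` with the unfolded gaps between computed eigenvalues.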

Expected Outcomes

Mathematical formalization of the operator \( H \), proving its Hermitian and self-adjoint properties.

Optimization of the potential \( V(x) \) through refinement via machine learning and quantum methods.

Statistical confirmation of the adherence of eigenvalues to the GUE, strengthening the Hilbert-Pólya conjecture.

Expansion of the methodology to other automorphic \( L \)-functions.

Development of a scalable computational model, allowing large-scale validation.

Conclusion

This research problem unifies all previous approaches and introduces new computational and mathematical elements to advance the study of the Riemann Hypothesis. The combination of spectral theory, machine learning, quantum computing, and random matrix statistics offers an innovative path to investigate the structure of prime numbers and possibly resolve one of the most important problems in mathematics.

If this approach is successful, it could represent a significant breakthrough in the spectral proof of the Riemann Hypothesis, while also opening new frontiers in mathematics, quantum physics, and computational science.

0 notes

Text

VE477 Homework 8

Questions preceded by a * are optional. Although they can be skipped without any deduction, it is important to know and understand the results they contain. Ex. 1: Fast multi-point evaluation and interpolation. Let \( R \) be a commutative ring, \( u_0, \dots, u_{n-1} \) be \( n \) elements in \( R \), and \( m_i = X - u_i \), with \( 0 \le i < n \), be \( n \) degree 1 polynomials in \( R[X] \). Without loss of generality we assume n to be a power…

0 notes

Text

Soft Computing, Volume 29, Issue 1, January 2025

1) KMSBOT: enhancing educational institutions with an AI-powered semantic search engine and graph database

Author(s): D. Venkata Subramanian, J. Chandra, V. Rohini

Pages: 1 - 15

2) Stabilization of impulsive fuzzy dynamic systems involving Caputo short-memory fractional derivative

Author(s): Truong Vinh An, Ngo Van Hoa, Nguyen Trang Thao

Pages: 17 - 36

3) Application of SaRT–SVM algorithm for leakage pattern recognition of hydraulic check valve

Author(s): Chengbiao Tong, Nariman Sepehri

Pages: 37 - 51

4) Construction of a novel five-dimensional Hamiltonian conservative hyperchaotic system and its application in image encryption

Author(s): Minxiu Yan, Shuyan Li

Pages: 53 - 67

5) European option pricing under a generalized fractional Brownian motion Heston exponential Hull–White model with transaction costs by the Deep Galerkin Method

Author(s): Mahsa Motameni, Farshid Mehrdoust, Ali Reza Najafi

Pages: 69 - 88

6) A lightweight and efficient model for botnet detection in IoT using stacked ensemble learning

Author(s): Rasool Esmaeilyfard, Zohre Shoaei, Reza Javidan

Pages: 89 - 101

7) Leader-follower green traffic assignment problem with online supervised machine learning solution approach

Author(s): M. Sadra, M. Zaferanieh, J. Yazdimoghaddam

Pages: 103 - 116

8) Enhancing Stock Prediction ability through News Perspective and Deep Learning with attention mechanisms

Author(s): Mei Yang, Fanjie Fu, Zhi Xiao

Pages: 117 - 126

9) Cooperative enhancement method of train operation planning featuring express and local modes for urban rail transit lines

Author(s): Wenliang Zhou, Mehdi Oldache, Guangming Xu

Pages: 127 - 155

10) Quadratic and Lagrange interpolation-based butterfly optimization algorithm for numerical optimization and engineering design problem

Author(s): Sushmita Sharma, Apu Kumar Saha, Saroj Kumar Sahoo

Pages: 157 - 194

11) Benders decomposition for the multi-agent location and scheduling problem on unrelated parallel machines

Author(s): Jun Liu, Yongjian Yang, Feng Yang

Pages: 195 - 212

12) A multi-objective Fuzzy Robust Optimization model for open-pit mine planning under uncertainty

Author(s): Sayed Abolghasem Soleimani Bafghi, Hasan Hosseini Nasab, Ali reza Yarahmadi Bafghi

Pages: 213 - 235

13) A game theoretic approach for pricing of red blood cells under supply and demand uncertainty and government role

Author(s): Minoo Kamrantabar, Saeed Yaghoubi, Atieh Fander

Pages: 237 - 260

14) The location problem of emergency materials in uncertain environment

Author(s): Jihe Xiao, Yuhong Sheng

Pages: 261 - 273

15) RCS: a fast path planning algorithm for unmanned aerial vehicles

Author(s): Mohammad Reza Ranjbar Divkoti, Mostafa Nouri-Baygi

Pages: 275 - 298

16) Exploring the selected strategies and multiple selected paths for digital music subscription services using the DSA-NRM approach consideration of various stakeholders

Author(s): Kuo-Pao Tsai, Feng-Chao Yang, Chia-Li Lin

Pages: 299 - 320

17) A genomic signal processing approach for identification and classification of coronavirus sequences

Author(s): Amin Khodaei, Behzad Mozaffari-Tazehkand, Hadi Sharifi

Pages: 321 - 338

18) Secure signal and image transmissions using chaotic synchronization scheme under cyber-attack in the communication channel

Author(s): Shaghayegh Nobakht, Ali-Akbar Ahmadi

Pages: 339 - 353

19) ASAQ—Ant-Miner: optimized rule-based classifier

Author(s): Umair Ayub, Bushra Almas

Pages: 355 - 364

20) Representations of binary relations and object reduction of attribute-oriented concept lattices

Author(s): Wei Yao, Chang-Jie Zhou

Pages: 365 - 373

21) Short-term time series prediction based on evolutionary interpolation of Chebyshev polynomials with internal smoothing

Author(s): Loreta Saunoriene, Jinde Cao, Minvydas Ragulskis

Pages: 375 - 389

22) Application of machine learning and deep learning techniques on reverse vaccinology – a systematic literature review

Author(s): Hany Alashwal, Nishi Palakkal Kochunni, Kadhim Hayawi

Pages: 391 - 403

23) CoverGAN: cover photo generation from text story using layout guided GAN

Author(s): Adeel Cheema, M. Asif Naeem

Pages: 405 - 423

0 notes

Text

Unlocking the Power of Data Analysis with the MatDeck Math Functions Listing

Data analysis now drives decisions in nearly every industry. Whether you are analyzing financial data, doing scientific research, or processing large datasets for machine learning, the right tool matters. One such tool is MatDeck, a mathematical computing platform built for complex calculations and analyses. This post looks at how the MatDeck Math Functions Listing supports data analysis through key MD functions that streamline data processing, statistical analysis, and optimization.

What Are MD Functions?

MD functions in MatDeck are built-in mathematical operations that reduce the complexity of data processing and analysis. They range from simple arithmetic to advanced statistical methods and linear algebra. With MatDeck Math Functions, data analysts, scientists, and engineers can process data, build models, and interpret results with less effort.

Important MD Functions for Data Analysis

MatDeck provides a range of MD functions that cover different aspects of data analysis. Below are some of the most useful for data analysts:

Statistical Analysis Functions

Among the most useful MD functions in MatDeck are those for statistical analysis. They summarize large datasets quickly, making patterns and trends easier to see.

Mean and Median: Use the average() and median() functions to calculate the central tendency of your data. Comparing the two is useful when a dataset may contain outliers, since the median is far less sensitive to them.

Standard Deviation and Variance: With std() and var(), you can assess the spread of your data, giving you insights into its variability.

Correlation and Covariance: The corr() and cov() functions measure how strongly variables are related and how they vary together, which is useful for regression analysis and building predictive models.
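To see why outliers matter for the choice between mean and median, here is a short illustration. It uses Python's standard statistics module as a stand-in, not MatDeck's own functions, and the readings are invented.

```python
from statistics import mean, median, pstdev

# Hypothetical sensor readings; the last value is an outlier.
readings = [12.0, 13.0, 12.5, 13.5, 12.0, 95.0]
typical = readings[:-1]

# The mean is dragged toward the outlier; the median barely moves.
print("mean:", mean(typical), "->", mean(readings))
print("median:", median(typical), "->", median(readings))
# The spread (population standard deviation) also jumps.
print("std:", pstdev(typical), "->", pstdev(readings))
```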

Matrix Operations and Linear Algebra

Analyzing large datasets usually involves many matrix operations. MatDeck's linear algebra MD functions let you manipulate matrices directly, apply matrix decompositions, and solve systems of equations.

Matrix Multiplication and Inversion: Functions such as matmul() and inv() enable you to perform more complex matrix operations, which are often required in data science applications like dimensionality reduction or neural network computations.

Eigenvalues and Eigenvectors: Compute eigenvalues and eigenvectors using the eig() function. These underpin many techniques, including PCA for feature extraction and data compression.

Data Transformation and Cleaning

Data transformation and cleaning is an important step in the data analysis pipeline. MatDeck contains MD functions that make this process much easier and more efficient.

Resampling and Interpolation: The upsample() and downsample() functions resample data to a different rate, which is especially useful when working with time series. The interp() function fills in missing values by interpolation.

Filtering: The filter() function enables you to smooth out noisy data or extract useful features, which is a critical step before performing further analysis or feeding data into machine learning models.

Optimization and Modeling

For predictive modeling, optimization is the key. MatDeck offers several MD functions that make optimization tasks easier, enabling you to build more accurate models.

Curve Fitting and Regression: The fit() function fits curves to data, whether you use linear regression, polynomial fitting, or more complex techniques, and helps you find the model that best matches your data.

Optimization Functions: MatDeck's optimization suite, including functions like minimize() and optimize(), lets you find optimal values for parameters in your model, whether you're tuning hyperparameters for a machine learning algorithm or optimizing a cost function in an industrial setting.

Real-World Applications of MD Functions in Data Analysis

Let's explore a few scenarios where MatDeck Math Functions can significantly enhance data analysis:

Financial Data Analysis

When analyzing financial data, you can apply statistical MD functions such as mean(), std(), and cov() to analyze the trend, volatility, and correlations between assets. Optimization functions also help in portfolio optimization, where you try to minimize risk while maximizing returns.

Predictive Modeling for Healthcare

In healthcare, linear regression, PCA, and minimize() can be combined to build predictive models from patient health data.

Time-Series Forecasting

For time series data, you can use upsample() and downsample() to resample to different time intervals, while the filter() smoothing function removes noise. Statistical functions can also help find trends and seasonality in the data.

Conclusion

The MatDeck Math Functions Listing is a comprehensive set of tools that makes data analysis faster, more efficient, and more accessible. Leverage MD functions for statistical analysis, matrix operations, data cleaning, and optimization to work through complex datasets and derive actionable insights. Whether in finance, healthcare, or machine learning, MatDeck has the power to simplify and accelerate your data analysis workflow.

With its user-friendly interface and advanced mathematical capabilities, MatDeck is truly an invaluable tool for any individual seeking to unlock the full potential of their data analysis projects.

0 notes