#RealityKit

Now I want the Vision Pro…

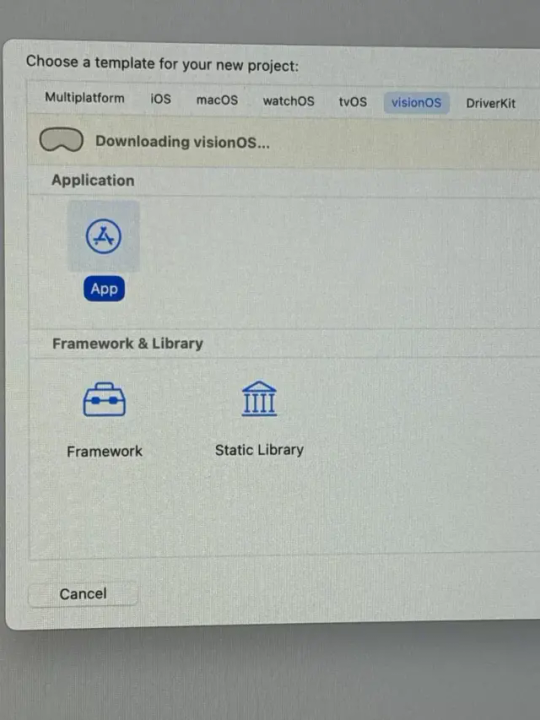

Started a little project for fun (hopefully I'll publish a simple spatial app) and tried out the Simulator. Ahh, who’s ready to grab one of these Apple Vision Pros from the USA for me? Thank you!

Trying the browser out at tfp.la

Downloading the visionOS simulator for Xcode

Using Xcode with a plain new visionOS project

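What’s new in visionOS 26 - WWDC25

Learn about the exciting new features in visionOS 26.

We’ll cover the enhanced volumetric APIs and show how to combine the capabilities of SwiftUI, RealityKit, and ARKit.

Find out how to build engaging apps and games with faster hand tracking and input from spatial accessories.

Get a first look at the latest updates to SharePlay, Compositor Services, immersive media, the spatial web, Enterprise APIs, and more.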

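“Immersive” is a word defined as “immersion” or “giving a sense of immersion.”

A spatial computing experience, however, goes further: it enables a platform where 3D objects and special effects leap out of the screen, as in a 3D movie theater, and come within a few centimeters of your own body.

It feels close to watching a film in a 3D theater wearing the 3D glasses that were popular in the 2010s (in this case, the 3D glasses are the Apple Vision Pro).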

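For reference:

An immersive experience is one in which the user feels immersed in a space that spans the physical and digital worlds.

This can involve sight, hearing, touch, smell, and even taste, and can have a strong emotional impact on the user.

Today, immersive experiences are spreading, led by the entertainment industry.

For example, theme parks and attractions that use VR (virtual reality) and AR (augmented reality), as well as immersive theater, are popular.

3D movies can be considered one form of immersive experience. They provide visual depth and strengthen the audience’s sense of stepping into the world of the film.

As a result, they deliver a stronger sense of immersion than conventional 2D films.

Beyond entertainment, immersive experiences are used in a wide range of fields, including education, healthcare, map apps, driver assistance in cars, and traffic signage.

Like third-party smartphone cases, a helmet that integrates the Apple Vision Pro could also be a good design fit, spreading the headset’s weight across the whole head.

From a safety standpoint, it could also make people less reluctant to wear a helmet in everyday life.

As technology evolves, ever more realistic and interactive experiences will become possible, and demand for them will continue to grow.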

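Recommended sites:

Creating a more immersive multimedia viewing experience with custom environments - Apple Vision Pro

Optimizing 3D assets for spatial computing - Apple Vision Pro

Optimizing for the spatial web - Apple Vision Pro

Designing interactive experiences that leap off the screen in visionOS - Apple Vision Pro

Building immersive 3D internet experiences with WebXR - Apple Vision Pro

Large language models for real-world use: Apple Intelligence 2024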

Apple Vision Pro 2024

Apple WWDC 2025: iOS 19, macOS 16, and a Bold Leap Into AI

Apple’s highly anticipated Worldwide Developers Conference (WWDC) 2025 kicked off with a wave of innovation, as the tech giant unveiled iOS 19, macOS 16, watchOS 12, and a lineup of software upgrades that signal a bold new era driven by artificial intelligence and developer empowerment.

🔍 A Deeper Dive Into iOS 19: Intelligence Meets Personalization

Apple's iOS 19 brings an AI-first philosophy to iPhones, promising smarter, more personalized user experiences.

Key Highlights:

Smart Widgets now dynamically adjust based on user behavior, location, and time of day.

A new AI Writing Assistant built into Messages, Notes, and Mail can auto-suggest replies, summarize long texts, and even draft professional emails.

Vision Intelligence, powered by on-device neural engines, can now analyze images in real time to provide suggestions, context, and even live translations.

iOS 19 Journal introduces automatic memory logs based on activity, photos, and calendar events—entirely private and stored on-device.

These upgrades reflect Apple’s answer to the evolving expectations of mobile app users. For businesses working with top mobile app developers across the USA, this shift creates new demand for cutting-edge apps that adapt intelligently.

💻 macOS 16 "Orion": Pro-Grade AI for Everyday Use

The new macOS 16, dubbed "Orion", builds on Apple's tradition of smooth integration and elegant UI—but this time with a twist: deep AI utility for productivity and creativity.

What’s New:

Xcode 16 comes with AI-powered code completion and real-time collaboration tools for developers.

Focus Studio lets users train their Macs to prioritize specific types of content or workflows using AI routines.

Native apps like Mail, Safari, and Preview are now supercharged with Apple Intelligence (AI) that summarizes emails, highlights key data, and offers quick action suggestions.

These capabilities will be especially beneficial for companies investing in custom software development services, as AI continues to reframe how professionals interact with digital environments.

⌚ watchOS 12: Smarter Health Tracking and Alerts

Apple Watch users now get access to Predictive Wellness, a new feature that combines biometric trends with lifestyle inputs to notify users about potential health anomalies before symptoms appear.

Enhanced sleep tracking and stress-level monitoring, with automatic journaling.

Integration with Apple Health AI to provide daily health summaries and goal recommendations.

With the growing emphasis on healthtech, developers offering Flutter app development services in the USA will find opportunities to build cross-platform health applications compatible with the Apple ecosystem.

🛠️ Developer-Focused Innovations

Apple isn’t just about the user-facing polish—it also dropped powerful tools for developers:

Swift 6.0 introduces built-in support for AI model inference and edge computing.

RealityKit 3 makes it easier to build immersive AR/VR experiences, especially for Vision Pro devices.

New APIs allow for cross-platform experiences between iPhone, iPad, Mac, and Vision Pro with minimal overhead.

These toolsets open up new playing fields for digital agencies, startups, and enterprises looking to collaborate with the best software developers in the USA on smarter, more scalable app solutions.

🌐 AI Privacy: A Core Principle

While AI was clearly the theme of the day, Apple was quick to emphasize its commitment to privacy. Most AI computations run on-device, with no cloud dependencies, and Private Relay AI ensures that even intelligent suggestions remain encrypted and anonymized.

Apple continues to stand firm on its privacy-first values, carving a different path from competitors who rely heavily on cloud processing.

🎯 What This Means for Users and Developers

With WWDC 2025, Apple has officially stepped into the AI battlefield—joining Google, Microsoft, and OpenAI in a race to define the intelligent interface of the future. But true to its brand, Apple brings elegance, privacy, and seamless integration to the forefront.

From developers eager to build smarter apps, to users looking for intuitive tools that "just work"—this year’s updates aim to transform how we interact with our devices, one intelligent suggestion at a time.

#WWDC2025 #iOS19 #macOS16 #AppleAI #MobileAppDevelopment #CustomSoftwareDevelopment #AIPrivacy #BestSoftwareDevelopers #TechTrendsUSA #AppleVisionPro

The iOS 26 Impact: Redefining Smart Mobility in the AI Era

In 2025, the smartphone is no longer just a communication device—it’s a personal assistant, medical tool, translator, productivity hub, and entertainment center. With the anticipated launch of iOS 26 at WWDC 2025, Apple is poised to redefine what mobility and intelligence mean in our daily lives.

From seamless AirPods-based live language translation to AI-generated emoji mashups and personalized app experiences, iOS 26 promises a leap forward. This convergence of usability and AI-powered convenience is already featured in the latest news on artificial intelligence and is a recurring theme in trending technology news globally.

Spatial Computing and the Vision of Tomorrow

Apple’s broader investment in spatial computing—especially with the Vision Pro ecosystem—could influence iOS 26 design. iOS may evolve not just to fit flat screens, but also to complement 3D interfaces, wearable AR, and spatial interaction layers. Analysts speculate that features like multimodal navigation, eye tracking compatibility, and gesture control could quietly be built into iOS 26 or its developer frameworks.

This evolution ties directly into future technology news and the future of AI news, where natural interfaces are replacing traditional input methods. Startups focused on spatial UX, virtual training, and immersive learning platforms stand to benefit greatly, an insight echoed across the latest startup news and at business innovation conferences worldwide.

Economic Ripple Effect: What iOS 26 Means for the Global Tech Economy

Whenever Apple innovates at scale, it sends ripples through the global economy. From chipmakers and accessory manufacturers to software vendors and app startups, the iOS ecosystem sustains millions of jobs and billions in revenue.

Industry watchers and economists are already highlighting iOS 26 in today’s top business news, citing:

Expected surge in app development post-release

Potential market share shift in premium devices

Influence on consumer buying cycles

Increased enterprise adoption of iOS-based workflows

Investors tracking current business news are factoring WWDC outcomes into Q3 and Q4 tech stock forecasts. The anticipated release is a hot topic in the latest international business news, especially for stakeholders in the U.S., Europe, India, and Southeast Asia.

Inclusive AI: Apple’s Approach to Ethical Intelligence

Unlike some competitors, Apple is positioning its AI capabilities not just as powerful, but as ethical, private, and inclusive. iOS 26 is expected to deepen this commitment with features that prioritize:

On-device AI processing for maximum privacy

Accessibility improvements for users with disabilities

Context-aware responses aligned with user behavior and mental health

Such values-based innovation is gaining momentum in current international business news and policy circles. With increasing scrutiny of AI usage, Apple’s privacy-first AI is being viewed as a model for responsible tech development, a narrative now central to today’s business headlines.

The Developer Perspective: WWDC 2025 as a Launchpad for Innovation

For the global developer community, WWDC has always been more than just a keynote. It’s a launchpad for the next generation of applications, platforms, and even startups. With iOS 26’s rumored features, developers can expect:

AI-based SDKs that support contextual personalization

Tools to integrate with Apple’s expanding health, fitness, and finance APIs

Enhanced RealityKit features for spatial and AR design

This empowers a wave of innovation in sectors like edtech, medtech, travel, fintech, and gaming, making it a goldmine of opportunity that features prominently in today’s business news and in future tech investment roundups.

WWDC 2025: A Strategic Event with Global Implications

With iOS 26 expected to headline, WWDC 2025 isn’t just an Apple event—it’s a strategic milestone for the global technology industry. It’s where AI meets utility, where privacy meets personalization, and where the future of mobile computing is defined.

Key Takeaways:

Apple’s AI advancements will drive both consumer trends and enterprise strategies.

iOS 26 may influence how AI regulations are shaped, especially in the EU and U.S.

Investors, developers, and businesses must prepare for rapid shifts in user expectations and platform requirements.

Startups aligned with Apple’s AI, health, and spatial computing ecosystem are likely to attract funding and attention, themes central to today’s top international business news.

Final Thought: A New Chapter in Digital Intelligence

As we approach WWDC 2025, the excitement is more than justified. The expected launch of iOS 26 could mark a transformative chapter in how we experience the digital world. It’s not just about new features—it’s about a smarter, more human, and more ethical way of living with technology.

#latest startup news#future technology news#current business news#top business news today#today's business news headlines in english#top international business news today#current international business news#business related news today#latest international business news#latest news in business world#future of AI news#latest news on artificial intelligence#trending technology news

Entering the Third Dimension: What Developers Need to Know About Apple's visionOS and Spatial Apps

The launch of Apple Vision Pro, powered by visionOS, marks a monumental shift in how we interact with technology. It's not just a new device; it's the dawn of spatial computing, a paradigm where digital content seamlessly blends with our physical world. For developers, this isn't merely an upgrade to an existing platform; it's an entirely new canvas, demanding a fresh perspective on design, interaction, and user experience.

If you're a developer eyeing this revolutionary platform, here's a deep dive into what you need to know about visionOS and building spatial apps.

The Foundation of Spatial Computing: visionOS

At its core, visionOS is built upon the familiar foundations of macOS, iOS, and iPadOS, yet it introduces unique concepts tailored for a three-dimensional environment. This means developers can leverage their existing Swift and SwiftUI knowledge, but must also embrace new frameworks and design principles to truly harness the power of spatial computing.

Key Concepts in visionOS:

Windows: These are the familiar 2D interfaces, similar to those on a Mac or iPad, but with the added dimension of depth. They can contain traditional SwiftUI views and controls and can be freely positioned and resized by the user in their physical space.

Volumes: Going a step beyond windows, volumes are 3D SwiftUI scenes that can showcase three-dimensional content. Think of them as interactive dioramas that users can view from any angle, either within the shared space alongside other apps or in a dedicated "Full Space."

Spaces (Shared and Full):

Shared Space: The default environment where multiple visionOS apps coexist side-by-side, much like apps on a desktop. Users can arrange windows and volumes as they please.

Full Space: For truly immersive experiences, an app can transition into a Full Space, where it becomes the sole focus. Here, developers can create unbounded 3D content, virtual environments (portals), or even fully immerse the user in a different world.

Immersion Levels: visionOS offers a spectrum of immersion, allowing developers to choose the right level for their content. From windowed, UI-centric experiences to fully immersive virtual worlds, the platform encourages thoughtful design that prioritizes user comfort and engagement.
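To make these concepts concrete, here is a minimal sketch of how they map onto SwiftUI's scene types on visionOS: a `WindowGroup` for a window, a `WindowGroup` with `.windowStyle(.volumetric)` for a volume, and an `ImmersiveSpace` for a Full Space, whose `.immersionStyle(selection:in:)` modifier chooses between mixed, progressive, and full immersion. The app, view, and scene ID names (`SpatialSketchApp`, `LauncherView`, `"Globe"`, `"Immersive"`) are hypothetical, and the code assumes a visionOS target in Xcode.

```swift
import SwiftUI
import RealityKit

// Hypothetical app showing one scene of each kind: window, volume, and Full Space.
@main
struct SpatialSketchApp: App {
    // Immersion style for the Full Space; the selection binding lets it change at runtime.
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        // Window: a familiar 2D SwiftUI interface the user can move and resize.
        WindowGroup {
            LauncherView()
        }

        // Volume: bounded 3D content viewable from any angle in the Shared Space.
        WindowGroup(id: "Globe") {
            RealityView { content in
                let globe = ModelEntity(
                    mesh: .generateSphere(radius: 0.15),
                    materials: [SimpleMaterial(color: .blue, isMetallic: false)]
                )
                content.add(globe)
            }
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.5, height: 0.5, depth: 0.5, in: .meters)

        // Full Space: unbounded content; other apps' windows are hidden while it is open.
        ImmersiveSpace(id: "Immersive") {
            RealityView { content in
                let platform = ModelEntity(
                    mesh: .generateBox(width: 1, height: 0.02, depth: 1),
                    materials: [SimpleMaterial(color: .gray, isMetallic: false)]
                )
                platform.position = [0, 0, -1.5]  // 1.5 m in front of the starting position, at floor level
                content.add(platform)
            }
        }
        .immersionStyle(selection: $immersionStyle, in: .mixed, .progressive, .full)
    }
}

struct LauncherView: View {
    @Environment(\.openWindow) private var openWindow
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack(spacing: 20) {
            Button("Open Volume") { openWindow(id: "Globe") }
            Button("Enter Full Space") {
                Task { await openImmersiveSpace(id: "Immersive") }
            }
        }
        .padding()
    }
}
```

Opening a Full Space is asynchronous because the system can decline it or the user can cancel; a production app would inspect the result of `openImmersiveSpace` and offer a way to dismiss the space again.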

The Developer's Toolkit

Apple has provided a robust set of tools to help developers navigate this new frontier:

Xcode: As with all Apple platforms, Xcode is your integrated development environment (IDE). It includes the visionOS SDK, simulators, debugging tools, and project management capabilities.

SwiftUI: This modern, declarative UI framework is central to visionOS development. It lets you build app interfaces that adapt gracefully to different immersion levels and interaction styles.

RealityKit: The powerhouse for 3D content. RealityKit enables you to create, manage, and animate 3D objects and scenes within your spatial apps. It handles rendering, physics, and spatial audio, making it easier to bring your virtual worlds to life.

Reality Composer Pro: This companion tool, integrated with Xcode, is essential for designing and preparing 3D content for your visionOS apps. You can import models, create animations, set up materials, and preview your scenes directly within Reality Composer Pro.

Unity: For developers with existing Unity projects or those who prefer its powerful 3D authoring tools, Unity provides robust integration with visionOS, allowing access to features like passthrough and Dynamically Foveated Rendering.

ARKit: While RealityKit handles the rendering of 3D content, ARKit is crucial for understanding the real world. It enables capabilities like scene reconstruction (meshing the surrounding environment), plane detection (finding surfaces such as floors and tables), and hand tracking, allowing your virtual content to interact realistically with the user's environment.
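As a rough illustration of the RealityKit/ARKit split described above, the sketch below uses ARKit's `HandTrackingProvider` to read the right index fingertip and RealityKit to draw a small sphere there. It assumes the view is shown inside an open `ImmersiveSpace` (ARKit data on visionOS is only delivered while one is open) and that the app's Info.plist includes `NSHandsTrackingUsageDescription`; `FingertipTrackerView` is a hypothetical name.

```swift
import SwiftUI
import RealityKit
import ARKit

// Hypothetical view: a small sphere follows the user's right index fingertip.
// ARKit supplies the hand data; RealityKit renders the marker.
struct FingertipTrackerView: View {
    @State private var session = ARKitSession()
    @State private var handTracking = HandTrackingProvider()
    @State private var marker = ModelEntity(
        mesh: .generateSphere(radius: 0.01),
        materials: [SimpleMaterial(color: .yellow, isMetallic: false)]
    )

    var body: some View {
        RealityView { content in
            content.add(marker)
        }
        .task {
            // Hand tracking is unavailable on some runtimes (for example, the Simulator).
            guard HandTrackingProvider.isSupported else { return }
            do {
                try await session.run([handTracking])
            } catch {
                print("Hand tracking failed to start: \(error)")
                return
            }
            for await update in handTracking.anchorUpdates {
                let hand = update.anchor
                guard hand.chirality == .right,
                      hand.isTracked,
                      let skeleton = hand.handSkeleton else { continue }
                let tip = skeleton.joint(.indexFingerTip)
                // Joint pose in world space = hand anchor pose × joint pose relative to the anchor.
                let worldFromTip = hand.originFromAnchorTransform * tip.anchorFromJointTransform
                marker.setTransformMatrix(worldFromTip, relativeTo: nil)
            }
        }
    }
}
```

Keeping the session and provider in `@State` ties their lifetime to the view, so tracking stops when the immersive view goes away.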

Designing for a Spatial World: Human Interface Guidelines

Building for visionOS goes beyond just technical implementation; it demands a fundamental shift in design thinking. The Human Interface Guidelines (HIG) for visionOS emphasize user comfort, intuitive interaction, and seamless immersion.

Key Design Principles:

Eye and Hand Interactions: The primary input methods are eye gaze (for targeting and selection) and indirect hand gestures (for activation). Direct touch is also supported for close-range interactions. Developers must design controls that are easily targetable with the eyes and comfortably activatable with subtle hand movements.

Comfort and Ergonomics: Content should be displayed within the user's natural field of view, minimizing head and body movement. Avoid rapid, jarring motion or content that forces the user to constantly shift their focus. Prioritize experiences that allow users to remain relaxed and at rest.

Spatial Audio: Leveraging the Vision Pro's advanced spatial audio capabilities is crucial for creating truly immersive experiences. Sounds should be positioned realistically in 3D space, enhancing the feeling of presence and providing important cues.

Dynamic Scaling: Windows and UI elements dynamically scale as users move them closer or farther away, ensuring legibility and usability at various distances.

Accessibility: As with all Apple platforms, visionOS emphasizes accessibility. Developers should support features like Dynamic Type, provide alternatives to gestures, and ensure controls are adequately sized and spaced.
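Several of these principles can be applied to a single RealityKit entity. The sketch below makes a sphere targetable by eyes and pinch (`InputTargetComponent` plus a collision shape), highlights it on gaze (`HoverEffectComponent`), plays sound from its position (`SpatialAudioComponent`), and exposes a VoiceOver label (`AccessibilityComponent`). It assumes a recent visionOS SDK that provides the async `AudioFileResource(named:)` initializer; `InteractiveOrbView` and `Chime.wav` are hypothetical.

```swift
import SwiftUI
import RealityKit

// Hypothetical view applying several design principles to one RealityKit entity.
struct InteractiveOrbView: View {
    @State private var chime: AudioFileResource?

    var body: some View {
        RealityView { content in
            let orb = ModelEntity(
                mesh: .generateSphere(radius: 0.08),
                materials: [SimpleMaterial(color: .systemTeal, isMetallic: false)]
            )

            // Eye and hand input: entities need an input target and a collision shape.
            orb.generateCollisionShapes(recursive: false)
            orb.components.set(InputTargetComponent())
            // System-rendered highlight while the user looks at the orb.
            orb.components.set(HoverEffectComponent())

            // Spatial audio: sounds played on this entity come from its position.
            orb.components.set(SpatialAudioComponent())

            // Accessibility: expose the orb to VoiceOver with a descriptive label.
            var accessibility = AccessibilityComponent()
            accessibility.isAccessibilityElement = true
            accessibility.label = "Chime orb"
            orb.components.set(accessibility)

            content.add(orb)

            // Assumed async loader; "Chime.wav" is a hypothetical bundled file.
            chime = try? await AudioFileResource(named: "Chime.wav")
        }
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    // Look + pinch on the orb plays the chime from the orb's location.
                    if let chime {
                        value.entity.playAudio(chime)
                    }
                }
        )
    }
}
```

Standard SwiftUI controls such as `Button` already get gaze highlighting and pinch activation from the system; components like these are only needed for custom 3D entities.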

Challenges and Opportunities for Developers

While the potential of spatial computing is immense, developers will face unique challenges:

New Design Paradigms: Shifting from 2D flat screens to a 3D spatial canvas requires a new way of thinking about UI, UX, and information architecture.

Performance Optimization: Maintaining a smooth, high-fidelity experience in a 3D environment is computationally intensive. Developers must optimize their apps for performance, paying close attention to frame rates (targeting 90 FPS), power consumption, and efficient rendering.

Content Creation: Developing compelling 3D assets and environments can be complex and time-consuming. Proficiency with tools like Reality Composer Pro, Unity, or other 3D modeling software will be crucial.

User Comfort and Cybersickness: Ensuring a comfortable experience and mitigating cybersickness (motion sickness in virtual environments) is paramount. This involves careful consideration of motion, camera movement, and visual stimulation.

Limited API Options (Early Stages): While the SDK is robust, it's a new platform, and certain advanced functionalities might still be evolving or have limited API access compared to mature platforms.

Smaller Audience (Initial Phase): The Vision Pro is a premium device, meaning the initial user base will be smaller than for iPhones or iPads. Developers must weigh this against the potential for innovation and early market leadership.
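The 90 FPS target translates into a hard per-frame time budget: 1000 ms / 90 ≈ 11.1 ms for all app logic, simulation, and rendering work. The pure-Swift sketch below computes that budget and counts frames that exceeded it. The sample frame times are made up for illustration; in practice you would collect real timings with Instruments.

```swift
import Foundation

// Per-frame time budget, in milliseconds, implied by a target frame rate.
func frameBudgetMilliseconds(targetFPS: Double) -> Double {
    1000.0 / targetFPS
}

// Number of frames whose duration exceeded the budget for the given frame rate.
func framesOverBudget(_ frameTimesMs: [Double], targetFPS: Double) -> Int {
    let budget = frameBudgetMilliseconds(targetFPS: targetFPS)
    return frameTimesMs.filter { $0 > budget }.count
}

let budget90 = frameBudgetMilliseconds(targetFPS: 90)
print(String(format: "Budget at 90 FPS: %.2f ms", budget90))  // Budget at 90 FPS: 11.11 ms

// Made-up sample timings, for illustration only.
let sampleFrameTimes = [9.8, 10.5, 12.3, 11.0, 14.2]
print("Frames over budget:", framesOverBudget(sampleFrameTimes, targetFPS: 90))  // Frames over budget: 2
```

The same budget logic explains why heavy per-frame work (large meshes, many dynamic lights, complex physics) needs profiling early rather than at the end of a project.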

However, these challenges come with immense opportunities:

Pioneering New Experiences: Developers have the chance to define what spatial computing truly means, creating entirely new categories of apps and experiences that are impossible on traditional devices.

Enhanced Engagement: Spatial apps offer a deeper level of immersion and engagement, allowing users to interact with content in a more natural and intuitive way.

Revolutionizing Industries: From education and healthcare to entertainment and productivity, spatial computing has the potential to transform numerous industries. Imagine surgical training with realistic 3D models, collaborative design sessions in shared virtual spaces, or truly immersive storytelling.

Early Adopter Advantage: Developers who invest in visionOS now can establish themselves as leaders in this emerging field, gaining valuable experience and insights as the platform evolves.

Moving Forward: Building the Future

Apple's visionOS and the Apple Vision Pro represent a significant leap towards a future where digital and physical realities seamlessly merge. For developers, this is an invitation to innovate, to break free from the constraints of traditional screens, and to create experiences that truly transport and empower users.

The journey into spatial computing will require a blend of technical expertise, creative vision, and a deep understanding of human interaction in three dimensions. By embracing the unique capabilities of visionOS and adhering to thoughtful design principles, developers can play a pivotal role in shaping the next generation of computing. The future is spatial, and the time for developers to build it is now.

#app development company in delhi#mobile app development company in delhi#app developer in delhi#app development agency in delhi#app development companies in delhi

What are the key considerations and steps involved in Vision Pro app development?

Developing apps for Apple Vision Pro requires attention to unique hardware and user experience features:

Spatial Computing: Leverage spatial input like eye-tracking, hand gestures, and voice commands.

Platform: Use visionOS with Swift and RealityKit for native development.

Design: Follow Apple’s Human Interface Guidelines for immersive UI/UX.

Testing: Simulate environments using Xcode and test with real devices if available.

Performance: Optimize 3D assets and interactions for smooth rendering.

AR Integration: Use ARKit for augmented reality elements.
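For the AR integration point, here is a minimal sketch of ARKit plane detection on visionOS. It assumes the app has an open `ImmersiveSpace` and declares `NSWorldSensingUsageDescription` in its Info.plist; `PlaneLogger` is a hypothetical helper name.

```swift
import ARKit

// Hypothetical helper that logs horizontal surfaces (floors, tables) as ARKit finds them.
// Keep a strong reference to it: the session's data providers stop when the session is deallocated.
@MainActor
final class PlaneLogger {
    private let session = ARKitSession()
    private let planes = PlaneDetectionProvider(alignments: [.horizontal])

    func run() async {
        guard PlaneDetectionProvider.isSupported else {
            print("Plane detection is not supported on this runtime.")
            return
        }
        do {
            try await session.run([planes])
        } catch {
            print("Could not start plane detection: \(error)")
            return
        }
        for await update in planes.anchorUpdates {
            let plane = update.anchor
            switch update.event {
            case .added, .updated:
                print("Plane \(plane.id): \(plane.classification)")
            case .removed:
                print("Plane \(plane.id) removed")
            }
        }
    }
}
```

Hold the logger somewhere long-lived, for example `@State private var planeLogger = PlaneLogger()` in the immersive view, and start it with `.task { await planeLogger.run() }`.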

Conclusion: Vision Pro app development demands a focus on immersive, intuitive design backed by Apple's spatial computing tools.

How Much Does It Cost to Hire a VisionOS App Development Company?

Apple’s VisionOS has made waves by introducing an entirely new way to experience digital content, blending apps into the physical world with spatial computing. From immersive productivity tools to 3D shopping experiences and next-gen healthcare applications, VisionOS opens endless possibilities.

But here’s the catch: groundbreaking tech comes with unfamiliar territory, especially when it comes to budgeting.

If you’re a startup founder, CTO, or tech lead planning to build for Apple Vision Pro, you're likely wondering, “How much will it actually cost to build a VisionOS app?”

This post gives you a complete breakdown, from core cost drivers to development timelines, team structures, and what to expect when working with a VisionOS app development company.

Let’s get started.

What Is VisionOS and Why Is It Gaining Attention?

VisionOS is Apple’s operating system designed specifically for spatial computing on the Apple Vision Pro. Unlike iOS or macOS, VisionOS blends digital content into the real world through gesture tracking, eye movements, and voice commands.

This isn’t just another app platform. It’s a new computing paradigm.

Startups and tech innovators are already exploring VisionOS for:

Virtual collaboration and productivity tools

3D product configurators and immersive shopping

Medical simulations and patient care assistance

Immersive learning and training platforms

Creative AR/VR storytelling experiences

If your product has a visual, interactive, or spatial element, VisionOS might be your ideal frontier, and a VisionOS app development company can help you take that leap with the right strategy and tech stack.

What Types of Apps Can You Build with VisionOS?

The real question is, what can’t you build?

With spatial input and full 3D environments, VisionOS apps can transform how people:

Work: Visual dashboards, spatial whiteboards, and virtual meeting rooms

Shop: Virtual try-ons, 3D product walkthroughs

Learn: Medical training, engineering simulations, language immersion

Relax: Spatial games, ambient environments, mixed-reality experiences

Create: Design tools for architects, artists, and developers in 3D

Whether you're building a consumer-facing app or an enterprise-grade solution, the key is to pair your idea with the right UX, motion design, and system performance, all things a VisionOS app development company specializes in.

How Is VisionOS Different from iOS or macOS Development?

If your team has built for iPhone or iPad before, that’s a great head start, but VisionOS isn’t a simple UI reskin. It introduces a completely new interaction model.

Here’s how it’s different:

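No touchscreen or mouse by default: Users interact primarily with their eyes, hands, and voice rather than taps or clicks. Bluetooth keyboards, trackpads, and game controllers are supported, but apps can’t assume users have them.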

Spatial awareness: Apps must respond to depth, environment lighting, and movement.

Volumetric interfaces: Forget 2D screens. You’re designing for 3D space.

High-performance requirements: Lag or jank can disrupt immersion; performance matters more than ever.

That means building a VisionOS app requires expertise in ARKit, RealityKit, SwiftUI, Metal, and new APIs released specifically for the Vision Pro.

If your internal team isn’t fluent in these tools, hiring a VisionOS app development company is your fastest path to production.

What Factors Influence the Cost of VisionOS App Development?

Just like any software project, the cost of building a VisionOS app depends on several core variables, but with spatial computing, a few new and unique factors come into play.

Here’s what drives the cost:

App Complexity and Features

Are you building a basic 3D viewer or a fully interactive, real-time collaborative platform? The more complex your features, like hand-tracking interactions, voice commands, or dynamic environment rendering, the more time and resources it takes to build.

Custom UI and UX for Spatial Environments

VisionOS apps live in 3D space, which means every menu, button, or interaction must feel intuitive and “real.” Designing spatial UIs (volumetric interfaces) requires motion designers, 3D artists, and developers working closely; this alone can add 20–30% to your typical app design costs.
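As a worked example of that 20–30% figure, assume a hypothetical baseline design budget of $40,000 for a comparable non-spatial app; the spatial design budget would then land between $48,000 and $52,000. The same arithmetic in Swift, using whole dollars to avoid floating-point rounding (the function name and baseline are illustrative, not a quote):

```swift
// Applies the 20–30% spatial-design uplift to a baseline design cost, in whole dollars.
func spatialDesignCost(baseDesignCost: Int) -> ClosedRange<Int> {
    let low = baseDesignCost * 120 / 100   // +20%
    let high = baseDesignCost * 130 / 100  // +30%
    return low...high
}

// Hypothetical $40,000 baseline.
let estimate = spatialDesignCost(baseDesignCost: 40_000)
print("Spatial design budget: $\(estimate.lowerBound) to $\(estimate.upperBound)")
// Spatial design budget: $48000 to $52000
```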

3D Assets and Environments

Need custom models, product visualizations, or explorable scenes? If you're not using prebuilt assets, you'll need a team to create high-quality, optimized 3D content, a big factor in both budget and performance.

Device Testing and Optimization

Vision Pro apps must run flawlessly. That requires hands-on testing, performance tuning for eye tracking and gesture recognition, and real-world usage simulations. This testing phase is longer and more involved than standard mobile apps.

The VisionOS App Development Company You Hire

Agencies with deep Apple ecosystem experience and VisionOS expertise will charge more, but they also reduce your risk, build faster, and deliver higher-quality code. You get what you pay for.

How Much Does It Cost to Develop a VisionOS App in 2025?

So, what’s the actual investment required to bring a VisionOS app to life?

While final pricing always depends on your app’s scope, features, and partner, here’s a general idea based on current industry rates:

If you're building a simple 3D utility or viewer app with basic interactions and minimal logic, you can expect to spend a total of around $25,000 to $50,000. These apps typically showcase static content or offer limited interactivity and are great for quick MVPs or product demos.

For a moderately complex app that includes customized UI, business logic, and real-time interactions, such as a training simulation or a product walkthrough, the cost usually ranges from $50,000 to $120,000. These apps require more advanced development and user experience design tailored to spatial environments.

A high-performance VisionOS app that provides full 3D immersion, gesture control, advanced animations, and multi-layered environments could cost anywhere between $120,000 and $250,000 or more. These experiences often demand custom 3D asset development, intensive testing, and significant engineering time.

At the top end, enterprise-grade or multi-user collaborative VisionOS platforms, such as virtual offices, healthcare training tools, or remote diagnostic apps, may cost $200,000 to $400,000+, especially when built to scale, integrate with external systems, or support multiple device types.
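To compare these ballpark ranges against a fixed budget, they can be encoded as data. This is only a sketch: the figures are the ones quoted above, `VisionOSAppTier` and `tiersWithinBudget` are hypothetical names, and the open-ended "or more" upper bounds are stored as the quoted numbers. As in the text, the high-performance and enterprise ranges overlap.

```swift
// Ballpark cost tiers (USD) as quoted above; upper bounds marked "or more" are stored as quoted.
enum VisionOSAppTier: String, CaseIterable {
    case simpleViewer = "Simple 3D utility/viewer"
    case moderate = "Moderately complex"
    case highPerformance = "High-performance immersive"
    case enterprise = "Enterprise / multi-user"

    var quotedRange: ClosedRange<Int> {
        switch self {
        case .simpleViewer: return 25_000...50_000
        case .moderate: return 50_000...120_000
        case .highPerformance: return 120_000...250_000
        case .enterprise: return 200_000...400_000
        }
    }
}

// Tiers whose quoted starting price fits within the given budget.
func tiersWithinBudget(_ budget: Int) -> [VisionOSAppTier] {
    VisionOSAppTier.allCases.filter { $0.quotedRange.lowerBound <= budget }
}

print(tiersWithinBudget(60_000).map(\.rawValue))
// ["Simple 3D utility/viewer", "Moderately complex"]
```

Fitting a tier's starting price says nothing about scope creep; treat the result as a first filter before talking to a vendor.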

How Long Does It Usually Take to Develop a Fully Functional VisionOS App from Scratch?

The timeline to build a VisionOS app can vary significantly based on your project’s complexity, goals, and feature set. On average, most VisionOS development projects fall within a 3 to 9-month window, but that can stretch further depending on how immersive or technically demanding the app is.

If you’re building a simple prototype or MVP, something like a 3D catalog viewer or static content showcase, you might be looking at a development cycle of 8 to 12 weeks. These projects move quickly, especially if you’re using pre-built assets or focusing on proof of concept.

A mid-level VisionOS app with dynamic user interaction, custom UI design, and integrated features like voice commands or real-time data syncing will likely take 4 to 6 months. This includes discovery, 3D interface design, development, testing, and polishing the user experience.

For high-complexity or enterprise-grade apps, such as collaborative 3D environments, training simulations, or healthcare-focused applications, expect timelines closer to 7 to 12 months, especially if your app requires compliance with medical or data privacy regulations, or if it depends on building custom backend infrastructure.

It’s important to note that VisionOS is a new and evolving platform, so even small tasks, like performance tuning or testing across use cases, can take longer than on mature platforms like iOS. Working with an experienced VisionOS app development company can help streamline the process and avoid costly slowdowns.

What Skills Should a VisionOS App Development Company Have?

VisionOS development goes far beyond just writing Swift code. You're building for an entirely new kind of user experience, one that blends 3D space, real-world interaction, and high-performance computing.

That’s why partnering with a VisionOS app development company means more than hiring mobile developers. You need a team that blends design, motion, and engineering expertise to create immersive, intuitive, and performant spatial apps.

Here’s what the ideal team should bring to the table:

Expertise in Apple’s VisionOS Ecosystem

This includes deep knowledge of Swift, SwiftUI, RealityKit, ARKit, and Metal. These are the core frameworks used to create spatial environments, animate 3D content, and integrate with the Apple Vision Pro hardware.

3D and Motion Design Capabilities

Since you’re designing for space, your app’s interface won’t live on a screen; it will live in the air. You’ll need experts in 3D modeling, motion graphics, and spatial design who understand how to create fluid, natural interactions.

UX Design for Spatial Interfaces

Regular UI/UX design won’t cut it. Your team must understand spatial design principles, like depth layering, gesture mapping, and gaze interaction, to make the experience intuitive and user-friendly.

Cross-Functional Developers and System Architects

From data sync and cloud infrastructure to performance optimization and real-time feedback loops, the backend and frontend must work in harmony. Teams that understand how to build stable, scalable systems for VisionOS will save you massive time and headaches.

Quality Assurance with Spatial Testing

Testing VisionOS apps is a different beast. QA engineers need to simulate real-world use, device movement, environmental light conditions, and even user behavior to ensure smooth, crash-free performance.

Hiring a well-rounded VisionOS app development company ensures that all of these skills are integrated into your build, not pieced together by freelancers or siloed teams.

What’s Included in a VisionOS App Development Estimate?

If you’ve never built a spatial app before, it’s easy to underestimate what goes into the final quote. A good VisionOS app development company should give you a clear, itemized estimate, not just a number pulled from the air.

Here’s what a complete cost estimate typically includes:

Discovery & Strategy Workshops

This is the foundation. Your development partner will help you clarify your app’s key goals, define user flows, explore platform capabilities, and choose the right technical approach. It’s where vision meets feasibility.

Spatial UI/UX and 3D Experience Design

Designing for visionOS means creating volumetric layouts, custom motion flows, and immersive scenes, not just wireframes. This phase includes 3D modeling, animations, environment setup, and user interaction planning.

Frontend & Backend Development

Here’s where your app actually comes to life. Development covers:

Coding the spatial interfaces (using SwiftUI + RealityKit)

Backend logic (databases, servers, APIs)

Integrations with tools like Firebase, health data, and more

QA Testing & Optimization for Vision Pro

QA is crucial in immersive environments. Testing includes gesture recognition, performance under load, compatibility with various light environments, and usability across different use cases.

App Store Deployment & Support

visionOS apps go through Apple’s App Review process, which enforces strict guidelines. Your team should handle App Store submission, compliance, and any post-launch tweaks or patches.

Project Management & Documentation

Expect clear timelines, agile sprints, transparent updates, and well-documented code. Good development companies make sure your project stays on track and is easy to scale later.

In short, you’re not just paying for coding. You’re investing in a comprehensive process that includes everything needed to design, build, test, and launch a spatial app with minimal risk.

How to Choose the Right visionOS App Development Company

Building a spatial app for visionOS isn’t just about hiring developers. It’s about choosing a partner who understands the technology, your business goals, and how to merge the two into a seamless, immersive product.

But with so many agencies and freelancers out there, how do you find the right fit?

Here are the key things to look for when evaluating a visionOS app development company:

1. Proven Experience in the Apple Ecosystem

Check whether they’ve built iOS, iPadOS, or ARKit apps before; bonus points if they’ve already delivered visionOS prototypes. Companies that understand Apple’s ecosystem will navigate visionOS standards more efficiently and avoid rookie mistakes.

2. In-House 3D, UI/UX, and Motion Experts

visionOS apps rely heavily on interaction design, spatial UI, and 3D responsiveness. Make sure your vendor has dedicated designers and motion specialists, not just coders, who understand volumetric design and real-time rendering.

3. Clear Project Process and Communication

Can they walk you through how your app goes from idea to launch? Do they offer roadmaps, regular demos, and check-ins? A highly professional team will have a structured, transparent process to guide you through each stage without tech jargon.

4. Scalable Engagement Options

You may want to start with an MVP and then expand. The right partner will offer flexible engagement models, such as fixed-price, time-and-materials, or dedicated teams, so you can scale the engagement to your needs and budget.

5. Strong Post-Launch Support

Your visionOS app will need updates, improvements, and possibly new features as Apple updates the platform. Look for a company that offers long-term support, monitoring, and performance optimization after launch.

Choosing a qualified visionOS app development company is more than a hiring decision; it’s a strategic partnership. The right team won’t just deliver an app; they’ll help shape your product for success in this new spatial era.

Is visionOS Development Worth the Investment for Startups?

If you're leading a startup, every dollar you spend has to move the needle, and visionOS development isn’t cheap. So, is it the right move now?

Here’s the honest take: yes, but only if you play it strategically.

visionOS isn’t just another platform. It’s Apple’s first serious step into the spatial computing future, and as in the early days of the iPhone and the App Store, early adopters have a chance to lead.

That means less competition, more visibility, and massive potential to shape new user habits before the space gets crowded.

Here’s why startups should seriously consider it:

High-impact brand positioning: Building a visionOS app now shows innovation and future-readiness.

New user experiences: You’re not recycling mobile ideas; you’re creating immersive, memorable products.

Cross-industry potential: From healthcare to retail, training to real estate, visionOS fits multiple use cases.

Investor interest: Emerging tech and spatial computing are on the radar for VCs looking at the next frontier.

Long-term leverage: Even a simple MVP today positions you to scale as the hardware and ecosystem grow.

That said, you don’t have to build the next Photoshop for spatial computing right away.

Start small, test real use cases, and partner with a visionOS app development company that knows how to turn bold ideas into practical execution.

Want to be ready for what’s next? Now’s the time.

Build a Next-Gen App with a Trusted visionOS App Development Company

Whether you're exploring an MVP or planning a full-scale immersive product, our team at Kody Technolab is here to help you navigate visionOS the smart way, with strategy, scalability, and speed.


The Future of iOS Development: Skills Every Developer Should Learn

The landscape of iOS development is perpetually evolving, driven by technological advancements. As consumer demands keep changing, the need to hire iOS developers with a competent skill set is more important than ever.

Understanding these emerging skills is the key to building teams capable of taking advantage of opportunities and addressing challenges to deliver innovative and user-centric applications. In this article, let’s look at the essential skills that define the future of iOS development.

Charting the Path: Key Skills for Tomorrow's iOS Developers

Understanding of SwiftUI

SwiftUI is transforming the way iOS apps are designed and produced by providing a more uniform foundation for UI development across all Apple platforms. Developers who master SwiftUI are capable of constructing more adaptive, responsive, and user-friendly interfaces.
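To make "declarative" concrete, here is a small sketch of a SwiftUI view: the interface is described as a function of its state, and SwiftUI redraws it when that state changes. `FeatureToggleRow` is a hypothetical example, not taken from any particular app.

```swift
import SwiftUI

/// Describes *what* the row looks like for a given state, not *how* to update it.
struct FeatureToggleRow: View {
    let title: String
    @State private var isEnabled = false

    var body: some View {
        VStack(alignment: .leading) {
            // The binding ($isEnabled) lets the toggle write back into the view's state;
            // SwiftUI then recomputes `body`, so the caption below stays in sync.
            Toggle(title, isOn: $isEnabled)
            Text(isEnabled ? "Enabled" : "Disabled")
                .font(.caption)
                .foregroundStyle(.secondary)
        }
        .padding()
    }
}
```

There is no code that updates the caption by hand; the caption is derived from `isEnabled` every time `body` is evaluated.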

Swift programming

Swift proficiency is a non-negotiable skill. Because Swift is frequently updated and enhanced, developers need to stay current with the latest versions and features to build efficient, scalable projects.

Augmented reality

ARKit and RealityKit have opened up new possibilities for immersive app experiences. The iOS developers you hire should be proficient with these tools so they can build new features and applications across industries such as gaming and retail.

Machine learning and AI

With Core ML and Create ML, Apple makes it easy for iOS developers to incorporate machine learning into their products. Understanding these frameworks, as well as AI and machine learning principles, is critical for delivering smarter and more personalized user experiences.
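As a rough sketch of what "incorporating" a model looks like, the function below loads a compiled Core ML model and runs one prediction through the generic `MLModel` API. The feature names `"input"` and `"label"` are placeholders (real names come from the model's description in Xcode), and `classify` is a hypothetical helper.

```swift
import CoreML
import Foundation

/// Loads a compiled Core ML model (.mlmodelc) and returns its predicted label.
/// "input" and "label" are placeholder feature names; check your model's description.
func classify(modelURL: URL, input: MLMultiArray) throws -> String? {
    let configuration = MLModelConfiguration()
    configuration.computeUnits = .all  // let Core ML choose CPU, GPU, or Neural Engine
    let model = try MLModel(contentsOf: modelURL, configuration: configuration)

    let features = try MLDictionaryFeatureProvider(dictionary: ["input": input])
    let output = try model.prediction(from: features)
    return output.featureValue(for: "label")?.stringValue
}
```

In an app, Xcode compiles an `.mlmodel` added to the project into the bundle and also generates a typed wrapper class, which is usually more convenient than this generic form.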

Familiarity with cloud integration

Cloud services such as iCloud are critical to delivering a consistent user experience across devices. Developers who want to enhance app functionality and data accessibility must understand cloud integration strategies and best practices.
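One small, concrete form of iCloud integration is key-value storage, which syncs a few preferences across a user's devices. This sketch assumes the app has the iCloud capability with key-value storage enabled; `PreferenceSync` and the key name are hypothetical.

```swift
import Foundation

/// Syncs a small preference across the user's devices via iCloud key-value storage.
enum PreferenceSync {
    static let key = "preferredModelScale"  // hypothetical key

    static func save(scale: Double) {
        let store = NSUbiquitousKeyValueStore.default
        store.set(scale, forKey: key)
        // A hint to upload soon; the system decides the actual timing.
        store.synchronize()
    }

    /// Returns nil when no value has been stored (or synced) yet.
    static func load() -> Double? {
        NSUbiquitousKeyValueStore.default.object(forKey: key) as? Double
    }
}
```

Key-value storage suits small settings; larger or structured data is usually better served by CloudKit or iCloud Documents.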

As demand for professionals with advanced skills soars, salary expectations are rising too. It is therefore worth consulting an iOS developer salary survey for insight into current salary trends. Tools such as the Uplers salary analysis tool provide this information and can help you attract and retain top iOS talent.

Concluding Thoughts

The future of iOS app development looks bright, with endless opportunities for innovation and creativity. By evaluating these key skills when you hire iOS developers, you can build a team that helps you stay ahead in the tech industry.


Exploring the Cutting-Edge: The Future of Augmented Reality in iOS App Development in 2024

Introduction

In the realm of mobile technology, Apple's iOS ecosystem has continually pushed the boundaries of innovation, offering users and developers alike a rich playground for creativity and functionality. Augmented Reality (AR) stands as one of the most exciting frontiers in this domain, blending the virtual world with the real one seamlessly. As we venture into 2024, the landscape of AR in iOS app development is poised for remarkable advancements, driven by Apple's relentless pursuit of excellence and the ever-evolving demands of users.

Revolutionizing User Experiences

Augmented Reality has already made significant strides in transforming user experiences across various industries, from gaming and entertainment to education and retail. In 2024, this trend is set to intensify as iOS developers harness the power of ARKit and other cutting-edge technologies to create immersive and interactive applications.

Imagine shopping for furniture and being able to visualize how a particular sofa would look in your living room through your iPhone or iPad. With advancements in AR, users can expect a more lifelike and accurate representation of products, leading to increased confidence in their purchasing decisions.
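The furniture scenario boils down to "find a real surface under the user's finger and anchor a model there." Here is a minimal iOS sketch with RealityKit's `ARView`, assuming world tracking is already running (the view's default automatic configuration does this) and that a model named "sofa" is bundled with the app; both the asset and `placeSofa` are hypothetical.

```swift
import ARKit
import RealityKit
import UIKit

/// Places a hypothetical bundled "sofa" model on the first horizontal
/// surface found under a screen point. Returns false if no surface was hit.
@MainActor
func placeSofa(in arView: ARView, at screenPoint: CGPoint) throws -> Bool {
    // Cast a ray from the tapped point onto an estimated horizontal plane.
    guard let hit = arView.raycast(from: screenPoint,
                                   allowing: .estimatedPlane,
                                   alignment: .horizontal).first else {
        return false  // no surface yet; the app could ask the user to move the device
    }
    let sofa = try ModelEntity.loadModel(named: "sofa")  // hypothetical asset name
    // Anchor the model at the hit location so it stays put as the user walks around.
    let anchor = AnchorEntity(world: hit.worldTransform)
    anchor.addChild(sofa)
    arView.scene.addAnchor(anchor)
    return true
}
```

Because the model is authored at real-world scale (RealityKit units are meters), the sofa appears at its true size, which is what makes the preview useful for purchase decisions.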

Moreover, AR is poised to revolutionize the way we learn and interact with information. Educational apps can utilize AR to offer hands-on experiences, allowing students to dissect virtual organisms or explore historical landmarks from the comfort of their classrooms.

Enhanced Development Tools

Apple's commitment to empowering developers has been a cornerstone of its success, and the company continues to invest in tools and frameworks that streamline the AR development process. In 2024, developers can look forward to even more robust and intuitive tools that simplify the creation of AR experiences.

ARKit, Apple's AR development platform, is expected to receive significant updates, offering developers enhanced capabilities for spatial mapping, object recognition, and motion tracking. These advancements will enable developers to create more sophisticated AR applications with greater ease and efficiency.

Additionally, frameworks and tools tailored for AR development, such as RealityKit and Reality Composer, will further accelerate innovation in this space. These tools abstract the complexities of AR development, allowing developers to focus on crafting compelling user experiences without getting bogged down by technical intricacies.

Seamless Integration with Wearables

As wearable technology continues to gain traction, the integration of AR with devices like the Apple Watch and future iterations of augmented reality glasses holds immense promise. In 2024, we can expect to see tighter integration between iOS devices and wearables, unlocking new possibilities for AR experiences.

For instance, users may receive real-time AR notifications on their Apple Watch, overlaying contextual information directly onto their surroundings. This seamless integration between devices will enhance the overall user experience, making AR more accessible and intuitive than ever before.

Furthermore, the emergence of augmented reality glasses, rumored to be in development by Apple, could fundamentally reshape the way we interact with the digital world. These glasses would seamlessly integrate with iOS devices, offering users a hands-free AR experience that feels truly immersive and natural.

Privacy and Security Considerations

As the capabilities of AR applications continue to expand, so too do the concerns surrounding privacy and security. In 2024, Apple is expected to double down on its commitment to user privacy, implementing stringent measures to protect user data in AR applications.

With features like App Tracking Transparency and on-device processing, Apple aims to give users more control over their data and ensure that sensitive information remains secure. Developers will need to adhere to strict guidelines and best practices to safeguard user privacy while delivering engaging AR experiences.
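On the privacy side, an AR app's first obligation is camera consent. This sketch checks and, if needed, requests camera access before an AR session starts; ARKit apps must also declare an `NSCameraUsageDescription` string in Info.plist. `ensureCameraAccess` is a hypothetical helper.

```swift
import AVFoundation

/// Calls `completion` with true only if the user has granted camera access.
/// Note: the completion handler may run on an arbitrary queue.
func ensureCameraAccess(_ completion: @escaping (Bool) -> Void) {
    switch AVCaptureDevice.authorizationStatus(for: .video) {
    case .authorized:
        completion(true)
    case .notDetermined:
        // Shows the system prompt once; the user's choice is remembered afterwards.
        AVCaptureDevice.requestAccess(for: .video, completionHandler: completion)
    case .denied, .restricted:
        completion(false)
    @unknown default:
        completion(false)
    }
}
```

Handling the denied case explicitly lets the app explain why the camera is needed instead of silently showing a black AR view.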

Conclusion

The future of augmented reality in iOS app development in 2024 is teeming with possibilities, driven by technological advancements, enhanced development tools, and seamless integration with wearables. As Apple continues to push the boundaries of innovation, developers have an unprecedented opportunity to create immersive and transformative AR experiences that redefine how we interact with the digital world.

With a focus on user-centric design, privacy, and security, the stage is set for AR to become an integral part of the iOS app ecosystem, offering users unparalleled experiences that blur the lines between the virtual and the real. As we embark on this journey into the future of AR, one thing is certain: the only limit is our imagination.


How effective is Swift’s AR technology in developing immersive applications?

Introduction:

Since time immemorial, humans have been imaginative, envisioning new concepts such as superheroes and aliens. The most recent obsession among innovators, however, is immersive technology, i.e., the integration of virtual content with the physical environment, which generated about $6.3 billion in revenue in 2020. This is evident from the popularity of Niantic’s Pokémon Go game and IKEA Place.

Accelerated by the post-pandemic environment, rapid digitalization and increased reliance on smart devices have led businesses to look for ways to reach people through immersive applications. Immersive applications improve processes by adding digital elements for better visualization and by improving the user experience, which can translate into profitability.

Immersive applications actualize distinct experiences by integrating the physical world with a simulated reality. These technologies allow humans to have new experiences by enhancing, extending, or creating a mixed reality.

Types of immersive technology include Augmented Reality (AR), 360-degree video, Extended Reality (XR), Mixed Reality (MR), and Virtual Reality (VR). According to a Statista forecast, the augmented and virtual reality market will grow to $160 billion by 2023. This growing market reflects consumers’ attraction to immersive applications and encourages developers to offer relevant solutions.

Developers can create AR applications for Apple products using various tools, frameworks, and languages, including, but not limited to, Xcode, ARKit, RealityKit, Swift, and AR creation tools.

This article examines how effective Swift is in AR technology for developing immersive applications for business initiatives. Before that, it is important to understand AR and its effect on immersive applications.

Augmented Reality Apps and Their Applications

AR works by overlaying a digital layer on the real world, enhancing the user experience. This digital layer, or augmentation, consists of information or content such as videos, images, and 3D objects, providing a semi-immersive visual experience for user interaction.

AR has broad scope for innovation because it does not obstruct the user’s view, is cost-effective, and is highly engaging. Mobile AR users are expected to grow from 200 million in 2015 to 1.7 billion worldwide by 2024.

AR applications across industry verticals:

Apps use augmented reality to bring products to life by using 360-degree views either with headsets or through smartphone cameras.

The automotive industry uses AR to design, test, and sell vehicles, saving money while improving products, e.g., Jaguar Land Rover, BMW Virtual Viewer, Hyundai AR Lens for Kona.

AR is transforming the real estate industry by letting people view properties from their own homes.

The tourism industry uses AR to give users engaging previews of vacation destinations, e.g., World Around Me, Viewranger, Smartify, AR City, Guideo, Buuuk.

AR provides simulations of work areas and models that help healthcare professionals familiarize themselves with procedures, so they can work more expertly on real patients.

In retail, users can try things before buying with the plethora of AR shopping apps for clothing, furniture, beauty products, and many more, e.g., Houzz, YouCam Makeup, GIPHY World, Augment.

AR also has wide applications in the education industry, bringing a new dimension to lessons and experiments, e.g., Mondly AR.

AR also powers educational, entertainment, and practical everyday apps, e.g., ARCube, AR-Watches, MeasureKit, Jigspace.

Many AR-powered navigation apps supplement maps with interactive features, e.g., Google Maps Live View on iOS.

Gaming is one of the most popular applications of AR providing entertainment, e.g., Angry Birds AR.

AR also benefits the defense sector by offering simulations of machines, allowing personnel to learn about their work environment and equipment safely and easily.

Swift and AR Technology

Apple has introduced a comprehensive set of tools and technologies for creating AR applications, described below:

Swift is an open-source general-purpose compiled programming language developed by Apple for its app development.

Xcode is Apple’s integrated development environment for building apps for iOS, macOS, and Apple’s other platforms. It has all the tools needed to develop an application, including a compiler, a text editor, and a build system, in one software package. Xcode also includes an Augmented Reality App template, written in Swift, for creating AR applications rapidly.

ARKit is a framework that allows developers to design augmented reality apps for iOS devices, such as the iPad and iPhone. It helps them devise AR experiences quickly using the camera, motion sensors, and processors of iOS devices.

ARKit Features:

ARKit empowers the developers to construct AR features for apps regardless of their previous experience. It offers multiple features to benefit the users and developers alike.

Location Anchors: Anchor AR creations to specific geographic locations, so users can view them from various angles.

Motion Capture: Captures a person’s motion in real time with a single camera, enriching the AR experience.

Enhanced Face Tracking: Brings face tracking with the front-facing camera to more devices, enriching the AR experience.

Scene Geometry: Creates a topological map of a space, with the objects in it labeled by type.

People Occlusion: Lets AR creations appear realistically behind or in front of people, and enables green-screen effects in almost any environment.

Depth API: Provides per-pixel depth information for more precise placement and occlusion of AR objects, increasing the user’s sense of immersion.

Instant AR: Places AR content in the real environment immediately on LiDAR-equipped devices, without the user first scanning the surroundings.

Simultaneous Camera Usage: Uses the front and back cameras at the same time, e.g., applying face-tracking data from the front camera to content placed with the back camera.
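Several of these features are opt-in settings on an ARKit session configuration, and not every device supports them. The sketch below enables Scene Geometry, People Occlusion, and the Depth API only where the current device reports support; `makeWorldTrackingConfiguration` is a hypothetical helper name.

```swift
import ARKit

/// Builds a world-tracking configuration with optional features turned on
/// only when the current device supports them.
func makeWorldTrackingConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = [.horizontal, .vertical]

    // Scene Geometry: a mesh of the surroundings with classified surfaces (LiDAR devices).
    if ARWorldTrackingConfiguration.supportsSceneReconstruction(.meshWithClassification) {
        configuration.sceneReconstruction = .meshWithClassification
    }

    // People Occlusion and the Depth API are frame semantics. Each is added only
    // if the device supports it together with what is already enabled.
    let optionalSemantics: [ARConfiguration.FrameSemantics] = [.personSegmentationWithDepth, .sceneDepth]
    for semantic in optionalSemantics {
        let candidate = configuration.frameSemantics.union(semantic)
        if ARWorldTrackingConfiguration.supportsFrameSemantics(candidate) {
            configuration.frameSemantics = candidate
        }
    }
    return configuration
}
```

Usage: `arView.session.run(makeWorldTrackingConfiguration())`. With `.sceneDepth` enabled, each `ARFrame` exposes its depth map through the `sceneDepth` property.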

Apple’s AR creation tools consist of Reality Converter and Reality Composer. Reality Converter lets you view, customize, and convert 3D models to USDZ so they integrate easily with Apple tools and AR-enabled devices. Reality Composer lets you build, test, refine, and simulate AR experiences through an intuitive interface.

The creation tool offers the following advantages:

A powerful built-in AR library for creating virtual objects, plus support for USDZ files so you can continue working on a previous project.

Adds dynamism to AR scenes with animations and audio, for little details like movement, vibrations, and more.

A record-and-play feature captures camera and built-in sensor data at a specific location, so an AR experience can be replayed and tested later.

Delivers smooth transition between all Apple platforms and devices.

Supports export to USDZ, including all components authored in Reality Composer.
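Once a model has been converted or exported to USDZ, getting it into a RealityKit scene takes only a few lines. This sketch loads a USDZ file and scales it so its largest dimension matches a target size. It assumes a recent SDK with the async `Entity(contentsOf:)` initializer (for example visionOS 1 or iOS 18); `loadModel(from:fittingIn:)` is a hypothetical helper.

```swift
import Foundation
import RealityKit

/// Loads a USDZ file and uniformly scales it so its largest side is `targetSize` meters.
@MainActor
func loadModel(from url: URL, fittingIn targetSize: Float = 0.3) async throws -> Entity {
    let entity = try await Entity(contentsOf: url)

    // Measure the model's rendered bounds in world space (it has no parent yet).
    let extents = entity.visualBounds(relativeTo: nil).extents
    let largest = max(extents.x, extents.y, extents.z)
    if largest > 0 {
        entity.scale *= SIMD3<Float>(repeating: targetSize / largest)
    }
    return entity
}
```

Normalizing size at load time is a design choice: it keeps models from different authoring tools, which often use different units, consistent in the scene.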

Benefits of Swift for creating AR applications:

Xcode, together with Swift, plays a leading role in bringing AR to users with a short turnaround time. The following merits make Swift a favored choice for businesses.

1. Accelerated Development:

Swift’s simple syntax means less code. Compared with Objective-C, it is easier to read and write. With built-in concurrency support and smaller code size, coding is faster, with fewer problems and easier maintenance.

According to Apple, Swift is up to 2.6 times faster than Objective-C and 8.4 times faster than Python. Swift’s compiler is built on LLVM, a compiler framework that generates optimized native code, which further improves performance. All these qualities contribute to faster AR application development and faster-running apps.
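A small, hypothetical illustration of the conciseness described above: filtering and sorting a product catalog takes a few lines, with types inferred by the compiler. It uses only the Swift standard library; `Product` and the catalog data are made up for the example.

```swift
/// A hypothetical catalog item for an AR shopping app.
struct Product {
    let name: String
    let price: Double
    let hasARPreview: Bool
}

// The memberwise initializer is generated automatically for structs.
let catalog = [
    Product(name: "Sofa", price: 899, hasARPreview: true),
    Product(name: "Lamp", price: 59, hasARPreview: false),
    Product(name: "Armchair", price: 349, hasARPreview: true),
]

// Closures, key paths, and type inference keep the pipeline short:
// keep items with an AR preview, cheapest first, and collect their names.
let previewable = catalog
    .filter { $0.hasARPreview }
    .sorted { $0.price < $1.price }
    .map(\.name)

print(previewable)  // prints ["Armchair", "Sofa"]
```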

2. Scalability:

AR apps created with Swift are highly scalable: new features can be added as needed, which helps future-proof the app. Simple, readable syntax combined with easy onboarding of new developers makes Swift a preferred choice.

3. Security:

Swift provides robust safety through its error handling and type system, which help avert crashes. With a concise feedback loop, developers can promptly find and fix errors in the code, reducing the time and effort spent on bug fixing.
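The "security" described here is mostly compile-time safety. This sketch shows two of the mechanisms: optionals force a missing value to be handled, and `throws` forces callers to deal with failure. It uses only the standard library; `AssetError` and `validateAssetName` are hypothetical.

```swift
/// Hypothetical errors for validating an AR asset name.
enum AssetError: Error, Equatable {
    case notFound(String)
    case unsupportedFormat(String)
}

/// `throws` means every caller must use `try` and handle or propagate the error.
func validateAssetName(_ name: String) throws -> String {
    guard !name.isEmpty else { throw AssetError.notFound(name) }
    guard name.hasSuffix(".usdz") else { throw AssetError.unsupportedFormat(name) }
    return name
}

let scales: [String: Float] = ["sofa.usdz": 1.0]
// A dictionary lookup returns an Optional; the compiler makes us supply a fallback.
let lampScale = scales["lamp.usdz"] ?? 1.0

do {
    _ = try validateAssetName("lamp.obj")
} catch AssetError.unsupportedFormat(let name) {
    print("Unsupported format: \(name)")  // prints "Unsupported format: lamp.obj"
} catch {
    print("Other error: \(error)")
}
```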

4. Interoperability with Objective-C:

Because Swift interoperates with Objective-C, existing AR apps can be extended or updated incrementally. New features can be added quickly, without the risks of porting the whole codebase.

5. Memory Management:

With memory management handled by Automatic Reference Counting (ARC), developers rarely need to manage memory by hand, although they still need to break strong reference cycles with weak or unowned references. Because ARC inserts reference counting at compile time instead of relying on a garbage collector, it avoids collection pauses and keeps its CPU and memory overhead low.

Developers also don’t need to track the reference count of every class instance themselves. In addition, Swift 5 introduced ABI (Application Binary Interface) stability on Apple platforms: the Swift standard libraries ship with the operating system instead of inside each app, which reduces bundle size and improves compatibility across Swift versions, yielding a more stable application.
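The one ARC rule developers still must follow is avoiding strong reference cycles. This standard-library-only sketch uses a hypothetical parent/child scene graph: the child's `parent` link is `weak`, so releasing the root frees the whole tree. `withExtendedLifetime` keeps the root alive for the first check, since Swift may otherwise release an object right after its last use.

```swift
/// Hypothetical scene graph node used only to illustrate ARC.
final class SceneNode {
    let name: String
    private(set) var children: [SceneNode] = []
    weak var parent: SceneNode?  // weak: does not keep the parent alive

    init(name: String) { self.name = name }
    deinit { print("\(name) deallocated") }

    func addChild(_ child: SceneNode) {
        child.parent = self     // weak back-reference, no cycle
        children.append(child)  // strong reference: the parent keeps its children alive
    }
}

/// Reports whether the child was alive while rooted and after the root was released.
func demonstrateARC() -> (aliveWhileRooted: Bool, aliveAfterRelease: Bool) {
    var root: SceneNode? = SceneNode(name: "root")
    weak var observedChild: SceneNode?

    do {
        let child = SceneNode(name: "child")
        observedChild = child
        root?.addChild(child)
    }
    // Only the root's `children` array holds the child strongly at this point.
    let aliveWhileRooted = withExtendedLifetime(root) { observedChild != nil }

    root = nil  // last strong reference to root: root, then child, are deallocated
    let aliveAfterRelease = observedChild != nil
    return (aliveWhileRooted, aliveAfterRelease)
}

print(demonstrateARC())
```

If `parent` were a strong reference instead, root and child would keep each other alive and neither `deinit` would run.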

6. Cross-device support:

Using Swift in both the backend and the frontend of AR application development speeds up the development process by enabling extensive code sharing and reuse. This allows cross-device support across Apple platforms, including iPhone, iPad, Mac, Apple Watch, and Apple TV, and Swift also runs on Linux for server-side code.
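Code sharing usually means one set of model types compiled for every target, with small platform differences fenced off at compile time. In this sketch `PlacementSettings` and its size values are hypothetical; `#if os(visionOS)` requires Swift 5.9 or later.

```swift
/// Hypothetical settings shared by every target. Only the per-platform
/// default differs, selected at compile time with #if os(...).
struct PlacementSettings {
    var snapToSurfaces = true

    /// Default size for newly placed models, in meters (values are illustrative).
    var defaultModelSize: Double {
        #if os(visionOS)
        return 0.5
        #elseif os(iOS)
        return 0.3
        #else
        return 0.2  // macOS, watchOS, tvOS, Linux, and other targets
        #endif
    }
}

let settings = PlacementSettings()
print("Snap: \(settings.snapToSurfaces), size: \(settings.defaultModelSize) m")
```

Branches that don't match the current target are not compiled at all, so each platform's binary contains only its own value.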

Final Thoughts:

Swift has tremendous potential to transform businesses by revolutionizing user lifestyles through engaging and riveting AR experiences. The above benefits highlight how Swift empowers developers to create stable, secure, and high-performance AR applications.

With the demonstrated success of various AR games, creative design solutions, and e-commerce apps, Swift is a strong first choice for custom AR application development for Apple products.

If you are looking for custom AR application development, Mindfire Solutions can be your partner of choice. We have a team of highly skilled and certified software professionals who have developed many custom solutions for our global clients over the years.

Here are a few interesting projects we have done:

Case study on device for medical compliance.

Case study on e-commerce site for freight.


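What’s New in USD and MaterialX - Apple Vision Pro

The word “immersive” is defined as “absorbing” or “giving a sense of immersion.”

Spatial computing, however, makes possible a platform for 3D expression in which 3D objects and 3D special effects leap out of the 3D cinema screen and come within a few centimeters of your own body.

It feels close to watching a movie in a 3D theater with 3D glasses, which was popular in the 2010s (in this case, Apple Vision Pro plays the role of the 3D glasses).

Support for Universal Scene Description (USD) and MaterialX on Apple platforms has improved. Find out more in this session.

Learn how these technologies serve as the foundation for creating and delivering 3D content, and how they streamline workflows for building great spatial experiences.

The session also covers USD and MaterialX support in RealityKit and Storm, plus enhancements to the tools built into Apple’s systems.

Beyond that, a killer app that let anyone easily turn photos into 3D content with the built-in apps on devices such as iPhone would expand what people can imagine.

What is OpenUSD?

The Linux Foundation, its affiliated nonprofit JDF (Joint Development Foundation), and participating companies are jointly developing an international standard for OpenUSD, a technical specification for 3D scenes.

Participating companies include Pixar, which developed the current specification, along with Adobe, Apple, Autodesk, and NVIDIA, and together they will improve the interoperability of OpenUSD.

The development tools initially targeted include Maya, Houdini, Autodesk 3ds Max, and Adobe Substance 3D Designer.

For reference:

An immersive experience is one in which the user feels immersed across the physical and digital worlds.

It can involve sight, hearing, touch, smell, and even taste, and it can have a strong emotional impact on users.

Today, immersive experiences are spreading mainly in the entertainment industry.

For example, theme parks and attractions that use VR (virtual reality) and AR (augmented reality), as well as immersive theater, are popular.

3D movies are one form of immersive experience. They provide visual depth and strengthen the audience’s sense of entering the world of the film.

As a result, they offer a stronger sense of immersion than traditional 2D movies.

Immersive experiences are used not only in entertainment but in a wide range of fields, including education, healthcare, map apps, driver assistance for cars, and traffic signs.

Much like a third-party smartphone case, a helmet integrated with Apple Vision Pro might also be a good design fit. It would distribute the device’s weight across the whole head.

From a safety standpoint, it could also lessen people’s reluctance to wear a helmet in everyday life.

As technology evolves, increasingly realistic and interactive experiences will become possible, and demand will continue to grow.

<Recommended sites>

Large Language Models for Practical Use: Apple Intelligence 2024

Apple Vision Pro 2024

James Cameron: The Curiosity That Gave Birth to “Avatar”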


The Future of iOS App Development: Trends and Innovations

In the fast-paced world of technology, iOS app development is constantly evolving. With each passing year, Apple introduces new features, tools, and capabilities, challenging developers to stay up-to-date with the latest trends and innovations.

As we look ahead, it's crucial to anticipate the trends and innovations that are likely to shape the future of iOS app development.

SwiftUI and Combine Frameworks: Apple's SwiftUI and Combine frameworks have been steadily gaining popularity among iOS developers. SwiftUI simplifies the UI development process, enabling developers to build interfaces using declarative syntax and a live preview feature. Combine, on the other hand, simplifies asynchronous programming by providing a reactive framework. These frameworks are likely to play a significant role in the future, making app development more efficient and reducing code complexity.
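To show what "reactive" means in Combine, here is a minimal sketch of a search model: keystrokes published through `query` are debounced, de-duplicated, filtered, and written to `results`. `SearchModel` is hypothetical; `assign(to: &$results)` requires iOS 14 or macOS 11 and, unlike `assign(to:on:)`, does not create a retain cycle on `self`.

```swift
import Combine
import Foundation

/// A hypothetical search model: typing updates `query`, and Combine
/// publishes filtered `results` once the user pauses for 300 ms.
final class SearchModel: ObservableObject {
    @Published var query = ""
    @Published private(set) var results: [String] = []

    init(items: [String]) {
        $query
            .debounce(for: .milliseconds(300), scheduler: RunLoop.main)  // wait for a pause
            .removeDuplicates()                                          // ignore repeats
            .map { query in
                query.isEmpty ? items : items.filter { $0.localizedCaseInsensitiveContains(query) }
            }
            .assign(to: &$results)  // subscription lives as long as the model
    }
}
```

In SwiftUI, a view can bind a text field to `query` and list `results`; the pipeline replaces the manual timers and callbacks that asynchronous search otherwise needs.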

Augmented Reality (AR) and Virtual Reality (VR): ARKit and RealityKit have paved the way for immersive augmented reality experiences on iOS devices. As AR and VR technologies continue to advance, we can expect to see more innovative apps that merge the digital and physical worlds. These could include AR games, interactive shopping experiences, and educational applications.

Cross-Platform Development: Cross-platform development tools like Flutter are gaining traction, and SwiftUI lets developers share UI code across Apple's own platforms. Developers are looking for ways to write code once and deploy it across multiple platforms, including iOS, Android, and the web. This trend will likely continue to grow, making it easier for businesses to reach a broader audience.

Progressive Web Apps (PWAs): While native iOS app development is essential, Progressive Web Apps are gaining popularity. These web-based applications can offer a native-like experience without the need for installation from the App Store. PWAs can be a cost-effective way to reach a broader audience.

Conclusion: The future of iOS App development is brimming with exciting possibilities. As technology continues to evolve, developers must stay adaptable and embrace these trends and innovations.


3D Scanning with Object Capture

by Ethan Saadia

🐨 Scanning a koala with just an iPhone! 📱

Object Capture is a new RealityKit feature that lets you create accurate 3D models just by taking photos of an object.

I 3D scanned this koala by taking 64 photos of it from all angles with Apple's sample capture app on my iPhone. RealityKit uses technology called photogrammetry to stitch together the overlapping parts of each photo, combining depth and accelerometer data if available, into a 3D model that you can export to USDZ for use in games, animations, or augmented reality.
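On the processing side, Object Capture is driven by RealityKit's `PhotogrammetrySession`. This sketch reconstructs a USDZ model from a folder of photos and reports progress. It assumes macOS 12 or later (or iOS 17 or later on supported devices); the folder and output paths, and `reconstructModel` itself, are hypothetical.

```swift
import Foundation
import RealityKit

/// Turns a folder of overlapping photos into a USDZ model with Object Capture.
func reconstructModel(from imagesFolder: URL, to outputFile: URL) async throws {
    let session = try PhotogrammetrySession(input: imagesFolder,
                                            configuration: PhotogrammetrySession.Configuration())
    // Request one medium-detail USDZ; other detail levels trade file size for fidelity.
    try session.process(requests: [.modelFile(url: outputFile, detail: .medium)])

    for try await output in session.outputs {
        switch output {
        case .requestProgress(_, let fraction):
            print("Progress: \(Int(fraction * 100))%")
        case .requestError(_, let error):
            throw error
        case .processingComplete:
            print("Saved model to \(outputFile.path)")
            return
        default:
            break  // other events (input complete, skipped samples, ...) are ignored here
        }
    }
}
```

The session reports through an async sequence, so the caller can show progress while reconstruction, which can take minutes, runs in the background.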

Integrating 3D scanning into a social platform like Tumblr would make it easy to create and share immersive content with the world and view it right in front of you with AR Quick Look. From delicious food to unboxing reveals to travel scenes, the possibilities of Object Capture are endless!

My AR apps for learning circuits with Raspberry Pi and shopping at the Apple Store both require 3D models of real objects, so Object Capture will be a huge help to the development process. View my augmented reality work to learn more!


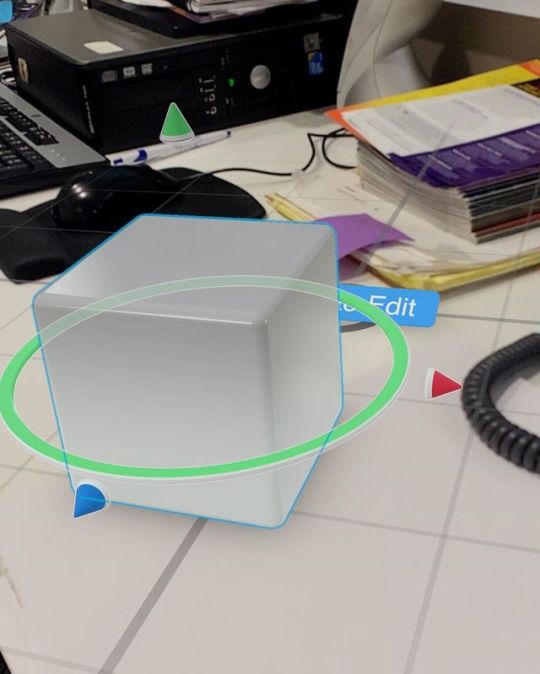

Introducing Reality Composer: it’s basically Unity for your iPhone and I’m here for it 🥰😛 (at MetroTech Center) https://www.instagram.com/p/B0ZKyoIgmDL/?igshid=1o0g01ole45v5


RT @zhuowei: DS emulation in Augmented Reality: Displays game as a holographic 3D model. - DS emulated with melonDS (iOS port from rileytestut's Delta) - 3D model extracted with scurest's amazing MelonRipper tool - rendered with iOS #RealityKit #AugmentedReality #AR https://t.co/1blhyamLbo https://t.co/WgN3bnyVy2


— Retrograde Wear Gaming (@RetroGradeWear) Jun 23, 2023

from Twitter https://twitter.com/RetroGradeWear


Apple’s new visionOS aims to significantly reduce the development time of applications and games within the AR ecosystem. Meanwhile, RealityKit and Reality Composer Pro make it easier to design AR content for your existing or new AR application. Reality Composer Pro lets you build 3D objects and combine them into entire scenes through a visual interface. For more information: https://braininventory.in/posts/exploring-apples-vision-pro-a-fresh-perspective-on-spatial-computing
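In a visionOS project created from Xcode's app template, scenes authored in Reality Composer Pro live in a local Swift package named `RealityKitContent`, and app code loads them by name. This sketch assumes that template setup, including its default scene named "Scene"; `ComposedSceneView` is a hypothetical view name.

```swift
import SwiftUI
import RealityKit
import RealityKitContent  // local package generated by Xcode's visionOS app template

/// Shows a scene authored in Reality Composer Pro.
struct ComposedSceneView: View {
    var body: some View {
        RealityView { content in
            // "Scene" is the template's default scene name; replace it with your own.
            if let scene = try? await Entity(named: "Scene", in: realityKitContentBundle) {
                content.add(scene)
            }
        }
    }
}
```

Keeping the content in its own package lets artists iterate on the scene in Reality Composer Pro while the Swift code only refers to it by name.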
