#Restful API for Football data


Text

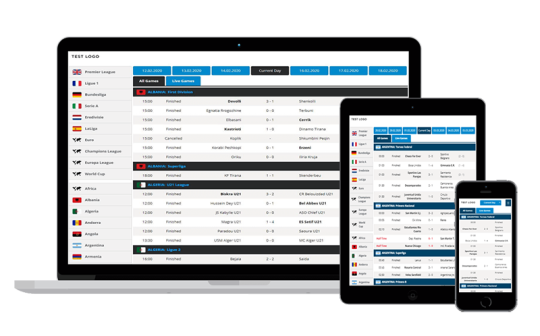

Unleashing the Game: Building a Restful API for Football Data

API Football aims to empower the football industry with seamless access to reliable, real-time data through our RESTful API. By providing a comprehensive and user-friendly platform, we aim to improve the accuracy and efficiency of football-related operations for all our partners and clients. We are committed to continually improving our services and building solid relationships with our valued customers so that we become the go-to source for high-quality football data.


Text

Best Football APIs for Developers in 2025: A Deep Dive into DSG’s Feature-Rich Endpoints

In 2025, the demand for fast, reliable, and comprehensive football data APIs has reached new heights. With the explosion of fantasy leagues, sports betting, mobile apps, OTT platforms, and real-time sports analytics, developers are increasingly relying on robust data solutions to power seamless user experiences. Among the top providers, Data Sports Group (DSG) stands out for offering one of the most complete and developer-friendly football data APIs on the market.

In this blog, we’ll explore why DSG’s football API is a top choice in 2025, what features make it stand apart, and how developers across fantasy platforms, media outlets, and startups are using it to build cutting-edge applications.

Why Football APIs Matter More Than Ever

Football (soccer) is the world’s most-watched sport, and the demand for real-time stats, live scores, player insights, and match events is only growing. Whether you're building a:

Fantasy football platform

Live score app

Football analytics dashboard

OTT streaming overlay

Sports betting product

...you need accurate, timely, and structured data.

APIs are the backbone of this digital ecosystem, helping developers fetch live and historical data on demand and display it in user-friendly formats. That’s where DSG comes in.

What Makes DSG's Football API Stand Out in 2025?

1. Comprehensive Global Coverage

DSG offers extensive coverage of football leagues and tournaments from around the world, including:

UEFA Champions League

English Premier League

La Liga, Serie A, Bundesliga

MLS, Brasileirão, J-League

African Cup of Nations

World Cup qualifiers and international friendlies

This global scope ensures that your application isn't limited to only major leagues but can cater to niche audiences as well.

2. Real-Time Match Data

Receive instant updates on:

Goals

Cards

Substitutions

Line-ups & formations

Match start/stop events

Injury notifications

Thanks to DSG’s low-latency infrastructure, your users stay engaged with lightning-fast updates.

3. Player & Team Statistics

DSG provides deep stats per player and team across multiple seasons, including:

Pass accuracy

Goals per 90 minutes

Expected Goals (xG)

Defensive stats like tackles and interceptions

Goalkeeper metrics (saves, clean sheets)

This is invaluable for fantasy football platforms and sports analytics startups.
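Rate statistics such as goals per 90 minutes and pass accuracy are simple to derive once the raw counts are available. The sketch below shows the arithmetic only; the function names are illustrative and are not part of any DSG API.

```python
def per_90(total: float, minutes_played: int) -> float:
    """Scale a counting stat (goals, xG, tackles) to a per-90-minute rate."""
    if minutes_played <= 0:
        raise ValueError("minutes_played must be positive")
    return total * 90 / minutes_played


def pass_accuracy(completed: int, attempted: int) -> float:
    """Completed passes as a percentage of attempted passes."""
    if attempted == 0:
        return 0.0
    return 100 * completed / attempted


# A player with 12 goals in 2,160 minutes (24 full matches)
goals_rate = per_90(12, 2160)
accuracy = pass_accuracy(45, 50)
```

Normalizing by minutes rather than by appearances keeps substitutes and regular starters comparable, which is why fantasy and analytics tools tend to prefer per-90 rates.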

4. Developer-Centric Documentation & Tools

DSG’s football API includes:

Clean RESTful architecture

XML & JSON format support

Well-organized endpoints by competitions, matches, teams, and players

Interactive API playground for testing

Detailed changelogs and status updates

5. Custom Widgets & Integrations

Apart from raw data, DSG offers:

Plug-and-play football widgets (live scores, player cards, league tables)

Custom dashboard feeds for enterprise customers

Webhooks and push notifications for developers

This shortens development time and gives non-technical teams ready-made features they can use without writing code.

Common Use Cases of DSG's Football Data API

a. Fantasy Football Apps

Developers use DSG data to:

Build real-time player score systems

Offer live match insights

Power draft decisions using historical player performance

b. Sports Media & News Sites

Media outlets use DSG widgets and feeds to:

Embed real-time scores

Display dynamic league tables

Show interactive player stats in articles

c. Betting Platforms

Betting platforms use DSG to:

Automate odds updates

Deliver real-time market changes

Display event-driven notifications to users

d. Football Analytics Dashboards

Startups use DSG to:

Train AI models with historical performance data

Visualize advanced stats (xG, pass networks, heatmaps)

Generate scouting reports and comparisons

Sample Endpoints Developers Love

/matches/live: Live match updates

/players/{player_id}/stats: Season-wise stats for a specific player

/teams/{team_id}/fixtures: Upcoming fixtures

/competitions/{league_id}/standings: League table updates

/events/{match_id}: Real-time event feed (goals, cards, substitutions)

These are just a few of the dozens of endpoints DSG offers.
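As a rough illustration of how the endpoint paths listed above might be turned into request URLs, here is a minimal sketch. The base URL, the `season` query parameter, and the helper name `build_url` are assumptions made for this example; consult the provider's documentation for the real host, authentication scheme, and parameters.

```python
from typing import Optional
from urllib.parse import urlencode, urljoin

# Hypothetical base URL, used only for illustration.
BASE_URL = "https://api.example.com/v1/"

# Path templates taken from the endpoint list above.
ENDPOINTS = {
    "live_matches": "matches/live",
    "player_stats": "players/{player_id}/stats",
    "team_fixtures": "teams/{team_id}/fixtures",
    "standings": "competitions/{league_id}/standings",
    "match_events": "events/{match_id}",
}


def build_url(name: str, query: Optional[dict] = None, **path_params) -> str:
    """Fill in the path template and append optional query parameters."""
    path = ENDPOINTS[name].format(**path_params)
    url = urljoin(BASE_URL, path)
    if query:
        url += "?" + urlencode(query)
    return url


stats_url = build_url("player_stats", player_id=10)
table_url = build_url("standings", {"season": "2024"}, league_id="epl")
```

Keeping the templates in one dictionary means a path change only has to be made in one place, and a missing path parameter fails loudly with a `KeyError` instead of producing a malformed URL.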

Final Thoughts: Why Choose DSG in 2025?

For developers looking to build applications that are scalable, real-time, and data-rich, DSG’s football API offers the ideal toolkit. With global coverage, detailed statistics, low latency, and excellent developer support, it's no surprise that DSG has become a go-to solution for companies building football-based digital products in 2025.

If you're planning to launch a fantasy app, sports betting service, or football analytics platform, DSG has the data infrastructure to support your vision. Ready to get started? Explore DSG's Football Data API here.


Text

Revolutionize Your Sports App with Powerful Soccer & Football APIs

The following blog will explain everything you need to know about soccer APIs, how they provide live football data, and what makes a great sports score API.

In the digital era, sports fans no longer depend on television broadcasts and newspaper reports to track their favorite teams. Data-driven applications and platforms that provide instant updates have significantly changed sports entertainment. Soccer APIs, sports score APIs, and live football data feeds are the fundamental elements powering this change.

What Is a Soccer API?

A Soccer API is a software interface that gives applications access to structured data about football (or soccer) matches, players, teams, leagues, statistics, and live scores. By using Soccer APIs, developers get precise, current football information as well as historical match data.

These APIs are used across various platforms, including:

Live Score Apps

Betting Websites

Fantasy Football Platforms

Sports News Websites

Broadcasting Channels

Football Analytics Tools

A high-quality Soccer API provides more than just scores: it includes detailed information such as line-ups, goal scorers, red and yellow cards, substitutions, formations, possession stats, and even player-specific heat maps in some advanced cases.

Why Live Football Data Matters

Fans today expect real-time updates. Whether it's checking who scored the winning goal or verifying a VAR decision, users demand accurate, fast, and complete live football data.

The benefits of integrating live football data include:

Enhanced User Experience: Real-time match updates, interactive timelines, and notifications keep users engaged.

Competitive Edge: Apps with real-time data perform better and retain more users.

Monetization: Better data means more engagement, increasing advertising revenue, in-app purchases, and subscription potential.

Advanced Analytics: Coaches, analysts, and enthusiasts can leverage live data to understand tactical decisions and player performance.

What Is a Sports Score API?

A Sports Score API is a data feed that provides real-time and historical scores for sports events, including football, basketball, tennis, cricket, and more. When built explicitly for football, this API can offer:

Live Match Scores

Full-Time Results

Upcoming Fixtures

League Standings

Top Scorers and Assists

Match Events Timeline

Most developers use a Sports Score API to build scoreboards, display widgets, and develop mobile apps that deliver instant sports updates to users worldwide.

Key Features to Look for in a Soccer API

Not all football APIs are created equal. If you're looking to integrate a reliable soccer API into your platform, consider these essential features:

1. Coverage of Global Leagues and Competitions

Choose a provider that supports popular leagues like the Premier League, La Liga, Serie A, Bundesliga, MLS, Champions League, and smaller or youth tournaments. The broader the coverage, the better.

2. Real-Time Updates

Speed is crucial. Delayed data is useless in today's environment, where millions watch events unfold.

3. Historical Data Access

Historical match data going back several seasons is invaluable for analytics, betting trends, and fantasy league platforms.

4. Detailed Match Events

Goals, penalties, assists, substitutions, injuries, and red/yellow cards must be captured accurately.
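To make the idea of structured match events concrete, the sketch below models an event and parses a small JSON payload. The field names and the sample payload are assumptions for illustration; real providers each define their own schema.

```python
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class MatchEvent:
    minute: int
    type: str  # e.g. "goal", "penalty", "substitution", "yellow_card"
    team: str
    player: str
    assist: Optional[str] = None  # only meaningful for goals


def parse_events(payload: str) -> List[MatchEvent]:
    """Parse a JSON array of events and return them in match order."""
    events = [MatchEvent(**item) for item in json.loads(payload)]
    return sorted(events, key=lambda e: e.minute)


# Hypothetical payload; feeds do not always arrive in chronological order.
SAMPLE = """[
  {"minute": 67, "type": "yellow_card", "team": "Away", "player": "Player C"},
  {"minute": 23, "type": "goal", "team": "Home", "player": "Player A", "assist": "Player B"}
]"""

timeline = parse_events(SAMPLE)
```

Using a typed record instead of raw dictionaries means an unexpected or misspelled field raises an error at parse time, which helps catch inaccurate event data early.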

5. Player and Team Stats

Good APIs provide deep insights from individual player performance to team formations and win/loss ratios.

6. Flexible Integration

Whether through RESTful APIs, GraphQL endpoints, or webhooks, make sure your provider supports modern, developer-friendly integration options.

7. Reliable Uptime and Scalability

Downtime during peak hours, such as the Champions League final, is unacceptable. Look for APIs with at least 99.9% uptime and responsive support.
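It helps to translate an uptime percentage into actual downtime. A 99.9% figure still allows roughly 8.8 hours of downtime per year, or about 43 minutes in a 30-day month. The helper below is a small sketch of that arithmetic; the function name is illustrative.

```python
def allowed_downtime_hours(uptime_percent: float, period_hours: float = 365 * 24) -> float:
    """Hours of downtime permitted by an uptime percentage over a period."""
    return period_hours * (1 - uptime_percent / 100)


per_year = allowed_downtime_hours(99.9)                 # about 8.76 hours
per_month_minutes = allowed_downtime_hours(99.9, 30 * 24) * 60  # about 43.2 minutes
```

When comparing providers, check whether the quoted figure is measured per month or per year and whether scheduled maintenance is excluded, since both change what the number means in practice.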

Top Use Cases for Soccer API Integration

Let's explore how businesses and developers use soccer and sports score APIs:

1. Fantasy Football Applications

Live player data, injuries, and statistics help users manage their fantasy teams in real time.

2. Betting Platforms

Real-time match updates help betting operators offer in-play bets and resolve wagers quickly.

3. Sports Media and News Websites

Websites like ESPN, BBC Sports, or Goal.com use APIs to automatically populate live score widgets and league tables.

4. Mobile Live Score Apps

Apps like LiveScore, SofaScore, and FlashScore rely heavily on APIs to offer minute-by-minute updates.

5. Data Analytics and Insights

Analysts and scouts use historical and live data to evaluate team performance, create visualizations, and forecast outcomes.

Soccer APIs and Machine Learning

One of the most exciting developments is how Live Football Data feeds machine learning models. AI is being used to:

Predict Match Outcomes

Evaluate Player Potential

Assess Injury Risks

Design Training Regimens

Automate Match Summaries

Accurate, structured data is critical for training these models, and APIs are the foundation.

Monetizing Your App with Football Data

Live football data not only enhances UX—it opens the door for monetization:

Ads and Sponsorships

Premium Subscriptions for Advanced Stats

Affiliate Betting Links

E-commerce (Team Merchandising)

Paywall for Exclusive Analytics

Final Thoughts

The sports tech industry is booming, and Football Data is its fuel. A powerful Soccer API is a game-changer whether you're building a sports platform, enhancing a mobile app, running a betting business, or analyzing player performance. The combination of Live Football Data, detailed match insights, and a reliable Sports Score API can elevate your project from good to exceptional. It empowers you to deliver value, engage users, and stay ahead in a fiercely competitive market.


Text

WooWeekly #537: Generate Test Data | Toggle Login & Registration | Extending REST API

Hello there, Welcome back to WooWeekly! I’m glad you’re here for another round of WooCommerce insights. Every week, I curate tips and tutorials for you to help you sharpen your WooCommerce skills. Between planning our next holiday, juggling my kid’s sport and my own, and obsessing over the final rush of my fantasy football leagues, things have been hectic. But hey, that’s part of the fun! On…


Text

Mastering the Game: A Deep Dive into the Unparalleled Insights of Our Sports Data API

In the ever-evolving landscape of sports, where every statistic and moment matters, having access to a robust Sports Data API is a game-changer. BetsAPI, a trailblazer in sports data solutions, takes center stage with its Sports Data API, providing enthusiasts, developers, and analysts with an unparalleled gateway to the world of sports insights. Let's explore the depth and power of our Sports API and how it elevates the game for all who seek precision and real-time data in the sports arena.

Unveiling the Essence of Sports Data API

At the heart of modern sports analytics is the Sports Data API, a powerful tool that serves as the backbone for informed decision-making in various sectors of the sports industry. From live scores to detailed player statistics, a robust API can open doors to a wealth of real-time information.

Why BetsAPI Sports Data API Stands Out

BetsAPI distinguishes itself in the competitive landscape of sports data providers. Here's why our Sports Data API is considered a cut above the rest:

1. Real-Time Precision

In the fast-paced world of sports, accuracy and timeliness are paramount. Sports Data API delivers real-time updates, ensuring users have access to the latest scores, match events, and player statistics as they unfold.

2. Comprehensive Sports Coverage

Beyond specializing in a single sport, BetsAPI Sports Data API offers comprehensive coverage across a multitude of sports. Whether you're into football, basketball, baseball, or more, the API caters to diverse interests, making it a one-stop-shop for sports enthusiasts.

3. Developer-Friendly Features

For developers, ease of integration is essential. BetsAPI Sports Data API is designed with developers in mind, featuring well-documented endpoints and a user-friendly structure that facilitates seamless integration into various applications, websites, and platforms.

4. Historical Data Insights

Understanding the historical context of sports events is a strategic advantage. Our commitment to providing historical data insights empowers analysts, bettors, and enthusiasts with a deeper understanding of trends, patterns, and the evolution of the game over time.

How BetsAPI Sports Data API Empowers Users

Now, let's delve into how BetsAPI Sports Data API empowers users across different domains:

1. Strategic Betting for Sportsbook Operators

For sportsbook operators, accurate and real-time data is the lifeline of strategic betting. Our Sports Data API ensures that odds are continuously updated, enabling sportsbook operators to make informed decisions and stay competitive in the ever-changing landscape of sports betting.

2. Immersive Fan Experience

For fans, the Sports Data API enriches the sports-watching experience. From live commentary to up-to-the-minute scores, fans can stay connected with their favorite teams and players, making every moment of the game more immersive and engaging.

3. Innovative Sports Applications

Developers play a pivotal role in shaping the future of sports technology. BetsAPI Sports Data API provides developers with the tools they need to create innovative sports applications, whether it's a fantasy sports platform, a live score app, or an analytics tool for in-depth sports insights.

4. Data-Driven Sports Analysis

For analysts and data enthusiasts, the Sports Data API offers a treasure trove of data for in-depth analysis. Dive into player statistics, team performance metrics, and historical trends to uncover insights that can shape strategic decisions and enhance the overall understanding of the game.

Conclusion

In the era of data-driven decisions, Sports Data API emerges as a powerhouse for sports enthusiasts, developers, and analysts alike. Whether you're looking to elevate your sports betting strategies, create immersive fan experiences, develop innovative applications, or conduct in-depth sports analysis, our Sports Data API opens doors to a world of possibilities. Step into the realm of precision, real-time insights, and comprehensive sports coverage with BetsAPI. Elevate your sports experience, whether you're a sportsbook operator refining odds, a fan tracking live scores, or a developer shaping the future of sports technology. With our Sports Data API, you're not just accessing data; you're unlocking the potential for unprecedented insights in the dynamic world of sports.


Text

Sportsflakes offers a comprehensive JSON REST API delivering accurate and reliable live and historical sports data. Tailored for individual projects of any scale, the API covers a wide spectrum of information for various sports, including player stats, game scores, and team standings. Whether you're an analyst, journalist, betting company, or passionate fan, Sportsflakes' Football, Basketball, and NFL Data APIs provide specialized insights, from match results and player statistics to league standings and FIFA rankings, enabling you to make precise predictions and in-depth analyses.


Text

Subtitle Edit


This product comes with a PrimeSupport contract that allows you to take advantage of technical support through our helpline and fast, uncomplicated repair services. You can rest assured that your product is protected by Sony. The MVS-6000 is a new member of the MVS family with the established mixer models MVS-8000G and DVS-9000. We have identified some suspicious activity from you or someone logged into your internet network. Please help us protect Glassdoor by confirming that you are human and not a bot. This clip transition effect is particularly helpful for sports broadcasts, for example for football and basketball games. The MKS-6470-DME board set offers two additional DVE channels, which can optionally be integrated into the MVS-6000. LingueeFind reliable translations of words and phrases in our comprehensive dictionaries and search billions of online translations. "Subtitle Edit" has everything you need to create or fix subtitles. Thanks to numerous integrated functions, little is left to be desired. This comment comes from the Google Product Forum. rights to third parties granted to Koelnmesse; the right to edit or otherwise change the image material. Yes, I am interested in receiving interesting special offers from the areas of media, tourism, telecommunications, finance, mail order by e-mail from CHIP Digital GmbH and CHIP Communications GmbH. Consent at any time e.g. With us you have the choice, because unlike other portals, no additional software is selected by default. Edit and create subtitles with the practical tool "Subtitle Edit". For links on this page, CHIP may receive

waveform display and velocity split display), the corresponding parameter in all pads (and each layer contained therein) within the current engine is changed to the same value.


Maybe you saved in between?

Some community members have badges that show who they are or how active they are in a community.

Waveform display and the velocity split display), the corresponding parameter in all pads (and each layer contained therein) within the current engine is changed to the same value. Open or use the ElixirEditor to develop new page and form definitions without scripting.

final edit n—

A technical error occurred while trying to complete your entry. We are already working on it and will be back for you as soon as possible. Please come back later. We apologize for this inconvenience and thank you for your understanding. Visit the author's page for help, ask a question, post a review, or report the script. The cool design that you love and that you won't find in any department store is far from everything. A special hardware-implemented data compression is used to save memory bandwidth. This parameter specifies the color used in the player's progress bar to highlight the part of the video that the user has already seen. red and white are valid parameter values. By default, the player uses the color red in the progress bar. For more information on color options, visit the YouTube API blog.


Text

Understanding the Enterprise NFT Market

NFT Marketplace offers an e-commerce site for dealing with NFTs — unique digital objects. The ownership, provenance, and history of these objects are recorded using smart contracts in a cryptographically secure digital ledger.

Therefore, they cannot be copied, replaced, altered or otherwise tampered with. They can be issued (minted), transferred to others (as a seller/buyer transaction or auctioned), and burned (destroyed). Through ownership of an NFT, a unique digital file containing a representation of an asset (typically an image, video, 3D object, data file, or other form of digital asset) can be accessed.
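The mint, transfer, and burn lifecycle described above can be sketched with a toy in-memory registry. This is purely illustrative: it is not a smart contract, it does not use any Oracle Blockchain Platform API, and a plain dictionary offers none of the tamper-proofing a real ledger provides. The class and method names are hypothetical.

```python
from typing import Dict


class ToyNFTRegistry:
    """In-memory stand-in for an NFT ledger: one owner per unique token ID."""

    def __init__(self) -> None:
        self._owners: Dict[int, str] = {}
        self._next_id = 1

    def mint(self, owner: str) -> int:
        """Issue a new token with a unique ID and assign it to owner."""
        token_id = self._next_id
        self._next_id += 1
        self._owners[token_id] = owner
        return token_id

    def owner_of(self, token_id: int) -> str:
        """Return the current owner; raises KeyError for unknown or burned tokens."""
        return self._owners[token_id]

    def transfer(self, token_id: int, sender: str, recipient: str) -> None:
        """Move a token to a new owner; only the current owner may do so."""
        if self._owners.get(token_id) != sender:
            raise PermissionError("only the current owner can transfer a token")
        self._owners[token_id] = recipient

    def burn(self, token_id: int, owner: str) -> None:
        """Destroy a token; only the current owner may do so."""
        if self._owners.get(token_id) != owner:
            raise PermissionError("only the current owner can burn a token")
        del self._owners[token_id]


registry = ToyNFTRegistry()
token = registry.mint("alice")
registry.transfer(token, "alice", "bob")
```

In a real deployment, these operations would be smart contract functions, and each call would be recorded as a transaction so that ownership history can be audited.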

NFTs can be used to record and transfer ownership of digital artwork, unique photos or videos, virtual trading cards, and images and product registrations of physical objects. They can cover content such as specific moments from sports games or concert events with unique personalization, but can also represent ownership of real estate (often fractional), investments following environmental, social and governance (ESG) principles, product provenance and manufacturing history, certifications and qualifications, and so on.

NFT Marketplace is similar to an e-commerce website, but it uses blockchain and content management services as part of the backend infrastructure.

Oracle customers currently use NFTs mapped on the Oracle Blockchain Platform to provide:

A marketplace of iconic photos from global news agencies

An international music star's fan club with an enriched membership experience

Trading of personalized digital objects related to a US football team, as well as a movie about the iconic family that owns the franchise

This solution shows how to build an NFT marketplace using Oracle Blockchain Platform and Oracle Cloud Infrastructure.

Architecture

This architecture shows an example of an NFT marketplace built with Oracle Blockchain Platform on Oracle Cloud Infrastructure (OCI).

The following are the key components of the NFT market:

The blockchain platform provides distributed ledgers and supports smart contracts for issuing and trading NFTs

A content management platform that supports the storage, development and combination of digital objects that make up NFTs

A user experience platform for creating the marketplace UI and the workflows associated with minting NFTs, browsing available NFTs, buy/sell transactions, and payment processing

The diagram below shows the core services and some optional services that you can integrate as needed.

[Figure: build-nft-marketplace-blockchain.png]

NFT Marketplace is a custom Visual Builder Cloud Service (VBCS) app with web and mobile UI. You can tailor the scope and functionality to your specific organizational needs. You can integrate it with existing customer portals or other enterprise customer experience (CX) applications and systems. You can design the UI for the specific user flows your NFTs involve, for example a consumer collectibles CX rather than B2B dataset trading or an ESG investment portal. This solution playbook provides specific instructions for using the Visual Builder low-code development infrastructure with APIs for:

Content management using Oracle Content Management and creating plugins

NFT minting, listing and transfer using the Oracle Blockchain Platform REST API (through OCI API Gateway)

Payment processing using the Oracle Integration PayPal adapter or the Oracle CX Commerce platform

Optional data visualization and dashboards using Oracle Analytics Cloud

The architecture has the following key components:

region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, known as availability domains.

tenant

A tenant is a securely isolated partition that Oracle sets up in Oracle Cloud when you sign up for Oracle Cloud Infrastructure. You can create, organize, and manage resources in Oracle Cloud within your tenant. Tenant is synonymous with company or organization. Typically, a company has one tenant and reflects the company’s organizational structure within that tenant. A tenant is usually associated with a subscription, and a subscription usually has only one tenant.

Oracle Blockchain Platform

Oracle Blockchain Platform is a managed blockchain service that provides a tamper-proof distributed ledger to record the issuance (minting) of NFTs, maintains the NFT transaction history, and provides the infrastructure for running the smart contracts that handle NFT transactions. It is a pre-installed permissioned platform based on Hyperledger Fabric that can run independently or as part of a network of validating nodes (peer nodes). These nodes update the ledger and respond to queries by running smart contract code (business logic that runs on the blockchain).

External applications invoke transactions or run queries through client SDK or REST API calls, which prompt selected peers to run smart contracts, such as the ERC-721 contracts generated and deployed in the development section. Multiple peers endorse (digitally sign) the results, which are then verified and sent to the ordering service. After consensus is reached on the order of transactions, transaction results are grouped into cryptographically secure, tamper-proof blocks and sent to peer nodes for verification and appending to the ledger.

With Oracle Blockchain Platform, you go through a few simple instance creation steps, and Oracle takes care of service management, patching, monitoring, and other service lifecycle tasks. Service administrators can use the Oracle Blockchain Platform web console or its REST API to configure the blockchain and monitor its operation. See the Browse More section for details.

Oracle Content Management (OCM)

Oracle Content Management provides marketers, developers and business leaders with a powerful content management system based on an API-friendly platform. It provides the security and efficiency to create, manage, store and deliver digital assets and websites that scale to meet your growing business needs and complexity.

The platform provided by OCM uses a hierarchical structure of items and folders, including a repository of content items and their indexable metadata attributes. It also provides plugins for managing collections, compilations, and optional review and approval workflows prior to publishing NFTs. OCM provides sites that can be used to create custom NFT creation sites.


Text

Keep Up with the Game: Live Score XML Feed for Sports Enthusiasts

As technology continues to evolve, the world of sports is not left behind. Sports enthusiasts now have access to a wide range of tools and resources to enhance their experience. One of these tools is the use of sports APIs. In this blog post, we'll be discussing Football APIs for developers, NFL Fantasy Football API, and Live Score XML Feed.

Football API for Developers

Football API is a tool that provides developers with access to a wide range of football-related data. Football APIs provide access to data such as live scores, team information, fixtures, news, and statistics. The data provided by Football APIs can be used to build various football-related applications, including mobile applications, websites, and software.

Football APIs for developers are available in various formats, including RESTful APIs and SOAP APIs. The APIs can be integrated into different programming languages, including Python, Java, and PHP. Some popular Football APIs for developers include Football-Data.org, Sportmonks, and OpenLigaDB.

Using Football APIs for developers provides several benefits. Developers can create football-related applications that provide users with real-time data and statistics about their favorite teams and players. Football APIs can also be used to develop betting applications, which provide users with real-time odds and betting data.

NFL Fantasy Football API

The NFL Fantasy Football API is a tool that provides developers with access to data related to fantasy football. Fantasy football is a game where participants create virtual teams of real NFL players and compete against each other based on the players' real-life performances.

The NFL Fantasy Football API provides developers with access to data such as player statistics, game schedules, and injury updates. Developers can use this data to create applications that provide users with real-time updates about their fantasy teams.

One of the most popular applications of the NFL Fantasy Football API is the development of fantasy football mobile applications. These applications provide users with real-time updates about their fantasy teams, including player performance, injury updates, and game schedules. Fantasy football mobile applications also allow users to make changes to their teams, including adding and dropping players.

Live Score XML Feed

Live Score XML feed is a tool that provides developers with access to real-time data related to sports games. The live score XML feed provides real-time updates about the scores of ongoing games, as well as other game-related information such as player statistics, game schedules, and injury updates.

Developers can use Live Score XML feeds to create various sports-related applications, including mobile applications, websites, and software. One of the most popular applications of Live Score XML feeds is the development of sports betting applications. These applications provide users with real-time odds and betting data, allowing them to make informed decisions about their bets.
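As a minimal sketch of consuming such a feed, the example below parses a small XML document with Python's standard library. The element and attribute names in the sample feed are invented for illustration; every provider publishes its own schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical feed layout, used only for this example.
SAMPLE_FEED = """<livescores>
  <match id="1001" status="live" minute="58">
    <home name="Team A" score="2"/>
    <away name="Team B" score="1"/>
  </match>
  <match id="1002" status="finished" minute="90">
    <home name="Team C" score="0"/>
    <away name="Team D" score="0"/>
  </match>
</livescores>"""


def parse_live_scores(xml_text: str) -> list:
    """Convert each <match> element into a plain dictionary."""
    root = ET.fromstring(xml_text)
    matches = []
    for match in root.findall("match"):
        home, away = match.find("home"), match.find("away")
        matches.append({
            "id": match.get("id"),
            "status": match.get("status"),
            "minute": int(match.get("minute")),
            "home": home.get("name"),
            "away": away.get("name"),
            "score": (int(home.get("score")), int(away.get("score"))),
        })
    return matches


live_now = [m for m in parse_live_scores(SAMPLE_FEED) if m["status"] == "live"]
```

For feeds from untrusted sources, a hardened parser is advisable, since the standard library XML modules are not secured against maliciously constructed documents.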

API Football Rankings

API Football Rankings provide developers with access to data related to the rankings of football teams. The API Football Rankings provide data on the rankings of football teams from various leagues, including the English Premier League, La Liga, and Serie A.
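To show what a ranking is computed from, the sketch below builds a league table from raw results. It assumes three points for a win and one for a draw, with ties broken by goal difference and then goals scored; actual tie-break rules vary by competition, and the function name is illustrative.

```python
from collections import defaultdict


def rank_teams(results):
    """Rank teams from (home, home_goals, away, away_goals) tuples."""
    table = defaultdict(lambda: {"points": 0, "gd": 0, "gf": 0})
    for home, home_goals, away, away_goals in results:
        table[home]["gf"] += home_goals
        table[away]["gf"] += away_goals
        table[home]["gd"] += home_goals - away_goals
        table[away]["gd"] += away_goals - home_goals
        if home_goals > away_goals:
            table[home]["points"] += 3
        elif home_goals < away_goals:
            table[away]["points"] += 3
        else:
            table[home]["points"] += 1
            table[away]["points"] += 1
    # Highest points first, then goal difference, then goals scored, then name.
    return sorted(
        table,
        key=lambda t: (-table[t]["points"], -table[t]["gd"], -table[t]["gf"], t),
    )


results = [("A", 2, "B", 0), ("B", 1, "C", 1), ("C", 3, "A", 1)]
ranking = rank_teams(results)
```

A rankings API typically returns this table precomputed, but recomputing it locally is a useful sanity check when combining data from more than one source.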

Developers can use API Football Rankings to create various football-related applications, including mobile applications, websites, and software. One of the most popular applications of API Football Rankings is the development of sports betting applications. These applications provide users with real-time odds and betting data based on the rankings of football teams.

In conclusion, Football APIs for developers, NFL Fantasy Football APIs, Live Score XML feeds, and API Football Rankings are powerful tools that provide developers with access to real-time data related to football. These tools can be used to create various football-related applications, including mobile applications, websites, and software. Developers can use these tools to provide users with real-time updates about their favorite teams and players, as well as to create betting applications that provide users with real-time odds and betting data.


Photo

The Screaming Frog SEO Spider has evolved a great deal over the past 8 years since launch, with many advancements, new features and a huge variety of different ways to configure a crawl.

This post covers some of the lesser-known and hidden-away features, that even more experienced users might not be aware exist. Or at least, how they can be best utilised to help improve auditing. Let’s get straight into it.

1) Export A List In The Same Order Uploaded

If you’ve uploaded a list of URLs into the SEO Spider, performed a crawl and want to export them in the same order they were uploaded, then use the ‘Export’ button which appears next to the ‘upload’ and ‘start’ buttons at the top of the user interface.

The standard export buttons on the dashboard will otherwise export URLs in order based upon what’s been crawled first, and how they have been normalised internally (which can appear quite random in a multi-threaded crawler that isn’t in usual breadth-first spider mode).

The data in the export will be in the exact same order and include all of the exact URLs in the original upload, including duplicates, normalisation or any fix-ups performed.

2) Crawl New URLs Discovered In Google Analytics & Search Console

If you connect to Google Analytics or Search Console via the API, by default any new URLs discovered are not automatically added to the queue and crawled. URLs are loaded, data is matched against URLs in the crawl, and any orphan URLs (URLs discovered only in GA or GSC) are available via the ‘Orphan Pages’ report export.

If you wish to add any URLs discovered automatically to the queue, crawl them and see them in the interface, simply enable the ‘Crawl New URLs Discovered in Google Analytics/Search Console’ configuration.

This is available under ‘Configuration > API Access’ and then either ‘Google Analytics’ or ‘Google Search Console’ and their respective ‘General’ tabs.

This will mean new URLs discovered will appear in the interface, and orphan pages will appear under the respective filter in the Analytics and Search Console tabs (after performing crawl analysis).

3) Switching to Database Storage Mode

The SEO Spider has traditionally used RAM to store data, which has enabled it to crawl lightning-fast and flexibly for virtually all machine specifications. However, it’s not very scalable for crawling large websites. That’s why early last year we introduced the first configurable hybrid storage engine, which enables the SEO Spider to crawl at truly unprecedented scale for any desktop application while retaining the same, familiar real-time reporting and usability.

So if you need to crawl millions of URLs using a desktop crawler, you really can. You don’t need to keep increasing RAM to do it either; switch to database storage instead. Users can select to save to disk by choosing ‘database storage mode’ within the interface (via ‘Configuration > System > Storage’).

This means the SEO Spider will hold as much data as possible within RAM (up to the user allocation), and store the rest to disk. We actually recommend this as the default setting for any users with an SSD (or faster drives), as it’s just as fast and uses much less RAM.

Please see our guide on how to crawl very large websites for more detail.

4) Request Google Analytics, Search Console & Link Data After A Crawl

If you’ve already performed a crawl and forgot to connect to Google Analytics, Search Console or an external link metrics provider, then fear not. You can connect to any of them post crawl, then click the beautifully hidden ‘Request API Data’ button at the bottom of the ‘API’ tab.

Alternatively, ‘Request API Data’ is also available in the ‘Configuration > API Access’ main menu.

This will mean data is pulled from the respective APIs and matched against the URLs that have already been crawled.

5) Disable HSTS To See ‘Real’ Redirect Status Codes

HTTP Strict Transport Security (HSTS) is a standard by which a web server can declare to a client that it should only be accessed via HTTPS. By default the SEO Spider will respect HSTS, and if it is declared by a server and an internal HTTP link is discovered during a crawl, a 307 status code will be reported with a status of “HSTS Policy” and redirect type of “HSTS Policy”. Reporting HSTS set-up is useful when auditing security, and the 307 response code provides an easy way to discover insecure links.

Unlike usual redirects, this redirect isn’t actually sent by the web server; it’s turned around internally (by a browser and the SEO Spider), which simply requests the HTTPS version instead of the HTTP URL (as all requests must be HTTPS). A 307 status code is reported however, as you must set an expiry for HSTS. This is why it’s a temporary redirect.

While HSTS declares that all requests should be made over HTTPS, a site wide HTTP -> HTTPS redirect is still needed. This is because the Strict-Transport-Security header is ignored unless it’s sent over HTTPS. So if the first visit to your site is not via HTTPS, you still need that initial redirect to HTTPS to deliver the Strict-Transport-Security header.

So if you’re auditing an HTTP to HTTPS migration which has HSTS enabled, you’ll want to check the underlying ‘real’ sitewide redirect status code in place (and find out whether it’s a 301 redirect). Therefore, you can choose to disable HSTS policy by unticking the ‘Respect HSTS Policy’ configuration under ‘Configuration > Spider > Advanced’ in the SEO Spider.

This means the SEO Spider will ignore HSTS completely and report upon the underlying redirects and status codes. You can switch back to respecting HSTS when you know they are all set-up correctly, and the SEO Spider will just request the secure versions of URLs again. Check out our SEOs guide to crawling HSTS.

6) Compare & Run Crawls Simultaneously

At the moment you can’t compare crawls directly in the SEO Spider. However, you are able to open up multiple instances of the software, and either run multiple crawls, or compare crawls at the same time.

On Windows, this is as simple as just opening the software again by the shortcut. For macOS, to open additional instances of the SEO Spider open a Terminal and type the following:

open -n /Applications/Screaming\ Frog\ SEO\ Spider.app/

You can now perform multiple crawls, or compare multiple crawls at the same time.

7) Crawl Any Web Forms, Logged In Areas & By-Pass Bot Protection

The SEO Spider has supported basic and digest standards-based authentication for a long time, which are often used for secure access to development servers and staging sites. However, the SEO Spider also has the ability to login to any web form that requires cookies, using its in-built Chromium browser.

This nifty feature can be found under ‘Configuration > Authentication > Forms Based’, where you can load virtually any password-protected website, intranet or web application, login and crawl it. For example you can login and crawl your precious fantasy football if you really wanted to ruin (or perhaps improve) your team.

This feature is super powerful because it provides a way to set cookies in the SEO Spider, so it can also be used for scenarios such as bypassing geo IP redirection, or if a site is using bot protection with reCAPTCHA or the like.

You can just load the page in the in-built browser, confirm you’re not a robot, and crawl away. If you load the page initially pre-crawling, you probably won’t even see a CAPTCHA, and will be issued the required cookies. Obviously you should have permission from the website as well.

However, with great power comes great responsibility, so please be careful with this feature.

During testing we let the SEO Spider loose on our test site while signed in as an ‘Administrator’ for fun. We let it crawl for half an hour; in that time it installed and set a new theme for the site, installed 108 plugins and activated 8 of them, deleted some posts, and generally made a mess of things.

With this in mind, please read our guide on crawling password protected websites responsibly.

8) Crawl (& Remove) URL Fragments Using JavaScript Rendering Mode

Occasionally it can be useful to crawl URLs with fragments (for example /page-name/#fragment) when auditing a website, and by default the SEO Spider will crawl them in JavaScript rendering mode.

You can see our FAQs which use them below.

While this can be helpful, the search engines will obviously ignore anything from the fragment and crawl and index the URL without it. Therefore, generally you may wish to switch this behaviour using the ‘Regex replace’ feature in URL Rewriting. Simply include a regex that matches the fragment (for example #.*) within the ‘regex’ field and leave the ‘replace’ field blank.

This will mean they will be crawled and indexed without fragments in the same way as the default HTML text only mode.

9) Utilise ‘Crawl Analysis’ For Link Score, More Data (& Insight)

While some of the features discussed above have been available for some time, the ‘crawl analysis’ feature was released more recently in version 10 at the end of September (2018).

The SEO Spider analyses and reports data at run-time, where metrics, tabs and filters are populated during a crawl. However, ‘link score’, which is an internal PageRank calculation, and a small number of filters require calculation at the end of a crawl (or when a crawl has been paused at least).

The full list of 13 items that require ‘crawl analysis’ can be seen under ‘Crawl Analysis > Configure’ in the top level menu of the SEO Spider, and viewed below.

All of the above are filters under their respective tabs, apart from ‘Link Score’, which is a metric and shown as a column in the ‘Internal’ tab.

In the right hand ‘overview’ window pane, filters which require post ‘crawl analysis’ are marked with ‘Crawl Analysis Required’ for further clarity. The ‘Sitemaps’ filters in particular mostly require post-crawl analysis.

They are also marked as ‘You need to perform crawl analysis for this tab to populate this filter’ within the main window pane.

This analysis can be automatically performed at the end of a crawl by ticking the respective ‘Auto Analyse At End of Crawl’ tickbox under ‘Configure’, or it can be run manually by the user.

To run the crawl analysis, simply click ‘Crawl Analysis > Start’.

When the crawl analysis is running you’ll see the ‘analysis’ progress bar with a percentage complete. The SEO Spider can continue to be used as normal during this period.

When the crawl analysis has finished, the empty filters which are marked with ‘Crawl Analysis Required’ will be populated with lots of lovely insightful data.

The ‘link score’ metric is displayed in the Internal tab and calculates the relative value of a page based upon its internal links.

This uses a relative 0-100 point scale from least to most value for simplicity, which allows you to determine where internal linking might be improved for key pages. It can be particularly powerful when utilised with other internal linking data, such as counts of inlinks, unique inlinks and % of links to a page (from across the website).

10) Saving HTML & Rendered HTML To Help Debugging

We occasionally receive support queries from users reporting a missing page title, description, canonical or on-page content that’s seemingly not being picked up by the SEO Spider, but can be seen to exist in a browser, and when viewing the HTML source.

Often this is assumed to be a bug of some kind, but most of the time it’s just down to the site responding differently to a request made from a browser rather than the SEO Spider, based upon the user-agent, accept-language header, whether cookies are accepted, or if the server is under load as examples.

Therefore an easy way to self-diagnose and investigate is to see exactly what the SEO Spider can see, by choosing to save the HTML returned by the server in the response.

By navigating to ‘Configuration > Spider > Advanced’ you can choose to store both the original HTML and rendered HTML to inspect the DOM (when in JavaScript rendering mode).

When a URL has been crawled, the exact HTML that was returned to the SEO Spider when it crawled the page can be viewed in the lower window ‘view source’ tab.

By viewing the returned HTML you can debug the issue, and then adjust with a different user-agent, or by accepting cookies etc. For example, you would see the missing page title, and then be able to identify the conditions under which it’s missing.

This feature is a really powerful way to diagnose issues quickly, and get a better understanding of what the SEO Spider is able to see and crawl.

11) Using Saved Configuration Profiles With The CLI

In the latest update, version 10 of the SEO Spider, we introduced the command line interface. The SEO Spider can be operated via command line, including launching, saving and exporting, and you can use --help to view the full arguments available.

However, not all configuration options are available, as there would be hundreds of arguments if you consider the full breadth available. So the trick is to use saved configuration profiles for more advanced scenarios.

Open up the SEO Spider GUI, select your options, whether that’s basic configurations, or more advanced features like custom search, extraction, and then save the configuration profile.

To save the configuration profile, click ‘File > Save As’ and adjust the file name (ideally to something descriptive!).

You can then supply the config argument to set your configuration profile for the command line crawl (and use in the future).

--config "C:\Users\Your Name\Crawls\super-awesome.seospiderconfig"

This really opens up the possibilities for utilising the SEO Spider via the command line.

What Have We Missed?

We’d love to hear any other little known features and configurations that you find helpful, and are often overlooked or just hidden away.

The post 11 Little-Known Features In The SEO Spider appeared first on Screaming Frog.

0 notes

Text

Fantasy API Integration Services For Your Fantasy Sports Mobile App Solution

“The man who has no creative energy has no wings” – is the new speculation of being a games lover.

There is no doubt that athletes and the sports they represent have become important to the spectators who enjoy watching them and want to be like them. In turn, we want to be part of the team, and even to build our own, like kids imagining a fantasy world that they rule. That is where the “Fantasy Sports API” comes in.

A fantasy sports API is built for that fantasy world and our dream games. Tracing its roots back to the 1980s, fantasy sports is the arena in which die-hard sports fans prove themselves. Here we build a dream league and fill it with real athletes.

Fantasy Sports API:

The Fantasy sports industry is ready and tastes to the dimension best, yet at the same time to the opposite.

The Fantasy Sports API provides URIs used to access fantasy sports data.

The Fantasy Premier League game has huge amounts of data available to help us make player picks for our team.

How does Fantasy Sports API work?

How it works is simple to understand.

We create our teams and let others join us in the league.

The contest plays out alongside a live match.

Contestants earn points based on how their picks perform in real live games, and whoever has the most points at the end of the season wins.
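The scoring idea above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `league_standings`, the two dictionaries, and the contestant names, player names and point values are all hypothetical, and real fantasy platforms apply their own scoring rules.

```python
def league_standings(picks, player_points):
    """Rank contestants by the total points their picked players earned.

    picks: dict mapping contestant name -> list of picked player names
    player_points: dict mapping player name -> points earned in real matches
    (Both structures are assumptions made for this sketch.)
    """
    totals = {}
    for contestant, players in picks.items():
        # A picked player with no recorded points contributes zero.
        totals[contestant] = sum(player_points.get(p, 0) for p in players)
    # Highest total first; ties are broken alphabetically for a stable order.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical picks and points, invented for the example.
picks = {"Asha": ["Kane", "Salah"], "Ben": ["Haaland", "Saka"]}
points = {"Kane": 9, "Salah": 12, "Haaland": 15, "Saka": 4}
standings = league_standings(picks, points)
winner = standings[0][0]  # the contestant with the most points
```

In practice the per-player points would come from whichever live data API the app integrates, and the totals would be accumulated over the whole season.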

Statistics:

In excess of 23 billion dynamic players all around. Around 57 million dream players in the USA and Canada.

Around 7 million users in India, with the number estimated to grow to 50 million in the next three years.

Eilers Research shows that daily games will generate nearly $2.3 billion in entry fees this year and continue to grow 41% every year, reaching a striking $12.8 billion by 2020.

The growth in fantasy app users in 2018 was 20 to 25%.

Some well-known Sports APIs:

There are plenty of Sports APIs to be found if you go searching. Some are limited to specific competitions like FIFA, some to a particular sport like cricket or football, and some cover a multi-sport event like the Olympics; either way, all of them serve fantasy sports projects.

(You may like to know: How much will it cost to develop a Fantasy Sports Mobile App like Dream11? )

Let’s dive into some of the fantasy APIs that are well known and among the top performers.

La Liga Live Score API (specifically designed to support the Spanish Football League, La Liga)

Premier League Live Score API (designed to update in real time to support the Premier League)

Fantasy Sports Platform API (data for all professional and major sports)

Sportsmonks Formula One API (designed for football, basketball, tennis, and soccer)

Entity Digital Sports Cricket (designed for cricket, soccer, and basketball)

Game ScoreKeeper Live API (data for football)

Panda Score API (data provider of e-sports)

Roanuz Cricket API (fetch real-time data for cricket)

Cric API (data for cricket)

Yahoo Fantasy Sports API (provide URIs used to access fantasy sports data)

How to integrate the Fantasy Sports API with Laravel?

There are certain steps to be followed to integrate fantasy API with Laravel.

1) Create an app.

Register the API.

To register Click

It will redirect to the homepage where you have to click on Console to get into API console page.

Click Apps and Click on Create New App button and provide the required details to create the app.

2) Choose the plan.

Select the API plan based on the requirement.

Click on Buy and continue with payment.

3) Generate the access token.

The access token is required to access every API. It needs to be regenerated every 24 hours from the time it was generated, or whenever an API response returns the Invalid Access Token error.

Call the Auth API using the curl command.

(For reference have a look into: https://www.cricketapi.com/docs/ )
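The 24-hour regeneration rule from step 3 can be handled with a small cache around whatever call fetches the token. The sketch below is in Python rather than Laravel and is deliberately provider-agnostic: `TokenCache` and the `fetch_token` callable are hypothetical names, and the actual Auth API request (URL, parameters, response shape) is left to the provider's documentation.

```python
import time


class TokenCache:
    """Caches an access token and regenerates it once it is too old.

    fetch_token is a caller-supplied function that performs the real
    Auth API request and returns the token string (an assumption of
    this sketch; no provider endpoint is hard-coded here).
    """

    def __init__(self, fetch_token, lifetime_seconds=24 * 60 * 60, clock=time.time):
        self._fetch_token = fetch_token
        self._lifetime = lifetime_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._token = None
        self._issued_at = None

    def get(self):
        """Return a valid token, regenerating it after the lifetime elapses."""
        expired = (
            self._token is None
            or self._clock() - self._issued_at >= self._lifetime
        )
        if expired:
            self.refresh()
        return self._token

    def refresh(self):
        """Force regeneration, e.g. after an Invalid Access Token error."""
        self._token = self._fetch_token()
        self._issued_at = self._clock()
        return self._token
```

A caller would use `get()` before each request and call `refresh()` once if a response reports Invalid Access Token, then retry the request.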

How to integrate Fantasy Sports API with MEAN Stack?

To integrate MEAN Stack with Fantasy API we have to follow certain steps.

Create a new app using Angular CLI.

Create a MongoDB instance.

Connect the MongoDB and the app server using Node.js driver.

Create a RESTful API server in Node.js and Express.

Implement the API endpoints.

(For reference have a look into: https://devcenter.heroku.com/articles/mean-apps-restful-api#set-up-the-angular-project-structure )

Yahoo with Fantasy Sports API

Yahoo! has offered two full draft and trade style fantasy football and baseball games: a free version and a Plus version (which contains more features and content). With the 2010 seasons, the Free and Plus versions of Football and Baseball have merged. The Yahoo! Fantasy Sports API uses the Yahoo! Query Language (YQL) as a mechanism to access Yahoo! Fantasy Sports data, returning data in XML and JSON formats.

(You may look into: https://developer.yahoo.com/fantasysports/guide/ )

Why choose Let’s Nurture for Fantasy Sports API Integration Services?

Let’s Nurture is a Leading Fantasy Sports App Development Company in India, USA, Canada, Australia, UK, and Singapore. We have a dedicated team of Laravel developers and MEAN Stack developers. Drawing on the expertise of our in-house web developers, Android developers, and iOS developers with cross-platform development capabilities, we have delivered fantasy sports solutions for sports lovers.

If you want to learn more about Sports API integration services or have any questions, kindly contact us or share details at [email protected].

#Fantasy Sports API Integration Services#LetsNurture#Fantasy Sports API#Fantasy Sports API with Laravel#integrate Fantasy Sports API with MEAN Stack#Laravel Developers#MEANStack Developers#Cricket#Football#Soccer#Volleyball#Olympics#FIFA#Sports#Sports App#Fantasy Sports App Development Company#India#Canada#USA#Australia#Kuwait#Singapore

0 notes

Text

Damn envision if Madden had

Honestly I'd play a Washington CFM if it let me choose the group name and Mut 21 coins logo at the start of the season, plus the roster is young so you have a lot of freedom to mould the team in your image. Imagine if your image is that of Native American tradition? Then right into you PR team to keep it, you have to pump your resource points.

I believe it ought to be up to Paradox to make this match a reality. Paradox would charge us for every little thing as extra dlc. Logo options for immersion? $9.99. Uniform options? Oh here's a big dlc, which offers colorful smoke paths to pitched footballs,"precision" passing, customizable team flags and a music pack for just $19.99! (music not included in cost, $3.99 for music dlc.)

True, but the game would be very good once you paid for expansions and all 200 DLCs. And the AI nevertheless pays $20MM APY deals following 50 patches for a certain reason to roster bubble gamers. Dependent on the WP post the game would definitely be developed by Neversoft. Damn envision if Madden had.

Listen give EA a rest we all know that they require a half a decade to put in capabilities. 5 decades give them and they will get on it. EA is similar to me , except. They need to press on discs and make the game playable day for men and women who don't download 1 patches. They will spot on day 1 in the Washington emblem. This is not news y’have no fucking clue how video games work. They are making the game playable for individuals with data caps, without internet, or that don't download 1 spots for some reason. This is a great thing.

They have to save it so they could list it as a"new feature" next year. Like how they listed"brand new playoff structure" for franchise mode this season when all they did was match the new NFL playoff fornat. That shouldn't even matter. It could be until October, there's no reason EA can not just update the match when the title releases... They updated very quickly whenever Favre unretired. Not the first time. I remember they were trying to cheap Madden 21 coins get him properly rated. After that they constantly had him set in case.

0 notes

Text

Favorite tweets

Would anyone be interested in a PowerShell module for viewing football data (standings, fixtures, etc)? Considering creating a module around an existing REST API as a new side project for 2020 =) pic.twitter.com/7yyfcGBbQ7

— Jan Egil Ring (@JanEgilRing) January 1, 2020

from http://twitter.com/JanEgilRing via IFTTT

0 notes

Text

Cost and Features of Fantasy Sports Software Development

Sports play an important role in people’s lives around the globe. Everyone has their own preference when it comes to sports: some like Cricket, whereas others like Basketball, Tennis, Soccer or Football. If you’re a sports lover looking for your own Fantasy Sports Software Development, then you’ve come to the right place!

Here, we’re going to tell you about everything that will help you in making your dream into a reality in the form of your own Fantasy Sports Website Development.

According to the latest study, the Fantasy Sports Market will reach US$26,400 million by 2025. This report gives an idea of the popularity of Fantasy Sports App and Website Development. Around 89% of users play Fantasy Sports in a month. As per Market Watch, America led the Fantasy Sports market with a 58% share in 2017, and Europe came second with a 14% share.

Given the high demand for Fantasy Sports Cricket Websites around the globe, developing a Fantasy Sports Website like Dream 11 is a smart investment.

Why Investing in a Fantasy Sports Software Development is a great idea?

Business Growth

From a business point of view, Fantasy Sports Software Development is worth investment as you can see instant growth in the market in just a couple of years.

Easy Funding

The fantasy Sports business is a billion-dollar business. You don’t need to struggle to explain your business idea to everyone as investors are aware of the rapid growth of Fantasy Sports Cricket Websites Like Dream 11.

You can gauge the growth of the Fantasy Sports business from the National Football League’s annual revenue in America, which is nearly $13 billion and is expected to grow to $25 billion in the coming years.

Endless Chances

From Cricket Series, Football Matches to Kabaddi Leagues, there are many sports leagues organized every year and the scope of your application to work is very high.

Less Competition

Since the idea of developing your own fantasy sports cricket or kabaddi website is new in the market, there is less competition in Fantasy Sports App Development like Dream11.

We can say that in the upcoming years the competition will be very high in the Fantasy Sports business as more and more people are planning to enter in the world of fantasy sports. So, if you’re serious about your investment in Fantasy Sports App Development then execute your plan as soon as possible. It will not only help you in developing a successful Fantasy Sports Website but also you will get time to grow your business in less time.

What are the Popular Features and Functionalities of Fantasy Sports Software?

Application User/ Contestant

Registration of New User/Login of Existing User:

Interested users can register with the Fantasy Sports Software using their phone number and email ID and set a password, whereas existing users can log in using their registered phone number, email ID, and password. New users can also add a referral code if they were referred by friends or family members.

Home Screen:

This is where users land after logging into the Fantasy Software App. They get the full details of sports here and can filter the search by sports type and match type. They can also see the match listing, which includes Tournament Name, Team 1 (with relevant images), Team 2 (with relevant images) and Match Schedule (Date & Time).

* Once the user has selected the match of his/her choice, then they will be redirected to the contest screen.

Contest:

On this page, users can access all contest details like filter by contest, Entry Fee Range, Contest Fee Range, Winning Amount, Winners Count and Total Team Count. After that, the user can choose a contest of his choice and can join the match.

Join the Contest:

In this way, the user can join the contest after paying the required fees.

Payment Options:

Users can pay their contest fees by using Debit Card/ Credit Card/ Payment Wallets/ or using referral cash or bonus points.

Create Your Contest:

With a single tap on their smartphone, users can easily create their own contests by adding the relevant details: Contest Name, Total Winning Amount, Contest Size, Allow Multiple Teams (Y/N), and Entry Fees. The contest creator must first join the contest they created, and can then invite friends or contacts to participate.
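As a rough illustration, the contest details listed above map naturally onto a small data model. The Python sketch below is an assumption rather than any particular product's schema: the class name `Contest`, the field names, and the validation rules are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Contest:
    """User-created contest, with the fields named in the feature list."""

    name: str
    total_winning_amount: float
    contest_size: int            # maximum number of entries
    allow_multiple_teams: bool   # the Y/N option
    entry_fee: float

    def __post_init__(self):
        # Basic sanity checks; real rules would depend on the platform.
        if not self.name.strip():
            raise ValueError("contest name must not be empty")
        if self.contest_size < 2:
            raise ValueError("a contest needs at least two entries")
        if self.total_winning_amount < 0 or self.entry_fee < 0:
            raise ValueError("amounts must not be negative")

    def total_entry_fees(self):
        """Fees collected if every slot in the contest is filled."""
        return self.contest_size * self.entry_fee


# Hypothetical contest, invented for the example.
weekend = Contest("Weekend League", 900.0, 10, True, 100.0)
```

Comparing `total_entry_fees()` with `total_winning_amount` gives a quick view of the margin an operator would keep on a full contest.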

My Contest Details:

Under this section, you can see the details of the participants who had joined the contest. Here users can edit their selected players and filter search by their categories like upcoming matches, live scores, and results.

Dashboard or Profile:

In this you will see your personal details, earned amounts, reward points; you can add card details for adding payment, Accounts details and can withdraw an amount that you have in your account.

Setting:

In the setting section, you can view other important features of the Fantasy Sports Website App such as:

Invite and Earn Reward Points

In this section, you can share the URL of the Fantasy Sports Software with your friends and contact lists. Whenever they will join the contest using your shared url you will earn reward points. Along with that, the user who joined the contest will receive some cash bonus in their wallet.

Admin User

Admin Logins:

In this section admin of the Fantasy Sports Application can log in to their account using the username and password.

The dashboard of the Fantasy Sports Software:

When the admin logs in to the app and clicks on the dashboard details, the admin gets match statistics, such as upcoming matches, ongoing matches, and played matches.

User Manager:

In this section, the admin can manage all user accounts like Edit/Add/Delete/Inactive Accounts/Active Accounts.

Games Categories Management:

In this section, the admin can manage all the details of the games.

Contest Management:

In this section, the admin will get control over contests like Add/Edit/Delete/Deactivate Match Contests.

Earnings:

Admin can view the earnings from these sections using all sorts of filters.

Management of Payment:

Here, the admin can view the various modes of payments of the application.

Reward Points Management:

In this section, all reward points offered to the users can be managed.

Cash Bonus Management:

In this section of Cash Bonus Management, admin can manage cash bonus offered to the users.

Report Management:

In this panel, the admin can generate contest reports, participant reports, match reports, earnings reports, player ranking reports, and others.

Bank Details Management:

In this section, the admin manages all requests from contestants to withdraw their winnings to their respective banks. The admin is authorized to accept or reject bank details, PAN card details and other details relevant to releasing the payment.

Which are the additional features/Functionalities of Fantasy Sports Cricket Website?

Live Match Score Board

Users will be able to see the latest match live scores, Expert Analysis and Match Highlights.

API Integration for Live Scoreboard

Live Scoreboard API integration gives you the most current data and lets developers integrate it easily into any platform.

Push Notifications

Push Notification in terms of alerts and notifications plays an important role. It informs users about upcoming matches and the right time of making the team.

Custom Mail Reminder System

This mail will be sent to targeted users who are not participating in the contests but registered. Also, it can be very helpful to inform existing users via mail about upcoming matches and contests.

Easy Payment Procedures

There are easy payment procedures where users can make payments in a simpler and hassle-free way. They can use Debit Card/Credit Card/ Payment Wallets and Net Banking.

After understanding the demands and features of Fantasy Sports Website, it’s really important to understand what’s the Cost of Developing a Fantasy Sports Website?

Cost of Developing a Fantasy Sports Website/Software

According to FSTA (Fantasy Sports Trade Association), the Fantasy Sports business is growing at a rapid pace and it is worth 10 billion worldwide.

Fantasy Sports Websites are growing year on year. If you’re looking to invest in a Fantasy Sports Website like Dream11, this is the right time to transform your dreams into reality. The cost of any software or website depends on its design, features, and functionalities, so it’s difficult to give an actual cost without knowing the client’s requirements.

Generally, the cost of developing Fantasy Sports software, websites and apps falls between USD 5000 and USD 9000. The cost can decrease or increase depending on the number of sports and additional features you select.

Final Note

The number of Fantasy Sports lovers is growing at a rapid pace around the globe, so it’s a smart investment for anyone to develop Fantasy Sports software like Dream 11.

If you have any doubts or questions related to Fantasy Sports Software Development, Features and Cost, feel free to book a free consultation at Synarion IT Solutions! We would love to hear from you all!

Originally published at Synarion IT Solutions on October 9, 2019.

#fantasysports#fantasysportssoftware#fantasysportsappdevelopment#fantasy sports website development#fantasysportsbusiness#fantasy soccer

0 notes

Text

Public Sports & Fitness APIs

★ balldontlie - Balldontlie provides access to stats data from the NBA
★ BikeWise - Bikewise is a place to learn about and report bike crashes, hazards and thefts
★ Canadian Football League (CFL) - Official JSON API providing real-time league, team and player statistics about the CFL
★ Cartola FC - The Cartola FC API serves to check the partial points of your team
★ City Bikes - City Bikes around the world
★ Cricket Live Scores - Live cricket scores
★ Ergast F1 - F1 data from the beginning of the world championships in 1950
★ Fitbit - Fitbit Information
★ Football (Soccer) Videos - Embed codes for goals and highlights from Premier League, Bundesliga, Serie A and many more
★ Football Prediction - Predictions for upcoming football matches, odds, results and stats
★ Football-Data.org - Football Data
★ JCDecaux Bike - JCDecaux's self-service bicycles
★ NBA Stats - Current and historical NBA Statistics
★ NFL Arrests - NFL Arrest Data
★ Pro Motocross - The RESTful AMA Pro Motocross lap times for every racer on the start gate
★ Strava - Connect with athletes, activities and more
★ SuredBits - Query sports data, including teams, players, games, scores and statistics
★ TheSportsDB - Crowd-Sourced Sports Data and Artwork
★ Wger - Workout manager data as exercises, muscles or equipment

0 notes

Link

via Screaming Frog

The Screaming Frog SEO Spider has evolved a great deal over the past 8 years since launch, with many advancements, new features and a huge variety of different ways to configure a crawl.

This post covers some of the lesser-known and hidden-away features, that even more experienced users might not be aware exist. Or at least, how they can be best utilised to help improve auditing. Let’s get straight into it.

1) Export A List In The Same Order Uploaded

If you’ve uploaded a list of URLs into the SEO Spider, performed a crawl and want to export them in the same order they were uploaded, then use the ‘Export’ button which appears next to the ‘upload’ and ‘start’ buttons at the top of the user interface.

The standard export buttons on the dashboard will otherwise export URLs in order based upon what’s been crawled first, and how they have been normalised internally (which can appear quite random in a multi-threaded crawler that isn’t in usual breadth-first spider mode).

The data in the export will be in the exact same order and include all of the exact URLs in the original upload, including duplicates, normalisation or any fix-ups performed.

2) Crawl New URLs Discovered In Google Analytics & Search Console

If you connect to Google Analytics or Search Console via the API, by default any new URLs discovered are not automatically added to the queue and crawled. URLs are loaded, data is matched against URLs in the crawl, and any orphan URLs (URLs discovered only in GA or GSC) are available via the ‘Orphan Pages‘ report export.

If you wish to add any URLs discovered automatically to the queue, crawl them and see them in the interface, simply enable the ‘Crawl New URLs Discovered in Google Analytics/Search Console’ configuration.

This is available under ‘Configuration > API Access’ and then either ‘Google Analytics’ or ‘Google Search Console’ and their respective ‘General’ tabs.

This will mean new URLs discovered will appear in the interface, and orphan pages will appear under the respective filter in the Analytics and Search Console tabs (after performing crawl analysis).
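To make the idea of an orphan URL concrete, here is a minimal Python sketch of the matching described above. It is not how the SEO Spider works internally. The function names and example URLs are hypothetical, and it assumes you have exported the crawled URLs and the URLs reported by Google Analytics or Search Console as two plain lists.

```python
from urllib.parse import urldefrag


def normalise(url):
    # Drop surrounding whitespace and any #fragment so equivalent URLs compare equal.
    return urldefrag(url.strip()).url


def find_orphan_urls(crawled_urls, api_urls):
    """Return URLs reported by an API source that the crawl never reached."""
    crawled = {normalise(u) for u in crawled_urls}
    reported = {normalise(u) for u in api_urls}
    return sorted(reported - crawled)


# Hypothetical example data.
crawled = ["https://example.com/", "https://example.com/about/"]
from_ga = ["https://example.com/about/", "https://example.com/old-landing-page/"]

print(find_orphan_urls(crawled, from_ga))
```

An orphan here is simply a set difference: anything the API source knows about that was not found by following links.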

3) Switching to Database Storage Mode

The SEO Spider has traditionally used RAM to store data, which has enabled it to crawl lightning-fast and flexibly for virtually all machine specifications. However, it’s not very scalable for crawling large websites. That’s why early last year we introduced the first configurable hybrid storage engine, which enables the SEO Spider to crawl at truly unprecedented scale for any desktop application while retaining the same, familiar real-time reporting and usability.

So if you need to crawl millions of URLs using a desktop crawler, you really can. You don’t need to keep increasing RAM to do it either; switch to database storage instead. Users can select to save to disk by choosing ‘database storage mode’ within the interface (via ‘Configuration > System > Storage’).

This means the SEO Spider will hold as much data as possible within RAM (up to the user allocation), and store the rest to disk. We actually recommend this as the default setting for any users with an SSD (or faster drives), as it’s just as fast and uses much less RAM.

Please see our guide on how to crawl very large websites for more detail.

4) Request Google Analytics, Search Console & Link Data After A Crawl

If you’ve already performed a crawl and forgot to connect to Google Analytics, Search Console or an external link metrics provider, then fear not. You can connect to any of them post crawl, then click the beautifully hidden ‘Request API Data’ button at the bottom of the ‘API’ tab.

Alternatively, ‘Request API Data’ is also available in the ‘Configuration > API Access’ main menu.

This will mean data is pulled from the respective APIs and matched against the URLs that have already been crawled.

5) Disable HSTS To See ‘Real’ Redirect Status Codes

HTTP Strict Transport Security (HSTS) is a standard by which a web server can declare to a client that it should only be accessed via HTTPS. By default the SEO Spider will respect HSTS: if a server declares it and an internal HTTP link is discovered during a crawl, a 307 status code will be reported with a status of “HSTS Policy” and redirect type of “HSTS Policy”. Reporting HSTS set-up is useful when auditing security, and the 307 response code provides an easy way to discover insecure links.

Unlike usual redirects, this redirect isn’t actually sent by the web server. It’s turned around internally (by a browser and the SEO Spider), which simply requests the HTTPS version instead of the HTTP URL (as all requests must be HTTPS). A 307 status code is reported, however, because you must set an expiry for HSTS, which is why it’s treated as a temporary redirect.

While HSTS declares that all requests should be made over HTTPS, a site wide HTTP -> HTTPS redirect is still needed. This is because the Strict-Transport-Security header is ignored unless it’s sent over HTTPS. So if the first visit to your site is not via HTTPS, you still need that initial redirect to HTTPS to deliver the Strict-Transport-Security header.

So if you’re auditing an HTTP to HTTPS migration which has HSTS enabled, you’ll want to check the underlying ‘real’ sitewide redirect status code in place (and find out whether it’s a 301 redirect). Therefore, you can choose to disable HSTS policy by unticking the ‘Respect HSTS Policy’ configuration under ‘Configuration > Spider > Advanced’ in the SEO Spider.

This means the SEO Spider will ignore HSTS completely and report upon the underlying redirects and status codes. You can switch back to respecting HSTS when you know they are all set-up correctly, and the SEO Spider will just request the secure versions of URLs again. Check out our SEOs guide to crawling HSTS.
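If you want to sanity-check the two pieces discussed above outside the SEO Spider, a rough Python sketch follows. It is an illustration under stated assumptions, not part of the tool: the helper names `parse_hsts` and `real_redirect_status` are hypothetical. The first parses a Strict-Transport-Security header value; the second asks the server for the plain HTTP URL without following redirects, so you see the status code the server really sends.

```python
import http.client


def parse_hsts(header_value):
    """Parse a Strict-Transport-Security header value into a small dict."""
    policy = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()
        if name == "max-age":
            # max-age is the expiry (in seconds) that every HSTS policy must set.
            policy["max_age"] = int(value.strip().strip('"'))
        elif name == "includesubdomains":
            policy["include_subdomains"] = True
        elif name == "preload":
            policy["preload"] = True
    return policy


def real_redirect_status(host, path="/"):
    """Request http://host/path once; http.client does not follow redirects."""
    conn = http.client.HTTPConnection(host, timeout=10)
    try:
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


example = parse_hsts("max-age=31536000; includeSubDomains")
print(example)
```

Calling `real_redirect_status("example.com")` against your own site (it needs network access, so it is not run here) should return a 301 and an HTTPS `Location` if the sitewide redirect is set up as a permanent redirect.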

6) Compare & Run Crawls Simultaneously

At the moment you can’t compare crawls directly in the SEO Spider. However, you are able to open up multiple instances of the software, and either run multiple crawls, or compare crawls at the same time.

On Windows, this is as simple as just opening the software again by the shortcut. For macOS, to open additional instances of the SEO Spider open a Terminal and type the following:

open -n /Applications/Screaming\ Frog\ SEO\ Spider.app/

You can now perform multiple crawls, or compare multiple crawls at the same time.

7) Crawl Any Web Forms, Logged In Areas & By-Pass Bot Protection

The SEO Spider has supported basic and digest standards-based authentication for a long time, which are often used for secure access to development servers and staging sites. However, the SEO Spider also has the ability to log in to any web form that requires cookies, using its in-built Chromium browser.

This nifty feature can be found under ‘Configuration > Authentication > Forms Based’, where you can load virtually any password-protected website, intranet or web application, log in and crawl it. For example, you can log in and crawl your precious fantasy football if you really wanted to ruin (or perhaps improve) your team.

This feature is super powerful because it provides a way to set cookies in the SEO Spider, so it can also be used for scenarios such as bypassing geo IP redirection, or if a site is using bot protection with reCAPTCHA or the like.

You can just load the page in the in-built browser, confirm you’re not a robot – and crawl away. If you load the page initially pre-crawling, you probably won’t even see a CAPTCHA, and will be issued the required cookies. Obviously you should have permission from the website as well.

However, with great power comes great responsibility, so please be careful with this feature.

During testing we let the SEO Spider loose on our test site while signed in as an ‘Administrator’ for fun. We let it crawl for half an hour; in that time it installed and set a new theme for the site, installed 108 plugins and activated 8 of them, deleted some posts, and generally made a mess of things.

With this in mind, please read our guide on crawling password protected websites responsibly.

8) Crawl (& Remove) URL Fragments Using JavaScript Rendering Mode

Occasionally it can be useful to crawl URLs with fragments (/page-name/#this-is-a-fragment) when auditing a website, and by default the SEO Spider will crawl them in JavaScript rendering mode.

You can see our FAQs which use them below.

While this can be helpful, the search engines will obviously ignore anything from the fragment and crawl and index the URL without it. Therefore, generally you may wish to switch this behaviour using the ‘Regex replace’ feature in URL Rewriting. Simply include #.* within the ‘regex’ field and leave the ‘replace’ field blank.

This will mean they will be crawled and indexed without fragments in the same way as the default HTML text only mode.
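To see what that rewrite rule does to a URL, here is a small Python sketch of the same idea. The SEO Spider applies its own regex engine, so treat this only as an illustration; the function name and example URLs are hypothetical.

```python
import re

# The same pattern as the 'regex' field: a '#' followed by everything after it.
FRAGMENT = re.compile(r"#.*")


def strip_fragment(url):
    # An empty replacement mirrors leaving the 'replace' field blank.
    return FRAGMENT.sub("", url)


print(strip_fragment("https://example.com/page-name/#this-is-a-fragment"))
print(strip_fragment("https://example.com/page-name/"))
```

Both calls print https://example.com/page-name/, so URLs that differ only by fragment collapse to a single URL.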

9) Utilise ‘Crawl Analysis’ For Link Score, More Data (& Insight)

While some of the features discussed above have been available for some time, the ‘crawl analysis‘ feature was released more recently in version 10 at the end of September (2018).

The SEO Spider analyses and reports data at run-time, where metrics, tabs and filters are populated during a crawl. However, ‘link score’, which is an internal PageRank calculation, and a small number of filters require calculation at the end of a crawl (or at least when a crawl has been paused).

The full list of 13 items that require ‘crawl analysis’ can be seen under ‘Crawl Analysis > Configure’ in the top level menu of the SEO Spider, and viewed below.

All of the above are filters under their respective tabs, apart from ‘Link Score’, which is a metric and shown as a column in the ‘Internal’ tab.

In the right hand ‘overview’ window pane, filters which require post ‘crawl analysis’ are marked with ‘Crawl Analysis Required’ for further clarity. The ‘Sitemaps’ filters in particular mostly require post-crawl analysis.

They are also marked as ‘You need to perform crawl analysis for this tab to populate this filter’ within the main window pane.

This analysis can be automatically performed at the end of a crawl by ticking the respective ‘Auto Analyse At End of Crawl’ tickbox under ‘Configure’, or it can be run manually by the user.

To run the crawl analysis, simply click ‘Crawl Analysis > Start’.

When the crawl analysis is running you’ll see the ‘analysis’ progress bar with a percentage complete. The SEO Spider can continue to be used as normal during this period.

When the crawl analysis has finished, the empty filters which are marked with ‘Crawl Analysis Required’ will be populated with lots of lovely insightful data.

The ‘link score’ metric is displayed in the Internal tab and calculates the relative value of a page based upon its internal links.

This uses a relative 0-100 point scale from least to most value for simplicity, which allows you to determine where internal linking might be improved for key pages. It can be particularly powerful when utilised with other internal linking data, such as counts of inlinks, unique inlinks and % of links to a page (from across the website).
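For readers unfamiliar with PageRank-style calculations, the sketch below shows the general idea in Python. It is emphatically not the SEO Spider's actual link score formula, which isn't described here. The damping factor, the iteration count, the scaling relative to the top-scoring page, and the example site are all assumptions made for illustration.

```python
def link_scores(links, damping=0.85, iterations=50):
    """links maps each page to the list of internal pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    rank = {page: 1.0 / len(pages) for page in pages}
    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / len(pages) for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its value on equally through its outlinks.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outlinks spreads its value across the site.
                share = damping * rank[page] / len(pages)
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    top = max(rank.values())
    # Assumed scaling: express each page relative to the strongest page.
    return {page: round(100 * value / top, 1) for page, value in rank.items()}


# Hypothetical four-page site.
site = {
    "/": ["/products/", "/about/"],
    "/products/": ["/"],
    "/about/": ["/"],
    "/blog/old-post/": ["/"],
}
scores = link_scores(site)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(page, score)
```

In this toy site the homepage scores highest because every other page links to it, while the blog post, which nothing links to, scores lowest. That is the kind of page where internal linking might be improved.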

10) Saving HTML & Rendered HTML To Help Debugging

We occasionally receive support queries from users reporting a missing page title, description, canonical or on-page content that’s seemingly not being picked up by the SEO Spider, but can be seen to exist in a browser, and when viewing the HTML source.

Often this is assumed to be a bug of some kind, but most of the time it’s just down to the site responding differently to a request made from a browser rather than the SEO Spider, based upon, for example, the user-agent, the accept-language header, whether cookies are accepted, or whether the server is under load.

Therefore an easy way to self-diagnose and investigate is to see exactly what the SEO Spider can see, by choosing to save the HTML returned by the server in the response.

By navigating to ‘Configuration > Spider > Advanced’ you can choose to store both the original HTML and rendered HTML to inspect the DOM (when in JavaScript rendering mode).

When a URL has been crawled, the exact HTML that was returned to the SEO Spider when it crawled the page can be viewed in the lower window ‘view source’ tab.

By viewing the returned HTML you can debug the issue, and then adjust the crawl by using a different user-agent, accepting cookies and so on. For example, you would see the missing page title, and then be able to identify the conditions under which it’s missing.

This feature is a really powerful way to diagnose issues quickly, and get a better understanding of what the SEO Spider is able to see and crawl.
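Once you have the stored HTML, a quick script can confirm which elements are missing compared with what a browser received. The Python sketch below uses only the standard library. The class and function names and both HTML snippets are hypothetical, and it assumes you have the two versions of the page available as strings.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Collect the title, meta description and canonical from an HTML document."""

    def __init__(self):
        super().__init__()
        self.title = None
        self.description = None
        self.canonical = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
            self.title = ""
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    parser = HeadAudit()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title,
        "description": parser.description,
        "canonical": parser.canonical,
    }


def differences(browser_html, spider_html):
    seen_by_browser, seen_by_spider = audit(browser_html), audit(spider_html)
    return {
        key: (seen_by_browser[key], seen_by_spider[key])
        for key in seen_by_browser
        if seen_by_browser[key] != seen_by_spider[key]
    }


# Hypothetical responses: the crawler was served a cookie wall instead of the page.
browser_html = (
    "<html><head><title>Blue Widgets</title>"
    '<link rel="canonical" href="https://example.com/widgets/">'
    "</head><body></body></html>"
)
spider_html = "<html><head></head><body>Please enable cookies</body></html>"

print(differences(browser_html, spider_html))
```

Any key that appears in the result is an element the two responses disagree on, which points you towards the request condition (user-agent, cookies and so on) worth changing.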

11) Using Saved Configuration Profiles With The CLI

In the latest update, version 10 of the SEO Spider, we introduced the command line interface. The SEO Spider can be operated via command line, including launching, saving and exporting, and you can use --help to view the full arguments available.