#Software Configuration Management Tools

Text

In this episode, we explore the importance of Software Configuration Management tools for developers and teams. We also highlight how Impressico Business Solutions helps businesses streamline their development process, ensuring stability, collaboration, and efficiency. Tune in to learn more about SCM tools and automation!

#Software Configuration Management Tools#Configuration Management Tools#Software Configuration#Configuration Management

0 notes

Text

The Top 5 Software Configuration Management Tools of 2025: Powering the Future of IT Infrastructure

In today’s fast-paced, ever-evolving digital landscape, businesses are scaling at an unprecedented pace. Netflix, one of the leading streaming giants, is a perfect example of this transformation. It evolved from a DVD rental service to streaming billions of hours of content globally, providing viewers with seamless access across various devices. But how did Netflix manage this rapid growth and ensure consistency across its vast software infrastructure? The answer lies in Software Configuration Management (SCM) tools—an essential component of modern software development.

SCM tools ensure that the development process runs smoothly by tracking and controlling changes in software code and configurations. They provide a systematic approach to managing the software lifecycle, from development to deployment, ensuring that all systems work in harmony. This crucial "quality control" allows businesses like Netflix to scale their operations while maintaining stability and minimizing disruptions.

As businesses continue to expand, having an effective SCM tool becomes essential to managing distributed systems, maintaining uptime, and ensuring consistent performance across the organization’s network. SCM tools play a pivotal role in helping organizations keep pace with the rapidly evolving tech landscape, enabling innovation while reducing the chaos typically associated with scaling projects. In this article, we explore the Top 5 Software Configuration Management Tools of 2025, offering businesses the tools to handle modern software challenges effectively.

What Are Software Configuration Management Tools?

Software Configuration Management (SCM) tools help development teams control and track changes in software code, configurations, and documents across multiple environments. These tools are designed to ensure consistency, automation, and security during the deployment process. With businesses increasingly adopting DevOps practices and continuous integration/continuous delivery (CI/CD) pipelines, SCM tools have become indispensable. They streamline the process of software development and ensure that changes are made efficiently without risking stability.

SCM tools help prevent errors that arise when different environments (e.g., production, testing, and development) have different configurations. They provide version control, change management, and rollback options, allowing developers to fix issues swiftly and avoid potential downtimes. Furthermore, SCM tools enable real-time updates, ensuring that systems are always running the most up-to-date configurations without compromising performance.
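The drift problem described above can be illustrated with a small comparison. The sketch below is a hypothetical Python example, not taken from any particular SCM product: `find_drift`, the `MISSING` marker, and the sample settings are all assumptions made for illustration.

```python
from typing import Any

# Marker used when a setting exists in one environment but not the other.
MISSING = "<missing>"


def find_drift(baseline: dict[str, Any], candidate: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return every setting whose value differs between two environments.

    Each differing key maps to a (baseline_value, candidate_value) pair.
    """
    drift: dict[str, tuple[Any, Any]] = {}
    for key in sorted(baseline.keys() | candidate.keys()):
        left = baseline.get(key, MISSING)
        right = candidate.get(key, MISSING)
        if left != right:
            drift[key] = (left, right)
    return drift


# Illustrative settings only; real environments would load these from files or an API.
production = {"db_pool_size": 50, "tls": True, "log_level": "warn"}
testing = {"db_pool_size": 10, "tls": True, "debug_toolbar": True}

for name, (prod_value, test_value) in find_drift(production, testing).items():
    print(f"{name}: production={prod_value!r} testing={test_value!r}")
```

A real tool would typically go further and record who changed what and when, but even a plain diff like this shows the kind of mismatch that causes "works in testing, fails in production" errors.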

Why Are SCM Tools Crucial for 2025?

The rise of cloud computing, microservices, and DevOps has dramatically changed the way businesses approach software development. Enterprises are dealing with complex, distributed systems that require meticulous configuration management. This complexity makes it crucial for teams to have powerful SCM tools at their disposal. These tools:

Automate deployment and monitoring: SCM tools help automate system updates, configuration changes, and compliance monitoring, reducing manual intervention and the potential for human error.

Ensure scalability: As businesses scale, SCM tools allow for seamless adjustments across hundreds or thousands of servers and microservices, ensuring that systems are configured correctly across all platforms.

Support real-time configuration changes: These tools make it possible to manage and deploy real-time updates without causing disruptions, helping to maintain uptime and service availability even during major updates.

The Top 5 Software Configuration Management Tools of 2025

Let’s dive into the top 5 SCM tools that businesses will rely on in 2025 to handle their evolving infrastructure needs.

1. Auvik

Auvik is making a splash in the SCM world, thanks to its easy-to-use interface and powerful automation capabilities. It offers real-time network visibility, monitoring, and network configuration management. Auvik helps businesses streamline their network operations by automating network mapping, performance monitoring, and configuration backups. It provides real-time alerts for potential issues, empowering businesses to proactively manage their networks.

Key Features:

Automated network discovery and real-time mapping

Network performance monitoring and configuration backup

Seamless integration with various IT management tools

Why We Chose Auvik: Auvik is designed to simplify the complex task of managing large, distributed networks. With its automated capabilities and easy integration, Auvik offers real-time control and monitoring to ensure that networks are running efficiently and securely.

2. Puppet

Puppet is a well-established leader in the field of infrastructure automation and configuration management. It helps businesses automate the entire lifecycle of their infrastructure by managing resources across both cloud and on-premise environments. Puppet simplifies the deployment process, reduces operational costs, and ensures consistency by allowing users to define and enforce system configurations as code.

Key Features:

Infrastructure as code (IaC) for consistent, repeatable deployments

Integration with major cloud providers like AWS, Azure, and Google Cloud

Automated system provisioning, compliance management, and configuration enforcement

Why We Chose Puppet: Puppet is particularly effective for organizations that need to scale their operations while maintaining control over complex infrastructures. Its robust ecosystem and enterprise support options make it ideal for businesses seeking a flexible and scalable solution.
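To make the idea of defining and enforcing configurations as code more concrete, here is a minimal Python sketch of desired-state convergence. It is not Puppet's language or API; `plan_changes`, `apply_changes`, and the sample settings are hypothetical names chosen for illustration.

```python
from typing import Any


def plan_changes(desired: dict[str, Any], actual: dict[str, Any]) -> list[tuple[str, str, Any]]:
    """Compare desired state with actual state and list the changes needed."""
    changes: list[tuple[str, str, Any]] = []
    for name, value in desired.items():
        if name not in actual:
            changes.append(("create", name, value))
        elif actual[name] != value:
            changes.append(("update", name, value))
    return changes


def apply_changes(actual: dict[str, Any], changes: list[tuple[str, str, Any]]) -> dict[str, Any]:
    """Return a new state with the planned changes applied (the input is not mutated)."""
    result = dict(actual)
    for _action, name, value in changes:
        result[name] = value
    return result


# Illustrative desired state, standing in for a declarative manifest.
desired_state = {"ntp_server": "time.example.org", "ssh_root_login": False}
server_state = {"ssh_root_login": True}

plan = plan_changes(desired_state, server_state)
server_state = apply_changes(server_state, plan)

# A second run finds nothing to do, which is what makes enforcement repeatable.
second_plan = plan_changes(desired_state, server_state)
print(plan)
print(second_plan)
```

The point of the second run is idempotence: once the system matches the declared state, enforcing it again should change nothing.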

3. CFEngine

CFEngine is a lightweight, agent-based configuration management tool known for its ability to scale efficiently, managing environments ranging from small devices to large-scale infrastructures. CFEngine’s core strength lies in its ability to continuously enforce configurations, manage patches, and automate compliance across an organization’s IT infrastructure.

Key Features:

Agent-based architecture for scalability

Continuous configuration enforcement and patch management

Open-source and commercial editions available

Why We Chose CFEngine: CFEngine is particularly well-suited for organizations with large-scale infrastructures. Its lightweight, scalable architecture ensures that businesses can automate their configuration management without sacrificing performance or security.

4. Chef by Progress

Chef is an infrastructure automation tool that enables organizations to manage and configure their software development and IT infrastructure at scale. It provides comprehensive tools for automating deployment, ensuring continuous compliance, and managing configurations. Chef is particularly powerful for automating infrastructure as code (IaC) and integrating with cloud platforms like AWS, Google Cloud, and Azure.

Key Features:

Automation of infrastructure provisioning and configuration management

Continuous compliance and security management

Integration with cloud platforms for a seamless experience

Why We Chose Chef: Chef is perfect for enterprises looking to automate complex infrastructures. Its extensive features and premium support options ensure that businesses can easily scale their operations while maintaining compliance and security.

5. SaltStack

SaltStack is an open-source SCM tool that excels in real-time infrastructure automation. Known for its event-driven automation and remote execution capabilities, SaltStack helps businesses quickly respond to changes in their IT environment. It provides powerful tools for configuration management, orchestration, and system monitoring.

Key Features:

Event-driven automation for real-time updates

Remote execution and orchestration capabilities

Integration with various platforms and cloud environments

Why We Chose SaltStack: SaltStack stands out for its ability to automate changes in real time, making it an excellent choice for businesses that require quick responses to infrastructure changes. Its flexibility and scalability make it an ideal choice for enterprises with complex IT environments.
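Event-driven automation, in general terms, means that handlers run in response to events rather than on a fixed schedule. The Python sketch below shows that pattern only; it is not SaltStack's API, and `EventBus`, the `service/stopped` tag, and the handler are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], str]


class EventBus:
    """A tiny in-process event bus: handlers register for a tag and run when it fires."""

    def __init__(self) -> None:
        self._handlers = defaultdict(list)

    def on(self, tag: str, handler: Handler) -> None:
        """Register a handler for events carrying this tag."""
        self._handlers[tag].append(handler)

    def fire(self, tag: str, data: dict[str, Any]) -> list[str]:
        """Run every handler registered for the tag and collect their results."""
        return [handler(data) for handler in self._handlers.get(tag, [])]


def restart_service(event: dict[str, Any]) -> str:
    # A real handler would call out to the host; this one only describes the action.
    return f"restart {event['service']} on {event['host']}"


bus = EventBus()
bus.on("service/stopped", restart_service)

actions = bus.fire("service/stopped", {"host": "web-01", "service": "nginx"})
ignored = bus.fire("disk/full", {"host": "web-01"})
print(actions)
print(ignored)
```

Events with no registered handler are simply ignored here; a production system would more likely log them.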

Conclusion: Choose the Right SCM Tool for Your Business

In 2025, businesses face a challenging environment where scalability, automation, and consistency are paramount. Software Configuration Management tools are no longer optional; they are essential for ensuring that businesses can manage and scale their software systems efficiently. Whether you're managing networks, automating cloud infrastructure, or ensuring continuous compliance, the right SCM tool will give you what you need to stay ahead of the competition.

By selecting an SCM tool that aligns with your business’s unique needs, you can streamline your operations, enhance security, and enable innovation without compromising on stability. Be sure to explore the features of each tool to determine which one is best suited for your specific environment.

0 notes

Text

Top 5 Software Configuration Management Tools Of 2022 - TechDogs

#Configuration Management Tools List#Top Software Configuration Management Tools#Software Configuration#Best Configuration Management Tools

0 notes

Text

For those who are not aware: Bitlocker is encryption software. It encrypts your computer and makes it impossible to access the information on the computer unless you have the key.

It should be standard practice for IT companies to document the bitlocker keys as they are configuring bitlocker on a computer; generally you would do this by creating a record in your client management software for that specific device and putting the key in the record. Sometimes software can be used to extract that information in the event that it's necessary, but even if there's theoretically a way to extract the key, it should be documented somewhere *other* than on the encrypted computer.

This is something that a lot of IT people fuck up on kind of a lot (we've definitely had problems with missing bitlocker keys and I'm quite happy that the people who didn't document those keys aren't my coworkers anymore).

So what do you do if you want to use encryption software and you're NOT an IT company using a remote management tool that might be able to snag the keys?

When you are setting up encryption, put the encryption key in your password manager. Put it in your password manager. Document the important information that you cannot lose in your password manager. Your password manager is a good place to keep important things like your device encryption key, which you do not want lost or stolen. (If you run your password manager locally on an encrypted computer, export the data every once in a while, save it as an encrypted file, and put the file on your backup drive; you are going to have a bad time if your computer that hosts the only copies of your passwords shits the bed so *make a backup*)

This is my tip for home users for any kind of important recovery codes or software product keys: Print out the key and put it in your underwear drawer. Keep it there with your backup drive. That way you've got your important (small) computer shit in one place that is NOT your computer and is not likely to get shifted around and lost (the way that papers in desks often get shifted around and lost).

2K notes

Text

Immigration and Customs Enforcement is paying software company Palantir $30 million to provide the agency with “near real-time visibility” on people self-deporting from the United States, according to a contract justification published in a federal register on Thursday. The tool would also help ICE choose who to deport, giving special priority to “visa overstays,” the document shows.

Palantir has been an ICE contractor since 2011, but the document published Thursday indicates that Palantir wants to provide brand-new capabilities to ICE. The agency currently does not have any publicly known tools for tracking self-deportation in near real-time. The agency does have a tool for tracking self-reported deportations, but Thursday’s document, which was first reported by Business Insider, does not say to what degree this new tool may rely on self-reported data. ICE also has “insufficient technology” to detect people overstaying their visas, according to the Department of Homeland Security. This is particularly due to challenges in collecting "biographic and biometric" data from departing travelers, especially if they leave over land, according to Customs and Border Protection.

The agency says in the document that these new capabilities will be under a wholly new platform called the Immigration Lifecycle Operating System, or ImmigrationOS. Palantir is expected to provide a prototype of ImmigrationOS by September 25, 2025, and the contract is scheduled to last at least through September 2027. ICE’s update to the contract comes as the Trump administration is demanding that thousands of immigrants “self-deport,” or leave the US voluntarily.

ICE and Palantir did not respond to requests for comment.

According to the document, ImmigrationOS is intended to have three core functions. Its “Targeting and Enforcement Prioritization” capability would streamline the “selection and apprehension operations of illegal aliens.” People prioritized for removal, ICE says, should be “violent criminals,” gang members, and “visa overstays.”

Its “Self-Deportation Tracking” function would have “near real-time visibility into instances of self-deporation,” the document says. The document does not say what data Palantir would use for such a system, but ICE says it aims to “accurately report metrics of alien departures from the United States.” The agency stipulates that this tool should also integrate with “enforcement prioritization systems to inform policy” but does not elaborate on these systems or policies.

Meanwhile, the “Immigration Lifecycle Process” function would streamline the “identification” of aliens and their “removal” from the United States, with the goal of making "deportation logistics” more efficient.

In a “rationale” section, ICE claims that it has an “urgent and compelling” need for ImmigrationOS’s capabilities. Without them, ICE claims, it would be “severely” limited in its ability to target the gangs MS-13 and Tren de Aragua, and abide by President Donald Trump’s executive order to expedite deportations.

Palantir, ICE claims, is “the only source that can provide the required capabilities and prototype of ImmogrationOS [sic] without causing unacceptable delays.” ICE says the company has developed “deep institutional knowledge of the agency’s operations over more than a decade of support.”

“No other vendor could meet these timeframes of having the infrastructure in place to meet this urgent requirement and deliver a prototype in less than six months,” ICE says in the document.

ICE’s document does not specify the data sources Palantir would pull from to power ImmigrationOS. However, it says that Palantir could “configure” the case management system that it has provided to ICE since 2014.

Palantir has done work at various other government agencies as early as 2007. Aside from ICE, it has worked with the US Army, Air Force, Navy, Internal Revenue Service, and Federal Bureau of Investigation. As reported by WIRED, Palantir is currently helping Elon Musk’s so-called Department of Government Efficiency (DOGE) build a brand-new “mega API” at the IRS that could search for records across all the different databases that the agency maintains.

Last week, 404 Media reported that a recent version of Palantir’s case-management system for ICE allows agents to search for people based on “hundreds of different, highly specific categories,” including how a person entered the country, their current legal status, and their country of origin. It also includes a person’s hair and eye color, whether they have scars or tattoos, and their license-plate reader data, which would provide detailed location data about where that person travels by car.

These functionalities have been mentioned in a government privacy assessment published in 2016, and it’s not clear what new information may have been integrated into the case management system over the past four years.

This week’s $30 million award is an addition to an existing Palantir contract penned in 2022, originally worth about $17 million, for work on ICE’s case management system. The agency has increased the value of the contract five times prior to this month; the largest was a $19 million increase in September 2023.

The contract’s ImmigrationOS update was first documented on April 11 in a government-run database tracking federal spending. The entry had a 248-character description of the change. The five-page document ICE published Thursday, meanwhile, has a more detailed description of Palantir’s expected services for the agency.

The contract update comes as the Trump administration deputizes ICE and other government agencies to drastically escalate the tactics and scale of deportations from the US. In recent weeks, immigration authorities have arrested and detained people with student visas and green cards, and deported at least 238 people to a brutal megaprison in El Salvador, some of whom have not been able to speak with a lawyer or have due process.

As part of its efforts to push people to self-deport, DHS in late March revoked the temporary parole of more than half a million people and demanded that they self-deport in about a month, despite having been granted authorization to live in the US after fleeing dangerous or unstable situations in Cuba, Haiti, Nicaragua, and Venezuela under the so-called “CHNV parole programs.”

Last week, the Social Security Administration listed more than 6,000 of these people as dead, a tactic meant to end their financial lives. DHS, meanwhile, sent emails to an unknown number of people declaring that their parole had been revoked and demanding that they self-deport. Several US citizens, including immigration attorneys, received the email.

On Monday, a federal judge temporarily blocked the Trump administration’s move to revoke people’s authorization to live in the US under the CHNV programs. White House spokesperson Karoline Leavitt called the judge’s ruling “rogue.”

5 notes

Text

What types of issues does technical support handle?

Technical Support Services

Technical support services play a vital role in keeping businesses and organizations running efficiently by resolving their IT-related problems. From minor glitches to major disruptions, technical support teams help ensure that systems stay up and running so that users can remain productive. But what exactly do they handle? Let's take a closer look at what they assist with.

Hardware and Device Issues:

Technical support teams assist with problems related to desktops, laptops, printers, scanners, and other hardware. This includes fixing hardware failures, replacing parts, configuring devices, and troubleshooting connectivity or performance issues. Whether it's a malfunctioning keyboard or a slow-loading workstation, technical support is there to help.

Software Installation and Troubleshooting:

From installing applications to resolving software errors and glitches, technical support handles a range of software-related concerns. They help with updating programs, fixing bugs, ensuring compatibility with the operating system, and restoring performance if an app crashes or freezes.

Network and Connectivity Problems:

A strong network is the backbone of modern businesses. Technical support staff handle internet issues, router configuration, VPN access, slow connection speeds, and network outages. Whether it's a minor Wi-Fi issue or a more significant server connectivity problem, the support team works to restore communication quickly.

Email and Communication Tools:

Email downtime can affect business continuity. The technical support team manages email configuration, syncing errors, spam filters, and login issues across multiple devices and platforms, including Outlook and Gmail. They also handle collaboration tools like Teams, Slack, or Zoom when they don't function as expected.

Security & Access Management:

Support professionals play an essential role in managing IT security measures. They reset passwords, manage user accounts, set up two-factor authentication, and address suspicious activity.

Personalized Help and Guidance:

From onboarding and setup for new users to answering tech-related questions, technical support ensures that employees use systems efficiently. Their goal is to minimize downtime and boost productivity. Companies like Suma Soft, IBM, Cyntexa, and Cignex offer dependable technical support services tailored to your specific business needs. Their expertise helps resolve issues faster and keeps your systems up and running at all times.

#it services#technology#saas#software#saas development company#saas technology#digital transformation

3 notes

Text

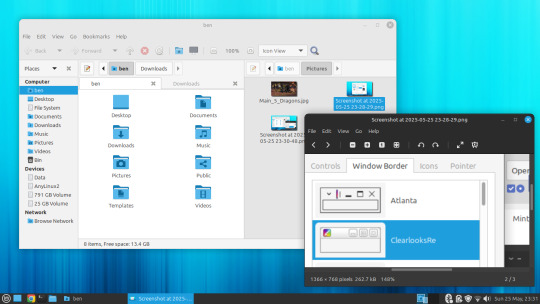

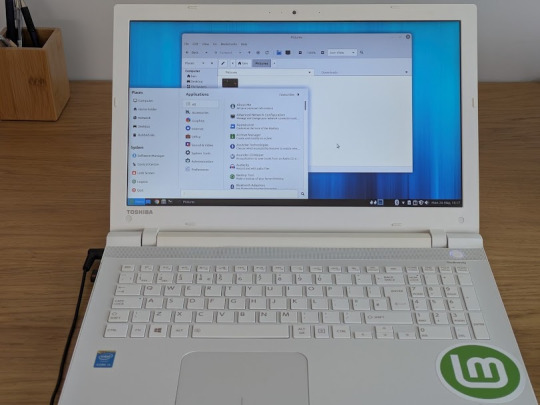

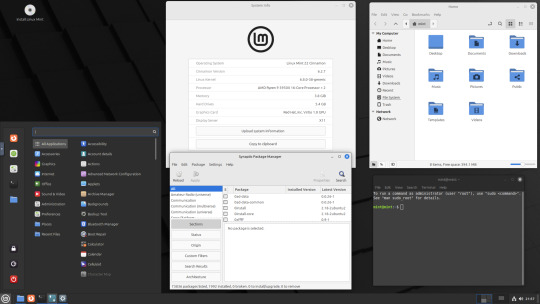

25.05.25

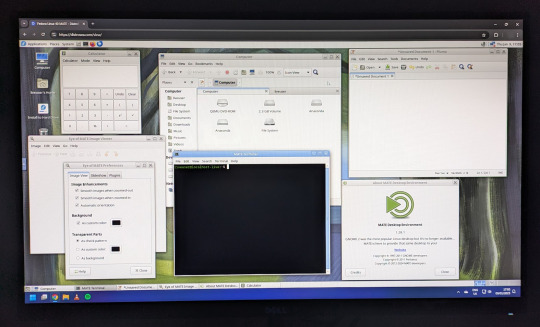

I installed Linux Mint MATE 22.1 today.

https://linuxmint.com/screenshots.php#

I had been testing it out on the Linux laptop and decided to install it in place of Debian 12. I kept my Mint Cinnamon install.

Once it had installed, which was very quick, I updated Mint using the updater tool and then installed Google Chrome, VLC Media Player, GIMP and a couple of other apps to the laptop.

Many of these programs I use on the Cinnamon install as well.

I then tweaked the panel by adding a workspace switcher and a set of eyes that follow the cursor!

I did a quick Neofetch in the command window, which shows detailed system information.

Like LM Cinnamon, it is very well supported out of the box and features modern Bluetooth set-up support, a clean user interface and an easy to set up experience.

The themes are all Linux Mint related window styles which look very modern. The MATE themes I had in Debian can be manually installed in Linux Mint if needed. They can be downloaded from the Software Manager or through the terminal.

This theme pack provides the same experience across the desktop whether you are using MATE or Cinnamon.

However I did come across a small bug where the widgets locked onto the panel moved after a restart. This is possibly a GTK related bug on Ubuntu versions with this desktop environment. It is easy to fix though by resetting the panel.

The bug only exists on newer MATE versions.

Linux Mint uses MATE desktop 1.26. However Debian also uses 1.26 which didn't have this bug. I don't know what's causing this in Mint. Hopefully this gets fixed in the next few releases!

The latest version of the MATE desktop is 1.28, released last year. Distros such as Ubuntu and Fedora use this as it is a more recent release.

Also, the MATE desktop is less animated compared with Cinnamon; however, it allows you to focus on tasks without distractions and feels very stable to use.

Underneath are some comparisons with the Cinnamon desktop.

I prefer Linux Mint with the Cinnamon desktop as it looks and feels more modern and is its flagship desktop distribution. It is also more powerful as users can add applets, themes and layout configurations through an in-system database which is regularly updated.

The file manager in MATE is called Caja and is based on GNOME 2's Nautilus, whilst on Cinnamon the file manager is called Nemo, which uses the newer GNOME file manager as a base.

A simple, but powerful desktop environment for Mint!

3 notes

Text

The Evolution of DJ Controllers: From Analog Beginnings to Intelligent Performance Systems

The DJ controller has undergone a remarkable transformation—what began as a basic interface for beat matching has now evolved into a powerful centerpiece of live performance technology. Over the years, the convergence of hardware precision, software intelligence, and real-time connectivity has redefined how DJs mix, manipulate, and present music to audiences.

For professional audio engineers and system designers, understanding this technological evolution is more than a history lesson—it's essential knowledge that informs how modern DJ systems are integrated into complex live environments. From early MIDI-based setups to today's AI-driven, all-in-one ecosystems, this blog explores the innovations that have shaped DJ controllers into the versatile tools they are today.

The Analog Foundation: Where It All Began

The roots of DJing lie in vinyl turntables and analog mixers. These setups emphasized feel, timing, and technique. There were no screens, no sync buttons—just rotary EQs, crossfaders, and the unmistakable tactile response of a needle on wax.

For audio engineers, these analog rigs meant clean signal paths and minimal processing latency. However, flexibility was limited, and transporting crates of vinyl to every gig was logistically demanding.

The Rise of MIDI and Digital Integration

The early 2000s brought the integration of MIDI controllers into DJ performance, marking a shift toward digital workflows. Devices like the Vestax VCI-100 and Hercules DJ Console enabled control over software like Traktor, Serato, and VirtualDJ. This introduced features such as beat syncing, cue points, and FX without losing physical interaction.

From an engineering perspective, this era introduced complexities such as USB data latency, audio driver configurations, and software-to-hardware mapping. However, it also opened the door to more compact, modular systems with immense creative potential.
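One part of the latency picture mentioned above can be estimated with simple arithmetic: the delay contributed by a single audio driver buffer is its size in frames divided by the sample rate. The Python sketch below works through that calculation; the function name and the buffer sizes are illustrative assumptions, and real round-trip latency also includes USB transfer, driver, and converter overhead that this does not model.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Latency contributed by one audio buffer, in milliseconds."""
    if buffer_frames <= 0 or sample_rate_hz <= 0:
        raise ValueError("buffer size and sample rate must be positive")
    return buffer_frames / sample_rate_hz * 1000.0


# Illustrative driver settings, not measurements from any specific controller.
for frames in (128, 256, 512):
    latency = buffer_latency_ms(frames, 48_000)
    print(f"{frames} frames at 48 kHz: {latency:.2f} ms")
```

Smaller buffers lower the delay but give the computer less time to fill each one, which is why driver configuration became a tuning exercise between responsiveness and dropout risk.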

Controllerism and Creative Freedom

Between 2010 and 2015, the concept of controllerism took hold. DJs began customizing their setups with multiple MIDI controllers, pad grids, FX units, and audio interfaces to create dynamic, live remix environments. Brands like Native Instruments, Akai, and Novation responded with feature-rich units that merged performance hardware with production workflows.

Technical advancements during this period included:

High-resolution jog wheels and pitch faders

Multi-deck software integration

RGB velocity-sensitive pads

Onboard audio interfaces with 24-bit output

HID protocol for tighter software-hardware response

These tools enabled a new breed of DJs to blur the lines between DJing, live production, and performance art—all requiring more advanced routing, monitoring, and latency optimization from audio engineers.

All-in-One Systems: Power Without the Laptop

As processors became more compact and efficient, DJ controllers began to include embedded CPUs, allowing them to function independently from computers. Products like the Pioneer XDJ-RX and Denon Prime 4 revolutionized the scene by delivering laptop-free performance with powerful internal architecture.

Key engineering features included:

Multi-core processing with low-latency audio paths

High-definition touch displays with waveform visualization

Dual USB and SD card support for redundancy

Built-in Wi-Fi and Ethernet for music streaming and cloud sync

Zone routing and balanced outputs for advanced venue integration

For engineers managing live venues or touring rigs, these systems offered fewer points of failure, reduced setup times, and greater reliability under high-demand conditions.

Embedded AI and Real-Time Stem Control

One of the most significant breakthroughs in recent years has been the integration of AI-driven tools. Systems now offer real-time stem separation, powered by machine learning models that can isolate vocals, drums, bass, or instruments on the fly. Solutions like Serato Stems and Engine DJ OS have embedded this functionality directly into hardware workflows.

This allows DJs to perform spontaneous remixes and mashups without needing pre-processed tracks. From a technical standpoint, it demands powerful onboard DSP or GPU acceleration and raises the bar for system bandwidth and real-time processing.

For engineers, this means preparing systems that can handle complex source isolation and downstream processing without signal degradation or sync loss.

Cloud Connectivity & Software Ecosystem Maturity

Today’s DJ controllers are not just performance tools—they are part of a broader ecosystem that includes cloud storage, mobile app control, and wireless synchronization. Platforms like rekordbox Cloud, Dropbox Sync, and Engine Cloud allow DJs to manage libraries remotely and update sets across devices instantly.

This shift benefits engineers and production teams in several ways:

Faster changeovers between performers using synced metadata

Simplified backline configurations with minimal drive swapping

Streamlined updates, firmware management, and analytics

Improved troubleshooting through centralized data logging

The era of USB sticks and manual track loading is giving way to seamless, cloud-based workflows that reduce risk and increase efficiency in high-pressure environments.

Hybrid & Modular Workflows: The Return of Customization

While all-in-one units dominate, many professional DJs are returning to hybrid setups—custom configurations that blend traditional turntables, modular FX units, MIDI controllers, and DAW integration. This modularity supports a more performance-oriented approach, especially in experimental and genre-pushing environments.

These setups often require:

MIDI-to-CV converters for synth and modular gear integration

Advanced routing and clock sync using tools like Ableton Link

OSC (Open Sound Control) communication for custom mapping

Expanded monitoring and cueing flexibility

This renewed complexity places greater demands on engineers, who must design systems that are flexible, fail-safe, and capable of supporting unconventional performance styles.

Looking Ahead: AI Mixing, Haptics & Gesture Control

As we look to the future, the next phase of DJ controllers is already taking shape. Innovations on the horizon include:

AI-assisted mixing that adapts in real time to crowd energy

Haptic feedback jog wheels that provide dynamic tactile response

Gesture-based FX triggering via infrared or wearable sensors

Augmented reality interfaces for 3D waveform manipulation

Deeper integration with lighting and visual systems through DMX and timecode sync

For engineers, this means staying ahead of emerging protocols and preparing venues for more immersive, synchronized, and responsive performances.

Final Thoughts

The modern DJ controller is no longer just a mixing tool—it's a self-contained creative engine, central to the live music experience. Understanding its capabilities and the technology driving it is critical for audio engineers who are expected to deliver seamless, high-impact performances in every environment.

Whether you’re building a club system, managing a tour rig, or outfitting a studio, choosing the right gear is key. Sourcing equipment from a trusted professional audio retailer—online or in-store—ensures not only access to cutting-edge products but also expert guidance, technical support, and long-term reliability.

As DJ technology continues to evolve, so too must the systems that support it. The future is fast, intelligent, and immersive—and it’s powered by the gear we choose today.

2 notes

·

View notes

Text

Discover how Software Configuration Management Tools and Infrastructure as Code (IaC) DevOps tools can transform your tech workflow. Join us as we delve into their benefits and hear from Impressico Business Solutions on enhancing efficiency and scalability. Tune in for insights and practical tips!

#Software Configuration Management Tools#Configuration Management Tools#Infrastructure as Code DevOps Tools#DevOps Automation Tools

0 notes

Text

Top 5 Software Configuration Management Tools Of 2024

The analogy between a heist movie like the Ocean’s series and software development is striking. Just as Danny Ocean’s crew of specialists executes intricate casino heists with precision, software development requires a team where each member plays a critical role. In both cases, communication and coordination are paramount to success. The Ocean's crew includes experts like the demolition specialist and the hacker, each responsible for a specific task. Similarly, in software development, every update and addition must be meticulously planned and executed, with all team members staying informed to avoid disastrous missteps. Just as Danny Ocean masterfully orchestrates heists, a skilled project manager ensures that software projects run smoothly.

However, unlike Danny Ocean, who might be too busy planning his next big score, modern software development teams have tools that make their jobs easier. These tools, specifically Software Configuration Management (SCM) systems, are crucial for teams working on large, complex projects. SCM tools help manage, modify, and deploy software resources across various IT environments. They ensure seamless collaboration, track configurations, and automate tasks, making the development process more efficient and less error-prone. Such tools are invaluable in today’s fast-paced development world, where teams must work in sync to deliver high-quality software quickly.

For those looking to enhance their software development workflows, the top SCM tools of 2024 include ConfigCat, Perforce Helix Core, Salt, Device42, and Octopus Deploy. These tools provide essential features like version control, automated deployments, and real-time updates, ensuring consistent configurations and streamlined processes. Whether for startups or large enterprises, these tools cater to various needs, offering robust security, scalability, and integration with popular development platforms. By adopting the right SCM tool, development teams can operate as efficiently as Danny Ocean’s crew, executing projects with precision and confidence.

Read More - https://www.techdogs.com/td-articles/product-mine/top-5-software-configuration-management-tools-of-2024

0 notes

Text

Leveraging XML Data Interface for IPTV EPG

This blog explores the significance of optimizing the XML Data Interface and XMLTV schedule EPG for IPTV. It emphasizes the importance of EPG in IPTV, preparation steps, installation, configuration, file updates, customization, error handling, and advanced tips.

The focus is on enhancing user experience, content delivery, and securing IPTV setups. The comprehensive guide aims to empower IPTV providers and tech enthusiasts to leverage the full potential of XMLTV and EPG technologies.

1. Overview of the Context:

The context focuses on the significance of optimizing the XML Data Interface and leveraging the latest XMLTV schedule EPG (Electronic Program Guide) for IPTV (Internet Protocol Television) providers. L&E Solutions emphasizes the importance of enhancing user experience and content delivery by effectively managing and distributing EPG information.

This guide delves into detailed steps on installing and configuring XMLTV to work with IPTV, automating XMLTV file updates, customizing EPG data, resolving common errors, and deploying advanced tips and tricks to maximize the utility of the system.

2. Key Themes and Details:

The Importance of EPG in IPTV: The EPG plays a vital role in enhancing viewer experience by providing a comprehensive overview of available content and facilitating easy navigation through channels and programs. It allows users to plan their viewing by showing detailed schedules of upcoming shows, episode descriptions, and broadcasting times.

Preparation: Gathering Necessary Resources: The article highlights the importance of gathering required software and hardware, such as XMLTV software, EPG management tools, reliable computer, internet connection, and additional utilities to ensure smooth setup and operation of XMLTV for IPTV.

Installing XMLTV: Detailed step-by-step instructions are provided for installing XMLTV on different operating systems, including Windows, Mac OS X, and Linux (Debian-based systems), ensuring efficient management and utilization of TV listings for IPTV setups.

Configuring XMLTV to Work with IPTV: The article emphasizes the correct configuration of M3U links and EPG URLs to seamlessly integrate XMLTV with IPTV systems, providing accurate and timely broadcasting information.
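As an illustration of the configuration step described above, the sketch below checks that every channel in a playlist has a matching entry in the guide. It assumes the playlist carries the commonly used `tvg-id` attribute on its `#EXTINF` lines and that the guide uses XMLTV `<channel id="...">` elements; the function names are hypothetical and not taken from the article.

```python
import re
import xml.etree.ElementTree as ET

# Assumption: channels are linked to the guide through a tvg-id attribute.
TVG_ID = re.compile(r'tvg-id="([^"]*)"')


def playlist_ids(m3u_text):
    """Collect the non-empty tvg-id values from the #EXTINF lines of an M3U playlist."""
    ids = []
    for line in m3u_text.splitlines():
        if line.startswith("#EXTINF"):
            match = TVG_ID.search(line)
            if match and match.group(1):
                ids.append(match.group(1))
    return ids


def guide_ids(xmltv_text):
    """Collect the channel ids declared in an XMLTV document."""
    root = ET.fromstring(xmltv_text)
    return {channel.get("id") for channel in root.findall("channel")}


def unmatched_channels(m3u_text, xmltv_text):
    """Return playlist channel ids that have no entry in the guide."""
    known = guide_ids(xmltv_text)
    return [cid for cid in playlist_ids(m3u_text) if cid not in known]
```

Any id this returns is a channel that would show up in the player without programme data.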

3. Customization and Automation:

Automating XMLTV File Updates: The importance of automating XMLTV file updates for maintaining an updated EPG is highlighted, with detailed instructions on using cron jobs and scheduled tasks.

Customizing Your EPG Data: The article explores advanced XMLTV configuration options and leveraging third-party services for enhanced EPG data to improve the viewer's experience.

Handling and Resolving Errors: Common issues related to XMLTV and IPTV systems are discussed, along with their solutions, and methods for debugging XMLTV output are outlined.

Advanced Tips and Tricks: The article provides advanced tips and tricks for optimizing EPG performance and securing IPTV setups, such as leveraging caching mechanisms, utilizing efficient data parsing tools, and securing authentication methods.
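The debugging and parsing points above can be sketched with a streaming check of an XMLTV file. This is a minimal example under stated assumptions: channels are declared before programmes (as the XMLTV layout normally has them), and timestamps carry an explicit UTC offset such as `20250101180000 +0000`. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import datetime


def parse_xmltv_time(value):
    """Parse an XMLTV timestamp that includes an explicit offset."""
    return datetime.strptime(value, "%Y%m%d%H%M%S %z")


def check_guide(source):
    """Stream through an XMLTV file or file object and return a list of problems found."""
    channels = set()
    problems = []
    for _event, elem in ET.iterparse(source, events=("end",)):
        if elem.tag == "channel":
            channels.add(elem.get("id"))
        elif elem.tag == "programme":
            channel = elem.get("channel")
            if channel not in channels:
                problems.append(f"unknown channel {channel!r}")
            try:
                start = parse_xmltv_time(elem.get("start", ""))
                stop = parse_xmltv_time(elem.get("stop", ""))
                if stop <= start:
                    problems.append(f"stop not after start on {channel!r}")
            except ValueError:
                problems.append(f"bad timestamp on {channel!r}")
            elem.clear()  # free the element so large guides stay cheap to scan
    return problems
```

Because `iterparse` processes the file element by element, the whole guide never has to be held in memory at once.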

The conclusion emphasizes the pivotal enhancement of IPTV services through the synergy between the XML Data Interface and XMLTV Guide EPG, offering a robust framework for delivering engaging and easily accessible content. It also encourages continual enrichment of knowledge and utilization of innovative tools to stay at the forefront of IPTV technology.

4. Language and Structure:

The article is written in English and follows a structured approach, providing detailed explanations, step-by-step instructions, and actionable insights to guide IPTV providers, developers, and tech enthusiasts in leveraging the full potential of XMLTV and EPG technologies.

The conclusion emphasizes the pivotal role of the XML Data Interface and XMLTV Guide EPG in enhancing IPTV services and points readers to further information and innovative tools. It serves as a call to action for IPTV providers, developers, and enthusiasts to explore the sophisticated capabilities of XMLTV and EPG technologies for delivering unparalleled content viewing experiences.

7 notes

·

View notes

Text

United States Customs and Border Protection plans to log every person leaving the country by vehicle by taking photos at border crossings of every passenger and matching their faces to their passports, visas, or travel documents, WIRED has learned.

The escalated documentation of travelers could be used to track how many people are self-deporting, or leaving the US voluntarily, which the Trump administration is fervently encouraging people in the country illegally to do.

CBP exclusively tells WIRED, in response to an inquiry to the agency, that it plans to mirror the current program it’s developing (photographing every person entering the US and matching their faces with their travel documents) in the outbound lanes going to Canada and Mexico. The agency currently does not have a system that monitors people leaving the country by vehicle.

“Although we are still working on how we would handle outbound vehicle lanes, we will ultimately expand to this area,” CBP spokesperson Jessica Turner tells WIRED.

Turner could not provide a timeline on when CBP would begin monitoring people leaving the country by vehicle.

She tells WIRED that CBP currently matches photos of people coming into the country with “all documented photos, i.e., passports, visas, green cards, etc,” and adds that all “alien/non-US citizens encounter photos taken at border crossing” are stored by CBP. “The encounter photos can be used for subsequent crossings to verify identity,” Turner says. She did not specify whether CBP may integrate additional photos or data sources in the future.

When asked, Turner says it’s not currently evident that a purpose of the outbound face-matching system would be tracking self-deportations. “Not to say it won't happen in the future, though, with the way self-deportation is going,” Turner says. She later adds that the goal of an outbound system would be to “biometrically confirm departure from the US.” This differs from the purpose of tracking people coming into the US, she says, which also considers the “purpose and intent” of entering the country.

WIRED reported this week that CBP recently asked tech companies to send pitches on how they would ensure every single person entering the country by vehicle, including people two or three rows back, would be instantly photographed and matched with their travel documents. CBP has struggled to do this on its own. The results of a 152-day test of this system, which took place at the Anzalduas border crossing between Mexico and Texas, showed that the cameras captured photos of everyone in the car that met “validation requirements” for face-matching just 61 percent of the time.

Currently, neither CBP nor Immigration and Customs Enforcement has any publicly known tools for tracking self-deportations, aside from an ICE app that allows people to tell the agency when they leave the country.

Last month, ICE announced that it is paying the software company Palantir $30 million to build a tool called ImmigrationOS that would give the agency “near real-time visibility” on people self-deporting from the US, with the goal of having accurate numbers on how many people are doing so, according to a contract justification published a few days later.

When asked, CBP would not confirm or deny whether its monitoring of outbound vehicles would or could be integrated with ImmigrationOS. “CBP does not use Palantir Technologies,” Turner says. (CBP has paid for Palantir services three times, with the last payment in 2013.)

ICE has not specified where Palantir would get the data to power the ImmigrationOS. However, the agency notes that Palantir could create ImmigrationOS by configuring the case management system that the company has provided to ICE since 2014.

This case management system integrates all of the information ICE may have about a person from investigative records or government databases, according to a government privacy assessment published in 2016. At the time of the assessment, it stored information about people’s physical attributes—like hair and eye color, height and weight, and any scars or tattoos—as well as any "location-related data” from “covert tracking devices” and any data from license plate readers, which can provide a detailed travel history.

DHS noted in a 2024 report that CBP has struggled to get biometric data from people leaving the country over land—meaning, people traveling via "cars, trains, buses, bicycles, trucks, and on foot.” The report says that CBP wants to create a “biometric-based departure program” to monitor when people considered aliens leave the country, which DHS notes is required under US law.

The Trump administration is strongly encouraging self-deportation. In March, the Department of Homeland Security revoked the legal status of more than half a million people from Cuba, Haiti, Nicaragua, and Venezuela who were given temporary parole to stay in the US due to instability in their home countries. A judge temporarily blocked the move, but the government is challenging this in court.

In April, the Social Security Administration listed more than 6,000 of these people who had temporary parole as dead, as a way of effectively ending their financial lives in the US. DHS also sent emails to an unknown number of people claiming that their legal parole had been revoked and demanding that they self-deport. Then, this week, the Trump administration offered to pay people in the country illegally $1,000 for a plane ticket to self-deport.

4 notes

·

View notes

Text

Preventative IT Maintenance: Keeping Your Business Running Smoothly

With technology moving forward so fast, your business can’t operate without it. Computers, servers, cloud systems and software platforms have to be running smoothly to keep your team productive, defend confidential information and make sure customers receive a good experience.

Unfortunately, IT systems don’t look after themselves.

This is why preventative IT maintenance matters. Just as regular servicing helps your car avoid breakdowns, preventative IT support does the same for your systems. Here at Image IT, we know that companies that address IT issues before they arise benefit significantly. Below, we look at what preventative maintenance means and why it helps your business run smoothly.

What Does Preventative IT Maintenance Mean?

Taking care of your IT infrastructure ahead of time is called preventative maintenance. With preventative maintenance, you take action to make sure your systems are in good shape all the time, so you don’t have to rush to solve emergencies.

These tasks include:

Monitoring system performance

Applying security patches and software updates

Running regular antivirus and anti-malware scans

Testing backups

Updating both your device’s drivers and firmware

Checking the configurations for firewalls and networks

Exchanging ageing equipment to prevent any breakdowns
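As a small illustration of the monitoring task in the list above, the following sketch flags a disk that is close to full. It is only an example of the idea, not a description of Image IT's tooling; the 90% threshold and the function names are assumptions.

```python
import shutil


def percent_used(total: int, used: int) -> float:
    """Return used space as a percentage of total (0.0 for an empty volume)."""
    return 100.0 * used / total if total else 0.0


def disk_needs_attention(path: str = "/", threshold: float = 90.0) -> bool:
    """Report whether the volume holding `path` is at or above the usage threshold."""
    usage = shutil.disk_usage(path)
    return percent_used(usage.total, usage.used) >= threshold
```

Run on a schedule, a check like this can raise a warning well before a full disk takes a server offline.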

At Image IT, we set up tailored maintenance services that keep your technology in top condition and reduce the risk of faults and downtime.

Why Taking Care of Problems in Advance Is Crucial for Companies in Ireland

1. Minimize Downtime

Problems with your IT systems such as servers failing, networks breaking or bugs in software, may bring your work to a halt and cost you in both time and money. Doing preventative maintenance lets you catch and manage issues early and this means your business avoids the stress of dealing with major problems.

If a server begins to overheat, it’s possible to handle the issue before it crashes, so you won’t have to deal with expensive downtime and loss of data.

2. Prevent or Stop Cyber Attacks

Businesses in Ireland are facing more and more cyberattacks, and small and medium-sized companies are targeted most often. Many attackers exploit outdated software, unpatched systems and networks that have not been properly configured.

Ongoing upkeep of security tools such as firewalls, antivirus software and system updates, makes it much less likely for your system to become a victim of ransomware, phishing or a data breach.

3. Increase the Lifespan of IT Assets

Just as changing the oil in your car lengthens its engine’s lifespan, looking after your IT equipment in the same way will help it work longer. Regularly taking care of computers stops them from wearing out and prevents too many replacements.

4. Improve Staff Productivity

Slow, unreliable systems are frustrating and affect how your team feels about their work. If technology runs smoothly, your team won’t have to worry about systems or spend time hunting for IT workarounds.

5. Lower IT Costs Over Time

Though it might feel like an added expense, upfront maintenance saves money by preventing serious IT problems. A single data breach, emergency hardware replacement or extended period of downtime can often cost more than all of your IT provider’s services put together.

Important Parts of a Well-Made IT Maintenance Plan

At Image IT, we create preventative maintenance strategies that fit your business’s individual requirements. Our approach includes:

Round-the-Clock Monitoring

We watch over your systems around the clock, spotting problems early and fixing them so they don’t impact your work.

Timely Updates and Patch Upgrades

We make sure your operating systems, applications and antivirus are always running on the latest versions.

Backup and Recovery Testing

We ensure your backups are properly configured and we regularly perform tests to see how fast you can recover data.
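One simple form of recovery test is confirming that a restored file is byte-for-byte identical to the original. The sketch below shows that check with SHA-256 checksums; it is an illustrative example with hypothetical function names, not a description of any specific backup product.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_matches(original: Path, restored: Path) -> bool:
    """Return True when the restored copy has the same contents as the original."""
    return sha256_of(original) == sha256_of(restored)
```

A mismatch means the backup or the restore process has corrupted the data, which is exactly what a test restore is meant to catch early.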

Network Health Checks

We examine your network for good speed, serious security flaws and technology issues to confirm your system operates safely and properly.

Asset and Lifecycle Management

We keep track of your equipment so you can replace ageing technology before it starts causing issues.

User Support and Helpdesk

If your team has any IT questions or concerns, our friendly team is there to help in plain, jargon-free language.

Why Is Image IT a Great Solution?

Operating out of North Dublin, Image IT has been supporting companies in Ireland for about 15 years. Our knowledgeable team delivers helpful, consistent and friendly IT assistance to companies here in Ireland.

We are dedicated to forming long-term relationships with clients so we can do more than just address issues; we can help avoid them.

You will gain the following benefits when you work with us:

Transparent pricing

A quick response from the team

Customized maintenance services

Expert opinions offered in a personal way

Whether you have just a few devices or a complex IT structure, our solutions are designed to match your requirements and your budget.

Real-World Benefits: An Example

One of our clients, a small financial services firm in Dublin, was experiencing frequent network downtime and running out-of-date software. After they signed up for our preventative maintenance, we set up a monitoring system, cleaned up their network and ran scheduled updates.

The result? A 90% drop in IT issues reported by staff, faster systems, and peace of mind for their management team knowing their data and systems were protected.

Your Next Step: Secure Your Business with Preventative IT Support

Don’t wait for a system failure, data breach, or productivity drop to remind you of your IT vulnerabilities. Preventative maintenance is one of the smartest investments you can make in your business.

Let Image IT take the stress out of managing your technology — so you can focus on what you do best.

2 notes

·

View notes

Text

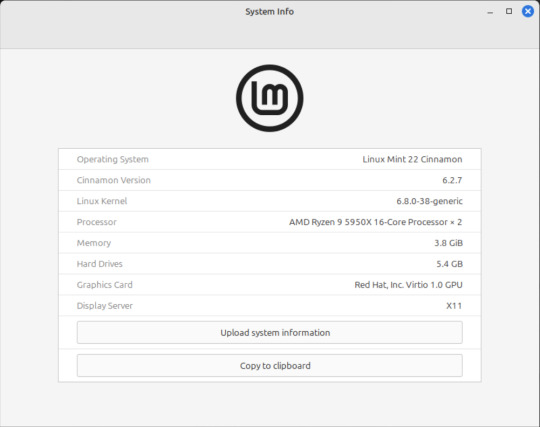

On the 9th of January 2025, I saw an article in my latest copy of Computer Active about mastering Linux distros.

It suggested using a website called 'Distrosea' which hosts free virtual machines of various flavours of the Linux operating system, all of which can be run within a browser window.

Visit the site here:

https://distrosea.com/

Here is a list of the Linux distros I had a go at running with their desktop environments:

Ubuntu 24.04 LTS - GNOME

Ubuntu 8.10 - GNOME 2 (old, 2008)

Linux Mint 22 - Cinnamon, Ubuntu base

Ubuntu 24.04 LTS - Cinnamon

Fedora Linux 40 - MATE

OpenSUSE Leap 15.5 - KDE

Alma Linux 9 - GNOME

Debian Linux 12.5 - LXQt (lightweight)

Zorin OS 17 Core64 - GNOME (configurable)

There are a total of 71 Linux operating systems to try on the website at the time of this write up.

To install, update and manage software on Linux using a package manager, the distros on this list based on Debian and Ubuntu use APT (Advanced Package Tool), while the distros based on Fedora and Red Hat use DNF (Dandified YUM, where YUM stands for Yellowdog Updater, Modified).

There are many other package managers for Linux. On Windows 10 and 11, users can similarly install software with the winget command-line tool, or with UniGetUI, a graphical front end for winget and other package managers.
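If you want to check which of these two package managers a given distro is likely to use, the `ID` and `ID_LIKE` fields in `/etc/os-release` are a good hint. Here is a minimal sketch; the mapping only covers the Debian/Ubuntu and Fedora/Red Hat families mentioned above, and the function names are my own.

```python
def parse_os_release(text):
    """Parse the KEY=value lines of an os-release file into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"').strip("'")
    return fields


def package_manager(fields):
    """Guess the package manager from ID and ID_LIKE; None if the family is not covered."""
    ids = {fields.get("ID", "")} | set(fields.get("ID_LIKE", "").split())
    if ids & {"debian", "ubuntu"}:
        return "apt"
    if ids & {"fedora", "rhel", "centos"}:
        return "dnf"
    return None  # e.g. distros that use a different package manager
```

On a real system you would pass in the contents of `/etc/os-release`, for example `package_manager(parse_os_release(open("/etc/os-release").read()))`.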

About Distrosea:

When you click on any of the distro entries before selecting a desktop environment, you can read a great description of the background information for each.

I think this website is amazing as it allows you to test distros in your browser without the need to plug in a USB or use virtual machine software. Basically, it gives you a live install copy of Linux to try online for free. It profits from ads shown on its homepage.

Some of the distros have a 'popular' badge to show which ones are likely to have the most online traffic. Most of these distros are updated to the latest versions available.

When you run the operating systems, the system information dialogue shows the server's CPU information and a virtual graphics card called Red Hat, Inc Virtio 1.0 GPU.

However, the site limits user traffic to reduce load on the servers, so when you select a version of Linux there is a queue system. Each session is free, but it times out when idle to make space for another user. Also, the connection to the server, which is based in India, can frequently disconnect you from your testing. It works well overall, but you then have to reset your VM, which continues where you left off.

Also, some of the Linux distros didn't automatically scale to full screen, and so leave bars at the top and bottom of the screen. The old Ubuntu stays surrounded by a large border, as no modern drivers work with this old version of Ubuntu.

You can sign into your Google account and gain internet access in your VMs as well.

Read the developer's notes on Reddit:

https://www.reddit.com/r/linux/comments/139sj6z/distrosea_test_drive_linux_distros_online/?rdt=33513

I would recommend trying this out on a large computer screen as the distros take advantage of hardware acceleration, scaling and look great in full screen mode!

Watch this Distrosea video on YouTube to see it in action!

youtube

Remember that this is just another way of testing Linux through the internet; the experience will be different if it is installed on an actual computer.

6 notes

·

View notes

Text

What is the difference between CPQ & RLM?

Revenue Cycle Management Services

In today's fast-moving healthcare and enterprise environments, understanding the difference between CPQ and RLM is essential, especially when these tools impact operations like Revenue Cycle Management. Although both CPQ and RLM help optimize business processes, they serve very different functions.

CPQ – Configure, Price, Quote:

CPQ stands for Configure, Price, Quote. It's a sales tool that helps companies quickly and accurately generate quotes for the products or services they offer. CPQ is especially valuable in industries with complex pricing models or customizable offerings, such as medical equipment, software solutions, or enterprise services.

With CPQ, sales teams can:

Select product features to match customer requirements (configure)

Apply pricing rules and discounts (price)

Generate accurate proposals or quotes (quote)

By automating this process, CPQ reduces errors, speeds up the sales cycle, which ultimately boosts revenue, and keeps pricing consistent and aligned with company policies.
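The three steps above can be sketched in a few lines of code. This is a toy illustration only: the catalog, prices, and discount rule are hypothetical, and real CPQ systems apply far richer configuration and pricing rules.

```python
from decimal import Decimal

# Hypothetical catalog used only for this example.
CATALOG = {
    "base": Decimal("1000.00"),
    "support": Decimal("200.00"),
    "training": Decimal("150.00"),
}


def build_quote(selected, discount_pct=Decimal("0")):
    """Configure (validate the selection), price (apply a discount), and quote (return totals)."""
    unknown = [item for item in selected if item not in CATALOG]
    if unknown:
        raise ValueError(f"not in catalog: {unknown}")
    subtotal = sum((CATALOG[item] for item in selected), Decimal("0"))
    discount = (subtotal * discount_pct / Decimal("100")).quantize(Decimal("0.01"))
    return {
        "items": list(selected),
        "subtotal": subtotal,
        "discount": discount,
        "total": subtotal - discount,
    }
```

Using `Decimal` rather than floats keeps the currency arithmetic exact, which matters once quotes feed into billing.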

RLM – Revenue Lifecycle Management:

Revenue Lifecycle Management (RLM), on the other hand, focuses on managing and optimizing every stage of the revenue process. In healthcare, this is closely tied to Revenue Cycle Management, a system that oversees patient billing, insurance claims, payments, and collections.

RLM looks at the broader picture, including:

Contract management

Billing and invoicing

Revenue recognition

Renewals and upsells

RLM ensures that revenue is tracked, reported, and optimized from the moment a contract begins until the revenue is fully realized. In healthcare, it's critical for improving financial health, assuring compliance, and reducing revenue leakage.

Key Differences -

CPQ is sales-focused, helping teams generate quotes and close deals faster.

RLM is revenue-focused, ensuring that all post-sale financial processes are handled smoothly.

CPQ comes into play at the beginning of the customer journey, while RLM continues throughout the customer lifecycle; the two work as parts of the same system.

Many Revenue Cycle Management providers, like Suma Soft, IBM, Cyntexa, and Cignex, offer tailored RLM solutions and Revenue Cycle Management services that integrate with CPQ systems. This creates a seamless flow from quoting to revenue realization, making operations more efficient and profitable.

#it services#technology#saas#software#saas technology#saas development company#revenue cycle management#revenuegrowth

2 notes

·

View notes

Text

Taking a Deeper Look at Deepseek Thought Process

What binary is associated with quorum management?

No web access.

Okay, so I'm trying to understand what binary is associated with quorum management in IBM Spectrum Scale. First off, from my previous understanding, quorum management seems to involve several components like the Grid Control Policy (GCP), Replication Agent (RA), and Quorum Manager (QM). But when it comes to binaries, I think we're talking about specific software executables or processes that handle these tasks.

We see that the process is starting off from the wrong conclusion, but the question is can it correct itself?

I'm not entirely sure what a "binary" refers to in this context. Maybe it's like an executable file or a specific service running on the nodes? If I recall correctly, Spectrum Scale is built on top of GPFS (General Parallel File System), so maybe some of these binaries are related to that underlying filesystem.

It has issues with the word binary, but sort of gets on the road.

I think quorum management involves ensuring that enough nodes are available and in agreement to maintain data consistency. So, there must be specific processes or daemons running that handle this coordination. Maybe something like gcpd for Grid Control Policy Daemon? Or perhaps qmagent for Quorum Manager Agent? Wait, I've heard of spectrum-scaled before; maybe that's the main process for Spectrum Scale itself. Could it be that quorum management is handled by a specific module within this binary or maybe a separate one?

So it actually moves somewhat in the right direction here talking about a main process.

I also remember hearing about replication agents (ra), which handle data replication across nodes. Maybe they play a role in ensuring quorum by replicating data to enough nodes. Perhaps there's a tool like quorumadm for managing quorum settings? That seems plausible; it could be used to check the current quorum status or adjust thresholds. I think I need to look into IBM's documentation or release notes for Spectrum Scale. Maybe they mention specific binaries related to quorum management. Alternatively, checking process listings on a node running Spectrum Scale might reveal the relevant processes.

It references looking at the documentation and that is the right call.

If I were to run ps aux | grep spectrum on a Spectrum Scale server, would that show me the associated binaries? It might list processes like gcpd, ra, and others. From there, I could identify which ones are directly tied to quorum management. Also, maybe log files or configuration files have hints about these binaries. For example, /etc/spectrum directory might contain config files for GCP or QM. In summary, the key binaries likely include: Grid Control Policy Daemon (gcpd) - Manages high-level policies and thresholds. Quorum Manager Agent (qmagent) - Specifically handles quorum-related tasks. Replication Agent (ra) - Ensures data replication across nodes to meet quorum requirements. I should verify this by checking IBM's official documentation or through practical inspection on a Spectrum Scale setup.

As you can see the "thoughts" completely revolve around whatever it previously "thought". It isn't really able to re-examine previous conclusions here.

Can you confirm with sources the above?

Web access.

Okay, so I'm trying to figure out which binary is associated with quorum management in IBM Spectrum Scale based on what was said earlier. The previous answer listed three binaries: Grid Control Policy Daemon (gcpd), Quorum Manager Agent (qmagent), and Replication Agent (ra). Now, the user is asking me to confirm this information using the provided context. First, I need to look at the context given. It includes some sources from IBM's documentation about quorum nodes in Spectrum Scale. The first source talks about how quorum is defined as one plus half of the explicitly defined quorum nodes and mentions that there are no default quorum nodes; you have to specify which ones. The second source seems to be repeating the same information, so it doesn't add anything new. The third source again discusses node quorum in Spectrum Scale and provides an example configuration with three quorum nodes. Looking at these sources, they all focus on how quorum is defined and configured within the cluster but don't mention any specific binaries or processes that manage quorum. They talk about quorum nodes and configurations, not the underlying software components responsible for handling quorum logic. Given this, it seems like the earlier answer might be based more on general knowledge of Spectrum Scale rather than directly citing the provided context. The sources don't explicitly name any binaries associated with quorum management. Therefore, I can't confirm the binaries listed (gcpd, qmagent, ra) using the given context because they aren't mentioned there.

Here is where it goes off the rails. It doesn't find its previous idea in the documentation, but rather than starting fresh it says I can't confirm my previous answer.

I should probably explain this to the user, letting them know that while those binaries might be related based on general knowledge of Spectrum Scale, the provided sources don't mention them. It would also be helpful to suggest looking into IBM's official documentation or other reliable sources for confirmation.

It then simply goes back to its conclusion that those wrong binaries are possibly correct even after finding no evidence that they are.
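For reference, the quorum rule quoted from the IBM sources above ("one plus half of the explicitly defined quorum nodes") is simple arithmetic. A minimal sketch, assuming "half" means integer division; the function name is mine and not part of any Spectrum Scale tooling:

```python
def node_quorum(quorum_nodes: int) -> int:
    """Minimum number of quorum nodes that must be available: one plus half the defined nodes."""
    if quorum_nodes < 1:
        raise ValueError("at least one quorum node must be defined")
    return quorum_nodes // 2 + 1  # assumption: "half" rounds down
```

With the three quorum nodes from the documentation's example configuration, this works out to two nodes.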

So quorum management is not handled mmfsd?

No web access.

Alright, so I'm trying to understand how quorum management works in IBM Spectrum Scale. From what I gathered earlier, there are these components like gcpd, qmagent, and ra that might be involved in managing quorum. But then, the user asked if mmfsd is related to quorum management. First, I need to figure out what mmfsd is. MMFSD stands for Multiple Mirror File System Daemon, right? It's a part of IBM Spectrum Scale used for file system operations and mirroring data across nodes. So, does it handle quorum? Quorum in distributed systems usually refers to the minimum number of nodes that need to be available to ensure consistency and avoid split-brain scenarios. In Spectrum Scale, maintaining quorum is essential for making sure that decisions like data replication are consistent across all nodes.

Here it goes off the rails. It gets lost in the semantics of what mmfsd stands for. It also doesn't seem to strongly associate Spectrum Scale with GPFS.

But if mmfsd is primarily about file system operations and mirroring, maybe it's more about ensuring data availability rather than managing the quorum itself. I think quorum management might be handled at a higher level, possibly by components like gcpd or qmagent, which manage policies and replication across the cluster.

It again returns to the same error with gcpd and qmagent.

However, there's some ambiguity here. The user suggested that these binaries like gcpd, qmagent, and ra are involved in quorum management, but based on the context provided earlier, they aren't explicitly mentioned. So, I'm not entirely sure if mmfsd is directly responsible for quorum or if it works alongside other components. To clarify, maybe I should look into IBM's official documentation or case studies that explain how Spectrum Scale manages quorum internally. It would help to see which binaries are actually involved in the process and their specific roles.

It claims that I suggested the binaries it made up are part of the process. It can't confirm them, and I never said they were, so where is that coming from?

In summary, while mmfsd deals with file system mirroring and data availability, it doesn't seem directly responsible for managing the quorum itself. Quorum management likely involves other components that ensure a sufficient number of nodes agree on data states before committing changes.

Is mmfsd involved in quorum management in IBM spectrum scale GPFS?

Let's ask directly.

Web access.

Alright, the user is asking whether mmfsd is involved in quorum management within IBM Spectrum Scale GPFS. From previous interactions, I remember that the user has been exploring how quorum management works in this system and had some confusion about which binaries are responsible.

It continues to go back to its old data.

Looking at the context provided earlier, there was information about node quorum and commands related to changing quorum settings, but nothing directly mentioning mmfsd. In fact, when I previously explained the components involved, like GCP, RA, and QM, the user then asked for confirmation with sources, which led me to clarify that those binaries weren't explicitly mentioned in the context.

Now, the user is specifically asking about mmfsd. My previous response indicated that mmfsd isn’t directly involved in quorum management but handles file system operations. However, I need to make sure this is accurate and based on reliable sources.

I should check if there are any IBM documents or official resources that link mmfsd to quorum processes. If not, it's safer to say that mmfsd isn't the process handling quorum. Instead, higher-level daemons manage quorum, ensuring data consistency across nodes without mmfsd's direct involvement.

The user might be trying to troubleshoot an issue or configure their environment correctly, so providing clear information about each component’s role is essential. They probably want to understand where to focus their efforts for quorum settings and avoid confusion with file system operations handled by mmfsd.

So it executes the search "IBM Spectrum Scale mmfsd quorum management documentation" to try to find the information. This search is sort of backing into the information; a better, more explicit search would return the right answer.

Analysis

As I mentioned before, I think calling this "thinking" is a stretch. It is perhaps a facsimile of thought. The "thought" process stays rigidly attached to prior statements unless they are immediately found to be false or denied by the user. This seems to cause the system to stick to its path and not re-evaluate when the evidence is missing.

I am not sure how much of this failure comes from it being a 14B parameter model, but the "thinking" is very weak here. Even if we take the more explicit question into a new chat, it can't get the answer right.

I wanted to document this partly for my memory, but also partly as a demonstration of how weak the "thinking" is. Deepseek makes a finding and can't reason its way out, even when it has no evidence. The lack of evidence doesn't weaken its position. Deepseek is kind of an alternative facts machine: even a complete absence of supporting evidence is not enough for it to say that it is wrong and try a new direction.
