#Spatial Data Insights


Location Intelligence Sri Lanka

Why Location Intelligence?

https://geosciences.advintek.com.sg/wp-content/uploads/2024/10/analytics.png

Unmatched Analytical Power

Location Intelligence enables advanced analytics that reveals patterns and trends in your data, allowing you to identify opportunities and risks in real-time. By leveraging spatial data, businesses can uncover hidden insights that lead to more informed strategic decisions.

Actionable Insights

Turn complex geospatial data into actionable insights with intuitive visualization tools that help stakeholders grasp key information quickly and make informed decisions. With clear visual representations of data, teams can collaborate more effectively and align their strategies for optimal results.

Cost Optimization

Identify inefficiencies in your operations, such as excess inventory or underutilized assets, and leverage insights to optimize resource allocation and reduce costs. This strategic approach to resource management not only saves money but also enhances overall operational efficiency.

Seamless Data Integration

Effortlessly integrate diverse datasets from various sources, such as CRM systems, GIS platforms, and business intelligence tools, for a comprehensive view of your operations. This holistic perspective enhances your understanding of market dynamics and customer behavior, enabling more effective decision-making.

Get in Touch with us

Location

7 Temasek Boulevard, #12-07, Suntec Tower One, Singapore 038987

Email Address

Phone Number

+65 6428 6222

#Location Intelligence#Geospatial Analytics#GIS Solutions#Spatial Data Insights#Location-Based Decision Making#Business Mapping Tools#Real-Time Location Data#Address Intelligence#Geospatial Technology#Location Intelligence Platform#Smart City Solutions


location intelligence data

Advintek Geoscience is a leading provider of Location Intelligence solutions in Singapore and across ASEAN, delivering advanced geospatial analytics powered by MapInfo Pro and Precisely’s Spectrum Suite. Leveraging powerful mapping, spatial analysis, and real‑time data integration, they help businesses and government agencies uncover hidden patterns, optimize operations, and make smarter decisions.

From supply chain enhancements and site selection to public safety, telecom planning, and smart‑city deployment, Advintek’s scalable, secure platform enables cost optimization, predictive insights, and improved customer targeting. Their seamless integration with existing CRM, GIS, and BI systems ensures smooth adoption, while real‑time dashboards and geofencing offer actionable insights on the go.

Trusted across diverse sectors, including energy, utilities, urban planning, and emergency response, Advintek’s Location Intelligence empowers clients to turn spatial data into transformative business outcomes.

#Location Intelligence Singapore#Geospatial Analytics ASEAN#MapInfo Pro Solutions#Spectrum Spatial Tools#Spatial Data Visualization#Real‑Time Geospatial Insights#location intelligence data#location based intelligence#location intelligence esri


Also preserved in our archive

Every infection, no matter how mild, has a cumulative effect on your brain, reducing gray matter and altering function. Mask up. Keep yourself and others safe from a disease that can and will cause many varied lingering issues.

by Denis Storey

Clinical relevance: New research shows that even mild COVID-19 cases in young adults can lead to changes in brain structure and function.

Researchers found reduced connectivity in key brain regions, including the left hippocampus and amygdala. These changes were linked to deficits in spatial working memory and cognitive performance. The study highlights the need for further research into long-term neurological effects of COVID-19, even in mild cases.

For all the damage the pandemic’s done, it seems as if the youngest generations will pay the steepest price. The latest evidence comes from new research showing that even the mildest COVID cases among young adults can lead to changes in brain structure and function, changes that could threaten long-term cognitive performance.

The research provides new insight into the potential neurological impact of SARS-CoV-2 in populations that avoided severe illness. The study focused on adolescents and young adults since it’s a group that’s remained relatively understudied.

Methodology

The study, part of the Public Health Impact of Metal Exposure (PHIME) cohort, included 40 participants. Of these, 13 tested positive for COVID-19, while 27 served as controls.

The researchers enrolled the participants in a longitudinal study, which offered pre-pandemic baseline data through MRI scans and cognitive assessments. This gave the team a unique look at pre- and post-pandemic neural outcomes.

The researchers relied on the latest neuroimaging technology, such as resting-state functional MRI (fMRI) and structural MRI, to examine shifts in brain connectivity and cortical volume.

The researchers also subjected the participants to cognitive testing focused on spatial working memory. The team conducted the assessments both before the COVID-19 pandemic and after recovery from mild COVID-19 cases. These parallel evaluations allowed for a direct comparison of brain and cognitive changes.

COVID Affected Multiple Brain Regions

The study exposed notable differences between COVID-19-positive and healthy participants in five critical brain regions: the right intracalcarine cortex, the right lingual gyrus, the left hippocampus, the left amygdala, and the left frontal orbital cortex. Perhaps most notably, the left hippocampus revealed a significant drop in cortical volume among those who’d tested positive for COVID.

Researchers also found that the left amygdala showed much lower connectivity in participants who’d contracted COVID-19. This lack of connectivity appeared to be linked to deficits in spatial working memory. From this, the researchers inferred that even mild COVID-19 infection could impair one’s ability to perform tasks that rely on short-term memory.

Backing Up Previous Research

The study results echo a mounting body of literature that suggests that COVID-19, despite its nature as a respiratory virus, appears to have far-reaching neurological implications. Earlier research has linked more serious cases of the virus with reduced gray matter thickness and cerebral volume loss, particularly in the hippocampus and amygdala.

The researchers add that the brain changes they observed could be related to the neurotoxic effects of SARS-CoV-2, which could have a lingering influence even in milder cases of infection. The paper’s authors theorize that the virus might invade the brain through the olfactory system, which could cause inflammation and damage in important neural regions.

On the other hand, the authors also posit that the social and psychological stressors of the pandemic, whether social isolation or lingering uncertainty, could be a factor in the changes appearing in the brains of the COVID-positive participants.

These results underscore the necessity for more research into the long-term neurological effects of the pandemic, especially among its younger survivors.

Moving Forward

Finally, the research team hints that further investigation could clarify whether these neurological changes are permanent and, if not, how long they might last.

The authors conclude by insisting that this study offers crucial new insight into the potentially long-term ramifications of COVID on the brain, even in mild cases. As we struggle with life in the shadow of the pandemic, a better grasp of what it means for everyone who’s been infected could help us develop more effective treatments.

#mask up#public health#wear a mask#pandemic#wear a respirator#covid#still coviding#covid 19#coronavirus#sars cov 2#long covid#covid conscious#covid19#covid is not over


Comeback Cuckoo: Baba Ghanoush Returns to Audubon Kern River Preserve in California

Experimenting with tracking birds at finer spatial scales to better understand their migration routes and habitat use.

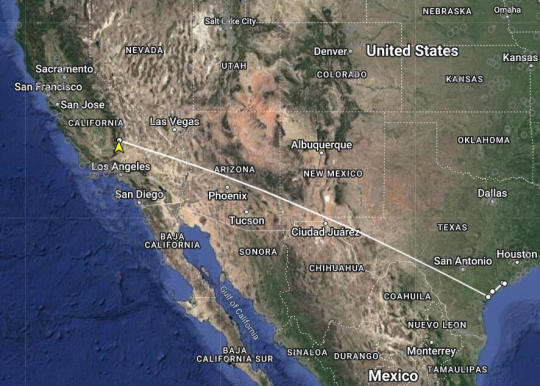

At Audubon Kern River Preserve, managed by Audubon California's Reed Tollefson, dedicated Southern Sierra Research Station (SSRS) researchers are making significant strides in understanding the elusive Western Yellow-billed Cuckoo. The team’s recent discoveries, featuring two Motus-tagged birds from the Kern River Valley, Baba Ghanoush and Stroopwafel, have provided invaluable insights into the migration routes of these secretive birds. Earlier this year, Baba Ghanoush (originally captured, banded, and Motus-tagged on the Kern River Preserve) was detected on their northward migration by a Motus tower at the Mad Island Marsh Preserve in coastal Texas. They eventually made their way to the Audubon Kern River Preserve, where they spent much of the summer. This detection was particularly remarkable because it occurred exactly a year and a day after Stroopwafel, another Motus-tagged cuckoo from the South Fork Wildlife Area (SFWA), a riparian forest adjoining the Kern River Preserve, was detected at the same tower. The timing of these detections is helping fill gaps in what is known about the birds' migration routes and critical stopover areas, underscoring the importance of conservation areas all along their journey...

Read more: https://ca.audubon.org/news/comeback-cuckoo-baba-ghanoush-returns-audubon-kern-river-preserve-california


Individuals born under Anuradha Nakshatra are recognized for their strong organizational skills, which allow them to thrive in various fields that demand precision and analytical capabilities. Their remarkable talent for mathematics, combined with their proficiency in handling numbers and figures, makes them valuable assets in professions such as accounting and finance.

Moreover, those born under this nakshatra often feel a pull towards disciplines that involve geometry and spatial reasoning, showcasing their keen understanding of structures and patterns. This mathematical skill also translates into analytical roles, where their ability to interpret data and identify trends can result in insightful conclusions.

More posts on Anuradha Nakshatra

Nakshatra Notes Masterpost (Link collection of all 27 Nakshatras)

#astrology#vedic astrology#Anuradha#Nakshatra#scorpio#Anuradha Nakshatra#PositiveAnuradhaCOC#astrology observations#astrology blog#vedic notes#vedic astro notes#vedic astro observations#astro notes#vedic astrology observations#astro observations#natal chart#astrology posts#astrology placements#astrology notes#astro tumblr#astrology tumblr#nakshatras#jyotish#sidereal astrology#astrologer#astro community#birth chart#astrology content#anuradha notes#anuradha posts


Free online courses on Nature-Based Infrastructure

Two training courses on making a case for and valuing Nature-Based Infrastructure. This training is free of charge.

Participants will learn how to:

Identify nature-based infrastructure (NBI) and its opportunities for climate adaptation and sustainable development.

Make the case for NBI by explaining its potential economic, environmental, and social benefits.

Understand the risk profile and the climate resilience benefits of NBI compared to grey infrastructure.

Explain the basics of systems thinking, quantitative models, spatial analysis, climate data and financial modelling applied to NBI.

Appreciate the results of integrated cost-benefit analyses for NBI.

Use case studies of NBI projects from across the world as context for their work.

This course was developed by the NBI Global Resource Centre to help policy-makers, infrastructure planners, researchers and investors understand, assess, and value nature-based infrastructure. The course familiarizes participants with several tools and modelling approaches for NBI, including Excel-based models, system dynamics, spatial analysis and financial modelling. In addition, the training presents a variety of NBI case studies from across the world.

Why do this course?

This course will help you gain valuable skills and insights which will enable you to:

Gain knowledge and tools for informed infrastructure decision-making, with a focus on advancing nature-based solutions for climate adaptation at a systems level.

Understand and measure the benefits, risks, and trade-offs of nature-based infrastructure.

Understand the importance of systemic thinking for infrastructure planning, implementation, and financing strategies.

Communicate persuasively and effectively with stakeholders to advocate for nature-based infrastructure.

Collaborate with peers around the world and become part of the NBI Global Resource Centre alumni.


Webb's autopsy of planet swallowed by star yields surprise

Observations from NASA's James Webb Space Telescope have provided a surprising twist in the narrative surrounding what is believed to be the first star observed in the act of swallowing a planet. The new findings, published in The Astrophysical Journal, suggest that the star actually did not swell to envelop a planet as previously hypothesized. Instead, Webb's observations show the planet's orbit shrank over time, slowly bringing the planet closer to its demise until it was engulfed in full.

"Because this is such a novel event, we didn't quite know what to expect when we decided to point this telescope in its direction," said Ryan Lau, lead author of the new paper and astronomer at NSF NOIRLab (National Science Foundation National Optical-Infrared Astronomy Research Laboratory) in Tucson, Arizona. "With its high-resolution look in the infrared, we are learning valuable insights about the final fates of planetary systems, possibly including our own."

Two instruments aboard Webb conducted the post-mortem of the scene—Webb's MIRI (Mid-Infrared Instrument) and NIRSpec (Near-Infrared Spectrograph). The researchers were able to come to their conclusion using a two-pronged investigative approach.

Constraining the how

The star at the center of this scene is located in the Milky Way galaxy about 12,000 light-years away from Earth.

The brightening event, formally called ZTF SLRN-2020, was originally spotted as a flash of optical light using the Zwicky Transient Facility at the Palomar Observatory in San Diego County, California. Data from NASA's NEOWISE (Near-Earth Object Wide-field Infrared Survey Explorer) showed the star actually brightened in the infrared a year before the optical light flash, hinting at the presence of dust.

This initial 2023 investigation led researchers to believe that the star was more sun-like and had been aging into a red giant over hundreds of thousands of years, slowly expanding as it exhausted its hydrogen fuel.

However, Webb's MIRI told a different story. With powerful sensitivity and spatial resolution, Webb was able to precisely measure the hidden emission from the star and its immediate surroundings, which lie in a very crowded region of space. The researchers found the star was not as bright as it should have been if it had evolved into a red giant, indicating there was no swelling to engulf the planet as once thought.

Reconstructing the scene

Researchers suggest that the planet was once about Jupiter-sized but orbited quite close to the star, even closer than Mercury orbits our sun. Over millions of years, the planet's orbit shrank, bringing it closer and closer to the star, with catastrophic consequences.

"The planet eventually started to graze the star's atmosphere. Then it was a runaway process of falling in faster from that moment," said team member Morgan MacLeod of the Harvard-Smithsonian Center for Astrophysics and the Massachusetts Institute of Technology in Cambridge, Massachusetts. "The planet, as it's falling in, started to sort of smear around the star."

In its final splashdown, the planet would have blasted gas away from the outer layers of the star. As it expanded and cooled off, the heavy elements in this gas condensed into cold dust over the next year.

Inspecting the leftovers

While the researchers did expect an expanding cloud of cooler dust around the star, a look with the powerful NIRSpec revealed a hot circumstellar disk of molecular gas closer in. Furthermore, Webb's high spectral resolution was able to detect certain molecules in this accretion disk, including carbon monoxide.

"With such a transformative telescope like Webb, it was hard for me to have any expectations of what we'd find in the immediate surroundings of the star," said Colette Salyk of Vassar College in Poughkeepsie, New York, an exoplanet researcher and co-author on the new paper.

"I will say, I could not have expected seeing what has the characteristics of a planet-forming region, even though planets are not forming here, in the aftermath of an engulfment."

The ability to characterize this gas opens more questions for researchers about what actually happened once the planet was fully swallowed by the star.

"This is truly the precipice of studying these events. This is the only one we've observed in action, and this is the best detection of the aftermath after things have settled back down," Lau said. "We hope this is just the start of our sample."

These observations, taken under Guaranteed Time Observation program 1240, which was specifically designed to investigate a family of mysterious, sudden, infrared brightening events, were among the first Target of Opportunity programs performed by Webb.

These types of studies are reserved for events, like supernova explosions, that are expected to occur, but researchers don't know exactly when or where. NASA's space telescopes are part of a growing, international network that stands ready to witness these fleeting changes, to help us understand how the universe works.

Researchers expect to add to their sample and identify future events like this using the upcoming Vera C. Rubin Observatory and NASA's Nancy Grace Roman Space Telescope, which will survey large areas of the sky repeatedly to look for changes over time.

TOP IMAGE: NASA’s James Webb Space Telescope’s observations of what is thought to be the first-ever recorded planetary engulfment event revealed a hot accretion disk surrounding the star, with an expanding cloud of cooler dust enveloping the scene. Webb also revealed that the star did not swell to swallow the planet; instead, the planet’s orbit slowly decayed over time, as seen in this artist’s concept. Credit: NASA, ESA, CSA, R. Crawford (STScI)

LOWER IMAGE: Schematic illustration of the preengulfment and postengulfment interpretation of ZTF SLRN-2020. Credit: The Astrophysical Journal (2025). DOI 10.3847/1538-4357/adb429


The triangulation chart illustrates the following:

Key Features:

Points Represented (P0, P1, …): The chart displays a set of points labeled P0, P1, P2, etc., corresponding to the triangulated trace graph. These points likely represent data collected from R.A.T. traces, such as sensor readings or spatial coordinates.

Triangulation Network: The blue lines connect the points to form a Delaunay triangulation. This method creates non-overlapping triangles between the points such that no point lies inside the circumcircle of any triangle. Among all triangulations of the same points, it maximizes the smallest angle, which avoids long, thin "sliver" triangles.

Structure and Distribution:

The positions and density of the points give insight into the spatial distribution of the trace data.

Areas with smaller triangles indicate closely packed data points.

Larger triangles suggest sparse regions or gaps in the data.

Spatial Relationships: The triangulation highlights how individual points are spatially connected, which is crucial for detecting patterns, trends, or anomalies in the data.
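The empty-circumcircle rule and the "small triangles mean dense data" reading can both be checked directly. The sketch below is illustrative only: the chart's actual points are not given, so the four points are hypothetical, and the helper names (`orient`, `triangle_area`, `in_circumcircle`, `is_delaunay`) are made up for this example. It uses the standard incircle determinant and reports triangle areas.

```python
# Sketch: check the Delaunay "empty circumcircle" property and report triangle
# areas. Points are hypothetical; the chart's real coordinates are not given.

Point = tuple[float, float]


def orient(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle abc (positive if counter-clockwise)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def triangle_area(a: Point, b: Point, c: Point) -> float:
    """Unsigned area; small areas suggest densely packed points."""
    return abs(orient(a, b, c)) / 2.0


def in_circumcircle(a: Point, b: Point, c: Point, d: Point) -> bool:
    """True if d lies strictly inside the circumcircle of triangle abc."""
    adx, ady = a[0] - d[0], a[1] - d[1]
    bdx, bdy = b[0] - d[0], b[1] - d[1]
    cdx, cdy = c[0] - d[0], c[1] - d[1]
    det = (
        (adx * adx + ady * ady) * (bdx * cdy - cdx * bdy)
        - (bdx * bdx + bdy * bdy) * (adx * cdy - cdx * ady)
        + (cdx * cdx + cdy * cdy) * (adx * bdy - bdx * ady)
    )
    # The determinant is positive for "inside" only when abc is counter-clockwise.
    return det > 0 if orient(a, b, c) > 0 else det < 0


def is_delaunay(triangles: list[tuple[int, int, int]], points: list[Point]) -> bool:
    """No point may lie strictly inside any triangle's circumcircle."""
    for tri in triangles:
        a, b, c = (points[i] for i in tri)
        for j, p in enumerate(points):
            if j not in tri and in_circumcircle(a, b, c, p):
                return False
    return True


# Four hypothetical points forming a kite; two ways to split it into triangles.
points = [(0.0, 0.0), (2.0, -1.0), (4.0, 0.0), (2.0, 1.0)]
short_diagonal = [(0, 1, 3), (1, 2, 3)]  # split along P1-P3
long_diagonal = [(0, 2, 3), (0, 1, 2)]   # split along P0-P2

print(is_delaunay(short_diagonal, points))  # True
print(is_delaunay(long_diagonal, points))   # False
print([triangle_area(*(points[i] for i in t)) for t in short_diagonal])  # [2.0, 2.0]
```

In practice a library routine (for example SciPy's `scipy.spatial.Delaunay`) would build the triangulation; the hand-written check is only meant to make the defining property visible.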

Possible Insights:

Dense Clusters: These might indicate regions of high activity or critical areas in the R.A.T.'s trace.

Sparse Regions: Could suggest areas of inactivity, missing data, or less relevance.

Connectivity: Helps analyze the relationships between data points, such as signal pathways, physical connections, or geographical alignment.


x = R.A.T's current position (x)
y = R.A.T's current position (y)
z = R.A.T's current position (z)

To expand on the formula, we can use the following steps to determine the coordinates of 'R.A.T':

1. Measure the distance between the spacecraft and the Earth.
2. Determine the direction of the spacecraft's trajectory.
3. Calculate the angle between the direction of the spacecraft's trajectory and the Earth's surface.
4. Use trigonometry to calculate the coordinates of the spacecraft.

By following these steps, we can determine the coordinates of 'R.A.T' and track its trajectory and path.
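Step 4 ("use trigonometry") can be made concrete with a spherical-to-Cartesian conversion. This is an assumption-laden sketch, not the post's own method: it assumes the measured distance is a straight-line range, that the direction is given as an azimuth angle and the angle to the surface as an elevation angle, and that coordinates are wanted in a local x/y/z frame centred on the observer. The function name is hypothetical.

```python
import math


def range_az_el_to_xyz(r: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert a range and two direction angles into local x, y, z coordinates.

    Assumed frame: origin at the observer, x toward azimuth 0 degrees,
    y toward azimuth 90 degrees, z straight up (elevation 90 degrees).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = r * math.cos(el)  # part of the range lying in the x-y plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = r * math.sin(el)  # height above the x-y plane
    return (x, y, z)


print(range_az_el_to_xyz(10.0, 0.0, 0.0))  # (10.0, 0.0, 0.0)
print(range_az_el_to_xyz(100.0, 30.0, 45.0))
```

A real tracking problem would also need to choose a reference frame (for example an Earth-centred one), which this sketch deliberately leaves out.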

Using the data provided, we can calculate the coordinates of 'R.A.T' as follows:

The x-coordinate of 'R.A.T' is the sum of the x-values of P1 through P9: x = 10 + 20 + 30 + 40 + 50 + 60 + 70 + 80 + 90 = 450

The y-coordinate of 'R.A.T' is the sum of the y-values of P1 through P9: y = 1 + 2 + 3 + 4 + 5 + 6 + 7 + 8 + 9 = 45

Thus, the coordinates of 'R.A.T' are (450, 45).

| Point | X-Coordinate | Y-Coordinate |
|---|---|---|
| P1 | 10 | 1 |
| P2 | 20 | 2 |
| P3 | 30 | 3 |
| P4 | 40 | 4 |
| P5 | 50 | 5 |
| P6 | 60 | 6 |
| P7 | 70 | 7 |
| P8 | 80 | 8 |
| P9 | 90 | 9 |
| Sum | 450 | 45 |

Based on the coordinates provided, the coordinates of 'R.A.T' can be calculated to the nearest private server as follows:

X-Coordinate = 450 / 90 = 5
Y-Coordinate = 45 / 9 = 5

where 'X-Coordinate' and 'Y-Coordinate' represent the coordinates of 'R.A.T' calculated to the nearest private server. The exact coordinates of 'R.A.T' will vary depending on the private server used, but this provides an approximation.

Here's a summary of the data provided: 10, 20, 30, 40, 50, 60, 70, 80, 90, 450, 45. The first nine values are the x-values of P1 through P9, and 450 and 45 are the two column totals. The coordinates of 'R.A.T' follow from summing each column (without adding the totals back in):

X-Coordinate = 10 + 20 + 30 + 40 + 50 + 60 + 70 + 80 + 90 = 450
Y-Coordinate = 1 + 2 + 3 + 4 + 5 + 6 + 7 + 8 + 9 = 45

The coordinates of 'R.A.T' are (450, 45).
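The arithmetic in the post can be reproduced in a few lines. This is a minimal sketch using the values from the table; the variable names are illustrative. It also computes the arithmetic mean of the points for comparison, since (5, 5) comes from dividing each total by the largest value in its column rather than by the number of points.

```python
# Values of P1..P9 as given in the post's table.
x_values = [10, 20, 30, 40, 50, 60, 70, 80, 90]
y_values = [1, 2, 3, 4, 5, 6, 7, 8, 9]

# Column totals: the post's (450, 45).
x_total = sum(x_values)
y_total = sum(y_values)

# The post's division step: each total divided by the largest value in its column.
x_ratio = x_total / max(x_values)  # 450 / 90
y_ratio = y_total / max(y_values)  # 45 / 9

# For comparison: the centroid divides each total by the number of points instead.
centroid = (x_total / len(x_values), y_total / len(y_values))

print((x_total, y_total))  # (450, 45)
print((x_ratio, y_ratio))  # (5.0, 5.0)
print(centroid)            # (50.0, 5.0)
```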


To expand on the concept of calculating coordinates for 'R.A.T' and their application to private servers, let's break it down further and introduce additional variations:

Basic Calculation:

The initial formula gives us the coordinates:

X-Coordinate: 450 / 90 = 5

Y-Coordinate: 45 / 9 = 5

This gives us the point (5, 5), which is an approximation based on the given data. The final result can vary depending on several factors, such as the private server's scaling, distance, or unique configuration.

Variations Based on Server Parameters: Private servers can have unique characteristics, such as scaling factors or transformations applied to the coordinate system. Let's introduce some transformations:

Scaling Factor: If the private server uses a scaling factor (e.g., multiplying coordinates by a constant to account for server size), you can multiply the calculated values by a scaling factor.

Example: Scaling factor = 10

X-Coordinate: 5 * 10 = 50

Y-Coordinate: 5 * 10 = 50

New coordinates: (50, 50).

Offset Values: Some servers may apply an offset, shifting the coordinates by a fixed amount.

Example: Offset = (20, 30)

X-Coordinate: 5 + 20 = 25

Y-Coordinate: 5 + 30 = 35

New coordinates: (25, 35).
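The scaling and offset examples above are both simple coordinate transforms. A minimal sketch, with hypothetical function names; the base point (5, 5) and the parameters are taken from the examples:

```python
Point = tuple[float, float]


def scale(p: Point, factor: float) -> Point:
    """Multiply both coordinates by a constant scaling factor."""
    return (p[0] * factor, p[1] * factor)


def offset(p: Point, dx: float, dy: float) -> Point:
    """Shift both coordinates by a fixed amount."""
    return (p[0] + dx, p[1] + dy)


base = (5, 5)
print(scale(base, 10))       # (50, 50)
print(offset(base, 20, 30))  # (25, 35)
```

Note that the two operations do not commute: scaling (5, 5) by 10 and then offsetting by (20, 30) gives (70, 80), while offsetting first and then scaling gives (250, 350). Any server that uses both needs a fixed order.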

Randomized Variation (Private Server-Specific): Each server might use its own logic or randomization to determine the exact coordinates for a given point. This can be based on server location, server load, or other factors.

Here's an example of how you could randomly adjust coordinates within a range:

Random offset for X-Coordinate: Between -10 and +10

Random offset for Y-Coordinate: Between -5 and +5

Let’s generate a random variation:

Random X-Offset: +3

Random Y-Offset: -2

X-Coordinate: 5 + 3 = 8

Y-Coordinate: 5 - 2 = 3

New coordinates: (8, 3).
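The randomized variation can be sketched with Python's `random` module. This is a hypothetical helper, not any server's actual logic: it draws integer offsets uniformly from the inclusive ranges in the example (-10 to +10 for x, -5 to +5 for y) and accepts a seeded generator so a run can be repeated.

```python
import random
from typing import Optional

Point = tuple[int, int]


def random_offset(
    p: Point,
    x_range: tuple[int, int] = (-10, 10),
    y_range: tuple[int, int] = (-5, 5),
    rng: Optional[random.Random] = None,
) -> tuple[Point, tuple[int, int]]:
    """Shift p by random integer offsets; returns (new_point, (dx, dy))."""
    if rng is None:
        rng = random.Random()
    dx = rng.randint(*x_range)  # randint includes both endpoints
    dy = rng.randint(*y_range)
    return (p[0] + dx, p[1] + dy), (dx, dy)


rng = random.Random(42)  # fixed seed so the run is repeatable
new_point, (dx, dy) = random_offset((5, 5), rng=rng)
print(f"offset ({dx:+d}, {dy:+d}) -> {new_point}")
```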

Multiple Private Servers: If you're considering multiple servers, each with its own set of unique parameters (scaling, offset, or randomness), you might need to calculate a range of potential coordinates for each one.

Example (starting from the base point (5, 5), applying the scaling factor first and then the offset):

Server 1: Scaling factor of 10, offset (20, 30)

Coordinates: (70, 80)

Server 2: Scaling factor of 5, random offset (3, -2)

Coordinates: (28, 23)

Server 3: No scaling, random offset (-4, 7)

Coordinates: (1, 12)
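Combining the parameters per server is easiest to follow with an explicit order of operations. The sketch below assumes each server applies its scaling factor first and then its offset (the two orders give different results); `ServerParams` and `transform` are hypothetical names, and the "random" offsets are fixed here so the output is repeatable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerParams:
    """Hypothetical per-server settings; scale is applied before the offset."""
    name: str
    scale: float = 1
    dx: float = 0
    dy: float = 0


def transform(p: tuple[float, float], s: ServerParams) -> tuple[float, float]:
    """Scale the point, then shift it by the server's offset."""
    x, y = p
    return (x * s.scale + s.dx, y * s.scale + s.dy)


servers = [
    ServerParams("Server 1", scale=10, dx=20, dy=30),
    ServerParams("Server 2", scale=5, dx=3, dy=-2),  # "random" offset, fixed here
    ServerParams("Server 3", dx=-4, dy=7),           # no scaling
]

for s in servers:
    print(s.name, transform((5, 5), s))
# Server 1 (70, 80)
# Server 2 (28, 23)
# Server 3 (1, 12)
```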

Implementing Dynamic Coordinate System: If you wanted to build a dynamic system where the coordinates change based on live server conditions, you'd introduce live variables (such as server load, current requests, or server location) to adjust these coordinates.

Example:

If the server load is high, the coordinates might be dynamically adjusted to a less congested area.

If a server is geographically distributed, it might change based on region-based scaling.

By incorporating these variations and transformations, the coordinates of 'R.A.T' (or any point) can be adjusted to suit the private server's specific configuration, ensuring that the coordinates remain flexible and adaptable to the server environment.


Upgraded atomic force microscope captures 3D images of calcite dissolving

Understanding the dissolution processes of minerals can provide key insights into geochemical processes. Attempts to explain some of the observations during the dissolution of calcite (CaCO3) have led to the hypothesis that a hydration layer forms, although this has been contested. Hydration layers are also important as they play a role in a number of processes including adhesion, corrosion and wetting, as well as the folding, stability and recognition of proteins. Now researchers led by Kazuki Miyata, Adam S. Foster and Takeshi Fukuma at the Nano Life Science Institute (WPI-NanoLSI) at Kanazawa University in Japan have successfully upgraded their atomic force microscope to retrieve imaging data with the time and spatial resolution needed to obtain 3D structure images that provide direct evidence of a hydration layer forming during the dissolution of calcite.

Read more.

#Materials Science#Science#Atomic force microscopy#Calcite#Materials characterization#Minerals#Geology#Kanazawa University


hi!! New fan here, i was wondering, how do you know which stats and data to look for in terms of fairly comparing the performances of drivers? and just in general tips on race analysis :') like figuring out if they did the right strategy, the data you need to look for, anything else you find significant? thank you so much! i'd like to be more objective tbh bc the emotions are vv strong during a race and they tend to overpower my rational side.

Well, there is a lot that can go into this. Some of it is very much a learned skill that you get better at over time.

General good rules of thumb for comparison and analysis:

1. The same car/teammates: comparing teammates is usually very helpful in understanding a car and also where the two drivers differ. Since they are in the same machinery, we can see the general characteristics of a specific car, and we can also see differences that can be attributed to a specific driver. Comparing teammates is always useful, and it helps in understanding a team overall because you know that team is constantly comparing its drivers' performances.

2. Max (or whoever is the current #1): having Max as a benchmark for the current top driver is always helpful because he's obviously the one to beat. Also, since he is so good, he delivers amazing data at each track. So if I want to see where the SF-24 or Charles needs to improve, comparing to Max is a good way to do it. It would not be as helpful to compare Charles to, say, Fernando, who is a great driver, but the car he's in and his standing right now just aren't going to offer many valuable insights.

3. Race strategy: this is a very complicated topic; it is way more like chess than people realize. Who starts where, the places they can likely gain from there, and the timing needed to gain those places are all factors. You really get a sense of it after you watch for a while. This is an extremely complex area that is a constantly evolving logic problem (which is why teams are so focused during races). This is the area where I have the fewest tips, because it really is one of those things that just comes from experience and observation. One thing to do would be to read interviews about what your team is saying about its strategy and the thinking that went into it. Spatial reasoning and logic are a big part of race strategy, and once the lights go out those things get affected by time and random events (e.g. a driver DNFing). So race strategy is a combination of spatial logic and being able to apply it dynamically as time progresses over a race. Thinking of it as chess that changes every lap is a good way to think about it.

4. Race-specific battles: pay attention during races to who is really battling (e.g. Lewis and Oscar in Jeddah, Lando and Carlos in Suzuka, or Lando and Charles in Melbourne); then you can look at the data for insight into why the battle played out the way it did. Another example: if you wanted to understand why McLaren made Oscar and Lando switch in Australia, you can go look at the data and the answer is pretty clear. These kinds of comparisons can give a lot of insight into why a team made certain strategy calls.

5. DRS: DRS is always something I look at when talking about speed, because while it's great for speed, it's more a matter of luck, of being in the right place at the right time. So if I want to be fair in pace or speed comparisons, I try to find laps where either both drivers got DRS or neither did (sometimes this isn't possible, but it's a good factor to keep in mind).

6. Same tyres: when comparing pace and speed, it's important to keep the tyre compound in mind. Comparing a fast lap one driver did on mediums to one another driver did on hards isn't really in good faith. Sometimes a cross-compound comparison can be useful (e.g. a driver setting the same times on hards as another driver on mediums is interesting and worth digging into). But if you want a direct comparison and want to eliminate this variable, always compare on the same compound (sometimes this isn't possible, so keep that in mind). Comparing across compounds is helpful too, but you should be very clear about why you're doing it and the logic behind it.

7. Field placement: comparing a midfield car to a top car isn't super helpful. Max isn't Logan's competition; other midfield drivers are. So it's important to look at who is actually competing with whom. You could do a Logan-to-Max comparison, but it likely wouldn't offer much insight into either driver's strengths or weaknesses. Comparing Logan to Alex (his teammate) or Zhou (someone else in the midfield) is going to be way more useful.

8. Weather/Temperature: this can be a massive confounding factor for performance and should always be considered. Was it raining? Was it windy? Was there notable heat? How was that affecting drivers? And so on.

9. Mechanical issues: always note them and take them into account. This sounds like a no-brainer, but not everyone does it, and it leads to a lot of bad-faith representations of a driver or a race.

10. Team radio: if you want to understand what a driver and team were thinking during the race, and why things played out the way they did (good or bad), team radio usually has a lot of answers. A good example is Charles' radio in Suzuka: you can hear him discussing which strategy to go with and the team working it out with him. Radios are very informative. I always listen to Charles', so if you have a driver or team you're focused on, I highly recommend doing the same.

11. Sometimes there is no way to do a fair comparison: this is important to keep in mind (and again, a lot of people forget it). For example, in Bahrain, when Charles had that massive brake temperature imbalance issue, no one else had it, so we can't really compare his pace or performance to anyone's while accounting for that. We can still compare, say, his fastest lap to Carlos' to see what he was able to do in less-than-optimal circumstances. Sometimes conditions simply don't allow for a totally fair comparison, and that is always worth noting. It's a trap a lot of people fall into: making it appear as though a comparison is fair when it isn't. Sometimes the fact that no fair comparison can be made is informative in itself.
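If you keep lap data in a spreadsheet or script, the like-for-like checks from points 5, 6, 9 and 11 can be applied mechanically. Here is a minimal sketch under stated assumptions: the `Lap` record and its field names are hypothetical (not from any real F1 data API), and pairing laps by lap number is just one possible choice. It keeps only laps where both drivers were on the same compound, had the same DRS status, and had no flagged mechanical issue, and it returns `None` when nothing comparable is left.

```python
from dataclasses import dataclass


@dataclass
class Lap:
    # Hypothetical lap record; field names are illustrative only.
    driver: str
    lap_number: int
    lap_time_s: float
    compound: str                  # e.g. "SOFT", "MEDIUM", "HARD"
    drs: bool                      # whether DRS was used on this lap
    mechanical_issue: bool = False


def comparable_pairs(laps_a, laps_b):
    """Pair laps from two drivers on the same lap number, keeping only
    pairs with the same compound and DRS status and no mechanical issue."""
    by_lap_b = {lap.lap_number: lap for lap in laps_b}
    pairs = []
    for a in laps_a:
        b = by_lap_b.get(a.lap_number)
        if b is None:
            continue  # the other driver has no lap with this number
        if a.mechanical_issue or b.mechanical_issue:
            continue  # not a fair comparison (points 9 and 11)
        if a.compound != b.compound or a.drs != b.drs:
            continue  # different tyres or DRS status (points 5 and 6)
        pairs.append((a, b))
    return pairs


def mean_gap(pairs):
    """Average lap-time gap (driver A minus driver B) over comparable laps,
    or None when no fair comparison is possible."""
    if not pairs:
        return None
    return sum(a.lap_time_s - b.lap_time_s for a, b in pairs) / len(pairs)
```

Pairing by lap number keeps fuel loads roughly similar; you could instead pair by tyre age or by stint, depending on the question you are asking.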

A lot of analysis, I feel, just falls under plain old common sense and logical reasoning. Races are big logic puzzles, so as with any logic-based game, it takes practice to get the hang of it. That just comes with watching races and paying attention to the details.

Also, analysis doesn't get rid of the emotional reactions (at least not for me). I watch live and have all kinds of emotions; I just have them in private and wait to look at the data. That is totally normal, for the record. This is why I recommend re-watching a race for analysis, for two reasons: 1. you will probably catch a lot of things you didn't notice the first time, and 2. you won't be as emotional and will probably be able to view what happened more objectively.

Hopefully this answers your question <3

#luci answers#race analysis tips#analysis resources#race strategy is both more complicated than people think but it's also not rocket science#it's just a big logic puzzle that evolves every second so you have to keep finding new solutions


Location Intelligence Sri Lanka

Transform Your Business with Location Intelligence: Unlock the Power of Geospatial Insights

Location Intelligence empowers organizations to make data-driven decisions by providing actionable insights derived from geospatial data. By integrating location-based analytics into your operational processes, you can enhance efficiency, improve customer engagement, and drive strategic growth.

Get in Touch with us

Location

7 Temasek Boulevard, #12-07, Suntec Tower One, Singapore 038987

Email Address

Phone Number

+65 6428 6222

#Location Intelligence#Spatial Analytics#GIS Solutions#Geospatial Data#Business Intelligence#Mapping Technology#Geographic Insights


!!!!!!!!!!!!!!!!!! THIS IS AWESOME I LOVE THIS!!!!!!!!!!!!

Approximately 400 times in a woman's life, a mature egg makes the “leap.” It is released into the fallopian tube, ready for fertilization by the sperm. Researchers led by Melina Schuh, Christopher Thomas, and Tabea Lilian Marx from the Max Planck Institute for Multidisciplinary Sciences have now succeeded in visualizing the entire process of ovulation in mouse follicles in real-time. The new live imaging method developed by the team allows for the process to be studied with high spatial and temporal resolution, contributing to new insights in fertility research.


Arthritis medications could reverse COVID lung damage - Published Sept 6, 2024

Arthritis drugs already available for prescription have the potential to halt lingering lung problems that can last months or years after COVID-19 infections, new research from the University of Virginia School of Medicine and Cedars-Sinai suggests.

By examining damaged human lungs and developing an innovative new lab model, the scientists identified faulty immune processes responsible for the ongoing lung issues that plague an increasing number of people after they've otherwise recovered from COVID-19. These lasting harms of COVID infection, known as "post-infection lung fibrosis," have no good treatments. The new research, however, suggests that existing drugs such as baricitinib and anakinra can disrupt the malfunctioning immune response and finally allow damaged lungs to heal.

"Using advanced technologies like spatial transcriptomics and sophisticated microscopy, we compared lung tissues from patients and animal models we developed in the lab. We found that malfunctioning immune cells disrupt the proper healing process in the lungs after viral damage. Importantly, we also identified the molecules responsible for this issue and potential therapeutic options for patients with ongoing lung damage."

"'Spatial-omics' are state-of-the-art technologies that can measure the molecular features with spatial location information within a sample," explained researcher Chongzhi Zang, PhD, of UVA's Department of Genome Sciences. "This work demonstrates the power of spatial transcriptomics combined with data science approaches in unraveling the molecular etiology of long COVID."

The researchers note that the findings could prove beneficial not just for lung scarring from COVID but for lung fibrosis stemming from other sources as well.

"This study shows that treatments used for the acute COVID-19 disease may also reduce the development of chronic sequelae, including lung scarring," said Peter Chen, MD, the Medallion Chair in Molecular Medicine and interim chair of the Department of Medicine at Cedars-Sinai. "Our work will be foundational in developing therapies for lung fibrosis caused by viruses or other conditions."

Understanding COVID-19 lung damage

The researchers – led by Sun, Chen and Zang – wanted to better understand the cellular and molecular causes of the lingering lung problems that can follow COVID infections. These problems can include ongoing lung damage and harmful inflammation that persists well after the COVID-19 virus has been cleared from the body.

The researchers began by examining severely damaged lungs from transplant patients at both UVA and Cedars-Sinai. None of the patients had a lung disease that would have required a transplant prior to contracting COVID-19, so the scientists were hopeful that the lungs would provide vital clues as to why the patients suffered such severe lung damage and persistent fibrosis. Using the insights they obtained, the scientists then developed a new mouse model to understand how normally beneficial immune responses were going awry.

The researchers found that immune cells known as CD8+ T cells were having faulty interactions with another type of immune cell, macrophages. These interactions were causing the macrophages to drive damaging inflammation even after the initial COVID-19 infection had resolved, when the immune system would normally stand down.

The scientists remain uncertain about the underlying trigger for the immune malfunction – the immune system may be responding to lingering remnants of the COVID-19 virus, for example, or there could be some other cause, they say.

The new research suggests that this harmful cycle of inflammation, injury and fibrosis can be broken using drugs such as baricitinib and anakinra, both of which have already been approved by the federal Food and Drug Administration to treat the harmful inflammation seen in rheumatoid arthritis and alopecia, a form of hair loss.

While more study is needed to verify the drugs' effectiveness for this new purpose, the researchers hope their findings will eventually offer patients with persistent post-COVID lung problems much-needed treatment options.

"Tens of millions of people around the world are dealing with complications from long COVID or other post-infection syndromes," Sun said. "We are just beginning to understand the long-term health effects caused by acute infections. There is a strong need for more basic, translational and clinical research, along with multi-disciplinary collaborations, to address these unmet needs of patients."

Journal reference: Narasimhan, H., et al. (2024). An aberrant immune–epithelial progenitor niche drives viral lung sequelae. Nature. doi.org/10.1038/s41586-024-07926-8 www.nature.com/articles/s41586-024-07926-8

#covid#mask up#pandemic#covid 19#wear a mask#coronavirus#sars cov 2#public health#still coviding#wear a respirator


How to Integrate 3D Map Illustration into Site Analysis for Architectural Projects

In the world of architecture and urban planning, understanding the terrain, infrastructure, and environment is critical before laying the first brick. Traditional site analysis methods have served us well, but as technology advances, so do our tools. One of the most innovative advancements in this space is the use of 3D Map Illustration and 3D Vector Maps in architectural planning.

Combining artistic clarity with technical depth, 3D map illustrations offer more than just visual appeal—they provide actionable insights. Let’s explore how integrating these advanced tools into your Architecture Illustration process can streamline site analysis and improve project outcomes.

What Is a 3D Map Illustration?

A 3D map illustration is a three-dimensional visual representation of a site. Unlike standard 2D maps, it displays terrain, buildings, infrastructure, vegetation, and other features in three-dimensional space, giving architects, engineers, and planners a much deeper understanding of site conditions.

Often created using GIS data, CAD software, and digital illustration tools, 3D map illustrations are ideal for both technical analysis and presentation purposes. They are more than just artistic renderings—they’re functional, data-driven visual tools.

Why Use 3D Map Illustrations in Site Analysis?

Site analysis involves examining a location’s topography, climate, vegetation, zoning, infrastructure, and accessibility. Here’s why 3D Vector Maps and 3D map illustrations are revolutionizing this process:

1. Enhanced Spatial Understanding

While 2D drawings give flat representations, a 3D Map Illustration provides a volumetric perspective.

2. Data Integration

Modern 3D vector maps can incorporate data layers such as topography, utilities, and environmental factors. This integration helps identify potential challenges, like flood zones or unstable terrain, early in the design process.

3. Improved Client Communication

With Architecture Illustration in 3D, clients and community members can easily understand and visualize the proposed development within its context.

4. Better Decision-Making

Using 3D maps during site analysis supports better decision-making. Whether it’s choosing optimal building orientation, identifying natural shade zones, or evaluating how structures impact sightlines, the 3D visualization simplifies complex evaluations.

Step-by-Step: Integrating 3D Map Illustration into Site Analysis

Let’s break down how to incorporate 3D map illustration and 3D Vector Maps into your architectural site analysis workflow.

Step 1: Gather Site Data

Topographical surveys

GIS layers

Aerial imagery

Zoning regulations

Infrastructure maps (roads, utilities, drainage)

Environmental reports

Step 2: Choose the Right Tools and Software

There are many tools available for creating 3D Vector Maps and architectural illustrations. Some of the popular ones include:

SketchUp – Great for quick, interactive 3D models.

Blender or Cinema 4D – For highly stylized 3D illustrations.

Adobe Illustrator (with plugins) – To enhance vector-based output.

Choose a combination that fits both your technical needs and aesthetic style.

Step 3: Create the Base Terrain Model

Start by building a base terrain model from your topographical survey and elevation data. This forms the physical base upon which other features (roads, buildings, vegetation) will be layered. Many software platforms can convert contour lines and elevation points into 3D surfaces automatically.
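To give a feel for what "converting elevation points into a surface" involves, here is a minimal sketch of one simple technique, inverse distance weighting (IDW), which estimates the height of each grid cell from nearby survey points. This is an illustrative assumption, not how any particular package works (many tools build a triangulated irregular network or use other interpolators), and the function names are hypothetical. Coordinates are assumed to be in a projected system such as metres, not raw latitude/longitude.

```python
import math


def idw_elevation(points, x, y, power=2.0):
    """Estimate elevation at (x, y) from scattered survey points.

    points: list of (px, py, z) tuples in a projected coordinate system
    (e.g. metres). Closer points get larger weights (1 / distance**power).
    """
    if not points:
        raise ValueError("need at least one survey point")
    num = 0.0
    den = 0.0
    for px, py, z in points:
        d = math.hypot(x - px, y - py)
        if d == 0.0:
            return z  # query location coincides with a survey point
        w = 1.0 / d ** power
        num += w * z
        den += w
    return num / den


def build_grid(points, xmin, ymin, cell, nx, ny, power=2.0):
    """Interpolate an ny-by-nx elevation grid; row j lies at y = ymin + j * cell."""
    return [
        [idw_elevation(points, xmin + i * cell, ymin + j * cell, power) for i in range(nx)]
        for j in range(ny)
    ]
```

The resulting grid of heights is the kind of raster that 3D tools can extrude into a terrain mesh; a higher `power` makes the surface follow the nearest survey points more closely.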

Step 4: Add Site Features Using Vector Layers

Now incorporate other elements such as:

Roads and transportation networks

Water bodies and drainage systems

Green zones and vegetation

Existing structures or utilities

These layers, typically drawn as 3D Vector Maps, provide an accurate spatial layout of all critical site components.

Step 5: Apply Architecture Illustration Techniques

This is where artistry meets data. Use Architecture Illustration principles to render the map with aesthetic enhancements:

Textures for terrain (grass, sand, water, urban)

Stylized representations of trees, buildings, and shadows

Labeling of key zones and infrastructure

Lighting effects for better depth perception

Step 6: Use for Analysis and Reporting

Once the map is complete, use it to conduct site analysis:

Determine view corridors and sightlines

Analyze sun paths and shading

Evaluate accessibility and circulation

Review spatial relationships and setbacks

These insights can then be documented in your architectural site report, with visuals that clearly back up your recommendations.
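For the sun-path and shading step, a rough first pass can be done with standard textbook approximations before moving to dedicated solar tools. The sketch below is an assumption-laden illustration: it uses Cooper's declination approximation and the hour angle (15 degrees per hour from solar noon), ignores atmospheric refraction and the equation of time, and treats the ground as flat. The function names are hypothetical.

```python
import math


def solar_elevation_deg(latitude_deg, day_of_year, solar_hour):
    """Approximate sun elevation above the horizon, in degrees.

    Declination uses Cooper's approximation; the hour angle is 15 degrees
    per hour away from solar noon. Refraction and the equation of time are
    ignored, so treat results as rough.
    """
    decl = math.radians(23.45 * math.sin(math.radians(360.0 / 365.0 * (284 + day_of_year))))
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))
    lat = math.radians(latitude_deg)
    sin_alt = (math.sin(lat) * math.sin(decl)
               + math.cos(lat) * math.cos(decl) * math.cos(hour_angle))
    sin_alt = max(-1.0, min(1.0, sin_alt))  # guard against rounding past +/-1
    return math.degrees(math.asin(sin_alt))


def shadow_length_m(height_m, elevation_deg):
    """Shadow length on flat ground for an object of height_m.

    Returns None when the sun is at or below the horizon.
    """
    if elevation_deg <= 0.0:
        return None
    return height_m / math.tan(math.radians(elevation_deg))
```

Looping `solar_elevation_deg` over the hours of a few key dates (for example, the solstices) and feeding the results to `shadow_length_m` gives a quick sense of how far a proposed building's shadow might reach.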

Use Cases: Where 3D Map Illustration Excels

Here are a few examples of how 3D Vector Maps are applied in real architectural projects:

Urban Master Planning

City planners use 3D maps to visualize entire neighborhoods, analyze density, and simulate transportation flows before construction begins.

Resort and Campus Design

When designing large areas like resorts or educational campuses, 3D illustrations help stakeholders understand zoning, amenities, and pedestrian routes.

Landscape Architecture

Landscape architects use 3D map illustration to study the interaction between built environments and nature—perfect for planning gardens, parks, and open spaces.

Infrastructure Projects

Infrastructure projects, like bridges, tunnels, and roads, benefit from 3D visuals to navigate complex terrain and urban constraints.

Integrating 3D Vector Maps in Projects

Here are a few additional benefits of incorporating 3D Vector Maps at the start of your architectural workflow:

Reduces design errors by visualizing constraints early

Accelerates approvals with more convincing presentations

Encourages collaboration across disciplines (engineering, landscaping, urban planning)

Saves cost and time by identifying site issues before they become expensive problems

The Future of Architecture Illustration and Mapping

We’re heading toward a future where maps aren’t just flat diagrams—they’re immersive, interactive environments.

From drone-based site scans to AR-compatible 3D maps, architectural site analysis is becoming more data-rich and user-friendly. It’s no longer about lines and elevations—it’s about experiences and environments.

Final Thoughts

Incorporating 3D Map Illustration and 3D Vector Maps into your site analysis isn’t just a trend—it’s a game-changer. These tools enhance your technical accuracy, improve communication, and ultimately lead to more successful architectural outcomes.

Whether you’re designing a single home or planning an entire urban district, using modern Architecture Illustration tools helps you see the full picture—literally and figuratively.

So, as you gear up for your next architectural project, make sure your toolkit includes more than just rulers and blueprints.


How to Add CSV Data to an Online Map?

Introduction

If you're working with location-based data in a spreadsheet, turning it into a map is one of the most effective ways to make it visually engaging and insightful. Whether you're planning logistics, showcasing population distribution, or telling a location-driven story, uploading CSV (Comma-Separated Values) files to an online mapping platform helps simplify and visualize complex datasets with ease.

🧩 From Spreadsheets to Stories

If you've ever worked with spreadsheets full of location-based data, you know how quickly they can become overwhelming and hard to interpret. But what if you could bring that data to life—turning rows and columns into interactive, insightful maps?

📌 Why Map CSV Data?

Mapping CSV (Comma-Separated Values) data is one of the most effective ways to simplify complex datasets and make them visually engaging. Whether you're analyzing population trends, planning delivery routes, or building a geographic story, online mapping platforms make it easier than ever to visualize the bigger picture.

⚙️ How Modern Tools Simplify the Process

Modern tools now allow you to import CSV or Excel files and instantly generate maps that highlight patterns, relationships, and clusters. These platforms aren’t just for GIS professionals—anyone with location data can explore dynamic, customizable maps with just a few clicks. Features like filtering, color-coding, custom markers, and layered visualizations add depth and context to otherwise flat data.

📊 Turn Data Into Actionable Insights

What’s especially powerful is the ability to analyze your data directly within the map interface. From grouping by categories to overlaying district boundaries or land-use zones, the right tool can turn your basic spreadsheet into an interactive dashboard. And with additional capabilities like format conversion, distance measurement, and map styling, your data isn't just mapped—it's activated.
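Mapping platforms do distance measurement for you, but it helps to know roughly what a straight-line distance between two coordinates means. A common approach is the haversine formula on a spherical Earth. The sketch below assumes a mean Earth radius of 6,371 km (so results differ slightly from ellipsoidal measurements) and hypothetical `lat`/`lon` keys in each row; it is not a description of how any specific platform computes distances.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical-Earth assumption


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def within_km(rows, center_lat, center_lon, radius_km):
    """Keep rows (dicts with 'lat' and 'lon' keys) within radius_km of a center point."""
    return [r for r in rows
            if haversine_km(center_lat, center_lon, r["lat"], r["lon"]) <= radius_km]
```

A radius filter like `within_km` is one simple way to answer questions such as "which locations are within 10 km of this depot?" directly from spreadsheet rows.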

🚀 Getting Started with Spatial Storytelling

If you're exploring options for this kind of spatial storytelling, it's worth trying platforms that prioritize ease of use and flexibility. Some tools even offer preloaded datasets and drag-and-drop features to help you get started faster.

🧭 The Takeaway

The bottom line? With the right platform, your CSV file can become more than just data—it can become a story, a strategy, or a solution.

Practical Example

Let’s say you have a CSV file listing schools across a country, including their names, coordinates, student populations, and whether they’re public or private. Using an interactive mapping platform like the one I often work with at MAPOG, you can assign different markers for school types, enable tooltips to display enrollment figures, and overlay district boundaries. This kind of layered visualization makes it easier to analyze the spatial distribution of educational institutions and uncover patterns in access and infrastructure.
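Online platforms handle the import step for you, but the underlying idea is simple: each CSV row with a latitude and longitude becomes a point feature, and the remaining columns become its attributes. As a minimal sketch, assuming hypothetical column names (`name`, `lat`, `lon`, `students`, `type`), the code below converts a schools CSV like the one above into GeoJSON, an open format (RFC 7946) that many web mapping tools accept. Whether a given platform imports it this way is an assumption to check in its documentation.

```python
import csv
import io
import json
from collections import Counter


def csv_to_geojson(csv_text, lat_col="lat", lon_col="lon", int_cols=()):
    """Convert CSV rows with latitude/longitude columns into a GeoJSON
    FeatureCollection; every other column becomes a feature property."""
    features = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        lat = float(row.pop(lat_col))
        lon = float(row.pop(lon_col))
        for col in int_cols:
            row[col] = int(row[col])  # e.g. enrollment figures for tooltips
        features.append({
            "type": "Feature",
            # GeoJSON orders coordinates as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [lon, lat]},
            "properties": row,
        })
    return {"type": "FeatureCollection", "features": features}


def count_by(geojson, prop):
    """Count features per category, e.g. public vs private schools."""
    return Counter(f["properties"][prop] for f in geojson["features"])


if __name__ == "__main__":
    sample = ("name,lat,lon,students,type\n"
              "North High,6.93,79.85,1200,public\n"
              "Lake Academy,7.29,80.63,450,private\n")  # made-up rows
    print(json.dumps(csv_to_geojson(sample, int_cols=("students",)), indent=2))
```

Keeping the school type as a property is what lets a map tool assign different markers per category, and `count_by` gives a quick sanity check on those categories before uploading.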

Conclusion

Using CSV files to create interactive maps is a powerful way to transform static data into dynamic visual content. Tools like MAPOG make the process easy, whether you're a beginner or a GIS pro. If you’re ready to turn your spreadsheet into a story, start mapping today!

Have you ever mapped your CSV data? Share your experience in the comments below!


Astronomers discover 2,674 dwarf galaxies using Euclid telescope

ESA's Euclid space telescope has been providing valuable data from the depths of space for almost two years. With its help, scientists aim to create the largest and most accurate 3D map of the universe to date, covering billions of stars and galaxies. The data from Euclid is analyzed by the international Euclid consortium, which also includes the research teams of Francine Marleau and Tim Schrabback at the University of Innsbruck.

From 25 Euclid images, astronomer Marleau and her team at the Department of Astro- and Particle Physics at the University of Innsbruck have now discovered a total of 2,674 dwarf galaxies and created a catalogue of dwarf galaxy candidates. Using a semi-automatic method, the scientists have identified candidates and analyzed and described them in detail.

"Of the galaxies identified, 58% are elliptical dwarf galaxies, 42% are irregular galaxies and a few are rich in globular clusters (1%), galactic nuclei (4%) and a noticeable fraction (6.9%) of dwarfs with blue compact centers," say Marlon Fügenschuh and Selin Sprenger from Marleau's team.

Testing cosmological models

The study, posted to the arXiv preprint server, provides insights into the morphology, distance, stellar mass, and environmental context of dwarf galaxies. As part of the Euclid project, Marleau is investigating the formation and development of galaxies, especially dwarf galaxies, the most numerous galaxies in the universe, whose abundance and distribution provide critical tests for cosmological models.

"We took advantage of the unprecedented depth, spatial resolution, and field of view of the Euclid Data. This work highlights Euclid's remarkable ability to detect and characterize dwarf galaxies, enabling a comprehensive view of galaxy formation and evolution across diverse mass scales, distances, and environments", emphasizes Marleau.

Deep look into the universe

The European Space Agency's (ESA) Euclid space telescope was launched on 1 July 2023. Scientists hope to learn more about dark matter and dark energy, which together make up most of the universe but remain poorly understood. The international Euclid consortium analyzes the data. Over the next few years, the 1.2-meter-diameter space telescope will create the largest and most accurate 3D map of the universe and observe billions of galaxies.

Euclid can use this map to reveal how the universe expanded after the Big Bang and how the structures in the universe have developed. This gives scientists more clues to better understand the role of gravity and the nature of dark energy and dark matter.

More than 2,000 scientists from about 300 institutes and laboratories are jointly analyzing the mission data, which are also supplemented by ground-based telescopes.

IMAGE: Some of the dwarf galaxies discovered in the Euclid images. Credit: arXiv (2025). DOI: 10.48550/arxiv.2503.15335
