#Understanding-DevOps-Engineers


Text

What Are DevOps Engineers? The Role, Responsibilities, and Skills You Need to Succeed- OpsNexa!

Learn what DevOps engineers do and why they are critical to modern software development. This post covers the main duties, skills, and technologies required for success in this in-demand profession, and explains how DevOps engineers use automation, collaboration, and continuous integration to optimise software delivery.

#What-Are-DevOps-Engineers#DevOps-Engineer-Role#Who-Is-a-DevOps-Engineer#Understanding-DevOps-Engineers#DevOps-Professionals

0 notes

Text

Valar & Co. Office — The Peacekeeping Bureau of Arda

Manwë — Chief Executive Officer (CEO) Officially the boss, but won’t sign a single order without consulting his wife. Loves meetings where nothing ever gets decided. "Varda, can I say something...?"

Varda — Shadow Executive / Chief Lighting Technician Are the stars on? Great. But now the Elves are swearing eternal oaths at night and falling into despair by day. "I just wanted to make things beautiful..."

Ulmo — Head of the Remote Office (always remote) Never shows up to meetings. Sends long memos in seashells and communicates via sound waves. "I can't join the Zoom call — there's a storm on the line."

Aulë — Chief Engineer / DevOps Builds automation systems, then regrets it when they gain sentience. "I just wanted to speed things up — now we have seven interns with hammers..."

Yavanna — Environmental & Indoor Plant Department Fights for a green office, waters the moss on the walls. Constant conflict with Aulë for “concreting over her flowerbeds.” "This is a sacred ficus — don't touch it!"

Námo (Mandos) — Head of HR / Department of Soul Archives Conducts eternal exit interviews. Fired employees go to him — permanently. Speaks softly; everyone’s terrified of him. "Your position: dead. Please wait in the corridor."

Vairë — Archivist / Records Management Weaves reports — sometimes literally. Remembers everything. "Here’s the file: 'Curses & Oaths, Volume I, Fëanor.' Don’t mix it up with Volume II."

Irmo (Lórien) — Head of Dreams & Motivation Department Sleeps on the job — calls it a "visionary insight session." Everyone goes to him for inspiration, no one understands a word. "This isn’t coffee — it’s a dream-petal infusion."

Estë — Office Therapist Hands out blankets, tea, and naps. Incredibly busy, because everyone is burnt out. "No meetings. Only silence and lying down."

Tulkas — Security / Special Forces for Motivation Don’t ask why his brass knuckles have the Valar logo. Very cheerful — until he isn’t. "Who bullied Ulmo? Come on, step forward."

Oromë — Head of the Expeditionary Department Always on a field assignment. Claims he’s recruiting among wild tribes. "Haven’t found the horse yet, but I did find a wild wizard — useful?"

Vána — Corporate Culture Manager Organizes morning dances and office greenery. No one knows exactly what she does, but the place always smells like flowers.

Nienna — Office Psychologist / Empathy Mentor You go to her when everything’s already gone wrong. Everyone cries — and somehow feels better. "You suffer. That means you’re growing."

#lord of the rings#the silmarillion#tolkien#fanfic#silm fic#silmarillion#lort of the rings#lort#the silmarilion#the silm#the silm fandom#yavanna#nienna#varda#manwe#ulmo#valar

21 notes


Text

the three types of programmer job posting in 2025:

we need someone with strong problem solving skills and a knowledge of C/C++ Python and Java who enjoy challenging. experience with bash scripts a plus

we need a CI/CD Spring Boot Agile DevOps test engineer with Jira fast-paced weekends start immediately 5 years experience required no degree

we need a react/node.js html/css c# typescript webdev who also knows python oh god why does no one in webdev understand basic data structures

7 notes


Text

Oh gawd, it’s all unravelling!! I’ve resorted to asking my ChatGPT for advice on how to handle this!! This is the context I put it:

work in a small startup with 7 other people

I have been brought on for a three month contract to assess the current product and make recommendations for product strategy, product roadmap, improved engineering and product processes with a view to rebuilding the platform with a new product and migrating existing vendors and borrowers across

There is one engineer and no-one else in the company has any product or technical experience

The engineer has worked on his own for 6 years on the product with no other engineering or product person

He does all coding, testing, development, devops tasks

He also helps with customer support enquiries

He was not involved in the process of bringing me onboard and felt blindsided by my arrival

I have requested access to Github, and his response was:

As you can imagine access to the source code is pretty sensitive. Are you looking for something specifically? And do you plan on downloading the source code or sharing with anyone else?

He then advised they only pay for a single seat

I have spoken with the Chief Operations Officer who I report to in the contract and advised my business risk concerns around single point of failure

I have still not been granted access to Github so brought it up again today with the COO, who said he had requested 2 weeks ago

The COO then requested on Asana that the engineer add myself and himself as Github users

I received the following from the engineer:

Hey can you please send me your use cases for your access to GitHub? How exactly are you going to use your access to the source code?

My response:

Hey! My request isn’t about making changes to the codebase myself but ensuring that Steward isn’t reliant on a single person for access.

Here are the key reasons I need GitHub access:

1 Business Continuity & Risk Management – If anything happens to you (whether you’re on holiday, sick, or god forbid, get hit by a bus!!), we need someone else with access to ensure the platform remains operational. Right now, Steward has a single point of failure, which is a pretty big risk.

2 Product Oversight & Documentation – As Head of Product, I need visibility into the codebase to understand technical limitations, dependencies, and opportunities at a broad level. This DOESN'T mean I’ll be writing code, but I need to see how things are structured to better inform product decisions and prioritization.

3 Facilitating Future Hiring – If we bring in additional engineers or external contractors, we need a structured process for managing access. It shouldn't be on just one person to approve or manage this.

Super happy to discuss any concerns you have, but this is ultimately a business-level decision to ensure Steward isn’t putting itself at risk.

His response was:

1&3 Bridget has user management access for those reasons

2. no one told me you were Head of Product already, which isn’t surprising. But congrats! So will you be sharing the source code with other engineers for benchmarking?

The software engineer is an introvert and, while not rude, is helpful without volunteering information

He is also the single access for AWS, Sentry, Persona (which does our KYC checks).

I already had a conversation with him as I felt something was amiss in the first week. This was when he identified that he had been "blindsided" by my arrival, felt his code and work was being audited. I explained that it had been a really long process to get the contract (18 months), also that I have a rare mix of skills (agtech, fintech, product) that is unusually suited to Steward. I was not here to tell him what to do but to work with him, my role to setup the strategy and where we need to go with the product and why, and then work with him to come up with the best solution and he will build it. I stressed I am not an engineer and do not code.

I have raised some concerns with the COO and he seems to share some of the misgivings, I sense some personality differences, there seems like there are some undercurrents that were there before I started.

I have since messaged him with a gentler more collaborative approach:

Hey, I’ve been thinking about GitHub access and wanted to float an idea, would it make sense for us to do a working session where you just walk me through the repo first? That way, I can get a sense of the structure without us having to rush any access changes or security decisions right away. Then, we can figure out what makes sense together. What do you think?

I’m keen to understand your perspective a bit more, can we chat about it tomorrow when you're back online? Is 4pm your time still good? I know you’ve got a lot on, so happy to be flexible.

I think I’ve fucked it up, I’m paranoid the COO is going to think I’m stirring up trouble and I’m going to miss out on this job. How to be firm yet engage with someone that potentially I’ll have to work closely with(he’s a prickly, hard to engage Frenchie, who’s lived in Aus and the US for years).

5 notes


Text

Are you struggling with deployment issues for your Node.js applications? 😫 Tired of hearing "but it works on my machine"? 🤯 Docker is the game-changer you need!

With Docker, you can containerize your Node.js app, ensuring a smooth, consistent, and scalable deployment across all environments. 🌍

🔥 Why Use Docker for Node.js Deployment?

✅ Eliminates Environment Issues – Package dependencies, runtime, and configurations into a single container for a "works everywhere" experience!

✅ Faster & Seamless Deployment – Reduce deployment time with pre-configured images and lightweight containers!

✅ Improved Scalability – Easily scale your app using Docker Swarm or Kubernetes!

✅ CI/CD Integration – Automate and streamline your deployment pipeline with Docker + Jenkins/GitHub Actions!

✅ Better Resource Utilization – Docker uses less memory and boots faster than traditional virtual machines!

💡 Whether you're a DevOps engineer, developer, or tech enthusiast, understanding Docker for Node.js deployment is a must!

📌 Want to master seamless deployment? Read the full article now!

#node js development#nodejs#top nodejs development company#busniess growth#node js development company

4 notes


Text

A Comprehensive Guide to Deploy Azure Kubernetes Service with Azure Pipelines

Azure Kubernetes Service (AKS) is a powerful orchestration platform for containerized applications in the continuously evolving world of cloud-native technologies. Pair it with Azure Pipelines for consistent CI/CD workflows that accelerate the DevOps process. This guide walks through deploying to Azure Kubernetes Service with Azure Pipelines and offers tips to help engineers build container deployments that work. It also discusses how DevOps consulting services can help you automate this process.

Understanding the Foundations

Kubernetes is now the preferred tool for deploying and running containerized apps in fast-moving software development environments. AKS adds a managed layer that scales, monitors, and orchestrates containerized workloads. Before going further, let's cover the fundamentals.

Azure Kubernetes Service: A managed Kubernetes platform that simplifies container orchestration. It abstracts away the hassles of Kubernetes cluster management so that developers can focus on building applications instead of infrastructure. By leveraging AKS, organizations can:

Deploy and scale containerized applications on demand.

Implement robust infrastructure management

Reduce operational overhead

Ensure high availability and fault tolerance.

Azure Pipelines: The CI/CD Backbone

Azure Pipelines automates building, testing, and deploying code. Combined with Azure Kubernetes Service, it helps teams build robust deployment pipelines in line with the modern DevOps mindset. It integrates with repositories such as GitHub and Azure Repos to automate the application build and deployment.

Spiral Mantra DevOps Consulting Services

If you're a beginner in DevOps or want to scale your organization's capabilities, DevOps consulting services by Spiral Mantra can be a game changer. Our skilled professionals can help businesses implement CI/CD pipelines and provide guidance on containerization and cloud-native development.

Now let’s move on to creating a deployment pipeline for Azure Kubernetes Service.

Prerequisites you would require

Before initiating the process, ensure you fulfill the prerequisite criteria:

Azure Subscription: To run an AKS cluster, you need an Azure subscription. Create one if you don't already have one.

CLI: The Azure CLI lets you administer resources such as AKS clusters from the command line.

A Professional Team: You will need a team with the technical knowledge to set up the pipeline. Hire DevOps developers from us if you don't have one yet.

Kubernetes Cluster: Deploy an AKS cluster with the Azure Portal or an ARM template. This is the cluster your pipeline will deploy to.

Docker: Since you're deploying containers, you need Docker installed locally to build and push container images.

Step-by-Step Deployment Process

Step 1: Begin with Creating an AKS Cluster

Set up an AKS cluster with the Azure CLI or the Azure Portal. Once the cluster is ready, containerize your application: create a Dockerfile that specifies your application's runtime environment. This step lets the same code run consistently across different environments.

Step 2: Setting Up Your Pipelines

You can set up pipelines either in a new project or in an existing one. Both routes are described here.

Create a New Project

Sign in to your Azure DevOps account; on the screen that appears, select the drop-down icon.

Select Create New Project, or choose an existing project.

Finally, add the repositories containing your application code (from either GitHub or Azure Repos).

For an Existing Project

In your existing project, open Pipelines, and then select Create Pipeline.

On the next screen, select the repository containing the application code.

Choose either a YAML pipeline or the starter pipeline. (Note: YAML pipelines are more flexible and are recommended for advanced workflows.)

Then define the pipeline configuration by editing your YAML file in Azure DevOps.

Step 3: Set Up Your Automatic Continuous Deployment (CD)

Next, automate the deployment process to speed up your CI/CD workflows. The easiest and most common approach is to create a YAML file, for example deployment.yaml, that defines the main Kubernetes resources, including deployments, pods, and services.

Once the deployment YAML is in place, the pipeline triggers the Kubernetes deployment automatically whenever code is pushed.
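To make the shape of those resources concrete, here is a minimal sketch that builds a Deployment and a Service as plain Python dictionaries and prints them as JSON, which kubectl accepts alongside YAML. The app name, image path, replica count, and ports are all hypothetical placeholders, and the LoadBalancer service type is just one common choice, not something this guide prescribes.

```python
import json


def build_manifests(name: str, image: str, replicas: int = 2, port: int = 3000) -> list[dict]:
    """Return a Deployment and a Service for one containerized app."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",  # assumption: expose the app publicly
            "selector": labels,
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return [deployment, service]


def render(manifests: list[dict]) -> str:
    """Wrap the objects in a v1 List so a single file can hold them all."""
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2)


if __name__ == "__main__":
    # Hypothetical image name; replace with your own registry path and tag.
    print(render(build_manifests("demo-app", "myregistry.azurecr.io/demo-app:1.0.0")))
```

Pods are not declared separately here: the Deployment's pod template creates them, and the Service routes traffic to any pod carrying the matching label.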

Step 4: Automate the CI/CD Workflow

In this final step, make sure the pipeline runs smoothly every time new code is pushed. With the right CI/CD integration, the workflow continuously builds, tests, and deploys, keeping the application up to date in every AKS environment.

Best Practices for AKS and Azure Pipelines Integration

1. Infrastructure as Code (IaC)

- Utilize Terraform or Azure Resource Manager templates

- Version control infrastructure configurations

- Ensure consistent and reproducible deployments

2. Security Considerations

- Implement container scanning

- Use private container registries

- Regular security patch management

- Network policy configuration

3. Performance Optimization

- Implement horizontal pod autoscaling

- Configure resource quotas

- Use node pool strategies

- Optimize container image sizes
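For horizontal pod autoscaling, it helps to see the arithmetic involved. The sketch below follows the scaling rule described in the Kubernetes documentation (desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance of 1.0). The function name, the min/max clamping parameters, and the sample numbers are illustrative assumptions; the real controller also handles details such as missing metrics and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified model of the horizontal pod autoscaler's replica calculation."""
    ratio = current_metric / target_metric
    # Skip scaling when usage is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target scale out to 6.
    print(desired_replicas(4, 90, 60))
```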

Common Challenges and Solutions

Network Complexity

Utilize Azure CNI for advanced networking

Implement network policies

Configure service mesh for complex microservices

Persistent Storage

Use Azure Disk or Files

Configure persistent volume claims

Implement storage classes for dynamic provisioning

Conclusion

Deploying to Azure Kubernetes Service with effective pipelines is a structured approach to application delivery. By embracing these practices, DevOps consulting companies like Spiral Mantra offer solutions that foster agile and scalable delivery. Our expert DevOps consulting services redefine technological infrastructure by offering comprehensive cloud strategies and Kubernetes containerization with advanced CI/CD integration.

Let’s connect and talk about your cloud migration needs

2 notes


Text

Complete Terraform IAC Development: Your Essential Guide to Infrastructure as Code

If you're ready to take control of your cloud infrastructure, it's time to dive into Complete Terraform IAC Development. With Terraform, you can simplify, automate, and scale infrastructure setups like never before. Whether you’re new to Infrastructure as Code (IAC) or looking to deepen your skills, mastering Terraform will open up a world of opportunities in cloud computing and DevOps.

Why Terraform for Infrastructure as Code?

Before we get into Complete Terraform IAC Development, let's explore why Terraform is the go-to choice. HashiCorp's Terraform has quickly become a top tool for managing cloud infrastructure because it supports multiple cloud providers (AWS, Google Cloud, Azure, and more) and uses a declarative language (HCL) that's easy to learn. It was open source for most of its history; in 2023 HashiCorp moved it to the source-available Business Source License.

Key Benefits of Learning Terraform

In today's fast-paced tech landscape, there’s a high demand for professionals who understand IAC and can deploy efficient, scalable cloud environments. Here’s how Terraform can benefit you and why the Complete Terraform IAC Development approach is invaluable:

Cross-Platform Compatibility: Terraform supports multiple cloud providers, which means you can use the same language and workflow across different clouds, although the resource definitions themselves are specific to each provider.

Scalability and Efficiency: By using IAC, you automate infrastructure, reducing errors, saving time, and allowing for scalability.

Modular and Reusable Code: With Terraform, you can build modular templates, reusing code blocks for various projects or environments.

These features make Terraform an attractive skill for anyone working in DevOps, cloud engineering, or software development.

Getting Started with Complete Terraform IAC Development

The beauty of Complete Terraform IAC Development is that it caters to both beginners and intermediate users. Here’s a roadmap to kickstart your learning:

Set Up the Environment: Install Terraform and configure it for your cloud provider. This step is simple and provides a solid foundation.

Understand HCL (HashiCorp Configuration Language): Terraform’s configuration language is straightforward but powerful. Knowing the syntax is essential for writing effective scripts.

Define Infrastructure as Code: Begin by defining your infrastructure in simple blocks. You’ll learn to declare resources, manage providers, and understand how to structure your files.

Use Modules: Modules are pre-written configurations you can use to create reusable code blocks, making it easier to manage and scale complex infrastructures.

Apply Best Practices: Understanding how to structure your code for readability, reliability, and reusability will save you headaches as projects grow.

Core Components in Complete Terraform IAC Development

When working with Terraform, you’ll interact with several core components. Here’s a breakdown:

Providers: These are plugins that allow Terraform to manage infrastructure on your chosen cloud platform (AWS, Azure, etc.).

Resources: The building blocks of your infrastructure, resources represent things like instances, databases, and storage.

Variables and Outputs: Variables let you define dynamic values, and outputs allow you to retrieve data after deployment.

State Files: Terraform uses a state file to store information about your infrastructure. This file is essential for tracking changes and ensuring Terraform manages the infrastructure accurately.

Mastering these components will solidify your Terraform foundation, giving you the confidence to build and scale projects efficiently.
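As an illustration of how providers, resources, variables, and outputs fit together, the sketch below generates a small configuration in Terraform's JSON syntax (files ending in .tf.json are read alongside .tf files). The region, the variable names, and the single aws_instance resource are hypothetical choices made for this example; a real project would more often write the same thing directly in HCL.

```python
import json


def build_config(region: str, instance_type: str = "t3.micro") -> dict:
    """Return a Terraform configuration as a dict in JSON-syntax layout."""
    return {
        # Provider: the plugin that talks to the cloud platform.
        "provider": {"aws": {"region": region}},
        # Variables: values supplied at plan/apply time.
        "variable": {
            "instance_type": {"default": instance_type},
            "ami_id": {"description": "Machine image to boot (no default; must be supplied)"},
        },
        # Resource: one virtual machine, addressed as aws_instance.web.
        "resource": {
            "aws_instance": {
                "web": {
                    "ami": "${var.ami_id}",
                    "instance_type": "${var.instance_type}",
                }
            }
        },
        # Output: data made available after deployment.
        "output": {"instance_id": {"value": "${aws_instance.web.id}"}},
    }


if __name__ == "__main__":
    # Writes main.tf.json in the current directory; the state file is created
    # later by Terraform itself when the configuration is applied.
    with open("main.tf.json", "w", encoding="utf-8") as fh:
        json.dump(build_config("eu-west-1"), fh, indent=2)
```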

Best Practices for Complete Terraform IAC Development

In the world of Infrastructure as Code, following best practices is essential. Here are some tips to keep in mind:

Organize Code with Modules: Organizing code with modules promotes reusability and makes complex structures easier to manage.

Use a Remote Backend: Storing your Terraform state in a remote backend, like Amazon S3 or Azure Storage, ensures that your team can access the latest state.

Implement Version Control: Version control systems like Git are vital. They help you track changes, avoid conflicts, and ensure smooth rollbacks.

Plan Before Applying: Terraform’s “plan” command helps you preview changes before deploying, reducing the chances of accidental alterations.

By following these practices, you’re ensuring your IAC deployments are both robust and scalable.
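The idea behind planning before applying can be shown with a toy model: compare what the state file says exists with what the configuration asks for, and list the differences. This is a deliberately simplified sketch, not Terraform's actual algorithm (which also refreshes real infrastructure, follows a dependency graph, and distinguishes in-place updates from replacements). The function name and the resource addresses are made up for the example.

```python
def plan(state: dict[str, dict], desired: dict[str, dict]) -> dict[str, list[str]]:
    """Diff recorded state against desired configuration, keyed by resource address."""
    actions: dict[str, list[str]] = {"create": [], "update": [], "delete": []}
    for address, attrs in desired.items():
        if address not in state:
            actions["create"].append(address)   # in config, not yet tracked
        elif state[address] != attrs:
            actions["update"].append(address)   # tracked, but attributes differ
    for address in state:
        if address not in desired:
            actions["delete"].append(address)   # tracked, no longer in config
    return {kind: sorted(addresses) for kind, addresses in actions.items()}


if __name__ == "__main__":
    recorded = {
        "aws_instance.web": {"instance_type": "t3.micro"},
        "aws_s3_bucket.logs": {"bucket": "old-logs"},
    }
    wanted = {
        "aws_instance.web": {"instance_type": "t3.small"},
        "aws_instance.worker": {"instance_type": "t3.micro"},
    }
    for kind, addresses in plan(recorded, wanted).items():
        print(kind, addresses)
```

Reviewing a diff like this before anything changes is what makes the preview step useful: an unexpected entry under delete is much cheaper to catch here than after it has been applied.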

Real-World Applications of Terraform IAC

Imagine you’re managing a complex multi-cloud environment. Using Complete Terraform IAC Development, you could easily deploy similar infrastructures across AWS, Azure, and Google Cloud, all with a few lines of code.

Use Case 1: Multi-Region Deployments

Suppose you need a web application deployed across multiple regions. Using Terraform, you can create templates that deploy the application consistently across different regions, ensuring high availability and redundancy.

Use Case 2: Scaling Web Applications

Let’s say your company’s website traffic spikes during a promotion. Terraform allows you to define scaling policies that automatically adjust server capacities, ensuring that your site remains responsive.

Advanced Topics in Complete Terraform IAC Development

Once you’re comfortable with the basics, Complete Terraform IAC Development offers advanced techniques to enhance your skillset:

Terraform Workspaces: Workspaces allow you to manage multiple environments (e.g., development, testing, production) within a single configuration.

Dynamic Blocks and Conditionals: Use dynamic blocks and conditionals to make your code more adaptable, allowing you to define configurations that change based on the environment or input variables.

Integration with CI/CD Pipelines: Integrate Terraform with CI/CD tools like Jenkins or GitLab CI to automate deployments. This approach ensures consistent infrastructure management as your application evolves.

Tools and Resources to Support Your Terraform Journey

Here are some popular tools to streamline your learning:

Terraform CLI: The primary tool for creating and managing your infrastructure.

Terragrunt: An additional layer for working with Terraform, Terragrunt simplifies managing complex Terraform environments.

Terraform Cloud: Now branded HCP Terraform, this is HashiCorp's managed service for executing and collaborating on Terraform workflows.

There are countless resources available online, from Terraform documentation to forums, blogs, and courses. HashiCorp offers a free resource hub, and platforms like Udemy provide comprehensive courses to guide you through Complete Terraform IAC Development.

Start Your Journey with Complete Terraform IAC Development

If you’re aiming to build a career in cloud infrastructure or simply want to enhance your DevOps toolkit, Complete Terraform IAC Development is a skill worth mastering. From managing complex multi-cloud infrastructures to automating repetitive tasks, Terraform provides a powerful framework to achieve your goals.

Start with the basics, gradually explore advanced features, and remember: practice is key. The world of cloud computing is evolving rapidly, and those who know how to leverage Infrastructure as Code will always have an edge. With Terraform, you're not just coding infrastructure; you're building a foundation for the future. So, take the first step into Complete Terraform IAC Development. It's your path to becoming a versatile, skilled cloud professional.

3 notes


Text

🚀 Red Hat Services Management and Automation: Simplifying Enterprise IT

As enterprise IT ecosystems grow in complexity, managing services efficiently and automating routine tasks has become more than a necessity—it's a competitive advantage. Red Hat, a leader in open-source solutions, offers robust tools to streamline service management and enable automation across hybrid cloud environments.

In this blog, we’ll explore what Red Hat Services Management and Automation is, why it matters, and how professionals can harness it to improve operational efficiency, security, and scalability.

🔧 What Is Red Hat Services Management?

Red Hat Services Management refers to the tools and practices provided by Red Hat to manage system services—such as processes, daemons, and scheduled tasks—across Linux-based infrastructures.

Key components include:

systemd: The default init system on RHEL, used to start, stop, and manage services.

Red Hat Satellite: For managing system lifecycles, patching, and configuration.

Red Hat Ansible Automation Platform: A powerful tool for infrastructure and service automation.

Cockpit: A web-based interface to manage Linux systems easily.

🤖 What Is Red Hat Automation?

Automation in the Red Hat ecosystem primarily revolves around Ansible, Red Hat’s open-source IT automation tool. With automation, you can:

Eliminate repetitive manual tasks

Achieve consistent configurations

Enable Infrastructure as Code (IaC)

Accelerate deployments and updates

From provisioning servers to configuring complex applications, Red Hat automation tools reduce human error and increase scalability.

🔍 Key Use Cases

1. Service Lifecycle Management

Start, stop, enable, and monitor services with simple systemctl commands on each host, or use Ansible playbooks to do the same across thousands of servers.

2. Automated Patch Management

Use Red Hat Satellite and Ansible to automate updates, ensuring compliance and reducing security risks.

3. Infrastructure Provisioning

Provision cloud and on-prem infrastructure with repeatable Ansible roles, reducing time-to-deploy for dev/test/staging environments.

4. Multi-node Orchestration

Manage workflows across multiple servers and services in a unified, centralized fashion.
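For the service lifecycle use case, the following sketch wraps a handful of standard systemctl verbs in Python. It covers a single host only; the wrapper functions, the allow-list, and the httpd.service unit name are assumptions made for illustration, and running the same action across many servers is what an Ansible playbook would typically handle instead.

```python
import subprocess

# Standard systemctl verbs this sketch is willing to run.
ALLOWED_ACTIONS = {"start", "stop", "restart", "enable", "disable", "is-active", "status"}


def systemctl_command(action: str, unit: str) -> list[str]:
    """Build the argument list for a systemctl call, rejecting unknown verbs."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported systemctl action: {action!r}")
    return ["systemctl", action, unit]


def is_active(unit: str) -> bool:
    """Return True if systemd reports the unit as active (exit status 0)."""
    result = subprocess.run(systemctl_command("is-active", unit),
                            capture_output=True, text=True, check=False)
    return result.returncode == 0


def ensure_running(unit: str) -> None:
    """Start the unit only if it is not already active (usually needs root)."""
    if not is_active(unit):
        subprocess.run(systemctl_command("start", unit), check=True)


if __name__ == "__main__":
    # Hypothetical unit name; only builds and prints the command.
    print(" ".join(systemctl_command("restart", "httpd.service")))
```

Passing the command as a list rather than a shell string avoids quoting problems and shell injection if the unit name ever comes from user input.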

🌐 Why It Matters

⏱️ Efficiency: Save countless admin hours by automating routine tasks.

🛡️ Security: Enforce security policies and configurations consistently across systems.

📈 Scalability: Manage hundreds or thousands of systems with the same effort as managing one.

🤝 Collaboration: Teams can collaborate better with playbooks that document infrastructure steps clearly.

🎓 How to Get Started

Learn Linux Service Management: Understand systemctl, logs, units, and journaling.

Explore Ansible Basics: Learn to write playbooks and roles, and to use automation controller (formerly Ansible Tower).

Take a Red Hat Course: Enroll in training for the Red Hat Certified Engineer (RHCE) certification to get hands-on practice in automation.

Use RHLS: Get access to labs, practice exams, and expert content through the Red Hat Learning Subscription (RHLS).

✅ Final Thoughts

Red Hat Services Management and Automation isn’t just about managing Linux servers—it’s about building a modern IT foundation that’s scalable, secure, and future-ready. Whether you're a sysadmin, DevOps engineer, or IT manager, mastering these tools can help you lead your team toward more agile, efficient operations.

📌 Ready to master Red Hat automation? Explore our Red Hat training programs and take your career to the next level!

📘 Learn. Automate. Succeed. Begin your journey today! Kindly follow: www.hawkstack.com

1 note


Text

Top 6 Remote High Paying Jobs in IT You Can Do From Home

Technology has changed the workplace and opened new opportunities for IT professionals, erasing previous boundaries. Today, people want both flexibility and better pay, which has led many to look for well-paid remote jobs, especially in the information technology field.

Advancements in technology have made remote work a reality for a growing number of IT specialists. Here, we will look into six specific remote high-paying IT jobs you can pursue from the comfort of your home:

Software Developer

Software developers are the architects of the digital world, designing, developing, and maintaining the software applications that power our lives. They work closely with clients, project managers, and other team members to translate concepts into functional and efficient software solutions.

In-demand skills include proficiency in programming languages like Java, Python, Ruby, or JavaScript, knowledge of frameworks like React or Angular, and a strong foundation in problem-solving and communication. Platforms like Guruface can help you learn the coding skills to land a software developer job on a budget.

The average salary for a remote software developer is highly competitive, ranging from $65,000 to $325,000 according to recent data.

Data Scientist

Data scientists are the detectives of the digital age. They use their expertise in data analysis to uncover valuable insights and trends from large datasets, informing business decisions and driving growth.

To excel in this role, you'll need strong programming skills in languages like Python, R, and SQL, a solid understanding of statistical analysis and machine learning, and the ability to communicate complex findings effectively. Guruface is one of the leading online learning platforms that provides affordable data science courses.

The average salary for a remote Data Scientist is $154,932, with top earners exceeding $183,000.

Cloud Architect

Cloud architects are the masterminds behind an organization's cloud computing strategy. They design, plan, and manage a company's cloud infrastructure, ensuring scalability, security, and cost-effectiveness.

Cloud architects must be well-versed in cloud computing technologies from various providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. In addition, proficiency in architectural design, infrastructure as code (IaC), and security compliance is essential. If you're interested in becoming a cloud architect, Guruface offers courses that can equip you with the necessary skills. Their cloud architect training programs can help you gain proficiency in cloud technologies from industry leaders like AWS, Microsoft Azure, and Google Cloud Platform.

The average salary for a cloud architect in the US is $128,418, with senior cloud architects earning upwards of $167,000 annually.

DevOps Engineer

DevOps engineers bridge the gap between IT and software development, streamlining the software development lifecycle. They leverage automation tools and methodologies to optimize production processes and reduce complexity.

A successful DevOps engineer requires expertise in tools like Puppet, Ansible, and Chef, experience building and maintaining CI/CD pipelines, and a strong foundation in scripting languages like Python and Shell. Guruface offers DevOps training courses that can equip you with these essential skills. Their programs can help you learn the principles and practices of DevOps, giving you the knowledge to automate tasks, build efficient CI/CD pipelines, and select the right tools for the job.

The average salary for a remote DevOps Engineer is $154,333, and the salary range typically falls between $73,000 and $125,000.

AI/Machine Learning Engineer

AI/Machine Learning Engineers are the builders of intelligent systems. They utilize data to program and test machine learning algorithms, creating models that automate tasks and forecast business trends.

In-depth knowledge of machine learning, deep learning, and natural language processing is crucial for this role, along with proficiency in programming languages like Python and R and familiarity with frameworks like TensorFlow and PyTorch.

The average machine learning engineer salary in the US is $166,000 annually, ranging from $126,000 to $221,000.

Information Security Analyst

Information security analysts are the guardians of an organization's digital assets. They work to identify vulnerabilities, protect data from cyberattacks, and respond to security incidents.

A cybersecurity analyst's skillset encompasses technical expertise in network security, risk assessment, and incident response, coupled with strong communication and collaboration abilities.

The average salary for an Information Security Analyst in the United States is $77,490, with a salary range of $57,000 to $106,000.

If you're looking to become a digital guardian, Guruface offers cybersecurity courses that can equip you with the necessary skills. Their programs can teach you to identify vulnerabilities in an organization's network, develop strategies to protect data from cyberattacks, and effectively respond to security incidents. By honing both technical expertise and soft skills like communication and collaboration, Guruface's courses can prepare you to thrive in the in-demand cybersecurity job market.

Conclusion

The rapid evolution of the IT sector gives professionals the opportunity to work remotely in high-paying jobs that also contribute significantly to technological advancement. Through this exploration of roles such as Software Developers, Data Scientists, Cloud Architects, DevOps Engineers, AI/Machine Learning Engineers, and Information Security Analysts, we've uncovered the essential skills, career opportunities, and the vital role of continuous education via online platforms like Guruface in advancing along these career paths.

Forget stuffy textbooks – Guruface's online courses are all about the latest IT skills, making you a tech rockstar in the eyes of recruiters. Upskill from coding newbie to cybersecurity guru, all on your schedule and without a dent in your wallet.


Video

youtube

Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
- What are IAM roles?
- Why should you use IAM roles instead of hardcoding access keys?
- How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
- Launching an EC2 instance and attaching the IAM role.
- Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
- Step-by-step commands to upload files to an S3 bucket.
- Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
- Retrieving objects stored in your S3 bucket using AWS CLI.
- How to test and verify successful downloads.

5. Deleting Files in S3:
- Securely deleting files from an S3 bucket.
- Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
- Using least privilege policies in IAM roles.
- Encrypting files in transit and at rest.
- Monitoring and logging using AWS CloudTrail and S3 access logs.
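To make the least-privilege point concrete, here is a minimal sketch of an IAM policy document that limits a role to a single bucket. The bucket name `example-log-bucket` and the helper `build_s3_policy` are hypothetical, chosen only for illustration; the video may use a different policy.

```python
import json

# Hypothetical bucket name, used only for illustration.
BUCKET = "example-log-bucket"


def build_s3_policy(bucket: str) -> dict:
    """Return an IAM policy document scoped to a single S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Upload, download, and delete apply to objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


policy = build_s3_policy(BUCKET)
print(json.dumps(policy, indent=2))
```

A policy this narrow does not allow listing every bucket in the account; that would need the separate `s3:ListAllMyBuckets` action.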

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

Step 1: Create an S3 Bucket
- Navigate to the S3 console and create a new bucket with a unique name.
- Configure bucket permissions for private or public access as needed.

Step 2: Configure IAM Role
- Create an IAM role with an S3 access policy.
- Attach the role to your EC2 instance to avoid hardcoding credentials.

Step 3: Launch and Connect to an EC2 Instance
- Launch an EC2 instance with the IAM role attached.
- Connect to the instance using SSH.

Step 4: Install AWS CLI and Configure
- Install AWS CLI on the EC2 instance if not pre-installed.
- Verify access by running `aws s3 ls` to list available buckets.

Step 5: Perform File Operations
- Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
- Download files: Use `aws s3 cp` to download files from S3 to EC2.
- Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

Step 6: Cleanup
- Delete test files and terminate resources to avoid unnecessary charges.
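The file operations in Step 5 can be sketched as a small script that assembles the `aws s3 cp` and `aws s3 rm` command lines. The bucket name, object key, and file paths below are hypothetical placeholders; the script only prints the commands and does not contact AWS.

```python
import shlex

# Hypothetical names, used only for illustration.
BUCKET = "example-log-bucket"
KEY = "logs/app.log"


def upload_cmd(local_path: str, bucket: str, key: str) -> list[str]:
    """Build the argv for copying a local file to S3."""
    return ["aws", "s3", "cp", local_path, f"s3://{bucket}/{key}"]


def download_cmd(bucket: str, key: str, local_path: str) -> list[str]:
    """Build the argv for copying an S3 object to a local file."""
    return ["aws", "s3", "cp", f"s3://{bucket}/{key}", local_path]


def delete_cmd(bucket: str, key: str) -> list[str]:
    """Build the argv for deleting a single S3 object."""
    return ["aws", "s3", "rm", f"s3://{bucket}/{key}"]


commands = [
    upload_cmd("/var/log/app.log", BUCKET, KEY),
    download_cmd(BUCKET, KEY, "/tmp/app.log"),
    delete_cmd(BUCKET, KEY),
]

for cmd in commands:
    # Print a shell-safe rendering of each command instead of executing it.
    print(shlex.join(cmd))
```

On an instance with the IAM role attached, each list could be passed to `subprocess.run(cmd, check=True)`; no access keys appear anywhere in the script because the CLI picks up the role's temporary credentials.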

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.

#youtube#aws iam#iam role aws#aws#aws permission#aws iam roles#aws cloud#aws s3#identity & access management#aws iam policy#Download#and Delete Files in Amazon#IAMrole#AWS#cloudolus#S3#EC2


Text

What are the latest trends in the IT job market?

Introduction

The IT job market is changing quickly, driven by new technology, shifting employer needs, and the growth of remote work.

For jobseekers, understanding these trends is crucial to positioning themselves as strong candidates in a highly competitive landscape.

This blog looks at the current IT job market. It offers insights into job trends and opportunities. You will also find practical strategies to improve your chances of getting your desired role.

Whether you’re in the midst of a job search or considering a career change, this guide will help you navigate the complexities of the job hunting process and secure employment in today’s market.

Section 1: Understanding the Current IT Job Market

Recent Trends in the IT Job Market

The IT sector is booming, with consistent demand for skilled professionals in various domains such as cybersecurity, cloud computing, and data science.

The COVID-19 pandemic accelerated the shift to remote work, further expanding the demand for IT roles that support this transformation.

Employers are increasingly looking for candidates with expertise in AI, machine learning, and DevOps as these technologies drive business innovation.

According to industry reports, job opportunities in IT will continue to grow, with the most substantial demand focused on software development, data analysis, and cloud architecture.

It’s essential for jobseekers to stay updated on these trends to remain competitive and tailor their skills to current market needs.

Recruitment efforts have also become more digitized, with many companies adopting virtual hiring processes and online job fairs.

This creates both challenges and opportunities for job seekers to showcase their talents and secure interviews through online platforms.

Remote Work and IT

The surge in remote work opportunities has transformed the job market. Many IT companies now offer fully remote or hybrid roles, which appeal to professionals seeking greater flexibility.

While remote work has increased access to job opportunities, it has also intensified competition, as companies can now hire from a global talent pool.

Section 2: Choosing the Right Keywords for Your IT Resume

Keyword Optimization: Why It Matters

With more employers using Applicant Tracking Systems (ATS) to screen resumes, it’s essential for jobseekers to optimize their resumes with relevant keywords.

These systems scan resumes for specific words related to the job description and only advance the most relevant applications.

To increase the chances of your resume making it through the initial screening, jobseekers must identify and incorporate the right keywords into their resumes.

When searching for jobs in IT, it’s important to tailor your resume for specific job titles and responsibilities. Keywords like “software engineer,” “cloud computing,” “data security,” and “DevOps” can make a huge difference.

By strategically using keywords that reflect your skills, experience, and the job requirements, you enhance your resume’s visibility to hiring managers and recruitment software.

Step-by-Step Keyword Selection Process

Analyze Job Descriptions: Look at several job postings for roles you’re interested in and identify recurring terms.

Incorporate Specific Terms: Include technical terms related to your field (e.g., Python, Kubernetes, cloud infrastructure).

Use Action Verbs: Keywords like “developed,” “designed,” or “implemented” help demonstrate your experience in a tangible way.

Test Your Resume: Use online tools to see how well your resume aligns with specific job postings and make adjustments as necessary.
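As a rough illustration of the first step (finding recurring terms across job postings), here is a minimal sketch. The sample postings and the small stop-word list are made up for the example, and real postings would need a much longer stop-word list.

```python
import re
from collections import Counter

# Hypothetical stop words; a real list would be far longer.
STOPWORDS = {"and", "with", "using", "seeking", "role", "experience"}


def recurring_terms(postings: list[str], min_postings: int = 2) -> list[str]:
    """Return terms that appear in at least `min_postings` different postings."""
    counts = Counter()
    for text in postings:
        # Use a set so each term counts once per posting.
        terms = set(re.findall(r"[a-z0-9+#]+", text.lower()))
        counts.update(terms - STOPWORDS)
    return sorted(term for term, n in counts.items() if n >= min_postings)


# Made-up postings for illustration.
postings = [
    "Seeking DevOps engineer with Python, Kubernetes and AWS experience",
    "Cloud engineer: Kubernetes, Terraform, Python scripting",
    "DevOps role using Python and Azure",
]

print(recurring_terms(postings))
```

Terms that show up in several postings for the same kind of role are reasonable candidates to include in a resume, provided they honestly reflect your experience.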

Section 3: Customizing Your Resume for Each Job Application

Why Customization is Key

One size does not fit all when it comes to resumes, especially in the IT industry. Jobseekers who customize their resumes for each job application are more likely to catch the attention of recruiters. Tailoring your resume allows you to emphasize the specific skills and experiences that align with the job description, making you a stronger candidate. Employers want to see that you’ve taken the time to understand their needs and that your expertise matches what they are looking for.

Key Areas to Customize:

Summary Section: Write a targeted summary that highlights your qualifications and goals in relation to the specific job you’re applying for.

Skills Section: Highlight the most relevant skills for the position, paying close attention to the technical requirements listed in the job posting.

Experience Section: Adjust your work experience descriptions to emphasize the accomplishments and projects that are most relevant to the job.

Education & Certifications: If certain qualifications or certifications are required, make sure they are easy to spot on your resume.

Section 4: Reviewing and Testing Your Optimized Resume

Proofreading for Perfection

Before submitting your resume, it’s critical to review it for accuracy, clarity, and relevance. Spelling mistakes, grammatical errors, or outdated information can reflect poorly on your professionalism.

Additionally, make sure your resume is easy to read and visually organized, with clear headings and bullet points. If possible, ask a peer or mentor in the IT field to review your resume for content accuracy and feedback.

Testing Your Resume with ATS Tools

After optimizing your resume for keywords, test it using online tools that simulate an ATS. This allows you to see how well your resume aligns with specific job descriptions and identify areas for improvement.

Many tools will give you a match score, showing you how likely your resume is to pass an ATS scan. From here, you can fine-tune your resume to increase its chances of making it to the recruiter’s desk.
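How such a match score might be computed can be sketched in a few lines. This is a toy model under the assumption that the score is simply the share of target keywords found in the resume; commercial ATS products do not publish their scoring, so treat the function and sample data as hypothetical.

```python
import re


def _tokens(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9+#]+", text.lower())


def match_score(resume: str, keywords: list[str]) -> tuple[int, list[str]]:
    """Return (percentage of keywords found, list of missing keywords)."""
    # Pad with spaces so whole-word and multi-word keywords match cleanly.
    haystack = " " + " ".join(_tokens(resume)) + " "
    missing = [
        kw for kw in keywords
        if " " + " ".join(_tokens(kw)) + " " not in haystack
    ]
    found = len(keywords) - len(missing)
    score = round(100 * found / len(keywords)) if keywords else 0
    return score, missing


# Made-up resume text and keyword list for illustration.
resume = "Developed CI/CD pipelines in Python; deployed services on Kubernetes."
keywords = ["Python", "Kubernetes", "CI/CD", "Terraform"]

score, missing = match_score(resume, keywords)
print(score, missing)
```

The list of missing keywords is the useful part: it shows which terms from the posting to work into the resume when they genuinely apply.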

Section 5: Trends Shaping the Future of IT Recruitment

Embracing Digital Recruitment

Recruiting has undergone a significant shift towards digital platforms, with job fairs, interviews, and onboarding now frequently taking place online.

This transition means that jobseekers must be comfortable navigating virtual job fairs, remote interviews, and online assessments.

As IT jobs increasingly allow remote work, companies are also using technology-driven recruitment tools like AI for screening candidates.

Jobseekers should also leverage platforms like LinkedIn to increase visibility in the recruitment space. Keeping your LinkedIn profile updated, networking with industry professionals, and engaging in online discussions can all boost your chances of being noticed by recruiters.

Furthermore, participating in virtual job fairs or IT recruitment events provides direct access to recruiters and HR professionals, enhancing your job hunt.

FAQs

1. How important are keywords in IT resumes?

Keywords are essential in IT resumes because they help your resume pass through Applicant Tracking Systems (ATS), which scan resumes for specific terms related to the job. Without the right keywords, your resume may not reach a human recruiter.

2. How often should I update my resume?

It’s a good idea to update your resume regularly, especially when you gain new skills or experience. Also, customize it for every job application to ensure it aligns with the job’s specific requirements.

3. What are the most in-demand IT jobs?

Some of the most in-demand IT jobs include software developers, cloud engineers, cybersecurity analysts, data scientists, and DevOps engineers.

4. How can I stand out in the current IT job market?

To stand out, jobseekers should focus on tailoring their resumes, building strong online profiles, networking, and keeping up-to-date with industry trends. Participation in online forums, attending webinars, and earning industry-relevant certifications can also enhance visibility.

Conclusion

The IT job market continues to offer exciting opportunities for jobseekers, driven by technological innovations and changing work patterns.

By staying informed about current trends, customizing your resume, using keywords effectively, and testing your optimized resume, you can improve your job search success.

Whether you are new to the IT field or an experienced professional, leveraging these strategies will help you navigate the competitive landscape and secure a job that aligns with your career goals.


Text

Azure DevOps Training

Azure DevOps Training Programs

In today's rapidly evolving tech landscape, mastering Azure DevOps has become indispensable for organizations aiming to streamline their software development and delivery processes. As businesses increasingly migrate their operations to the cloud, the demand for skilled professionals proficient in Azure DevOps continues to soar. In this comprehensive guide, we'll delve into the significance of Azure DevOps training and explore the myriad benefits it offers to both individuals and enterprises.

Understanding Azure DevOps:

Before we delve into the realm of Azure DevOps training, let's first grasp the essence of Azure DevOps itself. Azure DevOps is a robust suite of tools offered by Microsoft that facilitates collaboration, automation, and orchestration across the entire software development lifecycle. From planning and coding to building, testing, and deployment, Azure DevOps provides a unified platform for managing and executing diverse DevOps tasks seamlessly.

Why Azure DevOps Training Matters:

With Azure DevOps emerging as the cornerstone of modern DevOps practices, acquiring proficiency in this domain has become imperative for IT professionals seeking to stay ahead of the curve. Azure DevOps training equips individuals with the knowledge and skills necessary to leverage Microsoft Azure's suite of tools effectively. Whether you're a developer, IT administrator, or project manager, undergoing Azure DevOps training can significantly enhance your career prospects and empower you to drive innovation within your organization.

Key Components of Azure DevOps Training Programs:

Azure DevOps training programs are meticulously designed to cover a wide array of topics essential for mastering the intricacies of Azure DevOps. From basic concepts to advanced techniques, these programs encompass the following key components:

Azure DevOps Fundamentals: An in-depth introduction to Azure DevOps, including its core features, functionalities, and architecture.

Agile Methodologies: Understanding Agile principles and practices, and how they align with Azure DevOps for efficient project management and delivery.

Continuous Integration (CI): Learning to automate the process of integrating code changes into a shared repository, thereby enabling early detection of defects and ensuring software quality.

Continuous Deployment (CD): Exploring the principles of continuous deployment and mastering techniques for automating the deployment of applications to production environments.

Azure Pipelines: Harnessing the power of Azure Pipelines for building, testing, and deploying code across diverse platforms and environments.

Infrastructure as Code (IaC): Leveraging Infrastructure as Code principles to automate the provisioning and management of cloud resources using tools like Azure Resource Manager (ARM) templates.

Monitoring and Logging: Implementing robust monitoring and logging solutions to gain insights into application performance and troubleshoot issues effectively.

Security and Compliance: Understanding best practices for ensuring the security and compliance of Azure DevOps environments, including identity and access management, data protection, and regulatory compliance.
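To give a sense of what the Infrastructure as Code item looks like in practice, here is a minimal sketch that builds an ARM template for a single storage account and prints it as JSON. The parameter name `storageAccountName` and the chosen `apiVersion` are assumptions for illustration; a training program would likely cover richer templates.

```python
import json


def storage_account_template(name_param: str = "storageAccountName") -> dict:
    """Build a minimal ARM template that declares one storage account."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        # The account name is supplied at deployment time.
        "parameters": {name_param: {"type": "string"}},
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2022-09-01",  # assumed version; check current docs
                "name": f"[parameters('{name_param}')]",
                # Deploy into the same region as the resource group.
                "location": "[resourceGroup().location]",
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
            }
        ],
    }


template = storage_account_template()
print(json.dumps(template, indent=2))
```

Because the template is plain data, it can be kept in version control and deployed repeatedly, which is the core idea behind IaC.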

The Benefits of Azure DevOps Certification:

Obtaining Azure DevOps certification not only validates your expertise in Azure DevOps but also serves as a testament to your commitment to continuous learning and professional development. Azure DevOps certifications offered by Microsoft are recognized globally and can open doors to exciting career opportunities in various domains, including cloud computing, software development, and DevOps engineering.

Conclusion:

In conclusion, Azure DevOps training is indispensable for IT professionals looking to enhance their skills and stay relevant in today's dynamic tech landscape. By undergoing comprehensive Azure DevOps training programs and obtaining relevant certifications, individuals can unlock a world of opportunities and propel their careers to new heights. Whether you're aiming to streamline your organization's software delivery processes or embark on a rewarding career journey, mastering Azure DevOps is undoubtedly a game-changer. So why wait? Start your Azure DevOps training journey today and pave the way for a brighter tomorrow.


Text

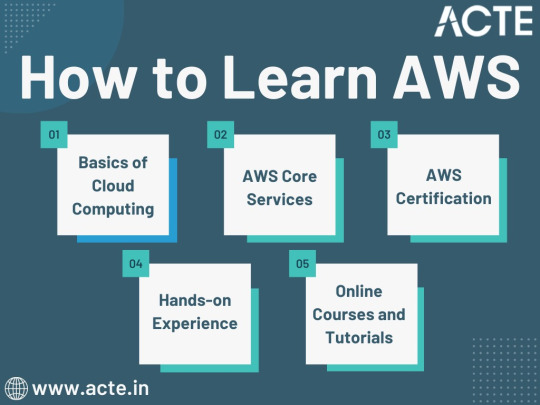

Journey to AWS Proficiency: Unveiling Core Services and Certification Paths

Amazon Web Services, often referred to as AWS, stands at the forefront of cloud technology and has revolutionized the way businesses and individuals leverage the power of the cloud. This blog serves as your comprehensive guide to understanding AWS, exploring its core services, and learning how to master this dynamic platform. From the fundamentals of cloud computing to the hands-on experience of AWS services, we'll cover it all. Additionally, we'll discuss the role of education and training, specifically highlighting the value of ACTE Technologies in nurturing your AWS skills, concluding with a mention of their AWS courses.

The Journey to AWS Proficiency:

1. Basics of Cloud Computing:

Getting Started: Before diving into AWS, it's crucial to understand the fundamentals of cloud computing. Begin by exploring the three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Gain a clear understanding of what cloud computing is and how it's transforming the IT landscape.

Key Concepts: Delve into the key concepts and advantages of cloud computing, such as scalability, flexibility, cost-effectiveness, and disaster recovery. Simultaneously, explore the potential challenges and drawbacks to get a comprehensive view of cloud technology.

2. AWS Core Services:

Elastic Compute Cloud (EC2): Start your AWS journey with Amazon EC2, which provides resizable compute capacity in the cloud. Learn how to create virtual servers, known as instances, and configure them to your specifications. Gain an understanding of the different instance types and how to deploy applications on EC2.

Simple Storage Service (S3): Explore Amazon S3, a secure and scalable storage service. Discover how to create buckets to store data and objects, configure permissions, and access data using a web interface or APIs.

Relational Database Service (RDS): Understand the importance of databases in cloud applications. Amazon RDS simplifies database management and maintenance. Learn how to set up, manage, and optimize RDS instances for your applications. Dive into database engines like MySQL, PostgreSQL, and more.

3. AWS Certification:

Certification Paths: AWS offers a range of certifications for cloud professionals, from foundational to professional levels. Consider enrolling in certification courses to validate your knowledge and expertise in AWS. AWS Certified Cloud Practitioner, AWS Certified Solutions Architect, and AWS Certified DevOps Engineer are some of the popular certifications to pursue.

Preparation: To prepare for AWS certifications, explore recommended study materials, practice exams, and official AWS training. ACTE Technologies, a reputable training institution, offers AWS certification training programs that can boost your confidence and readiness for the exams.

4. Hands-on Experience:

AWS Free Tier: Register for an AWS account and take advantage of the AWS Free Tier, which offers limited free access to various AWS services for 12 months. Practice creating instances, setting up S3 buckets, and exploring other services within the free tier. This hands-on experience is invaluable in gaining practical skills.

5. Online Courses and Tutorials:

Learning Platforms: Explore online learning platforms like Coursera, edX, Udemy, and LinkedIn Learning. These platforms offer a wide range of AWS courses taught by industry experts. They cover various AWS services, architecture, security, and best practices.

Official AWS Resources: AWS provides extensive online documentation, whitepapers, and tutorials. Their website is a goldmine of information for those looking to learn more about specific AWS services and how to use them effectively.

Amazon Web Services (AWS) represents an exciting frontier in the realm of cloud computing. As businesses and individuals increasingly rely on the cloud for innovation and scalability, AWS stands as a pivotal platform. The journey to AWS proficiency involves grasping fundamental cloud concepts, exploring core services, obtaining certifications, and acquiring practical experience. To expedite this process, online courses, tutorials, and structured training from renowned institutions like ACTE Technologies can be invaluable. ACTE Technologies' comprehensive AWS training programs provide hands-on experience, making your quest to master AWS more efficient and positioning you for a successful career in cloud technology.


Text

Unity's Changes

On the 12th of September, Unity released a blog post announcing updates to their plan pricing and packaging. The intention behind the change is to generate more income for the company. From the Unity Blog...

"Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November."

Unity's services consist of two products: the Unity Engine, which is the game engine used to create projects, and the Unity Runtime, which is the code that executes on a player's device and allows games made with the engine to run.

Simply put, Unity will now be charging a fee "each time a qualifying game is downloaded by an end user." The reasoning given for this change is that "each time a game is downloaded, the Unity Runtime is also installed."

While many (basically all) developers have used their collective voices to reply with a unanimous "nope", many people do not understand the very important specifics of how this will be implemented.

These fees will only take effect when the preexisting thresholds have been met. They will only be applied once a game has reached both a set revenue figure and a set lifetime install count. From the blog:

Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs.

Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
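The two-part threshold can be written out as a short check. This sketch encodes only the figures quoted above; the function name and plan keys are my own, and it does not attempt to compute the fee itself.

```python
# (minimum revenue in USD over the last 12 months, minimum lifetime installs)
THRESHOLDS = {
    "personal": (200_000, 200_000),
    "plus": (200_000, 200_000),
    "pro": (1_000_000, 1_000_000),
    "enterprise": (1_000_000, 1_000_000),
}


def runtime_fee_applies(plan: str, revenue_12m_usd: float, lifetime_installs: int) -> bool:
    """Return True only when BOTH thresholds for the plan are met."""
    min_revenue, min_installs = THRESHOLDS[plan.lower()]
    return revenue_12m_usd >= min_revenue and lifetime_installs >= min_installs


# High revenue but too few installs: no fee.
print(runtime_fee_applies("personal", 250_000, 150_000))
# Both thresholds met: fee applies.
print(runtime_fee_applies("personal", 200_000, 200_000))
# Plenty of installs but revenue under the Pro threshold: no fee.
print(runtime_fee_applies("pro", 900_000, 2_000_000))
```

The `and` is the important part: a game with huge install numbers but little revenue, or the reverse, stays below the line.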

This may not seem to be such a bad thing, especially since the reasoning behind the change (their Runtime product being distributed) is quite reasonable. However, there is a litany of issues this will pose for developers. The smallest-scale developers, such as indie devs and studios, are unlikely to feel any sort of pressure from this, and the large AAA studios also won't feel the brunt of the new pricing plan. The weight of this change falls directly onto the smaller-but-not-small studios. These studios, making games on more significant budgets, will essentially have those budgets put under more strain, because as soon as they begin to approach breaking even or perhaps making a profit on their projects, Unity will step in and start charging them from there on out. It is also unclear whether the developers alone will have to pay this new fee or whether it will be shared by publishers as well.

Developers are largely unhappy with this new plan because studios almost always make commercial games on very thin margins. Charging a couple of cents per install does not sound like much, but it can and will mean the difference between financial success and closure for many smaller studios who otherwise would have ended with their balance sheets in the green.

It is also important to be aware that, while these changes are scheduled to take effect from the start of 2024, the thresholds are retroactive, which means that if you already have reasonable install and revenue numbers (thus qualifying for the fees) you will be immediately forced to pay moving forward.

On a more informal note, there have also been some jokes made that this scheme will make it possible for players to actively harm developers if they wish. The fee is charged when an end-user (customer) downloads a game. Note that they did not say "purchases", but "downloads." Technically, this would mean that a person can buy a game (developer gains one instance of revenue) and then repeatedly download, delete and re-download the game, triggering this fee each time they do so. Whether this was a poor choice of wording or a miscommunication is unclear at this time, but let's certainly hope this new plan doesn't open up this possibility.

There are also numerous other important considerations Unity have not commented on. Do installs of pirated games count? How will these threshold figures be tracked? Also, as a massive concern, what about games that rely on in-app purchases for revenue? Below is a tweet that concisely highlights the problem.

There is also the problem of free games. This pricing plan does not take into account how much the game costs at all. Developers making a massively successful free game would end up having to pay Unity to sell a free product.

Many developers and studios are now seriously considering simply ditching Unity altogether. With Unreal's much more reasonable pricing plans and the release of UE5, unless either some very significant "miscommunications" are cleared up or the plan is scrapped entirely, this will likely be the beginning of the end for Unity. As a learning indie developer myself, having been a die-hard Unity supporter until this announcement, I do not know how to express my disappointment, and if Unity follows through with this scheme on 01/01/2024, even if they reverse it later, I will never open another one of this fucking greedy company's products ever again.

Sources:
- https://blog.unity.com/news/plan-pricing-and-packaging-updates
- https://www.youtube.com/watch?v=JQSDsjJAics


Text

Choose the Right Azure DevOps Services For Your Needs

To compete in today's business landscape, having the right tools and keeping up with major technological trends is a way to achieve excellence and growth. In this regard, Azure DevOps provides a solid, programmable framework for easing software development. Whether you are managing a small project or working on more elaborate enterprise-scale workflows, choosing the right Azure DevOps services can mean the difference between successfully managing your operations and deadlock. In this article, let's take a closer look at what Azure DevOps offers and how Spiral Mantra DevOps consulting services can make your workflow seamless.

Azure DevOps offers a set of tools for building, testing, and launching applications. These processes are integrated in a common environment to improve collaboration and shorten time-to-market. Because it is a cloud-based service that can be accessed at any time from anywhere, it promotes teamwork and supports a better quality of work. It creates a shared environment for faster day-to-day work without replicating physical infrastructure.

At Spiral Mantra, you can hire DevOps engineers who concentrate on delivering top-notch Azure DevOps services in the USA. We are best known for providing services and a specialized workforce to accelerate work.

Highlighting Components of Azure DevOps Services at Spiral Mantra

Spiral Mantra is your most trusted DevOps consulting company, tailored to discuss and meet all the possible needs and unique requirements of our potential clients. Our expertise in DevOps consulting services is not only limited to delivering faster results but also lies in offering high-performing solutions with scalability. You can outsource our team to get an upper hand on the industry competition, as we adhere to the following core components:

1. Azure Pipelines: Automates the process of building testing and deployment of code across popular platforms like VMs, containers, or cloud services. CI CD is useful to help developers automate the deployment process. Additionally, it gains positive advantages with fewer errors, resulting in delivering high-quality results.

2. Azure Boards: A power project management tool featuring the process of strategizing, tracking, and discussing work by clasping the development process. Being in touch with us will leverage you in many ways, as our DevOps engineers are skilled enough to make customizations on Azure Boards that fit your project requirements best.

3. Azure Repos: Providing version control tools by navigating teams to manage code effectively. So, whether you prefer to work on GIT or TFVC, our team ensures to implement best version control practices by reducing any risk.

4. Azure Test Plans: Quality assurance is another crucial aspect of any software or application development lifecycle. Azure Test Plans provides comprehensive testing tools for checking how the application performs. As an Azure DevOps consulting company, we help our clients develop robust testing strategies that meet their defined quality standards.

Want to accelerate your application development process? Connect with us to boost your business productivity.

Identifying Your Necessity for Azure DevOps Services

It might be tempting to dive right into picking Azure DevOps services, but there are some important things to do beforehand. To start with, assess your needs:

Project scale: Is this a small project or an enterprise one? Small teams might want the lightweight combination of Azure Repos and Azure Pipelines, while larger teams will need the complete stack, including Azure Boards and Test Plans.

Development language: What language are you developing in? The platform supports many different languages, but some services pair better with specific setups.

CI/CD frequency: How often do you need to publish updates and make changes? If you want to release new builds quickly, use Azure Pipelines, while for automated testing, go with Azure Test Plans.

Collaboration: How large is your development team? Do you have many people contributing to your project? If so, use Azure Repos for version control and Azure Boards for tracking team tasks.
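
The checklist above can be sketched as a small decision helper. This is only an illustration in plain Python: the function name `suggest_services` and the `LARGE_TEAM_THRESHOLD` cutoff are assumptions made for the example, not part of Azure DevOps, and the text itself gives no specific team size.

```python
# Assumed cutoff for a "large" team; the guidance above gives no number.
LARGE_TEAM_THRESHOLD = 10


def suggest_services(team_size: int, needs_automated_testing: bool = False) -> list[str]:
    """Return a suggested service list following the rough guidance above."""
    # Small teams start with the lightweight pair.
    services = ["Azure Repos", "Azure Pipelines"]
    if team_size >= LARGE_TEAM_THRESHOLD:
        # Larger teams add work tracking and test management.
        services += ["Azure Boards", "Azure Test Plans"]
    elif needs_automated_testing:
        # Smaller teams add Test Plans only when testing is a priority.
        services.append("Azure Test Plans")
    return services


small_team = suggest_services(team_size=3)
large_team = suggest_services(team_size=25)
```

A real assessment would weigh more factors (language, release cadence, budget); the point is only that the choice can be made explicit before any setup work begins.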

Choosing the Right DevOps Services in the USA

Deciding on the best fit for your DevOps requirements can be a daunting task, especially if you have no clue where to begin. The following sections walk through the needs to consider so you can select the right services and keep your work on track.

1. For Version Control

If your project has more than one developer, you’ll probably need enterprise-grade version control so your team can work on code together smoothly. Azure Repos supports both Git and TFVC as the basis for your version control system. If you have a distributed team, Git is the best choice. If your team prefers a centralized workflow, TFVC may be a little easier. If you’re new to version control, Git is still a good place to start, since its distributed nature makes it easy for anyone to get up and running.

2. For Automated Tasks (Builds and Deployments)

Building, testing, and deploying your code in an automated way keeps your team moving fast and in sync. Azure Pipelines runs on multiple platforms and enables CI/CD for any project. It also gives your team the flexibility to deploy applications to various environments (cloud, containers, virtual machines), so your software can be released early and continuously.
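
To make the build, test, deploy flow concrete, here is a minimal sketch of a fail-fast stage runner. It is plain Python written for illustration, not the Azure Pipelines API (Azure Pipelines itself is configured through YAML files); `run_pipeline` and the stand-in stage functions are hypothetical names.

```python
from typing import Callable

# A stage is a name plus a step that reports success (True) or failure (False).
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure; return a result log."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later stages, such as deploy, never run on a broken build
    return log


# Stand-in steps; a real pipeline would call compilers, test runners, and deploy tools.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
]
result = run_pipeline(stages)
```

Here the failing test stage stops the run, so the deploy stage is skipped. That ordering is the core benefit of automating the sequence: broken code does not reach an environment.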

3. For Project Management

A project isn’t just about writing code; getting the work organized is another matter. Preparing release notes, for example, requires understanding what was fixed and what broke. Tracking bugs, features, and tasks helps keep your project on track. Azure Boards provides Kanban boards, Scrum boards, backlogs for managing all your project work, and release boards used to track work for a specific release. You can also adapt it to the workflows your team already uses, such as Agile or Scrum.

4. For Testing

Testing is another crucial step to make sure your application behaves as expected under predetermined conditions and validation steps. Azure Test Plans supports manual testing, exploratory testing, and test automation. This lets you integrate testing into your CI/CD pipeline and keep your code base healthy, delivering a more predictable experience to your users.

5. For Package Management

Many development projects use one or more external libraries or packages. Azure Artifacts manages these dependencies so your team can host, share, and use packages to build complex projects. It greatly reduces the complexity of maintaining a package repository that your developers can access during development.

How Does Spiral Mantra Assist? Best DevOps Consulting Company

Spiral Mantra’s expert DevOps services will help you speed up your development process so you can get the most out of it. Whether it’s setting up continuous integration, building a CI/CD pipeline, configuring version control, or optimizing any other aspect of your software process through boards, call Spiral Mantra to make it happen.

Partner with Spiral Mantra: our team is proficient at using Azure DevOps for projects of every size, from start-ups to large teams, and can help your development projects succeed.

Getting the Most from Azure DevOps

Having found the relevant services, you then need to bring them together. How can you get maximum value out of the platform? Here is a process you might consider.

⦁ Azure Repos and Pipelines: To begin, set up version control and automated pipelines to speed up development.

⦁ Add Boards for Big Projects: Once the team has grown, add Azure Boards to track tasks, bugs, and feature requests alongside pull requests.

⦁ Testing Early: Use Test Plans to start testing at the beginning of the CI/CD pipeline and catch bugs early.

⦁ Managing Packages with Azure Artifacts: If your project depends on more than one library, set up Azure Artifacts.

Conclusion

Software development becomes easier when you choose the right DevOps consulting company to bring innovation to your business. Spiral Mantra supports you with DevOps services in the USA tailored to your growing business requirements and provides guidance to improve your development operations workflows.

Text

Tips on How to Choose the Right Healthcare Software Development Firm

In recent years, the healthcare sector has changed considerably, adopting numerous innovations to make its processes more efficient and effective. The need for solid, customizable healthcare software is at the heart of this revolution. Whether you are a healthcare company, a healthcare facility, or a startup venturing into digital health, working with the right Healthcare Software Development Company is a key factor in success.

The Challenges of Software Development in the Healthcare Field

Healthcare software development is a specialized domain that requires intricate knowledge of both software engineering and healthcare systems. It is not just about writing code but about understanding the complex processes, rules, and policies that healthcare organisations must follow. An experienced healthcare software development company knows these realities and can navigate them successfully.

Healthcare software solutions span the healthcare spectrum, including EMR software development services, telemedicine platforms, medication management software, and much more. Selecting the right development partner is crucial to following best practices, meeting high-stakes regulations, and providing an intuitive and secure interface for both the consumers and the providers of healthcare services.

The Features of a Reputable Healthcare Software Development Company

1. Proven Industry Experience

Choose a well-established software development company with a track record of building healthcare software solutions. An experienced partner will understand the healthcare industry's particular demands, especially regarding HIPAA regulations and interoperability. This helps your project run efficiently, avoid common pitfalls, and deliver high-quality software.

2. Comprehensive Service Offerings

A reputable healthcare software development company should offer a comprehensive suite of services, encompassing requirements gathering and system design to development, testing, deployment, and ongoing maintenance. This end-to-end approach ensures a seamless and cohesive software development lifecycle, reducing the need for multiple vendors and streamlining communication channels.

3. Cutting-Edge Technologies and Methodologies

Technology in the healthcare industry is constantly evolving, and your software partner should be at the forefront of these advancements. Look for a company that embraces modern technologies, such as cloud computing, artificial intelligence, and mobile platforms, while adhering to industry-standard methodologies like Agile and DevOps. This ensures your software remains future-proof and adaptable to emerging trends.

4. Robust Security and Compliance Measures

Data security and regulatory compliance are paramount in the healthcare industry. Your software development partner should have robust security protocols in place to protect sensitive patient information and adhere to strict industry regulations, such as HIPAA, GDPR, and others. Regular security audits, risk assessments, and strict access controls should be integral to their development processes.

5. Collaborative and Transparent Communication

Effective communication is the cornerstone of any successful software development project. A reliable healthcare software development company should foster open and transparent communication channels, keeping you informed at every stage of the development process. Regular progress updates, clear documentation, and a collaborative approach help align expectations and ensure your software meets your requirements.

The Benefits of Partnering with a Dedicated Healthcare Software Development Company

Choosing a dedicated healthcare software development company offers numerous benefits that can propel your organization's growth and success:

1. Domain-Specific Expertise

By partnering with a company specializing in healthcare software development, you gain access to domain-specific knowledge and expertise. These professionals understand the unique challenges, workflows, and regulatory requirements specific to the healthcare industry, ensuring your software is tailored to meet your unique needs.

2. Accelerated Time-to-Market

Time is of the essence in the fast-paced healthcare industry. A dedicated healthcare software development company can leverage its experience and established processes to streamline the development lifecycle, reducing time to market and enabling you to capitalize on emerging opportunities quickly.

3. Cost Efficiency

Building an in-house software development team capable of addressing the complexities of healthcare software can be a significant investment. By partnering with a dedicated healthcare software development company, you can leverage their existing resources, expertise, and infrastructure, potentially reducing costs while ensuring access to top-tier talent.

4. Scalability and Flexibility

As your organization grows and your software needs evolve, a dedicated healthcare software development partner can provide the scalability and flexibility necessary to accommodate changing requirements. They can adapt their resources and methodologies to align with your expanding needs, ensuring your software remains relevant and practical.

5. Continuous Support and Maintenance

Software development is an ongoing process that doesn't end with the initial deployment. A reputable healthcare software development company understands the importance of continuous support and maintenance, ensuring your software remains secure, up-to-date, and compliant with industry standards and regulations.

Choosing the Right Partner: A Comprehensive Evaluation Process

With many software development companies in the market, finding the right fit for your healthcare software needs can be daunting. To ensure you make an informed decision, consider adopting a comprehensive evaluation process:

1. Define Your Requirements

Begin by clearly defining your software requirements, including the specific features, functionalities, and integration needs. Consider your organization's goals, workflows, and target audience to ensure the software aligns with your overall strategy.

2. Conduct Thorough Research

Leverage online resources, industry directories, and professional networks to compile a list of potential Healthcare Software Development Companies. Review their portfolios, case studies, and client testimonials to gain insights into their expertise and track record.

3. Evaluate Technical Capabilities

Assess the potential partners' technical capabilities, including their proficiency in programming languages, frameworks, and tools relevant to your project. Inquire about their development methodologies, quality assurance processes, and experience with healthcare-specific technologies like EMR Software Development Services.

4. Assess Cultural Fit