#Using ocr tool


Text

I found a Firefox plugin that (mostly) restores access to Google reverse image search!

Link in reblog

default:

with Disable Google Lens plugin:

sort by resolution? define search parameters?? functional tools??? whoa!

#google#reverse image search#firefox#firefox addon#archival#if they remove support for the old ris altogether i will become a ********* i stg#the ocr search and translate tools on lens are still super useful#wouldn't it be great if we could have both accessible at once?

30 notes


Text

I want to make my posts more accessible, but can't write IDs myself: a guide

[Plain text: "I want to make my posts more accessible, but can't write IDs myself: a guide." End plain text.]

While every image posted online should be accessible in an ideal world, we all know it 1) takes time to learn how to write image descriptions, and 2) is easy to run out of spoons with which to write IDs. And this says nothing of disabilities that make writing them more challenging, if not impossible — especially if you're a person who benefits from IDs yourself.

There are resources for learning how to write them (and if you already know the basics, I'd like to highlight this good advice for avoiding burnout). But for anyone who cannot write IDs on their original posts at any current or future moment, for any reason, there are two good options for posting on Tumblr.

1. Crowdsource IDs through the People's Accessibility Discord

[Plain text: "1. Crowdsource IDs through the People's Accessibility Discord". End plain text.]

The People's Accessibility Discord is a community that volunteers description-writing (and transcript-writing, translation, etc) for people who can't do so themselves, or feel overwhelmed trying to do so. Invite link here (please let me know if the link breaks!)

The way it works is simple: if you're planning to make an original post — posting art, for example — and don't know how to describe it, you can share the image there first with a request for a description, and someone will likely be able to volunteer one.

The clear upside here (other than being able to get multiple people's input, which is also nice) is that you can do this before making the Tumblr post. By having the description to include in your post from the start, you can guarantee that no inaccessible version of the post will be circulated.

You can also get opinions on whether a post needs to be tagged for flashing or eyestrain; just be sure to spoiler tag the image or gif you're posting if you think it might be a concern. (Also, refer here for info on how to word those tags.)

The server is very chill and focused on helping/answering questions, but if social anxiety is too much of a barrier to joining, or you can't use Discord for whatever reason, then you can instead do the following:

2. Ask for help on Tumblr, and update the post afterwards

[Plain text: "2. Ask for help on Tumblr, and update the post afterwards". End plain text.]

Myself and a lot of other people who describe posts on this site are extra happy to provide a description if OP asks for help with one! This does leave the post inaccessible at first, so to minimize the drawbacks, the best procedure for posting an image you can't fully describe would be as follows:

- Create the Tumblr post with the most bare-bones description you can manage, no matter how simple (something like "ID: fanart of X character from Y. End ID" or "ID: a watercolor painting. End ID," or literally whatever you can manage)

- Use a tool like Google Lens or OCR to extract text if applicable and if you have the energy, even if the text isn't a full image description (ideally also double-check the transcriptions, because they're not always perfect)

- Write in the body of the post that you'd appreciate a more detailed description in the notes!

- Tag the post as "undescribed" and/or "no id" only if you feel your current, bare-bones description is missing out on a lot of important context

- When you post it and someone provides an ID, edit the ID into the original post (don't use read mores, italics, or small text)

- Remove the undescribed tag, if applicable. If you're posting original art, you can even replace it with a tag like "accessible art" for visibility!

And congrats! You now have a described post that more people will be able to appreciate, and you should certainly feel free to self-reblog to give a boost to the new version!

808 notes


Text

TIPS MASTERPOST!

(Edited June 24)

These are all of my l1fting/b0rr0wing tips. I’m making this for me to come back to, and for some friends.

GENERAL TIPS:

- IF YOU SEE SOMEONE ELSE CONCEAL, GET OUT. I’ve seen so many LP staff talk about using this tactic to ease suspects into stealing and getting caught. Just finish checking out (if you’re buying) or walk out.

- IF YOU LIFT IN FRONT OF OTHERS, MAKE SURE THEY WONT SNITCH. Just point out someone else, go ‘i think they just put something in their bag’ and see what they say. If they want to snitch on a random stranger who didn’t even steal, they’ll snitch on you too.

- WEAR A SKIRT. Obviously men can’t do this because LP are weirdos, but wearing a skirt gives you the opportunity to hold things between your legs and just walk out of the store. No bags required.

- DO NOT LIFT ON MAJOR HOLIDAYS. A crowded store may be nice for taking attention away from you, but stores will bring in more LP on the days leading up to Christmas/Easter/Valentine’s day because these are high shrink times. If you really want to l1ft in a busy store, go on weekends or the afternoon.

- CONCEAL L1FT1NG TOOLS ON YOUR PERSON. If you use a hook or magnets, conceal them on your body so if your bags are searched the police don’t have proof of intent.

- DON’T RUN IF THEY CATCH YOU. Unless the items you took are over 100-300 dollars and you concealed in a dressing room/left behind no proof/know that they don’t have any evidence to stop you, running will increase your sentence if they snap a picture of your licence plate and call the police. Most LP just want the merchandise back and will let you off with a trespass warning.

- GET OVER DISGUST ABOUT HIDING THINGS IN YOUR BRA. Seriously, any l1fter who has boobs should be using their bra to conceal. Wear a loose shirt and if anyone calls you out for lumps, just tear up a little and say that’s just how you’re shaped. I’ve done this and it embarrasses people so much they usually just walk away.

- DO NOT IMMEDIATELY CONCEAL. For LP to stop you going out the door, they need to 1. See you take the item, 2. See you conceal, 3. See you walk past all points of sale. Waiting to conceal can give you a bumper for security to move onto other targets if they’re watching you.

- STOP DOING HUGE HAULS!!! Taking over $300 in a single haul from a single store will put LP on the lookout and make it more likely for someone else to be caught. For nonessentials just try to be conservative in what you borrow.

- NEVER LOOK AROUND BEFORE YOU CONCEAL. This makes LP watch you, and they can use this as proof of intent. Basically this completely destroys the defence of “I didn’t realize i put it in my bag” if you get caught, and can increase your sentence if police get called.

- DON’T SH0PL1FT IMMEDIATELY. If LP is watching you, they will likely continue to until a reasonable amount of time has passed or if someone more suspicious enters the store.

- HAVE A FRIEND ACT SUSPICIOUS. This only works if you go in separately, you enter first and your friend enters secondly. Have them wear baggy clothes, constantly look around, not make eye contact with staff, pick up high value items, etc. this draws LP (and likely staffed cameras) away from you.

- GO WITH SOMEONE ELSE. (preferably 1-2 others) this works best if you’re white and over the age of 20.

- DO NOT GO WITH SOMEONE ELSE. Teens are known for shoplifting in groups. If you’re not white, being in groups WILL make LP target you because of the appearance of an OCR (most of them are pos racists).

- BE NICE TO THE EMPLOYEES! Just be nice guys. They’re not paid well enough to risk their legs, and if you’re nice they’re less likely to keep you in the store for LP.

- SOMEONE IS ALWAYS WATCHING YOU. Target with cameras hidden in their shelves, LP, employees, snitches, someone is always watching your every move. Just assume that everyone will see you and it will make you careful, and reconsider risky items.

- DON’T LOITER! A lot of stores are rolling out ‘AI’ theft prevention, and the system will flag you if you stay in one spot for too long. (or even reach for a bag.)

- PUT TAGS ONTO THE OTHER ITEMS YOU TOOK TO THE CHANGING ROOM. Store employees double tag items all the time, and it’s way less suspicious than a tag taped to the underside of the changing room chair.

- TAKE THE HANGERS WITH YOU. Taking them off and leaving them in the changing rooms is a dead giveaway that you’ve taken something, and it’s far easier for store attendants to pin it on you.

- THEY’RE BUILDING A CASE ON YOU. Big box stores DO NOT PLAY. Just assume that they’re trying to get you on a felony charge and waiting until you’ve stolen enough.

- HAVE A PURSE WITH A GOOD LINING? Cut a small hole in the side, tuck small items/tags into it, and if you set off the alarms security won’t know why because everything’s hidden between the lining and shell of the purse.

- SCOUT OUT THE STORE BEFOREHAND. This obviously won’t work if you’re going out of town to l1ft, but it can help in so many ways. Not having to look up at cameras is so so so handy.

- DON’T IMMEDIATELY PUT THINGS INTO YOUR BAG. Just don’t do this, it alerts LP and the loss prevention AI shit.

- LOOK FOR TOWERS. These are the things that beep, and they can be hidden very well (underneath carpets, on the doorframe, etc)

- IF THE TOWERS BEEP, KEEP WALKING. Just pretend you didn’t hear anything, and if store LP are watching you, it gives you a little bit of time to walk away as most stores won’t chase. Innocent people beep all the time too, for phones and forgotten tags and whatever.

Slight of hand/Blind Spots:

- SCARVES! I love scarves. Scarves scarves scarves. Since I live in a colder place it’s all cool to ‘adjust’ the scarf and tuck items into it.

- GLOVES/TIGHT SLEEVES. I’m talking about the ones with holes cut out for your thumbs, that cover half of your palm. Super easy to tuck small things in while you’re messing around with the displays.

- CONCEAL IN A DRINK CUP! If you’re going to a mall, buy a drink from a chain fast food place that has a store in the mall. Drink it, wash it out, and cut an X shape into the bottom of the cup. This lets you tuck small things like makeup and jewelry into the cup without opening the lid, ever.

- BLIND SPOTS ARE ONLY BLIND FOR A BIT. Keep moving. Assume LP are watching you.

- IF YOU NEED A BLIND SPOT KNOCK SOMETHING OFF THE SHELVES. When you bend down to pick it up, use the space between your body and the floor as a blind spot.

- BLIND SPOTS ARE MORE LIKELY TO BE IN AISLES WITH LOW SHRINK. Things that aren’t stolen often, yarn/utensils/cheap shit that’s bulky. Avoid concealing in aisles with high shrink rates, makeup/batteries/razors/electronics.

- SPECIFIC AISLES ARE LOW RISK. What I mean by this is that aisles like greeting cards, chips, yarn, etc like the ones listed above, are unlikely to have cameras. If you haven’t had a chance to scope out the store/you’re travelling, try to conceal in these aisles.

Clothes:

- NO BAGGY CLOTHES OR HUGE BAGS. it’s suspicious. Bring a purse if you have to, preferable use shopping bags from other stores, and wear clothing appropriate for the weather. Tighter clothing is less suspicious.

- NAME BRANDS. Name brands make you look like someone who has money or wants to look like they have money. LP, and the average snitch, are less suspicious.

- DO NOT CARRY YOUR COAT OVER YOUR ARM UNLESS YOU HAVE TO. LP are aware of this tactic and will watch you for it.

- AVOID BAGS. If you’re going out to get makeup/small items that you can body conceal, don’t bring a purse. LP and store clerks are told to watch anyone carrying their own bags, so if you can body conceal ditch the bag.

- WEAR LOOSE PANTS/FLOOR LENGTH SKIRT AND LONG SOCKS. Long, tight, stretchy socks. It’s so easy to squat down, tie the shoelace that you untied before you entered the store, and tuck the merchandise into the socks. Or just pretend to look at the shelves closer to the floor. Walk out with the merchandise safely secured and hidden. I’ve done this with things as big as cds, just use a good pair of socks.

- WEAR LOOSE SLEEVES AND ARM WARMERS. Same premise as above, but remember to only use this one or the other, never both at once unless it’s very weather appropriate (cold if you’re using sweatshirt/pants, warm if you’re wearing linen/flowy fabric).

- LEARN TO SOW! You don’t need neat stitches or even a machine, but knowing how to sow lets you repair instead of throwing away (and hidden pockets, magic bags, and so many other handmade l1fting equipment is available)

- HIDDEN POCKETS. There’s many different ways to do this, but the main idea stays the same. Put some in your clothes, in your bags, in literally everything.

117 notes


Note

In what way does alt text serve as an accessibility tool for blind people? Do you use text to speech? I'm having trouble imagining that. I suppose I'm in general not understanding how a blind person might use Tumblr, but I'm particularly interested in the function of alt text.

In short, yes. We use text to speech (among other access technology like braille displays) very frequently to navigate online spaces. Text to speech software specifically designed for blind people is called a screen reader. When used on computers, screen readers enable us to navigate the entire interface using the keyboard instead of the mouse and hear everything on screen, as long as those things are accessible. The same applies for touchscreens on smartphones and tablets; instead of using keyboard commands, the screen reader alters the way touch affects the screen so we hear what we touch before anything actually gets activated. That part is hard to explain via text, but you should be able to find many videos online of blind people demonstrating how they use their phones.

As you may be able to guess, images are not exactly going to be accessible for text to speech software. Screen readers for blind users are getting better and better at incorporating OCR (optical character recognition) software to help pick up text in images, and rudimentary AI-driven image descriptions, but they are still nowhere near enough for us to get an accurate understanding of what is in an image the majority of the time without a human-made description.

Now I’m not exactly a programmer so the terminology I use might get kind of wonky here, but when you use the alt text feature, the text you write as an image description effectively gets sort of embedded onto the image itself. That way, when a screen reader lands on that image, instead of having to employ artificial intelligence to make mediocre guesses, it will read out exactly the text you wrote in the alt text section.
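To make the "embedded" idea a little more concrete: on web pages, alt text usually lives in the `alt` attribute of the image's HTML tag. The following is a minimal sketch, not how any particular screen reader is implemented. The sample markup, the class name `AltTextCollector`, and the "unlabeled image" fallback are all assumptions made up for illustration; real screen readers differ in what they say when no alt text exists.

```python
from html.parser import HTMLParser


class AltTextCollector(HTMLParser):
    """Collects what assistive software could announce for each <img> tag."""

    def __init__(self):
        super().__init__()
        self.announcements = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        alt = dict(attrs).get("alt")
        if alt:
            # The author's own words are available, so no guessing is needed.
            self.announcements.append(alt)
        else:
            # Hypothetical fallback; actual screen reader behavior varies.
            self.announcements.append("unlabeled image")


def announce_images(html):
    """Return one announcement per image found in the HTML string."""
    collector = AltTextCollector()
    collector.feed(html)
    return collector.announcements


# Hypothetical post markup: one described image, one undescribed image.
sample_post = (
    '<p>My new painting!</p>'
    '<img src="painting.png" alt="A watercolor painting of a red fox in snow.">'
    '<img src="untitled.png">'
)
print(announce_images(sample_post))
```

The first image comes back with the exact description its poster wrote; the second gives the software nothing to work with, which is the gap OCR and AI guesses try to fill.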

Not only that, but the majority of blind people are not completely blind, and usually still have at least some amount of residual vision. So there are many blind people who may not have access to a screen reader, but who may struggle to visually interpret what is in an image without being able to click the alt text button and read a description. Plus, it benefits folks with visual processing disorders as well, where their visual acuity might be fine, but their brain’s ability to interpret what they are seeing is not. Being able to click the alt text icon in the corner of an image and read a text description can help that person better interpret what they are seeing in the image, too.

Granted, in most cases, typing out an image description in the body of the post instead of in the alt text section often works just as well, so that is also an option. But there are many other posts in my image descriptions tag that go over the pros and cons of that, so I won’t digress into it here.

Utilizing alt text or any kind of image description on all of your social media posts that contain images is single-handedly one of the simplest and most effective things you can do to directly help blind people, even if you don’t know any blind people, and even if you think no blind people would be following you. There are more of us than you might think, and we have just as many varied interests and hobbies and beliefs as everyone else, so where there are people, there will also be blind people. We don’t only hang out in spaces to talk exclusively about blindness, we also hang out in fashion Facebook groups and tech subreddits and political Twitter hashtags and gaming related Discord servers and on and on and on. Even if you don’t think a blind person would follow you, you can’t know that for sure, and adding image descriptions is one of the most effective ways to accommodate us even if you don’t know we’re there.

I hope this helps give you a clearer understanding of just how important alt text and image descriptions as a whole are for blind accessibility, and how we make use of those tools when they are available.

391 notes


Text

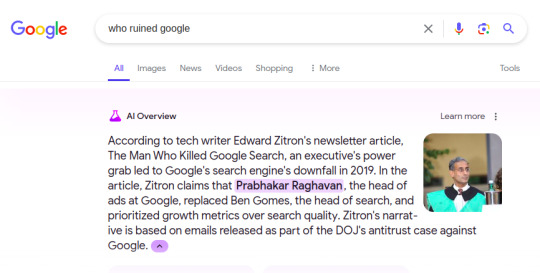

Image description: A screenshot of a google search for "who ruined google". The 'AI summary' response reads, "According to tech writer Edward Zitron's newsletter article, The Man Who Killed Google Search, an executive's power grab led to Google's search engine's downfall in 2019. In the article, Zitron claims that Prabhakar Raghavan, the head of ads at Google, replaced Ben Gomes, the head of search, and prioritized growth metrics over search quality. Zitron's narrative is based on emails released as part of the DOJ's antitrust case against Google." End description.

I asked Google "who ruined Google" and they replied honestly using their AI, which is now forced on all of us. It's too funny not to share!

#tech#the irony of using 'search image with google' to get the OCR tools to transcribe this for me#q#image described

1K notes


Text

Update on LGBT Information Removals from U.S. Government Websites

It has been observed that references to "LGBTQIA+" and similar acronyms have been shortened to "LGB" on several U.S. government websites. However, some agencies, such as the National Archives and the National Library of Medicine, appear unaffected.

When government agencies remove web pages, the standard procedure is to use a tool called Pagefreezer, which archives the content while indicating that the page is no longer actively maintained.

Pagefreezer Information: Pagefreezer for Government

Example of a site with Pagefreezer: Biden-Harris Administration Finalizes Rule to Strengthen Protections for Youth in the Child Welfare System – HHS

The Children’s Bureau within the Administration for Children & Families has taken the approach of marking pages as archived rather than removing them entirely:

LGBTQIA2S+ Resources – Administration for Children & Families

Pages That Are Still Active

National Archives
Title: LGBTQIA+ Research Resources
URL: https://www.archives.gov/research/lgbt/lgbtqia

National Library of Medicine
Title: The Role of Public Health in Ensuring LGBT+ Health Equity
URL: https://pmc.ncbi.nlm.nih.gov/articles/PMC4689648/

Substance Abuse and Mental Health Services Administration (SAMHSA)
Title: Support for the LGBTQI+ Community
URL: https://www.samhsa.gov/find-support/how-to-cope/support-for-lgbtqi-community

Library of Congress
Title: LGBTQ+ Pride Month Resources
URL: https://www.loc.gov/lgbt-pride-month/resources/

U.S. Department of Veterans Affairs
Title: LGBTQ+ Veteran Care
URL: https://www.patientcare.va.gov/LGBT/index.asp

National Park Service
Title: LGBTQ Heritage Initiative
URL: https://parkplanning.nps.gov/projectHome.cfm?parkID=442&projectID=53065

U.S. Department of Health & Human Services (HHS)
Title: LGBTQI+ Competency Training Resources
URL: https://www.hhs.gov/programs/topic-sites/lgbtqi/enhanced-resources/competency-training/index.html

Pages That Have Been Removed (With User Created Archive Links)

U.S. Department of Health & Human Services (HHS)
Title: Protecting the Rights of LGBTQI+ People
Removed URL: https://www.hhs.gov/ocr/lgbtqi/index.html
Archived Version: Wayback Machine

U.S. Department of Health & Human Services (HHS)
Title: LGBTQI+ Health & Well-being
Removed URL: https://www.hhs.gov/programs/topic-sites/lgbtqi/index.html
Archived Version: Wayback Machine

U.S. Department of State
Title: Global Equality Fund
Removed URL: https://www.state.gov/global-equality-fund
Archived Version: Wayback Machine

Youth.gov
Title: LGBT Youth Resources
Removed URL: https://youth.gov/federal-links/lgbt-youth-resources
Archived Version: Wayback Machine

U.S. Department of Housing and Urban Development (HUD)
Title: HUD LGBTQI+ Resources
Removed URL: https://www.hud.gov/lgbtqi
Archived Version: Wayback Machine

U.S. Department of Justice
Title: LGBTQI+ Working Group
Removed URL: https://www.justice.gov/crt/lgbtqi-working-group
Archived Version: Wayback Machine

U.S. Department of Agriculture (USDA)
Title: LGBTQ+ Special Emphasis Program
Removed URL: https://www.usda.gov/about-usda/general-information/staff-offices/office-assistant-secretary-civil-rights/special-emphasis-programs/lesbian-gay-bisexual-transgender-and-queer-lgbtq-program
Archived Version: Wayback Machine

Centers for Disease Control and Prevention (CDC)
Title: Supporting LGBTQ+ Youth
Removed URL: https://www.cdc.gov/youth-safe-environments/communication-resources/index.html
Archived Version: Wayback Machine

U.S. Census Bureau
Title: LGBT Adults Report Anxiety, Depression at All Ages
Removed URL: https://www.census.gov/library/stories/2022/12/lgbt-adults-report-anxiety-depression-at-all-ages.html
Archived Version: Wayback Machine
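The archive links in the original post did not survive as URLs here, so this is a hedged sketch of how a Wayback Machine lookup address can be built from any of the removed URLs above. It relies only on the Wayback Machine's public URL patterns (`web.archive.org/web/<timestamp>/<url>`, the `*` calendar view, and the `archive.org/wayback/available` lookup endpoint). The function names and the `20250101` timestamp are illustrative assumptions, not the specific captures the post linked to.

```python
from urllib.parse import urlencode


def wayback_snapshot_url(original_url, timestamp):
    """Address that resolves to the capture closest to the given timestamp.

    The timestamp uses the YYYYMMDDhhmmss pattern; shorter prefixes such as
    YYYYMMDD are also accepted by the Wayback Machine.
    """
    return f"https://web.archive.org/web/{timestamp}/{original_url}"


def wayback_calendar_url(original_url):
    """Address of the calendar view listing every capture of a page."""
    return f"https://web.archive.org/web/*/{original_url}"


def wayback_availability_query(original_url, timestamp):
    """Address of the JSON lookup reporting the closest available capture."""
    params = urlencode({"url": original_url, "timestamp": timestamp})
    return f"https://archive.org/wayback/available?{params}"


# Hypothetical date; choose one from before the page was taken down.
removed_page = "https://www.hud.gov/lgbtqi"
print(wayback_snapshot_url(removed_page, "20250101"))
print(wayback_calendar_url(removed_page))
print(wayback_availability_query(removed_page, "20250101"))
```

Nothing here contacts the network; it only assembles addresses to open in a browser, and whether a capture exists for a given page still has to be checked by visiting them.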

Notable Change in Terminology

The U.S. Department of State has a webpage titled "LGB Travelers", which previously included broader LGBTQ+ information but now only references "LGB."

URL: https://travel.state.gov/content/travel/en/international-travel/before-you-go/travelers-with-special-considerations/lgb.html

77 notes


Note

May I ask what scanners / equipment / software you're using in the utena art book project? I'm an artist and half the reason I rarely do traditional art is because I'm never happy with the artwork after it's scanned in. But the level of detail even in the blacks of Utena's uniform were all captured so beautifully! And even the very light colors are showing up so well! I'd love to know how you manage!

You know what's really fun? This used to be something you put in your site information section, the software and tools used! Not something that's as normal anymore, but let's give it a go, sorry it's long because I don't know what's new information and what's not! Herein: VANNA'S 'THIS IS AS SPECIFIC AS MY BREAK IS LONG' GUIDE/AIMLESS UNEDITED RAMBLE ABOUT SCANNING IMAGES

Scanning: Modern scanners, by and large, are shit for this. The audience for scanning has narrowed to business and work from home applications that favor text OCR, speed, and efficiency over archiving and scanning of photos and other such visual media. It makes sense--there was a time when scanning your family photographs and such was a popular expected use of a scanner, but these days, the presumption is anything like that is already digital--what would you need the scanner to do that for? The scanner I used for this project is the same one I have been using for *checks notes* a decade now. I use an Epson Perfection V500. Because it is explicitly intended to be a photo scanner, it does three things that at this point, you will pay a niche user premium for in a scanner: extremely high DPI (dots per inch), extremely wide color range, and true lossless raws (BMP/TIFF.) I scan low quality print media at 600 dpi, high quality print media at 1200 dpi, and this artbook I scanned at 2400 dpi. This is obscene and results in files that are entire GB in size, but for my purposes and my approach, the largest, clearest, rawest copy of whatever I'm scanning is my goal. I don't rely on the scanner to do any post-processing. (At these sizes, the post-processing capacity of the scanner is rendered moot, anyway.) I will replace this scanner when it breaks by buying another identical one if I can find it. I have dropped, disassembled to clean, and abused this thing for a decade and I can't believe it still tolerates my shit. The trade off? Only a couple of my computers will run the ancient capture software right. LMAO. I spent a good week investigating scanners because of the insane Newtype project on my backburner, and the quality available to me now in a scanner is so depleted without spending over a thousand on one, that I'd probably just spin up a computer with Windows 7 on it just to use this one. That's how much of a difference the decade has made in what scanners do and why.
(Enshittification attacks! Yes, there are multiple consumer computer products that have actually declined in quality over the last decade.)
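For a rough sense of why 2400 dpi scans land in gigabyte territory, here is a back-of-the-envelope sketch. The page size (A4, about 8.27 by 11.69 inches) and 24-bit color (3 bytes per pixel) are assumptions for illustration; the artbook's real dimensions and the scanner's bit depth are not stated above.

```python
def uncompressed_scan_bytes(width_in, height_in, dpi, bytes_per_pixel=3):
    """Approximate size of an uncompressed scan (pixel data only, no header)."""
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    return width_px * height_px * bytes_per_pixel


# Assumed page size: A4 in inches. Swap in the real page dimensions.
PAGE_WIDTH_IN = 8.27
PAGE_HEIGHT_IN = 11.69

for dpi in (600, 1200, 2400):
    size = uncompressed_scan_bytes(PAGE_WIDTH_IN, PAGE_HEIGHT_IN, dpi)
    print(f"{dpi} dpi: about {size / 1e9:.2f} GB uncompressed")
```

Doubling the DPI quadruples the pixel count, so under these assumptions a full page goes from around a tenth of a gigabyte at 600 dpi to well over a gigabyte at 2400 dpi.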

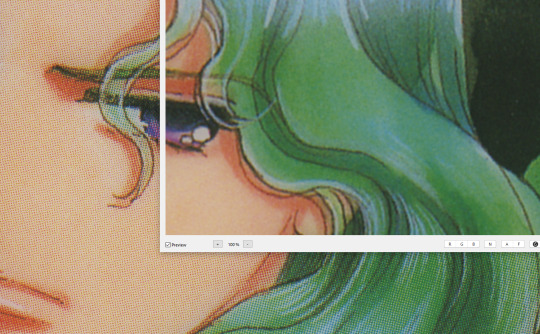

Post-processing: Photoshop. Sorry. I have been using Photoshop for literally decades now, it's the demon I know. While CSP is absolutely probably the better piece of software for most uses (art,) Photoshop is...well it's in the name. In all likelihood though, CSP can do all these things, and is a better product to give money to. I just don't know how. NOTENOTENOTE: Anywhere I discuss descreening and print moire I am specifically talking about how to clean up *printed media.* If you are scanning your own painting, this will not be a problem, but everything else about this advice will stand! The first thing you do with a 2400 dpi scan of Utena and Anthy hugging? Well, you open it in Photoshop, which you may or may not have paid for. Then you use a third party developer's plug-in to Descreen the image. I use Sattva. Now this may or may not be what you want in archiving!!! If fidelity to the original scan is the point, you may pass on this part--you are trying to preserve the print screen, moire, half-tones, and other ways print media tricks the eye. If you're me, this tool helps translate the raw scan of the printed dots on the page into the smooth color image you see in person. From there, the vast majority of your efforts will boil down to the following Photoshop tools: Levels/Curves, Color Balance, and Selective Color. Dust and Scratches, Median, Blur, and Remove Noise will also be close friends of the printed page to digital format archiver. Once you're happy with the broad strokes, you can start cropping and sizing it down to something reasonable. If you are dealing with lots of images with the same needs, like when I've scanned doujinshi pages, you can often streamline a lot of this using Photoshop Actions.

My blacks and whites are coming out so vivid this time because I do all color post-processing in Photoshop after the fact, after a descreen tool has been used to translate the dot matrix colors to solids they're intended to portray--in my experience trying to color correct for dark and light colors is a hot mess until that process is done, because Photoshop sees the full range of the dots on the image and the colors they comprise, instead of actually blending them into their intended shades. I don't correct the levels until I've descreened to some extent.

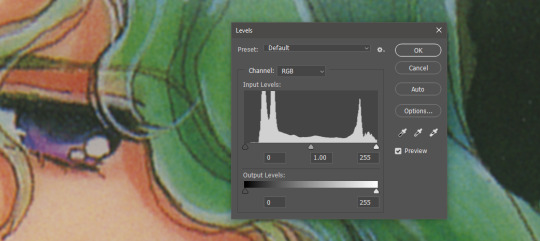

As you can see, the print pattern contains the information of the original painting, but if you try to correct the blacks and whites, you'll get a janky mess. *Then* you change the Levels:

If you've ever edited audio, then dealing with photo Levels and Curves will be familiar to you! A well cut and cleaned piece of audio will not cut off the highs and lows, but also will make sure it uses the full range available to it. Modern scanners are trying to do this all for you, so they blow out the colors and increase the brightness and contrast significantly, because solid blacks and solid whites are often the entire thing you're aiming for--document scanning, basically. This is like when audio is made so loud details at the high and low get cut off. Boo.

What I get instead is as much detail as possible, but also at a volume that needs correcting:

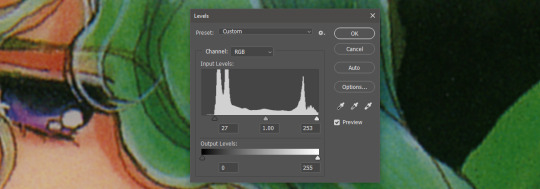

Cutting off the unused color ranges (in this case it's all dark), you get the best chance of capturing the original black and white range:

In some cases, I edit beyond this--for doujinshi scans, I aim for solid blacks and whites, because I need the file sizes to be normal and can't spend gigs of space on dust. For accuracy though, this is where I'd generally stop.
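The "cut off the unused range" step can be sketched numerically. This is a simplified stand-in for the black-point and white-point sliders of a Levels adjustment, not Photoshop's actual implementation (which also has a midtone/gamma control). The function name and the sample pixel values are hypothetical.

```python
def apply_levels(pixels, black_point, white_point):
    """Linearly stretch 8-bit values so black_point maps to 0 and white_point to 255.

    Values outside the chosen range are clipped, which is why the points
    should sit at the edges of the range the scan really uses.
    """
    if white_point <= black_point:
        raise ValueError("white_point must be greater than black_point")
    scale = 255 / (white_point - black_point)
    adjusted = []
    for value in pixels:
        stretched = round((value - black_point) * scale)
        adjusted.append(max(0, min(255, stretched)))
    return adjusted


# Hypothetical washed-out scan: nothing darker than 60 or brighter than 250.
raw_scan = [60, 95, 130, 200, 250]
print(apply_levels(raw_scan, black_point=60, white_point=250))
```

Setting the points tighter than the data (say, a black point of 100 here) would crush the darkest values to solid black, which is the trade-off made on purpose for the doujinshi scans and avoided when accuracy is the goal.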

For scanning artwork, the major factor here that may be fucking up your game? Yep. The scanner. Modern scanners are like cheap microphones that blow out the audio, when what you want is the ancient microphone that captures your cat farting in the next room over. While you can compensate A LOT in Photoshop and bring out blacks and whites that scanners fuck up, at the end of the day, what's probably stopping you up is that you want to use your scanner for something scanners are no longer designed to do well. If you aren't crazy like me and likely to get a vintage scanner for this purpose, keep in mind that what you are looking for is specifically *a photo scanner.* These are the ones designed to capture the most range, and at the highest DPI. It will be a flatbed. Don't waste your time with anything else.

Hot tip: if you aren't scanning often, look into your local library or photo processing store. They will have access to modern scanners that specialize in the same priorities I've listed here, and many will scan to your specifications (high dpi, lossless.)

Ahem. I hope that helps, and/or was interesting to someone!!!

#utena#image archiving#scanning#archiving#revolutionary girl utena#digitizing#photo scanner#art scanning

242 notes


Text

I've seen surprisingly little celebration about this: the Biden administration just this past Friday (April 26th, 2024) issued a rule that does the following:

Explicitly states that queer people (including transgender people) are protected from discrimination in healthcare, as set out by the ACA. This means they cannot be discriminated against or denied care based on their status as a transgender person.

Adds that nondiscrimination rules also apply to things like AI tools that may be used by healthcare professionals, and that those tools need to be evaluated to ensure that they do not enable discrimination.

It explicitly extends nondiscrimination protections to these cases and gets in front of the whole "oh well I put the numbers into the machine and the machine just happened to do racism about it so it's fine and it's not my fault" issue. Instead, this rule says "actually, it's your job to know what that machine is and will do, and failure to do that is your fault," which is really good for patient protection!!

All in all, pretty fucking cool shit, hell yeah.

65 notes


Text

How I Customize Windows and Android

Windows: Rainmeter

Rainmeter Skins

Rainmeter | Deviantart

r/Rainmeter

Rainmeter is where I get nifty desktop widgets (skins). There are a ton of skins online and you can spend hours just getting caught up in customizing. There are clocks, disk information, music visualizers, weather widgets*, and more.

I get most of my skins from the links I posted, but they are by no means the only resources for Rainmeter skins. r/Rainmeter and Deviantart have some awesome inspiration.

This is what my desktop looks like right now:

Dock: Dock 2 v1.5

Icons: icons8 - this is probably the best free resource for icons I know of

"Good Evening [name]": Simple Clean

Clock: Simplony

* Note about weather widgets: Older Rainmeter skins that use old weather APIs will likely not work. The Rainmeter forums have lists of weather skins that do work.

Windows: Useful Things for Workflow

Flow Launcher - this is basically a search bar, app launcher, and even easy-access terminal all in one. The default hotkey is Alt+Space. I use this almost primarily to do quick calculations. There are a ton of plugins and I've barely scratched the surface with how I use it.

ShareX - This is my screenshot tool and I love it. Admittedly, I find it difficult to configure, but once I had it set up, I didn't really have to adjust it. You can create custom hotkeys to screenshot your entire screen, or to select your screen, or even use OCR. This has saved me a ton of time copying over text in images and making it searchable.

Bonus - Get Rid of Windows Web Search in the Start Menu: If you're comfortable with editing your registry, and you want to get rid of the pesky web results in Windows search, this fix is what I used to get rid of it.

Android: Nova Launcher

This is my main Android launcher that I've been using for almost as long as I've owned a smartphone, and it's super customizable. The best part is that it's free with no ads, and you can purchase premium at a one-time cost.

The main things I use it for are app drawer tabs, renaming apps, hiding apps, and changing the icons.

I've had premium for so long that I've forgotten what the features were, but looking at the website, the one feature I use is app folders.

This is what my phone homescreen and app drawers look like:

Time/Weather: Breezy Weather

Calendar: Month: Calendar Widget (I got this on sale for like 30 cents once but there are a plethora of good calendar apps out there)

Icons: Whicons - White Icon Pack

Advanced Customization

Further things to enhance your customization experience to look into include:

Flashing a custom Android ROM (e.g. LineageOS)

Give up on Windows & install Linux instead (Ubuntu is a good one to start with)

Android app modification: ReVanced apps (includes Tumblr), Distraction Free Instagram

Miscellaneous notes under the cut:

None of these links are affiliate links. These are all tools I happen to use on a daily basis and I'm not being paid to promote them.

Install Rainmeter skins and programs I recommend at your own risk. Before altering Windows, such as editing the registry, make sure you have everything backed up.

The Windows web search fix works on my Windows 11 machine. I don't know if it works for Windows 10, but I do know I was able to disable it in Windows 10 at some point, so your mileage may vary.

Install non-Play Store apps at your own risk. (Although in my opinion, open source APKs are less sketchy than some apps on the Play Store...) Always check where you're downloading APKs from!

The wallpaper for my desktop and phone are custom wallpapers I made myself.

(At the request of @christ-chan-official)

11 notes


Text

The Right Are Engineering A Recession In NZ

tumblr isn't very good at local news, which is why i tend to get my nz politics information from elsewhere. so i can confidently tell you that aotearoa under national is totally, utterly fucked. like, not just in terms of all the social progress they plan to undo, though they very much do plan to do all of that.

national+act+nzfirst have committed to a financial policy that makes zero fucking economic sense. you know how every time the economy is in bad shape, tories seize the good economic opportunity to slash services or give tax cuts to the rich? imagine if that was happening for just no reason at all. there’s no crisis we’re facing this would even marginally help, but that's what nact+nzf's tax cut policy is anyway.

aotearoa is currently in a cost of living crisis, like much of the world, and our inflation is, to give it its technical term, "sticky". This means that it's no longer shooting up the graph like crazy, but according to predictions it should have started to come down more by now, and it hasn't; it is sitting at an unsustainably high level.

Inflation is bad because it eats away at the value of your money (not something you want generally) but this inflation is especially bad because it's inflation we created to ward off a recession back in 2020. NZ had the hardest and fastest lockdowns in the world, but at a huge cost -- our economy basically stopped overnight. Without goods and services being bought and sold, we would have been plunged into a financial crisis. But instead the government borrowed money to fund the wage subsidy and pay workers through the lockdowns, injecting money into and stimulating the economy.

This was a bill that was always going to come back to bite us, and for the past several years, the Labour government and the Reserve Bank had been playing a balancing game with our economy, steering us between a recession and a wage-price inflation spiral, with a recession definitely being the preferable one of the two. We actually had a short, soft one that we’ve come out of, exactly what Grant Robertson and Adrian Orr were aiming for.

Recessions can be small or big - inflation spirals are usually just big. We wanted to aim for a "soft" landing recession by hiking interest rates just enough to bring inflation back under control. The Reserve Bank uses its tool - the Official Cash Rate, or the OCR, which basically sets the price of interest rates across the country - and the government can also use its powers to set fiscal policy to help the economy. A lot of the criticism Labour received before losing the election was about overspending in areas post-pandemic, as putting money into the economy through debt-funded government spending genuinely causes inflation.

What a government should do during a time of inflation is remove money from the economy. For example, a right wing government would often issue an austerity policy, where they cut the amount of government spending through slashing programmes, benefits, staff, etc etc. A government could also increase taxes so people have less money to spend, could pay down government debt, could invest the money into a fund (e.g. NZ has a superannuation fund, set up by Labour and designed to eventually be self-funding, that National have paused payments on when getting into government). It doesn't matter too much what, theoretically speaking -- the point is to get the money out of the economy.

What you definitely, definitely don't want to do during a period of high and sticky inflation is put more money into the economy. That would do the opposite of what you want. Labour were rightfully (at some points) criticised for their inflationary policies. So you'd think National would take their criticisms of Labour’s debt blowout and start paying it down to show how responsible they are, right? No, they’re cutting taxes for (mostly) the wealthy while offsetting this with austerity measures to make this “fiscally neutral”. They will make up for the inflationary effects of doling out money to landlords by cutting back essential government services, trying to frame it as a personnel and budget blowout (it’s not) and saying Labour mismanaged the books and we are in terrible financial shape (we are not; we have a triple A credit rating).

And further, it’s becoming increasingly hard to ignore our infrastructure crisis at nearly every level and every location. Our water systems need billions of dollars of investment that our councils can’t afford to borrow, our rates are shooting up (and so will our rents), our ferries are old and broken down, and Minister of Finance Nicola Willis just canned the “too expensive” deal that was needed to replace them, with most of the money going into wharf upgrades that are desperately needed. There was a huge sunk cost; we’re not going to be able to buy shit now. The ferries link the North and the South Island and are vital infrastructure; when they break down (which they did multiple times last year) it causes chaos and brings things to a standstill.

Why are they doing this? Land. It’s always about fucking land. All of National have divested from shares and have bought into land under the guise of this removing the “conflict of interest” that would exist if they had invested into specific companies. The usual alternative that solves this is a blind trust, but that’s not what most of the caucus has money in. Luxon alone sold about 12 million dollars worth of Air NZ shares and now has a property portfolio worth 20+ million. Oh, and he’s charging the taxpayer $50,000 a year to live in his own house. That’s 2.5 times what I get on the benefit that he’s cutting and putting sanctions on.

Nact don’t care if businesses go under and share prices crash; they’ll just sell their houses and buy stocks for cheaper. Their only concern is propping up the housing market ponzi scheme that they have all invested at the top of. This is why they’ve allowed councils to opt out of densification requirements and why they cut back the brightline test and are trying to boost the population with migrant workers; all of these things make house prices go up, make housing better for investors who make millions in untaxed capital gains.

NACT will not let the property market crash any further. Despite what they’re saying out loud, they actually want it to increase.

And they’re more than happy to wreck the economy to do it.

85 notes


Text

At 8:22 am on December 4 last year, a car traveling down a small residential road in Alabama used its license-plate-reading cameras to take photos of vehicles it passed. One image, which does not contain a vehicle or a license plate, shows a bright red “Trump” campaign sign placed in front of someone’s garage. In the background is a banner referencing Israel, a holly wreath, and a festive inflatable snowman.

Another image taken on a different day by a different vehicle shows a “Steelworkers for Harris-Walz” sign stuck in the lawn in front of someone’s home. A construction worker, with his face unblurred, is pictured near another Harris sign. Other photos show Trump and Biden (including “Fuck Biden”) bumper stickers on the back of trucks and cars across America. One photo, taken in November 2023, shows a partially torn bumper sticker supporting the Obama-Biden lineup.

These images were generated by AI-powered cameras mounted on cars and trucks, initially designed to capture license plates, but which are now photographing political lawn signs outside private homes, individuals wearing T-shirts with text, and vehicles displaying pro-abortion bumper stickers—all while recording the precise locations of these observations. Newly obtained data reviewed by WIRED shows how a tool originally intended for traffic enforcement has evolved into a system capable of monitoring speech protected by the US Constitution.

The detailed photographs all surfaced in search results produced by the systems of DRN Data, a license-plate-recognition (LPR) company owned by Motorola Solutions. The LPR system can be used by private investigators, repossession agents, and insurance companies; a related Motorola business, called Vigilant, gives cops access to the same LPR data.

However, files shared with WIRED by artist Julia Weist, who is documenting restricted datasets as part of her work, show how those with access to the LPR system can search for common phrases or names, such as those of politicians, and be served with photographs where the search term is present, even if it is not displayed on license plates.

A search result for the license plates from Delaware vehicles with the text “Trump” returned more than 150 images showing people’s homes and bumper stickers. Each search result includes the date, time, and exact location of where a photograph was taken.

“I searched for the word ‘believe,’ and that is all lawn signs. There’s things just painted on planters on the side of the road, and then someone wearing a sweatshirt that says ‘Believe.’” Weist says. “I did a search for the word ‘lost,’ and it found the flyers that people put up for lost dogs and cats.”

Beyond highlighting the far-reaching nature of LPR technology, which has collected billions of images of license plates, the research also shows how people’s personal political views and their homes can be recorded into vast databases that can be queried.

“It really reveals the extent to which surveillance is happening on a mass scale in the quiet streets of America,” says Jay Stanley, a senior policy analyst at the American Civil Liberties Union. “That surveillance is not limited just to license plates, but also to a lot of other potentially very revealing information about people.”

DRN, in a statement issued to WIRED, said it complies with “all applicable laws and regulations.”

Billions of Photos

License-plate-recognition systems, broadly, work by first capturing an image of a vehicle; then they use optical character recognition (OCR) technology to identify and extract the text from the vehicle's license plate within the captured image. Motorola-owned DRN sells multiple license-plate-recognition cameras: a fixed camera that can be placed near roads, identify a vehicle’s make and model, and capture images of vehicles traveling up to 150 mph; a “quick deploy” camera that can be attached to buildings and monitor vehicles at properties; and mobile cameras that can be placed on dashboards or be mounted to vehicles and capture images when they are driven around.

Over more than a decade, DRN has amassed more than 15 billion “vehicle sightings” across the United States, and it claims in its marketing materials that it amasses more than 250 million sightings per month. Images in DRN’s commercial database are shared with police using its Vigilant system, but images captured by law enforcement are not shared back into the wider database.

The system is partly fueled by DRN “affiliates” who install cameras in their vehicles, such as repossession trucks, and capture license plates as they drive around. Each vehicle can have up to four cameras attached to it, capturing images in all angles. These affiliates earn monthly bonuses and can also receive free cameras and search credits.

In 2022, Weist became a certified private investigator in New York State. In doing so, she unlocked the ability to access the vast array of surveillance software accessible to PIs. Weist could access DRN’s analytics system, DRNsights, as part of a package through investigations company IRBsearch. (After Weist published an op-ed detailing her work, IRBsearch conducted an audit of her account and discontinued it. The company did not respond to WIRED’s request for comment.)

“There is a difference between tools that are publicly accessible, like Google Street View, and things that are searchable,” Weist says. While conducting her work, Weist ran multiple searches for words and popular terms, which found results far beyond license plates. In data she shared with WIRED, a search for “Planned Parenthood,” for instance, returned stickers on cars, on bumpers, and in windows, both for and against the reproductive health services organization. Civil liberties groups have already raised concerns about how license-plate-reader data could be weaponized against those seeking abortion.

Weist says she is concerned with how the search tools could be misused when there is increasing political violence and divisiveness in society. While not linked to license plate data, one law enforcement official in Ohio recently said people should “write down” the addresses of people who display yard signs supporting Vice President Kamala Harris, the 2024 Democratic presidential nominee, exemplifying how a searchable database of citizens’ political affiliations could be abused.

A 2016 report by the Associated Press revealed widespread misuse of confidential law enforcement databases by police officers nationwide. In 2022, WIRED revealed that hundreds of US Immigration and Customs Enforcement employees and contractors were investigated for abusing similar databases, including LPR systems. The alleged misconduct in both reports ranged from stalking and harassment to sharing information with criminals.

While people place signs in their lawns or bumper stickers on their cars to inform people of their views and potentially to influence those around them, the ACLU’s Stanley says it is intended for “human-scale visibility,” not that of machines. “Perhaps they want to express themselves in their communities, to their neighbors, but they don't necessarily want to be logged into a nationwide database that’s accessible to police authorities,” Stanley says.

Weist says the system, at the very least, should be able to filter out images that do not contain license plate data and not make mistakes. “Any number of times is too many times, especially when it's finding stuff like what people are wearing or lawn signs,” Weist says.

“License plate recognition (LPR) technology supports public safety and community services, from helping to find abducted children and stolen vehicles to automating toll collection and lowering insurance premiums by mitigating insurance fraud,” Jeremiah Wheeler, the president of DRN, says in a statement.

Weist believes that, given the relatively small number of images showing bumper stickers compared to the large number of vehicles with them, Motorola Solutions may be attempting to filter out images containing bumper stickers or other text.

Wheeler did not respond to WIRED's questions about whether there are limits on what can be searched in license plate databases, why images of homes with lawn signs but no vehicles in sight appeared in search results, or if filters are used to reduce such images.

“DRNsights complies with all applicable laws and regulations,” Wheeler says. “The DRNsights tool allows authorized parties to access license plate information and associated vehicle information that is captured in public locations and visible to all. Access is restricted to customers with certain permissible purposes under the law, and those in breach have their access revoked.”

AI Everywhere

License-plate-recognition systems have flourished in recent years as cameras have become smaller and machine-learning algorithms have improved. These systems, such as DRN and rival Flock, mark part of a change in the way people are surveilled as they move around cities and neighborhoods.

Increasingly, CCTV cameras are being equipped with AI to monitor people’s movements and even detect their emotions. The systems have the potential to alert officials, who may not be able to constantly monitor CCTV footage, to real-world events. However, whether license plate recognition can reduce crime has been questioned.

“When government or private companies promote license plate readers, they make it sound like the technology is only looking for lawbreakers or people suspected of stealing a car or involved in an amber alert, but that’s just not how the technology works,” says Dave Maass, the director of investigations at civil liberties group the Electronic Frontier Foundation. “The technology collects everyone's data and stores that data often for immense periods of time.”

Over time, the technology may become more capable, too. Maass, who has long researched license-plate-recognition systems, says companies are now trying to do “vehicle fingerprinting,” where they determine the make, model, and year of the vehicle based on its shape and also determine if there’s damage to the vehicle. DRN’s product pages say one upcoming update will allow insurance companies to see if a car is being used for ride-sharing.

“The way that the country is set up was to protect citizens from government overreach, but there’s not a lot put in place to protect us from private actors who are engaged in business meant to make money,” says Nicole McConlogue, an associate professor of law at the Mitchell Hamline School of Law, who has researched license-plate-surveillance systems and their potential for discrimination.

“The volume that they’re able to do this in is what makes it really troubling,” McConlogue says of vehicles moving around streets collecting images. “When you do that, you're carrying the incentives of the people that are collecting the data. But also, in the United States, you’re carrying with it the legacy of segregation and redlining, because that left a mark on the composition of neighborhoods.”

19 notes


Note

i hope this doesn't sound patronising if you've already tried that route, but in case you haven't: if this is a text that has been given to you as is and been produced by a general OCR, it might be worth looking into whether ppl have already trained language-specific OCR models for your field of study and using that to transcribe the scanned text again. there's a lot of transcription solutions/software and different fields prefer different ones, and idk the standard for Celtic studies personally, but a site i use often (transkribus) has 2 Irish models whose related projects you might be able to use as a starting point for research at least. best of luck to you either way!

So there are several factors at work with the OCR problems with this text specifically.

The PDF of the text is from Archive. The library copy it was scanned from has various pencil markings and annotations that are interfering with the printed text -- it's not a clean scan. It's also not super high definition, so letters like "h" sometimes get misread as "li", even though they're totally readable to human eyes.

The edition uses frequent italics and brackets to show where abbreviations in the manuscript have been expanded. Individual italicised letters confuse the OCR, as do random square brackets in the middle of words.

It also has a lot of superscript numbers corresponding to manuscript variants in the footnotes. Sometimes these are in the middle of a word. This also confuses most OCR systems, even if they can tell that the footnotes are separate from the main text.

The language of the text is late Middle / Early Modern Irish, from two different manuscripts that have their own unique spelling quirks (for example, one of them loves to spell Cú Chulainn's name "Cú Cholain", which is a vibe).

In order to run the text through a more sophisticated OCR system that was equipped to cope with a) annotations, b) weird formatting and punctuation, c) incredibly frequent footnotes (variants), and d) non-standardised spelling (which throws off many language models), I would probably still need to have a reliable, clear, and high-definition scan of the text. Which would require re-digitising it from scratch.

So, the quickest and easiest way to get a version of the text that I personally can use is to sit here and type up 20,000 words into a document. This is 2-4 days' work, depending on how focused I am, and gives me the chance to go through the text in detail and spot things I might miss otherwise, so it's probably a whole lot less effort for more benefit than trying to adapt an entire language model that could read this terrible PDF. Especially as I have no experience of using these programmes so would have a steep learning curve.

Now, somebody absolutely should do that, so we could get proper searchable editions of more things. But honestly, if using transcription tools for medieval/early modern Irish I think there are higher priorities than things already available in printed form, so I doubt it's at the top of anyone's to-do list!

#it's only about 50% the OCR's fault here and it's 50% the PDF just being terrible to start with#which is. a different problem#finn is not doing a phd#answered

16 notes


Text

i truly wish i could see the appeal in a "universal software" the way other people do. i just can't understand canva. i need to put together a program at the library explaining how to use canva. but i still can't answer the question of why you would use canva for myself. it's a photo editor, but it doesn't do any real photo editing. you can make pdf documents, but its OCR is so terrible that you can't really edit something once it's made. or anything you've scanned. it's a slideshow designer. but... who seriously came to canva looking to make slideshows? it's a video editor???? this program that most people use to make basic ass flyers is also a video editor???? and we aren't even talking about how frustrating it is to use as a freemium platform. oh you don't have to pay for it UNLESS YOU WANT ANY OF THE PARTS YOU'D ACTUALLY COME TO CANVA FOR. the only thing it's actually good for is providing free to use stock photos, clip art, and templates inside of the program, but if you want anything that looks halfway decent you have to sign up for their ridiculous subscription.

i'm just. if you actually care about what you do 90% of the things canva does it's insufficient for. it's a bad photo editor, it's a bad document editor, it's a bad video editor, it's a bad presentation creator, and we haven't even gotten to how bad the AI tools are. i hate AI so much but Canva's AI isn't even worth attempting to use. you ask for a picture of gold leaf and it serves you gold soup. whatever gold soup is. but also. i guess it technically does all of those things. so if you're not actually interested in anything you make being good then. there you go.

6 notes


Text

Top 7 AI Projects for High-Paying Jobs in 2025

Along the way, I’ve realized that the best candidates for AI and Data Science roles aren’t always the ones with top degrees or fancy universities. It’s the ones who show a genuine passion for the field through creative projects.

For example, one candidate built a personal stock prediction model to learn and shared it online—simple but impactful. These projects showed initiative and problem-solving skills, which hiring managers value more than technical expertise. I landed my first internship by showcasing similar projects.

In this article, I’ll share AI project ideas for high-paying jobs that will help you stand out, along with tips and tools to get you started on your journey.

1. Credit Report Analysis Using AI

Traditional credit scoring models often fail to assess those with thin credit histories, such as young people or immigrants. The goal of this project is to create an AI-based credit report analysis system that draws on alternative data sources, such as social media presence (used ethically and with user consent), online transaction history, and even utility bill payments, to provide a comprehensive view of an individual’s creditworthiness.

Example

Many companies in the financial sector use AI to speed up document processing and customer onboarding. Inscribe offers AI-powered document automation solutions that make the credit assessment process easier. Your project would involve:

Data Collection & Preprocessing: Gather data from diverse sources, ensuring privacy and security.

Feature Engineering: Identify meaningful features from non-traditional sources.

Model Building: Train models such as Random Forest or Gradient Boosting to predict creditworthiness.

Explainability: Use tools to explain predictions, ensuring transparency and fairness.

The frameworks and tools for this project would include Python, AWS S3, Streamlit, and machine learning techniques, offering a deep dive into the combination of AI and financial systems.
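As a rough illustration of the feature engineering step, here is a minimal sketch that turns utility bill payment records into a few numeric features a model could train on. The record layout, the function name `engineer_features`, and the feature names are all hypothetical; a real project would choose its own features and feed them to a model such as Random Forest or Gradient Boosting.

```python
from datetime import date
from statistics import mean


def engineer_features(payments):
    """Turn (due_date, paid_date) utility-bill records into model features.

    The record layout is hypothetical and stands in for whatever
    alternative data the project actually collects.
    """
    if not payments:
        raise ValueError("need at least one payment record")
    # Days late per bill; early payments count as zero days late.
    days_late = [max((paid - due).days, 0) for due, paid in payments]
    return {
        "on_time_ratio": sum(d == 0 for d in days_late) / len(payments),
        "avg_days_late": mean(days_late),
        "history_length": len(payments),
    }


payments = [
    (date(2024, 1, 15), date(2024, 1, 14)),  # paid a day early
    (date(2024, 2, 15), date(2024, 2, 15)),  # paid on the due date
    (date(2024, 3, 15), date(2024, 3, 25)),  # paid ten days late
    (date(2024, 4, 15), date(2024, 4, 15)),  # paid on the due date
]
features = engineer_features(payments)
print(features)
```

Keeping the features this simple also helps the explainability step, since each one maps to something a person can check against their own bills.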

2. Summarization with Generative AI

In today’s information-overloaded world, summarization is a vital skill. This project demonstrates the power of Generative AI in creating concise, informative summaries of diverse content, whether it’s a document, a financial report, or even a complex story.

Consider a tool like CreditPulse, which utilizes large language models (LLMs) to summarize credit risk reports. Your project would involve fine-tuning pre-trained LLMs for specific summarization tasks. Here’s how to break it down:

Generative AI: Explore the key challenges in summarizing large, complex documents, and generate solutions using LLMs.

Training the Model: Fine-tune LLMs to better summarize financial reports or stories.

Synthetic Data Generation: Use generative AI to create synthetic data for training summarization models, especially if real-world data is limited.

By taking on this project, you demonstrate expertise in Natural Language Processing (NLP) and LLMs, which are essential skills for the AI-driven world.

3. Document Validation with Vision AI

Know Your Customer (KYC) processes are essential for fraud prevention and adherence to financial regulations. This is a Vision AI project that automates the document validation in the KYC process. Think of things like AI-powered Optical Character Recognition systems that scan and validate details from documents like your passport or driver’s license. This project would involve:

Data Preprocessing: Cleaning and organizing scanned document images.

Computer Vision Models: Train models to authenticate documents using OCR and image processing techniques.

Document Validation: Verify the authenticity of customer data based on visual and textual information.

This project demonstrates your expertise in computer vision, image processing, and handling unstructured data—skills that are highly valuable in real-world applications.
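One concrete validation check that can run on OCR output is the check digit used in the machine-readable zone (MRZ) of passports under ICAO Doc 9303. The sketch below assumes the OCR step has already produced the field text; applying this particular check to the project is an assumption, and the helper names are hypothetical.

```python
def mrz_check_digit(field: str) -> int:
    """Compute the ICAO 9303 check digit for one MRZ field.

    Digits keep their value, A-Z map to 10-35, the filler '<' is 0,
    and positions are weighted 7, 3, 1 repeating.
    """
    weights = (7, 3, 1)
    total = 0
    for i, ch in enumerate(field):
        if "0" <= ch <= "9":
            value = int(ch)
        elif "A" <= ch <= "Z":
            value = ord(ch) - ord("A") + 10
        elif ch == "<":
            value = 0
        else:
            raise ValueError(f"invalid MRZ character: {ch!r}")
        total += value * weights[i % 3]
    return total % 10


def field_is_valid(field: str, check_digit: str) -> bool:
    """Return True when the OCR'd field matches its OCR'd check digit."""
    if len(check_digit) != 1 or not "0" <= check_digit <= "9":
        return False
    return mrz_check_digit(field) == int(check_digit)


# Document number and check digit from the ICAO specimen passport.
clean_read = field_is_valid("L898902C3", "6")
# Same field with the final 3 misread as 8, as an OCR engine might do.
bad_read = field_is_valid("L898902C8", "6")
print(clean_read, bad_read)
```

A failed check does not say which character is wrong, only that the field should be re-scanned or sent for manual review.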

4. Text-to-SQL System with a Clarification Engine

Natural language interaction with databases is one of the most exciting areas of development in AI. This text-to-SQL project shows how to turn a plain-language question into an SQL query, so that you can query a database the same way you would ask a person. The Clarification Engine, which you’ll build to address ambiguity in user queries, will ask follow-up questions whenever a query is ambiguous. The project involves:

Dataset Creation: Build a dataset of natural language questions paired with SQL queries.

Model Training: Use sequence-to-sequence models to convert natural language into SQL.

Clarification Engine: Develop an AI system that asks follow-up questions to resolve ambiguity (e.g., “Which product?”, “What time frame?”).

Evaluation: Test the model’s accuracy and usability.

Incorporating tools like Google Vertex AI and PaLM 2, which are optimized for multilingual and reasoning tasks, can make this system even more powerful and versatile.
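To make the clarification idea concrete, here is a minimal sketch that swaps the sequence-to-sequence model for simple rule-based slot filling. It looks for a product and a year in the question, asks a follow-up for each missing piece, and only builds a query once both are known. The `sales` table, the product list, and all function names are hypothetical.

```python
import re
import sqlite3

KNOWN_PRODUCTS = ("widget", "gadget")  # hypothetical product catalogue


def parse_question(question):
    """Extract the slots this toy parser understands; None marks a gap."""
    q = question.lower()
    product = next((p for p in KNOWN_PRODUCTS if p in q), None)
    year = re.search(r"\b(20\d{2})\b", q)
    return {"product": product, "year": int(year.group(1)) if year else None}


def clarify_or_query(question):
    """Return follow-up questions if the request is ambiguous, else SQL."""
    slots = parse_question(question)
    follow_ups = []
    if slots["product"] is None:
        follow_ups.append("Which product?")
    if slots["year"] is None:
        follow_ups.append("What time frame?")
    if follow_ups:
        return {"clarify": follow_ups}
    # Parameterized query: user-derived values never enter the SQL string.
    sql = "SELECT SUM(amount) FROM sales WHERE product = ? AND year = ?"
    return {"sql": sql, "params": (slots["product"], slots["year"])}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, year INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("widget", 2024, 100.0), ("widget", 2024, 50.0), ("gadget", 2024, 70.0)],
)

vague = clarify_or_query("How much did we sell?")
precise = clarify_or_query("How much widget revenue did we make in 2024?")
total = conn.execute(precise["sql"], precise["params"]).fetchone()[0]
print(vague)
print(total)
```

In a fuller system the slot detection would come from the trained model, but the control flow (detect gaps, ask, then generate SQL) can stay the same.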

5. Fine-tuning LLM for Synthetic Data Generation

Where access to real data is missing or extremely limited because of its sensitivity, synthetic data becomes indispensable. In this project, you will fine-tune an LLM to generate synthetic datasets that mirror the characteristics of a real dataset. This is a fascinating space, particularly since synthetic data can be used to train AI models in the absence of real-world data. Steps for this project include:

Dataset Analysis: Examine the dataset you want to mimic.

LLM Fine-tuning: Train an LLM on the real dataset to learn its patterns.

Synthetic Data Generation: Use the fine-tuned model to generate artificial data samples.

Evaluation: Test the utility of the synthetic data for AI model training.

This project showcases proficiency in LLMs and data augmentation techniques, both of which are becoming increasingly essential in AI and Data Science.
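For the evaluation step, one simple starting point is a fidelity check that compares basic statistics of a real column against its synthetic counterpart. The sketch below is only that first check, with made-up numbers and a hypothetical `fidelity_report` helper; it does not measure downstream model utility, which would need a train-on-synthetic, test-on-real comparison.

```python
from statistics import mean, stdev


def fidelity_report(real, synthetic):
    """Compare one numeric column of a real dataset with its synthetic twin."""
    return {
        # How far apart the averages are, in the column's own units.
        "mean_gap": abs(mean(real) - mean(synthetic)),
        # Close to 1.0 when the synthetic spread matches the real spread.
        "stdev_ratio": stdev(synthetic) / stdev(real),
    }


real_incomes = [30, 40, 50, 60, 70]        # illustrative values only
synthetic_incomes = [32, 41, 49, 61, 72]   # illustrative values only
report = fidelity_report(real_incomes, synthetic_incomes)
print(report)
```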

6. Personalized Recommendation System with LLMs, RAG, and Statistical Models

Recommendation systems are everywhere—Netflix, Amazon, Spotify—but creating a truly effective one requires more than just user preferences. This project combines LLMs, Retrieval Augmented Generation (RAG), and traditional statistical models to deliver highly personalized recommendations. The project involves:

Data Collection: Gather user data and interaction history.

LLMs for Preference Understanding: Use LLMs to analyze user reviews, search history, or social media posts.

RAG for Context: Implement RAG to fetch relevant data from a knowledge base to refine recommendations.

Collaborative Filtering: Use statistical models to account for user interaction patterns.

Hybrid System: Combine the outputs of the models for accurate recommendations.

This project will showcase your ability to integrate diverse AI and data science techniques to build a sophisticated recommendation engine.
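The collaborative filtering and hybrid steps above could be sketched as follows. This is a minimal illustration under stated assumptions: the ratings table is invented, `content_scores` is a hypothetical stand-in for whatever preference score the LLM and RAG components would produce (assumed to lie between 0 and 1), and a weighted sum is only one of several ways to combine models.

```python
from math import sqrt

def cosine(a, b):
    """Cosine similarity between two equal-length rating vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def collaborative_scores(ratings, user):
    """Predict ratings for the user's unrated items (0 = unrated)
    as a similarity-weighted average of other users' ratings."""
    scores = {}
    for item, own_rating in enumerate(ratings[user]):
        if own_rating != 0:
            continue
        num = den = 0.0
        for other, row in ratings.items():
            if other == user or row[item] == 0:
                continue
            sim = cosine(ratings[user], row)
            num += sim * row[item]
            den += sim
        scores[item] = num / den if den else 0.0
    return scores

def hybrid_rank(cf_scores, content_scores, alpha=0.7, max_rating=5):
    """Blend normalized collaborative scores with content scores and rank items."""
    blended = {
        item: alpha * (cf / max_rating) + (1 - alpha) * content_scores.get(item, 0.0)
        for item, cf in cf_scores.items()
    }
    return sorted(blended, key=blended.get, reverse=True)

# Hypothetical ratings: one row per user, one column per item
ratings = {
    "ana": [5, 0, 0, 1],
    "ben": [4, 5, 1, 0],
    "cy":  [1, 2, 5, 5],
}
# Hypothetical stand-in for LLM/RAG-derived preference scores
content_scores = {1: 0.2, 2: 0.9}

cf = collaborative_scores(ratings, "ana")
print(hybrid_rank(cf, content_scores, alpha=0.7))
print(hybrid_rank(cf, content_scores, alpha=0.3))
```

The `alpha` weight controls how much the interaction history counts relative to the content signal. With these toy numbers, a high `alpha` puts item 1 first and a low `alpha` puts item 2 first, so in practice the weight would be tuned on held-out interactions.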

7. Self-Host an LLM Using Ollama or vLLM and Reduce Inference Latency

Hosting and deploying an LLM efficiently is an essential skill in AI. This project focuses on optimizing the deployment of an LLM using tools like Ollama or vLLM to reduce inference latency and improve performance. You’ll explore techniques like quantization, pruning, and caching to speed up model inference, making it more scalable. This project involves:

Model Deployment: Choose an open-source LLM and deploy it using Ollama or vLLM.

Optimization: Implement strategies like quantization to improve inference speed.

Performance Monitoring: Evaluate the model’s performance and adjust as needed.

Scalability: Use load balancing to manage multiple concurrent requests.

By completing this project, you’ll prove your expertise in LLM deployment, optimization, and building scalable AI infrastructure.
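Of the techniques mentioned above, response caching is the easiest to show in a few lines. The sketch below is an illustration only: `slow_generate` is a hypothetical stand-in that sleeps to simulate inference instead of calling a real Ollama or vLLM server, and the cache is a plain in-memory `functools.lru_cache` keyed on the exact prompt.

```python
import time
from functools import lru_cache

def slow_generate(prompt):
    """Hypothetical stand-in for a model call; sleeps to simulate inference."""
    time.sleep(0.05)
    return f"response to: {prompt}"

@lru_cache(maxsize=256)
def cached_generate(prompt):
    """Return a stored response when the exact same prompt was seen before."""
    return slow_generate(prompt)

def timed(fn, prompt):
    """Call fn(prompt) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(prompt)
    return result, time.perf_counter() - start

first, cold = timed(cached_generate, "What is quantization?")
second, warm = timed(cached_generate, "What is quantization?")

print(f"cold: {cold * 1000:.1f} ms, warm: {warm * 1000:.3f} ms")
print(cached_generate.cache_info())
```

Exact-match caching only helps when prompts repeat and when returning the same answer twice is acceptable, so it complements rather than replaces quantization and the other model-level optimizations.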

Conclusion

Break into a six-figure AI and Data Science career with these 7 projects. The goal is not to just get these projects done but to have the concepts in your head and the communication skills to explain your approach.

Consider documenting your projects on GitHub and writing about your experiences in blog posts; this not only showcases your skills but also shows that you are interested and willing to take the initiative.

Remember, in this rapidly evolving field, staying updated with the latest tools and techniques is crucial. Check out resources like NucleusBox for valuable insights and inspiration. The world of AI is vast and full of opportunities—so go ahead, dive in, and build something truly impactful!


Text

How do data capture services benefit a business?

Data Capture services

In the current digital age, data is recognized as one of the most valuable assets for any business. However, collecting it manually is time-consuming and prone to errors. That’s where data capture services come in. These services enable enterprises to collect, organize, store, and process information quickly and accurately, resulting in more informed decisions and greater efficiency across the organization.

Faster Access to Information:

Data capture services automate the process of gathering data from various sources, including documents, forms, emails, and other digital assets. This speeds up access to critical information, enabling employees to work more efficiently and respond promptly to customer needs or business challenges.

Improved Accuracy and Reduced Errors:

Manual data entry often leads to mistakes that can affect ongoing business operations. With data capture technology, information is extracted using tools such as OCR (Optical Character Recognition), with the assistance of AI, so that a higher level of accuracy is maintained. Fewer errors mean better outcomes and more reliable reports.

Streamlined Business Operations:

By automating data collection, businesses can save time and resources. Staff no longer need to spend hours entering data by hand, which lets them focus on more valuable tasks. This drives enhanced productivity and smoother workflows.

Enhanced Customer Service:

Quick and precise data collection ensures that customer records, queries, and transactions are handled efficiently. This leads to faster service delivery, fewer complaints, and a better overall customer experience, all key factors in staying competitive.

Better Decision-Making:

Accurate and well-organized data gives leaders a clearer view of their business performance. With real-time insights from data capture, they can identify current trends, make informed decisions, and respond to market changes with confidence.

Scalable for Growing Businesses:

As a business grows, managing large volumes of data becomes more difficult. Data capture services scale with your company, handling increasing amounts of information without sacrificing speed or accuracy. Many businesses trust experts like Suma Soft, IBM, Cyntexa, and Cignex for efficient data capture solutions. These providers offer tailored services that boost data accuracy, ensure fast turnaround, and support long-term digital transformation.

#it services#technology#saas#software#saas development company#saas technology#digital transformation


Text

10 people I'd like to know better

(please make a new post, don't reblog)

Last Song - Who Killed U. N. Owen ver. 暁Records×Liz Triangle. I may not play Touhou Project games but I'm happy for its existence. There are so many great songs coming from that game and its fandom.

Favourite Colour - Purple! From blueish purple to a more pinkish one. I think colours like dark indigo, which are between blue and purple, have grown on me.

Last Book - Babel by R. F. Kuang. Amazing book that I strongly recommend, even if it's a very self-indulgent book for me, as I'm a translator. The book is something akin to a mystery novel, a criticism of Western operationalism and a discussion of the power of languages. As a translator I also must add that despite the slight differences, mostly because I live in a different country and in a different time period from the characters in the book, the Translation classes are on point for what you find in a real translation course. There was so much good research done for this book it's astounding. I'm also deeply saddened there has been no translation of this book to European Portuguese. This would be my dream book to translate.

I've also never read so many mentions of Portuguese wine in my life??? You British folk must really love that stuff because I swear the characters only drink Portuguese wine.

Last Show - The Ranma 1/2 remake! Really fun watch. Highly recommend. It's such a funny comfort show. It still maintains the old style of the 80s manga so you'll get this weird feeling of old gender norms but considering the theme of the show it actually works in its favour. The characters are from a time, place and society that is deeply patriarchal and the show plays with it quite a bit, criticizing and showing how pointless a lot of those divisions are. And it does this in a very entertaining, chaotic way.

Last Movie - I don't remember... I haven't watched a film in a while.

Sweet/spicy/savory - I like sweet a lot. But I don't dislike the others. The exception is acidic things. I don't like lemon and I'm not much of a fan of oranges either. Things that use lemon and orange flavour are included. However I do like squeezed lemon on my food. I do like both lemon and orange as an addition to something else.

Relationship Status - Single

Last thing I googled - "Irk meaning". Not exactly to know the meaning actually, but to make sure I spelt it right 😅

Current Obsession - A mix of multiple things. I think I'm either in a transitional stage or have had so much work that I couldn't manage a full hyper-fixation that would leave me unable to think of anything else. The things I've been into lately: Neuro-sama (the streamer), Honkai Star Rail (This one has lasted two years now I think) and I guess how AI works and how it can be used as a tool to help instead of being something that can only do harm. As a physically disabled person I think people who are only on the "AI sucks" boat sometimes don't understand that it can genuinely be groundbreaking in some cases. I've never had such good OCR in my life. I can finally read things the old teachers that don't understand anything about technology send me. Google's AI image description is actually pretty good!

I must add that I am not an AI apologist. I hate seeing what's happening to actors. No AI image generator can ever reach the art of a real human. AI is stupid and probabilistic, there are squiggly lines drawn by a 5 year old with more thought put into them. And yes, for now it needs a lot of energy. However, with Deepseek we've seen that it's possible to lower it a lot and there are things generative AI can help with. As always, and as with the internet, it depends on how it's used. You can't trust it just like you can't trust anything that is on the internet. The problem, as is with most things, is the hypocritical assholes at the big companies who steal others' work and then get mad when people steal from their partially stolen work. That and their greedy ideas of putting AI everywhere even where it isn't needed.

Looking forward to - Finishing my degree and starting work for real.

Tagged by: @corollarytower

Tagging: (No pressure! And if there's a question you don't feel comfortable answering that's ok too.) @vulto-cor-de-rosa @itsjustagoober @innitnotfound @blueliac @theuwumaster @proudfreakmetarusonikku @hiveswap @griancraft @peeares @olversui
