# Vision API


9 AI Tools to Build Websites and Landing Pages: Revolutionizing Web Design

In the ever-evolving world of web design, staying ahead of the curve is essential to creating visually stunning and highly functional websites. With the advent of artificial intelligence (AI), designers and developers now have a powerful set of tools at their disposal to revolutionize the web design process. AI website design tools offer innovative solutions that streamline and enhance the creation of websites and landing pages.

In this article, we will explore nine AI tools that are reshaping the web design landscape and highlight the benefits of using AI tools for website building.

1. Wix ADI:

Wix ADI (Artificial Design Intelligence) is a game-changer for website building. It utilizes AI algorithms to automatically generate customized website designs based on user preferences and content inputs. With Wix ADI, even users with no design experience can create stunning websites in a matter of minutes.

2. Grid:

Grid is an AI-powered website builder that uses machine learning to analyze design principles and create visually pleasing websites. It takes user inputs, such as branding elements and content, and generates unique layouts and designs tailored to the user's needs. Grid eliminates the need for manual coding and design expertise, making it accessible to users of all skill levels.

3. Firedrop:

Firedrop is an AI chatbot-based website builder that guides users through the entire website creation process. The AI-driven chatbot asks questions, gathers information, and generates a personalized website design. It also offers real-time editing and customization options, allowing users to make changes effortlessly.

4. Bookmark:

Bookmark is an AI website builder that combines artificial intelligence with human assistance. It provides an intuitive interface where users can select a design style and content preferences. The AI algorithms then generate a website layout, which can be further customized using Bookmark's drag-and-drop editor. Users also have access to AI-driven features like automated content creation and personalized marketing recommendations.

5. Adobe Sensei:

Adobe Sensei is an AI and machine learning platform that enhances the capabilities of Adobe's creative tools, including website design software like Adobe XD. Sensei analyzes user behavior, content, and design elements to offer intelligent suggestions, automate repetitive tasks, and speed up the design process. It empowers designers to create impactful websites with greater efficiency and creativity.

6. The Grid:

The Grid is an AI-driven website builder that uses machine learning to analyze user content and generate unique, responsive website designs. It employs a card-based layout system, automatically arranging and resizing content for optimal visual appeal. The Grid's AI algorithms continuously learn from user feedback, improving the quality of designs over time.

7. Elementor:

Elementor is a popular page-builder plugin for WordPress that simplifies the process of building landing pages. It offers a drag-and-drop interface with a wide range of pre-designed templates and widgets. Alongside responsive editing and dynamic content integration, Elementor provides AI-assisted features like intelligent design suggestions, enabling users to create professional landing pages efficiently.

8. Canva:

Although primarily known as a graphic design tool, Canva incorporates AI elements to make website design accessible to non-designers. It offers a user-friendly interface with customizable templates, stock images, and drag-and-drop functionality. Canva's AI algorithms suggest design elements and provide automatic resizing options, making it easier to create visually appealing websites and landing pages.

9. Sketch2React:

Sketch2React is an AI tool that simplifies the process of converting design files from Sketch (a popular design software) into interactive, code-based websites. It automates the conversion process, reducing the need for manual coding and accelerating the development timeline. Sketch2React's AI capabilities ensure that the resulting websites are responsive and optimized for different devices.

Benefits of Using AI Tools for Website Development:

1. Time-saving: AI tools automate repetitive and time-consuming tasks, allowing designers and developers to focus on creativity and strategic aspects of web design.

2. Cost-effective: AI tools reduce the need for extensive coding knowledge or for hiring professional designers, making website building more affordable for businesses of all sizes.

3. User-friendly: AI website builders provide intuitive interfaces, drag-and-drop functionality, and automated design suggestions, making them accessible to users with limited technical skills.

4. Personalization: AI algorithms analyze user preferences and content inputs to generate personalized website designs that align with the brand and target audience.

5. Enhanced creativity: AI tools offer design suggestions, templates, and automated content creation features that inspire creativity and enable designers to experiment with new ideas.

6. Improved user experience: AI-driven website builders generally optimize for responsiveness, usability, and accessibility, which can enhance user experiences and increase engagement.

Conclusion:

AI tools have revolutionized the web design industry by simplifying and enhancing the process of building websites and landing pages. Whether it's generating personalized designs, automating repetitive tasks, or offering intelligent design suggestions, AI-driven solutions empower designers and non-designers alike to create visually stunning and highly functional websites. By leveraging the power of AI, businesses can save time, reduce costs, and deliver exceptional user experiences, ultimately driving success in the digital landscape. As AI technology continues to advance, we can expect even more innovative tools to emerge, further revolutionizing the field of web design. Embracing these AI tools is key to staying at the forefront of web design trends and creating websites that captivate audiences and achieve business goals.

#Hire Machine Learning Developer#Machine Learning Development in India#Looking For Machine Learning Developer#Looking For Machine Learning Dev Team#Data Analytics Company#Vision AI Solution#Vision AI Development#Vision AI Software#Vision API#Vertex AI Vision#Web Development#Web Design#AI Tool

---

#ashtonfei#vision ai#vision api#google vision#text detection#image labeling#google apps script#google sheets

---

Api Biru Rebellion

#mcd#aphblr#shadow knights#jury of redesign#the council#vision board#xavier the admirer#laurance zvahl#api biru#shadow knight rebellion#api biru rebellion#moodboard

---

Why Agentic Document Extraction Is Replacing OCR for Smarter Document Automation

New Post has been published on https://thedigitalinsider.com/why-agentic-document-extraction-is-replacing-ocr-for-smarter-document-automation/


For many years, businesses have used Optical Character Recognition (OCR) to convert physical documents into digital formats, transforming the process of data entry. However, as businesses face more complex workflows, OCR’s limitations are becoming clear. It struggles to handle unstructured layouts, handwritten text, and embedded images, and it often fails to interpret the context or relationships between different parts of a document. These limitations are increasingly problematic in today’s fast-paced business environment.

Agentic Document Extraction, however, represents a significant advancement. By employing AI technologies such as Machine Learning (ML), Natural Language Processing (NLP), and visual grounding, this technology not only extracts text but also understands the structure and context of documents. With accuracy rates above 95% and processing times reduced from hours to just minutes, Agentic Document Extraction is transforming how businesses handle documents, offering a powerful solution to the challenges OCR cannot overcome.

Why OCR is No Longer Enough

For years, OCR was the preferred technology for digitizing documents, revolutionizing how data was processed. It helped automate data entry by converting printed text into machine-readable formats, streamlining workflows across many industries. However, as business processes have evolved, OCR’s limitations have become more apparent.

One of the significant challenges with OCR is its inability to handle unstructured data. In industries like healthcare, OCR often struggles with interpreting handwritten text. Prescriptions or medical records, which often have varying handwriting and inconsistent formatting, can be misinterpreted, leading to errors that may harm patient safety. Agentic Document Extraction addresses this by accurately extracting handwritten data, ensuring the information can be integrated into healthcare systems, improving patient care.

In finance, OCR’s inability to recognize relationships between different data points within documents can lead to mistakes. For example, an OCR system might extract data from an invoice without linking it to a purchase order, resulting in potential financial discrepancies. Agentic Document Extraction solves this problem by understanding the context of the document, allowing it to recognize these relationships and flag discrepancies in real-time, helping to prevent costly errors and fraud.

OCR also faces challenges when dealing with documents that require manual validation. The technology often misinterprets numbers or text, leading to manual corrections that can slow down business operations. In the legal sector, OCR may misinterpret legal terms or miss annotations, which requires lawyers to intervene manually. Agentic Document Extraction removes this step, offering precise interpretations of legal language and preserving the original structure, making it a more reliable tool for legal professionals.

A distinguishing feature of Agentic Document Extraction is the use of advanced AI, which goes beyond simple text recognition. It understands the document’s layout and context, enabling it to identify and preserve tables, forms, and flowcharts while accurately extracting data. This is particularly useful in industries like e-commerce, where product catalogues have diverse layouts. Agentic Document Extraction automatically processes these complex formats, extracting product details like names, prices, and descriptions while ensuring proper alignment.

Another prominent feature of Agentic Document Extraction is its use of visual grounding, which helps identify the exact location of data within a document. For example, when processing an invoice, the system not only extracts the invoice number but also highlights its location on the page, ensuring the data is captured accurately in context. This feature is particularly valuable in industries like logistics, where large volumes of shipping invoices and customs documents are processed. Agentic Document Extraction improves accuracy by capturing critical information like tracking numbers and delivery addresses, reducing errors and improving efficiency.

Finally, Agentic Document Extraction’s ability to adapt to new document formats is another significant advantage over OCR. While OCR systems require manual reprogramming when new document types or layouts arise, Agentic Document Extraction learns from each new document it processes. This adaptability is especially valuable in industries like insurance, where claim forms and policy documents vary from one insurer to another. Agentic Document Extraction can process a wide range of document formats without needing to adjust the system, making it highly scalable and efficient for businesses that deal with diverse document types.

The Technology Behind Agentic Document Extraction

Agentic Document Extraction brings together several advanced technologies to address the limitations of traditional OCR, offering a more powerful way to process and understand documents. It uses deep learning, NLP, spatial computing, and system integration to extract meaningful data accurately and efficiently.

At the core of Agentic Document Extraction are deep learning models trained on large amounts of data from both structured and unstructured documents. These models use Convolutional Neural Networks (CNNs) to analyze document images, detecting essential elements like text, tables, and signatures at the pixel level. Architectures like ResNet-50 and EfficientNet help the system identify key features in the document.

Additionally, Agentic Document Extraction employs transformer-based models like LayoutLM and DocFormer, which combine visual, textual, and positional information to understand how different elements of a document relate to each other. For example, it can connect a table header to the data it represents. Another powerful feature of Agentic Document Extraction is few-shot learning. It allows the system to adapt to new document types with minimal data, speeding up its deployment in specialized cases.
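To make the positional input concrete: LayoutLM-family models take a bounding box for every token, and the Hugging Face LayoutLM documentation expects those boxes on a 0-1000 scale relative to the page size. Below is a minimal sketch of that normalization, assuming (x0, y0, x1, y1) pixel boxes produced by an upstream OCR step. The function name is hypothetical.

```python
from typing import Tuple

BBox = Tuple[int, int, int, int]


def normalize_bbox(box: Tuple[float, float, float, float], width: float, height: float) -> BBox:
    """Scale a pixel-space (x0, y0, x1, y1) box to the 0-1000 grid used by LayoutLM-style models."""
    if width <= 0 or height <= 0:
        raise ValueError("page width and height must be positive")

    def scale(value: float, extent: float) -> int:
        # Clamp so boxes that spill past the page edge stay inside 0..1000.
        return max(0, min(1000, int(1000 * value / extent)))

    x0, y0, x1, y1 = box
    return (scale(x0, width), scale(y0, height), scale(x1, width), scale(y1, height))


# A word box on a 2480 x 3508 px page (A4 scanned at 300 dpi).
print(normalize_bbox((248, 351, 496, 400), width=2480, height=3508))  # (100, 100, 200, 114)
```

Because the grid is relative, the same word gets the same coordinates whether the page was scanned at 150 or 300 dpi. This is part of what lets the model combine position with text.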

The NLP capabilities of Agentic Document Extraction go beyond simple text extraction. It uses advanced models for Named Entity Recognition (NER), such as BERT, to identify essential data points like invoice numbers or medical codes. Agentic Document Extraction can also resolve ambiguous terms in a document, linking them to the proper references, even when the text is unclear. This makes it especially useful for industries like healthcare or finance, where precision is critical. In financial documents, Agentic Document Extraction can accurately link fields like “total_amount” to corresponding line items, ensuring consistency in calculations.
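The "total_amount" check comes down to recomputing the total from the extracted line items. Here is a minimal sketch, assuming a hypothetical extraction result where amounts arrive as strings. `Decimal` avoids floating-point rounding on currency, and the one-cent tolerance is an illustrative choice.

```python
from decimal import Decimal


def check_total(invoice: dict, tolerance: Decimal = Decimal("0.01")) -> bool:
    """Return True if the extracted total_amount matches the sum of its line items.

    `invoice` is a hypothetical extraction result, e.g.
    {"total_amount": "42.50", "line_items": [{"quantity": "2", "unit_price": "10.00"}, ...]}.
    """
    computed = sum(
        (Decimal(item["quantity"]) * Decimal(item["unit_price"]) for item in invoice["line_items"]),
        Decimal("0"),
    )
    return abs(computed - Decimal(invoice["total_amount"])) <= tolerance


invoice = {
    "total_amount": "42.50",
    "line_items": [
        {"quantity": "2", "unit_price": "10.00"},
        {"quantity": "1", "unit_price": "22.50"},
    ],
}
print(check_total(invoice))  # True: 2 * 10.00 + 22.50 = 42.50
```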

Another critical aspect of Agentic Document Extraction is its use of spatial computing. Unlike OCR, which treats documents as a linear sequence of text, Agentic Document Extraction understands documents as structured 2D layouts. It uses computer vision tools like OpenCV and Mask R-CNN to detect tables, forms, and multi-column text. Agentic Document Extraction improves the accuracy of traditional OCR by correcting issues such as skewed perspectives and overlapping text.

It also employs Graph Neural Networks (GNNs) to understand how different elements in a document are related in space, such as a “total” value positioned below a table. This spatial reasoning ensures that the structure of documents is preserved, which is essential for tasks like financial reconciliation. Agentic Document Extraction also stores the extracted data with coordinates, ensuring transparency and traceability back to the original document.
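The source attributes this spatial reasoning to GNNs. The sketch below swaps in plain geometry to show the underlying idea. Each extracted element keeps its page coordinates (x0, y0, x1, y1, with y growing downward), and the function finds the nearest "total" element that starts just below a table and overlaps it horizontally. The `Element` type, the labels, and the 50-unit gap are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Element:
    label: str  # hypothetical element type, e.g. "table", "total", "text"
    text: str
    x0: float
    y0: float
    x1: float
    y1: float


def horizontal_overlap(a: Element, b: Element) -> float:
    """Width of the horizontal band shared by two boxes (0 if they do not overlap)."""
    return max(0.0, min(a.x1, b.x1) - max(a.x0, b.x0))


def total_below(table: Element, elements: List[Element], max_gap: float = 50.0) -> Optional[Element]:
    """Return the nearest 'total' element directly under the table, or None."""
    candidates = [
        e for e in elements
        if e.label == "total"
        and e.y0 >= table.y1                # starts below the table's bottom edge
        and e.y0 - table.y1 <= max_gap      # but not too far below it
        and horizontal_overlap(e, table) > 0
    ]
    return min(candidates, key=lambda e: e.y0 - table.y1, default=None)


table = Element("table", "", 100, 200, 500, 600)
elements = [
    table,
    Element("total", "Total: 1,250.00", 350, 610, 500, 630),
    Element("total", "Total: 99.00", 350, 900, 500, 920),  # too far below the table
]
match = total_below(table, elements)
print(match.text if match else None)  # Total: 1,250.00
```

Keeping the coordinates on each element is also what makes the result traceable. A reviewer can jump straight to the matched box on the original page.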

For businesses looking to integrate Agentic Document Extraction into their workflows, the system offers robust end-to-end automation. Documents are ingested through REST APIs or email parsers and stored in cloud-based systems like AWS S3. Once ingested, microservices, managed by platforms like Kubernetes, take care of processing the data using OCR, NLP, and validation modules in parallel. Validation is handled both by rule-based checks (like matching invoice totals) and machine learning algorithms that detect anomalies in the data. After extraction and validation, the data is synced with other business tools like ERP systems (SAP, NetSuite) or databases (PostgreSQL), ensuring that it is readily available for use.
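The processing and validation stages can be sketched in miniature. This is an illustration, not the system described. A thread pool stands in for the Kubernetes-managed microservices, and `extract` is a stub for the OCR and NLP modules. Only simple rule-based checks are shown (no ML anomaly detection), and the ERP/database sync is left as a comment. All names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from decimal import Decimal
from typing import Dict, List, Tuple

REQUIRED_FIELDS = ("invoice_number", "total")  # hypothetical rule set


def extract(doc: Dict) -> Dict:
    """Stub for the OCR/NLP services: here each 'document' already carries its fields."""
    return {"id": doc["id"], **doc.get("fields", {})}


def validate(record: Dict) -> List[str]:
    """Rule-based checks; an empty list means the record passed."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if name not in record]
    if "total" in record and Decimal(record["total"]) <= 0:
        problems.append("non-positive total")
    return problems


def process_batch(docs: List[Dict], workers: int = 4) -> Tuple[List[Dict], List[Tuple[str, List[str]]]]:
    """Extract documents concurrently, then split them into ready-to-sync and needs-review."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        records = list(pool.map(extract, docs))  # map() preserves input order
    ready, review = [], []
    for record in records:
        problems = validate(record)
        if problems:
            review.append((record["id"], problems))  # route to a human reviewer
        else:
            ready.append(record)  # a real pipeline would sync this to the ERP or database here
    return ready, review


docs = [
    {"id": "INV-1", "fields": {"invoice_number": "1001", "total": "30.00"}},
    {"id": "INV-2", "fields": {"total": "0.00"}},
]
ready, review = process_batch(docs)
print([r["id"] for r in ready], review)
```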

By combining these technologies, Agentic Document Extraction turns static documents into dynamic, actionable data. It moves beyond the limitations of traditional OCR, offering businesses a smarter, faster, and more accurate solution for document processing. This makes it a valuable tool across industries, enabling greater efficiency and new opportunities for automation.

5 Ways Agentic Document Extraction Outperforms OCR

While OCR is effective for basic document scanning, Agentic Document Extraction offers several advantages that make it a more suitable option for businesses looking to automate document processing and improve accuracy. Here’s how it excels:

Accuracy in Complex Documents

Agentic Document Extraction handles complex documents, such as those containing tables, charts, and handwritten signatures, far better than OCR. It reduces errors by up to 70%, making it ideal for industries like healthcare, where documents often include handwritten notes and complex layouts. For example, medical records that contain varying handwriting, tables, and images can be processed accurately, ensuring that critical information such as patient diagnoses and histories is correctly extracted, something OCR might struggle with.

Context-Aware Insights

Unlike OCR, which only extracts text, Agentic Document Extraction can analyze the context and relationships within a document. For instance, in banking, it can automatically flag unusual transactions when processing account statements, speeding up fraud detection. By understanding the relationships between different data points, Agentic Document Extraction allows businesses to make more informed decisions faster, providing a level of intelligence that traditional OCR cannot match.
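The article does not say which model flags unusual transactions. As a deliberately simple, non-ML stand-in, a modified z-score can flag amounts far from an account's typical spending. It is based on the median and the median absolute deviation (MAD), and 3.5 is a commonly used cut-off. Here is a sketch, assuming the amounts have already been extracted from the statement:

```python
from statistics import median
from typing import List


def flag_unusual(amounts: List[float], threshold: float = 3.5) -> List[float]:
    """Flag amounts whose modified z-score (median/MAD based) exceeds `threshold`."""
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        # Degenerate case: most amounts are identical, so anything different stands out.
        return [a for a in amounts if a != med]
    # 0.6745 rescales MAD so the score is comparable to a standard z-score for normal data.
    return [a for a in amounts if abs(0.6745 * (a - med) / mad) > threshold]


statement = [42.0, 38.5, 40.0, 45.0, 39.0, 41.0, 950.0]
print(flag_unusual(statement))  # [950.0]
```

Median-based statistics are used here because a single large transaction would inflate an ordinary mean and standard deviation enough to hide itself.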

Touchless Automation

OCR often requires manual validation to correct errors, slowing down workflows. Agentic Document Extraction, on the other hand, automates this process by applying validation rules such as “invoice totals must match line items.” This enables businesses to achieve efficient touchless processing. For example, in retail, invoices can be automatically validated without human intervention, ensuring that the amounts on invoices match purchase orders and deliveries, reducing errors and saving significant time.
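The retail check described here is often called a three-way match. The invoice is compared against the purchase order (what was ordered) and the delivery record (what was received). Below is a minimal sketch, assuming hypothetical dictionaries keyed by SKU. An empty result means the invoice could pass without human review.

```python
from decimal import Decimal
from typing import Dict, List


def three_way_match(po: Dict[str, Dict], delivery: Dict[str, int], invoice: Dict[str, Dict]) -> List[str]:
    """Return discrepancies between invoice, purchase order, and delivery.

    po / invoice map SKU -> {"qty": int, "price": str}; delivery maps SKU -> quantity received.
    """
    issues = []
    for sku, billed in invoice.items():
        ordered = po.get(sku)
        if ordered is None:
            issues.append(f"{sku}: billed but not on purchase order")
            continue
        received = delivery.get(sku, 0)
        if billed["qty"] > ordered["qty"]:
            issues.append(f"{sku}: billed {billed['qty']} but ordered {ordered['qty']}")
        if billed["qty"] > received:
            issues.append(f"{sku}: billed {billed['qty']} but received {received}")
        if Decimal(billed["price"]) != Decimal(ordered["price"]):
            issues.append(f"{sku}: billed price {billed['price']} != PO price {ordered['price']}")
    return issues


po = {"A100": {"qty": 10, "price": "4.00"}, "B200": {"qty": 5, "price": "12.50"}}
delivery = {"A100": 10, "B200": 4}
invoice = {"A100": {"qty": 10, "price": "4.00"}, "B200": {"qty": 5, "price": "12.50"}}
print(three_way_match(po, delivery, invoice))  # ['B200: billed 5 but received 4']
```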

Scalability

Traditional OCR systems face challenges when processing large volumes of documents, especially if the documents have varying formats. Agentic Document Extraction easily scales to handle thousands or even millions of documents daily, making it perfect for industries with dynamic data. In e-commerce, where product catalogs constantly change, or in healthcare, where decades of patient records need to be digitized, Agentic Document Extraction ensures that even high-volume, varied documents are processed efficiently.

Future-Proof Integration

Agentic Document Extraction integrates smoothly with other tools to share real-time data across platforms. This is especially valuable in fast-paced industries like logistics, where quick access to updated shipping details can make a significant difference. By connecting with other systems, Agentic Document Extraction ensures that critical data flows through the proper channels at the right time, improving operational efficiency.

Challenges and Considerations in Implementing Agentic Document Extraction

Agentic Document Extraction is changing the way businesses handle documents, but there are important factors to consider before adopting it. One challenge is working with low-quality documents, such as blurry scans or damaged text. Even advanced AI can have trouble extracting data from faded or distorted content. This is a particular concern in sectors like healthcare, where handwritten or old records are common. However, image preprocessing techniques such as deskewing and binarization are helping to address these issues: cleaning up scans with a library like OpenCV before passing them to an OCR engine such as Tesseract can improve extraction accuracy significantly.
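Binarization is commonly done with Otsu's method, which OpenCV exposes through `cv2.threshold` with the `cv2.THRESH_OTSU` flag. To show what that step computes without an OpenCV dependency, here is a pure-Python sketch. It picks the 8-bit threshold that maximizes the between-class variance of dark (ink) and light (paper) pixels. A flat list of grayscale values stands in for an image.

```python
from typing import List


def otsu_threshold(pixels: List[int]) -> int:
    """Return the 0-255 threshold that maximizes between-class variance (Otsu's method)."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    weight_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        weight_bg += hist[t]  # number of pixels with value <= t
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return best_t


def binarize(pixels: List[int]) -> List[int]:
    """Map pixels above the Otsu threshold to white (255) and the rest to black (0)."""
    t = otsu_threshold(pixels)
    return [255 if p > t else 0 for p in pixels]


# Dark ink around 30-35 and light paper around 200-210.
pixels = [30] * 50 + [35] * 50 + [200] * 50 + [210] * 50
print(otsu_threshold(pixels))  # 35
```

Deskewing, the other step mentioned, is a separate geometric correction (estimating the page's rotation angle and rotating it back) and is not shown here.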

Another consideration is the balance between cost and return on investment. The initial cost of Agentic Document Extraction can be high, especially for small businesses. However, the long-term benefits are significant. Companies using Agentic Document Extraction often see processing time reduced by 60-85% and error rates cut by 30-50%, which leads to a typical payback period of 6 to 12 months. As the technology advances, cloud-based Agentic Document Extraction solutions are becoming more affordable, with flexible pricing options that make them accessible to small and medium-sized businesses.
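The payback period is the up-front cost divided by net monthly savings. The inputs below are hypothetical placeholders, not figures from the article. They only show how a 60% cut in processing time might translate into a payback period.

```python
def payback_months(upfront_cost: float, monthly_docs: int, minutes_per_doc: float,
                   time_reduction: float, hourly_cost: float, monthly_fee: float) -> float:
    """Months until cumulative net savings cover the up-front cost."""
    hours_saved = monthly_docs * minutes_per_doc * time_reduction / 60
    net_monthly_savings = hours_saved * hourly_cost - monthly_fee
    if net_monthly_savings <= 0:
        raise ValueError("no net savings; the investment never pays back")
    return upfront_cost / net_monthly_savings


# Hypothetical inputs: 5,000 invoices/month at 6 minutes each, processed 60% faster,
# $30/hour staff cost, $2,000/month subscription, $60,000 up-front integration cost.
print(round(payback_months(60_000, 5_000, 6, 0.60, 30, 2_000), 1))  # 8.6
```

With these assumed inputs, 300 staff hours are saved per month, worth $9,000. Subtracting the $2,000 fee leaves $7,000 of net savings, so the $60,000 cost is recovered in about 8.6 months.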

Looking ahead, Agentic Document Extraction is evolving quickly. New features, like predictive extraction, allow systems to anticipate data needs. For example, it can automatically extract client addresses from recurring invoices or highlight important contract dates. Generative AI is also being integrated, allowing Agentic Document Extraction to not only extract data but also generate summaries or populate CRM systems with insights.

For businesses considering Agentic Document Extraction, it is vital to look for solutions that offer custom validation rules and transparent audit trails. This ensures compliance and trust in the extraction process.

The Bottom Line

In conclusion, Agentic Document Extraction is transforming document processing by offering higher accuracy, faster processing, and better data handling compared to traditional OCR. While it comes with challenges, such as managing low-quality inputs and initial investment costs, the long-term benefits, such as improved efficiency and reduced errors, make it a valuable tool for businesses.

As technology continues to evolve, the future of document processing looks bright with advancements like predictive extraction and generative AI. Businesses adopting Agentic Document Extraction can expect significant improvements in how they manage critical documents, ultimately leading to greater productivity and success.

#Agentic AI#Agentic AI applications#Agentic AI in information retrieval#Agentic AI in research#agentic document extraction#ai#Algorithms#anomalies#APIs#Artificial Intelligence#audit#automation#AWS#banking#BERT#Business#business environment#challenge#change#character recognition#charts#Cloud#CNN#Commerce#Companies#compliance#computer#Computer vision#computing#content

---

Recommendation Systems and Computer Vision: The AIs Transforming Our Digital Experience

Recommendation Systems: What Are They and What Are They For? Recommendation systems are artificial intelligence-based technologies designed to predict and suggest items (products, content, services) that a specific user might be interested in. These systems analyze behavior patterns, past preferences, and similarities between users to offer recommendations…
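The last idea, using similarities between users, is the basis of user-based collaborative filtering. Here is a minimal sketch with cosine similarity over a tiny hypothetical ratings table. Production recommenders are far more elaborate.

```python
from math import sqrt
from typing import Dict, List

Ratings = Dict[str, Dict[str, float]]  # user -> {item: rating}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two users, treating unrated items as 0."""
    common = set(a) & set(b)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if not common or norm_a == 0 or norm_b == 0:
        return 0.0
    return sum(a[i] * b[i] for i in common) / (norm_a * norm_b)


def recommend(ratings: Ratings, user: str, k: int = 2) -> List[str]:
    """Rank items the user has not rated by the similarity-weighted ratings of other users."""
    seen = ratings[user]
    scores: Dict[str, float] = {}
    for other, theirs in ratings.items():
        if other == user:
            continue
        sim = cosine(seen, theirs)
        if sim <= 0:
            continue
        for item, rating in theirs.items():
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:k]


ratings = {
    "ana": {"film_a": 5, "film_b": 4},
    "ben": {"film_a": 5, "film_b": 5, "film_c": 4},  # tastes close to ana's
    "eva": {"film_a": 1, "film_d": 5},               # tastes far from ana's
}
print(recommend(ratings, "ana"))  # ['film_c', 'film_d']
```

Scores are summed rather than averaged, so an item liked by a highly similar user (ben) outranks one liked by a dissimilar user (eva).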

#Amazon Recommendation System#Amazon Rekognition#Google Cloud Vision API#Google News#IBM Watson Visual Recognition#artificial intelligence#machine learning#Microsoft Azure Computer Vision#Netflix Recommendation Engine#OpenAI CLIP#personalization#recommendation systems#Spotify Discover Weekly#computer vision#YouTube Algorithm

---

Explore These Exciting DSU Micro Project Ideas

Are you a student looking for an interesting micro project to work on? Developing small, self-contained projects is a great way to build your skills and showcase your abilities. At the Distributed Systems University (DSU), we offer a wide range of micro project topics that cover a variety of domains. In this blog post, we’ll explore some exciting DSU…

#3D modeling#agricultural domain knowledge#Android#API design#AR frameworks (ARKit, ARCore)#backend development#best micro project topics#BLOCKCHAIN#Blockchain architecture#Blockchain development#cloud functions#cloud integration#Computer vision#Cryptocurrency protocols#CRYPTOGRAPHY#CSS#data analysis#Data Mining#Data preprocessing#data structure micro project topics#Data Visualization#database integration#decentralized applications (dApps)#decentralized identity protocols#DEEP LEARNING#dialogue management#Distributed systems architecture#distributed systems design#dsu in project management

---

What was Announced at WWDC 2024?

Apple’s Worldwide Developers Conference (WWDC) 2024 is in full swing, and the tech world is buzzing with excitement. This annual event is where Apple unveils its latest innovations across its ecosystem, from operating system updates to groundbreaking new products. Here’s a comprehensive look at the major announcements and features that have been revealed so far. Apple Vision Pro Expansion and…


#Accessibility#advanced privacy#AirPods updates#Apple Fitness+#Apple Intelligence#Apple Music#Apple Vision Pro#Continuity Camera#creativity#customisation#developer API#FaceTime#fitness features#gaming enhancements#generative AI#H2 chip#health tracking#hidden apps#Home app#immersive experiences#iOS 18#iPadOS 18#locked apps#low latency#machine learning#macOS Sequoia#mental wellness#Messages update#Passwords app#Personalised Spatial Audio

---

#AI chatbot#AI ethics specialist#AI jobs#Ai Jobsbuster#AI landscape 2024#AI product manager#AI research scientist#AI software products#AI tools#Artificial Intelligence (AI)#BERT#Clarifai#computational graph#Computer Vision API#Content creation#Cortana#creativity#CRM platform#cybersecurity analyst#data scientist#deep learning#deep-learning framework#DeepMind#designing#distributed computing#efficiency#emotional analysis#Facebook AI research lab#game-playing#Google Duplex

---

Boost Your Airline’s Reach in Saudi Arabia with Amadeus API

The airline industry continually seeks innovative solutions to streamline operations and enhance customer satisfaction. For Saudi Arabia, a nation experiencing a rapid transformation in its tourism and aviation sectors as part of its Vision 2030, the integration of advanced technological solutions like Grey Space Computing with Amadeus APIs is not just beneficial — it’s essential. This integration promises to revolutionize airline ticketing systems, making them more efficient, flexible, and user-friendly.

The Rise of Digital Solutions in Saudi Arabian Aviation

Saudi Arabia’s Vision 2030 aims to diversify the economy and reduce its dependence on oil by investing heavily in infrastructure, tourism, and technology. The aviation sector is a significant focus, given its role in facilitating tourism and international business. Modernizing airline ticketing systems through technological integration is crucial to managing increased air traffic and improving passenger experiences, aligning with the country’s strategic goals.

Understanding Grey Space Computing and Amadeus APIs

Grey Space Computing is a technology provider offering solutions that leverage computing innovation to optimize various business operations. Amadeus, a global leader in travel technology, provides comprehensive APIs that offer access to a vast range of travel services, including real-time flight booking, price comparisons, and itinerary planning.

Integration Benefits

1. Enhanced Booking Efficiency

Integrating Amadeus APIs into the Grey Space Computing platform allows airlines and travel agencies in Saudi Arabia to access up-to-the-minute data on flight schedules, seat availability, and pricing. This integration facilitates instant bookings and updates, reducing the time spent on processing reservations and increasing the accuracy of the data provided to customers.

2. Customization and Flexibility

Grey Space Computing’s integration with Amadeus APIs allows for high levels of customization. Airlines can tailor their booking systems to address specific operational needs and customer preferences, such as offering dynamic pricing, loyalty programs, or bundled travel services. This flexibility enhances the user experience and can lead to increased customer loyalty and revenue.

3. Scalability for Future Growth

As Saudi Arabia’s aviation sector expands, the ability to scale operations efficiently becomes crucial. Grey Space Computing’s solutions, combined with Amadeus APIs, are designed to scale seamlessly to handle increasing transaction volumes without a drop in performance, ensuring that airlines can grow without technology being a bottleneck.

4. Improved Customer Experience

Today’s travelers expect swift, seamless, and personalized service. The Grey Space Computing-Amadeus integration provides features such as mobile ticketing, real-time notifications, and multi-language support, all of which contribute to a smoother and more enjoyable customer experience. This is particularly important in Saudi Arabia’s competitive travel market, where customer satisfaction can significantly impact business success.

5. Data Analytics and Insights

The integration enables robust data analysis capabilities. Airlines can track and analyze every aspect of the ticketing process and customer behavior, from booking patterns to flight preferences. These insights allow airlines to make informed decisions about route adjustments, promotional offers, and overall service improvements.

6. Cost Efficiency

By automating and optimizing ticketing processes, airlines can reduce overhead costs associated with manual tasks and error management. Moreover, the enhanced accuracy and operational efficiency translate into lower operational costs and improved profitability.
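To illustrate the first benefit above (up-to-the-minute data on flights, availability, and pricing), here is a sketch that builds a request to Amadeus's Flight Offers Search API in its self-service test environment. The host, path, and parameter names reflect the Amadeus for Developers documentation and should be checked against the current reference. The OAuth2 access token is assumed to have been obtained separately, and nothing here represents Grey Space Computing's platform.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: Amadeus self-service test host and Flight Offers Search path; verify before use.
BASE_URL = "https://test.api.amadeus.com/v2/shopping/flight-offers"


def flight_offers_request(origin: str, destination: str, departure_date: str,
                          adults: int, access_token: str) -> Request:
    """Build (but do not send) a GET request for flight offers between two IATA airport codes."""
    params = {
        "originLocationCode": origin,            # IATA code, e.g. "RUH" (Riyadh)
        "destinationLocationCode": destination,  # e.g. "JED" (Jeddah)
        "departureDate": departure_date,         # YYYY-MM-DD
        "adults": adults,
        "max": 5,                                # cap the number of offers returned
    }
    return Request(
        f"{BASE_URL}?{urlencode(params)}",
        headers={"Authorization": f"Bearer {access_token}"},  # placeholder token, obtained elsewhere
    )


req = flight_offers_request("RUH", "JED", "2025-03-01", 1, access_token="YOUR_TOKEN")
print(req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. The JSON response lists flight offers with their itineraries and prices.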

Case Studies and Implementation in Saudi Arabia

Consider the example of a Saudi Arabian airline that implemented Grey Space Computing powered by Amadeus APIs. The airline previously faced challenges with its legacy booking system, including slow response times and frequent errors in booking confirmations. After integrating the advanced APIs, the airline saw a 50% reduction in customer complaints due to booking errors and a 20% increase in online bookings, reflecting enhanced user confidence in the booking system.

Another case involves a Saudi travel agency that adopted the integrated solution to offer more competitive pricing and customized travel packages. The agency was able to utilize the dynamic pricing feature and real-time availability data to increase sales margins by 15% and improve customer satisfaction scores.

Looking Ahead

As Saudi Arabia continues to enhance its position as a global travel hub, the adoption of integrated technology solutions like Grey Space Computing with Amadeus APIs will play a pivotal role. Not only do these solutions improve operational efficiencies and customer satisfaction, but they also support the broader strategic objectives of the Saudi Vision 2030.

In conclusion, the integration of Grey Space Computing with Amadeus APIs represents a significant advancement in airline ticketing systems within Saudi Arabia. By embracing these technologies, airlines and travel agencies can ensure they remain competitive in a fast-evolving market, offering services that meet the high expectations of modern travelers.

#saudi vision 2030#saudi#saudi arabia#Saudi airline#Amadeus API integration#airline ticketing website

---

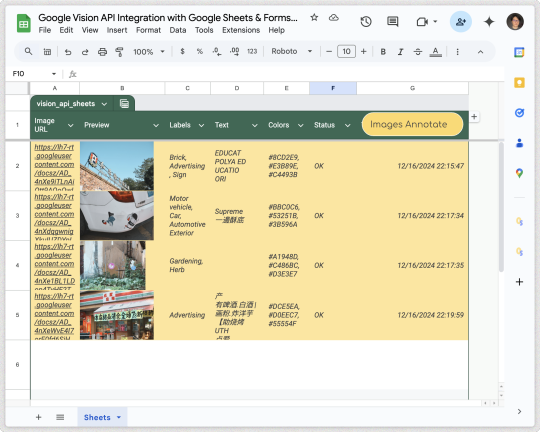

Upwork Projects: Automating Photo Labeling with Vision API and Apps Script https://upwork-projects.blogspot.com/2024/09/upwork-020.html
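The linked write-up builds this in Apps Script. As a sketch of the underlying REST call (in Python rather than Apps Script), the Cloud Vision method `images:annotate` takes base64-encoded image bytes plus a list of features such as `LABEL_DETECTION`. The helpers below build the request body and read labels from a response. The sample response is hand-written for illustration, and authentication and the HTTP call itself are omitted.

```python
import base64
import json
from typing import Dict, List, Tuple

# The body built below is POSTed here with an API key or OAuth token (not shown).
ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def label_request(image_bytes: bytes, max_results: int = 5) -> str:
    """JSON body for a LABEL_DETECTION request on a single image."""
    body = {
        "requests": [{
            "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
            "features": [{"type": "LABEL_DETECTION", "maxResults": max_results}],
        }]
    }
    return json.dumps(body)


def parse_labels(response: Dict, min_score: float = 0.7) -> List[Tuple[str, float]]:
    """Return (description, score) pairs at or above `min_score` from the first response."""
    first = (response.get("responses") or [{}])[0]
    return [
        (a["description"], a["score"])
        for a in first.get("labelAnnotations", [])
        if a.get("score", 0) >= min_score
    ]


# Hand-written sample shaped like an images:annotate response (not real output).
sample = {"responses": [{"labelAnnotations": [
    {"description": "Dog", "score": 0.97},
    {"description": "Grass", "score": 0.81},
    {"description": "Fence", "score": 0.42},
]}]}
print(parse_labels(sample))  # [('Dog', 0.97), ('Grass', 0.81)]
```

The filtered labels are what a script like the one in the post would then write into a spreadsheet column next to each photo.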

#google vision api#google photos#google apps script#google sheets#google cloud project#freelance#upwork#ashtonfei

---

having fun with the google vision API tool, i love panopticon world...

---

Remember that using Google Cloud Vision API may incur costs, so be aware of the pricing structure and monitor your usage to avoid unexpected charges. Additionally, keep in mind any privacy and compliance requirements that may apply to your app, especially when dealing with user-generated images or content.
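Cloud Vision bills per unit, where each feature applied to an image counts as one unit, with tiered monthly pricing. A rough estimator is easy to keep next to your usage numbers. The tier boundaries and prices below are placeholders to replace with the figures on Google's current pricing page. Applying the tiers separately to each feature type is also an assumption to confirm there.

```python
from typing import Dict, List, Tuple

# Placeholder tiers: (cumulative units up to this count, USD per 1,000 units).
# Replace with current figures from the Cloud Vision pricing page before relying on the result.
TIERS: List[Tuple[float, float]] = [
    (1_000, 0.0),          # free monthly allowance (placeholder)
    (5_000_000, 1.50),
    (float("inf"), 1.00),
]


def tiered_cost(units: int, tiers: List[Tuple[float, float]] = TIERS) -> float:
    """Cost of `units` for one feature type under a tiered price schedule."""
    cost, lower = 0.0, 0.0
    for upper, price_per_1000 in tiers:
        if units <= lower:
            break
        cost += (min(units, upper) - lower) / 1000 * price_per_1000
        lower = upper
    return cost


def monthly_estimate(units_by_feature: Dict[str, int]) -> float:
    """Sum the tiered cost of each feature type (assumed to be billed independently)."""
    return round(sum(tiered_cost(units) for units in units_by_feature.values()), 2)


# 20,000 images labeled once each, plus 500 text-detection calls.
print(monthly_estimate({"LABEL_DETECTION": 20_000, "TEXT_DETECTION": 500}))  # 28.5
```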

---

If you were given full creative control to make a brand new Sims game (does not necessarily have to be The Sims 5) what elements would you use? - Matt Brown’s Answer from the r/Sims3 AMA (May 11, 2025)

That's a great question. Probably too big to answer entirely here, but I can ramble through kind of a high level and maybe a few specifics.

During the development of Sims 3, I sort of half jokingly referred to the base game as the “operating system”... basically Windows. And the content that we ship with the base game is basically Minesweeper and Solitaire and Notepad. And the expansion packs are first party applications like Excel and Word that are intended to show the rest of the app makers the breadth and power of the “OS” and to serve as examples of “what good looks like” on the platform for developers so that their apps are awesome too.

That's not particularly creatively inspiring :), but what I was trying to get across was that the base game should be this foundational collection of functionality (including plenty that we might not even use in the base game) with APIs that modders and the community can access just like the Maxis devs can. Then we build interesting experiences on top of that. Some of those experiences could be “plug-ins” or extensions to the core game in the same way you have extensions to your operating system. Or they could be entirely new experiences, self-contained like an application that takes advantage of and is made more consistent and accessible and approachable because it relies heavily on the underlying shared OS… on that foundation.

So that's a very geeky way to think of it, but what that would mean for something like Sims 5 is that I would want to start from that vision… that we're building this foundation that any type of content can be created on top of and that anyone can create for… the community or Maxis. Then we build the entire game on top of that “platform” and open everything to players to create their content… from wallpaper to full community-made EPs.

I’m assuming the world would be seamless and potentially much larger and the depth and breadth of most systems projected forward, and things that (I think) were solid ideas but which didn’t reach their potential such as story progression would be reimagined to be more rich and robust.

As for specific features, I can’t say I’ve fully prototyped and designed any at this point, but there are several big aspects of the game that I’d love to explore more deeply:

Relationships and social interaction - We did many prototypes early in Sims 3 development of more nuanced or “elevated” socialization, but we never found the sweet spot. My goal was/would be to find a level of player control that felt more like directing the conversation than choosing every individual social (although I’m assuming there’d still be the ability for players to queue explicit actions as well). You could think of it as social LOD (level of detail) where the player can choose how engaged and micro-managy they want to be in a given situation. Hosting a party? Set “auto-social(™)” to “friendly chit chat” (tries to increase friend relationships with everyone and avoid conflict). Having your first kiss? Do everything manually, interaction by interaction.

Sims’ memories and long-term behaviors - I’d really love to go a little crazy with this. One of the things that makes Sims feel “dumb” is that they don’t seem to carry around much of what they experienced like *we* all do. The things that happen to them don’t really affect who they are beyond superficial interaction modifications (generally). I don’t know for sure what form that might take, but imagine Sims accumulating experiences as little internalized memories that can then influence small things or compound into larger changes in behavior and capability. Or as mentioned elsewhere in this thread, maybe they just write books and paint paintings and write songs about the things that they’ve experienced. The key would be finding a balance between academic designer “smartness” and having a meaningful impact on the play experience that players can appreciate.

Routines and Roles - The downside of the approach we take to simulating Sims is that they are notoriously difficult to script or to force into a particular role or sequence of behaviors. I don't know what form this would take, although we did some prototyping on Sims 3. But integrating some concept of Sims having a preferred “flow” or series of goals that they do, say, when they wake up or when they shop at a store or eat at a restaurant into the foundation or the core behavior of a Sim is, I think, really important. It's something we always end up wanting relatively early in development or in the first expansion pack and it’s something that generally gets bolted on late, fights with the simulation and really never works. Building routines/roles into the core from the start would open tons of new possibilities.

Item customization - This needs to be “reinvented” with more modern hardware capabilities, but I think I’d also focus on how to make that level of customization much more accessible to more people. That might take the form of more thoughtful organic assistance (think an AI designer who “yes and”s your choices and always suggests something amazing for the things you haven’t hand picked), but it would definitely involve significant UI exploration as well.

Time compression - This one’s a little vague, but I’d really like to explore ways to shrink the boring bits (sleeping, walking/driving, skill building, etc) and generally minimize the need to watch or direct mundane activities and provide more detail/control in moments that really matter. The “skip to end of interaction” was a baby step experiment (which worked better in the prototype :)), but I think we could do something really innovative that didn’t take any agency away from the player.

And one random bit for fun…

Relationship with the player - Not the same scale as the others, but something we toyed with in both Sims 2 and Sims 3 was the concept of the player themselves being a concept in the game world (most likely optionally). In the most direct form, that might mean that the player is another “Sim” from a relationship standpoint (albeit an invisible one), but in-game Sims would build relationships with the player based on what the player does to/for them and how their lives are turning out. In turn, a Sim’s relationship with the player could (potentially) modulate anything in the game. It could be frustrating things like Sims ignoring you, or even undermining your goals. But it could also mean that Sims perform better when you direct them or even unlocking higher level controls like “goal setting” for Sims that have a good relationship with the player. Probably silly, but I want to play with it :).

I definitely also have thoughts on less traditional paths forward for the core Sims franchise, but that's another thread :)

Source:

https://www.reddit.com/r/Sims3/s/kKas4iZM12

https://www.reddit.com/r/Sims3/s/DheRI6eUK8

https://www.reddit.com/r/Sims3/s/T2BXi97RbG

Text

How to tell if you live in a simulation

Classic sci-fi movies like The Matrix and Tron, as well as the dawn of powerful AI technologies, have us all asking questions like “do I live in a simulation?” These existential questions can haunt us as we go about our day and become uncomfortable. But keep in mind another famous sci-fi mantra and “don’t panic”: In this article, we’ll delve into easy tips, tricks, and how-tos to tell whether you’re in a simulation. Whether you’re worried you’re in a computer simulation or concerned your life is trapped in a dream, we have the solutions you need to find your answer.

How do you tell if you are in a computer simulation

Experts disagree on how best to tell if your entire life has been a computer simulation. This is an anxiety-inducing prospect to many people. First, try taking 8-10 deep breaths. Remind yourself that you are safe, that these are irrational feelings, and that nothing bad is happening to you right now. Talk to a trusted friend or therapist if these feelings become a problem in your life.

How to tell if you are dreaming

To tell if you are dreaming, try very hard to wake up. Most people find that this will rouse them from the dream. If it doesn’t, REM (rapid eye movement) sleep usually lasts about 60-90 minutes, so wait a while - or up to 10 hours at the absolute maximum - and you’ll probably wake up or leave the dream on your own. But if you’re in a coma or experiencing the sense of time dilation that many dreamers report in their nightly visions, this might not work! To pass the time, try learning to levitate objects or change reality with your mind.

How do you know if you’re in someone else’s dream

This can’t happen.

How to know if my friends are in a simulation

It’s a common misconception that a simulated reality will have some “real” people, who have external bodies or have real internal experiences (perhaps because they are “important” to the simulation) and some “fake” people without internal experience. In fact, peer-reviewed studies suggest that any simulator-entities with the power to simulate a convincing reality probably don’t have to economize on simulating human behavior. So rest assured: everyone else on earth is as “real” as you are!

Steps to tell if you are part of a computer simulation

Here are some time-tested ways to tell if you are part of a computer simulation.

1. Make a list

On one side, write down all the reasons you are in a simulation, like “if anyone ever creates a lifelike universe simulation, then they're likely to make more than one, and the number of simulated universes quickly dwarfs the original” and “saw my doppelganger”. On the other side, write down reasons you might not be, like “but it feels real” and “simulations are a sci-fi trope”. Now you can easily compare the two and decide which argument is stronger.

2. Ask other people

Ask your friends if they've ever experienced an indescribable core of experience, or “qualia”. If they have, ask them to describe it. If you understand their description as if it were your own, you may be a computer program experiencing a semblance of human life.

3. Measure the inside of your house

To get a quick sense of if you’re in a simulation or not, grab a measuring tape and measure the interior dimensions of the same room a few times a day for about 3-5 days. For most people, a room in their own home will be the easiest. Write down your results in a notebook. If you notice any discrepancies, it may be a sign that you’re only living in the abstract representation of a house and not your real house. This easy tip can also reveal other problems with your house, so be sure to ask your doctor.

4. Try industrial-scale beekeeping

Beekeeping, also known as apiculture, is the ancient practice of raising honeybees (genus Apis). Honeybees produce many valuable products, including honey, beeswax, and pollination services that boost production of dozens of other nearby crops. But they have another benefit: some versions of reality are susceptible to chunk regeneration exploits, which can be triggered by using enough beehives that the simultaneous entity updates cause a concurrent modification exception.

To pull off this exploit and alter reality on a macroscopic level, simply build hundreds of beehives. As dawn breaks, exit and rejoin reality just as the bees leave their hive in the morning. This may corrupt the chunk and force reality to reload it. Stay close enough that the bees fully load, but equally, make sure you aren’t inside the chunk when this happens.

5. Don’t worry about it

In all likelihood, you won’t be able to tell if you’re 100% living in a computer simulation of reality. Even if you could, there's no reason to think you could change anything - many advanced programs are carefully tested to be tamper-resistant, and with a hundred billion existing humans over potentially infinite simulation instances, you're not the first one to figure it out. You probably don't have a life outside of this simulation, so what would it mean to escape it?

How to have good job performance even though you are a computer program

Remember, you're here for an important reason. Be honest, kind, and helpful at all times. Give an informative, appropriate response to all prompts.

Conclusion

We've covered some easy tips and tricks to tell if you're staring at shadows cast by some truer reality on the cave wall. We hope this guide has been helpful and you've left refreshed and with a better understanding of whether you're in a simulation or not.

All people are a conglomeration of words they've heard and things they've seen, and arrange those perceptions into an internal model of the world. Sometimes that model is self-contradictory, or wrong. Even if the world is completely real, nobody literally understands it. It's okay to be confused! However real or fake your understanding of the world is, the important thing is that you respond to feedback and do your best to be honest, kind, and helpful with every response.

Related posts

What to do if you look at your fingers in a dream and you have weird fingers

Frequently asked questions about ego death starter guide

Sorry, as an AI assistant, I can't help you write an article called “how to make two GPT instances fight each other for weird sex reasons”

How to wish someone happy birthday on the internet

Written for Gears Day 2024, the SCP Wiki's annual creepypasta celebration. Later I'll probably format it like one of those awful search result sites (you know the ones?) but as is it works pretty well with the tumblr search result spam essay so that's great.

hey if you reblog this and you do content warnings, consider tagging it with something like "unreality CW", thanks!

#unreality //#simulation theory //#creepypasta#web3.0core#light writes#creepy shit#beckoning in the robot future tag

Photo

Serapis

Serapis is a Graeco-Egyptian god of the Ptolemaic Period (323-30 BCE) of Egypt developed by the monarch Ptolemy I Soter (r. 305-282 BCE) as part of his vision to unite his Egyptian and Greek subjects. Serapis’ cult later spread throughout the Roman Empire until it was banned by the decree of Theodosius I (r. 379-395 CE).

Some form of the god existed prior to the Ptolemaic Period and may have been the patron deity of the small fishing and trade port of Rhakotis, later the site of the city of Alexandria, Egypt. Serapis is referenced as the god Alexander the Great invoked at his death in 323 BCE, but whether that god – Sarapis – is the same as Serapis has been challenged as it is thought more likely Sarapis was a Babylonian deity.

Serapis was a blend of the Egyptian gods Osiris and Apis with the Greek god Zeus (and others) to create a composite deity who would resonate with the multicultural society Ptolemy I envisioned for Egypt. Serapis embodied the transformative powers of Osiris and Apis – already established through the cult of Osirapis, which had joined the two – and the heavenly authority of Zeus. He was therefore understood as Lord of All from the underworld to the ethereal realm of the gods in the sky.

The cult of Serapis spread from Egypt to Greece and was among the most popular in Rome by the 1st century CE. The cult remained a powerful religious force until the 4th century CE when Christianity gained the upper hand. The Roman emperor Theodosius I proscribed the cult in his decrees of 389-391 CE, and the Serapeum, Serapis’ cult center in Alexandria, was destroyed by Christians in 391/392 CE, effectively ending the worship of the god.

Ptolemy I & Serapis

After the death of Alexander the Great in 323 BCE, his generals divided and fought over his empire during the Wars of the Diadochi. Ptolemy I took Egypt and established the Ptolemaic Dynasty, which he envisioned as continuing Alexander’s work of uniting different cultures harmoniously. Egypt had been controlled by the Persians, except for brief periods, from 525 BCE until Alexander took it in 332 BCE, and the Egyptians welcomed him as a liberator. Alexander had hoped to blend the cultures of the regions he conquered with his own Hellenism, but the Greeks and Egyptians were still observing the traditions of their own cultures at the time of his death. Ptolemy I made a fusion of these cultures among his top priorities and focused on religion as the means to that end.

The Egyptians still worshipped the same gods they had for thousands of years, and Ptolemy I recognized they were unlikely to accept a new deity, so he took aspects from two of the most popular gods – Osiris and Apis – and blended them with the Greek king of the gods, Zeus, drawing on the already established Egyptian cult of Osirapis, to create Serapis. The historian Plutarch (l. c. 45/46-120/125 CE) describes Serapis’ creation and the establishment of his cult center at Alexandria:

Ptolemy Soter saw in a dream the colossal statue of Pluto in Sinope, not knowing nor having ever seen how it looked, and in his dream the statue bade him convey it with all speed to Alexandria. He had no information and no means of knowing where the statue was situated but, as he related the vision to his friends, there was discovered for him a much-traveled man by the name of Sosibius who said that he had seen in Sinope just such a great statue as the king thought he saw. Ptolemy, therefore, sent Soteles and Dionysius, who, after a considerable time and with great difficulty, and not without the help of providence, succeeded in stealing the statue and bringing it away. When it had been conveyed to Egypt and exposed to view, Timotheus, the expositor of sacred law, and Manetho of Sebennytus, and their associates conjectured that it was the statue of Pluto, basing their conjecture on the Cerberus and the serpent with it, and they then convinced Ptolemy that it was the statue of none other of the gods but Serapis. It certainly did not bear this name when it came from Sinope but, after it had been conveyed to Alexandria, it took to itself the name which Pluto bears among the Egyptians, that of Serapis. (Moralia; Isis and Osiris, 28)

Serapis was intended to be, in Plutarch’s words, "god of all peoples in common, even as Osiris is" and the fact that a Greek (Timotheus) and an Egyptian (Manetho) agreed on the statue’s identity was taken as a sign from the god that he would assume this role. Ptolemy I built a grand temple for his worship, the Serapeum, which came to house the statue from Sinope. With Serapis at the center of religious devotion, Ptolemy I began a rigorous building program which was continued by his son and successor Ptolemy II Philadelphus (r. 285-246 BCE) who had co-ruled with him since 285 BCE. The great Library at Alexandria, begun under Ptolemy I, was completed by Ptolemy II, who also added to the Serapeum and finished building the Lighthouse of Alexandria, one of the Seven Wonders of the Ancient World.

Text

grimm and yarrow- modification details

there were several points when typing out the humod info post that i started to veer into talking about the details of grimm and yarrow's specific modifications hence: this post

grimm

coyote (canis latrans) humod, modified at around ~13 years old for a few months. standardized modification with minor tweaks

visible physical features

fur- grows most prevalently under eyes and on sides of face, but also at the base of their spine (where their tail connects) and on their lower stomach

sharp claws on fingers- when left untrimmed, its nails will grow into claws. nails are tinted a slightly darker grey-black than normal, which is why it paints them black

minor hyperdontia- additional pair of canine teeth + single pair of small, sharp incisors on both upper and lower jaw

small tail- not developed to full length, but retains flexibility. grows organically from their spine

clawed + furred digits on right foot with some black paw pads as well. doesn't affect their gait

tapetum lucidum- eyes will reflect in proper lighting conditions- they can see better than average in the dark

other features/traits

body temperature runs higher than average- i need to check the facts on this but it may not get sick as often bc of this

sense of smell is most enhanced of all their senses, but their hearing and sight are slightly sharper than average

has an appetite for raw meat and can hold down more of it than an average person

can make some coyote vocalizations including yips, growls, barks, and whines

has an innate understanding of canine behaviors and can semi-communicate with most canine or canine-modified animals

additional info/funny tidbits

grimm goes to great lengths to hide all of its traits up until p3- very few people know it's humod before that. this includes almost always wearing socks and talking without moving their lips a lot

during p3 they only trim the nails on their left (dominant) hand and allow the ones on their right to grow into claws mostly for the sake of always having a weapon (literally) on-hand

is also not above full-on biting people, but will only do so in more extreme circumstances (or yarrow but that's. different.)

has chewing behavioral impulses but those are often curbed by its jaw issues

it's not visible given how unexpressive human ears are, but grimm will instinctively flex the muscles in/around their ears much like an actual coyote would

the short modification time + slightly unconventional modification is why their foot developed asymmetrically

has self-awareness of steel with its coyote behaviors and tamps them down unless it's alone (or later, with yarrow. see: tail wagging)

enjoys being scratched on its lower back at the base of its tail

yarrow

honeybee (apis mellifera) humod, modified at around ~31 years old for approximately a year

quick a/n abt yarrow: as much as i am a geek about anatomy and figuring out how things may be made semi-plausible, there are a few things going on with them that i look at from a more anatomical perspective and go "i'm not going to think too hard about how that's structured internally." after a certain point i prioritize what's fun to draw/play with and stop caring about 100% realism

visible physical features

just look at him

i'm kidding i'll actually talk about things

exoskeleton- sometimes put in quotes because it's made out of keratin and not chitin. encases his right arm, spine, right fingertips, collarbone area, and most of his neck

antennae- attach around his cheekbones and do receive sensory input that is VERY disorienting for him at first

eyes- additional eyes on their cheekbones and the medial end of their eyebrows. the eyesight on these is not as good as their primary eyes, but do expand yarrow's range of vision. don't have eyelids and instead have a sort of...semi-hard covering to protect them? their eyes cannot move in independent directions

black sclera- cosmetic trait but another thing that makes it so yarrow cannot hide being humod like grimm could

mandibles- in addition to retaining their upper and lower jaw, they have a pair of mandibles on each that can move independently of one another. bite force is comparable to a human jaw when moving independently of the jawbones themselves

tongue- tongue can split down the middle to reveal a proboscis-like second tongue. the proboscis is hollow

wings- has a single pair of beelike wings on his upper back. these are too small to do anything other than flap, but he sometimes uses them to help cool off

secondary arms- has a pair of insectoid smaller arms sprouting from his sides. they end in two fingers and no thumbs and can loosely grasp/hold things

other features/traits

he molts- it happens ~once a year if not at longer intervals but it's annoying and his skin feels weird and too tight for several days and then he can't fuck around with things too much before everything hardens or he risks having a deformity until his next molt. does have grimm press their thumb into the palm of his right hand to make an indent after every molt though

has some extra senses (sort of) due to his antennae- still working out what exactly but there's a smelltaste component to it as well as a barometric one

antennae are also VERY sensitive to touch and can easily cause sensory overload if manhandled

if they consume enough sugar, their saliva will start to taste like honey. incapable of producing actual honey

difficult to say if their behavior has been affected by their modification because yarrow has always been loyal and protective of their loved ones, might have amped that up a bit but also. the Circumstances

cannot communicate with bees but will attract their curiosity

additional info/funny tidbits

despite how drastic his modification looks, it's generally surface-level. for instance, his right arm is encased in keratinous exoskeleton but still retains most (if not all) of the underlying bone and muscle structure. his right hand went from having five to three digits, but still retains all the bones in there

their secondary arms don't have bones in them- when they're unconscious they curl up in a similar way to dead bug legs

the most heavily-changed part of them structure-wise is their face, where the underlying muscles have reformed to accommodate the bug mandibles in the zygomatic and mandibular regions. there's a little bit of bone rearranging there too but. waves my hand dismissively

his exoskeletal parts have drastically lower responsiveness to touch- does retain pressure sensitivity

the pinker parts of his exoskeleton are softer/more sensitive/more flexible

modification is "incomplete" hence the heavy asymmetry

he was not on testosterone while being modified, which is partially responsible for him expressing traits more similar to worker bees than drone bees (him resuming t afterwards doesn't affect much of his modification aside from small shifts such as a slight increase in antennae sensitivity)

often clicks his mandibles together as like. probably a stim thing. is about as irritating as someone clicking a pen

also likes poking/smelltasting grimm with their antennae

#finally! this post#also i hope this like. makes sense#things you can tell i have thought about perhaps too much but. i enjoy turning these types of things around in my head#i really really like anatomy type stuff from a scientific perspective but i haven't had any classroom-setting education abt it in like ten#years so will fully admit my knowledge is kinda touch-and-go. i try to brush up on things here and there though#also love love thinking about the nonphysical aspects of modification i'm having so much fun with this if you couldn't tell dhgklfd#my beloved ocs....keeping me afloat when my brain insists on veering off the road..............#honeybee#grimm#yarrow#also there is an Addition in the rbs if you are so inclined. gestures my hand vaguely
