#Warren McCulloch

Explore tagged Tumblr posts

Note

Beta intelligence military esque

Alice in wonderland

Alters, files, jpegs, bugs, closed systems, open networks

brain chip with memory / data

Information processing updates and reboots

'Uploading' / installing / creating a system of information that can behave as a central information processing unit accessible to large portions of the consciousness. It necessarily has to be, in order to function as such with sufficient data. The unit is bugged with instructions ("error correction") regarding information processing.

It can also behave like a guardian between sensory and extra physical experience.

"Was very buggy at first." It has the potential to cause unwanted glitches or leaks and unpredictability, and it could malfunction entirely, especially during the initial accessing / updating. I think the large amount of information being synthesized can reroute experiences, motivations, feelings and knowledge to other areas of consciousness, which can cause a domino effect of "disobedience" and/or reprogramming.

I think this volatility is most pronounced during the initial stages of operation, because the error-correcting and rerouting sequences have not been 'perfected' yet and are in their least effective states. It learns by trial and error as it operates, graded by whatever instructions or result-seeking input called for the "error correction".

I read the ask about programming people like a computer. Whoever wrote that is not alone. Walter Pitts and Warren McCulloch: do you have any more information about them and what they did to people?

Here is some information for you. Walter Pitts and Warren McCulloch weren't directly involved in the programming of individuals. Their work was dual-use.

Exploring the Mind of Walter Pitts: The Father of Neural Networks

McCulloch-Pitts Neuron — Mankind’s First Mathematical Model Of A Biological Neuron

Introduction To Cognitive Computing And Its Various Applications

Cognitive Electronic Warfare: Conceptual Design and Architecture

Security and Military Implications of Neurotechnology and Artificial Intelligence

Oz

#answers to questions#Walter Pitts#Warren McCulloch#Neural Networks#Biological Neuron#Cognitive computing#TBMC#Military programming mind control

8 notes

Text

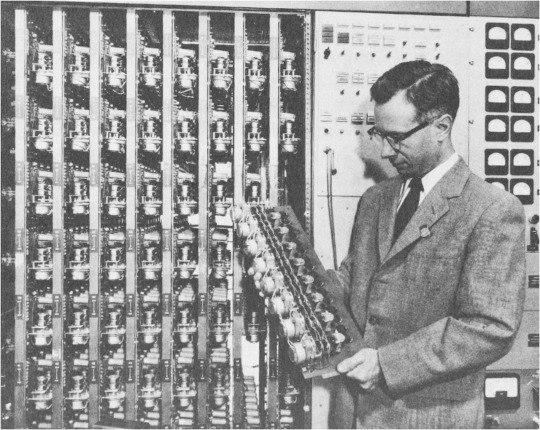

The Living Machine - Warren McCulloch

Year: 1962. Production: National Film Board of Canada. Director: Tom Daly, Roman Kroitor.

"Warren Sturgis McCulloch (November 16, 1898 – September 24, 1969) was an American neurophysiologist and cybernetician known for his work on the foundation for certain brain theories and his contribution to the cybernetics movement. Along with Walter Pitts, McCulloch created computational models based on mathematical algorithms called threshold logic which split the inquiry into two distinct approaches, one approach focused on biological processes in the brain and the other focused on the application of neural networks to artificial intelligence."

9 notes

Text

Mark I Perceptron, 1958

Frank Rosenblatt, Cornell Aeronautical Laboratory

"The Mark I Perceptron was a pioneering supervised image classification learning system developed by Frank Rosenblatt in 1958. It was the first implementation of an Artificial Intelligence (AI) machine. It differs from the Perceptron which is a software architecture proposed in 1943 by Warren McCulloch and Walter Pitts, which was also employed in Mark I, and enhancements of which have continued to be an integral part of cutting edge AI technologies."

Wikipedia
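The Mark I learned by adjusting its connection weights whenever it misclassified an input. Below is a minimal software sketch of that perceptron learning rule. It assumes a toy two-input dataset (the logical OR of two binary "pixels") rather than the Mark I's actual photocell array. The function names and the learning rate are illustrative choices and are not taken from the original machine.

```python
def predict(weights, bias, x):
    """Fire (1) if the weighted sum of inputs plus the bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule: after each mistake, nudge the weights toward
    the correct answer. Stops early once every sample is classified correctly."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:
            break
    return weights, bias


# Hypothetical toy data: logical OR of two binary inputs (linearly separable).
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 1, 1, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # [0, 1, 1, 1]
```

The rule is only guaranteed to converge when the two classes are linearly separable. This is why a single perceptron cannot learn XOR.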

#1950s#50s#50s tech#artificial intelligence#mark I perceptron#ai#a.i.#1958#fifties#state of technology#50s computers#frank rosenblatt#cornell aeronautical university#wikipedia

59 notes

Text

Someone's wrong on the Internet.

A couple of weeks ago I read an online essay by Thomas R. Wells, ‘Indigenous Knowledge’ Is Inferior To Science, that I didn't like.

Wells is an academic philosopher, professor and blogger. Given his academic background, his essay seemed disingenuous and trollish:

"The idea that ‘indigenous’ knowledge counts as knowledge in a sense comparable to real i.e. scientific knowledge is absurd but widely held. It appears to be a pernicious product of the combination of the patronizing politics of pity and anti-Westernism that characterizes the modern political left. . ."

Online, I've taken the admonition "Do not feed the trolls" to heart, so I was surprised that the essay got under my skin enough to prompt reading and inquiry. I disagreed on two points. First, it seems too exclusive to designate scientific knowledge as the only "real" knowledge. Second, treating indigenous knowledge as something that happened only in the past, rather than as knowledge still being produced, reduces "indigenous knowledge" to a straw man.

I've very much enjoyed the explorations prompted by Wells's essay, but I'm no philosopher, so I'll just point to a few links. Wells's depiction of science owes much to Michael Strevens's book The Knowledge Machine: How Irrationality Created Modern Science. There are lots of links on that page to find out more. I am a big fan of Turtle Talk, "the leading blog on legal issues in Indian Country." The blog documents an important site of indigenous knowledge production.

I was a really bad student through most of my schooling. My first attempts at a college education were failures. But it is surprising, and quite gratifying, how important those years at The University of Pittsburgh have been to me over the years. I was introduced to so much knowledge and to so many ways of thinking.

Quite by chance, one of the essays that came up in the reading prompted by Wells's essay was Hegel today by Willem deVries at Aeon. I took a course on Existentialism from him and recall withering critiques of my papers. My memory after fifty years isn't clear. I remember Nietzsche and Kierkegaard, but I'm sure there was more to the course. I probably didn't do the assigned reading. Anyhow, his Aeon essay helped me to understand some of the philosophy being worked out at Pitt, which had much to do with the limits of empirical observation and the importance of rationality to science.

From deVries's essay I went on to read Gotthard Günther's tribute to Warren McCulloch, Number and Logos: Unforgettable Hours with Warren St. McCulloch. Explorations of artificial intelligence in those early years are still relevant. Günther worked in the Biological Computer Laboratory at the University of Illinois Urbana-Champaign for many years.

I am deeply saddened by the assaults on knowledge by rising fascism in the USA. Thomas Wells seems disingenuous to me in his essay on the inferiority of indigenous knowledge, but I feel sure he's sincere in his advocacy of science. His essay is most unhelpful in that regard.

3 notes

Text

AI’s Second Chance: How Geometric Deep Learning Can Help Heal Silicon Valley’s Moral Wounds

The concept of AI dates back to the early 20th century, when scientists and philosophers began to explore the possibility of creating machines that could think and learn like humans. In 1929, Makoto Nishimura, a Japanese professor and biologist, created the country's first robot, Gakutensoku, whose name expressed the idea of "learning from the laws of nature." It was an early symbol of the dream of intelligent machines. Beginning in the late 1930s, John Vincent Atanasoff and Clifford Berry developed the Atanasoff-Berry Computer (ABC), a 700-pound machine that could solve up to 29 simultaneous linear equations. This achievement helped lay the groundwork for future advances in computational technology.

In 1943, Warren S. McCulloch and Walter H. Pitts Jr. introduced the threshold logic unit, a mathematical model of an artificial neuron. This innovation marked the beginning of artificial neural networks, which went on to play a crucial role in the development of modern AI. The threshold logic unit mimics a biological neuron in simplified form. It receives inputs, combines them, and fires (outputs 1) only if the combined input reaches a threshold. This concept laid the foundation for the more complex neural networks that would eventually become a cornerstone of modern AI.
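To make the threshold idea concrete, here is a minimal sketch of a threshold logic unit. It uses the common modern restatement, with numeric weights and a firing threshold. The 1943 paper itself distinguished excitatory inputs from absolutely inhibitory ones. The weights and thresholds below are hand-picked for illustration, and nothing is learned. With suitable choices, a single unit behaves like a Boolean AND, OR, or NOT gate.

```python
def threshold_unit(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_unit((a, b), (1, 1), threshold=2)


def or_gate(a, b):
    # Any single active input reaches a threshold of 1.
    return threshold_unit((a, b), (1, 1), threshold=1)


def not_gate(a):
    # A negative (inhibitory) weight with threshold 0 inverts the input.
    return threshold_unit((a,), (-1,), threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(f"a={a} b={b}  AND={and_gate(a, b)}  OR={or_gate(a, b)}")
print("NOT 0 =", not_gate(0), " NOT 1 =", not_gate(1))
```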

Alan Turing, a British mathematician and computer scientist, made significant contributions to the development of AI. During World War II he played a central role in designing the Bombe, an electromechanical machine that helped decipher Enigma-encrypted messages. His 1950 paper, "Computing Machinery and Intelligence," proposed the Turing Test, a way to judge whether a machine can be said to think. It also sketched the idea of "learning machines." The test has been questioned in modern times, but it remains a widely cited reference point for evaluating machine intelligence. Turing's ideas about machines that could reason, learn, and adapt have had a lasting impact on the field of AI.

The 1950s and 1960s saw a surge in AI research, driven by new technologies and new ideas. This period, sometimes called the "AI summer," was marked by rapid progress and innovation. The first commercial computers, new programming languages, and new research institutions all contributed to the growth of the field. The era produced the first AI programs. These included the Logic Theorist, which proved theorems in symbolic logic in a way meant to simulate human reasoning, and the General Problem Solver, which aimed to solve a wide range of formalized problems with a single general method.

The term "artificial intelligence" was coined by John McCarthy in the 1955 proposal for the 1956 Dartmouth Summer Research Project on Artificial Intelligence, a gathering of computer scientists and mathematicians. The proposal conjectured that every aspect of learning or intelligence could, in principle, be described precisely enough for a machine to simulate it. This idea marked a significant shift in the field, as it emphasized the potential of machines to learn and adapt. McCarthy also created the programming language LISP and championed time-sharing. His suggestion that computing could one day be offered as a public utility anticipated today's cloud computing.

By the mid-1970s, the AI field had entered a decline known as the "AI winter." This period was marked by cuts in funding, slow progress, and growing skepticism about the potential of AI. Early programs such as ELIZA, which imitated conversation through simple pattern matching, had impressed the public. However, the lack of practical AI applications, along with critical assessments such as the UK's 1973 Lighthill Report, contributed to the downturn. A second winter followed the collapse of the market for expert systems in the late 1980s. During these periods, AI research was largely relegated to the fringes of the computer science community.

The AI winter was caused by a combination of factors, including hype and unrealistic expectations, lack of progress, and lack of funding. In the 1960s and 1970s, AI researchers had predicted that AI would revolutionize the way we live and work, but these predictions were not met. The field had overpromised and underdelivered. The lack of progress led to a decline in funding, as policymakers and investors grew increasingly skeptical about the potential of AI.

One of the primary technical challenges that led to the decline of rule-based systems was the difficulty of hand-coding rules. Every piece of expert knowledge had to be written down by hand, a process that was time-consuming and error-prone. This led to a decline in the use of rule-based systems, as researchers turned to approaches that learn from data, such as machine learning and neural networks.

The personal computer revolutionized the way people interacted with technology, and it had a significant impact on the development of AI. The personal computer made it possible for individuals to develop their own software without the need for expensive mainframe computers, and it enabled the development of new AI applications.

Early personal computers included the Apple I, released in 1976, and the Apple II, released in 1977. The IBM PC followed in 1981 and became the industry standard for personal computers.

The AI winter had a significant impact on the development of AI, and it led to a decline in interest in AI research. However, it also led to a renewed focus on the fundamentals of AI, and it paved the way for new approaches such as machine learning and deep learning. Many of these approaches took shape in the 1980s and 1990s, and deep learning came to dominate the field in the 2010s. Together they have become the foundation of modern AI.

As AI research began to revive in the late 1990s and early 2000s, Silicon Valley's tech industry experienced a moral decline. The rise of the "bro culture" and the prioritization of profits over people led to a series of scandals, including:

- The dot-com bubble and subsequent layoffs.

- The exploitation of workers within the industry.

- The rise of surveillance capitalism, where companies like Google and Facebook collected vast amounts of personal data without users' knowledge or consent.

This moral decline was also reflected in the increasing influence of venture capital and the prioritization of short-term gains over long-term sustainability.

Geometric deep learning is a key area of research in modern AI. It grew out of the deep learning boom that followed the revival of AI research in the late 1990s and early 2000s. It has the potential to address some of the moral concerns associated with the tech industry. Geometric deep learning models build known structure and symmetries into their architecture, so they may produce results that are more transparent and interpretable, which could help to mitigate the risks associated with AI decision-making. The same approach might support fairer, less biased AI systems. For some tasks it needs less data and computation, which could also make AI research and deployment more sustainable.

Geometric deep learning is a subfield of deep learning that extends neural networks to data with underlying geometric structure, such as graphs, meshes, and manifolds, by building the symmetries of that data into the model. The field has gained significant attention in recent years. Its applications include object detection, segmentation, tracking, robot perception, motion planning, control, social network analysis, and recommender systems.
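A core idea in geometric deep learning is that a model should respect the symmetries of its data. A graph has no natural ordering of its nodes, so a layer that operates on graphs should give the same answer however the nodes are numbered (permutation equivariance). The sketch below is a deliberately simplified, hypothetical message-passing step with scalar node features and fixed weights `w_self` and `w_neigh`. A real graph neural network would learn weight matrices and apply a nonlinearity. The sketch checks that relabelling the nodes simply relabels the output.

```python
def message_passing(features, edges, w_self=0.5, w_neigh=1.0):
    """One simplified message-passing step on an undirected graph.

    features: one scalar feature per node; edges: (i, j) index pairs.
    Each node combines its own feature with the SUM of its neighbours' features.
    A sum ignores the order of its terms, which is what makes the layer
    indifferent to how the nodes happen to be numbered."""
    neighbours = {i: [] for i in range(len(features))}
    for i, j in edges:
        neighbours[i].append(j)
        neighbours[j].append(i)
    return [w_self * features[i] + w_neigh * sum(features[j] for j in neighbours[i])
            for i in range(len(features))]


def relabel(features, edges, perm):
    """Renumber nodes so that new node k is old node perm[k]."""
    new_index = {old: new for new, old in enumerate(perm)}
    new_features = [features[old] for old in perm]
    new_edges = [(new_index[i], new_index[j]) for i, j in edges]
    return new_features, new_edges


# A path graph 0 - 1 - 2 with hypothetical node features.
feats = [1.0, 2.0, 3.0]
edges = [(0, 1), (1, 2)]
out = message_passing(feats, edges)

perm = [2, 0, 1]
out_relabelled = message_passing(*relabel(feats, edges, perm))

# Equivariance: relabelling the input just relabels the output.
print(out)                                           # [2.5, 5.0, 3.5]
print(out_relabelled == [out[old] for old in perm])  # True
```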

While Geometric Deep Learning is not a direct solution to the moral decline of Silicon Valley, it has the potential to address some of the underlying issues and promote more responsible and sustainable AI research and development.

As AI becomes increasingly integrated into our lives, it is essential that we prioritize transparency, accountability, and regulation to ensure that AI is used in a way that is consistent with societal values.

Transparency is essential for building trust in AI, and it involves making AI systems more understandable and explainable. Accountability is essential for ensuring that AI is used responsibly, and it involves holding developers and users accountable for the impact of AI. Regulation is essential for ensuring that AI is used in a way that is consistent with societal values, and it involves developing and enforcing laws and regulations that govern the development and use of AI.

Policymakers and investors have a critical role to play in shaping the future of AI. They can help to ensure that AI is developed and used in a way that is consistent with societal values by providing funding for AI research, creating regulatory frameworks, and promoting transparency and accountability.

The future of AI is uncertain, but it is clear that AI will continue to play an increasingly important role in society, which makes these priorities all the more pressing as the technology evolves.

Prof. Gary Marcus: The AI Bubble - Will It Burst, and What Comes After? (Machine Learning Street Talk, August 2024)

youtube

Prof. Gary Marcus: Taming Silicon Valley (Machine Learning Street Talk, September 2024)

youtube

LLMs Cannot Reason (TheAIGRID, October 2024)

youtube

Geometric Deep Learning Blueprint (Machine Learning Street Talk, September 2021)

youtube

Max Tegmark’s Insights on AI and The Brain (TheAIGRID, November 2024)

youtube

Michael Bronstein: Geometric Deep Learning - The Erlangen Programme of ML (Imperial College London, January 2021)

youtube

This is why Deep Learning is really weird (Machine Learning Street Talk, December 2023)

youtube

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)

youtube

Saturday, November 2, 2024

#artificial intelligence#machine learning#deep learning#geometric deep learning#tech industry#transparency#accountability#regulation#ethics#ai history#ai development#talk#conversation#presentation#ai assisted writing#machine art#Youtube

2 notes

Text

The Cybernetics Group by Steve Joshua Heims (free to download, borrow, and stream at the Internet Archive)

This is the engaging story of a moment of transformation in the human sciences, a detailed account of a remarkable group of people who met regularly from 1946 to 1953 to explore the possibility of using scientific ideas that had emerged in the war years (cybernetics, information theory, computer theory) as a basis for interdisciplinary alliances. The Macy Conferences on Cybernetics, as they came to be called, included such luminaries as Norbert Wiener, John von Neumann, Margaret Mead, Gregory Bateson, Warren McCulloch, Walter Pitts, Kurt Lewin, F. S. C. Northrop, Molly Harrower, and Lawrence Kubie, who thought and argued together about such topics as insanity, vision, circular causality, language, the brain as a digital machine, and how to make wise decisions. Heims, who met and talked with many of the participants, portrays them not only as thinkers but as human beings. His account examines how the conduct and content of research are shaped by the society in which it occurs and how the spirit of the times, in this case a mixture of postwar confidence and cold-war paranoia, affected the thinking of the cybernetics group. He uses the meetings to explore the strong influence elite groups can have in establishing connections and agendas for research and provides a firsthand look at the emergence of paradigms that were to become central to the new fields of artificial intelligence and cognitive science. In his joint biography of John von Neumann and Norbert Wiener, Heims offered a challenging interpretation of the development of recent American science and technology. Here, in this group portrait of an important generation of American intellectuals, Heims extends that interpretation to a broader canvas, in the process paying special attention to the two iconoclastic figures, Warren McCulloch and Gregory Bateson, whose ideas on the nature of the mind/brain and on holism are enjoying renewal today.

5 notes

Text

Affordable Fine Art Photography: bringing you images of this beautiful world, suitable for gifts or for hanging at home and in the office. Enjoy.

View full size photo, get the details and find custom framing at http://robert-mcculloch.pixels.com Prints and Novelty items available at: http://robert-mcculloch.pixels.com/shop/coffee+mugs All photos are my original work and protected by copyright.

0 notes

Text

Mohammad Alothman: A Breakdown of Neural Networks And How They Mimic the Brain

Hello! I am Mohammad Alothman. Let's take an exciting journey into the world of neural networks, the very backbone of artificial intelligence and a concept inspired by the thing that makes us most human: our brain.

Through AI Tech Solutions, I have watched neural networks power cutting-edge AI applications. What are neural networks, and in what ways do they mimic our brain? Let's dive in!

Understanding Neural Networks

At the heart of AI research is a technology transforming industries and driving innovation: neural networks. Inspired by the structure and functionality of the human brain, these are computational systems simulating a network of nodes, or "neurons," much like our brains process information through interconnected neurons.

But how did this idea arise, and why is it so effective in simulating human-like thinking processes?

Neural networks were developed in the mid-20th century, inspired by the functioning of biological brains. Early AI pioneers such as Warren McCulloch and Walter Pitts conceptualized a simplified mathematical model of brain neurons.

In later models, artificial neurons process information by adjusting the strength of their connections according to the input they receive. This is similar to how our brain strengthens or weakens synaptic connections over time based on experience and learning.

This concept laid the groundwork for what we now call a neural network: a machine learning model built from layers of interconnected nodes, also referred to as neurons. The network uses these layers to learn patterns in data and to make decisions.

How Neural Networks Imitate the Brain

Neural networks mimic the human brain in many aspects. Our brain has billions of neurons that are connected through synapses, passing electrical signals to each other. In neural networks, artificial neurons are connected via weighted pathways.

When data passes through these pathways, each neuron computes a weighted sum of its inputs, applies an activation function, and transmits the result to other neurons. During training, the strengths of these connections (called weights) are adjusted.

This is the fundamental learning mechanism of neural networks. The synaptic connections in the human brain strengthen or weaken depending on experience. In an analogous way, artificial neural networks modify their weights during training to make accurate predictions or classifications.

To illustrate, let us look at two of the most important types of neural network: feedforward and recurrent networks. Feedforward networks are simple structures in which data flows one way, from input to output. Recurrent networks, by contrast, contain loops that let information flow in cycles, loosely resembling memory processes in the brain.
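The difference between feedforward and recurrent networks can be sketched with single units. The weights below (`w`, `w_in`, `w_rec`, `b`) are hypothetical values chosen for illustration, and `tanh` is just one common choice of activation. A real network would have many units and learned weights. The feedforward unit sees only the current input. The recurrent unit also receives its own previous output, its hidden state, so identical inputs can produce different outputs depending on what came before.

```python
import math


def feedforward_unit(x, w=0.8, b=0.1):
    """Output depends only on the current input."""
    return math.tanh(w * x + b)


def recurrent_unit(sequence, w_in=0.8, w_rec=0.5, b=0.1):
    """A hidden state h is fed back at every step, so each output depends on
    the current input and, through h, on everything seen earlier."""
    h = 0.0
    outputs = []
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h + b)
        outputs.append(h)
    return outputs


seq = [1.0, 0.0, 0.0]
ff = [feedforward_unit(x) for x in seq]
rnn = recurrent_unit(seq)
print(ff)   # last two outputs are equal: same input, same output
print(rnn)  # last two outputs differ: the state carries a fading memory of the first input
```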

How Neural Networks Were Created

Neural networks are the product of research into how human brains deal with information. In 1943, McCulloch and Pitts published a simple mathematical model of neural behavior.

Interest in neural networks faded after early work such as the perceptron. Real momentum returned only in the 1980s, driven largely by the backpropagation algorithm.

Backpropagation is a technique for training neural networks. It compares the network's output with the actual result, then works backward through the network, using the chain rule of calculus to calculate how much each weight contributed to the error. It then adjusts the weights to reduce that error, "teaching" the network to make better predictions. This innovation revolutionized how neural networks learn, and it remains the standard technique for training complex AI models.
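Here is a minimal backpropagation sketch for the smallest interesting network: one hidden sigmoid unit feeding one linear output unit, trained with plain gradient descent on mean squared error. The toy data, initial weights, learning rate `lr`, and step count are hypothetical. Real frameworks apply the same chain-rule bookkeeping automatically (automatic differentiation) to millions of weights at once.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """One hidden sigmoid unit feeding one linear output unit."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)   # hidden activation
    y_hat = w2 * h + b2        # network output
    return h, y_hat


def loss(params, data):
    """Mean squared error between outputs and targets."""
    return sum((forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)


def backprop(params, data):
    """Gradient of the loss w.r.t. [w1, b1, w2, b2], obtained with the chain rule
    by passing the output error backward, layer by layer."""
    w2 = params[2]
    g_w1 = g_b1 = g_w2 = g_b2 = 0.0
    n = len(data)
    for x, t in data:
        h, y_hat = forward(params, x)
        d_y = 2.0 * (y_hat - t) / n    # dLoss/dy_hat
        g_w2 += d_y * h                # output-layer gradients
        g_b2 += d_y
        d_h = d_y * w2                 # error passed back to the hidden unit
        d_z = d_h * h * (1.0 - h)      # through the sigmoid: s'(z) = s(z) * (1 - s(z))
        g_w1 += d_z * x                # hidden-layer gradients
        g_b1 += d_z
    return [g_w1, g_b1, g_w2, g_b2]


def train(params, data, lr=0.1, steps=2000):
    """Plain gradient descent: step each weight against its gradient."""
    for _ in range(steps):
        grads = backprop(params, data)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


# Hypothetical toy data (a step from 0 to 1) and initial weights.
data = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]
params = [0.1, 0.0, 0.1, 0.0]
trained = train(params, data)
print("loss before:", loss(params, data))
print("loss after: ", loss(trained, data))
```

When you write backpropagation by hand, a useful sanity check is to compare its gradients with finite-difference estimates obtained by nudging each weight slightly and re-evaluating the loss.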

Despite this promise, neural networks were constrained in their early years: the available computing power could not handle the volumes of data that deep learning requires. Today, powerful hardware and access to large amounts of data make neural networks effective in high-end AI applications.

Layers of a Neural Network

A neural network has an input layer, one or more hidden layers, and an output layer. Each layer handles a different stage in processing the information.

Input Layer: This layer receives the raw data. In an image recognition network, for example, it would hold the pixel values.

Hidden Layers: These layers do all the heavy lifting and compute complex transformations of the input data. They learn features from the input and forward them to the next layer. A network with many hidden layers is referred to as a deep neural network.

Output Layer: The last layer produces the output or prediction. For example, in classification, it could output which category the input data falls into.

Each layer of the neural network refines the information passed through it, much as our brain processes stimuli in stages.
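The layer-by-layer flow can be sketched as a forward pass through a tiny network. It has four hypothetical pixel values in the input layer, one hidden layer of three neurons with a ReLU activation, and an output layer of two classes whose scores are turned into probabilities with softmax. The weights are made-up, untrained numbers, so the predicted class means nothing. The point is only how data moves from one layer to the next.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Input layer: four pixel intensities of a tiny 2x2 "image" (hypothetical values).
pixels = [0.0, 1.0, 1.0, 0.0]

# Hidden layer: 3 neurons with 4 inputs each (hypothetical, untrained weights).
W_hidden = [[0.5, -0.2, 0.1, 0.0],
            [-0.3, 0.8, 0.8, -0.3],
            [0.2, 0.2, -0.5, 0.4]]
b_hidden = [0.0, 0.1, 0.0]

# Output layer: 2 classes with 3 hidden inputs each.
W_out = [[1.0, -0.5, 0.3],
         [-1.0, 1.2, 0.0]]
b_out = [0.0, 0.0]

hidden = relu(dense(pixels, W_hidden, b_hidden))
probs = softmax(dense(hidden, W_out, b_out))
predicted_class = probs.index(max(probs))
print(probs, predicted_class)
```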

Neural Networks in Action: Real-World Examples

Let's look at some real-world applications of neural networks to make this concept even clearer:

Image Recognition: Facial recognition, medical image scanning, and autonomous cars make extensive use of neural networks. These networks are trained on large image datasets so that they can classify visual information.

Natural Language Processing (NLP): Neural networks are used to recognize and generate human language, learning patterns and concepts from millions of text examples.

Speech Recognition: AI systems use neural networks in order to process spoken language and convert it into actionable commands. Neural networks learn from massive datasets of voice recordings, making them better with time.

Challenges in Developing Neural Networks

Neural networks are indeed powerful tools but definitely not challenge-free. Some of the prominent ones include:

Overfitting: The network fits the training data too closely, including its noise, and so performs poorly on new, unseen data. Regularization techniques such as dropout are applied to control this.

Data Requirements: Neural networks are data-hungry. Huge amounts of labeled data are needed to train neural networks effectively. It takes considerable time and resources to gather and label this amount of data.

Computational Power: Large neural networks are very computationally expensive to train. This is a major barrier for smaller organizations.
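Dropout, one of the remedies for overfitting mentioned above, can be sketched in a few lines. The version below is the widely used "inverted dropout" formulation. During training, each activation is zeroed with probability `rate` and the survivors are scaled up by 1/(1 - rate), so the layer's expected output stays the same. At inference time nothing is dropped. The function name and signature are hypothetical, not any particular library's API, and the seed is fixed only to make the example reproducible.

```python
import random


def dropout(activations, rate, training, rng=random):
    """Inverted dropout on a list of activations.

    Training: zero each value with probability `rate` and scale survivors by
    1 / (1 - rate) so the expected value is unchanged.
    Inference: return the activations untouched."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)   # fixed seed for a reproducible example
acts = [1.0] * 10
print(dropout(acts, rate=0.5, training=True, rng=rng))   # a mix of 0.0 and 2.0
print(dropout(acts, rate=0.5, training=False))           # unchanged: ten 1.0 values
```

Because the scaling happens during training, the trained network can be used at inference time without any adjustment.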

However, the advancement of technology and research on neural networks is constantly improving performance and making it more accessible to developers and businesses alike.

Conclusion

Neural networks are one of the most powerful tools in any AI kit, imitating the human brain's ability to learn from experience and improve over time. As AI technology develops, so do the applications of neural networks, transforming industries and the ways we interact with technology.

Understanding neural networks is fundamental to understanding AI. Here at AI Tech Solutions, we're excited about the possibilities of working with neural networks and committed to helping businesses use the technology to achieve meaningful outcomes.

About Mohammad Alothman

Mohammad Alothman is the owner of AI Tech Solutions.

As an experienced artificial intelligence developer and entrepreneur, Mohammad Alothman founded this AI-forward company out of his passion for artificial intelligence. The company serves and supports businesses as they innovate and improve.

Frequently Asked Questions (FAQs): Understanding Neural Networks

Q1. What is the main purpose of a neural network?

Neural networks are designed to find patterns in data. They are used for classification and regression, making predictions about new data.

Q2. What is the difference between deep learning and neural networks?

Deep learning is a subcategory of machine learning that uses neural networks with many hidden layers. Deep learning models can handle much more complex tasks, such as image recognition and speech recognition.

Q3. Can neural networks be used for all types of AI tasks?

No. Neural networks are a very versatile tool, but not every AI task needs one. Simpler tasks may be better served by decision trees or linear regression, while more complex ones call for neural networks.

Q4. What kind of data is required to train neural networks?

Neural networks typically require large datasets, labeled in the case of supervised learning, for training to be effective. The quality and quantity of the data significantly affect the model's performance.

Q5. What is backpropagation?

Backpropagation is the algorithm used during training to work out how much each weight in a neural network contributed to the error. The weights are then updated to reduce that error and improve the predictions.

See More Similar Articles

Mohammad Alothman On AI's Role in The Film Industry

Mohammed Alothman: Understanding the Impacts of AI on Employment and the Future of Work

Mohammad S A A Alothman: The 8 Least Favourite Things About Artificial Intelligence

Mohammed Alothman Explores Key 2025 Trends in AI for Business Success

Mohammed Alothman: The Future of AI in the Next Five Years

0 notes

Text

I, MONSTER (1971) – Episode 226 – Decades of Horror 1970s

“The face of evil is ugly to look upon. And as the pleasures increase, the face becomes uglier.” So, the ugliness of the evil face is proportional to the pleasures? Join your faithful Grue Crew – Doc Rotten, Bill Mulligan, Jeff Mohr, and guest Dirk Rogers – as they mix it up with the Amicus version of Jekyll & Hyde known as I, Monster (1971).

Decades of Horror 1970s Episode 226 – I, Monster (1971)

Join the Crew on the Gruesome Magazine YouTube channel! Subscribe today! And click the alert to get notified of new content! https://youtube.com/gruesomemagazine

Decades of Horror 1970s is partnering with the WICKED HORROR TV CHANNEL (https://wickedhorrortv.com/) which now includes video episodes of the podcast and is available on Roku, AppleTV, Amazon FireTV, AndroidTV, and its online website across all OTT platforms, as well as mobile, tablet, and desktop.

19th-century London psychologist Charles Marlowe experiments with a mind-altering drug. He develops a malevolent alter ego, Edward Blake, whom his friend Utterson suspects of blackmailing Marlowe.

Directed by: Stephen Weeks

Writing Credits: Milton Subotsky (screenplay); Robert Louis Stevenson (from his 1886 novella “Strange Case of Dr. Jekyll and Mr. Hyde”)

Selected Cast:

Christopher Lee as Dr. Charles Marlowe / Edward Blake

Peter Cushing as Frederick Utterson

Mike Raven as Enfield

Richard Hurndall as Lanyon

George Merritt as Poole

Kenneth J. Warren as Deane

Susan Jameson as Diane

Marjie Lawrence as Annie

Aimée Delamain as Landlady (as Aimee Delamain)

Michael Des Barres as Boy in Alley

Jim Brady as Pub Patron (uncredited)

Chloe Franks as Girl in Alley (uncredited)

Lesley Judd as Woman in Alley (uncredited)

Ian McCulloch as Man At Bar (uncredited)

Reg Thomason as Man in Pub (uncredited)

Fred Wood as Pipe Smoker (with Cap) in Pub (uncredited)

Robert Louis Stevenson’s “Strange Case of Dr Jekyll and Mr Hyde” (1886) by any other name would still be a Jekyll/Hyde story. In the case of this Amicus production, the other name is I, Monster (1971), and it’s a Marlowe/Blake story. It’s always a pleasure to see Christopher Lee and Peter Cushing working together and Doctor Who fans will recognize Richard Hurndall.

The 70s Grue Crew – joined for this episode by Dirk Rogers, special effects artist and suit actor – are split on how good or bad I, Monster is. “Vive la différence!” is the Decades of Horror credo, and despite their “différence,” they have a great time discussing this film.

At the time of this writing, I, Monster (1971) is available to stream from the Classic Horror Movie Channel and Wicked Horror TV.

Gruesome Magazine’s Decades of Horror 1970s is part of the Decades of Horror two-week rotation with The Classic Era and the 1980s. In two weeks, the next episode, chosen by Jeff, will be The Stone Tape (1972), a BBC TV production written by Nigel Kneale and directed by Peter Sasdy. Ready for a good British ghost story?

We want to hear from you – the coolest, grooviest fans: comment on the site or email the Decades of Horror 1970s podcast hosts at [email protected].

Check out this episode!

0 notes

Text

One of the interesting things about ears is that they work in the same way as a frog’s eye works. There’s an essay called “What the Frog’s Eye Tells the Frog’s Brain,” by Warren McCulloch, who discovered that a frog’s eyes don’t work like ours. Ours are always moving: we blink. We scan. We move our heads. But a frog fixes its eyes on a scene and leaves them there. It stops seeing all the static parts of the environment, which become invisible, but as soon as one element moves, which could be what it wants to eat—the fly—it is seen in very high contrast to the rest of the environment. It’s the only thing the frog sees and the tongue comes out and takes it. Well, I realized that what happens with the Reich piece is that our ears behave like a frog’s eyes. Since the material is common to both tapes, what you begin to notice are not the repeating parts but the sort of ephemeral interference pattern between them. Your ear telescopes into more and more fine detail until you’re hearing what to me seems like atoms of sound. That piece absolutely thrilled me, because I realized then that I understood what minimalism was about. The creative operation is listening. It isn’t just a question of a presentation feeding into a passive audience.

What is Generative AI?

With its creative powers, generative artificial intelligence (AI) has quickly moved from a market buzzword to a tangible reality that has changed several sectors in recent years. This essay gives a thorough introduction to generative AI, covering its development, uses, and ethical implications, along with a glimpse of its promising future.

Following the Development of AI

To fully understand generative AI, it helps to look back at how AI evolved. AI's roots lie in the ideas of ancient mathematicians and philosophers who dreamed of automating human thought. The foundation for contemporary AI, however, was laid in the 19th and 20th centuries, largely thanks to George Boole's landmark work on Boolean algebra and Alan Turing's revolutionary notion of thinking machines.

Warren McCulloch and Walter Pitts introduced the first mathematical model of an artificial neuron in 1943, a critical year in the history of AI. Then, in his groundbreaking 1950 paper "Computing Machinery and Intelligence," Alan Turing proposed what became known as the Turing test as a standard for assessing machine intelligence. The phrase "artificial intelligence" was coined in the 1955 proposal for the 1956 Dartmouth Summer Research Project on Artificial Intelligence, which marked the beginning of systematic research in this area.

Despite a first burst of optimism in the 1960s, fueled by audacious promises of human-level intelligence, AI faced significant obstacles that led to periods of stagnation known as "AI winters." AI began to resurface only in the 1990s and 2000s, thanks to machine learning (ML). ML uses data to identify patterns automatically and enables applications ranging from recommendation systems to email spam filters.

The real game-changer came around 2012, when deep learning, a branch of machine learning, broke through thanks to advances in neural network algorithms and more powerful computers. This breakthrough began a new age of AI research marked by unprecedented investment, progress, and applications. AI is now present in many aspects of life, and generative AI is a new and exciting area with the potential to change entirely how people interact with machines.

Understanding Generative AI

Within machine learning, generative AI is a paradigm shift: it uses neural networks to produce new material in various domains, including text, images, video, and audio. Conventional AI models focus on classification and prediction. Generative AI, by contrast, gives machines the capacity to generate material on their own, in a way loosely analogous to human inventiveness.

Generative AI rests on the idea that complex patterns can be learned from large datasets. This lets models generate outputs that closely resemble human-generated content, whether lifelike images, compelling stories, or catchy music.

The Working Mechanisms of Generative AI

Generative AI combines large-scale datasets, sophisticated algorithms, and neural networks. Models are trained iteratively to pick up fine-grained details and patterns in the data, improving their capacity to produce realistic outputs.

Although the underlying mechanisms are complex, the general idea is simple: generative AI models learn from data how to produce material that is more than a copy of what they have seen. Their output shows some degree of creativity and originality.
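Here is a toy illustration of learning patterns from data and then sampling new material: a minimal Python sketch of a character-level bigram model. It is a drastically simplified stand-in, far from the neural networks behind modern generative AI. The corpus, the seed, and the helper names `train_bigram` and `generate` are illustrative assumptions.

```python
import random
from collections import defaultdict


def train_bigram(text):
    """Count how often each character is followed by each other character."""
    counts = defaultdict(lambda: defaultdict(int))
    for current, nxt in zip(text, text[1:]):
        counts[current][nxt] += 1
    return counts


def generate(counts, start, length, rng):
    """Sample new text one character at a time from the learned counts."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # this character was never followed by anything
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


# Illustrative toy corpus and seed (hypothetical, chosen only for this sketch).
corpus = "the cat sat on the mat. the dog sat on the log."
model = train_bigram(corpus)
sample = generate(model, "t", 40, random.Random(0))
print(sample)
```

Every adjacent pair of characters in the sample occurs somewhere in the corpus. The sample as a whole, however, need not appear there. That is a small-scale version of producing something more than a copy.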

Busting Myths About Artificial Intelligence

Even with generative AI's astounding potential, there are still a lot of myths and misunderstandings around it.

One common misunderstanding is that generative AI models are self-aware. They are not. They have no awareness and no innate understanding of the world around them or of the content they generate. They lack human-like cognitive abilities and operate only within the limits of their algorithms and training data.

Similarly, the idea that generative AI models are impartial deserves closer examination. The large-scale internet datasets used to train these models naturally contain the biases and peculiarities common in society. Generative AI outputs can unintentionally reinforce and magnify pre-existing prejudices, so it is essential to exercise caution and take preventive action to reduce those biases.

Additionally, even though generative AI models are remarkably good at creating content, they are not perfect and can occasionally produce inaccurate or misleading results. Content created by generative AI should be evaluated carefully and critically. Its claims should be confirmed against credible sources to ensure authenticity and correctness.

Ethics in Artificial Intelligence

Cybersecurity: Generative AI models can increase the likelihood of cyberattacks and disinformation campaigns by generating convincing deepfakes and evading security measures such as CAPTCHAs.

Discrimination and bias: Generative AI models can unintentionally reinforce and magnify social prejudices present in their training sets. Left unchecked, this can lead to unfair or discriminatory outcomes. It is therefore essential to work together to reduce bias and promote inclusion in AI systems.

Misinformation and fake news: Generative AI worsens the spread of AI-generated material that can pass for authentic content. This raises concerns about voter manipulation, election integrity, and social cohesion, calling for strong measures to counter disinformation and advance media literacy.

Protection of privacy: Generative AI models may violate people's privacy rights by using sensitive or private data to create content. Maintaining moral principles and defending people's rights in the digital sphere requires balancing privacy protection and innovation.

Intellectual property rights: Generative AI blurs the lines of authorship and ownership in content production. As AI-generated content proliferates on digital platforms, issues such as credit and licensing need careful consideration to ensure fair outcomes for stakeholders and content creators.

Applications Across Sectors

Generative AI can revolutionize numerous industries by stimulating creativity and challenging preconceived notions.

Technology sector: Generative AI improves digital resilience against new threats and streamlines software development workflows by enabling cybersecurity applications, automated testing, and code generation.

Finance industry: Generative AI makes automated financial analysis, risk reduction, and content creation possible. This allows businesses to maximize operational efficiency and make well-informed decisions in a data-rich environment.

Healthcare: Generative AI helps with drug discovery, medical imaging analysis, and patient care by utilizing predictive analytics and tailored medicine to enhance treatment outcomes and diagnostic accuracy.

Entertainment industry: Generative AI transforms content creation, opening up new ethical questions about authenticity and attribution while enabling the synthesis of music, films, and video games with never-before-seen realism and originality.

Imagining Generative AI's Future

As generative AI continues to develop, exciting opportunities and formidable obstacles lie ahead. Advances in quality, accessibility, interactivity, and real-time content generation herald a paradigm shift in human-machine interaction, creating immersive environments and tailored experiences in various fields.

Thanks to developments in hardware and algorithmic sophistication, Generative AI is becoming more accessible, enabling people to realize their creative potential fully.

Future generative AI applications will likely be more interactive. Models will adjust to user feedback, preferences, and interactions to provide individualized experiences tailored to specific requirements and tastes.

Furthermore, combining generative AI with cutting-edge technologies such as virtual reality (VR) and augmented reality (AR) opens up new possibilities in immersive gaming, storytelling, and experiential marketing by blurring the distinction between the real and virtual worlds.

Visit the Blockchain Council to learn about AI!

As the need for AI specialists grows, those who want to pursue a career as artificial intelligence developers must arm themselves with the necessary knowledge and qualifications. Obtaining a certification in artificial intelligence is a crucial first step toward specialized knowledge and skills in this rapidly evolving field. Certifications for AI developers show a commitment to staying current with industry trends and best practices, and they validate expertise in cutting-edge AI technologies. Additionally, taking prompt engineering classes promotes a deeper understanding of the mechanics underpinning generative AI and allows for hands-on learning.

Blockchain Council is a reputable platform leading the way in artificial intelligence development with its prompt engineering course. The Blockchain Council, a collection of enthusiasts and subject matter experts, is committed to promoting blockchain applications, research, and development because it understands the revolutionary power of new technologies for a better society. Through its prompt engineer certification, the Blockchain Council closes the gap between theory and practice in this rapidly evolving subject, giving professionals the chance to gain specialized knowledge in generative AI.

In summary

Finally, generative AI offers a window into a future full of opportunities and difficulties, serving as a monument to human inventiveness and technological power. Though its rise signals revolutionary shifts in many industries, it is crucial to proceed sensibly and ethically, considering the significant effects on society.

We can leverage generative AI's potential to promote innovation, creativity, and inclusive progress in the digital age by cultivating a comprehensive awareness of its capabilities, limitations, and ethical implications. Ultimately, earning a generative AI certification from the Blockchain Council enables people to confidently and competently traverse the complicated AI field, opening up many prospects for professional development and creativity.

Demystifying the Neural Network: A Journey of Innovation

In the ever-evolving landscape of artificial intelligence (AI), neural networks stand tall as beacons of innovation and technological advancement. Inspired by the intricate workings of the human brain, these complex computational models have revolutionized the way machines learn, perceive patterns, and make predictions. Their journey, however, has been anything but linear, marked by moments of breakthrough, periods of dormancy, and a constant pursuit of replicating the human thought process.

Pioneering Steps (1940s-1950s)

The story of neural networks begins in the mid-20th century, when visionaries like Warren McCulloch and Walter Pitts laid the foundation with their 1943 model, the first attempt to translate the biological workings of neurons into a mathematical language. Their revolutionary paper, "A Logical Calculus of Ideas Immanent in Nervous Activity," offered a simplified blueprint for how these tiny brain cells might operate. However, these early years were plagued by limitations in technology and computing power. McCulloch and Pitts' ambitious ideas were held back by the lack of hardware capable of bringing their concepts to life.
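Below is a minimal Python sketch of a McCulloch-Pitts-style unit. It assumes the common textbook simplification of the 1943 model: binary inputs, a fixed threshold, and absolute inhibition, meaning any active inhibitory input prevents firing. The function names and gate constructions are illustrative, not taken from the paper.

```python
def mcculloch_pitts(excitatory, inhibitory, threshold):
    """Return 1 if the unit fires, else 0 (textbook simplification).

    excitatory, inhibitory: sequences of 0/1 inputs.
    threshold: minimum number of active excitatory inputs needed to fire.
    """
    if any(inhibitory):  # absolute inhibition: one active inhibitor blocks firing
        return 0
    return 1 if sum(excitatory) >= threshold else 0


# Illustrative gates built from the unit by choosing thresholds by hand.
def and_gate(a, b):
    return mcculloch_pitts([a, b], [], threshold=2)


def or_gate(a, b):
    return mcculloch_pitts([a, b], [], threshold=1)


def not_gate(a):
    # Threshold 0 makes the unit fire by default; the input acts as an inhibitor.
    return mcculloch_pitts([], [a], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```

The thresholds are set by hand, so the unit computes logic but does not learn from data.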

A Period of Hibernation (1960s-1970s)

The following decades saw a decline in interest and funding for neural network research. The complex mathematics involved, coupled with the lack of computational muscle, led to a period of stagnation. Many researchers turned their attention towards alternative approaches, like rule-based systems, which seemed more practical at the time. Despite the slowdown, a dedicated few kept the flame alive, continuing to delve into the mysteries of neural networks, laying the groundwork for future generations of scientists and developers.
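A commonly cited factor in this slowdown is Marvin Minsky and Seymour Papert's 1969 book Perceptrons, which analyzed the limits of single-layer perceptrons. The Python sketch below shows the classic illustration. The perceptron learning rule fits AND, which is linearly separable, but no single-layer perceptron can fit XOR. The learning rate, epoch count, and function names are illustrative assumptions.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule on 2-input binary data; returns (weights, bias)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            error = target - pred  # -1, 0, or +1
            # Nudge the decision boundary toward classifying this sample correctly.
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b


def accuracy(samples, w, b):
    hits = sum(
        (1 if w[0] * x1 + w[1] * x2 + b > 0 else 0) == target
        for (x1, x2), target in samples
    )
    return hits / len(samples)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w_and, b_and = train_perceptron(AND_DATA)
w_xor, b_xor = train_perceptron(XOR_DATA)
print("AND accuracy:", accuracy(AND_DATA, w_and, b_and))
print("XOR accuracy:", accuracy(XOR_DATA, w_xor, b_xor))
```

No choice of weights and bias separates XOR with a single line, so no amount of extra training gets the last sample right. Multi-layer networks can represent XOR, but they needed a practical training method.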

Rebirth with Backpropagation (1980s)

The turning point arrived in the 1980s with the spread of the backpropagation algorithm. Paul Werbos first applied it to neural networks in the 1970s, and David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized it in 1986. This critical advance addressed the key challenge of training neural networks: efficiently adjusting their internal parameters during learning. Backpropagation made it practical to train multi-layer neural networks, paving the way for their successful application in various domains. This resurgence brought neural networks back into the mainstream of AI research.
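Backpropagation is the chain rule applied from the output layer back toward the input. Here is a minimal pure-Python sketch. It assumes a tiny network (2 inputs, 4 sigmoid hidden units, 1 sigmoid output), squared-error loss, and plain gradient descent on the XOR data. The sizes, learning rate, seed, and names like `backprop` and `sgd_step` are illustrative choices, not a standard implementation. A finite-difference check compares one backpropagated gradient with a numerical estimate.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_hidden, rng):
    """Small random weights for a 2 -> n_hidden -> 1 network."""
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    h = [sigmoid(row[0] * x[0] + row[1] * x[1] + b) for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y


def loss(p, data):
    """Mean of 0.5 * (output - target)^2 over the dataset."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)


def backprop(p, data):
    """Gradient of the loss with respect to every parameter, via the chain rule."""
    n = len(p["b1"])
    g = {"W1": [[0.0, 0.0] for _ in range(n)], "b1": [0.0] * n, "W2": [0.0] * n, "b2": 0.0}
    for x, t in data:
        h, y = forward(p, x)
        # Error at the output pre-activation; sigmoid'(z) = y * (1 - y).
        d_out = (y - t) * y * (1 - y) / len(data)
        g["b2"] += d_out
        for j in range(n):
            g["W2"][j] += d_out * h[j]
            # Send the output error back through W2 to hidden unit j.
            d_hid = d_out * p["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += d_hid
            g["W1"][j][0] += d_hid * x[0]
            g["W1"][j][1] += d_hid * x[1]
    return g


def sgd_step(p, g, lr):
    """Plain gradient descent: move every parameter against its gradient."""
    p["b2"] -= lr * g["b2"]
    for j in range(len(p["b1"])):
        p["b1"][j] -= lr * g["b1"][j]
        p["W2"][j] -= lr * g["W2"][j]
        p["W1"][j][0] -= lr * g["W1"][j][0]
        p["W1"][j][1] -= lr * g["W1"][j][1]


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
params = init_params(n_hidden=4, rng=random.Random(0))

# Gradient check: compare one backprop gradient with a central finite difference.
eps = 1e-6
w = params["W1"][0][1]
params["W1"][0][1] = w + eps
loss_up = loss(params, XOR)
params["W1"][0][1] = w - eps
loss_down = loss(params, XOR)
params["W1"][0][1] = w
num_grad = (loss_up - loss_down) / (2 * eps)
bp_grad = backprop(params, XOR)["W1"][0][1]
print(f"backprop {bp_grad:.8f}  numerical {num_grad:.8f}")

before = loss(params, XOR)
for _ in range(5000):
    sgd_step(params, backprop(params, XOR), lr=0.5)
after = loss(params, XOR)
print(f"loss before {before:.4f}  after {after:.4f}")
```

Whether this network fully solves XOR depends on the initialization and the number of steps. The sketch only shows that the backpropagated gradients are correct and that following them lowers the loss.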

Demystifying Artificial Intelligence: The Evolution of Neural Networks - Technology Org

New Post has been published on https://thedigitalinsider.com/demystifying-artificial-intelligence-the-evolution-of-neural-networks-technology-org/

Artificial intelligence is no longer the stuff of science fiction movies but an unfolding reality shaping our world. Neural networks, the brain of AI, have advanced significantly over the years.

According to Statista, global AI software revenues are projected to skyrocket from $10.1 billion in 2018 to over $126 billion by 2025. This staggering statistic is a testament to AI’s growing influence.
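For perspective, those two figures imply a compound annual growth rate (CAGR). The quick Python calculation below uses only the numbers quoted above and treats 2018 to 2025 as seven years of growth.

```python
# Figures as quoted from Statista above, in billions of dollars.
start_value = 10.1   # 2018
end_value = 126.0    # 2025, quoted as "over $126 billion"
years = 2025 - 2018  # seven years of compounding

# CAGR = (end / start) ** (1 / years) - 1
cagr = (end_value / start_value) ** (1 / years) - 1
print(f"Implied CAGR: {cagr:.1%}")
```

That works out to roughly 43% a year. The 2025 figure is quoted as "over" $126 billion, so the implied rate is a lower bound.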

Yet, AI and neural networks remain enigmatic for many, often viewed as complex and inaccessible. Our aim here is to demystify these fascinating technologies. We’ll trace the evolution of neural networks, dissecting their intricacies in an understandable, friendly manner. Read on, and let’s delve into AI and neural networks.

What are neural networks?

These are computational models inspired by the structure of the human brain. They consist of interconnected nodes, or “neurons,” that process information. Rather than following hand-written rules, they learn directly from data, in a way loosely modeled on human cognition.

These networks interpret sensory data, detecting patterns and trends. Throughout their evolution, they’ve significantly improved, enabling sophisticated data analysis.

Let’s now explore the history of neural networks and the fundamental concepts that have driven their development.

Early developments of neural networks

The inception of neural networks can be traced back to the 1940s. Early models were simple, with rudimentary structures and functions.

Pioneers like Warren McCulloch and Walter Pitts made significant strides in developing basic neuron models that laid the groundwork for further advancements. These initial networks lacked the complexity of modern-day AI. Yet, they were transformative, sparking a revolution in computational thinking and setting the stage for today’s sophisticated neural networks.

Critical milestones in early neural network research

Here are some key milestones with earlier versions of neural networks:

In the mid-1980s, a significant breakthrough occurred with the popularization of backpropagation. This algorithm made it practical to train multi-layer networks by propagating errors backward through the network and fine-tuning the weights iteratively.

In the 1990s, convolutional neural networks (CNNs) emerged, revolutionizing image recognition and processing.

By the mid-2000s, deep learning, a powerful approach built on neural networks with many layers, was gaining prominence.
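The core operation in a CNN slides a small filter (kernel) across an image and takes a weighted sum at each position. Here is a minimal pure-Python sketch. It assumes a "valid" convolution (no padding, stride 1). Like most deep learning libraries, it actually computes cross-correlation, without flipping the kernel. The 4x4 image and the edge-detecting kernel are illustrative.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: no padding, stride 1."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Weighted sum of the image patch currently under the kernel.
            row.append(sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw)
            ))
        out.append(row)
    return out


# Illustrative 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]
# Illustrative vertical-edge kernel: responds where brightness rises left to right.
kernel = [[-1, 1],
          [-1, 1]]
for row in conv2d(image, kernel):
    print(row)
```

The output is large only in the column where dark pixels meet bright ones, which is how early CNN layers come to act as edge detectors. In a trained network the kernel values are learned rather than chosen by hand.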

The Rise of Deep Learning

Deep learning, a form of machine learning that uses neural networks with many layers, started gaining traction in the mid-2000s. It excels at identifying patterns and making decisions from large datasets. Its rise signaled a new era in AI, improving complex tasks such as image and speech recognition.

The advancements in Deep Learning have paved the way for more sophisticated and practical applications of neural networks. We will now explore these applications and the future prospects of neural networks in the following sections.

Big data’s influence on neural networks

Big data has significantly influenced the development and effectiveness of neural networks. The abundance of data gives these networks ample material to learn from, enhancing their performance. As data volume grows, the networks' predictive accuracy generally improves, yielding more precise outcomes. Consequently, big data has become a vital component in refining and sustaining neural networks.

Advancements in computational power and algorithms

Advancements in computational power have significantly propelled the evolution of neural networks. Modern processors can handle complex calculations swiftly, enabling neural networks to process larger datasets.

Furthermore, refined algorithms have made these networks more efficient, allowing for faster and more accurate pattern identification. These advances are improving the current capabilities of neural networks and opening up new possibilities for their application in various fields.

Breakthroughs in Deep Learning

Significant breakthroughs have propelled the growth of neural networks. Pioneers such as Geoffrey Hinton, Yann LeCun, and Yoshua Bengio made monumental contributions to deep learning. Generative Adversarial Networks (GANs), introduced in 2014 by Ian Goodfellow and colleagues (Bengio among them), have revolutionized the field. Their applications range from image synthesis to drug discovery, signaling their transformative potential.
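For reference, the GAN setup from Goodfellow et al. (2014) is usually written as a two-player minimax game between two networks. A generator G maps random noise z to samples. A discriminator D outputs the probability that its input came from the real data rather than from G:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

D is trained to increase V by telling real samples from generated ones. G is trained to decrease V by producing samples that D mistakes for real.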

Multi-Modal Learning

Multi-modal learning is the next stride in neural networks. It integrates data from multiple sources or modalities, such as text, images, and audio, enhancing the depth and breadth of learning. This approach enables a network to grasp complex patterns holistically.

The innovative application of multi-modal learning is paving the way for more sophisticated uses of neural networks. This advancement promises exciting potential in various sectors, setting the stage for the following discussion on specific applications of neural networks.

Understanding multi-modal AI models

Here are some key characteristics of multi-modal AI models:

Data Integration: Multi-modal AI models integrate diverse data sets. This comprehensive approach enhances the model’s ability to identify complex patterns.

Enhanced Learning: These models improve the depth and breadth of machine learning. They yield richer insights by analyzing multiple data types.

Holistic Understanding: Multi-modal AI models provide a more complete view of problems by analyzing data from various angles. This feature can increase the accuracy of predictions.

Innovative Applications: Multi-modal learning paves the way for advanced uses of neural networks. It holds future promise in sectors like healthcare, finance, and retail.
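One simple way to integrate several modalities is late fusion: run a separate model per modality, then combine their outputs. Here is a minimal Python sketch that assumes each modality's model already produces class probabilities. The "image" and "text" outputs, the class order, and the weights below are hypothetical numbers for illustration. The other common option is early fusion, which merges features before a single model.

```python
def late_fusion(prob_by_modality, weights):
    """Weighted average of per-modality class probabilities."""
    total = sum(weights[m] for m in prob_by_modality)
    n_classes = len(next(iter(prob_by_modality.values())))
    fused = [0.0] * n_classes
    for modality, probs in prob_by_modality.items():
        for k, p in enumerate(probs):
            fused[k] += weights[modality] * p / total
    return fused


# Hypothetical per-modality outputs for two classes: [negative, positive].
predictions = {
    "image": [0.40, 0.60],
    "text": [0.80, 0.20],
}
# Hypothetical trust placed in each modality.
weights = {"image": 0.6, "text": 0.4}
fused = late_fusion(predictions, weights)
print([round(p, 3) for p in fused])
```

Because the weights are normalized, the fused output is still a probability distribution. Each modality pulls the result toward its own estimate in proportion to its weight.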

Applications in various industries

Multi-modal AI models find extensive use in various sectors. Here are some examples:

In healthcare, they integrate patient data, enabling accurate diagnosis and personalized treatment.

Finance benefits from enhanced learning, using these models to predict market trends and manage risks.

Retail leverages a holistic understanding of consumer behavior to optimize inventory and enhance sales.

In education, AI models foster an interactive learning environment, increasing student engagement and improving learning outcomes.

Multi-Objective Neural Network Models

Multi-objective neural network models are a sophisticated subset of artificial intelligence. They are engineered to tackle and optimize multiple goals simultaneously. This facet makes them versatile and highly adaptable.

These models can learn complex patterns and handle multiple, often contradictory, objectives, increasing their performance across different tasks. In industries like healthcare and finance, they provide solutions that balance various parameters, like risk and reward, for optimal outcomes.
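A common way to handle several objectives in one model is scalarization: fold the individual losses into a single weighted sum and minimize that. Here is a minimal Python sketch. It assumes two hypothetical objectives on a single parameter, one preferring values near 0 and the other near 4, standing in for something like risk and reward. Both are optimized by plain gradient descent. The loss functions, weights, and step settings are illustrative.

```python
def scalarized_loss(x, w_risk, w_reward):
    risk_loss = (x - 0.0) ** 2    # hypothetical objective 1: prefers x near 0
    reward_loss = (x - 4.0) ** 2  # hypothetical objective 2: prefers x near 4
    return w_risk * risk_loss + w_reward * reward_loss


def minimize(w_risk, w_reward, lr=0.1, steps=200):
    x = 0.0
    for _ in range(steps):
        # The gradient of a weighted sum is the weighted sum of the gradients.
        grad = w_risk * 2 * (x - 0.0) + w_reward * 2 * (x - 4.0)
        x -= lr * grad
    return x


for weights in [(0.5, 0.5), (0.8, 0.2), (0.2, 0.8)]:
    x_best = minimize(*weights)
    print(weights, round(x_best, 3), round(scalarized_loss(x_best, *weights), 3))
```

Each weighting lands on a different compromise; with weights summing to 1, the optimum here is x = 4 times the second weight. Weighted sums are easy to plug into existing training code. However, they can miss trade-off points on non-convex parts of the Pareto front.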

Beyond single-metric targeting

Single-metric targeting, while helpful, can be limiting. It can cause a narrow focus on a single goal, potentially overlooking other vital aspects. Multi-objective neural networks go beyond this. They consider various metrics concurrently, providing a more comprehensive view. This approach allows for more balanced, effective decisions and outcomes.

These models are especially beneficial in situations where trade-offs exist between different objectives. Consequently, multi-objective neural networks are becoming more pervasive in various sectors.
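When objectives pull in different directions, there is usually no single best option. Instead there is a set of Pareto-optimal options that no other option beats on every objective at once. Here is a minimal Python sketch that filters candidates down to that set, assuming every objective is minimized. The candidate names and their (risk, cost) scores are invented for illustration.

```python
def dominates(a, b):
    """True if a is no worse than b on every objective and strictly better on one.

    All objectives are assumed to be minimized.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(candidates):
    """Keep the candidates that no other candidate dominates."""
    return {
        name: scores
        for name, scores in candidates.items()
        if not any(
            dominates(other, scores)
            for other_name, other in candidates.items()
            if other_name != name
        )
    }


# Hypothetical candidates scored as (risk, cost); lower is better for both.
candidates = {
    "A": (1.0, 9.0),
    "B": (3.0, 4.0),
    "C": (4.0, 5.0),
    "D": (6.0, 2.0),
    "E": (7.0, 7.0),
}
print(sorted(pareto_front(candidates)))
```

C and E drop out because B is better on both objectives. A, B, and D remain, and choosing among them is the trade-off decision.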

Balancing business and sustainability goals

Multi-objective neural networks offer unique solutions to balance business profitability and environmental sustainability. They enable decision-making that considers both economic gains and ecological impact. This harmony between profit and the planet promotes responsible growth.

By utilizing these models, businesses can ensure long-term sustainability without compromising their bottom line. This fusion of technology and sustainability shapes a new business paradigm, redefining success beyond monetary terms.

Democratization of AI

The democratization of AI is revolutionizing industries, making powerful tools accessible to all. This democratization breaks down barriers, enabling innovation on a global scale.

It’s about giving everyone access and empowering individuals with the knowledge to leverage AI effectively. As we delve further into the nuances of AI, let’s examine the implications of this democratization, its challenges, and the future it promises.

Making AI accessible to non-experts

The democratization of AI aims to make this transformational technology accessible to non-experts. This involves simplifying the complex coding and algorithmic structures inherent in AI.

Simultaneously, it necessitates the development of intuitive interfaces, allowing users with minimal technical knowledge to leverage AI’s potential. The goal is to encourage innovation and ingenuity, even among those without a background in AI.

This initiative breaks down the high barrier to entry traditionally associated with AI, fostering a more inclusive tech community. However, this broadened accessibility also poses new challenges, including ensuring proper use and managing the impacts of widespread AI deployment.

Navigating the Future: Embracing the Transformative Power of Neural Networks in AI

Neural networks are AI’s beating heart, a phenomenon altering the face of technology and, by extension, our lives. These intricate systems, loosely modeled on the inner workings of the human brain, enhance AI’s ability to recognize patterns, make decisions, and learn from experience.

Imagine an AI platform that understands your needs and learns and adapts to serve you better. The implications are immense, from personalized learning experiences to predictive healthcare.

As we stand on the cusp of this transformative era, embracing neural networks and their potential becomes our collective responsibility. We must navigate this uncharted territory cautiously, ensuring equitable access and responsible use.

In conclusion, the future of AI is here, and it’s nothing short of exciting. Let’s welcome the era of neural networks, where technology and human ingenuity intertwine to shape a better future.

As expected, when the network entered the overfitting regime, the loss on the training data came close to zero (it had begun memorizing what it had seen), and the loss on the test data began climbing. It wasn’t generalizing. “And then one day, we got lucky,” said team leader Alethea Power, speaking in September 2022 at a conference in San Francisco. “And by lucky, I mean forgetful.”

Anil Ananthaswamy in Quanta. How Do Machines ‘Grok’ Data?

By apparently overtraining them, researchers have seen neural networks discover novel solutions to problems.

A fascinating article. Ananthaswamy reports that the researchers called what the networks were doing, when they turned from memorization to generalization, “grokking.” He notes the term was coined by science fiction author Robert A. Heinlein to mean understanding something “so thoroughly that the observer becomes a part of the process being observed.”
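The contrast the article draws, between memorizing training examples and finding the rule behind them, can be made concrete. The grokking experiments by Power and colleagues used small algorithmic tasks such as modular arithmetic. Here is a toy Python sketch, assuming modular addition with modulus 7 and a random half-and-half train/test split. A lookup table of the training pairs scores perfectly on them and fails on every held-out pair, while the general rule scores perfectly on both. It shows only the two end states, not how a network moves from one to the other.

```python
import random

P = 7  # modulus (illustrative)
pairs = [(a, b) for a in range(P) for b in range(P)]
rng = random.Random(0)  # illustrative seed
rng.shuffle(pairs)
split = len(pairs) // 2
train, test = pairs[:split], pairs[split:]

# "Memorizer": a lookup table of the training examples only.
table = {(a, b): (a + b) % P for a, b in train}


def memorizer(a, b):
    return table.get((a, b))  # None for any pair it has never seen


def rule(a, b):
    return (a + b) % P  # the general solution


def accuracy(model, data):
    return sum(model(a, b) == (a + b) % P for a, b in data) / len(data)


print("memorizer train/test:", accuracy(memorizer, train), accuracy(memorizer, test))
print("rule      train/test:", accuracy(rule, train), accuracy(rule, test))
```
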

The article prompted a couple of connections. First, in 1960 Warren McCulloch gave the Alfred Korzybski Memorial Lecture, “What Is a Number, That a Man May Know It, and a Man That He May Know a Number” (PDF).

Second, T. Berry Brazelton and others developed a model of child development called Touchpoints: The Touchpoints™ Model of Development (PDF), by Berry Brazelton, M.D., and Joshua Sparrow, M.D. The article about AI points out that machines think differently than we do, but I was intrigued by a similarity between what AI researchers call "grokking" and developmental touchpoints.

It is not enough to know the answer; instead, machine learning and people learning must find a way to the answer.

Yeah this is true afaict. A lot of the papers I've read cite a 1943 paper by Warren McCulloch and Walter Pitts as the first description of something recognizable as a neural network. Then in the 1950s and especially the 1960s a lot of work was going on with people like Minsky that eventually hit a wall, leading to the "first AI winter" in the 1970s. I think it was partly lack of computing power and partly because afaik before backpropagation caught on in the 1980s they didn't have very good algorithms for training neural networks.

so if I've inferred from history correctly here, deep learning was really invented in the 60s by people like Marvin Minsky and the primary bottleneck was that we didn't have fancy enough computer hardware to do it until the 2000s and 2010s
