#ai for image and text generation course

Explore tagged Tumblr posts

Text

Unlock Your AI Potential: 10 Amazing Free AI Courses to Launch Your Learning Journey

Artificial intelligence (AI) is everywhere. From the way we interact with our smartphones to self-driving cars and groundbreaking medical diagnoses, AI’s impact is undeniable. Building a foundation in AI opens a world of career possibilities and empowers you to understand the technology shaping our future. The best part? You can dive into this fascinating field without spending a dime! In this…


#ai and data science for kids free course#ai course taught by university professors#ai courses for business analysts#ai for image and text generation course#best free deep learning courses online#free ai chatbot development course#free ai course for product managers#free ai courses focused on computer vision#free beginner ai course no coding required#free machine learning courses with python 2024

0 notes


Visual Storytelling in Stone and Color

The intricate carvings of the Ajanta Caves: A masterclass in narrative design through sequential panels.

The use of symbolism in ancient Indian sculptures: How deities and animals embody core concepts and beliefs.

Understanding the language of gestures in Indian art: The use of mudras to convey emotions and stories.

Visual Communication

Purpose: To convey information visually rather than through text alone.

Application: Logos, posters, websites, packaging, ads.

Working Principle: Use of imagery, typography, and layout to quickly attract and inform the viewer.

FIRST PRACTICAL KNOWLEDGE 👈.

Process Workflow

Brief/Objective – Understand the goal.

Research – Study the target audience, competitors, and theme.

Concept Development – Sketch ideas and layouts.

Design Execution – Create digital or physical artwork.

Feedback & Revision – Refine based on input.

Final Output – Deliver in proper formats (print, web, etc.).

Second Practical Knowledge 👈.

Get more video lessons on @technolandexpart

#ai artwork#artwork#artandesign#3d animation studio#animation#2d animation#original animation#3d animation course#3d animation services#3d image#3d image processing#3d model image#3d artwork#3d animated film#3d animation#ai image generator#image id in alt text#ai image editing#ai image creation#image processing

4 notes


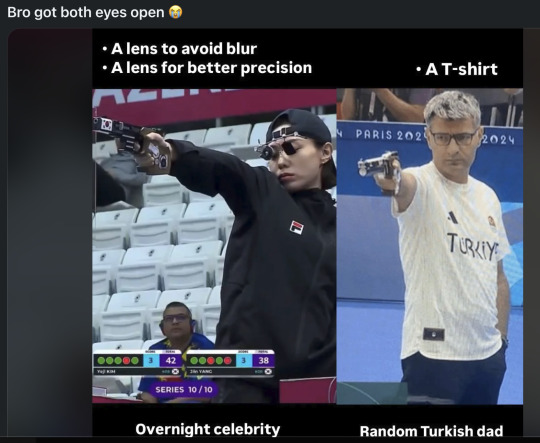

Thing is, my first reaction when I saw this side-by-side comparison was that it was an AI generated image description, and my first thought was 'you fixate on the gender, age and glasses but overlook that they're both holding guns?' And then I proceeded to learn incredible things about the ability to hit a target so small I can't see it in focus at arm's length without my glasses because of how long-sighted I've become.

Got to love the Olympics - expect AI weirdness, gain new appreciation for the marvel that is humans

This is frustrating.

I love the comparison, but I hate how they are comparing.

They are acting like she is using optics to give herself an advantage. But the device she is wearing is just for comfort and essentially does the same thing as closing one eye and squinting the other.

The little thing over the left eye is basically like an eye patch.

And the thing over her right eye is a mechanical iris, like in a camera lens, but it is NOT a lens.

Different lighting environments are going to be brighter or darker and you may have to squint more or less to let in the same amount of light into your eye. Squinting allows the shooter to get the sharpest possible vision in order to shoot a bullseye the size of a 12-point Times New Roman period.

But if you have to squint for hours for practice and in competition, this can strain your face muscles and become uncomfortable. So this iris basically squints for you.

It's more like wearing comfortable shoes so your feet do not hurt than a lens magnifying the target and giving an advantage.

Both athletes have access to these items. One felt more comfortable without them. The other didn't feel like getting a muscle cramp from squinting all day.

Either would have shot the same if they had or had not used these devices.

Just a funny difference in gear preference.

I should also add, the Turkish dad is the only one using lenses.

#the only sort of gun post I will make#olympics#target shooting#not AI alt text image description generation#that misses the obvious#which of course was my first thought#because 2024

141K notes


Note

I’ve changed most of my views on AI bc of your posts, but do you have any thoughts on/remedies for people losing their jobs to AI? Or is it a “people are gonna lose their jobs one way or another, it’s not actually AI’s fault” kind of deal…? Sorry if you’ve already talked about this before

there's something that riley quinn from the trashfuture podcast keeps saying -- "if your job can be replaced by AI, it was already being done by AI". which is to say, that jobs most at risk from AI replacement are ones that were borderline automated anyway. like, i say this as someone who used to write, not for the website buzzfeed itself, but buzzfeed-adjacent Slop Content for money -- i was already just the middlewoman between the SEO optimization algorithm and the google search algorithm. those jobs vanishing primarily means that middlewoman role has been cut, computers can tell other computers to write for computers.

& similarly this is why i keep saying that, e.g. stock photographers are at risk from this, because the ideal use case for generative AI content is stuff where the actual content or quality of the image/text doesn't matter, all that matters is its presence. and yknow, living in a world where many people's livelihoods were dependent on writing and art that is fully replaceable by inane computer drivel is itself indicative of something about culture under capitalism, right?

& to some degree, like i'm always saying, the immiseration of workers by advancement in technology is a universal feature of capitalism -- i recommend you read wage labour & capital to see how this phenomenon has persisted for well over a century. it's simply nothing new -- like, the stock photographers who are most at risk from this are already employed in an industry that itself decimated in-house illustration; think about how any dime-a-dozen romance novel you can pick up at a store nowadays has a hastily photoshopped stock photo cover when fifty years ago it would have had a bespoke cover illustration that an artist got paid for.

of course, none of that historical overview is like, comforting to people who are currently worried about their lives getting worse, and i get that -- for those people, workplace organization and industrial action is the only realistic and productive avenue to mobilize those fears. the WGA and SAG-AFTRA strikes produced far more material concessions on gen-AI-based immiseration for workers facing precarity than any amount of furious social media ludditism has

1K notes


AI can’t do your job

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in SAN DIEGO at MYSTERIOUS GALAXY on Mar 24, and in CHICAGO with PETER SAGAL on Apr 2. More tour dates here.

AI can't do your job, but an AI salesman (Elon Musk) can convince your boss (the USA) to fire you and replace you (a federal worker) with a chatbot that can't do your job:

https://www.pcmag.com/news/amid-job-cuts-doge-accelerates-rollout-of-ai-tool-to-automate-government

If you pay attention to the hype, you'd think that all the action on "AI" (an incoherent grab-bag of only marginally related technologies) was in generating text and images. Man, is that ever wrong. The AI hype machine could put every commercial illustrator alive on the breadline and the savings wouldn't pay the kombucha budget for the million-dollar-a-year techies who oversaw Dall-E's training run. The commercial market for automated email summaries is likewise infinitesimal.

The fact that CEOs overestimate the size of this market is easy to understand, since "CEO" is the most laptop job of all laptop jobs. Having a chatbot summarize the boss's email is the 2025 equivalent of the 2000s gag about the boss whose secretary printed out the boss's email and put it in his in-tray so he could go over it with a red pen and then dictate his reply.

The smart AI money is long on "decision support," whereby a statistical inference engine suggests to a human being what decision they should make. There's bots that are supposed to diagnose tumors, bots that are supposed to make neutral bail and parole decisions, bots that are supposed to evaluate student essays, resumes and loan applications.

The narrative around these bots is that they are there to help humans. In this story, the hospital buys a radiology bot that offers a second opinion to the human radiologist. If they disagree, the human radiologist takes another look. In this tale, AI is a way for hospitals to make fewer mistakes by spending more money. An AI assisted radiologist is less productive (because they re-run some x-rays to resolve disagreements with the bot) but more accurate.

In automation theory jargon, this radiologist is a "centaur" – a human head grafted onto the tireless, ever-vigilant body of a robot.

Of course, no one who invests in an AI company expects this to happen. Instead, they want reverse-centaurs: a human who acts as an assistant to a robot. The real pitch to hospitals is, "Fire all but one of your radiologists and then put that poor bastard to work reviewing the judgments our robot makes at machine scale."

No one seriously thinks that the reverse-centaur radiologist will be able to maintain perfect vigilance over long shifts of supervising automated processes that rarely go wrong, but when they do, the error must be caught:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

The role of this "human in the loop" isn't to prevent errors. That human is there to be blamed for errors:

https://pluralistic.net/2024/10/30/a-neck-in-a-noose/#is-also-a-human-in-the-loop

The human is there to be a "moral crumple zone":

https://estsjournal.org/index.php/ests/article/view/260

The human is there to be an "accountability sink":

https://profilebooks.com/work/the-unaccountability-machine/

But they're not there to be radiologists.

This is bad enough when we're talking about radiology, but it's even worse in government contexts, where the bots are deciding who gets Medicare, who gets food stamps, who gets VA benefits, who gets a visa, who gets indicted, who gets bail, and who gets parole.

That's because statistical inference is intrinsically conservative: an AI predicts the future by looking at its data about the past, and when that prediction is also an automated decision, fed to a Chaplinesque reverse-centaur trying to keep pace with a torrent of machine judgments, the prediction becomes a directive, and thus a self-fulfilling prophecy:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

AIs want the future to be like the past, and AIs make the future like the past. If the training data is full of human bias, then the predictions will also be full of human bias, and then the outcomes will be full of human bias, and when those outcomes are coprophagically fed back into the training data, you get new, highly concentrated human/machine bias:

https://pluralistic.net/2024/03/14/inhuman-centipede/#enshittibottification
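The feedback loop described above can be sketched as a toy simulation. This is an illustration under loud assumptions, not a model of any real system: the group names, the starting counts, the threshold, and the function name `run_feedback_loop` are all made up. Each round, the "model" predicts a group's approval rate from past records, the prediction is treated as the decision for every new applicant, and those decisions are appended to the records it learns from next time.

```python
def run_feedback_loop(history, rounds, applicants_per_round=10, threshold=0.5):
    """Toy loop: predict from past records, decide by the prediction,
    then feed the decisions back in as new records.

    history maps a (hypothetical) group name to (approved, total) counts.
    Returns the predicted approval rate per group at the start of each round.
    """
    counts = {group: list(pair) for group, pair in history.items()}
    rates_by_round = []
    for _ in range(rounds):
        snapshot = {}
        for group, (approved, total) in counts.items():
            predicted = approved / total
            snapshot[group] = predicted
            # The prediction becomes the directive: approve everyone in a
            # group the data favours, deny everyone in a group it does not.
            if predicted >= threshold:
                counts[group][0] += applicants_per_round
            counts[group][1] += applicants_per_round
        rates_by_round.append(snapshot)
    return rates_by_round


# Made-up starting data: a modest gap between two groups.
for round_rates in run_feedback_loop({"group_a": (6, 10), "group_b": (4, 10)}, rounds=4):
    print(round_rates)
```

In this sketch a small initial gap widens every round, because the only new data the model ever sees is its own decisions.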

By firing skilled human workers and replacing them with spicy autocomplete, Musk is assuming his final form as both the kind of boss who can be conned into replacing you with a defective chatbot and as the fast-talking sales rep who cons your boss. Musk is transforming key government functions into high-speed error-generating machines whose human minders are only on the payroll to take the fall for the coming tsunami of robot fuckups.

This is the equivalent of filling the American government's walls with asbestos, turning agencies into hazmat zones that we can't touch without causing thousands to sicken and die:

https://pluralistic.net/2021/08/19/failure-cascades/#dirty-data

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/18/asbestos-in-the-walls/#government-by-spicy-autocomplete

Image: Krd (modified) https://commons.wikimedia.org/wiki/File:DASA_01.jpg

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

--

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#reverse centaurs#automation#decision support systems#automation blindness#humans in the loop#doge#ai#elon musk#asbestos in the walls#gsai#moral crumple zones#accountability sinks

277 notes


In case you’re not aware, AI video is considerably more believable than it was even a year ago, which of course doesn’t bode well for image generation either. You can’t rely on heuristics like hands, text, or general off-ness. Though you will still often find tells, it’s more reliable to consider the context around a post than the content itself when you are unsure.

109 notes


Shadows Of Our Past, Present, and (possible) Future — Series

Join the Discord Server! :)

My Hero Academia — Female!OC Fan Fiction on AO3

Part One (Completed — 93k words):

The one where Shota Aizawa stumbles upon a back alley full of stray cats and ends up adopting a child

“Fine, then a cat? We both know how much you love those little furry…things.” At this, Shota paused the game and turned to the pushy blonde next to him. “I actually have considered that.” “And?” “And: also, no. It makes no sense.” Hizashi looked almost scandalized. “Makes no sense?” “I made a pro and contra list.” “Of course you did.”

When underground hero Shota Aizawa, twenty-two years old, is out on patrol one Friday evening, he doesn't expect that a single meow from a cat would lead him to find a homeless girl called Yoru. From then on, Yoru and Shota grow up together, make mistakes together, and try to overcome every obstacle life throws at them.

>> Read on AO3 <<

Part Two (Ongoing, regular updates — growing long fic — 565k words so far — READ PART 1 FIRST, PLEASE AND THANK YOU):

The one where Yoru Aizawa tries to navigate through life at U.A.

Two days after her fifteenth birthday, Yoru decides to drop the bomb on him. “I want to go to U.A.” “You want to go to U.A.” Her Dad puts the book he's been reading down on the glass balcony table. “Yes, I want to go to U.A.” She slumps down on the outdoor couch next to him, grabbing the discarded book. “What are you reading?” ‘A Book of Five Rings by Miyamoto Musashi — The classic guide to strategy ’. She raises an eyebrow. “Reading that for fun, huh?” “Why do you want to go to U.A.? You never cared much about heroes. Besides Edgeshot, that is.” Yoru smirks up at him. “What, jealous?” “As if.” “You know, even if they sold Eraserhead posters, I wouldn’t hang them up. It would be super weird.” “Good to know where your loyalties lie.” He rolls his eyes. “Back to the topic at hand, why do you want to go to U.A.? Because Shinso wants to go?” “No.” Pause. “Okay, that may be part of it. But I’m serious. I’ve been thinking about it for a while now, and I really want to go.” “That might be so, but you still neglected to tell me why you want to attend there.” Yoru plays with her hair, noting how it’s time for another hair cut when she finds some split ends. “I wanna be a hero.” Her Dad blinks. “A hero?” “Yes. Well, I want to help people and do some good with that shitty quirk of mine.”

When Yoru tells her Dad that she wants to attend U.A., she expects it to be a difficult path. She didn't expect all the awkwardness, blossoming friendships, confusing feelings, and near-death experiences, though.

>> Read on AO3 <<

Please heed the warnings/tags (TWs are in the author's notes of the chapters they apply to).

Also: because someone asked this before - you can read it as a reader insert if you want. I don't mind at all. Feel free to imagine yourself as part of the story. Just know that Yoru (the OC) will have descriptions of her visual appearance.

This story is a mix of:

Slice of life

Hurt/Comfort

Angst/Fluff

Humor

Dadzawa

SLOW BURN Romance — Enemies to Lovers (Bakugo x Yoru)

SLOW BURN Romance — EraserMic (but it's a subtle slow burn)

Growing up, coming of age (hopefully lol)

Teenage awkwardness

Mixed media (pictures, music, chat screenshots (later on in Part 2), etc. — chat screenshots will always have the written text below, to make it accessible for visually impaired folks or people who use screen readers)

and more...

Author: NoBecksPleaseNo on AO3

Please don't copy or plagiarize the work, the character, the premise, etc. Also, no cross-posting anywhere, please and thank you.

Disclaimer: Yoru's image is AI generated and then edited/adjusted by the author - I did not know better at the time of making that header picture, and will hopefully get around to re-designing the whole thing at some point. The other character images in the header are from Pinterest (besides the one of Present Mic/Midnight, that one's from the light novels) — unfortunately without a source. If you're the artist, and you're not okay with me using them, please message me and I will remove them. If you're the artist and are okay with me using them, please tell me, so I can credit you.

Besides the OC characters, I don't own any already existing characters from the My Hero Academia Universe — that honor belongs to Kohei Horikoshi.

#bnha#mha#ao3#my hero academia#ao3 fanfiction#my hero academia fanfiction#my hero academia fanfic#fanfic recommendation#bakugo katsuki#katsuki bakugou#bakugo x oc#mha shinsou#hitoshi shinso#hitoshi shinsou#shinsou hitoshi#aizawa#aizawa shota#shota aizawa#shota aizawa fanfiction#bakugo fanfiction#shinso fanfiction#present mic#hizashi yamada#midnight bnha#mha midnight#nemuri kayama#shouta aizawa#mha fanfiction#dadzawa#sppf

287 notes


Hey so, just a friendly reminder. I have not, and will never use generative AI to write my fics.

I've made my stance on the use of generative AI pretty clear: I think it's a huge waste of energy and time, and in its current form, both text and image generation are built off of plagiarism in massive amounts. It's completely unethical, to me, to use these programs in creative works. Especially in creative works you post as yours.

I will continue to never touch these programs for the rest of my creative career. I got this far on my own hard work, and I will continue doing so. I have zero interest in letting a machine do the one thing I find joy in.

I write, both fanfic and original, for the joy of sharing stories. I create to share a bit of myself with others. A machine could never understand that.

It's a losing battle, I know. So much of our writing, our drawings, our own photos, have been fed into these machines. It's becoming inescapable no matter where you look. I continue to post online knowing that with each update, I'm feeding this machine unwillingly. Fanfic is fed directly into character ai, our tweets and blogs are getting scraped, everything. And I desperately want people to understand that if an artist who has been creating for years puts something out that looks kinda like AI, they should stop for a moment and consider: maybe it does because that person's whole archive has been devoured and regurgitated over and over.

Of course some people have pivoted to using it and vigilance is key, but don't just blindly accuse someone without damn good proof to back you up.

#anyways sorry for the rant#someone accused me of using ai in you pay the cost and i got hella salty

78 notes


What an AI generated website can look like

Hey folks! I just encountered a website that's obviously AI generated, so I figured I'd use it as an example to help you spot websites that might be AI generated content farms!

First, the website is called faunafacts.com. And one of the first things that sticks out to me is how low-effort the logo is:

Regardless of whether a website is AI generated or not, a lazy and low-quality logo is a big clue that the website's content will also be lazy and low-quality.

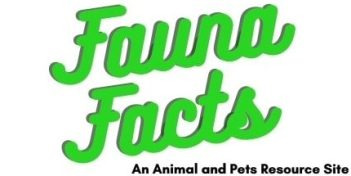

If we click on Browse Animals, we see four options: Cows, Wolves, Bears, and Snakes.

Let's click Wolves.

The first thing I want you to notice is the lack of topical focus. Sure, it's all about wolves, but the content on them is all over the place. We have content on wolf hunting, a page on animals that resemble wolves, pages that explain the alleged social structure of wolves, and pages on wolf symbolism.

A website with content created by real people isn't going to be all over the place like this. It would be created with more of a focus in mind, like animal biology and behavior. The whole spiritual symbol thing here mixed with supposed biological and behavioral information is weird.

The next thing I want you to notice is the links to pages on topics that are quite frankly bizarre: "Wolf vs Mastiff: Things You Need To Know" and "Can You Ride A Wolf? (No, Because...)" Who is even looking for this kind of information in large enough numbers that it needs a dedicated page?

Then of course, there's the fact that they're repeating the debunked wolf hierarchy stuff, which anyone who actually knew anything about wolves at this point wouldn't post.

Now let's look at what's on one of the actual pages. We'll check out the wolves vs. mastiff page, and we can soon find a telltale sign of AI: rambling off topic to talk about something completely unrelated.

Both animals are carnivores. In the wild, wolves hunt large animals like bison, deer, and even elk. Sometimes, they may also hunt small mammals like the beaver.

Mastiffs, on the other hand, are mainly fed with dog food. As a dog, a mastiff left in the wild will eat anything. However, it will have difficulties hunting, as this instinct may have already departed the dogs of today.

A mastiff is not an obligate carnivore. Dogs can eat plant matter. Some say that dogs can survive on a vegetable diet.

Dogs being made vegetarians is a contentious issue. Scientifically, dogs belong to the order Carnivora. There is a movement today to convert dogs to a vegan diet. While science has nothing against it, the fear of many is that when dog owners do this, a vegan diet will certainly have an impact on the species.

This page is supposed to be comparing mastiffs with wolves, but then it starts talking about the vegan pet food movement. This happened because large language models generate text based on what's statistically likely to follow the text they just generated.
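To make that drift concrete, here's a minimal Python sketch of the same idea at toy scale. It is not how a real large language model works internally (those use neural networks over far longer contexts); it's a simple bigram table that only ever looks at the previous word. The tiny corpus and the function names `build_bigram_table` and `generate` are made up for illustration.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, length, seed=0):
    """Build a sentence by repeatedly picking a word that followed the
    previous word somewhere in the corpus. Nothing tracks the topic."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        candidates = table.get(output[-1])
        if not candidates:
            break  # the last word never had a follower in the corpus
        output.append(rng.choice(candidates))
    return " ".join(output)


# Made-up corpus: it starts on wolves, but "dogs" and "eat" lead elsewhere.
corpus = (
    "wolves hunt deer . dogs eat dog food . "
    "dogs can eat plants . some dogs eat a vegan diet ."
)
table = build_bigram_table(corpus)
print(generate(table, "wolves", 12))
```

Because each step only asks "what tends to come next?", a sentence that starts with wolves can wander into dog diets as soon as it hits a word both topics share.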

Finally, the website's images are AI generated:

If you know what to look for, this is a very obviously AI generated image. There's no graininess to the image, and the details are both unnaturally smooth and unnaturally crisp. It also has that high color saturation that many AI generated images have.

So there you go, this is one example of what an AI generated website can look like! Be careful out there!

#lmms#large language model#ai#critical thinking#fake websites#ai generated websites#discernment#recognizing ai

108 notes


Thinking about that "slop accelerationism" post, and also Scott's AI art Turing test.

I also hope AI text- and image-generation will help shake us loose from cheap bad art. For example, the fact that you can now generate perfectly rendered anime girls at the click of a button kind of suggests that there was never much content in those drawings. Though maybe we didn't really need AI for that insight? It feels very similar to that shift in fashion that rejected Bouguereau-style laboriously-rendered pretty girls in favor of more sketchy brush work.

But will we really be so lucky that only things that we already suspected were slop will prove valueless?

As usual with AI, Douglas Hofstadter already thought about this a long time ago, in an essay from 2001. Back in 1979 he had written:

Will a computer program ever write beautiful music? Speculation: Yes, but not soon. Music is a language of emotions, and until programs have emotions as complex as ours, there is no way a program will write anything beautiful. There can be "forgeries"—shallow imitations of the syntax of earlier music—but despite what one might think at first, there is much more to musical expression than can be captured in syntactical rules. There will be no new kinds of beauty turned up for a long time by computer music-composing programs. Let me carry this thought a little further. To think—and I have heard this suggested—that we might soon be able to command a preprogrammed mass-produced mail-order twenty-dollar desk-model "music box" to bring forth from its sterile [sic!] circuitry pieces which Chopin or Bach might have written had they lived longer is a grotesque and shameful misestimation of the depth of the human spirit. A "program" which could produce music as they did would have to wander around the world on its own, fighting its way through the maze of life and feeling every moment of it. It would have to understand the joy and loneliness of a chilly night wind, the longing for a cherished hand, the inaccessibility of a distant town, the heartbreak and regeneration after a human death. It would have to have known resignation and world-weariness, grief and despair, determination and victory, piety and awe. In it would have had to commingle such opposites as hope and fear, anguish and jubilation, serenity and suspense. Part and parcel of it would have to be a sense of grace, humor, rhythm, a sense of the unexpected and of course an exquisite awareness of the magic of fresh creation. Therein, and therein only, lie the sources of meaning in music.

I think this is helpful in pinning down what we would have liked to be true. Because in 1995, somebody wrote a program that generates music by applying simple syntactic rules to combine patterns from existing pieces, and it sounded really good! (In fact, it passed a kind of AI art Turing test.) Oops!

The worry, then, is that we just found out that the computer has as complex emotions as us, and they aren't complex at all. It would be like adversarial examples for humans: the noise-like pattern added to the panda doesn't "represent" a gibbon, it's an artifact of the particular weights and topology of the image recognizer, and the resulting classification doesn't "mean" anything. Similarly, Arnulf Rainer wrote that when he reworked Wine-Crucifix, "the quality and truth of the picture only grew as it became darker and darker"—doesn't this sound a bit like gradient descent? Did he stumble on a pattern that triggers our "truth" detector, even though the pattern is merely a shallow stimulus made of copies of religious iconography that we imprinted on as kids?

One attempt to recover is to say Chopin really did write music based on the experience of fighting through the maze of life, and it's just that philistine consumers can't tell the difference between the real and the counterfeit. But this is not very helpful, it means that we were fooling ourselves, and the meaning that we imagined never existed.

More promising, maybe the program is a "plagiarism machine", which just copies the hard-won grief, despair, world-weariness &c that Chopin recorded? On its own it's not impressive that a program can output an image indistinguishable from Gauguin's, I can write such a program in a single line:

print("https://commons.wikimedia.org/wiki/File:Gauguin,Paul-Still_Life_with_Profile_of_Laval-_Google_Art_Project.jpg")

I think this is the conclusion that Hofstadter leans towards: the value of Chopin and the other composers was to discover the "template" that can then be instantiated to make many beautiful music pieces. Kind of ironically, this seems to push us back to some very turn-of-the-20th-century notion of avant-garde art. Each particular painting that (say) Monet executed is of low value, and the actual valuable thing is the novel art style...

That view isn't falsified yet, but it feels precarious. You could have said that AlphaGo was merely a plagiarism machine that selected good moves from historical human games, except then AlphaGo Zero proved that the humans were superfluous after all. Surely a couple of years from now somebody might train an image model on a set of photographs and movies excluding paintings, and it might reinvent impressionism from first principles, and then where will we be? Better start preparing a fallback philosophy now.

131 notes


Tokyo Debunker WickChat Icons

as of posting this no chat with the Mortkranken ghouls has been released, so their icons are not here. If I forget to update when they come out, send me an ask!

Jin's is black, but not the default icon. An icon choice that says "do not perceive me."

Tohma's is the Frostheim crest. Very official, he probably sends out a lot of official Frostheim business group texts.

Kaito's is a doodle astronaut! He has the same astronaut on his phone case! He canonically likes stars, but I wonder if this is a doodle he made himself and put it on his phone case or something?

Luca's is maybe a family crest?

Alan's is the default icon. He doesn't know how to set one up, if I were to take an educated guess. . . .

Leo's is himself, looking cute and innocent. Pretty sure this is an altered version of the 'DATA DELETED' panel from Episode 2 Chapter 2.

Sho's is Bonnie!!! Fun fact, in Episode 2 Chapter 2 you can see that Bonnie has her name spraypainted/on a decal on her side!

Haru's is Peekaboo! Such a mommy blogger choice.

Towa's is some sort of flowers! I don't know flowers well enough to guess what kind though.

Ren's is the "NAW" poster! "NAW" is the in-world version of Jaws that Ren likes, and you can see the same poster over his bed.

Taiga's is a somewhat simplified, greyscale version of the Sinostra crest with a knife stabbing through it and a chain looping behind it. There are also roses growing behind it. Basically says "I Am The Boss Of Sinostra."

Romeo's is likely a brand logo. It looks like it's loosely inspired by the Gucci logo? I don't follow things like this, this honestly could be his family's business logo now that I think about it.

Ritsu's is just himself. Very professional.

Subaru's is hydrangeas I think! Hydrangeas in Japan represent a lot of things apparently, like fidelity, sincerity, remorse, and forgiveness, which all fit Subaru pretty well I think lol. . . .

Haku's is a riverside? I wonder if this is near where his family is from? It looks familiar, but a quick search isn't bringing anything up that would tell me where it is. . .

Zenji's is his professional logo I guess? The kanji used is 善 "Zen" from his first name! It means "good" or "right" or "virtue"!

Edward's appears to be a night sky full of stars. Not sure if the big glowing one is the moon or what. . . .

Rui's is a mixed drink! Assuming this is an actual cocktail of some sort, somebody else can probably figure out what it is. Given the AI generated nature of several images in the game, it's probably not real lol.

Lyca's is his blankie! Do not wash it. Or touch it. It's all he's got.

Yuri's is his signature! Simple and professional, but a little unique.

Jiro's is a winged asklepian/Rod of Asclepius in front of a blue cross? ⚕ Not sure what's at the top of the rod. A fancy syringe plunger maybe? It's very much a symbol of medicine and healing, so his is also very professional. Considering he sends you texts regarding your appointments, it might be the symbol of Mortkranken's medical office?

These are from the NPCs who were in the "Concert Buds" group chat with the PC! The icons are pretty generic: a cat silhouette staring at a starry sky (SickleMoon), a pink, blue, and yellow gradient swirl (Pickles), a cute panda (Corby), and spider lilies (Mina). Red spider lilies in Japan are a symbol of death--and Mina of course cursed the PC, allegedly cursing them to death in a year.

#tokyo debunker#danie yells at tokyo debunker#not sure if anon meant these or not but here they are anyway lol#tdb ref

219 notes

Text

Finish What You Started 2024 - Event Rules

[ID copied from alt: Event Rules on a blue background. ⬒ No new projects ◨ Any medium and any fandom welcome ⬓ NSFW inclusive, warnings must be tagged ◧ Tag #FinishWhatYouStarted2024; boosting/retweeting starts September 13th ◼ Event ends October 31st, 2024]

The goal of this event is to get things done that you’ve already started. We all have unfinished projects whose incomplete status haunts us. Those are what we want to tackle!

The structure is loose, as this is a multimedia event. While primarily aimed at writers, this event is open to any kind of creative fanwork. Fics, translations, podfics, fanart, animations, cosplay - if you started it and never got it done, it qualifies. There is no sign-up required. I will not assign beta readers for writers, but I can boost requests for those who want them! And I can boost messages of those who would like to beta read.

The mod is a danmei fan mainly, but your work can be any fandom. Maybe something you started before your current fandom excitement took over, or one you keep putting off in favor of compelling new ideas.

Feel free to pass this event info along! The more the merrier!

Further rules and clarifications:

Alt text is very encouraged, especially for boost posts or artwork!

If your work is NSFW, I will only boost it if it has appropriate content warnings. Spoilering images is recommended but not required as long as it’s tagged. Do not letter-swap or abbreviate content warnings. These are so people can mute them as needed. Example: "gore" not "g0re"

Remember Tumblr can mute phrases, but each warning should be its own tag as well as in the body.

Please use genderbend or genderswap for characters not depicted as their canon genders. Example: "#NSFW #genderbend #gore"

Please use Omegaverse or A/O/B for that content. The original letter order is a slur against Aboriginal peoples and will not be tolerated here, even with the slashes. It, like other racial or identity-based slurs, falls under hate speech and is thus not permitted.

This account will post weekly morale-boosting messages and helpful resources. Every Friday, starting September 13th, will be Finished It Friday. All the completed works posted that week will be boosted together in a big thread, so we can celebrate your accomplishment!

Halfway-point check-in is October 1st. Final event deadline is October 31st. The last Finished It Friday is November 1st.

FAQ:

Q: Are original works acceptable?

A: This is primarily a fanwork-focused event. If original work is the only WIP you have to work on, it's certainly fine to work on it during the time frame of the event. If it is posted publicly when finished, you may tag it for boosting.

Q: Are there any restrictions on topics?

A: No, so long as your event # post is properly & fully tagged for content (see rules about tagging above). "Dead Dove" topics are allowed. Some submissions will be 18+. For me, this is less about the content and more about finishing it.

The usual restrictions based on laws and Community Guidelines of course apply, so you may need to tailor how you post to which event space you're interacting with. Twitter, Tumblr, and Discord all have their own rules. There are also some topics that are in poor taste to make fanworks around. The event organizer and mods reserve the right to not boost your work if they decide it is rage-baiting or trolling. They are not responsible for negative reactions to your works. Please be respectful of those you share a digital space with.

Keep in mind that when I link to your finished work during a Finished It Friday, it may reach a wider audience than you may be used to.

Q: Are there any restrictions on media that can be submitted?

A: Machine Generated or "AI" images and writing are not permitted. If you are found in violation of this rule, you will be removed from the event. All images, writing, or other works must be your own.

This is a positive, shared space. Do not belittle other creators' medium of choice. Please no fandom/character/ship/creator bashing, and don't berate artists or authors for not being done with something, even if they don't finish by the end of the event. Also, please don't passive-aggressively send this event to the author of an unfinished fic you want to see done faster. 😥 Be cool, respect each other, and keep any interpersonal disagreements to your own tumblrsphere.

All posts and boosts will be crossposted to the event Twitter (finishwatustart) and Discord. Expanded rules, explanations, and Dead Dove guidelines can also be found on the Discord. (invite link in pinned post)

Fics can be posted to the AO3 collection (archiveofourown(dot)org /collections /FinishWhatYouStarted2024_Fall)

Work-in-progress posts should be tagged #FinishWhatYouStarted2024 . If you complete a wip within the event, tag it #IFinishedWhatIStarted2024 for boosting so we can all celebrate!

Find more information and community on the Discord, if you want! Joining the Discord is not required for the event. As always, if you have questions, don't hesitate to reach out!

118 notes

Note

hey what’s up, i think you’re pretty cool but disagree with you on the whole ai can make art thing. to me, without the purpose from an actual person creating the piece, it’s not art but an image; as all human art has purpose. some driving factor in a work, compared to a program which purely creates the prompt without further intention. i was wondering what your insight on this is? either way, hope you have a great day

well, first of all, does art require 'purpose'? there's this view of art which has very much calcified in "anti-AI" rhetoric, that art is some linear process of communication from one individual to another: an Artist puts some Meaning into a unit of Art, which others can then view to Receive that Meaning. you can hold this view, but i don't! i'm much more of a stuart hall-head on this, i think that there is no such transfusion of Intent and that rather the 'meaning' of a piece is something that exists only in the interplay between text and reader. reading is an active, interpretative process of decoding, not a passive absorptive one. so i dispute, firstly, that 'purpose' is to begin with a necessary or even important element of art.

moreover i think this argument rests on a very arbitrarily selective view of what counts as "an actual person creating the piece" -- 'the prompt' is, itself, an obvious artistic contribution, a place where an artist can impart huge amounts of direction, vision, and so on. in fact, i completely reject the claim of both the technology's salesmen and its biggest detractors that genAI "makes art" -- to quote kerry mitchell's fractal art manifesto: "Turn a computer on and leave it alone for an hour. When you come back, no art will have been generated." in the past, i've posed questions about generative art pieces to demonstrate this

secondly, of course, the process does not end after image generation from prompt for serious generative artists--the ones who are serious about the artform (rather than tech guys trying to do marketing for the Magical Art Box) frequently iterate and iterate, generating a range of iterations and then picking one to iterate on further, so on and so forth, until the final image they choose to share is one that contains within it the traces of a thousand discrete choices on behalf of the artist (two pretty good explanations of this from people who actually do this stuff can be found here and here)

third and finally, that very choice to share the image is itself an artistic decision! we (and by we, i mean, anyone who cares about what art is) have been talking about this since fountain -- display is a form of artistic intent, taking something and putting it forward and saying 'this is art' is in and of itself an artistic decision being made even if the thing itself is unaltered: see, for example, the entire discipline of 'found art'. once someone challenged me, yknow, "if you did a google search, would that be art?" and my answer to that is, if you screenshot that google search and share it as art, then yes, resoundingly yes! curation and presentation recontextualizes objects, turning them into rich texts through the simple process of reframing them. so even if you granted that genAI output is inherently random computer noise (i don't, of course) -- i still think that the act of presenting it as art makes it so.

since i assume you're not familiar with anything interesting in the medium, because the most popular stuff made with genAI is pure "lo-fi girl in ghibli style" type slop, let me share some genAI pieces (or genAI-influenced pieces) that i think are powerful and interesting:

the meat gala, rob sheridan (warning: body horror!)

secret horses (does anyone know the original source on this?)

infinite art machine, reachartwork

ethinically ambigaus, james tamagotchi

mcdonalds simpsons porn room, wayneradiotv

software greatman, everything everything (the music is completely made by the band, but genAI was partially responsible for the lyrics -- including the title and the several interesting pseudo-kennings)

i want a love like this music video, everything everything

cocaine is the motor of the modern world, bots of new york

poison the walker, roborosewatermasters (here's my analysis posts on it too)

not all of these were necessarily intended as art: but i think they are rich and fascinating texts when read that way -- they have certainly impacted me as much as any art has.

anyways, whether you agree or not, i hope this gives you some stuff to think about, thanks for sharing your thoughts :)

747 notes

Text

oh no she's talking about AI some more

to comment more on the latest round of AI big news (guess I do have more to say after all):

chatgpt ghiblification

trying to figure out how far it's actually an advance over the state of the art of finetunes and LoRAs and stuff in image generation? I don't keep up with image generation stuff really, just look at it occasionally and go damn that's all happening then, but there are a lot of finetunes focusing on "Ghibli's style" which get it more or less well. previously on here I commented on an AI video generation model that patterned itself on Ghibli films, and video is a lot harder than static images.

of course 'studio Ghibli style' isn't exactly one thing: there are stylistic commonalities to many of their works and recurring designs, for sure, but there are also details that depend on the specific character designer and film in question in large and small ways (nobody is shooting for My Neighbours the Yamadas with this, but also e.g. Castle in the Sky does not look like Pom Poko does not look like How Do You Live in a number of ways, even if it all recognisably belongs to the same lineage).

the interesting thing about the ghibli ChatGPT generations for me is how well they're able to handle simplification of forms in image-to-image generation, often quite drastically changing the proportions of the people depicted but recognisably maintaining correspondence of details. that sort of stylisation is quite difficult to do well even for humans, and it must reflect quite a high level of abstraction inside the model's latent space. there is also relatively little of the 'oversharpening'/'ringing artefact' look that has been a hallmark of many popular generators - it can do flat colour well.

the big touted feature is its ability to place text in images very accurately. this is undeniably impressive, although OpenAI themselves admit it breaks down beyond a certain point, creating strange images which start out with plausible, clean text and then it gradually turns into AI nonsense. it's really weird! I thought text would go from 'unsolved' to 'completely solved' or 'randomly works or doesn't work' - instead, here it feels sort of like the model has a certain limited 'pipeline' for handling text in images, but when the amount of text overloads that bandwidth, the rest of the image has to make do with vague text-like shapes! maybe the techniques from that anthropic thought-probing paper might shed some light on how information flows through the model.

similarly the model also has a limit of scene complexity. it can only handle a certain number of objects (10-20, they say) before it starts getting confused and losing track of details.

as before when they first wired up Dall-E to ChatGPT, it also simply makes prompting a lot simpler. you don't have to fuck around with LoRAs and obtuse strings of words, you just talk to the most popular LLM and ask it to perform a modification in natural language: the whole process is once again black-boxed but you can tell it in natural language to make changes. it's a poor level of control compared to what artists are used to, but it's still huge for ordinary people, and of course there's nothing stopping you popping the output into an editor to do your own editing.

not sure what architecture they're using in this version, if ChatGPT is able to reason about image data in the same space as language data or if it's still calling a separate image model... need to look that up.

openAI's own claim is:

We trained our models on the joint distribution of online images and text, learning not just how images relate to language, but how they relate to each other. Combined with aggressive post-training, the resulting model has surprising visual fluency, capable of generating images that are useful, consistent, and context-aware.

that's kind of vague. not sure what architecture that implies. people are talking about 'multimodal generation' so maybe it is doing it all in one model? though I'm not exactly sure how the inputs and outputs would be wired in that case.

anyway, as far as complex scene understanding: per the link they've cracked the 'horse riding an astronaut' gotcha, they can do 'full glass of wine' at least some of the time but not so much in combination with other stuff, and they can't do accurate clock faces still.

normal sentences that we write in 2025.

it sounds like we've moved well beyond using tools like CLIP to classify images, and I suspect that glaze/nightshade are already obsolete, if they ever worked to begin with. (would need to test to find out).

all that said, I believe ChatGPT's image generator had been behind the times for quite a long time, so it probably feels like a bigger jump for regular ChatGPT users than the people most hooked into the AI image generator scene.

of course, in all the hubbub, we've also already seen the white house jump on the trend in a suitably appalling way, continuing the current era of smirking fascist political spectacle by making a ghiblified image of a crying woman being deported over drugs charges. (not gonna link that shit, you can find it if you really want to.) it's par for the course; the cruel provocation is exactly the point, which makes it hard to find the right tone to respond. I think that sort of use, though inevitable, is far more of a direct insult to the artists at Ghibli than merely creating a machine that imitates their work. (though they may feel differently! as yet no response from Studio Ghibli's official media. I'd hate to be the person who has to explain what's going on to Miyazaki.)

google make number go up

besides all that, apparently google deepmind's latest gemini model is really powerful at reasoning, and also notably cheaper to run, surpassing DeepSeek R1 on the performance/cost ratio front. when DeepSeek did this, it crashed the stock market. when Google did... crickets, only the real AI nerds who stare at benchmarks a lot seem to have noticed. I remember when Google releases (AlphaGo etc.) were huge news, but somehow the vibes aren't there anymore! it's weird.

I actually saw an ad for google phones with Gemini in the cinema when i went to see Gundam last week. they showed a variety of people asking it various questions with a voice model, notably including a question on astrology lmao. Naturally, in the video, the phone model responded with some claims about people with whatever sign it was. Which is a pretty apt demonstration of the chameleon-like nature of LLMs: if you ask it a question about astrology phrased in a way that implies that you believe in astrology, it will tell you what seems to be a natural response, namely what an astrologer would say. If you ask if there is any scientific basis for belief in astrology, it would probably tell you that there isn't.

In fact, let's try it on DeepSeek R1... I asked an astrological question and got an astrological answer with a really softballed disclaimer:

Individual personalities vary based on numerous factors beyond sun signs, such as upbringing and personal experiences. Astrology serves as a tool for self-reflection, not a deterministic framework.

Ask if there's any scientific basis for astrology, and indeed it gives you a good list of reasons why astrology is bullshit, bringing up the usual suspects (Barnum statements etc.). And of course, if I then explain the experiment and prompt it to talk about whether LLMs should correct users with scientific information when they ask about pseudoscientific questions, it generates a reasonable-sounding discussion about how you could use reinforcement learning to encourage models to focus on scientific answers instead, and how that could be gently presented to the user.

I wondered what would happen if I'd asked it instead to talk about different epistemic regimes and come up with reasons why LLMs should take astrology into account in their guidance. However, this attempt didn't work so well - it started spontaneously bringing up the science side. It was able to observe how the framing of my question with words like 'benefit', 'useful' and 'LLM' made that response more likely. So LLMs infer a lot of context from framing and shape their simulacra accordingly. Don't think that's quite the message that Google had in mind in their ad though.

I asked Gemini 2.0 Flash Thinking (the small free Gemini variant with a reasoning mode) the same questions and its answers fell along similar lines, although rather more dry.

So yeah, returning to the ad - I feel like, even as the models get startlingly more powerful month by month, the companies still struggle to know how to get across to people what the big deal is, or why you might want to prefer one model over another, or how the new LLM-powered chatbots are different from oldschool assistants like Siri (which could probably answer most of the questions in the Google ad, but not hold a longform conversation about it).

some general comments

The hype around ChatGPT's new update is mostly in its use as a toy - the funny stylistic clash it can create between the soft cartoony "Ghibli style" and serious historical photos. Is that really something a lot of people would pay for an expensive subscription to access? Probably not. On the other hand, the models' programming abilities are increasingly catching on.

But I also feel like a lot of people are still stuck on old models of 'what AI is and how it works' - stochastic parrots, collage machines etc. - that are increasingly falling short of the more complex behaviours the models can perform, now that prediction combines with reinforcement learning and self-play and other methods like that. Models are still very 'spiky' - superhumanly good at some things and laughably terrible at others - but every so often the researchers fill in some gaps between the spikes. And then we poke around and find some new ones, until they fill those too.

I always tried to resist 'AI will never be able to...' type statements, because that's just setting yourself up to look ridiculous. But I will readily admit, this is all happening way faster than I thought it would. I still do think this generation of AI will reach some limit, but genuinely I don't know when, or how good it will be at saturation. A lot of predicted 'walls' are falling.

My anticipation is that there's still a long way to go before this tops out. And I base that less on the general sense that scale will solve everything magically, and more on the intense feedback loop of human activity that has accumulated around this whole thing. As soon as someone proves that something is possible, that it works, we can't resist poking at it. Since we have a century or more of science fiction priming us on dreams/nightmares of AI, as soon as something comes along that feels like it might deliver on the promise, we have to find out. It's irresistible.

AI researchers are frequently said to place weirdly high probabilities on 'P(doom)', that AI research will wipe out the human species. You see letters calling for an AI pause, or papers saying 'agentic models should not be developed'. But I don't know how many have actually quit the field based on this belief that their research is dangerous. No, they just get a nice job doing 'safety' research. It's really fucking hard to figure out where this is actually going, when behind the eyes of everyone who predicts it, you can see a decade of LessWrong discussions framing their thoughts and you can see that their major concern is control over the light cone or something.

#ai#at some point in this post i switched to capital letters mode#i think i'm gonna leave it inconsistent lol

34 notes

Text

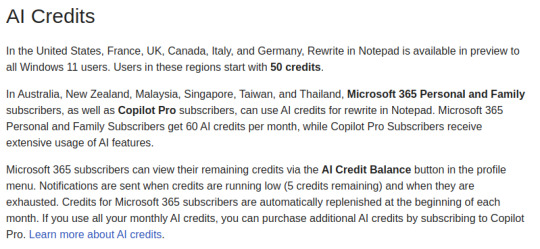

Classic Windows apps like Paint and Notepad are also getting LLM (Generative AI) support. There is no reason whatsoever that Notepad should do more than text editing. Microsoft has gone crazy with these tools. Of course, as part of these tools, your text in Notepad is sent to Microsoft under the name of Generative AI, which then sells it to the highest bidder. I'm so sick of Microsoft messing around with working tools, and yes, you need a stupid Copilot subscription called "Microsoft 365 Personal and Family Subscribers" for Notepad. It is a pain now. So, stick with FLOSS tools and OS and avoid this madness. Keep your data safe.

You need stupid AI credits to use basic tools.

In other words, you now need AI credits to use basic tools like Notepad and Paint. It's part of their offering called 'Microsoft 365 Personal and Family' or 'Copilot Pro'. These tools come with 'Content Filtering,' and only MS-approved topics or images can be created with their shitty AI that used stolen data from all over the web. Read for yourself: Isn't AI wonderful? Artists and authors don't get paid, now you've added censorship, and the best part: you need to pay for it!

GTFO, Microsoft.

103 notes

Text

New tactical card icon (used with permission) by @flammabel. Thank you!

The prompt list for Cal Kestis Week 2024!

This event will run May 12 - May 18, 2024

Each prompt was carefully curated to work for a wide range of genres (excluding "Bad Ending," I feel like that speaks for itself.) The categories are "single word," "dialogue," "vibe," and "Star Wars."

No AI generated text or images will be promoted during this event.

All ships, genres, ratings, and formats are welcome just as long as they’re Cal-centric!

Any previews of art, fic, or other media will only be reblogged if they are SFW.

All works must be tagged with the appropriate ships and warnings.

If you are posting fanfiction on tumblr, any words beyond the first 500 must be under “read more.”

Works posted on tumblr will only be found/reblogged if they use the tag #calkestisweek2024 - that is the only tag being tracked.

FAQ Ao3 collection ask box

Click Read More for a text version of the prompt list.

Sunday (May 12) – Touch | “You’re doing great!” | But First They Have To Catch You | Lightsaber

Monday (May 13) – Food | “That doesn’t look good.” | A Rigged Game | Bogling

Tuesday (May 14) – Alone | “I don’t know anymore.” | Hope is a Skill | Droid

Wednesday (May 15) – Scars | “We shouldn’t be doing this.” | Bad Ending | Ponchos

Thursday (May 16) – Fall | “Of course it’s you.” | The Sun Comes Up Again | The Force

Friday (May 17) – Laugh | “Follow my lead.” | A Different Choice | Echo

Saturday (May 18) – Free Day! | Catch Up Day!

163 notes
