#because plot is a generic function that is implemented differently for each object

Explore tagged Tumblr posts

Text

don't get me started on R prev

ridiculous language everyday I want to punch this language in the face

i think everyone should program at least once just so you realise just how fucking stupid computers are. because theyre so fucking stupid. a computer wants to be told what to do and exactly that and if you make one typo or forget one detail it starts crying uncontrollably

#I not only work in R full time but I also teach it#did you know R has like three and a half different ways it implements object orientation?#and it's not easy to use any of them? because the language itself is not object oriented obviously#BUT there are classes and polymorphism implemented in the r base#which you quickly figure out when you lookup the help for the plot function and learn absolutely nothing#because plot is a generic function that is implemented differently for each object#of course#it is of course a more modern and updated language than C (or S). but at what cost?#everyday I find out that it's doing something that never in a million lifetimes I could predict

27K notes

·

View notes

Text

5 best programming language for data science beginners

What are programming languages?

Programming languages, simply put, are the languages used to write the lines of code that make up software programs. These lines of code are digital instructions, commands, and other syntax that are translated into digital output.

There are 5 main types of programming languages:

Procedural programming language

Functional programming language

Object Oriented Programming Language

Script programming language

Logic programming

Each of these types of programming languages performs different functions and has specific advantages and disadvantages.

Python

Python has grown in popularity in recent years, ranking first in several programming language popularity indexes, including the TIOBE index and the PYPL index. Python is an open-source, general-purpose programming language that is widely applicable not only in the data science industry but also in other domains such as web development and game development.

Best used for: Python is best used for automation. Task automation is extremely valuable in data science and will ultimately save you a lot of time and provide you with valuable data

2. R

R is quickly rising up the ranks as the most popular programming language for data science, and for good reason. R is a highly extensible and easy-to-learn language that creates an environment for graphics and statistical computing.

All this makes R an ideal choice for data science, big data and machine learning.

R is a powerful scripting language. Since this is the case, it means R can handle large and complex data sets. This, combined with its ever-growing community, makes it a top-tier choice for the aspiring data scientist.

Best used for: R is best used in the data science world. It is especially powerful when performing statistical operations.

MATLAB

MATLAB is a very powerful tool for mathematical and statistical computing, which allows the implementation of algorithms and the creation of user interfaces. Creating UIs is especially easy with MATLAB because of its built-in graphics for plotting and data visualization.

This language is particularly useful for learning data science, it is primarily used as a resource to accelerate data science knowledge. Because of the deep learning toolbox functionality, learning Matlab is a great way to easily transition into deep learning.

Best Used For: MATLAB is commonly used in academia to teach linear algebra and numerical analysis.

SAS

SAS is a tool used primarily to analyze statistical data. It literally means statistical analysis system. The primary purpose of SAS is to retrieve, report, and analyze statistical data.

SAS may not be the first language you learn, but for beginners, knowing SAS can open up many more opportunities. It will help you a lot if you are looking for a job in data management.

Best Used For: SAS is used for machine learning and business intelligence with tools on your belt such as predictive and advanced analytics.

SQL

SQL is a very important language to learn to become a great data scientist. This is very important because data scientists need SQL to process data. SQL gives you access to data and statistics, making it a very useful resource for data science.

Data science requires a database, hence the use of a database language such as SQL. Anyone working with big data needs to have a solid understanding of SQL to be able to query databases.

Best used for: SQL is the most widely used and standard programming language for relational databases.

#machinelearning#datascience#datascienceassignment dataAssignmenthelp#pythonprogramming#Datascienceprogrammng#datsciencehomeworkhelp#DataScienceassignmenthelp

0 notes

Text

OUAT 2X14 - Manhattan

I don’t have a pun for this time, but I wanted to say that this is probably one of the episodes that I was the most excited to cover for this rewatch for a few reasons. First, I haven’t watched it since my initial watch of the series so apart from the broad strokes of the story, I’ve forgotten a great deal of it. Second, it’s one of the biggest and best received episodes of the season from a General Audiences standpoint from what I understand. Third, I’ve never had a real opinion on Neal because I binged Seasons 2 and 3, so this episode will provide me the opportunity to do just that! Finally, it takes place in New York and who doesn’t love New York!

...Don’t answer that! Anyway, I hope you’ll read my review of this episode which is just under the cut, so I’ll CUT to the chase. Ha! Turns out I did have a pun in me! Okay! Let’s get started!

Press Release While Mr. Gold, Emma and Henry go in search of Gold’s son Bae in New York, Cora, Regina and Hook attempt to track down one of Rumplestiltskin’s most treasured possessions. Meanwhile, in the fairytale land that was, Rumplestiltskin realizes his destiny while fighting in the Ogres War. General Thoughts - Characters/Stories/Themes and Their Effectiveness Past We gotta talk about the forking Seer and how she relates to Rumple. First, on a strictly aesthetic level, look at the way that the Seer moves her hands as she asks for water! It looks a bit like how Rumple moves his hands when he gets the Seer’s powers. Also, even her voice is sing-songy in the scene as per the captions, matching Rumple’s. Second, on a more narrative level, it’s really interesting to examine just how much of the Seer ended up becoming part of Rumple. When we see Rumple first become the Dark One, while manipulative at times, because said manipulation happens with Bae as a child, it’s played as more of manipulation that any authority figure could conceivably do cranked up to 11. And when he’s not with Bae and he’s dealing with others, he’s blunter, not as cunning as he grows to be later. But the Seer, like him in later flashbacks, picks upon more vulnerable parts of Rumple’s psyche, like how she brings up Milah and Rumple’s fears of his past and cowardice.

So I know that there are some complaints about Rumple’s discussed reasoning for turning back from the Ogres Wars was changed to being about Bae to about Rumple’s cowardice, and I actually couldn’t disagree more. This entire flashback’s setup isn’t about Rumple’s excitement to be a father, but about how he is more scared than he realizes of fighting and dying in the war. That’s how, as I mentioned before, the Seer initially gets him: by mentioning his father’s cowardice and his desire to stray away from that path. In the next flashback scene, Rumple shows much more explicit fear at those harmed in the war and one of the most poignant lines from that scene is about praying for a quick death and the final words he says to what he thinks is the Seer is “and I’m gonna die.” I honestly feel like this was a revelation that was always supposed to come out. It doesn’t lessen Rumple’s love for Bae or that that loves is any less powerful, for he wants to live for Bae, but from a story perspective, the main throttle of Rumple’s decision to harm himself does lean more towards cowardice. Hell, even Milah hits the ball on the head: “You left because you were AFRAID.”

“It will require a curse -- a curse powerful enough to rip everyone from this land.” Note that the Seer says this as Rumple’s asking for the truth about him finding his son to be revealed. I feel like people forget about this line and how it pertains to Rumple’s journey back to Bae. Many in the fandom (Myself included) mock Rumple for needing a curse to traverse realms while there are many other ways to travel them as revealed over the course of the series. Now, I get that yeah, to an extent, that’s true. New magical MacGuffins are introduced so that new characters can be introduced and so that we can see our current cast battle the fairytale elements with their modern mindsets and emotional problems (I personally find it more annoying when it’s mocked to the point where it’s used as an actual story critique, forking Cinema Sins and the mentality they’ve introduced for many who criticise films over minute details rather than how the work functions as a story -- this is why you will never see me take a point off for a plot hole). But back on topic, Rumple was told by both the Blue Fairy and now the Seer that he’d need a curse to get back to Bae, and so he kept that in mind. Present I know that a major point of contention is Emma not telling Neal about Henry when he brought up the idea of something good coming of their relationship, and I think it’s more of a complicated situation, one akin to both her initial lie to Henry in “True North” and her decisions in “Fruit of the Poisonous Tree,” where to say that something is objectively right or wrong is missing the point. Yes, Emma shouldn’t have lied and the episode is very explicit with how that was the wrong decision. However, look at what she’s dealing with. A vulnerable time in her life is now being further bastardized with the knowledge that it was all a conspiracy and while I like Neal, he didn’t exactly broach the subject with tactful bedside manner, instead trying to rationalize something so personal and painful to her. Also, I want to point out how Emma on some level knows this. That’s why she calls Mary Margaret in the very next present scene. But she doesn’t do the right thing. Look, this isn’t the easiest episode to be an Emma fan during, and I know that well. And I swear, I’m doing my best to keep my fan goggles off, but I’m not going to pretend that it’s not a nuanced situation when it is. And finally, Emma is chewed out for her decision. Henry gives her a “Reason You Suck Speech,” calling her just as bad as Regina, a line that hurts but is justified and given with an appropriate level of painfulness from an eleven year old. And even her initial apology isn’t enough.

So, that first confrontation between Rumple and Neal. Wow. What I like about Neal as he pertains to Rumple is that he immediately gives Rumple no leniency. I talked about this briefly during my review of “The Return,” but this is such an important distinction to Rumple’s other biggest loved one, Belle, who has somewhat looser parameters. From the second Neal sees Rumple again, he’s blunt about his intentions and exactly what he thinks about what Rumple’s capable of. I don’t want to say that there’s no love there, but it is pushed back in terms of Neal’s priorities, buried under decades of bitterness. And at the same time, while full of love, Rumple is still using his old tricks to get Neal to talk to him. He leverages his deal with Emma for more time to talk to him and while it works, it only serves to get more ire out of Neal. Rumple’s apology is likewise undeniably sincere, but the manner in which it is both gotten and attempted to be implemented completely miss the point that the anger produced can’t be healed so easily. I mean, just look at Neal’s face when he says that there’s magic in Storybrooke. Every benefit of every doubt is abandoned like the Stiltskin boys across portals. That having been said, with three minutes of time for an apology, I feel like we almost got more out of Rumple’s apology to August in “The Return” than we did here. Where are the tears? Why isn’t Rumple saying as much as he possibly can? It’s not enough to take points off or anything, but this meeting is partially what the first season and a half were building towards, and I kind of wanted more umph to it. That also having been said, I get that because Neal is a different person from August’s rendition of him entirely, of course the reactions are going to be different, and Neal’s speech after he’s done talking blows the conversation away. Credit to Michael Raymond James because in this scene, he completely kept up with Robert Carlyle, and that’s not always an easy feat, especially in such an emotionally charged scene. Insights - Stream of Consciousness -It is so bizarre to see Rumple and Milah happy. It’s a great contrast to how bad things got between them and is a great show of how Rumple’s cowardice really affected Milah, turning her from someone who looked so content into the miserable woman from the flashback in “The Crocodile.” All throughout the scene, they’re so dopey-eyed and in-sync towards the end. I honestly would love to read a fic where they managed to come to terms with their past and maybe be able to forgive each other, and I know I’m alone in this, but there is a story there. -”My weaving days are over.” *thinks about how in roughly 250 years, he goes by Weaver* Suuuuuure, Rumple. -Okay, seeing an adorably excited non-Dark One non-present timeline Rumple is just the best. Robert Caryle really shows Rumple’s youth here, excited, bouncy, full of music and light. It’s an honest job and plays against the cowardly spinster we see in “Desperate Souls,” the blunter Mr. Gold and the silly, but frightening Dark One version of Rumple, it makes for such a unique contrast! -Milah also gets such a unique contrast in another respect, being the more cautious half of the relationship compared to how she is after Rumple arrives home. -”But to the world?” So, when in the series finale, the idea of “the Rumplestiltskin the world will remember” came up and kept being echoed like it was this important thing, I felt that it kind of came out of nowhere because I hadn’t ever seen Rumple concerned with his legacy beyond the more isolated well being of his children, grand and great-grandchildren, and wife. However, as I hear this line, it makes a bit more sense to me, especially because this is the same episode that discussed the Henry prophecy, which was also touched upon in the series finale. -So, Rumple does that bug-eyed thing that I complained about in the last episode, but here, because the confrontation between Rumple and Bae is impending and is isolated as the main reason for his concern, it works sooo well! -Killian, thank you for breaking up that horrible mother/daughter moment! -”Names are what I traffic in, but sadly, no.” This line cracked me up! XD -”I’m not answering anything until you tell me the truth.” That’s a pretty solid rule of thumb, Emma. Neal’s definitely no villain, but just going forward, that’s a good mindset to have! -”I am the only one allowed to be angry here!” She’s got a point, Neal. You’re not really explaining yourself in a way that you’d be justified in being angry. -I love spotting bloopers as they’re happening. It’s like the OUAT version of finding Hidden Mickeys! -”My son’s been running away for a long time now.” When?! He ran away ONCE and he wasn’t even trying to run from you. He was trying to take you with him, in fact! Did you forget that?! -Henry and Rumple get a great scene! -I know you’re Baelfire.” Fun fact, last year at NJ con, I got this question wrong in the true/false game. But now I know the truth, and I’m coming for you again, Jersey! -Gotta give all the credit in the world to Jennifer Morrison here. There’s so much pain in her voice as Neal’s revealing the truth to Emma and Jen just captures how Emma’s barely holding her shirt together because now even more of her life has been shown to be a lie, and this time, a more vulnerable memory has been made even worse because of this new knowledge. -”To remind myself never to trust someone again.” That is such a tragic line. Even as Storybrooke has done a major job of changing Emma’s mindset in that regard, you do still see bits of that distrust in her personality. That’s why I like the concept of Emma’s walls being a constant in the series. -”You’ll never have to see me again.” Neal, you do know that your father is clearly still chasing you, right? You think he’s gonna give up so soon? -I like how as Rumple agreeing to watch the Seer, you can already see that his face has fallen and that he’s grown more haggard, showing some of that fear already striking him now that some of the initial adrenaline has worn off and the reality of the war is settling in. -”Who are you?” Jeez Belle, why not say “hi” like a decent person? -Regina, you know, instead of playing The Sorcerer’s Apprentice, it could be easier to, you know, look in her bag instead. -David, Thanksgiving with your family would be the best thing EVER! -Apparently, Bae learned to be a locksmith from Rumple. Neat! -Another great use of the weather from OUAT! The snow really helps to accentuate the dire straits of the war and is just adds a nice bit of texture to the scene so that it’s not just dirt against a night sky. -On the opposite end, Rumple hurting himself, especially as someone who is overcoming some serious orthopedic issues right now, is so uncomfortable to watch. Rumple’s screams actually gave me shudders. -As if I didn’t have enough reasons to praise the living daylights out of Robert Carlyle, just look at the moments when he enters Neal’s apartment. It’s the first glimpse that he gets of everything his son went through as a result of his actions and it’s subtly heartbreaking. -To add to this Robert Carlyle acting chain, his eyes as he screams “tell me” is forking hysterical! -Rumple’s splint makes me so uncomfortable. Go see a better doctor! -”A strong name!” Rumple straight up indignation as he says that cracks me the fork up! -I like Milah’s buildup of frustration as Rumple arrives home. At first, she’s almost smiling as she tells Rumple Bae’s name, but as she quickly confronts him and learns the facts, she gets angrier until we see the beginnings of the misery that sets off “The Crocodile.” I also want to note that Milah’s anger is for Bae’s sake, not for her own like in “The Crocodile,” and I think that is such an important distinction. A lot of people condemn Milah for her choice to leave Bae and my degree of agreement with that statement varies if you’re asking me to view it in terms of her choice as an individual vs comparisons with other characters, but I think it’s important to show that love for Bae. -forking hell. Die, Cora! -I think I do enough of a job complimenting the effects team to be able to laugh at the New York backdrop during Emma and Henry’s conversation. -I ADORE the design of the Seer, by the way. The stitched up face and the eyeballs on her hands is just so cool! -It’s interesting to note that the last scene of the flashback happens after the events of “The Crocodile’s” flashback, as Rumple states that his wife ran away, and not “died.” -Rumple, to quote a magnificent show of great quality, “If you could gaze into the future, you’d think that life would be a breeze, seeing trouble from a distance, but it’s not that easy. I try to save the situation, then I end up misbehaving. Oh-oh-o-o-o-oh!” (I’ll write a ficlet for the first person to tell me what I’m referencing). -”Okay. I get it. We’re all messed up.” *Takes a deep breath* Ookay, Neal. You sent Emma to jail, and while it may have helped break the curse, it also put her through some serious shirt. You don’t get to make light of that. -”In time, you will work it all out.” Yeah, about 250 years, but he does get there, and it’s pretty freakin’ awesome when he does! Arcs - How are These Storylines Progressing? Rumple finding his son - I probably should’ve listed this as an arc long ago, but I forgot. In any case, Rumple finally found him! The journey from the start of the series here was a fantastically well done one! I feel like it never dragged or took any longer or shorter than the season and a half that it ended up lasting. And now, it kind of gets a second life. Rumple is now physically with his son, but emotionally couldn’t be further from him, and we get to see Rumple trying to bridge that gap. I don’t remember liking this part of it, but on concept alone, it’s so fascinating to see that next step. Emma lying to Henry - I like that Emma gets to have a flawed moment with Henry and that Henry actually reacts to it so negatively. For a season and a half now, Emma’s been Henry’s hero so of course when she not only lies, but to such an extent, he’s going to have a bad reaction because he’s put her on a pedestal. Not only is it an interesting character moment for Henry, but as I mentioned before, it’s a good job on the narrative’s part in punishing Emma for her lie. Favorite Dynamic Rumple and Henry. To be honest, Neal and Rumple should absolutely go here, but their entire conversation is more story based, and I talked about them ad nauseum up in that category, so why not highlight another dynamic? Rumple and Henry are so supportive and kind to each other here, and it feels like both good foreshadowing of their familial relationship, a show of the progress both characters had made thus far when it comes to how they treat their loved ones, and a tragic setup for not only the let down they both get from their respective loved ones, but also of the prophecy. For the latter one, for most of he episode, it felt a little weird seeing Rumple talk to Henry so softly despite knowing the prophecy. It felt a bit like him raising a pig for slaughter. However, the end of the episode makes it clear that Rumple was just now remembering the prophecy as he watched Neal and Henry bond, and it works well enough for me. Their time together in the episode is just so gentle and in an episode that’s more or less full of harsh moments (those gentle moments included in hindsight), the break that Henry and Rumple give is desperately needed. Writer Adam and Eddy are really good at writing intricate storylines. When you look at their other episode like the “Pilot,” “A Land Without Magic,” and “The Queen of Hearts,” you notice that the situation the characters are put into are never so simple. Just like someone can’t or shouldn’t be expected to straight-up hate Regina in the “Pilot,” one can’t or shouldn’t be expected to hate Emma, Neal, Henry, or Rumple here (Except Cora. We can hate Cora allllll we want), no matter who you’re a fan of. That’s because they’re careful with their framing and character work as to never let one forget their full picture. And I think that holds especially true in “Manhattan.” Culture In my intro, I said I was excited to finally get an impression of Neal for myself. When you’re in a certain shipping camp like I am (Captain Swan), Neal tends to be thrown through the ringer. Hell, even my best friend in the fandom hates him. However, when you’re as anti-salt as I am, you tend to take a lot of the shirt thrown at him with a grain of...well, salt. This is part of the reason why this rewatch appealed to me so much. I always found Neal to be pretty average in my book. I remember liking him, but not having much of a reaction to either his actions or his death in Season 3 (I also feel like I should disclose the fact that I wasn’t in either of the shipping camps throughout Neal’s entire present existence on the show), and I feel like I’d be remiss not to talk about him a bit now, especially as this is his debut present episode and affords him the most perspective.

So here goes.

I like Neal. I don’t love him. If you asked me to line up every character in the show, he’d probably end up near August, and I like August too, though not as much as major characters like Rumple, Regina, and Emma.

What’s appealing to me about Neal is his non-exaggerated blunt personality. The way he curb stomps Rumple’s apology is so in-your-face, as if to scream to an audience that already finds sympathy for Rumple that his pain matters too and it will be paid attention to. This works by keeping him a sympathetic character, but also giving him a compelling dynamic. As for Emma, that bluntness also helps, but in a way that makes Emma more sympathetic. I mentioned before that Neal’s exposition about his part in the conspiracy of sending Emma to jail was less than ideal, and it’s part of what contributes to her decision to lie about finding him. Neal is a bit of a jerk, obviously not devoid of either the heroism or love of his former selves, but it’s a character quality all the same and a good one, especially because to my memory, it stays around and is pretty organic. It paints the trauma that he’s had at the hands of the world since his abandonment as it’s such a stark contrast to his Enchanted Forest self.

Rating Golden Apple. What a great episode! It goes in with the promise of payoff for quite a few major story elements and does exactly that. It’s unwaveringly harsh in many respects, but that’s why it works as well as it does. Neal’s addition to the main cast shakes things up and provides new opportunities for characters, for as harsh as it is to watch, seeing Emma lie about Neal and be punished for it was a good narrative choice, and the flashback was utterly fantastic in its storytelling! Flip My Ship - Home of All Things “Shippy Goodness” Swan Fire - Listen to that vulnerability as Emma says Neal’s name and that happiness that Neal just can’t keep out of his voice as he says Emma’s! That’s just fantastic! Also, he keeps the dreamcatcher! Also also, that “leave her alone” was romantic as all hell! Captain Floor - I’m very pissed at myself for not mentioning the best ship ever at any point before this. Like, Killian and the Floor just belong together, and to not acknowledge that was a callous mistake on my part! My sincerest apologies to my reader base, and I beg for you not to think I’m at all an anti! ()()()()()()()()() It feels so good to give this season a high grade again!!!! Woohoo!! Thank you for reading and to the fine folks at @watchingfairytales for putting this project together!

Next time...someone DIES!!!

...I’m saying that like we all don’t know who it is that dies… ...Please come back…I’m so lonely...

Season 2 Tally (124/220) Writer Tally for Season 2: Adam Horowitz and Edward Kitsis: (39/60) Jane Espenson (25/50) Andrew Chambliss and Ian Goldberg (24/50) David Goodman (16/30) Robert Hull (16/30) Christine Boylan (17/30) Kalinda Vazquez (20/30) Daniel Thomsen (10/20)

Operation Rewatch Archives

9 notes

·

View notes

Text

Segmentation Study- A Vital Tool for any Business to Succeed

In the dynamic, competitive marketing landscape, no business can move a step further without having a clear picture of the market it is entering into and the consumers associated with it. Marketers hence invest resources in ‘Segmentation Studies’ to understand which consumer segment to target and how to target them to develop tailored products/services, tailor marketing strategies better, design customer experience and tap growth opportunities.

Let’s start with the basics of a segmentation study.

A segmentation study identifies a set of criteria based on which a market is divided into segments, including consumers with homogenous purchasing traits, needs and expectations. The study allows marketers to pick identical benefits associated with the segments so that the most promising one can be focused on to create an effective brand portfolio. The ultimate aim of segmentation research is to design market strategies and tactics for the different segments to optimize products and services for different customers.

All businesses, irrespective of size and the industry it is functional in, practice segmentation study to read consumers’ minds. The first step to the segmentation study is to learn about the various ways a market can be segmented. Segmentation can be of the following types:

Geographic

Demographic

Behavioral

Psychographic

Getting started with segmentation study

An effective segmentation study is a high-stakes venture. Hence, it is important to plan the study strategically to obtain high-quality segmentation insights by making optimum usage of a firm’s resources.

Set Objectives and goals of the segmentation study.

Set criteria to Decide the Type of Segmentation to go for. Create a hypothesis based on the identified segmentation variables.

Design the study through questionnaires to obtain quantitative and qualitative responses.

Conduct Preliminary Research to familiarize with the potential customers.

Create customer segments by analyzing the responses either manually or using appropriate statistical software.

Analyze the created segments and pick the most relevant one

Develop a Segmentation Strategy for the selected target segment.

Execute the strategy to identify key stakeholders and Repeat what works.

How is a good segmentation characterized?

Even though a segmentation study aims to tackle the complexities of a competitive market, a poorly conducted study can complicate things. So, how should an approachable market segment look like?

It should be relevant and unique

Measurable

Accessible by promotion, communication, and distribution channels

Substantial

Actionable

Differentiable

Conducive to the prediction of customer choice

Easy to implement strategies

Why does segmentation matter?

Looking in time, some of the world's most successful brands have failed miserably in specific markets. Walmart’s low-price strategy and the convenience of finding great deals under a roof, was not much effective in Japan as it is in the US. Hence, after more than a decade in the market, the American retail giant was forced to exit Japan. Likewise, Walmart did not consider the cultural nuances of Germany, specifically in the personal space and had to pull out with a hefty loss.

Another US giant, Home Depot failed to dip its toes into the Chinese market because the concept of DIY didn't fit the preference of the locals. Starbucks' failure to adapt its offerings to Australia's rich coffee culture proved to be a marketing blunder.

Where did things go wrong? Because each consumer is unique and the ‘one-product-for-everyone' approach has never worked, marketers must thoroughly understand the target segment before entering it. That is the importance of segmentation studies.

Segmentation study allows to focus on a group that matters and to tailor marketing strategies and ad campaign messages that resonate with the needs of that particular segment of consumers. Segmentation insights provide a deeper level of understanding about the consumers to develop better products.

Let us dive into some more benefits of segmentation studies.

Enhanced customer support

Enables efficient marketing and better brand strategy

Enact data-driven changes

Optimized pricing strategy

Better customer retention

Unravel new areas to expand in

Allows to forecast future trends and shape a forecast model accordingly.

Ensures higher ROI and CRO

Helps to stay competitive within a domain.

Pitfalls to avoid during a segmentation study

Segmentation study being a complicated practice is vulnerable to various common mistakes that marketers and researchers tend to make.

Too small or specialized segments are difficult to organize. This can hence disrupt the objective and can yield data with no statistical or directional significance.

Inflexible segments curb switching strategies that usually costs a lot to the firm.

Not evolving with the dynamic market trends and remaining too attached to a particular segment defeats the whole purpose of a segmentation study.

Let us see how Borderless Access helped a leading media channel find out a potential audience to target with the following case study.

Case study: Segmentation study to yield qualitative insights about audiences’ behavioural traits against quantitative demographic data

This case study describes how BA Insightz helped our client obtain in-depth insights about a spectrum of attributes of each audience segment of the Subscription Video on Demand (SVOD) category.

A leading entertainment channel leveraged the research expertise of Borderless Access to segment SVOD users and obtain game-changing segmentation insights. The objective of the study was to understand the general streaming behaviour of each of the audience segments in the US to tap the opportunity hence created.

Subscription video on demand (SVOD) is a subscription-based service using which consumers can access pre-designated video content, streamed through an internet connection on the service provider’s platform at a certain chargeable fee. SVOD gives users the freedom to select the video content they prefer to watch in this service and consume at convenience. Hence, we can say that consumer preferences, to a great extent, design SVOD services. Here comes segmentation into play as to know consumer preferences better, they need to be sorted based on several defined criteria.

The Borderless Access research team, BA Insightz, had employed a hybrid method of assessment in the geographical region of the US to yield the desired insights about the attitude and behaviour of current SVOD users from the lens of the consumer segments.

Measures Used for Segmentation

Following are the measures used for segmenting consumers, plotted against demographics and behaviour to identify the unique features of each of the segments.

Psychographics

Attitude towards Technology

Streaming Behavior

Personality Statements

Media, Social & Cultural Attitudes

Attitude towards Content

Variables Used for Segmentation

Each measure stated above was then given a set of criterions such as preferences, varied interests and perceptions.

The target group, subjected to a set of criteria furthered with categorizing the target audience into 5 actionable segments covering the spectrum of demographics as well as psychographics in the SVOD landscape.

Primary target segments

Streaming Advocates (16%)- This segment consisted mostly of mid-aged couples whose top watch list contains movies streaming and live and recorded TV shows.

Exploring Early Adopters (22%)- This male-dominated segment likes to explore almost all types of content except news.

Secondary target segments

Conscious Original Seekers (22%)- This has a greater number of females who are interested in TV shows and movies.

Trend Followers (21%)- This segment mostly has young males and females who showed interest in all types of content including sports.

Tertiary target segments

Legacy Loyalists (19%)- A female-dominated segment that inclines more toward live TV shows and news.

Findings

Each segment has been studied thoroughly and the following are the key findings-

Streaming advocates and exploring early adopters should be the key segments to target.

Exploring Early Adopters’ and ‘Streaming Advocates’ can be the target groups for kids’ content.

All except ‘Exploring Early Adopters’ can be targeted for mainstream content.

‘Exploring Early Adopters’ and ‘Streaming Advocates’ can be the potential targets segment for any new online streaming services.

The high price of SVOD services is one major factor that stops the segments- ‘Exploring Early Adopters’ ‘Conscious Original Seekers’ and ‘Legacy Loyalists’ from continuing the streaming services.

A wide range of content is a prominent reason behind consumers continuing with the services.

With SVOD, as we see, consumers are increasingly calling the shots. Hence the marketers should laser-focus on the customer experience to keep satisfaction and engagement levels high as streaming services flourish. Customer-centricity is the way to go for SVOD service providers.

BA Insightz – our consumer research vertical, helped the client with in-depth insights into each audience segment about attributes such as streaming behavior, entertainment and content consumption habits, triggers and barriers for streaming, and most important, opportunities to tap into each segment.

The insights can be used to access and define the audience segments, thus helping the client deliver exceptional and consistent customer experiences, improved consumer engagement and a key opportunity to stand out.

Final Thoughts

Segmentation can be tricky and complex. However, segmenting your customers can provide tremendous returns when compared to ‘one-size-fits-all’ approaches. Segmentation study defines target audiences and ideal customers; identifies the right market to place a product/service, and allows designing effective marketing strategies.

0 notes

Text

[Week 3-4] Summary Research Game Design: The Art & Business of Creating Games

PART 1 : CHAPTER 6: CREATING A PUZZLE

Overview

As goes along story, there is no hero directly kills the opponents and achieving the goal. Instead they will reach the obstacles, deal with them and finally get through the goal. As these obstacles are the puzzles that the players wanted to solve. A good puzzle contribute to plot, character, and story development. They draw the player into the fictional world. A bad puzzle does not and they are intrusive and obstructionist just like bad writing. A good puzzle fits into the setting and present an obstacles that make sense.

TYPES of PUZZLES

Ordinary Use of an Object

The basic functions of the object are being used to solve the puzzle.

Player enter dark room, find a light socket, and check his inventory and found a light bulb and he attached on it.

Door Example: The player discover the door with golden lock and he has a golden key. He unlock the door with golden key.

Finding Object logic, or hides on the box that requires to solve a puzzle

Unusual Use of an Object

The secondary characteristic of the object.

Require players to recognize them can be use in different ways

Diamond make pretty rings, but they could also cut the glass.

A candle can light up fire, but their wax could also make an impression of a key.

Door Example:

The player find a door with no key but is barred from the other side.

"Building" Puzzles

create a new object with raw materials (also combine object together)

Information Puzzles

player has to supply the missing piece of information

simply as supplying password → deducing the correct sequence of number that will defuse the bomb

Codes, Cryptograms, and Other "Words" Puzzles

subset of information puzzles

defining he boundaries of the kind of information for which the player is looking

Excluded Middle Puzzles

Hardest Type of Puzzles, both design and solve

Involving create reliable cause-and-effect relationship.

State in the term of logic;

a always cause b

c always cause d

the player want d then he has to believe that b and c would be linked and he will perform a.

Door Example:

(a) rubbing the lamp

(b) summon a bull

(c) bull see something red

(d) bull charge to it.

Door is red, and the player can see a lamp then he rub the lamp and b,c,d will be performed to unlock the door

Preparing the Way

A wrinkle on the excluded middle that makes it even more difficult.

Te player require to create the condition in order to perform the cause-and-effect chain reaction

Door Example:

Door is green, so the player need to realize to paint in red in order to summon the bull to charge to it.

People Puzzles

greatly enhance the building of the characters and storytelling

involve a people blocking the player's progress or holding important information piece

Timing Puzzles

difficult class of the puzzle

require player to recognize that the action perform will not be effect immediately but will cause in particular point in the future

Sequence Puzzles

need to perform correct sequence action in the right order

can be elaborate differently

commonly the action you perform will be block to acheiving the goal, then the player need to put something back to reset the sequence again once it gone.

Logic Puzzles

deduce particular bit of information by examining a series of statements and ferreting (search) out a hidden implication

Classic Game Puzzles **

aren't action or adventure game puzzles

Examples: magic square puzzles, move the matchstick puzzles, or jump the peg and leave the last one in the middle puzzles...

reminder: easy way to reset them

possible help system or an alternative to completing the puzzle

Riddles

least satisfying puzzle ☹️ cause if player does not get it, he does not get it.

Dialog Puzzles

A by-product dialog trees

A player need to follow a conversation down the correct path until a character says or does the right thing

advantage: bring really like people talk

disadvantage: not really a puzzle

Trial and Error Puzzles

player is confronted with an array of choices and with no information to go on.

Need to test each possible choices

Machinery Puzzles

need to figure out how to operate or control the machine

sometimes involve minor trial-n-error.

sometimes logic.

Alternative Interface

can from machinery puzzles to maps

you remove the normal game interface and replace it with a screen the player has to manipulate to reach a predefined condition

Maze

As what you heard is a maze

Gestalt Puzzles

recognize through a general condition.

Interesting fact: the designer does not actually state the condition, instead provide evidence that build up over time.

WHAT MAKES A BAD PUZZLE?

circumstances to fit into the game world.

Is important to suit the theme and setting of the game itself

Restore Puzzles

unfair to kill a player because not solving the puzzle

and only provide the information he needed to solve it

does not give a player to think ahead of time

Arbitrary Puzzles

Effects should always linked to the causes

Event shouldn't be happen because of designer intended to

Designer Puzzles

avoid the puzzles that only make sense to designer

require good testing corps to test out the ideas

Binary Puzzles

a puzzle only have yes or no answer is yield the instance failure or success

should give them lots of choices

Hunt the Pixel Puzzles

the important object in the screen is just too small for notice

WHAT MAKES A GOOD PUZZLES

a puzzle that can be solved, and eventually learned

Fairness

A player should theoretically be able to solve it the first time they encounters it simply by thinking hard enough

Assume he is presented all the information needed.

Appropriation to the Environment

Plopping a logic or mathematical puzzle into the middle of the story is NOT a good way to move a narrative along

The best puzzles are naturally fits into the story

Amplifying the Theme

should not have the player taking actions contrary to the character you have set up

The V-8 Response

A player A-ha! Of Course = Good Puzzles

A player There is no way to solve it, I don't even understand it now, Why does it work? = Bad Puzzles

LEVELS OF DIFFICULTY

Adjusting the difficulty of the puzzle

Bread Crumbs

change the directness of the information you give the player.

The Solution's Proximity to the Puzzle

The closeness of answer to the problem determine the difficulty of the game

True in both psychological and geographical

Alternative Solutions

provide alternative solutions to puzzles to make it easier

Red Herrings

implement red herrings to make it harder

Not necessary to do it

Steering the Player

Responding to the player input that not actually solve puzzles.

Steering the player to the correct path on the true answer by providing small clues

Response should contain little nuggets of information

HOW TO DESIGN THE PUZZLE

Creating the Puzzle

Settings → Characters → General Goals → Different Scenes → Sub goal → Obstacles

Obstacles are the puzzles

Create problems for the player that are appropriate to the story and setting

Think of the character I had created

Think about reasonable obstacles to place in his path

The Villians

think about villian while design puzzle

He is the one who create obstacles.

materials he used is from the environment, clear purpose and it's up for hero to overcome the obstacles

Ask ourselves why the puzzles are there

Player Empathy

the ability of looked into the game as the player does

to determine what is fair and reasonable

Formed ourselves as a player while designing the puzzles

Letting the player know where the puzzles are

SUMMARY

Make sure the puzzles enhance the game rather than detract from it

Use the puzzle to draw the attention of the player into the story so that he learned more about the characters

Don't withhold the information he need to solve the puzzles

Develop player empathy and strive for that perfect level frustration that drives a player forward

Above all, — play fair

0 notes

Text

Applying Knowledge Discovery Process in General Aviation Flight Performance Analysis

Abstract

The air transportation is a technology-intensive industry that has found itself collecting large volumes of data in a variety of forms from daily operations. Aviation data play a critical role in numerous aspects of aviation industry, aircraft flight performance is one of the most important uses of aviation data. Aircraft operational data are usually collected using aircraft onboard flight data recording devices and have been traditionally used for monitoring flight safety and aircraft maintenance with basic statistical analysis and threshold exceedance detection. With the development of data science and advanced computing technology, there is a growing awareness of incorporating knowledge discovery process into aviation operations. This article provides a review of recent studies on flight data analysis with two example studies on applying knowledge discovery process in flight performance analyses of general aviation.

Keywords:General aviation; Knowledge discovery process; Flight performance analysis

Introduction

In the field of aviation, data analyses have been widely adopted for a variety of needs, such as aviation safety improvement, airspace utility assessment, and operational efficiency measuring. With different purposes, aviation data are collected, analyzed and interpreted from different perspectives with a variety of techniques. For example, data of air traffic volume are usually used for airspace management and airline network planning, data of transportation gross or passenger load factor are used for airline’s economic performance related analyses, and flight operational data from flight data recorder could be used for safety analyses. The number of common aviation data analysis techniques documented by the Federal Aviation Administration (FAA) System Safety Handbook reaches as many as 81, and there are more techniques are being developed [1]. Because of the diversity of aviation data and analytical purposes, expensive investment on technological equipment, proprietary software, and long-term labor costs for data collection and analytics is required for aviation operators.

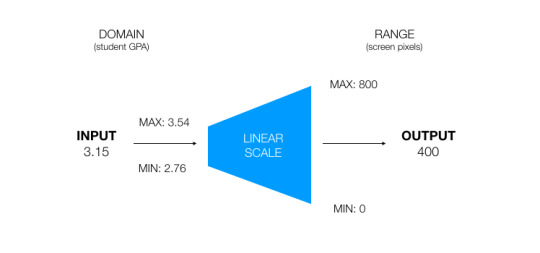

Knowledge discovery is a nontrivial extraction of implicit, previously unknown, and potentially useful information from a collection of data, including a process of obtaining raw data, cleaning, transforming data, and modeling and converting data into useful information to support decision-making, shown as Figure 1 [2, 3].

As an interdisciplinary area, knowledge discovery process widely involves database technology, information science, statistics, machine learning, visualization, and other disciplines, and includes the following nine steps:

a) Develop an understanding of the application domain and the relevant prior knowledge, and identify the goal of the KDD process from the customer’s perspective,

b) Select a target data set or subset of data samples on which discovery is to be performed,

c) Data cleaning and preprocessing, including removing noise, collecting necessary information to model or account for noise, deciding on strategies for handling missing data, and accounting for time-sequence information and known changes,

d) Data reduction and projection by finding useful features to represent the data depending on the goal or task,

e) Match the goals of the KDD process to a particular data mining method,

f) Exploratory analysis and model and hypothesis selection by choosing the data mining algorithms and selecting methods to be used for searching for data patterns,

g) Data mining to search for patterns of interest in a particular representational form or a set of such representations, such as classification rules or trees, regression, and clustering,

h) Interpret the mined patterns, possibly return to any of steps 1 through 7 for needed iteration,

i) Apply the discovered knowledge directly or incorporate the knowledge into another system for further actions [3].

By taking the advantages of the information and communication technologies, knowledge discovery process has been used to extract useful information from the massive data coming from different fields, such as marketing, finance, sports, astrology, and science exploration. In other words, knowledge discovery process is applicable for a wide range of data-driven cases with appropriate design and implementation, aviation industry is no exception. Many studies have been conducted to adopt the knowledge discovery process for the development of advanced aviation data analysis techniques. The article provides a review of recent studies on flight data analysis with two example studies on applying knowledge discovery process in flight performance analyses of general aviation (GA).

Review of Flight Data Analysis in GA

The United States has the largest and most diverse GA community in the world performing an important role in noncommercial business aviation, aerial work, instructional flying, and pleasure flying [4]. During the last decades, GA accident rates indicate a decreasing trend, but there were still estimated 347 people killed in 209 GA accidents in 2017 [5]. Reducing GA accident rates has been a challenge for many years. The FAA and industry have been working on several initiatives to improve GA safety, such as the General Aviation Joint Steering Committee (GAJSC), Equip 2020 for ADS-B Out, new Airman Certification Standards (ACS), and the Got Data? External Data Initiative [5]. Compared to last century, there are fewer aviation accidents with common causes. Traditional aviation safety improvement strategies relying on reactively investigating aircraft accidents and incidents are no longer enough to support further improve aviation safety. Therefore, government and the aviation industry have steered safety enhancement strategies from reactive approaches to proactive approaches [6]. Given the effectiveness of Flight Data Monitoring/Flight Operational Quality Assurance (FDM/FOQA) programs on commercial aviation safety improvement, the FAA and industry are also focused on reducing GA accident rate by primarily using a voluntary, non-regulatory, proactive, data-driven strategies [5]. For example, de-identified GA operational data were used in the Aviation Safety Information Analysis and Sharing (ASIAS) program to identify risks before they cause accidents [5]. The National General Aviation Flight Information Database (NGAFID) was launched as a joint FAA-industry initiative designed to bring voluntary FDM to general aviation, and a datalink between ASIAS and the NGAFID was built by the University of North Dakota in 2013 [7].

Today, the FDM is also known as flight data analysis or operational flight data monitoring (OFDM) under the framework of International Civil Aviation Organization (ICAO) and other civil aviation authorities, as shown in Figure 2 [8]. Although the features of each program may vary, most of them are developed on two primary approaches: The exceedance detection approach and the statistical analysis approach [9]. Exceedance detection looks for deviation from flight manual limits and standard operating procedures (SOPs). Exceedance detection approach detects predefined undesired safety occurrences. It monitors interesting aircraft parameters and trigger warning or draws attentions of safety specialists when parameters hit the preset limits or baselines under certain conditions. For example, the program can be set to detect the events when the aircraft parameters of speed, altitude, or attitude are higher than predefined thresholds.

Statistical analysis approaches are used to create the flight profiles, plot the distributions and trends of certain types of flight parameters, or map flight track on geo-referenced chart to examine particular operational features of flight. By using statistical analysis approaches, aviation operators not only obtain numeric features of flight operations, but also acquire a more comprehensive picture of the flight operations based on the distributions of aggregated flight data [9]. Statistical analysis is a tool to look at the total performance and determine the critical safety concerns for flight operations. In addition, both exceedance analysis and statistical analysis can dive into the data on a specific target, such as phases of flight, airports, or aircraft type.

Many observations in aviation data are either spatially or temporally related, for instance, aircraft flight parameters captured by onboard flight data recorder, tracks from radar, and aircraft GPS position data are all in the form of sequential observations. In addition to above two prevalent flight data analysis approaches for flight safety assurance, many other data analysis techniques are being developed and used for more specific objectives.

Exceedance Detection of GA Flight Operations

Flight data analysis is an effective strategy for proactive safety management in aviation. In addition to Part 121 commercial air carriers, Part 135 operations are also highly encouraged to adopt Flight Data Monitoring (FDM) as one of the most wanted transportation safety improvements [10]. However, the implementation of flight data analysis requires significant investment in flight data recording technology, data transferring, and professional software and labor cost for data analytics. Because of the high cost of flight data analysis, totally only 53 air transportation service operators in the U.S. have a FOQA program implemented [11]. Moreover, most flight data analysis strategies for commercial air carriers adopt the Ground Data Replay and Analysis System (GDRAS), which is typically a proprietary software with predesigned functionalities and replies on a great number of flight parameters fed from advanced flight data recorder. Due to the resource constraint of general aviation, traditional flight data analyses are usually unaffordable and not flexible to meet the demand of GA operators given GA aircraft have less sophisticated avionics onboard and diverse operational characteristics.

An innovative flight exceedance detection strategy was explored based on knowledge discovery process and next generation air traffic surveillance technology – automatic dependent surveillance broadcast (ADS-B) [12, 13]. This strategy is expected to provide an inexpensive flight data analysis strategy particularly for GA operations by eliminating the dependency of proprietary GDRAS and investment of expensive onboard flight data recorder. These studies collected aircraft operational data from ADS-B and followed the knowledge discovery process to preprocess, transform and analyzed the data, as shown in Figure 3.

The exceedance detection procedure used in the study is shown as Figure 4. In total, a set of 29 flight metrics were developed based on the content of ADS-B data for the purpose of exceedance detection and flight performance measurement. Five flight exceedances were identified from aircraft operations manual and airplane information manual for experiment:

a) No turn before reaching 400 feet above the ground level (AGL) during the phase of takeoff

b) suggested climb angle during initial climb from 0 to 1000 feet AGL: 7 – 10 degrees

c) suggested indicated airspeed for the Base leg: 90+5 knots

d) suggested indicated airspeed for final approach: 78+5 knots

e) stabilized approach: Constant glide angle established from 500 feet AGL to 0 feet AGL for flight under visual flight rules (VFR)

40 sets of ADS-B data were collected for exceedance detection. The study result shows certain types of exceedances could be more accurately detected than other exceedance events by using ADS-B data. The primary reason is because of the missing values of ADS-B data as it is transmitted wirelessly on 1090MHz or 978MHz. However, flight data analysis using ADS-B data is expected to be a promising strategy with further research and development.

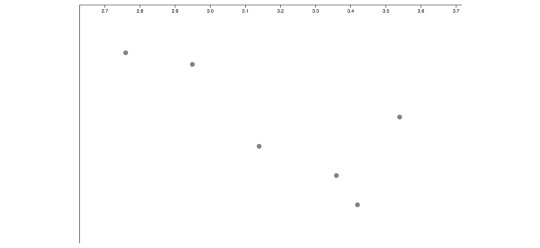

Fuel Consumption Analysis of GA Piston-engine Aircraft

With the modernization of GA fleet, there are more and more GA aircraft equipped with advanced digital flight data recorders, which provide quick access to GA flight data. Therefore, aircraft operational data become more accessible for flight performance analysis. One of such studies explored the fuel consumption efficiency of GA piston-engine aircraft by discovering the relationship between aircraft operational parameters [14]. Following the knowledge discovery process, 22 sets of flight operational data with 176,370 data observations were collected from Garmin G1000 avionics system installed on GA pistonengine aircraft – Cirrus SR20, and transformed and analyzed with machine learning techniques. Statistical relationship between the fuel flow rate and three aircraft parameters (aircraft ground speed, flight altitude, and the vertical speed) was modeled. The classification and Regression Trees (CART) were used to predict the fuel flow rate using the three explanatory aircraft parameters, as shown in Figure 5. By developing the model, GA operators could intuitively estimate the fuel flow rate of aircraft at any given time with only three other aircraft parameters, which could be acquired real-time or post-flight from many available aeronautic technologies. In addition, analyses in this study also show that aircraft groundspeed and vertical speed have higher impact on the fuel flow rate than the flight altitude, which provides GA operators of important intelligence to optimize the fuel consumption efficiency [14].

Fuel Consumption Analysis of GA Piston-engine Aircraft

With the modernization of GA fleet, there are more and more GA aircraft equipped with advanced digital flight data recorders, which provide quick access to GA flight data. Therefore, aircraft operational data become more accessible for flight performance analysis. One of such studies explored the fuel consumption efficiency of GA piston-engine aircraft by discovering the relationship between aircraft operational parameters [14]. Following the knowledge discovery process, 22 sets of flight operational data with 176,370 data observations were collected from Garmin G1000 avionics system installed on GA pistonengine aircraft – Cirrus SR20, and transformed and analyzed with machine learning techniques. Statistical relationship between the fuel flow rate and three aircraft parameters (aircraft ground speed, flight altitude, and the vertical speed) was modeled. The classification and Regression Trees (CART) were used to predict the fuel flow rate using the three explanatory aircraft parameters, as shown in Figure 5. By developing the model, GA operators could intuitively estimate the fuel flow rate of aircraft at any given time with only three other aircraft parameters, which could be acquired real-time or post-flight from many available aeronautic technologies. In addition, analyses in this study also show that aircraft groundspeed and vertical speed have higher impact on the fuel flow rate than the flight altitude, which provides GA operators of important intelligence to optimize the fuel consumption efficiency [14].

Discussion and Conclusion

This article reviews the recent progress of aviation data analyses and two example studies of applying knowledge discovery process in GA flight performance analyses from different perspectives. Two example studies illustrate how knowledge discovery process are practically addressing different demands in flight performance analyses in safety measurement and operational efficiency monitoring. Knowledge discovery process has been widely practiced in many non-aeronautic fields by taking the advantages of the improvement of new information and communication technologies. As an important part of transportation industry, aviation has been incorporating more data-driven strategies in operations, management, and safety. Knowledge discovery process incorporates different data sources for data analyses so that it could support diverse knowledge discovery purposes. In knowledge discovery process, data are selected upon the analysis objectives and analyzed from different viewpoints to discover interesting patterns driven by the main goal of supporting better decision-making. All of those features make it a promising strategy in the world of air transportation.

While knowledge discovery is a promising strategy to extracting useful information from massive aviation data, applying knowledge discovery for a specific objective relies on good input of domain knowledge and well addressing constrains in each step of knowledge discovery process. First, domain knowledge generally determines how practical the entire knowledge discovery process is, and how useful the output knowledge could be. Staring from determining target data and choosing appropriate data mining techniques, solid domain knowledge decides whether the selected target data and analytic strategies fit the desired research objectives. For the later steps of interpretation and reporting the outcomes, domain knowledge arbitrates whether explanations of discovered knowledge is applicable and valuable given the research background. Second, issues from database constrain the effectiveness of knowledge discovery projects. Data analysts should take above factors into account when practice knowledge discover process in the field of aviation.

To Know More About Trends in Technical and ScientificResearch Please click on: https://juniperpublishers.com/ttsr/index.php

To Know More About Open Access Journals Please click on: https://juniperpublishers.com/index.php

#Juniper publishers#Open access Journals#Peer review journal#Juniper publishers reviews#Juniper publisher journals

0 notes

Text

Uncanny Dimple

My final project Uncanny Dimple is a body of work that examines the close proximity between the cute and the creepy. Drawing from roboticist Masahiro Mori’s concept of the Uncanny Valley, which explains the eeriness of lifelike robots, my theory of the Uncanny Dimple portrays a parallel phenomenon in the context of cuteness. The robotic creatures inhabiting the dimple demonstrate the often contradictory affects we experience towards non-human actors. When does cuteness start to border on the grotesque? If cuteness is the outcome of extreme objectification of living beings, can it also be the result of an anthropomorphising inanimate objects? Why does cuteness trigger the impulse to nurture and to protect, but also to abuse and to violate? Cute things are often seen as innocent, passive, and submissive, but can they also manipulate, misbehave and demand attention?

This body of work is based on my MFA thesis Uncanny Dimple — Mapping the Cute and the Uncanny in Human-Robot Interaction, where I examine the aforementioned contradictions of cuteness by applying Donna Haraway’s Cyborg Manifesto (1991) and the Uncanny Valley theory by Masahiro Mori (1970). I also reference the recent research on the cognitive phenomenon of cute aggression, a commonly experiences impulse to harm cute objects. (Aragón et al. 2015; Stavropoulos & Alba 2018)

Sigmund Freud first coined the term uncanny in his 1919 essay Das Unheimliche to describe an unsettling proximity to familiarity encountered in dolls and wax figures. However, the contemporary use of the word has been inflated by the concept of the Uncanny Valley by roboticist Masahiro Mori. Mori’s notion was that lifelike but not quite living beings, such as anthropomorphic robots, trigger a strong sense of uneasiness in the viewer. When plotting experienced familiarity against human likeness, the curve dips into a steep recess — the so called Uncanny Valley — just before reaching true human resemblance.

As a rejection of rigid boundaries between “human”, “animal” and “machine”, Haraway’s cyborg theory touches many of the same points as Mori’s Uncanny Valley. Haraway addresses multiple persistent dichotomies which function as systems of domination against the “other” while mirroring the “self”, much like cuteness and uncanniness: “Chief among these troubling dualisms are self/other, mind/body, culture/nature, male/female, civilized/primitive, reality/appearance, whole/part, agent/resource, maker/made, active/passive, right/wrong, truth/illusion, total/partial, God/man.” (Haraway 1991: 59)

Haraway’s image of the cyborg, despite functioning more as a charged metaphor than an actual comment on the technology, still aptly demonstrates the dualistic nature of cuteness and its entanglements with the uncanny at the site of human-robot interaction. Furthermore, Haraway’s cyborg theory grounds the analysis of the cute to a wider socio-political context of feminist studies. In the Companion Species Manifesto where she updates her cyborg theory, Haraway (2003: 7) is adamantly reluctant to address cuteness as a potential source of emancipation (which seems to be the case with other feminists of the same generation): "None of this work is about finding sweet and nice — 'feminine' — worlds and knowledges free of the ravages and productivities of power. Rather, feminist inquiry is about understanding how things work, who is in the action, what might he possible, and how worldly actors might somehow be accountable to and love each other less violently." I argue on the contrary that some of these inquiries can be answered by exposing the potential of cuteness as a social and moral activator. While Haraway describes a false dichotomy between these “sweet and nice” worlds and “the ravages and productivities of power”, I believe that their entanglement is in fact an important site for feminist inquiry. By revealing the plump underbelly of cuteness, we can harness the subversive power it wields.

In my thesis I conclude that cuteness and uncanniness are both defined by their distance to what we consider “human” or “natural”, and shaped by the distribution of power in our relationships with objects that we deem having a mind or agency. I continue to propose that a similar phenomenon to the Uncanny Valley can be described in regard of cuteness, which I call the Uncanny Dimple. Much like Mori’s valley and Haraway’s cyborg, Uncanny Dimple is presented as a figuration: It does not necessarily try to make any empirical or quantitative claims about the experience of cuteness, but strives to utilise the diagram as a rhetorical device for better understanding the entangled affects of cuteness and uncanniness.

Similar to Mori’s visualisation of the Uncanny Valley, the Uncanny Dimple is mapped in a diagram where the horizontal axis denotes “human likeness”, but Mori’s vertical axis of “familiarity” is in this case replaced with cuteness. Similar to Mori, I propose that cuteness first increases proportionally with anthropomorphic features. As established in Konrad Lorenz’s Baby Schema model from 1943, cuteness also increase proportionally in the presence of neotenic (i.e. “babylike”) features, such as large eyes, tall forehead, chubby cheeks and small nose. I suggest that this applies only to some extent: When the neotenic features have reached a point where they are over-exaggerated beyond realism, but the total human likeness is still below the Threshold of Realism, cuteness climaxes at what I call the Cute Aggression Peak. When human likeness exceeds that point, cute aggression becomes unbearable, the experienced cuteness is surpassed by uncanniness, and the curve dips to the Uncanny Dimple.

I wanted to create various cute but uncanny creatures which all had their distinctive way of moving or interacting with the audience. I created multiple different prototypes of most of the creatures, and in the final installation I had eight different types:

1. Sebastian is an interactive quadruped robot that can detect obstacles. Sebastian will wake up if it's approaced, and run away. The inverse kinematic functions for the quadruped gait are based on SunFounder's remote controlled robot. In the basic quadruped gait three legs are on the ground while one leg is moving. The algorithm calculates the angles for every joint in every leg at every given time, so that the centre of gravity of the robot stays inside the triangle of the three supporting legs. I designed all the parts and implemented the new dimensions in the code. I also added the ultrasonic sensor triggering and obstacle detection. For calculating distance measurements based on the ultrasonic sensor readings I used the New Ping library by Tim Eckel.

2. Ritu is an interactive robotic installation using Arduino, various sensors, servo motors and electromagnet. Users are prompted to feed the vertically suspended robot, which will descend, pick the treat from the bowl, and take it up to its nest. There is a hidden light sensor in the bowl, which senses if food is placed in the bowl. This will trigger the robot to descend using a continous rotation servo motor winch. The distance the robot moves vertically is based on the reading of a ultrasonic sensor. The robot uses an electromagnet attached to a moving arm to pick up objects from the bowl. After succesfully grabbing the object, the robot will ascend and drop the object in a suspended nest. For calculating distance measurements based on the ultrasonic sensor readings I used the New Ping library by Tim Eckel.

3. Crawler Bois are two monopod robots that move with motorised crawling legs that mimic the mechanism of real muscles and tendons. Each robot has a leg that consist of two joints, two servo motors, a string, and two rubber bands. The first servo lifts and lowers the leg, and the second servo tightens the string (the "muscle") which contracts the joins. When the string relaxes, the rubber bands (the "tendons") pull the joints to their original position. The robots move back and forth in a randomised sequence and sometimes do a small dance.

4. Lickers are three individually interactive robots using servo motors, Scotch Yoke mechanisms, and sound sensors. The Scotch Yoke is a reciprocating motion mechanism, in this case converting the rotational motion of a 360 degree servo motor into the linear motion of a licking silicone tongue protruding from the mouth of a creature. If a loud sound is detected, the creature will stop licking and lift up its ears. The treshold of the sound detection can be modified directly from a potentiometer on the sound sensor module.

5. Shaking Little Critter is a simple interactive installation using an Arduino, a vibrating motor and a light sensor. Users are prompted to remove the creature's hat, after which it will "get cold" and start shaking around in its cage. The absence of the hat is detected with a light sensor on top of the creature's head.

6. Rat Queen is a robotic installation exploring the emergent features arising from the combination of pseudo-randomness and mechanic inaccuracy. It consist of five identical rats-like robots that are connected to a shared power supply with their tails. All the members of the Rat Queen move independently in randomised sequences, but because they are started at the same time, the randomness is identical, since the random seed is calculated based on the starting time of the program. However, due to small inaccuracies and differences in the continuous rotation servo motors and their installation, the movement patterns diverge, and the rats slowly get increasingly tangled with their tails.

7. Cute Aggression is an interactive sound installation using Arduino and Max MSP. Users can record sounds by whispering in a hidden microphone in the plush toy creature's ear. A tilt switch in the ear starts the recording when the ear is lifted. The sounds are played back when the user pets the creature. The petting is detected with conductive fabric using Capacitive sensing library by Paul Badger. The reading from the sensor is sent to a Max MSP patch via serial communication. The sounds a generated from the Arduino data using a granular synthesis method based on Nobuyasu Sakonda’s SugarSynth. Sounds can be modulated by manipulating the creature's nipples, which are silicone-covered potentiometers.

8. Cucumber Weasel is a modified version of the motorised toy know as weasel ball. The plastic ball has a weighted, rotating motor inside, which makes the ball roll and change directions. The toy usually has a furry “weasel” attached to it, but here it is replaced with a silicone cast of a cucumber.

References:

Aragón, O. R; Clark, M. S.; Dyer, R. L. & Bargh, J. A. (2015). “Dimorphous Expressions of Positive Emotion: Displays of Both Care and Aggression in Response to Cute Stimuli”. Psychological Science 26(3) pp. 259–273.

Badger, P. (2008). Capacitive sensing library.

Eckel, T. (2017). New Ping library for ultrasonic sensor.

Freud, S. (1919). The ‘Uncanny’. The Standard Edition of the Complete Psychological Works of Sigmund Freud, Volume XVII (1917-1919): An Infantile Neurosis and Other Works, pp. 217-256.

Haraway, D. (1991). "A Cyborg Manifesto: Science, Technology, and Socialist-Feminism in the Late Twentieth Century," in Simians, Cyborgs and Women: The Reinvention of Nature. New York, NY: Routledge.

Haraway, D. (2003). The Companion Species Manifesto: Dogs, People, and Significant Otherness. Chicago, IL: Prickly Paradigm Press.

Lorenz, K. (1943). “Die angeborenen Formen moeglicher Erfahrung”. Z Tierpsychol., 5, pp. 235–409.

Mori, M. (2012). "The Uncanny Valley". IEEE Robotics & Automation Magazine, 19(2), pp. 98–100.

Rutanen, E. (2019). Uncanny Dimple — Mapping the Cute and the Uncanny in Human-Robot Interaction.

Sakonda, N. (2011). SugarSynth.

Sunfounder (n.d.). Crawling Quadruped Robot Kit v2.0.

Stavropoulos K. M. & Alba L. A. (2018). “‘It’s so Cute I Could Crush It!’: Understanding Neural Mechanisms of Cute Aggression”. Frontiers in Behavioral Neuroscience, 12, pp. 300

1 note

·

View note

Link