#bigdatatools

Explore tagged Tumblr posts

Text

🚀 Dive into the World of Big Data with Data Analytics Masters! 💡 Gain mastery in:

- The Big Data Ecosystem
- Programming & Querying
- Distributed Systems
- Real-Time Processing
- Real-World Data Scenarios

✨ Upskill yourself and stay ahead in the data game!

📞 Call us at +91 99488 01222 🌐 Visit us: www.dataanalyticsmasters.in


Text

Open Source Tools for Data Science: A Beginner’s Toolkit

Data science is a powerful tool used by companies and organizations to make smart decisions, improve operations, and discover new opportunities. As more people realize the potential of data science, the need for easy-to-use and affordable tools has grown. Thankfully, the open-source community provides many resources that are both powerful and free. In this blog post, we will explore a beginner-friendly toolkit of open-source tools that are perfect for getting started in data science.

Why Use Open Source Tools for Data Science?

Before we dive into the tools, it’s helpful to understand why using open-source software for data science is a good idea:

1. Cost-Effective: Open-source tools are free, making them ideal for students, startups, and anyone on a tight budget.

2. Community Support: These tools often have strong communities where people share knowledge, help solve problems, and contribute to improving the tools.

3. Flexible and Customizable: You can change and adapt open-source tools to fit your needs, which is very useful in data science, where every project is different.

4. Transparent: Since the code is open for anyone to see, you can understand exactly how the tools work, which builds trust.

Essential Open Source Tools for Data Science Beginners

Let’s explore some of the most popular and easy-to-use open-source tools that cover every step in the data science process.

1. Python

Python is the most widely used programming language for data science. It is versatile and easy to learn.

Why Python?

- Simple to Read: Python’s syntax is straightforward, making it a great choice for beginners.

- Many Libraries: Python has a lot of libraries specifically designed for data science tasks, from working with data to building machine learning models.

- Large Community: Python’s community is huge, meaning there are lots of tutorials, forums, and resources to help you learn.

Key Libraries for Data Science:

- NumPy: Handles numerical calculations and array data.

- Pandas: Helps you organize and analyze data, especially in tables.

- Matplotlib and Seaborn: Used to create graphs and charts to visualize data.

- Scikit-learn: A machine learning library with easy-to-use tools for classification, regression, clustering, and model evaluation.

2. Jupyter Notebook

Jupyter Notebook is a web application where you can write and run code, see the results, and add notes—all in one place.

Why Jupyter Notebook?

- Interactive Coding: You can write and test code in small chunks, making it easier to learn and troubleshoot.

- Great for Documentation: You can write explanations alongside your code, which helps keep your work organized.

- Built-In Visualization: Jupyter works well with visualization libraries like Matplotlib, so you can see your data in graphs right in your notebook.

3. R Programming Language

R is another popular language in data science, especially known for its strength in statistical analysis and data visualization.

Why R?

- Strong in Statistics: R is built specifically for statistical analysis, making it very powerful in this area.

- Excellent Visualization: R has great tools for making beautiful, detailed graphs.

- Lots of Packages: CRAN, R’s package repository, has thousands of packages that extend R’s capabilities.

Key Packages for Data Science:

- ggplot2: Creates high-quality graphs and charts.

- dplyr: Helps manipulate and clean data.

- caret: Simplifies the process of building predictive models.

4. TensorFlow and Keras

TensorFlow is a library developed by Google for numerical calculations and machine learning. Keras is a simpler interface that runs on top of TensorFlow, making it easier to build neural networks.

Why TensorFlow and Keras?

- Deep Learning: TensorFlow is excellent for deep learning, a type of machine learning built on multi-layer neural networks that are loosely inspired by the human brain.

- Flexible: TensorFlow is highly flexible, allowing for complex tasks.

- User-Friendly with Keras: Keras makes it easier for beginners to get started with TensorFlow by simplifying the process of building models.

5. Apache Spark

Apache Spark is an engine used for processing large amounts of data quickly. It’s great for big data projects.

Why Apache Spark?

- Speed: Spark processes data in memory, which makes it much faster than disk-based tools such as Hadoop MapReduce for many workloads.

- Handles Big Data: Spark can work with large datasets, making it a good choice for big data projects.

- Supports Multiple Languages: You can use Spark with Python, R, Scala, Java, and SQL.

6. Git and GitHub

Git is a version control system that tracks changes to your code, while GitHub is a platform for hosting and sharing Git repositories.

Why Git and GitHub?

- Teamwork: GitHub makes it easy to work with others on the same project.

- Track Changes: Git keeps track of every change you make to your code, so you can always go back to an earlier version if needed.

- Organize Projects: GitHub offers tools for managing and documenting your work.

7. KNIME

KNIME (Konstanz Information Miner) is a data analytics platform that lets you create visual workflows for data science without writing code.

Why KNIME?

- Easy to Use: KNIME’s drag-and-drop interface is great for beginners who want to perform complex tasks without coding.

- Flexible: KNIME works with many other tools and languages, including Python, R, and Java.

- Good for Visualization: KNIME offers many options for visualizing your data.

8. OpenRefine

OpenRefine (formerly Google Refine) is a tool for cleaning and organizing messy data.

Why OpenRefine?

- Data Cleaning: OpenRefine is great for fixing and organizing large datasets, which is a crucial step in data science.

- Simple Interface: You can clean data using an easy-to-understand interface without writing complex code.

- Track Changes: You can see all the changes you’ve made to your data, making it easy to reproduce your results.

9. Orange

Orange is a tool for data visualization and analysis that’s easy to use, even for beginners.

Why Orange?

- Visual Programming: Orange lets you perform data analysis tasks through a visual interface, no coding required.

- Data Mining: It offers powerful tools for digging deeper into your data, including machine learning algorithms.

- Interactive Exploration: Orange’s tools make it easier to explore and present your data interactively.

10. D3.js

D3.js (Data-Driven Documents) is a JavaScript library used to create dynamic, interactive data visualizations on websites.

Why D3.js?

- Highly Customizable: D3.js allows for custom-made visualizations that can be tailored to your needs.

- Interactive: You can create charts and graphs that users can interact with, making data more engaging.

- Web Integration: D3.js works well with web technologies, making it ideal for creating data visualizations for websites.

How to Get Started with These Tools

Starting out in data science can feel overwhelming with so many tools to choose from. Here’s a simple guide to help you begin:

1. Begin with Python and Jupyter Notebook: These are essential tools in data science. Start by learning Python basics and practice writing and running code in Jupyter Notebook.

2. Learn Data Visualization: Once you're comfortable with Python, try creating charts and graphs using Matplotlib, Seaborn, or R’s ggplot2. Visualizing data is key to understanding it.

3. Master Version Control with Git: As your projects become more complex, using version control will help you keep track of changes. Learn Git basics and use GitHub to save your work.

4. Explore Machine Learning: Tools like Scikit-learn, TensorFlow, and Keras are great for beginners interested in machine learning. Start with simple models and build up to more complex ones.

5. Clean and Organize Data: Use Pandas and OpenRefine to tidy up your data. Data preparation is a vital step that can greatly affect your results.

6. Try Big Data with Apache Spark: If you’re working with large datasets, learn how to use Apache Spark. It’s a powerful tool for processing big data.

7. Create Interactive Visualizations: If you’re interested in web development or interactive data displays, explore D3.js. It’s a fantastic tool for making custom data visualizations for websites.
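To make the data cleaning step in the list above more concrete, here is a minimal sketch of the kind of tidying that step describes: trimming whitespace, normalizing capitalization, dropping rows with missing values, and removing duplicates. It deliberately uses only Python's standard library rather than Pandas or OpenRefine, and the column names and sample rows are made up for illustration.

```python
import csv
import io

# Made-up messy input; the columns (name, city, age) are assumptions for this sketch.
RAW_CSV = """name,city,age
 Alice ,coimbatore,34
Bob,Chennai,
alice,Coimbatore,34
Carol, chennai ,29
"""


def clean_rows(raw_text):
    """Trim whitespace, normalize case, drop incomplete rows and duplicates."""
    reader = csv.DictReader(io.StringIO(raw_text))
    seen = set()
    cleaned = []
    for row in reader:
        name = row["name"].strip().title()
        city = row["city"].strip().title()
        age = row["age"].strip()
        if not age:
            continue  # drop rows with a missing age
        key = (name, city, age)
        if key in seen:
            continue  # drop duplicates that only differed in spacing or case
        seen.add(key)
        cleaned.append({"name": name, "city": city, "age": int(age)})
    return cleaned


for row in clean_rows(RAW_CSV):
    print(row)
```

In a real project, Pandas would usually do the same job in a few calls; the point here is only to show what "cleaning" means in practice.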

Conclusion

Data science offers a wide range of open-source tools that can help you at every step of your data journey. Whether you're just starting out or looking to deepen your skills, these tools provide everything you need to succeed in data science. By starting with the basics and gradually exploring more advanced tools, you can build a strong foundation in data science and unlock the power of your data.

#DataScience#OpenSourceTools#PythonForDataScience#BeginnerDataScience#JupyterNotebook#RProgramming#MachineLearning#TensorFlow#DataVisualization#BigDataTools#GitAndGitHub#KNIME#DataCleaning#OrangeDataScience#D3js#DataScienceForBeginners#DataScienceToolkit#DataAnalytics#data science course in Coimbatore#LearnDataScience#FreeDataScienceTools


Text

#BigData#DataProcessing#InMemory#DistributedComputing#Analytics#MachineLearning#StreamProcessing#ApacheSpark#OpenSource#DataScience#Hadoop#BigDataTools


Text

There are three major big data security practices that define an enterprise’s BI security system and play an important role in creating a flexible end-to-end big data security system for an organization.


Text

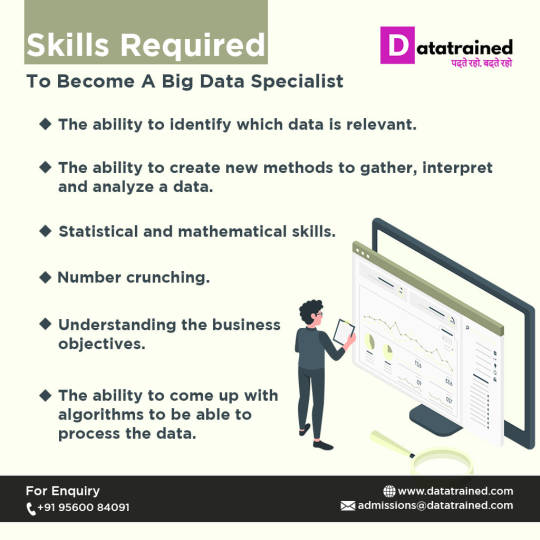

Skills Required to become a Big Data Specialist.

Big data is considered one of today's fastest-emerging technologies. As data volumes grow, organizations are adopting these technologies to gain better insights. Here are the top 5 must-have skills for a big data specialist.

Visit for more: https://datatrained.com/big-data-analytics-in-collaboration-with-ibm?fbclid=IwAR3G7WN2PLFhAc4y7nvbgqiA__wSDGPrfe5OG1_xzzjb1Nz4muVE_YB6GFY

#bigdatatools#datascience#hadoop#dataanalytics#dataanalysis#artificialintelligence#technology#machinelearning


Photo

Gain expertise in big data processing through our Apache Hadoop training in Chennai. We provide comprehensive training with complete hands-on practice. Call 8608700340 to know more. -SLA #bigdatatechnologies #hadoopcertification #apachehadoop #spark #apachespark #bigdatatools #mongodb #apachestorm #rprogramming #neo4j #rapidminer #dataanalytics #hpcc #pentaho #learnfromhome #utilisequarantine #futuristic #careerdriven #postlockdowngoals #preparedforanything #kknagarchennai #slajobs #summerlearning #creative #learning #transformyourcareer #allcourses #bigdataengineer #bigdataanalytics #bigdatahadoop https://www.instagram.com/p/B_AMnT4APMR/?igshid=kzfa4n41671e



Photo

Big Data Software: Comparison of the Best Big Data Tools in 2018

Comparison of the best big data tools in 2018: ✓Dundas BI ✓iCharts ✓Rapid Insight ✓Databox ✓Klipfolio ✓Tableau ✓Board ✓Sisense. Would you like to learn more about this topic and get detailed information? We have carried out an extensive test for you. You can find out more here ➛

https://www.shopboostr.de/big-data-software

Our e-commerce agency from Berlin & Munich will be happy to advise you on implementing your individual project.


Link

Data today is simply too big to ignore. It is the new gold, which needs to be extracted, refined, distributed, and finally monetized. We have around 2.3 trillion gigabytes of data, and that volume is expected to double in the next two years. The use of big data in healthcare might surprise many, but it can save many lives. Here are some of its uses:

1. Patient’s care is everything

The healthcare industry is increasingly focused on improving the quality of patient care. It is also working to reduce healthcare costs and to support the reformed payment structure. Doctors are finding big data useful for sharing patient-related data. This act of sharing data works in patients' favor, as it helps improve healthcare. The approach requires reporting, processing, data management, and process automation. To provide good healthcare, doctors and hospitals must work together.

2. The healthcare and internet of things

The Internet of Things (IoT) is defined as a system of interrelated, connected computing devices that collect and exchange data. The volume of data rises sharply with each connected device, and most of the data received from IoT devices is unstructured. This gives data engineers plenty of scope for using Hadoop and analytics. IoT is important in healthcare, and spending on healthcare IoT is expected to reach around $120 billion in the coming few years.

Also Read: How Big Data Will Change Your Business

3. Reducing the medical cost

The cost of healthcare procedures is extremely high and keeps growing. Predictive analysis comes to the rescue here by helping to cut hospital costs. Using big data, we can track patients who are at high risk and need proper attention. In this way, readmission costs could be reduced substantially. Beyond the data itself, improved technology will help optimize staffing and the pharmaceutical supply chain.

4. Use of Big data in Health Research department

Any research needs the right type and amount of data, and health research is no exception. Hospitals submit data on medical cases, and without big data tools to analyze it, that data has little value. Used well, this data could save many lives and enable better care for all patients.

Read more info at — https://www.oodlestechnologies.com/blog/tagged/bigdata


Photo

When it comes to data analytics tools, we generally have questions. What is the difference between so many data analytics tools? Which is better? Which one should you consider? Get the latest technology updates with techiexpert, as we bring you recent techniques and research on technology. Check out the best tools for big data analytics: j.mp/2G6oOW5 and stay tuned with j.mp/2YsLBm3ko. #techiexpert #technology #bigdatatools #dataanalytics #artificialintelligence


Photo

Know about #BigDataTools with #PegasiMediaGroup at http://bit.ly/2qTuHhD


Photo

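Big data visualization tools are essentially BI tools. They are now widely used in enterprise data analysis and decision-making and are very useful. However, with so many products on the market, you have probably wondered which one to choose. If you want to build a dashboard like the one shown in the figure below, what approach should you use? http://www.finereport.com/jp/analysis/bigdatatools/

BI tools (for data engineers, data analysts, and developers): 1. Tableau 2. FineReport 3. PowerBI

Chart libraries for data visualization (for developers and engineers): 4. Echarts 5. Highcharts

Data map tools: 6. Power Map 2016 7. Arc GIS

Programming languages for data mining (for data scientists and data analysts): 8. R 9. Python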


Link

Another crucial point about data is that its value depends on how it is used. Data is useless unless it is turned into actionable information that helps management make decisions. As a result of this unprecedented growth in data, numerous big data tools and software products have proliferated. These tools can perform many data analysis tasks while saving time and cost. They can also make a business more effective by surfacing business insights.


Video

youtube

What is Big Data Technology | Big Data Industry | Technological Trends


Text

Big Data for Small Businesses – Effective Ways and Precautions to Take

Big data is the big news of our times and is steadily becoming essential for businesses of all kinds and sizes. With the explosion of digital technologies and the rising use of e-commerce and social media networks, people across the globe generate a huge and rapidly growing volume of data every day. Until recently, big data was a data mining solution for larger corporations, which used it to learn about customer behavior and market trends and to analyze their business performance. It required highly sophisticated systems and enormous computing power to cut through all the available information and pull useful insights out of it. However, big data management technology has advanced, and the infrastructure and cost it requires have come down. So not only large corporations but also small businesses can now tap into the power of big data, using relevant big data tools to enhance their operations and improve customer experience.

The power of analytics through big data

Big data is an umbrella term that covers a lot at the ground level. Data is collected from every action and interaction over a connected network: sending an email, posting on Facebook, commenting or rating, updating a profile, shopping online, paying an invoice, making a call on a cell phone, or even using a credit card at the POS in a department store. Every single action generates a digital footprint, which is converted into data and stored, so the total amount of data is huge. To gain useful insight from this vast ocean of data, you need powerful analytical tools that can filter out the relevant information and display it in an easily understandable format. Fortunately, this power is now affordable and accessible to everyone, including small businesses, through various data management platforms. There are even free programs, such as Google Analytics, that can be integrated with customer relationship management tools.

What is the scope of big data in small business?

If you want to tap into this vast and rich landscape of big data for the benefit of your business, there are plenty of avenues to consider. Here is an overview of some of the best applications of big data for small business management.

Sorting through social media

Every business, small or large, is probably already connected to a large number of customers, especially through social media networks. The data generated on such platforms need not be limited to interactions; it can be used effectively for many other purposes. Tools such as Twilert, Social Mention, and Currently let business users set up notifications and alerts whenever a subject related to their business is mentioned online, including the business name, products, services, or relevant keywords. Once you start tracking such mentions, you can tailor your responses and join those conversations to create a buzz around your brand. Doing this consistently with the help of big data tools can generate more interest in your brand and improve engagement and customer satisfaction.
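The keyword alerts described above can be illustrated with a small sketch. This is not how Twilert or any other named tool works internally; it is a hypothetical, standard-library-only example of matching a watch list of keywords against a batch of posts. The keywords and sample posts are invented.

```python
import re

# Hypothetical watch list: replace with your own business name, products, and keywords.
KEYWORDS = ["acme bakery", "sourdough", "gluten-free"]


def find_mentions(posts, keywords):
    """Return (post, matched_keywords) pairs for posts that mention any keyword."""
    # Whole-word, case-insensitive patterns so "Sourdough" matches "sourdough".
    patterns = {
        kw: re.compile(r"\b" + re.escape(kw) + r"\b", re.IGNORECASE)
        for kw in keywords
    }
    alerts = []
    for post in posts:
        matched = [kw for kw, pattern in patterns.items() if pattern.search(post)]
        if matched:
            alerts.append((post, matched))
    return alerts


# Made-up sample posts for illustration only.
sample_posts = [
    "Just tried the sourdough from Acme Bakery, loved it!",
    "Traffic was terrible this morning.",
    "Anyone know a good gluten-free option downtown?",
]

for post, matched in find_mentions(sample_posts, KEYWORDS):
    print(f"ALERT {matched}: {post}")
```

A real monitoring service would pull posts from platform APIs and handle misspellings and hashtags; the sketch only shows the matching step.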

Collection of customized data using CRM

Many free and low-cost CRM applications are now available to small businesses, offering full-featured platforms for tracking customer interactions. Programs such as Zolo, Insightly, and Nimble provide not only baseline big data functions but also help cut through the information and pinpoint what is most relevant for business insights. These analytical platforms also cover social media functions, so small business owners can consolidate the big data collected from various sources and make the most of it.

Monitor the customer calls

If you use a few office telephones for business calls through a Voice over IP application, or run a customer service call center, these can also be an important source of relevant data. You can use big data tools to collect call logs and analyze them for better business insights. Such customer call data can help you to:

- Discover the exact demographics of callers and filter out the prospects.
- Identify the most frequent issues raised in phone calls and find the best-value solutions for them.
- Analyze inbound calling trends, such as the times when you receive the most calls, and decide what measures to take to handle them effectively.
- Optimize customer care through effective call routing and answering.

Most advanced web-based Voice over IP systems now feature automated call logging and analytics tools. They are also becoming less expensive over time.
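As a rough illustration of the call-trend analysis described above, the sketch below counts inbound calls per hour and tallies the most frequent issues. The log format (a timestamp plus an issue tag) and all sample rows are assumptions; real VoIP exports will look different.

```python
from collections import Counter
from datetime import datetime

# Made-up call log rows: (ISO timestamp of the inbound call, issue tag).
# The field layout is an assumption, not the export format of any real system.
CALL_LOG = [
    ("2024-03-04T09:15:00", "billing"),
    ("2024-03-04T09:40:00", "delivery"),
    ("2024-03-04T14:05:00", "billing"),
    ("2024-03-05T09:55:00", "billing"),
    ("2024-03-05T16:30:00", "returns"),
]


def busiest_hours(call_log, top_n=3):
    """Count inbound calls per hour of day and return the busiest hours."""
    hours = Counter(datetime.fromisoformat(ts).hour for ts, _ in call_log)
    return hours.most_common(top_n)


def most_frequent_issues(call_log, top_n=3):
    """Count how often each issue tag appears and return the most common ones."""
    issues = Counter(issue for _, issue in call_log)
    return issues.most_common(top_n)


print("Busiest hours:", busiest_hours(CALL_LOG, top_n=1))
print("Top issues:", most_frequent_issues(CALL_LOG, top_n=1))
```

Knowing the busiest hour and the most common issue is often enough to decide when to staff the phones and which problem to fix first.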

Handling transactional data

One of the most important applications of big data is gathering transactional data and deriving actionable insight from it. Transactional data provides many data points with multiple variables. For example, when a customer pays for goods or services purchased from you, you ideally get data including:

- The item they purchased.
- The volume of the purchase.
- The time of the purchase.
- Which promotional offers or coupons triggered the purchase.
- How they paid for the purchase.

All this information can be gathered if you deploy suitable POS software, which can route it to CRM software for further processing. Although it is not appropriate to store customers' payment details except in encrypted form, you can still create a comprehensive pool of transactional data for future use. For more information, visit RemoteDBA.com.

In conclusion, there are plenty of ways in which small businesses and stores of all shapes and sizes can collect big data and make use of it. With the advent of SaaS platforms for big data, small businesses no longer need complicated in-house data collection systems or high-end analytics teams.
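Returning to the transactional data points discussed in the section on handling transactional data, here is one way such a record might be modeled and summarized. The `Transaction` class, its field names, and the sample purchases are all hypothetical. The payment field stores only the method, never card details.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Transaction:
    """One purchase record; field names are illustrative assumptions."""
    item: str
    quantity: int
    purchased_at: datetime
    promo_code: Optional[str]  # coupon that triggered the purchase, if any
    payment_method: str        # method only (e.g. "card"), no payment details


def units_by_promo(transactions):
    """Total units sold per promo code; purchases without a code go under 'none'."""
    totals = defaultdict(int)
    for t in transactions:
        totals[t.promo_code or "none"] += t.quantity
    return dict(totals)


# Made-up sample purchases for illustration only.
SAMPLE = [
    Transaction("coffee beans", 2, datetime(2024, 3, 4, 10, 30), "SPRING10", "card"),
    Transaction("coffee beans", 1, datetime(2024, 3, 4, 11, 5), None, "cash"),
    Transaction("grinder", 1, datetime(2024, 3, 5, 15, 45), "SPRING10", "card"),
]

print(units_by_promo(SAMPLE))
```

Grouping by promo code is just one possible summary; the same records could be grouped by hour, item, or payment method.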

#bigdata#BigDataforSmallBusinesses#bigdatatools#Business#businessadvicetips#data#smallbusinessadvices#Technology#technologyadviceforstartup#technologynews#thepowerofbigdata



Photo

The fourth day of the FDP was dedicated to honing faculty skills in neural and ad hoc networks, activation functions, and the working of MLPs in R on datasets from the UCI Machine Learning Repository.

The session was conducted by Dr. B Chandra, Professor, IIT Delhi.

Visit Website For More Updates: www.jimsindia.org
