#citationflow

Text

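It lets you integrate Google Search Console by authorizing through our Google account, then import the Search Console data from there and attach it to each URL. This is very useful for understanding how Google sees the indexing.

Google Analytics: the same authorization is granted, but importing the data is slightly more involved. I have to define which data I want. By default, SF (Screaming Frog) pulls only the basics, but whatever we have configured in Analytics can be added to each URL. We add the Analytics data and integrate it into each URL. This is interesting because it lets us prioritize the work better.

Structure analysis and crawl budget

Google assigns each site a processing time, which is shown in the crawl stats in Google Search Console. Since not all content matters equally for SEO, it is important to keep Google from wasting time on pages that add no value. If we optimize this process, Google will index the interesting content better. We can use WPO, link sculpting, next/prev, robots, meta robots, etc. In this way we prioritize the indexing of different content so that rankings are concentrated on certain URLs. Among other things, I have to adapt the information architecture to my content tree.

We see this in Screaming Frog's site structure: the links pointing to each page and each URL's level (click distance from the home page). All of this tells me the priority assigned to a given page. I can also extract the crawl path to a specific page.

Web migrations

A web migration is always a sensitive moment because web traffic is at stake. Screaming Frog gives you better control over the process and lets you check that everything went well. We start with an audit of the new site, and we can also verify the links from the old pages to the new ones. We analyze all of this with Excel macros, putting Screaming Frog in list mode to check every 301.

SEO tools need to be used within an overall SEO process suited to each business in order to get the most out of them. We reviewed the main features of the SEO process and identified the best tools for each stage.

Eduardo Garolera, SEO and digital marketing consultant. Patricia Salgado introduced the event. SEO Clinic keeps growing and has earned a reputation for presenting real cases.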
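The migration check mentioned above (crawling the old URLs in list mode and confirming every 301) can also be scripted once the crawl results are exported. The following is only a minimal sketch: the `CrawlRow` fields, the `check_redirects` helper, and the example URLs are hypothetical and do not reflect the column names of any real export.

```python
from typing import Dict, List, NamedTuple


class CrawlRow(NamedTuple):
    """One row of a list-mode crawl export (field names are hypothetical)."""
    url: str
    status: int
    redirect_to: str


def check_redirects(expected: Dict[str, str], rows: List[CrawlRow]) -> Dict[str, str]:
    """Return {old_url: problem} for every old URL that is not a clean 301."""
    crawled = {row.url: row for row in rows}
    problems: Dict[str, str] = {}
    for old_url, new_url in expected.items():
        row = crawled.get(old_url)
        if row is None:
            problems[old_url] = "not crawled"
        elif row.status != 301:
            problems[old_url] = f"status {row.status}, expected 301"
        elif row.redirect_to != new_url:
            problems[old_url] = f"redirects to {row.redirect_to}, expected {new_url}"
    return problems


# Invented example data: the planned old -> new mapping and three crawl rows.
expected_map = {
    "https://old.example/a": "https://new.example/a",
    "https://old.example/b": "https://new.example/b",
    "https://old.example/c": "https://new.example/c",
}
crawl = [
    CrawlRow("https://old.example/a", 301, "https://new.example/a"),
    CrawlRow("https://old.example/b", 302, "https://new.example/b"),
    CrawlRow("https://old.example/c", 301, "https://new.example/"),
]

for url, problem in check_redirects(expected_map, crawl).items():
    print(url, "->", problem)
```

Only the URLs that need attention are reported, which keeps the review list short on a large migration.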

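It is run every month by Miguel Pascual, Arturo Marimón and

It is very important that the company and the SEO department stay aligned. For example, some services offer to rank three keywords for 50 euros a month. It is hard to judge, but it makes you think. When you dig a little, you find that such a service is unlikely to benefit you as a company. You can find SEO services that may or may not lead to penalties, but we have to reflect on what the client will actually get. What are we doing to get the best results?

The SEO process

Today the SEO process is understood as a cross-cutting discipline that interacts with other departments to get the most out of the activity. This collaboration means developing a web product that is strongly user-centered.

It is no longer about tricking search engines but about meeting users' needs. It is about building the site so that its structure is geared toward attracting traffic and fits users' search scenarios. This is not magic: it is analysis. Some SEO consultants are secretive about what they do. You have to be transparent and able to share what will be done on indexing, links, crawling, keyword analysis, content and so on. To carry out these analyses we need a place, a dashboard, to collect this information and see how it evolves. We see how organic traffic improves, the impact of what was implemented at the traffic level, and the KPIs of the tools in use, so that all these metrics can be monitored regularly to make decisions. This way we can integrate new useful tools or discard those that add no value.

Do it yourself

It is important that an SEO culture exists in the company. That is the difference between companies that rank and those that do not: it is a shared concern across all areas of the company. Consult the SEO specialist about every action taken.

SEO is just another channel

If the sales department is integrated into day-to-day business, SEO, as a source of traffic acquisition, must be integrated on equal terms with the other departments. Combine SEO with sales.

Tools

We can choose tools according to the complexity of the work, the number of clients, the return on investment, etc. We can keep it simple with Search Console, Analytics and Excel, or scale up and combine several analysis tools. With tools we sometimes get stuck in the execution, but what matters is that they provide the data we are interested in. Maintaining a wiki to hold the company's knowledge can also be worthwhile, since it protects the company's knowledge and experience.

Replicate what works

If you are building expertise, look for success stories and replicate them. What works, repeat it. When we are about to run a link building campaign, we sometimes find domains without many links. But we have to work from the root domain. Let's move on to the important part, TrustFlow and CitationFlow. Perhaps, because the values are low, we think the page is not worth it. César analyzed Clinicseo.es: 12,000 links from 203 referring domains. Ideally these two numbers match: domains and IPs.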

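Otherwise it smells like a link network.

Majestic gives us the backlink history of links and domains on a timeline. This is very important for detecting activity: when we received and gained links, when we bought a large number of links, and so on. Sites have a natural trend of link growth. A sharp rise may signal an attack, link buying, the result of a link building campaign, etc., but we have to find the cause. We also see the distribution of follow and nofollow links. We think this is not as important as people assume, at least for the home page, and we should not worry about it. We can also see charts in the data, but the most fundamental thing is the distribution of the anchor text our site receives. We can see whether, in a very short time, attention was concentrated on a few commercial anchors rather than brand anchors. If we are in a given niche and other sites get more brand anchors, Google may judge that we should be getting more brand anchors too. He shows data from an e-commerce site that ranks very well. We see a large number of links from a small number of different domains. We see that CitationFlow is much larger than TrustFlow.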
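To make the brand versus commercial anchor comparison concrete, here is a rough sketch of how the split could be computed from an exported list of anchor texts. The function `anchor_shares`, the brand term "acme", and the sample anchors are all invented for illustration; real classification would need more care than a substring match.

```python
from collections import Counter
from typing import Dict, Iterable, Set


def anchor_shares(anchors: Iterable[str], brand_terms: Set[str]) -> Dict[str, float]:
    """Share of anchors that contain a brand term versus all other anchors."""
    counts: Counter = Counter()
    for anchor in anchors:
        text = anchor.lower()
        is_brand = any(term in text for term in brand_terms)
        counts["brand" if is_brand else "other"] += 1
    total = sum(counts.values())
    if total == 0:
        return {"brand": 0.0, "other": 0.0}
    return {label: counts[label] / total for label in ("brand", "other")}


# Invented sample: three brand anchors and one commercial anchor.
sample_anchors = ["Acme", "acme.example", "cheap flights", "Acme shop"]
shares = anchor_shares(sample_anchors, {"acme"})
print(shares)
```

Running the same function on a competitor's anchors gives the reference point the text describes: the profile is judged against others in the niche, not against a fixed target.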

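When the points cluster around the midline rather than deviating from it, the page is better. But this may pose a threat for the future.

Majestic SEO, by César Aparicio

In the evolution over time, we can see popularity rising at certain moments: this is due to negative SEO actions. Anchor text distribution: we see Chinese anchors adding negative SEO to Wimdu.es. Yet, contrary to what one would assume, it ranks very well. So the standard for judging whether a profile is good or bad is the other sites, not the anchor text. We can view links by domain, as well as the specific URLs sending us links, so that we can analyze them. It shows us the Citation Flow and Trust Flow of each URL. We can also see a tag cloud proportional to the dominant anchors pointing to our site. You can analyze how a competitor's link building is going just by looking at the anchors shown in this chart. We find that they link to us with the same anchor text, with a very large number of links but also from a large number of domains. We have to analyze this. If we find that a domain ranks well this way, then by matching or beating the competitor's numbers we should be able to overtake it: more links, links from more different domains, or links from better domains (more authority). We can display and analyze the sites that link with certain anchor texts, so I can see the type of domain that links: in this case, the links appear in the footer or sidebar.

They may or may not be bad, depending on the profile, the anchors, the destination, etc. Toxic link profiles often come from East Asia and other Asian countries, so it is worth looking at the link origin map. We can also analyze the links reaching a specific page. To do that, we go to the Pages section instead of analyzing the root domain. The TrustFlow and CitationFlow chart: its meaning is not clear-cut, but it is better for all the points to sit close to the regression line. In principle, the greater the dispersion, the worse the quality of the domain. What should we look at? We can download the data and try to establish relationships between the metrics. We can build correlation plots. In general, if DA goes up, TrustFlow goes up. Alexa, on the other hand, shows no correlation: it is not meant to measure link quality. When CitationFlow is very high and TrustFlow is very low, it may point to problems. But remember that we are trying to compete with a machine.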
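The warning sign described above (very high CitationFlow with very low TrustFlow) can be screened for with a simple ratio. This is a sketch under stated assumptions: the `min_ratio` threshold of 0.5 is an arbitrary illustrative value, not a Majestic recommendation, and the domain names and scores are invented.

```python
from typing import Dict, List, Optional, Tuple


def trust_ratio(trust_flow: float, citation_flow: float) -> Optional[float]:
    """TrustFlow divided by CitationFlow, or None when CitationFlow is zero."""
    if citation_flow == 0:
        return None
    return trust_flow / citation_flow


def flag_domains(metrics: Dict[str, Tuple[float, float]], min_ratio: float = 0.5) -> List[str]:
    """Domains whose TrustFlow is low relative to their CitationFlow.

    `metrics` maps domain -> (trust_flow, citation_flow).
    `min_ratio` is a hypothetical cut-off chosen only for this example.
    """
    flagged = []
    for domain, (tf, cf) in metrics.items():
        ratio = trust_ratio(tf, cf)
        if ratio is not None and ratio < min_ratio:
            flagged.append(domain)
    return sorted(flagged)


# Invented scores for three made-up domains.
scores = {
    "a.example": (40, 42),  # balanced profile
    "b.example": (5, 48),   # many links, little trust
    "c.example": (0, 0),    # no data, skipped
}
print(flag_domains(scores))
```

A flag here is only a prompt to inspect the link profile by hand; as the text notes, whether a profile is a problem depends on the anchors, the destinations and what competitors look like.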

Our contact details

Telegram: https://t.me/dbtodata

Whatsapp: https://wa.me/8801918754550

0 notes

Photo

Majestic SEO Tool - Link intelligence tools for SEO and Internet PR and Marketing. Site Explorer shows the inbound links and site summary data.

#SEO #ContentMarketing #DigitalMarketing #OnlineMarketing #SEOTools #SEOTips #Marketing #Majestic

#Backlinks #CitationFlow #TrustFlow #TopicalTrustFlow #LinkResearchTools #qualitybacklinks #Backlinkresearch #Linkbuilding #Backlink #SEOTools #Majesticseo

1 note

Text

Ahrefs

Ahrefs is a well-known tool set for backlink and SEO analysis. The Site Audit tool analyses your website for common SEO issues and monitors your SEO health over time. Alerts keep you notified of new and lost backlinks, web mentions and keyword rankings.

INBOX me if you are interested in buying a monthly subscription at a really exciting price. The original price is $99 per month. You will get a discount of at least 40-50%! Hurry up!!

#analysis #content #traffic #competitor #keywords #backlinks #seoexpert #domainrating #domainauthority #clicks #trustflow #citationflow #topseotool #visitors

0 notes

Text

A Comprehensive Analysis of the New Domain Authority

Posted by rjonesx.

Moz’s Domain Authority is requested over 1,000,000,000 times per year, it’s referenced millions of times on the web, and it has become a veritable household name among search engine optimizers for a variety of use cases, from determining the success of a link building campaign to qualifying domains for purchase. With the launch of Moz’s entirely new, improved, and much larger link index, we recognized the opportunity to revisit Domain Authority with the same rigor as we did keyword volume years ago (which ushered in the era of clickstream-modeled keyword data).

What follows is a rigorous treatment of the new Domain Authority metric. What I will not do in this piece is rehash the debate over whether Domain Authority matters or what its proper use cases are. I have and will address those at length in a later post. Rather, I intend to spend the following paragraphs addressing the new Domain Authority metric from multiple directions.

Correlations between DA and SERP rankings

The most important component of Domain Authority is how well it correlates with search results. But first, let’s get the correlation-versus-causation objection out of the way: Domain Authority does not cause search rankings. It is not a ranking factor. Domain Authority predicts the likelihood that one domain will outrank another. That being said, its usefulness as a metric is tied in large part to this value. The stronger the correlation, the more valuable Domain Authority is for predicting rankings.

Methodology

Determining the “correlation” between a metric and SERP rankings has been accomplished in many different ways over the years. Should we compare against the “true first page,” top 10, top 20, top 50 or top 100? How many SERPs do we need to collect in order for our results to be statistically significant? It’s important that I outline the methodology for reproducibility and for any comments or concerns on the techniques used. For the purposes of this study, I chose to use the “true first page.” This means that the SERPs were collected using only the keyword with no additional parameters. I chose to use this particular data set for a number of reasons:

The true first page is what most users experience, thus the predictive power of Domain Authority will be focused on what users see.

By not using any special parameters, we’re likely to get Google’s typical results.

By not extending beyond the true first page, we’re likely to avoid manually penalized sites (which can impact the correlations with links.)

We did NOT use the same training set or training set size as we did for this correlation study. That is to say, we trained on the top 10 but are reporting correlations on the true first page. This prevents us from the potential of having a result overly biased towards our model.

I randomly selected 16,000 keywords from the United States keyword corpus for Keyword Explorer. I then collected the true first page for all of these keywords (completely different from those used in the training set). I extracted the URLs, but I also chose to remove duplicate domains (i.e., cases where the same domain occurred one after another). For a time, Google clustered domains together in the SERPs under certain circumstances. It was easy to spot these clusters, as the second and later listings were indented. No such indentations are present any longer, but we can’t be certain that Google never groups domains. If it does group domains, that would throw off the correlation, because the grouping rather than the traditional link-based algorithm would be doing the work.
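The de-duplication step can be pictured as collapsing runs of adjacent results from the same domain down to the first listing. The sketch below is an illustration only, not the code used in the study: `domain_of` and `collapse_adjacent_domains` are hypothetical helpers, and treating the hostname minus a leading "www." as the domain is a simplifying assumption.

```python
from itertools import groupby
from typing import List
from urllib.parse import urlsplit


def domain_of(url: str) -> str:
    """Hostname with a leading 'www.' removed (a simplification, not a true root domain)."""
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def collapse_adjacent_domains(urls: List[str]) -> List[str]:
    """Keep only the first URL of each run of consecutive results from one domain."""
    return [next(group) for _, group in groupby(urls, key=domain_of)]


# Invented SERP: the first two results share a domain and sit next to each other.
serp = [
    "https://a.example/1",
    "https://www.a.example/2",
    "https://b.example/",
    "https://a.example/3",
]
print(collapse_adjacent_domains(serp))
```

Note that the last result is kept even though its domain appeared earlier: only domains that occur one after another are collapsed, which matches the wording of the methodology.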

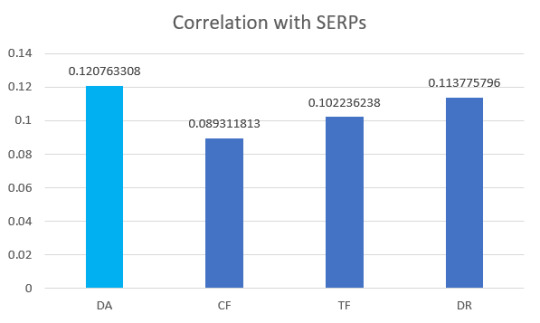

I collected the Domain Authority (Moz), Citation Flow and Trust Flow (Majestic), and Domain Rating (Ahrefs) for each domain and calculated the Spearman correlation coefficient between each metric and ranking position for each SERP. I then averaged the coefficients for each metric.
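As a rough illustration of this calculation, the sketch below computes a Spearman coefficient between ranking position and a metric for each SERP and then averages the coefficients. It is a standard-library implementation written for this example, not the study's code; the function names are hypothetical, each SERP is assumed to be a list of metric scores in ranked order (position 1 first), and SERPs where every score is identical are skipped because the coefficient is undefined there.

```python
import math
from statistics import mean
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the rank-transformed values."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when one side is constant
    return cov / math.sqrt(var_x * var_y)


def mean_serp_correlation(serps: List[List[float]]) -> float:
    """Average the per-SERP coefficients between position and metric score."""
    coefficients = []
    for scores in serps:
        positions = list(range(1, len(scores) + 1))
        rho = spearman(positions, scores)
        if not math.isnan(rho):
            coefficients.append(rho)
    return mean(coefficients) if coefficients else float("nan")


# Invented scores: one SERP where the metric falls with position, one where it rises.
example_serps = [[90, 80, 70, 60], [10, 20, 30, 40]]
print(mean_serp_correlation(example_serps))
```

With this orientation, a metric that is higher for better-ranked results produces a negative coefficient, because position 1 is the smallest number.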

Outcome

Moz’s new Domain Authority has the strongest correlations with SERPs of the competing strength-of-domain link-based metrics in the industry. The sign (-/+) has been inverted in the graph for readability, although the actual coefficients are negative (and should be).

Moz’s Domain Authority scored ~0.12, roughly 6% stronger than the next best competitor (Domain Rating by Ahrefs). Domain Authority performed 35% better than CitationFlow and 18% better than TrustFlow. This isn’t surprising, in that Domain Authority is trained to predict rankings while our competitors’ strength-of-domain metrics are not. It shouldn’t be taken as a negative that our competitors’ strength-of-domain metrics don’t correlate as strongly as Moz’s Domain Authority; rather, it simply reflects the intrinsic differences between the metrics. That being said, if you want a metric that best predicts rankings at the domain level, Domain Authority is that metric.

Note: At first blush, Domain Authority’s improvements over the competition are, frankly, underwhelming. The truth is that we could quite easily increase the correlation further, but doing so would risk over-fitting and compromising a secondary goal of Domain Authority…

Handling link manipulation

Historically, Domain Authority has focused on only one single feature: maximizing the predictive capacity of the metric. All we wanted were the highest correlations. However, Domain Authority has become, for better or worse, synonymous with “domain value” in many sectors, such as among link buyers and domainers. Subsequently, as bizarre as it may sound, Domain Authority has itself been targeted for spam in order to bolster the score and sell at a higher price. While these crude link manipulation techniques didn’t work so well in Google, they were sufficient to increase Domain Authority. We decided to rein that in.

Data sets

The first thing we did was compile a series of data sets that corresponded with industries we wished to impact, knowing that Domain Authority was regularly manipulated in these circles.

Random domains

Moz customers

Blog comment spam

Low-quality auction domains

Mid-quality auction domains

High-quality auction domains

Known link sellers

Known link buyers

Domainer network

Link network

While it would be my preference to release all the data sets, I’ve chosen not to in order to not “out” any website in particular. Instead, I opted to provide these data sets to a number of search engine marketers for validation. The only data set not offered for outside validation was Moz customers, for obvious reasons.

Methodology

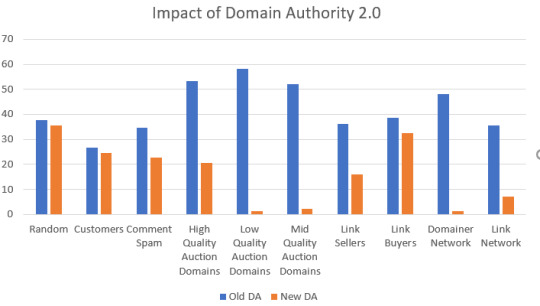

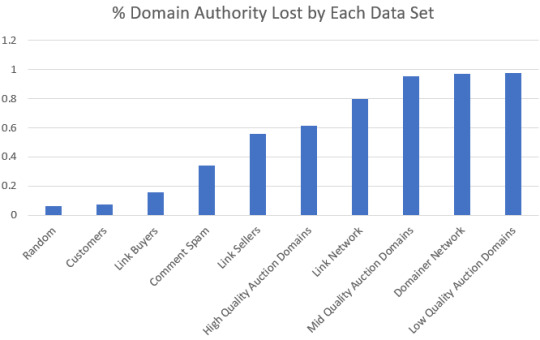

For each of the above data sets, I collected both the old and new Domain Authority scores. This was all done on February 28th in order to have parity across all tests. I then calculated the relative difference between the old DA and new DA within each group. Finally, I compared the various data set results against one another to confirm that the model addresses the various methods of inflating Domain Authority.
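The relative difference within a group can be sketched as follows. This assumes the comparison is made between the group's average old score and average new score, which is one plausible reading of the methodology rather than a confirmed detail. The group names and scores are invented and are not Moz data.

```python
from statistics import mean
from typing import Dict, List, Tuple


def relative_change(old_scores: List[float], new_scores: List[float]) -> float:
    """Relative change from the average old score to the average new score."""
    old_avg = mean(old_scores)
    new_avg = mean(new_scores)
    return (new_avg - old_avg) / old_avg


# Invented groups: (old DA scores, new DA scores) for the same domains.
groups: Dict[str, Tuple[List[float], List[float]]] = {
    "group_a": ([50, 30, 20], [45, 27, 18]),
    "group_b": ([40, 60], [10, 15]),
}

changes = {name: relative_change(old, new) for name, (old, new) in groups.items()}
for name, change in changes.items():
    print(f"{name}: {change:+.1%}")
```

Comparing the resulting percentages across groups is what lets a general re-centering (a small drop everywhere) be separated from a targeted correction (a much larger drop in one group).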

Results

In the above graph, blue represents the Old Average Domain Authority for that data set and orange represents the New Average Domain Authority for that same data set. One immediately noticeable feature is that every category drops. Even random domains drop. This is a re-centering of the Domain Authority score and should cause no alarm to webmasters. There is, on average, a 6% reduction in Domain Authority for randomly selected domains from the web. Thus, if your Domain Authority drops a few points, you are well within the range of normal. Now, let’s look at the various data sets individually.

Random domains: -6.1%

Using the same methodology for finding random domains that we use for collecting comparative link statistics, I selected 1,000 domains and determined that there is, on average, a 6.1% drop in Domain Authority. It’s important that webmasters recognize this, as the shift is likely to affect most sites and is nothing to worry about.

Moz customers: -7.4%

Of immediate interest to Moz is how our own customers perform in relation to the random set of domains. On average, the Domain Authority of Moz customers dropped by 7.4%. This is very close to the random set of domains and indicates that most Moz customers are likely not using techniques to manipulate DA to any large degree.

Link buyers: -15.9%

Surprisingly, link buyers only lost 15.9% of their Domain Authority. In retrospect, this seems reasonable. First, we looked specifically at link buyers from blog networks, which aren’t as spammy as many other techniques. Second, most of the sites paying for links are also optimizing their site’s content, which means the sites do rank, sometimes quite well, in Google. Because Domain Authority trains against actual rankings, it’s reasonable to expect that the link buyers data set would not be impacted as highly as other techniques because the neural network learns that some link buying patterns actually work.

Comment spammers: -34%

Here’s where the fun starts. The neural network behind Domain Authority was able to drop comment spammers’ average DA by 34%. I was particularly pleased with this one because of all the types of link manipulation addressed by Domain Authority, comment spam is, in my honest opinion, no better than vandalism. Hopefully this will have a positive impact on decreasing comment spam — every little bit counts.

Link sellers: -56%

I was actually quite surprised, at first, that link sellers on average dropped 56% in Domain Authority. I knew that link sellers often participated in link schemes (normally interlinking their own blog networks to build up DA) so that they can charge higher prices. However, it didn’t occur to me that link sellers would be easier to pick out because they explicitly do not optimize their own sites beyond links. Subsequently, link sellers tend to have inflated, bogus link profiles and flimsy content, which means they tend to not rank in Google. If they don’t rank, then the neural network behind Domain Authority is likely to pick up on the trend. It will be interesting to see how the market responds to such a dramatic change in Domain Authority.

High-quality auction domains: -61%

One of the features that I’m most proud of with regard to Domain Authority is that it effectively addressed link manipulation in order of our intuition regarding quality. I created three different data sets out of one larger data set (auction domains), where I used certain qualifiers like price, TLD, and archive.org status to label each domain as high-quality, mid-quality, or low-quality. In theory, if the neural network does its job correctly, we should see the high-quality domains impacted the least and the low-quality domains impacted the most. This is the exact pattern which was rendered by the new model. High-quality auction domains dropped an average of 61% in Domain Authority. That seems really high for “high-quality” auction domains, but even a cursory glance at the backlink profiles of domains that are up for sale in the $10K+ range shows clear link manipulation. The domainer industry, especially the domainer-for-SEO industry, is rife with spam.

Link network: -79%

There is one network on the web that troubles me more than any other. I won’t name it, but it’s particularly pernicious because the sites in this network all link to the top 1,000,000 sites on the web. If your site is in the top 1,000,000 on the web, you’ll likely see hundreds of root linking domains from this network no matter which link index you look at (Moz, Majestic, or Ahrefs). You can imagine my delight to see that it drops roughly 79% in Domain Authority, and rightfully so, as the vast majority of these sites have been banned by Google.

Mid-quality auction domains: -95%

Continuing with the pattern regarding the quality of auction domains, you can see that “mid-quality” auction domains dropped nearly 95% in Domain Authority. This is huge. Bear in mind that these drastic drops are not combined with losses in correlation with SERPs; rather, the neural network is learning to distinguish between backlink profiles far more effectively, separating the wheat from the chaff.

Domainer networks: -97%

If you spend any time looking at dropped domains, you have probably come upon a domainer network where there are a series of sites enumerated and all linking to one another. For example, the first site might be sbt001.com, then sbt002.com, and so on and so forth for thousands of domains. While it’s obvious for humans to look at this and see a pattern, Domain Authority needed to learn that these techniques do not correlate with rankings. The new Domain Authority does just that, dropping the domainer networks we analyzed on average by 97%.
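The enumerated pattern that humans spot at a glance (sbt001.com, sbt002.com, and so on) can be illustrated with a simple grouping heuristic. To be clear, this is not how Domain Authority works: the article describes a neural network trained against rankings. The regular expression, the `enumerated_families` helper and the `min_size` cut-off below are hypothetical and only show what such a pattern looks like in code.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple

# A letters-only stem, a trailing number, then the rest of the domain (e.g. "sbt" + "001" + "com").
NUMBERED = re.compile(r"^(?P<stem>[a-z-]+?)(?P<num>\d+)\.(?P<suffix>[a-z.]+)$")


def enumerated_families(domains: List[str], min_size: int = 3) -> Dict[Tuple[str, str], List[str]]:
    """Group domains that differ only by a trailing number and keep the larger groups.

    `min_size` is an arbitrary illustrative threshold.
    """
    families: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for domain in domains:
        match = NUMBERED.match(domain.lower())
        if match:
            families[(match["stem"], match["suffix"])].append(domain)
    return {key: sorted(members) for key, members in families.items() if len(members) >= min_size}


sample_domains = ["sbt001.com", "sbt002.com", "sbt003.com", "example.com", "shop24.com"]
print(enumerated_families(sample_domains))
```

A single numbered domain such as the invented shop24.com is left alone; only a run of siblings sharing the same stem and suffix is reported.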

Low-quality auction domains: -98%

Finally, the worst offenders — low-quality auction domains — dropped 98% on average. Domain Authority just can’t be fooled in the way it has in the past. You have to acquire good links in the right proportions (in accordance with a natural model and sites that already rank) if you wish to have a strong Domain Authority score.

What does this mean?

For most webmasters, this means very little. Your Domain Authority might drop a little bit, but so will your competitors’. For search engine optimizers, especially consultants and agencies, it means quite a bit. The inventories of known link sellers will probably diminish dramatically overnight. High DA links will become far more rare. The same is true of those trying to construct private blog networks (PBNs). Of course, Domain Authority doesn’t cause rankings so it won’t impact your current rank, but it should give consultants and agencies a much smarter metric for assessing quality.

What are the best use cases for DA?

Compare changes in your Domain Authority with your competitors. If you drop significantly more, or increase significantly more, it could indicate that there are important differences in your link profile.

Compare changes in your Domain Authority over time. The new Domain Authority will update historically as well, so you can track your DA. If your DA is decreasing over time, especially relative to your competitors, you probably need to get started on outreach.

Assess link quality when looking to acquire dropped or auction domains. Those looking to acquire dropped or auction domains now have a much more powerful tool in their hands for assessing quality. Of course, DA should not be the primary metric for assessing the quality of a link or a domain, but it certainly should be in every webmaster’s toolkit.

What should we expect going forward?

We aren’t going to rest. An important philosophical shift has taken place at Moz with regards to Domain Authority. In the past, we believed it was best to keep Domain Authority static, rarely updating the model, in order to give users an apples-to-apples comparison. Over time, though, this meant that Domain Authority would become less relevant. Given the rapidity with which Google updates its results and algorithms, the new Domain Authority will be far more agile as we give it new features, retrain it more frequently, and respond to algorithmic changes at Google. We hope you like it.

from https://dentistry01.wordpress.com/2019/03/05/a-comprehensive-analysis-of-the-new-domain-authority/

0 notes

Photo

Can this hurt your rankings? https://www.reddit.com/r/SEO/comments/apf6ei/can_this_hurt_your_rankings/

I'm buying links from several generic "how-to" domains with high trustflow and citationflow, however, they are linking to two of my sites that are on the same hosting (same IP). Does this indicate to Google that these links are 'biased', since they come from the same site and are linking to two completely unrelated sites but on the same IP?

submitted by /u/SuperiorSeduction on February 11, 2019 at 04:35PM

0 notes

Text

A Comprehensive Analysis of the New Domain Authority

New Post has been published on http://miamiwebdesignbyniva.com/index.php/2019/03/19/a-comprehensive-analysis-of-the-new-domain-authority/


Compare changes in your Domain Authority with your competitors. If you drop significantly more, or increase significantly more, it could indicate that there are important differences in your link profile.

Compare changes in your Domain Authority over time. The new Domain Authority will update historically as well, so you can track your DA. If your DA is decreasing over time, especially relative to your competitors, you probably need to get started on outreach.

Assess link quality when looking to acquire dropped or auction domains. Those looking to acquire dropped or auction domains now have a much more powerful tool in their hands for assessing quality. Of course, DA should not be the primary metric for assessing the quality of a link or a domain, but it certainly should be in every webmaster’s toolkit.

What should we expect going forward?

We aren’t going to rest. An important philosophical shift has taken place at Moz with regards to Domain Authority. In the past, we believed it was best to keep Domain Authority static, rarely updating the model, in order to give users an apples-to-apples comparison. Over time, though, this meant that Domain Authority would become less relevant. Given the rapidity with which Google updates its results and algorithms, the new Domain Authority will be far more agile as we give it new features, retrain it more frequently, and respond to algorithmic changes from Google. We hope you like it.

Be sure to join us on Thursday, March 14th at 10am PT at our upcoming webinar discussing strategies & use cases for the new Domain Authority:


A Comprehensive Analysis of the New Domain Authority

Posted by rjonesx.

Moz's Domain Authority is requested over 1,000,000,000 times per year, it's referenced millions of times on the web, and it has become a veritable household name among search engine optimizers for a variety of use cases, from determining the success of a link building campaign to qualifying domains for purchase. With the launch of Moz's entirely new, improved, and much larger link index, we recognized the opportunity to revisit Domain Authority with the same rigor as we did keyword volume years ago (which ushered in the era of clickstream-modeled keyword data).

What follows is a rigorous treatment of the new Domain Authority metric. What I will not do in this piece is rehash the debate over whether Domain Authority matters or what its proper use cases are. I have addressed those questions before and will address them at length in a later post. Rather, I intend to spend the following paragraphs addressing the new Domain Authority metric from multiple directions.

Correlations between DA and SERP rankings

The most important component of Domain Authority is how well it correlates with search results. But first, let's get the correlation-versus-causation objection out of the way: Domain Authority does not cause search rankings. It is not a ranking factor. Domain Authority predicts the likelihood that one domain will outrank another. That being said, its usefulness as a metric is tied in large part to this value. The stronger the correlation, the more valuable Domain Authority is for predicting rankings.

Methodology

Determining the "correlation" between a metric and SERP rankings has been accomplished in many different ways over the years. Should we compare against the "true first page," top 10, top 20, top 50 or top 100? How many SERPs do we need to collect in order for our results to be statistically significant? It's important that I outline the methodology for reproducibility and for any comments or concerns on the techniques used. For the purposes of this study, I chose to use the "true first page." This means that the SERPs were collected using only the keyword with no additional parameters. I chose to use this particular data set for a number of reasons:

The true first page is what most users experience, thus the predictive power of Domain Authority will be focused on what users see.

By not using any special parameters, we're likely to get Google's typical results.

By not extending beyond the true first page, we're likely to avoid manually penalized sites (which can impact the correlations with links.)

We did NOT reuse the training set, or the training set size, for this correlation study. That is to say, we trained on the top 10 but are reporting correlations on the true first page. This guards against a result that is overly biased towards our model.

I randomly selected 16,000 keywords from the United States keyword corpus for Keyword Explorer. I then collected the true first page for all of these keywords (completely different from those used in the training set). I extracted the URLs, but I also chose to remove duplicate domains (i.e., cases where the same domain occurred one after another). For a length of time, Google used to cluster domains together in the SERPs under certain circumstances. It was easy to spot these clusters, as the second and later listings were indented. No such indentations are present any longer, but we can't be certain that Google never groups domains. If they do group domains, it would throw off the correlation because it's the grouping and not the traditional link-based algorithm doing the work. I collected the Domain Authority (Moz), Citation Flow and Trust Flow (Majestic), and Domain Rank (Ahrefs) for each domain and calculated the Spearman correlation coefficient for each SERP. I then averaged the coefficients for each metric.
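The procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used for the study: the function names, the shape of the `serps` input (one list of domains per keyword, in ranking order), and the `metric` lookup are all hypothetical, and Spearman's coefficient is computed by hand so the sketch needs nothing beyond the standard library.

```python
from itertools import groupby
from statistics import mean


def dedupe_consecutive(domains):
    """Collapse runs of the same domain appearing one after another."""
    return [domain for domain, _ in groupby(domains)]


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman's rho: the Pearson correlation computed on the ranks."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return None  # undefined when one side is constant
    return cov / (var_x * var_y) ** 0.5


def mean_serp_correlation(serps, metric):
    """Average the per-SERP coefficient between position and metric score."""
    coefficients = []
    for domains in serps:
        domains = dedupe_consecutive(domains)
        positions = list(range(1, len(domains) + 1))
        scores = [metric[d] for d in domains]
        rho = spearman(positions, scores)
        if rho is not None:
            coefficients.append(rho)
    return mean(coefficients)
```

Because position 1 is the best ranking, a metric that predicts rankings well produces a negative coefficient: higher scores go with lower position numbers.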

Outcome

Moz's new Domain Authority has the strongest correlations with SERPs of the competing strength-of-domain link-based metrics in the industry. The sign (-/+) has been inverted in the graph for readability, although the actual coefficients are negative (and should be).

Moz's Domain Authority scored a ~.12, or roughly 6% stronger than the next best competitor (Domain Rank by Ahrefs). Domain Authority performed 35% better than CitationFlow and 18% better than TrustFlow. This isn't surprising, in that Domain Authority is trained to predict rankings while our competitors' strength-of-domain metrics are not. It shouldn't be taken as a negative that our competitors' strength-of-domain metrics don't correlate as strongly as Moz's Domain Authority; rather, it simply exemplifies the intrinsic differences between the metrics. That being said, if you want a metric that best predicts rankings at the domain level, Domain Authority is that metric.
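To make the "X% stronger" comparisons concrete, here is a minimal sketch of the arithmetic, assuming the percentages are relative differences between the magnitudes of the averaged coefficients. That reading is an assumption, as are the function names. The competitors' coefficients are not reported in the text, so anything derived this way is a back-of-envelope estimate rather than a measured figure.

```python
def relative_gain(ours, theirs):
    """Relative difference in coefficient magnitude (assumed meaning of '% stronger')."""
    return (abs(ours) - abs(theirs)) / abs(theirs)


def implied_competitor(ours, gain):
    """Coefficient magnitude a competitor would need for `ours` to be `gain` stronger."""
    return abs(ours) / (1 + gain)


if __name__ == "__main__":
    da = 0.12  # approximate magnitude stated in the text
    stated_gains = [("Domain Rank", 0.06), ("TrustFlow", 0.18), ("CitationFlow", 0.35)]
    for name, gain in stated_gains:
        # Estimates only: derived from rounded figures, not reported values.
        print(f"{name}: implied coefficient ~{implied_competitor(da, gain):.3f}")
```

Since both inputs are rounded in the text, the implied values are only good to about two decimal places.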

Note: At first blush, Domain Authority's improvements over the competition are, frankly, underwhelming. The truth is that we could quite easily increase the correlation further, but doing so would risk over-fitting and compromising a secondary goal of Domain Authority...

Handling link manipulation

Historically, Domain Authority has focused on only one single feature: maximizing the predictive capacity of the metric. All we wanted were the highest correlations. However, Domain Authority has become, for better or worse, synonymous with "domain value" in many sectors, such as among link buyers and domainers. Subsequently, as bizarre as it may sound, Domain Authority has itself been targeted for spam in order to bolster the score and sell at a higher price. While these crude link manipulation techniques didn't work so well in Google, they were sufficient to increase Domain Authority. We decided to rein that in.

Data sets

The first thing we did was compile a series of data sets that corresponded with industries we wished to impact, knowing that Domain Authority was regularly manipulated in these circles.

Random domains

Moz customers

Blog comment spam

Low-quality auction domains

Mid-quality auction domains

High-quality auction domains

Known link sellers

Known link buyers

Domainer network

Link network

While it would be my preference to release all the data sets, I've chosen not to in order to not "out" any website in particular. Instead, I opted to provide these data sets to a number of search engine marketers for validation. The only data set not offered for outside validation was Moz customers, for obvious reasons.

Methodology

For each of the above data sets, I collected both the old and new Domain Authority scores. This was all conducted on February 28th in order to have parity across tests. I then calculated the relative difference between the old DA and new DA within each group. Finally, I compared the various data set results against one another to confirm that the model addresses the various methods of inflating Domain Authority.
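As a rough sketch of that calculation, assume the relative difference is taken between the group's average old score and its average new score. The text does not say whether it was computed this way or per domain and then averaged, so treat that choice, the function names, and the sample scores in the usage example as hypothetical.

```python
from statistics import mean


def relative_change(old, new):
    """Relative difference between an old and a new score (negative means a drop)."""
    return (new - old) / old


def group_change(pairs):
    """Relative change between a group's average old DA and average new DA.

    `pairs` is a list of (old_da, new_da) tuples, one per domain.
    """
    old_avg = mean(old for old, _ in pairs)
    new_avg = mean(new for _, new in pairs)
    return relative_change(old_avg, new_avg)


if __name__ == "__main__":
    # Made-up scores purely for illustration; not data from the study.
    groups = {
        "hypothetical group A": [(40, 38), (20, 18)],
        "hypothetical group B": [(50, 25), (30, 15)],
    }
    for name, pairs in groups.items():
        print(f"{name}: {group_change(pairs):+.1%}")
```

Averaging first keeps a handful of very low-scoring domains from dominating the result, which is one reason to prefer it over averaging per-domain percentages.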

Results

In the above graph, blue represents the Old Average Domain Authority for that data set and orange represents the New Average Domain Authority for that same data set. One immediately noticeable feature is that every category drops. Even random domains drop. This is a re-centering of the Domain Authority score and should cause no alarm to webmasters. There is, on average, a 6% reduction in Domain Authority for randomly selected domains from the web. Thus, if your Domain Authority drops a few points, you are well within the range of normal. Now, let's look at the various data sets individually.

Random domains: -6.1%

Using the same methodology for finding random domains that we use for collecting comparative link statistics, I selected 1,000 domains and determined that there is, on average, a 6.1% drop in Domain Authority. It's important that webmasters recognize this, as the shift is likely to affect most sites and is nothing to worry about.

Moz customers: -7.4%

Of immediate interest to Moz is how our own customers perform in relation to the random set of domains. On average, the Domain Authority of Moz customers dropped by 7.4%. This is very close to the random set of domains and indicates that most Moz customers are likely not using techniques to manipulate DA to any large degree.

Link buyers: -15.9%

Surprisingly, link buyers lost only 15.9% of their Domain Authority. In retrospect, this seems reasonable. First, we looked specifically at link buyers from blog networks, which aren't as spammy as many other techniques. Second, most of the sites paying for links are also optimizing their site's content, which means the sites do rank, sometimes quite well, in Google. Because Domain Authority trains against actual rankings, it's reasonable to expect that the link buyers data set would not be impacted as heavily as other techniques because the neural network learns that some link buying patterns actually work.

Comment spammers: -34%

Here's where the fun starts. The neural network behind Domain Authority was able to drop comment spammers' average DA by 34%. I was particularly pleased with this one because of all the types of link manipulation addressed by Domain Authority, comment spam is, in my honest opinion, no better than vandalism. Hopefully this will have a positive impact on decreasing comment spam — every little bit counts.

Link sellers: -56%

I was actually quite surprised, at first, that link sellers on average dropped 56% in Domain Authority. I knew that link sellers often participated in link schemes (normally interlinking their own blog networks to build up DA) so that they can charge higher prices. However, it didn't occur to me that link sellers would be easier to pick out because they explicitly do not optimize their own sites beyond links. Subsequently, link sellers tend to have inflated, bogus link profiles and flimsy content, which means they tend to not rank in Google. If they don't rank, then the neural network behind Domain Authority is likely to pick up on the trend. It will be interesting to see how the market responds to such a dramatic change in Domain Authority.

High-quality auction domains: -61%

One of the features that I'm most proud of in regards to Domain Authority is that it effectively addressed link manipulation in order of our intuition regarding quality. I created three different data sets out of one larger data set (auction domains), where I used certain qualifiers like price, TLD, and archive.org status to label each domain as high-quality, mid-quality, and low-quality. In theory, if the neural network does its job correctly, we should see the high-quality domains impacted the least and the low-quality domains impacted the most. This is the exact pattern which was rendered by the new model. High-quality auction domains dropped an average of 61% in Domain Authority. That seems really high for "high-quality" auction domains, but even a cursory glance at the backlink profiles of domains that are up for sale in the $10K+ range shows clear link manipulation. The domainer industry, especially the domainer-for-SEO industry, is rife with spam.

Link network: -79%

There is one network on the web that troubles me more than any other. I won't name it, but it's particularly pernicious because the sites in this network all link to the top 1,000,000 sites on the web. If your site is in the top 1,000,000 on the web, you'll likely see hundreds of root linking domains from this network no matter which link index you look at (Moz, Majestic, or Ahrefs). You can imagine my delight to see that it drops roughly 79% in Domain Authority, and rightfully so, as the vast majority of these sites have been banned by Google.

Mid-quality auction domains: -95%

Continuing with the pattern regarding the quality of auction domains, you can see that "mid-quality" auction domains dropped nearly 95% in Domain Authority. This is huge. Bear in mind that these drastic drops are not combined with losses in correlation with SERPs; rather, the neural network is learning to distinguish between backlink profiles far more effectively, separating the wheat from the chaff.

Domainer networks: -97%

If you spend any time looking at dropped domains, you have probably come upon a domainer network where there are a series of sites enumerated and all linking to one another. For example, the first site might be sbt001.com, then sbt002.com, and so on and so forth for thousands of domains. While it's obvious for humans to look at this and see a pattern, Domain Authority needed to learn that these techniques do not correlate with rankings. The new Domain Authority does just that, dropping the domainer networks we analyzed on average by 97%.
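The enumerated naming pattern that a person spots at a glance can be approximated with a small heuristic. To be clear, this is not how Domain Authority works (the text says the model learns which techniques fail to correlate with rankings). It is only a sketch of the surface pattern, and the regular expression, function name, and `min_size` threshold are assumptions.

```python
import re
from collections import defaultdict

# Letters (or hyphens), then a run of digits, then the rest of the domain.
ENUMERATED = re.compile(r"^([a-z-]+)(\d+)\.([a-z.]+)$")


def enumerated_families(domains, min_size=3):
    """Group domains such as sbt001.com, sbt002.com by shared prefix and suffix.

    Returns {(prefix, suffix): sorted list of numbers} for every family with
    at least `min_size` distinct numbers.
    """
    families = defaultdict(set)
    for domain in domains:
        match = ENUMERATED.match(domain.lower())
        if match:
            prefix, number, suffix = match.groups()
            families[(prefix, suffix)].add(int(number))
    return {
        key: sorted(numbers)
        for key, numbers in families.items()
        if len(numbers) >= min_size
    }


if __name__ == "__main__":
    sample = ["sbt001.com", "sbt002.com", "sbt003.com", "example.com", "shop24.com"]
    print(enumerated_families(sample))
```

A name-based check like this would miss networks that use unrelated names, which is why a model trained on link features and rankings is the more robust approach.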

Low-quality auction domains: -98%

Finally, the worst offenders — low-quality auction domains — dropped 98% on average. Domain Authority just can't be fooled in the way it has in the past. You have to acquire good links in the right proportions (in accordance with a natural model and sites that already rank) if you wish to have a strong Domain Authority score.
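The reported figures can be collected in one place to check the claim that auction domains were affected in order of quality. The percentages below are the ones stated in this article (several of them given as "roughly" or "nearly"); the function names are hypothetical, and subtracting the random-domain baseline is just one assumed way to separate re-centering from the penalty on manipulation.

```python
# Average drop in Domain Authority per data set, in percent, as reported above.
REPORTED_DROPS = {
    "Random domains": 6.1,
    "Moz customers": 7.4,
    "Link buyers": 15.9,
    "Comment spammers": 34.0,
    "Link sellers": 56.0,
    "High-quality auction domains": 61.0,
    "Link network": 79.0,
    "Mid-quality auction domains": 95.0,
    "Domainer networks": 97.0,
    "Low-quality auction domains": 98.0,
}


def auction_tiers_ordered(drops):
    """True if high-quality auction domains drop least and low-quality most."""
    tiers = ["High-quality", "Mid-quality", "Low-quality"]
    values = [drops[f"{tier} auction domains"] for tier in tiers]
    return all(a < b for a, b in zip(values, values[1:]))


def excess_over_baseline(drops, baseline="Random domains"):
    """Percentage points each data set dropped beyond the random-domain baseline."""
    return {
        name: round(value - drops[baseline], 1)
        for name, value in drops.items()
        if name != baseline
    }


if __name__ == "__main__":
    print("Auction tiers in expected order:", auction_tiers_ordered(REPORTED_DROPS))
    for name, extra in excess_over_baseline(REPORTED_DROPS).items():
        print(f"{name}: {extra} points beyond baseline")
```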

What does this mean?

For most webmasters, this means very little. Your Domain Authority might drop a little bit, but so will your competitors'. For search engine optimizers, especially consultants and agencies, it means quite a bit. The inventories of known link sellers will probably diminish dramatically overnight. High DA links will become far more rare. The same is true of those trying to construct private blog networks (PBNs). Of course, Domain Authority doesn't cause rankings so it won't impact your current rank, but it should give consultants and agencies a much smarter metric for assessing quality.

What are the best use cases for DA?

Compare changes in your Domain Authority with your competitors. If you drop significantly more, or increase significantly more, it could indicate that there are important differences in your link profile.

Compare changes in your Domain Authority over time. The new Domain Authority will update historically as well, so you can track your DA. If your DA is decreasing over time, especially relative to your competitors, you probably need to get started on outreach.

Assess link quality when looking to acquire dropped or auction domains. Those looking to acquire dropped or auction domains now have a much more powerful tool in their hands for assessing quality. Of course, DA should not be the primary metric for assessing the quality of a link or a domain, but it certainly should be in every webmaster's toolkit.
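The first two use cases come down to comparing your own relative change with that of your competitors. Here is a minimal sketch, assuming you have old and new scores for your site and a handful of competitors; the function names, the use of the median, and the 10-point `tolerance` are illustrative assumptions rather than Moz guidance.

```python
from statistics import median


def relative_change(old, new):
    """Relative difference between an old and a new score (negative means a drop)."""
    return (new - old) / old


def compare_with_competitors(own, competitors, tolerance=0.10):
    """Compare your DA change with the median change among competitors.

    `own` is an (old_da, new_da) tuple; `competitors` maps a name to the same.
    `tolerance` is the gap in relative change treated as noteworthy.
    """
    own_change = relative_change(*own)
    peer_change = median(
        relative_change(old, new) for old, new in competitors.values()
    )
    gap = own_change - peer_change
    if gap < -tolerance:
        verdict = "dropped more than peers"
    elif gap > tolerance:
        verdict = "gained more than peers"
    else:
        verdict = "in line with peers"
    return own_change, peer_change, verdict


if __name__ == "__main__":
    # Made-up scores for illustration only.
    competitors = {"competitor-a": (50, 47), "competitor-b": (30, 28), "competitor-c": (45, 43)}
    print(compare_with_competitors((40, 30), competitors))
```

A verdict of "dropped more than peers" is only a prompt to look at the link profile; as noted above, DA should not be the primary metric for judging a link or a domain.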

What should we expect going forward?

We aren't going to rest. An important philosophical shift has taken place at Moz with regards to Domain Authority. In the past, we believed it was best to keep Domain Authority static, rarely updating the model, in order to give users an apples-to-apples comparison. Over time, though, this meant that Domain Authority would become less relevant. Given the rapidity with which Google updates its results and algorithms, the new Domain Authority will be far more agile as we give it new features, retrain it more frequently, and respond to algorithmic changes from Google. We hope you like it.


A Comprehensive Analysis of the New Domain Authority

Posted by rjonesx.

Moz's Domain Authority is requested over 1,000,000,000 times per year, it's referenced millions of times on the web, and it has become a veritable household name among search engine optimizers for a variety of use cases, from determining the success of a link building campaign to qualifying domains for purchase. With the launch of Moz's entirely new, improved, and much larger link index, we recognized the opportunity to revisit Domain Authority with the same rigor as we did keyword volume years ago (which ushered in the era of clickstream-modeled keyword data).

What follows is a rigorous treatment of the new Domain Authority metric. What I will not do in this piece is rehash the debate over whether Domain Authority matters or what its proper use cases are. I have and will address those at length in a later post. Rather, I intend to spend the following paragraphs addressing the new Domain Authority metric from multiple directions.

Correlations between DA and SERP rankings

The most important component of Domain Authority is how well it correlates with search results. But first, let's get the correlation-versus-causation objection out of the way: Domain Authority does not cause search rankings. It is not a ranking factor. Domain Authority predicts the likelihood that one domain will outrank another. That being said, its usefulness as a metric is tied in large part to this value. The stronger the correlation, the more valuable Domain Authority is for predicting rankings.

Methodology

Determining the "correlation" between a metric and SERP rankings has been accomplished in many different ways over the years. Should we compare against the "true first page," top 10, top 20, top 50 or top 100? How many SERPs do we need to collect in order for our results to be statistically significant? It's important that I outline the methodology for reproducibility and for any comments or concerns on the techniques used. For the purposes of this study, I chose to use the "true first page." This means that the SERPs were collected using only the keyword with no additional parameters. I chose to use this particular data set for a number of reasons:

The true first page is what most users experience, thus the predictive power of Domain Authority will be focused on what users see.

By not using any special parameters, we're likely to get Google's typical results.

By not extending beyond the true first page, we're likely to avoid manually penalized sites (which can impact the correlations with links.)

We did NOT use the same training set or training set size as we did for this correlation study. That is to say, we trained on the top 10 but are reporting correlations on the true first page. This prevents us from the potential of having a result overly biased towards our model.

I randomly selected 16,000 keywords from the United States keyword corpus for Keyword Explorer. I then collected the true first page for all of these keywords (completely different from those used in the training set.) I extracted the URLs but I also chose to remove duplicate domains (ie: if the same domain occurred, one after another.) For a length of time, Google used to cluster domains together in the SERPs under certain circumstances. It was easy to spot these clusters, as the second and later listings were indented. No such indentations are present any longer, but we can't be certain that Google never groups domains. If they do group domains, it would throw off the correlation because it's the grouping and not the traditional link-based algorithm doing the work. I collected the Domain Authority (Moz), Citation Flow and Trust Flow (Majestic), and Domain Rank (Ahrefs) for each domain and calculated the mean Spearman correlation coefficient for each SERP. I then averaged the coefficients for each metric.

Outcome

Moz's new Domain Authority has the strongest correlations with SERPs of the competing strength-of-domain link-based metrics in the industry. The sign (-/+) has been inverted in the graph for readability, although the actual coefficients are negative (and should be).

Moz's Domain Authority scored a ~.12, or roughly 6% stronger than the next best competitor (Domain Rank by Ahrefs.) Domain Authority performed 35% better than CitationFlow and 18% better than TrustFlow. This isn't surprising, in that Domain Authority is trained to predict rankings while our competitor's strength-of-domain metrics are not. It shouldn't be taken as a negative that our competitors strength-of-domain metrics don't correlate as strongly as Moz's Domain Authority — rather, it's simply exemplary of the intrinsic differences between the metrics. That being said, if you want a metric that best predicts rankings at the domain level, Domain Authority is that metric.

Note: At first blush, Domain Authority's improvements over the competition are, frankly, underwhelming. The truth is that we could quite easily increase the correlation further, but doing so would risk over-fitting and compromising a secondary goal of Domain Authority...

Handling link manipulation

Historically, Domain Authority has focused on only one single feature: maximizing the predictive capacity of the metric. All we wanted were the highest correlations. However, Domain Authority has become, for better or worse, synonymous with "domain value" in many sectors, such as among link buyers and domainers. Subsequently, as bizarre as it may sound, Domain Authority has itself been targeted for spam in order to bolster the score and sell at a higher price. While these crude link manipulation techniques didn't work so well in Google, they were sufficient to increase Domain Authority. We decided to rein that in.

Data sets

The first thing we did was compile a series off data sets that corresponded with industries we wished to impact, knowing that Domain Authority was regularly manipulated in these circles.

Random domains

Moz customers

Blog comment spam

Low-quality auction domains

Mid-quality auction domains

High-quality auction domains

Known link sellers

Known link buyers

Domainer network

Link network

While it would be my preference to release all the data sets, I've chosen not to in order to not "out" any website in particular. Instead, I opted to provide these data sets to a number of search engine marketers for validation. The only data set not offered for outside validation was Moz customers, for obvious reasons.

Methodology

For each of the above data sets, I collected both the old and new Domain Authority scores. This was conducted all on February 28th in order to have parity for all tests. I then calculated the relative difference between the old DA and new DA within each group. Finally, I compared the various data set results against one another to confirm that the model addresses the various methods of inflating Domain Authority.

Results

In the above graph, blue represents the Old Average Domain Authority for that data set and orange represents the New Average Domain Authority for that same data set. One immediately noticeable feature is that every category drops. Even random domains drops. This is a re-centering of the Domain Authority score and should cause no alarm to webmasters. There is, on average, a 6% reduction in Domain Authority for randomly selected domains from the web. Thus, if your Domain Authority drops a few points, you are well within the range of normal. Now, let's look at the various data sets individually.

Random domains: -6.1%

Using the same methodology of finding random domains which we use for collecting comparative link statistics, I selected 1,000 domains, we were able to determine that there is, on average, a 6.1% drop in Domain Authority. It's important that webmasters recognize this, as the shift is likely to affect most sites and is nothing to worry about.

Moz customers: -7.4%

Of immediate interest to Moz is how our own customers perform in relation to the random set of domains. On average, the Domain Authority of Moz customers lowered by 7.4%. This is very close to the random set of URLs and indicates that most Moz customers are likely not using techniques to manipulate DA to any large degree.

Link buyers: -15.9%

Surprisingly, link buyers only lost 15.9% of their Domain Authority. In retrospect, this seems reasonable. First, we looked specifically at link buyers from blog networks, which aren't as spammy as many other techniques. Second, most of the sites paying for links are also optimizing their site's content, which means the sites do rank, sometimes quite well, in Google. Because Domain Authority trains against actual rankings, it's reasonable to expect that the link buyers data set would not be impacted as highly as other techniques because the neural network learns that some link buying patterns actually work.

Comment spammers: -34%

Here's where the fun starts. The neural network behind Domain Authority was able to drop comment spammers' average DA by 34%. I was particularly pleased with this one because of all the types of link manipulation addressed by Domain Authority, comment spam is, in my honest opinion, no better than vandalism. Hopefully this will have a positive impact on decreasing comment spam — every little bit counts.

Link sellers: -56%

I was actually quite surprised, at first, that link sellers on average dropped 56% in Domain Authority. I knew that link sellers often participated in link schemes (normally interlinking their own blog networks to build up DA) so that they can charge higher prices. However, it didn't occur to me that link sellers would be easier to pick out because they explicitly do not optimize their own sites beyond links. Subsequently, link sellers tend to have inflated, bogus link profiles and flimsy content, which means they tend to not rank in Google. If they don't rank, then the neural network behind Domain Authority is likely to pick up on the trend. It will be interesting to see how the market responds to such a dramatic change in Domain Authority.

High-quality auction domains: -61%

One of the features that I'm most proud of in regards to Domain Authority is that it effectively addressed link manipulation in order of our intuition regarding quality. I created three different data sets out of one larger data set (auction domains), where I used certain qualifiers like price, TLD, and archive.org status to label each domain as high-quality, mid-quality, and low-quality. In theory, if the neural network does its job correctly, we should see the high-quality domains impacted the least and the low-quality domains impacted the most. This is the exact pattern which was rendered by the new model. High-quality auction domains dropped an average of 61% in Domain Authority. That seems really high for "high-quality" auction domains, but even a cursory glance at the backlink profiles of domains that are up for sale in the $10K+ range shows clear link manipulation. The domainer industry, especially the domainer-for-SEO industry, is rife with spam.

Link network: -79%

There is one network on the web that troubles me more than any other. I won't name it, but it's particularly pernicious because the sites in this network all link to the top 1,000,000 sites on the web. If your site is in the top 1,000,000 on the web, you'll likely see hundreds of root linking domains from this network no matter which link index you look at (Moz, Majestic, or Ahrefs). You can imagine my delight to see that it drops roughly 79% in Domain Authority, and rightfully so, as the vast majority of these sites have been banned by Google.

Mid-quality auction domains: -95%

Continuing with the pattern regarding the quality of auction domains, you can see that "mid-quality" auction domains dropped nearly 95% in Domain Authority. This is huge. Bear in mind that these drastic drops are not combined with losses in correlation with SERPs; rather, the neural network is learning to distinguish between backlink profiles far more effectively, separating the wheat from the chaff.

Domainer networks: -97%

If you spend any time looking at dropped domains, you have probably come upon a domainer network where there are a series of sites enumerated and all linking to one another. For example, the first site might be sbt001.com, then sbt002.com, and so on and so forth for thousands of domains. While it's obvious for humans to look at this and see a pattern, Domain Authority needed to learn that these techniques do not correlate with rankings. The new Domain Authority does just that, dropping the domainer networks we analyzed on average by 97%.

Low-quality auction domains: -98%

Finally, the worst offenders — low-quality auction domains — dropped 98% on average. Domain Authority just can't be fooled in the way it has in the past. You have to acquire good links in the right proportions (in accordance with a natural model and sites that already rank) if you wish to have a strong Domain Authority score.

What does this mean?

For most webmasters, this means very little. Your Domain Authority might drop a little bit, but so will your competitors'. For search engine optimizers, especially consultants and agencies, it means quite a bit. The inventories of known link sellers will probably diminish dramatically overnight. High DA links will become far more rare. The same is true of those trying to construct private blog networks (PBNs). Of course, Domain Authority doesn't cause rankings so it won't impact your current rank, but it should give consultants and agencies a much smarter metric for assessing quality.

What are the best use cases for DA?

Compare changes in your Domain Authority with your competitors'. If you drop significantly more, or increase significantly more, it could indicate that there are important differences in your link profile.

Compare changes in your Domain Authority over time. The new Domain Authority will update historically as well, so you can track your DA. If your DA is decreasing over time, especially relative to your competitors, you probably need to get started on outreach.

Assess link quality when looking to acquire dropped or auction domains. Those looking to acquire dropped or auction domains now have a much more powerful tool in their hands for assessing quality. Of course, DA should not be the primary metric for assessing the quality of a link or a domain, but it certainly should be in every webmaster's toolkit.

What should we expect going forward?

We aren't going to rest. An important philosophical shift has taken place at Moz with regards to Domain Authority. In the past, we believed it was best to keep Domain Authority static, rarely updating the model, in order to give users an apples-to-apples comparison. Over time, though, this meant that Domain Authority would become less relevant. Given the rapidity with which Google updates its results and algorithms, the new Domain Authority will be far more agile as we give it new features, retrain it more frequently, and respond to algorithmic changes from Google. We hope you like it.

Be sure to join us on Thursday, March 14th at 10am PT at our upcoming webinar discussing strategies and use cases for the new Domain Authority.


A Comprehensive Analysis of the New Domain Authority

Posted by rjonesx.

Moz's Domain Authority is requested over 1,000,000,000 times per year, it's referenced millions of times on the web, and it has become a veritable household name among search engine optimizers for a variety of use cases, from determining the success of a link building campaign to qualifying domains for purchase. With the launch of Moz's entirely new, improved, and much larger link index, we recognized the opportunity to revisit Domain Authority with the same rigor as we did keyword volume years ago (which ushered in the era of clickstream-modeled keyword data).

What follows is a rigorous treatment of the new Domain Authority metric. What I will not do in this piece is rehash the debate over whether Domain Authority matters or what its proper use cases are. I have addressed those before and will address them at length in a later post. Rather, I intend to spend the following paragraphs addressing the new Domain Authority metric from multiple directions.

Correlations between DA and SERP rankings

The most important component of Domain Authority is how well it correlates with search results. But first, let's get the correlation-versus-causation objection out of the way: Domain Authority does not cause search rankings. It is not a ranking factor. Domain Authority predicts the likelihood that one domain will outrank another. That being said, its usefulness as a metric is tied in large part to this value. The stronger the correlation, the more valuable Domain Authority is for predicting rankings.

Methodology

Determining the "correlation" between a metric and SERP rankings has been accomplished in many different ways over the years. Should we compare against the "true first page," top 10, top 20, top 50 or top 100? How many SERPs do we need to collect in order for our results to be statistically significant? It's important that I outline the methodology for reproducibility and for any comments or concerns on the techniques used. For the purposes of this study, I chose to use the "true first page." This means that the SERPs were collected using only the keyword with no additional parameters. I chose to use this particular data set for a number of reasons:

The true first page is what most users experience, thus the predictive power of Domain Authority will be focused on what users see.

By not using any special parameters, we're likely to get Google's typical results.

By not extending beyond the true first page, we're likely to avoid manually penalized sites (which can impact the correlations with links).

We did NOT train on the same data set, or the same size of data set, that we used for this correlation study. That is to say, we trained on the top 10 but are reporting correlations on the true first page. This reduces the risk of a result that is overly biased towards our model.

I randomly selected 16,000 keywords from the United States keyword corpus for Keyword Explorer. I then collected the true first page for all of these keywords (completely different from those used in the training set). I extracted the URLs, but I also chose to remove duplicate domains (i.e., cases where the same domain occurred in consecutive results). For a length of time, Google used to cluster domains together in the SERPs under certain circumstances. It was easy to spot these clusters, as the second and later listings were indented. No such indentations are present any longer, but we can't be certain that Google never groups domains. If they do group domains, it would throw off the correlation because it's the grouping and not the traditional link-based algorithm doing the work. I collected the Domain Authority (Moz), Citation Flow and Trust Flow (Majestic), and Domain Rank (Ahrefs) for each domain and calculated the Spearman correlation coefficient between ranking position and each metric for each SERP. I then averaged the coefficients for each metric across all SERPs.
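To make the averaging step concrete, here is a minimal sketch in Python of a per-SERP Spearman correlation followed by a mean across SERPs. It is not the code used for the study: the function names, the handling of ties (average ranks), the decision to skip SERPs where every score is identical, and the sample numbers are all assumptions made for illustration.

```python
from statistics import mean


def average_ranks(values):
    """Rank values 1..n in ascending order; tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; returns None when either input has no variance."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return None
    return cov / (var_x * var_y) ** 0.5


def spearman(positions, scores):
    """Spearman correlation is the Pearson correlation of the ranks."""
    return pearson(average_ranks(positions), average_ranks(scores))


def mean_serp_correlation(serps):
    """Average the per-SERP coefficients (assumption: undefined SERPs are skipped)."""
    coefficients = []
    for serp in serps:
        positions = [position for position, _ in serp]
        scores = [score for _, score in serp]
        rho = spearman(positions, scores)
        if rho is not None:
            coefficients.append(rho)
    return mean(coefficients)


# Illustrative numbers only: each SERP is a list of (position, metric score).
sample_serps = [
    [(1, 90), (2, 80), (3, 70)],  # score falls as position worsens
    [(1, 50), (2, 70), (3, 60)],  # weaker, mixed ordering
]
print(mean_serp_correlation(sample_serps))
```

A metric that predicts rankings well produces negative coefficients here, because higher scores should pair with smaller position numbers.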

Outcome

Moz's new Domain Authority has the strongest correlations with SERPs of the competing strength-of-domain link-based metrics in the industry. The sign (-/+) has been inverted in the graph for readability, although the actual coefficients are negative (and should be).

Moz's Domain Authority scored a ~.12, or roughly 6% stronger than the next best competitor (Domain Rank by Ahrefs). Domain Authority performed 35% better than CitationFlow and 18% better than TrustFlow. This isn't surprising, in that Domain Authority is trained to predict rankings while our competitors' strength-of-domain metrics are not. It shouldn't be taken as a negative that our competitors' strength-of-domain metrics don't correlate as strongly as Moz's Domain Authority; rather, it's simply exemplary of the intrinsic differences between the metrics. That being said, if you want a metric that best predicts rankings at the domain level, Domain Authority is that metric.

Note: At first blush, Domain Authority's improvements over the competition are, frankly, underwhelming. The truth is that we could quite easily increase the correlation further, but doing so would risk over-fitting and compromising a secondary goal of Domain Authority...

Handling link manipulation

Historically, Domain Authority has focused on a single goal: maximizing the predictive capacity of the metric. All we wanted were the highest correlations. However, Domain Authority has become, for better or worse, synonymous with "domain value" in many sectors, such as among link buyers and domainers. Consequently, as bizarre as it may sound, Domain Authority has itself been targeted for spam in order to bolster the score and sell at a higher price. While these crude link manipulation techniques didn't work so well in Google, they were sufficient to increase Domain Authority. We decided to rein that in.

Data sets

The first thing we did was compile a series of data sets that corresponded with industries we wished to impact, knowing that Domain Authority was regularly manipulated in these circles.

Random domains

Moz customers

Blog comment spam

Low-quality auction domains

Mid-quality auction domains

High-quality auction domains

Known link sellers

Known link buyers

Domainer network

Link network

While it would be my preference to release all the data sets, I've chosen not to in order to not "out" any website in particular. Instead, I opted to provide these data sets to a number of search engine marketers for validation. The only data set not offered for outside validation was Moz customers, for obvious reasons.

Methodology

For each of the above data sets, I collected both the old and new Domain Authority scores. This was all conducted on February 28th in order to have parity for all tests. I then calculated the relative difference between the old DA and new DA within each group. Finally, I compared the various data set results against one another to confirm that the model addresses the various methods of inflating Domain Authority.
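As a rough illustration of the relative-difference step, the sketch below compares the average old and new scores within a group. The post doesn't say whether the figure is the change in group averages or the average of per-domain changes, so the former is an assumption here; the function name, group names, and scores are made up for the example.

```python
from statistics import mean


def relative_change(old_scores, new_scores):
    """Relative change in the group's average score (assumed definition)."""
    old_avg = mean(old_scores)
    new_avg = mean(new_scores)
    return (new_avg - old_avg) / old_avg


# Hypothetical groups: name -> (old DA scores, new DA scores).
groups = {
    "hypothetical_group_a": ([40, 50, 60], [38, 47, 56]),
    "hypothetical_group_b": ([30, 50], [6, 10]),
}

for name, (old_scores, new_scores) in groups.items():
    print(f"{name}: {relative_change(old_scores, new_scores):+.1%}")
```

Comparing the per-group results side by side is what shows whether heavily manipulated groups fall further than a random baseline.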

Results

In the above graph, blue represents the Old Average Domain Authority for that data set and orange represents the New Average Domain Authority for that same data set. One immediately noticeable feature is that every category drops. Even random domains drop. This is a re-centering of the Domain Authority score and should cause no alarm to webmasters. There is, on average, a 6% reduction in Domain Authority for randomly selected domains from the web. Thus, if your Domain Authority drops a few points, you are well within the range of normal. Now, let's look at the various data sets individually.

Random domains: -6.1%

Using the same methodology for finding random domains that we use for collecting comparative link statistics, I selected 1,000 domains and determined that there is, on average, a 6.1% drop in Domain Authority. It's important that webmasters recognize this, as the shift is likely to affect most sites and is nothing to worry about.

Moz customers: -7.4%

Of immediate interest to Moz is how our own customers perform in relation to the random set of domains. On average, the Domain Authority of Moz customers lowered by 7.4%. This is very close to the random set of URLs and indicates that most Moz customers are likely not using techniques to manipulate DA to any large degree.

Link buyers: -15.9%

Surprisingly, link buyers only lost 15.9% of their Domain Authority. In retrospect, this seems reasonable. First, we looked specifically at link buyers from blog networks, which aren't as spammy as many other techniques. Second, most of the sites paying for links are also optimizing their site's content, which means the sites do rank, sometimes quite well, in Google. Because Domain Authority trains against actual rankings, it's reasonable to expect that the link buyers data set would not be impacted as heavily as other data sets, because the neural network learns that some link buying patterns actually work.

Comment spammers: -34%

Here's where the fun starts. The neural network behind Domain Authority was able to drop comment spammers' average DA by 34%. I was particularly pleased with this one because of all the types of link manipulation addressed by Domain Authority, comment spam is, in my honest opinion, no better than vandalism. Hopefully this will have a positive impact on decreasing comment spam — every little bit counts.

Link sellers: -56%

I was actually quite surprised, at first, that link sellers on average dropped 56% in Domain Authority. I knew that link sellers often participated in link schemes (normally interlinking their own blog networks to build up DA) so that they can charge higher prices. However, it didn't occur to me that link sellers would be easier to pick out because they explicitly do not optimize their own sites beyond links. Consequently, link sellers tend to have inflated, bogus link profiles and flimsy content, which means they tend to not rank in Google. If they don't rank, then the neural network behind Domain Authority is likely to pick up on the trend. It will be interesting to see how the market responds to such a dramatic change in Domain Authority.

High-quality auction domains: -61%