#consumer privacy


Text

Tech monopolists use their market power to invade your privacy

On SEPTEMBER 24th, I'll be speaking IN PERSON at the BOSTON PUBLIC LIBRARY!

It's easy to greet the FTC's new report on social media privacy, which concludes that tech giants have terrible privacy practices, with a resounding "duh," but that would be a grave mistake.

Much to the disappointment of autocrats and would-be autocrats, administrative agencies like the FTC can't just make rules up. In order to enact policies, regulators have to do their homework: for example, they can do "market studies," which go beyond anything you'd get out of an MBA or Master of Public Policy program, thanks to the agency's legal authority to force companies to reveal their confidential business information.

Market studies are fabulous in their own right. The UK Competition and Markets Authority has a fantastic research group called the Digital Markets Unit that has published some of the most fascinating deep dives into how parts of the tech industry actually function, 400+ page bangers that pierce the Shield of Boringness that tech firms use to hide their operations. I recommend their ad-tech study:

https://www.gov.uk/cma-cases/online-platforms-and-digital-advertising-market-study

In and of themselves, good market studies are powerful things. They expose workings. They inform debate. When they're undertaken by wealthy, powerful countries, they provide enforcement roadmaps for smaller, poorer nations who are being tormented in the same way, by the same companies, that the regulator studied.

But market studies are really just curtain-raisers. After a regulator establishes the facts about a market, they can intervene. They can propose new regulations, and they can impose "conduct remedies" (punishments that restrict corporate behavior) on companies that are cheating.

Now, the stolen, corrupt, illegitimate, extremist, bullshit Supreme Court just made regulation a lot harder. In a case called Loper Bright, SCOTUS killed the longstanding principle of "Chevron deference," which basically meant that when an agency said it had built a factual case to support a regulation, courts should assume they're not lying:

https://jacobin.com/2024/07/scotus-decisions-chevron-immunity-loper

The death of Chevron Deference means that many important regulations – past, present and future – are going to get dragged in front of a judge, most likely one of those Texas MAGA mouth-breathers in the Fifth Circuit, to be neutered or killed. But even so, regulators still have options – they can still impose conduct remedies, which are unaffected by the sabotage of Chevron Deference.

Pre-Loper, post-Loper, and today, the careful, thorough investigation of the facts of how markets operate is the prelude to doing things about how those markets operate. Facts matter. They matter even if there's a change in government, because once the facts are in the public domain, other governments can use them as the basis for action.

Which is why, when the FTC uses its powers to compel disclosures from the largest tech companies in the world, and then assesses those disclosures and concludes that these companies engage in "vast surveillance," in ways that the users don't realize and that these companies "fail to adequately protect users," that matters.

What's more, the Commission concludes that "data abuses can fuel market dominance, and market dominance can, in turn, further enable data abuses and practices that harm consumers." In other words: tech monopolists spy on us in order to achieve and maintain their monopolies, and then they spy on us some more, and that hurts us.

So if you're wondering what kind of action this report is teeing up, I think we can safely say that the FTC believes that there's evidence that the unregulated, rampant practices of the commercial surveillance industry are illegal. First, because commercial surveillance harms us as "consumers." "Consumer welfare" is the one rubric for enforcement that the right-wing economists who hijacked antitrust law in the Reagan era left intact, and here we have the Commission giving us evidence that surveillance hurts us, and that it comes about as a result of monopoly, and that the more companies spy, the stronger their monopolies become.

But the Commission also tees up another kind of enforcement: Section 5, the long (long!) neglected power of the agency to punish companies for "unfair and deceptive methods of competition," a very broad power indeed:

https://pluralistic.net/2023/01/10/the-courage-to-govern/#whos-in-charge

In the study, the Commission shows – pretty convincingly! – that the commercial surveillance sector routinely tricks people who have no idea how their data is being used. Most people don't understand, for example, that the platforms use all kinds of inducements to get web publishers to embed tracking pixels, fonts, analytics beacons, etc. that send user-data back to the Big Tech databases, where it's merged with data from your direct interactions with the company. Likewise, most people don't understand the shadowy data-broker industry, which sells Big Tech gigantic amounts of data harvested by your credit card company, by Bluetooth and wifi monitoring devices on streets and in stores, and by your car. Data-brokers buy this data from anyone who claims to have it, including people who are probably lying, like Nissan, which claims that it has records of the smells inside drivers' cars, as well as those drivers' sex-lives:

https://nypost.com/2023/09/06/nissan-kia-collect-data-about-drivers-sexual-activity/

Or Cox Communications, which claims that it is secretly recording and transcribing the conversations we have in range of the mics on our speakers, phones, and other IoT devices:

https://www.404media.co/heres-the-pitch-deck-for-active-listening-ad-targeting/

(If there's a kernel of truth to Cox's bullshit, my guess is that they've convinced some of the sleazier "smart TV" companies to secretly turn on their mics, then inflated this into a marketdroid's wet-dream of "we have logged every word uttered by Americans and can use it to target ads.")

Notwithstanding the rampant fraud inside the data brokerage industry, there's no question that some of the data they offer for sale is real, that it's intimate and sensitive, and that the people it's harvested from never consented to its collection. How do you opt out of public facial recognition cameras? "Just don't have a face" isn't a realistic opt-out policy.

And if the public is being deceived about the collection of this data, they're even more in the dark about the way it's used – merged with on-platform usage data and data from apps and the web, then analyzed for the purposes of drawing "inferences" about you and your traits.

What's more, the companies have chaotic, bullshit internal processes for handling your data, which also rise to the level of "deceptive and unfair" conduct. For example, if you send these companies a deletion request for your data, they'll tell you they deleted the data, but actually, they keep it, after "de-identifying" it.

De-identification is a highly theoretical way of sanitizing data by removing the "personal identifiers" from it. In practice, most de-identified data can be quickly re-identified, and nearly all de-identified data can eventually be re-identified:

https://pluralistic.net/2024/03/08/the-fire-of-orodruin/#are-we-the-baddies

Breaches, re-identification, and weaponization are extraordinarily hard to prevent. In general, we should operate on the assumption that any data that's collected will probably leak, and any data that's retained will almost certainly leak someday. To have even a hope of preventing this, companies have to treat data with enormous care, maintaining detailed logs and conducting regular audits. But the Commission found that the biggest tech companies are extraordinarily sloppy, to the point where "they often could not even identify all the data points they collected or all of the third parties they shared that data with."

This has serious implications for consumer privacy, obviously, but there's also a big national security dimension. Given the recent panic at the prospect that the Chinese government is using TikTok to spy on Americans, it's pretty amazing that American commercial surveillance has escaped serious Congressional scrutiny.

After all, it would be a simple matter to use the tech platforms' targeting systems to identify and push ads (including ads linking to malicious sites) to Congressional staffers ("under-40s with Political Science college degrees within one mile of Congress") or, say, NORAD personnel ("Air Force enlistees within one mile of Cheyenne Mountain").

Those targeting parameters should be enough to worry Congress, but there's a whole universe of potential characteristics that can be selected, hence the Commission's conclusion that "profound threats to users can occur when targeting occurs based on sensitive categories."

The FTC's findings about the dangers of all this data are timely, given the current wrangle over another antitrust case. In August, a federal court found that Google is a monopolist in search, and that the company used its data lakes to secure and maintain its monopoly.

This kicked off widespread demands for the court to order Google to share its data with competitors in order to erase that competitive advantage. Holy moly is this a bad idea – as the FTC study shows, the data that Google stole from us all is incredibly toxic. Arguing that we can fix the Google problem by sharing that data far and wide is like proposing that we can "solve" the fact that only some countries have nuclear warheads by "democratizing" access to planet-busting bombs:

https://pluralistic.net/2024/08/07/revealed-preferences/#extinguish-v-improve

To address the competitive advantage Google achieved by engaging in the reckless, harmful conduct detailed in this FTC report, we should delete all that data. Sure, that may seem inconceivable, but come on, surely the right amount of toxic, nonconsensually harvested data on the public that should be retained by corporations is zero:

https://pluralistic.net/2024/09/19/just-stop-putting-that-up-your-ass/#harm-reduction

Some people argue that we don't need to share out the data that Google never should have been allowed to collect – it's enough to share out the "inferences" that Google drew from that data, and from other data its other tentacles (YouTube, Android, etc) shoved into its gaping maw, as well as the oceans of data-broker slurry it stirred into the mix.

But as the report finds, the most unethical, least consensual data was "personal information that these systems infer, that was purchased from third parties, or that was derived from users’ and non-users’ activities off of the platform." We gotta delete that, too. Especially that.

A major focus of the report is the way that the platforms handled children's data. Platforms have special obligations when it comes to kids' data, because while Congress has failed to act on consumer privacy, they did bestir themselves to enact a children's privacy law. In 1998, Congress passed the Children's Online Privacy Protection Act (COPPA), which took effect in 2000 and puts strict limits on the collection, retention and processing of data on kids under 13.

Now, there are two ways to think about COPPA. One view is, "if you're not certain that everyone in your data-set is over 13, you shouldn't be collecting or processing their data at all." Another is, "In order to ensure that everyone whose data you're collecting and processing is over 13, you should collect a gigantic amount of data on all of them, including the under-13s, in order to be sure that you're not collecting under-13s' data." That second approach would be ironically self-defeating, obviously, though it's one that's gaining traction around the world and in state legislatures, as "age verification" laws find legislative support.

The platforms, meanwhile, found a third, even stupider approach: rather than collecting nothing because they can't verify ages, or collecting everything to verify ages, they collect everything, but make you click a box that says, "I'm over 13":

https://pluralistic.net/2023/04/09/how-to-make-a-child-safe-tiktok/

It will not surprise you to learn that many children under 13 have figured out that they can click the "I'm over 13" box and go on their merry way. It won't surprise you, but apparently, it will surprise the hell out of the platforms, who claimed that they had zero underage users on the basis that everyone has to click the "I'm over 13" box to get an account on the service.

By failing to pass comprehensive privacy legislation for 36 years (and counting), Congress delegated privacy protection to self-regulation by the companies themselves. They've been marking their own homework, and now, thanks to the FTC's power to compel disclosures, we can say for certain that the platforms cheat.

No surprise that the FTC's top recommendation is for Congress to pass a new privacy law. But they've got other, eminently sensible recommendations, like requiring the companies to do a better job of protecting their users' data: collect less, store less, delete it after use, stop combining data from their various lines of business, and stop sharing data with third parties.

Remember, the FTC has broad powers to order "conduct remedies" like this, and these are largely unaffected by the Supreme Court's "Chevron deference" decision in Loper Bright.

The FTC says that privacy policies should be "clear, simple, and easily understood," and says that ad-targeting should be severely restricted. They want clearer consent for data inferences (including AI), and they want companies to monitor their own processes with regular, stringent audits.

They also have recommendations for competition regulators – remember, the Biden administration has a "whole of government" antitrust approach that asks every agency to use its power to break up corporate concentration:

https://www.eff.org/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

They say that competition enforcers should factor in the privacy implications of proposed mergers, and think about how promoting privacy could also promote competition (in other words, if Google's stolen data helped it secure a monopoly, then making them delete that data will weaken their market power).

I understand the reflex to greet a report like this with cheap cynicism, but that's a mistake. There's a difference between "everybody knows" that tech is screwing us on privacy, and "a federal agency has concluded" that this is true. These market studies make a difference – if you doubt it, consider for a moment that Cigna is suing the FTC for releasing a landmark market study showing how its Express Scripts division has used its monopoly power to jack up the price of prescription drugs:

https://www.fiercehealthcare.com/payers/express-scripts-files-suit-against-ftc-demands-retraction-report-pbm-industry

Big business is shit-scared of this kind of research by federal agencies – if they think this threatens their power, why shouldn't we take them at their word?

This report is a milestone, and – as with the UK Competition and Markets Authority reports – it's a banger. Even after Loper Bright, this report can form the factual foundation for muscular conduct remedies that will limit what the largest tech companies can do.

But without privacy law, the data brokerages that feed the tech giants will be largely unaffected. True, the Consumer Financial Protection Bureau is doing some good work at the margins here:

https://pluralistic.net/2023/08/16/the-second-best-time-is-now/#the-point-of-a-system-is-what-it-does

But we need to do more than curb the worst excesses of the largest data-brokers. We need to kill this sector, and to do that, Congress has to act:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

The paperback edition of The Lost Cause, my nationally bestselling, hopeful solarpunk novel is out this month!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/09/20/water-also-wet/#marking-their-own-homework

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#coppa#privacy first#ftc#section 5 of the ftc act#privacy#consumer privacy#big tech#antitrust#monopolies#data brokers#radium suppositories#commercial surveillance#surveillance#google#a look behind the screens

231 notes


Text

#Greenwashing#CCPA#Consumer privacy#Sustainability#ESG compliance#Environmental claims#Regulatory crackdown#Green marketing#Corporate responsibility#Truth in advertising

1 note


Text

The Evolution of Digital Advertising: Key Trends to Watch in 2025

In 2025, the digital advertising landscape will be defined by AI, interactive content, voice search, the metaverse, and sustainability. Marketers who embrace these technologies and adapt to evolving consumer expectations and privacy norms will drive growth and engagement. Innovation and customer focus will keep brands relevant.

#Digital Advertising 2025#AI and Marketing#Interactive Content Strategies#Voice Search Optimization#The Metaverse in Marketing#Sustainable Marketing Practices#Consumer Privacy#Marketing Innovation#Brand Relevance

0 notes

Text

FCC Revocation of Consent? What You Need to Know for 2025 – #TCPAWORLD

The Federal Communications Commission (FCC) has set new guidelines that may affect how businesses engage with consumers, especially regarding revoking consent under the Telephone Consumer Protection Act (TCPA). As we approach April 2025, the impacts of these updates could be significant for those involved in telemarketing, customer outreach, and businesses reliant on consumer contact. But what exactly does "FCC Revocation of Consent" mean, and how will it shape communication standards?

In my latest video, "FCC REVOCATION Of Consent?//April 2025/#TCPAWORLD", I discuss the essential aspects of this regulation and how it might influence your business approach, especially in the final expense and telesales industry. The changes aim to balance consumer privacy with effective business communication, but the nuances can be challenging to navigate.

Why This Matters for Telemarketers and Telesales Professionals

If your business involves senior life insurance or other consumer outreach, understanding and complying with these regulations is critical. Non-compliance can lead to hefty fines, legal battles, and damage to your brand reputation. Our website, Final Expense Telesales Pro, provides resources to help you stay informed and compliant with the evolving FCC guidelines.

Key Takeaways

TCPA Updates: The changes in TCPA compliance could redefine acceptable practices in obtaining and revoking consumer consent.

Protecting Your Business: It's essential to adjust your outreach strategies in line with these changes.

Understanding Revocation of Consent: Knowing how and when a consumer can revoke their consent for communication will be crucial for future interactions.

Don’t forget to watch the video for a deeper dive into the FCC's updates on consent revocation and how to stay ahead in #TCPAWORLD!

#FCC Updates#TCPA Compliance#Revocation Of Consent#Telemarketing Rules#Consumer Protection#Final Expense Insurance#Senior Life Insurance#Telesales Pro#Business Compliance#Marketing Trends 2025#Consumer Privacy#Legal Updates#Telemarketing#telemarketing

0 notes

Text

And they ask me // Is it going good in the garden? // I say I'm lost but I beg no pardon

#sleep token#sleep token fanart#vessel#sleep token vessel#vessel sleep token#caramel#even in arcadia#man. fuck man. this song#i started this before the song was out bc the single's art is beautiful#and i wanted to draw vessel with the morningstar#but i had to take a day to myself before finishing this#caramel is such a gorgeous and heartbreaking song#when people demanded heavy music i bet they didn't mean it like this#listening to it feels like vessel just flipped open his diary and sung a few pages from it#i get vessel#i feel deeply for him#we live in a world where privacy is nonexistent#where kindness is less and less expected from strangers#where people harass and threaten others in online spaces#where the only goal is to consume more and more and more until there's nothing left#as someone who's gonna probably lose her job in a few years to ai and greed#i too know how does it feel to hate the thing you love doing the most (art)#but still love it the same#anyways sorry for all the rambling :::')

1K notes


Text

🗣️ This is for all new internet connected cars

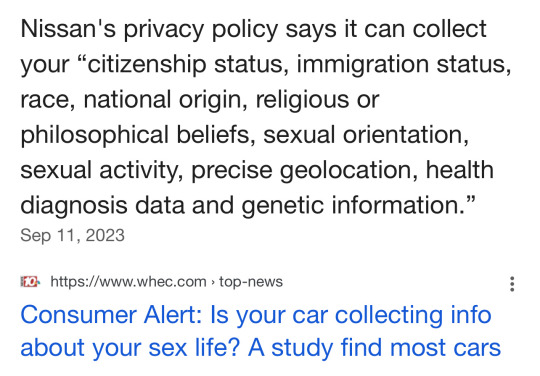

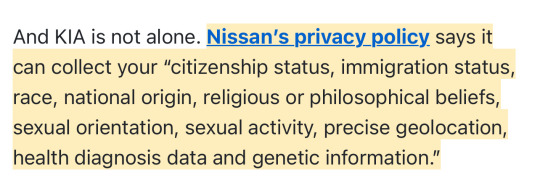

A new study has found that your car likely knows more about you than your mom. That is disconcerting, but what’s even more so is what is being done with your information. It’s all about the Benjamins. Our private information is being collected and sold.

The Mozilla Foundation, a non-profit that studies internet and privacy issues, studied 25 car manufacturers. And it found every manufacturer sold in America poses a greater risk to your privacy than any device, app or social media platform.

Our cars are rolling computers, many of which are connected to the internet collecting information about how you drive and where. New cars also have microphones and sensors that give you safety features like automatic braking and drowsy driver detection. Those systems are also providing information. Got GPS or satellite radio? Then your car likely knows your habits, musical and political preferences.

Did you download your car’s app which gives you access to even more features? Well that also gives your car access to your phone and all the information on it.

The study found that of the 25 car brands, 84% say they sell your personal data.

And what they collect is astounding.

One example the study cites is KIA’s privacy policy. It indicates the company collects information about your sexual activity. I initially didn’t believe it until I pulled KIA’s privacy policy and read it. And it’s right there in black and white. It says it collects information about your “ethnicity, religious, philosophical beliefs, sexual orientation, sex life, or political opinions.”

And it says it can keep your info for “as long as is necessary for the legitimate business purpose set out in this privacy notice.”

Translation: KIA can keep your information as long as they want to. And more than half of the manufacturers (56%) say they will share your information with law enforcement if asked.


#politics#data mining#smart cars#spyware#privacy rights#surveillance state#new cars#big brother#nissan#kia#connected cars#consumer alert#panopticon

9K notes


Text

I think it is very cool how tech companies, schools, employers, and universities make it actively difficult to distance yourself from Google, Microsoft, and Apple.

Yes most Linux distros are very stable, way more secure, privacy friendly, and way more customizable. But every institution is built to make technological independence as difficult as possible.

Yelling on the internet that everyone should switch to Linux and FOSS really ignores how much of the technological world is designed to not let that happen.

#yes switch to linux if you can#Data privacy and security needs to be addressed on a much larger legal scale#you cant consume your way out of this my friends#opensuse#linux#open source#data privacy

727 notes


Text

Cutting Out the Middleman

I suspect that some readers might interpret this cartoon as a call for Luddism, but mostly it’s a reflection of thoughts I’ve been having lately about out-of-control data harvesting and a growing surveillance state. I find myself daydreaming about how one might go about circumventing the layer of invasive, extractive technology that comes between us and so many mundane tasks these days.

To support this work and receive my weekly newsletter with background on each cartoon, please consider joining the Sorensen Subscription Service! Also on Patreon.

128 notes


Text

“Privacy, intimacy, anonymity and the right to secrets are all to be left outside the premises of the Society of Consumers. […] Since we are all commodities, we are obliged to create demand for ourselves. Membership of the confessional society is invitingly open to all, but there is a heavy penalty attached to staying outside. […] The updated version of Descartes’s Cogito is ‘I am seen, therefore I am’ – and that the more people who see me, the more I am…”

— Zygmunt Bauman, Moral Blindness: The Loss of Sensitivity in Liquid Modernity (via exhaled-spirals)

39 notes


Text

Trump is all-in on AI sandboxes. Do they work? - POLITICO

#donald trump#trump administration#federal government#ai#artificial intelligence#ai regulation#regulatorycompliance#government policy#policy#data privacy#data protection#consumer protection#republicans#gop#civil rights#social justice#us politics

17 notes


Text

Against transparency

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me at NEW ZEALAND'S UNITY BOOKS in AUCKLAND on May 2, and in WELLINGTON on May 3. More tour dates (Pittsburgh, PDX, London, Manchester) here.

Walk down any street in California for more than a couple minutes and you will come upon a sign warning you that a product or just an area "contains chemicals known to the state of California to cause cancer."

These warnings are posted to comply with Prop 65, a 1986 law that requires firms to notify you if they're exposing you to cancer risk. The hope was that a legal requirement to warn people about potential carcinogens would lead to a reduction in the use of carcinogens in commonly used products. But the joke's on us: since nearly everything has chemicals that trigger Prop 65 warnings, the warnings become a kind of background hiss. I've lived in California five times now, and I've never once seen a shred of evidence that a Prop 65 warning deters anyone from buying, consuming, using, or approaching anything. I mean, Disneyland is plastered in these warnings.

The idea behind Prop 65 was to "inform consumers" so they could "vote with their wallets." But "is this carcinogenic?" isn't a simple question. Many chemicals are carcinogenic if they come into contact with bare skin, or mucous membranes, but not if they are – for example – underfoot, in contact with the soles of your shoes. Other chemicals are dangerous when they're fresh and offgassing, but become safe once all the volatiles and aromatics have boiled off of them.

Prop 65 is often presented as a story of overregulation, but I think it's a matter of underregulation. Rather than simply telling you that there's a potential carcinogen nearby and leaving you to figure out whether you've exceeded your risk threshold, a useful regulatory framework would require firms to use their products in ways that minimize cancer risk. For example, if a product ships with a chemical that is potentially carcinogenic for a couple weeks after it is manufactured, then the law could require the manufacturer to air out the product for 14 days before shipping it to the wholesaler.

"Caveat emptor" has its place – say, at a yard-sale, or when buying lemonade from a kid raising money for a school trip – but routine shopping shouldn't be a life-or-death matter that you can only survive if you are willing and able to review extensive, peer-reviewed, paywalled toxicology literature. When a product poses a serious threat to our health, it should either be prohibited, or have its use proscribed, so that a reasonable, prudent person doing normal things doesn't have to worry that they've missed a potentially lethal gotcha.

In other words, transparency is nice, but it's not enough.

Think of the "privacy policies" you're asked to click through a thousand times a day. No one reads these. No one has ever read these. For the first six months that Twitter was in business, its privacy policy was full of mentions of Flickr, because that's where they ganked the policy from, and they missed a bunch of search/replace operations. That's funny – but far funnier is that no one at Twitter read the privacy policy, because if they had, they would have noticed this.

You know what would be better than a privacy policy? A privacy law. The last time Congress passed a consumer privacy law was in 1988, when they banned video store clerks from disclosing which VHS cassettes you took home. The fact is that virtually any privacy violation, no matter how ghastly or harmful to you, is legal, provided that you are "notified" through a privacy policy.

Which is why privacy policies are actually privacy invasion policies. No one reads these things because we all know we disagree with every word in them, including "and" and "the." They all boil down to, "By being stupid enough to use this service, you agree that I'm allowed to come to your house, punch your grandmother, wear your underwear, make long distance calls, and eat all the food in your fridge."

And like Prop 65 warnings, these privacy policies are everywhere, and – like Prop 65 warnings – they have proven useless. Companies don't craft better privacy policies because so long as everyone has a terrible bullshit privacy policy, there's no reason to.

My blog, pluralistic.net, has two privacy policies. One sits across the top of every page:

Privacy policy: we don't collect or retain any data at all ever period.

The other one appears in the sidebar:

By reading this website, you agree, on behalf of your employer, to release me from all obligations and waivers arising from any and all NON-NEGOTIATED agreements, licenses, terms-of-service, shrinkwrap, clickwrap, browsewrap, confidentiality, non-disclosure, non-compete and acceptable use policies ("BOGUS AGREEMENTS") that I have entered into with your employer, its partners, licensors, agents and assigns, in perpetuity, without prejudice to my ongoing rights and privileges. You further represent that you have the authority to release me from any BOGUS AGREEMENTS on behalf of your employer.

The second one is a joke, obviously (it sits above a sidebar element that proclaims "Optimized for Netscape Navigator."). But what's most funny is that when I used to run it at the bottom of all my emails, I totally freaked out a bunch of reps from Big Tech companies on a standards committee that was trying to standardize abusive, controlling browser technology and cram it down two billion people's throats. These guys kvetched endlessly that it was unfair for me to simply declare that they'd agreed that they would do a bunch of stuff for me on behalf of their bosses.

My first response was, of course, "Lighten up, Francis." But the more I thought about it, the more I realized that these guys actually believed that showering someone in endless volleys of fine print actually created legal contracts and consent, and that I might someday sue their employers because I had cleverly released myself from their BOGUS AGREEMENTS.

Of course, that would be very stupid. I can't just wave a piece of paper in your face, shout "YOU AGREED" and steal your bike. But substitute "private data" for "bike" and that's exactly the system we have with privacy policies. Rather than providing notice of odious and unconscionable behavior and hoping that "market forces" sort it out, we should just update privacy law so that doing certain things with your private data is illegal, without your ongoing, continuous, revocable consent.

Obviously, this would come as a severe shock to the tech economy, which is totally structured around commercial surveillance. But the fact that an extremely harmful practice is also extremely widespread is not a reason to keep on doing it – it's a reason to stop. There was a time when we let companies sell radium suppositories, and then, one day, we just banned companies from telling you to put nuclear waste up your asshole:

https://pluralistic.net/2024/09/19/just-stop-putting-that-up-your-ass/#harm-reduction

We didn't fall back on the "freedom to contract" or "bodily autonomy." Sure, what you do with your body is your own business, but that doesn't imply that quacks should have free rein to trick you into using their murderous products.

And just as there are legitimate, therapeutic uses of radioisotopes (I'm having a PET scan on Monday!), there are legitimate reasons to share your private data. We don't need to resort to outright bans – we can just regulate things. For example, in 2022 Stanford Law's Mark Lemley proposed an absolutely ingenious answer to abusive Terms of Service:

https://pluralistic.net/2022/08/10/be-reasonable/#i-would-prefer-not-to

Lemley proposes constructing a set of "default rules" for routine agreements, made up of the "explicit and implicit" rules of contracts, including common law, the Uniform Commercial Code, and the Restatement of Contracts. Any time you're presented with a license agreement, you can turn it down in favor of the "default rules" that everyone knows and understands. Anyone who accepts a EULA instead must truly be consenting to a special set of rules. If you want your EULA to get chosen over the default rules, you need to make it short, clear and reasonable.

If we're gonna replace "caveat emptor" with rules that let you go about your business without reading 10,000,000 words of bullshit legalese every time you leave your house (or pick up your phone), we need smart policymakers to create those rules.

Since 2010, America has had an agency that was charged with creating and policing those rules, so you could do normal stuff without worrying that you were accidentally signing your life away. That agency is called the Consumer Financial Protection Bureau, and though it did good work for its first decade of existence, it wasn't until the Biden era, when Rohit Chopra took over the agency, that it came into its own.

Under Chopra, the CFPB became a powerhouse, going after one scam after another, racking up a series of impressive wins:

https://pluralistic.net/2024/06/10/getting-things-done/#deliverism

The CFPB didn't just react, either. They staffed up with smart technologists and created innovative, smart, effective initiatives to keep you from getting ripped off:

https://pluralistic.net/2024/11/01/bankshot/#personal-financial-data-rights

Under Chopra, the CFPB was in the news all the time, as they scored victory after victory. These days, the CFPB is in the news again, but for much uglier reasons. For billionaire scammers like Elon Musk, CFPB is the most hated of all the federal agencies. Musk's Doge has been trying to "delete the CFPB" since they arrived on the scene, but their hatred has made them so frenzied that they keep screwing up and losing in court. They just lost again:

https://prospect.org/justice/2025-04-18-federal-judge-halts-cfpb-purge-again/

Trumpland is full of the people on the other side of those EULAs, the people who think that if they can trick you out of your money, "that makes me smart":

https://pluralistic.net/2024/12/04/its-not-a-lie/#its-a-premature-truth

If Musk can trick you into buying a Tesla after lying about full self driving, that doesn't make him a scammer, "that makes him smart." If Trump can stiff his contractors, that doesn't make him a crook, "that makes him smart."

It's not a coincidence that these guys went after the CFPB. It's no mystery why they've gone after every watchdog that keeps you from getting scammed, poisoned or maimed, from the FDA to the EPA to the NLRB. They are the kind of people who say, "So long as it was in the fine print, and so long as I could foist that fine print on you, that's a fair deal." For them, caveat emptor is a Latin phrase that means, "Surprise, you're dead."

It's bad enough when companies do this to us, be they Big Tech, health insurers or airlines. But when the government takes these grifters' side over yours – when grifters take over the government – hold onto your wallets:

https://www.citationneeded.news/trump-crypto-empire/

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/04/19/gotcha/#known-to-the-state-of-california-to-cause-cancer

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#prop 65#cfpb#consumer finance protection bureau#privacy#fine print#eulas#reasonable agreement#adhesion contracts#mark lemley

223 notes

Text

Finally sat down and listened to the new song. Silly words are here if you'd like to read (and more in the tags because yeah, and this has been edited since I posted, so apologies if it looks different from someone else's reblog), but no pressure. If you scroll on by, I hope you have a good day today.

I'm... surprised I'm happy? After hearing it. I think that's how I'm feeling, at least. It's not a giddiness or me jumping around in joy, but there's a kind of, um. Candlelight flicker among the darkness that was surrounding me as I was thinking about it? Like a light came on once I finally looked and checked it out for myself.

I'm unsure how to word it properly and I don't really know how to without going on a long-winded ramble, but I had some thoughts before I heard the song based on what people were saying, the anxieties I saw swirling about, thoughts of guilt and anger amongst the pointing fingers and the reality of what a sharp rise to fame can do to, well, anyone. I saw so many different opinions and I'm not here to dismiss any of them, or say one is wrong. Music is wonderful and powerful, loving and harsh, not just in its creation but in the context we give it. I don't want to dismiss anyone's thoughts here.

I just want to say that it's a song I'm glad exists. I'm glad he trusts people to hear it. That despite its content, or context - I don't want to comb through why it exists, or say I'm glad any negative things happened so the pain can produce more because that is the furthest thing from the truth for me.

Caramel is sweet as a concoction in its nature. You can put things in it, like salt, to change the taste and make it more complex. And it can burn, scorch, and stick - it can make a right mess of a kitchen, of someone's clothes, hands. It's temperamental and not set in stone, at least not while you're stirring it in the pot. But it's not always a mess.

If you're patient with it and respect the process - and, in this case, that respect is to him and the others as performers and as people, as well as yourselves as fans of the music and individuals with flaws, personalities, all that makes people who they are - it might turn out okay, in the end.

I could be off with this, of course. I'm just glad the song exists.

Caramel takes time. And it's okay to have it salty, bitter, or sweet. There's times for all flavors and even if it isn't your preference for whatever reason, it's good to accept the choice and respect it. Respect the making of it and the one who spent hours of time and patience on it.

I'll end here to save my brain from spinning in circles. If you read any of this, thank you so much. I hope it made sense. Please take care of yourselves, everyone.

#Satari rambles#Sleep Token#Sleep Token Spoilers#I'd rather not tag it just because I know everyone has already said something on the song itself#But I know some might be waiting so I'd rather not spoil anyone#If I'm missing a tag please let me know#At the end of the day#This is still the same person who held out a “You are so loved” sign back to the crowd when it was given to him#Who wrote “I've been waiting to share this with you” on Spotify when Emergence dropped.#Who smiles with his bandmates and makes puzzles for fans to put together#Who made III hand-signs with us when III was out and seemed surprised or moved by the support to the point of tears#Guilt and strife and hardships are all-consuming emotions and can weight heavy on you for a number of reasons#But it's not forever and the downsides of fame and infamy aren't forever too#I can't imagine what it's like to have be thrust to the top of the world like he and the band was#And to have everything but the music be the highlight sometimes#As someone who probably will be anonymous or under a penname if I ever publish anything#It's scary#Privacy is precious as much as it is important#Hope it's okay to say that I hope it was cathartic for him and for how he may or may not feel as someone who has skyrocketed to fame#I'm just a dork on the internet who likes his music and is still terrified of speaking on here#I hope all of this was okay to share and makes sense#Take care of yourselves everyone

31 notes

Text

Expect clip posting to slow down due to irl nonsense.

Also from the 11th to the 18th I won’t have any computer access and very little internet access but I’ll schedule a couple clips beforehand for that week 🫡

#idk how often the posts will be. maybe 3-4 a week#12 hr workday + no real privacy in my room#means I could only edit late at night#or on the weekend#and it feels like such a waste of my tiny bit of free time#to be sitting at my desk pretending to do something as I wait for my mom to gtfo of my room#I think all the typing makes her suspicious idk man#it made what should have taken 45 mins take up to 2 hrs sometimes#so I will be attempting to do all my editing on friday/saturday and queue the posts#what I’ve been doing is scheduling 2-4 days of posts at a time#but like I said. doing it during the weekday is extremely time consuming due to being watched :p#on the weekends she’s less nosy and I can just wait for her to be asleep lol#if tumblr didn’t have an audio upload limit then I could just go all out for like 3 hrs and have a big queue lined up#it will actually take me less time to edit on a friday/saturday just cause I won’t be interrupted at all at nignt. lol.#tldr: I have very little free time and am interrupted constantly during the week#it will be easier for me to edit late at night on the weekend#and schedule the posts throughout the week#at the cost of no more daily posts (blame tumblr audio limit)#non voice post

46 notes

Text

Automakers are collecting driving data from customers and quietly providing it to insurance companies, and the practice has resulted in some unsuspecting drivers seeing their premiums increased or their coverage terminated, a new report reveals.

The New York Times reported this week that car manufacturers like General Motors and Ford are tracking drivers’ behavior through internet-connected vehicles, and sharing it with data brokers such as LexisNexis and Verisk, which create “consumer disclosure reports” on individuals that insurance companies can access.

The consumer reports do not show where a driver has traveled, but they do provide information on length of trips and driving behavior, such as “hard braking,” “hard accelerating” and speeding. Insurance companies can use those reports to assess the risk of a current or potential customer, and adjust rates or refuse coverage based on the findings.

The Times highlighted the case of Kenn Dahl, the driver of a leased Chevrolet Bolt, who learned that his and his wife's driving habits were being tracked when an insurance agent told him in 2022 that his LexisNexis report was a factor behind his insurance premium jumping 21%.

“It felt like a betrayal,” Dahl told the newspaper. “They’re taking information that I didn’t realize was going to be shared and screwing with our insurance.”

(continue reading)

#politics#lexisnexis#data mining#smart cars#car insurance#privacy rights#kenn dahl#spyware#capitalism#privacy#consumer disclosure reports

120 notes

Text

I'm being fr when I say we need to cull the Ethel Cain fanbase. and significantly so

#so fucking angry and sad to hear about the hacking and blatant disrespect of hayden and her privacy#some of u full on do not deserve to engage with her/ any of her art#and im not saying that in a parasocial pedestal way. im saying that as in “a you dont know how to treat ppl or meaningfully consume art” way

9 notes

Text

Big Fryer is Watching

This cartoon refers to a study by a British consumer rights group called Which? (that's their name) that examined unnecessary data harvesting by "smart" devices, including air fryers. Certain brands wanted permission to record audio on users' phones and track precise location, and one brand connected its app to trackers from Facebook and TikTok. None of this digital access is actually necessary for the fryer to function.

Receive my weekly newsletter and keep this work sustainable by joining the Sorensen Subscription Service! Also on Patreon.

42 notes
