#data annotation

Explore tagged Tumblr posts

Text

The Rise of Data Annotation Services in Machine Learning Projects

4 notes

Text

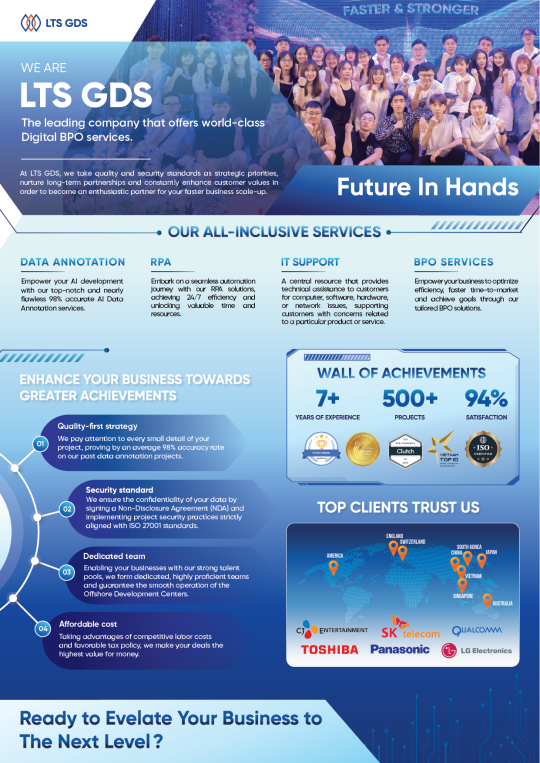

Our IT Services

With more than 7 years of experience in the data annotation industry, LTS Global Digital Services has received major domestic awards and earned the trust of significant customers in the US, Germany, Korea, and Japan. Having completed hundreds of projects in fields such as Automobile, Retail, Manufacturing, Construction, and Sports, our company delivers projects with confidence and ensures accuracy of up to 99.9%. This has also been confirmed by 97% of customers using the service.

If you are looking for an outsourcing company that meets the above criteria, contact LTS Global Digital Services for advice and a trial!

2 notes

Text

Peroptyx Assessment Correct Answers

R E V E A L E D ! ! !

Get the complete set of answers here

UPDATE: The file now has 210 correct answers.

NO TIME TO DO THE ASSESSMENT? I offer my service to do the exam for you. Just email me at [email protected]

1 note

Text

Now Hiring!!! This Ireland-based tech company is hiring international workers for the position of Map Evaluator. Fully work-from-home, part-time or full-time. No experience necessary. No phone/online interview. Click or copy the link to apply.

https://bit.ly/3IzrLeD

3 notes

Text

Hiiii digital friends! Does anyone know of any websites or businesses that are similar to data annotation ? Or any wfh jobs that are actually onboarding people!!! Any info would be so appreciated thank you lovies !! 😚🫶🏽

#bexie yaps#work from home#wfh#wfh culture#wfh vibes#work from anywhere#data annotation#online work#wfhlife#money#make money online#financialfreedom#finance#financialindependence

0 notes

Text

Polygon Annotation: Your Essential Guide to Pixel-Perfect AI Training

Explore the transformative role of polygon annotation in AI training. This guide reveals how pixel-perfect data labeling drives accuracy in industries like autonomous vehicles, GIS mapping, agriculture, and more.

Dive into challenges, tools, and best practices for high-quality data annotation. Learn how precision labeling fuels robust AI model performance and shapes the future of machine learning success.

1 note

Text

Why Leading AI Startups are Outsourcing Data Annotation in 2025

Outsourcing data annotation has become an obvious choice for AI startups: it lets their teams focus on the core areas of the business while still getting precise, high-performing AI models. Here are detailed insights into why AI startups outsource data annotation.

#data annotation#data annotation service#ai data labeling services#image annotation services#video annotation services#audio annotation services#image labeling services#data annotation solution#data annotation outsourcing

1 note

Text

What is Eye Annotation and Why is it Important?

Eye annotation is a crucial process in computer vision and machine learning that involves the precise labeling of eye movements and gaze patterns in visual data. This technique is essential for training algorithms to understand human attention and behaviour, enabling advancements in applications such as augmented reality, user experience research, and accessibility technologies. By utilising eye annotation, businesses can enhance their products' usability and effectiveness, ultimately leading to improved customer satisfaction and engagement. Visit us: https://www.qualitasglobal.com/vertical/healthcare-and-healthtech/

0 notes

Text

Macgence offers high-quality Data Annotation Services in Bangalore, empowering AI and machine learning models with precisely labeled datasets. Our expert team ensures accurate annotations across images, videos, audio, and text using techniques like bounding boxes, semantic segmentation, and named entity recognition. As Bangalore emerges as a tech innovation hub, we support startups and enterprises in sectors like autonomous vehicles, healthcare, e-commerce, and fintech. With scalable workflows, strict quality checks, and data security compliance, Macgence helps you accelerate AI training and improve model accuracy. Choose us for reliable, domain-specific annotations tailored to your project needs in Bangalore’s dynamic tech ecosystem.

0 notes

Text

Understanding the Role of Data Annotation in Machine Learning

AI models are only as good as the data they’re trained on. This blog explains how data annotation transforms raw data into actionable intelligence—making it the backbone of successful machine learning.

What Is Data Annotation in Machine Learning?

The blog starts with a clear definition of data annotation and its significance. Labeling data accurately helps machine learning models understand and process input, whether it’s images, text, or audio.

What Is the Current State of Data Annotation?

The field is evolving fast, with growing demand for high-quality, industry-specific datasets. Businesses now realize annotated data is not a luxury—it's a necessity for building intelligent systems.

Key Challenges and How to Resolve Them

The blog outlines common roadblocks and practical solutions:

Quality Control: Ensure annotation consistency with automated validation tools

Scalability: Use a hybrid of human and machine annotation

Domain Expertise: Leverage skilled annotators with industry knowledge
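One common way to put a number on annotation consistency is an inter-annotator agreement score. The sketch below computes Cohen's kappa for two annotators who labeled the same items. The blog does not name a specific metric, so treat the choice of kappa and the function name `cohen_kappa` as illustrative assumptions.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items.

    1.0 means perfect agreement; 0.0 means agreement no better than chance.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")

    n = len(labels_a)
    # Fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, from each annotator's label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "cat", "dog", "cat"]
print(cohen_kappa(annotator_1, annotator_2))
```

A low score on a sample batch is a signal to revisit the labeling guidelines before scaling up the work.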

Emerging Trends Reshaping the Industry

Stay ahead with insights into:

Real-time and multi-modal annotation

Industry-specific and collaborative platforms

AR/VR data labeling

Ethical AI practices and bias mitigation

Stricter data security standards

The Human Element

Despite automation, human annotators remain vital for accuracy, context, and ethical oversight.

This blog is a must-read for ML practitioners and data scientists. It highlights why quality annotation is foundational to AI success and how organizations can scale it responsibly and efficiently.

Read More: https://www.damcogroup.com/blogs/role-of-data-annotation-in-machine-learning

0 notes

Text

AI Might Be Smart — But It Still Needs Human Help

And the humans behind it are getting exhausted.

Artificial intelligence is powering everything from autonomous vehicles and medical diagnostics to smart assistants and customer service bots. But beneath all that innovation is a workforce you rarely hear about—human data annotators.

These are the people labeling every photo, video frame, email, and review so AI can learn what’s what. They’re the unsung foundation of machine learning.

But there’s a problem that’s quietly eroding the quality of that foundation: annotation fatigue.

What Is Annotation Fatigue?

Annotation fatigue happens when data annotators spend long hours performing repetitive labeling tasks. Over time, it leads to mental exhaustion, reduced attention to detail, and slower work. Mistakes start to creep in.

It’s not always obvious at first, but the effects compound—damaging not just the data but the models trained on it.

Why It Matters

Poorly labeled data doesn’t just slow projects down. It impacts business outcomes in serious ways:

Machine learning models trained on inaccurate data perform poorly in real-world situations

Projects require costly rework and extended timelines

Annotators burn out, leading to higher turnover and onboarding costs

In sensitive industries like healthcare or finance, errors can have legal and ethical consequences

Whether it's mislabeling an X-ray or misunderstanding the tone of a customer complaint, the stakes are higher than most companies realize.

How to Prevent It

If your AI depends on human annotation, then preventing fatigue is essential—not optional. Here are some proven strategies:

Rotate tasks across different data types to break monotony

Encourage short breaks throughout the day to reduce cognitive strain

Implement real-time quality checks to catch errors early

Incorporate feedback loops so annotators feel valued and guided

Use assisted labeling tools and active learning to minimize unnecessary work

Support mental health with a positive work culture and wellness resources

Provide fair pay and recognition to show appreciation for critical work

Annotation fatigue isn’t solved by working harder. It’s solved by working smarter—and more humanely.
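As a rough illustration of a real-time quality check, the sketch below tracks an annotator's accuracy on gold-standard check items over a sliding window and flags when it drops. The class name `FatigueMonitor`, the window size, and the threshold are all hypothetical choices for this example, not values taken from the post.

```python
from collections import deque


class FatigueMonitor:
    """Tracks accuracy on gold-standard check items over a sliding window."""

    def __init__(self, window=20, threshold=0.9):
        # Assumed defaults; tune them to the task and the annotator pool.
        self.results = deque(maxlen=window)
        self.threshold = threshold

    def record(self, submitted_label, gold_label):
        """Store whether the latest check item was labeled correctly."""
        self.results.append(submitted_label == gold_label)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_break(self):
        """True once the window is full and accuracy is below the threshold."""
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.threshold


monitor = FatigueMonitor(window=4, threshold=0.75)
for submitted, gold in [("car", "car"), ("car", "car"), ("bus", "car"), ("bus", "car")]:
    monitor.record(submitted, gold)
print(monitor.accuracy(), monitor.needs_break())
```

A flag like this is best used to prompt a break or a task rotation rather than as a penalty.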

Final Thoughts

Annotation fatigue is one of the least talked-about issues in artificial intelligence, yet it has a massive impact on outcomes. Ignoring it doesn’t just harm annotators; it leads to costly mistakes, underperforming AI systems, and slower innovation.

Organizations that prioritize both data quality and annotator well-being are the ones that will lead in AI. Because truly intelligent systems start with sustainable, human-centered processes.

Read the full blog here: Annotation Fatigue in AI: Why Human Data Quality Declines Over Time

0 notes

Text

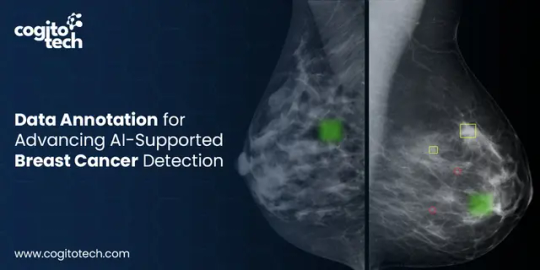

Mammogram Data Annotation for AI-Driven Breast Cancer Detection

Mammographic screenings are widely known for their accessibility, cost-efficiency, and dependable accuracy in detecting abnormalities. However, with over 100 million mammograms taken globally each year, each requiring at least two specialist reviews, the sheer volume creates significant challenges for radiologists, leading to delays in report generation, missed screenings, and an increased risk of diagnostic errors. A study by the National Cancer Institute suggests screening mammograms underdiagnose about 20% of breast cancers.

In recent years, the rapid evolution of artificial intelligence and the growing availability of digital medical data have positioned AI and machine learning as a promising solution. In some studies, these technologies have matched or even exceeded radiologists’ performance in breast cancer detection tasks. Research published in The Lancet Oncology revealed that AI-supported mammogram screening detected 20% more cancers compared to readings by radiologists alone. However, to achieve high accuracy, AI and ML models require training on large-scale, well-annotated mammography datasets.

The quality and inclusiveness of annotation directly influence model performance. Advanced annotation methods include diverse categorizations, such as lesion-specific labels, BI-RADS scores (Breast Imaging Reporting and Data System), breast density classes, and molecular subtype information. These annotated lesion datasets train the model to identify subtle imaging features that distinguish normal tissue from benign and malignant lesions, ultimately improving both sensitivity and specificity.

Breast cancer is a highly heterogeneous disease, displaying complexity at clinical, histopathological, microenvironmental, and genetic levels. Patients with different pathological and molecular subtypes show wide variations in recurrence risk, treatment response, and prognosis. This complexity must be reflected in training data if AI systems are to be clinically useful.

This write-up focuses on the importance of annotated data for building AI-powered models for lesion detection and how Cogito Tech’s Medical AI Innovation Hubs provide clinically validated, regulatory-compliant annotation solutions to accelerate AI readiness in breast cancer diagnostics. Read more: Cogito Tech mammogram data annotation for AI

0 notes

Text

A Guide to Choosing a Data Annotation Outsourcing Company

Clarify the Requirements: Before evaluating outsourcing partners, it's crucial to clearly define your data annotation requirements. Consider aspects such as the type and volume of data needing annotation, the complexity of annotations required, and any industry-specific or regulatory standards to adhere to.

Expertise and Experience: Seek out outsourcing companies with a proven track record in data annotation. Assess their expertise within your industry vertical and their experience handling similar projects. Evaluate factors such as the quality of annotations, adherence to deadlines, and client testimonials.

Data Security and Compliance: Data security is paramount when outsourcing sensitive information. Ensure that the outsourcing company has robust security measures in place to safeguard your data and comply with relevant data privacy regulations such as GDPR or HIPAA.

Scalability and Flexibility: Opt for an outsourcing partner capable of scaling with your evolving needs. Whether it's a small pilot project or a large-scale deployment, ensure the company has the resources and flexibility to meet your requirements without compromising quality or turnaround time.

Cost and Pricing Structure: While cost is important, it shouldn't be the sole determining factor. Evaluate the pricing structure of potential partners, considering factors like hourly rates, project-based pricing, or subscription models. Strike a balance between cost and quality of service.

Quality Assurance Processes: Inquire about the quality assurance processes employed by the outsourcing company to ensure the accuracy and reliability of annotated data. This may include quality checks, error detection mechanisms, and ongoing training of annotation teams.

Prototype: Consider requesting a trial run or pilot project before finalizing an agreement. This allows you to evaluate the quality of annotated data, project timelines, and the proficiency of annotators. For complex projects, negotiate a Proof of Concept (PoC) to gain a clear understanding of requirements.

For detailed information, see the full article here!

2 notes

Text

Label Everything: How Data Annotation Drives Enterprise AI Success

AI is only as smart as the data it learns from. And in enterprise environments, data annotation is the silent powerhouse that fuels innovation.

Whether you’re building models to automate operations, predict behavior, or personalize experiences, annotated data is the foundation for machine learning performance and accuracy.

What Is Data Annotation?

Data annotation is the process of labeling raw data—text, images, audio, or video—with meaningful tags. These tags help machine learning models “understand” input in the way humans do. For example:

Labeling product defects in manufacturing images

Highlighting sentiment in customer reviews

Identifying tumors in medical imaging

Categorizing support tickets for chatbot training

Without this layer of intelligence, your AI model is flying blind.

Why Is Data Annotation Critical for AI Projects?

For supervised learning models — the most common in enterprise AI — annotated data is non-negotiable. The model uses this labeled data to learn patterns, make predictions, and continuously improve.

Poorly labeled or inconsistent data leads to underperforming algorithms, inaccurate insights, and wasted investment. That’s why enterprises must prioritize quality, consistency, and contextual accuracy in annotation.

Enterprise Use Cases Fueled by Data Annotation

Data annotation enables real-world AI across industries:

Retail: Analyze customer sentiment, personalize recommendations, and optimize supply chains.

Healthcare: Improve diagnostics through annotated X-rays and pathology slides.

Manufacturing: Detect anomalies in production with computer vision models.

Finance: Flag suspicious transactions with labeled fraud data.

Transportation: Train autonomous systems to detect road signs, pedestrians, and obstacles.

These aren’t just efficiencies — they’re revenue-driving innovations made possible through precise data annotation.

Data Annotation as a Growth Enabler

When done right, data annotation offers a competitive edge:

Faster AI development cycles

Higher model accuracy and reliability

Stronger automation across departments

Personalized, predictive customer experiences

Better compliance and risk management

Whether via in-house teams or outsourcing to specialized partners, investing in annotation upfront accelerates AI success — and ultimately, business growth.

Looking Ahead: Strategic Annotation at Scale

As enterprises scale AI adoption, the demand for annotation will multiply. Leaders must now think strategically — combining automation (e.g., pre-labeling via AI) with human-in-the-loop review to balance speed, quality, and cost.

Annotation isn’t just a one-time task — it’s an ongoing process that evolves with your AI goals and your data landscape.

Final Thought:

Think of data annotation as the bridge between raw data and intelligent action. If you’re serious about AI that delivers real value, this is where your journey begins.

Explore the full article at AQE Digital

0 notes

Text

Data Annotation: The Foundation of Intelligent AI Systems

Data annotation enables AI models to interpret and learn from raw data by adding structured labels to images, text, audio, and video. A global provider delivers precise, scalable annotation services that fuel machine learning across sectors like autonomous driving, healthcare, and NLP—ensuring accuracy, performance, and ethical AI development.

0 notes

Text

Challenges in Point Cloud Annotation & Strategies for Better Accuracy

Point cloud annotation, as offered by Infosearch, is the process of labeling 3D data collected from devices such as LiDAR or depth cameras, and it is relevant to autonomous driving, robotics, and AR/VR. Annotating point clouds is difficult because of the inherent complexities of 3D data: sparsity, an unstructured format, and a vast volume of information.

Infosearch is a key provider of LiDAR annotation and point cloud annotation services for machine learning.

Below are the main challenges and the proposed techniques for increasing the accuracy of point cloud labeling.

Key Obstacles in Point Cloud Annotation

1. Sparsity and Occlusion

• Distant objects are often represented by only a few points in LiDAR data.

• Occlusion and partial visibility, such as objects behind cars or trees, are common in 3D data.

Result: It is hard to tell where one object ends and another begins.
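To make the sparsity problem concrete, the sketch below counts how many points fall inside each labeled 3D box and flags boxes that contain too few for an annotator to judge reliably. It assumes axis-aligned boxes given as (min corner, max corner) pairs, and the `min_points` cutoff is an arbitrary illustrative value. Real tools typically use oriented boxes.

```python
def points_in_box(points, box_min, box_max):
    """Count points lying inside an axis-aligned 3D box (bounds inclusive)."""
    return sum(
        all(lo <= v <= hi for v, lo, hi in zip(point, box_min, box_max))
        for point in points
    )


def flag_sparse(points, boxes, min_points=5):
    """Return names of boxes containing fewer than min_points points.

    boxes maps a name to a (box_min, box_max) pair of (x, y, z) tuples.
    """
    return [
        name
        for name, (box_min, box_max) in boxes.items()
        if points_in_box(points, box_min, box_max) < min_points
    ]


cloud = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (10.0, 10.0, 10.0)]
labeled_boxes = {
    "near_object": ((-1.0, -1.0, -1.0), (2.0, 2.0, 2.0)),
    "far_object": ((9.0, 9.0, 9.0), (11.0, 11.0, 11.0)),
}
print(flag_sparse(cloud, labeled_boxes, min_points=2))
```

Flagged boxes can be routed to a second reviewer or checked against the camera view.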

2. Unstructured Data Format

• Point clouds lack the regular grid structure of images; their points are scattered through 3D space.

• 2D annotation techniques and the intuition people have for flat images carry over poorly to spatially dispersed 3D points.

Result: Higher cognitive load for annotators.

3. Class Ambiguity

• It is difficult to distinguish similar-looking objects, such as pedestrians and aides.

• Inconsistent datasets or labeling practices across annotators can damage model performance.

Result: Class confusion and poor generalization.

4. Time-Consuming and Labor-Intensive

• Annotation tasks such as generating 3D bounding boxes or segmentation masks are laborious, especially when scenes are complex.

Result: High labor costs and slow iteration.

5. Multi-Sensor Alignment Errors

• LiDAR, radar, and RGB camera data are often misaligned, which leads to wrong annotations.

Result: Perception models are trained on flawed annotations.
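One simple way to spot misalignment is to project the LiDAR points of an object into the camera image and check how many land inside its 2D box. The sketch below uses a basic pinhole model and assumes the points are already expressed in the camera frame, meaning the extrinsic calibration has been applied. The intrinsics and box values are made up for illustration.

```python
def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates to pixel (u, v).

    Returns None for points at or behind the camera plane (z <= 0).
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def fraction_inside(points_cam, box_2d, intrinsics):
    """Fraction of projectable points that fall inside a 2D box.

    box_2d is (u_min, v_min, u_max, v_max); intrinsics is (fx, fy, cx, cy).
    """
    u_min, v_min, u_max, v_max = box_2d
    hits = 0
    total = 0
    for point in points_cam:
        pixel = project_to_pixel(point, *intrinsics)
        if pixel is None:
            continue
        total += 1
        u, v = pixel
        if u_min <= u <= u_max and v_min <= v <= v_max:
            hits += 1
    return hits / total if total else 0.0


intrinsics = (1000.0, 1000.0, 640.0, 360.0)  # hypothetical fx, fy, cx, cy
object_points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.0, -1.0)]
camera_box = (600.0, 300.0, 700.0, 400.0)
print(fraction_inside(object_points, camera_box, intrinsics))
```

A low fraction for an object that is clearly visible in both sensors suggests a calibration or timing problem worth investigating before labeling continues.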

Strategies to Improve Annotation Accuracy

1. Use Advanced Annotation Platforms

• Leverage tools that offer:

o 3D visualization and manipulation

o Combined camera and LiDAR views for more accurate labeling

o Smart snapping and interpolation

Tools: Scale AI, Supervisely, CVAT-3D, Segments.ai

2. Employ Semi-Automated Annotation

• Use object detection models to pre-label data and simplify workflows.

• Combine with human-in-the-loop verification.

Benefit: Reduces manual labor while improving annotation consistency.
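A minimal sketch of how pre-labels might be combined with human verification: model predictions are split by confidence, so that confident ones get a quick spot check and uncertain ones get a full manual review. The dictionary fields (`id`, `score`) and the 0.9 threshold are assumptions for this example, not part of any specific tool's output format.

```python
def route_prelabels(predictions, spot_check_threshold=0.9):
    """Split model pre-labels into two human review queues by confidence.

    Each prediction is a dict with at least a "score" key in [0, 1].
    Returns (spot_check, full_review).
    """
    spot_check = []
    full_review = []
    for prediction in predictions:
        if prediction["score"] >= spot_check_threshold:
            # Confident pre-label: a human only needs to confirm it.
            spot_check.append(prediction)
        else:
            # Uncertain pre-label: a human should redo or correct it.
            full_review.append(prediction)
    return spot_check, full_review


prelabels = [
    {"id": 1, "label": "car", "score": 0.95},
    {"id": 2, "label": "pedestrian", "score": 0.40},
]
confident, uncertain = route_prelabels(prelabels)
print([p["id"] for p in confident], [p["id"] for p in uncertain])
```

Both queues still pass through a person, which keeps the human-in-the-loop step while concentrating effort on the hard cases.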

3. Establish Clear Annotation Protocols

• Labeling specifications should cover minimum object sizes and how to handle occlusion.

• Illustrate the common cases and give guidance for rare ones.

Benefit: Minimises human error and variation between annotators.

4. 3D-Aware QA Processes

• Perform post-annotation validation:

o Check for missing labels

o Validate object dimensions and orientation

o Review alignment with camera views

Benefit: Increases the confidence in annotations and elevates model results.
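The post-annotation checks listed above can be partly automated. The sketch below validates one 3D box annotation for a missing label, implausible dimensions, and an out-of-range yaw angle. The annotation format and the per-class size limits are hypothetical and would need to match your own labeling specification.

```python
import math


def validate_box(box, size_limits):
    """Return a list of issues found in one 3D box annotation.

    box: dict with "label", "size" as (length, width, height) in metres,
         and "yaw" in radians.
    size_limits: maps a class name to {"length": (lo, hi), ...}.
    """
    label = box.get("label")
    if not label:
        return ["missing label"]

    issues = []
    limits = size_limits.get(label)
    if limits is None:
        issues.append(f"unknown class: {label}")
    else:
        for axis, value in zip(("length", "width", "height"), box["size"]):
            lo, hi = limits[axis]
            if not lo <= value <= hi:
                issues.append(f"{axis} {value} outside [{lo}, {hi}]")

    if not -math.pi <= box["yaw"] <= math.pi:
        issues.append("yaw outside [-pi, pi]")
    return issues


# Illustrative limits only; real limits come from the annotation guidelines.
limits = {"car": {"length": (2.5, 6.0), "width": (1.4, 2.5), "height": (1.0, 2.5)}}
suspect = {"label": "car", "size": (45.0, 1.8, 1.5), "yaw": 4.0}
print(validate_box(suspect, limits))
```

Checks like these catch mechanical errors cheaply, leaving reviewers free to focus on alignment with the camera views.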

5. Utilize Synthetic & Augmented Data

• Generate or augment point cloud datasets with simulators such as CARLA and AirSim to cover real-world cases.

Benefit: Improves the completeness of datasets.

6. Train and Specialize Annotators

• Provide 3D-specific training to labeling teams.

• Have labeling teams specialize in a narrow set of classes (for example, pedestrians or traffic signs) for the best results.

Benefit: Shortens annotation time considerably and reduces the chance of labeling mistakes.

Summary Table

| Challenge | Strategy to Mitigate |
| --- | --- |
| Sparsity & Occlusion | Use multi-sensor views; AI-assisted tools |
| Unstructured Format | 3D-native annotation platforms |
| Class Ambiguity | Exact labeling guidelines; rigorous QA controls |
| Time-Intensive Labeling | Pre-labeling + human verification |
| Misalignment | Sensor calibration + multi-modal QA |

Final Thoughts

Annotating point clouds is critical to building reliable 3D perception technologies. Combining sophisticated tools, systematic procedures, and automation improves team productivity and helps deliver the quality data that fuels real-world autonomy and intelligence.

Contact Infosearch for your data annotation services.

0 notes