#data warehouse to aws migration


Best Practices for a Smooth Data Warehouse Migration to Amazon Redshift

In the era of big data, many organizations outgrow traditional on-premises data warehouses. Moving to a scalable, cloud-based service like Amazon Redshift appeals to companies that want to improve performance, cut costs, and gain flexibility in their data operations. However, migrating a data warehouse to AWS, and to Amazon Redshift in particular, can be complex and calls for careful planning and precise execution. In this article, we’ll explore best practices for a Redshift migration, covering essential steps from planning to optimization.

1. Establish Clear Objectives for Migration

Before diving into the technical process, it’s essential to define clear objectives for your data warehouse migration to AWS. Are you primarily looking to improve performance, reduce operational costs, or increase scalability? Understanding the ‘why’ behind your migration will help guide the entire process, from the tools you select to the migration approach.

For instance, if your main goal is to reduce costs, you’ll want to compare Amazon Redshift’s on-demand (pay-as-you-go) pricing with reserved nodes for predictable workloads. On the other hand, if performance is your focus, choosing the right node type and count and optimizing queries will become a priority.

2. Assess and Prepare Your Data

Data assessment is a critical step in ensuring that your Redshift data warehouse can support your needs post-migration. Start by categorizing your data to determine what should be migrated and what can be archived or discarded. AWS provides tools like the AWS Schema Conversion Tool (SCT), which helps assess and convert your existing data schema for compatibility with Amazon Redshift.

For structured data that fits into Redshift’s SQL-based architecture, SCT can automatically convert schema from various sources, including Oracle and SQL Server, into a Redshift-compatible format. However, data with more complex structures might require custom ETL (Extract, Transform, Load) processes to maintain data integrity.
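One way to make the categorization step concrete is a small inventory script. The sketch below assumes you have already exported a list of tables with a last-queried date from your source warehouse; the table names, the 365-day cutoff, and the `classify` helper are all hypothetical.

```python
from datetime import date

# Hypothetical inventory exported from the source warehouse:
# (table name, date the table was last queried)
inventory = [
    ("orders", date(2024, 5, 1)),
    ("web_logs_2015", date(2016, 1, 10)),
    ("customers", date(2024, 4, 20)),
]

def classify(tables, today, max_idle_days=365):
    """Split tables into 'migrate' and 'archive' by how recently they were queried."""
    plan = {"migrate": [], "archive": []}
    for name, last_queried in tables:
        idle_days = (today - last_queried).days
        bucket = "migrate" if idle_days <= max_idle_days else "archive"
        plan[bucket].append(name)
    return plan

plan = classify(inventory, today=date(2024, 6, 1))
print(plan)
```

The cutoff is a business decision rather than a technical one, so treat the number as a starting point for discussion with data owners.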

3. Choose the Right Migration Strategy

Several migration strategies are available when moving to Amazon Redshift, each suited to different scenarios:

Lift and Shift: This approach involves migrating your data with minimal adjustments. It’s quick but may require optimization post-migration to achieve the best performance.

Re-architecting for Redshift: This strategy involves redesigning data models to leverage Redshift’s capabilities, such as columnar storage and distribution keys. Although more complex, it ensures optimal performance and scalability.

Hybrid Migration: In some cases, you may choose to keep certain workloads on-premises while migrating only specific data to Redshift. This strategy can help reduce risk and maintain critical workloads while testing Redshift’s performance.

Each strategy has its pros and cons, and selecting the best one depends on your unique business needs and resources. For a fast-tracked, low-cost migration, lift-and-shift works well, while those seeking high-performance gains should consider re-architecting.

4. Leverage Amazon’s Native Tools

AWS provides a suite of tools that streamline and enhance the migration process:

AWS Database Migration Service (DMS): This service facilitates seamless data migration by enabling continuous data replication with minimal downtime. It’s particularly helpful for organizations that need to keep their data warehouse running during migration.

AWS Glue: Glue is a serverless data integration service that can help you prepare, transform, and load data into Redshift. It’s particularly valuable when dealing with unstructured or semi-structured data that needs to be transformed before migrating.

Using these tools allows for a smoother, more efficient migration while reducing the risk of data inconsistencies and downtime.
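As an illustration of how a DMS task is scoped, the sketch below builds the table-mapping JSON that tells a replication task which schemas and tables to include. The schema names and the `selection_rule` helper are hypothetical, and the rule layout follows the documented selection-rule format as best understood here, so check it against the current DMS documentation before use.

```python
import json

def selection_rule(rule_id, schema, table="%"):
    """Build one DMS selection rule that includes matching tables."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": f"include-{schema}",
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": "include",
    }

# Hypothetical source schemas to replicate; "%" matches every table in a schema
schemas = ["sales", "finance"]
table_mappings = {
    "rules": [selection_rule(i, s) for i, s in enumerate(schemas, start=1)]
}
print(json.dumps(table_mappings, indent=2))
```

Generating the mapping from a list keeps the migration scope in version control instead of in a console form.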

5. Optimize for Performance on Amazon Redshift

Once the migration is complete, it’s essential to take advantage of Redshift’s optimization features:

Use Sort and Distribution Keys: Redshift uses a table’s distribution style and distribution key to decide how rows are spread across the cluster’s nodes. Choosing a key that matches common join columns can significantly improve query performance by reducing data movement between nodes. Sort keys, on the other hand, determine the on-disk order of rows, which lets Redshift skip blocks that fall outside a query’s filter range and so reduces disk I/O.

Analyze and Tune Queries: Post-migration, analyze your queries to identify potential bottlenecks. Use EXPLAIN to inspect the plans the query optimizer produces, and keep table statistics current with ANALYZE so the optimizer can choose efficient plans for complex queries.

Compression and Encoding: Amazon Redshift stores data in columns and can choose a compression encoding for each column automatically. Well-chosen encodings reduce the size of your data, which saves storage costs and boosts query speed, so review the encodings on your largest tables (for example with ANALYZE COMPRESSION).
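To show what choosing keys looks like in practice, here is a minimal sketch that renders a Redshift CREATE TABLE statement with a distribution key and a sort key. The `sales` table, its columns, and the `build_create_table` helper are hypothetical; the right keys depend on your own join and filter patterns.

```python
def build_create_table(name, columns, distkey, sortkeys):
    """Render a Redshift CREATE TABLE statement with KEY distribution and a sort key."""
    col_names = [col for col, _ in columns]
    if distkey not in col_names:
        raise ValueError(f"distkey {distkey!r} is not a column")
    missing = [k for k in sortkeys if k not in col_names]
    if missing:
        raise ValueError(f"sort key columns not found: {missing}")
    col_sql = ",\n".join(f"    {col} {ctype}" for col, ctype in columns)
    return (
        f"CREATE TABLE {name} (\n{col_sql}\n)\n"
        f"DISTSTYLE KEY\nDISTKEY ({distkey})\n"
        f"SORTKEY ({', '.join(sortkeys)});"
    )

# Hypothetical fact table: joined on customer_id, filtered by sale_date
ddl = build_create_table(
    "sales",
    [
        ("sale_id", "BIGINT"),
        ("customer_id", "BIGINT"),
        ("sale_date", "DATE"),
        ("amount", "DECIMAL(12,2)"),
    ],
    distkey="customer_id",
    sortkeys=["sale_date"],
)
print(ddl)
```

Validating the key columns before rendering catches typos early, which matters when DDL for many tables is generated in bulk.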

6. Plan for Security and Compliance

Data security and regulatory compliance are top priorities when migrating sensitive data to the cloud. Amazon Redshift includes various security features such as:

Data Encryption: Use encryption options, including encryption at rest using AWS Key Management Service (KMS) and encryption in transit with SSL, to protect your data during migration and beyond.

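Access Control: Amazon Redshift integrates with AWS Identity and Access Management (IAM), so you can control who may manage clusters and which AWS resources Redshift can reach, while database-level GRANT statements restrict access to schemas and tables. Together, these controls ensure that only authorized personnel can access sensitive data.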

Audit Logging: Redshift’s logging features provide transparency and traceability, allowing you to monitor all actions taken on your data warehouse. This helps meet compliance requirements and secures sensitive information.

7. Monitor and Adjust Post-Migration

Once the migration is complete, establish a monitoring routine to track the performance and health of your Redshift data warehouse. Amazon Redshift offers built-in monitoring features through Amazon CloudWatch, which can alert you to anomalies and allow for quick adjustments.

Additionally, be prepared to make adjustments as you observe user patterns and workloads. Regularly review your queries, data loads, and performance metrics, fine-tuning configurations as needed to maintain optimal performance.

Final Thoughts: Migrating to Amazon Redshift with Confidence

Migrating your data warehouse to Amazon Redshift can bring substantial advantages, but it requires careful planning, robust tools, and continuous optimization to unlock its full potential. By defining clear objectives, preparing your data, selecting the right migration strategy, and optimizing for performance, you can ensure a seamless transition to Redshift. Leveraging Amazon’s suite of tools and Redshift’s powerful features will empower your team to harness the full potential of a cloud-based data warehouse, boosting scalability, performance, and cost-efficiency.

Whether your goal is improved analytics or lower operating costs, following these best practices will help you make the most of your Amazon Redshift data warehouse, enabling your organization to thrive in a data-driven world.

#data warehouse migration to aws#redshift data warehouse#amazon redshift data warehouse#redshift migration#data warehouse to aws migration#data warehouse#aws migration


-SHOOT

Prompt: Root/The Machine/Shaw

... just before Root passed out...Root clenches at the most injured parts of her abdomen...a sleight of hand and "inserting" a small object into the most life-threatening part of her stomach...

When the paramedics...got out of sight....they moved Root from the 'bus and moved to a customized RV/van type....

The Machine replicates and "migrates" upwards in a vein..that takes up to Roots brain and begins to replicates like being an Incratitor and implants themselves all over it's new confines....and starts to use microscopic wiring and placing them...and morphine into identical parts of Roots entire "biosphere"s.. and starts by eradicate the Co-Clear Implant behind her ear external and morph... Into a NEW AND GREATLY parts....and it's NOT JUST THE IMPLANT BEHIND THE EAR. ... it's the entire system....

It's years later.. Root went back to her studio loft... despite The Machine Objections,. .Root found where The Machine has been Embodied into the walls of the warehouse where Shaw shot Root. Inside the walls, the ceiling and the floor.,... And she plugged in the flash drive...too much data shot through Roots system... leaving her unconscious get out of there.. waking to the fingers of the sun peaking over horizon into a field of waving wheat....she escaped to the place she BECAME ROOT....the small town in TX and THAT LIBRARY...TO THE INTERFACE (WHICH CONFUSED ROOT) IN WHICHED SAMANTHA GROVES WAS RE-BORN ROOT... And, when The Machine became vulnerable She'd send Root to another Data-Base.,and start a cascaded failure and blowing ALL THE PHYSICAL PERMITMENTORS TO TO ASH....and wot RE-BOOT after 2-Hours of rest . And, "nuzzles" Ms. Groves awake

Root was in a state of influx of transformation into a NEW AND IMPROVED" versions of, both, The Machine and ROOT...

Years later, Root is ONLY ABLE TO REST 2-HOURS OUT OF A 24-HOURS Days (like fucking Batman)...and she was like a super soldier (see Captain America)...and even though Harold and Sammen are still alive and working their own numbers that The Machine has been send them on....Ms. Groves had her own....more deadly....more dangerous... numbers .... Can see inferno red and x-ray vision... can hear a heart beat around a movie theater right by a speaker and getting into a car...

Years later The Machine interfers does THE #1 Golden Rule.... even though all live is valued. You can NOT make exceptions....and put Roots ..and Harold/Sammen just on a tralimentary... and ALL of them will have to deal with sense of betrayal and Harold Sammen resentment against Root. and when all things are BEGINNING so soothing out ... trust is The less issue. Harold doesn't want to share His Machine with Ms. Groves.. Sammen is seriously self destructive

FYI: FAN ART FANFIC IS FREE USE. NOT FOR PROFIT... AND ARE BASICALLY AU. SINCE THEY REFLECT THE *FANS* "ART"...AND AS LONG AS WE PUT THE. DISCLAIMER*NOT FOR PROFIT*... NONE OF THE*Conceptual OWNERS* OF THE ORIGINAL PROPERTY... THEY WILL BE COOL. BECAUSE FAN ART AND/OR FAN FIC.... BECAUSE IT'S FREE ADVERTISING. IE: CHECK OUT ARIANNA ERGANDA'S REACTION TO HOW OVERWHELMING OF THE FAN ART AND FAN FICTION.. SHE WAS IN AWE. "GELPHIE" ...SHE NEVER SAID STOP.. YOUR MAKING MONEY OFF ME

I have all DEGRASSE AND THE NEXT GENERATION.. FOR FREE DOWNLOAD AND I WATCH THEM. I DON'T HAVE TO PAY...AND I'M NOT MAKING ANY OFF THEM. ID, I SHOWED WITHOUT MAKING ANY MONEY.. IT'S*EDUCATIONAL PURPOSES*. ...BTW: DEGRASSI IS CANADIAN..

AND I WATCH CANON/ 'SHIPs ON YOUTUBE FOR FREE...ON PLAYLIST....OR TUBI....FREEVEE... DISNEY+... HULU... NETFLIX... etc.

I AM NOT EVEN GETTING PAID BY THE POWERS TO BE... FOR FREE ADVERTISING. THAT I JUST DID... NO CONTRACTS. I *ADVERTISE* WITH OUT THE ASPECT OF EVER EXCEPTING PAID.

The rest of the thread is here.

tl;dr: Don’t monetize AO3, kids. You won’t like what happens next.

#sheriff nicole haught#wynonna earp#nicole haught#waverly earp#wayhaught#katherine barrell#wynonna earp vengeance#sheriff forbes#wynonnaearpedit#pride month#default tags#tags are your best friends#[email protected]


Beyond Clicks and Code: Inside the Real Work of Odoo Development Companies

Ask any CTO or operations lead when they knew it was time for a serious ERP — and you’ll probably hear a version of the same story:

“Nothing talks to anything else. Our finance team works in spreadsheets. Our warehouse still uses WhatsApp. Our CRM is an Excel file with tabs named after sales reps.”

That chaos — the tangle of disconnected systems, manual entries, and late-night panic over broken formulas — is usually when a company looks at Enterprise Resource Planning. And among the available platforms, Odoo stands out: modular, open-source, flexible, and affordable. That’s when many organizations turn to an experienced Odoo development company to help make sense of the mess.

But here’s the part most businesses miss:

Odoo isn’t magic. It’s a toolkit. What makes it powerful is how it’s built — and that’s where Odoo development companies come in.

Let’s pull back the curtain — not on the demo videos or marketing pages, but on what these teams actually do, line by line, decision by decision.

1. They Don’t Start with Code — They Start with Listening

You can’t build what you don’t understand.

A real Odoo development team begins not with modules or models — but with meetings. They talk to people:

The CFO who wants cash flow forecasting

The warehouse manager still printing pick lists

The HR head juggling three compliance standards

Then, they translate business language into technical architecture:

“We need approvals” → state-machine transitions on purchase orders

“Different rates by state” → dynamic tax computation logic

“We work offline sometimes” → asynchronous sync and data queuing

The goal is to make Odoo understand how the business actually works — not how it should.
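The first translation above, approvals as state-machine transitions, can be sketched in plain Python. This is not Odoo's API; the states, the `TRANSITIONS` table, and the `PurchaseOrder` class are hypothetical and only show the idea of allowing explicit transitions and rejecting everything else.

```python
# Allowed transitions for a hypothetical purchase-order approval flow
TRANSITIONS = {
    "draft": {"submitted"},
    "submitted": {"approved", "rejected"},
    "rejected": {"draft"},
    "approved": set(),
}

class PurchaseOrder:
    def __init__(self):
        self.state = "draft"

    def move_to(self, new_state):
        """Change state only if the transition is explicitly allowed."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot go from {self.state} to {new_state}")
        self.state = new_state

po = PurchaseOrder()
po.move_to("submitted")
po.move_to("approved")
print(po.state)  # prints approved
```

Keeping the transitions in one table makes the approval rules easy to review with the people who own the process.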

2. Custom Modules Aren’t a Feature — They’re the Foundation

You can install Odoo out of the box. But for any serious business — especially in the U.S. — vanilla won’t do.

Why?

Sales Tax: Avalara doesn't handle all state rules by default. You need custom logic and region-based overrides.

Payroll: U.S. labor laws vary by state and even municipality. PTO accrual, break rules, and overtime require deep customization.

Dashboards: Executives demand real-time reporting, not spreadsheets. KPIs like COGS, MRR, and deferred income require custom SQL + QWeb/JS widgets.

These aren’t tweaks. They’re architectural rebuilds.

Behind the scenes, developers:

Extend core models

Override default methods

Define security roles and access logic

Write robust integration tests for critical workflows

3. Integration Isn’t Optional — It’s Expected

Most businesses already use dozens of services:

Stripe for billing

Shopify for storefront

Twilio for messaging

UPS for logistics

Legacy CRM or MES systems

The job of an Odoo development company isn’t to just “connect” — it’s to orchestrate.

That means:

Writing Python middleware (FastAPI/Flask)

Managing OAuth tokens and API keys

Creating cron jobs for non-real-time syncs

Parsing JSON, XML, and CSV safely into Odoo’s ORM

Great devs build resilience: they assume failure and plan for recovery.
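One common way to "assume failure" is to retry transient errors with exponential backoff. The sketch below is a generic illustration, not code from any particular integration; `flaky_sync` is a hypothetical stand-in for an external API call, and real code would catch narrower exception types than `Exception`.

```python
import time

def call_with_retry(func, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func(); on failure wait base_delay * 2**attempt and try again."""
    for attempt in range(attempts):
        try:
            return func()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the error to the caller
            sleep(base_delay * 2 ** attempt)

# Hypothetical flaky API call that fails twice, then succeeds
calls = {"count": 0}

def flaky_sync():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary outage")
    return "synced"

delays = []  # record the waits instead of actually sleeping
result = call_with_retry(flaky_sync, sleep=delays.append)
print(result, delays)  # prints synced [0.5, 1.0]
```

Passing `sleep` as a parameter keeps the helper testable without real waiting.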

4. Data Migration Is a Battle of Patience and Precision

Here’s the truth: Every ERP implementation involves digital archaeology.

Weeks of:

Decoding broken spreadsheets

Extracting legacy data from Access or FileMaker

Normalizing inconsistent SKUs and records

Writing ETL pipelines using Pandas

Testing imports in staging environments

Building rollback scripts to prevent live corruption

This is where a mistake can kill the go-live.

Good Odoo teams know this — and they test obsessively.
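As a small example of the normalization work described above, the sketch below cleans inconsistent SKUs and separates rows that cannot be imported safely. It uses only the standard library rather than Pandas, and the field names, the sample records, and the normalization rules are hypothetical.

```python
import re

def normalize_sku(raw):
    """Uppercase, trim, and collapse separators so variants map to one SKU."""
    cleaned = re.sub(r"[\s_\-]+", "-", raw.strip().upper())
    return cleaned.strip("-")

def clean_rows(rows):
    """Return (valid rows keyed by SKU, rejected rows) from legacy records."""
    valid, rejected = {}, []
    for row in rows:
        sku = normalize_sku(row.get("sku", ""))
        if not sku or sku in valid:
            rejected.append(row)  # empty, or a duplicate after normalization
            continue
        valid[sku] = {**row, "sku": sku}
    return valid, rejected

legacy = [
    {"sku": " ab 123 ", "name": "Widget"},
    {"sku": "AB-123", "name": "Widget (dup)"},
    {"sku": "", "name": "Unknown"},
    {"sku": "cd_9", "name": "Gadget"},
]
valid, rejected = clean_rows(legacy)
print(sorted(valid), len(rejected))  # prints ['AB-123', 'CD-9'] 2
```

Rejected rows are kept rather than dropped so that someone can review them before go-live.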

5. Performance and Scaling Go Beyond Hosting

Sure, anyone can host Odoo on AWS. But once:

Sales reps are logging in hourly

Warehouses are printing 300 pick tickets

Cron jobs trigger 100,000 line items

Bottlenecks happen — and that’s where real engineering begins:

PostgreSQL: Query optimization, indexing, slow query monitoring

ORM: Avoiding nested loops and inefficient searches

Web Layer: Load balancing with NGINX, gzip compression, and static-asset caching

Workers: Separating cron from real-time tasks

Caching: Redis for sessions and metadata

Deployment: Docker for environment isolation, Helm for Kubernetes scaling, CI/CD pipelines for fast deployment

Performance isn’t a checkbox — it’s a continuous engineering discipline.
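The "nested loops" item in the list above is easiest to see side by side. The sketch below uses plain in-memory lists as a stand-in for database records, so it is an analogy rather than Odoo ORM code; the data and function names are hypothetical.

```python
# Hypothetical in-memory records standing in for database rows
orders = [
    {"id": 1, "partner_id": 10},
    {"id": 2, "partner_id": 11},
    {"id": 3, "partner_id": 10},
]
partners = [{"id": 10, "name": "Acme"}, {"id": 11, "name": "Globex"}]

def names_nested(orders, partners):
    """Slow pattern: scan every partner for every order, O(n * m)."""
    result = []
    for order in orders:
        for partner in partners:
            if partner["id"] == order["partner_id"]:
                result.append(partner["name"])
    return result

def names_batched(orders, partners):
    """Faster pattern: build one lookup table, then make a single pass, O(n + m)."""
    by_id = {p["id"]: p["name"] for p in partners}
    return [by_id[o["partner_id"]] for o in orders]

print(names_batched(orders, partners))  # prints ['Acme', 'Globex', 'Acme']
```

With an ORM the same idea usually means fetching related records in one query up front instead of issuing a search inside a loop.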

6. Security Isn’t Just About User Roles — It’s Architecture

In the U.S., compliance is not optional:

Healthcare → HIPAA

Finance → SOC 2, PCI

Education → FERPA

Retail → CCPA / GDPR

Security must be engineered into the platform:

Row-level and field-level permissions

Encrypted fields for sensitive PII

RBAC linked to departments and positions

Single sign-on and two-factor authentication via Okta or Azure AD

Automated backups with encryption

Audit logs, real-time monitoring (Sentry, New Relic), and intrusion prevention (Fail2Ban)

Security isn’t reactive — it’s embedded in the design.

7. They Build for Maintainability, Not Just Delivery

ERP disasters often start with one bad decision: prioritizing speed over structure.

The best teams write boring code:

Clearly documented

Easy to test

Easy to extend

That includes:

Docstrings on every function

Unit + integration tests

Version-controlled configs

No hardcoded values — use config parameters

Consistent naming conventions

CI checks for linting and test coverage

A year from now, someone else will own that code — make it readable.
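The "no hardcoded values" rule can be illustrated with a small settings helper. Odoo has its own system-parameter store, which this sketch deliberately does not use; it reads from environment-style variables instead, and the setting names and the `get_setting` helper are hypothetical.

```python
import os

def get_setting(name, default=None, cast=str, env=os.environ):
    """Read a setting from the environment, falling back to a default."""
    raw = env.get(name)
    if raw is None:
        if default is None:
            raise KeyError(f"missing required setting: {name}")
        return default
    return cast(raw)

# Hypothetical setting names; a dict stands in for the real environment here
fake_env = {"SYNC_BATCH_SIZE": "500"}
batch_size = get_setting("SYNC_BATCH_SIZE", default=100, cast=int, env=fake_env)
timeout = get_setting("API_TIMEOUT_SECONDS", default=30, cast=int, env=fake_env)
print(batch_size, timeout)  # prints 500 30
```

Failing loudly on a missing required setting is usually better than silently running with a guessed value.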

8. They Say No — And That’s a Good Thing

The hardest thing a dev team can say?

“No.”

No to a 4-week, 10-module rollout

No to cloning a broken legacy ERP

No to skipping UAT before go-live

Because saying yes to those things means technical debt, user frustration, and ERP failure.

Great developers ask hard questions. They push for clarity, not convenience. That’s not arrogance — that’s craftsmanship.

In Conclusion: Odoo Isn’t the Product. The Team Is.

You can install Odoo in five minutes. You can browse apps and install modules in an hour.

But building a resilient, secure, scalable ERP — that’s:

Months of engineering

Thousands of decisions

Countless hours of testing and optimization

Odoo development companies aren’t just vendors. They’re:

Translators

Engineers

Strategists

Problem-solvers

When your dashboards reflect live data, your warehouse hums without WhatsApp, and your finance team never opens Excel — you’ll realize:

This isn’t just software. This is how your company runs.

#Odoo Development Company#Odoo ERP Implementation#Custom Odoo Modules#Odoo Integration Services#Odoo Performance Optimization


ERP Cloud Platform for SAP Business One: What It Is, Benefits & Migration Guide

Running your business on outdated, on-premise systems is like trying to win a race with a flat tire. If you're using SAP Business One and still managing your infrastructure in-house, it's time to consider a smarter, more flexible option: an ERP Cloud Platform.

We are Maivin, an SAP Business One partner in Delhi NCR. Let’s get straight to the point on ERP cloud platforms, with examples and real-world benefits.

What is an ERP Cloud Platform?

An ERP Cloud Platform is a hosted environment that allows you to run your ERP software—like SAP Business One—on remote servers via the internet, instead of installing and managing it on local computers.

Example: Think of it like moving from maintaining your own electricity generator to using a reliable power grid. Instead of dealing with hardware, backups, and updates, you access SAP Business One anytime, anywhere, via the cloud.

Key Benefits:

No upfront investment in hardware

Accessible from any device with internet

Data backups and updates managed automatically

Faster performance and better uptime

The Real-Life Benefits of SAP Business One on Cloud

Running SAP Business One in the cloud can pay off for business owners in several practical ways:

1. Accessibility & Remote Work

Benefit: Work from anywhere, whether at home, in the warehouse, or at a client site. Example: A sales manager in Delhi updates a quote on their tablet while visiting a retailer in Gurugram.

2. Real-Time Data Visibility

Benefit: Access live dashboards and reports. Example: Your CFO in Noida checks cash flow while your warehouse team in Faridabad tracks inventory levels.

3. Automatic Software Updates

Benefit: You’re always on the latest SAP version without manual effort. Example: No need to schedule downtime or hire IT experts for version upgrades.

4. Lower IT Maintenance Costs

Benefit: No need to buy or maintain servers. Example: A mid-sized manufacturing firm saves ₹5–10 lakhs yearly by avoiding on-site infrastructure and the cost of a full-time IT admin.

5. Enhanced Data Security

Benefit: Cloud providers offer high-level security, encryption, and backups. Example: Even if your office systems are attacked or damaged, your ERP data stays secure in the cloud.

6. Scalability and Flexibility

Benefit: Easily scale your ERP usage as your business grows. Example: A personal care product company launches two new product lines and expands its cloud ERP without infrastructure upgrades.

Migrating to an ERP Cloud Platform: What to Expect

Step 1: Assessment

We evaluate your current SAP B1 setup and determine cloud readiness.

Step 2: Cloud Hosting Setup

We help you choose between public, private, or hybrid cloud (like AWS, Azure, or local data centers).

Step 3: Data Migration & Configuration

Your existing SAP data is securely migrated. Customizations and settings are replicated in the cloud.

Step 4: Testing & Validation

We run tests to ensure everything works as expected before going live.

Step 5: Go Live & Support

Once verified, your SAP Business One goes live on the cloud, with full support and training.
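For the testing and validation step, one simple check is to compare row counts between the old system and the cloud copy. The sketch below assumes the counts have already been collected (for example by running COUNT(*) on each side); the table names, the numbers, and the `compare_counts` helper are hypothetical.

```python
def compare_counts(source, target):
    """Return tables whose row counts differ or that are missing on the target."""
    problems = {}
    for table, expected in source.items():
        actual = target.get(table)  # None means the table was not found
        if actual != expected:
            problems[table] = {"source": expected, "target": actual}
    return problems

# Hypothetical counts gathered from the on-premise system and the cloud copy
source_counts = {"customers": 1200, "invoices": 45210, "items": 860}
target_counts = {"customers": 1200, "invoices": 45198}

mismatches = compare_counts(source_counts, target_counts)
print(mismatches)
```

Matching counts do not prove the data is identical, so a check like this is a first gate rather than a full validation.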

Why Maivin is Your Best Cloud ERP Partner

At Maivin, we specialize in SAP Business One deployment and cloud migration for small to mid-sized businesses in Delhi NCR and across India.

What You Get with Maivin:

Expert SAP Cloud Migration Team

End-to-End Setup & 24/7 Support

Data Security, Backups & Disaster Recovery

Cost-Effective Cloud Packages for SMEs

Boost Your Business with ERP Cloud Platform for SAP Business One

Cloud is no longer optional—it's essential. Moving SAP Business One to the cloud with Maivin ensures you stay agile, secure, and competitive.

📞 Contact Maivin today for a free cloud-readiness consultation and take your first step toward smarter, faster, more scalable business operations.

#SAPBusinessOne#ERPCloudPlatform#CloudERP#SAPB1#MaivinERP#DigitalTransformation#ERPForSMEs#CloudMigration#SAPPartnerIndia#BusinessAutomation


Cloud Database Solution Market Size, Share, Demand, Growth and Global Industry Analysis 2034: Powering Real-Time, Scalable Data Management

Cloud Database Solutions Market is undergoing a significant transformation, poised to expand from $13.5 billion in 2024 to $63.4 billion by 2034, registering an impressive CAGR of 16.7%. At its core, this market involves delivering database services over cloud platforms, enabling organizations to manage, store, and analyze data with increased scalability, flexibility, and cost efficiency. These solutions include relational and non-relational databases, data warehouses, and advanced analytics services — empowering businesses to accelerate decision-making, streamline operations, and drive innovation. As digital transformation accelerates globally, enterprises are turning to cloud-based databases as a critical enabler of agility and performance.
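The growth figures above can be sanity-checked with the standard compound annual growth rate formula, (end / start)^(1 / years) - 1. The short sketch below applies it to the quoted numbers; the `cagr` helper is just an illustration.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.167 means 16.7%)."""
    return (end_value / start_value) ** (1 / years) - 1

rate = cagr(13.5, 63.4, 10)  # USD billions, 2024 to 2034
print(f"{rate:.1%}")  # prints 16.7%
```

The result matches the stated 16.7%, so the start value, end value, and growth rate are internally consistent.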

Click to Request a Sample of this Report for Additional Market Insights: https://www.globalinsightservices.com/request-sample/?id=GIS33070

Market Dynamics

The growth of this market is fueled by several interconnected factors. A major driver is the increasing demand for big data analytics and real-time data processing, crucial for industries that rely on rapid insights. Additionally, the explosion of IoT applications and the adoption of AI-driven systems are pushing the need for scalable and secure data platforms. Public cloud deployments lead the market due to their affordability and elasticity, while hybrid clouds are gaining traction for offering enhanced control and security. Key trends include the rise of serverless databases, edge computing integration, and the adoption of multi-cloud strategies. However, challenges persist — chief among them are concerns around data security, privacy, and integration with legacy systems, especially for heavily regulated industries.

Key Players Analysis

The cloud database solutions market is characterized by both tech giants and emerging innovators. Dominating the landscape are major players like Amazon Web Services (AWS), Microsoft Azure, Google Cloud, and Oracle, known for their robust infrastructure and expansive service portfolios. These companies are setting the pace in innovation, offering solutions that integrate machine learning, quantum computing, and blockchain. Meanwhile, emerging firms such as MongoDB, Couchbase, Redis Labs, and DataStax are carving niches with specialized, scalable, and high-performance offerings. Competition is intensifying, with companies investing in mergers, acquisitions, and strategic partnerships to expand reach and capabilities. Compliance with data protection laws like GDPR and CCPA is also a major focus for players in this ecosystem.

Regional Analysis

Geographically, North America holds the largest market share, led by the United States, thanks to its early adoption of cloud technologies and dominance in tech innovation. Canada is also contributing to growth, supported by favorable government policies and a burgeoning tech startup scene. Europe follows closely, with countries such as Germany, the UK, and France pushing digital transformation initiatives and benefiting from strong compliance frameworks. Asia Pacific is emerging as the fastest-growing region, with China, India, Japan, and South Korea leading investment in cloud infrastructure and digital economies. Latin America and the Middle East & Africa are gradually catching up, with countries like Brazil, Mexico, UAE, and Saudi Arabia launching strategic initiatives focused on cloud migration, smart cities, and data-driven governance.

Recent News & Developments

The past few months have seen notable developments in this market. Google Cloud teamed up with Databricks to enhance its AI and data capabilities. Microsoft Azure introduced a new serverless database option aimed at optimizing scalability and cost for large enterprises. AWS launched a multi-region database service to improve data reliability and global reach. Oracle, a long-time investor in Ampere Computing, continued to build on Ampere’s Arm-based processors in its cloud infrastructure. IBM, on the other hand, released a new hybrid cloud database solution infused with AI, helping businesses automate and streamline data management. These initiatives reflect the industry’s focus on innovation, scalability, and customer-centric services.

Browse Full Report : https://www.globalinsightservices.com/reports/cloud-database-solution-market/

Scope of the Report

This report explores the full scope of the cloud database solutions market, covering various database types — relational, non-relational, and hybrid — as well as deployment models including public, private, and hybrid clouds. It evaluates product segments like DBaaS, database software, and appliances, alongside supporting services such as consulting, implementation, training, and maintenance. It analyzes trends in AI and blockchain integration, in-memory computing, and emerging quantum database applications. Applications range across verticals from BFSI and telecom to healthcare, manufacturing, and government. The report also addresses functionality such as data storage, security, processing, and backup. This deep dive offers a well-rounded perspective on current trends, growth opportunities, and competitive landscapes shaping the future of cloud database technology.

#clouddatabase #databasetechnology #cloudcomputing #multicloudsolutions #aiintegration #bigdataanalytics #serverlessarchitecture #digitaltransformation #hybridcloud #dataprivacy

Discover Additional Market Insights from Global Insight Services:

Supply Chain Security Market : https://www.globalinsightservices.com/reports/supply-chain-security-market/

Edutainment Market : https://www.globalinsightservices.com/reports/edutainment-market/

Magnetic Sensor Market : https://www.globalinsightservices.com/reports/magnetic-sensor-market/

AI Agent Market : https://www.globalinsightservices.com/reports/ai-agent-market/

Anime Market : https://www.globalinsightservices.com/reports/anime-market/

About Us:

Global Insight Services (GIS) is a leading multi-industry market research firm headquartered in Delaware, US. We are committed to providing our clients with the highest-quality data, analysis, and tools to meet all their market research needs. With GIS, you can be assured of the quality of the deliverables, a robust and transparent research methodology, and superior service.

Contact Us:

Global Insight Services LLC 16192, Coastal Highway, Lewes DE 19958 E-mail: [email protected] Phone: +1–833–761–1700 Website: https://www.globalinsightservices.com/


Azure Data Warehouse Migration for AI-Based Healthcare Company

IFI Techsolutions carried out an Azure SQL Database migration for a healthcare company in the USA, enhancing its scalability, flexibility, and operational efficiency.


Data Warehouse Migration to Microsoft Azure | IFI Techsolutions

IFI Techsolutions used PolyBase to move AWS Redshift data into Azure SQL Data Warehouse by running T-SQL commands against internal and external tables.

#DataMigration#CloudMigration#AzureSQL#AzureDataWarehouse#CloudComputing#DataAnalytics#AzureExpertMSP#AzureMigration#IFITechsolutions


Transforming the E-Commerce & Retail Industry with Scalable Tech Solutions


The E-Commerce & Retail industry has seen a profound evolution over the last decade—and the momentum isn't slowing down. Driven by rapidly changing consumer expectations, emerging technologies, and global market dynamics, retailers today must not only meet customers where they are but anticipate where they're going next.

At Allshore Technologies, we specialize in building intelligent, scalable, and customer-centric solutions tailored to modern retail needs. From seamless online shopping experiences to powerful back-end automation, our goal is to help e-commerce brands innovate, scale, and compete.

The Digital Shift in Retail

The retail landscape has shifted from being product-focused to experience-driven. Consumers now expect:

24/7 access to products and services

Personalized recommendations

Fast and reliable delivery

Frictionless checkout across all devices

This shift has made digital transformation in retail not just a strategy—but a necessity.

Core Technology Trends in E-Commerce & Retail

Retailers and e-commerce brands are leveraging the latest technologies to meet growing demands. Here are the most impactful trends:

1. AI & Machine Learning for Personalization

Using AI-driven algorithms, retailers can now deliver personalized product recommendations, dynamic pricing, and tailored marketing that increase conversion and retention.

2. Omnichannel Commerce

Customers expect a seamless experience across devices and channels. Integrating e-commerce platforms with in-store systems, mobile apps, and social commerce tools is key.

3. Mobile-First Design

With over 70% of traffic coming from smartphones, mobile-responsive design and native mobile apps are essential for customer engagement and retention.

4. Inventory Management & Automation

Real-time inventory tracking, predictive restocking, and warehouse automation reduce operational costs and improve order fulfillment accuracy.

5. Cloud-Based Infrastructure

Scalable, cloud-native architectures ensure uptime, speed, and reliability during high-traffic events like Black Friday and seasonal promotions.

6. AR & VR for Immersive Shopping

Virtual try-ons and 3D product views are reshaping how customers interact with products online, creating engaging, lifelike shopping experiences.

How Allshore Technologies Supports Retail Growth

We empower retailers and e-commerce businesses to grow faster with future-ready, scalable digital solutions. Our capabilities include:

Custom E-Commerce Development: Using platforms like Shopify, Magento, and WooCommerce, or building from scratch with headless commerce architecture.

Integration Services: Sync your online store with CRMs, ERPs, POS systems, and third-party APIs for better workflow and data visibility.

Customer Experience Platforms: We build customer-focused portals, mobile apps, and loyalty systems to drive brand affinity and repeat purchases.

Data & Analytics Dashboards: Make smarter business decisions with real-time dashboards that track customer behavior, sales trends, and inventory performance.

Cloud & DevOps for Retail: Ensure seamless deployment, zero downtime, and secure scalability using AWS, Azure, and GCP-based solutions.

Use Case: Scalable Fashion E-Commerce Platform

One of our retail clients, a fast-growing fashion brand, approached Allshore to upgrade their aging Shopify setup. We helped them migrate to a headless commerce model, enabling:

Lightning-fast page loads

API-first architecture for flexibility

Personalized product feeds using AI

Seamless mobile checkout integration

As a result, the client saw a 35% increase in conversion rate and a 50% reduction in cart abandonment.

#ecommercesolutions#RetailTechnology#OmnichannelCommerce#AllshoreTechnologies#DigitalRetail#CustomEcommerceDevelopment


Text

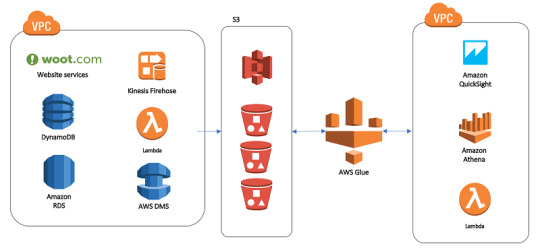

How AWS Transforms Raw Data into Actionable Insights

Introduction

Businesses generate vast amounts of data daily, from customer interactions to product performance. However, without transforming this raw data into actionable insights, it’s difficult to make informed decisions. AWS Data Analytics offers a powerful suite of tools to simplify data collection, organization, and analysis. By leveraging AWS, companies can convert fragmented data into meaningful insights, driving smarter decisions and fostering business growth.

1. Data Collection and Integration

AWS makes it simple to collect data from various sources — whether from internal systems, cloud applications, or IoT devices. Services like AWS Glue and Amazon Kinesis help automate data collection, ensuring seamless integration of multiple data streams into a unified pipeline.

Data Sources: AWS can pull data from internal systems (ERP, CRM, POS), websites, apps, IoT devices, and more.

Key Services

AWS Glue: Automates data discovery, cataloging, and preparation.

Amazon Kinesis: Captures real-time data streams for immediate analysis.

AWS Database Migration Service (AWS DMS): Facilitates seamless migration of databases to the cloud.

By automating these processes, AWS ensures businesses have a unified, consistent view of their data.
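As a rough illustration of the real-time collection step, the sketch below shapes a point-of-sale event into the arguments a Kinesis `PutRecord` call expects. The stream name `pos-events`, the event fields, and the helper `build_kinesis_record` are assumptions made up for this example; the sketch only builds the request and does not contact AWS.

```python
import json
from datetime import datetime, timezone


def build_kinesis_record(event: dict, partition_field: str) -> dict:
    """Shape one event as keyword arguments for a Kinesis PutRecord call."""
    if partition_field not in event:
        raise ValueError(f"event is missing partition field {partition_field!r}")
    payload = dict(event)
    # Stamp ingestion time so downstream consumers can measure lag.
    payload.setdefault("ingested_at", datetime.now(timezone.utc).isoformat())
    return {
        "StreamName": "pos-events",  # hypothetical stream name
        "Data": json.dumps(payload, sort_keys=True).encode("utf-8"),
        # Records that share a partition key are routed to the same shard,
        # which keeps events from one store in order.
        "PartitionKey": str(event[partition_field]),
    }


record = build_kinesis_record({"store_id": 42, "sku": "A-100", "qty": 3}, "store_id")
print(record["PartitionKey"], len(record["Data"]), "bytes")
# With boto3 installed and credentials configured, the record could be sent with:
#   boto3.client("kinesis").put_record(**record)
```

Partitioning by store is only one possible choice; a key with more distinct values spreads load across shards more evenly.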

2. Data Storage at Scale

AWS offers flexible, secure storage solutions to handle both structured and unstructured data. With services like Amazon S3, Redshift, and RDS, businesses can scale storage without worrying about hardware costs.

Storage Options

Amazon S3: Ideal for storing large volumes of unstructured data.

Amazon Redshift: A data warehouse solution for quick analytics on structured data.

Amazon RDS & Aurora: Managed relational databases for handling transactional data.

AWS’s tiered storage options ensure businesses only pay for what they use, whether they need real-time analytics or long-term archiving.
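One way tiered storage is typically expressed for S3 is a lifecycle configuration. The sketch below builds such a configuration as a plain dictionary; the prefix, the day thresholds, and the helper name `build_lifecycle_rule` are illustrative assumptions, not recommendations.

```python
import json


def build_lifecycle_rule(prefix: str, ia_after_days: int, archive_after_days: int) -> dict:
    """Build an S3 lifecycle configuration that moves objects to cheaper tiers."""
    if not 0 < ia_after_days < archive_after_days:
        raise ValueError("expected 0 < ia_after_days < archive_after_days")
    return {
        "Rules": [
            {
                "ID": f"tier-{prefix.strip('/').replace('/', '-')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    # Infrequent-access tier for data that is rarely queried.
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    # Archive tier for long-term retention.
                    {"Days": archive_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


config = build_lifecycle_rule("raw/sales/", ia_after_days=30, archive_after_days=365)
print(json.dumps(config, indent=2))
# With boto3, this could be applied to a (hypothetical) bucket with:
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-analytics-bucket", LifecycleConfiguration=config)
```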

3. Data Cleaning and Preparation

Raw data is often inconsistent and incomplete. AWS Data Analytics tools like AWS Glue DataBrew and AWS Lambda allow users to clean and format data without extensive coding, ensuring that your analytics processes work with high-quality data.

Data Wrangling Tools

AWS Glue DataBrew: A visual tool for easy data cleaning and transformation.

AWS Lambda: Run custom cleaning scripts in real-time.

By leveraging these tools, businesses can ensure that only accurate, trustworthy data is used for analysis.
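To make "custom cleaning scripts" concrete, here is a minimal sketch of a Lambda-style handler that normalizes a batch of records. The record fields (`email`, `amount`) and the event shape are assumptions for illustration; a real function would match whatever the upstream source actually delivers.

```python
from typing import Optional


def clean_record(raw: dict) -> Optional[dict]:
    """Return a normalized record, or None if it cannot be repaired."""
    email = str(raw.get("email", "")).strip().lower()
    if "@" not in email:
        return None  # no usable key, so the row is dropped
    try:
        # Remove thousands separators before parsing the amount.
        amount = round(float(str(raw.get("amount", "")).replace(",", "")), 2)
    except ValueError:
        return None
    return {"email": email, "amount": amount}


def handler(event, context):
    """Lambda-style entry point: clean a batch and report how many rows were dropped."""
    records = event.get("records", [])
    cleaned = [c for c in map(clean_record, records) if c is not None]
    return {"cleaned": cleaned, "dropped": len(records) - len(cleaned)}


sample = {"records": [
    {"email": " Ana@Example.com ", "amount": "1,200.50"},
    {"email": "not-an-email", "amount": "5"},
]}
print(handler(sample, None))
```

Counting dropped rows, rather than discarding them silently, makes data-quality problems visible to whoever monitors the pipeline.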

4. Data Exploration and Analysis

Before diving into advanced modeling, it’s crucial to explore and understand the data. Amazon Athena and Amazon SageMaker Data Wrangler make it easy to run SQL queries, visualize datasets, and uncover trends and patterns in data.

Exploratory Tools

Amazon Athena: Query data directly from S3 using SQL.

Amazon Redshift Spectrum: Query S3 data alongside Redshift’s warehouse.

Amazon SageMaker Data Wrangler: Explore and visualize data features before modeling.

These tools help teams identify key trends and opportunities within their data, enabling more focused and efficient analysis.
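A typical exploratory query against data in S3 might look like the sketch below, which prepares the parameters for an Athena query. The database, table, and column names and the results location are hypothetical; only the parameter names follow the Athena `StartQueryExecution` API.

```python
def build_athena_query(database: str, table: str, output_location: str, limit: int = 10) -> dict:
    """Build StartQueryExecution parameters for a top-selling-products query."""
    if not table.isidentifier():
        # Refuse anything that is not a plain identifier, to avoid SQL injection.
        raise ValueError("table must be a plain identifier")
    query = (
        "SELECT product_id, SUM(quantity) AS units_sold "
        f"FROM {table} "
        "GROUP BY product_id "
        "ORDER BY units_sold DESC "
        f"LIMIT {int(limit)}"
    )
    return {
        "QueryString": query,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


params = build_athena_query("retail", "sales", "s3://example-bucket/athena-results/")
print(params["QueryString"])
# With boto3, the query could be submitted with:
#   boto3.client("athena").start_query_execution(**params)
```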

5. Advanced Analytics & Machine Learning

AWS Data Analytics moves beyond traditional reporting by offering powerful AI/ML capabilities through services such as Amazon SageMaker and Amazon Forecast. These tools help businesses predict future outcomes, uncover anomalies, and gain actionable intelligence.

Key AI/ML Tools

Amazon SageMaker: An end-to-end platform for building and deploying machine learning models.

Amazon Forecast: Predicts business outcomes based on historical data.

Amazon Comprehend: Uses NLP to analyze and extract meaning from text data.

Amazon Lookout for Metrics: Detects anomalies in your data automatically.

These AI-driven services provide predictive and prescriptive insights, enabling proactive decision-making.
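To give a feel for what "detecting anomalies" means in practice, the sketch below flags values that sit far from the mean of a series using a simple z-score. This is a generic textbook technique chosen for illustration, not the algorithm used by any AWS service, and the sample figures are invented.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    if len(values) < 2:
        return []
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


daily_orders = [102, 98, 105, 99, 101, 97, 240, 103]  # invented sample data
print(find_anomalies(daily_orders))
```

A managed service would normally also account for seasonality and trend, which a plain z-score ignores.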

6. Visualization and Reporting

AWS’s Amazon QuickSight helps transform complex datasets into easily digestible dashboards and reports. With interactive charts and graphs, QuickSight allows businesses to visualize their data and make real-time decisions based on up-to-date information.

Powerful Visualization Tools

Amazon QuickSight: Creates customizable dashboards with interactive charts.

Integration with BI Tools: Easily integrates with third-party tools like Tableau and Power BI.

With these tools, stakeholders at all levels can easily interpret and act on data insights.

7. Data Security and Governance

AWS places a strong emphasis on data security with services such as AWS Identity and Access Management (IAM) and AWS Key Management Service (KMS). These tools provide robust encryption, access controls, and compliance features to ensure sensitive data remains protected while still being accessible for analysis.

Security Features

AWS IAM: Controls access to data based on user roles.

AWS KMS: Creates and manages the encryption keys that other AWS services use to protect data at rest.

Audit Tools: Services like AWS CloudTrail and AWS Config help track data usage and ensure compliance.

AWS also supports industry-specific data governance standards, making it suitable for regulated industries like finance and healthcare.
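Role-based access is usually expressed as an IAM policy document. The sketch below builds a read-only policy for one analytics prefix in S3; the bucket name, prefix, and helper name are assumptions for illustration, while the document structure follows the standard IAM policy format.

```python
import json


def read_only_s3_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy granting read-only access to one prefix of a bucket."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListAnalyticsPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Listing is limited to keys under the given prefix.
                "Condition": {"StringLike": {"s3:prefix": f"{prefix}/*"}},
            },
            {
                "Sid": "ReadAnalyticsObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


policy = read_only_s3_policy("example-analytics-bucket", "curated/sales")
print(json.dumps(policy, indent=2))
```

Granting only `s3:ListBucket` and `s3:GetObject` keeps analysts able to query data without being able to change or delete it.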

8. Real-World Example: Retail Company

Retailers are using AWS to combine data from physical stores, eCommerce platforms, and CRMs to optimize operations. By analyzing sales patterns, forecasting demand, and visualizing performance through AWS Data Analytics, they can make data-driven decisions that improve inventory management, marketing, and customer service.

For example, a retail chain might:

Use AWS Glue to integrate data from stores and eCommerce platforms.

Store data in S3 and query it using Athena.

Analyze sales data in Redshift to optimize product stocking.

Use SageMaker to forecast seasonal demand.

Visualize performance with QuickSight dashboards for daily decision-making.

This example illustrates how AWS Data Analytics turns raw data into actionable insights for improved business performance.

9. Why Choose AWS for Data Transformation?

AWS Data Analytics stands out due to its scalability, flexibility, and comprehensive service offering. Here’s what makes AWS the ideal choice:

Scalability: Grows with your business needs, from startups to large enterprises.

Cost-Efficiency: Pay only for the services you use, making it accessible for businesses of all sizes.

Automation: Reduces manual errors by automating data workflows.

Real-Time Insights: Provides near-instant data processing for quick decision-making.

Security: Offers enterprise-grade protection for sensitive data.

Global Reach: AWS’s infrastructure spans across regions, ensuring seamless access to data.

10. Getting Started with AWS Data Analytics

Partnering with a company like OneData can help streamline the process of implementing AWS-powered data analytics solutions. With their expertise, businesses can quickly set up real-time dashboards, implement machine learning models, and get full support during the data transformation journey.

Conclusion

Raw data is everywhere, but actionable insights are rare. AWS bridges that gap by providing businesses with the tools to ingest, clean, analyze, and act on data at scale.

From real-time dashboards and forecasting to machine learning and anomaly detection, AWS enables you to see the full story your data is telling. With partners like OneData, even complex data initiatives can be launched with ease.

Ready to transform your data into business intelligence? Start your journey with AWS today.


Text

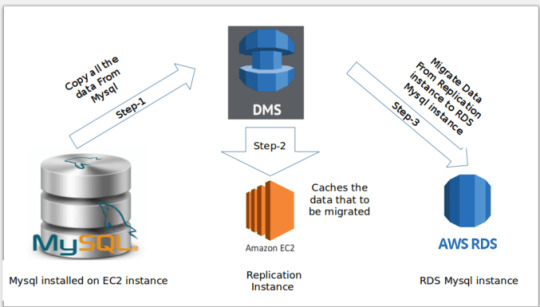

How to migrate databases using AWS DMS

Introduction

What is AWS Database Migration Service (DMS)?

AWS Database Migration Service (AWS DMS) is a cloud service that makes it possible to migrate relational databases, data warehouses, NoSQL databases, and other types of data stores. You can use AWS DMS to migrate your data into the AWS Cloud or between combinations of cloud and on-premises setups. Supported databases include Amazon Redshift, DynamoDB, ElastiCache, Aurora, and Amazon RDS.

Now let’s check why DMS is important

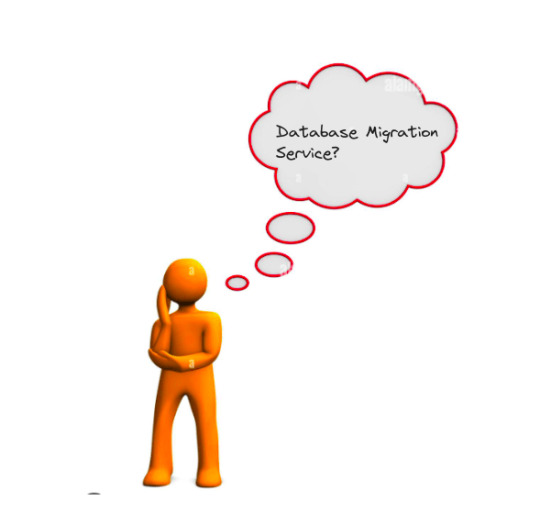

Why do we need Database Migration Service?

Reduced Downtime: Database migration services minimize downtime during the migration process. By continuously replicating changes from the source database to the target database, they ensure that data remains synchronized, allowing for a seamless transition with minimal disruption to your applications and users.

Security: Migration services typically provide security features such as data encryption, secure network connections, and compliance with industry regulations.

Cost Effectiveness: AWS has offered free use of Database Migration Service for a limited period when migrating to DocumentDB, Redshift, Aurora, or DynamoDB, and the service supports most widely used databases. For other targets, you pay based on the compute capacity used and the volume of log storage.

Scalability and Performance: Migration services are designed to handle large-scale migrations efficiently. They employ techniques such as parallel data transfer, data compression, and optimization algorithms to optimize performance and minimize migration time, allowing for faster and more efficient migrations.

Schema Conversion: AWS DMS, together with the AWS Schema Conversion Tool (SCT), can automatically convert much of the source database schema to match the target database during migration. This reduces the need for manual schema conversion, saving time and effort.

How Does AWS Database Migration Service Work?

Pre-migration steps

Take these steps before migrating the database: basic planning, structuring, understanding the requirements, and finalizing the move.

Migration Steps

These are the steps taken while carrying out the database migration. Assign clear accountability for each step, and pay close attention to data governance roles and migration-related risks.
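During the migration itself, a DMS replication task is told which tables to move through a table-mapping document. The sketch below builds a small one with selection rules; the schema and table names and the helper `selection_rule` are invented for illustration, and the rule layout follows the DMS selection-rule JSON format.

```python
import json


def selection_rule(rule_id: int, schema: str, table: str = "%", action: str = "include") -> dict:
    """Build one DMS selection rule; '%' acts as a wildcard in object names."""
    if action not in ("include", "exclude"):
        raise ValueError("action must be 'include' or 'exclude'")
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": f"{action}-{schema}-{rule_id}",
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": action,
    }


table_mappings = {
    "rules": [
        selection_rule(1, "sales"),                         # migrate every table in 'sales'
        selection_rule(2, "sales", "audit_%", "exclude"),   # except the audit tables
    ]
}
print(json.dumps(table_mappings, indent=2))
# The JSON string would be passed as the TableMappings argument when
# creating a replication task, for example through boto3's DMS client.
```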

Post-migration steps

Once the database migration is complete, some issues may have gone unnoticed during the process. These steps ensure that the migration finishes without errors.
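A common post-migration check is to compare row counts between source and target. The sketch below does this with two in-memory SQLite databases standing in for the real systems; the table names are invented, and a production check would use the actual database drivers and likely compare checksums as well. AWS DMS also has its own data validation feature, which this sketch does not use.

```python
import sqlite3


def row_counts(conn, tables):
    """Return {table: row_count} for the given tables."""
    return {t: conn.execute(f'SELECT COUNT(*) FROM "{t}"').fetchone()[0] for t in tables}


def compare_counts(source, target, tables):
    """Return {table: (source_count, target_count)} for tables whose counts differ."""
    src, tgt = row_counts(source, tables), row_counts(target, tables)
    return {t: (src[t], tgt[t]) for t in tables if src[t] != tgt[t]}


# Demo: the target is missing one row from 'orders'.
source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn, n_orders in ((source, 3), (target, 2)):
    conn.execute("CREATE TABLE customers (id INTEGER)")
    conn.execute("CREATE TABLE orders (id INTEGER)")
    conn.executemany("INSERT INTO customers VALUES (?)", [(1,), (2,)])
    conn.executemany("INSERT INTO orders VALUES (?)", [(i,) for i in range(n_orders)])

print(compare_counts(source, target, ["customers", "orders"]))
```

Matching counts do not prove the data is identical, so this works best as a quick first check before deeper validation.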

Now let’s move forward to use cases of DMS!

You can check more info about: aws database migration service.


Text

Accelerating Innovation with Data Engineering on AWS and Aretove’s Expertise as a Leading Data Engineering Company

In today’s digital economy, the ability to process and act on data in real-time is a significant competitive advantage. This is where Data Engineering on AWS and the support of a dedicated Data Engineering Company like Aretove come into play. These solutions form the backbone of modern analytics architectures, powering everything from real-time dashboards to machine learning pipelines.

What is Data Engineering and Why is AWS the Platform of Choice?

Data engineering is the practice of designing and building systems for collecting, storing, and analyzing data. As businesses scale, traditional infrastructures struggle to handle the volume, velocity, and variety of data. This is where Amazon Web Services (AWS) shines.

AWS offers a robust, flexible, and scalable environment ideal for modern data workloads. Aretove leverages a variety of AWS tools—like Amazon Redshift, AWS Glue, and Amazon S3—to build data pipelines that are secure, efficient, and cost-effective.

Core Benefits of AWS for Data Engineering

Scalability: AWS services automatically scale to handle growing data needs.

Flexibility: Supports both batch and real-time data processing.

Security: Industry-leading compliance and encryption capabilities.

Integration: Seamlessly works with machine learning tools and third-party apps.

At Aretove, we customize your AWS architecture to match business goals, ensuring performance without unnecessary costs.

Aretove: A Trusted Data Engineering Company

As a premier data engineering company on AWS, Aretove specializes in end-to-end solutions that unlock the full potential of your data. Whether you're migrating to the cloud, building a data lake, or setting up real-time analytics, our team of experts ensures a seamless implementation.

Our services include:

Data Pipeline Development: Build robust ETL/ELT pipelines using AWS Glue and Lambda.

Data Warehousing: Design scalable warehouses with Amazon Redshift for fast querying and analytics.

Real-time Streaming: Implement streaming data workflows with Amazon Kinesis and Apache Kafka.

Data Governance and Quality: Ensure your data is accurate, consistent, and secure.

Case Study: Real-Time Analytics for E-Commerce

An e-commerce client approached Aretove to improve its customer insights using real-time analytics. We built a cloud-native architecture on AWS using Kinesis for stream ingestion and Redshift for warehousing. This allowed the client to analyze customer behavior instantly and personalize recommendations, leading to a 30% boost in conversion rates.

Why Aretove Stands Out

What makes Aretove different is our ability to bridge business strategy with technical execution. We don’t just build pipelines—we build solutions that drive revenue, enhance user experiences, and scale with your growth.

With a client-centric approach and deep technical know-how, Aretove empowers businesses across industries to harness the power of their data.

Looking Ahead

As data continues to fuel innovation, companies that invest in modern data engineering practices will be the ones to lead. AWS provides the tools, and Aretove brings the expertise. Together, we can transform your data into a strategic asset.

Whether you’re starting your cloud journey or optimizing an existing environment, Aretove is your go-to partner for scalable, intelligent, and secure data engineering solutions.


Text

Exploring the Role of Azure Data Factory in Hybrid Cloud Data Integration

Introduction

In today’s digital landscape, organizations increasingly rely on hybrid cloud environments to manage their data. A hybrid cloud setup combines on-premises data sources, private clouds, and public cloud platforms like Azure, AWS, or Google Cloud. Managing and integrating data across these diverse environments can be complex.

This is where Azure Data Factory (ADF) plays a crucial role. ADF is a cloud-based data integration service that enables seamless movement, transformation, and orchestration of data across hybrid cloud environments.

In this blog, we’ll explore how Azure Data Factory simplifies hybrid cloud data integration, key use cases, and best practices for implementation.

1. What is Hybrid Cloud Data Integration?

Hybrid cloud data integration is the process of connecting, transforming, and synchronizing data between:

✅ On-premises data sources (e.g., SQL Server, Oracle, SAP)

✅ Cloud storage (e.g., Azure Blob Storage, Amazon S3)

✅ Databases and data warehouses (e.g., Azure SQL Database, Snowflake, BigQuery)

✅ Software-as-a-Service (SaaS) applications (e.g., Salesforce, Dynamics 365)

The goal is to create a unified data pipeline that enables real-time analytics, reporting, and AI-driven insights while ensuring data security and compliance.

2. Why Use Azure Data Factory for Hybrid Cloud Integration?

Azure Data Factory (ADF) provides a scalable, serverless solution for integrating data across hybrid environments. Some key benefits include:

✅ 1. Seamless Hybrid Connectivity

ADF supports more than 90 data connectors, including on-prem, cloud, and SaaS sources.

It enables secure data movement using Self-Hosted Integration Runtime to access on-premises data sources.

✅ 2. ETL & ELT Capabilities

ADF allows you to design Extract, Transform, and Load (ETL) or Extract, Load, and Transform (ELT) pipelines.

Supports Azure Data Lake, Synapse Analytics, and Power BI for analytics.

✅ 3. Scalability & Performance

Being serverless, ADF automatically scales resources based on data workload.

It supports parallel data processing for better performance.

✅ 4. Low-Code & Code-Based Options

ADF provides a visual pipeline designer for easy drag-and-drop development.

It also supports custom transformations using Azure Functions, Databricks, and SQL scripts.

✅ 5. Security & Compliance

Uses Azure Key Vault for secure credential management.

Supports private endpoints, network security, and role-based access control (RBAC).

Complies with GDPR, HIPAA, and ISO security standards.

3. Key Components of Azure Data Factory for Hybrid Cloud Integration

1️⃣ Linked Services

Acts as a connection between ADF and data sources (e.g., SQL Server, Blob Storage, SFTP).

2️⃣ Integration Runtimes (IR)

Azure IR: Azure-hosted runtime for cloud data movement.

Self-Hosted IR: For on-premises to cloud integration.

Azure-SSIS IR: To run SQL Server Integration Services (SSIS) packages in ADF.

3️⃣ Data Flows

Mapping Data Flow: No-code transformation engine.

Wrangling Data Flow: Excel-like Power Query transformation.

4️⃣ Pipelines

Orchestrate complex workflows using different activities like copy, transformation, and execution.

5️⃣ Triggers

Automate pipeline execution using schedule-based, event-based, or tumbling window triggers.
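Tumbling windows are fixed-size, contiguous, non-overlapping time intervals, with one pipeline run per interval. The sketch below illustrates the idea in plain Python; it models the concept only and is not ADF's trigger definition, and the function name and dates are chosen for the example.

```python
from datetime import datetime, timedelta


def tumbling_windows(start: datetime, end: datetime, size: timedelta):
    """Split [start, end) into back-to-back windows of equal size.

    A trailing partial window is left out, since it has not finished yet.
    """
    if size <= timedelta(0):
        raise ValueError("size must be positive")
    windows = []
    window_start = start
    while window_start + size <= end:
        windows.append((window_start, window_start + size))
        window_start += size
    return windows


for w_start, w_end in tumbling_windows(
    datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 3, 30), timedelta(hours=1)
):
    # Each window would drive one parameterized pipeline run over its own slice.
    print(w_start.isoformat(), "->", w_end.isoformat())
```

Because every window covers a distinct slice of time, each run can process exactly its own data and be retried without overlapping its neighbors.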

4. Common Use Cases of Azure Data Factory in Hybrid Cloud

🔹 1. Migrating On-Premises Data to Azure

Extracts data from SQL Server, Oracle, and SAP, and moves it to Azure SQL or Synapse Analytics.

🔹 2. Real-Time Data Synchronization

Syncs on-prem ERP, CRM, or legacy databases with cloud applications.

🔹 3. ETL for Cloud Data Warehousing

Moves structured and unstructured data to Azure Synapse or Snowflake for analytics.

🔹 4. IoT and Big Data Integration

Collects IoT sensor data, processes it in Azure Data Lake, and visualizes it in Power BI.

🔹 5. Multi-Cloud Data Movement

Moves data across Amazon S3, Google BigQuery, and Azure Blob Storage.

5. Best Practices for Hybrid Cloud Integration Using ADF

✅ Use Self-Hosted IR for Secure On-Premises Data Access

✅ Optimize Pipeline Performance using partitioning and parallel execution

✅ Monitor Pipelines using Azure Monitor and Log Analytics

✅ Secure Data Transfers with Private Endpoints & Key Vault

✅ Automate Data Workflows with Triggers & Parameterized Pipelines

6. Conclusion

Azure Data Factory plays a critical role in hybrid cloud data integration by providing secure, scalable, and automated data pipelines. Whether you are migrating on-premises data, synchronizing real-time data, or integrating multi-cloud environments, ADF simplifies complex ETL processes with low-code and serverless capabilities.

By leveraging ADF’s integration runtimes, automation, and security features, organizations can build a resilient, high-performance hybrid cloud data ecosystem.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


Text

#IfiTechsolutions#DataWarehouseMigration#AzureSQL#CloudMigration#MicrosoftPartner#AzureData#HealthcareAI#RedshiftToAzure#CloudComputing#DataAnalytics#DigitalTransformation


Text

Kadel Labs: Leading the Way as Databricks Consulting Partners

Introduction

In today’s data-driven world, businesses are constantly seeking efficient ways to harness the power of big data. As organizations generate vast amounts of structured and unstructured data, they need advanced tools and expert guidance to extract meaningful insights. This is where Kadel Labs, a leading technology solutions provider, steps in. As Databricks Consulting Partners, Kadel Labs specializes in helping businesses leverage the Databricks Lakehouse platform to unlock the full potential of their data.

Understanding Databricks and the Lakehouse Architecture

Before diving into how Kadel Labs can help businesses maximize their data potential, it’s crucial to understand Databricks and its revolutionary Lakehouse architecture.

Databricks is an open, unified platform designed for data engineering, machine learning, and analytics. It combines the best of data warehouses and data lakes, allowing businesses to store, process, and analyze massive datasets with ease. The Databricks Lakehouse model integrates the reliability of a data warehouse with the scalability of a data lake, enabling businesses to maintain structured and unstructured data efficiently.

Key Features of Databricks Lakehouse

Unified Data Management – Combines structured and unstructured data storage.

Scalability and Flexibility – Handles large-scale datasets with optimized performance.

Cost Efficiency – Reduces data redundancy and lowers storage costs.

Advanced Security – Ensures governance and compliance for sensitive data.

Machine Learning Capabilities – Supports AI and ML workflows seamlessly.

Why Businesses Need Databricks Consulting Partners

While Databricks offers powerful tools, implementing and managing its solutions requires deep expertise. Many organizations struggle with:

Migrating data from legacy systems to Databricks Lakehouse.

Optimizing data pipelines for real-time analytics.

Ensuring security, compliance, and governance.

Leveraging machine learning and AI for business growth.

This is where Kadel Labs, as an experienced Databricks Consulting Partner, helps businesses seamlessly adopt and optimize Databricks solutions.

Kadel Labs: Your Trusted Databricks Consulting Partner

Expertise in Databricks Implementation

Kadel Labs specializes in helping businesses integrate the Databricks Lakehouse platform into their existing data infrastructure. With a team of highly skilled engineers and data scientists, Kadel Labs provides end-to-end consulting services, including:

Databricks Implementation & Setup – Deploying Databricks on AWS, Azure, or Google Cloud.

Data Pipeline Development – Automating data ingestion, transformation, and analysis.

Machine Learning Model Deployment – Utilizing Databricks MLflow for AI-driven decision-making.

Data Governance & Compliance – Implementing best practices for security and regulatory compliance.

Custom Solutions for Every Business

Kadel Labs understands that every business has unique data needs. Whether a company is in finance, healthcare, retail, or manufacturing, Kadel Labs designs tailor-made solutions to address specific challenges.

Use Case 1: Finance & Banking

A leading financial institution faced challenges with real-time fraud detection. By implementing Databricks Lakehouse, Kadel Labs helped the company process vast amounts of transaction data, enabling real-time anomaly detection and fraud prevention.

Use Case 2: Healthcare & Life Sciences

A healthcare provider needed to consolidate patient data from multiple sources. Kadel Labs implemented Databricks Lakehouse, enabling seamless integration of electronic health records (EHRs), genomic data, and medical imaging, improving patient care and operational efficiency.

Use Case 3: Retail & E-commerce

A retail giant wanted to personalize customer experiences using AI. By leveraging Databricks Consulting Services, Kadel Labs built a recommendation engine that analyzed customer behavior, leading to a 25% increase in sales.

Migration to Databricks Lakehouse

Many organizations still rely on traditional data warehouses and Hadoop-based ecosystems. Kadel Labs assists businesses in migrating from legacy systems to Databricks Lakehouse, ensuring minimal downtime and optimal performance.

Migration Services Include:

Assessing current data architecture and identifying challenges.

Planning a phased migration strategy.

Executing a seamless transition with data integrity checks.

Training teams to effectively utilize Databricks.

Enhancing Business Intelligence with Kadel Labs

By combining the power of Databricks Lakehouse with BI tools like Power BI, Tableau, and Looker, Kadel Labs enables businesses to gain deep insights from their data.

Key Benefits:

Real-time data visualization for faster decision-making.

Predictive analytics for future trend forecasting.

Seamless data integration with cloud and on-premise solutions.

Future-Proofing Businesses with Kadel Labs

As data landscapes evolve, Kadel Labs continuously innovates to stay ahead of industry trends. Some emerging areas where Kadel Labs is making an impact include:

Edge AI & IoT Data Processing – Utilizing Databricks for real-time IoT data analytics.

Blockchain & Secure Data Sharing – Enhancing data security in financial and healthcare industries.

AI-Powered Automation – Implementing AI-driven automation for operational efficiency.

Conclusion

For businesses looking to harness the power of data, Kadel Labs stands out as a leading Databricks Consulting Partner. By offering comprehensive Databricks Lakehouse solutions, Kadel Labs empowers organizations to transform their data strategies, enhance analytics capabilities, and drive business growth.

If your company is ready to take the next step in data innovation, Kadel Labs is here to help. Reach out today to explore custom Databricks solutions tailored to your business needs.


Text

The demand for SAP FICO vs. SAP HANA in India depends on industry trends, company requirements, and evolving SAP technologies. Here’s a breakdown:

1. SAP FICO Demand in India

SAP FICO (Finance & Controlling) has been a core SAP module for years, used in almost every company that runs SAP ERP. It includes:

Financial Accounting (FI) – General Ledger, Accounts Payable, Accounts Receivable, Asset Accounting, etc.

Controlling (CO) – Cost Center Accounting, Internal Orders, Profitability Analysis, etc.

Why is SAP FICO in demand?

✅ Essential for businesses – Every company needs finance & accounting.

✅ High job availability – Many Indian companies still run SAP ECC, where FICO is critical.

✅ Migration to S/4HANA – Companies moving from SAP ECC to SAP S/4HANA still require finance professionals.

✅ Stable career growth – Finance roles are evergreen.

Challenges:

As companies move to S/4HANA, traditional FICO skills alone are not enough.

Need to upskill in SAP S/4HANA Finance (Simple Finance) and integration with SAP HANA.

2. SAP HANA Demand in India

SAP HANA is an in-memory database and computing platform that powers SAP S/4HANA. Key areas include:

SAP HANA Database (DBA roles)

SAP HANA Modeling (for reporting & analytics)

SAP S/4HANA Functional & Technical roles

SAP BW/4HANA (Business Warehouse on HANA)

Why is SAP HANA in demand?

✅ Future of SAP – SAP S/4HANA is replacing SAP ECC, and all new implementations are on HANA.

✅ High-paying jobs – Technical consultants with SAP HANA expertise earn more.

✅ Cloud adoption – Companies prefer SAP on AWS, Azure, and GCP, requiring HANA skills.

✅ Data & Analytics – Business intelligence and real-time analytics run on HANA.

Challenges:

More technical compared to SAP FICO.

Requires skills in SQL, HANA Modeling, CDS Views, and ABAP on HANA.

Companies still transitioning from ECC, meaning FICO is not obsolete yet.


Website: Anubhav Online Trainings | UI5, Fiori, S/4HANA Trainings


Text

Best Informatica Cloud Training in India | Informatica IICS

Cloud Data Integration (CDI) in Informatica IICS

Introduction

Cloud Data Integration (CDI) in Informatica Intelligent Cloud Services (IICS) is a powerful solution that helps organizations efficiently manage, process, and transform data across hybrid and multi-cloud environments. CDI plays a crucial role in modern ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) operations, enabling businesses to achieve high-performance data processing with minimal complexity. In today’s data-driven world, businesses need seamless integration between various data sources, applications, and cloud platforms.

What is Cloud Data Integration (CDI)?

Cloud Data Integration (CDI) is a Software-as-a-Service (SaaS) solution within Informatica IICS that allows users to integrate, transform, and move data across cloud and on-premises systems. CDI provides a low-code/no-code interface, making it accessible for both technical and non-technical users to build complex data pipelines without extensive programming knowledge.

Key Features of CDI in Informatica IICS

Cloud-Native Architecture

CDI is designed to run natively on the cloud, offering scalability, flexibility, and reliability across various cloud platforms like AWS, Azure, and Google Cloud.

Prebuilt Connectors

It provides out-of-the-box connectors for SaaS applications, databases, data warehouses, and enterprise applications such as Salesforce, SAP, Snowflake, and Microsoft Azure.

ETL and ELT Capabilities

Supports ETL for structured data transformation before loading and ELT for transforming data after loading into cloud storage or data warehouses.

Data Quality and Governance

Ensures high data accuracy and compliance with built-in data cleansing, validation, and profiling features.

High Performance and Scalability

CDI optimizes data processing with parallel execution, pushdown optimization, and serverless computing to enhance performance.

AI-Powered Automation

Informatica CLAIRE, an integrated AI-driven metadata intelligence engine, automates data mapping, lineage tracking, and error detection.
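The difference between the ETL and ELT capabilities listed above can be sketched with a small example. Here an in-memory SQLite database stands in for the cloud data warehouse, and the sample rows and table names are invented; this shows the general pattern only, not how Informatica CDI implements it.

```python
import sqlite3

raw_rows = [("  Alice ", "120.50"), ("BOB", "80"), ("carol", "15.25")]


def etl(conn):
    """ETL: transform in the integration layer, then load the finished rows."""
    transformed = [(name.strip().lower(), float(amount)) for name, amount in raw_rows]
    conn.execute("CREATE TABLE sales_etl (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", transformed)


def elt(conn):
    """ELT: load raw rows first, then let the warehouse engine transform them."""
    conn.execute("CREATE TABLE sales_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", raw_rows)
    # The transformation runs as SQL inside the target database.
    conn.execute(
        "CREATE TABLE sales_elt AS "
        "SELECT LOWER(TRIM(customer)) AS customer, CAST(amount AS REAL) AS amount "
        "FROM sales_raw"
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
etl_rows = conn.execute("SELECT * FROM sales_etl ORDER BY customer").fetchall()
elt_rows = conn.execute("SELECT * FROM sales_elt ORDER BY customer").fetchall()
print(etl_rows == elt_rows)
```

Both routes end with the same cleaned table; what changes is where the work runs. Pushing the transformation into the target engine, as the ELT path does, is the general idea behind pushdown optimization.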

Benefits of Using CDI in Informatica IICS

1. Faster Time to Insights

CDI enables businesses to integrate and analyze data quickly, helping data analysts and business teams make informed decisions in real-time.

2. Cost-Effective Data Integration

With its serverless architecture, businesses can eliminate on-premise infrastructure costs, reducing Total Cost of Ownership (TCO) while ensuring high availability and security.

3. Seamless Hybrid and Multi-Cloud Integration

CDI supports hybrid and multi-cloud environments, ensuring smooth data flow between on-premises systems and various cloud providers without performance issues.

4. No-Code/Low-Code Development

Organizations can build and deploy data pipelines using a drag-and-drop interface, reducing dependency on specialized developers and improving productivity.

5. Enhanced Security and Compliance

Informatica provides data encryption, role-based access control (RBAC), and support for GDPR, CCPA, and HIPAA compliance, protecting data integrity and security.

Use Cases of CDI in Informatica IICS

1. Cloud Data Warehousing

Companies migrating to cloud-based data warehouses like Snowflake, Amazon Redshift, or Google BigQuery can use CDI for seamless data movement and transformation.

2. Real-Time Data Integration

CDI supports real-time data streaming, enabling enterprises to process data from IoT devices, social media, and APIs in real-time.

3. SaaS Application Integration

Businesses using applications like Salesforce, Workday, and SAP can integrate and synchronize data across platforms to maintain data consistency.

4. Big Data and AI/ML Workloads

CDI helps enterprises prepare clean and structured datasets for AI/ML model training by automating data ingestion and transformation.

Conclusion

Cloud Data Integration (CDI) in Informatica IICS is a game-changer for enterprises looking to modernize their data integration strategies. CDI empowers businesses to achieve seamless data connectivity across multiple platforms with its cloud-native architecture, advanced automation, AI-powered data transformation, and high scalability. Whether you’re migrating data to the cloud, integrating SaaS applications, or building real-time analytics pipelines, Informatica CDI offers a robust and efficient solution to streamline your data workflows.

For organizations seeking to accelerate digital transformation, adopting Informatica’s Cloud Data Integration (CDI) solution is a strategic step toward achieving agility, cost efficiency, and data-driven innovation.

For More Information about Informatica Cloud Online Training

Contact Call/WhatsApp: +91 7032290546

Visit: https://www.visualpath.in/informatica-cloud-training-in-hyderabad.html

#Informatica Training in Hyderabad#IICS Training in Hyderabad#IICS Online Training#Informatica Cloud Training#Informatica Cloud Online Training#Informatica IICS Training#Informatica Training Online#Informatica Cloud Training in Chennai#Informatica Cloud Training In Bangalore#Best Informatica Cloud Training in India#Informatica Cloud Training Institute#Informatica Cloud Training in Ameerpet
