#inertial sensor

TDK launches high-temp 6-axis automotive IMU for cabin applications

July 1, 2025 /SemiMedia/ — TDK Corporation has announced global availability of the InvenSense SmartAutomotive™ IAM-20680HV, a compact 6-axis inertial measurement unit (IMU) designed for in-cabin automotive applications operating in extreme conditions up to +125 °C, with guaranteed performance up to +105 °C. The IAM-20680HV combines a 3-axis gyroscope and a 3-axis accelerometer in a space-saving…
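A 6-axis IMU pairs a gyroscope (responsive, but it drifts when its rate is integrated) with an accelerometer (a drift-free tilt reference from gravity, but noisy). One common way to combine the two for tilt estimation is a complementary filter. The Python sketch that follows is a generic illustration, not TDK's firmware or driver API; the axis convention, the 0.98 weight, and the 100 Hz sample rate are assumptions.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by gravity alone; only valid when not accelerating.

    Assumed axis convention: x forward, z up when the board is level.
    Inputs can be in g or m/s^2, since only their ratio matters.
    """
    return math.atan2(-ax, math.hypot(ay, az))


def complementary_step(angle: float, gyro_rate: float, accel_angle: float,
                       dt: float, alpha: float = 0.98) -> float:
    """One complementary-filter update for a single tilt angle (radians).

    angle + gyro_rate * dt integrates the gyro (responsive, but drifts);
    accel_angle is the gravity-based estimate (noisy, but drift-free).
    alpha sets how much weight the integrated gyro term gets each step.
    """
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


if __name__ == "__main__":
    # Hypothetical static reading: board pitched so that ax = -0.5 g.
    accel_angle = accel_pitch(-0.5, 0.0, math.sqrt(0.75))
    angle = 0.0
    for _ in range(500):  # 5 s of samples at an assumed 100 Hz
        angle = complementary_step(angle, gyro_rate=0.0,
                                   accel_angle=accel_angle, dt=0.01)
    print(f"estimated pitch: {math.degrees(angle):.2f} deg")
```

With the gyro reading zero, the estimate converges toward the accelerometer's 30° tilt; a larger `alpha` trusts the gyro more and converges more slowly.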

#6-axis sensor#automotive IMU#automotive-grade IMU#cabin electronics#electronic components news#Electronic components supplier#Electronic parts supplier#high temperature MEMS#inertial sensor#TDK InvenSense

Panasonic's 6-in-1 Inertial Sensor

https://www.futureelectronics.com/m/panasonic. Panasonic introduces the new 6-in-1 Inertial Sensor, offering 6DoF (six degrees of freedom), high sensing accuracy, and much greater system design flexibility. This new specialty sensor meets the ISO 26262 functional safety standard for automotive applications and features a unique sensing element. https://youtu.be/OY9m9I0NxPI

#Panasonic 6-in-1 Inertial Sensor#6-in-1 Inertial Sensor#Panasonic#Panasonic Sensor#Panasonic 6in1 Inertial Sensor#Sensor#6in1 Inertial Sensor#6DoF#6 Degrees of Freedom#Panasonic EWTS5GND21#EWTS5GND21#ISO26262#Youtube

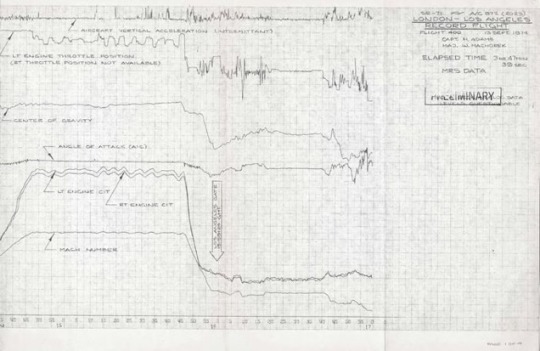

What did the MRS say?

I know that most men, like my father who flew the SR-71, relied on their wives to fill in the blanks of the questionable items in their lives.

What I’m talking about is a little different. The MRS is the Mission Recording System. If it felt like an unstart, let’s check the data. The MRS was a system in the SR-71 that recorded specific flight and sensor activity data. After a flight in the SR-71, the pilot and the navigator (RSO) did not just go home; there was a debriefing.

After they were relieved of their pressure suits and had showered, they would sit down at a table and discuss the flight. David Peters, SR-71 pilot, commented: “The crew would look over the MRS only if necessary. It usually wasn’t necessary, as there were trained people who would review it.” Crew Chief Chris Bennett: “Agree with Dave... did not review the DMRS data at the debrief. Usually heard the details a few hours later. The jet might have landed code 1 (no issues), but the DMRS data might indicate a major component change needed, even an engine change.”

The mission recording system was a vital element of the Blackbirds. This system collected and recorded specific internal aircraft flight and sensor activity data on each mission, together with the functioning of airplane and mission systems. The system monitored 650 parameters, including engine, electrical, hydraulic, Digital Automatic Flight and Inlet Control System (DAFICS), Astro-Inertial Navigation System (ANS), ECS, MRS, and sensor data. The data was used to improve components, to prevent in-flight unit failures (for example, of the constant speed drives), and to monitor engine performance.

I found out more about the MRS.

It looks like Alan Johnson, whom everyone should know as a very dedicated fan of the SR-71, did a lot of work on this endeavor.

The following images were secured by Alan Johnson from the files located at Wright-Patterson AFB in Dayton, Ohio. The images are very large but are necessary to show the data from the historic speed run conducted on September 13, 1974. Capt. Harold B. Adams, 31 (pilot), and Major William Machorek, 32 (Reconnaissance Systems Officer), set a speed record from London to Los Angeles. On that return flight they covered 5,447 statute miles in 3 hours, 47 minutes, and 39 seconds, for an average speed of 1,435 miles per hour. These documents show the entire flight of Aircraft #972, including the drop to lower altitude for refueling. We are indebted to Alan for his tenacity and perseverance in securing these historic documents. You can visit his United Kingdom web page at u2sr71patches.co.uk. Credit is also given to the National Air and Space Museum for releasing these MRS tapes to the public.
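As a quick sanity check on the record figures, average speed is simply distance divided by elapsed time. A minimal Python sketch (the helper name `average_speed_mph` is ours, for illustration only):

```python
def average_speed_mph(miles: float, hours: int, minutes: int, seconds: int) -> float:
    """Average ground speed from a distance and an h:m:s elapsed time."""
    elapsed_hours = hours + minutes / 60 + seconds / 3600
    return miles / elapsed_hours


if __name__ == "__main__":
    # 5,447 statute miles in 3 h 47 min 39 s
    speed = average_speed_mph(5447, 3, 47, 39)
    print(f"{speed:.1f} mph")  # prints 1435.6 mph
```

The result, about 1,435.6 mph, matches the quoted 1,435 miles per hour.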

Linda Sheffield

@Habubrats71 via X

#sr 71#sr71#sr 71 blackbird#blackbird#aircraft#usaf#lockheed aviation#skunkworks#aviation#mach3+#habu#reconnaissance#cold war aircraft

Drone Boot Sequence

PDU-069 - Boot Sequence (Post Recharge Cycle)

Phase 1: Initial Power & Diagnostics

[00:00:01] POWER_RELAY_CONNECT: Main power bus energized. Energy cells online. Distribution network active.

[00:00:02] BATTERY_STAT: Energy cell charge: 99.9%. Cell health: Optimal. Discharge rate within parameters.

[00:00:03] ONBOARD_DIAG_INIT: Onboard diagnostics initiated.

[00:00:05] CPU_ONLINE: Primary processor online. Clock speed nominal.

[00:00:06] MEM_CHECK:

RAM: Integrity verified. Access speed nominal.

FLASH: Data integrity confirmed. Boot sector located.

[00:00:08] OS_LOAD: Loading operating system kernel...

[00:00:15] OS_INIT: Kernel initialized. Device drivers loading...

[00:00:20] SENSOR_ARRAY_TEST:

VISUAL: Camera modules online. Image resolution nominal.

LIDAR: Emitter/receiver functional. Point cloud generation nominal.

AUDIO: Microphones active. Ambient noise levels within parameters.

ATMOS: Temperature, pressure, humidity sensors online. Readings within expected range.

RADIATION: Gamma ray detector active. Background radiation levels normal.

[00:00:28] DIAGNOSTICS_REPORT: Preliminary system check complete. No critical errors detected.

Phase 2: Propulsion & Navigation

[00:00:30] PROPULSION_INIT: Activating propulsion system...

[00:00:32] MOTOR_TEST:

MOTOR_1: RPM within parameters. Response time nominal.

MOTOR_2: RPM within parameters. Response time nominal.

MOTOR_3: RPM within parameters. Response time nominal.

MOTOR_4: RPM within parameters. Response time nominal.

[00:00:38] FLIGHT_CTRL_ONLINE: Flight control system active. Stability algorithms engaged.

[00:00:40] GPS_INIT: Acquiring GPS signal...

[00:00:45] GPS_LOCK: GPS signal acquired. Positional accuracy: +/- 1 meter.

[00:00:47] IMU_CALIBRATION: Inertial Measurement Unit calibration complete. Orientation and acceleration data nominal.

Phase 3: Communication & Mission Parameters

[00:00:50] COMM_SYS_ONLINE: Communication systems activated.

[00:00:52] ANTENNA_DEPLOY: Deploying primary communication antenna... Deployment successful.

[00:00:54] SIGNAL_SCAN: Scanning for available networks...

[00:00:57] NETWORK_CONNECT: Connection established with [e.g., "Command Uplink" or "Local Mesh Network"]. Signal strength: Excellent.

[00:01:00] MISSION_DATA_SYNC: Synchronizing with mission database...

[00:01:05] PARAMETERS_LOAD: Latest mission parameters loaded and verified.

[00:01:08] SYSTEM_READY: All systems nominal.

Phase 4: Final Status & Awaiting Command

[00:01:10] PDU_069_STATUS: Fully operational. Awaiting command from Drone Controller @polo-drone-001. Are you ready to join us? Contact @brodygold, @goldenherc9, @polo-drone-001

Two for the Martyr

High Velocity’s delicate flesh was pressed into its inertial cradle as it skated over the damp floor of the sparse forest. Its thrusters fired with the scream of a strained jet and it threw itself to the side, barely skirting around a tree. The turbine intakes in its chest sucked air in as a beached fish would gasp for water.

The enemy was not far behind. They had eliminated other members of its squad, leaving them as smoking wrecks punched full of holes. One of the wrecks would even be potentially salvageable, as the only thing that was punched out from it was the meat. The meat was replaceable. The metal was forever.

Red abruptly lit up High Velocity’s reactor scanner. It braced its twin autocannons and changed its trajectory, retrothrusters roaring to life to reduce forward velocity and maneuvering jets sending the machine hurtling to its left at a breakneck pace. Its internal inertial cradle could barely compensate for the heavy vector change and it felt its control grow weak for a few seconds.

It would need to escape as soon as possible. Propellant was beginning to run low and its autocannons were nearly empty. If it got lucky, it could take out another enemy before needing to resort to its retractable blades. Even that wouldn’t be a surefire solution to dealing with the enemy. The unit hunting it was a mixed unit. It had both fast movers and heavier assault units. A hammer and an anvil. And High Velocity and its unit had played right into those hands.

The secondary visual sensors on High Velocity detected a repeated burst of light from the distance in the forest. Moments later, autocannon shells slammed into the dirt and trees around it. Lucky hits blew away pieces of its armor, a burning sensation filling High Velocity’s mind. A burst of binary code that other interface units would recognize as a scream ripped forth from it, filling the air with an electrical shrieking.

Time slowed for High Velocity. A cocktail of combat boosters and utilitarian chemical supplements was supplied to its delicate flesh. It writhed, the speed at which it processed its current situation speeding up. The burning sensation numbed to an irritating itch that pressed on its mind, urging it forwards.

This could not go on, it decided. Dumping fuel into its thrusters to force them into afterburn, the interface unit turned hard and charged the enemy. Its own autocannons roared to life as it battered the armor of a heavy mech with gunfire, delighting as shards of ceramic plating flew away in high fidelity slow motion. The burst of fire cost hundreds of shells.

Munitions expended.

The autocannons jettisoned from the arms of High Velocity as its jets burned on, on a collision course with the heavy mech. It shook its arms out, revelled in the feeling of the freed actuators and myomer, retractable blades being loosed in the promise of a bacchanal of violence.

As it screamed across the gap, it threw itself into the air. Maneuvering jets course corrected and it led with its retractable blades, putting all forty tons of its weight behind a strike that sank deep into the heavy mech’s torso and cockpit. The mech toppled backwards, High Velocity riding the limp shell of the machine to the ground.

It ripped its blades free, bounding in towards the next closest mech. It ducked low, tossing itself nearly onto its back. The pounding shriek of thrusters filled the air as it brought itself up from a bare miss with the dirt, arm swinging upwards. Blade met armor and carved a deep furrow through the medium mech’s side armor, catching the chin of the mech’s head and tearing it clean off with a shower of sparks and shrapnel.

It was here that High Velocity found itself staring down another medium mech. This one carried two shoulder mounted missile racks, the racks adjusting and arming before letting loose.

High explosive missiles cratered the armor of the fast attack unit, blowing away its streamline shell and reaching deep into its thicker primary plating. Its nerves went alight, searing its mind, forcing it to rock back on its heels and stagger.

Smoke filled the air and rippled up from its body. Through the thin haze of the battlefield, High Velocity was able to spot the autoloader system replenishing the missile racks. It had to move… it had to move!

It threw itself to the side at the same moment a volley was loosed. Several missiles clipped its limbs, blowing away the few remainders of the thicker armor plating there. Pushing through the pain, it lunged in close, flashing out with a chipped blade towards the mech’s cockpit.

The mech swayed back, bringing one arm up to deflect the blade away. The other arm dropped fluidly and then locked perpendicular to its hip, brandishing the integrated heavy laser as it surged forwards once more, crashing the thick ridged cage armor around its cockpit against the head of the fast attacker.

A blinding pulse from the mech’s laser struck High Velocity in the leg. Stunned by the pain, it began to topple and the mech took advantage, striking out with a leg and knocking the lighter fast mover to the ground.

In the slowly developing combat mire of the forest floor, High Velocity thrashed, limbs knocking into the ground and trees around it. Mud contaminated once-clean motor sections and swamped its thrusters before it managed to get enough limbs underneath its damaged frame to right itself, pushing up and scrabbling to an unsteady posture.

There was no time to react as its hunter finally caught up with it. Another fast attack interface unit, this one with the name Other Side of the Sunset painted in delicate yellow stenciling across its rounded purple streamline shell. The fast attacker lashed out with taloned hands, tearing a furrow across the torso plating of High Velocity.

The follow-up assault began to dismantle the injured fast attacker. Talon strikes to its torso and abdominal plating rapidly eroded away at its structural integrity before blows were being taken to internal systems.

Other Side of the Sunset whipped one taloned hand out, digging deep into the battered unit’s arm. Its leg snapped out in a kick to High Velocity’s side and its arm wrenched free with a horrific twisting of metal, ceramic, and myomer. Coolant and propellant poured from the open rent as it tried to reposition itself in the mud -

- Julia struggling against the restraints of the inertial cradle, gasping as she felt every moment of the mech being taken apart around her, nerves on fire, reaching her hand out to -

- scrape malfunctioning and awkward manipulators over the exposed root structure of a tree as flammable propellant refluxed from pumps trying to force thrusters that weren’t there to restart -

rumbling

- blood pooling under the sealed layers of her piloting suit -

roaring

- coolant gumming up microactuators in subsystems -

screaming

- as she watched helplessly as Other Side of the Sunset lowered a shoulder-mounted laser towards her inertial cradle, its allies rallying behind it to cheer for her death -

- and none were prepared for the meteoric impact as a true assault mech descended on a column of exhausted balefire, its spiked hooves knocking a medium mech to the ground and crushing its gyro underfoot.

The mech immediately lurched into action, pivoting into an anchor turn. Its right arm dropped low and leveled with the mech it stood upon, a plasma blast leaving its cockpit a glowing puddle as its left clawed manipulator caught a scout mech by the waist, the 15-ton light swiftly becoming bisected as the claws snapped shut.

Chaos broke out as the hunters began to fire upon the assault mech, autocannon and laser fire soaking into its thick armor as if they were no greater inconvenience than a swarm of mosquitoes. The grand mech brought its right arm up and over to lock onto another fast attacker, severing one of its legs with the subsequent plasma blast. Missile racks integrated to the outer edges of its hips let loose and battered a heavy mech’s armor plating as it slewed its torso hard to the left, left arm continuing past to point nearly behind it to tag an approaching mech in the center torso with a plasma blast. The lasers in its torso fired off in paired brackets, each pair of pulsed twin lasers stripping holes in the missile-battered heavy mech’s armor.

Within moments, the majority of the hunting force had been reduced to molten scrap and shattered shrapnel. The remaining heavy mech and fast attacker began to separate, each heading away from the other in a bid to split the assault mech’s fire enough to give themselves an advantage.

The assault class began to back up, its hooves throwing mud up as it backed off at full speed, turning and slewing its torso to keep Other Side of the Sunset within the gimbal angle of its pulsed lasers.

Julia could hardly watch in her pained stupor as the assault mech fired its lasers in two bursts. The first burst caught the fast attacker’s limbs before the second burst cored the mech, a through-and-through hole melting away and dripping to sizzle in the mud.

A shell from the heavy mech’s large bore autocannon caught the assault mech in the shoulder, blowing armor plating clear across the clearing. It rapidly turned and slewed its torso, Julia catching the barest hint of the name Corsair painted across its center torso plating, before the forest lit up with the flashes of twin plasma cannons striking out towards the offending mech.

Deep trenches were carved in the mech’s armor, ceramic plating and metal reinforcing structure running from the heavy unit like water. A lucky shot caught an ammunition bin, gouts of flame evacuating from both the holes punched in the mech and blowout panels on its rear side. Moments after, a resounding detonation shook the forest, the blast wave washing over the assault mech standing between High Velocity and the final slain hunter.

Julia fought to keep her eyes open. She struggled to maintain consciousness, one hand trying to disconnect from the inertial cradle, the other -

- scraping furrows in the coolant-sodden mud, gaining no meaningful purchase to right itself as the assault mech approached. The immense machine slowly lowered itself and worked to slip a claw underneath High Velocity, so gentle compared to its earlier use, lifting the harmed and broken fast attacker from the mud, bringing its other arm up to stabilize their torsos against each other. It shifted High Velocity in its arms, turning it chest-up, allowing -

- Julia to settle back into the gentle embrace of the inertial cradle, her eyes caught up on the pinpoints of light poking through the canopy above the two of them. She could hear as the access hatch to Corsair unsealed and opened and the sound of boots across its thick upper plating before -

- boots stepped tenderly onto its torso plating, picking their way across ruined superstructure and avoiding exposed bundles of myomer. They neared the exposed core of its inertial cradle, slipping over the edge towards the delicate flesh -

- and she was able to see as Calypso carefully lowered herself into the cavity of the inertial cradle. The woman was so sure of her movements, the exposed fingers of her gloved hands carefully prodding at neural connectors and interface jacks, one-by-one removing them and allowing Julia to come back to herself, battered and injured though she was.

Dragging her eyes up to the visor plate of Calypso’s neural helmet, Julia sought out the eyes of the other pilot. She found them, a gorgeous russet showing through even the armor glass of the neural helmet, and she allowed herself to trust in them and sink into the calming fire of their gaze.

“I came for you,” came the statement from the pilot, melodious and soothing compared to Julia’s own raspy voice. Words she herself had uttered months before and had never expected to hear in return.

“Yeah.” Julia rattled in response, a bloody-toothed smile becoming her expression as she stole the response once issued to her. “So you did. Help coming?”

The idle shake of Calypso’s head didn’t lessen Julia’s feeling of security. She knew within the cockpit of the Corsair lay safety - and beyond that, maybe even something more.

“Guess that’s a debt paid, then. Take me home, Buccaneer.”

______________________________________________________________

Thank you for reading Two for the Martyr! This is the companion piece to our other work (One for the Money) and is designed to lead-in to the next section of the story.

A small amount of lore...

Interface mechs, typically fast attackers by nature but not always, are mechs designed to be directly linked to their pilots. Typically these units utilize a form of AI core to parse the data feeds in both directions, but these same AIs put the pilot into the very body of the mech. No longer is there a separation - once those interface jacks are in place and the inertial cradle is sealed, the pilot becomes one with the unit, simply acting as its organic processor.

Let us know if you want to see more in the future of these two!

BMI323 IMU breakout ⚙️🔍

We're working on a project with a BMI323 (https://www.digikey.com/en/product-highlight/b/bosch-sensortec/bmi323-inertial-measurement-unit) chip. Since this is our first time using the sensor, we decided to make a breakout for it! This chip has fast sample rates and an I2C/I3C/SPI interface. After we explore this chip, maybe we'll do an EYE ON NPI about it!
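When bringing up a new IMU breakout, one of the first steps is turning raw sample words into physical units. The Python sketch that follows assumes each axis arrives as a little-endian signed 16-bit value and that the accelerometer is configured for ±8 g; both are assumptions to check against Bosch's BMI323 datasheet. No register addresses or bus calls are shown, since those depend on whether you use I2C, I3C, or SPI; `xyz_to_g` is a hypothetical helper.

```python
import struct


def xyz_to_g(frame: bytes, full_scale_g: float = 8.0) -> tuple[float, float, float]:
    """Convert a 6-byte x/y/z accelerometer frame to g.

    Assumes three little-endian signed 16-bit samples ("<3h") and a
    configured range of +/- full_scale_g, so 32768 counts = full scale.
    """
    x, y, z = struct.unpack("<3h", frame)
    scale = full_scale_g / 32768.0  # g per LSB
    return (x * scale, y * scale, z * scale)


if __name__ == "__main__":
    # A board lying flat should read roughly +1 g on z (4096 counts at +/-8 g).
    sample = struct.pack("<3h", 0, 0, 4096)
    print(xyz_to_g(sample))
```

Keeping the scale factor as a parameter makes it easy to re-run the conversion if you change the range setting while experimenting.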

#bmi323#imu#motiontracking#sensor#electronics#breakoutboard#i2c#spi#i3c#pcbdesign#embedded#hardware#engineering#opensource#tech#robotics#diy#makers#innovation#wearables#automation#accelerometer#gyroscope#bosch#fastsampling#microcontroller#developmentboard#prototyping#electronicprojects#eyeonnpi

@odditymuse you know who this is for

Jace hadn’t actually been paying all that much attention to his surroundings. Perhaps that was the cause of his eventual downfall. The Stark Expo was just so loud, though. Not only to his ears, but to his mind. So much technology in one space, and not just the simple sort, like a bunch of smartphones—not that smartphones could necessarily be classified as simple technology, but when one was surrounded by them all day, every day, they sort of lost their luster—but the complex sort that Jace had to really listen to in order to understand.

After about an hour of wandering, he noticed someone at one of the booths having difficulty with her demo: a hard-light hologram interface, fully tactile holograms with gesture control and haptic feedback. At least, that was what the poor woman was trying to demonstrate. However, the gestural database seemed to be corrupted. No matter how she tried, the holograms simply wouldn’t do what she wanted them to, either misinterpreting her gesture commands or completely reinterpreting them.

Jace had offered up a suggestion after less than a minute of looking at the device:

“Have you tried realigning the inertial-motion sensor array that tracks hand movement vectors? Your gesture database might not be the actual problem.” The database was what she was currently looking at, and it wasn’t the problem. “Even a small drift on, say, the Z-axis,” the axis with the actual misalignment issue, “would feed bad data into the system. Which would, in turn, make the gesture AI try to compensate, learning garbage in the process.”

He’d received a hell of a dirty look for his attempted assistance, and frankly, if she didn’t want to accept his help, that was fine—Jace was used to people ignoring him, even when he was, supposedly, the expert on the matter. But then the woman’s eyes went big, locked onto something behind him, and a single word uttered in a man’s voice had Jace spinning around and freezing, like a deer in headlights.

“Fascinating.”

Oh god. Oh no. That was Tony Stark. The Tony Stark. The one person Jace did not want to meet and hadn’t expected to even be milling around here, if he’d bothered to come at all.

“…Um.” Well done, Jace. Surely that will help your cause.

Heartbeat (Part 3)

*The intruder drops from the vent to the floor, allowing the worker and heavy Drones to get a better look at the enemy. It is an unusually small Murder Drone — one about half of Uzi’s old size… discounting her black tail, longer than the disassembler was tall, thicker than the one she saw on N and with multiple green lit branch sprouts and… fins? The drone herself (it at least looked like a girl) had short, white hair that turned blue at the tips, and was wearing a blue turtleneck with a cleavage window (not that female drones had any cleavage) under a sleeveless, white trench coat. Oddly, her eyes were green instead of the yellow she saw on N, as were the five… bulbs on the top of her head, and all the caution patterns on her body.*

Bee: “Do we shoot the child–?”

Uzi: [[PTSD Vietnam Flashback.MP3]] “NOT SOON ENOUGH!!!”

*Uzi grabs the laser pistol from her yellow friend and fires at the kid (?) Murder Drone, landing a few hits that almost immediately heal away.*

*Uzi didn’t have long to take this in, as before she knew it, the smaller drone was right up in her face, sword in hand (well, technically it was where her right hand was supposed to be), and was swinging. Uzi shut her eyes and threw her hands up in defense…*

*Except the blow never came.*

*Opening her eyes, she found that her right forearm had shifted like her leg had earlier. Instead of a wheel, however, it was instead replaced by a shortsword, which was now blocking her smaller opponent from ending her life. Said opponent– G, judging by the name on her yellow armband –unlocked their blades and prepared for another swing.*

*The next several seconds consisted of the purple-haired WD/HD/?? blocking a rapid-fire assault from the small Murder Drone, as well as a few impalement attempts using their tail. This only stops once she realizes she’s being shot at by Orion (using a rifle he picked up from a guard at the Elite Guard HQ earlier). The disassembler deploys her wings and launches herself at the red and blue HD. She grabs onto his left arm, driving her sword into his shoulder, causing him to drop his rifle. G then attempts to wrap her tail around him to let her nanites finish the job. Seeing this, Uzi unwittingly turns her legs into jet engines and flings herself at G, using her shortsword to cut off her long tail.*

*What followed was the Disassembly Drone screaming so loud it nearly caused the audio sensors of everyone nearby to short-circuit. Uzi, meanwhile, has been carried into the wall by inertia.*

*Bumblebee uses the opportunity to try and hit her with a pipe, hitting her on the shoulder blade area. The yellow HD promptly almost gets a shortsword to the face, which he narrowly dodges, only to get a bird-like foot (-!?) to the chest – sending him falling onto his back, and G towards the staircase–*

Wheeljack: *returns carrying various tools and a foldable stool* “Okay boys, let’s–”

*–only to crash right into the returning mad scientist. Landing in a heap, the smaller drone stabs his visor, effectively blinding him in his right eye. She then flies into the stairwell and upwards.*

Bee: “Should we… follow her?”

Uzi: *getting up, balancing on her jet engine legs* “Of course we should, we wounded it!” *gestures to G’s severed tail* “That little midget won’t know what hit her!” *starts cackling* [[I HAVE SUPERPOWERS!!!]]

Orion Pax: *clutching his shoulder wound, which is leaking oil* “Didn’t you say that N regenerated his entire head after you shot it off?” *she stops laughing, and her manic smile droops while the larger drone sighs* “Look, I don’t know what’s going on with you, Uzi, but… this is no cause to get in over your head.”

Wheeljack: *screaming in pain from the corner* “MY EYE!!!”

Hot Rod: “Well, anyone got any other bright ideas? Besides charging straight in like in the movies?”

Bee: “I might have just gotten one…” *everyone, sans the half-blind scientist, turns to look at the yellow HD* “…but you’re not gonna like it.”

Author’s Note: For those of you wondering, Serial Designation G is Duck Anon’s OC, used with their permission. Now, if you’ll excuse me, I need to get to bed.

#transformers#murder drones#bumblebee#orion pax#hot rod#uzi doorman#wheeljack#serial designation g#heartbeat arc

Realization of a cold atom gyroscope in space

High-precision space-based gyroscopes are important in space science research and space engineering applications. In fundamental physics research, they can be used to test general relativity effects such as frame dragging. These tests can probe the limits of general relativity's validity and search for potential new physical theories. Several satellite projects have been implemented, including Gravity Probe B (GP-B) and the Laser Relativity Satellite (LARES), which used electrostatic gyroscopes and satellite orbit data, respectively, to test the frame-dragging effect, achieving test accuracies of 19% and 3%. No violation of this general relativity effect was observed. Atom interferometers (AIs) use matter waves to measure inertial quantities. In space, thanks to the quiet satellite environment and long interference times, AIs are expected to achieve much higher acceleration and rotation measurement accuracies than on the ground, making them important candidates for high-precision space-based inertial sensors. Europe and the United States have proposed related projects and have already conducted preliminary AI experiments on microgravity platforms such as drop towers, sounding rockets, parabolic flights, and the International Space Station.

The research team led by Mingsheng Zhan from the Innovation Academy for Precision Measurement Science and Technology of the Chinese Academy of Sciences (APM) developed a payload named the China Space Station Atom Interferometer (CSSAI) [npj Microgravity 2023, 9 (58): 1-10], which was launched in November 2022 and installed inside the High Microgravity Level Research Rack in the China Space Station (CSS) to carry out scientific experiments. This payload enables atom interference experiments with ⁸⁵Rb and ⁸⁷Rb and features an integrated design. The overall size of the payload is only 46 cm × 33 cm × 26 cm, with a maximum power consumption of approximately 75 W.

Recently, Zhan’s team used CSSAI to realize the space cold atom gyroscope measurements and systematically analyze its performance. Based on the ⁸⁷Rb atomic shearing interference fringes achieved in orbit, the team analyzed the optimal shearing angle relationship to eliminate rotational measurement errors and proposed methods to calibrate these angles, realizing precise in-orbit rotation and acceleration measurements. The uncertainty of the rotational measurement is better than 3.0×10⁻⁵ rad/s, and the resolution of the acceleration measurement is better than 1.1×10⁻⁶ m/s². The team also revealed various errors that affect the space rotational measurements. This research provides a basis for the future development of high-precision space quantum inertial sensors. This work has been published in the 4th issue of National Science Review in 2025, titled "Realization of a cold atom gyroscope in space". Professors Xi Chen, Jin Wang, and Mingsheng Zhan are the co-corresponding authors.

The research team analyzed and solved the dephasing problem of the cold atom shearing interference fringes. In general, the period and phase of shearing fringes are affected by the initial position and velocity distribution of the cold atom clouds, resulting in errors in rotation and acceleration measurements. Through detailed analysis of the fringe phase, the team found a magic shearing angle relationship that eliminates the dephasing caused by the atom cloud parameters, and proposed a scheme to calibrate the shearing angle precisely in orbit. The team then carried out precision in-orbit rotation and acceleration measurements based on the shearing interference fringes. Using fringes with an interference time of 75 ms, a rotation measurement resolution of 50 μrad/s and an acceleration measurement resolution of 1.0 μm/s² were achieved for a single experiment. A long-term rotation measurement resolution of 17 μrad/s was achieved through data integration. The team also studied error terms for in-orbit atom interference rotation measurement, analyzing systematic effects from the imaging magnification factor, shearing angle, interference time sequence, laser wavelength, atom cloud parameters, magnetic field distribution, and other sources. Shearing angle error was found to be one of the main factors limiting the measurement accuracy of future high-precision cold atom gyroscopes in space. In addition, the rotation measured by CSSAI was compared with that measured by the CSS's own gyroscope; the two values are in good agreement, further demonstrating the reliability of the rotation measurement.
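The step from a 50 μrad/s single-shot resolution to a 17 μrad/s long-term resolution comes from averaging many shots. A standard way to quantify how resolution improves with averaging is the Allan deviation. The Python sketch that follows is a generic, non-overlapping Allan deviation, not the team's analysis code. The helper `shots_for_target` is hypothetical and only holds if the noise were purely white (resolution scaling as 1/√m): under that idealization, going from 50 to 17 μrad/s implies roughly (50/17)² ≈ 8.7 times more averaging. The article does not report the actual number of shots, and real in-orbit noise need not be white.

```python
import math
from typing import Sequence


def allan_deviation(samples: Sequence[float], m: int) -> float:
    """Non-overlapping Allan deviation at an averaging factor of m samples.

    Splits the series into consecutive blocks of m samples, averages each
    block, and returns sqrt(0.5 * mean((next_mean - this_mean)^2)).
    """
    n_blocks = len(samples) // m
    if n_blocks < 2:
        raise ValueError("need at least two full blocks")
    means = [sum(samples[i * m:(i + 1) * m]) / m for i in range(n_blocks)]
    sq_diffs = [(b - a) ** 2 for a, b in zip(means, means[1:])]
    return math.sqrt(0.5 * sum(sq_diffs) / len(sq_diffs))


def shots_for_target(single_shot: float, target: float) -> float:
    """Averaging factor needed if resolution improved as 1/sqrt(m) (white noise only)."""
    return (single_shot / target) ** 2


if __name__ == "__main__":
    # Idealized illustration using the two resolutions quoted in the text (urad/s).
    print(f"{shots_for_target(50.0, 17.0):.1f}x averaging under white noise")
```

Plotting `allan_deviation` against m for a real data series shows where averaging stops helping, which is how a white-noise assumption would be checked in practice.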

This work not only realized the world's first space cold atom gyroscope but also laid foundations for future space quantum inertial sensors in engineering design, inertial quantity extraction, and error evaluation.

UPPER IMAGE: (Left) Rotation and acceleration measurements using the CSSAI in-orbit and (Right) Rotation comparison between the CSSAI and the classical gyroscopes of the CSS. Credit ©Science China Press

LOWER IMAGE: Atom interferometer and data analysis with it. (a) The China Space Station Atom interferometer. (b) Analysis of the dephasing of shearing fringes. (c) Calibration of the shearing angle. Credit ©Science China Press


By Ben Coxworth

November 22, 2023

(New Atlas)

[The "robot" is named HEAP (Hydraulic Excavator for an Autonomous Purpose), and it's actually a 12-ton Menzi Muck M545 walking excavator that was modified by a team from the ETH Zurich research institute. Among the modifications were the installation of a GNSS global positioning system, a chassis-mounted IMU (inertial measurement unit), a control module, plus LiDAR sensors in its cabin and on its excavating arm.

For this latest project, HEAP began by scanning a construction site, creating a 3D map of it, then recording the locations of boulders (weighing several tonnes each) that had been dumped at the site. The robot then lifted each boulder off the ground and utilized machine vision technology to estimate its weight and center of gravity, and to record its three-dimensional shape.

An algorithm running on HEAP's control module subsequently determined the best location for each boulder, in order to build a stable 6-meter (20-ft) high, 65-meter (213-ft) long dry-stone wall. "Dry-stone" refers to a wall that is made only of stacked stones without any mortar between them.

HEAP proceeded to build such a wall, placing approximately 20 to 30 boulders per building session. According to the researchers, that's about how many would be delivered in one load, if outside rocks were being used. In fact, one of the main attributes of the experimental system is the fact that it allows locally sourced boulders or other building materials to be used, so energy doesn't have to be wasted bringing them in from other locations.

A paper on the study was recently published in the journal Science Robotics. You can see HEAP in boulder-stacking action, in the video below.]


Unmanned Ground Vehicles Market Growth Explained: From Battlefield to Industry

Unmanned Ground Vehicles Market Overview: Advancing the Frontier of Autonomous Land-Based Systems

The global Unmanned Ground Vehicles market is undergoing a paradigm shift, driven by transformative innovation in artificial intelligence, robotics, and sensor technologies. With an estimated valuation of USD 3.29 billion in 2023, the unmanned ground vehicles market is projected to expand to USD 6.35 billion by 2031, achieving a CAGR of 9.7% over the forecast period. This momentum stems from the increasing integration of UGVs into critical applications spanning defense, commercial industries, and scientific research.
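As a quick consistency check on these figures (a sketch, not the report's methodology), the CAGR implied by two endpoint values is (end / start)^(1/n) − 1, where n is the number of compounding periods. With the stated endpoints, 9.7% corresponds to roughly seven periods (for example, a forecast window running from 2024 to 2031); compounding over the eight years from 2023 to 2031 would imply about 8.6%. The function names are illustrative.

```python
def implied_cagr(start_value: float, end_value: float, periods: int) -> float:
    """Constant annual growth rate that turns start_value into end_value over `periods` years."""
    return (end_value / start_value) ** (1.0 / periods) - 1.0


def project(start_value: float, cagr: float, periods: int) -> float:
    """Value after compounding start_value at `cagr` for `periods` years."""
    return start_value * (1.0 + cagr) ** periods


if __name__ == "__main__":
    start, end = 3.29, 6.35  # USD billion, the 2023 and 2031 figures quoted above
    print(f"{implied_cagr(start, end, 8):.1%}")  # 8.6% (2023 to 2031, eight periods)
    print(f"{implied_cagr(start, end, 7):.1%}")  # 9.8% (seven periods)
    print(f"{project(start, 0.097, 8):.2f}")     # 6.90 (9.7% applied for eight years)
```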

As a cornerstone of modern automation, unmanned ground vehicles are redefining operational strategies in dynamic environments. By eliminating the need for onboard human operators, these systems enhance safety, efficiency, and scalability across a wide array of use cases. From autonomous logistics support in conflict zones to precision agriculture and mining, UGVs are a versatile, mission-critical solution with expanding potential.

Request Sample Report PDF (including TOC, Graphs & Tables): https://www.statsandresearch.com/request-sample/40520-global-unmanned-ground-vehicles-ugvs-market

UGV Types: Tailored Autonomy for Diverse Operational Needs

Teleoperated UGVs: Precision in Human-Controlled Operations

Teleoperated UGVs serve vital roles in scenarios where human judgment and real-time control are indispensable. Used predominantly by military and law enforcement agencies, these vehicles are integral to high-risk missions such as explosive ordnance disposal (EOD), urban reconnaissance, and search-and-rescue. Remote operation keeps operators at a safe distance from threats while preserving tactical accuracy in mission execution.

Autonomous UGVs: Revolutionizing Ground Operations

Autonomous UGVs epitomize the fusion of AI, machine learning, and edge computing. These platforms operate independently, leveraging LiDAR, GPS, stereo vision, and inertial navigation systems to map environments, detect obstacles, and make intelligent decisions without human intervention. Applications include:

Precision agriculture: Real-time crop health analysis, soil diagnostics, and yield monitoring.

Warehouse logistics: Automated material handling and inventory tracking.

Border patrol: Persistent perimeter surveillance with minimal resource expenditure.

Semi-Autonomous UGVs: Optimal Balance of Control and Independence

Semi-autonomous systems are ideal for hybrid missions requiring autonomous execution with operator intervention capabilities. These UGVs can follow predetermined paths, execute complex tasks, and pause for remote validation when encountering ambiguous conditions. Their versatility suits infrastructure inspection, disaster response, and automated delivery services, where adaptability is paramount.

Get up to 30%-40% Discount: https://www.statsandresearch.com/check-discount/40520-global-unmanned-ground-vehicles-ugvs-market

Technological Framework: Building Smarter Ground-Based Systems

Navigation Systems: Real-Time Terrain Mastery

Modern UGVs integrate layered navigation systems incorporating:

Global Navigation Satellite Systems (GNSS)

Inertial Navigation Units (INU)

Visual Odometry

Simultaneous Localization and Mapping (SLAM)

This multifaceted approach enables high-precision route planning and dynamic path correction, ensuring mission continuity across varying terrain types and environmental conditions.
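A minimal sketch of how the GNSS and inertial layers can be combined, assuming a one-dimensional vehicle, a 100 Hz IMU whose acceleration readings drive the prediction step, and 1 Hz GNSS position fixes that correct it in a Kalman filter. Real UGV stacks estimate 3-D position and attitude, model sensor biases, and feed visual odometry and SLAM in as further measurements; the class name, noise levels, and trajectory below are hypothetical.

```python
import math
import random


class GnssInsFilter1D:
    """Hypothetical 1-D GNSS/INS fusion filter with state (position, velocity).

    IMU acceleration drives the prediction step; GNSS position fixes drive the update.
    """

    def __init__(self, accel_sigma: float, gnss_sigma: float) -> None:
        self.p, self.v = 0.0, 0.0                      # position (m), velocity (m/s)
        self.P = [[100.0, 0.0], [0.0, 100.0]]          # large initial covariance
        self.q = accel_sigma ** 2                      # IMU acceleration noise variance
        self.r = gnss_sigma ** 2                       # GNSS position noise variance

    def predict(self, accel: float, dt: float) -> None:
        """Dead-reckon one IMU sample and grow the covariance: P = F P F^T + Q."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        (p00, p01), (p10, p11) = self.P
        g0, g1 = 0.5 * dt * dt, dt                     # how accel noise enters p and v
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.q * g0 * g0,
             p01 + dt * p11 + self.q * g0 * g1],
            [p10 + dt * p11 + self.q * g0 * g1,
             p11 + self.q * g1 * g1],
        ]

    def update(self, gnss_position: float) -> None:
        """Correct the state with a GNSS position fix (measurement matrix H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r                               # innovation variance
        k0, k1 = p00 / s, p10 / s                      # Kalman gain
        innovation = gnss_position - self.p
        self.p += k0 * innovation
        self.v += k1 * innovation
        self.P = [[p00 - k0 * p00, p01 - k0 * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]


def rms(values: list[float]) -> float:
    return math.sqrt(sum(v * v for v in values) / len(values))


def simulate(seconds: float = 60.0, dt: float = 0.01, seed: int = 0) -> tuple[float, float]:
    """Return (raw GNSS RMS error, fused RMS error) in metres at the GNSS epochs."""
    rng = random.Random(seed)
    accel_sigma, gnss_sigma = 0.05, 2.0                # assumed sensor noise levels
    true_a, true_p, true_v = 0.2, 0.0, 1.0             # assumed true trajectory
    kf = GnssInsFilter1D(accel_sigma, gnss_sigma)
    gnss_errors, fused_errors = [], []
    steps_per_fix = round(1.0 / dt)                    # 1 Hz GNSS with a 1/dt Hz IMU
    for step in range(1, round(seconds / dt) + 1):
        true_p += true_v * dt + 0.5 * true_a * dt * dt
        true_v += true_a * dt
        kf.predict(true_a + rng.gauss(0.0, accel_sigma), dt)
        if step % steps_per_fix == 0:
            fix = true_p + rng.gauss(0.0, gnss_sigma)
            kf.update(fix)
            gnss_errors.append(fix - true_p)
            fused_errors.append(kf.p - true_p)
    return rms(gnss_errors), rms(fused_errors)


if __name__ == "__main__":
    gnss_rms, fused_rms = simulate()
    print(f"GNSS only: {gnss_rms:.2f} m, GNSS + IMU: {fused_rms:.2f} m")
```

Between fixes the filter coasts on integrated acceleration, and each fix pulls the estimate back, so in this toy setup the fused error ends up well below the raw GNSS scatter.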

Advanced Sensor Suites: The Sensory Backbone

UGVs depend on an array of sensors to interpret their environment. Core components include:

LiDAR: For three-dimensional spatial mapping.

Ultrasonic sensors: Obstacle detection at short ranges.

Thermal and infrared cameras: Night vision and heat signature tracking.

Multispectral imaging: Agricultural and environmental applications.

Together, these sensors give UGVs superior situational awareness and support real-time decision-making.
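Of the sensors listed, ultrasonic ranging is the simplest to show in code: the sensor times an echo, and the obstacle distance is half the round-trip path. A minimal sketch, assuming dry air and the common linear approximation c ≈ 331.3 + 0.606·T m/s (T in °C); the function names are hypothetical, not a particular sensor's API.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_distance(round_trip_s: float, temp_c: float = 20.0) -> float:
    """Obstacle distance (m) from an ultrasonic echo: the pulse goes out and back."""
    return speed_of_sound(temp_c) * round_trip_s / 2.0


if __name__ == "__main__":
    print(f"{echo_distance(5.83e-3):.2f} m")              # 1.00 m at 20 °C
    print(f"{echo_distance(5.83e-3, temp_c=0.0):.2f} m")  # 0.97 m at 0 °C
```

The two calls use the same echo time, which is why ultrasonic ranging modules are often paired with a temperature reading.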

Communication Systems: Seamless Command and Data Exchange

Effective UGV operation relies on uninterrupted connectivity. Communication architectures include:

RF (Radio Frequency) transmission for close-range operations.

Cellular (4G/5G) and satellite links for beyond-line-of-sight control.

Encrypted military-grade communication protocols to safeguard mission-critical data.

These systems ensure continuous communication between UGVs and control centers, which is essential for high-stakes missions.

Artificial Intelligence (AI): Cognitive Autonomy

AI is the nucleus of next-generation UGV intelligence. From object recognition and predictive analytics to anomaly detection and mission adaptation, AI frameworks enable:

Behavioral learning for new terrain or threat patterns.

Collaborative swarm robotics where UGVs function as a coordinated unit.

Autonomous decision trees that trigger responses based on complex input variables.

Unmanned Ground Vehicles Market Segmentation by End User

Government and Military: Strategic Force Multipliers

UGVs are critical to defense modernization initiatives, delivering cost-effective force protection, logistical autonomy, and increased tactical reach. Applications include:

C-IED (counter-improvised explosive device) missions

Tactical ISR (Intelligence, Surveillance, Reconnaissance)

Autonomous convoy support

Hazardous materials (HAZMAT) handling

Private Sector: Driving Industrial Autonomy

Commercial adoption of UGVs is accelerating, especially in:

Agriculture: Real-time agronomic monitoring and robotic farming.

Mining and construction: Autonomous excavation, transport, and safety inspection.

Retail and logistics: Last-mile delivery and warehouse automation.

These implementations reduce manual labor, enhance productivity, and streamline resource management.

Research Institutions: Accelerating Innovation

UGVs are central to robotics and AI research, with universities and R&D labs leveraging platforms to test:

Navigation algorithms

Sensor fusion models

Machine learning enhancements

Ethical frameworks for autonomous systems

Collaborative R&D across academia and industry fosters robust innovation and propels UGV capabilities forward.

Regional Unmanned Ground Vehicles Market Analysis

North America: The Epicenter of Defense-Grade UGV Development

Dominated by the United States Department of Defense and industry leaders such as Lockheed Martin and General Dynamics, North America is a pioneer in deploying and scaling UGV technologies. Investments in smart battlefield systems and border security continue to drive demand.

Europe: Dual-Use Innovations in Civil and Military Sectors

European nations, particularly Germany, France, and the UK, are integrating UGVs into both military and public safety operations. EU-funded initiatives are catalyzing development in automated policing, firefighting, and environmental monitoring.

Asia-Pacific: Emerging Powerhouse with Diverse Use Cases

Led by China, India, and South Korea, the Asia-Pacific region is rapidly advancing in agricultural robotics, smart cities, and military modernization. Government-backed programs and private innovation hubs are pushing domestic manufacturing and deployment of UGVs at scale.

Middle East and Africa: Strategic Adoption in Security and Infrastructure

UGVs are being integrated into border surveillance, oil and gas pipeline inspection, and urban safety across key regions such as the UAE, Saudi Arabia, and South Africa, where rugged environments and security risks necessitate unmanned solutions.

South America: Gradual Integration Across Agriculture and Mining

In countries like Brazil and Chile, UGVs are enhancing resource extraction and precision agriculture. Regional investment in automation is expected to grow in parallel with infrastructure modernization efforts.

Key Companies Shaping the Unmanned Ground Vehicles Market Ecosystem

Northrop Grumman Corporation

General Dynamics Corporation

BAE Systems

QinetiQ Group PLC

Textron Inc.

Oshkosh Corporation

Milrem Robotics

Kongsberg Defence & Aerospace

Teledyne FLIR LLC

Lockheed Martin Corporation

These firms are advancing UGV design, AI integration, sensor modularity, and battlefield-grade resilience, ensuring readiness for both present and future operational challenges.

The forecasted growth is underpinned by:

Rising geopolitical tensions driving military investments

Expanding commercial interest in automation

Cross-sector AI integration and sensor improvements

Purchase Exclusive Report: https://www.statsandresearch.com/enquire-before/40520-global-unmanned-ground-vehicles-ugvs-market

Conclusion: The Evolution of Autonomous Ground Mobility

The global unmanned ground vehicles market stands at the intersection of robotics, AI, and real-world application. As technological maturity converges with widespread need for unmanned solutions, the role of UGVs will extend far beyond today’s use cases. Governments, corporations, and innovators must align strategically to harness the full potential of these systems.

With global investments accelerating and AI-driven capabilities reaching new thresholds, Unmanned Ground Vehicles are not just an industrial trend—they are the vanguard of future operational dominance.

Our Services:

On-Demand Reports: https://www.statsandresearch.com/on-demand-reports

Subscription Plans: https://www.statsandresearch.com/subscription-plans

Consulting Services: https://www.statsandresearch.com/consulting-services

ESG Solutions: https://www.statsandresearch.com/esg-solutions

Contact Us:

Stats and Research

Email: [email protected]

Phone: +91 8530698844

Website: https://www.statsandresearch.com


The global inertial sensors market is projected to reach a valuation of US$239 million in 2024 and to expand at a CAGR of 9.4% through 2034.


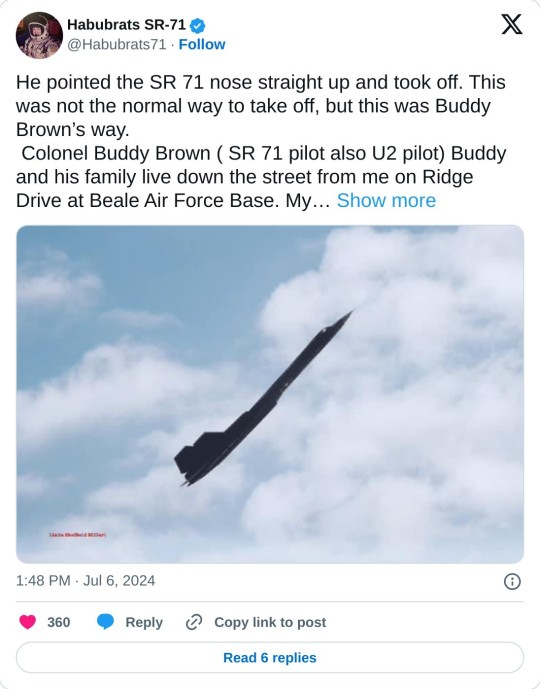

My 91-year-old mother still remembers him. He was always the center of attention, and everyone loved to hear his stories. I posted this last year, but there is an update: his son, Scott Brown, is getting ready to publish his father's memoirs. I can't wait to read it again. I received a sneak peek at the book and wrote a review for him.

He recalls the details of several missions as if they were yesterday. Some of Colonel Brown's memories: "Before takeoff, the complete mission (mission track, sensor on/off times, lat/long positions, fuel status, etc.) would be programmed (uploaded) into the SR's onboard computer system.

One really great thing we also had was a system called the ANS (astro-inertial navigation system) which gave you a three-dimensional fix in the sky within feet of your actual position. Thus, no more manual celestial shots.

The ANS was so sensitive that on a clear day while taxiing for takeoff, the system would start tracking stars and updating itself! On this mission, I took off from Kadena and contacted the waiting tanker at 25,000 feet to take on a full fuel load. After disconnecting, I selected maximum afterburner and started my climb to altitude. We were in the middle of some thunderstorms and did not climb out on top until we reached 50,000 feet."

After Brown's SR-71 was repaired at Takhli…

"I cranked the engines, taxied out to the main runway, and made a max burner takeoff. As I cleared the runway, I pointed my nose almost straight up, and, by the way, that wasn't the normal way to take off.😁

Takhli just happened to be the home of the 355th Tactical Fighter Wing, and I wanted to show the fighter guys just what a reconnaissance aircraft could do. I must admit, it was impressive!"

WARREN THOMPSON wrote this article in 2010.

I paraphrased parts of it.

Thank you to Cindy Westerman

Linda Sheffield


AVA (2011) by iRobot, Bedford, MA. AVA, short for ‘Avatar,’ is a semi-autonomous telepresence robot. You can instruct it to go to selected waypoints, avoiding obstacles along the way, rather than having to steer it manually. The base has three omnidirectional wheels and underglow status LEDs. AVA's face is a clip-on pad, mounted on a head that can turn at the neck and move up and down. Sensors include a depth camera, laser rangefinders, inertial movement sensors, ultrasonics, bump sensors, and an array of microphones to pick up voice commands. The collar has touch sensors all the way around, providing a tangible way to interact with AVA. “The company wants to test Ava for remote presence applications in hospitals, allowing a doctor to remotely diagnose patients or do rounds with the robot. Seen here is Ava software engineer Clement Wong on Ava's tablet.” – iRobot's Ava: She's got the touch, by Martin LaMonica, CNET,


Team Sad Dance Party

What is Team Sad Dance Party and Why is it in the Klonoa Tags?

Team Sad Dance Party is a group of college students who used Klonoa and the King of Sorrow in one of their MoCap projects. We found the project hilarious and wanted to share it with the rest of the Klonoa fandom. We have two social media accounts, Twitter/X and this Tumblr, which we use as archives for this project. We also have a YouTube channel.

When will the MoCap animation be released?

The MoCap animation will be released on June 30, 2024. Until then, we plan on uploading renders and GIFs.

More Info Below;

What is MoCap?

MoCap stands for motion capture. Think of Avatar (not ATLA): that's what we did, just not as well, and with Klonoa and King. Basically, all the movements and dances you see the characters performing come from data collected from real people wearing motion capture suits.

What should you expect from this project?

It's a fun little dance party in Hyuponia! We are not professionals so there are a lot of problems with the MoCap animation, especially with the ears and KoS scarf. We hope you enjoy the animation despite this!

What will happen to the accounts after the project is released?

Once the whole project is released, including GIFs and random renders, the accounts will stop posting.

What software did you guys use?

We used Maya/Arnold, Clip Studio Paint, and Substance Painter. The MoCap suits we used were inertial. This means the suits have inertial sensors placed at strategic points on the body to track the wearer's movement.
