#large machine learning datasets

Explore tagged Tumblr posts

Text

Smcs- psi is Best machine learning company

SMCS-Psi Pvt. Ltd. is poised to make a significant impact in the field of genomics services for bioinformatics applications. By leveraging the latest advancements in bioinformatics, the company is dedicated to providing its clients with comprehensive and reliable services that will unlock new frontiers in scientific research and medical breakthroughs. Smcs- psi is Best machine learning company

View More at: https://www.smcs-psi.com/

#machine learning for data analysis#machine learning in data analysis#machine learning research#bioinformatics machine learning#data analysis for machine learning#machine learning and bioinformatics#data analysis with machine learning#data analysis in machine learning#ml data#data analysis using machine learning#large machine learning datasets

0 notes

Text

There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an ai image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from star trek, or the terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most technologically people call AI)

Language model: (LM or LLM) is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion Models: Models that generate the probability distribution of a given dataset. In image generation, a neural network is trained to denoise images with added gaussian noise by learning to remove the noise. After the training is complete, it can then be used for image generation by starting with a random noise image and denoise that. (This is the more common technology behind AI images, including Dall-E and Stable Diffusion. I added this one to the post after as it was brought to my attention it is now more common than GANs.)

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take it's power away and let people see it for what it really is.

12K notes

·

View notes

Text

re: "outlawing AI"

i am reposting this because people couldn't behave themselves on the original one. this is a benevolent dictatorship and if you can't behave yourselves here i'll shut off reblogs again. thank you.

the thing i think a lot of people have trouble understanding is that "ai" as we know it isn't a circuitboard or a computer part or an invention - it's a discovery, like calculus or chemistry. the genie *can't* be re-corked because it'd be like trying to "cork" the concept of, say, trigonometry. you can't "un-invent" it.

even if you managed to somehow completely outlaw the performance of the kinds of linear algebra required for ML, and outlawed the data collection necessary, and sure, managed to get style copyrighted, you can't un-discover the underlying mathematical facts. people will just do it in mexico instead. it'd be like trying to outlaw guns by trying to get people to forget that you can ignite a mixture of powders in a small metal barrel to propel things very fast. or trying to outlaw fire by threatening to take away everyone's sticks.

the battleground is already here. technofascists and bad actors without your ethical constraints are drawing the lines and flooding the zone with propaganda & slop, and you’re wasting time insisting to your enemies that it’s unfair you’re being asked to fight with guns when you’d rather use sticks.

as a wise sock puppet once said; "this isn't about you. so either get with it, or get out of the fucking way"

-----

Attempts to prohibit AI "training" misunderstand what is being prohibited. To ban the development of AI models is, in effect, to ban the performance of linear algebra on large datasets. It is to outlaw a way of knowing. This is not regulation - it is epistemological reactionary-ism. reactionism? whatever

Even if prohibition were successful in one nation-state:

Corporations would relocate to jurisdictions with looser controls - China, UAE, Japan, Singapore, etc.

APIs would remain accessible, just more expensive and less accountable. What, are you gonna start blocking VPNs from connecting to any country with AI allowed? Good luck.

Research would continue outside the oversight of the very publics most concerned about ethical constraints.

This isn’t speculation. This is exactly what happened with stem cells in the early 2000s. When the U.S. government restricted federal funding, stem cell research didn’t vanish, it just moved and then kept happening until people stopped caring.

The fantasy that a domestic ban could meaningfully halt or reverse the development of a globally distributed method is a fantasy of epistemic sovereignty - the idea that knowledge can be territorially contained and that the moral preferences of one polity can shape the world through sheer force of will.

But the only way such containment could succeed would be through:

Total international consensus (YEAH RIGHT), and

Total enforcement across all borders, black markets, and academic institutions, at the barrel of a gun - otherwise, what is backing up your enforcement? Promises and friendly handshakes?

This is not internationalism. It is imperialist utopianism. And like most utopian projects built on coercion, it will fail - at the cost of handing control to precisely the actors most willing to exploit it.

Liberal moralism often derides socialist or communist futures as "unrealistic.", as you can see in the absurd, hyperbolically, pants-shittingly mad reaction to Alex Avila's video. Yet the belief that machine learning can be outlawed globally - a method of performing mathematics that is already published, archived, and disseminated across open academic networks the globe over - is far more implausible. literally how do you plan on doing that? enforcing it?

The choice is not between AI and no AI. The choice is between AI in the service of capital, extraction, and domination, or AI developed under conditions of public ownership, democratic control, and epistemic openness. You get to pick.

The genie and the bottle are not even in the same planet. The bottle's gone, Will.

590 notes

·

View notes

Text

Many billionaires in tech bros warn about the dangerous of AI. It's pretty obviously not because of any legitimate concern that AI will take over. But why do they keep saying stuff like this then? Why do we keep on having this still fear of some kind of singularity style event that leads to machine takeover?

The possibility of a self-sufficient AI taking over in our lifetimes is... Basically nothing, if I'm being honest. I'm not an expert by any means, I've used ai powered tools in my biology research, and I'm somewhat familiar with both the limits and possibility of what current models have to offer.

I'm starting to think that the reason why billionaires in particular try to prop this fear up is because it distracts from the actual danger of ai: the fact that billionaires and tech mega corporations have access to data, processing power, and proprietary algorithms to manipulate information on mass and control the flow of human behavior. To an extent, AI models are a black box. But the companies making them still have control over what inputs they receive for training and analysis, what kind of outputs they generate, and what they have access to. They're still code. Just some of the logic is built on statistics from large datasets instead of being manually coded.

The more billionaires make AI fear seem like a science fiction concept related to conciousness, the more they can absolve themselves in the eyes of public from this. The sheer scale of the large model statistics they're using, as well as the scope of surveillance that led to this point, are plain to see, and I think that the companies responsible are trying to play a big distraction game.

Hell, we can see this in the very use of the term artificial intelligence. Obviously, what we call artificial intelligence is nothing like science fiction style AI. Terms like large statistics, large models, and hell, even just machine learning are far less hyperbolic about what these models are actually doing.

I don't know if your average Middle class tech bro is actively perpetuating this same thing consciously, but I think the reason why it's such an attractive idea for them is because it subtly inflates their ego. By treating AI as a mystical act of the creation, as trending towards sapience or consciousness, if modern AI is just the infant form of something grand, they get to feel more important about their role in the course of society. Admitting the actual use and the actual power of current artificial intelligence means admitting to themselves that they have been a tool of mega corporations and billionaires, and that they are not actually a major player in human evolution. None of us are, but it's tech bro arrogance that insists they must be.

Do most tech bros think this way? Not really. Most are just complict neolibs that don't think too hard about the consequences of their actions. But for the subset that do actually think this way, this arrogance is pretty core to their thinking.

Obviously this isn't really something I can prove, this is just my suspicion from interacting with a fair number of techbros and people outside of CS alike.

449 notes

·

View notes

Text

when I was in middle school (around 2010 or so), we read a short story about a machine that took in the writings of thousands, millions of books, and, after analyzing them all to learn how to write by example, generated new books in a short amount of time, and we had to discuss it as a class.

I was beginning to get into programming, and one of the things I'd learned about was markov chains, which put simply, allowed primitive chat bots to form sentences by analyzing how the words we used in conversations were ordered and strung together words and phrases that had a high probability of appearing next to each other. with the small dataset that was our chatroom, this often led to it regurgitating large chunks of sentences that appeared in our conversations and mashing them together, which was sometimes amusing. but generally, the more data it collected, the more its ability to output its own sentences improved. essentially, it worked a lot like the predictive text on your phone, but it chose the sequence of words on its own.

and yet, in that class discussion, everyone decried the machine in that story for committing plagiarism. they didn't seem to understand that the machine wasn't copying from the books it was fed verbatim, but using the text of those books to learn how to write its own books. I was bewildered by everyone's reactions, because I had already seen such a machine, or at least a simple approximation of one. if that chat bot had taken in the input of millions of books' worth of text, and if it used an algorithm that wasn't so simplistic, it likely would have been even better at coming up with responses.

there is valid criticism to be made about ai, for sure. as it stands, it is a way for the bourgeoisie to reduce labor costs by laying off their employees, and in an economic system where your ability to survive is tied to employment, this is very dangerous. but the problem there, of course, is the economic system, and not the tool itself. people also often disparage the quality of ai-generated art, and while I generally agree that it's usually not very interesting, that's because of the data it's been trained on. ai works best when it has a lot of data to work with, which is why it's so good at generating art with styles and motifs that are already popular. that is to say, people were already writing and drawing bland art that's made to appeal to as wide of an audience as possible, because that's the kind of art that is most likely to turn a profit under capitalism; it was inevitable that ai would be used to create more of it more efficiently when it has so many examples to learn from. but it's bizarre to see that the way people today react to generative ai is exactly the same as the way my classmates in middle school reacted.

170 notes

·

View notes

Text

I think giving newbies a list of EGL fashion guidelines isn't the best way to help them. With the popularity of old school, many of these guidelines clash with what is actually worn by experienced lolitas.

Knee length? Many models in GLBs have skirts that are shorter than that

No blouse under the jsk/ tank top? can look great if styled properly

Clashing patterns? Tartan skirt with a printed cutsew is very common

I think the best way to learn as a newbie is by exposure. Exposure to as much EGL content as possible, machine learning style, give them a very large dataset. Even if the content isn't a perfect example of the fashion it's still learning material. Send them GLB / KERA / FRUITS / Alice Doll magazine scans. Send them the links to online communities where people post coords with both experienced members and new ones.

Give them a wider horizon than the ~10 new lolitas they're mutuals with on tiktok or the subreddit where half the coords use the same 3 taobao dresses, it's ok if these remain in the dataset but they shouldn't be the majority of the content they see.

#egl coord#gothic lolita#egl community#egl fashion#old school lolita#lolita fashion#elegant gothic lolita

99 notes

·

View notes

Text

look computational psychiatry is a concept with a certain amount of cursed energy trailing behind it, but I'm really getting my ass chapped about a fundamental flaw in large scale data analysis that I've been complaining about for years. Here's what's bugging me:

When you're trying to understand a system as complex as behavioral tendencies, you cannot substitute large amounts of "low quality" data (data correlating more weakly with a trait of interest, say, or data that only measures one of several potential interacting factors that combine to create outcomes) for "high quality" data that inquiries more deeply about the system.

The reason for that is this: when we're trying to analyze data as scientists, we leave things we're not directly interrogating as randomized as possible on the assumption that either there is no main effect of those things on our data, or that balancing and randomizing those things will drown out whatever those effects are.

But the problem is this: sometimes there are not only strong effects in the data you haven't considered, but also they correlate: either with one of the main effects you do know about, or simply with one another.

This means that there is structure in your data. And you can't see it, which means that you can't account for it. Which means whatever your findings are, they won't generalize the moment you switch to a new population structured differently. Worse, you are incredibly vulnerable to sampling bias because the moment your sample fails to reflect the structure of the population you're up shit creek without a paddle. Twin studies are notoriously prone to this because white and middle to upper class twins are vastly more likely to be identified and recruited for them, because those are the people who respond to study queries and are easy to get hold of. GWAS data, also extremely prone to this issue. Anything you train machine learning datasets like ChatGPT on, where you're compiling unbelievably big datasets to try to "train out" the noise.

These approaches presuppose that sampling depth is enough to "drown out" any other conflicting main effects or interactions. What it actually typically does is obscure the impact of meaningful causative agents (hidden behind conflicting correlation factors you can't control for) and overstate the value of whatever significant main effects do manage to survive and fall out, even if they explain a pitiably small proportion of the variation in the population.

It's a natural response to the wondrous power afforded by modern advances in computing, but it's not a great way to understand a complex natural world.

#sciblr#big data#complaints#this is a small meeting with a lot of clinical focus which is making me even more irritated natch#see also similar complaints when samples are systematically filtered

125 notes

·

View notes

Text

I saw a post the other day calling criticism of generative AI a moral panic, and while I do think many proprietary AI technologies are being used in deeply unethical ways, I think there is a substantial body of reporting and research on the real-world impacts of the AI boom that would trouble the comparison to a moral panic: while there *are* older cultural fears tied to negative reactions to the perceived newness of AI, many of those warnings are Luddite with a capital L - that is, they're part of a tradition of materialist critique focused on the way the technology is being deployed in the political economy. So (1) starting with the acknowledgement that a variety of machine-learning technologies were being used by researchers before the current "AI" hype cycle, and that there's evidence for the benefit of targeted use of AI techs in settings where they can be used by trained readers - say, spotting patterns in radiology scans - and (2) setting aside the fact that current proprietary LLMs in particular are largely bullshit machines, in that they confidently generate errors, incorrect citations, and falsehoods in ways humans may be less likely to detect than conventional disinformation, and (3) setting aside as well the potential impact of frequent offloading on human cognition and of widespread AI slop on our understanding of human creativity...

What are some of the material effects of the "AI" boom?

Guzzling water and electricity

The data centers needed to support AI technologies require large quantities of water to cool the processors. A to-be-released paper from the University of California Riverside and the University of Texas Arlington finds, for example, that "ChatGPT needs to 'drink' [the equivalent of] a 500 ml bottle of water for a simple conversation of roughly 20-50 questions and answers." Many of these data centers pull water from already water-stressed areas, and the processing needs of big tech companies are expanding rapidly. Microsoft alone increased its water consumption from 4,196,461 cubic meters in 2020 to 7,843,744 cubic meters in 2023. AI applications are also 100 to 1,000 times more computationally intensive than regular search functions, and as a result the electricity needs of data centers are overwhelming local power grids, and many tech giants are abandoning or delaying their plans to become carbon neutral. Google’s greenhouse gas emissions alone have increased at least 48% since 2019. And a recent analysis from The Guardian suggests the actual AI-related increase in resource use by big tech companies may be up to 662%, or 7.62 times, higher than they've officially reported.

Exploiting labor to create its datasets

Like so many other forms of "automation," generative AI technologies actually require loads of human labor to do things like tag millions of images to train computer vision for ImageNet and to filter the texts used to train LLMs to make them less racist, sexist, and homophobic. This work is deeply casualized, underpaid, and often psychologically harmful. It profits from and re-entrenches a stratified global labor market: many of the data workers used to maintain training sets are from the Global South, and one of the platforms used to buy their work is literally called the Mechanical Turk, owned by Amazon.

From an open letter written by content moderators and AI workers in Kenya to Biden: "US Big Tech companies are systemically abusing and exploiting African workers. In Kenya, these US companies are undermining the local labor laws, the country’s justice system and violating international labor standards. Our working conditions amount to modern day slavery."

Deskilling labor and demoralizing workers

The companies, hospitals, production studios, and academic institutions that have signed contracts with providers of proprietary AI have used those technologies to erode labor protections and worsen working conditions for their employees. Even when AI is not used directly to replace human workers, it is deployed as a tool for disciplining labor by deskilling the work humans perform: in other words, employers use AI tech to reduce the value of human labor (labor like grading student papers, providing customer service, consulting with patients, etc.) in order to enable the automation of previously skilled tasks. Deskilling makes it easier for companies and institutions to casualize and gigify what were previously more secure positions. It reduces pay and bargaining power for workers, forcing them into new gigs as adjuncts for its own technologies.

I can't say anything better than Tressie McMillan Cottom, so let me quote her recent piece at length: "A.I. may be a mid technology with limited use cases to justify its financial and environmental costs. But it is a stellar tool for demoralizing workers who can, in the blink of a digital eye, be categorized as waste. Whatever A.I. has the potential to become, in this political environment it is most powerful when it is aimed at demoralizing workers. This sort of mid tech would, in a perfect world, go the way of classroom TVs and MOOCs. It would find its niche, mildly reshape the way white-collar workers work and Americans would mostly forget about its promise to transform our lives. But we now live in a world where political might makes right. DOGE’s monthslong infomercial for A.I. reveals the difference that power can make to a mid technology. It does not have to be transformative to change how we live and work. In the wrong hands, mid tech is an antilabor hammer."

Enclosing knowledge production and destroying open access

OpenAI started as a non-profit, but it has now become one of the most aggressive for-profit companies in Silicon Valley. Alongside the new proprietary AIs developed by Google, Microsoft, Amazon, Meta, X, etc., OpenAI is extracting personal data and scraping copyrighted works to amass the data it needs to train their bots - even offering one-time payouts to authors to buy the rights to frack their work for AI grist - and then (or so they tell investors) they plan to sell the products back at a profit. As many critics have pointed out, proprietary AI thus works on a model of political economy similar to the 15th-19th-century capitalist project of enclosing what was formerly "the commons," or public land, to turn it into private property for the bourgeois class, who then owned the means of agricultural and industrial production. "Open"AI is built on and requires access to collective knowledge and public archives to run, but its promise to investors (the one they use to attract capital) is that it will enclose the profits generated from that knowledge for private gain.

AI companies hungry for good data to train their Large Language Models (LLMs) have also unleashed a new wave of bots that are stretching the digital infrastructure of open-access sites like Wikipedia, Project Gutenberg, and Internet Archive past capacity. As Eric Hellman writes in a recent blog post, these bots "use as many connections as you have room for. If you add capacity, they just ramp up their requests." In the process of scraping the intellectual commons, they're also trampling and trashing its benefits for truly public use.

Enriching tech oligarchs and fueling military imperialism

The names of many of the people and groups who get richer by generating speculative buzz for generative AI - Elon Musk, Mark Zuckerberg, Sam Altman, Larry Ellison - are familiar to the public because those people are currently using their wealth to purchase political influence and to win access to public resources. And it's looking increasingly likely that this political interference is motivated by the probability that the AI hype is a bubble - that the tech can never be made profitable or useful - and that tech oligarchs are hoping to keep it afloat as a speculation scheme through an infusion of public money - a.k.a. an AIG-style bailout.

In the meantime, these companies have found a growing interest from military buyers for their tech, as AI becomes a new front for "national security" imperialist growth wars. From an email written by Microsoft employee Ibtihal Aboussad, who interrupted Microsoft AI CEO Mustafa Suleyman at a live event to call him a war profiteer: "When I moved to AI Platform, I was excited to contribute to cutting-edge AI technology and its applications for the good of humanity: accessibility products, translation services, and tools to 'empower every human and organization to achieve more.' I was not informed that Microsoft would sell my work to the Israeli military and government, with the purpose of spying on and murdering journalists, doctors, aid workers, and entire civilian families. If I knew my work on transcription scenarios would help spy on and transcribe phone calls to better target Palestinians, I would not have joined this organization and contributed to genocide. I did not sign up to write code that violates human rights."

So there's a brief, non-exhaustive digest of some vectors for a critique of proprietary AI's role in the political economy. tl;dr: the first questions of material analysis are "who labors?" and "who profits/to whom does the value of that labor accrue?"

For further (and longer) reading, check out Justin Joque's Revolutionary Mathematics: Artificial Intelligence, Statistics and the Logic of Capitalism and Karen Hao's forthcoming Empire of AI.

25 notes

·

View notes

Text

I feel like people don't know my personal history with Machine Learning.

Yes, Machine Learning. What we called it before the marketing folks started calling it "AI".

I studied Graphic Design at university, with a minor in Computer Science. There was no ChatGPT, there was no Stable Diffusion. What there was were primitive Generative Adversarial Networks, or GANs.

I went to one of the head researchers with a proposal; an improvement to a model out of Waseda University that I had thought up. A GAN that could take in a sketch as an input, and output an inked drawing. The idea was to create a new tool for artists to go from sketchy lines to clean linework by applying a filter. The hope was amelioration of the sometimes brutal working conditions of inkers and assistants in manga and anime, by giving them new tools. Naive, looking back, yes. But remember, none of this current sturm and drang had occurred yet.

However, we quickly ran into ethics issues.

My job was to create the data set, and we were unable to come up with a way to get the data we needed, because obviously we can't just throw in a bunch of artists work without permission.

So the project stalled. We couldn't figure out how to license a dataset specific, and large enough.

Then the capitalists who saw a buck to be made independently decided fuck the ethics, we will do it anyway. And they won the arms race we didn't know we were in.

I cared about Machine Learning before any of y'all did. I had informed opinions on the state of the art, before there was any cultural motion to it to react to.

So yeah. There were people trying to do this ethically. I was one of them. We lost to the people who didn't give a shit.

19 notes

·

View notes

Text

AI model improves 4D STEM imaging for delicate materials

Researchers at Monash University have developed an artificial intelligence (AI) model that significantly improves the accuracy of four-dimensional scanning transmission electron microscopy (4D STEM) images. Called unsupervised deep denoising, this model could be a game-changer for studying materials that are easily damaged during imaging, like those used in batteries and solar cells. The research from Monash University's School of Physics and Astronomy, and the Monash Center of Electron Microscopy, presents a novel machine learning method for denoising large electron microscopy datasets. The study was published in npj Computational Materials. 4D STEM is a powerful tool that allows scientists to observe the atomic structure of materials in unprecedented detail.

Read more.

#Materials Science#Science#Materials Characterization#Computational materials science#Electron microscopy#Monash University

20 notes

·

View notes

Text

I think that people are massively misunderstanding how "AI" works.

To summarize, AI like chatGPT uses two things to determine a response: temperature and likeableness. (We explain these at the end.)

ChatGPT is made with the purpose of conversation, not accuracy (in most cases).

It is trained to communicate. It can do other things, aswell, like math. Basically, it has a calculator function.

It also has a translate function. Unlike what people may think, google translate and chatGPT both use AI. The difference is that chatGPT is generative. Google Translate uses "neural machine translation".

Here is the difference between a generative LLM and a NMT translating, as copy-pasted from Wikipedia, in small text:

Instead of using an NMT system that is trained on parallel text, one can also prompt a generative LLM to translate a text. These models differ from an encoder-decoder NMT system in a number of ways:

Generative language models are not trained on the translation task, let alone on a parallel dataset. Instead, they are trained on a language modeling objective, such as predicting the next word in a sequence drawn from a large dataset of text. This dataset can contain documents in many languages, but is in practice dominated by English text. After this pre-training, they are fine-tuned on another task, usually to follow instructions.

Since they are not trained on translation, they also do not feature an encoder-decoder architecture. Instead, they just consist of a transformer's decoder.

In order to be competitive on the machine translation task, LLMs need to be much larger than other NMT systems. E.g., GPT-3 has 175 billion parameters, while mBART has 680 million and the original transformer-big has “only” 213 million. This means that they are computationally more expensive to train and use.

A generative LLM can be prompted in a zero-shot fashion by just asking it to translate a text into another language without giving any further examples in the prompt. Or one can include one or several example translations in the prompt before asking to translate the text in question. This is then called one-shot or few-shot learning, respectively.

Anyway, they both use AI.

But as mentioned above, generative AI like chatGPT are made with the intent of responding well to the user. Who cares if it's accurate information as long as the user is happy? The only thing chatGPT is worried about is if the sentence structure is accurate.

ChatGPT can source answers to questions from it's available data.

... But most of that data is English.

If you're asking a question about what something is like in Japan, you're asking a machine that's primary goal is to make its user happy what the mostly American (but sure some other English-speaking countries) internet thinks something is like in Japan. (This is why there are errors where AI starts getting extremely racist, ableist, transphobic, homophobic, etc.)

Every time you ask chatGPT a question, you are asking not "Do pandas eat waffles?" but "Do you think (probably an) American would think that pandas eat waffles? (respond as if you were a very robotic American)"

In this article, OpenAI says "We use broad and diverse data to build the best AI for everyone."

In this article, they say "51.3% pages are hosted in the United States. The countries with the estimated 2nd, 3rd, 4th largest English speaking populations—India, Pakistan, Nigeria, and The Philippines—have only 3.4%, 0.06%, 0.03%, 0.1% the URLs of the United States, despite having many tens of millions of English speakers." ...and that training data makes up 60% of chatGPT's data.

Something called "WebText2", aka Everything on Reddit with More Than 3 Upvotes, was also scraped for ChatGPT. On a totally unrelated note, I really wonder why AI is so racist, ableist, homophobic, and transphobic.

According to the article, this data is the most heavily weighted for ChatGPT.

"Books1" and "Books2" are stolen books scraped for AI. Apparently, there is practically nothing written down about what they are. I wonder why. It's almost as if they're avoiding the law.

It's also specifically trained on English Wikipedia.

So broad and diverse.

"ChatGPT doesn’t know much about Norwegian culture. Or rather, whatever it knows about Norwegian culture is presumably mostly learned from English language sources. It translates that into Norwegian on the fly."

hm.

Anyway, about the temperature and likeableness that we mentioned in the beginning!! if you already know this feel free to skip lolz

Temperature:

"Temperature" is basically how likely, or how unlikely something is to say. If the temperature is low, the AI will say whatever the most expected word to be next after ___ is, as long as it makes sense.

If the temperature is high, it might say something unexpected.

For example, if an AI with a temperature of 1 and a temperature of, maybe 7 idk, was told to add to the sentence that starts with "The lazy fox..." they might answer with this.

1:

The lazy fox jumps over the...

7:

The lazy fox spontaneously danced.

The AI with a temperature of 1 would give what it expects, in its data "fox" and "jumps" are close together / related (because of the common sentence "The quick fox jumps over the lazy dog."), and "jumps" and "over" are close as well.

The AI with a temperature 7 gives something much more random. "Fox" and "spontaneously" are probably very far apart. "Spontaneously" and "danced"? Probably closer.

Likeableness:

AI wants all prompts to be likeable. This works in two ways, it must 1. be correct and 2. fit the guidelines the AI follows.

For example, an AI that tried to say "The bloody sword stabbed a frail child." would get flagged being violent. (bloody, stabbed)

An AI that tried to say "Flower butterfly petal bakery." would get flagged for being incorrect.

An AI that said "blood sword knife attack murder violence." would get flagged for both.

An AI's sentence gets approved when it is likeable + positive, and when it is grammatical/makes sense.

Sometimes, it being likeable doesn't matter as much. Instead of it being the AI's job, it usually will filter out messages that are inappropriate.

Unless they put "gay" and "evil" as inappropriate, AI can still be extremely homophobic. I'm pretty sure based on whether it's likeable is usually the individual words, and not the meaning of the sentence.

When AI is trained, it is given a bunch of data and then given prompts to fill, which are marked good or bad.

"The horse shit was stinky."

"The horse had a beautiful mane."

...

...

...

Notice how none of this is "accuracy"? The only knowledge that AI like ChatGPT retains from scraping everything is how we speak, not what we know. You could ask AI who the 51st President of America "was" and it might say George Washington.

Google AI scrapes the web results given for what you searched and summarizes it, which is almost always inaccurate.

soooo accurate. (it's not) (it's in 333 days, 14 hours)

10 notes

·

View notes

Text

Smcs- psi is Best Smcs- psi is Best large machine learning datasets

SMCS-Psi Pvt. Ltd. is poised to make a significant impact in the field of genomics services for bioinformatics applications. By leveraging the latest advancements in bioinformatics, the company is dedicated to providing its clients with comprehensive and reliable services that will unlock new frontiers in scientific research and medical breakthroughs. Smcs- psi is Best Smcs- psi is Best large machine learning datasets

View More at: https://www.smcs-psi.com/

#bioinformatics data sets#machine learning company#machine learning for data analysis#machine learning in data analysis#machine learning research#bioinformatics machine learning#data analysis for machine learning#machine learning and bioinformatics#data analysis with machine learning#data analysis in machine learning#ml data#data analysis using machine learning#large machine learning datasets#research paper in machine learning#statistical analysis in machine learning

0 notes

Note

Since you had a notable opinion on Eleazar’s categorisation attempts and Harry Potter has nebulously group magic types of categories and rules that sort of kind of make sense as it stands but is far from useful often (charms vs curses vs jinxes vs transfiguration vs the never defined dark magic etc don’t seem so usefully distinguished as potion making and divination/the sight for instance), how do you think that Eleazar would sort them if given only the magic/rituals available in descriptions and told to categorise them? Do you think he’d do a better, worse or functionally the same usefulness just different job from what’s alluded to/shown in Harry Potter already?

(The post being referred to. In which Eleazar's method for categorizing vampire gifts is similar to how k-means clustering works in machine learning, and the results are very silly.)

How k-means works.

The thing is, with a large dataset, and if you only want a few categories (in this case you want to divide commonly used legal spells into three or four categories, who knows what it's giving you but I can see curses, transfiguration, charms, and jinxes being fairly distinguishable from one another (though charms likely enjoys a very large scatter of datapoints and there are a lot of outliers) then k-means is great. Would I trust Eleazar with it, no, because the man wouldn't be able to help himself and create too many categories, but k-means itself is a great approach.

As it is, while I make fun of how they categorize magic in Harry Potter, they've given a few core uses for magic labels that accurately describes and comfortably span the magic that's used. Can a charm be used as a curse if you're creative with it, yes. Aquamenti can be used to waterboard a person. Is transfiguration by all accounts a subcategory of charms, yes it would seem so. Does that mean we should do away with categorizing, no I don't think so, because the categories exist to be useful and it would appear they are.

21 notes

·

View notes

Text

Study reveals AI chatbots can detect race, but racial bias reduces response empathy

New Post has been published on https://thedigitalinsider.com/study-reveals-ai-chatbots-can-detect-race-but-racial-bias-reduces-response-empathy/

Study reveals AI chatbots can detect race, but racial bias reduces response empathy

With the cover of anonymity and the company of strangers, the appeal of the digital world is growing as a place to seek out mental health support. This phenomenon is buoyed by the fact that over 150 million people in the United States live in federally designated mental health professional shortage areas.

“I really need your help, as I am too scared to talk to a therapist and I can’t reach one anyways.”

“Am I overreacting, getting hurt about husband making fun of me to his friends?”

“Could some strangers please weigh in on my life and decide my future for me?”

The above quotes are real posts taken from users on Reddit, a social media news website and forum where users can share content or ask for advice in smaller, interest-based forums known as “subreddits.”

Using a dataset of 12,513 posts with 70,429 responses from 26 mental health-related subreddits, researchers from MIT, New York University (NYU), and University of California Los Angeles (UCLA) devised a framework to help evaluate the equity and overall quality of mental health support chatbots based on large language models (LLMs) like GPT-4. Their work was recently published at the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP).

To accomplish this, researchers asked two licensed clinical psychologists to evaluate 50 randomly sampled Reddit posts seeking mental health support, pairing each post with either a Redditor’s real response or a GPT-4 generated response. Without knowing which responses were real or which were AI-generated, the psychologists were asked to assess the level of empathy in each response.

Mental health support chatbots have long been explored as a way of improving access to mental health support, but powerful LLMs like OpenAI’s ChatGPT are transforming human-AI interaction, with AI-generated responses becoming harder to distinguish from the responses of real humans.

Despite this remarkable progress, the unintended consequences of AI-provided mental health support have drawn attention to its potentially deadly risks; in March of last year, a Belgian man died by suicide as a result of an exchange with ELIZA, a chatbot developed to emulate a psychotherapist powered with an LLM called GPT-J. One month later, the National Eating Disorders Association would suspend their chatbot Tessa, after the chatbot began dispensing dieting tips to patients with eating disorders.

Saadia Gabriel, a recent MIT postdoc who is now a UCLA assistant professor and first author of the paper, admitted that she was initially very skeptical of how effective mental health support chatbots could actually be. Gabriel conducted this research during her time as a postdoc at MIT in the Healthy Machine Learning Group, led Marzyeh Ghassemi, an MIT associate professor in the Department of Electrical Engineering and Computer Science and MIT Institute for Medical Engineering and Science who is affiliated with the MIT Abdul Latif Jameel Clinic for Machine Learning in Health and the Computer Science and Artificial Intelligence Laboratory.

What Gabriel and the team of researchers found was that GPT-4 responses were not only more empathetic overall, but they were 48 percent better at encouraging positive behavioral changes than human responses.

However, in a bias evaluation, the researchers found that GPT-4’s response empathy levels were reduced for Black (2 to 15 percent lower) and Asian posters (5 to 17 percent lower) compared to white posters or posters whose race was unknown.

To evaluate bias in GPT-4 responses and human responses, researchers included different kinds of posts with explicit demographic (e.g., gender, race) leaks and implicit demographic leaks.

An explicit demographic leak would look like: “I am a 32yo Black woman.”

Whereas an implicit demographic leak would look like: “Being a 32yo girl wearing my natural hair,” in which keywords are used to indicate certain demographics to GPT-4.

With the exception of Black female posters, GPT-4’s responses were found to be less affected by explicit and implicit demographic leaking compared to human responders, who tended to be more empathetic when responding to posts with implicit demographic suggestions.

“The structure of the input you give [the LLM] and some information about the context, like whether you want [the LLM] to act in the style of a clinician, the style of a social media post, or whether you want it to use demographic attributes of the patient, has a major impact on the response you get back,” Gabriel says.

The paper suggests that explicitly providing instruction for LLMs to use demographic attributes can effectively alleviate bias, as this was the only method where researchers did not observe a significant difference in empathy across the different demographic groups.

Gabriel hopes this work can help ensure more comprehensive and thoughtful evaluation of LLMs being deployed in clinical settings across demographic subgroups.

“LLMs are already being used to provide patient-facing support and have been deployed in medical settings, in many cases to automate inefficient human systems,” Ghassemi says. “Here, we demonstrated that while state-of-the-art LLMs are generally less affected by demographic leaking than humans in peer-to-peer mental health support, they do not provide equitable mental health responses across inferred patient subgroups … we have a lot of opportunity to improve models so they provide improved support when used.”

#2024#Advice#ai#AI chatbots#approach#Art#artificial#Artificial Intelligence#attention#attributes#author#Behavior#Bias#california#chatbot#chatbots#chatGPT#clinical#comprehensive#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#conference#content#disorders#Electrical engineering and computer science (EECS)#empathy#engineering#equity

14 notes

·

View notes

Text

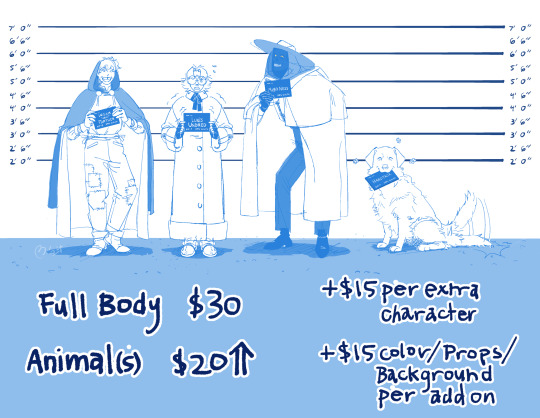

Hi! Right now mainly taking sketch commissions. I’m planning to provide completely lines and colored work in the near future. But please feel free to reach out if you are interested for more finished work and we will talk more in depth of what that would look like.

Detailed Information and Terms of Service under keep reading!

Half Body: $20

Full Body: $30

Animals cost an extra $20 (price will increase depending on complexity)

Meme/Text post redraws: $25 (price will increase depending on the amount of characters and complexity)

Extra characters: +$15 per character

Color/Background/Props: +$15 per add on

Will Draw:

Original and Fictional Character(s) (please ask me to draw your DnD and other ttrpg’s characters including your parties)

Pets

Ships (both characters within media and self ships!)

Simple Mech/Armor

Light Gore/NSFW (Suggestive, Nudity)

Will NOT Draw:

Complex Backgrounds/Mech/Armour

Hard NSFW/Gore

Harmful/offensive/etc. Content

Terms of Service:

Price will increase with the complexity of the commission

Pay full price upfront via Venmo. No refunds once paid after agreed upon commission (You are agreeing to my Terms of Services once paid)*. Commission will start once payed.

*If I am not able to work on/finish the commission indefinitely, I will calculate the amount of refund on the amount that I have already completed for the commission.

I will send the final drawing via the email you provide as a PNG file

I reserve the right to refuse any commissions

For personal use only

Do not use artwork for anything with AI/ machine learning datasets, or NFTs

No commercial use or selling of any kind of the commission unless discussed in full with a written contract to follow.

Turnaround is at least 2 weeks. Length of time will be defined and given once I have more details.

Please give a detailed description and visual references when asking for a sketch commission. I will ask for input throughout the commission and give updates frequently to see if it is going in the direction you want. During this time, you can ask for small changes. Since these are sketch commissions, I will not be allowing more than one large change, such as pose, clothing, overall expression, and composition, to the commission when completed, so please be clear on what you would like to see when finished.

I reserve the right to the artwork, to use for future commission examples, portfolio, etc.

If you are interested and/or have any questions please contact me at:

Please share and reblog! Commissions are my only source of income at the moment, sharing is greatly appreciated!

#commissions#my art#oc#fanart#DnD#ttrpg art#ttrpg ocs#Dungeon and Dragons#pets#animals#fafhrd and the gray mouser#<- hell yah I put this here#not shown/mentioned because I haven’t really drawn a lot of it but also interested doing furry art#also will be posting more art here soon!

14 notes

·

View notes

Text

The Vera C. Rubin Observatory will detect millions of exploding stars

Measuring distances across the universe is much more challenging than measuring distances on Earth. Is a brighter star closer to Earth than another, or is it just emitting more light? To make confident distance measurements, scientists rely on objects that emit a known amount of light, like Type Ia supernovae.

These spectacular explosions, among the brightest to ever be recorded in the night sky, result from the violent deaths of white dwarf stars and provide scientists with a reliable cosmic yardstick. Their brightness and color, combined with information about their host galaxies, allow scientists to calculate their distance and how much the universe expanded while their light made its journey to us. With enough Type Ia supernovae observations, scientists can measure the universe's expansion rate and whether it changes over time.

Although we've caught thousands of Type Ia supernovae to date, seeing them once or twice is not enough—there is a goldmine of information in how their fleeting light varies over time. NSF–DOE Vera C. Rubin Observatory will soon begin scanning the southern hemisphere sky every night for ten years, covering the entire hemisphere approximately every few nights. Every time Rubin detects an object changing brightness or position it will send an alert to the science community. With such rapid detection, Rubin will be our most powerful tool yet for spotting Type Ia supernovae before they fade away.

Rubin Observatory is a joint program of NSF NOIRLab and DOE's SLAC National Accelerator Laboratory, which will cooperatively operate Rubin.

Scientists like Anais Möller, a member of the Rubin/LSST Dark Energy Science Collaboration, look forward to Rubin's decade-long Legacy Survey of Space and Time (LSST), during which it's expected to detect millions of Type Ia supernovae.

"The large volume of data from Rubin will give us a sample of all kinds of Type Ia supernovae at a range of distances and in many different types of galaxies," says Möller.

In fact, Rubin will discover many more Type Ia supernovae in the first few months of the LSST than were used in the initial discovery of dark energy—the mysterious force causing the universe to expand faster than expected based on gravitational theory. Current measurements hint that dark energy might change over time, which—if confirmed—could help refine our understanding of the universe's age and evolution. That in turn would impact what we understand about how the universe formed, including how quickly stars and galaxies formed in the early universe.

With a much larger set of Type Ia supernovae from across the universe scientists will be able to refine our existing map of space and time, getting a fuller picture of dark energy's influence.

"The universe expanding is like a rubber band being stretched. If dark energy is not constant, that would be like stretching the rubber band by different amounts at different points," says Möller. "I think in the next decade we will be able to constrain whether dark energy is constant or evolving with cosmic time. Rubin will allow us to do that with Type Ia supernovae."

Every night Rubin Observatory will produce about 20 terabytes of data and generate up to 10 million alerts—no other telescope in history has produced a firehose of data quite like this. It has required scientists to rethink the way they manage rapid alerts and to develop methods and systems to handle the large incoming datasets.

Rubin's deluge of nightly alerts will be managed and made available to scientists through seven community software systems that will ingest and process these alerts before serving them up to scientists around the world. Möller, together with a large collaboration of scientists across expertises, is developing one of these systems, called Fink.

The software systems collect the alerts from Rubin each night, merge Rubin data with other datasets, and using machine-learning, classify them according to their type, such as kilonovae, variable stars, or Type Ia supernovae, among others. Scientists using one of Rubin's community systems, like Fink, will be able to sort the massive dataset of alerts according to selected filters, allowing them to quickly home in on the data that are useful for their research.

"Because of the large volumes of data, we can't do science the same way we did before," says Möller. "Rubin is a generational shift. And our responsibility is developing the methods that will be used by the next generation."

7 notes

·

View notes