#openshift 4

Cloud-Native Development in the USA: A Comprehensive Guide

Introduction

Cloud-native development is transforming how businesses in the USA build, deploy, and scale applications. By leveraging cloud infrastructure, microservices, containers, and DevOps, organizations can enhance agility, improve scalability, and drive innovation.

As cloud computing adoption grows, cloud-native development has become a crucial strategy for enterprises looking to optimize performance and reduce infrastructure costs. In this guide, we’ll explore the fundamentals, benefits, key technologies, best practices, top service providers, industry impact, and future trends of cloud-native development in the USA.

What is Cloud-Native Development?

Cloud-native development refers to designing, building, and deploying applications optimized for cloud environments. Unlike traditional monolithic applications, cloud-native solutions utilize a microservices architecture, containerization, and continuous integration/continuous deployment (CI/CD) pipelines for faster and more efficient software delivery.

Key Benefits of Cloud-Native Development

1. Scalability

Cloud-native applications can dynamically scale based on demand, ensuring optimal performance without unnecessary resource consumption.

2. Agility & Faster Deployment

By leveraging DevOps and CI/CD pipelines, cloud-native development accelerates application releases, reducing time-to-market.

3. Cost Efficiency

Organizations pay only for the cloud resources they use, which reduces the need for expensive on-premises infrastructure.

4. Resilience & High Availability

Cloud-native applications are designed for fault tolerance, ensuring minimal downtime and automatic recovery.

5. Improved Security

Built-in cloud security features, automated compliance checks, and container isolation enhance application security.

Key Technologies in Cloud-Native Development

1. Microservices Architecture

Microservices break applications into smaller, independent services that communicate via APIs, improving maintainability and scalability.

2. Containers & Kubernetes

Docker packages applications and their dependencies into portable containers, and Kubernetes orchestrates those containers, making deployment consistent across cloud environments.

3. Serverless Computing

Platforms like AWS Lambda, Azure Functions, and Google Cloud Functions run code in response to events, removing the need to provision and manage servers.
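To make the event-driven model concrete, here is a minimal Python sketch of a function in the shape AWS Lambda's Python runtime expects: a handler that receives an `event` and a `context`. It assumes the function sits behind Amazon API Gateway with proxy integration, so the request body arrives as a JSON string and the return value follows the proxy response shape; the handler name and greeting logic are illustrative only.

```python
import json


def lambda_handler(event, context):
    """Minimal Lambda-style handler returning an API Gateway proxy response.

    Assumes API Gateway proxy integration, so `event["body"]` (if present)
    is a JSON string. `context` is supplied by the platform and unused here.
    """
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: in production the platform builds event and context.
    print(lambda_handler({"body": json.dumps({"name": "cloud"})}, None))
```

Azure Functions and Google Cloud Functions use different handler signatures, so this shape is specific to Lambda.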

4. DevOps & CI/CD

Automated build, test, and deployment processes streamline software development, ensuring rapid and reliable releases.

5. API-First Development

APIs enable seamless integration between services, facilitating interoperability across cloud environments.

Best Practices for Cloud-Native Development

1. Adopt a DevOps Culture

Encourage collaboration between development and operations teams to ensure efficient workflows.

2. Implement Infrastructure as Code (IaC)

Tools like Terraform and AWS CloudFormation help automate infrastructure provisioning and management.
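As a rough illustration of infrastructure defined in code, the Python sketch below builds a minimal AWS CloudFormation template declaring one versioned S3 bucket and prints it as JSON. The logical ID `ArtifactBucket` is a hypothetical name; the printed template could be saved to a file and deployed with a command such as `aws cloudformation deploy --template-file template.json --stack-name my-stack` (stack name assumed).

```python
import json


def s3_bucket_template(logical_id: str, versioning: bool = True) -> dict:
    """Build a minimal CloudFormation template that declares one S3 bucket."""
    properties = {}
    if versioning:
        # Keep old object versions so accidental overwrites can be recovered.
        properties["VersioningConfiguration"] = {"Status": "Enabled"}
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Example bucket managed as code",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": properties,
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(s3_bucket_template("ArtifactBucket"), indent=2))
```

Terraform expresses the same idea in HCL; in either tool the point is that the template lives in version control and is reviewed like any other code.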

3. Use Observability & Monitoring

Employ logging, monitoring, and tracing solutions like Prometheus, Grafana, and ELK Stack to gain insights into application performance.
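Prometheus collects metrics by scraping an HTTP endpoint that serves plain text in its exposition format. The Python sketch below, a simplified illustration rather than a client library, renders a labeled counter in that format; the metric name and label values are made up. In a real service the official `prometheus_client` package would handle this, including escaping of HELP text, which the sketch skips.

```python
def escape_label_value(value: str) -> str:
    """Escape backslashes, double quotes, and newlines in a label value."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counter(name: str, help_text: str, samples: dict) -> str:
    """Render one counter metric in the Prometheus text exposition format.

    `samples` maps a tuple of (label_name, label_value) pairs to a value.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in sorted(samples.items()):
        if labels:
            pairs = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in labels)
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


print(render_counter(
    "http_requests_total",
    "Total HTTP requests handled.",
    {(("code", "200"), ("method", "get")): 1027,
     (("code", "500"), ("method", "get")): 3},
))
```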

4. Optimize for Security

Embed security best practices in the development lifecycle, using tools like Snyk, Aqua Security, and Prisma Cloud.

5. Focus on Automation

Automate testing, deployments, and scaling to improve efficiency and reduce human error.

Top Cloud-Native Development Service Providers in the USA

1. AWS Cloud-Native Services

Amazon Web Services offers a comprehensive suite of cloud-native tools, including AWS Lambda, ECS, EKS, and API Gateway.

2. Microsoft Azure

Azure’s cloud-native services include Azure Kubernetes Service (AKS), Azure Functions, and Azure DevOps.

3. Google Cloud Platform (GCP)

GCP provides Kubernetes Engine (GKE), Cloud Run, and Anthos for cloud-native development.

4. IBM Cloud & Red Hat OpenShift

IBM Cloud and OpenShift focus on hybrid cloud-native solutions for enterprises.

5. Accenture Cloud-First

Accenture helps businesses adopt cloud-native strategies with AI-driven automation.

6. ThoughtWorks

ThoughtWorks specializes in agile cloud-native transformation and DevOps consulting.

Industry Impact of Cloud-Native Development in the USA

1. Financial Services

Banks and fintech companies use cloud-native applications to enhance security, compliance, and real-time data processing.

2. Healthcare

Cloud-native solutions improve patient data accessibility, enable telemedicine, and support AI-driven diagnostics.

3. E-commerce & Retail

Retailers leverage cloud-native technologies to optimize supply chain management and enhance customer experiences.

4. Media & Entertainment

Streaming services utilize cloud-native development for scalable content delivery and personalization.

Future Trends in Cloud-Native Development

1. Multi-Cloud & Hybrid Cloud Adoption

Businesses will increasingly adopt multi-cloud and hybrid cloud strategies for flexibility and risk mitigation.

2. AI & Machine Learning Integration

AI-driven automation will enhance DevOps workflows and predictive analytics in cloud-native applications.

3. Edge Computing

Processing data closer to the source will improve performance and reduce latency for cloud-native applications.

4. Enhanced Security Measures

Zero-trust security models and AI-driven threat detection will become integral to cloud-native architectures.

Conclusion

Cloud-native development is reshaping how businesses in the USA innovate, scale, and optimize operations. By leveraging microservices, containers, DevOps, and automation, organizations can achieve agility, cost-efficiency, and resilience. As the cloud-native ecosystem continues to evolve, staying ahead of trends and adopting best practices will be essential for businesses aiming to thrive in the digital era.

Top 5 Things You’ll Learn in DO480 (And Why They Matter)

If you're working with Red Hat OpenShift, the DO480 – Red Hat Certified Specialist in Multicluster Management with Red Hat OpenShift course is a game-changer. At HawkStack, we’ve trained countless professionals on this course — and the outcomes speak for themselves.

Here are the top five skills you’ll pick up in DO480, and more importantly, why they matter in the real world.

1. 🌐 Multicluster Management with Open Cluster Management (OCM)

What You’ll Learn:

You’ll dive deep into Open Cluster Management to control multiple OpenShift clusters from a single interface. This includes deploying clusters, setting policies, and managing access centrally.

Why It Matters:

Most enterprises run multiple clusters — across regions or clouds. OCM saves time, reduces human error, and helps you stay compliant.

At HawkStack, we’ve seen teams go from struggling with manual tasks to managing 10+ clusters with ease after this module.

2. 🔐 Policy-Driven Governance and Security

What You’ll Learn:

You'll master how to define security, compliance, and configuration policies across all your clusters.

Why It Matters:

With increasing focus on data protection and industry regulations, being able to automate and enforce policies consistently is no longer optional.

At HawkStack, we teach this with real-world policy examples used in production environments.

3. 🚀 GitOps with Argo CD at Scale

What You’ll Learn:

You’ll implement GitOps workflows using Argo CD, allowing continuous delivery and configuration of apps across clusters from a Git repository.

Why It Matters:

GitOps ensures that your deployments are auditable, repeatable, and rollback-friendly. This is crucial for teams pushing changes multiple times a day.

Our HawkStack learners frequently highlight this as a game-changing skill in interviews and on the job.

4. 📦 Application Lifecycle Management in a Multicluster Setup

What You’ll Learn:

You’ll deploy and manage applications that span across clusters — and understand how to scale them reliably.

Why It Matters:

As businesses move towards hybrid or multi-cloud, being able to handle the full app lifecycle across clusters gives you a massive edge.

HawkStack’s labs are focused on hands-on practice with enterprise-grade apps, not just theory.

5. 📊 Observability and Troubleshooting Across Clusters

What You’ll Learn:

You’ll set up centralized monitoring, logging, and alerting — making it easy to detect issues and respond faster.

Why It Matters:

Downtime across clusters = money lost. Unified observability helps your team stay proactive instead of reactive.

At HawkStack, we simulate real-world incidents during training so you’re ready for anything.

Final Thoughts

Whether you're an OpenShift admin, SRE, or platform engineer, DO480 equips you with critical, enterprise-ready skills. It’s not just about passing an exam. It’s about solving real problems at scale.

And if you're serious about mastering OpenShift multicluster management, there’s no better place to start than HawkStack — where we blend hands-on labs, industry best practices, and certification coaching all in one.

Ready to Level Up?

🚀 Join our next DO480 batch at HawkStack and become a multicluster pro. Visit www.hawkstack.com

📘 Configure Log Forwarding to Azure Monitor in OpenShift – No Coding Needed

When you’re running apps in production on OpenShift, logs are your best friend. They help you understand what’s working, what’s failing, and how to fix issues fast. But going through logs manually on each pod or node? That’s a nightmare.

That’s where log forwarding to Azure Monitor comes in. With just a few configuration steps (no scripting or coding needed), you can send all your OpenShift logs directly to Azure Monitor, where they’re easier to manage, search, and store long-term.

🧠 What Is Log Forwarding?

Log forwarding means automatically sending logs (from your applications and the cluster itself) to a centralized system. In this case, we’re sending OpenShift logs to Azure Monitor, Microsoft’s monitoring platform.

💡 Why Use Azure Monitor?

One dashboard for everything – apps, infra, and network

Smart alerting when something goes wrong

Better visibility for developers and ops teams

Long-term storage without cluttering your OpenShift cluster

Integrates well with tools like Power BI, Logic Apps, and Azure Sentinel

🔧 What You Need Before You Start

Here’s what to make sure is ready:

✅ OpenShift Cluster (version 4.x)

✅ Cluster Logging Operator installed (can be done from OperatorHub)

✅ Azure Log Analytics Workspace (in Azure Monitor)

✅ Access to the Azure Portal (to get workspace ID and key)

✅ OpenShift Web Console access (with admin privileges)

🪜 Steps to Set It Up (No Coding)

1️⃣ Install the Cluster Logging Operator

Log into your OpenShift Web Console

Go to Operators → OperatorHub

Search for "Red Hat OpenShift Logging" (the Cluster Logging Operator)

Click Install, then follow the prompts

It will install everything needed for log collection

2️⃣ Get Your Azure Monitor Details

In your Azure Portal:

Go to Azure Monitor → Logs

Click on your Log Analytics Workspace

Note down the Workspace ID and Primary Key (you’ll need both)

3️⃣ Create a Secret (Via Web Console)

Go to Workloads → Secrets in the openshift-logging project

Click Create → Key/Value Secret

Name it: azure-monitor-secret

Add two keys:

customer_id → your Workspace ID

shared_key → your Primary Key

Click Create
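Under the hood, the console stores each value base64-encoded in the Secret's `data` field. The Python sketch below builds the equivalent Secret manifest, mirroring the name, namespace, and keys from the steps above with placeholder values, in case you ever want to recreate it from a file (for example with `oc apply -f secret.json`) instead of the form. Keep in mind that base64 is an encoding, not encryption.

```python
import base64
import json


def key_value_secret(name: str, namespace: str, values: dict) -> dict:
    """Build an Opaque Secret manifest; Kubernetes expects `data` values base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {key: base64.b64encode(value.encode("utf-8")).decode("ascii")
                 for key, value in values.items()},
    }


if __name__ == "__main__":
    secret = key_value_secret(
        "azure-monitor-secret",
        "openshift-logging",
        # Placeholders: paste the real values from the Azure Portal; never commit them to Git.
        {"customer_id": "<workspace-id>", "shared_key": "<primary-key>"},
    )
    print(json.dumps(secret, indent=2))
```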

4️⃣ Set Up Log Forwarding

Go to Administration → Custom Resource Definitions (CRDs)

Search for: ClusterLogForwarder

Click Create instance

Use the form to define:

Output name: azure-monitor

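Type: azureMonitor

Customer ID: your Workspace ID, plus a log type such as OpenShiftLogs (Azure Monitor stores the records in a custom table named after the log type with a _CL suffix)

Reference the secret you just created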

Choose which logs to forward (application, infrastructure, audit)

There’s no code here – it’s a guided form in the console.
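For reference, or for teams that keep cluster configuration in Git, the sketch below shows roughly what such a forwarder resource looks like, built as a Python dictionary and printed as JSON. It assumes the Logging 5.x `logging.openshift.io/v1` API, where the `azureMonitor` output takes the workspace ID as `customerId` and a `logType` that becomes the custom table name (with a `_CL` suffix) in Log Analytics; the pipeline name and the `OpenShiftLogs` log type are hypothetical. Logging 6.x moved to a different API group and field layout, so check the documentation for your installed version before applying anything.

```python
import json


def azure_monitor_forwarder(customer_id: str, log_type: str, secret_name: str,
                            inputs=("application", "infrastructure")) -> dict:
    """Build a ClusterLogForwarder that sends the chosen log inputs to Azure Monitor.

    Field names assume the Logging 5.x logging.openshift.io/v1 API.
    """
    return {
        "apiVersion": "logging.openshift.io/v1",
        "kind": "ClusterLogForwarder",
        "metadata": {"name": "instance", "namespace": "openshift-logging"},
        "spec": {
            "outputs": [{
                "name": "azure-monitor",            # output name from the form
                "type": "azureMonitor",             # output type identifier
                "azureMonitor": {
                    "customerId": customer_id,      # Log Analytics workspace ID
                    "logType": log_type,            # table becomes <logType>_CL
                },
                "secret": {"name": secret_name},    # holds the shared key
            }],
            "pipelines": [{
                "name": "to-azure-monitor",         # hypothetical pipeline name
                "inputRefs": list(inputs),          # application / infrastructure / audit
                "outputRefs": ["azure-monitor"],
            }],
        },
    }


if __name__ == "__main__":
    clf = azure_monitor_forwarder("<workspace-id>", "OpenShiftLogs", "azure-monitor-secret")
    print(json.dumps(clf, indent=2))
```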

5️⃣ Check That Logs Are Reaching Azure

After a few minutes:

Go back to your Azure Monitor

Click on Logs under your workspace

Use the search bar to explore logs using queries like: OpenShiftLogs_CL

🔄 Ongoing Benefits

Once set up:

You rarely need to touch it again (except to update the secret if you rotate the workspace key)

Logs will flow automatically

You’ll have full visibility across all your apps and infrastructure

✅ Summary

You just connected OpenShift to Azure Monitor – without writing a single line of code.

📦 All your app and cluster logs are now centralized

📊 You can set up dashboards, alerts, and reports in Azure

💼 Great for compliance, audits, and performance tracking

For more information, follow Hawkstack Technologies.

Are there any comparable servers to the Dell PowerEdge R940xa in the market?

Several enterprise servers on the market offer comparable performance and features to the Dell PowerEdge R940xa for GPU-accelerated, compute-intensive workloads like AI/ML, HPC, and large-scale data analytics. Below are the top competitors, categorized by key capabilities:

1. HPE ProLiant DL380a Gen11

Key Specs

Processors: Dual 4th/5th Gen Intel Xeon Scalable (up to 64 cores) or AMD EPYC 9004 series (up to 128 cores).

Memory: Up to 8 TB DDR5 (32 DIMM slots).

GPU Support: Up to 4 double-wide GPUs (e.g., NVIDIA H100, A100) via PCIe Gen5 slots.

Storage: 20 EDSFF drives or 8x 2.5" NVMe/SATA/SAS bays.

Management: HPE iLO 6 with Silicon Root of Trust for security.

Use Case: Ideal for hybrid cloud, AI inference, and virtualization. Although it is a 2-socket server, its GPU density and memory bandwidth rival the R940xa’s 4-socket design in certain workloads.

2. Supermicro AS-4125GS-TNRT (Dual AMD EPYC 9004)

Key Specs

Processors: Dual AMD EPYC 9004 series (up to 128 cores total).

Memory: Up to 6 TB DDR5-4800 (24 DIMM slots).

GPU Support: Up to 8 double-wide GPUs (e.g., NVIDIA H100, AMD MI210) with PCIe Gen5 connectivity.

Storage: 24x 2.5" NVMe/SATA/SAS drives (4 dedicated NVMe).

Flexibility: Supports mixed GPU configurations (e.g., NVIDIA + AMD) for workload-specific optimization.

Use Case: Dominates in AI training, HPC, and edge computing. Its 8-GPU capacity outperforms the R940xa’s 4-GPU limit for parallel processing.

3. Lenovo ThinkSystem SR950 V3

Key Specs

Processors: Up to 8 Intel Xeon Scalable processors (28 cores each).

Memory: 12 TB DDR4 (96 DIMM slots) with support for persistent memory.

GPU Support: Up to 4 double-wide GPUs (e.g., NVIDIA A100) via PCIe Gen4 slots.

Storage: 24x 2.5" drives or 12x NVMe U.2 drives.

Performance: Holds multiple SPECpower and SAP HANA benchmarks, making it ideal for mission-critical databases.

Use Case: Targets ERP, SAP HANA, and large-scale transactional workloads. While its GPU support matches the R940xa, its 8-socket design excels in multi-threaded applications.

4. IBM Power Systems AC922 (Refurbished)

Key Specs

Processors: Dual IBM Power9 (32 or 40 cores) with NVLink 2.0 for GPU-CPU coherence.

Memory: Up to 2 TB DDR4.

GPU Support: Up to 4 NVIDIA Tesla V100 with NVLink for AI training.

Storage: 2x 2.5" SATA/SAS drives.

Ecosystem: Optimized for Red Hat OpenShift and AI frameworks like TensorFlow.

Use Case: Legacy HPC and AI workloads. Refurbished units offer cost savings but may lack modern GPU compatibility (e.g., H100).

5. Cisco UCS C480 M6

Key Specs

Processors: Dual 4th Gen Intel Xeon Scalable (up to 60 cores).

Memory: Up to 6 TB DDR5 (24 DIMM slots).

GPU Support: Up to 6 double-wide GPUs (e.g., NVIDIA A100, L40) via PCIe Gen5 slots.

Storage: 24x 2.5" drives or 12x NVMe U.2 drives.

Networking: Built-in Cisco UCS Manager for unified infrastructure management.

Use Case: Balances GPU density and storage scalability for edge AI and distributed data solutions.

6. Huawei TaiShan 200 2280 (ARM-Based)

Key Specs

Processors: Dual Huawei Kunpeng 920 (ARM-based, up to 64 cores each).

Memory: Up to 3 TB DDR4 (24 DIMM slots).

GPU Support: Up to 4 PCIe Gen4 GPUs (e.g., NVIDIA T4).

Storage: 24x 2.5" drives for software-defined storage.

Use Case: Optimized for cloud-native and ARM-compatible workloads, offering energy efficiency but limited GPU performance compared to x86 alternatives.

Key Considerations for Comparison

Multi-Socket Performance

The R940xa’s 4-socket design excels in CPU-bound workloads, but competitors like the Supermicro AS-4125GS-TNRT (dual EPYC 9004) and HPE DL380a Gen11 (dual Xeon/EPYC) often match or exceed its GPU performance with higher core density and PCIe Gen5 bandwidth.

GPU Flexibility

Supermicro’s AS-4125GS-TNRT supports up to 8 GPUs, while the R940xa is limited to 4. This makes Supermicro a better fit for large-scale AI training clusters.

Memory and Storage

The Lenovo SR950 V3 (12 TB) and HPE DL380a Gen11 (8 TB) exceed the R940xa’s 6 TB memory ceiling, which matters for in-memory databases like SAP HANA.
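A quick way to sanity-check memory ceilings: maximum capacity is the number of DIMM slots multiplied by the largest supported module, so dividing a stated ceiling by its slot count should land on a standard DIMM size such as 128 GB or 256 GB. The Python sketch below applies that arithmetic to figures quoted above (taking 1 TB as 1024 GB); the R940xa is left out because its slot count isn't listed here.

```python
# Stated memory ceilings (TB) and DIMM-slot counts, copied from the specs above.
SPECS = {
    "Supermicro AS-4125GS-TNRT": (6, 24),
    "Lenovo ThinkSystem SR950 V3": (12, 96),
    "Cisco UCS C480 M6": (6, 24),
    "Huawei TaiShan 200 2280": (3, 24),
}


def gb_per_dimm(total_tb: float, slots: int) -> float:
    """Module size implied by a capacity ceiling, taking 1 TB as 1024 GB."""
    return total_tb * 1024 / slots


for model, (tb, slots) in SPECS.items():
    print(f"{model}: {tb} TB / {slots} slots = {gb_per_dimm(tb, slots):.0f} GB per DIMM")
```

All four land on 256 GB or 128 GB modules, so those particular figures are at least internally consistent.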

Cost vs. New/Refurbished

Refurbished IBM AC922 units offer Tesla V100 support at a fraction of the R940xa’s cost, but lack modern GPU compatibility. New Supermicro and HPE models provide better future-proofing.

Ecosystem and Software

Dell’s iDRAC integrates seamlessly with VMware and Microsoft environments, while IBM Power Systems and Huawei TaiShan favor Linux and ARM-specific stacks.

Conclusion

For GPU-accelerated workloads, the Supermicro AS-4125GS-TNRT (8 GPUs) offers the highest GPU density in this group, while the HPE DL380a Gen11 matches the R940xa’s 4 GPUs with newer PCIe Gen5 connectivity. For multi-socket CPU performance, the 8-socket Lenovo SR950 V3 stands out, and the dual-socket Cisco UCS C480 M6 (6 GPUs) sits between GPU density and storage scalability. Refurbished IBM AC922 units provide budget-friendly alternatives for legacy AI/HPC workloads. Ultimately, the choice depends on your priorities: GPU scalability, multi-threaded CPU power, or cost-efficiency.

Best Red Hat courses online in Bangalore

About Course

This course provides a comprehensive introduction to container technologies and the Red Hat OpenShift Container Platform. Designed for IT professionals and developers, it focuses on building, deploying, and managing containerized applications using industry-standard tools like Podman and Kubernetes. By the end of this course, you'll gain hands-on experience in deploying applications on OpenShift and managing their lifecycle.

What will I learn?

Build and manage containerized applications

Understand container and pod orchestration

Deploy and manage workloads in OpenShift

Course Curriculum

Module 1: Introduction to OpenShift and Containers

Module 2: Managing Applications in OpenShift

Module 3: Introduction to Kubernetes Concepts

Module 4: Deploying and Scaling Applications

Module 5: Troubleshooting Basics

OpenShift DO180 (Red Hat OpenShift I: Containers & Kubernetes) Online Exam & Certification

Get in Touch

Founded in 2004, COSSINDIA (a Prodevans wing) is an ISO 9001:2008-certified global IT training company. It was created with a vision to offer high-quality training services to individuals and corporate clients in the field of ‘IT Infrastructure Management’, and it has scaled new heights with every passing year.

Contact Info

Monday - Sunday: 7:30 – 21:00 hrs.

Hyderabad Office: +91 7799 351 640

Bangalore Office: +91 72044 31703 / +91 8139 990 051

Kubernetes Cluster Management at Scale: Challenges and Solutions

As Kubernetes has become the cornerstone of modern cloud-native infrastructure, managing it at scale is a growing challenge for enterprises. While Kubernetes excels in orchestrating containers efficiently, managing multiple clusters across teams, environments, and regions presents a new level of operational complexity.

In this blog, we’ll explore the key challenges of Kubernetes cluster management at scale and offer actionable solutions, tools, and best practices to help engineering teams build scalable, secure, and maintainable Kubernetes environments.

Why Scaling Kubernetes Is Challenging

Kubernetes is designed for scalability—but only when implemented with foresight. As organizations expand from a single cluster to dozens or even hundreds, they encounter several operational hurdles.

Key Challenges:

1. Operational Overhead

Maintaining multiple clusters means managing upgrades, backups, security patches, and resource optimization—multiplied by every environment (dev, staging, prod). Without centralized tooling, this overhead can spiral quickly.

2. Configuration Drift

Cluster configurations often diverge over time, causing inconsistent behavior, deployment errors, or compliance risks. Manual updates make it difficult to maintain consistency.

3. Observability and Monitoring

Standard logging and monitoring solutions often fail to scale with the ephemeral and dynamic nature of containers. Observability becomes noisy and fragmented without standardization.

4. Resource Isolation and Multi-Tenancy

Balancing shared infrastructure with security and performance for different teams or business units is tricky. Kubernetes namespaces alone may not provide sufficient isolation.

5. Security and Policy Enforcement

Enforcing consistent RBAC policies, network segmentation, and compliance rules across multiple clusters can lead to blind spots and misconfigurations.

Best Practices and Scalable Solutions

To manage Kubernetes at scale effectively, enterprises need a layered, automation-driven strategy. Here are the key components:

1. GitOps for Declarative Infrastructure Management

GitOps leverages Git as the source of truth for infrastructure and application deployment. With tools like Argo CD or Flux, you can:

Apply consistent configurations across clusters.

Automatically detect configuration drift and roll it back.

Audit all changes through Git commit history.

Benefits:

· Immutable infrastructure

· Easier rollbacks

· Team collaboration and visibility
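The drift check at the heart of GitOps boils down to comparing the desired state from Git with the live state in the cluster. The Python sketch below is a simplified illustration of that comparison on nested dictionaries, not Argo CD's or Flux's actual diffing logic; real tools also normalize defaulted fields and ignore server-managed fields such as `status`.

```python
def find_drift(desired: dict, live: dict, path: str = "") -> list:
    """Return readable differences between desired (Git) and live (cluster) state.

    Both arguments are nested dicts, as produced by parsing manifests.
    """
    drift = []
    for key in sorted(set(desired) | set(live)):
        where = f"{path}.{key}" if path else key
        if key not in live:
            drift.append(f"missing in cluster: {where}")
        elif key not in desired:
            drift.append(f"not in Git: {where}")
        elif isinstance(desired[key], dict) and isinstance(live[key], dict):
            drift.extend(find_drift(desired[key], live[key], where))
        elif desired[key] != live[key]:
            drift.append(f"changed: {where} (Git={desired[key]!r}, cluster={live[key]!r})")
    return drift


# Someone scaled the Deployment by hand; Git still says 3 replicas.
git_state = {"spec": {"replicas": 3, "image": "app:1.2"}}
cluster_state = {"spec": {"replicas": 5, "image": "app:1.2"}}
print(find_drift(git_state, cluster_state))
```

A GitOps controller with self-healing enabled would respond to a non-empty result by re-applying the Git version.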

2. Centralized Cluster Management Platforms

Use centralized control planes to manage the lifecycle of multiple clusters. Popular tools include:

Rancher – Simplified Kubernetes management with RBAC and policy controls.

Red Hat OpenShift – Enterprise-grade PaaS built on Kubernetes.

VMware Tanzu Mission Control – Unified policy and lifecycle management.

Google Anthos / Azure Arc / Amazon EKS Anywhere – Cloud-native solutions with hybrid/multi-cloud support.

Benefits:

· Unified view of all clusters

· Role-based access control (RBAC)

· Policy enforcement at scale

3. Standardization with Helm, Kustomize, and CRDs

Avoid bespoke configurations per cluster. Use templating and overlays:

Helm: Define and deploy repeatable Kubernetes manifests.

Kustomize: Customize raw YAMLs without forking.

Custom Resource Definitions (CRDs): Extend the Kubernetes API to include enterprise-specific configurations.

Pro Tip: Store and manage these configurations in Git repositories following GitOps practices.
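Overlays work by layering a small environment-specific patch over a shared base. The Python sketch below shows the idea with a recursive dictionary merge; the base Deployment and the production patch are made-up examples. It is a simplification: Kustomize's strategic merge patches can merge certain lists by key (for example containers by `name`), whereas here any list in the patch replaces the base list outright.

```python
import copy


def apply_overlay(base: dict, patch: dict) -> dict:
    """Return base with patch merged over it; neither input is modified.

    Nested dicts merge key by key; any other patch value (lists included)
    replaces the base value wholesale.
    """
    result = copy.deepcopy(base)
    for key, value in patch.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = apply_overlay(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical shared base Deployment and a production overlay.
base = {
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {"replicas": 1,
             "template": {"spec": {"containers": [{"name": "web", "image": "web:1.0"}]}}},
}
prod_patch = {"metadata": {"labels": {"env": "prod"}}, "spec": {"replicas": 5}}

prod = apply_overlay(base, prod_patch)
print(prod["metadata"]["labels"], prod["spec"]["replicas"])
```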

4. Scalable Observability Stack

Deploy a centralized observability solution to maintain visibility across environments.

Prometheus + Thanos: For multi-cluster metrics aggregation.

Grafana: For dashboards and alerting.

Loki or ELK Stack: For log aggregation.

Jaeger or OpenTelemetry: For tracing and performance monitoring.

Benefits:

· Cluster health transparency

· Proactive issue detection

· Developer-friendly insights

5. Policy-as-Code and Security Automation

Enforce security and compliance policies consistently:

OPA + Gatekeeper: Define and enforce security policies (e.g., restrict container images, enforce labels).

Kyverno: Kubernetes-native policy engine for validation and mutation.

Falco: Real-time runtime security monitoring.

Kube-bench: Run CIS Kubernetes benchmark checks automatically.

Security Tip: Regularly scan clusters and workloads using tools like Trivy, kube-hunter, or Aqua Security.
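Policy engines like Gatekeeper (with Rego) and Kyverno (with YAML policies) evaluate each admission request against rules such as the examples above. The Python sketch below reproduces only the decision logic for two such rules, an allowed-registry check and required labels, applied to a Pod manifest; the registry prefix and label names are hypothetical, and in a cluster the same rules would be written in the engine's own policy language.

```python
ALLOWED_REGISTRIES = ("registry.example.com/",)   # hypothetical trusted registry
REQUIRED_LABELS = ("app", "owner")                # hypothetical label policy


def validate_pod(pod: dict) -> list:
    """Return policy violations for a Pod manifest; an empty list means admit."""
    violations = []
    labels = pod.get("metadata", {}).get("labels", {}) or {}
    for label in REQUIRED_LABELS:
        if label not in labels:
            violations.append(f"missing required label '{label}'")
    spec = pod.get("spec", {})
    # Check init containers too, so they cannot be used to sneak in other images.
    for c in spec.get("initContainers", []) + spec.get("containers", []):
        image = c.get("image", "")
        if not image.startswith(ALLOWED_REGISTRIES):
            violations.append(f"container '{c.get('name')}' uses disallowed image '{image}'")
    return violations


bad_pod = {"metadata": {}, "spec": {"containers": [{"name": "nginx", "image": "docker.io/nginx:latest"}]}}
print(validate_pod(bad_pod))
```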

6. Autoscaling and Cost Optimization

To avoid resource wastage or service degradation:

Horizontal Pod Autoscaler (HPA) – Adjusts the number of pod replicas based on observed metrics such as CPU utilization.

Vertical Pod Autoscaler (VPA) – Adjusts containers' CPU and memory requests.

Cluster Autoscaler – Scales nodes up/down based on workload.

Karpenter (AWS) – Next-gen open-source autoscaler with rapid provisioning.
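The Horizontal Pod Autoscaler's core calculation is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when the ratio is within a tolerance (0.1 by default). The Python sketch below implements that formula plus min/max clamping; it leaves out the real controller's handling of missing metrics, unready pods, and scale-down stabilization windows, and the min/max defaults here are arbitrary.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA formula, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(1.0 - ratio) <= tolerance:
        desired = current_replicas          # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # prints 6
```

For example, 4 replicas averaging 90% CPU against a 60% target gives ceil(4 × 1.5) = 6 replicas.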

Conclusion

As Kubernetes adoption matures, organizations must rethink their management strategy to accommodate growth, reliability, and governance. The transition from a handful of clusters to enterprise-wide Kubernetes infrastructure requires automation, observability, and strong policy enforcement.

By adopting GitOps, centralized control planes, standardized templates, and automated policy tools, enterprises can achieve Kubernetes cluster management at scale—without compromising on security, reliability, or developer velocity.

Hybrid Cloud Application: The Smart Future of Business IT

Introduction

In today’s digital-first environment, businesses are constantly seeking scalable, flexible, and cost-effective solutions to stay competitive. One solution that is gaining rapid traction is the hybrid cloud application model. Combining the best of public and private cloud environments, hybrid cloud applications enable businesses to maximize performance while maintaining control and security.

This comprehensive article on hybrid cloud applications explains what they are, why they matter, how they work, their benefits, and how businesses can use them effectively. We also include reviews from industry professionals, expert insights, and FAQs to help guide your cloud journey.

What is a Hybrid Cloud Application?

A hybrid cloud application is a software solution that operates across both public and private cloud environments. It enables data, services, and workflows to move seamlessly between the two, offering flexibility and optimization in terms of cost, performance, and security.

For example, a business might host sensitive customer data in a private cloud while running less critical workloads on a public cloud like AWS, Azure, or Google Cloud Platform.

Key Components of Hybrid Cloud Applications

Public Cloud Services – Scalable and cost-effective compute and storage offered by providers like AWS, Azure, and GCP.

Private Cloud Infrastructure – More secure environments, either on-premises or managed by a third-party.

Middleware/Integration Tools – Platforms that ensure communication and data sharing between cloud environments.

Application Orchestration – Manages application deployment and performance across both clouds.

Why Choose a Hybrid Cloud Application Model?

1. Flexibility

Run workloads where they make the most sense, optimizing both performance and cost.

2. Security and Compliance

Sensitive data can remain in a private cloud to meet regulatory requirements.

3. Scalability

Burst into public cloud resources when private cloud capacity is reached.
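Cloud bursting can be pictured as a placement rule: fill private capacity first, keep sensitive workloads on the private side, and send whatever does not fit to the public cloud. The Python sketch below encodes that rule with hypothetical workload names and core counts; real platforms make this decision with schedulers and policies rather than a single function.

```python
def place_workloads(demands, private_capacity):
    """Assign workloads to private or public cloud, bursting when private is full.

    demands: list of (name, cpu_cores, sensitive) tuples (hypothetical data).
    Sensitive workloads are placed first and never leave the private cloud.
    """
    placement = {"private": [], "public": [], "unplaced": []}
    used = 0.0
    # sorted() is stable; key False sorts before True, so sensitive ones come first.
    for name, cores, sensitive in sorted(demands, key=lambda d: not d[2]):
        if used + cores <= private_capacity:
            placement["private"].append(name)
            used += cores
        elif sensitive:
            placement["unplaced"].append(name)   # needs more private capacity
        else:
            placement["public"].append(name)     # burst to the public cloud
    return placement


print(place_workloads([("web", 4, False), ("ledger", 6, True), ("batch", 8, False)], 10))
```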

4. Business Continuity

Maintain uptime and minimize downtime with distributed architecture.

5. Cost Efficiency

Avoid overprovisioning private infrastructure while still meeting demand spikes.

Real-World Use Cases of Hybrid Cloud Applications

1. Healthcare

Protect sensitive patient data in a private cloud while using public cloud resources for analytics and AI.

2. Finance

Securely handle customer transactions and compliance data, while leveraging the cloud for large-scale computations.

3. Retail and E-Commerce

Manage customer interactions and seasonal traffic spikes efficiently.

4. Manufacturing

Enable remote monitoring and IoT integrations across factory units using hybrid cloud applications.

5. Education

Store student records securely while using cloud platforms for learning management systems.

Benefits of Hybrid Cloud Applications

Enhanced Agility

Better Resource Utilization

Reduced Latency

Compliance Made Easier

Risk Mitigation

Simplified Workload Management

Tools and Platforms Supporting Hybrid Cloud

Microsoft Azure Arc – Extends Azure services and management to any infrastructure.

AWS Outposts – Run AWS infrastructure and services on-premises.

Google Anthos – Manage applications across multiple clouds.

VMware Cloud Foundation – Hybrid solution for virtual machines and containers.

Red Hat OpenShift – Kubernetes-based platform for hybrid deployment.

Best Practices for Developing Hybrid Cloud Applications

Design for Portability – Use containers and microservices to enable seamless movement between clouds.

Ensure Security – Implement zero-trust architectures, encryption, and access control.

Automate and Monitor – Use DevOps and continuous monitoring tools to maintain performance and compliance.

Choose the Right Partner – Work with experienced providers who understand hybrid cloud deployment strategies.

Regular Testing and Backup – Test failover scenarios and ensure robust backup solutions are in place.

Reviews from Industry Professionals

Amrita Singh, Cloud Engineer at FinCloud Solutions:

"Implementing hybrid cloud applications helped us reduce latency by 40% and improve client satisfaction."

John Meadows, CTO at EdTechNext:

"Our LMS platform runs on a hybrid model. We’ve achieved excellent uptime and student experience during peak loads."

Rahul Varma, Data Security Specialist:

"For compliance-heavy environments like finance and healthcare, hybrid cloud is a no-brainer."

Challenges and How to Overcome Them

1. Complex Architecture

Solution: Simplify with orchestration tools and automation.

2. Integration Difficulties

Solution: Use APIs and middleware platforms for seamless data exchange.

3. Cost Overruns

Solution: Use cloud cost optimization tools such as Azure Advisor and AWS Cost Explorer.

4. Security Risks

Solution: Implement multi-layered security protocols and conduct regular audits.

FAQ: Hybrid Cloud Application

Q1: What is the main advantage of a hybrid cloud application?

A: It combines the strengths of public and private clouds for flexibility, scalability, and security.

Q2: Is hybrid cloud suitable for small businesses?

A: Yes, especially those with fluctuating workloads or compliance needs.

Q3: How secure is a hybrid cloud application?

A: When properly configured, hybrid cloud applications can be as secure as traditional setups.

Q4: Can hybrid cloud reduce IT costs?

A: Yes, by paying for public cloud capacity only when it is needed and avoiding overprovisioned private servers.

Q5: How do you monitor a hybrid cloud application?

A: With cloud management platforms and monitoring tools like Datadog, Splunk, or Prometheus.

Q6: What are the best platforms for hybrid deployment?

A: Azure Arc, Google Anthos, AWS Outposts, and Red Hat OpenShift are top choices.

Conclusion: Hybrid Cloud is the New Normal

The hybrid cloud application model is more than a trend—it’s a strategic evolution that empowers organizations to balance innovation with control. It offers the agility of the cloud without sacrificing the oversight and security of on-premises systems.

If your organization is looking to modernize its IT infrastructure while staying compliant, resilient, and efficient, then hybrid cloud application development is the way forward.

At diglip7.com, we help businesses build scalable, secure, and agile hybrid cloud solutions tailored to their unique needs. Ready to unlock the future? Contact us today to get started.

EX280: Red Hat OpenShift Administration

Red Hat OpenShift Administration is a vital skill for IT professionals interested in managing containerized applications, simplifying Kubernetes, and leveraging enterprise cloud solutions. If you’re looking to excel in OpenShift technology, this guide covers everything from its core concepts and prerequisites to advanced certification and career benefits.

1. What is Red Hat OpenShift?

Red Hat OpenShift is a robust, enterprise-grade Kubernetes platform designed to help developers build, deploy, and scale applications across hybrid and multi-cloud environments. It offers a simplified, consistent approach to managing Kubernetes, with added security, automation, and developer tools, making it ideal for enterprise use.

Key Components of OpenShift:

OpenShift Platform: The foundation for scalable applications with simplified Kubernetes integration.

OpenShift Containers: Allows seamless container orchestration for optimized application deployment.

OpenShift Cluster: Manages workload distribution, ensuring application availability across multiple nodes.

OpenShift Networking: Provides efficient network configuration, allowing applications to communicate securely.

OpenShift Security: Integrates built-in security features to manage access, policies, and compliance seamlessly.

2. Why Choose Red Hat OpenShift?

OpenShift offers clear advantages for organizations seeking a Kubernetes-based platform tailored to complex, cloud-native environments. Here’s why OpenShift stands out among container orchestration solutions:

Enterprise-Grade Security: OpenShift Security layers, such as role-based access control (RBAC) and automated security policies, secure every component of the OpenShift environment.

Enhanced Automation: OpenShift Automation enables efficient deployment, management, and scaling, allowing businesses to speed up their continuous integration and continuous delivery (CI/CD) pipelines.

Streamlined Deployment: OpenShift Deployment features enable quick, efficient, and predictable deployments that are ideal for enterprise environments.

Scalability & Flexibility: With OpenShift Scaling, administrators can adjust resources dynamically based on application requirements, maintaining optimal performance even under fluctuating loads.

Simplified Kubernetes with OpenShift: OpenShift builds upon Kubernetes, simplifying its management while adding comprehensive enterprise features for operational efficiency.

3. Who Should Pursue Red Hat OpenShift Administration?

A career in Red Hat OpenShift Administration is suitable for professionals in several IT roles. Here’s who can benefit:

System Administrators: Those managing infrastructure and seeking to expand their expertise in container orchestration and multi-cloud deployments.

DevOps Engineers: OpenShift’s integrated tools support automated workflows, CI/CD pipelines, and application scaling for DevOps operations.

Cloud Architects: OpenShift’s robust capabilities make it ideal for architects designing scalable, secure, and portable applications across cloud environments.

Software Engineers: Developers who want to build and manage containerized applications using tools optimized for development workflows.

4. Who May Not Benefit from OpenShift?

While OpenShift provides valuable enterprise features, it may not be necessary for everyone:

Small Businesses or Startups: OpenShift may be more advanced than required for smaller, less complex projects or organizations with a limited budget.

Beginner IT Professionals: For those new to IT or with minimal cloud experience, starting with foundational cloud or Linux skills may be a better path before moving to OpenShift.

5. Prerequisites for Success in OpenShift Administration

Before diving into Red Hat OpenShift Administration, ensure you have the following foundational knowledge:

Linux Proficiency: Linux forms the backbone of OpenShift, so understanding Linux commands and administration is essential.

Basic Kubernetes Knowledge: Familiarity with Kubernetes concepts helps as OpenShift is built on Kubernetes.

Networking Fundamentals: OpenShift Networking leverages container networks, so knowledge of basic networking is important.

Hands-On OpenShift Training: Comprehensive OpenShift training, such as the OpenShift Administration Training and Red Hat OpenShift Training, is crucial for hands-on learning.


6. Key Benefits of OpenShift Certification

The Red Hat OpenShift Certification validates skills in container and application management using OpenShift, enhancing career growth prospects significantly. Here are some advantages:

EX280 Certification: This prestigious certification verifies your expertise in OpenShift cluster management, automation, and security.

Job-Ready Skills: You’ll develop advanced skills in OpenShift deployment, storage, scaling, and troubleshooting, making you an asset to any IT team.

Career Mobility: Certified professionals are sought after for roles in OpenShift Administration, cloud architecture, DevOps, and systems engineering.

7. Important Features of OpenShift for Administrators

As an OpenShift administrator, mastering certain key features will enhance your ability to manage applications effectively and securely:

OpenShift Operator Framework: This framework simplifies application lifecycle management by allowing users to automate deployment and scaling.

OpenShift Storage: Offers reliable, persistent storage solutions critical for stateful applications and complex deployments.

OpenShift Automation: Automates manual tasks, making CI/CD pipelines and application scaling more efficient.

OpenShift Scaling: Allows administrators to manage resources dynamically, ensuring applications perform optimally under various load conditions.

Monitoring & Logging: Comprehensive tools that allow administrators to keep an eye on applications and container environments, ensuring system health and reliability.
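Application-level scaling of the kind described under "OpenShift Scaling" is commonly handled by the Kubernetes Horizontal Pod Autoscaler, which OpenShift inherits. Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with no change when the ratio stays within a tolerance (0.1 by default). The sketch below assumes a single CPU-utilization metric. The function name and the min/max parameters are illustrative rather than an OpenShift API, and the real controller also applies stabilization windows and other behaviors omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization: float,
    target_utilization: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule for a single utilization metric (illustrative only)."""
    ratio = current_utilization / target_utilization
    # Inside the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
    print(desired_replicas(4, 90.0, 60.0))
```

The tolerance band keeps small metric fluctuations from causing constant scale-up and scale-down churn.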

8. Steps to Begin Your OpenShift Training and Certification

For those seeking to gain Red Hat OpenShift Certification and advance their expertise in OpenShift administration, here’s how to get started:

Enroll in OpenShift Administration Training: Structured OpenShift training programs provide foundational and advanced knowledge, essential for handling OpenShift environments.

Practice in Realistic Environments: Hands-on practice through lab simulators or practice clusters ensures real-world application of skills.

Prepare for the EX280 Exam: Comprehensive EX280 Exam Preparation through guided practice will help you acquire the knowledge and confidence to succeed.

9. What to Do After OpenShift DO280?

After completing DO280 (Red Hat OpenShift Administration) and earning the EX280 certification, you can further enhance your expertise with advanced Red Hat training programs:

a) Red Hat OpenShift Virtualization Training (DO316)

Learn how to integrate and manage virtual machines (VMs) alongside containers in OpenShift.

Gain expertise in deploying, managing, and troubleshooting virtualized workloads in a Kubernetes-native environment.

b) Red Hat OpenShift AI Training (AI267)

Master the deployment and management of AI/ML workloads on OpenShift.

Learn how to use Red Hat OpenShift AI (formerly OpenShift Data Science) and MLOps tools to build scalable machine learning pipelines.

c) Red Hat Satellite Training (RH403)

Expand your skills in managing Red Hat Enterprise Linux systems and other Red Hat infrastructure at scale.

Learn how to automate patch management, provisioning, and configuration using Red Hat Satellite.

These advanced courses will make you a well-rounded OpenShift expert, capable of handling complex enterprise deployments in virtualization, AI/ML, and infrastructure automation.

Conclusion: Is Red Hat OpenShift the Right Path for You?

Red Hat OpenShift Administration is a valuable career path for IT professionals dedicated to mastering enterprise Kubernetes and containerized application management. With skills in OpenShift Cluster management, OpenShift Automation, and secure OpenShift Networking, you will become an indispensable asset in modern, cloud-centric organizations.

KR Network Cloud is a trusted provider of comprehensive OpenShift training, preparing you with the skills required to achieve success in EX280 Certification and beyond.

Why Join KR Network Cloud?

With expert-led training, practical labs, and career-focused guidance, KR Network Cloud empowers you to excel in Red Hat OpenShift Administration and achieve your professional goals.

https://creativeceo.mn.co/posts/the-ultimate-guide-to-red-hat-openshift-administration

https://bogonetwork.mn.co/posts/the-ultimate-guide-to-red-hat-openshift-administration

#openshiftadmin#redhatopenshift#openshiftvirtualization#DO280#DO316#openshiftai#ai267#redhattraining#krnetworkcloud#redhatexam#redhatcertification#ittraining


Cloud Computing Solutions: Which Private Cloud Platform is Right for You?

If you’ve been navigating the world of IT or digital infrastructure, chances are you’ve come across the term cloud computing solutions more than once. From running websites and apps to storing sensitive data — everything is shifting to the cloud. But with so many options out there, how do you know which one fits your business needs best?

Let’s talk about it — especially if you're considering private or hybrid cloud setups.

Whether you’re an enterprise looking for better performance or a growing business wanting more control over your infrastructure, private cloud hosting might be your perfect match. In this post, we’ll break down some of the most powerful platforms out there, including VMware Cloud Hosting, Nutanix, Hyper-V, Proxmox, KVM, OpenStack, and OpenShift Private Cloud Hosting.

First Things First: What Are Cloud Computing Solutions?

In simple terms, cloud computing solutions provide you with access to computing resources like servers, storage, and software — but instead of managing physical hardware, you rent them virtually, usually on a pay-as-you-go model.

There are three main types of cloud environments:

Public Cloud – Shared resources with others (like Google Cloud or AWS)

Private Cloud – Resources are dedicated just to you

Hybrid Cloud – A mix of both, giving you flexibility

Private cloud platforms offer a high level of control, customization, and security — ideal for industries where uptime and data privacy are critical.

Let’s Dive Into the Top Private Cloud Hosting Platforms

1. VMware Cloud Hosting

VMware is a veteran in the cloud space. It allows you to replicate your on-premises data center environment in the cloud, so there’s no need to learn new tools. If you already use tools like vSphere or vSAN, VMware Cloud Hosting is a natural fit.

It’s highly scalable and secure — a great choice for businesses of any size that want cloud flexibility without completely overhauling their systems.

2. Nutanix Private Cloud Hosting

If you're looking for simplicity and power packed together, Nutanix Private Cloud Hosting might just be your best friend. Nutanix shines when it comes to user-friendly dashboards, automation, and managing hybrid environments. It's ideal for teams who want performance without spending hours managing infrastructure.

3. Hyper-V Private Cloud Hosting

For businesses using a lot of Microsoft products, Hyper-V Private Cloud Hosting makes perfect sense. Built by Microsoft, Hyper-V integrates smoothly with Windows Server and Microsoft System Center, making virtualization easy and reliable.

It's a go-to for companies already in the Microsoft ecosystem who want private cloud flexibility without leaving their comfort zone.

4. Proxmox Private Cloud Hosting

If you’re someone who appreciates open-source platforms, Proxmox Private Cloud Hosting might be right up your alley. It combines KVM virtualization and Linux containers (LXC) in one neat package.

Proxmox is lightweight, secure, and customizable. Plus, its web-based dashboard is super intuitive — making it a favorite among IT admins and developers alike.

5. KVM Private Cloud Hosting

KVM (Kernel-based Virtual Machine) is another open-source option that’s fast, reliable, and secure. It’s built into Linux, so if you’re already in the Linux world, it integrates seamlessly.

KVM Private Cloud Hosting is perfect for businesses that want a lightweight, customizable, and high-performing virtualization environment.

6. OpenStack Private Cloud Hosting

Need full control and want to scale massively? OpenStack Private Cloud Hosting is worth a look. It’s open-source, flexible, and designed for large-scale environments.

OpenStack works great for telecom, research institutions, or any organization that needs a lot of flexibility and power across private or public cloud deployments.

7. OpenShift Private Cloud Hosting

If you're building and deploying apps in containers, OpenShift Private Cloud Hosting at Serverbasket is a dream come true. Developed by Red Hat, OpenShift is built on Kubernetes and focuses on DevOps, automation, and rapid application development.

It’s ideal for teams running CI/CD pipelines, microservices, or containerized workloads — especially when consistency and speed are top priorities.

So, Which One Should You Choose?

The right private cloud hosting solution really depends on your business needs. Here’s a quick cheat sheet:

Go for VMware if you want enterprise-grade features with familiar tools.

Try Nutanix if you want something powerful but easy to manage.

Hyper-V is perfect if you’re already using Microsoft tech.

Proxmox and KVM are great for tech-savvy teams that love open source.

OpenStack is ideal for large-scale, customizable deployments.

OpenShift is built for developers who live in the container world.

Final Thoughts

Cloud computing isn’t a one-size-fits-all solution. But with platforms like VMware, Nutanix, Hyper-V, Proxmox, KVM, OpenStack, and OpenShift Private Cloud Hosting, you’ve got options that can scale with you — whether you're running a small development team or a global enterprise.

Choosing the right platform means looking at your current infrastructure, your team's expertise, and where you want to be a year from now. Whatever your path, the right cloud solution can drive efficiency, reduce overhead, and set your business up for long-term success.

#Top Cloud Computing Solutions#Nutanix Private Cloud#VMware Cloud Server Hosting#Proxmox Private Cloud#KVM Private Cloud


Application Performance Monitoring Market Growth Drivers, Size, Share, Scope, Analysis, Forecast, Growth, and Industry Report 2032

The Application Performance Monitoring Market was valued at USD 7.26 Billion in 2023 and is expected to reach USD 22.81 Billion by 2032, growing at a CAGR of 34.61% over the forecast period 2024-2032.
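To sanity-check these figures, recall that the compound annual growth rate implied by two endpoint values is (end / start)^(1 / years) - 1. The sketch below applies that formula to the stated 2023 and 2032 valuations, assuming nine compounding years. The result is roughly 13.6% a year (about 15.4% if counted over the eight years 2024 to 2032). Both values are well below the quoted 34.61%, so the headline rate and the endpoint values appear to rest on different assumptions in the full report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Stated valuations in USD billions; 2023 -> 2032 is nine compounding years.
    rate = implied_cagr(7.26, 22.81, 9)
    print(f"Implied CAGR: {rate:.1%}")

    # Conversely, the growth factor that 34.61% a year for nine years would imply.
    print(f"Growth factor at 34.61%: {(1 + 0.3461) ** 9:.1f}x")
```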

The Application Performance Monitoring (APM) market is expanding rapidly due to the increasing demand for seamless digital experiences. Businesses are investing in APM solutions to ensure optimal application performance, minimize downtime, and enhance user satisfaction. The rise of cloud computing, AI-driven analytics, and real-time monitoring tools is further accelerating market growth.

The Application Performance Monitoring market continues to evolve as enterprises prioritize application efficiency and system reliability. With the increasing complexity of IT infrastructures and a growing reliance on digital services, organizations are turning to APM solutions to detect, diagnose, and resolve performance bottlenecks in real time. The shift toward microservices, hybrid cloud environments, and edge computing has made APM essential for maintaining operational excellence.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3821

Market Keyplayers:

IBM (IBM Instana, IBM APM)

New Relic (New Relic One, New Relic Browser)

Dynatrace (Dynatrace Full-Stack Monitoring, Dynatrace Application Security)

AppDynamics (AppDynamics APM, AppDynamics Database Monitoring)

Cisco (Cisco AppDynamics, Cisco ACI Analytics)

Splunk Inc. (Splunk Observability Cloud, Splunk IT Service Intelligence)

Micro Focus (Silk Central, LoadRunner)

Broadcom Inc. (CA APM, CA Application Delivery Analysis)

Elasticsearch B.V. (Elastic APM, Elastic Stack)

Datadog (Datadog APM, Datadog Real User Monitoring)

Riverbed Technology (SteelCentral APM, SteelHead)

SolarWinds (SolarWinds APM, SolarWinds Network Performance Monitor)

Oracle (Oracle Management Cloud, Oracle Cloud Infrastructure APM)

ServiceNow (ServiceNow APM, ServiceNow Performance Analytics)

Red Hat (Red Hat OpenShift Monitoring, Red Hat Insights)

AppOptics (AppOptics APM, AppOptics Infrastructure Monitoring)

Honeycomb (Honeycomb APM, Honeycomb Distributed Tracing)

Instana (Instana APM, Instana Real User Monitoring)

Scout APM (Scout APM, Scout Error Tracking)

Sentry (Sentry APM, Sentry Error Tracking)

Market Trends Driving Growth

1. AI-Driven Monitoring and Automation

AI and machine learning are revolutionizing APM by enabling predictive analytics, anomaly detection, and automated issue resolution, reducing manual intervention.

2. Cloud-Native and Hybrid APM Solutions

As businesses migrate to cloud and hybrid infrastructures, APM solutions are adapting to provide real-time visibility across on-premises, cloud, and multi-cloud environments.

3. Observability and End-to-End Monitoring

APM is evolving into full-stack observability, integrating application monitoring with network, security, and infrastructure insights for holistic performance analysis.

4. Focus on User Experience and Business Impact

Companies are increasingly adopting APM solutions that correlate application performance with user experience metrics, ensuring optimal service delivery and business continuity.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3821

Market Segmentation:

By Solution

Software

Services

By Deployment

Cloud

On-Premise

By Enterprise Size

SMEs

Large Enterprises

By Access Type

Web APM

Mobile APM

By End User

BFSI

E-Commerce

Manufacturing

Healthcare

Retail

IT and Telecommunications

Media and Entertainment

Academics

Government

Market Analysis: Growth and Key Drivers

Increased Digital Transformation: Enterprises are accelerating cloud adoption and digital services, driving demand for advanced monitoring solutions.

Rising Complexity of IT Environments: Microservices, DevOps, and distributed architectures require comprehensive APM tools for performance optimization.

Growing Demand for Real-Time Analytics: Businesses seek AI-powered insights to proactively detect and resolve performance issues before they impact users.

Compliance and Security Needs: APM solutions help organizations meet regulatory requirements by ensuring application integrity and data security.

Future Prospects: The Road Ahead

1. Expansion of APM into IoT and Edge Computing

As IoT and edge computing continue to grow, APM solutions will evolve to monitor and optimize performance across decentralized infrastructures.

2. Integration with DevOps and Continuous Monitoring

APM will play a crucial role in DevOps pipelines, enabling faster issue resolution and performance optimization throughout the software development lifecycle.

3. Rise of Autonomous APM Systems

AI-driven automation will lead to self-healing applications, where systems can automatically detect, diagnose, and fix performance issues with minimal human intervention.

4. Growth in Industry-Specific APM Solutions

APM vendors will develop specialized solutions for industries like finance, healthcare, and e-commerce, addressing sector-specific performance challenges and compliance needs.

Access Complete Report: https://www.snsinsider.com/reports/application-performance-monitoring-market-3821

Conclusion

The Application Performance Monitoring market is poised for substantial growth as businesses prioritize digital excellence, system resilience, and user experience. With advancements in AI, cloud-native technologies, and observability, APM solutions are becoming more intelligent and proactive. Organizations that invest in next-generation APM tools will gain a competitive edge by ensuring seamless application performance, improving operational efficiency, and enhancing customer satisfaction.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#Application Performance Monitoring market#Application Performance Monitoring market Analysis#Application Performance Monitoring market Scope#Application Performance Monitoring market Growth#Application Performance Monitoring market Share#Application Performance Monitoring market Trends


Master Advanced OpenShift Administration with DO380

Red Hat OpenShift Administration III: Scaling Kubernetes Like a Pro

As enterprise applications scale, so do the challenges of managing containerized environments. If you've already got hands-on experience with Red Hat OpenShift and want to go deeper, DO380 - Red Hat OpenShift Administration III: Scaling Kubernetes Deployments in the Enterprise is built just for you.

Why DO380?

This course is designed for system administrators, DevOps engineers, and platform operators who want to gain advanced skills in managing large-scale OpenShift clusters. You'll learn how to automate day-to-day tasks, ensure application availability, and manage performance at scale.

In short—DO380 helps you go from OpenShift user to OpenShift power admin.

What You’ll Learn

✅ Automation with GitOps

Leverage Red Hat Advanced Cluster Management and Argo CD to manage application lifecycle across clusters using Git as a single source of truth.
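GitOps tools such as Argo CD continuously compare the desired state stored in Git with the live state in the cluster and flag any drift. The following is a deliberately simplified model of that comparison, not Argo CD's actual diffing logic. It assumes resources are plain dictionaries keyed by a hypothetical (kind, namespace, name) tuple, and it treats a resource as out of sync if it is missing, extra, or different.

```python
from typing import Dict, List, Tuple

# Hypothetical resource key: (kind, namespace, name)
ResourceKey = Tuple[str, str, str]
Manifest = Dict[str, object]


def diff_states(
    desired: Dict[ResourceKey, Manifest],
    live: Dict[ResourceKey, Manifest],
) -> Dict[str, List[ResourceKey]]:
    """Classify resources by comparing Git (desired) with the cluster (live)."""
    missing = sorted(k for k in desired if k not in live)    # must be created
    to_prune = sorted(k for k in live if k not in desired)   # removed from Git
    drifted = sorted(k for k in desired if k in live and desired[k] != live[k])
    return {"missing": missing, "prune": to_prune, "drifted": drifted}


def sync_status(diff: Dict[str, List[ResourceKey]]) -> str:
    """'Synced' only when nothing needs to be created, pruned, or updated."""
    return "Synced" if not any(diff.values()) else "OutOfSync"


if __name__ == "__main__":
    git = {
        ("Deployment", "shop", "web"): {"replicas": 3, "image": "web:1.2"},
        ("Service", "shop", "web"): {"port": 8080},
    }
    cluster = {
        ("Deployment", "shop", "web"): {"replicas": 5, "image": "web:1.2"},
        ("ConfigMap", "shop", "old-settings"): {"debug": "true"},
    }
    result = diff_states(git, cluster)
    print(result)
    print(sync_status(result))
```

Because Git is the single source of truth, a manual change in the cluster (here, the replica count) shows up as drift rather than silently becoming the new baseline.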

✅ Cluster Scaling and Performance Tuning

Optimize OpenShift clusters by configuring autoscaling, managing cluster capacity, and tuning performance for enterprise workloads.

✅ Monitoring and Observability

Gain visibility into workloads, nodes, and infrastructure using Prometheus, Grafana, and the OpenShift Monitoring stack.

✅ Cluster Logging and Troubleshooting

Set up centralized logging and use advanced troubleshooting techniques to quickly resolve cluster issues.

✅ Disaster Recovery and High Availability

Implement strategies for disaster recovery, node replacement, and data protection in critical production environments.

Course Format

Classroom & Virtual Training Available

Duration: 4 Days

Exam (Optional): EX380 – Red Hat Certified Specialist in OpenShift Automation and Integration

This course prepares you not only for real-world production use but also for the Red Hat certification that proves it.

Who Should Take This Course?

OpenShift Administrators managing production clusters

Kubernetes practitioners looking to scale deployments

DevOps professionals automating OpenShift environments

RHCEs aiming to level up with OpenShift certifications

If you’ve completed DO180 and DO280, this is your natural next step.

Get Started with DO380 at HawkStack

At HawkStack Technologies, we offer expert-led training tailored for enterprise teams and individual learners. Our Red Hat Certified Instructors bring real-world experience into every session, ensuring you walk away ready to manage OpenShift like a pro.

🚀 Enroll now and take your OpenShift skills to the enterprise level.

🔗 Register Here www.hawkstack.com

Want help choosing the right OpenShift learning path?

📩 Reach out to our experts at [email protected]


🚀 Deploy Applications from External Registries on ROSA (Without Coding)

If you’re using Red Hat OpenShift Service on AWS (ROSA) and your application images are stored in a private container registry like Docker Hub, Quay.io, or your own internal registry, you can still easily deploy them — no coding required.

Let’s walk through how to get that done using just the OpenShift Web Console.

🧠 What’s the Use Case?

Sometimes, your container images aren’t stored in Red Hat’s default registry. Instead, they might be:

On Docker Hub (private repo)

In Quay.io

On JFrog Artifactory or GitHub Container Registry

You still want to use them in your ROSA cluster — and OpenShift makes that possible with just a few clicks.

✅ Step-by-Step: No Code Needed

1. Log in to the OpenShift Console

Open the OpenShift Web Console for your ROSA cluster.

Make sure you have access to the correct project (namespace) where you want to deploy your app.

2. Add Your Private Registry Credentials

Go to Workloads > Secrets

Click Create > Image Pull Secret

Choose Docker Registry as the secret type

Fill in:

Your private registry’s URL

Your username

Your password or token

Email (optional)

This secret tells OpenShift how to access your private image.
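Behind this form, an image pull secret is a Kubernetes Secret of type `kubernetes.io/dockerconfigjson`. Its `.dockerconfigjson` key holds a base64-encoded JSON document of registry credentials. The sketch below builds that object with the Python standard library so you can see what the console stores. The secret name, registry host, username, and token are placeholders. In practice you would let the console or `oc create secret docker-registry` generate it rather than hand-crafting it.

```python
import base64
import json


def image_pull_secret(name: str, registry: str, username: str,
                      password: str, email: str = "") -> dict:
    """Build a dockerconfigjson Secret equivalent to an image pull secret (sketch)."""
    # The "auth" field is base64("username:password").
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:  # email is optional, as in the console form
        entry["email"] = email
    config = {"auths": {registry: entry}}
    encoded = base64.b64encode(json.dumps(config).encode()).decode()
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": encoded},
    }


if __name__ == "__main__":
    secret = image_pull_secret("my-registry-pull-secret", "registry.example.com",
                               "robot-user", "example-token")
    print(json.dumps(secret, indent=2))
```

Note that the values are only base64-encoded, not encrypted, so access to Secrets in the project should be restricted accordingly.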

3. Link the Secret to a Service Account

Go to Workloads > Secrets

Find your newly created secret

Click on the three dots (⋮) → Link secret to a service account

Choose the default service account

Select “for image pulls”

This step ensures OpenShift uses your secret automatically when pulling your app image.
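Linking a secret "for image pulls" adds it to the service account's `imagePullSecrets` list. The CLI equivalent is `oc secrets link default <secret-name> --for=pull`. Pods that run under that service account and do not declare their own pull secrets then inherit it automatically. The sketch below models the change on a plain-dictionary ServiceAccount. It is illustrative only, does not call the OpenShift API, and uses a placeholder project name.

```python
import copy


def link_pull_secret(service_account: dict, secret_name: str) -> dict:
    """Return a copy of the ServiceAccount with secret_name in imagePullSecrets.

    Models the effect of `oc secrets link <sa> <secret> --for=pull`:
    the secret is added once, and linking it again changes nothing.
    """
    updated = copy.deepcopy(service_account)  # leave the input untouched
    pull_secrets = updated.setdefault("imagePullSecrets", [])
    if all(ref.get("name") != secret_name for ref in pull_secrets):
        pull_secrets.append({"name": secret_name})
    return updated


if __name__ == "__main__":
    default_sa = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": "default", "namespace": "my-project"},
    }
    linked = link_pull_secret(default_sa, "my-registry-pull-secret")
    print(linked["imagePullSecrets"])
```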

4. Deploy the Application

Go to +Add in the left-hand menu

Select “Container Image”

Enter the full image path (e.g., registry.example.com/myorg/myapp:latest)

OpenShift will now use your image pull secret to fetch it

Set application name, resource type (like Deployment), and target port

Click Create
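For reference, the Deployment created here is broadly equivalent to the minimal `apps/v1` manifest below, built as a Python dictionary. This is an approximation, not the console's exact output, because the console also adds its own labels, annotations, and a Service. The app name, image, and port 8080 are placeholder assumptions. The optional `imagePullSecrets` field is only needed if the secret was not linked to the service account.

```python
import json


def deployment_manifest(name: str, image: str, port: int, pull_secret: str = "") -> dict:
    """Minimal apps/v1 Deployment for a single-container app (sketch)."""
    labels = {"app": name}  # selector and pod labels must match
    pod_spec = {
        "containers": [
            {
                "name": name,
                "image": image,
                "ports": [{"containerPort": port, "protocol": "TCP"}],
            }
        ]
    }
    if pull_secret:
        # Only needed when the secret is not linked to the pod's service account.
        pod_spec["imagePullSecrets"] = [{"name": pull_secret}]
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels}, "spec": pod_spec},
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("myapp", "registry.example.com/myorg/myapp:latest", 8080)
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied with `oc apply -f`, which is useful when you later want to move from console clicks to a version-controlled definition.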

5. Expose the App

Once deployed, go to Networking > Routes

Click Create Route

Select the service you just deployed

This gives you a public URL to access the app
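A Route created this way corresponds roughly to the `route.openshift.io/v1` object sketched below, which points at the Service in front of your pods. The CLI shortcut is `oc expose service <service-name>`. The Service name and the port name `8080-tcp` are placeholder assumptions. Edge TLS termination is shown as an optional choice, not something the steps above require.

```python
from typing import Union


def route_manifest(service_name: str, target_port: Union[int, str],
                   tls_edge: bool = True) -> dict:
    """Minimal OpenShift Route exposing a Service (sketch).

    With no spec.host set, the router generates a hostname under the
    cluster's default apps domain.
    """
    spec = {
        "to": {"kind": "Service", "name": service_name, "weight": 100},
        "port": {"targetPort": target_port},  # Service port name or number
    }
    if tls_edge:
        # Edge termination: TLS ends at the router, and HTTP requests are redirected to HTTPS.
        spec["tls"] = {"termination": "edge", "insecureEdgeTerminationPolicy": "Redirect"}
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": service_name},
        "spec": spec,
    }


if __name__ == "__main__":
    print(route_manifest("myapp", "8080-tcp"))
```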

👩‍💻 Pro Tips

If your image fails to pull, double-check the image URL and secret details

Make sure the registry is reachable from your ROSA cluster

Rotate credentials securely when needed
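A frequent cause of pull failures is a mismatch between the registry host in the image reference and the registry URL entered in the pull secret. The helper below splits an image reference using the usual Docker convention. The first path component is treated as a registry host only if it contains a `.` or `:` or is `localhost`; otherwise the image is assumed to come from Docker Hub. It is a simplified parser that does not validate characters or lengths. Docker Hub credentials may also be stored under a different key than `docker.io`, so treat the comparison as a rough check.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_reference(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, tag, and digest."""
    digest = None
    if "@" in ref:  # e.g. quay.io/org/app@sha256:...
        ref, digest = ref.split("@", 1)

    tag = None
    last_slash, last_colon = ref.rfind("/"), ref.rfind(":")
    if last_colon > last_slash:  # a colon after the last "/" starts the tag
        ref, tag = ref[:last_colon], ref[last_colon + 1:]

    first, _, rest = ref.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", ref
        if "/" not in repository:
            repository = "library/" + repository  # official Docker Hub images

    if tag is None and digest is None:
        tag = "latest"  # default tag when none is given
    return ImageRef(registry, repository, tag, digest)


if __name__ == "__main__":
    image = parse_image_reference("registry.example.com/myorg/myapp:latest")
    # Compare image.registry with the registry URL stored in your pull secret.
    print(image)
```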

🌐 Final Thoughts

Using external private registries with ROSA isn’t just possible — it’s seamless. With a few simple steps in the console, you can:

Securely connect to your private image source

Deploy apps without writing a line of code

Keep full control of your image pipeline

Perfect for teams managing applications across hybrid or multi-cloud environments.

For more information, follow HawkStack Technologies.


What is Cloud Application Development? – A Detailed Guide for 2025

Definition of a cloud-based application

Cloud-based applications are apps that run on remote cloud servers and are accessed over the internet. Enterprises use cloud apps to store their entire database, which end users can access with their credentials from any device, browser, or location at any time.

These cloud services are cost-effective, time-saving, and conveniently accessible to end users. Because the program runs on remote servers rather than on the user's own machine, it runs smoothly without depending on local hardware. As a result, businesses integrate cloud apps for flexibility and ease of use in the commercial setting.

What is cloud application development?

Cloud application development entails creating internet-enabled software solutions that consumers may access from any browser and device. These programs do not rely on personal PCs or local servers for accessibility.

Types of Cloud-Based App Solutions

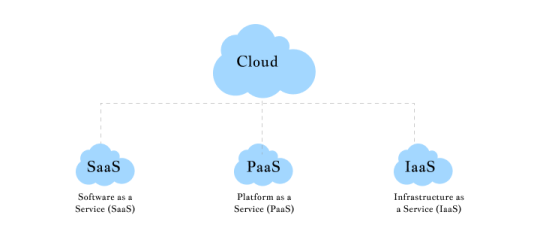

There are three major types of cloud-based software solutions that enterprises build:

Software as a Service (SaaS)

Software as a Service (SaaS) is cloud software delivered through mobile apps and web browsers. It is accessible over the internet, so end users can use it without any installation or configuration.

Use Cases of SaaS:

Managing a client base and working with customer relationship management (CRM) systems

Automating the tasks and operations in an organization

Organizing the documents and managing the data-sharing process

Planning for the upcoming events

Automate the signing process for products & services

Examples of SaaS: Salesforce, BigCommerce, Zendesk, Google Apps, Zoom, and Slack

Platform as a Service (PaaS)

Platform as a Service (PaaS) is for developers who rent resources and services to build their apps. These apps rely on the cloud provider for infrastructure, development tools, and operating systems. Cloud vendors supply the hardware and software resources, which makes app development more agile and straightforward.

PaaS Use Cases:

Development Tools

Operating Systems

Database running infrastructure and middleware

Examples of PaaS: Windows Azure, OpenShift, and Heroku.

Infrastructure as a Service (IaaS)

With Infrastructure as a Service (IaaS), cloud providers manage the underlying infrastructure, including networks, servers, storage, and virtualization, and monitor its ongoing operation.

Use Cases for IaaS

Tracking activities and functions

Detailed invoice generation and disaster recovery/backup solutions

Security management

High-performance computing

Examples of IaaS include Amazon Web Services (AWS) and Microsoft Azure.

Types of Cloud-Based App Deployment

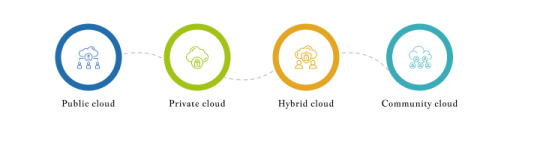

1. Public Cloud

In a public cloud, business data is stored on servers owned and operated by a third-party cloud provider, which is responsible for managing the servers, resources, and infrastructure. Because the provider handles these operations for a usage-based fee, there is no need to buy hardware for data management. These cloud apps can also be reached from anywhere with an internet connection.

2. Private Cloud

A private cloud is dedicated to a single organization, and only that business's authorized users can access its data over the internet. Strong firewalls protect these cloud apps, and the company's IT team monitors and controls them.

3. Hybrid Cloud

Hybrid cloud apps let you use the advantages and capabilities of both public and private clouds. Data can flow to both on-demand public services and third-party apps, and organizations can choose from a variety of deployment and optimization methods to place less sensitive data in the public cloud while safeguarding private data.

4. Community Cloud

A community cloud lets several businesses from a related community share services and resources. It is neither private (limited to a single organization) nor public (open to everyone). The participating organizations jointly oversee and govern the shared environment.

Cloud Application Examples

These days, most corporations and large enterprises use cloud applications to process their databases and operations efficiently. Here are some well-known examples of cloud-based applications that show why they are worthwhile:

Dropbox or Google Drive:

Everyone is aware of Google Drive. People all across the world use Dropbox or Google Drive to store files, retrieve them easily, process paperwork properly, and make it available from anywhere at any time.

Miro:

Miro is a digital whiteboard that lets you collaborate with other users in a more enjoyable and inventive way. It promotes cooperation and real-time coordination with remote teams. For improved accuracy and collaboration at work, users can send messages or make in-app video calls when issues come up.

Figma:

Figma is an app for cloud-based design that makes it possible to process designs more precisely and effectively. By using plugins and widgets to automate the job, it makes it possible to finish design chores quickly.

How to Build Cloud Application – Step-by-Step Guide

Developing cloud applications differs greatly from developing traditional web and mobile applications. Many platforms are available, but most large-scale businesses use AWS development services for cloud app development. The step-by-step process of developing a cloud application is as follows:

Research the Market and the Project

To decide what to develop, it is crucial to first learn about the project and conduct a market analysis. You need to research and comprehend which apps are appropriate for cloud development. In order to create the best cloud software, market research will help you gain a thorough understanding of the market and pinpoint the target market.

Talk about Including Specific Features and Architecture

Decide which features and functionality the application needs. Then discuss the cloud migration approach, the service model (IaaS, PaaS, or SaaS), and the application architecture with your development firm, and choose the option that best supports smooth app performance.

Select the Tech Stack and Monetization Model

Once you have a firm grasp of the app's features and capabilities, settle on the tech stack and business strategy. Familiarize yourself with the candidate technologies before handing off development. Also decide whether the application will follow a freemium or premium model, offer in-app purchases, or use another monetization method. Consulting experienced cloud app development professionals can help you choose the best approach.

Design the Cloud Application

After selecting the tech stack and defining the features, the next step is UI/UX design. To be user-friendly, an app's design must be visually appealing and intuitive. When building cloud-native apps in particular, base the design on how users actually engage with the product so that it captures the intended audience more quickly.

Develop and Test the Application

The development stage calls for precise planning, ongoing monitoring, and careful implementation. Coordinate with the team, assign tasks, divide responsibilities, and build the application using Agile methods such as Scrum. Prioritize a cloud-native approach, since it offers more flexible and convenient workflows.

Testing follows development. Before the application is released to the market, the QA team verifies that it performs well, and its performance and user experience should be thoroughly validated during this phase.

Deploy to the Target Platforms

Launch the application on your chosen platforms, such as the Google Play Store for Android, the Apple App Store for iOS, or a cross-platform release, and complete the follow-ups and documentation.

Concluding remarks

For corporations and large enterprises, cloud-based applications provide a streamlined environment for managing data while maintaining strong security throughout day-to-day operations.

Cloud application development services offer a corporation numerous benefits. To succeed in a competitive market, however, you must embrace the full potential of digitization as it continues to evolve.

Working with a cloud application development company helps ensure that features are built and run correctly in your corporate environment. Its experts can recommend the right approach and build reliable cloud software solutions.

Let Moonstack help you turn your ideas into reality in 2025 and beyond by developing state-of-the-art cloud applications that are customized to your company’s needs and guarantee scalability, security, and flawless performance!


Enhancing Application Performance in Hybrid and Multi-Cloud Environments with Cisco ACI

1. Introduction to Hybrid and Multi-Cloud Environments

As businesses adopt hybrid and multi-cloud environments, ensuring seamless application performance becomes a critical challenge. Managing network connectivity, security, and traffic optimization across diverse cloud platforms can lead to complexity and inefficiencies.

Cisco ACI (Application Centric Infrastructure) simplifies this by providing an intent-based networking approach, enabling automation, centralized policy management, and real-time performance optimization.

With Cisco ACI Training, IT professionals can master the skills needed to deploy, configure, and optimize ACI for enhanced application performance in multi-cloud environments. This blog explores how Cisco ACI enhances performance, security, and visibility across hybrid and multi-cloud architectures.

2. The Role of Cisco ACI in Multi-Cloud Performance Optimization

Cisco ACI is a software-defined networking (SDN) solution that simplifies network operations and enhances application performance across multiple cloud environments. It enables organizations to achieve:

Seamless multi-cloud connectivity for smooth integration between on-premises and cloud environments.

Centralized policy enforcement to maintain consistent security and compliance.

Automated network operations that reduce manual errors and accelerate deployments.

Optimized traffic flow, improving application responsiveness with real-time telemetry.

3. Application-Centric Policy Automation with ACI

Traditional networking approaches rely on static configurations, making policy enforcement difficult in dynamic multi-cloud environments. Cisco ACI adopts an application-centric model, where network policies are defined based on business intent rather than IP addresses or VLANs.

Key Benefits of ACI’s Policy Automation:

Application profiles ensure that policies move with workloads across environments.

Zero-touch provisioning automates network configuration and reduces deployment time.

Micro-segmentation enhances security by isolating applications based on trust levels.

Seamless API integration connects with VMware NSX, Kubernetes, OpenShift, and cloud-native services.
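To make the application-centric idea concrete, here is a minimal conceptual sketch, assuming a simplified model where workloads belong to endpoint groups (EPGs) and traffic between groups is allowed only when a contract lists the protocol and port. The classes `Filter`, `Contract`, `EPG`, and `PolicyModel` are hypothetical illustrations of the concept, not Cisco's API, and real ACI behavior (for example intra-EPG isolation options) is richer than this.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Filter:
    """A traffic match: protocol plus destination port (hypothetical, simplified)."""
    protocol: str
    port: int


@dataclass
class Contract:
    """A named set of filters that one EPG provides and another consumes."""
    name: str
    filters: set = field(default_factory=set)


@dataclass
class EPG:
    """Endpoint group: workloads grouped by application role, not by IP or VLAN."""
    name: str
    provides: set = field(default_factory=set)  # contract names this EPG offers
    consumes: set = field(default_factory=set)  # contract names this EPG uses


class PolicyModel:
    def __init__(self) -> None:
        self.epgs = {}        # EPG name -> EPG
        self.contracts = {}   # contract name -> Contract
        self.membership = {}  # endpoint id -> EPG name (identity, not address)

    def add_epg(self, epg: EPG) -> None:
        self.epgs[epg.name] = epg

    def add_contract(self, contract: Contract) -> None:
        self.contracts[contract.name] = contract

    def attach(self, endpoint: str, epg_name: str) -> None:
        self.membership[endpoint] = epg_name

    def is_allowed(self, src: str, dst: str, protocol: str, port: int) -> bool:
        src_epg = self.epgs.get(self.membership.get(src, ""))
        dst_epg = self.epgs.get(self.membership.get(dst, ""))
        if src_epg is None or dst_epg is None:
            return False  # unclassified endpoints get nothing: default deny
        if src_epg.name == dst_epg.name:
            return True  # intra-group traffic is permitted in this sketch
        wanted = Filter(protocol, port)
        shared = src_epg.consumes & dst_epg.provides
        return any(
            wanted in self.contracts[name].filters
            for name in shared
            if name in self.contracts
        )


model = PolicyModel()
model.add_contract(Contract("web-to-db", {Filter("tcp", 5432)}))
model.add_epg(EPG("web", consumes={"web-to-db"}))
model.add_epg(EPG("db", provides={"web-to-db"}))
model.attach("vm-web-1", "web")
model.attach("vm-db-1", "db")

print(model.is_allowed("vm-web-1", "vm-db-1", "tcp", 5432))  # True: covered by the contract
print(model.is_allowed("vm-web-1", "vm-db-1", "tcp", 22))    # False: port not in the contract
print(model.is_allowed("vm-db-1", "vm-web-1", "tcp", 5432))  # False: db consumes nothing
```

Because rules reference group names rather than IP addresses, moving or re-addressing `vm-web-1` leaves its policy unchanged as long as it stays in the `web` group, which is the sense in which policies "move with workloads". The default-deny return for unclassified endpoints is what gives micro-segmentation its effect.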

4. Traffic Optimization and Load Balancing with ACI

Application performance in multi-cloud environments is often hindered by traffic congestion, latency, and inefficient load balancing. Cisco ACI enhances network efficiency through:

Dynamic traffic routing, ensuring optimal data flow based on real-time network conditions.

Adaptive load balancing, which distributes workloads across cloud regions to prevent bottlenecks.

Integration with cloud-native load balancers such as AWS ALB and Azure Load Balancer, and with third-party load balancers such as F5, to enhance application performance.
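As a rough illustration of latency-aware load distribution, the sketch below weights each cloud region by the inverse of its measured latency and picks a region at random according to those weights. This is a generic technique chosen for illustration, not ACI's internal algorithm; the region names and latency figures are hypothetical.

```python
import random


def weights_from_latency(latency_ms: dict) -> dict:
    """Weight each region by inverse latency, normalized to sum to 1."""
    # Regions without a positive latency reading are treated as unavailable.
    inverse = {region: 1.0 / ms for region, ms in latency_ms.items() if ms > 0}
    if not inverse:
        raise ValueError("no regions with a valid latency measurement")
    total = sum(inverse.values())
    return {region: w / total for region, w in inverse.items()}


def pick_region(latency_ms: dict, rng: random.Random) -> str:
    """Choose one region at random, biased toward lower latency."""
    weights = weights_from_latency(latency_ms)
    regions = list(weights)
    return rng.choices(regions, weights=[weights[r] for r in regions], k=1)[0]


latency = {"us-east": 20.0, "us-west": 40.0, "eu-west": 80.0}  # hypothetical readings
print(weights_from_latency(latency))  # us-east 4/7, us-west 2/7, eu-west 1/7
print(pick_region(latency, random.Random(42)))
```

Recomputing the weights from fresh telemetry on each decision is what makes the distribution "adaptive": a region whose latency doubles automatically receives roughly half as much new traffic.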

5. Network Visibility and Performance Monitoring

Visibility is a major challenge in hybrid and multi-cloud networks. Without real-time insights, organizations struggle to detect bottlenecks, security threats, and application slowdowns.

Cisco ACI’s Monitoring Capabilities:

Real-time telemetry and analytics to continuously track network and application performance.

Cisco Nexus Dashboard integration for centralized monitoring across cloud environments.

AI-driven anomaly detection that automatically identifies and mitigates network issues.

Proactive troubleshooting using automation to resolve potential disruptions before they impact users.
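A simple way to see how telemetry-driven anomaly detection works is a rolling z-score: flag a reading that sits several standard deviations away from the recent average. The sketch below assumes a stream of latency samples and a hypothetical `LatencyAnomalyDetector` class; it is a basic statistical stand-in, not the method used by Nexus Dashboard or any AI-driven product.

```python
from collections import deque
from statistics import mean, pstdev


class LatencyAnomalyDetector:
    """Flag samples far from the rolling mean (hypothetical helper)."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_samples: int = 5):
        self.samples = deque(maxlen=window)  # most recent readings only
        self.threshold = threshold           # z-score that counts as anomalous
        self.min_samples = min_samples       # warm-up before judging anything

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous versus the window, then record it."""
        anomalous = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = pstdev(self.samples)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self.threshold
            else:
                anomalous = value != mu  # perfectly flat history: any change stands out
        self.samples.append(value)
        return anomalous


detector = LatencyAnomalyDetector(window=20, threshold=3.0, min_samples=5)
readings = [10, 11, 10, 12, 11, 10, 11, 50]  # hypothetical latency samples in ms
flags = [detector.observe(r) for r in readings]
print(flags)  # only the final 50 ms spike is flagged
```

In practice a flagged reading would trigger the automated remediation or alerting described above; this sketch also records anomalous values in the window, which a production design might choose to exclude.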

6. Security Considerations for Hybrid and Multi-Cloud ACI Deployments

Multi-cloud environments are prone to security challenges such as data breaches, misconfigurations, and compliance risks. Cisco ACI strengthens security with:

Micro-segmentation that restricts communication between workloads to limit attack surfaces.

A zero-trust security model enforcing strict access controls to prevent unauthorized access.

End-to-end encryption to protect data in transit across hybrid and multi-cloud networks.

AI-powered threat detection that continuously monitors for anomalies and potential attacks.

7. Case Studies: Real-World Use Cases of ACI in Multi-Cloud Environments

1. Financial Institution

Challenge: Lack of consistent security policies across multi-cloud platforms.

Solution: Implemented Cisco ACI for unified security and network automation.

Result: 40% reduction in security incidents and improved compliance adherence.

2. E-Commerce Retailer

Challenge: High latency affecting customer experience during peak sales.

Solution: Used Cisco ACI to optimize traffic routing and load balancing.

Result: 30% improvement in transaction processing speeds.

8. Best Practices for Deploying Cisco ACI in Hybrid and Multi-Cloud Networks

To maximize the benefits of Cisco ACI, organizations should follow these best practices:

Standardize network policies to ensure security and compliance across cloud platforms.

Leverage API automation to integrate ACI with third-party cloud services and DevOps tools.

Utilize direct cloud interconnects like AWS Direct Connect and Azure ExpressRoute for improved connectivity.

Monitor continuously using real-time telemetry and AI-driven analytics for proactive network management.

Regularly update security policies to adapt to evolving threats and compliance requirements.
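For the API-automation practice, the sketch below builds a JSON body in the shape of the APIC object model (a tenant containing an application profile, EPGs, and their bridge-domain and contract relations) without contacting any controller. The class names `fvTenant`, `fvAp`, `fvAEPg`, `fvRsBd`, `fvRsProv`, and `fvRsCons` reflect our understanding of the APIC model and should be checked against Cisco's REST API documentation for your release; the tenant, profile, bridge-domain, and contract names are hypothetical, and the sketch assumes the bridge domain and contracts already exist.

```python
import json


def build_app_profile_payload(tenant: str, app_profile: str,
                              bridge_domain: str, epgs: dict) -> dict:
    """Build an APIC-style JSON tree: tenant -> application profile -> EPGs.

    epgs maps an EPG name to {"provides": [...], "consumes": [...]} contract names.
    """
    epg_objects = []
    for name, rel in epgs.items():
        # Bind the EPG to its bridge domain, then to provided/consumed contracts.
        children = [{"fvRsBd": {"attributes": {"tnFvBDName": bridge_domain}}}]
        children += [{"fvRsProv": {"attributes": {"tnVzBrCPName": c}}}
                     for c in rel.get("provides", [])]
        children += [{"fvRsCons": {"attributes": {"tnVzBrCPName": c}}}
                     for c in rel.get("consumes", [])]
        epg_objects.append({"fvAEPg": {"attributes": {"name": name}, "children": children}})

    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [
                {"fvAp": {"attributes": {"name": app_profile}, "children": epg_objects}}
            ],
        }
    }


payload = build_app_profile_payload(
    tenant="shop",
    app_profile="storefront",
    bridge_domain="bd-app",
    epgs={
        "web": {"consumes": ["web-to-db"]},
        "db": {"provides": ["web-to-db"]},
    },
)
# Assumption: after authenticating (commonly via /api/aaaLogin.json), a body like
# this is POSTed to https://<apic>/api/mo/uni.json. Verify for your APIC version.
print(json.dumps(payload, indent=2))
```

Generating payloads from code like this, rather than clicking through a GUI, is what lets the same policy definitions be versioned, reviewed, and applied consistently across sites.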

9. Future Trends: The Evolution of ACI in Multi-Cloud Networking

Cisco ACI is continuously evolving to adapt to emerging cloud and networking trends:

AI-driven automation will further optimize network performance and security.

Increased focus on container networking with enhanced support for Kubernetes and microservices architectures.

Advanced security integrations with improved compliance frameworks and automated threat detection.

Seamless multi-cloud orchestration through improved API-driven integrations with public cloud providers.

Conclusion

Cisco ACI plays a vital role in optimizing application performance in hybrid and multi-cloud environments by providing centralized policy control, traffic optimization, automation, and robust security.

Its intent-based networking approach ensures seamless connectivity, reduced latency, and improved scalability across multiple cloud platforms. By implementing best practices and leveraging AI-driven automation, businesses can enhance network efficiency while maintaining security and compliance.

For professionals looking to master these capabilities, enrolling in a Cisco ACI course can provide in-depth knowledge and hands-on expertise to deploy and manage ACI effectively in complex cloud environments.

0 notes

Text

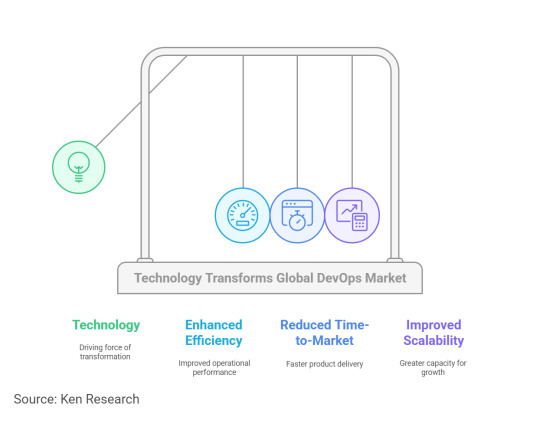

How Technology is Transforming the Global DevOps Market by 2028

Technology has been the driving force behind the transformation of the global DevOps market, which reached a valuation of $10 billion in 2023. The integration of cloud computing, artificial intelligence (AI), and automation tools is revolutionizing software development and IT operations, enabling organizations to enhance efficiency, reduce time-to-market, and improve scalability. This blog explores the technological advancements reshaping the DevOps industry, case studies of successful implementations, challenges in technology adoption, and the future outlook for the market.


Technological Advancements

1. Cloud Computing in DevOps

Application and Benefits: Cloud computing has become the backbone of modern DevOps practices, offering scalability, flexibility, and cost-efficiency. Cloud-based DevOps tools simplify collaboration among distributed teams and streamline deployment processes.

Impact: In 2023, the cloud segment dominated the DevOps market due to its ability to support hybrid IT environments, enabling seamless integration across on-premises and cloud infrastructures.

Example: Microsoft Azure DevOps provides cloud-based solutions that integrate CI/CD pipelines, enabling faster development cycles for businesses of all sizes.
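At its core, a CI/CD pipeline is an ordered series of stages in which a failure stops the change from being promoted further. The plain-Python sketch below models that fail-fast gating; the stage names and the `run_pipeline` and `deploy` functions are hypothetical, and this is not the Azure Pipelines API or its configuration format.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure (fail-fast gating)."""
    log = []
    for name, step in stages:
        if not step():
            log.append(f"{name}: failed")
            return False, log  # later stages, including deploy, never run
        log.append(f"{name}: ok")
    return True, log


deployed = []


def deploy() -> bool:
    deployed.append("prod")  # stand-in for a real deployment step
    return True


stages = [
    ("build", lambda: True),
    ("unit-tests", lambda: False),  # simulate a failing test run
    ("deploy", deploy),
]
ok, log = run_pipeline(stages)
print(ok, log)  # False ['build: ok', 'unit-tests: failed']
```

The speed benefit comes from automating this gate: every change goes through the same checks, and only changes that pass reach deployment without manual hand-offs.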

2. Artificial Intelligence (AI) and Machine Learning in DevOps

Application and Benefits: AI and machine learning are enhancing automation within DevOps pipelines by enabling predictive analytics, anomaly detection, and intelligent decision-making.

Impact: AI integration allows teams to optimize resource allocation, detect potential failures, and resolve issues proactively, reducing downtime and improving reliability.
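Predictive failure detection can be as sophisticated as a trained model, but the underlying idea shows up even in a simple linear trend: fit a line to recent resource usage and estimate when it will cross a limit, so the team can act before an outage. The sketch below uses ordinary least squares as a deliberately simple stand-in for machine-learning approaches; the `hours_until_threshold` helper and the disk-usage samples are hypothetical.

```python
from typing import List, Optional, Tuple


def hours_until_threshold(samples: List[Tuple[float, float]],
                          threshold: float) -> Optional[float]:
    """Fit a least-squares line to (hour, usage%) samples and extrapolate.

    Returns hours after the last sample (samples assumed sorted by time)
    until usage is predicted to reach `threshold`, or None if usage is
    flat or falling.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to fit a trend")
    n = len(samples)
    mean_x = sum(t for t, _ in samples) / n
    mean_y = sum(u for _, u in samples) / n
    sxx = sum((t - mean_x) ** 2 for t, _ in samples)
    if sxx == 0:
        raise ValueError("samples must span more than one timestamp")
    slope = sum((t - mean_x) * (u - mean_y) for t, u in samples) / sxx
    if slope <= 0:
        return None  # no upward trend, so no predicted crossing
    intercept = mean_y - slope * mean_x
    crossing = (threshold - intercept) / slope
    last_t = samples[-1][0]
    return max(0.0, crossing - last_t)


disk = [(0, 50.0), (1, 52.0), (2, 54.0), (3, 56.0)]  # hypothetical hourly disk usage %
print(hours_until_threshold(disk, 90.0))  # 17.0
```

Here usage grows 2 points per hour from a fitted 50%, so it reaches 90% at hour 20, which is 17 hours after the last sample at hour 3.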