#seems like a good usecase


Text

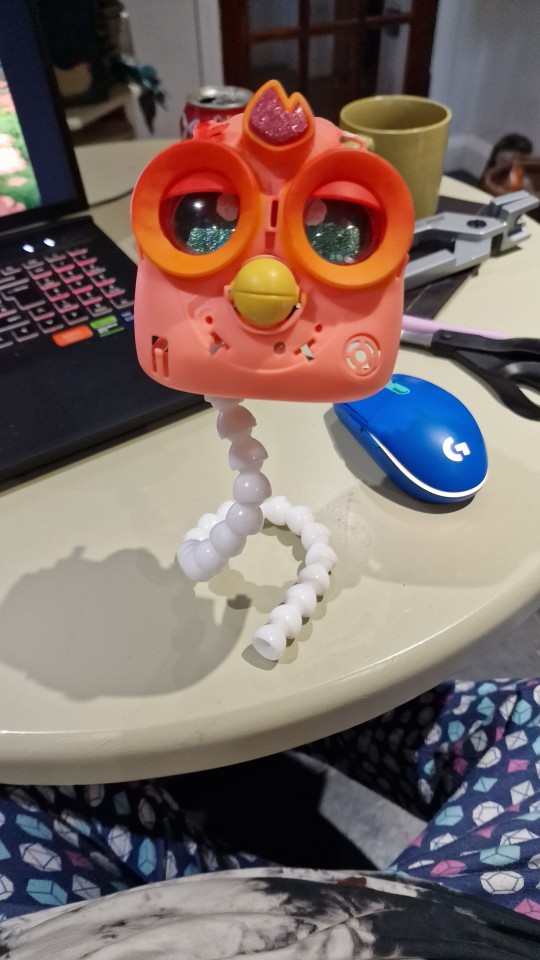

working on a ridiculous project

#never used these ball socket armatures before but i kinda love them#and am very interested in using one to finally make something i've been desperate to make for a long time#which is a fraggle#i wanted an articulated plush toy fraggle maybe using the old commercial patterns or maybe crochet them and figure it out#but having one that can pose and move a bit so an inbetween of flat out plush toy and actual puppet you articulate with rods and hand#seems like a good usecase#but until then i've got to finish making oracle the creature

0 notes

Text

I've been thinking about how to have individuals that maximize for uniqueness, and how this is really a very difficult problem.

I've been somewhat idly working on a project I'm calling "72 Waifus". It's probably not going to become anything, but it sort of gets at this idea that eventually you run out of ways to differentiate people, and soon two or more people fall into what is clearly a "cluster". You can try to do things programmatically: make a bunch of axes, then push people as far toward the edges of those axes as possible, so that you have a hypercube with everyone at the corners in N-dimensional space or something... but I think this is really likely to fail, in that it will constrain the project.
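Here is a minimal C sketch that puts rough numbers on the hypercube idea. It assumes every axis is a binary trait (pushed to one extreme or the other), so N axes give 2^N corners. The function names are made up for illustration. Seven axes give 128 corners, which is enough room for 72 characters. However, only 64 of those corners can be kept at least two axes apart from each other, so any 72 picks must include a pair that differs on a single trait.

```c
#include <stdbool.h>

#define MAX_AXES 10 /* keeps the scratch array below small */

/* Number of axes (traits) on which two corners of the hypercube differ. */
int axis_distance(unsigned a, unsigned b) {
    unsigned diff = a ^ b;
    int count = 0;
    while (diff != 0) {
        count += (int)(diff & 1u);
        diff >>= 1;
    }
    return count;
}

/* Fewest binary axes whose 2^axes corners can hold `characters` people. */
int axes_needed(int characters) {
    if (characters <= 1) {
        return 0;
    }
    int axes = 0;
    while ((1ULL << axes) < (unsigned long long)characters) {
        axes++;
    }
    return axes;
}

/*
 * Walk the corners in order and keep each one that differs from every
 * corner already kept on at least `min_dist` axes. Returns how many were
 * kept, or -1 if `axes` is out of range. With min_dist = 2 this keeps
 * exactly the corners with an even number of "high" traits.
 */
int spread_corners(int axes, int min_dist) {
    unsigned kept[1u << MAX_AXES];
    int count = 0;
    if (axes < 0 || axes > MAX_AXES) {
        return -1;
    }
    for (unsigned corner = 0; corner < (1u << axes); corner++) {
        bool far_enough = true;
        for (int i = 0; i < count; i++) {
            if (axis_distance(corner, kept[i]) < min_dist) {
                far_enough = false;
                break;
            }
        }
        if (far_enough) {
            kept[count++] = corner;
        }
    }
    return count;
}
```

For example, `axes_needed(72)` is 7, while `spread_corners(7, 2)` is 64 and `spread_corners(8, 2)` is 128. The greedy pass happens to reach the known maximum for that spacing (2^(N-1) corners). So the shortfall reflects the clustering problem itself, not a weak search.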

Game Maker's Toolkit recently put out a video on how Smash Bros. characters are designed, which was very relevant to my interests here. The roster has more than 80 characters, even if you exclude mechanically identical echo fighters, and there really has been an effort to make sure that there aren't many proper repeats.

But that's for a fighting game, with very different parameters from whatever I'm imagining to be the usecase for 72 Waifus.

There's a manga/anime called The 100 Girlfriends Who Really, Really, Really, Really, Really Love You, which people keep mentioning to me as a gimmick I might enjoy. In a bout of diligence I read the first chapter, which didn't appeal to me. But also, I don't think that anyone has actually said that it's good, so maybe this is on me. (It's comedy, and I keep running into comedies that just seem to exist for the punchline, rather than comedies that are funny because they take something ridiculous deathly seriously.)

22 notes

Text

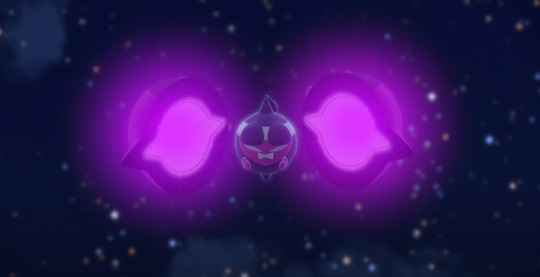

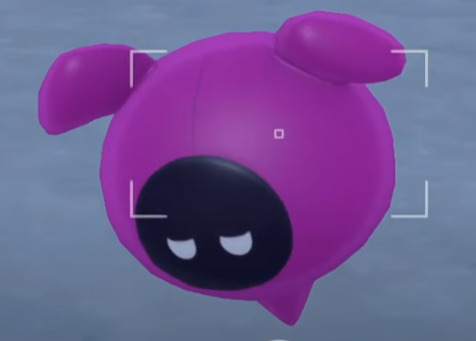

Meandering thoughts about Pecharunt/possible ecology Stuff(tm) for the specific one in Mochi Mayhem + more general ideas for if you treat them as a species, since a surprising number of mythical+legendary pokemon are explicitly /not/ single-specimen species, just very rare (Shaymin being mentioned as migrating, Latios and Latias entries mentioning flocks, etc.)

Pleeease note I'm going to write a post about this with illustrations/partially in-universe perspective in a bit, but I did want to throw out my initial thoughts!!!

Also, with the exception of the first thing I say, I really encourage people to consider Pecharunt from an ecology perspective in their works alongside like. an individual pokemon with its own backstory, because I think that allows for richer, more interesting stories! If you like Pecharunt and want to attack me with rocks for saying what I'm about to say, please feel free to write your own meta/opinions in your own post, because I also really like Pecharunt and would like to see more stuff written about it as an individual animal and also as a theoretical species. I think it's very cute. ^_^

Also I will note that this is stream-of-consciousness style stuff; I may restate my points multiple times, sorry about that!

With that out of the way, let's look at the Scarlet and Violet website's entry.

The one that has people fighting about this thing's malice or lack thereof.

'Uses Its Cunning To Survive' is a very evocative phrasing.

That line being so carefully combined with Pecharunt being described as 'sly' and doing actions such as pretending to be a baby Pokemon a la Happiny to gain sympathy, and possibly specifically mimicking /human/ kids in vocalization/etc depending on the tone you read it in... that is a very specific sort of evolutionary strategy that this calls to mind.

The information provided on this page, combined with the fact it apparently was living with an old couple, and the fact that in the story of Momotaro, the couple found the child in a peach floating down the river, and decided to raise the child as their own...

...Game Freak accidentally made a Mythical that is a brood parasite.

This probably sounds like I'm jumping to conclusions, but there are a couple of other things that make me think this is a reasonable conclusion about how a young Pecharunt works.

To explain why, let's look at Pecharunt's movelist, and specifically its egg moves! Also, a quick review of what 'Brood Parasite' means.

Brood parasitism as an evolutionary strategy comes about since raising young takes a lot of time and effort; in the more standard usecases, like in birds, this tends towards an egg being placed in the nest, and the hatching animal outcompeting the host bird's actual young by crying louder and more consistently, or pushing them out of the nest to obtain more resources/attention.

Much like with Pecharunt, this may seem baffling at first (in the cuckoo's case because of the size disparity, and in Pecharunt's case because of the Base Stat Total disparity).

This is not always the case, mind. Asian Koels tend not to push other young out of the nest (though they still do it Sometimes), and the Black-headed duck is notable for having an extremely extended incubation time, after which the duckling hatches and simply wanders off.

'But Pecharunt is a mythical, does it really need that much protection?' Well, let's look at a couple of facts you may or may not already know. Here are Pecharunt's egg moves:

Pecharunt at level one has extremely weak moves, and to make matters worse, Pecharunt is in one of the slowest exp gain groupings. Even worse, it learns new moves every eight levels when a lot of pokemon are getting new stuff to learn every four, and does not get a lot of good coverage moves at that!

To put it in even further context, Pecharunt learns Malignant Chain, its signature move and the one that specifically utilizes binding mochi in a more aggressive fashion than the firing mochi/serving mochi seen in game events and youtube video stuff, at level 48.

With that in mind, its growth rate seems to be in a similar range as something like the dragons.

A lot of Dark types, one of the things that Pecharunt is weak to, specifically live in packs, which would leave smaller, less experienced individuals profoundly vulnerable. To add to this, the areas I suspect for its original range, plus what birdwatchers call vagrancy (an animal appearing way, way outside its normal range), don't just have Dark types, but in many cases /multiple/ species of Dark types. Poochyena, Houndour, Bisharp... all of these are Dark types that live in packs or large groups. Even more notably, Poochyena and Mightyena in the wild have a chance of carrying Pecha Berries, which cure poison. Isn't that something.

These same evolutionary pressures could very reasonably result in Pecharunt's comically high Defense! Mightyena and Poochyena are physical attackers, and a lot of the Dark moves Houndour and Houndoom learn by level up are physical.

On that note: Special Defense notably is /not/ Pecharunt's strong suit, and the weakness to Psychic means that any sufficiently nasty special attacker could rip through the lower HP pool hiding behind the physical defense like wet tissue paper. I'm going to specifically point at Beldum and Metagross and then smile serenely. Steel types in general would be a problem in a situation where it's unable to utilize mochi properly. Which is where the 'subjugation pokemon' aspect, and its ecological strategy after its lengthy maturation, would come in.

So, if Pecharunt were a species, they'd need a lot of extra care and protection to reach anywhere near maturity/full potential, and specifically that sort of care and protection from something or someone that can handle the things it can't whether that's via a more varied movepool/different typing, or the structures and technology of human beings in the pokemon setting (though in another post I mmmaay get into some thoughts about how if Pecharunt is a species, it probably is in decline in the modern Poke-setting. Stay tuned.)

A limited movepool makes sense if ecology-wise, Pecharunt is a parasite, even if one that is significantly more free-living than the ones these sort of things usually apply to; trait loss is pretty common with parasites as stuff gets delegated to hosts/host species. This is a Pokemon that doesn't need to have type coverage because its own ecology is based around commanding other Pokémon that do have the correct type coverage to defend it.

And since that's covered with Binding Mochi, all it needs to be good at is what its movepool is geared towards. That means manipulating people and Pokémon to protect itself, and giving pause and earning sympathy without the use of poison when it can't directly manipulate something. It means running away when /that/ fails, using Astonish to make a target flinch when alone and Parting Shot when with a retainer/bodyguard. Finally, as a last resort once it's old enough/high enough level, it has the arsenal of extremely powerful non-mochi Poison-type moves, Malignant Chain, and Shadow Ball... so, exactly the sort of actions that Pecharunt takes, one for one, in Mochi Mayhem.

It even specifically attempts to flee after Arven and Penny are defeated, which lines up nicely with the possible usecase for Parting Shot!

Prior to learning Shadow Ball and Malignant Chain, it has a TON of moves that specifically take it out of the fight and put another Pokemon into the fight with advantages, like Memento and Parting Shot. This, in my opinion, gives credence to this being the in-universe reasoning for the movepool being what it is.

Memento in /particular/ being a move that goes so far as to risk fainting is uh. Interesting, especially since it's one of the moves learned at level 1!

Oh and speaking about its level one/egg moves: on the topic of Astonish!

I think that move reflects something really fun about the peach pit motif: I think the glowing interiors of the shell serve purposes similar to the false eyes on irl moths + also the pokemon Masquerain (who also famously learns Astonish!). Imo, the false eye flashing analogue feels like a really easy pull to make.

A less move-oriented view of this is also kind of established in the scene with Arven and Penny!

This Pokemon tends to keep the shell closed outside of battle and certain interactions, and the scene specifically uses the kind of duller, slow moving animations for the closed form and then has them launch into the mochi thing, utilizing startle response/reflexive action to advantage, comedic as it is.

The mochi throwing also works really well as a two birds one stone defense measure for Pecharunt ecology-wise, as similar 'throw food that was being eaten in hopes predatory animals will give up chase to eat that' is actually pretty common, and hell, Legends Arceus has shown that bean cakes and muffins work very well against many pokemon.

You may ask, 'Invi, does that mean the shell being fully open for the entire mythical encounter fight mean they're puffing themself out like a cat as an agonistic display for the entire duration of the fight?'

And to that I say...Quite possibly! Other instances of Pecharunt in the story showing itself probably land more into deimatic displays/startle displays like those used by butterflies and stick insects, but the fight instance probably specifically is agonistic, considering it starts making aggressive hissing 'chhhrah' noises, especially in contact with the Loyal Three.

As for the Binding Mochi from an ecology perspective/real life analogue... there's no completely direct analogue irl, but it serves two purposes that are very much common strategies!

What Pecharunt did by making the Loyal Three retainers + what it did to Mossui Town is a pretty open and shut example of bodyguard manipulation.

This is something more commonly seen in parasitoid species, but essentially, it's a subset of behavior alteration in parasites where you get your host to do stuff for you to keep you safe. Real-world examples include wasp species that get caterpillars to thrash if potential predators get too close.

In the Pokemon world, the go-to example would be Nihilego, whose venom has some similarities to Binding Mochi, but is noted as specifically messing with someone's preexisting abilities and impulses, while Pecharunt's alterations of its retainers tend to fall in line with their desires/wishes.

As for the fact it's a food item that gets other things to defend it, that's also common! Aphids and scale insects commonly produce honeydew, and get ants to defend them from predators in return for such.

As for it being mochi? Something along those lines being produced as a starchy substance is also not that far out, as a number of psyllid bugs produce lerps, which consist of sugars and starches. The bell lerp even utilizes a bird, the Bell Miner, to avoid being attacked by other fauna and flora, in return for continued consumption of the aforementioned lerps!

I've focused on the brood parasite section, but overall I will say that after hitting Malignant Chain, it likely becomes a social parasite a la certain types of cuckoo bees, and these would essentially intergrade nicely (can start more actively controlling pokemon/people it's with already, move around more freely than the initially probably very nest-centralized movement to keep itself safe until its defenses can develop more properly).

Misc additional notes:

Interestingly enough, while Pecharunt has the 'no eggs discovered' thing, it does have a set amount of time to hatch in game data and it is specifically way shorter than most other Legendaries/Mythicals minus a couple notable exceptions like Terapagos and the guardian deities; Pecharunt's starts at 20, which is the same as eggs for starters like Bulbasaur and etc.

Like for context, most of the other guys have egg cycles of 80-120. This would match up to a really common phenomenon in brood parasites where the eggs actively hatch faster than the host animal's to get a leg up on resources and etc.

... I actually have even more thoughts specifically about what Pecharunt's habitat/original home was, and also a couple different theories about what it eats, as well as more granular things about like, physical stuff about this guy's body instead of a life cycle overview, but I'm going to save those for later because this is already ungodly long. Uhhh closing thoughts:

Hold Pecharunt like hamburger, and give it one of every food and drink. It's safe to do this.

#pokemon mochi mayhem#mochi mayhem spoilers#pokemon scarlet epilogue spoilers#pokemon scarvio#pecharunt#also i may not have mentioned it but i do genuinely think pecharunt liked the old couple.#i just think it is /also/ a pokemon that probably has a life cycle that is very hostile to other pokemon even when 'nice'#but eh. that's ghost pokemon for you.#if this reads like a pecharunt hitpiece: wrong! brood parasites are some of the cutest animals on earth esp cuckoobirds

14 notes

Text

I don't really think they're like, as useful as people say, but I feel there are genuine usecases -- just not the massive, public-facing, plagiarism-machine garbage fire ones. I don't work in enterprise, I work in game dev, so this goes off of what I have been told, but -- take a company like Oracle, for instance. Massive databases, massive codebases. People I know who work there have told me that their internally trained LLM is really good at parsing plain-language questions about, say, where a function is or where a bit of data is, and outputting a legible answer. Yes, search engines can do this too, but if you've worked on massive datasets -- well, conventional search methods tend to perform rather poorly.

From people I know at Microsoft, there's an internal-use version of co-pilot weighted to favor internal MS answers that still will hallucinate, but it is also really good at explaining and parsing out code that has even the slightest of documentation, and can be good at reimplementing functions, or knowing where to call them, etc. I don't necessarily think this use of LLMs is great, but it *allegedly* works and I'm inclined to trust programmers on this subject (who are largely AI critical, at least wrt chatGPT and Midjourney etc), over "tech bros" who haven't programmed in years and are just execs.

I will say one thing that is consistent, and that I have actually witnessed myself: most people working on enterprise code seem to indicate that LLMs are really good at writing boilerplate code (which isn't hard per se, but is extremely tedious), and also really good at writing SQL queries. Which, that last one is fair. No one wants to write SQL queries.

To be clear, this isn't a defense of the "genAI" fad by any means. chatGPT is unreliable at best, and straight up making shit up at worst. Midjourney is stealing art and producing nonsense. Voice labs are undermining the rights of voice actors. But, as a programmer at least, I find the idea of how LLMs work to be quite interesting. They really are very advanced versions of old text parsers like you'd see in old games like ZORK, but instead of being tied to a prewritten lexicon, they can actually "understand" concepts.

I use "understand" in heavy quotes, but rather than being hardcoded to relate words to commands, they can connect input written in plain English (or other languages, though I'm sure they might struggle with ones sufficiently different from English, given that CompSci, even tech produced outside the West, is very English-centric) to concepts within a dataset. They can then tell you about the concepts they found in a way that's easy to parse and understand. The reason LLMs got hijacked by like, chatbots and such, is because the answers are so human-readable that, if you squint and turn your head, it almost looks like a human is talking to you.

I think that is conceptually rather interesting tech! Ofc, non-LLM machine learning algos are also super useful and interesting - which is why I fight back against the use of the term AI. genAI is a little bit more accurate, but I like calling things what they are. AI is such an umbrella that it includes things like machine learning algos that have existed for decades. I don't think MOST people are against those, but I see people encounter like, a machine learning tool from before the LLM craze (or someone using a different machine learning tool) and push back as if it were genAI. To be clear, that's the fault of the marketing around LLMs and the tech bros pushing them, not the general public -- they were poorly educated, but on purpose, by said PR lies.

Now, LLMs I think are way more limited in scope than tech CEOs want you to believe. They aren't the future of public internet searches (just look at google), or art creation, or serious research by any means. But, they're pretty good at searching large datasets (as long as there's no contradictory info), writing boilerplate functions, and SQL queries.

Honestly, if all they did was SQL queries, that'd be enough for me to be interested. Fuck that shit. (A little hyperbolic/sarcastic on that last part, to be clear.)

ur future nurse is using chapgpt to glide thru school u better take care of urself

154K notes

Text

11/04/24

A warm Sunday afternoon beneath a tree—I recline all a-supine, sunbeams cutting through leafcover—I could almost use a hayblade in my mouth

to complete the facade. These kindly, yellow months: I live right in these times with worry nokinds, anxiety's kithandkin absent, no anger, anguish, allsoforth...

Clouds curl like vinetendrils around the sky, sinking around and into each and every other in dovesoft embrace, white as same yet cold as the palerime

watermatter of whose stuff they are made. As I remain in place, all else remains in back/forth flux: leafcover shudders, cumulus gives way to cirrocumulus

gives way to a brightwide sapphiric glow (she of Lesbos, I'm sure, would relish this microbreeze. It's pliable, but with a leaden constancy)--my eyes shut.

I miss this asceticism, transcending-and-renouncing of desires (even just for this subarboric moment)— could it lead to a kind of creative apotheosis? Maybe,

but it's just a hypothesis. The hippies might have it right, in spirit anyway: tuning out from the drag, man, and hooking into the Great Pyramidsystem

we all came from; latterday hippies who drink organically freerange kombucha (made only with veggiefed SCOBYs, of course); a freerun kind of

freelove (sober, unhormonated 'cuz that artificial shit ain't in the Great Design); the biggest baggest pants you'll ever see—yet, even still,

these hippies might seem hep, in the know with Happenings (physical, in the astral plane, et- ceteraetcetera) but these latterday hippies still live

on their phones; still live hyperconnected in the same usecases, posting the same posts—they're pluggedrightin, wiredrightup—L.D.H.s buying the same things I might

from the same places, eating the same undersized and overpriced food—in the end, it's a matter of degrees. The hippie-writer-ascetic-treehugger life calls, but

the line is dead, in this age of waves and radiation. I open up my eyes, see leafcover a-shiver—new clouds, new shapes, these ones not so viney:

ooh, a sailboat!, that one's a hand!, that one looks like a brand-spanking-new Black & Decker saw!, et- ceteraetcetera. I'm happy where-and-when I am,

under this tree—my own lifetime beckons me on, and I bet those hippies never had any coffee that was quite so good as mine.

#daily poem#thisn's about... i dunno#sometimes you gotta bully hippy-aesthetic people as a little treat

1 note

Text

Updates

The past few weeks I have more seriously committed to beginning my VFX journey as an addition to my design and artistic toolset. At the moment I am following along with various tutorials, trying to see what is possible within the programs, slowly building miscellaneous skills until I know exactly what I want to apply them to. I have one definite usecase for these skills, which is to make an album cover for my little brother. Eventually I would like to be able to make his music videos using these skills as well. What I've found is that the skills seem relatively easy to acquire, and you are mostly limited by your own creative/conceptual scope. There is also the more real limitation of computing power and time. In Unreal Engine I have gained a basic understanding of:

-Navigating the viewport and file systems

-Understanding that different creation modes offer different capabilities (e.g. Third Person mode comes with blueprints for playable third-person experiences, whereas film mode does not have these functions but seems to be more optimized for camera work.)

-The world lighting settings: light direction for the sun lamp itself; exponential height fog for volumetric control, good for godrays etc.; atmosphere settings, which change the way light is scattered through the space. The Rayleigh and Mie scattering settings change this and subsequently impact the colour of the atmosphere (this could also be affected by the temperature of the sun lamp).

-Camera presets including Super 8, focal lengths, exposure, bloom.

-Developing a fisheye preset by applying a post process volume.

-Importing and posing MetaHumans, using IK boxes to simplify this.

In blender:

-Getting more familiar with the compositing channel to add bloom, flares, grain, lens distortion

-Applying a low poly sim to a high poly mesh via proxy simulation: low poly sim > bake > bind to higher poly mesh

-Procedural shader editing

0 notes

Text

So let's say you execute

$ cdexec foo

but `foo` doesn't exist. What should the error message be?

cdexec: error changing directory: foo: No such file or directory

cdexec: error changing directory: foo: ENOENT: No such file or directory

cdexec: error changing directory: foo: ENOENT

More abstractly, which way is best for reporting errors from the system?

...: <perror output>

...: <errno name>: <perror output>

...: <errno name>
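Here is a minimal C sketch that produces all three shapes from one place, which makes it easy to compare them. `errno_name_of` is a hypothetical stand-in for a real errno-to-name table such as the `errnoname` mentioned further down, and it only covers a few values. `strerror()` returns the same text `perror` would print.

```c
#include <errno.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical stand-in for a full errno-to-name table; only a few values. */
const char *errno_name_of(int err) {
    switch (err) {
    case ENOENT:  return "ENOENT";
    case EACCES:  return "EACCES";
    case ENOTDIR: return "ENOTDIR";
    case ENOMEM:  return "ENOMEM";
    default:      return NULL;
    }
}

enum error_style {
    STYLE_PERROR,          /* ...: <perror output>               */
    STYLE_NAME_AND_PERROR, /* ...: <errno name>: <perror output> */
    STYLE_NAME_ONLY        /* ...: <errno name>                  */
};

/* Format "<context>: <detail>: ..." into buf in the chosen style. If the
   errno has no known name, fall back to the strerror() text alone. */
int format_error(char *buf, size_t size, const char *context,
                 const char *detail, int err, enum error_style style) {
    const char *name = errno_name_of(err);
    const char *text = strerror(err);
    if (name == NULL || style == STYLE_PERROR) {
        return snprintf(buf, size, "%s: %s: %s", context, detail, text);
    }
    if (style == STYLE_NAME_AND_PERROR) {
        return snprintf(buf, size, "%s: %s: %s: %s", context, detail, name, text);
    }
    return snprintf(buf, size, "%s: %s: %s", context, detail, name);
}
```

A hypothetical cdexec could call this right after a failed `chdir()`, passing the saved `errno` as `err` and `"cdexec: error changing directory"` as the context. Choosing the style at run time, for instance from an environment variable, would be a small extension.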

Personally, I prefer the last one, because:

Including the errno name is very important for one of the biggest users of command-line tools: other programs such as scripts. ENOENT is the standard, international name for the error. The error is ENOENT. The output from perror is a localized human-friendly hint.

Ditto for human users who have become familiar enough with the underlying system - I've seen developers complain about having to figure out what error code a `perror` string maps back to. I have a good enough memory that this doesn't trouble me most of the time, but I still waste a memorized lookup table on it.

The human-friendly error string is misleading in the edge cases. See, when I see "no such file or directory", I know that there might not be any file or directory involved at all. Did you? For example, some APIs in the Linux network stack report ENOENT for things that aren't in the file system. After all, to a kernel developer living in a world that has missed the value of exposing all things on the "file" system, it makes sense that ENOENT means "no such entity" rather than specifically "no such file or directory". So you run an `ip route ...` command and end up wasting time and energy trying to figure out what file could possibly be missing.

To be clear, this is orthogonal to "should my source code just call `perror`, or should I consider adding something like `errnoname`?", because your libc could easily be made to extend or change the perror output to include errno names, based on either a compile-time flag or, for example, an environment variable. You could also compile with `perror` renamed or relinked to something else. So we could in principle achieve any of these alternatives while still just calling `perror` in the source.

This is also orthogonal to "but for many users (at least in the vast majority of cases), the human-readable error message is helpful". Just because something is good to have, doesn't mean every single program should contain the code to do it. Turning an error into human-friendly guidance sure looks to me like a composable piece of functionality that can be implemented separately and then composed on top of all programs. I'd rather have a shell that can automatically pipe the standard error of my programs through a helper tool which can detect names like `ENOENT` and do whatever helpfulness the user wants and the usecase demands. Meanwhile, the error strings which perror produces don't even do a great job of being human-friendly guidance - new users, and even just busy non-experts in a hurry, benefit from detailed hints, typo-checking, alternative suggestions, and so on.
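The composable helper described above does not exist yet, so this is only a minimal C sketch of its core. It is a filter that copies its input and adds a hint after any line containing a standalone errno name. The table, the function names, and the hint format are all hypothetical. The hint text comes from `strerror()`, the same text `perror` prints.

```c
#include <ctype.h>
#include <errno.h>
#include <stdio.h>
#include <string.h>

struct errno_entry {
    const char *name;
    int value;
};

/* Deliberately short, hypothetical table; a real helper would list every errno. */
static const struct errno_entry known_errnos[] = {
    {"ENOENT", ENOENT}, {"EACCES", EACCES}, {"ENOTDIR", ENOTDIR}, {"EEXIST", EEXIST},
};

static int is_word_char(char c) {
    return isalnum((unsigned char)c) || c == '_';
}

/* Return the errno value of a standalone errno name found in `line`
   (checked in table order), or 0 if none is present. */
int find_errno_name(const char *line) {
    size_t count = sizeof known_errnos / sizeof known_errnos[0];
    for (size_t i = 0; i < count; i++) {
        const char *name = known_errnos[i].name;
        size_t len = strlen(name);
        for (const char *p = strstr(line, name); p != NULL; p = strstr(p + 1, name)) {
            int starts_word = (p == line) || !is_word_char(p[-1]);
            int ends_word = !is_word_char(p[len]);
            if (starts_word && ends_word) {
                return known_errnos[i].value;
            }
        }
    }
    return 0;
}

/* Copy `in` to `out`, adding a human-friendly hint after any line that
   mentions a known errno name. Lines longer than the buffer are handled
   in pieces, which is fine for a sketch. */
void annotate_stream(FILE *in, FILE *out) {
    char line[4096];
    while (fgets(line, sizeof line, in) != NULL) {
        fputs(line, out);
        int err = find_errno_name(line);
        if (err != 0) {
            size_t len = strlen(line);
            if (len == 0 || line[len - 1] != '\n') {
                fputc('\n', out); /* keep the hint on its own line */
            }
            fprintf(out, "  hint: %s\n", strerror(err));
        }
    }
}
```

Wrapped in a trivial `main` that calls `annotate_stream(stdin, stdout)`, it could sit at the end of a pipeline such as `cdexec foo 2>&1 | errhint`, where `errhint` is a made-up name for the compiled filter. Typo checks and suggestions would layer on top of the same name lookup.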

So when I think about the external error string as an API first, the `errno` name seems essential, and then the `perror` output seems superfluous and worse for parsing.

But I do recognize that the perror output can always be stripped off - if you know the format enough to unambiguously isolate the perror noise from the rest of the error, then yes it's annoying, but it's very surmountable.
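Here is a minimal C sketch of that "annoying, but very surmountable" stripping. It assumes the message ends in exactly `: <strerror text>`, as in the second format above. `strip_strerror_suffix` is a hypothetical name. The approach only holds if the reader and the program that printed the message agree on locale and libc, since that text is localized.

```c
#include <errno.h>
#include <string.h>

/*
 * If `message` ends with ": " followed by the strerror() text for `err`
 * (typically a saved errno value), cut that suffix off in place and
 * return 1; otherwise leave the message alone and return 0.
 */
int strip_strerror_suffix(char *message, int err) {
    const char *text = strerror(err);
    size_t msg_len = strlen(message);
    size_t text_len = strlen(text);
    if (msg_len < text_len + 2) {
        return 0;
    }
    char *suffix = message + (msg_len - text_len - 2);
    if (suffix[0] != ':' || suffix[1] != ' ' || strcmp(suffix + 2, text) != 0) {
        return 0;
    }
    *suffix = '\0';
    return 1;
}
```

Passing "cdexec: error changing directory: foo: ENOENT: " followed by the `ENOENT` text leaves "cdexec: error changing directory: foo: ENOENT". A message without that suffix is returned untouched.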

I also recognize that in the world we live in right now, users aren't going to run my programs in a shell that automatically translates their errors into something more helpful in a user-configurable way. That doesn't exist yet, I haven't written it yet nor spread the vision, and when we do write it, adoption will lag behind a lot. So when a new user runs a program directly, sees `ENOENT`, and has no idea what it means, it doesn't do them much good for me to say "well this wouldn't be a problem if the rest of your system was set up how I envision". At best I can hope that the error code, combined with my carefully chosen prefixes like "error changing directory", do a good job of getting them to the right answer through Google.

So I find myself on the fence... I want to do the third option - the third option is the best architecture, and the future of CLI is to be even more of an API and less of a UI - but I know the second option would be more helpful for a decent amount of real users right now.

5 notes

Text

Apologies for necro-posting, but I looked through the notes and while people have given you some pros and cons, no one actually walked you through getting it up and running. I might as well give my best shot at helping someone evacuate from Windows 11, so here is Jack Joy's Explanation and Guide to Linux.

Chapter 0: The Pitch for the Penguin

Linux is all about freedom. Windows and macOS are walled gardens that are slowly stripping control away from their users and extracting more and more from them; Linux does not do that shit. Free and Open Source Software is the name of the game in Linux, as that is mostly what is being developed in that space by an army of volunteers passionate about keeping the PC personal. That comes with some caveats, though. A lot of the software you use is proprietary, and while some of it is still available on Linux, most of it is not. Some of this you'll expect. Some of it you wouldn't even think is proprietary, and losing access to it will surprise you. The Linux community has done its best to provide solutions for a lot of these, and you will find that a lot of what you want to use has some alternative on Linux, but some things will just be fucked. You trade convenience for control.

Chapter 0.5: When you are a King very few choices are simple

If the pitch convinced you, then congrats, you now have one of the hardest decisions to make as a Linux user: what distribution of Linux are we using? Distributions (or distros for short) are all different OSes that run on the Linux kernel, the thing that gives your machine thought and makes it possible to run the hit video game Team Fortress 2 (2007). There are a lot of Linux distributions, all of which do weird things with it, but my personal picks are as follows.

Linux Mint

Linux Mint is the gold standard for a stupid-simple Linux distro. It just works*, it comes with a DE (desktop environment) reminiscent of Windows 7 so adjustment should be minimal, and overall it is very uncomplicated. It's a bit bland tho. *(Things still break sometimes.)

Ubuntu

Ubuntu, meanwhile, you've probably already heard of. Think of it as the macOS of Linux. It has the most company support, its DE, called GNOME, is very macOS-like in its design language, and it's incredibly stable, but also very poor in customization. If something says "tested on Linux", a lot of the time it means tested on Ubuntu.

EndeavourOS

EndeavourOS is my Linux distribution of choice. It's based on Arch Linux, which is what SteamOS on the Steam Deck is built on, and as such it has a lot of nifty Arch Linux niceties, like the Arch User Repository, and KDE Plasma as its DE. It tries to combine being user-friendly with letting you tinker with everything. It is on the cutting edge of Linux, but that also means stuff CAN break more often.

These are just my picks. Some other notable beginner-friendly Linux distros that might pique your interest are Pop!_OS, Manjaro, elementary OS, and probably a bunch of others that I forgot or don't even know exist until someone complains at me for forgetting them after I write this guide. Choice, my friend. You have a lot of it, so think about what you want from your PC and go with the distro that seems best suited for your usecase, whether that's a gaming machine or just using Firefox and LibreOffice.

Chapter 1: Performing OS Replacement Therapy on your PC

So, you know what Linux Distro you are gonna use, you know you are ready to do this, so how are we doing this? Pretty simple in all honesty. We only need:

A flash drive (USB preferred; SD or microSD card readers can get FUNKY)

Balena Etcher

The ISO of the flavor of Linux you want to use

Some knowledge of how to navigate your computer's BIOS

And, preferably, a secondary boot device (i.e., a second SSD in your PC)

Plug your USB stick into your PC and flash your ISO onto it with Balena Etcher. If you've got another USB stick to spare, it is a good idea to flash an image of Windows 7 onto it. Think of that second USB stick as an "In Case of Emergency, Break Glass" type of safety precaution. We don't wanna have to use it, but it's good to have just in case.

Reboot your PC with your Linux USB stick plugged in, and get into your BIOS. There you are going to move that USB stick up the boot order until it comes before Windows. If that is somehow not an option, you might have to fuck around with your PC, as there might be some Secure Boot shenanigans going on. Consult DuckDuckGo about your specific computer; if there is a hiccup, someone has already figured it out. If you were able to move your USB stick up the boot order, save and exit the BIOS, and hopefully things should start happening. To confirm, look at the screen: if it does something new (and potentially scary looking) instead of the normal Windows boot sequence, it is probably doing good.

After a while you should be spat back out into a "live environment" version of your OS. This version of the OS exists only for this boot and is loaded from your USB stick. There should be an installer inside that live environment, after which it is mostly smooth sailing. Follow the installer, but pay REAL GOOD ATTENTION to what it is saying when it asks where it should be installed, as it will create a partition somewhere on your PC. If you have an SSD that isn't being used by Windows, I recommend giving it that, as you'll be able to hand that entire drive to Linux without problems. If you don't have an extra SSD, you will have to cleave a chunk off the one drive Windows lives on. You can just give Linux 50% of the drive if you want to be conservative and still retain the ability to go back to Windows. But should you feel particularly pissed, or want to make sure you have no escape back to Windows without reinstalling it via that second USB stick, then torch the damn thing.

Once the installer is done, it will either ask you to reboot your PC or just do it itself. After that, if everything went right, you will complete your first boot of Linux and end up in the actual version of the OS you installed. If you made it there, congratulations, and welcome to Linux. You might want to update the first time you boot, but after that, feel free to poke around to see what you have installed. Get acquainted with your new desktop, use some of the artisanal software that is FOSS, and if you are feeling spicy, run some commands in the terminal (as long as you know what they do: please do not run sudo rm -rf / because you saw it in a funny Linux meme; that will wipe your entire OS). I hope this guide has been helpful ^w^.

Hey. Gonna gamble here. Can someone explain to me the pros and cons of Linux as a whole, and maybe (possibly) tell me how one might go about getting something set up?

475 notes

Text

India greenlights Huawei as CEO warns of a tough year – Telecoms.com

The Indian Government has said it will not exclude Huawei from its 5G trials, as the country sets a 2021 deadline to launch commercial service. The last week has certainly sent some mixed signals from the under-fire Chinese infrastructure equipment vendor. In a New Year’s message to staff, Rotating Chairman Eric Xu suggested revenues over the course of 2019 increased 18% year-on-year, though 2020 will see much more difficult market conditions. The news emerging from India is a win, though 2019’s twists and turns have created a very difficult playing field for Huawei.

In India, Government officials stopped short of stating Huawei would be allowed to sell equipment to the telcos once commercial services are up and running, but this is as good as the vendor could have hoped for to date. Huawei will be permitted to work alongside Indian telcos to build business cases and use cases for the upcoming 5G euphoria. This is an incredibly attractive market for equipment vendors, as the number of subscriptions and the vast geographical spread paint the potential for huge profits, but it is not a market without problems. The disruption caused by the introduction of Reliance Jio and its aggressive pricing is forcing competitors into financial embarrassment, while the Indian Government has never completely figured out the spectrum conundrum.

Spectrum is an incredibly valuable resource for the telcos, and while complaints about the price are very common, most of the time the cheques are signed, bitterly. This is not the case in India, where 40% of spectrum licences have gone unsold at auction since 2010. For such a valuable, and scarce, resource to remain unclaimed, the Government must be asking too much for licences. In recent weeks, the Government has said it would ignore industry complaints and persist with the current pricing regime for the 3.3-3.6 GHz spectrum band.
What impact this tussle between Government and industry has on the 2021 deadline for commercial 5G services in the country remains to be seen. That said, the sheer size and aggressive nature of India’s digital transformation journey over the last three years make this a very attractive market for any of the equipment vendors. And Huawei may well need a boost if Xu’s 2020 pessimism is accurate. In the New Year’s message, Xu suggested to staff that revenues for 2019 could be up as much as 18%, to $121.72 billion, though the first half of 2020 is likely to show slower growth. Continued presence on the US Entity List is likely to have a significant impact on the consumer division, as the newest Mate 30 smartphone is currently being sold without any Google services or the latest version of the Android OS installed. The smartphone segment has proven to be very profitable for Huawei in recent years, as it was one of the few OEMs that could grow sales during a global slowdown. Huawei is currently ranked second in global smartphone market share, though this will be almost impossible to maintain as the realities of US friction hit home. It would not be surprising to see a monstrous loss of market share outside of China. While this does not paint the most attractive of pictures, Huawei has proven to be a resilient company. The US ban seems to be having little impact on the operations of the firm; Huawei has manoeuvred its supply chain quite effectively, and more countries seem to be ignoring US demands to implement bans.

0 notes

Note

Hey, so I love all your resources, good stuff! Can you please point me to a great place to learn JavaScript object-oriented programming (all that prototype stuff, etc.)? I know HTML and CSS and some basic JavaScript for front-end stuff, but would like to learn more. :) Any suggestions?

What are some resources to learn Object Oriented JavaScript?

DAY 772

Hi @nososecretlyhidden,

I’ve actually just been working to improve my understanding of this.

As I posted the other day, I really love Koushik Kothagal’s “In-Depth” videos. I just finished watching his series on objects and prototypes, and found it extremely helpful for understanding the nuts and bolts:

YouTube/Java Brains - JavaScript Objects and Prototypes In-Depth

I wish his series had one more video to tie it all together with a “real” inheritance example, but this MDN article does a good job of stating that more explicitly:

MDN - Inheritance in JavaScript

The MDN examples use `Object.create()`, which Koushik doesn’t mention. MPJ has a video which helps explain what this does and why:

YouTube/FunFunFunction - Object.create()
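To make the `Object.create()` idea a bit more concrete, here is a minimal sketch in TypeScript (it is plain JavaScript once the type annotations are removed). The objects `animal`, `dog`, and `puppy` are hypothetical examples, not taken from the videos or the MDN article. The point it tries to show: `Object.create(proto)` copies nothing; it links a new object to `proto`, and any property lookup that misses on the object walks up that prototype chain.

```typescript
// Hypothetical objects for illustration; not from the linked videos or MDN.
interface Animal {
  name: string;
  speak(): string;
}

// Shared behaviour lives on a plain "prototype" object.
const animal: Animal = {
  name: "generic animal",
  speak() {
    return `${this.name} makes a sound`;
  },
};

// Object.create links dog -> animal; no properties are copied.
const dog: Animal = Object.create(animal);
dog.name = "Rex"; // an own property that shadows animal.name

// Override speak on dog, but reuse the parent's version with dog as `this`.
dog.speak = function () {
  return animal.speak.call(this) + " (woof)";
};

// A third level: puppy -> dog -> animal. puppy has no own properties at all.
const puppy: Animal = Object.create(dog);

console.log(dog.speak()); // Rex makes a sound (woof)
console.log(puppy.speak()); // Rex makes a sound (woof), both found via the chain
console.log(Object.getPrototypeOf(puppy) === dog); // true
console.log(Object.prototype.hasOwnProperty.call(puppy, "name")); // false
```

Note there is no class anywhere here: "inheritance" is just delegation along the chain, which is the sense in which all inheritance in JS is prototypal (ES2015 `class` syntax builds the same kind of chain under the hood).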

Those first few resources are good places to get a solid understanding of the “why” of OO JavaScript, and I also watched a series that focuses more on practical use cases:

YouTube/LearnCode.academy - Modular JavaScript

I want to add a caveat that this last guy seems not to understand/care about all the details as much as Koushik or MDN. In particular he seems to use the terms “classical inheritance” and “prototypal inheritance” incorrectly [as far as I can tell, ANY form of inheritance in JS is prototypal, and “classical inheritance” specifically can’t happen in this language at all].

That said, he seems to have a lot of practical experience in how and when to use different code patterns, and I found his examples of things like Pub/Sub very enlightening.
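Since Pub/Sub comes up, here is a minimal sketch of the pattern in TypeScript, assuming a simple in-memory, synchronous message bus. The `PubSub` class and its method names are hypothetical and not taken from the LearnCode.academy series; real-world versions often add async delivery, wildcard topics, or error isolation between handlers.

```typescript
type Handler<T> = (payload: T) => void;

// A tiny synchronous publish/subscribe bus (hypothetical, for illustration).
class PubSub<T> {
  private readonly topics = new Map<string, Set<Handler<T>>>();

  // Register a handler for a topic; returns a function that unsubscribes it.
  subscribe(topic: string, handler: Handler<T>): () => void {
    const handlers = this.topics.get(topic) ?? new Set<Handler<T>>();
    handlers.add(handler);
    this.topics.set(topic, handlers);
    return () => {
      handlers.delete(handler);
    };
  }

  // Deliver payload to every current subscriber of topic; returns how many were called.
  publish(topic: string, payload: T): number {
    const set = this.topics.get(topic);
    if (!set) return 0;
    // Copy first so handlers that (un)subscribe during delivery don't disturb the loop.
    const handlers = Array.from(set);
    for (const handler of handlers) {
      handler(payload);
    }
    return handlers.length;
  }
}

// Usage: publisher and subscriber share only the topic name, not references to each other.
const bus = new PubSub<string>();
const received: string[] = [];
const unsubscribe = bus.subscribe("news", (msg) => {
  received.push(msg);
});
bus.publish("news", "hello");
unsubscribe();
bus.publish("news", "ignored");
console.log(received); // received now holds only "hello"
```

The design choice that makes this useful for modular code is the indirection: modules never call each other directly, so one can be removed or replaced without touching the others.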

Good luck!

43 notes

Text

Engineer moment.

They're talking about how it renders text and how scrolling, back/forward navigation and rendering feel, not how it looks. These things are harder to describe and more ephemeral parts of the user experience.

A big part of this is websites being optimized for chromium, but not for gecko.

Many users will never encounter slowness per se, because either their system is fast enough or their configuration is light enough for their system, but the clunkiness is unavoidable.

Scrolling genuinely "doesn't feel right". Text doesn't look right. Websites don't look right.

I have a pretty decent system, with more RAM than Firefox will ever need, and to this day I only ever use Firefox for video: doing multiple picture-in-picture when a major political event, like the Russian invasion of Ukraine, is being streamed from multiple sources and I need 30+ video feeds on six monitors. It handles video really, really well, and multi-PiP is the most efficient use of screen space.

Outside of that, I've genuinely found no use for Firefox, since I often have three or four windows open, each with maybe 30 tabs.

Firefox just doesn't cut it for my usecase.

A major problem Firefox has culturally is people who just really like the software and cannot imagine anybody feeling any different about it.

Maybe out of preference, maybe because they like open source, but a lot of the people using and developing Firefox don't accept that Firefox can be meaningfully improved, and so Firefox is not improved.

This fact is why I don't use Firefox anymore.

I don't see a future for it as anything other than the symbolic competition kept alive by Google's money so that Google isn't swallowed alive by anti-trust laws.

I don't see it getting better. Problems I have posted years ago have gone unaddressed, because "things are fine as they are".

My problem overall with open source is it claims to be a vision of what computers could be and instead chooses to be a flanderization of many of the worst tendencies of software development.

It's an incredibly beautiful promise, but it is very rarely kept.

Maybe it excels on some technical level but is painful to use. Maybe it's pretty, but it's incredibly fragile. Maybe it's robust and pretty and good to use, but it runs like dog-snot.

There always seems to be some sort of weird monkey's paw deal with a lot of projects, some sort of suck that you just have to accept.

On the bright side, open source projects are immortal. That means no matter what happens, something will live on forever, and that is something I do appreciate.

"Firefox sucks" "firefox is clunky" "firefox doesn't work" with all due respect what the fuck are you talking about

#Click expand because I don't agree with these guys#Firefox#has problems which aren't addressed#And everybody refuses to acknowledge that they're real at all#You need to overcome this#Or your software is going to rot forever and just be second-best to chromium#Which itself has colossal room for growth change and improvement#At this point I'm honestly starting to think the web as we think of it is doomed#It's just too kludgy and messy and hard to maintain#Every time new performance comes along#Watch web developers absolutely squander it#Fuck this gay earth

33K notes

Text

We don’t need a healthcare platform

This text was triggered by discussions on Twitter in the wake of a Norwegian blog post I published about health platforms.

I stated that we need neither Epic, nor OpenEHR, nor any other platform to solve our healthcare needs in the Norwegian public sector. Epic people have not responded, but the OpenEHR crowd have been actively contesting my statements ever since. And many of them don’t read Norwegian, so I’m writing this to them and any other English speakers out there who might be interested. I will try to explain what I believe would be a better approach to solve our needs within the Norwegian public healthcare system.

The Norwegian health department is planning several gigantic software platform projects to solve our health IT problems. And while I know very little about the healthcare domain, I do know a bit about software. I know for instance that the larger the IT project, the larger the chances are that it will fail. This is not an opinion, this has been demonstrated repeatedly. To increase chances of success, one should break projects into smaller bits, each defined by specific user needs. Then one solves one problem at a time.

I have been told that this cannot be done with healthcare, because it is so interconnected and complex. I’d say it’s the other way around. It’s precisely because it is so complex, that we need to break it into smaller pieces. That’s how one solves complex problems.

Health IT professionals keep telling me that my knowledge of software from other domains is simply not transferrable, as health IT is not like other forms of IT. Well, let’s just pretend it is comparable to other forms of IT for a bit. Let’s pretend we could use the best tools and lessons learned from the rest of the software world within healthcare. How could that work?

The fundamental problem with healthcare seems to be that we want all our data to be accessible to us in a format that matches whatever “health context” we happen to be in at any point in time. I want my blood test results to be available to me personally, and to any doctor, nurse or specialist who needs them. Yet what the clinicians need to see from my test results and what I personally get to see will most likely differ a lot. We have different contexts, yet the views need to be based on much of the same data. How does one store common data in a way that enables its use in multiple specific contexts like this? The fact that so many applications will need access to the same data points is possibly the largest driver behind this idea that we need A PLATFORM where all this data is hosted together. In OpenEHR there is a separation between a semantic modelling layer and a content-agnostic persistence layer. So all data points can be stored in the same database(s), even in the same tables/collections within those databases. The user can then query these databases and get any kind of data structure out, based on the OpenEHR archetype definitions in the layer on top. So they provide one platform with all health data stored together in one place, yet the user can access data in the format they need given their context. I can see the appeal of this. This solves the problem.

However, there are many reasons not to want a common platform. I have mentioned one already: size itself is problematic. A platform encompassing “healthcare” will be enormous. Healthcare contains everything from nurses in the dementia ward, to cancer patients, to women giving birth, orthopaedic surgeons, and families of children with special needs… the list goes on endlessly. If we succeed in building a platform encompassing all of this, and the platform needs an update, can we go ahead with the update? We’d need to re-test the entire portfolio before daring to make any changes. What happens if there is a problem with the platform (maybe after an upgrade)? Then everything goes down. The more things are connected, the riskier it is to make changes. And in an ever-changing world, both within healthcare and IT, we need to be able to make changes safely. There can be no improvement without change. Large platforms quickly become outdated and hated.

In the OpenEHR case, the fact that the persistence layer has no semantic structure will necessarily add complexity when optimising for context-specific queries. Looking through the database for debugging purposes will be very challenging, as everything is stored in generic constructs like “data” and “event”. Writing queries is so complex that the recommendation is not to write them by hand, but rather to create them with a dedicated query-builder UI. Here is an example of a query for blood pressure:

let $systolic_bp="data[at0001]/events[at0006]/data[at0003]/items[at0004]/value/magnitude"
let $diastolic_bp="data[at0001]/events[at0006]/data[at0003]/items[at0005]/value/magnitude"

SELECT obs/$systolic_bp, obs/$diastolic_bp
FROM EHR [ehr_id/value=$ehrUid]
CONTAINS COMPOSITION [openEHR-EHR-COMPOSITION.encounter.v1]
CONTAINS OBSERVATION obs [openEHR-EHR-OBSERVATION.blood_pressure.v1]
WHERE obs/$systolic_bp >= 140 OR obs/$diastolic_bp >= 90

This is, needless to say, a very big turn-off for any experienced programmer.

The good news, though, is that I don’t think we need a platform at all. We don’t need to store everything together. We don’t need services to provide our data in all sorts of context-dependent formatting. We can both split health up into smaller bits, and simultaneously have access to every data point in any kind of contextual structure we want. We can have it all. Without the platform.

Let me explain my thoughts.

Health data has the advantage of naturally lending itself to being represented as immutable data. A blood test will be taken at a particular point in time. Its details will never change after that. Same with the test results. They do not change. One might take a new blood test of the same type, but this is another event entirely with its own attributes attached. Immutable data can be shared easily and safely between applications.

Let’s say we start with blood tests. What if we created a public registry for blood test results? Whenever someone takes a blood test, the results would need to be sent to this registry. From there, any application with access can query for the results, or subscribe to test results of a given type. Some might subscribe to data for a given patient, others to tests of a certain type. Any app that is interested in blood test results can receive a continuous stream of relevant events. Upon receipt of an event, it can then apply any context-specific rules to it and store it in whatever format is relevant for that application. Every app can have its own context-specific data store.
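A minimal, in-memory sketch of such a registry, written in TypeScript. Everything in it is an assumption for illustration: the post does not specify a data model, transport, or API, so the field names (`patientId`, `testType`, `takenAt`, …) and the `BloodTestRegistry` class are hypothetical, and a real registry would also need persistence, access control and a network protocol. It shows the two ideas from the paragraphs above: results are immutable (frozen and append-only), and consumers can either query past results or subscribe with a filter.

```typescript
// Hypothetical shape of one blood test result; a real schema would be far richer.
interface BloodTestResult {
  readonly id: string;
  readonly patientId: string;
  readonly testType: string; // e.g. "hemoglobin" (illustrative, not a real coding system)
  readonly value: number;
  readonly unit: string;
  readonly takenAt: string; // ISO 8601 timestamp
}

type Filter = (r: BloodTestResult) => boolean;
type Listener = (r: BloodTestResult) => void;

// Hypothetical registry: the single "owner" of blood test results.
class BloodTestRegistry {
  private readonly results: BloodTestResult[] = [];
  private readonly subscribers: { filter: Filter; listener: Listener }[] = [];

  // Append-only: a stored result is frozen and never updated in place.
  record(result: BloodTestResult): void {
    const frozen = Object.freeze({ ...result });
    this.results.push(frozen);
    // Push the new event to every subscriber whose filter matches it.
    for (const { filter, listener } of this.subscribers) {
      if (filter(frozen)) listener(frozen);
    }
  }

  // Pull-style access: any app with access can query past results.
  query(filter: Filter): BloodTestResult[] {
    return this.results.filter(filter);
  }

  // Push-style access: e.g. all results for one patient, or all of one test type.
  subscribe(filter: Filter, listener: Listener): void {
    this.subscribers.push({ filter, listener });
  }
}

// Usage with made-up data.
const registry = new BloodTestRegistry();
const forPatient1: BloodTestResult[] = [];
registry.subscribe(
  (r) => r.patientId === "p-1",
  (r) => {
    forPatient1.push(r);
  },
);
registry.record({ id: "1", patientId: "p-1", testType: "hemoglobin", value: 13.5, unit: "g/dL", takenAt: "2024-01-01T09:00:00Z" });
registry.record({ id: "2", patientId: "p-2", testType: "hemoglobin", value: 12.1, unit: "g/dL", takenAt: "2024-01-01T10:00:00Z" });
console.log(forPatient1.length); // 1
console.log(registry.query((r) => r.testType === "hemoglobin").length); // 2
```

Because stored results can never change, handing the same object to many subscribers is safe: no consumer can alter what another consumer sees.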

Repeat for all other types of health data.

The beauty of an approach like this is that it enables endless functionality and can solve enormously complex problems without anyone needing to deal with the “total complexity”.

The blood test registry will still have plenty of complexity in it. There are many types of blood tests, many attributes that need to be handled properly, but it is still a relatively well defined concrete solution. It has only one responsibility, namely to be the “owner” of blood test results and provide that data safely to interested parties.

Each application, in turn, only needs to concern itself with its own context. It can subscribe to data from any registry it needs access to, and then store it in exactly the format it needs to be maximally effective for whatever use case it is there to support. The data model used for nurses in the dementia ward does not need to be linked in any way to the data model used for brain surgeons. The data store for each application will only contain the data that application needs, which in itself contributes to better performance, as the stores are much smaller. In addition, they will be much easier to work with, debug and performance-tune, since each is completely dedicated to a specific purpose.
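To illustrate "each application stores the data in its own context-specific format", here is a hypothetical TypeScript sketch: two made-up applications consume the same stream of immutable blood test events and build different local stores from it. The class names, fields and event shape are assumptions for illustration only; neither store knows anything about the other, and neither needs a shared data model.

```typescript
// Hypothetical event shape, as it might arrive from a blood test registry.
interface BloodTestResult {
  readonly patientId: string;
  readonly testType: string;
  readonly value: number;
  readonly unit: string;
  readonly takenAt: string; // ISO 8601 in UTC, so string order equals time order
}

const byTime = (a: BloodTestResult, b: BloodTestResult): number =>
  a.takenAt < b.takenAt ? -1 : a.takenAt > b.takenAt ? 1 : 0;

// App 1 (hypothetical clinician view): full history per test type.
class ClinicianHistory {
  readonly byTest = new Map<string, BloodTestResult[]>();

  handle(r: BloodTestResult): void {
    const history = this.byTest.get(r.testType) ?? [];
    history.push(r);
    history.sort(byTime); // events may arrive out of order
    this.byTest.set(r.testType, history);
  }
}

// App 2 (hypothetical patient view): only the latest value per test, as display text.
class PatientSummary {
  private readonly latest = new Map<string, BloodTestResult>();

  handle(r: BloodTestResult): void {
    const current = this.latest.get(r.testType);
    if (!current || byTime(r, current) > 0) this.latest.set(r.testType, r);
  }

  lines(): string[] {
    return Array.from(this.latest.values(), (r) => `${r.testType}: ${r.value} ${r.unit}`);
  }
}

// Usage: the same events feed both apps, which store them differently.
const events: BloodTestResult[] = [
  { patientId: "p-1", testType: "hemoglobin", value: 12.9, unit: "g/dL", takenAt: "2024-02-01T09:00:00Z" },
  { patientId: "p-1", testType: "hemoglobin", value: 13.5, unit: "g/dL", takenAt: "2024-01-01T09:00:00Z" },
];
const clinician = new ClinicianHistory();
const patient = new PatientSummary();
for (const e of events) {
  clinician.handle(e);
  patient.handle(e);
}
console.log(clinician.byTest.get("hemoglobin")?.map((r) => r.value)); // values in time order: 13.5, then 12.9
console.log(patient.lines()); // latest only: "hemoglobin: 12.9 g/dL"
```

Each store is small and shaped for one job, which is the property the paragraph above argues makes them easier to debug and tune.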

Someone asked me how I would solve an application for

“Cancer histopathology reporting, where every single cancer needs its own information model? And where imaging needs a different information model for each cancer and for each kind of image (CT, MRI, X-ray), and where genomics is going to explode that further.”

Well, I have no idea what kind of data is involved here. I know very little about cancer treatment. But from the description given, I would say one would create information models for each cancer, and for each type of image and so on. The application would get whatever cancer data is needed from the appropriate registries, then transform the data into the appropriate structures for this context and visualise it in a useful way for the clinician.

We don’t need to optimize for storage space anymore; storage is plentiful and cheap, so the fact that the same information is stored in many places in different formats is not a problem. As long as we, in our applications, can safely know that “we have all the necessary data available to us at this point in time”, we’re fine. Having common registries for the various types of data solves this. But these registries don’t need to be connected to each other. They can be developed and maintained separately.

Healthcare is an enormous field, with enormous complexity. But this does not mean we need enormous and complex solutions. Quite the contrary. We can create complex solutions, without ever having to deal with the total complexity.

The most important thing to optimise for when building software, is the user experience. The reason we’re making the software is to help people do their job, or help them achieve some goal. Software is never an end in itself. In healthcare, there are no jobs that involve dealing with the entirety of “healthcare”. So we don’t need to create systems or platforms that do either. Nobody needs them.

Another problem in healthcare is that people have gotten used to the idea that software development takes forever. If you need an update to your application, you’ll have to wait for years, maybe decades, to see it implemented. Platforms like OpenEHR deal with this by letting the users configure the platform continually. As the semantic information is decoupled from the underlying code and storage, users can reconfigure the platform without needing to get developers involved. While I can see the appeal of this too, I think it’s better to solve the underlying problem. Software should not take years to update. With DevOps becoming more and more mainstream, I see no reason we can’t use this approach for health software as well. We need dedicated cross-functional teams of developers, UXers, designers and clinicians working together on solutions for specific user groups. They need to write well-tested (automatically tested) code that can be pushed to production continuously, with changes and updates based on real feedback from users on what they need to get their jobs done. This is possible. It is becoming more and more mainstream, and we are now getting more and more hard data showing that this approach not only gives better user experiences, but also reduces bugs and increases productivity.

The Norwegian public sector is planning on spending more than 11 billion NOK on new health platform software over the next decade.

We can save billions AND increase our chances of success dramatically by changing our focus - away from platforms, and on to concrete user needs and just making our health data accessible in safe ways. We can do this one step at a time. We don’t need a platform.

1 note

Text

(Edit: "Ideological Openings" got renamed to "Cognetic Openings".)

I suggest reading the "ideological openings" post as if it is not actually called "ideological openings", as if it has no title. I think that, as written, my slapping that term on it made it worse, and I may go back and delete or replace the title+tag.

A term for the problem I was trying to pin down would be valuable, it's just that I can't think of a particularly good term, and didn't want to hold up the post to do it. So I picked the best I could after a day of it rolling around in my head just to have something to start "trying on".

The thing that mattered to me, at the heart of that post, was this: most people seem to have what I see as superficial moralities/ideologies: their notions of what's right don't properly systematize root causes of what they consider "bad".

So they are receptive to, or are supportive of, ideas and behaviors which are unethical, because they lack the cognetic inoculation that would be provided if their ideology/morality saw the thing as ethically relevant enough to cause them to habituate cognition against the idea/behaviors in question.

I considered "ideological blindspots", but I feel like there is some slippery nuance distinguishing the two, and since it's slippery I haven't yet been able to think it through to the point of confidently deciding if it's worth the wording distinction or not.

Anyway, "ideological openings" only really makes sense if you think of an ideology the way I did when writing:

For simplicity you can picture empty space, and it's like the mind using the ideology is in the center and all possible directions you could come at the mind from represent different cognetic influences on the mind, some of which are endogenic.

Ideology is like this amorphous blob around the mind - and it occupies all the space that it deems relevant. If the ideology mistakenly ignores something relevant, then it will have "gaps" or "openings" leading through it, that cognemes can "slip through" without the ideology providing any critical review of them.

The way of envisioning ideology here is not that relevant, but that's where the term came from and the term only makes sense if you have a comparable way of thinking in your head.

And I'm not sure that I can meaningfully and clearly use it in practice. The biggest use case is to diagnose the presence of an ideological opening in an ideology: to point out "your ethics do not account for this but it's harmful, and your intuition that you're on the right side on this issue is due to having not considered these (to you) 'sub-ideological' concerns", or (more commonly) to say that to someone else in the third person about another person's thinking.

I originally working-titled the post as "sub-ideological harms", actually, then broadened that from "harms" to "concerns" to "openings", and then at the last second dropped the "sub-" because I felt an inclination to focus on naming the cause of the problem, rather than naming the thing that the problem was missing, but I'm still considering it.

I welcome suggestions, as always.

0 notes

Text

I have this Ars Technica article pinned in my clipboard now because of how often I've had to cite it as a sort of accessible primer to why the oft-cited numbers on AI power consumption are, to put it kindly, very very wrong. The salient points of the article are that the power consumption of AI is, in the grand scheme of datacenter power consumption, a statistically insignificant blip. While the power consumption of datacenters *has* been growing, it's been doing so steadily for the past twelve years, which AI had nothing to do with. Also, to paraphrase my past self:

While it might be easy to look at the current massive AI hypetrain and all the associated marketing and think that Silicon Valley is going cuckoo bananas over this stuff long term planning be damned, the fact is that at the end of the day it's engineers and IT people signing off on the acquisitions, and they are extremely cautious, to a fault some would argue, and none of them wanna be saddled with half a billion dollars worth of space heaters once they no longer need to train more massive models (inference is an evolving landscape and I could write a whole separate post about that topic).

Fundamentally, AI processors like Nvidia's H100 and AMD's Instinct MI300 line are a hedged bet from all sides. The manufacturers don't wanna waste precious wafer allotment on stock they might not be able to clear in a year's time, and the customers don't wanna buy something that ends up being a waste of sand in six months' time once the hype machine runs out of steam. That's why these aren't actually dedicated AI coprocessors; they're just really really fucking good processors for any kind of highly parallel workload that requires a lot of floating point calculations and is sensitive to things like memory capacity, interconnect latencies, and a bunch of other stuff. And yeah, right now they're mainly being used for AI, and there's a lot of doom and gloom surrounding that because AI is, of course, ontologically evil (except when used in ways that read tastefully in a headline), and so their power consumption seems unreasonably high and planet-destroying. But those exact same GPUs, at that exact same power consumption, in those same datacenters, can and most likely *will* be used for things like fluid dynamics simulations, or protein folding for medical research, both of which, by the way, are use cases where AI would also be super useful. In fact, they most likely currently are being used for those things! You can use them for it yourself! You can go and rent time on a compute cluster of those GPUs for anything you want from any of the major cloud service providers with trivial difficulty!

A lot of computer manufacturers are actually currently developing specific ML processors (these are being offered in things like the Microsoft copilot PCs and in the Intel sapphire processors) so reliance on GPUs for AI is already receding (these processors should theoretically also be more efficient for AI than GPUs are, reducing energy use).

Regarding this, yes! Every major CPU vendor (and I do mean every one, not just Intel and AMD but also MediaTek, Qualcomm, Rockchip, and more) is integrating dedicated AI inference accelerators into its new chips. These are called NPUs, or Neural Processing Units. Unlike GPUs, which are Graphics Processing Units (and just so happen to also be really good for anything else that's highly parallel, like AI), NPUs do just AI and nothing else whatsoever. And because they're specialized for that one job, they are an order of magnitude more efficient in every way than their full-scale GPU cousins. They're cheaper to design, cheaper to manufacture, run far more efficiently, and absolutely sip power during operation. Heck, you can stick one in a laptop and not impact the battery life! Intel has kind of been at the forefront here, bringing built-in AI acceleration (its AMX matrix extensions, rather than a separate NPU) to its Sapphire Rapids Xeon CPUs for servers and workstations to enable them to compete with AMD's higher core counts (with major South Korean online services provider Naver Corporation using these CPUs over Nvidia GPUs due to supply issues and price hikes), and being one of the first major vendors to bring NPUs to the consumer space with its Meteor Lake, or first-generation Core Ultra, lineup (alongside AMD, and followed by Qualcomm). If you, like me, are a colossal giganerd and wanna know the juicy inside scoop on how badly Microsoft has screwed up the whole kit and caboodle, and also a bunch of other cool stuff, Wendell from Level1Techs has a really great video going over all that! It's a pretty great explainer on just why, despite the huge marketing push, AI for the consumer space (especially Copilot) has so far felt a little bit underwhelming and lacklustre, and if you've got the time it's definitely worth a watch!

I don't care about data scraping from ao3 (or tbh from anywhere) because it's fair use to take preexisting works and transform them (including by using them to train an LLM), which is the entire legal basis of how the OTW functions.

#sorry for the massive text wall and all the technobabble#and also the random tangents#I'm bad at making my writing accessible#it's hard to simplify these concepts down to the scale where most people could intuit them without basically writing a crash course#on current affairs in the tech world#I did try though#honest! I did!#I'm just not very good at it

3K notes

Text

8 of the Best Clean Ways to Make Good Money in Cryptocurrency

Everyone aims to earn money and become wealthy enough, and in the 21st century, doing that with cryptocurrencies can seem like the best and easiest way. To give you a hand, in this article Ethereum World News reveals some of the best ways to make money in the crypto industry, starting with the easiest and simplest.

1. Analyzing, Buying and Holding

The crypto space is vast, so one relatively safe approach is to first analyze and then purchase good cryptocurrencies that have an essential use case. After choosing the right ones, hold them until they secure a fair market share. For instance, cryptocurrencies like Bitcoin (BTC), Ethereum (ETH), NANO (NANO), VeChain (VEN), NEO (NEO), and Stellar (XLM) are frequently considered safe buys, according to Ethereum World News. They are mostly suggested as longer-term holds because they are expected to appreciate against fiat currencies such as USD and EUR. You don't have to focus only on these six, but as investment advice, they make a great example of how to choose the right cryptocurrencies for yourself.

2. Buying and Holding Cryptocurrencies that Reward You Regularly

A smart move to keep in mind if you intend to earn money through cryptocurrencies is to buy and hold cryptos that reward you regularly. Some major cryptocurrencies pay you a good deal just for holding them, and you don't even have to stake them in a wallet. The crypto industry offers many of these, such as NEO, COSS, KuCoin, and CEFS.

3. Staking Cryptocurrencies

According to Ethereumworldnews.com, staking cryptocurrencies is a great way of earning money because you not only benefit from the price appreciation of holding good coins, but also receive an additional reward, like a dividend, for staking them. To explain better, staking basically means holding coins 24/7 in a live wallet and earning new coins as a reward for helping secure the blockchain network. Some noteworthy staking coins are Neblio, Ark, Komodo, PIVX, and NAV Coin.

4. Masternodes

More generally, running cryptocurrency masternodes can be a good source of passive income in the crypto industry. A masternode is a cryptocurrency full node, essentially a computer wallet that holds a full, real-time copy of the blockchain (much like a Bitcoin full node) and stays up and running to perform certain tasks for the network. Various cryptocurrency projects pay masternode owners for performing these tasks. Keep in mind, though, that running a masternode requires a minimum number of coins to start. The minimum is usually in the range of 1,000 to 2,500 coins, but it depends on the project, since different cryptocurrencies follow different strategies. Some currencies that use masternodes are DASH, PIVX, and VeChain Thor.

5. Day Trading Cryptocurrencies

If you are nearly an expert at technical charting at different intervals throughout the day, this method will not only help you become a full expert at trading but can also be one of the best ways to earn money. You can trade various cryptocurrencies on many different exchanges, such as Binance (recommended), KuCoin, Cryptopia, Bittrex, Bitfinex, Cex.io, and Gate.io. The main idea is "Buy low and sell high." This technique comes easily to a technical chartist because crypto is not a stable market: depending on the coins you pick, prices can fluctuate 20-50% in a day.

6. Working for Crypto

If you have experience in writing, developing, testing, or designing, working for cryptocurrency is a relevant way of earning. You can begin making money in cryptocurrencies as soon as you exchange your services for them. Some platforms and websites that offer you Bitcoin in exchange for your services are Jobs4Bitcoins, Crypto.Jobs, XBTFreelancer, Coinality, bitWAGE, CoinWorker, Angel.co, and 21.co. This way you get a dual benefit: earning cryptocurrencies and benefiting from the price appreciation cryptocurrencies as a whole have seen over the past few years. Ethereum World News recommends that you start by working for Bitcoin, Litecoin, or Ethereum.

7. Accepting Cryptocurrencies if You Are a Vendor

If you are a vendor, a good way to earn cryptocurrencies is to accept them in exchange for your services or products. There are many cryptocurrencies you can accept, as well as Bitcoin payment processors that help vendors accept crypto. Worth mentioning: e-commerce websites and online business owners can adopt this approach, getting the double benefit of crypto price appreciation and earning crypto directly.

8. Writing for Cryptocurrency Websites

If you are a good writer whose content readers enjoy, and you know how to blog, you can easily earn money on websites that pay you in cryptocurrency. If your work is valuable to the crypto market, you can also monetize it by charging readers in cryptocurrency for access to your content. Some of these websites are Y’alls, Yours, and Steemit.

Last but not least: as mentioned at the beginning of this article, you should always watch out for scams and illegal MLMs (multi-level marketing schemes). Even though they may promise high returns, all they can give you is losses. The best recommendation is to stay away from these schemes and always earn legally. The latest example of such a scam is the Bitconnect MLM scheme, which came crashing down in a single day.

Source & Credits: Ethereum World News. Photo Credit: Pixabay

0 notes

Link

Now, I'm not claiming to be some sort of whiz when it comes to crypto, but I believe that VTC is going to stay strong and possibly permeate the space so much that it becomes a strong third wheel to the BTC/LTC marriage.

LTC has just now made Lightning work on its mainnet. Once it scales, we'll be ready for atomic swaps. BTC also just activated SegWit, which means that eventually there could be Lightning on BTC as well. If we ever get to a point where you can atomic swap freely between BTC, LTC, and VTC, we may see serious real-life applications for crypto, to the point where the general public could find it easier to come on board.

I personally believe that Vertcoin's true use case is as spending cash, one step down from Litecoin. The problem with Bitcoin was that if you wanted to buy a coffee for a dollar, you'd end up paying about four dollars because of transaction fees. Litecoin is much better for this type of transaction because of its quicker confirmation times and lower fees. However, Litecoin is about $50. Vertcoin opens up a space for the general public to think of it as a serious one- or five-dollar virtual bill. On top of that, Vertcoin transactions are as fast as, or faster than, Litecoin's.

Another thing of note is that Vertcoin is ASIC-resistant and relatively easy to mine. I can mine it on my decent-ish GPU, and so can anyone with a relatively current gaming computer. People who want to mine can start at the bottom of the chain with VTC and swap up to LTC and BTC. This might not be much of an influencing factor in the wellbeing of VTC, but I digress.

It just seems to me that if VTC continues to rise and levels off around $5, that's a good place for it. If it moons and ends up at $25+, I'm not going to complain, but it seems like the reality in which VTC becomes a top 10 coin is the one in which it stabilizes at five or ten dollars, then becomes a staple in the lives of ordinary people who use a wallet on their phone to pay for their morning coffee and bagel. Go Vertcoin!

via /r/vertcoin

0 notes