#software development estimates


Text

Exploring Non-Construction Industries That Benefit from an Estimating Service

Introduction: Estimating services are often associated with the construction industry, but their value extends well beyond that. Various non-construction industries can also leverage estimating services to streamline operations, improve financial forecasting, and enhance decision-making processes. Whether it’s manufacturing, healthcare, or technology, estimating services can bring significant benefits across different sectors.

Manufacturing and Production: In manufacturing, accurate cost estimates are crucial for ensuring that products are made efficiently and profitably. Estimating services help manufacturers predict the costs of raw materials, labor, machinery, and overhead. This level of precision helps businesses remain competitive by optimizing production costs, ensuring that they do not exceed budget constraints. By forecasting the costs associated with each step of the production process, manufacturers can identify areas where improvements or cost-saving measures are possible.

Healthcare and Medical Equipment: The healthcare industry, particularly in medical equipment manufacturing and hospital construction, relies heavily on estimating services. Accurate cost estimates allow hospitals and medical institutions to plan their budgets effectively, whether for building new facilities or purchasing new equipment. Estimating services help predict the cost of materials, labor, and operational costs for both new construction and renovations. Additionally, in the medical equipment sector, estimating services can aid in forecasting production costs, allowing manufacturers to price their products appropriately while remaining competitive.

Technology and Software Development: In technology and software development, estimating services are used to predict the cost of developing a product or service. From initial concept to finished product, accurate cost estimates help companies manage their budgets, allocate resources efficiently, and avoid cost overruns. Estimating services can also aid in predicting the cost of integrating new technologies, purchasing necessary software, and staffing requirements. The ability to accurately forecast costs at each stage of development helps companies stay on track and ensure that they deliver their products on time and within budget.

Retail and E-commerce: Retailers and e-commerce businesses benefit from estimating services when it comes to inventory management and supply chain optimization. By predicting the costs associated with manufacturing, shipping, and stocking products, estimating services help businesses ensure that they can fulfill customer demand without overspending. Accurate cost estimates help these businesses negotiate better deals with suppliers and distributors, while also enabling them to plan for seasonal fluctuations in demand.

Energy and Utilities: Energy companies, especially those involved in renewable energy projects or infrastructure upgrades, rely on estimating services for cost forecasting. Estimators help predict the costs of materials, labor, permits, and equipment required for energy infrastructure projects. Whether it's a solar power farm or the installation of new pipelines, estimating services provide valuable insights that help project teams stay within budget and avoid unforeseen expenses. These services are particularly important in the energy sector, where projects can span multiple years and involve complex logistical considerations.

Government and Public Sector Projects: The government sector is another area where estimating services can be invaluable. Whether it’s for infrastructure projects, public building renovations, or the implementation of new programs, estimating services help public agencies create accurate budgets, allocate resources, and ensure that projects are completed on time and within budget. Accurate cost estimates are essential for ensuring taxpayer money is spent wisely and that public projects deliver on their promises without financial mismanagement.

Education and Institutional Planning: In the education sector, estimating services are used to forecast the costs of building new campuses, upgrading existing facilities, or implementing educational programs. Schools and universities rely on estimating services to plan budgets for construction, technology upgrades, and educational resources. Accurate estimates help administrators make informed decisions about allocating funds for new programs, expanding infrastructure, or making campus-wide improvements.

Transportation and Logistics: In the transportation and logistics industry, estimating services help companies predict the costs of fleet maintenance, fuel, and shipping operations. By forecasting transportation-related costs, companies can optimize their supply chains, negotiate better rates with suppliers and partners, and ensure that they are pricing their services competitively. Estimating services also assist in the planning of major transportation infrastructure projects, such as highways, railways, and ports, where accurate cost predictions are essential for securing funding and staying within budget.

Food Production and Agriculture: Agriculture and food production industries benefit from estimating services by predicting the costs of raw materials, labor, equipment, and transportation. Accurate cost forecasting ensures that businesses can price their products effectively, avoid overproduction, and maintain profitability. Estimating services also help agricultural businesses plan for seasonal fluctuations in production costs, allowing them to adapt to market demands and mitigate financial risks.

Real Estate Development and Property Management: While real estate development is often tied to construction, estimating services are equally valuable for property management companies. Estimators help property managers forecast maintenance costs, predict future capital expenditures, and create long-term financial plans for their portfolios. For real estate developers, estimating services offer insights into land acquisition costs, zoning regulations, and building costs, ensuring that they can effectively budget and avoid surprises during the development process.

Conclusion: Estimating services are not limited to the construction industry. Non-construction sectors such as manufacturing, healthcare, technology, retail, energy, and others can also benefit from accurate cost forecasting. By incorporating estimating services into their financial and operational planning, businesses across industries can improve efficiency, reduce waste, and make smarter, more cost-effective decisions.

#Estimating Service#manufacturing cost estimates#healthcare cost forecasting#technology cost planning#software development estimates#retail cost forecasting#energy project estimating#public sector cost planning#government budgeting#educational cost estimates#transportation cost forecasting#logistics cost planning#agriculture cost predictions#food production estimates#real estate cost forecasting#renewable energy cost estimating#infrastructure planning#supply chain cost forecasting#project management#budget control#financial forecasting#resource allocation#construction estimates#cost accuracy#business operations#vendor negotiations#project cost control#material cost forecasting#seasonal cost adjustments#competitive pricing

0 notes

Text

Accurately estimating the time and cost of software development is crucial for project success. Here are key tips to help you make precise estimates:

1. Break down tasks into smaller, manageable units.
2. Use historical data and industry benchmarks.
3. Involve the development team in the estimation process.
4. Consider potential risks and uncertainties.
5. Regularly review and update your estimates as the project progresses.
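The tips above can be sketched in a few lines of Python. This is only an illustration, not a method the post itself prescribes: it assumes a three-point (PERT-style) estimate per task, treats tasks as independent, and uses made-up task names, hours, and an hourly rate.

```python
import math
from dataclasses import dataclass


@dataclass
class Task:
    """One small unit of work with a three-point estimate in hours."""
    name: str
    optimistic: float
    likely: float
    pessimistic: float

    def expected(self) -> float:
        # PERT weighted mean: the likely value counts four times.
        return (self.optimistic + 4 * self.likely + self.pessimistic) / 6

    def std_dev(self) -> float:
        # Common PERT approximation of the spread of a single task.
        return (self.pessimistic - self.optimistic) / 6


def estimate_project(tasks: list[Task], hourly_rate: float) -> dict[str, float]:
    """Roll task estimates up into total hours, spread, and cost."""
    total_hours = sum(t.expected() for t in tasks)
    # Variances add only under the assumption that tasks are independent.
    total_sd = math.sqrt(sum(t.std_dev() ** 2 for t in tasks))
    return {
        "hours": total_hours,
        "std_dev": total_sd,
        "cost": total_hours * hourly_rate,
    }


# Hypothetical tasks and rate, for illustration only.
tasks = [
    Task("login screen", optimistic=2, likely=4, pessimistic=12),
    Task("password reset", optimistic=1, likely=2, pessimistic=3),
]
summary = estimate_project(tasks, hourly_rate=50)
print(summary)
```

Re-running the roll-up whenever a task is split, finished, or re-estimated is one simple way to act on the last tip about reviewing estimates as the project progresses.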

2 notes


Text

Revolutionising Estimations with AI: Smarter, Faster, and More Reliable Predictions

Revolutionising estimations with AI transforms project planning by enhancing accuracy and reducing uncertainty. Unlike traditional methods prone to bias, AI-driven estimations leverage historical data and predictive analytics for more reliable forecasts.

Revolutionising estimations with AI is transforming how teams predict timelines, allocate resources, and improve project planning. Traditional estimation methods often rely on human intuition, which can introduce biases and inconsistencies. AI offers a data-driven approach that enhances accuracy, reduces uncertainty, and allows teams to focus on delivering value. If you’re interested in…

#agile#AI#automation#data-driven decisions#Efficiency#estimations#Forecast.app#forecasting#Jira#LinearB#machine learning#predictive analytics#Project management#risk management#software development#workflow optimization

0 notes

Text

#app development cost#app development#mobile app development cost#mobile app development#app development outsourcing cost#cost of app development#software development#app development company#app development costs#future of ai app development#mobile app development cost estimate#app development cost breakdown#ai app development company#ai development company#app development estimate cost#how much app development cost#app development for startups

1 note


Text

#software cost estimation#software development cost#custom software development cost#cost estimation software#app development cost calculator#Software Cost Calculator

0 notes

Text

Estimating Rummy Game Development Costs in 2024

Rummy games have surged in popularity within India's gaming landscape, with the online real rummy market projected to hit an impressive ₹1.4 billion by 2024, according to Statista. This rapid growth signals a market ripe for exploration, making Rummy game development an enticing prospect for businesses aiming to capitalize on this trend.

Market Expansion and Preference: The appeal of Rummy extends beyond Indian borders, cementing its status as one of the top 5 most favored card games globally. This widespread popularity, particularly in India, is further fueled by the increasing penetration of mobile devices, which provides accessibility to a larger audience.

Preference between Standalone Apps and Multigaming Integration: Both standalone Rummy applications and integration into multigaming platforms garner significant traction among players. However, overlooking Rummy's integration into multi-gaming platforms could be a strategic misstep. Integrating Rummy into a multi-gaming platform not only enhances user engagement but also broadens the platform's appeal, potentially attracting a diverse user base.

Why Real-Money Platforms Favor Rummy: Despite its distinction from traditional casino games, Rummy offers players a compelling gaming experience with minimal house advantage. This unique characteristic, coupled with its enduring popularity, makes Rummy an attractive proposition for real-money gaming platforms. The inclusion of Rummy often translates into higher player engagement and revenue generation for these platforms.

Game Development Considerations: Various factors influence the cost and scope of Rummy game development. Critical decisions include choosing between standalone and multigaming platforms, as well as selecting Rummy modes. Each mode, whether it be Points, Pool, Deals, or Raise, comes with its own set of complexities and development requirements. Additionally, integrating features such as tournaments and events can significantly enhance user engagement but may also impact development timelines and costs.

Mobile Platform and Additional Features: When developing a Rummy game for mobile platforms, businesses must weigh the pros and cons of native versus cross-platform applications. Cross-platform solutions offer versatility by catering to multiple mobile operating systems, thereby maximizing reach and minimizing development efforts. Furthermore, the inclusion of additional features beyond the basic gameplay, such as social features, in-app purchases, and player customization options, can elevate the gaming experience and drive user retention.

Cost Estimation: The cost of Rummy game development can vary significantly depending on project requirements and complexity. Basic Rummy games may start at around ₹5 lakh, catering to simpler features and platforms, while more advanced projects with extensive features and multi-platform support can cost upwards of ₹1 crore. It's essential for businesses to collaborate with experienced development partners like Enixo Studio to accurately assess project scope and obtain customized cost estimates.

Conclusion: As the Rummy gaming market continues to flourish, businesses must carefully evaluate their development strategies to capitalize on this lucrative opportunity effectively. By considering factors such as platform integration, feature set, and development costs, businesses can navigate the dynamic landscape of Rummy game development and position themselves for success in India's gaming industry. For reliable and efficient development services, companies can leverage the expertise of Enixo Studio to achieve their Rummy game development goals.

#Rummy game development cost#Rummy app development cost#Rummy game cost estimation#Rummy game budgeting#Rummy software development pricing#Rummy game development expenses#Rummy game development pricing#Rummy game cost breakdown#Rummy game development investment#Rummy game development quote

1 note



Note

nightshade is basically useless https://www.tumblr.com/billclintonsbeefarm/740236576484999168/even-if-you-dont-like-generative-models-this

I'm not a developer, but the creators of Nightshade do address some of this post's concerns in their FAQ. Obviously it's not a magic bullet to prevent AI image scraping, and obviously there's an arms race between AI developers and artists attempting to disrupt their data pools. But personally, I think it's an interesting project and is accessible to most people to try. Giving up on it at this stage seems really premature.

But if it's caption data that's truly valuable, Tumblr is an ... interesting ... place to be scraping it from. For one thing, users tend to get pretty creative with both image descriptions and tags. For another, I hope whichever bot scrapes my blog enjoys the many bird photos I have described as "Cheese." Genuinely curious if Tumblr data is actually valuable or if it's garbage.

That said, I find it pretty ironic that the OP of the post you linked seems to think nightshade and glaze specifically are an unreasonable waste of electricity. Both are software. Your personal computer's graphics card is doing the work, not an entire data center, so if your computer was going to be on anyway, the cost is a drop in the bucket compared to what AI generators are consuming.

Training a large language model like GPT-3, for example, is estimated to use just under 1,300 megawatt hours (MWh) of electricity; about as much power as consumed annually by 130 US homes. To put that in context, streaming an hour of Netflix requires around 0.8 kWh (0.0008 MWh) of electricity. That means you’d have to watch 1,625,000 hours to consume the same amount of power it takes to train GPT-3. (source)
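As a quick sanity check on the quoted figures, the arithmetic can be reproduced in a few lines. The inputs are the rounded numbers from the quote (the source says "just under" 1,300 MWh), so the results are approximate; the constant and function names are just labels chosen for this sketch.

```python
# Rounded figures taken from the quoted passage.
GPT3_TRAINING_MWH = 1300       # "just under 1,300 MWh"
NETFLIX_KWH_PER_HOUR = 0.8     # streaming one hour
US_HOMES = 130                 # homes powered for a year
KWH_PER_MWH = 1000


def equivalent_streaming_hours(training_mwh: float, kwh_per_hour: float) -> float:
    """Hours of streaming that use as much energy as the training run."""
    return training_mwh * KWH_PER_MWH / kwh_per_hour


hours = equivalent_streaming_hours(GPT3_TRAINING_MWH, NETFLIX_KWH_PER_HOUR)
kwh_per_home_per_year = GPT3_TRAINING_MWH * KWH_PER_MWH / US_HOMES

print(f"Streaming hours: {hours:,.0f}")
print(f"Implied kWh per home per year: {kwh_per_home_per_year:,.0f}")
```

The first figure matches the 1,625,000 hours in the quote, and the second shows the quote is assuming roughly 10,000 kWh per home per year.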

So, no, I don't think Nightshade or Glaze are useless just because they aren't going to immediately topple every AI image generator. There's not really much downside for the artists interested in using them so I hope they continue development.

991 notes


Text

God, the end of support for Windows 10 will be such a fucking bloodbath. It’s coming a year from now, 14 October 2025, and it will be a disaster. The one Windows version supported by Microsoft will be Windows 11, and its hardware requirements are like the rent, too damn high.

Literally most computers running Windows 10 can’t upgrade to Windows 11. 55% of working computers aren’t able to run windows 11 according to an analysis. A man quoted in the article argues even that is too optimistic considering how many older computers are still used. He thinks even an estimate of 25% of win10 machines being able to upgrade to win11 is too high an estimate, and frankly he sounds reasonable.

This will probably lead to two things.

Number one is a mountain of e-waste as people get rid of old computers unsupported by Microsoft, despite the hardware working fine, and buy new Windows 11 machines. It’s the great Windows 11 computer extinction experiment, as writer Jenny List called it. And when you buy a new computer with Windows pre-installed, the Windows license fee is baked into the price. So a windfall in license money for Microsoft, and the real reason why they are doing this.

Number two is a cybersecurity crisis. A lot of people will keep on using Windows 10 because “end of support” doesn’t mean it will stop working on that date. But the end of support means the end of security updates for the operating system. That will make those systems very unsafe, if they are connected to the internet. Security flaws and exploits for windows 10 will be discovered, problems that will never be patched because win10 isn’t supported anymore and they will be used against systems still running it.

Apparently a lot of people don’t understand this so I’ll try to explain this again as simply as I can. No human being is perfect, and accordingly nobody can write the perfect software that is safe from all cybersecurity threats forever. Security flaws and exploits will always be found, if the computer running that software is connected to the internet, which means it can be attacked by every bad actor out there. This is especially true if that software is as complex and important as an operating system, and it’s also widely used, which is true of Windows. But if the software is supported, the people who design and distribute that software can write patches and send out security updates that will patch the exploits that are found, minimizing the risks inherent to software, computers and the internet. It’s a constant race between well-meaning developers and bad actors, but if the developers are good about it, they will stay ahead.

But when support for the software is dropped, that means the developers will no longer patch the software. And that’s what’s happening to Windows 10 in October 2025. Any new exploits for the operating system that are found, and they will inevitably be found, won’t be patched by Microsoft. The exploits will stay unpatched, the system will be old and full of holes and anyone using it will be unsafe.

We already have this problem with people who are still using Windows 7 and Windows 8, years after Microsoft dropped support, often because their computers can’t upgrade even to Windows 10. They are probably a disproportionate share of the people getting hacked and having their data stolen. From reading what they write to justify themselves online, my impression is that these people are frankly ignorant about technology and the dangers of what they are doing. And they are filled with the absurd self-confidence the ignorant often have, as they believe themselves to be too careful and tech-savvy to be hacked.

The problem will however explode with windows 10 ending support, because the gap in hardware requirements between win10 and win11 is so large, as already explained.

(sidenote, running unsupported operating systems can be safe, as long as you don’t connect the computer to the internet. You can even run Windows 3.1 in perfect safety as long as it’s kept off the ‘net. But that’s a different story, I’m talking here about people who connect their computers to the internet)

So let’s imagine this very common scenario: you have a computer running Windows 10. You can’t upgrade it to windows 11 because most win10 computers literally can’t. You want to keep the computer connected to the internet for obvious reasons. You don’t have the money to get a new windows 11 computer, and you don’t want to throw your old perfectly useable hardware away. So what do you do?

The answer is install linux. Go to a reputable distro’s website like linuxmint.com, read and follow their documentation on how to install and use it. Just do it. If you are running windows 10, you have until October 14 2025 to figure it out. And if you are running windows 7 or 8, do it now.

There are good reasons for not using Linux and sticking with Windows; Linux has serious downsides. But when the choice is literally between an old unsupported version of Windows and Linux, Linux wins every time. Every reason for not installing Linux, every downside to the switch, all those are irrelevant when your alternative is literally running old unsupported Windows on a machine connected to the internet. Sure, Linux might not be user-friendly enough for you, but that’s kinda irrelevant when the other alternatives presented are either throwing the computer away or sacrificing it to a botnet. And if you believe yourself to be too tech-savvy and careful to ever get pwned (as some present-day Windows 7 users clearly believe themselves to be), that’s bullshit. If you really were careful and tech-savvy you would take the basic precaution of installing a supported operating system and know how to do it.

I don’t think everyone can just switch to linux, at least not full time. If you need windows because your work requires it, frankly your only realistic option is to have a computer that supports win11 when october 2025 rolls around. If you don’t, either you have to pay for it yourself or ask your employer to supply a work computer with win11. Just don’t use Windows 10 for work stuff past that date, I doubt your co-workers, your employer or your customers will appreciate you putting their data at risk by doing so.

The rest of you, please don’t contribute to the growing problem of e-waste by throwing away perfectly useable hardware or put yourself at risk by using unsupported versions of Windows. Try Linux instead.

112 notes


Text

Managing Complexity | How a Construction Estimating Service Handles Multi-Phase Projects

Introduction: Multi-phase construction projects, whether for residential communities, commercial developments, or infrastructure builds, present a unique set of challenges. These projects require meticulous coordination across timelines, trades, and budgetary constraints. A construction estimating service becomes an indispensable asset in managing this complexity. By breaking the project into defined phases and applying structured estimating techniques, estimators help ensure clarity, efficiency, and financial control from planning through execution.

Understanding Multi-Phase Projects: Multi-phase projects involve construction that unfolds in sequential or overlapping stages. Each phase might have its own design, scope, permitting requirements, and budget. Without careful cost planning, projects can suffer from cost overruns, resource misallocations, and scheduling conflicts. A construction estimating service mitigates these risks by producing phase-specific estimates that contribute to an accurate overall cost forecast.

Phase-Based Budgeting and Cash Flow Planning: One of the primary contributions of a construction estimating service in multi-phase projects is segmented budgeting. Estimators prepare separate budgets for each project phase, allowing stakeholders to manage cash flow more effectively. This approach ensures that funding aligns with the project schedule and avoids unnecessary capital tie-ups in early phases.

Improved Forecasting with Historical Data and Cost Indices: Construction estimating services often use historical data from similar projects to inform pricing for each phase. They also adjust for anticipated material and labor price fluctuations using industry cost indices. These forecasting tools are especially useful for long-term, multi-year projects where inflation or market volatility can have a significant financial impact.
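The index adjustment described above can be sketched as follows. This is an illustrative assumption about how such an adjustment might be done, not a description of any particular estimating service: it scales a historical cost by the ratio of two index values, then compounds an assumed annual escalation rate for phases that start later. All figures, phase names, and function names are hypothetical.

```python
def escalate_cost(historical_cost: float, base_index: float, target_index: float) -> float:
    """Scale a past cost by the ratio of a cost index now versus then."""
    if base_index <= 0:
        raise ValueError("base_index must be positive")
    return historical_cost * target_index / base_index


def project_forward(cost: float, annual_rate: float, years: float) -> float:
    """Compound an assumed annual escalation rate over a number of years."""
    return cost * (1 + annual_rate) ** years


# Hypothetical example: a similar phase cost 1,000,000 when the index stood
# at 100; the index is now 125.
todays_cost = escalate_cost(1_000_000, base_index=100.0, target_index=125.0)

# Hypothetical phase start offsets in years, with an assumed 4% escalation.
phase_start_years = {"phase 1": 0, "phase 2": 1, "phase 3": 2}
phase_costs = {
    phase: project_forward(todays_cost, annual_rate=0.04, years=start)
    for phase, start in phase_start_years.items()
}

for phase, cost in phase_costs.items():
    print(f"{phase}: {cost:,.0f}")
```

Keeping the index adjustment and the forward escalation as separate steps makes it easy to update either input when a new index value or inflation forecast arrives.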

Trade Coordination Across Phases: Different phases may involve different trades or subcontractors, and proper sequencing is crucial. A construction estimating service helps coordinate trade involvement across phases, accounting for their availability, costs, and project dependencies. This avoids delays caused by poor scheduling or miscommunication between contractors.

Scope Definition and Scope Control: In multi-phase projects, the scope of work for later phases often evolves as earlier stages are completed. A construction estimating service provides detailed documentation and clear scope definitions for each phase, reducing the likelihood of scope creep. Estimators also flag areas where cost contingencies may be needed, ensuring flexibility without compromising financial oversight.

Phase-Specific Risk Assessments: Each phase of a project carries distinct risks, such as weather impacts during specific seasons, regulatory delays, or equipment mobilization needs. A skilled estimator evaluates these risks and integrates them into the phase-specific cost models. This proactive approach allows contractors and owners to make informed decisions and reduce the likelihood of expensive surprises.

Technology Integration for Phase Tracking: Modern construction estimating services utilize software that integrates estimating with project scheduling and management tools. This digital coordination enables real-time updates to budgets and forecasts as each phase progresses. Estimators can adjust estimates dynamically to reflect on-site conditions, scope changes, or updated client requirements.

Logistical Planning and Resource Optimization: Multi-phase projects often require shared use of materials, labor, or equipment across different stages. Estimators help identify opportunities for resource optimization, for instance bulk material purchases or long-term labor contracts that span multiple phases. This helps in controlling costs and reducing waste.

Inter-Phase Communication and Stakeholder Alignment: Construction estimating services contribute to better communication between architects, engineers, contractors, and clients. They provide a financial roadmap for each phase that aligns everyone on the expected costs, deliverables, and timelines. This alignment minimizes rework, confusion, and last-minute budget adjustments.

Contingency Planning and Change Management: In multi-phase projects, changes are inevitable. A construction estimating service builds in appropriate contingencies based on the complexity and uncertainty of each phase. Estimators also assist in pricing change orders accurately and swiftly, ensuring minimal disruption to both schedule and budget.

Regulatory and Permit Considerations by Phase: Each phase may require separate regulatory approvals or permits. Estimators factor in the time and cost associated with these processes, including fees, consultant costs, and compliance-related expenses. This level of detail is crucial to avoid delays or cost escalations due to overlooked requirements.

Comprehensive Reporting for Long-Term Planning: A professional construction estimating service provides consistent reporting and updates for each project phase. These reports offer insights on current costs, projected spending, and budget performance. They also help in adjusting long-term plans to align with real-time data, especially in projects lasting several years.

Conclusion: Managing multi-phase projects is no easy task, but with a construction estimating service, it becomes significantly more feasible. These professionals bring structure to complex timelines, improve forecasting accuracy, and ensure financial discipline from start to finish. Whether it's a four-phase residential build or a multi-tower commercial complex, the role of an estimator is central to success, allowing project teams to move forward with confidence, clarity, and cost control.

#construction estimating service#multi-phase construction project#construction phase budgeting#estimating complex builds#phase-specific estimates#construction cost control#construction project segmentation#contractor phase coordination#construction forecasting#trade scheduling#phase-by-phase construction planning#phased development cost#estimating service benefits#construction financial planning#scope management#estimating risk analysis#resource allocation in construction#construction estimating technology#cost estimating software#managing construction inflation#construction project communication#estimating for large projects#construction budget breakdown#cash flow planning construction#contingency cost estimating#multi-phase estimating strategy#estimating permit costs#phase coordination tools#project lifecycle estimating#estimating service accuracy

0 notes

Text

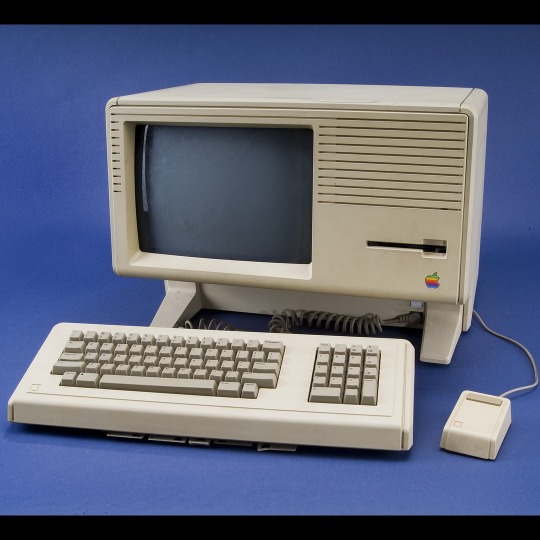

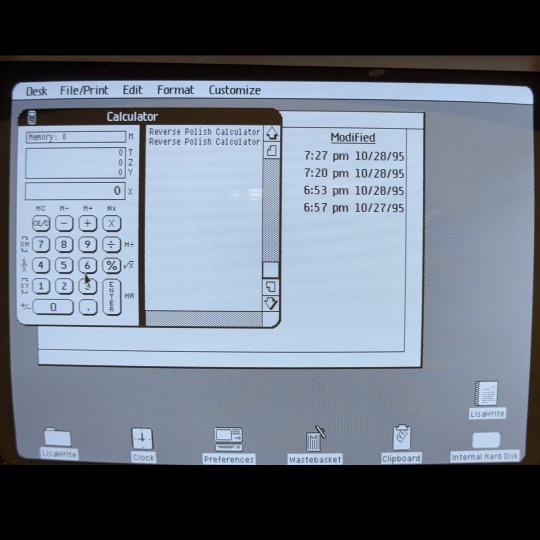

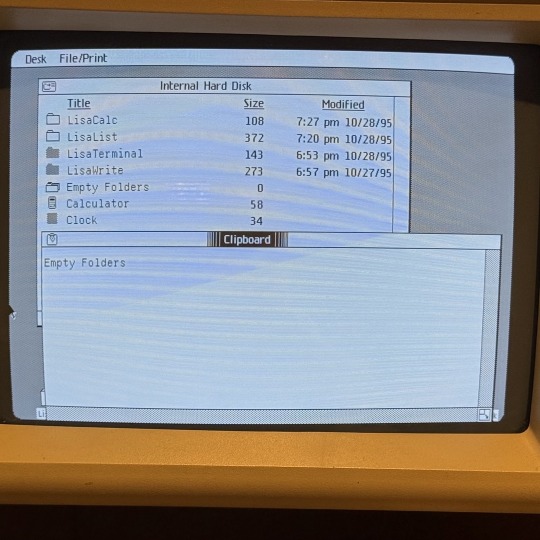

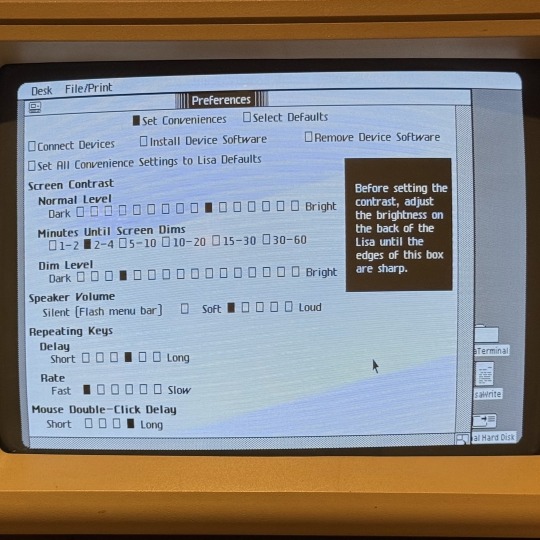

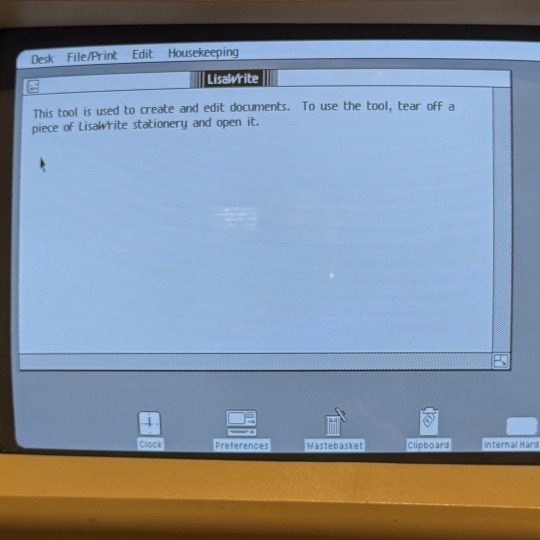

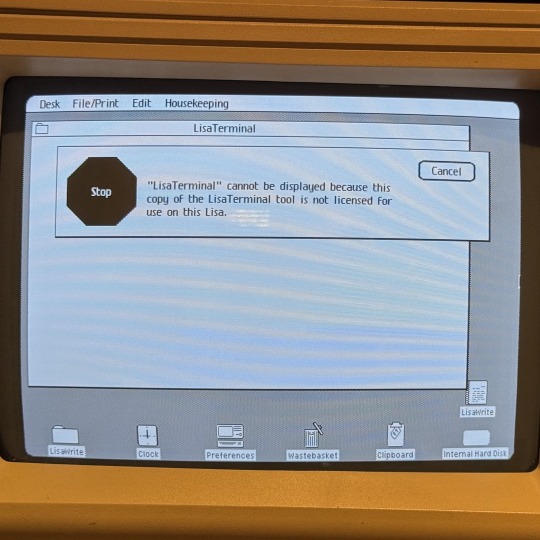

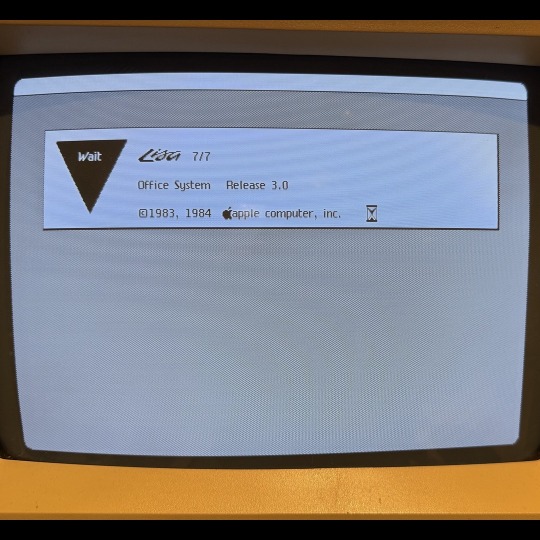

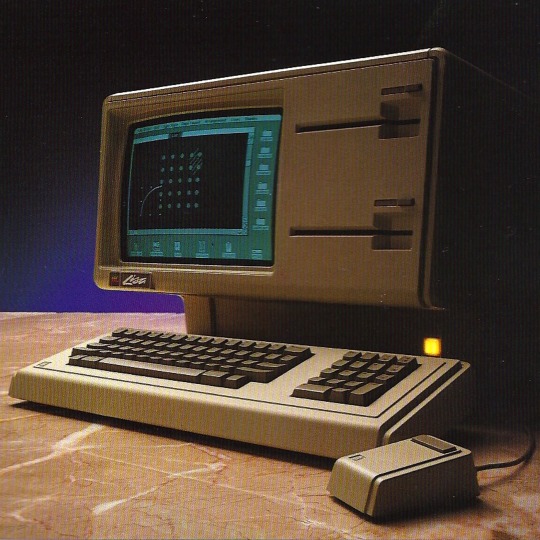

🎄💾🗓️ Day 9: Retrocomputing Advent Calendar - The Apple Lisa 🎄💾🗓️

The Apple Lisa, introduced on January 19, 1983, was a pioneering personal computer notable for its graphical user interface (GUI) and mouse input, a big departure from text-based command-line interfaces. It featured a Motorola 68000 CPU running at 5 MHz, 1 MB of RAM (expandable to 2 MB), and a 12-inch monochrome display with a resolution of 720×364 pixels. The system initially included dual 5.25-inch "Twiggy" floppy drives, later replaced by a single 3.5-inch Sony floppy drive in the Lisa 2 model. An optional 5 or 10 MB external ProFile hard drive provided more storage.

The Lisa's price of $9,995 (equivalent to approximately $30,600 in 2023) and performance issues held back its commercial success; sales were estimated at about 10,000 units.

It introduced advanced concepts such as memory protection and a document-oriented workflow, which influenced future Apple products and personal computing.

The Lisa's legacy had a huge impact on Apple computers, specifically the Macintosh line, which adopted and refined many of its features. While the Lisa was not exactly a commercial success, its contributions to the evolution of user-friendly computing interfaces are widely recognized in computing history.

These screen pictures come from Adafruit fan Philip: "It still boots up from the Twiggy hard drive and runs. It also has a complete Pascal Development System." … "mine is a Lisa 2 with the 3.5-inch floppy and the 5 MB hard disk. In addition all of the unsold Lisa machines reached an ignominious end."

What end was that? From the Verge -

In September 1989, according to a news article, Apple buried about 2,700 unsold Lisa computers in Logan, Utah at a very closely guarded garbage dump. The Lisa was released in 1983, and it was Apple’s first stab at a truly modern, graphically driven computer: it had a mouse, windows, icons, menus, and other things we’ve all come to expect from “user-friendly” desktops. It had those features a full year before the release of the Macintosh.

Article and video…

Check out the Apple Lisa page on Wikipedia, the Computer History Museum's article, and the National Museum of American History (Behring Center).

Have first computer memories? Post’em up in the comments, or post yours on socialz’ and tag them #firstcomputer #retrocomputing – See you back here tomorrow!

#applelisa#retrocomputing#firstcomputer#applehistory#computinghistory#vintagecomputers#macintosh#1980scomputers#applecomputer#gui#vintagehardware#personalcomputers#motorola68000#technostalgia#twiggydrive#floppydisk#graphicalinterface#applefans#computinginnovation#historictech#computerlegacy#techthrowback#techhistory#memoryprotection#profiledrive#userinterface#firstmac#computermilestone#techmemories#1983tech

113 notes


Text

Rethinking Estimations in the Age of AI

Rethinking estimations in AI-driven processes has become essential in the fast-paced world of IT and software development. Estimations shape project timelines, budgets, and expectations, yet traditional practices often lead to inefficiency and frustration. In a previous post, I explored the #NoEstimates movement and its call to move beyond traditional estimation techniques. Here, I’ll dive deeper…

#agile practices#AI#data-driven#Efficiency#estimations#historical data#IT industry#predictability#project planning#software development

0 notes

Text

One way to spot patterns is to show AI models millions of labelled examples. This method requires humans to painstakingly label all this data so they can be analysed by computers. Without them, the algorithms that underpin self-driving cars or facial recognition remain blind. They cannot learn patterns.

The algorithms built in this way now augment or stand in for human judgement in areas as varied as medicine, criminal justice, social welfare and mortgage and loan decisions. Generative AI, the latest iteration of AI software, can create words, code and images. This has transformed them into creative assistants, helping teachers, financial advisers, lawyers, artists and programmers to co-create original works.

To build AI, Silicon Valley’s most illustrious companies are fighting over the limited talent of computer scientists in their backyard, paying hundreds of thousands of dollars to a newly minted Ph.D. But to train and deploy them using real-world data, these same companies have turned to the likes of Sama, and their veritable armies of low-wage workers with basic digital literacy, but no stable employment.

Sama isn’t the only service of its kind globally. Start-ups such as Scale AI, Appen, Hive Micro, iMerit and Mighty AI (now owned by Uber), and more traditional IT companies such as Accenture and Wipro are all part of this growing industry estimated to be worth $17bn by 2030.

Because of the sheer volume of data that AI companies need to be labelled, most start-ups outsource their services to lower-income countries where hundreds of workers like Ian and Benja are paid to sift and interpret data that trains AI systems.

Displaced Syrian doctors train medical software that helps diagnose prostate cancer in Britain. Out-of-work college graduates in recession-hit Venezuela categorize fashion products for e-commerce sites. Impoverished women in Kolkata’s Metiabruz, a poor Muslim neighbourhood, have labelled voice clips for Amazon’s Echo speaker. Their work couches a badly kept secret about so-called artificial intelligence systems – that the technology does not ‘learn’ independently, and it needs humans, millions of them, to power it. Data workers are the invaluable human links in the global AI supply chain.

This workforce is largely fragmented, and made up of the most precarious workers in society: disadvantaged youth, women with dependents, minorities, migrants and refugees. The stated goal of AI companies and the outsourcers they work with is to include these communities in the digital revolution, giving them stable and ethical employment despite their precarity. Yet, as I came to discover, data workers are as precarious as factory workers, their labour is largely ghost work and they remain an undervalued bedrock of the AI industry.

As this community emerges from the shadows, journalists and academics are beginning to understand how these globally dispersed workers impact our daily lives: the wildly popular content generated by AI chatbots like ChatGPT, the content we scroll through on TikTok, Instagram and YouTube, the items we browse when shopping online, the vehicles we drive, even the food we eat, it’s all sorted, labelled and categorized with the help of data workers.

Milagros Miceli, an Argentinian researcher based in Berlin, studies the ethnography of data work in the developing world. When she started out, she couldn’t find anything about the lived experience of AI labourers, nothing about who these people actually were and what their work was like. ‘As a sociologist, I felt it was a big gap,’ she says. ‘There are few who are putting a face to those people: who are they and how do they do their jobs, what do their work practices involve? And what are the labour conditions that they are subject to?’

Miceli was right – it was hard to find a company that would allow me access to its data labourers with minimal interference. Secrecy is often written into their contracts in the form of non-disclosure agreements that forbid direct contact with clients and public disclosure of clients’ names. This is usually imposed by clients rather than the outsourcing companies. For instance, Facebook-owner Meta, who is a client of Sama, asks workers to sign a non-disclosure agreement. Often, workers may not even know who their client is, what type of algorithmic system they are working on, or what their counterparts in other parts of the world are paid for the same job.

The arrangements of a company like Sama – low wages, secrecy, extraction of labour from vulnerable communities – is veered towards inequality. After all, this is ultimately affordable labour. Providing employment to minorities and slum youth may be empowering and uplifting to a point, but these workers are also comparatively inexpensive, with almost no relative bargaining power, leverage or resources to rebel.

Even the objective of data-labelling work felt extractive: it trains AI systems, which will eventually replace the very humans doing the training. But of the dozens of workers I spoke to over the course of two years, not one was aware of the implications of training their replacements, that they were being paid to hasten their own obsolescence.

— Madhumita Murgia, Code Dependent: Living in the Shadow of AI

71 notes

Text

AI’s energy use already represents as much as 20 percent of global data-center power demand, research published Thursday in the journal Joule shows. That demand from AI, the research states, could double by the end of this year, comprising nearly half of all total data-center electricity consumption worldwide, excluding the electricity used for bitcoin mining.

The new research is published in a commentary by Alex de Vries-Gao, the founder of Digiconomist, a research company that evaluates the environmental impact of technology. De Vries-Gao started Digiconomist in the late 2010s to explore the impact that bitcoin mining, another extremely energy-intensive activity, would have on the environment. Looking at AI, he says, has grown more urgent over the past few years because of the widespread adoption of ChatGPT and other large language models that use massive amounts of energy. According to his research, worldwide AI energy demand is now set to surpass demand from bitcoin mining by the end of this year.

“The money that bitcoin miners had to get to where they are today is peanuts compared to the money that Google and Microsoft and all these big tech companies are pouring in [to AI],” he says. “This is just escalating a lot faster, and it’s a much bigger threat.”

The development of AI is already having an impact on Big Tech’s climate goals. Tech giants have acknowledged in recent sustainability reports that AI is largely responsible for driving up their energy use. Google’s greenhouse gas emissions, for instance, have increased 48 percent since 2019, complicating the company’s goals of reaching net zero by 2030.

“As we further integrate AI into our products, reducing emissions may be challenging due to increasing energy demands from the greater intensity of AI compute,” Google’s 2024 sustainability report reads.

Last month, the International Energy Agency released a report finding that data centers made up 1.5 percent of global energy use in 2024, or around 415 terawatt-hours, a little less than the yearly energy demand of Saudi Arabia. This number is only set to get bigger: Data centers’ electricity consumption has grown four times faster than overall consumption in recent years, while the amount of investment in data centers has nearly doubled since 2022, driven largely by massive expansions to account for new AI capacity. Overall, the IEA predicted that data center electricity consumption will grow to more than 900 TWh by the end of the decade.

But there are still a lot of unknowns about the share that AI, specifically, takes up in that current configuration of electricity use by data centers. Data centers power a variety of services, such as hosting cloud services and providing online infrastructure, that aren’t necessarily linked to the energy-intensive activities of AI. Tech companies, meanwhile, largely keep the energy expenditure of their software and hardware private.

Some attempts to quantify AI’s energy consumption have started from the user side: calculating the amount of electricity that goes into a single ChatGPT search, for instance. De Vries-Gao decided to look, instead, at the supply chain, starting from the production side to get a more global picture.

The high computing demands of AI, De Vries-Gao says, create a natural “bottleneck” in the current global supply chain around AI hardware, particularly around the Taiwan Semiconductor Manufacturing Company (TSMC), the undisputed leader in producing key hardware that can handle these needs. Companies like Nvidia outsource the production of their chips to TSMC, which also produces chips for other companies like Google and AMD. (Both TSMC and Nvidia declined to comment for this article.)

De Vries-Gao used analyst estimates, earnings call transcripts, and device details to put together an approximate estimate of TSMC’s production capacity. He then looked at publicly available electricity consumption profiles of AI hardware and estimates on utilization rates of that hardware (which can vary based on what it’s being used for) to arrive at a rough figure of just how much of global data-center demand is taken up by AI. De Vries-Gao calculates that without increased production, AI will consume up to 82 terawatt-hours of electricity this year, roughly the same as the annual electricity consumption of a country like Switzerland. If production capacity for AI hardware doubles this year, as analysts have projected it will, demand could increase at a similar rate, representing almost half of all data center demand by the end of the year.
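The figures quoted so far can be checked with a few lines of arithmetic. The sketch below is not De Vries-Gao's model. It only divides the 82 TWh estimate by the IEA's 415 TWh data-center figure for 2024, which is an approximation because the two numbers refer to different years. The bottom-up helper and its inputs (device count, watts per device, utilization rate) are hypothetical placeholders that show the general shape of a supply-side estimate, not values from the paper.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def share_percent(part_twh: float, total_twh: float) -> float:
    """Return part as a percentage of total."""
    return 100.0 * part_twh / total_twh


def annual_energy_twh(devices: int, watts_per_device: float, utilization: float) -> float:
    """Bottom-up estimate: devices * power * utilization * hours, converted from Wh to TWh."""
    watt_hours = devices * watts_per_device * utilization * HOURS_PER_YEAR
    return watt_hours / 1e12


# Figures quoted in the article.
ai_twh = 82.0                 # upper estimate for AI this year
data_center_twh_2024 = 415.0  # IEA figure for all data centers in 2024

ai_share = share_percent(ai_twh, data_center_twh_2024)
print(f"AI share of data-center demand: {ai_share:.1f}%")

# Hypothetical inputs, for illustration only (not taken from the research).
example_twh = annual_energy_twh(devices=1_000_000, watts_per_device=1000.0, utilization=0.5)
print(f"Illustrative fleet: {example_twh:.2f} TWh per year")
```

The first result lands just under the "as much as 20 percent" quoted at the top of the article. The second shows why the utilization rate matters so much: halving it halves the estimate.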

Despite the amount of publicly available information used in the paper, a lot of what De Vries-Gao is doing is peering into a black box: We simply don’t know certain factors that affect AI’s energy consumption, like the utilization rates of every piece of AI hardware in the world or what machine learning activities they’re being used for, let alone how the industry might develop in the future.

Sasha Luccioni, an AI and energy researcher and the climate lead at open-source machine-learning platform Hugging Face, cautioned about leaning too hard on some of the conclusions of the new paper, given the number of unknowns at play. Luccioni, who was not involved in this research, says that when it comes to truly calculating AI’s energy use, disclosure from tech giants is crucial.

“It’s because we don’t have the information that [researchers] have to do this,” she says. “That’s why the error bar is so huge.”

And tech companies do keep this information. In 2022, Google published a paper on machine learning and electricity use, noting that machine learning was “10%–15% of Google’s total energy use” from 2019 to 2021, and predicted that with best practices, “by 2030 total carbon emissions from training will reduce.” However, since that paper—which was released before Google Gemini’s debut in 2023—Google has not provided any more detailed information about how much electricity ML uses. (Google declined to comment for this story.)

“You really have to deep-dive into the semiconductor supply chain to be able to make any sensible statement about the energy demand of AI,” De Vries-Gao says. “If these big tech companies were just publishing the same information that Google was publishing three years ago, we would have a pretty good indicator” of AI’s energy use.

19 notes

Text

An interactive evacuation zone map, touted by the Israeli military as an innovation in humanitarian process, was revealed to rely on a subset of an internal Israeli military intelligence database. The US-based software developer who revealed the careless error has determined that the database was in use since at least 2022 and was updated through December of 2023.

On Tuesday, July 9th, we discovered an interactive version of the evacuation map while examining a page on the IDF's Arabic-language website accessible via a QR code published on an evacuation order leaflet.

The map is divided into "population blocks", an IDF term for the 620 polygons used to divide Gaza into sectors that a user can zoom into and out of.

However, each request to the site pulls not only the polygon boundaries but also the demographic information assigned to that sector, including which families live there and how many members they have.

𝐃𝐢𝐬𝐜𝐨𝐯𝐞𝐫𝐲 𝐨𝐟 𝐈𝐧𝐭𝐞𝐫𝐚𝐜𝐭𝐢𝐯𝐞 𝐄𝐯𝐚𝐜𝐮𝐚𝐭𝐢𝐨𝐧 𝐌𝐚𝐩𝐬:

Evacuation maps have played a central role in determining which sectors of Gaza were deemed "safe", but repeated instances of Israeli bombing in "safe" sectors have prompted international bodies to state "nowhere in Gaza is safe".

To determine how these sectors have been affected over time, assess the presence of vital civilian infrastructure, and gauge the potential impact on the population following the military's evacuation calls to Gaza City residents, the latest of which was two days ago, our team created a map tied to a database using software known as a "geographic information system". With the help of volunteer GIS developers, our map of Gaza included individual layers for hospitals, educational facilities, roads, and municipal boundaries.

This endeavor took an unexpected turn earlier this week when we started to work on the layer of "evacuation population blocks" using a map shared to the Israeli military's Arabic site. Unlike static images, these maps responded interactively to zooming and panning. A software developer suggested that dynamic interaction was possible because the coordinates of the various "population blocks" were delivered to the browser with each request. That would potentially allow us to retrieve those coordinates in real time and overlay them on the map we were building.

The software developer delved into the webpage's source code, a practice involving the inspection of code delivered to every visitor's browser by the website. The source code of every website is publicly available and delivered to visitors on every page request. It functions "under the hood" of the website and can be viewed without any specialized tools or form of hacking.

𝐏𝐚𝐫𝐬𝐢𝐧𝐠 𝐔𝐧𝐞𝐱𝐩𝐞𝐜𝐭𝐞𝐝 𝐃𝐚𝐭𝐚

Upon examination, the data retrieved by the dynamic evacuation map included much more than just geographical coordinates. The source code contained a table from an unknown GIS database with numerous additional fields labelled in Hebrew.

These fields included population estimates for each block, details of the two largest clans in each block (referred to as “CLAN”), rankings based on unknown criteria, and timestamps indicating when records were last updated.

Some data terms, such as "manpower_e," were ambiguous, possibly referring to either the number of fighters or the personnel necessary to maintain the area. Using this information we determined that Block 234, Abu Madin, was last updated on 27 April 2022. This suggests that Israel's effort to divide Gaza into 620 "population blocks" began one and a half years before the current Israeli offensive. Additional modification dates indicate that the military updated data in this field regularly throughout October and November, before formally publishing it on the Arabic Israeli military webpage on December 1, 2023.
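The "one and a half years" figure can be checked with simple date arithmetic. This is a minimal sketch that assumes October 7, 2023 as the reference date for the start of the offensive, as used later in this thread. The variable names are illustrative.

```python
from datetime import date

# Last-updated date reported above for Block 234.
earliest_update = date(2022, 4, 27)
# Reference date assumed for the start of the offensive.
offensive_start = date(2023, 10, 7)

gap_days = (offensive_start - earliest_update).days
gap_years = gap_days / 365.25  # average year length, including leap years

print(f"{gap_days} days, about {gap_years:.2f} years")
```

The gap is a little under a year and a half, so the rounded figure in the text is a fair approximation.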

It appears that the IDF has accidentally published a subset of their internal intelligence GIS database in an effort to impress the world with a novel, humanitarian evacuation aid. It is easier to retrieve a database in its entirety than to write a properly selective query. Such mistakes are common among programmers who lack experience or security training, or who are simply unwilling or unable to meet standard data security requirements for a project.
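The difference between returning a whole record and returning a selective subset can be sketched in a few lines. This is a generic illustration of an allow-list, not a reconstruction of the site's code. Every field name and value below is a made-up placeholder, and the function name `to_public_record` is hypothetical.

```python
from typing import Any

# Hypothetical allow-list: the only fields a public map would need.
PUBLIC_FIELDS = ("block_id", "boundary")


def to_public_record(row: dict[str, Any]) -> dict[str, Any]:
    """Copy only allow-listed fields, so internal columns never leave the server."""
    return {key: row[key] for key in PUBLIC_FIELDS if key in row}


# Placeholder internal row (all values are invented for illustration).
internal_row = {
    "block_id": 1,
    "boundary": [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)],
    "population_estimate": 1000,
    "last_updated": "2000-01-01",
}

public_row = to_public_record(internal_row)
print(public_row)
```

An allow-list fails safe: a column added to the internal table later stays private unless someone deliberately adds it to `PUBLIC_FIELDS`, whereas sending the full row exposes it by default.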

𝐂𝐥𝐚𝐧𝐬 𝐚𝐧𝐝 𝐀𝐝𝐦𝐢𝐧𝐢𝐬𝐭𝐫𝐚𝐭𝐢𝐨𝐧 𝐏𝐥𝐚𝐧𝐬

In January, Israeli security chiefs proposed a plan for "the day after" where Palestinian clans in the Gaza Strip would temporarily administer the coastal enclave. In this concept, each clan would handle humanitarian aid and resources for their local regions. Israeli assessments of the proposal suggested such a plan would fail due to "lack of will" and retaliation by Hamas against clans willing to collaborate with the army. The plan has since been modified to include Hamas-free “bubbles” (as reported by the Financial Times), where local Palestinians would gradually take over aid distribution responsibilities.

As this plan is to be initially implemented in Beit Hanoun, Beit Lahia, and the Al-Atatrah area, we have analyzed the information in the database assigned to those three areas. The Israeli army classified the Al-Atatrah area as region 1741, although there are only 620 'blocks' on the map. The registered population was listed as 949, last updated by the IDF on October 9th. We assume this represents an estimate by the IDF of current residents as of Oct 9th. Residents of Al-Atatrah were among the first to evacuate following the initial bombardment on October 7th and 8th and were not given a formal notification to evacuate. The Israeli military noted that the Abo Halima family comprised 54% of the block’s population. In the area west of the town of Beit Hanoun, Israel had designated the Almasri clan as the largest in the sector, comprising 18% of the block's population. Second largest was the Hamad family, with 11% of the block's population. This area was also associated with rankings; however, without the criteria used to determine the ranking, these numbers are difficult to interpret.

𝐖𝐡𝐚𝐭 𝐝𝐨𝐞𝐬 𝐭𝐡𝐢𝐬 𝐢𝐧𝐟𝐨𝐫𝐦𝐚𝐭𝐢𝐨𝐧 𝐫𝐞𝐯𝐞𝐚𝐥?

Although some of the data were inadvertently truncated during publication, posing challenges for comprehensive interpretation, this database provides a valuable window into the Israeli military's perspective on Gaza.

For one, it prompts questions about why the military had already partitioned Gaza into 620 population blocks a year and a half before October 7th. This suggests an early inclination to implement a governance policy where clans would assume authoritative roles, as well as detailed population surveillance. Tracking dense populations in the chaos of urban warfare is a difficult task. It may be that the QR code on the evacuation map actively collects the locations of people who scan it, allowing the IDF to collect real-time data on Palestinians in Gaza as they attempt to find safety.

END

85 notes

Text

We are looking good, friends, for I finally have a playable version of the update on my hands 🥳 Plots? Established. Choices? Laid down. Sass? Locked and loaded.

There are still 2.5 large scenes + editing to do, mind you, but it's always nice to have a single structured piece you can traverse, tweak and add to. Slow-dripping that sense of accomplishment to keep me going 😅

Now onto the less cheerful side of things. Sadly, my testing setup got obliterated in the meantime by the software changes beyond my control, so I cannot reliably estimate how long beta reading and error/typo fixing will take. Already sweating in anticipation 🤡 I've been growing so frustrated with Twine lately, and it had a substantial effect on the development time. A lot of it is not the engine's fault, but some certainly is. I still enjoy the extended features I get to introduce (like the Secrets or the Codex pop-ups), but now I know that I vastly prefer how you code the scenes with ChoiceScript... Not to mention the out-of-the-box testing and an easy way to estimate how your stats are accumulating. Sigh. It's okay though, I am determined and will not let it defeat me!

Stay tuned!

50 notes
