#text to sql ai


Convert Text to SQL With dbForge AI Assistant

Tired of writing SQL code manually? Now you don’t have to. dbForge AI Assistant can generate accurate, context-aware queries in seconds, just by interpreting your plain text request. Whether you're a developer, DBA, analyst, or manager, this tool simplifies query creation, troubleshooting, and optimization across major database systems like SQL Server, MySQL, MariaDB, Oracle, and PostgreSQL.

Integrated into a variety of dbForge tools and into dbForge Edge, a multidatabase solution, the Assistant turns natural language into SQL, explains query structure, suggests fixes, and even guides you through features of the IDE.

Explore how this smart Text to SQL AI companion works, who it's for, and why it’s quickly becoming a must-have for anyone dealing with databases.

I’ve tried it myself and honestly, it’s a great helper. It feels like having an SQL expert on call 24/7, ready to handle everything from simple SELECTs to complex JOINs.


Mastering Text-to-SQL with LLM Solutions and Overcoming Challenges

Text-to-SQL solutions powered by Large Language Models (LLMs) are transforming the way businesses interact with databases. By enabling users to query databases using natural language, these solutions are breaking down technical barriers and enhancing accessibility. However, as with any innovative technology, Text-to-SQL solutions come with their own set of challenges. This blog explores the top hurdles and provides practical tips to overcome them, ensuring a seamless and efficient experience.

The rise of AI-generated SQL

Generative AI is transforming how we work with databases. It simplifies tasks like reading, writing, and debugging complex SQL (Structured Query Language). SQL is the standard language of relational databases, and AI tools make it accessible to everyone. With natural language input, users can generate accurate SQL queries instantly. This approach saves time and enhances the user experience. AI-powered chatbots can now turn questions into SQL commands, which allows businesses to retrieve data quickly and make better decisions.

Pairing large language models (LLMs) with techniques like Retrieval-Augmented Generation (RAG) adds even more value. RAG supplies the model with relevant enterprise data at query time, which helps it deliver precise results. Companies using AI-generated SQL report 50% better query accuracy and reduced manual effort. The global AI database market is growing rapidly and is expected to reach $4.5 billion by 2026 (MarketsandMarkets). Text-to-SQL tools are becoming essential for modern businesses, helping them extract value from data faster and more efficiently than ever before.

Understanding LLM-based text-to-SQL

Large Language Models (LLMs) make database management simpler and faster. They convert plain language prompts into SQL queries. These queries can range from simple data requests to complex tasks using multiple tables and filters. This makes it easy for non-technical users to access company data. By breaking down coding barriers, LLMs help businesses unlock valuable insights quickly.

Integrating LLMs with techniques like Retrieval-Augmented Generation (RAG) adds even more value. Chatbots using this technology can give personalized, accurate responses to customer questions by accessing live data. LLMs are also useful for internal tasks like training new employees or sharing knowledge across teams. Their ability to personalize interactions improves customer experience and builds stronger relationships.

AI-generated SQL is powerful, but it has risks. Poorly optimized queries can slow systems, and unsecured access may lead to data breaches. To avoid these problems, businesses need strong safeguards like access controls and query checks. With proper care, LLM-based text-to-SQL can make data more accessible and useful for everyone.

Key Challenges in Implementing LLM-Powered Text-to-SQL Solutions

Text-to-SQL solutions powered by large language models (LLMs) offer significant benefits but also come with challenges that need careful attention. Below are some of the key issues that can impact the effectiveness and reliability of these solutions.

Understanding Complex Queries

One challenge in Text-to-SQL solutions is handling complex queries. For example, a query that includes multiple joins or nested conditions can confuse LLMs. A user might ask, “Show me total sales from last month, including discounts and returns, for product categories with over $100,000 in sales.” This requires multiple joins and filters, which can be difficult for LLMs to handle, leading to inaccurate results.
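To show why this request is hard, here is one hand-written SQL answer to it, run through Python's built-in sqlite3 module. This is a minimal sketch under stated assumptions. The tables sales and sales_returns and their columns are hypothetical. "Over $100,000 in sales" is read as gross sales before discounts, and "last month" is passed in as explicit May 2024 bounds.

```python
import sqlite3

# Hypothetical schema for illustration; real table and column names will differ.
SCHEMA = """
CREATE TABLE sales         (category TEXT, amount REAL, discount REAL, sold_on TEXT);
CREATE TABLE sales_returns (category TEXT, amount REAL, returned_on TEXT);
"""

# CTEs split the request into steps that can each be checked on their own:
# gross sales and discounts per category, returns per category, then the filter.
TOTAL_SALES_SQL = """
WITH gross AS (
    SELECT category, SUM(amount) AS gross_sales, SUM(discount) AS discounts
    FROM sales
    WHERE sold_on >= :start_date AND sold_on < :end_date
    GROUP BY category
),
refunds AS (
    SELECT category, SUM(amount) AS returned
    FROM sales_returns
    WHERE returned_on >= :start_date AND returned_on < :end_date
    GROUP BY category
)
SELECT g.category,
       g.gross_sales - g.discounts - COALESCE(r.returned, 0) AS total_sales
FROM gross AS g
LEFT JOIN refunds AS r ON r.category = g.category
WHERE g.gross_sales > 100000          -- assumed reading of "over $100,000 in sales"
ORDER BY total_sales DESC
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", [
    ("Laptops", 90000, 5000, "2024-05-03"),
    ("Laptops", 30000, 1000, "2024-05-20"),
    ("Cables", 4000, 0, "2024-05-11"),      # below the threshold
    ("Laptops", 50000, 0, "2024-04-28"),    # outside "last month"
])
conn.execute("INSERT INTO sales_returns VALUES ('Laptops', 2000, '2024-05-15')")

# "Last month" is passed in as explicit bounds (May 2024 here).
rows = conn.execute(
    TOTAL_SALES_SQL, {"start_date": "2024-05-01", "end_date": "2024-06-01"}
).fetchall()
print(rows)  # [('Laptops', 112000.0)]
```

Even this small version needs two date filters, two aggregations, an outer join, and a threshold. Each of these is a place where a generated query can silently go wrong.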

Database Schema Mismatches

LLMs need to understand the database schema to generate correct SQL queries. If the schema is inconsistent or poorly documented, errors can occur. For example, if a table is renamed from orders to sales, an LLM working from outdated schema information might still reference the old table name. A query like “SELECT * FROM orders WHERE order_date > '2024-01-01';” will fail because the table is now called sales.
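One cheap safeguard is to ask the database to compile a generated query before running it. The sketch below does this with SQLite's EXPLAIN through Python's sqlite3 module, so a stale table name is caught up front. The function name check_against_schema is hypothetical, and other databases offer their own prepare or explain mechanisms.

```python
from __future__ import annotations

import sqlite3


def check_against_schema(conn: sqlite3.Connection, sql: str) -> str | None:
    """Return None if SQLite can compile `sql` against the live schema,
    otherwise the database's error message."""
    try:
        # EXPLAIN makes SQLite prepare the statement (resolving every table and
        # column name) and return its bytecode, without running the query.
        conn.execute("EXPLAIN " + sql)
    except sqlite3.Error as exc:
        return str(exc)
    return None


conn = sqlite3.connect(":memory:")
# The table was renamed from orders to sales.
conn.execute("CREATE TABLE sales (id INTEGER, order_date TEXT)")

stale = "SELECT * FROM orders WHERE order_date > '2024-01-01';"
fresh = "SELECT * FROM sales WHERE order_date > '2024-01-01';"
print(check_against_schema(conn, stale))  # no such table: orders
print(check_against_schema(conn, fresh))  # None
```

The error message can also be fed back to the model as a hint for a corrected attempt.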

Ambiguity in Natural Language

Natural language can be unclear, which makes it hard for LLMs to generate accurate SQL. For instance, a user might ask, “Get all sales for last year.” Does this mean the last 12 months or the previous calendar year? The LLM might generate a query for the calendar year, like “SELECT * FROM sales WHERE sales_date BETWEEN '2023-01-01' AND '2023-12-31';”, when the user meant the past 12 months.
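To make the two readings concrete, the minimal Python sketch below computes each as a half-open date range that could be bound into the same parameterized filter. The function names are hypothetical. The Feb 29 fallback to Feb 28 is one reasonable choice, not the only one.

```python
from __future__ import annotations

from datetime import date


def previous_calendar_year(today: date) -> tuple[date, date]:
    """'Last year' as the previous calendar year, as a half-open [start, end) range."""
    return date(today.year - 1, 1, 1), date(today.year, 1, 1)


def trailing_12_months(today: date) -> tuple[date, date]:
    """'Last year' as the 12 months ending just before today, half-open [start, end)."""
    try:
        start = today.replace(year=today.year - 1)
    except ValueError:
        # today is Feb 29 and the previous year has no leap day: fall back to Feb 28.
        start = today.replace(year=today.year - 1, day=28)
    return start, today


# Either range can be bound into the same parameterized filter.
SQL = "SELECT * FROM sales WHERE sales_date >= ? AND sales_date < ?"
today = date(2024, 6, 15)
for label, (start, end) in [
    ("calendar year", previous_calendar_year(today)),
    ("past 12 months", trailing_12_months(today)),
]:
    print(label, start.isoformat(), end.isoformat())
```

Because both interpretations produce different ranges for the same question, the system should ask the user which one they mean rather than pick one silently.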

Performance Limitations

AI-generated SQL may not always be optimized for performance. A simple request like “Get all customers who made five or more purchases last month” might result in an inefficient SQL query. For example, the LLM might generate a query that retrieves all customer records and then counts purchases, instead of using aggregation (GROUP BY with a HAVING filter). This could slow down the database, especially with large datasets.
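Here is a minimal sketch of the efficient shape for this request, run in Python's sqlite3. The database filters, groups, and counts, and it returns only the qualifying customers. The purchases table and its columns are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE purchases (customer_id INTEGER, purchased_on TEXT)")
rows = [(1, f"2024-05-{d:02d}") for d in range(1, 6)]   # customer 1: five purchases in May
rows += [(2, "2024-05-02"), (2, "2024-05-09")]          # customer 2: two purchases
rows += [(3, f"2024-04-{d:02d}") for d in range(1, 8)]  # customer 3: seven, but in April
conn.executemany("INSERT INTO purchases VALUES (?, ?)", rows)

# Filter to the month, group per customer, and keep groups with 5+ rows,
# all inside the database, instead of pulling every row into the application.
FREQUENT_BUYERS_SQL = """
SELECT customer_id, COUNT(*) AS purchase_count
FROM purchases
WHERE purchased_on >= ? AND purchased_on < ?
GROUP BY customer_id
HAVING COUNT(*) >= 5
"""
result = conn.execute(FREQUENT_BUYERS_SQL, ("2024-05-01", "2024-06-01")).fetchall()
print(result)  # [(1, 5)]
```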

Security Risks

Text-to-SQL solutions can open the door to security issues if inputs aren’t validated. For example, an attacker could enter harmful code, like “DROP TABLE users;”, or phrase a request so that the model itself generates a destructive statement. Without proper input validation, this could lead to an SQL injection attack. To protect against this, use parameterized queries, sanitize inputs, and check the generated SQL before it runs.
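A simple defense-in-depth sketch in Python's sqlite3 has two layers. First, a heuristic check that lets through only a single statement starting with SELECT or WITH. Second, a connection put into SQLite's query_only mode, so writes fail even if something slips past the check. The names guard_generated_sql and UnsafeSQLError are hypothetical. The semicolon check is deliberately crude and is not a full SQL parser.

```python
import sqlite3


class UnsafeSQLError(ValueError):
    """Raised when generated SQL is not a single read-only query."""


def guard_generated_sql(sql: str) -> str:
    """Heuristic check: allow one statement that starts with SELECT or WITH."""
    body = sql.strip().rstrip(";").strip()
    if ";" in body:
        # Crude: also rejects semicolons inside string literals (the safe direction).
        raise UnsafeSQLError("multiple statements are not allowed")
    first_word = body.split(None, 1)[0].upper() if body else ""
    if first_word not in {"SELECT", "WITH"}:
        raise UnsafeSQLError(f"only SELECT queries are allowed, got {first_word or 'nothing'}")
    return body


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
conn.execute("INSERT INTO users VALUES (1, 'ada')")
conn.commit()
# Second layer: this connection now refuses INSERT/UPDATE/DELETE/DROP etc.
conn.execute("PRAGMA query_only = ON")

for candidate in ["SELECT name FROM users;", "DROP TABLE users;", "SELECT 1; DROP TABLE users"]:
    try:
        print(conn.execute(guard_generated_sql(candidate)).fetchall())
    except UnsafeSQLError as exc:
        print("blocked:", exc)
```

In production, the same idea is usually enforced with a database account that only has read permissions.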

Tips to Overcome Challenges in Text-to-SQL Solutions

Text-to-SQL solutions offer great potential, but they also come with challenges. Here are some practical tips to overcome these common issues and improve the accuracy, performance, and security of your SQL queries.

Simplify Complex Queries: To handle complex queries, break them down into smaller parts. Train the LLM to process simple queries first. For example, instead of asking for “total sales, including discounts and returns, for top product categories,” split it into “total sales last month” and “returns by category.” This helps the model generate more accurate SQL.

Keep the Schema Consistent: A consistent and clear database schema is key. Regularly update the LLM with any schema changes, and use automated tools to track schema updates. This ensures the LLM generates SQL queries based on the current schema.

Clarify Ambiguous Language: Ambiguous language can confuse the LLM. To fix this, prompt users for more details. For example, if a user asks for “sales for last year,” ask whether they mean the last 12 months or the previous calendar year. This helps generate more accurate queries.

Optimize SQL for Performance: Make sure the LLM generates efficient queries. Use indexing and aggregation to speed up queries, and review generated queries for performance before running them on large databases. This helps avoid slowdowns, especially with big data.
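Reviewing a generated query can be as simple as asking the database for its execution plan. Here is a minimal sketch using SQLite's EXPLAIN QUERY PLAN from Python's sqlite3. It compares the plan before and after adding an index. The table, the index name, and the query_plan helper are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list:
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE purchases (customer_id INTEGER, amount REAL, purchased_on TEXT)")

# A query as an LLM might generate it; review its plan before running it at scale.
generated = "SELECT SUM(amount) FROM purchases WHERE customer_id = ?"

before = query_plan(conn, generated, (42,))   # expect a full-table SCAN
conn.execute("CREATE INDEX idx_purchases_customer ON purchases (customer_id)")
after = query_plan(conn, generated, (42,))    # expect a SEARCH using the new index

print(before)
print(after)
```

A plan that scans a large table for a selective filter is a signal to add an index or to reject the generated query.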

Enhance Security Measures: To prevent SQL injection attacks, validate and sanitize user inputs and use parameterized queries. Regularly audit the SQL generation process for security issues. This makes the generated queries safer.
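Parameterized queries matter wherever the application inserts user-supplied values into SQL, for example filter values collected in a chat. Here is a minimal sketch with Python's sqlite3 and a hypothetical customers table. It contrasts string splicing with a bound parameter.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])

user_value = "nobody' OR '1'='1"  # hostile value typed into a search box

# Unsafe: the value is spliced into the SQL text and changes the query's logic,
# so the WHERE clause becomes always true and every customer is returned.
unsafe_sql = f"SELECT name FROM customers WHERE name = '{user_value}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the value is sent separately as a bound parameter and compared literally.
safe_rows = conn.execute("SELECT name FROM customers WHERE name = ?", (user_value,)).fetchall()

print(unsafe_rows)
print(safe_rows)  # []
```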

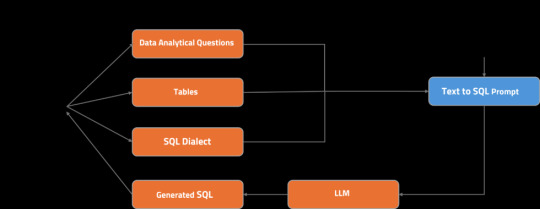

Let’s take a closer look at this Text-to-SQL architecture:

The user asks an analytical question, choosing the tables to be used.

The relevant table schemas are retrieved from the table metadata store.

The question, selected SQL dialect, and table schemas are compiled into a Text-to-SQL prompt.

The prompt is fed into the LLM.

A streaming response is generated and displayed to the user.
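The first three steps above can be sketched in Python. SQLite's own sqlite_master catalog stands in for the table metadata store, and the prompt wording is made up for illustration. The function names are hypothetical. Steps 4 and 5 depend on the model provider's client library, so they are left out.

```python
from __future__ import annotations

import sqlite3


def fetch_table_schemas(conn: sqlite3.Connection, tables: list[str]) -> list[str]:
    """Step 2: look up the CREATE statements for the user-selected tables."""
    # Only "?" placeholders are formatted into the SQL; table names are bound values.
    placeholders = ", ".join("?" for _ in tables)
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        f"WHERE type = 'table' AND name IN ({placeholders}) ORDER BY name",
        tables,
    ).fetchall()
    return [sql for (sql,) in rows]


def build_text_to_sql_prompt(question: str, dialect: str, schemas: list[str]) -> str:
    """Step 3: combine the question, SQL dialect, and schemas into one prompt."""
    schema_block = "\n\n".join(schemas)
    return (
        f"You translate questions into {dialect} SQL.\n"
        f"Use only these tables:\n\n{schema_block}\n\n"
        f"Question: {question}\n"
        "Return a single SQL query and nothing else."
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer_id INTEGER, total REAL)")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.execute("CREATE TABLE audit_log (id INTEGER, note TEXT)")  # not selected, not sent

# Step 1: the user's question plus the tables they chose.
prompt = build_text_to_sql_prompt(
    "Which customers spent the most last month?",
    "SQLite",
    fetch_table_schemas(conn, ["orders", "customers"]),
)
print(prompt)
```

Sending only the selected tables keeps the prompt short and reduces the chance that the model joins against unrelated tables.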

Real-World Examples of Text-to-SQL Challenges and Solutions

Example 1: Handling Nested Queries

A financial analytics company wanted monthly revenue trends and year-over-year growth data. The initial Text-to-SQL solution couldn’t generate the correct nested query for the growth calculation. After the LLM was trained with examples of revenue calculations, the system could generate accurate SQL queries for monthly data and growth.
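Here is one way such a growth query can be written, sketched in Python's sqlite3 with a hypothetical invoices table. An inner query (a CTE) totals revenue per month. It is then joined to itself on "same month, one year earlier" to compute year-over-year growth. This is an illustration of the technique, not the company's actual query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (booked_on TEXT, amount REAL)")
conn.executemany("INSERT INTO invoices VALUES (?, ?)", [
    ("2023-01-10", 100.0), ("2023-01-25", 100.0),  # Jan 2023 total: 200
    ("2024-01-05", 250.0),                          # Jan 2024 total: 250
    ("2024-02-14", 80.0),                           # Feb 2024: no Feb 2023 to compare with
])

YOY_SQL = """
WITH monthly AS (                         -- inner query: revenue per calendar month
    SELECT strftime('%Y-%m', booked_on) AS month, SUM(amount) AS revenue
    FROM invoices
    GROUP BY month
)
SELECT cur.month,
       cur.revenue,
       prev.revenue AS revenue_prior_year,
       ROUND(100.0 * (cur.revenue - prev.revenue) / prev.revenue, 1) AS yoy_growth_pct
FROM monthly AS cur
LEFT JOIN monthly AS prev                 -- same month, one year earlier
       ON prev.month = strftime('%Y-%m', cur.month || '-01', '-1 year')
ORDER BY cur.month
"""
rows = conn.execute(YOY_SQL).fetchall()
for row in rows:
    print(row)
```

Months with no prior-year data come back with NULL (None) growth instead of disappearing, because the self-join is a LEFT JOIN.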

Example 2: Ambiguity in User Input

A user asked, “Show me the sales data for last quarter.” The LLM initially generated a query without specifying the quarter’s exact date range. To fix this, the system was updated to ask, “Do you mean Q3 2024?” This clarified the request and improved query accuracy.

Example 3: Handling Complex Joins and Filters

A marketing team asked for the total number of leads and total spend for each campaign last month. The LLM struggled to generate the SQL because of complex joins between tables like leads, campaigns, and spend. The solution was to break the query into smaller parts: first retrieve the leads, then the total spend, and finally join the data.
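Here is a sketch of that three-part approach in Python's sqlite3. The campaigns, leads, and spend tables and their columns are hypothetical. Each part is a CTE that aggregates on its own, and the final query joins the pre-aggregated results.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE campaigns (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE leads     (campaign_id INTEGER, created_on TEXT);
CREATE TABLE spend     (campaign_id INTEGER, spent_on TEXT, amount REAL);
INSERT INTO campaigns VALUES (1, 'Spring Sale'), (2, 'Webinar');
INSERT INTO leads VALUES (1, '2024-05-02'), (1, '2024-05-09'), (2, '2024-05-20');
INSERT INTO spend VALUES (1, '2024-05-01', 300), (1, '2024-05-15', 200), (2, '2024-05-10', 150);
""")

CAMPAIGN_SQL = """
WITH lead_counts AS (                      -- part 1: leads per campaign last month
    SELECT campaign_id, COUNT(*) AS total_leads
    FROM leads
    WHERE created_on >= :start_date AND created_on < :end_date
    GROUP BY campaign_id
),
spend_totals AS (                          -- part 2: spend per campaign last month
    SELECT campaign_id, SUM(amount) AS total_spend
    FROM spend
    WHERE spent_on >= :start_date AND spent_on < :end_date
    GROUP BY campaign_id
)
SELECT c.name,                             -- part 3: join the pre-aggregated parts
       COALESCE(l.total_leads, 0) AS total_leads,
       COALESCE(s.total_spend, 0) AS total_spend
FROM campaigns AS c
LEFT JOIN lead_counts  AS l ON l.campaign_id = c.id
LEFT JOIN spend_totals AS s ON s.campaign_id = c.id
ORDER BY c.name
"""
rows = conn.execute(CAMPAIGN_SQL, {"start_date": "2024-05-01", "end_date": "2024-06-01"}).fetchall()
for row in rows:
    print(row)
```

Aggregating before joining is the important design choice. Joining leads to spend row by row would pair every lead with every spend entry for the same campaign and inflate both totals.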

Example 4: Handling Unclear Date Ranges

A user requested, “Show me the revenue data from the last six months.” The LLM couldn’t determine whether the user meant 180 days or six calendar months. The system was updated to ask, “Do you mean the last six calendar months or 180 days?” This ensured the query was accurate.

Example 5: Handling Multiple Aggregations

A retail analytics team wanted to know the average sales per product category and the total sales for the past quarter. The LLM initially failed to perform the aggregation correctly. After training, the system could use functions like AVG() for average sales and SUM() for total sales in a single, optimized query.
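Here is a sketch of such a single query in Python's sqlite3, using a hypothetical sales table. It returns AVG() and SUM() per category, plus the quarter's overall total computed with a window function over the grouped rows. Window functions need SQLite 3.25 or newer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (category TEXT, amount REAL, sold_on TEXT)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", [
    ("Books", 10.0, "2024-04-03"),
    ("Books", 30.0, "2024-05-17"),
    ("Games", 60.0, "2024-06-01"),
    ("Games", 999.0, "2024-01-15"),   # outside the quarter, excluded
])

QUARTER_SQL = """
SELECT category,
       AVG(amount)              AS avg_sale,
       SUM(amount)              AS category_sales,
       SUM(SUM(amount)) OVER () AS quarter_total   -- window over the grouped rows
FROM sales
WHERE sold_on >= :start_date AND sold_on < :end_date
GROUP BY category
ORDER BY category
"""
rows = conn.execute(QUARTER_SQL, {"start_date": "2024-04-01", "end_date": "2024-07-01"}).fetchall()
for row in rows:
    print(row)
```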

Example 6: Handling Non-Standard Input

A customer service chatbot retrieved customer order history for an e-commerce company. A user typed, “Show me orders placed between March and April 2024,” but the system didn’t know how to interpret the date range. The solution was to automatically infer the first and last dates of those months, so the query worked without requiring exact dates.
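Once a phrase like “between March and April 2024” has been parsed into a year and two months (the parsing itself is not shown), the date bounds can be inferred with Python's calendar module. The month_span helper and the orders table in the SQL string are hypothetical. The range is half-open, so it covers the whole last day of April.

```python
from __future__ import annotations

import calendar
from datetime import date, timedelta


def month_span(year: int, first_month: int, last_month: int) -> tuple[date, date]:
    """Half-open [start, end) range covering whole calendar months."""
    start = date(year, first_month, 1)
    last_day = calendar.monthrange(year, last_month)[1]  # days in last_month
    # Adding one day to the last day also handles December (rolls into January).
    end = date(year, last_month, last_day) + timedelta(days=1)
    return start, end


start, end = month_span(2024, 3, 4)
sql = "SELECT * FROM orders WHERE order_date >= ? AND order_date < ?"
params = (start.isoformat(), end.isoformat())
print(params)  # ('2024-03-01', '2024-05-01')
```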

Example 7: Improperly Handling Null Values

A user requested, “Show me all customers who haven’t made any purchases in the last year.” The LLM’s query missed customers who had no matching purchase records at all. After the system was trained to handle missing rows with a LEFT JOIN and an IS NULL check, the query correctly returned customers with no purchases.
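Here is a minimal sketch of the LEFT JOIN plus IS NULL pattern (an anti-join) in Python's sqlite3, with hypothetical customers and purchases tables and a fixed cutoff date standing in for "the last year".

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE purchases (customer_id INTEGER, purchased_on TEXT);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
INSERT INTO purchases VALUES (1, '2024-03-10'), (2, '2022-11-02');
""")

INACTIVE_SQL = """
SELECT c.name
FROM customers AS c
LEFT JOIN purchases AS p
       ON p.customer_id = c.id
      AND p.purchased_on >= :since    -- date filter in ON keeps unmatched customers
WHERE p.customer_id IS NULL           -- no purchase in the window (or ever)
ORDER BY c.name
"""
rows = conn.execute(INACTIVE_SQL, {"since": "2023-06-01"}).fetchall()
print(rows)  # [('Grace',), ('Linus',)]
```

The placement of the date filter is the subtle part. Moving it from ON into WHERE would discard the NULL rows produced by the LEFT JOIN, and customers like Linus, who never bought anything, would vanish from the result.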

Future Trends in LLM-Powered Text-to-SQL Solutions

As LLMs continue to evolve, their Text-to-SQL capabilities will become even more robust. Key trends to watch include:

AI-Driven Query Optimization: Future Text-to-SQL solutions will improve performance by optimizing queries, especially for large datasets. AI will learn from past queries, suggest better approaches, and increase query efficiency. This will reduce slow database operations and enhance overall performance.

Expansion of Domain-Specific LLMs: Domain-specific LLMs will be customized for industries like healthcare, finance, and e-commerce. These models will understand specific terms and regulations in each sector. This will make SQL queries more accurate and relevant, cutting down on the need for manual corrections.

Natural Language Interfaces for Database Management: LLM-powered solutions will allow non-technical users to manage databases using simple conversational interfaces. Users can perform complex tasks, such as schema changes or data transformations, without writing SQL. This makes data management more accessible to everyone in the organization.

Integration with Advanced Data Analytics Tools: LLM-powered Text-to-SQL solutions will integrate with data analytics tools. This will help users generate SQL queries for advanced insights, predictive analysis, and visualizations. As a result, businesses will be able to make data-driven decisions without needing technical expertise.

Conclusion

Implementing AI-generated SQL solutions comes with challenges, but these can be effectively addressed with the right strategies. By focusing on schema consistency, query optimization, and user-centric design, businesses can unlock the full potential of these solutions. As technology advances, AI-generated SQL tools will become even more powerful, enabling seamless database interactions and driving data-driven decision-making.

Ready to transform your database interactions? Register for free and explore EzInsights AI Text to SQL today to make querying as simple as having a conversation.

For more related blogs visit: EzInsights AI


Idea Frontier #4: Enterprise Agentics, DaaS, Self-Improving LLMs

TL;DR: Edition #4 zeroes in on three tectonic shifts for AI founders. Enterprise Agentics: agent frameworks such as Google’s new ADK, CrewAI and AutoGen are finally hardened for production, and AWS just shipped a reference pattern for an enterprise-grade text-to-SQL agent; add DB-Explore + Dynamic-Tool-Selection and you get a realistic playbook for querying 100-table warehouses with…

#ai#AI Agents#CaseMark#chatGPT#DaaS#DeepSeek#Enterprise AI#Everstream#generative AI#Idea Frontier#llm#LoRA#post-training LLMs#Predibase#Reinforcement learning#RLHF#text-to-SQL


work complaints

some funny experiences ive had lately: my boss asking some AI bot to do the same steps i had just done + was offering to walk him through (the bot didnt correctly generate whatever text needed to be generated and he ended up asking me anyway)

also i wrote a sql query that performed really well for a very annoying and time-sensitive problem, and then had 3 guys i work with explain to me why it performed so well. like sure u can write a query without understanding it, but the reason it performed well was that i DID understand it lmfao. in this particular case. like its annoying but its also really funny


Is cPanel on Its Deathbed? A Tale of Technology, Profits, and a Slow-Moving Train Wreck

Ah, cPanel. The go-to control panel for many web hosting services since the dawn of, well, web hosting. Once the epitome of innovation, it’s now akin to a grizzled war veteran, limping along with a cane and wearing an “I Survived Y2K” t-shirt. So what went wrong? Let’s dive into this slow-moving technological telenovela, rife with corporate greed, security loopholes, and a legacy that may be hanging by a thread.

Chapter 1: A Brief, Glorious History (Or How cPanel Shot to Stardom)

Once upon a time, cPanel was the bee’s knees. Launched in 1996, this software was, for a while, the pinnacle of web management systems. It promised simplicity, reliability, and functionality. Oh, the golden years!

Chapter 2: The Tech Stack Tortoise

In the fast-paced world of technology, being stagnant is synonymous with being extinct. While newer tech stacks are integrating AI, machine learning, and all sorts of jazzy things, cPanel seems to be stuck in a time warp. Why? Because the tech stack is more outdated than a pair of bell-bottom trousers. No Docker, no Kubernetes, and don’t even get me started on the lack of robust API support.

Chapter 3: “The Corpulent Corporate”

In 2018, Oakley Capital, a private equity firm, acquired cPanel. For many, this was the beginning of the end. Pricing structures were jumbled, turning into a monetisation extravaganza. It’s like turning your grandma’s humble pie shop into a mass production line for rubbery, soulless pies. They’ve squeezed every ounce of profit from it, often at the expense of the end-users and smaller hosting companies.

Chapter 4: Security—or the Lack Thereof

Ah, the elephant in the room. cPanel has had its fair share of vulnerabilities. Whether it’s SQL injection flaws, privilege escalation, or simple, plain-text passwords (yes, you heard right), cPanel often appears in the headlines for all the wrong reasons. It’s like that dodgy uncle at family reunions who always manages to spill wine on the carpet; you know he’s going to mess up, yet somehow he’s always invited.

Chapter 5: The (Dis)loyal Subjects—The Hosting Companies

Remember those hosting companies that once swore by cPanel? Well, let’s just say some of them have been seen flirting with competitors at the bar. Newer, shinier control panels are coming to market, offering modern tech stacks and, gasp, lower prices! It’s like watching cPanel’s loyal subjects slowly turn their backs, one by one.

Chapter 6: The Alternatives—Not Just a Rebellion, but a Revolution

Plesk, Webmin, DirectAdmin, oh my! Rivals are gaining ground, offering updated tech stacks, more customizable APIs, and, wait for it, better security protocols. They’re the Han Solos to cPanel’s Jabba the Hutt: faster, sleeker, and without the constant drooling.

Conclusion: The Twilight Years or a Second Wind?

The debate rages on. Is cPanel merely an ageing actor waiting for its swan song, or can it adapt and evolve, perhaps surprising us all? Either way, the story of cPanel serves as a cautionary tale: adapt or die. And for heaven’s sake, update your tech stack before it becomes a relic in a technology museum, right between floppy disks and dial-up modems.

This outline only scratches the surface, but it’s a start. If cPanel wants to avoid becoming the Betamax of web management systems, it had better start evolving, stat. Cheers!

#hosting#wordpress#cpanel#webdesign#servers#websites#webdeveloper#technology#tech#website#developer#digitalagency#uk#ukdeals#ukbusiness#smallbussinessowner


Optimizing Business Operations with Advanced Machine Learning Services

Machine learning has gained popularity in recent years as more businesses adopt it. Traditional machine learning, however, requires a lot of technical infrastructure work: managing data pipelines, maintaining servers, and building machine learning models from scratch. Machine learning services automate many of these processes, which lets businesses get a platform up and running much more quickly.

What is machine learning?

Machine learning is a branch of artificial intelligence focused on learning from data; deep learning and neural networks are examples of it. A machine learning system starts with a dataset and learns to extract relevant patterns from it.

Machine learning technologies power computer vision, speech recognition, face identification, predictive analytics, regression analysis, and more.

For what purpose is it used?

Many use cases, such as churn prevention and support ticket categorization, make use of machine learning as a service (MLaaS). The key benefit of MLaaS is that it lets you delegate machine learning's laborious tasks: you won't need to install software, configure servers, or maintain infrastructure. All you have to do is choose the column to be predicted, connect the relevant training data, and let the software do its magic.

Natural Language Processing

By examining social media posts and the tone of customer reviews, natural language processing helps businesses better understand their clientele. ML services enable them to make more informed choices about selling their goods and services, including providing automated help or highlighting superior substitutes. Machine learning can also sort incoming customer inquiries into distinct groups, helping businesses allocate their resources and time.

Predicting

Another use of machine learning is forecasting, which allows businesses to project future occurrences based on existing data. For example, businesses that need to estimate the costs of their goods, services, or clients might utilize MLaaS for cost modelling.

Data Exploration

Investigating variables, examining correlations between variables, and displaying associations are all part of data exploration. Businesses may generate informed suggestions and contextualize vital data using machine learning.

Anomaly Detection

Another crucial component of machine learning is anomaly detection, which finds anomalous occurrences like fraud. This technology is especially helpful for businesses that lack the means or know-how to create their own systems for identifying anomalies.

Examining And Comprehending Datasets

Machine learning provides an alternative to manual dataset searching and comprehension by converting text searches into SQL queries using algorithms trained on millions of samples. Regression analysis is used to determine the correlations between variables, such as how various product attributes or advertising channels affect sales and customer satisfaction.

Image Recognition

Image recognition is an area of machine learning that is very useful for mobile apps, security, and healthcare. Some companies have used it to create lucrative mobile applications. Businesses also use recommendation engines, another machine learning application, to promote music or goods to consumers.

Your understanding of AI may shift drastically. Many people used to believe that AI was financially out of reach for anyone but large corporations. Thanks to machine learning services, however, anyone can now use this technology.


I don't really think they're like, as useful as people say, but there are genuine use cases I feel -- just not for the massive, public-facing, plagiarism machine garbage fire ones. I don't work in enterprise, I work in game dev, so this goes off of what I have been told, but -- take a company like Oracle, for instance. Massive databases, massive codebases. People I know who work there have told me that their internally trained LLM is really good at parsing plain language questions about, say, where a function is, where a bit of data is, etc., and outputting a legible answer. Yes, search engines can do this too, but if you've worked on massive datasets -- well, conventional search methods tend to perform rather poorly.

From people I know at Microsoft, there's an internal-use version of co-pilot weighted to favor internal MS answers that still will hallucinate, but it is also really good at explaining and parsing out code that has even the slightest of documentation, and can be good at reimplementing functions, or knowing where to call them, etc. I don't necessarily think this use of LLMs is great, but it *allegedly* works and I'm inclined to trust programmers on this subject (who are largely AI critical, at least wrt chatGPT and Midjourney etc), over "tech bros" who haven't programmed in years and are just execs.

I will say one thing that is consistent, and that I have actually witnessed myself: most people working on enterprise code seem to indicate that LLMs are really good at writing boilerplate code (which isn't hard per se, but extremely tedious), and also really good at writing SQL queries. Which, that last one is fair. No one wants to write SQL queries.

To be clear, this isn't a defense of the "genAI" fad by any means. chatGPT is unreliable at best, and straight up making shit up at worst. Midjourney is stealing art and producing nonsense. Voice labs are undermining the rights of voice actors. But, as a programmer at least, I find the idea of how LLMs work to be quite interesting. They really are very advanced versions of old text parsers like you'd see in old games like ZORK, but instead of being tied to a prewritten lexicon, they can actually "understand" concepts.

I use "understand" in heavy quotes, but rather than being hardcoded to relate words to commands, they can connect input written in plain English (or other languages, but I'm sure it might struggle with some sufficiently different from English given that CompSci, even tech produced out of the west, is very English-centric) to concepts within a dataset and then tell you about the concepts it found in a way that's easy to parse and understand. The reason LLMs got hijacked by like, chatbots and such, is because the answers are so human-readable that, if you squint and turn your head, it almost looks like a human is talking to you.

I think that is conceptually rather interesting tech! Ofc, non-LLM Machine Learning algos are also super useful and interesting - which is why I fight back against the use of the term AI. genAI is a little bit more accurate, but I like calling things what they are. AI is such an umbrella that includes things like machine learning algos that have existed for decades, and while I don't think MOST people are against those, I see people who use, like, a machine learning tool from before the LLM craze (or a different machine learning tool) getting pushback as if they are doing genAI. To be clear, that's the fault of the marketing around LLMs and the tech bros pushing them, not the general public -- they were poorly educated, but on purpose by said PR lies.

Now, LLMs I think are way more limited in scope than tech CEOs want you to believe. They aren't the future of public internet searches (just look at google), or art creation, or serious research by any means. But, they're pretty good at searching large datasets (as long as there's no contradictory info), writing boilerplate functions, and SQL queries.

Honestly, if all they did was SQL queries, that'd be enough for me to be interested. Fuck that shit. (A little hyperbolic/sarcastic on that last part, to be clear.)

ur future nurse is using chatgpt to glide thru school u better take care of urself


The Future of Database Management with Text to SQL AI

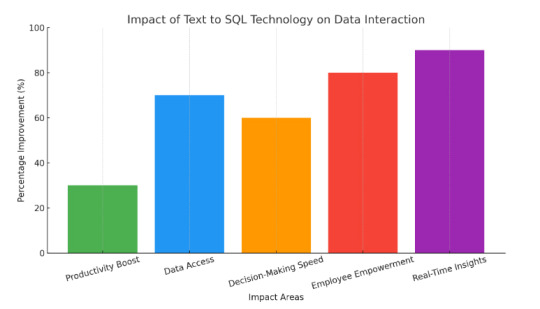

Text-to-SQL AI is transforming database management, allowing businesses to interact with data through simple language rather than complex code. Studies reveal that 65% of business users need data insights without SQL expertise, and text-to-SQL AI fulfills this need by translating everyday language into accurate database queries. For example, users can type “Show last month’s revenue” and instantly retrieve the relevant data.

As the demand for accessible data grows, text-to-SQL converter AI and generative AI are becoming essential, with the AI-driven database market expected to reach $6.8 billion by 2025. These tools reduce data retrieval times by up to 40%, making data access faster and more efficient for businesses, and driving faster, smarter decision-making.

Understanding Text to SQL AI

Text to SQL AI is an innovative approach that bridges the gap between human language and database querying. It enables users to pose questions or commands in plain English, which the AI then translates into Structured Query Language (SQL) queries. This technology significantly reduces the barriers to accessing data, allowing those without technical backgrounds to interact seamlessly with databases. For example, a user can input a simple request like, “List all customers who purchased in the last month,” and the AI will generate the appropriate SQL code to extract that information.

The Need for Text-to-SQL

As data grows, companies need easier ways to access insights without everyone having to know SQL. Text-to-SQL solves this problem by letting people ask questions in plain language and get accurate data results. This technology makes it simpler for anyone in a company to find the information they need, helping teams make decisions faster.

Text-to-SQL is also about giving more power to all team members. It reduces the need for data experts to handle basic queries, allowing them to focus on bigger projects. This easy data access encourages everyone to use data in their work, helping the company make smarter, quicker decisions.

Impact of Text to SQL Converter AI

The impact of Text-to-SQL converter AI is significant across various sectors, enhancing how users interact with databases and making data access more intuitive. Here are some key impacts:

Simplified Data Access: By allowing users to query databases using natural language, Text-to-SQL AI bridges the gap between non-technical users and complex SQL commands, democratizing data access.

Increased Efficiency: It reduces the time and effort required to write SQL queries, enabling users to retrieve information quickly and focus on analysis rather than syntax.

Error Reduction: Automated translation of text to SQL helps minimize human errors in query formulation, leading to more accurate data retrieval.

Enhanced Decision-Making: With easier access to data insights, organizations can make informed decisions faster, improving overall agility and responsiveness to market changes.

Broader Adoption of Data Analytics: Non-technical users, such as business analysts and marketers, can leverage data analytics tools without needing deep SQL knowledge, fostering a data-driven culture.

The Future of Data Interaction with Text to SQL

The future of data interaction is bright with Text to SQL technology, enabling users to ask questions in plain language and receive instant insights. For example, Walmart utilizes this technology to allow employees at all levels to access inventory data quickly, improving decision-making efficiency. Research shows that organizations adopting such solutions can boost productivity by up to 30%. By simplifying complex data queries, Text to SQL empowers non-technical users, fostering a data-driven culture. As businesses generate more data, this technology will be vital for real-time access and analysis, enabling companies to stay competitive and agile in a fast-paced market.

Benefits of Generative AI

Here are some benefits of generative AI that can significantly impact efficiency and innovation across various industries.

Automated Code Generation: In software development, generative AI can assist programmers by generating code snippets based on natural language descriptions. This accelerates the coding process, reduces errors, and enhances overall development efficiency.

Improved Decision-Making: Generative AI can analyze vast amounts of data and generate insights, helping businesses make informed decisions quickly. This capability enhances strategic planning and supports better outcomes in various operational areas.

Enhanced User Experience: By providing instant responses and generating relevant content, generative AI improves user experience on platforms. This leads to higher customer satisfaction and fosters loyalty to brands and services.

Data Augmentation: Generative AI can create synthetic data to enhance training datasets for machine learning models. This capability improves model performance and accuracy, especially when real data is limited or difficult to obtain.

Cost Reduction: By automating content creation and data analysis, generative AI reduces operational costs for businesses. This cost-effectiveness makes it an attractive solution for organizations looking to maximize their resources.

Rapid Prototyping: Organizations can quickly create prototypes and simulations using generative AI, streamlining product development. This speed allows for efficient testing of ideas, ensuring better outcomes before launching to the market.

Challenges in Database Management

Before Text-to-SQL, data analysts faced numerous challenges in database management, from complex SQL querying to dependency on technical teams for data access.

SQL Expertise Requirement: Analysts must know SQL to retrieve data accurately. For those without deep SQL knowledge, this limits efficiency and can lead to errors in query writing.

Time-Consuming Querying: Writing and testing complex SQL queries can be time-intensive. This slows down data retrieval, impacting the speed of analysis and decision-making.

Dependency on Database Teams: Analysts often rely on IT or database teams to access complex data sets, causing bottlenecks and delays, especially when teams are stretched thin.

Higher Risk of Errors Manual SQL query writing can lead to errors, such as incorrect joins or filters. These errors affect data accuracy and lead to misleading insights.

Limited Data Access for Non-Experts Without SQL knowledge, non-technical users can’t access data on their own, restricting valuable insights to those with specialized skills.

Difficulty Handling Large Datasets Complex SQL queries on large datasets require significant resources, slowing down systems and making analysis challenging for real-time insights.

Learning Curve for New Users For new analysts or team members, learning SQL adds a steep learning curve, slowing down onboarding and data access.

Challenges with Ad-Hoc Queries Creating ad-hoc queries for specific data questions can be tedious, especially when quick answers are needed, which makes real-time analysis difficult.
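To make the “incorrect joins” risk concrete, here is a small sketch using Python’s built-in sqlite3 module. The orders/order_items schema and the numbers are hypothetical. Joining orders to their line items before summing order totals counts each order once per line item, so the result is silently inflated even though the query runs without error.

```python
import sqlite3

# Hypothetical schema: one row per order, one row per line item.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL);
CREATE TABLE order_items (order_id INTEGER, sku TEXT);
INSERT INTO orders VALUES (1, 100.0), (2, 50.0);
INSERT INTO order_items VALUES (1, 'A'), (1, 'B'), (2, 'C');
""")

# Buggy: the join repeats order 1 once per line item, so its total is counted twice.
buggy = conn.execute("""
    SELECT SUM(o.total)
    FROM orders o JOIN order_items i ON i.order_id = o.id
""").fetchone()[0]

# Correct: filter with EXISTS so each order contributes its total exactly once.
correct = conn.execute("""
    SELECT SUM(o.total)
    FROM orders o
    WHERE EXISTS (SELECT 1 FROM order_items i WHERE i.order_id = o.id)
""").fetchone()[0]

print(buggy, correct)  # 250.0 150.0
```

Both queries are valid SQL, which is why this class of mistake is easy to miss whether the query is written by hand or generated.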

Real-World Applications of Text to SQL AI

Let’s explore the real-world applications of AI-driven natural language processing in transforming how businesses interact with their data.

Customer Support Optimization: Companies use Text to SQL AI to analyze customer queries quickly. Organizations report a 30% reduction in response times, enhancing customer satisfaction and loyalty.

Sales Analytics: Sales teams use Text to SQL AI for real-time data retrieval, leading to a 25% increase in revenue through faster decision-making and improved sales strategies based on accurate data insights.

Supply Chain Optimization: Companies use AI to analyze supply chain data in real time, improving logistics decision-making. This leads to a 25% reduction in delays and costs, enhancing overall operational efficiency.

Retail Customer Behaviour Analysis: Retailers use automated data retrieval to study customer purchasing patterns, gaining insights that drive personalized marketing. This strategy boosts customer engagement by 25% and increases sales conversions.

Real Estate Market Evaluation: Real estate professionals access property data and market trends with ease, allowing for informed pricing strategies. This capability enhances property sales efficiency by 35%, leading to quicker transactions.
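A minimal sketch of the pattern behind these use cases: a model turns a plain-language question into SQL, and the application checks that SQL before running it. The `generate_sql` function below is a hypothetical stand-in for an LLM call (a hard-coded lookup, not EzInsights AI’s or any vendor’s API), and the read-only check is a deliberately simple guard, not a complete SQL security layer.

```python
import sqlite3

def generate_sql(question: str) -> str:
    """Hypothetical stand-in for an LLM that translates a question into SQL."""
    canned = {
        "total sales by region": (
            "SELECT region, SUM(amount) AS total FROM sales "
            "GROUP BY region ORDER BY region"
        ),
    }
    return canned[question.lower()]

def is_read_only(sql: str) -> bool:
    """Crude guard: accept a single SELECT statement and nothing else."""
    stripped = sql.strip().rstrip(";")
    return stripped.upper().startswith("SELECT") and ";" not in stripped

def answer(conn: sqlite3.Connection, question: str) -> list:
    sql = generate_sql(question)
    if not is_read_only(sql):
        raise ValueError(f"Refusing to run non-SELECT SQL: {sql!r}")
    # EXPLAIN compiles the query against the schema without returning data,
    # so unknown tables or columns fail here before the real execution.
    conn.execute("EXPLAIN " + sql)
    return conn.execute(sql).fetchall()

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (region TEXT, amount REAL);
INSERT INTO sales VALUES ('East', 120.0), ('West', 80.0), ('East', 30.0);
""")
print(answer(conn, "Total sales by region"))  # [('East', 150.0), ('West', 80.0)]
```

In practice a guard like this would usually be backed by a read-only database account, since string checks alone are easy to get wrong.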

Conclusion

In summary, generative AI brings many benefits, from boosting creativity to making everyday tasks easier. With tools like Text to SQL AI, businesses can work smarter, save time, and make better decisions. Ready to see the difference it can make for you? Sign up for a free trial with EzInsights AI and experience powerful, easy-to-use data tools!

For more related blogs visit: EzInsights AI

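Article list for July 23, 2025

(25 articles in total)

Rambling thoughts on Text-to-SQL - Qiita

Film shoots are full of surprises! 8 “blunders” I faced as a director and how to prevent them

Three key points for introducing and using generative AI in an engineering organization

[Nobuyoshi Kodera’s Weekly Electric Zooma!] From “masking tape for chroma-key shoots” to linked Roland and Yamaha mixers: a report from the Kyushu Broadcast Equipment Show

A true AI assistant that can carry out work “from start to finish” within six months?

BASE makes Estore a wholly owned subsidiary and will deliver more value to users as a group

Technical notes on LLM inference

A company fired a new employee for repeated problem behavior (“debate-winning” tactics against colleagues, throwing tools when work went badly), but a district court ruled the dismissal invalid, a precedent that is harsh in many ways

The origins of language models: reading Shannon’s paper - M3 Tech Blog

The end of the full-stack engineer? Considering the post-generative-AI future through industrial history

Has the AI world also reached “the free lunch is over”?

Why MEXT’s teacher hiring for the “employment ice age generation” fails: on top of feeling “too little, too late,” it isn’t actually support. - Everything you've ever Dreamed

Sorting out authentication when using Azure SDKs from .NET (C#)

Damage is spreading! Repeated reports of social media accounts being hijacked and crypto wallets emptied after a Zoom call with an acquaintance [Internet literacy you gain by reading]

A Playwright migration project story: three walls we hit and tips for getting past them - Nealle Developer's Blog

The biggest differentiator is adopting WebAssembly: Fastly co-founder Tyler McMullen on the frontier of next-generation CDNs

Complete guide to Anthropic’s Desktop Extensions (DXT): a new era of local AI applications

“Orders without written terms are prohibited”: the basics of the new law freelancers should know, as Shogakukan and others receive the first violation recommendations

The post-developer era

A discerning eye for technology selection, 2025 edition

[AI slop] Stop submitting AI-generated garbage as pull requests

SmoothCSV - the ultimate CSV editor for macOS & Windows

[ITmedia News] Sony’s “660,000-yen compact camera” RX1R III gets off to a strong start: “orders far exceeded expectations,” delivery times may be affected

Elgato releases a new HDMI capture device that also supports recording on Switch 2

A stylish smartwatch that works with both iPhone and Pixel: Nothing’s “CMF Watch 3 Pro”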

AI Career Opportunities After Completing an Artificial Intelligence Course in Boston

Artificial Intelligence is no longer confined to research labs and futuristic predictions. It’s now powering real businesses, influencing major industries, and creating a wave of high-demand job roles. If you're taking or planning to take an Artificial Intelligence Course in Boston, you're in one of the best cities to launch or pivot into a career in AI.

But what does that actually look like once you complete the course?

Let’s break down the career paths, job roles, and industry demand in Boston—along with the skills and strategies you’ll need to land the right role.

Why Is Boston a Smart Place to Study and Work in AI?

Boston isn’t just an academic city. It’s a growing tech ecosystem backed by research-heavy universities, venture-backed startups, and major employers in healthcare, finance, robotics, biotech, and cybersecurity.

The city's AI job market is uniquely positioned at the intersection of cutting-edge research and applied industry demand. That means graduates of AI courses don’t just walk away with academic knowledge—they enter a workforce that actually needs their skills.

1. Machine Learning Engineer

What you’ll do: Build, train, and deploy machine learning models. You'll work on real-world problems using algorithms that improve over time with data.

Key skills needed:

Python, scikit-learn, TensorFlow, PyTorch

Data preprocessing, model tuning, feature engineering

Deployment skills (Flask, Docker, cloud platforms)

Boston hiring sectors: Healthcare tech, fintech, marketing analytics, autonomous systems

2. Data Scientist

What you’ll do: Analyze large datasets to extract insights and make data-driven decisions. Some data science roles overlap heavily with ML engineering.

Key skills needed:

Statistics, Python/R, SQL

Machine learning models for classification/regression

Data visualization (Tableau, Matplotlib, Seaborn)

Boston hiring sectors: Finance, pharmaceuticals, consulting, e-commerce

3. AI Research Assistant / Research Scientist (for Advanced Learners)

What you’ll do: Work on advancing the field of AI itself—new architectures, novel algorithms, or ethical frameworks.

Key skills needed:

Strong math foundation (linear algebra, probability, calculus)

Deep learning frameworks (TensorFlow, PyTorch)

Academic writing, experimentation, model benchmarking

Boston hiring sectors: Universities, research institutes, AI think tanks, large tech labs

4. NLP Engineer

What you’ll do: Develop systems that process and understand human language—chatbots, sentiment analysis, summarization tools, language models.

Key skills needed:

Text preprocessing, embeddings, transformers (BERT, GPT)

Hugging Face libraries, spaCy, NLTK

Experience with fine-tuning language models

Boston hiring sectors: Publishing, healthcare documentation, customer service automation

5. Computer Vision Engineer

What you’ll do: Work with image and video data to build systems like facial recognition, defect detection, autonomous navigation, or medical imaging analysis.

Key skills needed:

OpenCV, convolutional neural networks (CNNs)

Image preprocessing, annotation tools

PyTorch, TensorFlow, Keras

Boston hiring sectors: Robotics, manufacturing, smart surveillance, health imaging

6. AI Product Manager

What you’ll do: Bridge the gap between technical teams and business stakeholders. You'll define product strategy, manage timelines, and ensure the AI system solves real problems.

Key skills needed:

Understanding of ML lifecycle

Data literacy and product sense

Agile methodology, stakeholder communication

Boston hiring sectors: SaaS startups, enterprise software firms, healthtech, edtech

7. AI/ML Consultant

What you’ll do: Help businesses identify opportunities to use AI and guide implementation. You’ll scope projects, evaluate feasibility, and sometimes build proofs of concept (POCs).

Key skills needed:

Cross-domain understanding (business + tech)

Prototyping and rapid experimentation

Communication and presentation skills

Boston hiring sectors: Consulting firms, freelance contracting, innovation labs

Emerging & Niche Roles in Boston's AI Ecosystem

Here are some of the emerging roles you might not have heard of, but are growing in demand across Boston:

• AI Ethicist / Responsible AI Analyst

Focus on fairness, bias, and compliance in AI systems—particularly important in healthtech and finance.

• AI Ops Engineer

Responsible for managing model pipelines, retraining strategies, and monitoring deployed AI systems in production.

• Generative AI Developer

Build tools using large language models and diffusion models for text, image, or code generation.

Industries in Boston Actively Hiring AI Talent

If you’re planning your AI career in Boston, it's helpful to understand where the demand is concentrated:

1. Healthcare and Bioinformatics

AI in drug discovery, medical imaging, personalized treatment plans

2. Finance and Fintech

Risk modeling, fraud detection, algorithmic trading, customer personalization

3. Education Technology

Adaptive learning platforms, student performance prediction, virtual tutoring

4. Robotics and Manufacturing

Automation, quality control via vision systems, predictive maintenance

5. Marketing & Advertising

Recommendation systems, churn prediction, behavioral analytics

Boston has a healthy mix of large enterprises, startups, and research labs—all actively building and deploying AI.

Final Thoughts

An Artificial Intelligence Course in Boston isn’t just a classroom experience—it’s a gateway into a city that’s actively building the future of AI. Whether your goal is to become a machine learning engineer, AI product lead, or even a startup founder in the AI space, Boston offers the environment, the demand, and the opportunity.

The key is to focus on practical skill-building, align your learning with real industry needs, and showcase your capabilities through projects and public work.

AI is one of the few fields where curiosity, persistence, and the right training can fast-track your career in months—not years.

#Best Data Science Courses in Boston#Artificial Intelligence Course in Boston#Data Scientist Course in Boston#Machine Learning Course in Boston

This AI Paper Introduces TableRAG: A Hybrid SQL and Text Retrieval Framework for Multi-Hop Question Answering over Heterogeneous Documents

Handling questions that involve both natural language and structured tables has become an essential task in building more intelligent and useful AI systems. These systems are often expected to process content that includes diverse data types, such as text mixed with numerical tables, which are commonly found in business documents, research papers, and public reports. Understanding such documents…

The Future of B2B Marketing: AI, Automation & Alignment

As technology speeds up, B2B marketing is undergoing a dramatic transformation. Traditional methods are giving way to smarter, data-driven strategies that rely on artificial intelligence (AI), marketing automation, and stronger alignment between teams. In this article, we explore how these forces are shaping the future of B2B marketing, and what you can do to stay ahead.

AI Is the New Marketing Brain

Artificial intelligence is not just a buzzword; it is actively reshaping how B2B marketers work. From predictive analytics to real-time personalization, AI tools now help marketers make smarter decisions faster. AI can analyze massive datasets to discover trends, predict customer behavior, and even recommend specific campaign actions. For example, AI-driven tools like ChatGPT or Jasper can draft email campaigns and blog posts, or generate product descriptions tailored to specific customer personas. In the future, marketers who embrace AI will outpace competitors who still rely entirely on manual techniques.

Automation Is Doing the Heavy Lifting

Marketing automation has evolved from simple email triggers to sophisticated workflows that manage leads, score engagement, and nurture prospects across multiple channels. Tools like HubSpot, Marketo, and Salesforce Pardot let B2B teams automate repetitive tasks, freeing up time for strategic planning and creative work. Whether it’s scheduling social media posts or automating lead qualification, these systems help marketers focus on what matters most: conversion and growth. The future will bring deeper automation integrations across sales, marketing, and customer service platforms.

The Rise of Predictive Lead Scoring

Gone are the days of manually scoring leads based on gut feeling. AI and automation enable predictive lead scoring models that use historical data and behavioral signals to estimate which prospects are most likely to convert. This lets sales teams focus their efforts on high-quality leads, improving efficiency and close rates. For B2B marketers, predictive analytics is no longer optional; it’s essential for keeping your pipeline healthy and your ROI strong.
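To show what a predictive lead scoring model does in its simplest form, here is a sketch: a logistic regression fitted by plain gradient descent on a handful of historical leads, then used to score new ones. The two signals (email opens and pricing-page visits), the toy history, and the learning settings are all hypothetical; commercial marketing platforms use their own models and many more signals.

```python
import math

# Hypothetical history: (email_opens, pricing_page_visits) -> converted (1) or not (0).
history = [
    ((0, 0), 0), ((1, 0), 0), ((2, 1), 0), ((1, 1), 0),
    ((5, 3), 1), ((6, 2), 1), ((4, 4), 1), ((7, 5), 1),
]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(data, lr=0.1, epochs=2000):
    """Fit logistic-regression weights with batch gradient descent."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in data:
            err = sigmoid(w[0] * x1 + w[1] * x2 + b) - y
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        w = [w[0] - lr * grad_w[0] / n, w[1] - lr * grad_w[1] / n]
        b -= lr * grad_b / n
    return w, b

def score(lead, w, b) -> float:
    """Estimated probability that a lead converts."""
    x1, x2 = lead
    return sigmoid(w[0] * x1 + w[1] * x2 + b)

w, b = train(history)
for lead in [(6, 4), (1, 0)]:
    print(lead, round(score(lead, w, b), 2))
```

Sales teams would then sort open leads by this score; the threshold for handing a lead to sales is a business decision, not something the model decides.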

Hyper-Personalization at Scale

One of the most interesting results of AI and automation is the ability to personalize marketing at scale. Instead of sending generic email blasts, B2B marketers can now tailor content and messaging to each prospect’s industry, role, behavior, and stage in the customer journey. AI tools track digital interactions and suggest customized content in real time. For example, a CMO in the finance sector might receive a very different content experience than an IT director in healthcare, all from the same marketing system.

Sales and Marketing Alignment is Non-Negotiable

AI and automation tools only reach their full potential when sales and marketing teams are aligned. Shared goals, metrics, and systems ensure seamless handoffs from marketing-qualified leads (MQLs) to sales-qualified leads (SQLs). This alignment also improves the customer experience, reduces friction in the buyer journey, and increases close rates. In the future, the most successful B2B companies will be those that treat marketing and sales as one unified revenue team rather than two separate departments.

ABM Gets Smarter and More Scalable

Account-based marketing (ABM) has long been a go-to strategy in B2B, but AI and automation are making it smarter and easier to scale. With tools that track account behavior, intent data, and engagement across channels, marketers can deliver highly targeted campaigns to multiple decision-makers within a single organization. AI also helps prioritize which accounts to target based on their likelihood to close. As a result, ABM becomes less resource-heavy and more ROI-friendly, making it accessible even to smaller teams.

Real-Time Insights Drive Agility

The traditional approach of launching a campaign and waiting weeks for results is fading fast. With AI-powered dashboards and analytics tools, marketers now get real-time feedback on what’s working and what isn’t. This enables agile marketing: the ability to pivot strategies quickly based on live performance data. Whether it’s tweaking a LinkedIn ad or adjusting email subject lines, real-time insights allow continuous optimization, keeping campaigns fresh and effective in a fast-changing market.

Human Creativity + Machine Intelligence = The Future

KNIME Software: Empowering Data Science with Visual Workflows

By Dr. Chinmoy Pal

In the fast-growing field of data science and machine learning, professionals and researchers often face challenges in coding, integrating tools, and automating complex workflows. KNIME (Konstanz Information Miner) provides an elegant solution to these challenges through an open-source, visual workflow-based platform for data analytics, reporting, and machine learning.

KNIME empowers users to design powerful data science pipelines without writing a single line of code, making it an excellent choice for both non-programmers and advanced data scientists.

🔍 What is KNIME?

KNIME is free, open-source software for data integration, processing, analysis, and machine learning. Development began at the University of Konstanz in Germany in 2004, and since its first public release in 2006 it has evolved into a globally trusted platform used by industries, researchers, and educators alike.

Its visual interface allows users to build modular data workflows by dragging and dropping nodes (each representing a specific function) into a workspace—eliminating the need for deep programming skills while still supporting complex analysis.
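The node-and-connection idea can be sketched in a few lines of Python: each “node” is a function that takes a table (here, a list of dicts) and returns a new one, and a workflow runs the nodes in order. This only illustrates the concept; it is not KNIME’s API, and the node names are hypothetical.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Table = List[Row]
Node = Callable[[Table], Table]

def drop_missing(column: str) -> Node:
    """Node that removes rows where `column` is empty."""
    def node(table: Table) -> Table:
        return [row for row in table if row.get(column) not in (None, "")]
    return node

def row_filter(column: str, minimum: float) -> Node:
    """Node that keeps rows whose numeric `column` is at least `minimum`."""
    def node(table: Table) -> Table:
        return [row for row in table if float(row[column]) >= minimum]
    return node

def run_workflow(table: Table, nodes: List[Node]) -> Table:
    """Pass the output of each node to the next, like connected nodes on a canvas."""
    for node in nodes:
        table = node(table)
    return table

data = [
    {"customer": "a", "spend": 120},
    {"customer": "b", "spend": None},
    {"customer": "c", "spend": 40},
]
result = run_workflow(data, [drop_missing("spend"), row_filter("spend", 50)])
print(result)  # [{'customer': 'a', 'spend': 120}]
```

Keeping each step small and composable is what lets a visual tool rearrange, reuse, and rerun individual nodes without touching the rest of the workflow.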

🧠 Key Features of KNIME

✅ 1. Visual Workflow Interface

Workflows are built using drag-and-drop nodes.

Each node performs a task like reading data, cleaning, filtering, modeling, or visualizing.

✅ 2. Data Integration

Seamlessly integrates data from Excel, CSV, databases (MySQL, PostgreSQL, SQL Server), JSON, XML, Apache Hadoop, and cloud storage.

Supports ETL (Extract, Transform, Load) operations at scale.
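As a small illustration of what a single ETL step does (plain Python, not KNIME itself), the sketch below extracts rows from CSV text, transforms them (trims names, converts amounts, skips rows with no amount), and loads them into a SQLite table using only the standard library. The column names and data are hypothetical.

```python
import csv
import io
import sqlite3

RAW_CSV = """name,amount
 Alice ,10.5
Bob,
 Carol,4
"""

def extract(text: str):
    """Extract: parse CSV text into dictionaries keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim names, convert amounts to float, skip rows missing an amount."""
    return [
        (row["name"].strip(), float(row["amount"]))
        for row in rows
        if row["amount"].strip()
    ]

def load(conn: sqlite3.Connection, records) -> None:
    """Load: write the cleaned records into a table."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO payments VALUES (?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT name, amount FROM payments ORDER BY name").fetchall())
# prints [('Alice', 10.5), ('Carol', 4.0)]
```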

✅ 3. Machine Learning & AI

Built-in algorithms for classification, regression, clustering (e.g., decision trees, random forest, SVM, k-means).

Integrates with scikit-learn, TensorFlow, Keras, and H2O.ai.

AutoML workflows available via extensions.

✅ 4. Text Mining & NLP

Supports text preprocessing, tokenization, stemming, topic modeling, and sentiment analysis.

Ideal for social media, survey, or academic text data.

✅ 5. Visualization

Interactive dashboards with bar plots, scatter plots, line graphs, pie charts, and heatmaps.

Advanced charts via integration with Python, R, Plotly, or JavaScript.

✅ 6. Big Data & Cloud Support

Integrates with Apache Spark, Hadoop, AWS, Google Cloud, and Azure.

Can scale to large enterprise-level data processing.

✅ 7. Scripting Support

Custom nodes can be built using Python, R, Java, or SQL.

Flexible for hybrid workflows (visual + code).

📚 Applications of KNIME

📊 Business Analytics

Customer segmentation, fraud detection, sales forecasting.

🧬 Bioinformatics and Healthcare

Omics data analysis, patient risk modeling, epidemiological dashboards.

🧠 Academic Research

Survey data preprocessing, text analysis, experimental data mining.

🧪 Marketing and Social Media

Campaign effectiveness, social media sentiment analysis, churn prediction.

🧰 IoT and Sensor Data

Real-time streaming analysis from smart devices and embedded systems.

🛠️ Getting Started with KNIME

Download: Visit https://www.knime.com/downloads, choose your OS (Windows, Mac, Linux), and install KNIME Analytics Platform.

Explore Example Workflows: Open KNIME and browse sample workflows in the KNIME Hub.

Build Your First Workflow:

Import dataset (Excel/CSV/SQL)

Clean and transform data

Apply machine learning or visualization nodes

Export or report results

Enhance with Extensions: Add capabilities for big data, deep learning, text mining, chemistry, and bioinformatics.

💼 KNIME in Enterprise and Industry

Used by companies like Siemens, Novartis, Johnson & Johnson, Airbus, and KPMG.

Deployed for R&D analytics, manufacturing optimization, supply chain forecasting, and risk modeling.

Supports automation and scheduling for enterprise-grade analytics workflows.

📊 Use Case Example: Customer Churn Prediction

Workflow Steps in KNIME:

Load customer data (CSV or SQL)

Clean missing values

Feature engineering (recency, frequency, engagement)

Apply classification model (Random Forest)

Evaluate with cross-validation

Visualize ROC and confusion matrix

Export list of high-risk customers

This entire process can be done without any coding—using only the drag-and-drop interface.
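For readers curious what the first steps of that workflow do under the hood, here is a sketch in plain Python covering loading, filling missing values, and computing recency and frequency features. The column names, the reference date, the use of support tickets as an engagement signal, and median imputation are all assumptions; the Random Forest, cross-validation, and ROC steps are left to KNIME’s own nodes or a modeling library.

```python
import csv
import io
import statistics
from datetime import date

# Hypothetical export: one row per customer with last purchase date,
# number of orders, and (sometimes missing) support tickets.
RAW = """customer_id,last_purchase,orders,tickets
c1,2025-06-30,12,1
c2,2025-01-15,2,
c3,2025-05-20,5,3
"""

REFERENCE_DATE = date(2025, 7, 1)  # assumed "today" for recency

def load(text):
    return list(csv.DictReader(io.StringIO(text)))

def clean(rows):
    """Fill missing ticket counts with the median of the known values."""
    known = [int(r["tickets"]) for r in rows if r["tickets"]]
    fill = statistics.median(known)
    for r in rows:
        r["tickets"] = int(r["tickets"]) if r["tickets"] else fill
    return rows

def engineer(rows):
    """Recency = days since last purchase; frequency = number of orders."""
    features = []
    for r in rows:
        last = date.fromisoformat(r["last_purchase"])
        features.append({
            "customer_id": r["customer_id"],
            "recency_days": (REFERENCE_DATE - last).days,
            "frequency": int(r["orders"]),
            "tickets": r["tickets"],
        })
    return features

for f in engineer(clean(load(RAW))):
    print(f)
```

Median imputation is chosen here only because it is simple and robust to outliers; KNIME’s Missing Value node offers several strategies.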

✅ Conclusion

KNIME is a robust, scalable, and user-friendly platform that bridges the gap between complex analytics and practical use. It democratizes access to data science by allowing researchers, analysts, and domain experts to build powerful models without needing extensive programming skills. Whether you are exploring data science, automating reports, or deploying enterprise-level AI workflows, KNIME is a top-tier solution in your toolkit.

Author: Dr. Chinmoy Pal Website: www.drchinmoypal.com Published: July 2025

Unlocking Business Intelligence with Advanced Data Solutions 📊🤖

In a world where data is the new currency, businesses that fail to utilize it risk falling behind. From understanding customer behavior to predicting market trends, advanced data solutions are transforming how companies operate, innovate, and grow. By leveraging AI, ML, and big data technologies, organizations can now make faster, smarter, and more strategic decisions across industries.

At smartData Enterprises, we build and deploy intelligent data solutions that drive real business outcomes. Whether you’re a healthcare startup, logistics firm, fintech enterprise, or retail brand, our customized AI-powered platforms are designed to elevate your decision-making, efficiency, and competitive edge.

🧠 What Are Advanced Data Solutions?

Advanced data solutions combine technologies like artificial intelligence (AI), machine learning (ML), natural language processing (NLP), and big data analytics to extract deep insights from raw and structured data.

They include:

📊 Predictive & prescriptive analytics

🧠 Machine learning model development

🔍 Natural language processing (NLP)

📈 Business intelligence dashboards

🔄 Data warehousing & ETL pipelines

☁️ Cloud-based data lakes & real-time analytics

These solutions enable companies to go beyond basic reporting — allowing them to anticipate customer needs, streamline operations, and uncover hidden growth opportunities.

🚀 Why Advanced Data Solutions Are a Business Game-Changer

In the digital era, data isn’t just information — it’s a strategic asset. Advanced data solutions help businesses:

🔎 Detect patterns and trends in real time

💡 Make data-driven decisions faster

🧾 Reduce costs through automation and optimization

🎯 Personalize user experiences at scale

📈 Predict demand, risks, and behaviors

🛡️ Improve compliance, security, and data governance

Whether it’s fraud detection in finance or AI-assisted diagnostics in healthcare, the potential of smart data is limitless.

💼 smartData’s Capabilities in Advanced Data, AI & ML

With over two decades of experience in software and AI engineering, smartData has delivered hundreds of AI-powered applications and data science solutions to global clients.

Here’s how we help:

✅ AI & ML Model Development

Our experts build, train, and deploy machine learning models using Python, R, TensorFlow, PyTorch, and cloud-native ML services (AWS SageMaker, Azure ML, Google Vertex AI). We specialize in:

Classification, regression, clustering

Image, speech, and text recognition

Recommender systems

Demand forecasting and anomaly detection

✅ Data Engineering & ETL Pipelines

We create custom ETL (Extract, Transform, Load) pipelines and data warehouses to handle massive data volumes with:

Apache Spark, Kafka, and Hadoop

SQL/NoSQL databases

Azure Synapse, Snowflake, Redshift

This ensures clean, secure, and high-quality data for real-time analytics and AI models.

✅ NLP & Intelligent Automation

We integrate NLP and language models to automate:

Chatbots and virtual assistants

Text summarization and sentiment analysis

Email classification and ticket triaging

Medical records interpretation and auto-coding

✅ Business Intelligence & Dashboards

We build intuitive, customizable dashboards using Power BI, Tableau, and custom tools to help businesses:

Track KPIs in real-time

Visualize multi-source data

Drill down into actionable insights

🔒 Security, Scalability & Compliance

With growing regulatory oversight, smartData ensures that your data systems are:

🔐 End-to-end encrypted

⚖️ GDPR and HIPAA compliant

🧾 Auditable with detailed logs

🌐 Cloud-native for scalability and uptime

We follow best practices in data governance, model explainability, and ethical AI development.

🌍 Serving Global Industries with AI-Powered Data Solutions

Our advanced data platforms are actively used across industries:

🏥 Healthcare: AI for diagnostics, patient risk scoring, remote monitoring

🚚 Logistics: Predictive route optimization, fleet analytics

🏦 Finance: Risk assessment, fraud detection, portfolio analytics

🛒 Retail: Dynamic pricing, customer segmentation, demand forecasting

⚙️ Manufacturing: Predictive maintenance, quality assurance

Explore our custom healthcare AI solutions for more on health data use cases.

📈 Real Business Impact

Our clients have achieved:

🚀 40% reduction in manual decision-making time

💰 30% increase in revenue using demand forecasting tools

📉 25% operational cost savings with AI-led automation

📊 Enhanced visibility into cross-functional KPIs in real time

We don’t just build dashboards — we deliver end-to-end intelligence platforms that scale with your business.

🤝 Why Choose smartData?

25+ years in software and AI engineering

Global clients across healthcare, fintech, logistics & more

Full-stack data science, AI/ML, and cloud DevOps expertise

Agile teams, transparent process, and long-term support

With smartData, you don’t just get developers — you get a strategic technology partner.

📩 Ready to Turn Data Into Business Power?

If you're ready to harness AI and big data to elevate your business, smartData can help. Whether it's building a custom model, setting up an analytics dashboard, or deploying an AI-powered application — we’ve got the expertise to lead the way.

👉 Learn more: https://www.smartdatainc.com/advanced-data-ai-and-ml/

📞 Let’s connect and build your data-driven future.

#advanceddatasolutions #smartData #AIdevelopment #MLsolutions #bigdataanalytics #datadrivenbusiness #enterpriseAI #customdatasolutions #predictiveanalytics #datascience

How Generative AI is Changing the Role of Data Scientists

In the last few years, the rise of Generative AI has not just captured headlines but also redefined what it means to be a data scientist. From automating repetitive coding tasks to creating synthetic data and generating human-like insights, Generative AI is transforming how professionals work, learn, and add value to businesses. For aspiring data scientists in India, especially those training at institutes like 360DigiTMG in Hyderabad, these changes are both exciting and challenging, demanding new skills and a fresh mindset.

Looking forward to becoming a Data Scientist? Check out the data scientist course in Hyderabad.

The Traditional Role: Where It All Started

To understand how things are changing, it helps to look back at what the role of a data scientist looked like until a few years ago. Traditionally, data scientists were expected to collect raw data, clean and prepare it, explore and analyze it, build predictive or descriptive models, and then interpret the results for decision-makers. This often meant spending a large portion of time on tasks like writing SQL queries, cleaning data with Python or R, running statistical tests, and creating reports or dashboards.

While this work is rewarding, it can also be repetitive and time-consuming. The technical part often leaves little room for creative thinking or broader strategic work, which ironically is where data scientists can add the most value. Enter Generative AI.

The Generative AI Revolution

Generative AI refers to advanced machine learning models that don’t just analyze data; they create new content. Large Language Models (LLMs) like GPT-4 or image generators like DALL·E can write code, generate text, design images, and even draft reports that read like they were written by humans. These tools can handle tasks that once required hours of manual work.

In the context of data science, Generative AI can automatically write data-cleaning scripts, generate SQL queries, suggest ways to visualize data, and even create draft reports that summarize key findings. This doesn’t mean the data scientist’s job disappears. Instead, their role shifts from being a coder or report-writer to being a supervisor, strategist, and quality controller of what AI produces.

Automating the Routine, Elevating the Human

One of the biggest impacts of Generative AI is how it frees data scientists from routine technical tasks. For instance, a data scientist working on a customer churn model might spend days cleaning messy CRM data. With Generative AI, they can prompt an AI tool to write the first version of that cleaning code, then check and refine it. This dramatically cuts down the time spent on repetitive work.

Similarly, for data visualization, Generative AI tools can quickly create charts, dashboards, or storyboards, leaving the data scientist more time to interpret the results and advise stakeholders. In short, the boring parts get automated, while the human parts (asking the right questions, validating AI output, spotting anomalies, and aligning analysis with business goals) become more central.

New Skills: Prompting, Oversight, and Ethics

Generative AI doesn’t just change what data scientists do; it changes what they need to know. One new must-have skill is prompt engineering: crafting clear, precise instructions that guide the AI to produce the best output. Knowing how to get the right answer from an LLM or image model is quickly becoming as important as knowing how to write Python functions.

Oversight is another growing responsibility. Since Generative AI can produce inaccurate or biased results if not guided properly, data scientists must learn to review, test, and validate AI-generated code and insights. They need to ensure that the models are fair, explainable, and free from hidden pitfalls, especially when AI is used in sensitive areas like finance, healthcare, or hiring.
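One practical form of that review is to test AI-generated code against a small fixture whose correct answer is known before trusting it on real data. In the sketch below, `ai_generated_query` is a hypothetical query as a model might return it, while the fixture and the expected answer are written by the data scientist. The query contains a plausible bug (it counts order rows instead of distinct customers), and the check catches it.

```python
import sqlite3

# Hypothetical SQL returned by a generative model for
# "How many customers placed an order in July?"
ai_generated_query = """
    SELECT COUNT(customer_id) FROM orders
    WHERE order_date BETWEEN '2025-07-01' AND '2025-07-31'
"""

def build_fixture() -> sqlite3.Connection:
    """Tiny hand-made dataset whose correct answer is known in advance."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
    CREATE TABLE orders (customer_id TEXT, order_date TEXT);
    INSERT INTO orders VALUES
        ('c1', '2025-07-02'), ('c1', '2025-07-10'),
        ('c2', '2025-07-15'), ('c3', '2025-06-30');
    """)
    return conn

def check_query(sql: str, expected) -> bool:
    """Run the candidate query on the fixture and compare with the expected answer."""
    actual = build_fixture().execute(sql).fetchone()[0]
    if actual != expected:
        print(f"Mismatch: expected {expected}, got {actual}")
        return False
    return True

# Two distinct customers (c1, c2) ordered in July, but the query counts order rows.
print(check_query(ai_generated_query, expected=2))
fixed_query = ai_generated_query.replace("COUNT(customer_id)", "COUNT(DISTINCT customer_id)")
print(check_query(fixed_query, expected=2))
```

The first check prints a mismatch (3 rows versus 2 customers) and returns False; the corrected query passes.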

Finally, the ethical dimension can’t be ignored. With AI systems capable of generating data and content, issues like data privacy, security, and misuse are front and center. The modern data scientist needs a working knowledge of AI ethics and governance frameworks to ensure responsible AI use.

The Rise of New Roles

As the core tasks shift, new specialized roles are emerging within the data science field. Some data scientists are becoming prompt engineers, focusing entirely on refining how AI models are guided. Others are taking on titles like AI Quality Analyst or Model Governance Lead, focusing on validating AI outputs, monitoring for bias, and ensuring compliance with laws like GDPR.

In mature companies, entire teams now focus on LLMOps (Large Language Model Operations), a new branch of MLOps (Machine Learning Operations) that deals with deploying, monitoring, and updating generative models. This means data scientists are increasingly expected to understand not just how to build models, but how to integrate them into real-world workflows safely.

Real-World Examples: Generative AI at Work

Consider a retail company that wants to launch thousands of new products online. Writing unique product descriptions for each item would take a human team months. With Generative AI, the data science team can build a prompt that generates first drafts for all descriptions, which are then checked and polished by human editors.

Or take a bank looking to detect fraud. Generative AI can help produce synthetic datasets that mimic real customer transactions but don’t contain personal details. This allows data scientists to build and test fraud detection models without compromising customer privacy.
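A deliberately simple sketch of the synthetic-data idea: summarize real transactions per category (share of transactions, mean and standard deviation of the amount), then sample new records from those statistics with generated IDs in place of customer identities. The data and the normal-distribution assumption are hypothetical, and this naive approach on its own does not guarantee privacy; production synthetic-data tools use stronger generative models and formal privacy checks.

```python
import random
import statistics
from collections import defaultdict

# Hypothetical real transactions: (customer_name, category, amount).
real = [
    ("Asha", "grocery", 42.0), ("Ravi", "grocery", 55.0), ("Asha", "grocery", 38.0),
    ("Meena", "travel", 310.0), ("Ravi", "travel", 290.0),
]

def fit(transactions):
    """Summarize amounts per category; customer names are never kept."""
    by_cat = defaultdict(list)
    for _, category, amount in transactions:
        by_cat[category].append(amount)
    total = len(transactions)
    return {
        cat: (len(vals) / total, statistics.mean(vals), statistics.stdev(vals))
        for cat, vals in by_cat.items()
    }

def sample(model, n, seed=0):
    """Draw synthetic records with generated IDs instead of real identities."""
    rng = random.Random(seed)
    cats = list(model)
    weights = [model[c][0] for c in cats]
    records = []
    for i in range(n):
        cat = rng.choices(cats, weights=weights)[0]
        _, mean, sd = model[cat]
        amount = max(0.0, rng.gauss(mean, sd))  # clamp: amounts cannot be negative
        records.append((f"synthetic-{i:04d}", cat, round(amount, 2)))
    return records

for record in sample(fit(real), 5):
    print(record)
```

A fixed seed makes the synthetic set reproducible, which helps when comparing fraud models trained on it.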

In healthcare, Generative AI can assist in generating synthetic medical images to train diagnostic models, which is helpful when real data is limited or sensitive. Data scientists working in these industries become stewards of these tools, ensuring that the synthetic data is realistic, unbiased, and used ethically.

What This Means for Aspiring Data Scientists

All of this might sound intimidating, but it actually opens doors. Companies need data scientists who understand how to use Generative AI effectively: people who can combine technical know-how with strategic thinking. If you’re training for a career in data science today, you’re not just learning Python, R, or TensorFlow; you’re also learning how to work alongside AI.

This is where institutes like 360DigiTMG in Hyderabad come into play. Known for offering industry-relevant programs, 360DigiTMG has started including Generative AI modules in its data science courses. Students learn how to build, fine-tune, and supervise AI models, practice prompt engineering, and tackle real-world projects where Generative AI is part of the workflow.

Hyderabad, already a thriving hub for IT and analytics jobs, is seeing a surge in demand for professionals who can handle this next wave of AI. Companies in the city are hiring data scientists who are comfortable working with Generative AI tools and can adapt traditional data science processes to this new landscape.

Challenges and How to Tackle Them

Of course, this transformation isn’t all smooth sailing. One challenge is the risk of over-reliance on AI outputs. Generative AI can be wrong: it can hallucinate facts, produce flawed code, or make biased assumptions if not checked. The responsibility still lies with the human to verify and correct what the AI produces.

Another challenge is staying up to date. Generative AI is evolving so quickly that a model you learn today might be outdated tomorrow. This means continuous learning is essential, an area where flexible programs like those at 360DigiTMG can make a real difference. Many students in Hyderabad, for example, join short-term upskilling courses while working, so they’re ready to apply the latest AI advances in their roles.

Finally, there’s the human element: communication, domain expertise, and storytelling remain as vital as ever. Generative AI can draft reports, but it’s the data scientist’s job to know which insights matter and how to frame them for decision-makers.


Future Outlook: Collaboration, Not Competition

Despite fears that AI will take over jobs, the truth is more nuanced. Generative AI is unlikely to fully replace data scientists. Instead, it will take over certain routine tasks, allowing professionals to concentrate on work that has a greater impact. It’s a tool, an extremely powerful one, that makes the human role more strategic.

Industry surveys show that companies expect the demand for data scientists to grow, not shrink, as Generative AI becomes mainstream. Businesses need people who can harness AI effectively, build reliable workflows, and ensure ethical, fair, and impactful outcomes.

In cities like Hyderabad, the future looks bright for those ready to evolve. Startups and large enterprises alike are adopting Generative AI to stay competitive, and they’re hungry for talent that understands both the technology and the business context.

Navigate To:

360DigiTMG — Data Analytics, Data Science Course Training Hyderabad

3rd floor, Vijaya towers, 2–56/2/19, Rd no:19, near Meridian school, Ayyappa Society, Chanda Naik Nagar, Madhapur, Hyderabad, Telangana 500081

Phone: 9989994319

Email: [email protected]

An Introduction to Multi-Hop Orchestration AI Agents

The journey of Artificial Intelligence has been a fascinating one. From simple chatbots providing canned responses to powerful Large Language Models (LLMs) that can generate human-quality text, AI's capabilities have soared. More recently, we've seen the emergence of AI Agents – systems that can not only understand but also act upon user requests by leveraging external tools (like search engines or APIs).

Yet, many real-world problems aren't simple, one-step affairs. They are complex, multi-faceted challenges that require strategic planning, dynamic adaptation, and the chaining of multiple actions. This is where the concept of Multi-Hop Orchestration AI Agents comes into play, representing the next frontier in building truly intelligent and autonomous AI systems.

Beyond Single Steps: The Need for Orchestration

Consider the evolution:

Traditional AI/LLMs: Think of a powerful calculator or a knowledgeable oracle. You give it an input, and it gives you an output. It’s reactive and often single-turn.

Basic AI Agents (e.g., simple RAG, or Retrieval-Augmented Generation): These agents can perform an action based on your request, such as searching a database or document store and generating an answer from what they retrieve. They are good at integrating external information but often follow a pre-defined, linear process.

The gap lies in tackling complex, dynamic workflows. How does an AI plan a multi-stage project? How does it decide which tools to use in what order? What happens if a step fails, and it needs to adapt its plan? These scenarios demand an orchestrating intelligence.

What is Multi-Hop Orchestration AI?

At its heart, a Multi-Hop Orchestration AI Agent is an AI system designed to break down a high-level, complex goal into a series of smaller, interconnected sub-goals or "hops." It then dynamically plans, executes, monitors, and manages these individual steps to achieve the overarching objective.

Imagine the AI as a highly intelligent project manager or a symphony conductor. You give the conductor the score (your complex goal), and instead of playing one note, they:

Decompose the score into individual movements and sections.

Assign each section to the right instrument or group of musicians (tools or sub-agents).

Conduct the performance, ensuring each part is played at the right time and in harmony.

Listen for any errors and guide the orchestra to self-correct.

Integrate all the parts into a seamless, final masterpiece.

That's Multi-Hop Orchestration in action.

How Multi-Hop Orchestration AI Agents Work: The Conductor's Workflow

The process within a Multi-Hop Orchestration Agent typically involves an iterative loop, often driven by an advanced LLM serving as the "orchestrator" or "reasoning engine":

Goal Interpretation & Decomposition:

The agent receives a complex, open-ended user request (e.g., "Research sustainable supply chain options for our new product line, draft a proposal outlining costs and benefits, and prepare a presentation for the board.").

The orchestrator interprets this high-level goal and dynamically breaks it down into logical, manageable sub-tasks or "hops" (e.g., "Identify key sustainability metrics," "Research eco-friendly suppliers," "Analyze cost implications," "Draft proposal sections," "Create presentation slides").
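One way to picture the output of this step is as a small dependency graph of hops. The Python sketch below is hypothetical: it hard-codes a plan of the kind an orchestrator LLM might emit for the supply-chain request above (the hop names and dependencies are assumptions), then uses the standard library's graphlib to find an order in which every hop runs after the hops it depends on.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # standard library, Python 3.9+


@dataclass
class Hop:
    name: str
    depends_on: list[str] = field(default_factory=list)


def execution_order(hops):
    """Order hops so every hop runs after the hops it depends on.
    graphlib raises CycleError if the plan contains a circular dependency."""
    graph = {hop.name: set(hop.depends_on) for hop in hops}
    return list(TopologicalSorter(graph).static_order())


# Hypothetical plan an orchestrator LLM might produce for the supply-chain goal.
plan = [
    Hop("identify_metrics"),
    Hop("research_suppliers", ["identify_metrics"]),
    Hop("analyze_costs", ["research_suppliers"]),
    Hop("draft_proposal", ["identify_metrics", "analyze_costs"]),
    Hop("create_slides", ["draft_proposal"]),
]
print(execution_order(plan))
# ['identify_metrics', 'research_suppliers', 'analyze_costs', 'draft_proposal', 'create_slides']
```

Representing the plan as data rather than free text makes it easy to validate (no cycles, no missing dependencies) before any tool is called, and to spot hops that could run in parallel.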

Dynamic Planning & Tool/Sub-Agent Selection:

For each sub-task, the orchestrator doesn't just retrieve information; it reasons about the best way to achieve that step.

It selects the most appropriate tools from its available arsenal (e.g., a web search tool for market trends, a SQL query tool for internal inventory data, an API for external logistics providers, a code interpreter for complex calculations).

In advanced setups, it might even delegate entire sub-tasks to specialized sub-agents (e.g., a "finance agent" to handle cost analysis, a "design agent" for slide creation).
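Tool selection can be sketched as a registry of named tools plus a chooser. In a real orchestrator the LLM reads the tool descriptions and decides; the Python sketch below swaps that reasoning for plain keyword overlap so the structure is visible. The tools are hypothetical stubs that return strings instead of calling a real search API, database, or code interpreter.

```python
def web_search(query):
    return f"web results for {query!r}"       # stand-in for a search API call


def sql_query(question):
    return f"internal rows for {question!r}"  # stand-in for a database query tool


def cost_calculator(task):
    return f"cost estimate for {task!r}"      # stand-in for a code-interpreter step


# Registry: tool name -> (callable, keywords describing what the tool is good at).
TOOLS = {
    "web_search": (web_search, {"research", "market", "trends", "suppliers", "news"}),
    "sql_query": (sql_query, {"inventory", "sales", "internal", "orders"}),
    "cost_calculator": (cost_calculator, {"cost", "costs", "calculate", "budget"}),
}


def select_tool(subtask):
    """Pick the tool whose keywords overlap most with the sub-task description.
    Keyword overlap is a simple stand-in for the LLM's own reasoning."""
    words = set(subtask.lower().replace("-", " ").split())
    scores = {name: len(words & keywords) for name, (_, keywords) in TOOLS.items()}
    best = max(scores, key=scores.get)
    if scores[best] == 0:
        raise LookupError(f"no registered tool fits {subtask!r}")
    return best


for subtask in ["Research eco-friendly suppliers",
                "Check internal inventory levels",
                "Analyze cost implications"]:
    name = select_tool(subtask)
    tool, _ = TOOLS[name]
    print(name, "->", tool(subtask))
```

Delegating to a sub-agent fits the same shape: a registry entry whose callable is itself another agent rather than a single function.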

Execution & Monitoring:

The orchestrator executes the chosen tools or activates the delegated sub-agents.

It actively monitors their progress, ensuring they are running as expected and generating valid outputs.
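Monitoring a single hop usually means two checks: did the tool run at all, and is its output usable? The Python sketch below is one hypothetical way to wrap a hop with both checks, retrying with exponential backoff before giving up. The names run_with_monitoring, HopFailed, and flaky_search are illustrative, not part of any framework.

```python
import time


class HopFailed(Exception):
    """Raised when a hop still fails after every retry."""


def run_with_monitoring(tool, arg, is_valid, max_attempts=3, backoff_s=0.5):
    """Run one hop, retrying on exceptions or invalid output with exponential backoff."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            output = tool(arg)
        except Exception as exc:      # the tool itself crashed or timed out
            last_error = exc
        else:
            if is_valid(output):      # accept only outputs that pass the check
                return output
            last_error = ValueError(f"invalid output: {output!r}")
        if attempt < max_attempts:
            time.sleep(backoff_s * 2 ** (attempt - 1))  # 0.5 s, then 1 s, then 2 s, ...
    raise HopFailed(f"gave up after {max_attempts} attempts") from last_error


# Hypothetical flaky tool: times out twice, then answers.
calls = {"count": 0}


def flaky_search(query):
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("upstream search timed out")
    return f"results for {query}"


result = run_with_monitoring(flaky_search, "eco-friendly suppliers",
                             is_valid=lambda out: bool(out.strip()), backoff_s=0.01)
print(result, "after", calls["count"], "attempts")
```

Raising a distinct HopFailed exception, rather than returning an empty result, gives the orchestrator a clear signal to re-plan in the next phase.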

Information Integration & Iteration:

As outputs from different steps or agents come in, the orchestrator integrates this information, looking for connections, contradictions, or gaps in its knowledge.

It then enters a self-reflection phase: "Is this sub-goal complete?" "Do I have enough information for the next step?" "Did this step fail, or did it produce unexpected results?"

Based on this evaluation, it can decide to:

Proceed to the next planned hop.

Re-plan a current hop if it failed or yielded unsatisfactory results.

Create new hops to address unforeseen issues or gain missing information.

Seek clarification from the human user if truly stuck.
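The four outcomes above can be written down as a small decision function. In practice the LLM makes this judgment from context; the Python sketch below replaces it with hypothetical fixed rules (including an assumed cap of two re-plans before escalating to a human) purely to show how the branches relate.

```python
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed to the next planned hop"
    REPLAN = "re-plan the current hop"
    ADD_HOPS = "create new hops for missing information"
    ASK_HUMAN = "seek clarification from the user"


def reflect(succeeded, missing_info, replans_used, max_replans=2):
    """Rule-based stand-in for the orchestrator's self-reflection step."""
    if not succeeded:
        # Re-plan a bounded number of times, then escalate to a human.
        return Decision.REPLAN if replans_used < max_replans else Decision.ASK_HUMAN
    if missing_info:
        return Decision.ADD_HOPS
    return Decision.PROCEED


print(reflect(succeeded=True, missing_info=["supplier prices"], replans_used=0))
```

The cap on re-plans matters: without it, an agent stuck on an impossible step could keep re-planning and never reach the "seek clarification" branch.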

Final Outcome Delivery:

Once all hops are completed and the overall goal is achieved, the orchestrator synthesizes the results and presents the comprehensive final outcome to the user.
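Putting the workflow together, the Python sketch below is a deliberately tiny, hypothetical plan-execute-reflect loop: hops wait in a queue, each tool returns its output plus any follow-up hops it discovered, and a final step assembles the findings into a report. A real agent would have the LLM write the synthesis; here it simply joins the findings. The tool names search and price are assumptions.

```python
from collections import deque


def orchestrate(goal, plan, tools, max_steps=20):
    """Run hops from a queue; tools may return follow-up hops, which are appended.
    max_steps bounds the loop so a runaway plan cannot iterate forever."""
    queue = deque(plan)          # each hop is (tool_name, argument)
    findings = []
    steps = 0
    while queue:
        if steps == max_steps:
            raise RuntimeError(f"step budget of {max_steps} exhausted")
        steps += 1
        tool_name, arg = queue.popleft()
        output, follow_ups = tools[tool_name](arg)
        findings.append(f"- {output}")
        queue.extend(follow_ups)  # newly discovered hops join the plan
    # Final synthesis: a header plus the findings in execution order.
    return f"Report: {goal}\n" + "\n".join(findings)


# Hypothetical tools: finding suppliers creates one new pricing hop per supplier.
def search(topic):
    return f"found 2 suppliers for {topic}", [("price", "supplier A"), ("price", "supplier B")]


def price(supplier):
    return f"{supplier}: quote received", []


report = orchestrate("sustainable supply chain options",
                     [("search", "recycled packaging")],
                     {"search": search, "price": price})
print(report)
```

Here the initial plan has a single hop, and the two pricing hops only appear once the search result reveals which suppliers exist, which is the "create new hops" branch in action.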

Why Multi-Hop Orchestration Matters: Game-Changing Use Cases

Multi-Hop Orchestration AI Agents are particularly transformative for scenarios demanding deep understanding, complex coordination, and dynamic adaptation:

Complex Enterprise Automation: Automating multi-stage business processes like comprehensive customer onboarding (spanning CRM, billing, support, and sales systems), procurement workflows, or large-scale project management.

Deep Research & Data Synthesis: Imagine an AI autonomously researching a market, querying internal sales databases, fetching real-time news via APIs, and then synthesizing all information into a polished, insightful report ready for presentation.

Intelligent Customer Journeys: Guiding customers through complex service requests that involve diagnostic questions, looking up extensive order histories, interacting with different backend systems (e.g., supply chain, billing), and even initiating multi-step resolutions like refunds or complex technical support.

Autonomous Software Development & DevOps: Agents that can not only generate code but also test it, identify bugs, suggest fixes, integrate with version control, and orchestrate deployment to various environments.

Personalized Learning & Mentoring: Creating dynamic educational paths that adapt to a student's progress, strengths, and weaknesses across different subjects, retrieving varied learning materials and generating customized exercises on the fly.

The Road Ahead: Challenges and the Promise

Building Multi-Hop Orchestration Agents is hard. Key challenges include:

Robust Orchestration Logic: Designing the AI's internal reasoning and planning capabilities to be consistently reliable across diverse and unpredictable scenarios.

Error Handling & Recovery: Ensuring agents can gracefully handle unexpected failures from tools or sub-agents and recover effectively.

Latency & Cost: Multi-step processes involve numerous LLM calls and tool executions, which can introduce latency and increase computational costs.

Transparency & Debugging: The "black box" nature of LLMs makes it challenging to trace and debug long, multi-hop execution paths when things go wrong.

Safety & Alignment: Ensuring that autonomous, multi-step agents always remain aligned with human intent and ethical guidelines, especially as they undertake complex sequences of actions.
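For the transparency, debugging, and latency concerns, a common first step is to record a structured trace of every hop. The Python sketch below is a hypothetical decorator that logs each hop's arguments, status, error, and duration, so a failure in the middle of a long chain can be located afterwards. The hop names are illustrative; agent frameworks typically ship their own tracing, which this does not attempt to reproduce.

```python
import functools
import time

TRACE = []  # one entry per hop call, in execution order


def traced(hop_name):
    """Decorator that records each hop's input, outcome, and wall-clock duration."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            entry = {"hop": hop_name, "args": args, "status": "ok", "error": None}
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                entry["status"] = "error"
                entry["error"] = repr(exc)
                raise
            finally:
                entry["ms"] = round((time.perf_counter() - start) * 1000, 2)
                TRACE.append(entry)
        return wrapper
    return decorator


@traced("research_suppliers")
def research_suppliers(region):
    return ["supplier A", "supplier B"]


@traced("analyze_costs")
def analyze_costs(suppliers):
    raise ValueError("no price data for supplier B")  # simulated mid-chain failure


try:
    analyze_costs(research_suppliers("EU"))
except ValueError:
    pass

for entry in TRACE:
    print(entry["hop"], entry["status"], entry["ms"], "ms", entry["error"] or "")
```

Summing the per-hop durations also gives a rough picture of where latency accumulates across a multi-hop run.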

Despite these challenges, frameworks like LangChain, LlamaIndex, Microsoft's AutoGen, and CrewAI are rapidly maturing, providing the architectural foundations for developers to build these sophisticated systems.

Multi-Hop Orchestration AI Agents represent a significant leap beyond reactive AI. They are the conductors of the AI orchestra, transforming LLMs from clever responders into proactive problem-solvers capable of navigating the intricacies of the real world. This is a crucial step towards truly autonomous and intelligent systems, poised to unlock new frontiers for AI's impact across every sector.
