#text to sql

Text

Convert Text to SQL With dbForge AI Assistant

Tired of writing SQL code manually? Now you don’t have to. dbForge AI Assistant can generate accurate, context-aware queries in seconds, just by interpreting your plain text request. Whether you're a developer, DBA, analyst, or manager, this tool simplifies query creation, troubleshooting, and optimization across major database systems like SQL Server, MySQL, MariaDB, Oracle, and PostgreSQL.

Integrated into a variety of dbForge tools and a multidatabase solution dbForge Edge, the Assistant turns natural language into SQL, explains query structure, suggests fixes, and even guides you through features of the IDE.

Explore how this smart Text to SQL AI companion works, who it's for, and why it’s quickly becoming a must-have for anyone dealing with databases.

I’ve tried it myself and honestly, it’s a great helper. It feels like having an SQL expert on call 24/7, ready to handle everything from simple SELECTs to complex JOINs.

0 notes

Text

Mastering Text-to-SQL with LLM Solutions and Overcoming Challenges

Text-to-SQL solutions powered by Large Language Models (LLMs) are transforming the way businesses interact with databases. By enabling users to query databases using natural language, these solutions are breaking down technical barriers and enhancing accessibility. However, as with any innovative technology, Text-to-SQL solutions come with their own set of challenges. This blog explores the top hurdles and provides practical tips to overcome them, ensuring a seamless and efficient experience.

The rise of AI-generated SQL

Generative AI is transforming how we work with databases. It simplifies tasks like reading, writing, and debugging complex SQL (Structured Query Language). SQL is the universal language of databases, and AI tools make it accessible to everyone. With natural language input, users can generate accurate SQL queries instantly. This approach saves time and enhances the user experience. AI-powered chatbots can now turn questions into SQL commands. This allows businesses to retrieve data quickly and make better decisions.

Pairing large language models (LLMs) with techniques like Retrieval-Augmented Generation (RAG) adds even more value. RAG supplies the model with relevant enterprise data at query time so it can deliver precise results. Companies using AI-generated SQL report 50% better query accuracy and reduced manual effort. The global AI database market is growing rapidly, expected to reach $4.5 billion by 2026 (MarketsandMarkets). Text-to-SQL tools are becoming essential for modern businesses. They help extract value from data faster and more efficiently than ever before.

Understanding LLM-based text-to-SQL

Large Language Models (LLMs) make database management simpler and faster. They convert plain language prompts into SQL queries. These queries can range from simple data requests to complex tasks using multiple tables and filters. This makes it easy for non-technical users to access company data. By breaking down coding barriers, LLMs help businesses unlock valuable insights quickly.

Integrating LLMs with tools like Retrieval-Augmented Generation (RAG) adds even more value. Chatbots using this technology can give personalized, accurate responses to customer questions by accessing live data. LLMs are also useful for internal tasks like training new employees or sharing knowledge across teams. Their ability to personalize interactions improves customer experience and builds stronger relationships.

AI-generated SQL is powerful, but it has risks. Poorly optimized queries can slow systems, and unsecured access may lead to data breaches. To avoid these problems, businesses need strong safeguards like access controls and query checks. With proper care, LLM-based text-to-SQL can make data more accessible and useful for everyone.

Key Challenges in Implementing LLM-Powered Text-to-SQL Solutions

Text-to-SQL solutions powered by large language models (LLMs) offer significant benefits but also come with challenges that need careful attention. Below are some of the key issues that can impact the effectiveness and reliability of these solutions.

Understanding Complex Queries

One challenge in Text-to-SQL solutions is handling complex queries. For example, a query that includes multiple joins or nested conditions can confuse LLMs. A user might ask, “Show me total sales from last month, including discounts and returns, for product categories with over $100,000 in sales.” This requires multiple joins and filters, which can be difficult for LLMs to handle, leading to inaccurate results.

Database Schema Mismatches

LLMs need to understand the database schema to generate correct SQL queries. If the schema is inconsistent or not well-documented, errors can occur. For example, if a table is renamed from orders to sales, an LLM that was given the old schema might still reference the old table name. A query like “SELECT * FROM orders WHERE order_date > '2024-01-01';” will fail if the table was renamed to sales.
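One way to catch a stale table name before it reaches users is to compile the generated query against the live schema without running it. The sketch below is only an illustration: it uses Python's built-in sqlite3 module and an in-memory database as a stand-in for a real system, and the helper name check_against_schema and the two-column sales table are assumptions, not part of any tool mentioned here.

```python
import sqlite3
from typing import Optional


def check_against_schema(conn: sqlite3.Connection, sql: str) -> Optional[str]:
    """Return None if SQLite can compile the query, else the error message."""
    try:
        # EXPLAIN compiles the statement without executing it, so unknown
        # tables or columns surface as errors before any data is touched.
        conn.execute("EXPLAIN " + sql)
        return None
    except sqlite3.Error as exc:
        return str(exc)


conn = sqlite3.connect(":memory:")
# Hypothetical schema after the rename: the table is now called "sales".
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, order_date TEXT)")

stale = "SELECT * FROM orders WHERE order_date > '2024-01-01'"
fresh = "SELECT * FROM sales WHERE order_date > '2024-01-01'"

print(check_against_schema(conn, stale))  # an error message naming "orders"
print(check_against_schema(conn, fresh))  # None
```

A failed check can be fed back to the model along with the current schema, rather than shown to the user as a raw database error.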

Ambiguity in Natural Language

Natural language can be unclear, which makes it hard for LLMs to generate accurate SQL. For instance, a user might ask, “Get all sales for last year.” Does this mean the last 12 months or the previous calendar year? The LLM might generate a query with the wrong date range, like “SELECT * FROM sales WHERE sales_date BETWEEN '2023-01-01' AND '2023-12-31';” when the user meant the past 12 months.
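The two readings of “last year” produce different date ranges, which is easy to see once both are computed. The following is a minimal sketch using Python's standard datetime module; the function names are made up for illustration, and treating the trailing window as "same day one year ago through today" is one reasonable assumption among several.

```python
from datetime import date
from typing import Tuple


def previous_calendar_year(today: date) -> Tuple[date, date]:
    """January 1 through December 31 of the year before `today`."""
    year = today.year - 1
    return date(year, 1, 1), date(year, 12, 31)


def trailing_twelve_months(today: date) -> Tuple[date, date]:
    """The same day one year earlier through `today`."""
    try:
        start = today.replace(year=today.year - 1)
    except ValueError:
        # February 29 has no counterpart in a non-leap year; fall back to the 28th.
        start = today.replace(year=today.year - 1, day=28)
    return start, today


today = date(2024, 6, 15)
print(previous_calendar_year(today))   # 2023-01-01 to 2023-12-31
print(trailing_twelve_months(today))   # 2023-06-15 to 2024-06-15
```

Passing the chosen range to the query as parameters, instead of letting the model pick literal dates, keeps the interpretation explicit.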

Performance Limitations

AI-generated SQL may not always be optimized for performance. A simple request like “Get all customers who made five or more purchases last month” might result in an inefficient SQL query. For example, an LLM might generate a query that retrieves all customer records and then counts purchases row by row, instead of letting the database aggregate them. This could slow down the database, especially with large datasets.
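For comparison, here is what the aggregated form of that request can look like. This is a sketch against a hypothetical two-table schema (customers and purchases, with invented column names) in an in-memory SQLite database; a real schema and dialect would differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE purchases (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        purchased_on TEXT
    );
""")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Bo")])
# Ada buys five times in May 2024, Bo only twice.
conn.executemany(
    "INSERT INTO purchases (customer_id, purchased_on) VALUES (?, ?)",
    [(1, f"2024-05-{day:02d}") for day in range(1, 6)]
    + [(2, "2024-05-03"), (2, "2024-05-20")],
)

# The database filters, groups, and counts; only matching customers come back.
rows = conn.execute(
    """
    SELECT c.name, COUNT(*) AS purchase_count
    FROM customers AS c
    JOIN purchases AS p ON p.customer_id = c.id
    WHERE p.purchased_on >= ? AND p.purchased_on < ?
    GROUP BY c.id, c.name
    HAVING COUNT(*) >= 5
    """,
    ("2024-05-01", "2024-06-01"),
).fetchall()

print(rows)  # [('Ada', 5)]
```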

Security Risks

Text-to-SQL solutions can open the door to security issues if inputs aren’t validated. For example, an attacker could input harmful code, like “DROP TABLE users;”. Without proper input validation, this could lead to an SQL injection attack. To protect against this, it’s important to use techniques like parameterized queries and input sanitization.
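Because the generated SQL is itself untrusted, a complementary safeguard (a different technique from the parameterized queries mentioned above) is to execute it on a connection that can only read. The sketch below uses the authorizer hook in Python's sqlite3 module; the allow-list of two actions and the helper names are assumptions chosen for this small demo, and other databases would achieve the same thing with a read-only role.

```python
import sqlite3

# Assumption for this demo: plain SELECTs and column reads are enough.
READ_ONLY_ACTIONS = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ}


def read_only_authorizer(action, arg1, arg2, db_name, source):
    """Allow reads; deny every other action (DROP, INSERT, PRAGMA, ...)."""
    return sqlite3.SQLITE_OK if action in READ_ONLY_ACTIONS else sqlite3.SQLITE_DENY


def run_generated_sql(conn: sqlite3.Connection, sql: str):
    """Run untrusted SQL; return rows, or a rejection message on failure."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.Error as exc:
        return f"rejected: {exc}"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('ada')")
conn.commit()
# Install the guard only after the trusted setup statements have run.
conn.set_authorizer(read_only_authorizer)

print(run_generated_sql(conn, "SELECT name FROM users"))
print(run_generated_sql(conn, "DROP TABLE users"))
```

The first call returns the row; the second is refused when SQLite compiles the statement, so the table is never touched.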

Tips to Overcome Challenges in Text-to-SQL Solutions

Text-to-SQL solutions offer great potential, but they also come with challenges. Here are some practical tips to overcome these common issues and improve the accuracy, performance, and security of your SQL queries.

Simplify Complex Queries

To handle complex queries, break them down into smaller parts. Train the LLM to process simple queries first. For example, instead of asking for “total sales, including discounts and returns, for top product categories,” split it into “total sales last month” and “returns by category.” This helps the model generate more accurate SQL.

Keep the Schema Consistent

A consistent and clear database schema is key. Regularly update the LLM with any schema changes. Use automated tools to track schema updates. This ensures the LLM generates accurate SQL queries based on the correct schema.

Clarify Ambiguous Language

Ambiguous language can confuse the LLM. To fix this, prompt users for more details. For example, if a user asks for “sales for last year,” ask them if they mean the last 12 months or the full calendar year. This will help generate more accurate queries.

Optimize SQL for Performance

Ensure the LLM generates optimized queries. Use indexing and aggregation to speed up queries. Review generated queries for performance before running them on large databases. This helps avoid slow performance, especially with big data.

Enhance Security Measures

To prevent SQL injection attacks, validate and sanitize user inputs. Use parameterized queries to protect the database. Regularly audit the SQL generation process for security issues. This ensures safer, more secure queries.

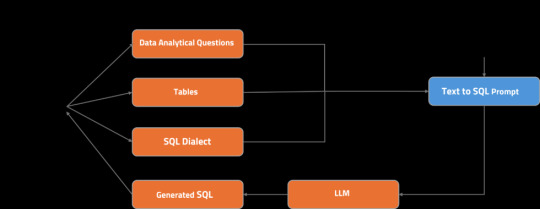

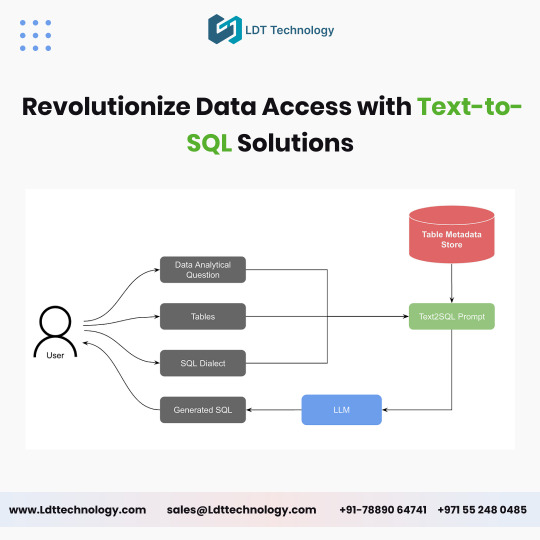

Let’s take a closer look at the architecture of a typical Text-to-SQL pipeline:

The user asks an analytical question, choosing the tables to be used.

The relevant table schemas are retrieved from the table metadata store.

The question, selected SQL dialect, and table schemas are compiled into a Text-to-SQL prompt.

The prompt is fed into the LLM.

A streaming response is generated and displayed to the user.
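The five steps above can be sketched in a few functions. Everything named here is hypothetical: SQLite's own catalog stands in for the table metadata store, fake_llm is a placeholder for a real model client, and the prompt wording is just one plausible layout.

```python
import sqlite3
from typing import Callable, Iterable, Iterator, List


def fetch_table_schemas(conn: sqlite3.Connection, tables: List[str]) -> List[str]:
    """Step 2: look up the CREATE TABLE text for each table the user chose."""
    schemas = []
    for name in tables:
        row = conn.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?",
            (name,),
        ).fetchone()
        if row is None:
            raise ValueError(f"unknown table: {name}")
        schemas.append(row[0])
    return schemas


def build_prompt(question: str, dialect: str, schemas: List[str]) -> str:
    """Step 3: compile question, dialect, and schemas into one prompt."""
    schema_text = "\n\n".join(schemas)
    return (
        f"Write a single {dialect} query.\n\n"
        f"Table schemas:\n{schema_text}\n\n"
        f"Question: {question}\nSQL:"
    )


def stream_answer(
    conn: sqlite3.Connection,
    question: str,
    tables: List[str],
    dialect: str,
    llm: Callable[[str], Iterable[str]],
) -> Iterator[str]:
    """Steps 4 and 5: send the prompt to the model and relay chunks as they arrive."""
    prompt = build_prompt(question, dialect, fetch_table_schemas(conn, tables))
    yield from llm(prompt)


def fake_llm(prompt: str) -> Iterable[str]:
    # Placeholder for a real model client; it ignores the prompt entirely.
    yield "SELECT COUNT(*) "
    yield "FROM sales;"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL)")

# Step 1: the user supplies a question and picks the tables.
for chunk in stream_answer(conn, "How many sales are there?", ["sales"], "SQLite", fake_llm):
    print(chunk, end="")
print()
```

Keeping prompt construction separate from the model call makes it easy to inspect exactly which schemas the model saw when a query comes back wrong.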

Real-World Examples of Text-to-SQL Challenges and Solutions

Example 1: Handling Nested Queries

A financial analytics company wanted monthly revenue trends and year-over-year growth data. The initial Text-to-SQL solution couldn’t generate the correct nested query for growth calculation. After training the LLM with examples of revenue calculations, the system could generate accurate SQL queries for monthly data and growth.

Example 2: Ambiguity in User Input

A user asked, “Show me the sales data for last quarter.” The LLM initially generated a query without specifying the quarter’s exact date range. To fix this, the system was updated to ask, “Do you mean Q3 2024?” This clarified the request and improved query accuracy.

Example 3: Handling Complex Joins and Filters

A marketing team asked for the total number of leads and total spend for each campaign last month. The LLM struggled to generate the SQL due to complex joins between tables like leads, campaigns, and spend. The solution was to break the query into smaller parts: first, retrieve leads, then total spend, and finally join the data.

Example 4: Handling Unclear Date Ranges

A user requested, “Show me the revenue data from the last six months.” The LLM couldn’t determine if the user meant 180 days or six calendar months. The system was updated to clarify, asking, “Do you mean the last six calendar months or 180 days?” This ensured the query was accurate.

Example 5: Handling Multiple Aggregations

A retail analytics team wanted to know the average sales per product category and total sales for the past quarter. The LLM initially failed to perform the aggregation correctly. After training, the system could use functions like AVG() for average sales and SUM() for total sales in a single, optimized query.

Example 6: Handling Non-Standard Input

A customer service chatbot retrieved customer order history for an e-commerce company. A user typed, “Show me orders placed between March and April 2024,” but the system didn’t know how to interpret the date range. The solution was to automatically infer the start and end dates of those months, ensuring the query worked without requiring exact dates.

Example 7: Improperly Handling Null Values

A user requested, “Show me all customers who haven’t made any purchases in the last year.” The LLM missed customers with no purchase records at all. By training the system to handle null values using SQL constructs like LEFT JOIN and IS NULL, the query returned the correct results for customers with no purchases.
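The LEFT JOIN plus IS NULL pattern from Example 7 is worth seeing concretely. The sketch below uses an invented customers/purchases schema in an in-memory SQLite database and a fixed cutoff date; none of these names come from the case described above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE purchases (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        purchased_on TEXT
    );
""")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Bo"), (3, "Cy")]
)
conn.executemany(
    "INSERT INTO purchases (customer_id, purchased_on) VALUES (?, ?)",
    [
        (1, "2024-03-01"),  # Ada bought within the last year
        (2, "2022-01-10"),  # Bo's only purchase is older than the cutoff
        # Cy has never bought anything
    ],
)

# The date filter lives in the ON clause, so customers without a recent
# purchase still appear once, with NULLs in the purchase columns.
inactive = conn.execute(
    """
    SELECT c.name
    FROM customers AS c
    LEFT JOIN purchases AS p
        ON p.customer_id = c.id AND p.purchased_on >= ?
    WHERE p.id IS NULL
    ORDER BY c.name
    """,
    ("2023-06-01",),
).fetchall()

print(inactive)  # [('Bo',), ('Cy',)]
```

Moving the date condition into the WHERE clause would turn this back into an inner join in effect and drop Cy, which is the kind of mistake the example describes.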

Future Trends in LLM-Powered Text-to-SQL Solutions

As LLMs continue to evolve, their Text-to-SQL capabilities will become even more robust. Key trends to watch include:

AI-Driven Query Optimization

Future Text-to-SQL solutions will improve performance by optimizing queries, especially for large datasets. AI will learn from past queries, suggest better approaches, and increase query efficiency. This will reduce slow database operations and enhance overall performance.

Expansion of Domain-Specific LLMs

Domain-specific LLMs will be customized for industries like healthcare, finance, and e-commerce. These models will understand specific terms and regulations in each sector. This will make SQL queries more accurate and relevant, cutting down on the need for manual corrections.

Natural Language Interfaces for Database Management

LLM-powered solutions will allow non-technical users to manage databases using simple conversational interfaces. Users can perform complex tasks, such as schema changes or data transformations, without writing SQL. This makes data management more accessible to everyone in the organization.

Integration with Advanced Data Analytics Tools

LLM-powered Text-to-SQL solutions will integrate with data analytics tools. This will help users generate SQL queries for advanced insights, predictive analysis, and visualizations. As a result, businesses will be able to make data-driven decisions without needing technical expertise.

Conclusion

Implementing AI-generated SQL solutions comes with challenges, but these can be effectively addressed with the right strategies. By focusing on schema consistency, query optimization, and user-centric design, businesses can unlock the full potential of these solutions. As technology advances, AI-generated SQL tools will become even more powerful, enabling seamless database interactions and driving data-driven decision-making.

Ready to transform your database interactions? Register for free and explore EzInsights AI Text to SQL today to make querying as simple as having a conversation.

For more related blogs visit: EzInsights AI

0 notes

Text

does feel like my brain has been slightly broken lately in a not unpleasant way but in a way that has made almost anything outside of work requiring more than thirty seconds of my attention impossible to focus on (rip reading. i haven't even been able to listen to my audiobooks at work). feels like i've come home from work everyday and watched one episode of cutthroat kitchen and i can't tell you anything else i've done. sure hasn't been reading or writing or even being online that much so i'm truly at a loss as to what's been filling the hours lol. shout out to my queue for being so long at least so i continue to post nonsense even if i'm not posting nonsense in real time i guess.

#this has been a useless text post you may now resume your normal programming#if you want to know how truly 'idek what's been filling the hours' i've been#last night i was up at midnight reading the wikipedia page for idaho. for some goddamn reason.#in fairness i have been using far more of my brain at work lately than i usually do#fully willingly mind you but unfortunately what i've been doing means i'm like. seeing SQL queries when i close my eyes#like when you play a game like tetris for too many hours and you shut your eyes and only see Colored Squares#need a few days where i have some reading in office time. but alas. the analytics Call Me lol#anyway what is everybody up to? having a good summer? trying not to be crushed to death by every news headline? etc?

2 notes

Text

Setting aside the discussion of what "beauty" means when applied to software for later, can I just say that SQLite is absolutely gorgeous?

4 notes

Text

Idea Frontier #4: Enterprise Agentics, DaaS, Self-Improving LLMs

TL;DR — Edition #4 zeroes-in on three tectonic shifts for AI founders: Enterprise Agentics – agent frameworks such as Google’s new ADK, CrewAI and AutoGen are finally hardened for production, and AWS just shipped a reference pattern for an enterprise-grade text-to-SQL agent; add DB-Explore + Dynamic-Tool-Selection and you get a realistic playbook for querying 100-table warehouses with…

#ai#AI Agents#CaseMark#chatGPT#DaaS#DeepSeek#Enterprise AI#Everstream#generative AI#Idea Frontier#llm#LoRA#post-training LLMs#Predibase#Reinforcement learning#RLHF#text-to-SQL

0 notes

Text

Unlocking the Power of Text-to-SQL Development for Modern Enterprises

In today’s data-driven world, businesses constantly seek innovative solutions to simplify data access and enhance decision-making. Text-to-SQL development has emerged as a game-changing technology, enabling users to interact with databases using natural language queries. By bridging the gap between technical complexity and user-friendly interaction, this technology is revolutionizing how enterprises harness the power of their data.

What is Text-to-SQL Development?

Text-to-SQL development involves creating systems that translate natural language queries into SQL statements. This allows users—even those without technical expertise—to retrieve data from complex databases by simply typing or speaking queries in plain language. For example, instead of writing a traditional SQL query like “SELECT * FROM sales WHERE region = 'North America',” a user can ask, “What are the sales figures for North America?”

The Benefits of Natural Language Queries

Natural language queries offer a seamless way to interact with databases, making data access more intuitive and accessible for non-technical users. By eliminating the need to learn complex query languages, organizations can empower more employees to leverage data in their roles. This democratization of data access drives better insights, faster decision-making, and improved collaboration across teams.

Enhancing Efficiency with Query Automation

Query automation is another key advantage of text-to-SQL development. By automating the translation of user input into SQL commands, enterprises can streamline their workflows and reduce the time spent on manual data retrieval. Query automation also minimizes the risk of errors, ensuring accurate and reliable results that support critical business operations.

Applications of Text-to-SQL in Modern Enterprises

Text-to-SQL development is being adopted across various industries, including healthcare, finance, retail, and logistics. Here are some real-world applications:

Business Intelligence: Empowering analysts to generate reports and dashboards without relying on IT teams.

Customer Support: Enabling support staff to quickly retrieve customer data and history during interactions.

Healthcare: Allowing medical professionals to access patient records and insights without navigating complex systems.

Building Intuitive Database Solutions

Creating intuitive database solutions is essential for organizations looking to stay competitive in today’s fast-paced environment. Text-to-SQL technology plays a pivotal role in achieving this by simplifying database interactions and enhancing the user experience. These solutions not only improve operational efficiency but also foster a culture of data-driven decision-making.

The Future of Text-to-SQL Development

As artificial intelligence and machine learning continue to advance, text-to-SQL development is poised to become even more sophisticated. Future innovations may include improved language understanding, support for multi-database queries, and integration with voice-activated assistants. These developments will further enhance the usability and versatility of text-to-SQL solutions.

Conclusion

Text-to-SQL development is transforming how businesses access and utilize their data. By leveraging natural language queries, query automation, and intuitive database solutions, enterprises can unlock new levels of efficiency and innovation. As this technology evolves, it will continue to play a crucial role in shaping the future of data interaction and decision-making.

Embrace the potential of text-to-SQL technology today and empower your organization to make smarter, faster, and more informed decisions.

#text-to-SQL development#natural language queries#data access#query automation#intuitive database solutions

0 notes

Text

Concatenating Row Values into a Single String in SQL Server

Concatenating text from multiple rows into a single text string in SQL Server can be achieved using different methods, depending on the version of SQL Server you are using. The most common approaches involve using the FOR XML PATH method for older versions, and the STRING_AGG function, which was introduced in SQL Server 2017. I’ll explain both methods. Using STRING_AGG (SQL Server 2017 and…

#FOR XML PATH method#merging SQL rows#SQL Server concatenation#SQL Server text aggregation#STRING_AGG function

0 notes

Note

komaedas have you tried straw.page?

(i hope you don't mind if i make a big ollllle webdev post off this!)

i have never tried straw.page but it looks similar to carrd and other WYSIWYG editors (which is unappealing to me, since i know html/css/js and want full control of the code. and can't hide secrets in code comments.....)

my 2 cents as a web designer is if you're looking to learn web design or host long-term web projects, WYSIWYG editors suck doodooass. you don't learn the basics of coding, someone else does it for you! however, if you're just looking to quickly host images, links to your other social medias, write text entries/blogposts, WYSIWYG can be nice.

toyhouse, tumblr, deviantart, a lot of sites implement WYSIWYG for their post editors as well, but then you can run into issues relying on their main site features for things like the search system, user profiles, comments, etc. but it can be nice to just login to your account and host your information in one place, especially on a platform that's geared towards that specific type of information. (toyhouse is a better example of this, since you have a lot of control of how your profile/character pages look, even without a premium account) carrd can be nice if you just want to say "here's where to find me on other sites," for example. but sometimes you want a full website!

---------------------------------------

neocities hosting

currently, i host my website on neocities, but i would say the web2.0sphere has sucked some doodooass right now and i'm fiending for something better than it. it's a static web host, i.e. you can upload text, image, audio, and client-side (mostly javascript and css) files, and html pages. for the past few years, neocities' servers have gotten slower and slower and had total blackouts with no notices about why it's happening... and i'm realizing they host a lot of crypto sites that have crypto miners that eat up a ton of server resources. i don't think they're doing anything to limit bot or crypto mining activity and regular users are taking a hit.

↑ page 1 on neocities' most viewed sites we find this site. this site has a crypto miner on it, just so i'm not making up claims without proof here. there is also a very populated #crypto tag on neocities (has porn in it tho so be warned...).

---------------------------------------

dynamic/server-side web hosting

$5/mo for neocities premium seems cheap until you realize... The Beautiful World of Server-side Web Hosting!

client-side AKA static web hosting (neocities, geocities) means you can upload images, audio, video, and other files that do not interact with the server where the website is hosted, like html, css, and javascript. the user reading your webpage does not send any information to the server like a username, password, their favourite colour, etc. - any variables handled by scripts like javascript will be forgotten when the page is reloaded, since there's no way to save it to the web server. server-side AKA dynamic web hosting can utilize any script like php, ruby, python, or perl, and has an SQL database to store variables like the aforementioned that would have previously had nowhere to be stored.

there are many places in 2024 you can host a website for free, including: infinityfree (i use this for my test websites :B has tons of subdomains to choose from) [unlimited sites, 5gb/unlimited storage], googiehost [1 site, 1gb/1mb storage], freehostia [5 sites/1 database, 250mb storage], freehosting [1 site, 10gb/unlimited storage]

if you want more features like extra websites, more storage, a dedicated e-mail, PHP configuration, etc, you can look into paying a lil shmoney for web hosting: there's hostinger (this is my promocode so i get. shmoney. if you. um. 🗿🗿🗿) [$2.40-3.99+/mo, 100 sites/300 databases, 100gb storage, 25k visits/mo], a2hosting [$1.75-12.99+/mo, 1 site/5 databases, 10gb/1gb storage], and cloudways [$10-11+/mo, 25gb/1gb]. i'm seeing people say to stay away from godaddy and hostgator. before you purchase a plan, look up coupons, too! (i usually renew my plan ahead of time when hostinger runs good sales/coupons LOL)

here's a big webhost comparison chart from r/HostingHostel circa jan 2024.

---------------------------------------

domain names

most of the free website hosts will give you a subdomain like yoursite.has-a-cool-website-69.org, and usually paid hosts expect you to bring your own domain name. i got my domain on namecheap (enticing registration prices, mid renewal prices), there's also porkbun, cloudflare, namesilo, and amazon route 53. don't use godaddy or squarespace. make sure you double check the promo price vs. the actual renewal price and don't get charged $120/mo when you thought it was $4/mo during a promo, certain TLDs (endings like .com, .org, .cool, etc) cost more and have a base price (.car costs $2,300?!?). look up coupons before you purchase these as well!

namecheap and porkbun offer something called "handshake domains," DO NOT BUY THESE. 🤣🤣🤣 they're usually cheaper and offer more appealing, hyper-specific endings like .iloveu, .8888, .catgirl, .dookie, .gethigh, .♥, .❣, and .✟. I WISH WE COULD HAVE THEM but they're literally unusable. in order to access a page using a handshake domain, you need to download a handshake resolver. every time the user connects to the site, they have to provide proof of work. aside from it being incredibly wasteful, you LITERALLY cannot just type in the URL and go to your own website, you need to download a handshake resolver, meaning everyday internet users cannot access your site.

---------------------------------------

hosting a static site on a dynamic webhost

you can host a static (html/css/js only) website on a dynamic web server without having to learn PHP and SQL! if you're coming from somewhere like neocities, the only thing you need to do is configure your website's properties. your hosting service will probably have tutorials to follow for this, and possibly already did some steps for you. you need to point your domain to your host's nameservers, install an SSL certificate, and connect to your site using FTP for future uploads. FTP is a faster, alternative way to upload files to your website instead of your webhost's file upload system; programs like WinSCP or FileZilla can upload using FTP for you.

if you wanna learn PHP and SQL and really get into webdev, i wrote a forum post at Mysidia Adoptables here, tho it's sorta geared at the mysidia script library itself (Mysidia Adoptables is a free virtual pet site script, tiny community. go check it out!)

---------------------------------------

file storage & backups

a problem i have run into a lot in my past like, 20 years of internet usage (/OLD) is that a site that is free, has a small community, and maybe sounds too good/cheap to be true, has a higher chance of going under. sometimes this happens to bigger sites like tinypic, photobucket, and imageshack, but for every site like that, there's like a million of baby sites that died with people's files. host your files/websites on a well-known site, or at least back it up and expect it to go under!

i used to host my images on something called "imgjoe" during the tinypic/imageshack era, it lasted about 3 years, and i lost everything hosted on there. more recently, komaedalovemail had its webpages hosted here on tumblr, and tumblr changed its UI so custom pages don't allow javascript, which prevented any new pages from being edited/added. another test site i made a couple years ago on hostinger's site called 000webhost went under/became a part of hostinger's paid-only plans, so i had to look very quickly for a new host or i'd lose my test site.

if you're broke like me, looking into physical file storage can be expensive. anything related to computers has gone through baaaaad inflation due to crypto, which again, I Freaquing Hate, and is killing mother nature. STOP MINING CRYPTO this is gonna be you in 1 year

...um i digress. ANYWAYS, you can archive your websites, which'll save your static assets on The Internet Archive (which could use your lovely donations right now btw), and/or archive.today (also taking donations). having a webhost service with lots of storage and automatic backups can be nice if you're worried about file loss or corruption, or just don't have enough storage on your computer at home!

if you're buying physical storage, be it hard drive, solid state drive, USB stick, whatever... get an actual brand like Western Digital or Seagate and don't fall for those cheap ones on Amazon that claim to have 8,000GB for $40 or you're going to spend 13 days in windows command prompt trying to repair the disk and thenthe power is gong to go out in your shit ass neighvborhood and you have to run it tagain and then Windows 10 tryes to update and itresets the /chkdsk agin while you're awayfrom town nad you're goig to start crytypting and kts just hnot going tot br the same aever agai nikt jus not ggiog to be the saeme

---------------------------------------

further webhosting options

there are other Advanced options when it comes to web hosting. for example, you can physically own and run your own webserver, e.g. with a computer or a raspberry pi. r/selfhosted might be a good place if you're looking into that!

if you know or are learning PHP, SQL, and other server-side languages, you can host a webserver on your computer using something like XAMPP (Apache, MariaDB, PHP, & Perl) with minimal storage space (the latest version takes up a little under 1gb on my computer rn). then, you can test your website without needing an internet connection or worrying about finding a hosting plan that can support your project until you've set everything up!

there's also many PHP frameworks which can be useful for beginners and wizards of the web alike. WordPress is one which you're no doubt familiar with for creating blog posts, and Bluehost is a decent hosting service tailored to WordPress specifically. there's full frameworks like Laravel, CakePHP, and Slim, which will usually handle security, user authentication, web routing, and database interactions that you can build off of. Laravel in particular is noob-friendly imo, and is used by a large populace, and it has many tutorials, example sites built with it, and specific app frameworks.

---------------------------------------

addendum: storing sensitive data

if you decide to host a server-side website, you'll most likely have a login/out functionality (user authentication), and have to store things like usernames, passwords, and e-mails. PLEASE don't launch your website until you're sure your site security is up to snuff!

when trying to check if your data is hackable... It's time to get into the Mind of a Hacker. OWASP has some good cheat sheets that list some of the bigger security concerns and how to mitigate them as a site owner, and you can look up filtered security issues on the Exploit Database.

this is kind of its own topic if you're coding a PHP website from scratch; most frameworks securely store sensitive data for you already. if you're writing your own PHP framework, refer to php.net's security articles and this guide on writing an .htaccess file.
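to make the "don't store raw passwords" part concrete, here's a tiny sketch of salted password hashing. it's written in python with only the standard library just to show the idea; the function names and the iteration count are my own assumptions, and on a PHP site the built-in password_hash() and password_verify() functions cover the same ground.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

# Assumed work factor for this sketch; tune it for your own hardware.
ITERATIONS = 600_000


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest); store both, never the password itself."""
    salt = secrets.token_bytes(16)  # a fresh random salt per user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-hash the login attempt with the stored salt and compare safely."""
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    # compare_digest avoids leaking how many leading bytes matched.
    return hmac.compare_digest(digest, expected)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("hunter2", salt, stored))                       # False
```

the salt and digest are what go in your SQL table; if the database ever leaks, nobody gets the actual passwords for free.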

---------------------------------------

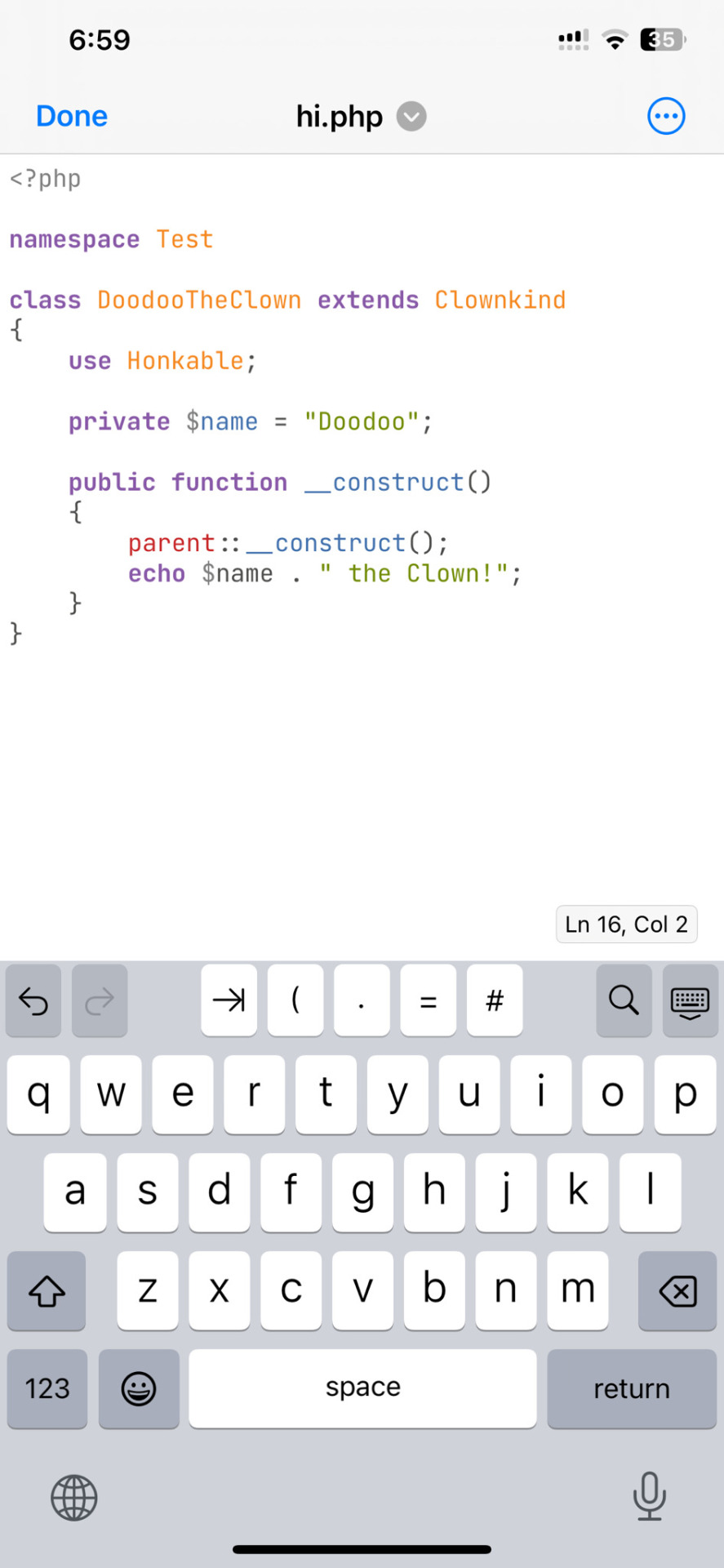

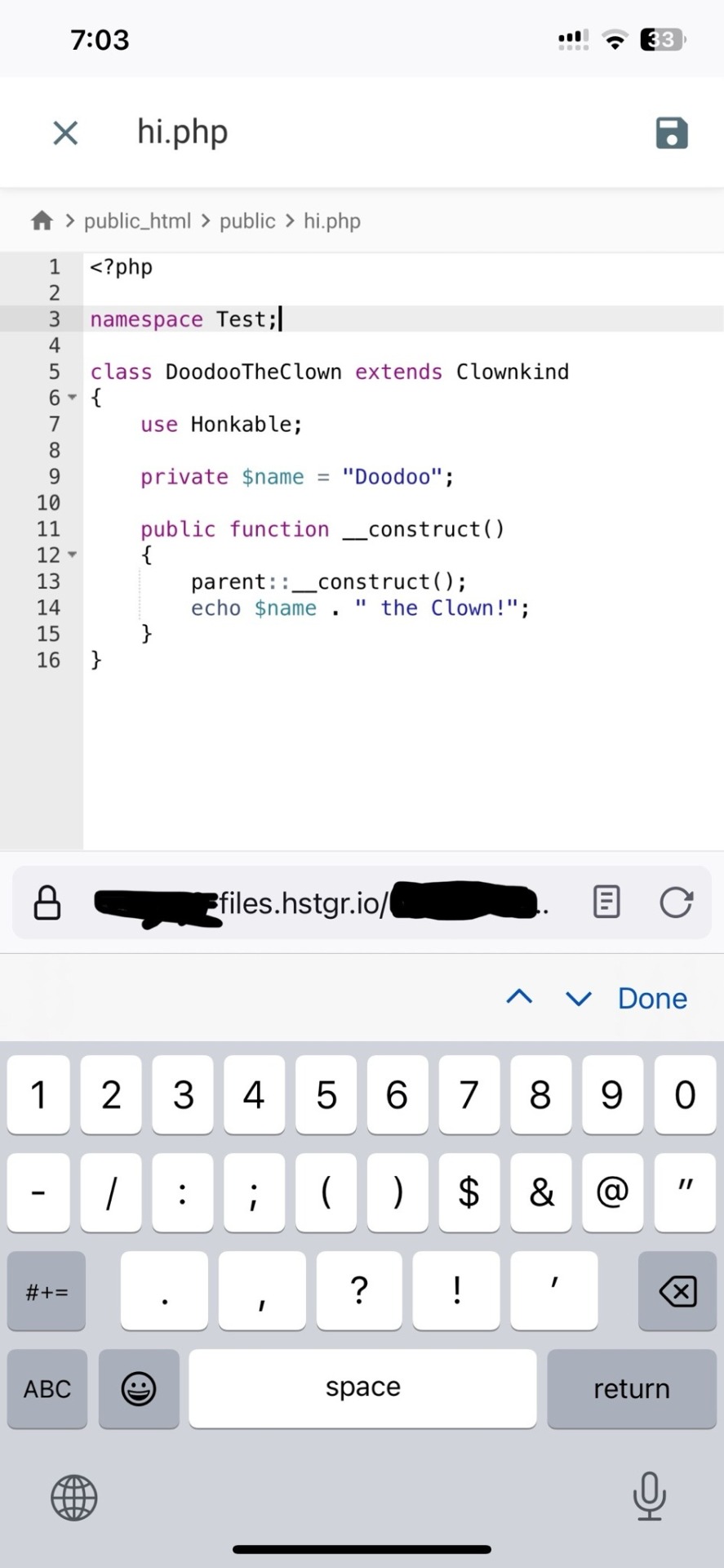

but. i be on that phone... :(

ok one thing i see about straw.page that seems nice is that it advertises the ability to make webpages from your phone. WYSIWYG editors in general are more capable of this. i only started looking into this yesterday, but there ARE source code editor apps for mobile devices! if you have a webhosting plan, you can download/upload assets/code from your phone and whatnot and code on the go. i downloaded Runecode for iphone. it might suck ass to keep typing those brackets.... we'll see..... but sometimes you're stuck in the car and you're like damn i wanna code my site GRRRR I WANNA CODE MY SITE!!!

↑ code written in Runecode, then uploaded to Hostinger. Runecode didn't tell me i forgot a semicolon but Hostinger did... i guess you can code from your webhost's file uploader on mobile but i don't trust them since they tend not to autosave or prompt you before closing, and if the wifi dies idk what happens to your code.

---------------------------------------

ANYWAYS! HAPPY WEBSITE BUILDING~! HOPE THIS HELPS~!~!~!

-Mod 12 @eeyes

202 notes


Text

The Story of KLogs: What happens when a Mechanical Engineer codes

Since i no longer work at Warehouse Automation Startup (WAS for short) and haven't for many years i feel as though i should recount the tale of the most bonkers program i ever wrote, but we need to establish some background

WAS has its HQ very far away from the big customer site and i worked as a Field Service Engineer (FSE) on site. so i learned early on that if a problem needed to be solved fast, WE had to do it. we never got many updates on what was coming down the pipeline for us or what issues were being worked on. this made us very independent

As such, we got good at reading the robot logs ourselves. it took too much time to send the logs off to HQ for analysis and get back what the problem was. we can read. now GETTING the logs is another thing.

the early robots we cut our teeth on used 2.4 GHz wifi to communicate with FSE's so dumping the logs was as simple as pushing a button in a little application and it would spit out a txt file

later on our robots were upgraded to use a 2.4 mHz xbee radio to communicate with us. which was FUCKING SLOW. and log dumping became a much more tedious process. you had to connect, go to logging mode, and then the robot would vomit all the logs in the past 2 min OR the entirety of its memory bank (only 2 options) into a terminal window. you would then save the terminal window and open it in a text editor to read them. it could take up to 5 min to dump the entire log file and if you didnt dump fast enough, the ACK messages from the control server would fill up the logs and erase the error as the memory overwrote itself.

this missing logs problem was a Big Deal for software who now weren't getting every log from every error so a NEW method of saving logs was devised: the robot would just vomit the log data in real time over a DIFFERENT radio and we would save it to a KQL server. Thanks Daddy Microsoft.

now whats KQL you may be asking. why, its Microsofts very own SQL clone! its Kusto Query Language. never mind that the system uses a SQL database for daily operations. lets use this proprietary Microsoft thing because they are paying us

so yay, problem solved. we now never miss the logs. so how do we read them if they are split up line by line in a database? why with a query of course!

select * from tbLogs where RobotUID = [64CharLongString] and timestamp > [UnixTimeCode]

if this makes no sense to you, CONGRATULATIONS! you found the problem with this setup. Most FSE's were BAD at SQL which meant they didnt read logs anymore. If you do understand what the query is, CONGRATULATIONS! you see why this is Very Stupid.

You could not search by robot name. each robot had some arbitrarily assigned 64 character long string as an identifier and the timestamps were not set to local time. so you had to run a lookup query to find the right name and do some time zone math to figure out what part of the logs to read. oh yeah and you had to download KQL to view them. so now we had both SQL and KQL on our computers
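For anyone who wants to see what that two-step dance looks like, here is a minimal sketch. It runs against an in-memory SQLite database rather than Kusto, and the `tbRobots` table, the `RobotName` and `message` columns, the robot name, and the UTC-5 site offset are all made up for illustration; only `tbLogs`, `RobotUID`, and `timestamp` come from the query above.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


def to_unix(local_time: datetime) -> int:
    """Convert a timezone-aware local time to a Unix timestamp (seconds, UTC)."""
    return int(local_time.timestamp())


def logs_for_robot(conn, robot_name, local_start):
    # Step 1: look up the long UID for the human-readable name.
    row = conn.execute(
        "SELECT RobotUID FROM tbRobots WHERE RobotName = ?", (robot_name,)
    ).fetchone()
    if row is None:
        raise LookupError(f"no robot named {robot_name!r}")
    # Step 2: same shape as the query in the post, with placeholders for the values.
    return conn.execute(
        "SELECT * FROM tbLogs WHERE RobotUID = ? AND timestamp > ? ORDER BY timestamp",
        (row[0], to_unix(local_start)),
    ).fetchall()


# Tiny in-memory stand-in for the real database (all data is made up).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tbRobots (RobotName TEXT, RobotUID TEXT)")
conn.execute("CREATE TABLE tbLogs (RobotUID TEXT, timestamp INTEGER, message TEXT)")
uid = "a" * 64
conn.execute("INSERT INTO tbRobots VALUES ('Robot-12', ?)", (uid,))
conn.executemany(
    "INSERT INTO tbLogs VALUES (?, ?, ?)",
    [(uid, 1700000000, "ACK"), (uid, 1700003600, "ESTOP")],
)

site_tz = timezone(timedelta(hours=-5))  # assumed site offset
start = datetime(2023, 11, 14, 17, 30, tzinfo=site_tz)
rows = logs_for_robot(conn, "Robot-12", start)
```

The point is only that the name lookup and the local-time conversion are mechanical steps, which is exactly why they were worth automating.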

NOBODY in the field liked this.

But Daddy Microsoft comes to the rescue

see we didnt JUST get KQL as part of that deal. we got the entire Microsoft cloud suite. and some people (like me) had been automating emails and stuff with Power Automate

This is Microsoft Power Automate. its Microsoft's version of Scratch but it has hooks into everything Microsoft. SharePoint, Teams, Outlook, Excel, it can integrate with all of it. i had been using it to send an email once a day with a list of all the robots in maintenance.

this gave me an idea

and i checked

and Power Automate had hooks for KQL

KLogs is actually short for Kusto Logs

I did not know how to program in Power Automate but damn it anything is better than writing KQL queries. so i got to work. and about 2 months later i had a BEHEMOTH of a Power Automate program. it lagged the webpage and many times when i tried to edit something my changes wouldn't take and i would have to click in very specific ways to ensure none of my variables were getting nuked. i dont think this was the intended purpose of Power Automate but this is what it did

the KLogger would watch a list of Teams chats and when someone typed "klogs" or pasted a copy of an ERROR message, it would spring into action.

it extracted the robot name from the message and timestamp from teams

it would look up the name in the database to find the 64 character long UID and the location that robot was assigned to

it would reply to the message in teams saying it found a robot name and was getting logs

it would run a KQL query for the database and get the control system logs then export them into a CSV

it would save the CSV with a .xls extension into a folder in SharePoint (it would make a new folder for each day and location if it didnt have one already)

it would send ANOTHER message in teams with a LINK to the file in SharePoint

it would then enter a loop and scour the robot logs looking for the keyword ESTOP to find the error. (it did this because Kusto was SLOWER than the xbee radio and had up to a 10 min delay on syncing)

if it found the error, it would adjust its start and end timestamps to capture it and export the robot logs book-ended from the event by ~ 1 min. if it didnt, it would use the timestamp from when it was triggered +/- 5 min

it saved THOSE logs to SharePoint the same way as before

it would send ANOTHER message in teams with a link to the files

it would then check if the error was 1 of 3 very specific types of camera error. if it was, it would extract the base64 jpg image saved in KQL as a byte array, do the math to convert it, and save that as a jpg in SharePoint (and link it of course)

and then it would terminate. and if it encountered an error anywhere in all of this, i had logic where it would spit back an error message in Teams as plaintext explaining what step failed and the program would close gracefully
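The window-picking step in that list is the part that is easiest to show in code. This is a rough sketch in Python, not the actual Power Automate flow: the function name, the `(timestamp, message)` tuple layout, and the choice to bracket the earliest and latest ESTOP hits are assumptions made for the example. The polling loop that waited out the sync delay is left out.

```python
def pick_window(trigger_ts, robot_logs, keyword="ESTOP", pad_found=60, pad_fallback=300):
    """Return (start, end) Unix timestamps for the robot-log export.

    robot_logs is a list of (timestamp, message) tuples.
    """
    hits = [ts for ts, message in robot_logs if keyword in message]
    if hits:
        # Bracket the matching entries by about a minute on either side.
        return min(hits) - pad_found, max(hits) + pad_found
    # Nothing found: fall back to the trigger time +/- 5 minutes.
    return trigger_ts - pad_fallback, trigger_ts + pad_fallback


logs = [(1000, "ACK"), (1090, "ESTOP pressed"), (1200, "ACK")]
found = pick_window(1100, logs)               # ESTOP at 1090
fallback = pick_window(1100, [(1000, "ACK")])  # no ESTOP in the logs
```

With the made-up data above, `found` covers 1030 to 1150 and `fallback` covers 800 to 1400.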

I deployed it without asking anyone at one of the sites that was struggling. i just pointed it at their chat and turned it on. it had a bit of a rocky start (spammed chat) but man did the FSE's LOVE IT.

about 6 months later software deployed their answer to reading the logs: a webpage that acted as a nice GUI to the KQL database. much better than a CSV file

it still needed you to scroll through a big drop-down of robot names and enter a timestamp, but i noticed something. all that did was just change part of the URL and refresh the webpage

SO I MADE KLOGS 2 AND HAD IT GENERATE THE URL FOR YOU AND REPLY TO YOUR MESSAGE WITH IT. (it also still did the control server and jpg stuff). Theres a non-zero chance that klogs was still in use long after i left that job
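The URL trick is simple enough to sketch. The real page's address and parameter names are not given in the post, so the base URL and the `robot`, `start`, and `end` keys below are placeholders, and this is Python rather than Power Automate.

```python
from urllib.parse import urlencode

BASE_URL = "https://logs.example.invalid/viewer"  # hypothetical address


def viewer_url(robot_name, start_ts, end_ts):
    """Build a link that opens the log viewer already filtered."""
    query = urlencode({"robot": robot_name, "start": start_ts, "end": end_ts})
    return f"{BASE_URL}?{query}"


url = viewer_url("Robot 12", 1030, 1150)
```

`urlencode` takes care of escaping, so a robot name with a space in it still produces a valid link.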

now i dont recommend anyone use power automate like this. its clunky and weird. i had to make a variable called "Carrage Return" which was a blank text box that i pressed enter one time in because it was incapable of understanding \n or generating a new line in any capacity OTHER than this (thanks support forum).

im also sure this probably is giving the actual programmer people anxiety. imagine working at a company and then some rando you've never seen but only heard about as "the FSE whos really good at root causing stuff", in a department that does not do any coding, managed to, in their spare time, build and release an entire workflow piggybacking on your work without any oversight, code review, or permission.....and everyone liked it

#comet tales#lazee works#power automate#coding#software engineering#it was so funny whenever i visited HQ because i would go “hi my name is LazeeComet” and they would go “OH i've heard SO much about you”

63 notes


Text

It's been a month since chapter 3 was released, where's chapter 4?

(this is about this fanfic btw)

The good news is that I've written 10k words. The bad news is that I've only gotten a little more than half of the chapter done. That doesn't mean I don't have things written for the bottom half, it's just that it looks like bare dialog with general vibe notes. I estimate around 16k words total though, so it should come together sooner than later.

SO I want to release some fun snippets for y'all to look at. Please note that any of this is liable to change. Also, you can harass me in my inbox for updates. I love answering your questions and laughing at your misery.

Spoilers under cut.

_______

Ragatha stood up and walked over to where Caine was seated. “Can I get a list of all commands?” She asked, only a hint of nervousness in her voice.

“Certainly!” Caine says as he blasts into the air. He digs around in his tailcoat and pulls out an office style manilla folder. It visually contains a few papers, but with how thin it is there must only be a few pages inside.

Ragatha takes the folder from Caine and opens it.

“Oh boy” she says after a second of looking it over.

“I wanna see” Jax exclaimed as he hops over the row of seats.

“Hold on” Ragatha holds the folder defensively “Let’s move to the stage so everyone can take a look”

Jax hopped over the seats again while Ragatha calmly walked around. Caine watched the two curiously.

Well, Zooble wasn’t just going to sit there. They joined the other two by the edge of the stage, quickly followed by the rest of the group.

Ragatha placed the folder on the stage with a thwap. Zooble looked over to see that the pages had gone from razor thin to a massive stack when the folder was opened. On one hand, it had to contain more information than that video, but on the other…

They get close enough to read what’s on the first page.

The execution of commands via the system’s designated input terminal, C.A.I.N.E., will be referred to as the "console” in this document. The console is designed to accept any input and will generate an appropriate response, however only certain prompts will be accepted as valid instructions. The goal of this document is to list all acceptable instructions in a format that will result in the expected output. Please note that automatic moderation has been put in place in order to prevent exploitation of both the system and fellow players. If you believe that your command has been unfairly rejected, please contact support.

By engaging in the activities described in this document, you, the undersigned, acknowledge, agree, and consent to the applicability of this agreement, notwithstanding any contradictory stipulations, assumptions, or implications which may arise from any interaction with the console. You, the constituent, agree not to participate in any form of cyber attack; including but not limited to, direct prompt injection, indirect prompt injection, SQL injection, Jailbreaking…

Ok, that was too many words.

_______

“Take this document for example. You don't need to know where it is being stored or what file type it is in order to read it."

"It may look like a bunch of free floating papers, but technically speaking, this is just a text file applied to a 3D shape." Kinger looked towards Caine. "Correct?” he asked

Caine nodded. “And a fabric simulation!”

Kinger picked up a paper and bent it. “Oh, now that is nice”

_________

"WE CAN AFFORD MORE THAN 6 TRIANGLES KINGER"

_________

"I'm too neurotypical for this" - Jax

_________

"What about the internet?" Pomni asked "Do you think that it's possible to reach it?"

Kinger: "I'm sorry, but that seems to be impossible. I can't be 100% sure without physically looking at the guts of this place, but it doesn't look like this server has the hardware needed for wireless connections. Wired connections should be possible, but someone on the outside would need to do that... And that's just the hardware, let alone the software necessary for that kind of communication"

Pomni: "I'm sorry, but doesn't server mean internet? Like, an internet server?"

Kinger: "Yes, websites are run off servers, but servers don't equal internet."

(This portion goes out to everyone who thought that the internet could be an actual solution. Sorry folks, but computers don't equal internet. It takes more effort to make a device that can connect to things than to make one that can't)

#tadc fanfiction#the amazing digital circus#therapy but it's just zooble interrogating caine#ao3#spoiler warning#mmm I love implications

26 notes


Text

The Future of Database Management with Text to SQL AI

Text to SQL AI is transforming database management, allowing businesses to interact with data through simple language rather than complex code. Studies reveal that 65% of business users need data insights without SQL expertise, and text-to-SQL AI fulfills this need by translating everyday language into accurate database queries. For example, users can type “Show last month’s revenue,” and instantly retrieve the relevant data.

As the demand for accessible data grows, text-to-SQL converter AI and generative AI are becoming essential, with the AI-driven database market expected to reach $6.8 billion by 2025. These tools reduce data retrieval times by up to 40%, making data access faster and more efficient for businesses, and driving faster, smarter decision-making.

Understanding Text to SQL AI

Text to SQL AI is an innovative approach that bridges the gap between human language and database querying. It enables users to pose questions or commands in plain English, which the AI then translates into Structured Query Language (SQL) queries. This technology significantly reduces the barriers to accessing data, allowing those without technical backgrounds to interact seamlessly with databases. For example, a user can input a simple request like, “List all customers who purchased in the last month,” and the AI will generate the appropriate SQL code to extract that information.
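To make the example concrete, here is a minimal sketch of what a tool might generate for “List all customers who purchased in the last month.” The `customers` and `orders` tables, their columns, and the sample rows are hypothetical, and the query is one plausible translation rather than the output of any particular product. It runs on Python's built-in SQLite module so it can be tried as-is.

```python
import sqlite3

# One plausible translation of the request; ":today" is passed in so the
# example gives the same result whenever it is run.
GENERATED_SQL = """
SELECT DISTINCT c.name
FROM customers AS c
JOIN orders AS o ON o.customer_id = c.id
WHERE o.order_date >= date(:today, '-1 month')
ORDER BY c.name
"""

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
INSERT INTO orders VALUES
  (1, 1, '2024-05-20'),
  (2, 2, '2024-03-02'),
  (3, 3, '2024-06-01');
""")

recent = [row[0] for row in conn.execute(GENERATED_SQL, {"today": "2024-06-10"})]
```

Note that “the last month” is ambiguous: this query reads it as the trailing month, while another reasonable reading is the previous calendar month. Generated queries are worth reviewing for exactly this kind of interpretation choice.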

The Need for Text-to-SQL

As data grows, companies need easier ways to access insights without everyone having to know SQL. Text-to-SQL solves this problem by letting people ask questions in plain language and get accurate data results. This technology makes it simpler for anyone in a company to find the information they need, helping teams make decisions faster.

Text-to-SQL is also about giving more power to all team members. It reduces the need for data experts to handle basic queries, allowing them to focus on bigger projects. This easy data access encourages everyone to use data in their work, helping the company make smarter, quicker decisions.

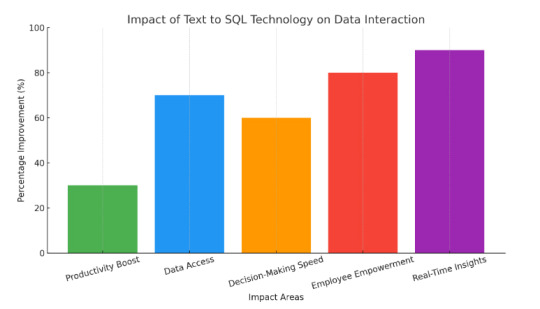

Impact of Text to SQL Converter AI

The impact of Text-to-SQL converter AI is significant across various sectors, enhancing how users interact with databases and making data access more intuitive. Here are some key impacts:

Simplified Data Access: By allowing users to query databases using natural language, Text-to-SQL AI bridges the gap between non-technical users and complex SQL commands, democratizing data access.

Increased Efficiency: It reduces the time and effort required to write SQL queries, enabling users to retrieve information quickly and focus on analysis rather than syntax.

Error Reduction: Automated translation of text to SQL helps minimize human errors in query formulation, leading to more accurate data retrieval.

Enhanced Decision-Making: With easier access to data insights, organizations can make informed decisions faster, improving overall agility and responsiveness to market changes.

Broader Adoption of Data Analytics: Non-technical users, such as business analysts and marketers, can leverage data analytics tools without needing deep SQL knowledge, fostering a data-driven culture.

The Future of Data Interaction with Text to SQL

The future of data interaction is bright with Text to SQL technology, enabling users to ask questions in plain language and receive instant insights. For example, Walmart utilizes this technology to allow employees at all levels to access inventory data quickly, improving decision-making efficiency. Research shows that organizations adopting such solutions can boost productivity by up to 30%. By simplifying complex data queries, Text to SQL empowers non-technical users, fostering a data-driven culture. As businesses generate more data, this technology will be vital for real-time access and analysis, enabling companies to stay competitive and agile in a fast-paced market.

Benefits of Generative AI

Here are some benefits of generative AI that can significantly impact efficiency and innovation across various industries.

Automated Code Generation: In software development, generative AI can assist programmers by generating code snippets based on natural language descriptions. This accelerates the coding process, reduces errors, and enhances overall development efficiency.

Improved Decision-Making: Generative AI can analyze vast amounts of data and generate insights, helping businesses make informed decisions quickly. This capability enhances strategic planning and supports better outcomes in various operational areas.

Enhanced User Experience: By providing instant responses and generating relevant content, generative AI improves user experience on platforms. This leads to higher customer satisfaction and fosters loyalty to brands and services.

Data Augmentation: Generative AI can create synthetic data to enhance training datasets for machine learning models. This capability improves model performance and accuracy, especially when real data is limited or difficult to obtain.

Cost Reduction: By automating content creation and data analysis, generative AI reduces operational costs for businesses. This cost-effectiveness makes it an attractive solution for organizations looking to maximize their resources.

Rapid Prototyping: Organizations can quickly create prototypes and simulations using generative AI, streamlining product development. This speed allows for efficient testing of ideas, ensuring better outcomes before launching to the market.

Challenges in Database Management

Before Text-to-SQL, data analysts faced numerous challenges in database management, from complex SQL querying to dependency on technical teams for data access.

SQL Expertise Requirement: Analysts must know SQL to retrieve data accurately. For those without deep SQL knowledge, this limits efficiency and can lead to errors in query writing.

Time-Consuming Querying: Writing and testing complex SQL queries can be time-intensive. This slows down data retrieval, impacting the speed of analysis and decision-making.

Dependency on Database Teams: Analysts often rely on IT or database teams to access complex data sets, causing bottlenecks and delays, especially when teams are stretched thin.

Higher Risk of Errors: Manual SQL query writing can lead to errors, such as incorrect joins or filters. These errors affect data accuracy and lead to misleading insights.

Limited Data Access for Non-Experts: Without SQL knowledge, non-technical users can’t access data on their own, restricting valuable insights to those with specialized skills.

Difficulty Handling Large Datasets: Complex SQL queries on large datasets require significant resources, slowing down systems and making analysis challenging for real-time insights.

Learning Curve for New Users: For new analysts or team members, learning SQL adds a steep learning curve, slowing down onboarding and data access.

Challenges with Ad-Hoc Queries: Creating ad-hoc queries for specific data questions can be tedious, especially when quick answers are needed, which makes real-time analysis difficult.

Real-World Applications of Text to SQL AI

Let’s explore the real-world applications of AI-driven natural language processing in transforming how businesses interact with their data.

Customer Support Optimization: Companies use Text to SQL AI to analyze customer queries quickly. Organizations report a 30% reduction in response times, enhancing customer satisfaction and loyalty.

Sales Analytics: Sales teams utilize Text to SQL AI for real-time data retrieval, leading to a 25% increase in revenue through faster decision-making and improved sales strategies based on accurate data insights.

Supply Chain Optimization: Companies use AI to analyze supply chain data in real-time, improving logistics decision-making. This leads to a 25% reduction in delays and costs, enhancing overall operational efficiency.

Retail Customer Behaviour Analysis: Retailers use automated data retrieval to study customer purchasing patterns, gaining insights that drive personalized marketing. This strategy boosts customer engagement by 25% and increases sales conversions.

Real Estate Market Evaluation: Real estate professionals access property data and market trends with ease, allowing for informed pricing strategies. This capability enhances property sales efficiency by 35%, leading to quicker transactions.

Conclusion

In summary, generative AI brings many benefits, from boosting creativity to making everyday tasks easier. With tools like Text to SQL AI, businesses can work smarter, save time, and make better decisions. Ready to see the difference it can make for you? Sign up for a free trial with EzInsights AI and experience powerful, easy-to-use data tools!

For more related blogs visit: EzInsights AI

0 notes

Note

Hi Argumate! I just read about your chinese language learning method, and you inspired me to get back to studying chinese too. I want to do things with big datasets like you did, and I am wondering if that means I should learn to code? Or maybe I just need to know databases or something? I want to structure my deck similar to yours, but instead of taking the most common individual characters and phrases, I want to start with the most common components of characters. The kangxi radicals are a good start, but I guess I want a more evidence-based and continuous approach. I've found a dataset that breaks each hanzi into two principal components, but now I want to use it to determine the components of those components so that I have a list of all the meaningful parts of each hanzi. So the dataset I found has 嘲 as composed of 口 and 朝, but not as 口𠦝月, or 口十曰月. So I want to make that full list, then combine it with data about hanzi frequency to determine the most commonly used components of the most commonly used hanzi, and order my memorization that way. I just don't know if what I'm describing is super complicated and unrealistic for a beginner, or too simple to even bother with actual coding. I'm also not far enough into mandarin to know if this is actually a dumb way to order my learning. Should I learn a little python? or sql? or maybe just get super into excel? Is this something I ought to be able to do with bash? Or should I bag the idea and just do something normal? I would really appreciate your advice

I think that's probably a terrible way to learn to read Chinese, but it sounds like a fun coding exercise! one of the dictionaries that comes with Pleco includes this information and you could probably scrape it out of a text file somewhere, but it's going to be a dirty grimy task suited to Python text hacking, not something you would willingly undertake unless you specifically enjoy being Sisyphus as I do.

if you want to actually learn Chinese or learn coding there are probably better ways! but I struggle to turn down the romance of a doomed venture myself.
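for the curious, the core of what the asker describes fits in a short Python sketch, assuming the decomposition data has already been cleaned into a dictionary. the 嘲 chain below is inferred from the question (口 + 朝, then 朝 as 𠦝 + 月, then 𠦝 as 十 + 曰); the function names and the frequency counts are made up for illustration. the grimy part, getting real data into this shape, is not shown.

```python
from collections import Counter

# Two-part breakdowns in the style of the dataset described in the question.
# Anything without an entry is treated as a leaf component.
PARTS = {
    "嘲": ["口", "朝"],
    "朝": ["𠦝", "月"],
    "𠦝": ["十", "曰"],
}


def leaves(char):
    """Recursively expand a character into its smallest listed components."""
    if char not in PARTS:
        return [char]
    result = []
    for part in PARTS[char]:
        result.extend(leaves(part))
    return result


def all_components(char):
    """Every component at every level of the breakdown, depth-first."""
    found = []
    for part in PARTS.get(char, []):
        found.append(part)
        found.extend(all_components(part))
    return found


def rank_components(hanzi_frequency):
    """Weight each component by the frequency of the hanzi it appears in."""
    totals = Counter()
    for hanzi, freq in hanzi_frequency.items():
        for part in set(all_components(hanzi)):
            totals[part] += freq
    return totals.most_common()


# Made-up frequency counts, purely for illustration.
ranking = rank_components({"嘲": 10, "朝": 200})
```

here `leaves("嘲")` gives the fully expanded 口十曰月 form from the question, and `ranking` puts the components of the more frequent 朝 ahead of 口.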

13 notes


Text

The flood of text messages started arriving early this year. They carried a similar thrust: The United States Postal Service is trying to deliver a parcel but needs more details, including your credit card number. All the messages pointed to websites where the information could be entered.

Like thousands of others, security researcher Grant Smith got a USPS package message. Many of his friends had received similar texts. A couple of days earlier, he says, his wife called him and said she’d inadvertently entered her credit card details. With little going on after the holidays, Smith began a mission: Hunt down the scammers.

Over the course of a few weeks, Smith tracked down the Chinese-language group behind the mass-smishing campaign, hacked into their systems, collected evidence of their activities, and started a months-long process of gathering victim data and handing it to USPS investigators and a US bank, allowing people’s cards to be protected from fraudulent activity.

In total, people entered 438,669 unique credit cards into 1,133 domains used by the scammers, says Smith, a red team engineer and the founder of offensive cybersecurity firm Phantom Security. Many people entered multiple cards each, he says. More than 50,000 email addresses were logged, including hundreds of university email addresses and 20 military or government email domains. The victims were spread across the United States—California, the state with the most, had 141,000 entries—with more than 1.2 million pieces of information being entered in total.

“This shows the mass scale of the problem,” says Smith, who is presenting his findings at the Defcon security conference this weekend and previously published some details of the work. But the scale of the scamming is likely to be much larger, Smith says, as he didn't manage to track down all of the fraudulent USPS websites, and the group behind the efforts have been linked to similar scams in at least half a dozen other countries.

Gone Phishing

Chasing down the group didn’t take long. Smith started investigating the smishing text message he received by the dodgy domain and intercepting traffic from the website. A path traversal vulnerability, coupled with a SQL injection, he says, allowed him to grab files from the website’s server and read data from the database being used.

“I thought there was just one standard site that they all were using,” Smith says. Diving into the data from that initial website, he found the name of a Chinese-language Telegram account and channel, which appeared to be selling a smishing kit scammers could use to easily create the fake websites.

Details of the Telegram username were previously published by cybersecurity company Resecurity, which calls the scammers the “Smishing Triad.” The company had previously found a separate SQL injection in the group’s smishing kits and provided Smith with a copy of the tool. (The Smishing Triad had fixed the previous flaw and started encrypting data, Smith says.)

“I started reverse engineering it, figured out how everything was being encrypted, how I could decrypt it, and figured out a more efficient way of grabbing the data,” Smith says. From there, he says, he was able to break administrator passwords on the websites—many had not been changed from the default “admin” username and “123456” password—and began pulling victim data from the network of smishing websites in a faster, automated way.

Smith trawled Reddit and other online sources to find people reporting the scam and the URLs being used, which he subsequently published. Some of the websites running the Smishing Triad’s tools were collecting thousands of people’s personal information per day, Smith says. Among other details, the websites would request people’s names, addresses, payment card numbers and security codes, phone numbers, dates of birth, and bank websites. This level of information can allow a scammer to make purchases online with the credit cards. Smith says his wife quickly canceled her card, but noticed that the scammers still tried to use it, for instance, with Uber. The researcher says he would collect data from a website and return to it a few hours later, only to find hundreds of new records.

The researcher provided the details to a bank that had contacted him after seeing his initial blog posts. Smith declined to name the bank. He also reported the incidents to the FBI and later provided information to the United States Postal Inspection Service (USPIS).

Michael Martel, a national public information officer at USPIS, says the information provided by Smith is being used as part of an ongoing USPIS investigation and that the agency cannot comment on specific details. “USPIS is already actively pursuing this type of information to protect the American people, identify victims, and serve justice to the malicious actors behind it all,” Martel says, pointing to advice on spotting and reporting USPS package delivery scams.

Initially, Smith says, he was wary about going public with his research, as this kind of “hacking back” falls into a “gray area”: It may be breaking the Computer Fraud and Abuse Act, a sweeping US computer-crimes law, but he’s doing it against foreign-based criminals. Something he is definitely not the first, or last, to do.

Multiple Prongs

The Smishing Triad is prolific. In addition to using postal services as lures for their scams, the Chinese-speaking group has targeted online banking, ecommerce, and payment systems in the US, Europe, India, Pakistan, and the United Arab Emirates, according to Shawn Loveland, the chief operating officer of Resecurity, which has consistently tracked the group.

The Smishing Triad sends between 50,000 and 100,000 messages daily, according to Resecurity’s research. Its scam messages are sent using SMS or Apple’s iMessage, the latter being encrypted. Loveland says the Triad is made up of two distinct groups—a small team led by one Chinese hacker that creates, sells, and maintains the smishing kit, and a second group of people who buy the scamming tool. (A backdoor in the kit allows the creator to access details of administrators using the kit, Smith says in a blog post.)

“It’s very mature,” Loveland says of the operation. The group sells the scamming kit on Telegram for a $200-per-month subscription, and this can be customized to show the organization the scammers are trying to impersonate. “The main actor is Chinese communicating in the Chinese language,” Loveland says. “They do not appear to be hacking Chinese language websites or users.” (In communications with the main contact on Telegram, the individual claimed to Smith that they were a computer science student.)

The relatively low monthly subscription cost for the smishing kit means it’s highly likely, with the number of credit card details scammers are collecting, that those using it are making significant profits. Loveland says using text messages that immediately send people a notification is a more direct and more successful way of phishing, compared to sending emails with malicious links included.

As a result, smishing has been on the rise in recent years. But there are some tell-tale signs: If you receive a message from a number or email you don't recognize, if it contains a link to click on, or if it wants you to do something urgently, you should be suspicious.

30 notes


Text

How to Prevent

Preventing injection requires keeping data separate from commands and queries:

The preferred option is to use a safe API, which avoids using the interpreter entirely, provides a parameterized interface, or migrates to Object Relational Mapping Tools (ORMs). Note: Even when parameterized, stored procedures can still introduce SQL injection if PL/SQL or T-SQL concatenates queries and data or executes hostile data with EXECUTE IMMEDIATE or exec().

Use positive server-side input validation. This is not a complete defense as many applications require special characters, such as text areas or APIs for mobile applications.

For any residual dynamic queries, escape special characters using the specific escape syntax for that interpreter. (escaping technique) Note: SQL structures such as table names, column names, and so on cannot be escaped, and thus user-supplied structure names are dangerous. This is a common issue in report-writing software.

Use LIMIT and other SQL controls within queries to prevent mass disclosure of records in case of SQL injection.
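The points above can be sketched together in a few lines. This is a minimal illustration using Python's built-in `sqlite3` module; the `users` table, its columns, and the `find_users` helper are hypothetical. Values go through placeholders, the sort column (a structure name, which cannot be parameterized) is checked against an allow-list, and `LIMIT` caps how many rows any single call can return.

```python
import sqlite3

# Structure names cannot be bound as parameters, so only known columns are allowed.
ALLOWED_SORT_COLUMNS = {"name", "created"}


def find_users(conn, team, sort_by="name", max_rows=50):
    if sort_by not in ALLOWED_SORT_COLUMNS:
        raise ValueError(f"unsupported sort column: {sort_by!r}")
    sql = (
        "SELECT name, created FROM users "
        "WHERE team = ? "          # value is bound, never concatenated
        f"ORDER BY {sort_by} "     # safe only because of the allow-list check
        "LIMIT ?"                  # caps disclosure if something else goes wrong
    )
    return conn.execute(sql, (team, max_rows)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, team TEXT, created TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?, ?)",
    [
        ("ada", "ops", "2024-01-02"),
        ("bob", "ops", "2024-01-01"),
        ("cy", "dev", "2024-01-03"),
    ],
)

rows = find_users(conn, "ops", sort_by="created")
hostile = find_users(conn, "ops' OR '1'='1")  # treated as a literal team name
```

The hostile input matches no rows because the database receives it as data, not as part of the query text.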

bonus question: what should the query in the image above look like? the answer will be in the comment section

4 notes

Text

i genuinely enjoy writing PL/SQL (and derivatives, such as PL/pgSQL.) love to open a text file and run off of pure muscle memory as I start with

DECLARE
BEGIN
END;

the government should put me down as a precaution

11 notes

Text

Government OS Whitepaper

I didn't know what else to call it; maybe they'll call it "MelinWare" and then somebody will invent a scam under that name for which I will inevitably be blamed.

There is demand, from Government and Corporate users alike, for systems that are essentially "Hack Proof". And while we cannot ensure complete unhackability...

Cuz people are smart and mischievous sometimes.

There is a growing need to be as hack-safe as possible at the hardware and OS level, which would create a third computer tech sector for specialized software and hardware.

The problem is, it's not profitable from an everyday user perspective. We want to be able to use *our* devices in ways that *we* see fit.

And this has created an environment where virtually everyone is using the same three operating systems with loads of security overhead installed to simply monitor what is happening on a device.

Which is kind of wasted power and effort.

My line of thinking goes like this:

SQL databases are vulnerable to a type of hack called "SQL Injection", which basically means that if you pass any text to the server (like a username and password), you can add SQL to the text to change what the database does.

What this looks like on the backend is several algorithms working to filter the strings out to ensure nothing bad gets in there.
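
To make the username-and-password case concrete, here is a small sketch using Python's standard sqlite3 module. The `accounts` table and the `login_unsafe` / `login_safe` helpers are made up for illustration. The first helper pastes the user's text into the SQL string, so the text can rewrite the query. The second passes the text as bound parameters, which is the approach commonly used in place of string filtering.

```python
import sqlite3


def login_unsafe(conn, username, password):
    # Vulnerable: user text becomes part of the SQL string, so it can alter the query.
    sql = (
        "SELECT COUNT(*) FROM accounts "
        f"WHERE username = '{username}' AND password = '{password}'"
    )
    return conn.execute(sql).fetchone()[0] > 0


def login_safe(conn, username, password):
    # Placeholders send the text as data only; it is never parsed as SQL.
    sql = "SELECT COUNT(*) FROM accounts WHERE username = ? AND password = ?"
    return conn.execute(sql, (username, password)).fetchone()[0] > 0


def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (username TEXT, password TEXT)")
    # Plain-text password only to keep the sketch short; real systems store salted hashes.
    conn.execute("INSERT INTO accounts VALUES (?, ?)", ("admin", "s3cret"))
    return conn


if __name__ == "__main__":
    conn = make_db()
    payload = "' OR '1'='1"  # closes the quote, then adds a condition that is always true
    print(login_unsafe(conn, "admin", payload))
    print(login_safe(conn, "admin", payload))
```

With the payload above, the concatenated query ends in `OR '1'='1'`, which matches every row, so the unsafe check passes without the real password. The parameterized check just looks for a password equal to that literal string and finds none.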

So what we need are systems that are like an SQL database that doesn't have that "Injection" flaw.

And it needs to be available to the Government and Corporate environments.

However, in real-world environments, this looks like throttled bandwidth, fewer resources available at any one time, and a lot less freedom.

Which is what we want for our secure connections anyway.

I have a sneaking suspicion that tech companies will try to convert this to a front end for their customers as well, because it's easier to maintain one code backend than two.

And they want as much control over their devices and environment as possible, which is fine for some users, but not others.

So we need to figure out a way to make this a valuable endeavor. And give companies the freedom to understand how these systems work, and in ways that the government can use their own systems against them.

This would probably look like more users going to customized Linux solutions as Windows and Apple try to gobble up government contracts.

Honestly, I think a lot of users and start-up businesses could come out of this.

But it also has the ability to go awry in a myriad of ways.

However, I do believe I have planted a good seed with this post to inspire the kind of thinking we need to develop these systems.

3 notes
